1. Introduction

1.1. Critical Need to Evaluate Dropouts

Reducing undergraduate dropout rates is a significant challenge for higher education institutions worldwide [1]. The consequences extend beyond individual students, affecting their personal development and career prospects and contributing to broader societal and economic instability [2]. However, dropout is not universally detrimental. In the context of labor market transformation, some students who leave academic education pursue vocational and technical education. In many countries, including European ones, this path is gaining importance by providing stable employment and competitive earnings, often more quickly than academic paths [3]. Thus, dropout may, in certain cases, represent an adaptation to labor market realities rather than a failure.

High dropout rates undermine the stability and quality of educational systems, disrupt social cohesion, and pose challenges for economic growth [4]. At the same time, dropout reflects deeper social and cultural changes, such as the depreciation of academic diplomas due to declining educational quality, the rising demand for skills in the labor market, and the growing role of vocational education. In this context, dropout may indicate both a loss of certain educational benefits (e.g., social mobility, degree-related opportunities) and a rational choice for quicker labor market entry and economic independence [5].

Despite widespread recognition of the issue, there is no universally accepted definition of dropout. Variations in how dropout is defined, along with differences in data sources and calculation methods, result in inconsistent estimates of dropout rates [6]. These discrepancies complicate the development of a cohesive global strategy and underscore the need to understand the socio-economic and cultural drivers of dropout. Therefore, further research into the causes and predictors of undergraduate dropout is crucial for creating effective interventions and improving student retention in higher education.

While dropout risks affect all students, domestic and international students face distinct challenges that may influence their likelihood of leaving university [7,8]. Domestic students often have different academic profiles and experiences compared to international students, suggesting the need for studies that address their specific risks [8]. Meanwhile, international students face additional obstacles, such as language barriers, cultural adjustment, and financial pressures, which can impact their academic performance and overall university experience [9,10]. These challenges may contribute to higher dropout rates within this group and require separate investigation to develop targeted strategies for retention.

For example, a study by Smith et al. [11] at a Canadian university found that international students relied more on social networks, engaged more with campus life, and had a stronger connection to faith compared to domestic students. They also reported a greater need for a safe living environment and access to healthy food, factors that significantly impacted their academic success. Exploring these distinct factors will help universities create more effective retention strategies that address the unique needs of each group.

1.2. Current State of Literature

Undergraduate dropout risk assessment is a critical issue in higher education. Research consistently demonstrates that both subjective factors, such as mental health and motivation, and objective factors, including academic performance and financial status, significantly influence dropout intention [12,13,14,15,16,17]. For instance, Savio et al. [12] tracked students before and after the COVID-19 pandemic, revealing a dramatic surge in dropout intention, from 0% to 25–40%, directly linked to deteriorating mental health and financial instability, highlighting the profound effect of external crises.

Cultural and technological factors also play an important role in shaping dropout risk. Migration patterns, content consumption habits, and the pervasive influence of social media are all shifting students’ priorities and behaviors, potentially influencing their academic engagement and decision to persist or drop out. For instance, Loureiro et al. [13] show that while affirmative action policies increased enrollment of Black, mixed-race, Indigenous, and quilombola students, dropout rates for these groups also rose, suggesting that cultural factors continue to heighten dropout risk and that multicultural policy integration remains only partially successful.

Crucially, the drivers of dropout risk differ markedly between domestic and international student populations. Anastasiou et al. [14], using an International Students’ Expectation Risk Assessment Matrix, found that international students prioritize campus living conditions and social integration as critical factors influencing their persistence. In contrast, Costa et al. [15], employing Kaplan–Meier estimation and Cox modeling, identified strong academic performance as a primary protective factor against dropout overall, but emphasized that international students face compounded risks specifically stemming from language barriers, cultural adjustment, and integration challenges within the technological and social media-driven academic environment. These distinct risk profiles underscore the necessity for developing group-specific predictive models that account for both socio-cultural and technological dimensions.

Methodologically, traditional approaches to assessing dropout risk have often relied on resource-intensive longitudinal surveys, questionnaires, or interviews [16,17,18,19,20]. Llauro et al. [16], for example, longitudinally assessed instructors’ ability to predict student dropout intentions. While demonstrating moderate accuracy, this approach was found to demand an unsustainable administrative workload, highlighting the critical need for scalable, automated solutions leveraging artificial intelligence technologies.

Machine learning (ML) and deep learning (DL) techniques offer promising alternatives by effectively modeling complex, nonlinear interactions between diverse predictive factors [21,22,23,24]. ML models have successfully established links, such as higher family income correlating with lower dropout risk [22], or identified gender as a key predictor in online education contexts using algorithms like Light Gradient Boosting Machine [23]. More advanced DL methods, particularly the Conditional Tabular Generative Adversarial Network (CTGAN) technique based on neural networks, further address the challenges of limited data and potential overfitting [25,26,27]. In combination with ML models, CTGAN enhances the robustness of predictions by leveraging the complementary strengths of data augmentation and predictive modeling [21].

Despite significant advances, limitations persist in the current literature. Most studies focus on isolated factors, such as mental health or academic pressure, without assessing their relative weights and complex interactions. The differential impact of subjective and objective factors on domestic versus international students is also poorly understood [14,24,28]. Additionally, while ML and CTGAN show potential, their application in modeling the complex, interdependent pathways leading to dropout, especially across student groups, remains underdeveloped. Therefore, research is needed that uses advanced computational methods, like deep learning, to assess the interplay of multiple risk factors, compare predictive models, identify key drivers for different student cohorts, and quantify the correlations between these factors to guide more targeted interventions.

1.3. Contributions of the Present Research

In this study, advanced ML and CTGAN techniques were applied to evaluate and predict undergraduate dropout risks, aiming to reveal the complex relationships between various factors and the outcome, as well as to develop a predictive tool. The workflow for conducting the ML research is depicted in Figure 1.

The dataset used for this research includes 3544 data points for domestic students and 86 data points for international students, with 23 input features and 1 target outcome: whether a student will graduate or drop out. For domestic students, 85% of the data were used for training and 15% were reserved for testing. For international students, data augmentation was performed using the CTGAN technique, a deep learning-based approach, to generate 487 synthetic data points. Training was performed using cross-validation on the synthetic data, while testing was conducted on the 86 real data points to assess model reliability and generalizability. An Automated Machine Learning (AutoML) approach was used to streamline the process, offering a low-code solution accessible to researchers across various fields. Key factors influencing dropout risk were further assessed using SHapley Additive exPlanations (SHAP), and a web-based GUI app was developed using the Streamlit cloud platform for easy access.

The study addresses three key research questions:

- (1) What are the relationships between the various factors and the dropout risk for both domestic and international students?

- (2) Do the most significant factors differ between domestic and international students, and are objective factors more influential than subjective factors?

- (3) How can educators use the forecasting models in practice, and how can researchers extend this study to other nations?

To answer the first question, a comprehensive dataset and data augmentation techniques were used to explore complex relationships between factors and dropout risk through ML. For the second question, separate ML models were developed for domestic and international students, combined with Explainable Artificial Intelligence (XAI) techniques like SHAP to rank impact factors and identify key ones for tailored support. For the third question, an online, web-based app was created for mobile access, with all ML code openly available in the Supplementary Materials for further adaptation to other nations.

2. Machine Learning Methodology

A data augmentation strategy, in conjunction with an AutoML framework, was used to develop robust data-driven predictive models for undergraduate dropout. To assess and interpret the resulting ML models, a variety of classification evaluation metrics and XAI techniques were employed. To keep this study self-contained, the foundational background is briefly outlined below, with further details available in the referenced literature.

2.1. Data Augmentation for Enhancing Models

Building robust, data-driven ML models in real-world scenarios is often hindered by limited data availability. Additional data collection surveys are often resource-intensive and costly, rendering them an impractical solution. Consequently, deep learning data augmentation techniques like CTGAN are becoming increasingly important for generating high-quality synthetic data [29].

CTGAN, introduced by Xu et al. in 2019 [30], is a novel Generative Adversarial Network (GAN) variant specifically designed for generating synthetic tabular data, and falls within the domain of deep learning. It effectively preserves the statistical properties of the original dataset, making it highly suitable for data augmentation. The architecture consists of two neural networks trained in an adversarial manner: a Generator (G), which creates synthetic data samples, and a Discriminator (D), which attempts to distinguish between real data and the synthetic data produced by the generator (refer to Figure 1). Although G and D continuously compete without fully achieving their individual goals during this adversarial process, the G neural network gradually learns to produce realistic synthetic data that captures meaningful statistical characteristics from the original dataset [29]. This ability to generate high-quality synthetic tabular data is the core purpose of applying CTGAN.
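For orientation, the competition between G and D described above is classically written as the minimax value function below, where x is a real record, z is a random noise vector, D(·) is the discriminator's estimated probability that its input is real, and G(z) is a generated record. This is the generic GAN formulation, shown only to make the adversarial principle concrete; as published by Xu et al. [30], CTGAN itself trains with a Wasserstein loss with gradient penalty and conditions G on a sampled category of a discrete column (training-by-sampling), so the formula is a conceptual sketch rather than CTGAN's exact objective.

```latex
\min_{G}\,\max_{D}\;
\mathbb{E}_{x \sim p_{\mathrm{data}}}\big[\log D(x)\big]
+ \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
```

D increases this value by assigning high probability to real records and low probability to generated ones, while G decreases it by producing records that D scores as real.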

2.2. Automated Machine Learning Models

AutoML is transforming the field of ML by enhancing accessibility and efficiency. It accelerates development and reduces the need for extensive coding expertise, democratizing ML technologies [31,32,33].

PyCaret, an open-source library licensed under the MIT License, exemplifies this approach. It serves as a Python 3.7 wrapper that integrates multiple libraries and frameworks, such as scikit-learn and various ensemble models. This integration enables diverse tuning strategies and model customization (see Figure 1).

PyCaret further simplifies model evaluation by providing a concise tabular overview of key performance metrics and training times across models. This facilitates rapid comparison and informed selection for specific tasks. Including training durations also offers critical insights into computational demands, aiding scalability assessment and resource planning. Finally, PyCaret supports flexible deployment to cloud platforms or local servers, ensuring seamless integration into production environments and enhancing operational readiness [32].

2.3. Classification Evaluation Metrics

Performance metrics are crucial for rigorously assessing the effectiveness of classification models in PyCaret, such as those distinguishing between graduate and dropout outcomes. These metrics quantify various aspects of model performance, such as accuracy, reliability, and the ability to differentiate between classes. This enables robust model comparisons and informs targeted improvements. This study uses several key metrics [33,34]:

Accuracy: Proportion of correct predictions.

AUC (Area Under the Curve): Measures class separation ability.

Recall: Ability to correctly identify positive cases.

Precision: Reliability of positive predictions.

F1 Score: Harmonic mean of precision and recall, useful for imbalanced classes.

Cohen’s Kappa: Agreement between predictions and actual outcomes, beyond chance.

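Each metric ranges from 0 to 1, with 1 indicating optimal performance, except Cohen’s Kappa, which ranges from −1 to 1 and becomes negative when agreement is worse than chance [35]. Together, these metrics offer a comprehensive view of model strengths and weaknesses.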
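To make the definitions above concrete, the sketch below computes each metric from scratch in plain Python. It is an illustrative stand-in, not PyCaret's internal code; libraries such as scikit-learn provide production implementations. The labels are hypothetical and follow this study's coding (1 = graduate, 0 = dropout), and AUC is computed through its rank interpretation: the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative one.

```python
from typing import Dict, Sequence, Tuple


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1) -> Tuple[int, int, int, int]:
    """Return (TP, FP, FN, TN) for the chosen positive class."""
    tp = fp = fn = tn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive and t == positive:
            tp += 1
        elif p == positive:
            fp += 1
        elif t == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def classification_metrics(y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1) -> Dict[str, float]:
    tp, fp, fn, tn = confusion_counts(y_true, y_pred, positive)
    n = tp + fp + fn + tn
    accuracy = (tp + tn) / n
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    # Cohen's kappa compares observed agreement with the agreement expected by chance,
    # given the marginal frequencies of predicted and actual labels.
    p_chance = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / (n * n)
    kappa = (accuracy - p_chance) / (1 - p_chance) if p_chance < 1 else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1, "kappa": kappa}


def roc_auc(y_true: Sequence[int], scores: Sequence[float], positive: int = 1) -> float:
    """AUC as P(random positive outscores random negative); ties count as 1/2."""
    pos = [s for t, s in zip(y_true, scores) if t == positive]
    neg = [s for t, s in zip(y_true, scores) if t != positive]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical labels and predictions: 1 = graduate, 0 = dropout.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 1, 0]
y_pred = [1, 1, 1, 0, 0, 0, 1, 0, 1, 0]
print(classification_metrics(y_true, y_pred))
print(roc_auc([1, 0, 1, 0], [0.9, 0.1, 0.4, 0.6]))
```

A classifier that is always wrong on a balanced pair, for example, gets a kappa of −1, which is why kappa is the one metric in the list that is not confined to [0, 1].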

2.4. Interpretability and Model Explainability

As ML models grow more complex, their interpretability becomes essential for trust and accountability. XAI techniques address this by making model behavior transparent. A key method is SHAP, based on game theory. SHAP quantifies each feature’s contribution to a specific prediction using Shapley values, capturing both individual impacts and feature interactions by treating features as collaborators in a cooperative game, offering detailed insight into individual predictions [35].
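The cooperative-game idea can be illustrated with an exact Shapley computation for a tiny model. This is a minimal sketch, not the SHAP library's algorithm: it enumerates every coalition (cost grows as 2^n), and it assumes a single baseline vector for "absent" features, whereas SHAP typically averages over a background dataset and uses faster estimators for tree models. The two-feature `toy_model` with an interaction term is hypothetical.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence

Model = Callable[[Sequence[float]], float]


def shapley_values(model: Model, x: Sequence[float], baseline: Sequence[float]) -> List[float]:
    """Exact Shapley values; features outside a coalition take their baseline value."""
    n = len(x)

    def value(coalition: set) -> float:
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):  # coalitions of size 0..n-1 drawn from the other features
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when it joins coalition s
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical toy score: two main effects plus an interaction term.
def toy_model(z: Sequence[float]) -> float:
    return 0.5 * z[0] + 0.2 * z[1] + 0.1 * z[0] * z[1]


phi = shapley_values(toy_model, x=[4.0, 2.0], baseline=[0.0, 0.0])
print(phi)  # contributions sum to toy_model(x) - toy_model(baseline)
```

Here the interaction term contributes 0.8 to the prediction, and the Shapley weighting splits it equally between the two features, so the first feature receives 2.0 + 0.4 and the second 0.4 + 0.4.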

Complementing SHAP, Individual Conditional Expectation (ICE) plots and Partial Dependence Plots (PDPs) help explain feature influence. ICE plots show how the prediction for a single instance changes as one feature varies, revealing instance-specific effects. PDPs illustrate the average effect of a feature across the dataset, marginalizing over the other features, offering a global view [36]. Together, these techniques provide crucial insights into model decision-making, significantly enhancing interpretability and trustworthiness.
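The relationship between the two plots can be sketched in a few lines: an ICE curve sweeps one feature over a grid while holding an instance's other features fixed, and the PDP is the pointwise average of those curves. The logistic `toy_prob` model, its coefficients, and the student rows are hypothetical, chosen only to produce a saturating curve in feature 0 (think "courses passed").

```python
import math
from typing import Callable, List, Sequence

Model = Callable[[Sequence[float]], float]


def ice_curves(model: Model, rows: Sequence[Sequence[float]], feature: int, grid: Sequence[float]) -> List[List[float]]:
    """One curve per instance: vary `feature` over `grid`, keep the other features fixed."""
    curves = []
    for row in rows:
        curve = []
        for v in grid:
            z = list(row)
            z[feature] = v
            curve.append(model(z))
        curves.append(curve)
    return curves


def partial_dependence(model: Model, rows: Sequence[Sequence[float]], feature: int, grid: Sequence[float]) -> List[float]:
    """PDP = pointwise mean of the ICE curves, i.e. marginalizing over the other features."""
    curves = ice_curves(model, rows, feature, grid)
    return [sum(c[k] for c in curves) / len(curves) for k in range(len(grid))]


# Hypothetical graduation probability that saturates in feature 0.
def toy_prob(z: Sequence[float]) -> float:
    return 1.0 / (1.0 + math.exp(-(1.2 * z[0] - 4.0 + 0.3 * z[1])))


rows = [[2, 0], [5, 1], [6, 3]]  # hypothetical students
grid = list(range(0, 9))
print([round(p, 3) for p in partial_dependence(toy_prob, rows, feature=0, grid=grid)])
```

Plotting the individual curves together with their mean reproduces the usual combined ICE/PDP view; spread between ICE curves signals interactions that the averaged PDP hides.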

3. Dataset Description

The real-world data sources used in this study include data from multiple institutions, such as the annual data from the General Directorate of Higher Education and data from the Contemporary Portugal Database [37]. Due to the limited availability of data for international students, CTGAN was trained to augment the dataset by generating synthetic data, thus enhancing the analysis.

3.1. Real-World Dataset

The dataset comprises 3544 records for domestic students and 86 records for international students, capturing 23 features that encompass various aspects of student identity, academic performance, and socioeconomic status. These features provide a comprehensive insight into student data.

An analysis of the summary statistics in Table 1 reveals notable variation across the features, with some showing non-normal distributions. For instance, the Marital and Need features display pronounced skewness and kurtosis, indicating highly skewed distributions. Similarly, the target variable, representing the outcome of interest, exhibits a skewness of 4.34 and kurtosis of 20.89, further suggesting a non-normal distribution. While most features generally adhere to expected distribution patterns, the observed skewness in several variables may introduce challenges for modeling. These statistics therefore indicate where the dataset may warrant further refinement and exploration.
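For readers reproducing summary statistics of this kind, the sketch below computes moment-based skewness and excess kurtosis. It uses plain population estimators without small-sample correction; how Table 1 was computed is not stated, and packages such as pandas apply a bias correction, so values may differ slightly. The example also shows why a binary (0/1) variable such as a graduate/dropout target can look strongly "non-normal": its skewness equals (1 − 2p)/√(p(1 − p)), where p is the share of ones, so it mainly reflects class imbalance. The 10% share is hypothetical.

```python
from typing import Sequence, Tuple


def skew_kurtosis(xs: Sequence[float]) -> Tuple[float, float]:
    """Moment-based skewness and excess kurtosis (population estimators, no bias correction)."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n
    m3 = sum((x - mean) ** 3 for x in xs) / n
    m4 = sum((x - mean) ** 4 for x in xs) / n
    skewness = m3 / m2 ** 1.5
    excess_kurtosis = m4 / m2 ** 2 - 3.0  # 0 for a normal distribution
    return skewness, excess_kurtosis


# Hypothetical binary column with 10% ones.
labels = [1] * 10 + [0] * 90
print(skew_kurtosis(labels))
```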

3.2. Synthetic Data Generation

Due to the limited size of the international student dataset (only 86 real samples), we applied the CTGAN-based data augmentation method (Section 2.1) to generate a complementary synthetic dataset. The well-trained CTGAN effectively captured the underlying distribution of the original data, generating a synthetic dataset with statistically meaningful properties. Both the real and synthetic datasets are available in the Supplementary Material; key statistics of the synthetic dataset are summarized in Table 2.

Figure 2 compares the density distributions of the real and synthetic datasets, showing that the synthetic data closely follow the distribution of the real data across all variables, which indicates that CTGAN has learned the underlying patterns. Notably, the proportions of the discrete target classes are almost identical in both datasets.

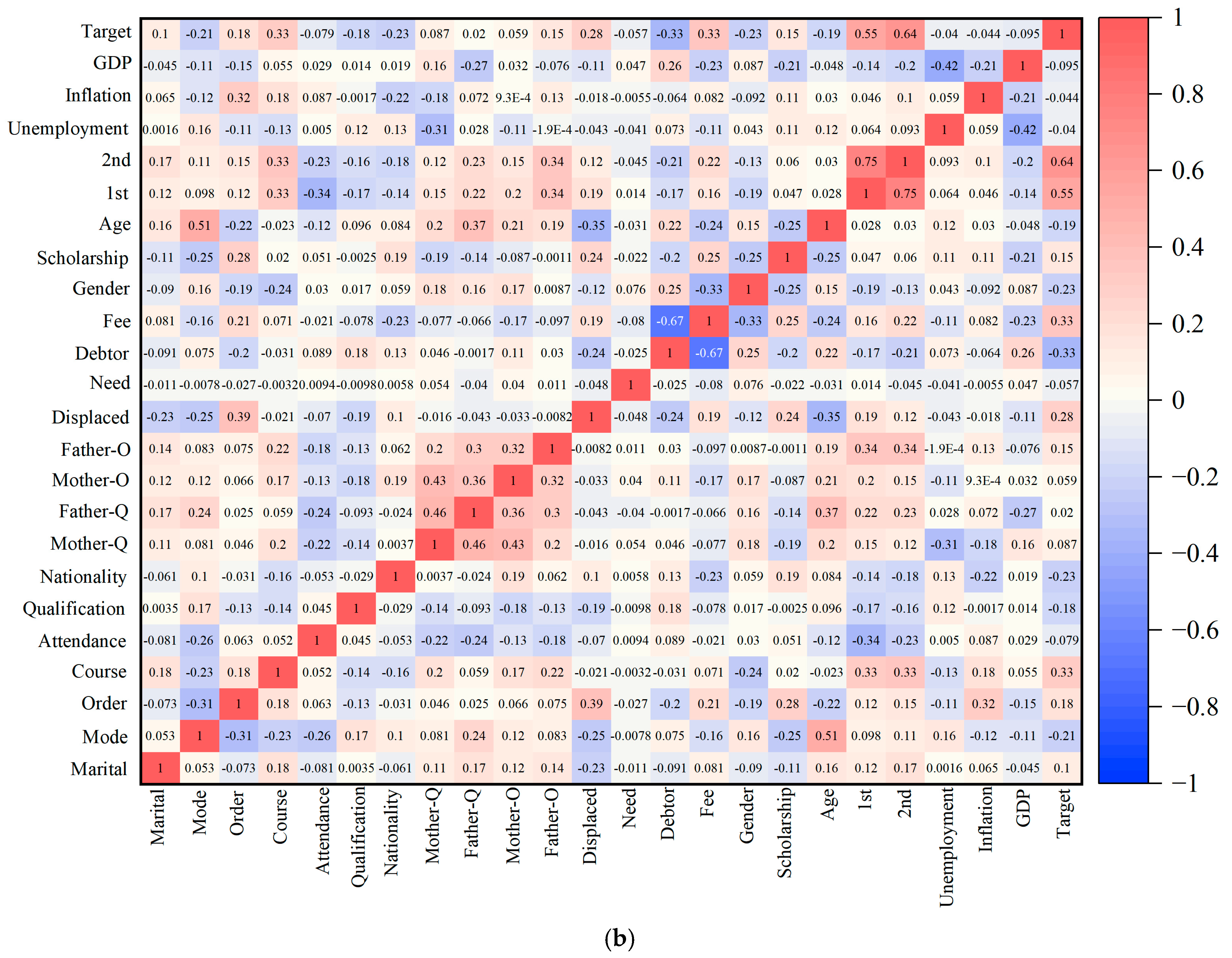

Figure 3 presents correlation matrices for both datasets. The Pearson correlation coefficient (ρ) ranges from −1 to 1, indicating strong positive (near 1), strong negative (near −1), or weak/no linear correlation (near 0). Key relationships, such as those between the Target and the 1st/2nd semester approved courses, show strong correlations (ρ > 0.55).
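A correlation matrix of this kind can be sketched with a from-scratch Pearson coefficient. The column names and values below are hypothetical; because "Target" is binary, its coefficient with a numeric feature is the point-biserial correlation. A constant column would make the denominator zero, so real pipelines should guard against that case.

```python
from math import sqrt
from typing import Dict, Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson's rho = covariance / (std_x * std_y); undefined for constant columns."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


def correlation_matrix(columns: Dict[str, Sequence[float]]) -> Dict[str, Dict[str, float]]:
    names = list(columns)
    return {a: {b: pearson(columns[a], columns[b]) for b in names} for a in names}


# Hypothetical records: Target (1 = graduate), courses approved in semester 2, age.
data = {
    "Target": [1, 0, 1, 1, 0, 1],
    "2nd_approved": [6, 1, 5, 6, 2, 4],
    "Age": [19, 24, 20, 18, 21, 30],
}
matrix = correlation_matrix(data)
print(round(matrix["Target"]["2nd_approved"], 3))
```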

Table 3 illustrates the distributional similarity between the two datasets. The Jensen–Shannon Divergence (JSD) ranges from 0 to 1 when computed with base-2 logarithms, where values closer to 0 indicate stronger distributional similarity and values closer to 1 indicate greater divergence. Several key features, such as scholarship and gender, exhibit strong distributional similarity [38].
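A per-feature JSD comparison can be sketched as follows for a categorical column: estimate each dataset's empirical distribution over the shared set of categories, then average the two KL divergences to their midpoint distribution. Base-2 logarithms keep the result in [0, 1]. Continuous features would first need to be binned, and the binning used for Table 3 is not stated, so that step is left out here. The scholarship flags are hypothetical; only the row counts (86 real, 487 synthetic) mirror this study.

```python
from collections import Counter
from math import log2
from typing import Hashable, Sequence


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q) in bits; terms with p_i = 0 contribute nothing."""
    return sum(pi * log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def jensen_shannon(p: Sequence[float], q: Sequence[float]) -> float:
    """JSD with base-2 logs, bounded in [0, 1]."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


def jsd_from_samples(real: Sequence[Hashable], synthetic: Sequence[Hashable]) -> float:
    """Compare the empirical distributions of one categorical column."""
    support = sorted(set(real) | set(synthetic))
    counts_real, counts_synth = Counter(real), Counter(synthetic)
    p = [counts_real[s] / len(real) for s in support]
    q = [counts_synth[s] / len(synthetic) for s in support]
    return jensen_shannon(p, q)


# Hypothetical scholarship flags (1 = holder) in real vs. synthetic rows.
real = [0] * 60 + [1] * 26
synthetic = [0] * 350 + [1] * 137
print(round(jsd_from_samples(real, synthetic), 4))
```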

While these plots provide valuable insights into potential variable influences, it is important to note that correlation values can vary significantly depending on how well balanced the dataset’s distribution is [29]. Therefore, Section 4.3 provides a more comprehensive analysis of feature importance using the SHAP method.

4. Results

Leveraging machine learning approaches, this research investigates undergraduate dropout for domestic and international students. The implementation code is provided in the Supplementary Material to enable replication and further application. This section describes the modeling process, performance evaluation, and interpretation of the prediction results.

4.1. Development of the Graduation Prediction Model

Classification algorithms were applied using an 85% training and 15% testing split, as illustrated in Figure 4. The synthetic dataset served exclusively as the training set for the international student model, resulting in a validation strategy of cross-validation on synthetic data and a single test on real data. The 10-fold cross-validation approach maximized training data utilization and mitigated overfitting risks. Additionally, for international students, a comparison model trained only on real data was built alongside the CTGAN-based model, enabling the results to be validated against actual data.
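The data-partitioning logic above can be sketched in plain Python. PyCaret performs these steps internally through its `setup` function (for example, via its `train_size` and `fold` parameters); the helpers below are hypothetical illustrations rather than PyCaret code. The split is stratified so that train and test keep the graduate/dropout ratio, while the k-fold generator is a plain (unstratified) version kept short for clarity.

```python
import random
from collections import defaultdict
from typing import Iterator, List, Sequence, Tuple


def stratified_split(labels: Sequence[int], test_fraction: float = 0.15, seed: int = 0) -> Tuple[List[int], List[int]]:
    """Hold out about `test_fraction` of each class so train and test keep the class ratio."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    train, test = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test.extend(indices[:n_test])
        train.extend(indices[n_test:])
    return sorted(train), sorted(test)


def k_fold_indices(n: int, k: int = 10, seed: int = 0) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train, validation) index lists; every index is validated exactly once."""
    order = list(range(n))
    random.Random(seed).shuffle(order)
    folds = [order[f::k] for f in range(k)]
    for f in range(k):
        train = [i for g in range(k) if g != f for i in folds[g]]
        yield train, folds[f]


# Domestic-style setting: hypothetical labels (1 = graduate, 0 = dropout), 85/15 split.
labels = [0] * 40 + [1] * 60
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx))

# International-style setting: 10-fold CV over the 487 synthetic rows only;
# the 86 real rows would be kept aside as the final test set.
print([len(val) for _, val in k_fold_indices(487, k=10)])
```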

Table 4 and Table 5 present a detailed performance comparison of the top 10 models used for predicting dropout rates among domestic and international students, respectively. These models, developed with widely adopted algorithms, were evaluated based on the performance metrics outlined in Section 2.3.

Among the models tested, ensemble boosting classifiers stood out with the strongest overall performance. Specifically, for domestic students, the Light Gradient Boosting Machine (LightGBM) achieved the highest accuracy at 0.9014, while for international students, the CatBoost Classifier (CatBoost) emerged as the top performer, attaining an accuracy of 0.9004. This comparison highlights the nuanced performance of different algorithms across diverse student populations, demonstrating the adaptability and effectiveness of machine learning techniques in predicting dropout risk.

To further assess the risks associated with applying the CTGAN method for international students, we adopted the Train on Real, Test on Real (TRTR) approach for validation. Under TRTR, the CatBoost classifier achieved an accuracy of 0.8518, lower than the 0.9004 obtained under the Train on Synthetic, Test on Real (TSTR) setting, that is, after CTGAN augmentation. This substantial increase suggests that CTGAN effectively augmented the data and improved CatBoost’s predictive capability on real data.
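The key property of the TRTR/TSTR comparison is that both models are scored on the same real test data and differ only in their training source. The sketch below shows that protocol with a hypothetical one-rule "decision stump" standing in for CatBoost; the tiny one-feature datasets are invented for illustration and do not reproduce the study's numbers.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Rows = List[List[float]]
Dataset = Tuple[Rows, List[int]]


def fit_stump(rows: Rows, labels: Sequence[int]) -> Callable[[Sequence[float]], int]:
    """Hypothetical stand-in classifier: the rule "predict 1 if x[f] >= t"
    with the highest training accuracy over all features f and observed thresholds t."""
    best_acc, best_f, best_t = -1.0, 0, 0.0
    for f in range(len(rows[0])):
        for t in sorted({r[f] for r in rows}):
            acc = sum((r[f] >= t) == bool(y) for r, y in zip(rows, labels)) / len(rows)
            if acc > best_acc:
                best_acc, best_f, best_t = acc, f, t
    return lambda r: int(r[best_f] >= best_t)


def accuracy_on(model: Callable[[Sequence[float]], int], data: Dataset) -> float:
    rows, labels = data
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def compare_trtr_tstr(real_train: Dataset, synthetic_train: Dataset, real_test: Dataset) -> Dict[str, float]:
    """Train once on real and once on synthetic data; score both on the same real test set."""
    return {
        "TRTR": accuracy_on(fit_stump(*real_train), real_test),
        "TSTR": accuracy_on(fit_stump(*synthetic_train), real_test),
    }


# Hypothetical one-feature data (e.g. courses passed); 1 = graduate, 0 = dropout.
real_test = ([[1], [2], [5], [6]], [0, 0, 1, 1])
real_train = ([[1], [6]], [0, 1])
synthetic_train = ([[0], [1], [2], [4], [5], [7]], [0, 0, 0, 1, 1, 1])
print(compare_trtr_tstr(real_train, synthetic_train, real_test))
```

In this toy case the two real training rows leave the threshold poorly placed, while the denser synthetic sample locates it better, which is the kind of gain the TSTR setting is meant to detect.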

4.2. Performance Assessment of the Constructed Model

The performance of the LightGBM and CatBoost models was thoroughly evaluated to assess their prediction accuracy and provide insights for future research applications. The evaluation employed several visualization tools, including AUC plots, confusion matrices, and classification report heatmaps.

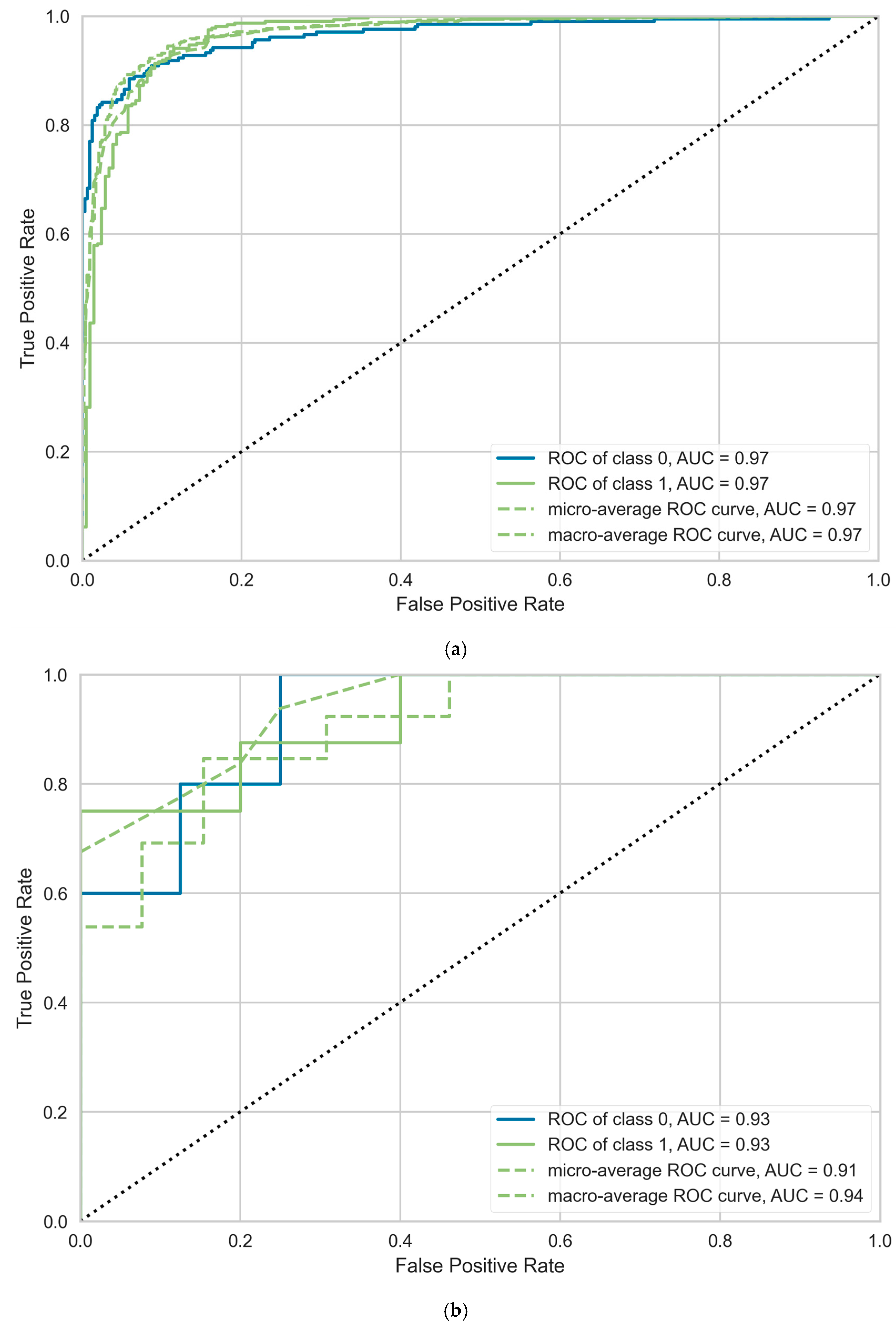

Figure 5 illustrates the ROC curves and corresponding AUC values for both domestic and international students, where the classes are numbered 0 and 1.

Class 0 represents the negative outcome (dropout), and Class 1 indicates the positive outcome (graduation). Both the LightGBM model for domestic students and the TSTR model for international students demonstrate strong classification performance, with similarly high true positive rates. Of these, the domestic model performs slightly better, which may be attributable to the larger dataset available for domestic students. Under the TRTR approach, classification performance for international students was generally moderate; with the TSTR approach, it improved.

The confusion matrices, shown in Figure 6, visually represent misclassified instances. As also indicated in Figure 5, the models perform with high accuracy, although some errors are to be expected in any machine learning application in which the model is not overfitted. Notably, misclassifications of dropouts as graduates appear to slightly outnumber misclassifications of graduates as dropouts for both domestic and international students. This is an important consideration when deploying the models, because such errors mean that some at-risk students may not be flagged.

Figure 7 presents the classification report heatmap, which outlines the precision, recall, and F1 scores for each class. For domestic students, Class 1 (graduates) demonstrates high precision (0.912) and recall (0.954), yielding an F1 score of 0.922. For international students, Class 1 shows slightly lower precision (0.897), yet the model still demonstrates robust performance across all metrics, with high precision and recall values.

4.3. Analysis of Key Factors Influencing Dropout

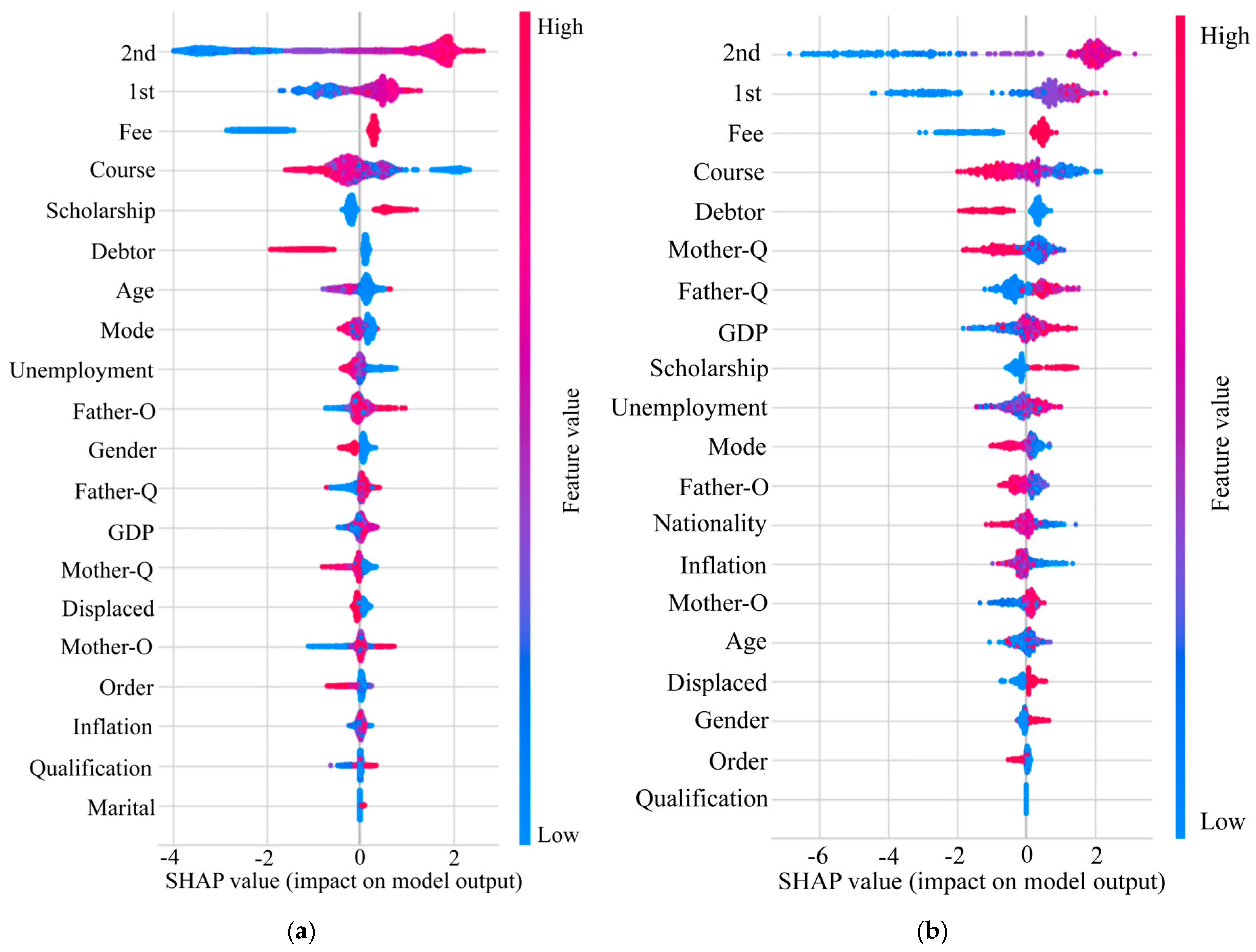

SHAP values provide a detailed interpretation of feature importance, quantifying both the direction and magnitude of each variable’s influence on the model’s predictions. As illustrated in Figure 8, each point corresponds to an individual observation within the dataset, its horizontal position gives the SHAP value, and a color gradient ranging from blue to red represents the feature value from lowest to highest. The analysis reveals that academic performance (the number of courses passed) is the primary determinant of undergraduate dropout risk. Notably, courses passed in the second semester (2nd) exert the strongest influence; higher numbers of courses passed correspond to markedly higher SHAP values, indicating a substantially greater likelihood of graduation. A similar, though slightly less pronounced, trend is observed for courses passed in the first semester (1st).

In contrast, objective demographic factors such as age, gender, family situation, and socioeconomic background demonstrate minimal predictive power, suggesting that dropout propensity is predominantly linked to a student’s academic engagement and performance rather than inherent characteristics. Additionally, the SHAP plot shows no marked difference between domestic and international students in the factors most strongly influencing dropout risk, as their top four most influential features are identical.

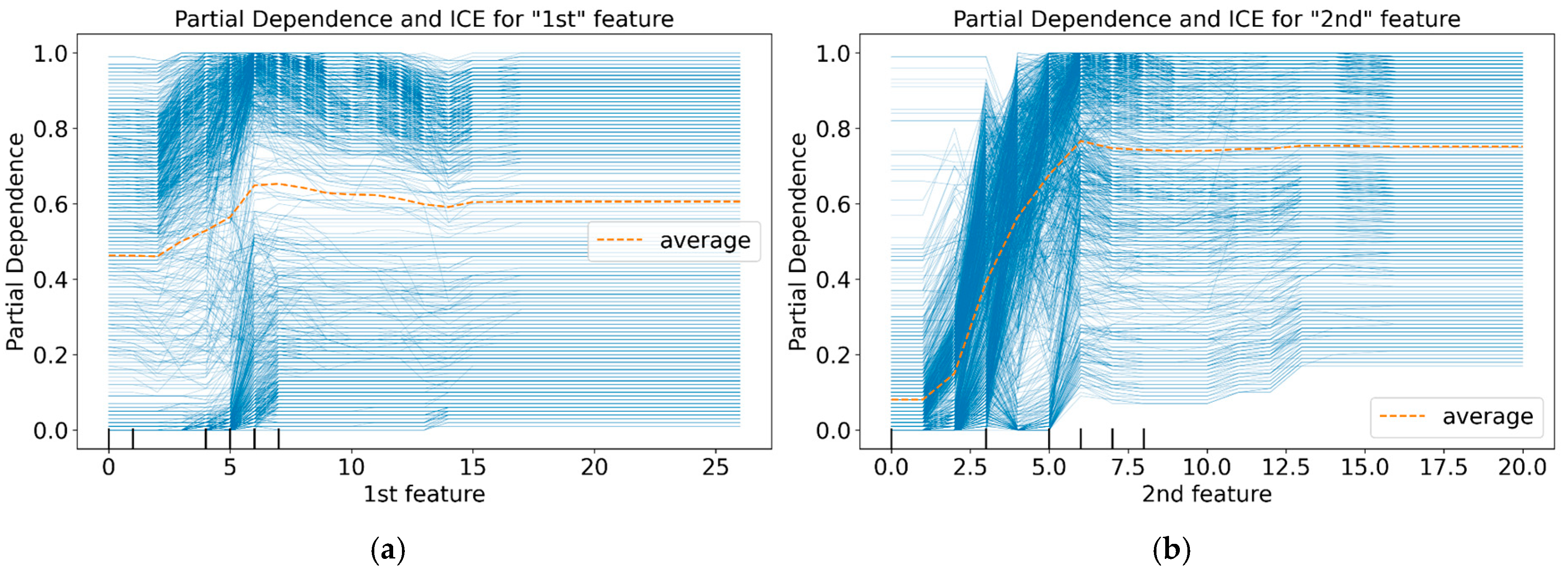

Complementary analysis using ICE and PDP in Figure 9 offers deeper insights into the relationship between the top features (2nd and 1st) and the predicted probability of dropout or graduation for both student cohorts. The ICE plots illuminate individual variations in response, while the PDP confirms the overall global trend identified by the SHAP analysis (see Figure 8).

Together, these techniques validate the developed models, demonstrating their effectiveness in capturing the various factors that influence undergraduate graduation outcomes. This integrated analytical approach provides valuable insights for educators and institutions to design targeted support strategies.

The findings provide clear and actionable insights. To reduce dropout risk, it is essential to improve academic performance, particularly pass rates during the critical first and second semesters. This relationship demonstrates a clear non-linear pattern: once a satisfactory level of course completion is achieved, it offers substantial protection against dropout, but further improvements beyond this threshold lead to diminishing returns. As a result, university support strategies should focus on assisting students in reaching this satisfactory performance level early in their academic journey, directing intervention efforts toward this pivotal stage rather than solely aiming for universal academic excellence.

4.4. Interactive Software Tool for Predictions

This web-based interactive application uses a GUI to forecast undergraduate dropout risk for domestic and international students based on user-defined parameters (accessible via the link: https://aaatu4bq3njwchzgunv4ph.streamlit.app/, accessed on 1 August 2025). Developed on the open-source Streamlit platform, the tool converts locally stored Python scripts and data files into a dynamic web application. Streamlit simplifies development, requiring minimal coding expertise and broadening accessibility for non-technical users.

The application’s code and datasets are hosted on GitHub 3.15 and deployed via Streamlit Cloud, ensuring seamless online operation. This allows educators and researchers to use pre-trained models without additional programming. We prepared the data for both domestic and international students, together with the best-performing machine learning models, and uploaded the code and files to GitHub. Streamlit Cloud then detects and deploys the repository, creating a unique web link to the live Streamlit app, which can be distributed to researchers or the general public for easy access to the tool.

As illustrated in Figure 10, the interface features adjustable slider controls (left panel) for parameter input. Users modify these sliders within ranges reflecting the dataset scope (Section 3), and the integrated models generate real-time dropout predictions.

5. Discussion

This study employed CTGAN to augment the international student dataset, addressing the challenge of limited sample size. While this approach enhanced the robustness and predictive power of the models, it also carries inherent risks. Because synthetic data are generated from small or skewed real samples, there is potential for amplifying noise, producing spurious patterns, or inducing overfitting. Moreover, discrepancies between synthetic and real data may undermine generalizability, and sociocultural complexities unique to international students are not easily replicated by generative models. These limitations suggest that although CTGAN provides a feasible means of overcoming data scarcity, its outcomes should be interpreted with caution and validated against larger and more diverse real-world datasets.

Importantly, the accuracy of the TSTR paradigm was further substantiated by comparison with the TRTR model. To rigorously evaluate the representativeness of the synthetic dataset, we employed correlation matrices, Jensen–Shannon Divergence (JSD) statistics, and complementary validation techniques to compare it against the real dataset. By establishing a TRTR-based machine learning framework, we were able to conduct a systematic assessment of TSTR performance. This comprehensive validation enhances the methodological robustness of CTGAN and strengthens the credibility of its application in addressing data scarcity.

Both SHAP analysis and ICE/PDP plots confirm that academic performance in the first two semesters (specifically, the number of courses passed) is the strongest predictor of dropout risk. This aligns with prior literature emphasizing the critical transition period in the early stages of university life [15]. Notably, the relationship is non-linear: achieving a threshold of satisfactory grades (e.g., passing 4–6 courses per semester) drastically reduces the likelihood of dropout, but exceeding this threshold yields diminishing returns. This suggests that universities should prioritize helping students reach a baseline academic standard, rather than uniformly pushing for high excellence.

Contrary to some existing studies [12,13,14,15,16,17,23], objective factors (e.g., age, gender, parental education, nationality) showed minimal influence in our models. This may reflect Portugal’s relatively homogeneous higher education demographics or the dominance of academic performance in mediating other risks. For international students, expected stressors such as language barriers or financial need [14,22] were overshadowed by academic outcomes, implying that academic support could mitigate broader challenges.

Although academic performance remains the primary predictor of dropout risk, it is equally important to consider the broader socioeconomic and cultural factors that influence student retention. Factors such as financial stress, family background, and cultural adaptation, particularly for international students, often interact with academic outcomes [39,40,41]. These non-academic factors cannot be fully captured by academic performance metrics alone, yet they are crucial in understanding dropout risk.

An interdisciplinary approach, incorporating perspectives from sociology, economics, and psychology, can provide deeper insights into how these factors contribute to student attrition. By combining academic support with interventions addressing non-academic factors, universities can develop more comprehensive and effective strategies to assist at-risk students.

In practical terms, this study introduces a web-based application that provides real-time dropout predictions for students based on user-defined parameters. This tool holds promise for helping universities implement proactive measures to support at-risk students before they decide to leave their studies. However, it should be noted that despite the promising results, there are limitations to the current model, particularly in terms of its generalizability to other institutions and countries. Further research is required to validate these models across diverse educational settings and cultural contexts.

6. Conclusions

This study presents a novel approach to predicting undergraduate dropout risks using machine learning techniques, specifically tailored for both domestic and international students. The research highlights the critical role of academic performance, particularly in the first two semesters, in determining dropout likelihood, while also revealing that demographic and socioeconomic factors have a lesser impact on retention than previously believed.

By leveraging machine learning algorithms and data augmentation methods such as CTGAN, this research contributes significantly to the body of knowledge on student retention. The developed models, coupled with the web-based application, offer universities a valuable tool for forecasting dropout risks and implementing targeted interventions. The findings suggest that universities should focus on supporting students to reach a satisfactory academic level early in their studies, as this has the most profound effect on preventing dropouts.

However, when using the CTGAN method for data augmentation, it is crucial to pay special attention to risk assessment, such as the use of Jensen–Shannon Divergence (JSD), and compare the generated CTGAN data with the real data to ensure that the augmented data does not deviate from the actual distribution. Although CTGAN can generate high-quality synthetic data, if the generated data significantly differs from the distribution of the real data, it may negatively impact the model’s predictive performance. Therefore, when applying CTGAN to generate augmented data, it is essential to rigorously evaluate the quality of the generated data to ensure that it is representative and accurately reflects the diversity of the student population. Such careful handling will help enhance the reliability of the model and ensure the practical applicability of the prediction results.

Looking ahead, the study’s approach can be extended to other higher education systems across different countries to improve dropout prediction accuracy. Furthermore, the integration of the developed predictive models into university systems could pave the way for more personalized, data-driven strategies that can enhance student retention and success. Future work should also explore the integration of additional features, such as psychological and social factors, which may provide a more comprehensive understanding of the dropout phenomenon.

The contribution of this research lies not only in its predictive capabilities but also in its practical application through an open-access, easy-to-use tool that offers potential benefits to both educators and policymakers in their efforts to reduce dropout rates and improve student outcomes.