Abstract

The detection of photovoltaic panels from images is an important field, as it enables the forecasting and planning of green energy production by assessing the level of energy autonomy of communities. Many existing approaches for detecting photovoltaic panels are based on machine learning; however, they require large annotated datasets and extensive training, and their results are not always accurate or explainable. This paper proposes an automatic approach that detects photovoltaic panels on the basis of a significant range of colours, extracted according to the light exposure conditions of the analysed images. This range of colours was automatically formed from an annotated dataset of images and consisted of the most frequent panel colours that differ from the colours of surrounding parts. These colours were then used to detect panels in other images by analysing panel colours and considering pixel density and comparable light levels. The results produced by our approach were more precise than those reported in previous literature, as our tool accurately reveals the contours of panels regardless of their shape or the colours of surrounding objects and the environment.

1. Introduction

The integration of renewable energy sources, such as solar power harnessed through photovoltaic panels, within the context of a smart grid has contributed to diminished reliance on conventional fossil fuel-based power generation facilities [1,2]. Photovoltaic (PV) systems are one of the most promising low-carbon energy generation methods [3]. PV energy production has grown rapidly over the last decade, at a rate of more than 35% annually [4,5]. At the end of 2022, the world’s cumulative installed PV capacity was 1055.03 GW, compared to 589.43 GW in 2019, almost doubling in three years [6].

Estimating the total installed PV capacity and power generation can enhance the ability of policymakers and stakeholders to evaluate progress in terms of sustainability, quantify the actual benefits of green energy, and consider potential future installations [7]. Aerial and satellite images have been analysed to recognise PV panels by means of approaches using machine learning (ML), such as convolutional neural networks (CNN) and other deep learning methods [8,9,10,11,12,13,14,15,16,17], and random forests [18,19,20,21]. However, ML tools require large labelled datasets for their training to be effective, and the effort needed to build such datasets and perform training is costly and time consuming. Moreover, many such approaches rely on a training phase that can cause overfitting, resulting in the inability of the ML-based model to generalise properly when employed with other datasets.

Further approaches have focused on analysing the physical absorption and reflection characteristics of PV panels to identify them from airborne images [22,23]. The related results show that the identification of shapes and areas of PV panels is not very accurate. In addition, spectral detection is sensitive to the thresholds selected for the spectral bands of the different sensors.

In this paper, we propose an approach that identifies PV panels by means of a deterministic algorithm that carefully and extensively analyses the colours of the pixels forming the panels. The proposed approach first analyses images to reveal potential intermingling between PV panel colours and surrounding colours, which mostly affects images having a high level of colour saturation; as a result, images are organised according to their level of saturation. Second, pixel colours in selected PV panels of labelled images are collected and filtered by excluding the colours typical of the background or of portions surrounding PV panels. Colour tones commonly found in very dark portions (shadows, ground, etc.) as well as very bright portions (roads, roofs, etc.) are excluded from the colours characteristic of PV panels. The resulting colour selection is a set that properly characterises PV panel colours. This set is then used to analyse colours in unlabelled images and detect PV panels. Additionally, the density of the selected colours in the analysed images is evaluated to decide whether a portion of an image contains PV panels.

Our proposed approach does not require large annotated datasets or computationally intensive training. The colours characterising PV panels can be extracted from a small dataset using very few computational resources, then readily used to identify PV panels in other images of a similar area. The approach is deterministic; hence, the set of colours and the steps can be further tuned if needed. Moreover, the results of the detection tool can be easily explained, e.g., a lower than expected recall can be explained in terms of the number of selected characterising colours, level of darkness, etc. The approach has been validated on a publicly available annotated aerial imagery dataset, and several metrics, including accuracy, recall, and precision, show that it locates PV panels more accurately than previous works in the recent literature.

Compared with other approaches, our approach has the following strengths: it is fast; it does not need further annotated data once the characterising colours have been gathered, as they can be reused across areas; it identifies the images most suitable for panel detection, since certain levels of saturation or darkness make panels hard to distinguish; and it automatically detects false positives and false negatives on the basis of the density of pixels in the tiles of the image under analysis.

The remainder of the paper is structured as follows. Section 2 presents related works in the field of PV panel detection. Section 3 details the phases of our proposed novel approach. Section 4 describes the experiments performed to test and validate our approach. Section 5 discusses our approach and the obtained results. Finally, our conclusions are drawn in Section 6.

2. Related Works

Estimating the number of PV panels in a region is a complex task due to the incompleteness (or even the absence) of official registers. Many papers have proposed approaches to detect PV systems by analysing satellite and aerial images, often using Convolutional Neural Networks (CNN) or Random Forest (RF) classifiers.

A deep neural network model called Faster R-CNN was used to design the identification model of PV panels [12]. The approach consisted of two parts: first, a ResNet-50 classifier was pretrained, then a CNN was fine-tuned for the identification of rooftop PV panels. Similarly, three convolutional layers and three fully connected layers were used to evaluate the performance of the identification [8]. Moreover, eight 2D convolutional layers were used to detect PV panels in residential areas; to achieve the best performance, thirteen architectures were trained and the most accurate was selected [9]. Other approaches have used InceptionV3, a CNN used for image analysis and object detection, which was fine-tuned for the task of PV panel identification [10,11]. These approaches were designed to detect PV panels in both residential and non-residential areas; however, due to the lack of PV panel images, data augmentation was performed during the training process. The framework proposed in [10] was employed for the detection of PV panels in Sweden to collect further market statistics [24]. Similarly, an innovative approach was presented to detect rooftop PV panels and determine their three-dimensional (3D) orientation [25]. This approach employed the InceptionV3 model to classify images; subsequently, segmentation and geocoding steps were performed to analyse the 3D images. ML and deep learning techniques were used for rooftop PV panel detection in [13]. The k-means approach was applied to segment the images in order to define the contours of each rooftop, then a support vector machine (SVM) classifier was integrated with a CNN to accurately identify solar PV arrays. A Mask R-CNN was used for segmentation and identification in [14,16,17]. These approaches applied object detection techniques to reveal PV panels in aerial images, with a CNN fine-tuned to characterise the mask contours of the arrays.
A CNN with the VGG16 encoder was presented in [15]; first, image segmentation was performed to select the suitable portions of solar panels, then the azimuth of the solar arrays was predicted using edge detection and the Hough transform.

Although CNNs generally achieve high performance, they need a massive amount of labelled data during the training process, and several runs are required to properly tune a model. Moreover, excessive training can lead to overfitting, meaning that the model fails to generalise. CNNs are also often considered black boxes, that is, hard to interpret and comprehend. Therefore, the debugging and validation process can be complex and time consuming, and the decisions are ultimately made by the model. These approaches are very costly both when preparing the dataset and when training the system.

A different approach was proposed in [18] to extract image features such as colours, textures, and other patterns from each pixel, then pass them as input to train an RF classifier to identify pixels related to PV arrays. In a similar approach [19], the focus was on the identification of water PV systems (WPV); an RF classifier with 400 trees was trained to extract pixels related to WPV, then postprocessing was performed to remove noise and rooftop PV panels. Another pixel-based RF algorithm used the L-8 surface reflectance (SR) product to identify suitable PV panels [20]. The RF classifier was based on the Google Earth Engine (GEE) and used to map PV power plants. Similarly, an RF classifier for an Object-Based Image Analysis (OBIA) approach used different combinations of multispectral Sentinel-2 imagery and radar backscatter from Sentinel-1 SAR imagery [26]. RF algorithms are computationally demanding, as they need additional resources to build numerous trees and combine their outputs. Furthermore, the training process is time consuming and resource intensive, as it needs to combine different decision trees to determine the class for the identification. In comparison, our proposed approach is more versatile and lightweight.

In [21], RF classification was combined with a CNN. First, the RF was used to assign a confidence value to each pixel in order to determine the possibility of that pixel belonging to a solar PV array; then, a CNN was used to classify 40 × 40 patches of RGB images to determine whether or not they corresponded to solar PV panels.

An innovative deep learning technique called EfficientNet-B7 was employed for PV panel detection in [27], showing better accuracy and efficiency compared to classic CNN approaches. EfficientNet-B7 was used as a backbone encoder to train a U-Net model for segmenting solar panels.

Spectral characteristics have been investigated to detect PV panels from hyperspectral data [22,23] by focusing on the physical absorption and reflection characteristics of PV panels. To handle the material diversity of PV panel types, these studies applied a tailored image spectral library, which together with the hydrocarbon index mitigated the spectral variance caused by the detection angle. The results of these approaches showed that the shape and the area of the PV panels were not accurate; moreover, the identification was sensitive to the thresholds set for the spectral bands of different sensors.

Conversely, our approach proposes several innovative aspects: (i) due to the absence of a training process, the approach is light, fast, and does not require a large amount of labelled data; (ii) the automatic definition of a set of suitable colours makes the approach applicable to PV panels with colours that fall within the defined set, and the set can be updated with other colours to broaden the range of detectable PV panels; (iii) the proposed algorithm makes it possible to explain the resulting outcomes, that is, the reason behind the detection (or lack of detection) of PV panels.

3. Proposed Approach

3.1. Overview

The main strategy of the proposed approach is to scan the colours of a small number of annotated aerial images and extract from them a set of characterising PV panel (cPV) colours. Then, these selected colours are used as a reference to detect the location of PV arrays in other (unannotated) aerial images. Our approach resembles and extends the methodology presented in [28,29], where colour analysis and moving tile-based detection were employed to automatically determine the green and urban areas in an image and trace the boundaries between them.

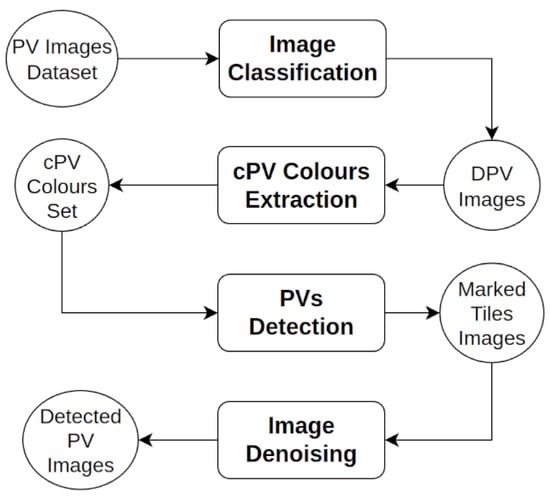

Figure 1 shows the high-level view of the four main phases of the proposed approach, consisting of: (i) classification, used to identify images featuring Detectable PV (DPV) panels, i.e., those exhibiting colours different enough from the colours of roofs, roads, ground, etc.; (ii) extraction of cPV colours from DPV images; (iii) detection of PVs in unannotated images using a sliding window that marks tiles on the panels’ surface; and (iv) denoising to filter the previous results. A brief overview is presented below, followed by a more detailed description of each phase.

Figure 1.

Overview of the proposed phases for PV panels detection.

The classification phase is designed to automatically categorise images based on the prominent colours of PV panels. Depending on illumination conditions or panel materials, PV arrays may exhibit a large variation in the range of their colours, and can include shades that are commonly found in background elements such as roads and buildings. For this reason, we leveraged a previously labelled dataset to locate PVs and analysed pixels to pinpoint only the images including DPV panels, i.e., those images with panels featuring colours that are not very common in the surrounding parts. In the subsequent cPV colour extraction phase, annotated DPV images are analysed to automatically collect a palette of colours that are representative of PV panels. During the detection phase, the set of cPV colours is used to identify and mark pixels that are recognised as belonging to the surface of PV panels. Thereafter, moving square windows are employed to cluster marked pixels based on proximity and density. Multiple passes of the moving square window are employed to refine the detection. Finally, a denoising phase aims to identify and remove noise in the background areas. For this, we utilise a moving detection window that assesses the structure and patterns of marked tiles to determine whether the previous identification can be confirmed as correct.

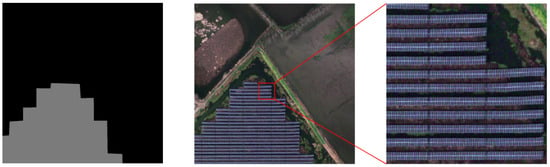

The final output of the proposed approach consists of images in which PV installations are detected. Depending on the algorithm parameters, both the panels’ surfaces and the whole PV array deployment areas can be detected, as shown in Figure 2.

Figure 2.

Example outputs of the proposed algorithm: the yellow part shows the detected area for PV panel deployment (middle) and the red part shows the PV panels (right). The original PV image is shown on the left. The analysed image dataset was presented in [30].

3.2. Phase 1: Image Classification

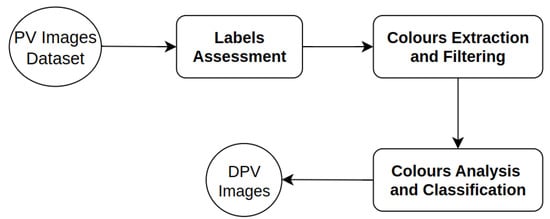

The image classification phase comprises three steps: (i) label assessment, which involves examining the area covered by the labels; (ii) pixel colour extraction and filtering, which finds PV panel colours that are different from the colours of other parts; and (iii) colour analysis and classification, which identifies images featuring DPV panels. Figure 3 provides an overview of the above steps. Each step is further described in the following subsections.

Figure 3.

Workflow of dataset image classification.

3.2.1. Label Assessment

The label assessment step aims to verify the accuracy of the pre-existing PV panel annotations in the initial dataset, as the detection results could be misleading if the annotations are inaccurate. Figure 4 shows an example in which the bottom right corner of the image presents PV panels but the mask does not. When labels are incorrect, PV panels detected in areas not covered by the labels would be erroneously classified as false positives, leading to incorrect values in the evaluation metrics. For this reason, inaccurate labels were identified and the corresponding images were manually removed.

Figure 4.

An example of an incorrect label in the dataset [30]: PV panels (left) are visible in the top left corner and the bottom right corner; however, the label (right) indicates only the top left corner.

In addition, PV arrays with limited extension do not significantly contribute to the colour analysis; therefore, any image with labels covering less than of the total image area was excluded from the dataset.

3.2.2. Colour Extraction and Filtering

In general, labels in the dataset encompass a region that includes PV panels along with a significant portion of the surrounding area, such as terrain, vegetation, shadows, etc. (see Figure 5). As a consequence, dataset labels cannot be reliably employed to guide the extraction of PV colours. For this reason, we devised a strategy to analyse the colours within the area enclosed by labels and automatically exclude the subset of colours that are also commonly encountered outside the annotated area. Thus, only those colours that correspond to the surface of PV panels are retained.

Figure 5.

The region enclosed by the dataset labels includes both PV panels and background.

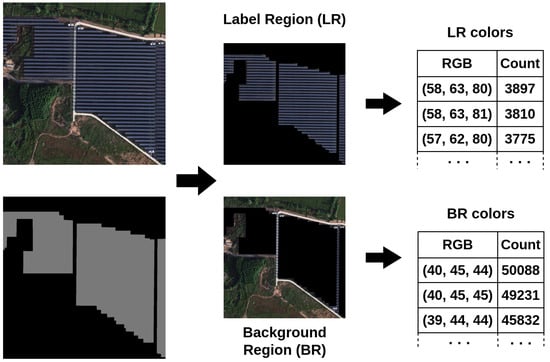

The implemented strategy operates as follows. Initially, dataset labels are used to partition each image into two regions: the Label Region (LR) and its complement, referred to as the Background Region (BR). Subsequently, the RGB colour components of the pixels are collected and the colour count for each region is computed, indicating the total number of pixels displaying every colour in each of the two regions. This information is aggregated from all images in the Ground dataset [30] and used to build two sets: LR colours and BR colours (see Figure 6). For LR pixels that are actually depicting the background, rather than PV panels, it is a reasonable assumption that their colours are also commonly found in the BR. Therefore, for each colour within the intersection of the two sets, a comparison is performed between the corresponding counts. If the ratio between the BR count and the LR count exceeds a threshold, that colour is characterised as a background colour and is removed from the LR set.

Figure 6.

Labels are used to partition images in Label Region (LR) and Background Region (BR), and then the colours and their counts are determined.

The outcome of this filtering operation is a subset denoted as PV Panel (PVP) colours that more accurately represents the actual colours of PV panel surfaces. PVP colours are the collection of filtered colours obtained from the whole Ground dataset [30]. Next, a colour analysis is performed, as described in the following subsection.
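The LR/BR filtering described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions rather than the authors' implementation: images are assumed to be nested lists of 8-bit (R, G, B) tuples, labels are boolean masks of the same size, and `count_region_colours`, `pvp_colours`, and `ratio_threshold` are hypothetical names, the latter standing in for the BR/LR count ratio threshold. Colours found only in the LR have a ratio of zero and are therefore always retained.

```python
from collections import Counter


def count_region_colours(image, mask):
    """Count pixel colours separately for the Label Region (mask True)
    and the Background Region (mask False) of one image."""
    lr, br = Counter(), Counter()
    for img_row, mask_row in zip(image, mask):
        for colour, in_label in zip(img_row, mask_row):
            if in_label:
                lr[colour] += 1
            else:
                br[colour] += 1
    return lr, br


def pvp_colours(images, masks, ratio_threshold):
    """Aggregate LR/BR colour counts over a dataset and drop every LR colour
    whose BR/LR count ratio exceeds ratio_threshold (hypothetical parameter)."""
    lr_total, br_total = Counter(), Counter()
    for image, mask in zip(images, masks):
        lr, br = count_region_colours(image, mask)
        lr_total.update(lr)  # Counter.update adds counts rather than replacing
        br_total.update(br)
    pvp = Counter()
    for colour, lr_count in lr_total.items():
        # A colour absent from the BR yields a ratio of 0 and is always kept.
        if br_total[colour] / lr_count <= ratio_threshold:
            pvp[colour] = lr_count
    return pvp
```

Aggregating counts over the whole dataset before taking the ratio, as the text describes, means that a colour is judged on its overall prevalence rather than on any single image.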

3.2.3. Colour Analysis and Classification

Our analysis of the PVP colour sets collected from several images revealed significant colour variations, possibly due to differences in illumination conditions and/or PV panel materials. We identified three classes of PV panels based on the properties of their prominent colours, as shown in Figure 7. The first class is represented by dark panels, which are typically found in images captured in low-light conditions. Because such panels are dominated by dark shades, which are also commonly encountered in shadows and dark terrain, their colours are not significant for characterising PV arrays. The second class is denoted as grey–white panels. These shades can result from the panel material or from sunlight reflecting off the panel surface, and are not characterising colours for PV installations; in particular, such colours can also be found on roads and buildings. The third class is called detectable panels (DPV) and includes all images that do not fall into the previous two classes.

Figure 7.

Classes of PV panels: dark (left), grey–white (middle), and detectable (right).

We have developed a strategy to automate the classification of the dataset’s images into these three classes. The strategy is as follows: for each image, we consider the LR colour set and determine its intersection with the PVP colour set, resulting in the image-specific PVP colour set (iPVP). The iPVP set comprises all PVP colours found within that particular image along with their respective counts.

To assess the number of dark pixels, we convert the RGB coordinates of the iPVP colours into the HSL (Hue, Saturation, Lightness) colour space [31,32]. Next, the lightness component is evaluated; if the lightness of a colour falls below a threshold, the colour is designated as dark. If the aggregated count of dark colours exceeds a predetermined fraction of the total iPVP set counts, the image is classified as dark.

To evaluate the number of pixels displaying white or grey shades, we leverage the fact that such colours can be described as having low saturation. Consequently, we transform the colours in the iPVP set into the HSV (Hue, Saturation, Value) colour space [31,32], which in our analysis enables better classification based on saturation compared to the HSL space. Similar to the previous step, we aggregate the count of all colours with a saturation component below a threshold. If this combined count exceeds a predetermined fraction of the total counts of the iPVP set, the image is classified as belonging to the grey–white class.

Images not categorised as either dark or grey–white are automatically classified as DPV images, and serve as the input for the next phase.
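The three-way classification can be sketched as below, assuming the iPVP set is held as a dictionary mapping 8-bit (R, G, B) colours to counts. The conversions use Python's standard `colorsys` module, which expects components in [0, 1] and returns (h, l, s) from `rgb_to_hls` and (h, s, v) from `rgb_to_hsv`. The function names `image_pvp` and `classify_image` and all threshold arguments are hypothetical placeholders, and checking the dark class before the grey–white class is our assumption, since the text does not state an order of precedence.

```python
import colorsys


def image_pvp(lr_counts, pvp):
    """iPVP: the image's LR colours that also belong to the PVP set, with their counts."""
    return {colour: n for colour, n in lr_counts.items() if colour in pvp}


def classify_image(ipvp, dark_lightness, dark_fraction,
                   low_saturation, grey_fraction):
    """Return 'dark', 'grey-white' or 'DPV' for one image.

    ipvp maps 8-bit (R, G, B) tuples to pixel counts; all thresholds are
    hypothetical placeholders expressed in [0, 1].
    """
    total = sum(ipvp.values())
    if total == 0:
        raise ValueError("empty iPVP set")
    dark = grey = 0
    for (r, g, b), count in ipvp.items():
        rf, gf, bf = r / 255, g / 255, b / 255
        _, lightness, _ = colorsys.rgb_to_hls(rf, gf, bf)   # HSL lightness
        _, saturation, _ = colorsys.rgb_to_hsv(rf, gf, bf)  # HSV saturation
        if lightness < dark_lightness:
            dark += count
        if saturation < low_saturation:
            grey += count
    # Assumption: the dark test takes precedence over the grey-white test.
    if dark / total > dark_fraction:
        return "dark"
    if grey / total > grey_fraction:
        return "grey-white"
    return "DPV"
```

A near-black colour is counted in both tallies (low lightness and low saturation); with the precedence assumed here, such an image ends up in the dark class.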

3.3. Phase 2: Characterising PV (cPV) Colours Extraction

The goal of this phase is to extract colours from DPV images and isolate the characterising PV (cPV) colours. Similar to the image classification phase, we begin by partitioning each DPV image into a Label Region (LR) and its complementary Background Region (BR). Then, we aggregate the colours and their respective counts for all images, which are recorded separately for each region, resulting in sets for the LR colours and BR colours. Next, we remove the background colours by evaluating the ratio between the BR count and LR count for all colours within the intersection of the two sets. As a result of these operations, we derive the PVP colour set.

A further filtering process is applied to the PVP colour set with the objective of excluding both dark and low-saturation colours. The reason is that, as illustrated earlier, these colours are not representative of PV panels. Again, this assessment uses thresholds for the lightness component in the HSL colour space and the saturation component in the HSV colour space. The result of this filtering operation is the cPV colour set, which is used in the detection algorithm discussed below.
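The step that turns the PVP set into the cPV set can be illustrated with a similar sketch. Here `cpv_colours`, `min_lightness`, and `min_saturation` are hypothetical names standing in for the HSL lightness and HSV saturation thresholds, and the PVP set is assumed to be an iterable of 8-bit (R, G, B) tuples (a dictionary of counts also works, since iterating it yields its keys).

```python
import colorsys


def cpv_colours(pvp, min_lightness, min_saturation):
    """Keep only PVP colours that are neither dark (HSL lightness below
    min_lightness) nor weakly saturated (HSV saturation below min_saturation)."""
    cpv = set()
    for r, g, b in pvp:
        rf, gf, bf = r / 255, g / 255, b / 255
        _, lightness, _ = colorsys.rgb_to_hls(rf, gf, bf)
        _, saturation, _ = colorsys.rgb_to_hsv(rf, gf, bf)
        if lightness >= min_lightness and saturation >= min_saturation:
            cpv.add((r, g, b))
    return cpv
```

Returning a set makes the membership test used during pixel replacement a constant-time lookup per pixel.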

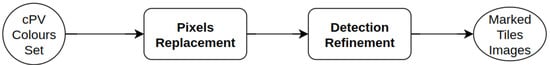

3.4. Phase 3: PV Detection

The algorithm consists of the two steps outlined in Figure 8: (i) pixel replacement, in which all pixels displaying cPV colours are marked, and (ii) detection refinement, in which windows of varying sizes are employed to cluster pixels belonging to PV arrays. The final output of the algorithm is a set of images in which the positions of detected PVs are marked. The individual steps are detailed in the following subsections.

Figure 8.

Workflow of the detection algorithm.

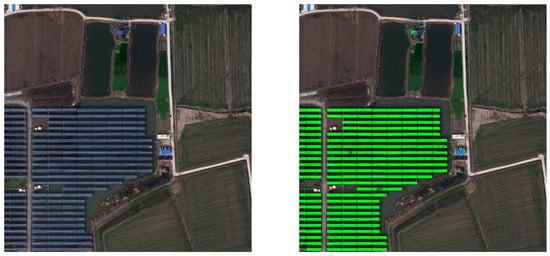

3.4.1. Pixel Replacement

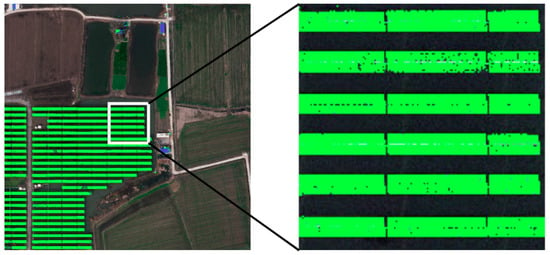

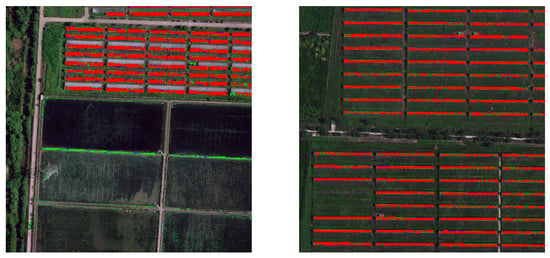

Initially, PV images are analysed and all pixels with a colour contained in the cPV set are identified and marked with RGB green. Figure 9 shows an example of the results of the pixel replacement operation. From the image, a high concentration of green pixels can be observed corresponding to the panel surfaces. Nonetheless, a more thorough inspection reveals that a significant minority of pixels in the same area have not been marked, as they do not feature cPV colours; see Figure 10 for a zoomed-in image. Therefore, the detection of PV panels in the images is further refined as described below.

Figure 9.

An example image with pixels displaying cPV panel colours marked in green (right); the original image is shown on the left for reference.

Figure 10.

An enlarged section of the above image reveals that not all pixels on the panels have been marked with cPV colours.
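Pixel replacement amounts to a set-membership test per pixel. The sketch below assumes the same nested-list image representation and a cPV set of (R, G, B) tuples; `mark_cpv_pixels` is a hypothetical name. It returns both the recoloured image and a boolean mask of marked pixels. Keeping the mask separate is our own implementation choice: it avoids confusing marked pixels with pixels that were originally pure green.

```python
MARK_GREEN = (0, 255, 0)


def mark_cpv_pixels(image, cpv, mark=MARK_GREEN):
    """Recolour every pixel whose colour is in the cPV set.

    Returns (marked_image, mask), where mask[r][c] is True for cPV pixels.
    """
    mask = [[pixel in cpv for pixel in row] for row in image]
    marked_image = [
        [mark if is_cpv else pixel for pixel, is_cpv in zip(row, mask_row)]
        for row, mask_row in zip(image, mask)
    ]
    return marked_image, mask
```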

3.4.2. Detection Refinement

To enhance the detection of PV panels, we evaluate the colours within the area of a moving square window. In general, when starting a detection refinement run, three parameters are defined: (i) the window size; (ii) the threshold density for colouring the window; and (iii) the new marking colour.

In each step, the number of marked pixels is assessed; if their total count exceeds a threshold, e.g., of the total window pixels, then the entire tile is coloured with a new marking colour, e.g., RGB blue. This assessment is performed for the whole image. Depending on the features of PV installations, multiple passes with progressively larger windows can greatly enhance the effectiveness of PV detection.

The reason for utilising a new marking colour in each refinement run lies in the fact that each successive run assesses the marked colours within an increasingly larger area. If a window in a subsequent refinement iteration contains marked pixels but their cumulative count is not sufficient to trigger the threshold, this indicates that a significant number of pixels within the window do not feature cPV colours. Consequently, it is very likely that the previously marked pixels actually correspond to incorrect detections. In this case, the window is not coloured. Moreover, the remaining marked pixels are dealt with during the denoising phase described in the next section. Therefore, only the marking colour from the last refinement run is considered final when evaluating the detection results.

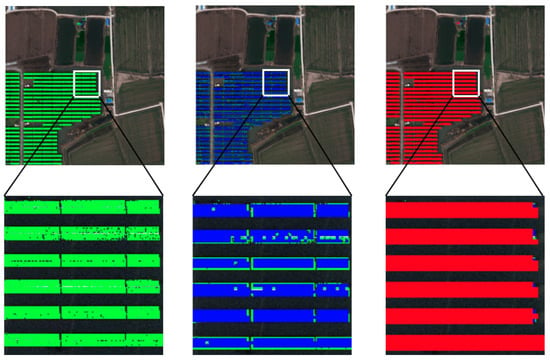

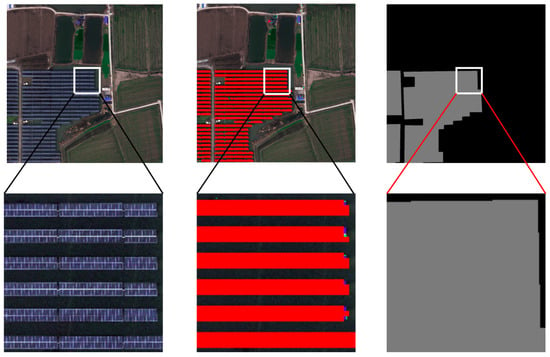

Figure 11 shows example results from two consecutive refinement runs using window sizes of and pixels, respectively. The enlarged sections demonstrate the improvements in PV detection after the successive window passes.

Figure 11.

Detection results after a window pass (middle) performed on the marked pixels image (left) and a subsequent window pass (right). The enlarged sections at the bottom show the detection enhancement after successive passes.
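One refinement run, and a sequence of runs, might look like the following sketch operating on boolean masks instead of marking colours. We assume non-overlapping square tiles stepped by the window size, with tiles at the image border clipped, and that each run only counts the pixels marked by the previous run; these are our reading of the description rather than confirmed details. The names `refine` and `multi_pass` are hypothetical, and window sizes and densities are placeholder values.

```python
def refine(mask, window, density):
    """One refinement run: split the mask into window x window tiles
    (non-overlapping, clipped at the border; an assumption) and mark a
    whole tile when its fraction of marked pixels exceeds density."""
    h, w = len(mask), len(mask[0])
    out = [[False] * w for _ in range(h)]
    for top in range(0, h, window):
        for left in range(0, w, window):
            rows = range(top, min(top + window, h))
            cols = range(left, min(left + window, w))
            marked = sum(mask[r][c] for r in rows for c in cols)
            if marked / (len(rows) * len(cols)) > density:
                for r in rows:
                    for c in cols:
                        out[r][c] = True
    return out


def multi_pass(mask, passes):
    """Apply successive (window, density) runs; only the last run's marks
    are kept, mirroring the use of a new marking colour per run."""
    for window, density in passes:
        mask = refine(mask, window, density)
    return mask
```

For example, a 2 × 2 tile with three of its four pixels marked is filled by a first pass with a density of 0.5, whereas a following 4 × 4 pass at the same density counts only 4 of 16 marked pixels and leaves the area unmarked.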

The same methodology involving a moving window can be applied to remove incorrect detections that correspond to coloured tiles in the background region, as explained in the following section.

3.5. Phase 4: Image Denoising

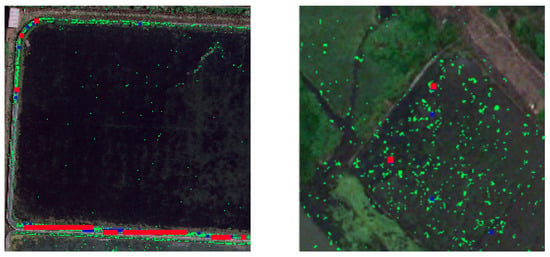

Pixels displaying cPV colours might be found in background regions, although less frequently than for actual PV panels. Occasionally, the count of such pixels is sufficient to trigger the moving window threshold, leading to incorrect detections. Examples are shown in Figure 12.

Figure 12.

Examples of detections in background regions.

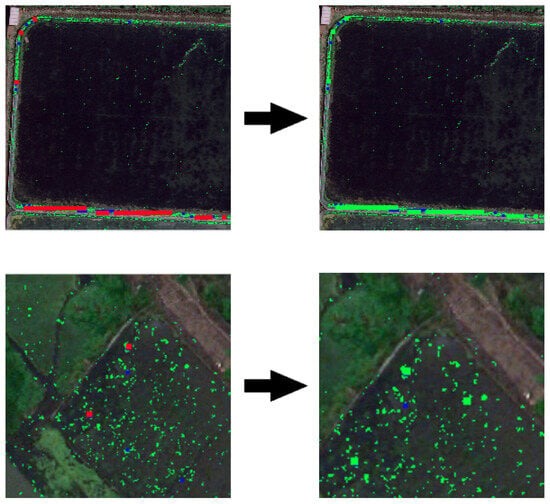

To address noise, i.e., occurrences of cPV pixels outside of actual panels, we initially note that panel surfaces typically contain large clusters of marked tiles. Conversely, incorrect detections in the surroundings often result in isolated coloured pixels or short coloured stripes. Leveraging this observation, our strategy for identifying and eliminating noise involves evaluating the surroundings of a coloured stripe.

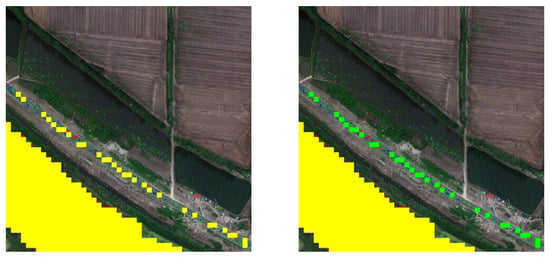

To achieve this, we apply the same moving window methodology, adapting it to the denoising task by adjusting its three parameters. First, in order to include the surrounding pixels, the size of the denoising window is set to be larger than the detection window size used in the last run of the algorithm. Then, a threshold is defined such that, when the count of marked pixels within the window falls below the threshold, the pixels are classified as noise and consequently coloured in green, whereas detected panels are coloured in red. Figure 13 shows the results after the denoising pass. Pixels in green have been excluded from the detection results; only the pixels marked with the last execution’s marking colour (in this example, red) are considered detected panels.

Figure 13.

Detections in certain areas (the red dots in the left part) have been identified as noise and marked in green (the right part).
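A sketch of the denoising pass is given below, under the assumption that each marked pixel is judged by a square neighbourhood centred on it (clipped at the image border), which is one possible way to evaluate the surroundings of a coloured stripe. The name `denoise`, the window size, and the threshold are hypothetical. Decisions are computed against the input mask, so the order in which pixels are visited does not affect the result.

```python
def denoise(mask, window, threshold):
    """Split marked pixels into (kept, noise) boolean masks.

    A marked pixel becomes noise when the fraction of marked pixels in the
    window x window neighbourhood centred on it (clipped at the image
    border) is below threshold.
    """
    h, w = len(mask), len(mask[0])
    half = window // 2
    kept = [[False] * w for _ in range(h)]
    noise = [[False] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            if not mask[r][c]:
                continue
            r0, r1 = max(0, r - half), min(h, r + half + 1)
            c0, c1 = max(0, c - half), min(w, c + half + 1)
            marked = sum(mask[i][j] for i in range(r0, r1) for j in range(c0, c1))
            if marked / ((r1 - r0) * (c1 - c0)) < threshold:
                noise[r][c] = True  # isolated detection: reverted (green)
            else:
                kept[r][c] = True   # part of a dense cluster: kept (red)
    return kept, noise
```

With a 3-pixel window and a threshold of 0.4, for instance, an isolated marked pixel in a corner sees 1 marked pixel out of 4 and is reverted, while every pixel of a solid 3 × 3 cluster sees at least 4 of 9 marked pixels and is kept.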

4. Experiment and Results

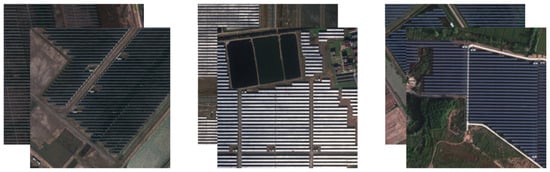

From the aerial imagery dataset [30], we selected images having a resolution of m/px and a size of from five distinct sub-datasets, namely: Cropland, Grassland, SalineAlkali, Shrubwood, and WaterSurface. The collected images constituted the Ground dataset, comprising 2072 images. The Ground dataset served as the input for the classification phase, which analysed its features and identified DPV images.

A total of 71 incorrect labels and 115 labels covering less than of the image area were discovered; the corresponding images were consequently removed from the dataset. Next, colours and counts were extracted from the LR and BR and the background colours were filtered out using a threshold of for the colour count ratio between BR and LR, resulting in the PVP colour set.

The colour set analysis proceeded as follows: first, to identify dark panels, the RGB coordinates were converted into the HSL colour space. A threshold of (in the range ) was applied to the lightness component, while a threshold for the pixel count was used to categorise images into the dark class. To detect grey–white panels, the RGB coordinates were transformed into the HSV colour space. A threshold of (in the range ) was used to evaluate the saturation component, with a threshold for the low saturation pixel count used to categorise images into the grey-white class.

After colour set analysis, images were classified as follows: 174 dark panels, 1254 grey–white panels, and 458 DPV panels. The latter were aggregated to constitute the DPV dataset on which PV detection was performed. Initially, of the DPV images were randomly selected for extraction of the characterising PV colours, while the remaining were used to test and evaluate the algorithm.

The results of colour extraction were as follows: an aggregated 1,133,935 distinct colours for the LR, and an aggregated 1,836,190 distinct colours for the BR. After colour filtering, there were 403,713 colours remaining, which accounted for of the total LR colours, representing the characterising PV (cPV) colour set. Table 1 shows the classification parameters.

Table 1.

Parameters used in the classification of the Ground dataset.

After obtaining the cPV set, test images were selected and analysed. Any pixel displaying a colour found within the cPV set was marked in green. Subsequently, PV detection was carried out using two moving windows, one of size pixels with a detection colour of RGB blue and another of size pixels with a detection colour of RGB red. The detection threshold for both windows was set to .

Figure 14 shows an image for which the two-pass detection was performed, together with the original dataset image and the corresponding label. The output image (middle) shows that the panel surfaces were identified and that the background portions between rows of PV installations were not marked by the detection colour (red). This indicates that the colour filtering approach was able to discern between PV-characterising colours and background colours.

Figure 14.

An example PV image (left) and the results of PV detection after two successive detection passes (middle). The corresponding dataset label is shown on the right. The bottom part contains an enlarged section for each of the three images.

Furthermore, to detect the whole PV installation area, which in the labelled dataset includes the background between individual panels, an additional detection pass was performed to enclose the entire region where PV panels were deployed.

4.1. PV Area Detection

To cluster PV arrays together, a window with a size of pixels was used. A lower detection threshold of was set to account for the many colours not included in the cPV set that appear in the background between the panels. The marking colour was set to RGB yellow. Table 2 shows the parameters used for the three detection passes, while Figure 15 shows the detection result. Finally, the images were denoised. The denoising window measured pixels and the threshold was set to of the window area. Coloured tiles identified as noise were marked in green, as shown in Figure 16.
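The denoising step can be read as the inverse of a detection pass: inside each denoising window, detections covering less than the given share of the window area are reverted. Below is a minimal NumPy sketch, assuming non-overlapping windows and a hypothetical helper name `denoise`; the reverted pixels correspond to the tiles drawn in green in Figure 16.

```python
import numpy as np

def denoise(detected, win, min_fraction):
    """Clear detections in any win x win window where they cover < min_fraction of it.

    Returns (cleaned, reverted). Non-overlapping windows are an assumption;
    win and min_fraction are hypothetical parameters.
    """
    cleaned = detected.copy()
    h, w = detected.shape
    for y in range(0, h, win):
        for x in range(0, w, win):
            block = detected[y:y + win, x:x + win]
            # Sparse detections inside a window are treated as noise.
            if block.any() and block.mean() < min_fraction:
                cleaned[y:y + win, x:x + win] = False
    reverted = detected & ~cleaned  # pixels whose detection was undone
    return cleaned, reverted
```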

Table 2.

Parameters used for PV area detection.

Figure 15.

Example result of PV panel region detection (middle). The original dataset label is shown on the right.

Figure 16.

After denoising, certain detections in the background (yellow tiles) are reverted (green tiles).

We evaluated the performance of the proposed approach and the corresponding detection algorithm using five metrics: Accuracy, Precision, Recall, F1 Score, and Intersection over Union (IoU). Table 3 presents the results for the DPV Ground dataset as well as for the five individual sub-datasets: Cropland, Grassland, SalineAlkali, Shrubwood, and WaterSurface. The last row of Table 3 displays the values reported by the authors of the dataset in [30].
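For reference, the five metrics have the standard pixel-wise definitions sketched below for a predicted mask and a label mask. Whether Table 3 aggregates counts over all images or averages per-image scores is not stated here, and returning 0.0 when a denominator is zero is a convention chosen for the sketch.

```python
import numpy as np

def segmentation_metrics(pred, label):
    """Pixel-wise Accuracy, Precision, Recall, F1 and IoU for two boolean masks."""
    pred, label = np.asarray(pred, bool), np.asarray(label, bool)
    tp = np.sum(pred & label)    # PV pixels correctly detected
    fp = np.sum(pred & ~label)   # background pixels detected as PV
    fn = np.sum(~pred & label)   # PV pixels missed
    tn = np.sum(~pred & ~label)  # background pixels correctly ignored
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / pred.size,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "iou": tp / (tp + fp + fn) if tp + fp + fn else 0.0,
    }
```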

Table 3.

Evaluation metrics for the DPV Ground dataset and the five sub-datasets.

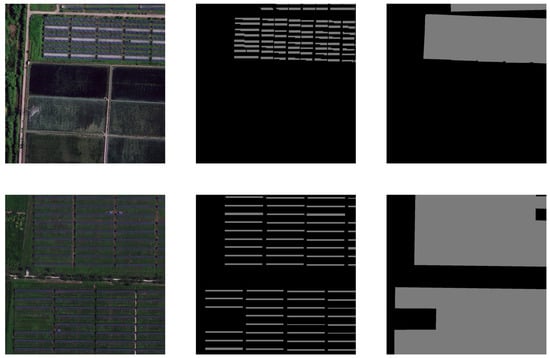

4.2. PV Panel Surface Detection

To evaluate the algorithm’s performance when detecting only the surface of PV panels, as opposed to the entire area covered by PV arrays, we manually created labels for five test images. These updated labels exclusively enclosed the panel surfaces without including background areas. Figure 17 shows a comparison between the updated labels and the original dataset labels. The algorithm was then run on the DPV Ground dataset with the parameters shown in Table 4.

Figure 17.

Updated labels exclusively covering the panel surfaces (middle) alongside the original dataset labels (right). The corresponding PV images are shown on the left.

Table 4.

Parameters used for detecting PV panel surfaces.

The experiments revealed that smaller window sizes produce better detection results when only the panel surfaces are considered. Similarly, a higher threshold of can be used for clustering pixels displaying cPV colours, as the background need not be taken into account in this case. Figure 18 shows example results after two detection passes and subsequent denoising with a denoising window size of and a threshold of of the window area.

Figure 18.

Example results of PV panel surface detection.

The Recall and Precision metrics for the test images were calculated using both the updated and the original labels; the results are shown in Table 5. The PV/Label values represent the ratio between the area covered by the PV panel surfaces and the total label area. With the updated labels, a mean Recall of was achieved, demonstrating the algorithm’s effectiveness in detecting PV panel surfaces. Conversely, with the original labels, the mean Recall drops to . This is because the PV surface comprises only a fraction of the total labelled area, as indicated by the PV/Label values; indeed, the background accounts for more than of the original label area. The high Recall with the updated labels was obtained together with an average Precision of . Precision is higher with the original labels because most of the pixels surrounding the panel surfaces count as true positives under the dataset labels, even though they actually represent background.
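The effect of the label choice on both metrics follows directly from the set definitions. Let D be the set of detected pixels, S the updated (panel-surface) label, and L the original label, assuming that S ⊆ L; then ρ = |S|/|L| is the PV/Label ratio reported in Table 5.

```latex
\begin{aligned}
\mathrm{Recall}_{\mathrm{orig}} &= \frac{|D \cap L|}{|L|}
  = \frac{|D \cap S| + |D \cap (L \setminus S)|}{|L|}
  = \rho \, \mathrm{Recall}_{\mathrm{upd}} + \frac{|D \cap (L \setminus S)|}{|L|},
  \qquad \rho = \frac{|S|}{|L|}, \\
\mathrm{Precision}_{\mathrm{orig}} &= \frac{|D \cap L|}{|D|}
  \;\ge\; \frac{|D \cap S|}{|D|} = \mathrm{Precision}_{\mathrm{upd}}.
\end{aligned}
```

When detections stay on the panel surfaces, the second recall term is small and the original-label recall is effectively capped by ρ, whereas precision cannot decrease when the label is enlarged from S to L.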

Table 5.

Comparison of Recall and Precision scores between the updated and original labels.

5. Discussion

We evaluated the results of our approach using several metrics (see Table 3) and compared them with the results reported in [30]. The highest value for each metric is marked in bold. Our approach outperforms the previous approach in all metrics except precision, where the difference between the two values is only . The metric values vary across the sub-datasets, with recall showing the greatest variation. Specifically, recall is recorded as for Grassland and for SalineAlkali. This variation is due to colours shared by PV panels and their surroundings in Grassland. Because our approach filters out the colours shared between PV panels and surrounding areas, fewer PV pixels were identified for Grassland. In general, the ability to accurately identify a PV panel depends directly on how much the colours of the PV panels differ from those of the surrounding area.

We also applied the proposed approach to other images containing photovoltaic panels and evaluated the results in the Supplementary Materials; this evaluation showed that the extracted colour set is suitable for analysing new images.

Unlike most deep learning approaches, which identify PV panel areas, our approach identifies both PV panel areas and PV panel surfaces. The latter provide a more precise estimate of the actual size of PV panels than the former. This is achieved by using different detection window sizes (see Section 3.4.2): a window is recognised as containing panels when the density of marked pixels within it is sufficiently high. If a window contains only a low density of marked pixels, those pixels most likely indicate noise, i.e., surroundings that share colours with PV panels.

In addition to the labels provided in the original dataset, which only mark PV areas, we have provided a small subset of images with accurate labels for PV panels. These labels were introduced because in several images the original labels marked PV areas containing more than of background pixels relative to the total pixel count. While the detection approach described in [30] broadly identifies PV panel regions, these regions also encompass a substantial portion of background pixels, which considerably diminishes the ability to assess the detection of the actual PV panels. Table 5 reports the detection performance of our approach measured against both the updated and the original labels. While precision remains high for both sets of labels, recall varies considerably and is much higher for the updated labels. Our approach therefore achieves high sensitivity for PV panel surfaces, whereas the previous approach is useful only for delineating the broad PV panel region.

In contrast to CNN-based approaches, our method requires no training phase, which offers several advantages. First, it needs only a limited number of labelled images to extract the defining colours of PV panels, whereas ML and deep learning approaches need large labelled datasets for training. This training process can be time-consuming and error-prone, as discussed in Section 3.2.1, and imprecise labels can pose further challenges. Second, training deep learning models typically requires substantial computational power and storage capacity, as well as long execution times. In contrast, the execution time of our approach depends solely on the number of images to be analysed. Finally, alongside its shorter execution time, our approach delivers high performance, as shown in Table 3, comparable to if not better than that of CNN-based approaches. Moreover, the PV-characterising colours can be extracted once and then used to analyse many images of the same area. Table 6 shows the average per-image execution time of each phase of the proposed approach. The algorithm was tested on a PC with the following characteristics: (i) Ubuntu Linux with kernel version 6.2.0-36-generic; (ii) 2.0 GHz AMD Ryzen 5 2500U 4-core processor; (iii) AMD Radeon Vega 8 Graphics; (iv) 8 GB of 2400 MHz DDR4 RAM.

Table 6.

Average execution times per image.

The PV panel colour characterisation phase provides a set of colours that are used in the detection phase. However, the colours of PV panels can be influenced by several factors, including the panel model (e.g., the materials used), the intensity of incident light (which can either lighten or darken the panel), and the panel’s inclination and orientation. Accordingly, PV panels whose colours closely resemble the background, or that exhibit darker shades, are harder to detect. Detection is therefore more reliable for images with few very dark colours and in which the background and surrounding objects have different colours. Our approach can also make use of labels marking roads and related infrastructure to collect the characterising colours of these objects and compare them with the distinguishing colours of PV panels.

Thanks to the phase in which the PV-characterising colours are collected, our approach can be applied to datasets from various geographic regions. Because this colour set may vary between geographic areas, it may be appropriate to extract a fresh colour profile tuned to the characteristic colours of the area under analysis.

Finally, the aerial images analysed in this paper had a pixel resolution of metres per pixel. At lower resolutions, detection performance is more likely to degrade because object edges become coarser and the colours of adjacent pixels blend together.
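The blending effect can be illustrated by modelling a coarser resolution as block averaging, which is a simplifying assumption (real sensors and resampling filters behave differently). In the sketch below, a 2 × 2 block straddling a panel edge, with two hypothetical panel pixels and two hypothetical background pixels, collapses into a single colour that belongs to neither group and would therefore be unlikely to match a cPV set. The helper name `downsample` is illustrative.

```python
import numpy as np

def downsample(img, factor):
    """Average factor x factor pixel blocks: a crude model of a coarser ground resolution."""
    h, w, c = img.shape
    h, w = h - h % factor, w - w % factor  # drop incomplete edge blocks
    blocks = img[:h, :w].reshape(h // factor, factor, w // factor, factor, c)
    return blocks.mean(axis=(1, 3)).round().astype(np.uint8)

# Left column: hypothetical panel colour; right column: hypothetical background colour.
edge = np.array([[[30, 60, 120], [200, 180, 150]],
                 [[30, 60, 120], [200, 180, 150]]], dtype=np.uint8)
blended = downsample(edge, 2)
```

Here `blended[0, 0]` is (115, 120, 135), a colour that appears in neither the panel nor the background pixels.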

6. Conclusions

In this paper, we have presented an approach that accurately detects the parts of images showing photovoltaic panels. First, the proposed approach automatically classifies images according to the brightness and saturation of their colours and selects those with appropriate brightness and saturation levels. This step is automatic and does not require annotated images. Second, the colours characterising photovoltaic panels are gathered by comparing panel colours with the colours of the background and surroundings. This step uses a small number of annotated images to discover the panel colours. Third, photovoltaic panels are automatically detected in images using the obtained PV-characterising colours and their densities, without the need for any prior analysis or annotation. By comparing colour sets and quantifying colour densities within tiles, the proposed approach automatically reduces false positives and false negatives.

To validate the effectiveness of our approach, experiments were performed on an open-source dataset of aerial images containing photovoltaic panels. The results showed that the proposed approach can accurately and consistently identify photovoltaic panels, achieving high precision and recall. Furthermore, a comparison with a Convolutional Neural Network (CNN)-based approach showed that our approach achieves higher recall and accuracy. Notably, unlike many CNN methods, our approach can identify both the areas and the precise surfaces of PV panels, offering a dual identification capability.

Thanks to the initial classification phase, datasets covering different regions can be analysed successfully: for example, images captured at different times of day under particular lighting conditions can be detected and separated from the others, and a subset of the whole set can then be automatically selected for PV detection. Moreover, automatically extracting the distinctive pixel colours of the PV panel models specific to a given region provides tuning, flexibility, and versatility when applying our approach to images with widely varying panel and surrounding colours. Finally, our approach has a fast execution time, as no training phase is required, and it can work without a dataset of annotated images for a new region, as the gathered representative colours can be reused for other regions.

Supplementary Materials

Detection of solar panels was performed on three additional images; the description of the analysis and the results can be downloaded at: https://github.com/damarletta/detecting-pv-panels.

Author Contributions

Conceptualization, E.T.; methodology, D.M., A.M. and E.T.; software, D.M. and E.T.; validation, D.M., A.M. and E.T.; writing—original draft preparation, D.M. and A.M.; writing—review and editing, D.M., A.M. and E.T.; supervision, E.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The analysed image dataset was presented in [30]. Further data are contained within the article and supplementary materials.

Acknowledgments

The authors acknowledge the support of the University of Catania PIACERI 2020/22 Project “TEAMS” and project CN-HPC, Big Data and Quantum Computing, Spoke 2 Fundamental Research and Space Economy.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Gabbar, H.A.; Elsayed, Y.; Isham, M.; Elshora, A.; Siddique, A.B.; Esteves, O.L.A. Demonstration of Resilient Microgrid with Real-Time Co-Simulation and Programmable Loads. Technologies 2022, 10, 83.

2. Dorji, S.; Stonier, A.A.; Peter, G.; Kuppusamy, R.; Teekaraman, Y. An Extensive Critique on Smart Grid Technologies: Recent Advancements, Key Challenges, and Future Directions. Technologies 2023, 11, 81.

3. Slameršak, A.; Kallis, G.; O’Neill, D.W. Energy requirements and carbon emissions for a low-carbon energy transition. Nat. Commun. 2022, 13, 6932.

4. Bartie, N.; Cobos-Becerra, Y.; Fröhling, M.; Schlatmann, R.; Reuter, M. The resources, exergetic and environmental footprint of the silicon photovoltaic circular economy: Assessment and opportunities. Resour. Conserv. Recycl. 2021, 169, 105516.

5. Gómez-Uceda, F.J.; Varo-Martínez, M.; Ramírez-Faz, J.C.; López-Luque, R.; Fernández-Ahumada, L.M. Benchmarking Analysis of the Panorama of Grid-Connected PV Installations in Spain. Technologies 2022, 10, 131.

6. IRENA. Renewable Energy Statistics, 2023; IRENA: Abu Dhabi, United Arab Emirates, 2023.

7. Mao, H.; Chen, X.; Luo, Y.; Deng, J.; Tian, Z.; Yu, J.; Xiao, Y.; Fan, J. Advances and prospects on estimating solar photovoltaic installation capacity and potential based on satellite and aerial images. Renew. Sustain. Energy Rev. 2023, 179, 113276.

8. Golovko, V.; Bezobrazov, S.; Kroshchanka, A.; Sachenko, A.; Komar, M.; Karachka, A. Convolutional neural network based solar photovoltaic panel detection in satellite photos. In Proceedings of the IEEE International Conference on Intelligent Data Acquisition and Advanced Computing Systems: Technology and Applications (IDAACS), Bucharest, Romania, 21–23 September 2017; Volume 1, pp. 14–19.

9. Moraguez, M.; Trujillo, A.; de Weck, O.; Siddiqi, A. Convolutional Neural Network for Detection of Residential Photovoltalc Systems in Satellite Imagery. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Waikoloa, HI, USA, 26 September–2 October 2020; pp. 1600–1603.

10. Yu, J.; Wang, Z.; Majumdar, A.; Rajagopal, R. DeepSolar: A Machine Learning Framework to Efficiently Construct a Solar Deployment Database in the United States. Joule 2018, 2, 2605–2617.

11. Ioannou, K.; Myronidis, D. Automatic Detection of Photovoltaic Farms Using Satellite Imagery and Convolutional Neural Networks. Sustainability 2021, 13, 5323.

12. Golovko, V.; Kroshchanka, A.; Bezobrazov, S.; Sachenko, A.; Komar, M.; Novosad, O. Development of Solar Panels Detector. In Proceedings of the International Scientific-Practical Conference Problems of Infocommunications. Science and Technology (PIC S&T), Kharkiv, Ukraine, 9–12 October 2018; pp. 761–764.

13. Li, Q.; Feng, Y.; Leng, Y.; Chen, D. SolarFinder: Automatic Detection of Solar Photovoltaic Arrays. In Proceedings of the ACM/IEEE International Conference on Information Processing in Sensor Networks (IPSN), Sydney, Australia, 21–24 April 2020; pp. 193–204.

14. Moradi Sizkouhi, A.M.; Aghaei, M.; Esmailifar, S.M.; Mohammadi, M.R.; Grimaccia, F. Automatic Boundary Extraction of Large-Scale Photovoltaic Plants Using a Fully Convolutional Network on Aerial Imagery. IEEE J. Photovoltaics 2020, 10, 1061–1067.

15. Edun, A.S.; Perry, K.; Harley, J.B.; Deline, C. Unsupervised azimuth estimation of solar arrays in low-resolution satellite imagery through semantic segmentation and Hough transform. Appl. Energy 2021, 298, 117273.

16. Schulz, M.; Boughattas, B.; Wendel, F. DetEEktor: Mask R-CNN based neural network for energy plant identification on aerial photographs. Energy AI 2021, 5, 100069.

17. Liang, S.; Qi, F.; Ding, Y.; Cao, R.; Yang, Q.; Yan, W. Mask R-CNN based segmentation method for satellite imagery of photovoltaics generation systems. In Proceedings of the Chinese Control Conference (CCC), Shenyang, China, 27–29 July 2020; pp. 5343–5348.

18. Malof, J.M.; Bradbury, K.; Collins, L.M.; Newell, R.G. Automatic detection of solar photovoltaic arrays in high resolution aerial imagery. Appl. Energy 2016, 183, 229–240.

19. Xia, Z.; Li, Y.; Guo, X.; Chen, R. High-resolution mapping of water photovoltaic development in China through satellite imagery. Int. J. Appl. Earth Obs. Geoinf. 2022, 107, 102707.

20. Zhang, X.; Zeraatpisheh, M.; Rahman, M.M.; Wang, S.; Xu, M. Texture Is Important in Improving the Accuracy of Mapping Photovoltaic Power Plants: A Case Study of Ningxia Autonomous Region, China. Remote Sens. 2021, 13, 3909.

21. Malof, J.M.; Collins, L.M.; Bradbury, K.; Newell, R.G. A deep convolutional neural network and a random forest classifier for solar photovoltaic array detection in aerial imagery. In Proceedings of the IEEE International Conference on Renewable Energy Research and Applications (ICRERA), Birmingham, UK, 20–23 November 2016; pp. 650–654.

22. Czirjak, D.W. Detecting photovoltaic solar panels using hyperspectral imagery and estimating solar power production. J. Appl. Remote Sens. 2017, 11, 026007.

23. Ji, C.; Bachmann, M.; Esch, T.; Feilhauer, H.; Heiden, U.; Heldens, W.; Hueni, A.; Lakes, T.; Metz-Marconcini, A.; Schroedter-Homscheidt, M.; et al. Solar photovoltaic module detection using laboratory and airborne imaging spectroscopy data. Remote Sens. Environ. 2021, 266, 112692.

24. Lindahl, J.; Johansson, R.; Lingfors, D. Mapping of decentralised photovoltaic and solar thermal systems by remote sensing aerial imagery and deep machine learning for statistic generation. Energy AI 2023, 14, 100300.

25. Mayer, K.; Rausch, B.; Arlt, M.L.; Gust, G.; Wang, Z.; Neumann, D.; Rajagopal, R. 3D-PV-Locator: Large-scale detection of rooftop-mounted photovoltaic systems in 3D. Appl. Energy 2022, 310, 118469.

26. Veerle Plakman, J.R.; van Vliet, J. Solar park detection from publicly available satellite imagery. GIScience Remote Sens. 2022, 59, 462–481.

27. Parhar, P.; Sawasaki, R.; Todeschini, A.; Vahabi, H.; Nusaputra, N.; Vergara, F. HyperionSolarNet: Solar panel detection from aerial images. arXiv 2022, arXiv:2201.02107.

28. Spina, R.; Tramontana, E. An Image-Processing Approach for Computing the Size of Green Areas in Cities. In Proceedings of the 9th International Conference on Computer and Communications Management (ICCCM), Virtual, 16–18 July 2021; pp. 59–65.

29. Spina, R.; Tramontana, E. An automated classification system for urban areas matching the ‘city country fingers’ pattern: The cases of Kamakura (Japan) and Acireale (Italy) cities. J. Urban Ecol. 2021, 7, juab023.

30. Jiang, H.; Yao, L.; Lu, N.; Qin, J.; Liu, T.; Liu, Y.; Zhou, C. Multi-resolution dataset for photovoltaic panel segmentation from satellite and aerial imagery. Earth Syst. Sci. Data 2021, 13, 5389–5401.

31. Levkowitz, H.; Herman, G. GLHS: A Generalized Lightness, Hue, and Saturation Color Model. CVGIP Graph. Model. Image Process. 1993, 55, 271–285.

32. Saravanan, G.; Yamuna, G.; Nandhini, S. Real time implementation of RGB to HSV/HSI/HSL and its reverse color space models. In Proceedings of the International Conference on Communication and Signal Processing (ICCSP), Melmaruvathur, India, 6–8 April 2016; pp. 462–466.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).