1. Introduction

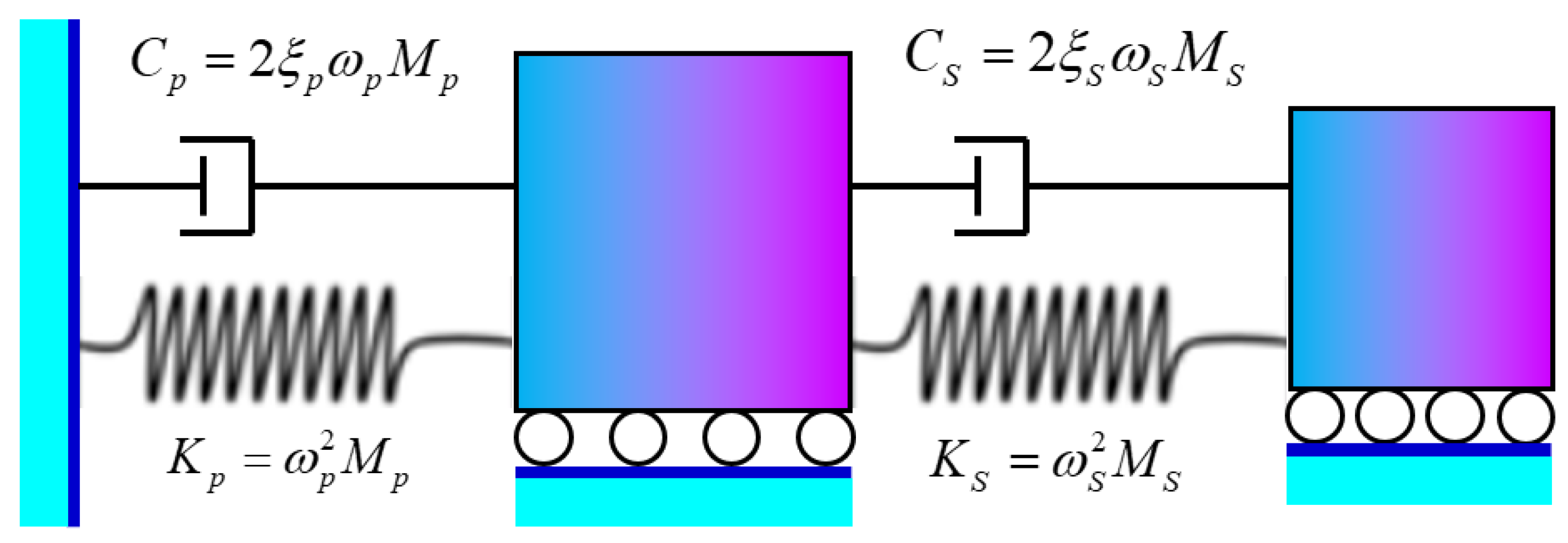

Structural reliability analysis of aeroengine components is one of the most critical challenges in aerospace engineering, directly affecting the flight safety, operational efficiency, and economic viability of modern aviation systems [1]. Quantifying the structural failure probability under complex working conditions is essential for ensuring structural reliability and safety. In the past few decades, many studies have addressed structural failure probability estimation [1,2,3]. As critical power components of aircraft, aeroengine structures such as turbine blisks are typically exposed to an uncertain environment of high temperature, severe pressure differentials and alternating loads, which makes accurate assessment of the fatigue failure probability challenging [4,5]. The structural failure probability is defined as follows [6]:

$$P_f = P\{g(\mathbf{x}) \le 0\} = \int_{g(\mathbf{x}) \le 0} f(\mathbf{x})\,\mathrm{d}\mathbf{x} \tag{1}$$

where x = [x1, x2, x3, …, xn] is the input vector of random variables, including material properties, load settings and fatigue parameters; g(·) is the structural limit state function (LSF), whose zero level set g(x) = 0 defines the failure surface and for which g(x) ≤ 0 indicates structural failure; and f(·) denotes the joint probability density function of the random variables x. However, the integral in Equation (1) is analytically intractable for structures under complex operating conditions. Therefore, surrogate-based numerical methods are needed to balance computational efficiency and estimation accuracy.

Traditional reliability analysis methods, including the first-order reliability method (FORM), second-order reliability method (SORM), etc., approximate the LSF by Taylor series expansion around the design of points (DoPs) [

7,

8]. Despite the computational efficiency advantages, FORM and SORM are limited to precision accuracy in high-dimensional nonlinear problems by low-order polynomial approximations. Moreover, the fatigue failure mechanisms of turbine blisks result in non-convex failure domains, which violate the assumptions of FORM/SORM [

9]. Monte Carlo Simulation (MCS), as one of the most significant numerical simulation methods, provides a gold standard for failure probability estimation by directly sampling the probability space [

10]. Based on the MCS, the estimation of failure probability is described as follows:

where

I[·] is the indicator function of

g(

x), which equals 1 in case of structural failure; otherwise, it is 0;

N is the total number of simulation samples; and

Nf is the theoretical minimum simulation number with a relative error

ε of

Pf, and it is as follows:

where

z is the normal distribution;

α/2 is the quantile of

z, and 1 −

α is used to define the confidence interval. However, prohibitively high computational demands in the theoretical random sampling process of MCS are needed, which exponentially increase with the precision of failure probability. The typical structural failure probability of aeroengines is usually less than 1 × 10

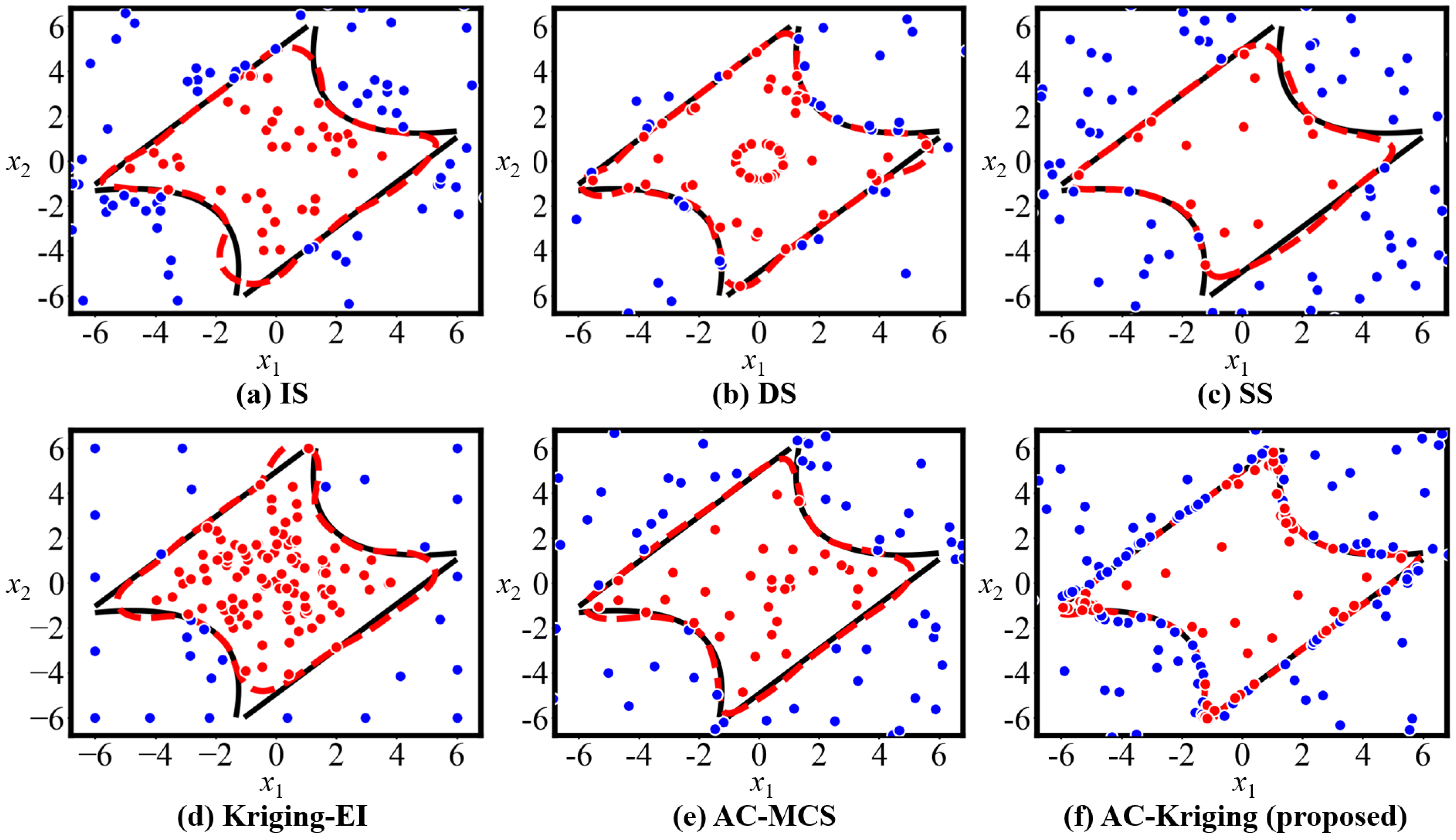

−5, which is needed for millions of high-fidelity finite element (FE) simulations in MCS, far beyond the acceptable range in practical projects. To address this issue, advanced reliability analysis methods, such as importance sampling (IS), directional sampling (DS) and subset simulation (SS), have been developed to estimate small probability of failure [

11,

12,

13]. However, IS faces challenges in accurately selecting the importance function and solving high-dimensional complex failure regions, and SS suffers from high computational cost in each subset and sensitivity to initial samples.
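To make the crude MCS estimator and the sample-size expression for Nf concrete, the following Python sketch evaluates both. The linear limit state g(x) = 3 − (x1 + x2)/√2 with two independent standard normal inputs, and the helper names `mcs_failure_probability`, `required_samples`, `g_lin` and `std_normal_2d`, are illustrative assumptions rather than part of the method; this toy problem has the exact failure probability Φ(−3) ≈ 1.35 × 10⁻³.

```python
import math
from statistics import NormalDist

import numpy as np


def mcs_failure_probability(g, sample, n, rng):
    """Crude MCS estimate: P_f = (1/N) * sum I[g(x_i) <= 0]."""
    x = sample(rng, n)        # (n, d) array of random input realizations
    fails = g(x) <= 0.0       # indicator I[g(x) <= 0] for every sample
    return fails.mean()


def required_samples(pf, eps, conf=0.95):
    """N_f = z_{alpha/2}^2 (1 - P_f) / (eps^2 P_f) for relative error eps at confidence conf."""
    z = NormalDist().inv_cdf(1.0 - (1.0 - conf) / 2.0)  # upper alpha/2 quantile
    return math.ceil(z**2 * (1.0 - pf) / (eps**2 * pf))


# Toy limit state (assumption): g(x) = 3 - (x1 + x2)/sqrt(2), x ~ N(0, I), exact P_f = Phi(-3)
def g_lin(x):
    return 3.0 - (x[:, 0] + x[:, 1]) / math.sqrt(2.0)


def std_normal_2d(rng, n):
    return rng.standard_normal((n, 2))


rng = np.random.default_rng(0)
pf_hat = mcs_failure_probability(g_lin, std_normal_2d, 1_000_000, rng)
pf_exact = NormalDist().cdf(-3.0)
print(pf_hat, pf_exact)
print(required_samples(1e-5, 0.05))
```

With Pf = 10⁻⁵, a 5% relative error and 95% confidence, `required_samples` returns roughly 1.5 × 10⁸, which illustrates why direct MCS with FE evaluations is impractical at aeroengine failure probability levels.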

To address the above-mentioned problems, machine learning (ML)-based surrogate modeling has been applied to fit complex implicit LSFs and calculate failure probabilities in structural reliability analysis, and it has become one of the current research hotspots [14,15,16,17]. Recent studies demonstrate extensive applications of surrogate models in structural reliability analysis, such as polynomial chaos expansions [18], support vector machines [19], artificial neural networks (ANNs) [20], and Kriging models [21,22]. Sun et al. developed a method using LIF, MCMC and MC to update the Kriging model and improve efficiency in structural reliability analysis [21]. Qian et al. proposed a time-variant reliability method for an industrial robot rotate vector reducer with multiple failure modes using a Kriging model [22]. Vazirizade et al. introduced an ANN-based method to reduce the computational effort required for reliability analysis and damage detection [23]. Yang et al. enhanced the accuracy of sparse polynomial chaos expansion through sequential sample point extraction and successfully applied it to slope reliability analysis [24]. Gaussian processes have been particularly well investigated for structural reliability analysis due to their statistical learning capabilities [25,26,27]. However, the selection of the DoPs used to train the surrogate model is critical: a lack of training samples near the LSF limits both the accuracy and the efficiency of the surrogate model. For complex structures such as aeroengines, it is difficult to construct an accurate model of the LSF from design points obtained by one-shot sampling. Therefore, how to optimize sampling and improve model accuracy near the LSF has become a key research issue in structural reliability analysis.

Recently, active sampling methods have attracted growing interest in structural reliability analysis because they improve both the accuracy of surrogate models and computational efficiency [20,28,29]. By actively selecting the samples that contribute most to the failure probability evaluation, these methods greatly reduce the computational cost. For example, Ling et al. [6] proposed a method combining adaptive Kriging with MCS to efficiently estimate the failure probability function; Wang et al. developed a new active learning method for estimating the failure probability based on a penalty learning function [30]; and Yuan et al. proposed an efficient reliability method for structural systems with multiple failure modes, developing a new learning function based on the system structure function to select added points from a system perspective [20]. These methods only require calling the true model once in each iteration, which significantly improves computational efficiency. However, active-learning-based modeling easily falls into local optima, because variance estimation bias near nonlinear failure domain boundaries produces redundant samples or leaves key regions unexplored. To address this problem, failure probability sensitivity-based sampling methods have been proposed [31,32]. Dang et al. proposed Bayesian active learning line sampling to reduce the epistemic uncertainty about the failure probability [33]. Moustapha et al. developed an active learning strategy designed to handle multiple failure modes with uneven contributions to failure [34]. Liu et al. integrated the classical active Kriging-MCS with adaptive linked importance sampling to establish a novel reliability analysis method for extremely small failure probabilities [35]. However, current active sampling approaches face two significant challenges that limit their effectiveness: (1) most existing techniques rely on fixed learning functions that can neither dynamically adjust their sampling strategies as the understanding of the problem evolves nor provide adaptive feedback for assessing sample quality; (2) the computational burden grows substantially with problem dimensionality, creating practical barriers to implementation in the high-dimensional engineering applications typical of complex structural systems.

Deep reinforcement learning (DRL), through its distinctive "state-action-reward" interaction framework, achieves autonomous optimization and iterative evolution of strategies, providing a new paradigm for active learning in structural reliability analysis. Recently, DRL has made significant breakthroughs in various fields, including AlphaGo [36], Deep Q-Networks (DQNs) [37] and large language models (such as ChatGPT-3 and DeepSeek-R1) [38,39]. Many studies on DRL-based structural reliability analysis have emerged; for instance, Xiang et al. proposed a DRL-based sampling method for structural reliability assessment [40]; Guan et al. developed DRL-based methods for high-accuracy searching of structural dominant failure modes and for self-play strategy searching [41,42]; Wei et al. introduced a general DRL framework for structural maintenance policy [43]; and Li et al. proposed an efficient optimization method for base isolation systems and shape memory alloy inverters using different DRL algorithms [44]. DRL-based reliability analysis methods have shown potential, but several challenges remain in the LSF modeling process: (1) the training of the deep neural networks does not converge easily; (2) the reward function settings still need improvement to select DoPs reasonably. In short, DRL is still at an exploratory stage in structural reliability analysis, although its potential has been demonstrated.

The critical gap in the current literature lies in the absence of a theoretically grounded, computationally efficient, and practically robust method that can adaptively optimize sampling strategies as the understanding of the limit state function evolves, provide theoretical convergence guarantees for failure probability estimation accuracy, scale effectively to the high-dimensional problems typical in aerospace engineering, balance multiple objectives including accuracy, efficiency, and computational budget constraints, and integrate seamlessly with established surrogate modeling frameworks. To address these issues, an Actor–Critic network-enhanced Kriging method (AC-Kriging) is proposed to obtain valuable DoPs through DRL-based active searching and to establish a surrogate LSF for structural reliability evaluation. Specifically, AC-Kriging is applied in the following steps: (1) an initial Kriging model is constructed from DoPs generated by Latin hypercube sampling (LHS) to approximate the structural LSF; (2) the Actor network identifies candidate points within the DoPs, while the Critic network evaluates their potential contributions through global-local reward functions based on the Kriging model's prediction errors, thereby selecting the next optimal sampling point; (3) the accuracy of the Kriging model is evaluated iteratively, and the convergence of the reliability analysis is monitored until the dual convergence criteria (model precision and algorithmic stability) are satisfied. The AC-Kriging method aims to provide an efficient and accurate structural reliability analysis framework through the seamless integration of Kriging modeling and Actor–Critic reinforcement learning networks.

The primary contributions of this paper to the field of structural reliability analysis are as follows:

- (1)

This study introduces a comprehensive framework that combines Actor–Critic reinforcement learning with Kriging surrogate modeling for structural reliability analysis, enabling dynamic and intelligent sample selection that adapts to the evolving understanding of limit state boundaries throughout the analysis process.

- (2)

The proposed continuous state–action representation effectively addresses the curse of dimensionality that affects traditional active sampling methods, achieving improved computational scaling with problem size and making it more suitable for complex aerospace engineering applications.

- (3)

This research develops dual convergence criteria that monitor both Kriging model precision and algorithmic stability, providing a systematic approach for determining when sufficient sampling accuracy has been achieved in the reliability analysis process.

- (4)

The AC-Kriging method optimizes multiple competing objectives through its reward function design, addressing the real-world constraints faced by aerospace engineers while providing a theoretical foundation for integrating deep reinforcement learning principles with established surrogate modeling frameworks.

The remainder of this paper is structured as follows:

Section 2 introduces the theoretical basis of active sampling based on AC networks,

Section 3 elaborates on the proposed AC-Kriging method,

Section 4 verifies the accuracy and efficiency of the method through theoretical examples and an engineering aeroengine structural reliability analysis, and

Section 5 provides the conclusions.

2. Deep Reinforcement Learning

DRL is a hybrid algorithmic framework integrating deep learning with reinforcement learning principles to enable autonomous decision-making in high-dimensional state spaces [45]. It excels at solving sequential decision problems through the trial-and-error interaction of an agent with a complex environment. Following the learned strategy, the agent outputs continuous actions that progressively achieve the final objective. To evaluate the agent's actions, a reward calculated by a specific reward function is assigned to each action. DRL aims to maximize the cumulative reward, which combines the reward of each action with a final reward upon task completion, along with a penalty for undesired actions. Unlike supervised learning, a DRL model is trained on interaction data with rewards rather than on labeled data. In addition, the agent is represented by a deep neural network trained over multiple episodes, each containing numerous steps.

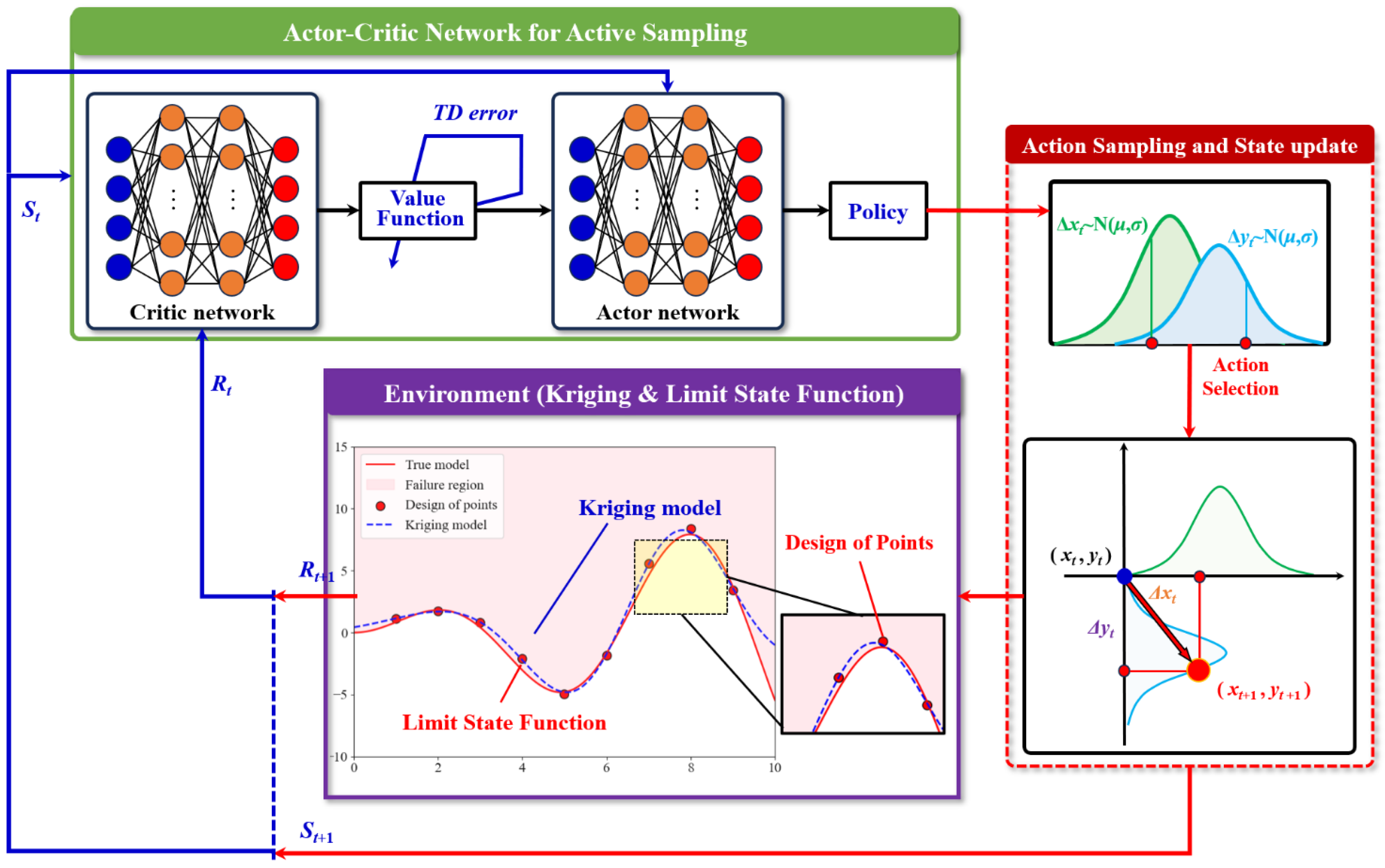

As illustrated in Figure 1, the training process of DRL is governed by a Markov decision process (MDP), which provides the basic mathematical framework for solving decision-making problems with uncertainty and long-term cumulative rewards [46]. An MDP usually consists of five elements (S, A, R, P, γ), in which S is the set of possible states of the agent in the environment; A is the set of actions of the agent; R is the reward obtained by the agent after taking an action a ∈ A in a state s ∈ S; P is the state transition probability function, which determines the next state S′ given the state S = s and the action A = a; and γ is the discount rate, which determines the importance of future rewards.

In reinforcement learning, at time step t, st represents the state of the agent, and at represents the action taken by the agent in state st. According to the reward function r(s, a), the agent receives the reward rt and reaches the next state st+1. The trajectory of the agent in one game is recorded as follows:

$$s_1, a_1, r_1, s_2, a_2, r_2, \ldots, s_n, a_n, r_n$$

where n is the number of steps in one game, and St, At and Rt are the t-th state, action and reward of the agent, respectively. In addition, the state transitions are assumed to have the Markov property, namely:

$$P\left(S_{t+1} \mid S_t, A_t\right) = P\left(S_{t+1} \mid S_1, A_1, S_2, A_2, \ldots, S_t, A_t\right)$$

The action taken by the agent is determined by the policy function π(a|s), which gives the probability of the agent taking action a in state s: π(a|s) = P[A = a | S = s]. The agent is trained to maximize the expectation of the cumulative discounted return Ut, which is defined as follows:

$$U_t = \sum_{i=t}^{T} \gamma^{\,i-t}\, r_i$$

where T is the last step of each episode, and ri is the reward obtained by the agent at time step i. Ut depends on future actions and states and is therefore inherently random.
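The cumulative discounted return Ut can be computed for every step of an episode in one backward pass, using the recursion Ut = rt + γUt+1 that follows directly from its definition. A minimal Python sketch (the function name `discounted_returns` is illustrative):

```python
def discounted_returns(rewards, gamma):
    """Return [U_1, ..., U_T] with U_t = sum_{i >= t} gamma^(i - t) * r_i."""
    returns = [0.0] * len(rewards)
    running = 0.0
    # Sweep backwards with U_t = r_t + gamma * U_{t+1} (U_{T+1} = 0)
    for t in range(len(rewards) - 1, -1, -1):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


print(discounted_returns([1.0, 1.0, 1.0], 0.5))  # [1.75, 1.5, 1.0]
```

The backward sweep costs O(T) per episode, whereas evaluating each sum separately would cost O(T²).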

Taking the conditional expectation of Ut yields the action-value function Qπ:

$$Q_\pi(s_t, a_t) = \mathbb{E}\left[U_t \mid S_t = s_t, A_t = a_t\right]$$

where st and at are the observed values of St and At, respectively. Qπ depends on the t-th state st and action at, but not on the state st+1 and action at+1 of step t + 1, because these random variables are eliminated by the expectation. To eliminate the influence of the policy π, the optimal action-value function Q* is defined as follows:

$$Q^{*}(s_t, a_t) = \max_{\pi} Q_\pi(s_t, a_t)$$

where the best policy function is selected as follows:

$$\pi^{*} = \underset{\pi}{\arg\max}\; Q_\pi(s_t, a_t)$$

which shows that Q* depends only on st and at.

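To quantify the chances of winning the game, the state-value function is defined as follows:

$$V_\pi(s_t) = \mathbb{E}_{A_t \sim \pi(\cdot \mid s_t)}\left[Q_\pi(s_t, A_t)\right]$$

where At is eliminated as a random variable. Equivalently,

$$V_\pi(s_t) = \mathbb{E}_{A_t, S_{t+1}, A_{t+1}, \ldots, S_n, A_n}\left[U_t \mid S_t = s_t\right]$$

where the expectation eliminates the dependence of Ut on the random variables At, St+1, At+1, …, Sn, An. The greater the state value, the higher the expected return. The state value can be used to evaluate the quality of the policy π and of the state st.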
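For a finite action set, the expectation that defines the state value reduces to a probability-weighted average of the action values. The sketch below assumes two actions with hypothetical values Qπ(s, a) = 1 and 3 under a given policy; the name `state_value` is illustrative.

```python
def state_value(policy_probs, q_values):
    """V_pi(s) = sum_a pi(a|s) * Q_pi(s, a) for a finite action set."""
    if abs(sum(policy_probs) - 1.0) > 1e-9:
        raise ValueError("policy probabilities must sum to 1")
    return sum(p * q for p, q in zip(policy_probs, q_values))


q = [1.0, 3.0]                      # hypothetical Q_pi(s, a) for two actions
print(state_value([0.5, 0.5], q))   # 2.0
```

A policy that puts more probability on the higher-valued action yields a larger state value, which is why V can be used to compare policies at a given state.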

Reinforcement learning aims to learn a policy function π(a|s) to maximize cumulative discounted rewards. Because directly computing the action-value function Qπ(s,a) and state-value function Vπ(s) is often infeasible, DRL employs a deep neural network (DNN) to approximate these functions. For instance, DQNs use neural networks to estimate Qπ(s,a), policy gradient methods directly optimize the policy function parameters, and Actor–Critic methods combine both by using neural networks to approximate both the policy and value functions.

3. Proposed AC-Kriging Method

In this study, the AC-Kriging method is proposed for efficient structural reliability analysis. It integrates the Actor–Critic reinforcement learning framework with the Kriging model to optimize the selection of experimental sampling points, aiming to accurately approximate the limit state surface while reducing computational cost. As illustrated in Figure 2, the AC-Kriging method maps reliability analysis onto the reinforcement learning paradigm: the sampling space represents the environment state, the deep neural network serves as the agent, and the selection of experimental points corresponds to actions.

Step 1: The Kriging model provides essential information about the current approximation of the limit state function.

Step 2: The AC-Kriging method consists of two key networks: (1) an Actor network that determines the optimal location for the next experimental point based on current information, and (2) a Critic network that evaluates the expected contribution of selected points to reliability assessment accuracy. Both networks share initial convolutional layers that extract relevant features from the sampling domain representation, and these features are subsequently processed through fully connected layers to generate either sampling decisions or value estimations.

Step 3: The method operates iteratively, with each cycle involving the extraction of state information from the current Kriging model, selection of a new experimental point, evaluation of the true limit state function at this point, updating of the Kriging surrogate model, and calculation of rewards to refine the neural network parameters.

Through this process, the AC-Kriging method overcomes the limitations of traditional sampling methods by adaptively learning optimal sampling strategies for various structural reliability problems.

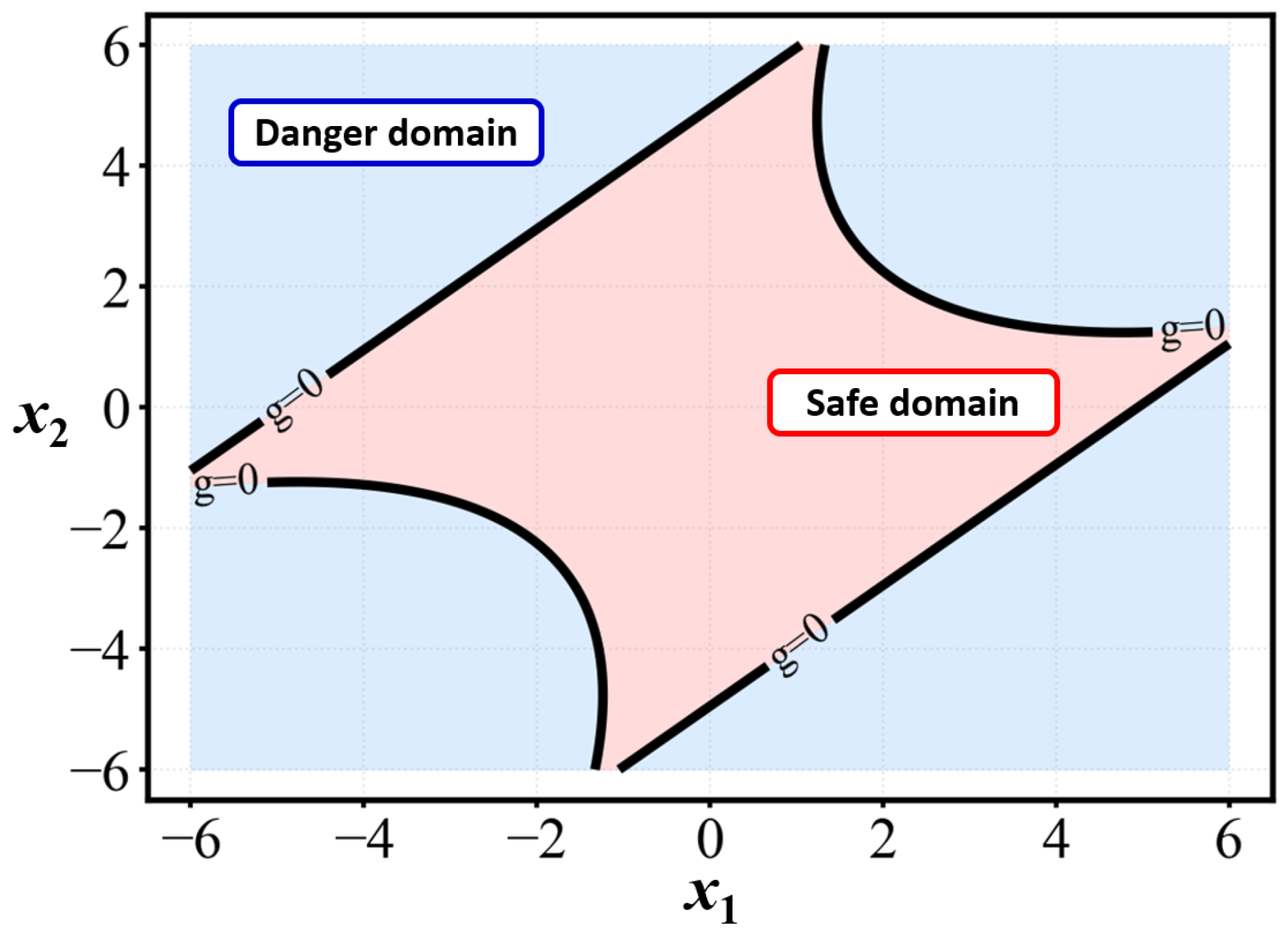

3.1. Environment and State Definition

To transform structural reliability analysis into a reinforcement learning problem, the environment is represented as the n-dimensional design space x ∈ Rn, where the random variables follow their respective probability distributions. The limit state function (LSF) g(x) partitions this space into the failure domain (g(x) ≤ 0) and the safe domain (g(x) > 0).

3.1.1. State Space Design

In traditional reinforcement learning frameworks, state representations often rely on discretized sampling spaces, which can lead to excessive computational complexity in high-dimensional problems. To overcome this limitation, the AC-Kriging method adopts a continuous state representation that efficiently captures the essential information needed for reliability analysis. The state space is defined as an n-dimensional continuous space, where each state s ∈ Rn represents the spatial coordinates of the agent. Specifically, s = [x1, x2, …, xn], with xi denoting the agent's position along the i-th axis.

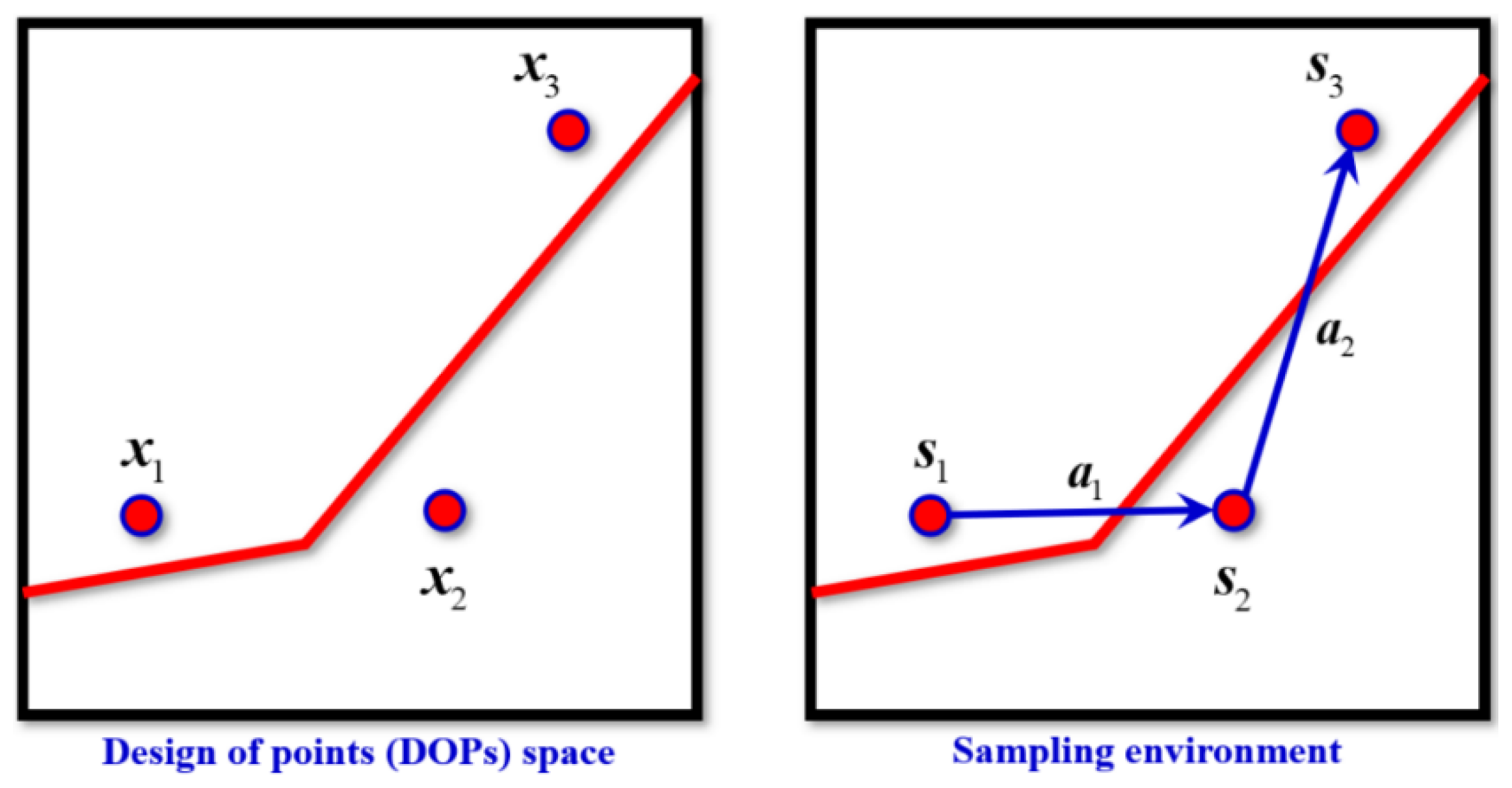

3.1.2. Action Space Design

The action space is a continuous space in Rn, corresponding to the displacement vector the agent can execute. Formally, an action is a = [Δx1, Δx2, …, Δxn], where Δxi ∈ [−1, 1], as shown in Figure 3. This range allows flexible movement in any direction within the environment while bounding the scale of displacement, so that the agent can make both fine and coarse adjustments to its position as dictated by the boundary exploration strategy.
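A minimal sketch of one agent move, assuming a box-shaped design space described by hypothetical `lower` and `upper` bounds: the action is clipped to [−1, 1] in every coordinate, and the resulting position is clipped back into the design space, matching the update xk+1 = xk + ak with boundary constraints. The function name `apply_action` is illustrative.

```python
import numpy as np


def apply_action(x, action, lower, upper):
    """Return x + a with a clipped to [-1, 1]^n and the result clipped to [lower, upper]."""
    a = np.clip(np.asarray(action, dtype=float), -1.0, 1.0)  # enforce Delta x_i in [-1, 1]
    x_new = np.asarray(x, dtype=float) + a                    # displacement step x_{k+1} = x_k + a_k
    return np.clip(x_new, lower, upper)                       # keep the point inside the design space


x_next = apply_action([0.5, -2.5], [2.0, -0.8], lower=[-3.0, -3.0], upper=[3.0, 3.0])
```

Here the first component is limited to a unit step and the second is stopped at the lower bound, so `x_next` is [1.5, −3.0].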

3.1.3. Reward Function Design

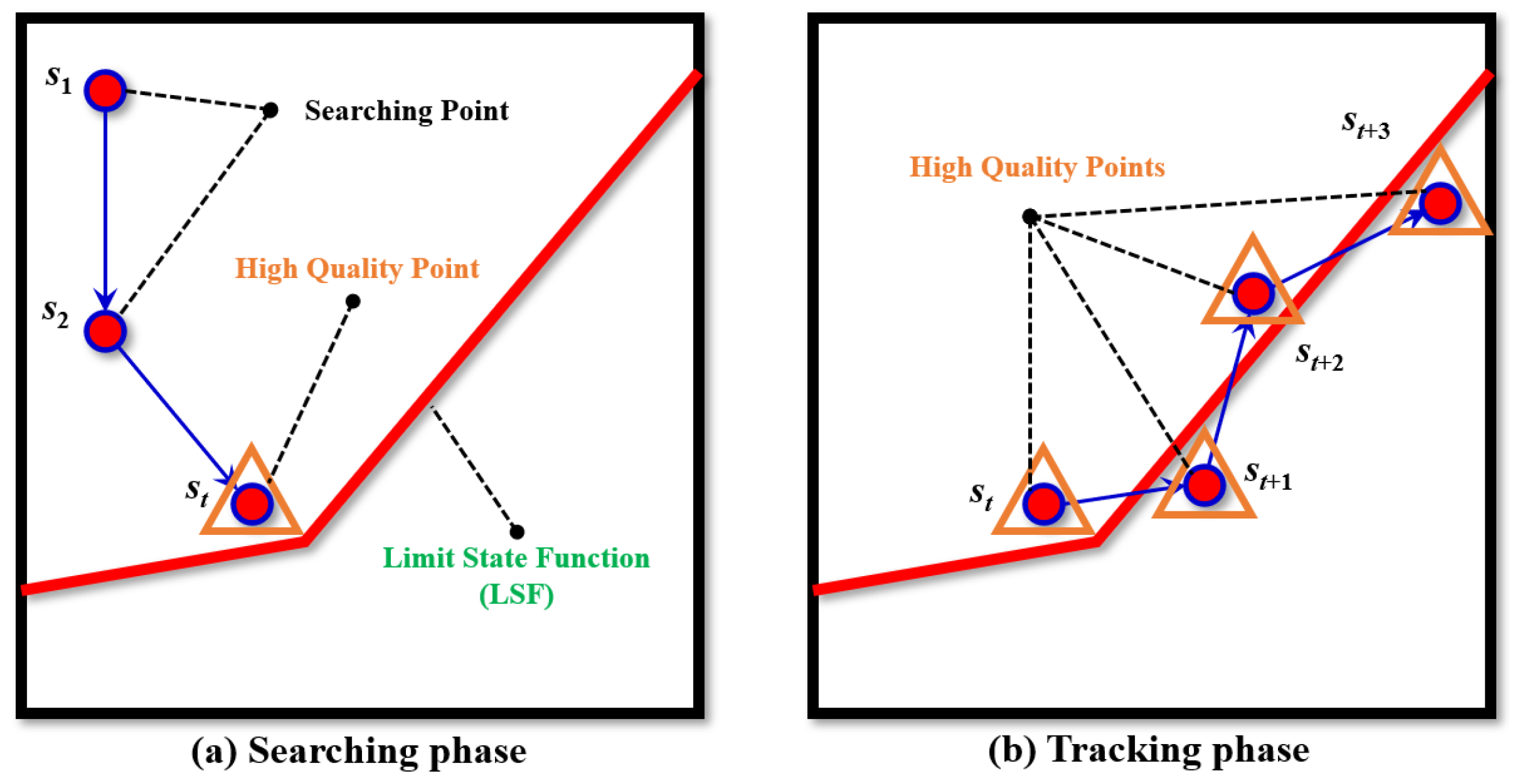

As shown in Figure 4, the AC-Kriging sampling process consists of two phases: in the searching phase (a), the agent starts from random positions to locate the LSF and identify high-quality (HQ) points; in the tracking phase (b), the agent starts from a discovered HQ point and systematically extracts further sampling points along the LSF, achieving efficient boundary following for structural reliability analysis.

At step t, the reward function is a composite objective designed to guide the agent's learning process through a multi-faceted incentive structure, formulated mathematically as follows:

where the coefficients a1, a2, a3 and a4 are weighting factors that balance the influence of each reward component; they are determined through empirical tuning to optimize the agent's performance in the boundary exploration task.

The reward function comprises four distinct components, each addressing a specific aspect of the boundary-following behavior, which are defined as follows:

- (1)

The edge proximity reward is defined as follows:

which decays exponentially with the agent’s distance from the boundary, encouraging the agent to remain near the boundary. This exponential decay ensures that the reward is highest when the agent is precisely on the boundary and diminishes rapidly as the agent moves away.

- (2)

To promote progress along the boundary, the movement reward is defined as follows:

which rewards the agent for making progress along the boundary by comparing the current boundary proximity g(s) with the previous value glast. This component is activated only when the agent remains sufficiently close to the boundary, encouraging consistent boundary traversal rather than random movements.

- (3)

Exploration of uncharted regions is incentivized through the exploration reward, which is defined as follows:

if the distance from s to the nearest boundary point in the shared boundary database exceeds 0.3; otherwise, it is 0. This mechanism encourages the agent to explore uncharted regions of the boundary, enhancing the completeness of the boundary mapping process and preventing the agent from repeatedly traversing already mapped sections.

- (4)

The keep reward is defined as follows:

which incentivizes the agent to maintain a consistent distance from the boundary, promoting stable boundary-following behavior. This component provides a graduated reward that is maximized when the agent maintains an optimal distance from the boundary, thereby keeping the agent close to the boundary.

This meticulously designed reward function serves as the cornerstone for the reinforcement learning framework, guiding the agent to efficiently map the boundary while balancing exploration and exploitation, and ensuring adherence to the environmental constraints.
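The composite reward can be sketched in Python as follows. Only the four-component structure, the weights a1 to a4, the conditions described in items (1) to (4), and the 0.3 exploration radius come from the text; the weighted-sum combination, the use of |g(s)| as a proxy for the distance to the boundary, and every other functional form and constant (`k`, `near_tol`, `keep_target`, `keep_width`, the unit exploration bonus) are hypothetical choices made for illustration, not the authors' formulas.

```python
import numpy as np


def composite_reward(g_s, g_last, s, boundary_db, weights=(1.0, 1.0, 1.0, 1.0),
                     k=5.0, near_tol=0.1, explore_radius=0.3,
                     keep_target=0.05, keep_width=0.1):
    """Hypothetical r_t = a1*r_edge + a2*r_move + a3*r_explore + a4*r_keep.

    |g(s)| is used as a proxy for the distance to the limit state g(x) = 0 (assumption).
    """
    a1, a2, a3, a4 = weights
    dist = abs(g_s)
    # (1) edge proximity: decays exponentially with distance from the boundary
    r_edge = np.exp(-k * dist)
    # (2) movement: compares |g(s)| with |g_last|, active only near the boundary
    r_move = (abs(g_last) - dist) if dist < near_tol else 0.0
    # (3) exploration: bonus if s is farther than 0.3 from every stored boundary point
    if len(boundary_db) == 0:
        nearest = np.inf
    else:
        diffs = np.asarray(boundary_db, dtype=float) - np.asarray(s, dtype=float)
        nearest = np.min(np.linalg.norm(diffs, axis=1))
    r_explore = 1.0 if nearest > explore_radius else 0.0
    # (4) keep: graduated reward peaking at an assumed target distance keep_target
    r_keep = max(0.0, 1.0 - abs(dist - keep_target) / keep_width)
    return a1 * r_edge + a2 * r_move + a3 * r_explore + a4 * r_keep


r_on = composite_reward(0.0, 0.05, [0.0, 0.0], [])                # agent on the boundary, new region
r_far = composite_reward(2.0, 2.1, [0.0, 0.0], [[0.1, 0.0]])      # far from boundary, mapped region
```

An agent sitting on the boundary in an unmapped region collects all four terms, whereas an agent far from the boundary near an already mapped point receives almost nothing, which is the qualitative behavior the four components are meant to produce.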

3.2. AC-Kriging-Based Active Sampling Method

The AC-Kriging method integrates the Actor–Critic network architecture with Kriging surrogate modeling to achieve efficient and accurate structural reliability analysis. This section details the key components of this integrated approach, focusing on the network structure and the adaptive sampling strategy.

3.2.1. Kriging Model

The Kriging model is a widely used metamodel in structural engineering simulation and design optimization. Its construction process is as follows:

Given a sample set x = {x1, x2, …, xm}, xi ∈ Rn, and the corresponding response values g = [g(x1), g(x2), …, g(xm)], the Kriging model is expressed as follows:

$$g(\mathbf{x}) = \mathbf{f}(\mathbf{x})^{T}\boldsymbol{\beta} + z(\mathbf{x})$$

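where f(x) is an n × 1 constant vector of ones, β is the regression coefficient vector, and z(x) represents a Gaussian process with a mean of 0 and a variance of σ². The covariance is defined as follows:

$$\operatorname{Cov}\left[z(\mathbf{x}_i), z(\mathbf{x}_j)\right] = \sigma^{2} R(\boldsymbol{\alpha}, \mathbf{x}_i, \mathbf{x}_j)$$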

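where R(α, xi, xj) indicates the correlation function between sample points xi and xj. A Gaussian function is employed as the correlation function in this study:

$$R(\boldsymbol{\alpha}, \mathbf{x}_i, \mathbf{x}_j) = \exp\left[-\sum_{k=1}^{n} \alpha_k \left(x_{i,k} - x_{j,k}\right)^{2}\right]$$

where α (n × 1) is the correlation parameter vector. According to the maximum likelihood method, α can be obtained by solving the following optimization problem:

$$\boldsymbol{\alpha}^{*} = \arg\min_{\boldsymbol{\alpha} > 0}\; |\mathbf{R}|^{1/m}\,\hat{\sigma}^{2}$$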

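where R = [Rij]m×m (Rij = R(α, xi, xj)) is the correlation matrix. The estimators of σ² and β, namely, σ̂² and β̂, are defined as follows:

$$\hat{\boldsymbol{\beta}} = \left(\mathbf{F}^{T}\mathbf{R}^{-1}\mathbf{F}\right)^{-1}\mathbf{F}^{T}\mathbf{R}^{-1}\mathbf{g}, \qquad \hat{\sigma}^{2} = \frac{1}{m}\left(\mathbf{g} - \mathbf{F}\hat{\boldsymbol{\beta}}\right)^{T}\mathbf{R}^{-1}\left(\mathbf{g} - \mathbf{F}\hat{\boldsymbol{\beta}}\right)$$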

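where F = [Fij]m×n (Fij = fj(xi)) is the regression matrix. Consequently, the predictor and the prediction variance at an unknown point x are defined as follows:

$$\hat{g}(\mathbf{x}) = \mathbf{f}(\mathbf{x})^{T}\hat{\boldsymbol{\beta}} + \mathbf{r}(\mathbf{x})^{T}\mathbf{R}^{-1}\left(\mathbf{g} - \mathbf{F}\hat{\boldsymbol{\beta}}\right)$$

$$\sigma_{\hat{g}}^{2}(\mathbf{x}) = \hat{\sigma}^{2}\left[1 + \mathbf{u}(\mathbf{x})^{T}\left(\mathbf{F}^{T}\mathbf{R}^{-1}\mathbf{F}\right)^{-1}\mathbf{u}(\mathbf{x}) - \mathbf{r}(\mathbf{x})^{T}\mathbf{R}^{-1}\mathbf{r}(\mathbf{x})\right], \qquad \mathbf{u}(\mathbf{x}) = \mathbf{F}^{T}\mathbf{R}^{-1}\mathbf{r}(\mathbf{x}) - \mathbf{f}(\mathbf{x})$$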

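where r(x) is an m-dimensional vector with entries ri = R[z(xi), z(x)], defined as follows:

$$\mathbf{r}(\mathbf{x}) = \left[R(\boldsymbol{\alpha}, \mathbf{x}_1, \mathbf{x}), R(\boldsymbol{\alpha}, \mathbf{x}_2, \mathbf{x}), \ldots, R(\boldsymbol{\alpha}, \mathbf{x}_m, \mathbf{x})\right]^{T}$$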

The vector of the m observed function values is as follows:

$$\mathbf{g} = \left[g(\mathbf{x}_1), g(\mathbf{x}_2), \ldots, g(\mathbf{x}_m)\right]^{T}$$
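The Kriging relations in this subsection can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: it uses an ordinary Kriging trend f(x) = 1, the Gaussian correlation function, a small diagonal `nugget` for numerical stability, and a coarse grid search over α in place of a full maximum likelihood optimizer. The names `gaussian_corr`, `OrdinaryKriging` and `mle_objective` are hypothetical.

```python
import numpy as np


def gaussian_corr(A, B, alpha):
    """R(alpha, a, b) = exp(-sum_k alpha_k (a_k - b_k)^2) for all row pairs of A and B."""
    d2 = (A[:, None, :] - B[None, :, :]) ** 2
    return np.exp(-(d2 * alpha).sum(axis=-1))


class OrdinaryKriging:
    """Kriging with constant trend f(x) = 1 (assumption) and a fixed alpha."""

    def __init__(self, alpha, nugget=1e-10):
        self.alpha = np.asarray(alpha, dtype=float)
        self.nugget = nugget  # diagonal jitter for numerical stability (assumption)

    def fit(self, X, g):
        self.X = np.asarray(X, dtype=float)
        g = np.asarray(g, dtype=float)
        m = len(g)
        R = gaussian_corr(self.X, self.X, self.alpha) + self.nugget * np.eye(m)
        self.Ri = np.linalg.inv(R)                   # explicit inverse for clarity; Cholesky is preferable
        F = np.ones(m)                               # regression matrix: a column of ones
        self.FtRiF = F @ self.Ri @ F                 # F^T R^-1 F (a scalar here)
        self.beta = (F @ self.Ri @ g) / self.FtRiF   # beta_hat
        resid = g - F * self.beta
        self.sigma2 = resid @ self.Ri @ resid / m    # sigma_hat^2
        self.gamma = self.Ri @ resid                 # R^-1 (g - F beta_hat)
        self.logdetR = np.linalg.slogdet(R)[1]
        return self

    def mle_objective(self):
        """log(|R|^(1/m) * sigma_hat^2), the quantity minimised over alpha."""
        return self.logdetR / len(self.gamma) + np.log(self.sigma2)

    def predict(self, x):
        """Return the predictor g_hat(x) and prediction variance sigma^2_g_hat(x)."""
        x = np.atleast_2d(np.asarray(x, dtype=float))
        r = gaussian_corr(x, self.X, self.alpha)     # rows are r(x)^T, shape (q, m)
        mean = self.beta + r @ self.gamma
        Rir = r @ self.Ri                            # rows are (R^-1 r(x))^T
        u = Rir.sum(axis=1) - 1.0                    # u(x) = F^T R^-1 r(x) - f(x)
        var = self.sigma2 * (1.0 + u**2 / self.FtRiF - (Rir * r).sum(axis=1))
        return mean, np.maximum(var, 0.0)


X = np.linspace(0.0, 1.0, 6)[:, None]
g = np.sin(2.0 * np.pi * X[:, 0])
best_alpha = min([5.0, 10.0, 50.0], key=lambda a: OrdinaryKriging([a]).fit(X, g).mle_objective())
model = OrdinaryKriging([10.0]).fit(X, g)
mu, var = model.predict([[0.5], [0.55]])
```

The predictor interpolates the training responses and its variance vanishes at the training points, which is the property that lets an active sampling scheme use σ²ĝ(x) to judge where the surrogate is still uncertain.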

3.2.2. Actor–Critic Network

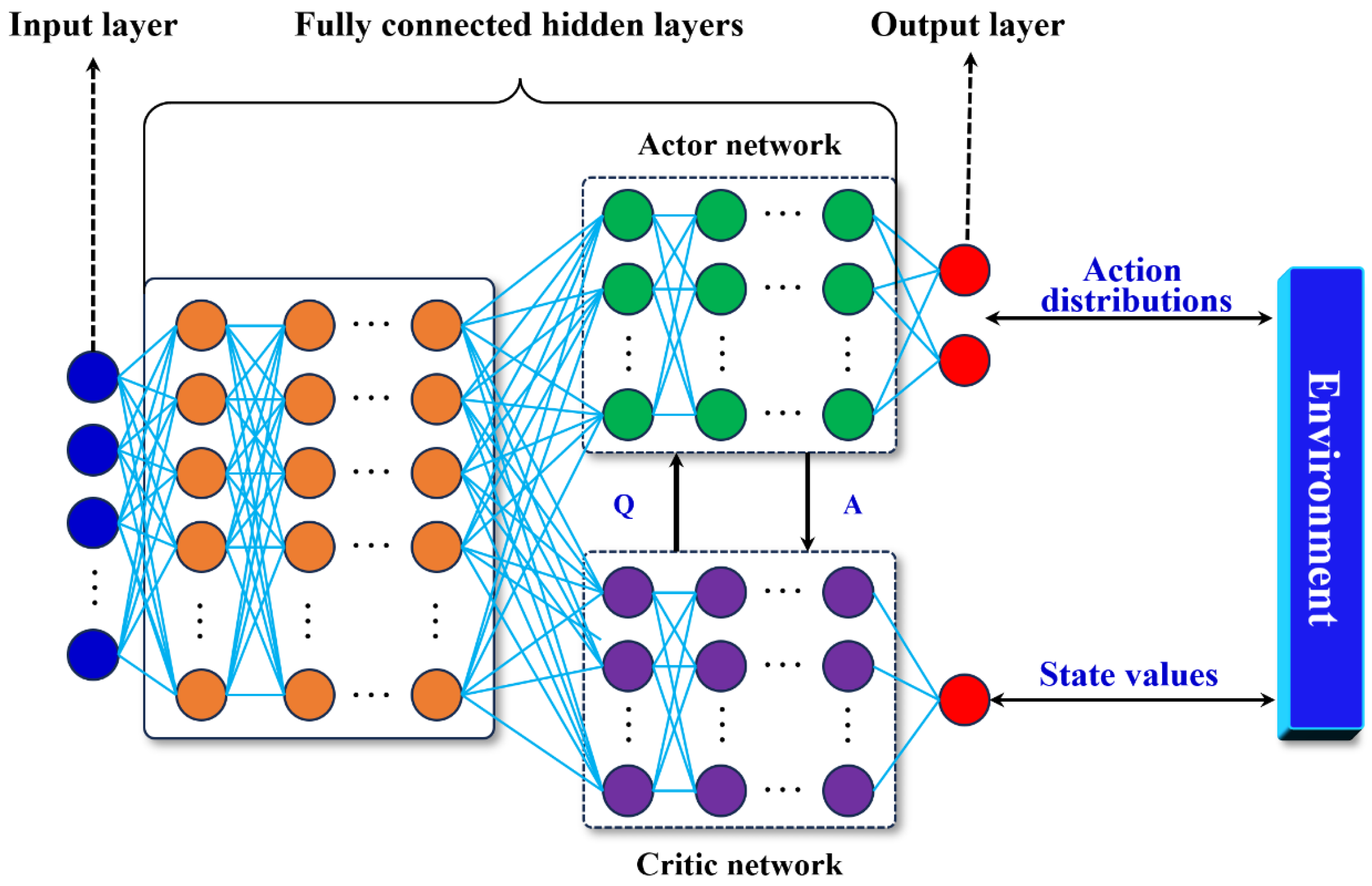

As shown in Figure 5, the Actor–Critic network is a reinforcement learning architecture that combines policy gradient and value function estimation, in which (1) the Actor network uses a neural network π(a|s; θ) to approximate π(a|s), where θ denotes the trainable parameters of the network, aiming to learn an optimal policy that maximizes the expected cumulative reward; and (2) the Critic network uses a neural network q(s, a; ω) to approximate Qπ(s, a), where ω denotes the trainable parameters of the network, aiming to compare the expected return of the selected actions with the actual rewards received and to provide feedback to the Actor for policy improvement.

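The policy network is trained with the approximate policy gradient g(a, θ), which is used to update the parameter θ. A stochastic estimate of the policy gradient, obtained by sampling a ∼ π(·|s; θ), is as follows:

$$\mathbf{g}(a, \boldsymbol{\theta}) = q(s, a; \boldsymbol{\omega})\,\nabla_{\boldsymbol{\theta}} \ln \pi(a \mid s; \boldsymbol{\theta})$$

where q(s, a; ω) is the approximation of the action-value function Qπ(s, a). Then, the parameter θ of the policy network is updated by gradient ascent:

$$\boldsymbol{\theta} \leftarrow \boldsymbol{\theta} + \rho\,\mathbf{g}(a, \boldsymbol{\theta})$$

where ρ is the learning rate of the policy network.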

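With this update, the Actor tends to select actions that receive higher Critic scores, so the quality of the learned policy depends on the evaluation ability of the Critic network. The state-value function Vπ(s) can be approximated as follows:

$$V_\pi(s) \approx v(s; \boldsymbol{\theta}) = \mathbb{E}_{A \sim \pi(\cdot \mid s; \boldsymbol{\theta})}\left[q(s, A; \boldsymbol{\omega})\right]$$

where v(s; θ) is the mean Critic score of the actions drawn from the current policy.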

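At step t, the output of the value network is as follows:

$$\hat{q}_t = q(s_t, a_t; \boldsymbol{\omega})$$

which is an estimate of the action-value function Qπ(st, at). At step t + 1, the temporal-difference (TD) target is calculated from the observed rt, st+1 and at+1 as follows:

$$\hat{y}_t = r_t + \gamma\, q(s_{t+1}, a_{t+1}; \boldsymbol{\omega})$$

which is also an estimate of Qπ(st, at). However, the latter estimate is closer to the truth because it incorporates the actually observed reward rt. To update the parameters of the value network, the loss function and its gradient are defined as follows:

$$L(\boldsymbol{\omega}) = \frac{1}{2}\left[q(s_t, a_t; \boldsymbol{\omega}) - \hat{y}_t\right]^{2}, \qquad \nabla_{\boldsymbol{\omega}} L(\boldsymbol{\omega}) = \left(\hat{q}_t - \hat{y}_t\right)\nabla_{\boldsymbol{\omega}}\, q(s_t, a_t; \boldsymbol{\omega})$$

Then, a gradient descent step is performed to update ω:

$$\boldsymbol{\omega} \leftarrow \boldsymbol{\omega} - \alpha\,\nabla_{\boldsymbol{\omega}} L(\boldsymbol{\omega})$$

where α is the learning rate of the value network.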

The training process of the Actor–Critic network is as follows.

Assume the current policy network parameters are θnow and the value network parameters are ωnow; the following steps update the parameters to θnew and ωnew:

Step 1: Observe the current state st and make a decision based on the policy network, at ∼ π(·|st; θnow); then, let the agent perform the action at.

Step 2: Receive the reward rt and observe the next state st+1 from the environment.

Step 3: Make a decision based on the policy network, ãt+1 ∼ π(·|st+1; θnow), but do not let the agent perform the action ãt+1.

Step 4: Evaluate the value network: q̂t = q(st, at; ωnow) and q̂t+1 = q(st+1, ãt+1; ωnow).

Step 5: Compute the TD target and TD error: ŷt = rt + γ·q̂t+1 and δt = q̂t − ŷt.

Step 6: Update the value network: ωnew = ωnow − α·δt·∇ω q(st, at; ωnow).

Step 7: Update the policy network: θnew = θnow + ρ·q̂t·∇θ ln π(at|st; θnow).
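The seven training steps can be sketched for a single transition in NumPy. To keep the example self-contained, the deep networks are replaced by much simpler stand-ins, which are assumptions and not the architecture described above: a Gaussian policy a ∼ N(θs, σ²I) with a linear mean, and a critic that is linear in the hypothetical features φ(s, a) = [s, a, 1]. The function names `features` and `ac_update` are illustrative.

```python
import numpy as np


def features(s, a):
    """Critic features phi(s, a) = [s, a, 1]; q(s, a; omega) = omega . phi (assumed linear critic)."""
    return np.concatenate([s, a, [1.0]])


def ac_update(theta, omega, s, a, r, s_next, rng,
              gamma=0.9, lr_actor=1e-3, lr_critic=1e-2, sigma=0.2):
    """Steps 3-7 for one transition (s, a, r, s_next).

    Assumed policy: a ~ N(theta @ s, sigma^2 I); assumed critic: linear in features(s, a).
    """
    # Step 3: sample a_{t+1} from the current policy, but do not execute it
    a_next = theta @ s_next + sigma * rng.standard_normal(theta.shape[0])
    # Step 4: evaluate the value network at (s_t, a_t) and (s_{t+1}, a_{t+1})
    phi = features(s, a)
    q_t = omega @ phi
    q_next = omega @ features(s_next, a_next)
    # Step 5: TD target and TD error
    y_t = r + gamma * q_next
    delta = q_t - y_t
    # Step 6: critic gradient descent; for a linear critic, grad_omega q = phi
    omega_new = omega - lr_critic * delta * phi
    # Step 7: actor gradient ascent; grad_theta ln pi = ((a - theta s) / sigma^2) s^T
    grad_log_pi = np.outer((a - theta @ s) / sigma**2, s)
    theta_new = theta + lr_actor * q_t * grad_log_pi
    return theta_new, omega_new, delta


# Usage: Steps 1-2 (act in the environment, observe r and s') are done by the caller.
rng = np.random.default_rng(0)
theta = np.zeros((2, 2))                       # action dim x state dim
omega = np.zeros(5)                            # len(features) = 2 + 2 + 1
s = np.array([0.1, -0.2])
a = theta @ s + 0.2 * rng.standard_normal(2)   # Step 1: a_t ~ pi(.|s_t; theta)
s_next, r = s + a, 1.0                         # Step 2: hypothetical transition and reward
theta, omega, delta = ac_update(theta, omega, s, a, r, s_next, rng)
```

With a zero-initialized critic, the first update leaves the actor unchanged (q̂t = 0) while the critic moves toward the observed reward, which reflects the dependence of the Actor on the Critic's evaluation ability noted above.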

3.3. The Framework of AC-Kriging-Based Structural Reliability Analysis

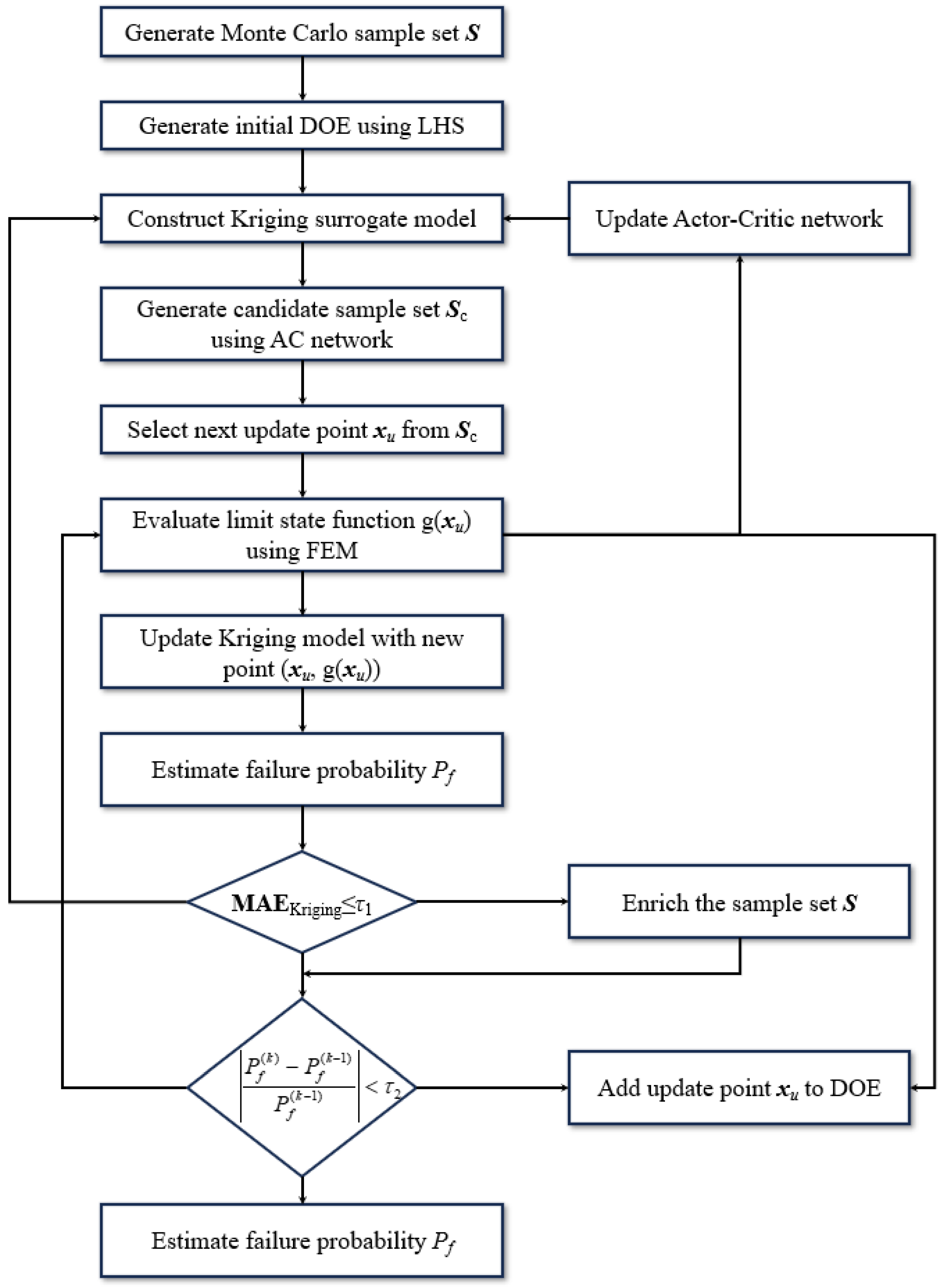

The proposed AC-Kriging method introduces a novel active sampling and modeling framework for efficient and accurate structural reliability analysis by integrating the DRL-based Actor–Critic network with the Kriging model. An overview of the framework is illustrated in Figure 6. The computational implementation of the AC-Kriging framework is detailed in Algorithm 1, which systematically describes the integration of reinforcement-learning-based active sampling with Kriging surrogate modeling. The AC-Kriging framework operates in three distinct phases: initialization, iterative optimization through Actor–Critic network-guided sampling, and final structural reliability assessment.

| Algorithm 1. Pseudocode of the AC-Kriging Method for Structural Reliability Analysis |
|---|
| 1. Input: Design space, initial sample size m, convergence tolerance, reward weights a1, a2, a3, a4 of the Actor–Critic network. |
| 2. Output: Failure probability Pf, reliability degree 1 − Pf. |
| 3. Initialization Phase. |
| 4. Generate initial samples using Latin Hypercube Sampling: X0 = {x1, x2, …, xm}; |
| 5. Evaluate limit state function: G0 = {g(x1), g(x2), …, g(xm)}; |
| 6. Build initial Kriging model with samples (X0, G0); |
| 7. Compute correlation matrix R with parameters α; |
| 8. Estimate regression coefficients: β̂; |
| 9. Estimate process variance: σ̂²; |
| 10. Initialize Actor network parameters θ0 and Critic network parameters ω0; |
| 11. Randomly select starting point: xk ∈ X0, set iteration counter k = 1. |
| 12. Main Iteration Loop. |
| 13. While convergence criteria not satisfied Do |
| 14. Construct state based on current position and Kriging: sk = [xk, ĝ(xk), σ²ĝ(xk), boundary proximity]; |
| 15. Generate displacement action using Actor network: ak ∼ π(·∣sk; θk); |
| 16. Compute new sample point location: xk+1 = xk + ak (ensure within design space); |
| 17. Evaluate limit state function: gk+1 = g(xk+1); |
| 18. Update sample sets: Xk+1 = Xk ∪ {xk+1}, Gk+1 = Gk ∪ {gk+1}. |
| 19. Update Kriging model parameters. |
| 20. Update correlation matrix R and correlation parameters α; |
| 21. Recompute regression coefficients β̂ and process variance σ̂². |
| 22. Calculate reward based on multiple criteria: |
| 23. Edge proximity reward (boundary proximity reward); |
| 24. Movement reward if the agent remains sufficiently close to the boundary, else 0; |
| 25. Exploration reward if the distance to the nearest boundary point in the database exceeds 0.3, else 0; |
| 26. Keep reward if its activation condition is satisfied, else 0; |
| 27. Total reward: weighted combination of the four components with weights a1, a2, a3, a4. |
| 28. Update Actor–Critic networks. |
| 29. Update Critic network: ωk+1 = ωk − α·δk·∇ω q(sk, ak; ωk); |
| 30. Update Actor network: θk+1 = θk + ρ·q(sk, ak; ωk)·∇θ ln π(ak∣sk; θk). |
| 31. Check and Update. |
| 32. Criterion 1: Kriging model convergence; |
| 33. Criterion 2: Failure probability convergence; |
| 34. Update: xk = xk+1, k = k + 1. |
| 35. End While |
| 36. Structural Reliability Analysis. |
| 37. Generate N samples based on Monte Carlo simulation: x(1), x(2), …, x(N); |
| 38. Count failures: number of samples with ĝ(x(i)) ≤ 0; |
| 39. Calculate failure probability: Pf = (number of failures)/N; |
| 40. Return Pf and 1 − Pf. |

3.3.1. Initialization

The initialization phase establishes the computational foundation for the AC-Kriging method by creating both the initial Kriging surrogate model and the reinforcement learning environment. This phase begins with the generation of initial design points using Latin Hypercube Sampling (LHS) to ensure uniform coverage across the n-dimensional design space. The LHS approach generates m initial sample points X0 = {x1, x2, …, xm}, where each point represents a realization of the random variables in the structural reliability problem.
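Latin hypercube sampling can be sketched in a few lines of NumPy. The sketch below generates m points in the unit hypercube [0, 1)ⁿ with exactly one point in each of the m equal-width strata of every dimension; mapping these points to the physical random variables (for example through each variable's inverse CDF) is a separate step that is not shown. The function name `latin_hypercube` is illustrative.

```python
import numpy as np


def latin_hypercube(m, n, rng):
    """m points in [0, 1)^n with exactly one point per stratum [j/m, (j+1)/m) in every dimension."""
    u = rng.random((m, n))                                            # position inside each stratum
    strata = np.column_stack([rng.permutation(m) for _ in range(n)])  # random stratum order per dimension
    return (strata + u) / m


rng = np.random.default_rng(1)
X0 = latin_hypercube(10, 3, rng)   # m = 10 initial samples of n = 3 variables
```

Independent permutations per dimension give the space-filling marginal coverage that motivates using LHS for the initial DoPs, while the random offsets avoid placing every point at a stratum center.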

Following sample generation, the limit state function g(xi) is evaluated at each initial sample point through high-fidelity finite element analysis or other appropriate computational methods. These evaluations yield the response dataset G0 = {g(x1), g(x2), …, g(xm)}, which forms the basis for constructing the initial Kriging surrogate model.

The initial Kriging model construction involves the estimation of three critical parameter sets. First, the correlation matrix R is computed, along with the correlation parameters α = {α1, α2, …, αn}, which control the spatial correlation structure of the Gaussian process. Second, the regression coefficients β̂ and process variance σ̂² are estimated by Equation (20). The initialization concludes by establishing the reinforcement learning components. The Actor network parameters θ0 and Critic network parameters ω0 are randomly initialized, creating the foundation for the subsequent active learning process. One starting point xk is randomly selected from the initial sample set X0 and serves as the agent's initial position. The iteration counter k is set to 1, marking the beginning of the iterative optimization phase.

3.3.2. Actor–Critic Network-Based Active Sampling and Kriging Modeling Method

The iterative optimization phase represents the core innovation of the AC-Kriging method. It runs as a continuous feedback loop between the Actor–Critic networks and the evolving Kriging surrogate model, and each iteration selects a new sample point intended to maximize the information gained for reliability analysis. Each iteration consists of the following steps:

Step 1: Each iteration begins with state construction, in which the current system state sk is formed from the agent’s current position xk and the Kriging model’s predictions. The state vector sk = [xk, ĝ(xk), σ²ĝ(xk), boundary proximity] contains the current location, the Kriging prediction at that location, the associated prediction variance, and an indicator of proximity to the limit state boundary. This state representation gives the Actor network the information it needs to choose the next sample.
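The state assembly in Step 1 can be sketched as follows. The text does not define the boundary-proximity feature, so this sketch assumes the common U function U = |ĝ(x)|/σĝ(x), which is small when a point lies close to g = 0 or the prediction is uncertain; `build_state` is a hypothetical helper name.

```python
import numpy as np


def build_state(x_k, g_mean, g_var, eps=1e-12):
    """Assemble s_k = [x_k, g_hat(x_k), sigma^2(x_k), boundary proximity].

    Boundary proximity is modelled (assumption) by U = |g_hat| / sigma.
    eps guards against division by zero when the variance is ~0.
    """
    x_k = np.asarray(x_k, dtype=float)
    sigma = np.sqrt(max(g_var, 0.0)) + eps
    proximity = abs(g_mean) / sigma
    return np.concatenate([x_k, [g_mean, g_var, proximity]])


s_k = build_state([0.4, -1.2], g_mean=0.3, g_var=0.09)
print(s_k)  # 2 coordinates followed by 3 state features
```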

Step 2: The Actor network maps the state to a displacement action ak ~ π(·|sk; θk), which specifies the direction and magnitude of the move to the next sample point. The action space is continuous, so sample points can be placed anywhere within the feasible design space. The new sample point is then computed as xk+1 = xk + ak, subject to boundary constraints that keep it within the design space.
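A minimal sketch of Step 2, assuming a Gaussian policy whose mean is a linear function of the state; the paper’s Actor is a neural network whose architecture is not reproduced here. The boundary constraint is assumed to be a simple projection (clipping) onto a box-shaped design space. `LinearGaussianActor` and `next_point` are hypothetical names.

```python
import numpy as np


class LinearGaussianActor:
    """Hypothetical Actor: a_k ~ N(W s_k + b, std^2 I)."""

    def __init__(self, state_dim, action_dim, std=0.5, rng=None):
        self.rng = rng if rng is not None else np.random.default_rng()
        self.W = 0.01 * self.rng.standard_normal((action_dim, state_dim))
        self.b = np.zeros(action_dim)
        self.std = std

    def sample(self, s):
        # continuous displacement action drawn around the policy mean
        mean = self.W @ s + self.b
        return mean + self.std * self.rng.standard_normal(mean.shape)


def next_point(x_k, a_k, lower, upper):
    """x_{k+1} = x_k + a_k, projected back onto the design-space box (assumed)."""
    return np.clip(x_k + a_k, lower, upper)


rng = np.random.default_rng(3)
actor = LinearGaussianActor(state_dim=5, action_dim=2, rng=rng)
x_k = np.array([4.8, -4.9])
s_k = np.array([4.8, -4.9, 3.1, 0.2, 6.9])
a_k = actor.sample(s_k)
x_next = next_point(x_k, a_k, lower=-5.0, upper=5.0)
```

Clipping keeps every proposed point feasible even when the agent starts near the edge of the design space, as in this usage example.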

Step 3: The true limit state function g(xk+1) is evaluated at the newly selected sample point through high-fidelity analysis. This new information is added to the sample database by updating both the sample set Xk+1 = Xk ∪ {xk+1} and the response set Gk+1 = Gk ∪ {g(xk+1)}.

Step 4: The Kriging model is then updated so that the surrogate continuously improves its approximation of the limit state function. The correlation matrix R and correlation parameters α are updated to accommodate the new sample point, and the regression coefficients and process variance are then recomputed. This incremental update strategy maintains computational efficiency while preserving model accuracy.
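Steps 3 and 4 can be sketched together: the new point and its response are appended to the data set and the Kriging quantities are recomputed. For brevity this sketch refits from scratch with α held fixed, which is simpler than, and not the same as, the incremental update described above; `kriging_fit_predict` and `limit_state` are hypothetical helpers, and the new point is fixed by hand rather than proposed by a trained Actor.

```python
import numpy as np


def kriging_fit_predict(X, y, Xs, alpha, nugget=1e-10):
    """Fit ordinary Kriging (constant trend, Gaussian correlation, fixed alpha)
    to (X, y) and return the mean and variance at the points Xs."""
    def corr(A, B):
        return np.exp(-(((A[:, None, :] - B[None, :, :]) ** 2) * alpha).sum(axis=2))

    m = len(X)
    Ri = np.linalg.inv(corr(X, X) + nugget * np.eye(m))  # R^{-1}; fine for a small sketch
    one = np.ones(m)
    one_Ri_one = one @ Ri @ one
    beta = (one @ Ri @ y) / one_Ri_one  # regression coefficient
    resid = y - beta
    sigma2 = (resid @ Ri @ resid) / m  # process variance
    r = corr(Xs, X)
    mean = beta + r @ Ri @ resid
    u = r @ Ri @ one - 1.0
    var = sigma2 * (1.0 - np.einsum("ij,jk,ik->i", r, Ri, r) + u**2 / one_Ri_one)
    return mean, np.maximum(var, 0.0)


def limit_state(x):
    """Hypothetical LSF standing in for the high-fidelity analysis."""
    return 3.0 - x[:, 0] - x[:, 1] ** 2 / 4.0


alpha = np.array([0.5, 0.5])
grid = np.linspace(-3.0, 3.0, 4)
X_k = np.array([[a, b] for a in grid for b in grid])
G_k = limit_state(X_k)

x_new = np.array([[0.5, 1.0]])  # point proposed by the Actor (fixed here)
_, var_before = kriging_fit_predict(X_k, G_k, x_new, alpha)

# Step 3: X_{k+1} = X_k U {x_{k+1}},  G_{k+1} = G_k U {g(x_{k+1})}
X_k1 = np.vstack([X_k, x_new])
G_k1 = np.append(G_k, limit_state(x_new))

# Step 4: recompute R, beta and sigma^2 with the enlarged data set
mean_after, var_after = kriging_fit_predict(X_k1, G_k1, x_new, alpha)
```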

Step 5: The Actor–Critic network updates employ temporal difference learning for the Critic network by Equation (31) and policy gradient methods for the Actor network by Equation (26). These updates enable the networks to learn optimal sampling strategies specific to the current reliability problem.
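Equations (26) and (31) are not reproduced in this section, so the sketch below uses the textbook one-step actor-critic update instead: a TD(0) Critic with a linear value function V(s) = ω·s and a Gaussian policy with linear mean θs and fixed standard deviation. The reward is passed in as a number because its design is defined elsewhere in the paper; `actor_critic_step` is a hypothetical name.

```python
import numpy as np


def actor_critic_step(theta, omega, s, a, reward, s_next, std=0.5, gamma=0.99,
                      lr_actor=1e-3, lr_critic=1e-2):
    """One-step actor-critic update (standard textbook form, assumed here).

    Critic: linear value V(s) = omega . s, trained with TD(0).
    Actor : Gaussian policy a ~ N(theta @ s, std^2 I), trained with the
            policy gradient delta * grad_theta log pi(a | s).
    """
    delta = reward + gamma * (omega @ s_next) - omega @ s  # TD error
    omega_new = omega + lr_critic * delta * s  # grad_omega V(s) = s
    mu = theta @ s
    grad_log_pi = np.outer((a - mu) / std**2, s)  # d log N(a; mu, std^2) / d theta
    theta_new = theta + lr_actor * delta * grad_log_pi
    return theta_new, omega_new, delta


theta, omega = np.zeros((1, 2)), np.zeros(2)
s, s_next, a = np.array([1.0, 0.0]), np.array([0.0, 1.0]), np.array([0.5])
theta, omega, delta = actor_critic_step(theta, omega, s, a, reward=1.0, s_next=s_next)
```

Repeating the update on the same transition drives the TD error towards zero, which is a quick sanity check on the Critic step.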

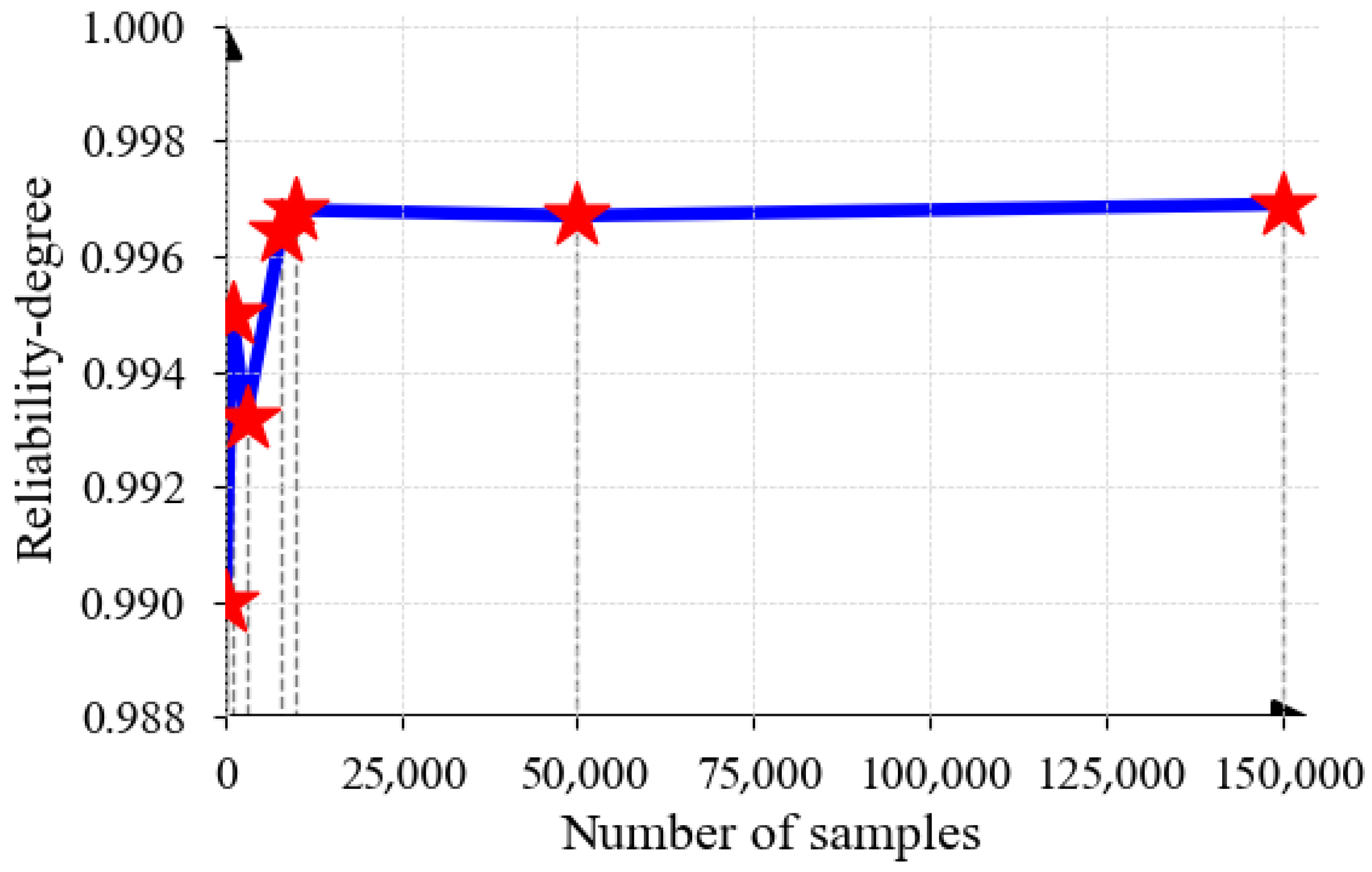

Step 6: During the iterative process, dual convergence criteria are continuously monitored. The first criterion evaluates Kriging model precision by checking whether the maximum prediction uncertainty falls below the threshold εm. The second criterion assesses the stability of failure probability estimates by monitoring the relative change between successive iterations. The iteration completes by updating the agent’s position (xk ← xk+1) and incrementing the iteration counter, preparing for the next cycle of the optimization process.
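The dual stopping rule of Step 6 can be sketched as below, under the assumption that both criteria must hold simultaneously before the loop ends. The threshold εm is not given a value in this section, so `eps_m = 0.01` and the Pf and variance histories are purely hypothetical inputs for illustration.

```python
import numpy as np


def converged(var_pred, pf_curr, pf_prev, eps_m, tau2):
    """Dual stopping rule (assumed: both criteria must hold at once).

    Criterion 1: max Kriging prediction variance over candidate points < eps_m.
    Criterion 2: relative change of Pf between successive iterations <= tau2.
    """
    crit1 = float(np.max(var_pred)) < eps_m
    if pf_prev is None or pf_curr == 0.0:
        crit2 = False  # no previous estimate, or the ratio is undefined
    else:
        crit2 = abs(pf_curr - pf_prev) / pf_curr <= tau2
    return crit1 and crit2, crit1, crit2


pf_history = [0.0210, 0.0180, 0.0172, 0.01715]  # hypothetical estimates
var_history = [0.30, 0.08, 0.004, 0.002]  # hypothetical max variances
k, done = 1, False
for pf_prev, pf_curr, v in zip([None] + pf_history[:-1], pf_history, var_history):
    done, c1, c2 = converged(np.array([v]), pf_curr, pf_prev, eps_m=0.01, tau2=0.01)
    if done:
        break
    k += 1  # x_k <- x_{k+1} and the next iteration would follow here
print(done, k)
```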

3.3.3. Structural Reliability Analysis

The sample points selected by the AC-Kriging method are used to construct the Kriging model for structural reliability analysis. Training follows the standard procedure, with the samples divided into training and validation sets. During the iterative optimization phase, two convergence criteria are monitored.

The first criterion is the mean absolute error (MAE), which measures the precision of the Kriging model and is defined as follows:

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right| \le \tau_1$$

where n is the number of test samples; τ1 is the convergence threshold of Criterion 1; yi is the i-th true output (the response of the limit state function); and ŷi is the i-th prediction output of the Kriging model. Then, the coefficient of variation (COV) of the failure probability estimated with the Kriging model is calculated to evaluate the convergence of the reliability analysis:

$$\mathrm{COV}(\hat{P}_f) = \sqrt{\frac{1 - \hat{P}_f}{N \hat{P}_f}}$$

where P̂f is the estimated failure probability and N is the number of samples used in MCS. Finally, the trained Kriging model is applied to structural reliability assessment.
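Both quantities reduce to a few lines of code. The sketch below evaluates Criterion 1 with τ1 = 0.05 (the value used in this study) on a handful of hypothetical test outputs, and computes the COV of a crude MCS estimate; the input numbers are illustrative only.

```python
import numpy as np


def mae(y_true, y_pred):
    """MAE = (1/n) * sum_i |y_i - yhat_i| over n test samples."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(y_true - y_pred)))


def cov_pf(pf, n_mcs):
    """COV of the crude MCS estimator: sqrt((1 - Pf) / (N * Pf))."""
    return float(np.sqrt((1.0 - pf) / (n_mcs * pf)))


err = mae([1.0, -0.5, 2.0, 0.2], [0.98, -0.49, 2.03, 0.2])  # hypothetical outputs
cov = cov_pf(pf=0.01, n_mcs=10**6)
print(err, err <= 0.05)  # Criterion 1 with tau1 = 0.05
print(cov)  # roughly 1% for Pf = 0.01 and N = 1e6
```

The COV formula makes the sample-size trade-off explicit: halving the COV requires four times as many MCS samples, which is affordable only because the samples are evaluated on the surrogate.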

Subsequently, the convergence of the failure probability estimate is checked over successive iterations (up to a pre-determined number of iterations), which can be defined as follows:

$$\frac{\left|P_f^{(k)} - P_f^{(k-1)}\right|}{P_f^{(k)}} \le \tau_2$$

where Pf(k) is the failure probability estimate at the k-th iteration, and τ2 is the convergence threshold of Criterion 2. In this study, τ1 and τ2 are set to 0.05 and 0.01, respectively.