Abstract

With the rapid proliferation of Internet of Things (IoT) devices and mobile applications and the growing demand for low-latency services, edge computing has emerged as a transformative paradigm that brings computation and storage closer to end users. However, the dynamic nature and limited resources of edge networks give rise to challenges such as load imbalance and high latency when serving user requests. Service migration, the dynamic redeployment of service instances across distributed edge nodes, has become a key enabler for addressing these challenges and optimizing edge network performance. Moreover, because edge computing targets low-latency services, migration strategies must themselves operate in real time so that latency requirements are met. This paper therefore presents a systematic survey of real-time service migration in edge networks. Specifically, we first introduce four network architectures and four basic models for real-time service migration. We then summarize four research motivations for real-time service migration and the real-time guarantees provided when migration strategies are implemented. To support these motivations, we present key techniques for real-time service migration and explain how these algorithms and models facilitate real-time migration performance. We also explore latency-sensitive application scenarios, such as smart cities, smart homes, and smart manufacturing, where real-time service migration plays a critical role in sustaining performance and adaptability under dynamic conditions. Finally, we summarize the key challenges and outline promising future research directions for real-time service migration. This survey aims to provide a structured and in-depth theoretical foundation to guide future research on real-time service migration in edge networks.

1. Introduction

1.1. From Cloud to Edge: The Evolutionary Foundation for Service Migration

The explosive growth of smart applications, such as autonomous driving, Augmented Reality (AR), industrial automation, and real-time video analytics, has introduced increasingly stringent requirements on service latency, reliability, energy efficiency, and adaptability. These applications typically operate in dynamic environments, interact with mobile users or devices, and often generate massive volumes of time-sensitive data. Initially, cloud computing emerged as a foundational infrastructure paradigm by providing centralized, elastic, and scalable computing and storage resources, which can effectively address the limitations of resource-constrained end devices [1]. However, the centralized nature of cloud computing introduces significant latency due to long transmission paths, causes bandwidth bottlenecks under massive data streams, and raises privacy concerns due to remote data processing, all of which undermine its suitability for real-time and location-sensitive applications [2].

To overcome these limitations, edge computing has emerged as a decentralized paradigm that relocates computational and storage capabilities to the proximity of data sources and end users, typically at edge servers, access points, or base stations [3]. This architectural evolution significantly reduces end-to-end latency, alleviates backbone network congestion, and improves data privacy and availability by enabling localized processing. With the maturation of Fifth-Generation (5G) technology and the anticipated rollout of Sixth-Generation (6G) technologies, the proliferation of Mobile Edge Computing (MEC) platforms further strengthens the role of edge infrastructures in supporting ultra-low-latency, high-bandwidth, and mission-critical services [4,5]. Nevertheless, this architectural decentralization introduces new operational complexities. Edge environments are inherently heterogeneous, with edge nodes varying in computing capacity, energy profiles, connectivity, and geographic distribution, and they are highly dynamic due to fluctuating workloads, user mobility, and environmental uncertainty. To ensure consistent Quality of Service (QoS), edge service management should be both responsive and adaptive. Unlike cloud environments where services can remain relatively static, edge systems should support dynamic service migration: the timely and efficient relocation of running service instances across edge nodes in response to changes in workload distribution, resource availability, or user location [6,7]. Service migration, in this context, refers to the process of transferring service states, runtime contexts, and application logic from one edge node to another without significant service interruption. It plays a foundational role in maintaining system performance and reliability by enabling load balancing, congestion avoidance, failure recovery, and seamless service continuity for mobile users [8,9]. 
Typical triggers for service migration include resource over-utilization (e.g., CPU or memory saturation), user handoffs between access points, energy-aware scheduling on battery-constrained devices, and anticipated latency violations due to changing network conditions [10]. Beyond continuity and responsiveness, service migration contributes to multiple system-wide optimization objectives. These include reducing service response times, improving resource utilization balance across nodes, prolonging device lifetime through thermal and energy-aware load redistribution, and supporting green computing goals by reducing redundant computation. Furthermore, by integrating with intelligent orchestration frameworks, such as those based on reinforcement learning (RL), metaheuristics, or federated control, service migration can be proactively triggered based on predictive models of future system states, enabling anticipatory adaptation to dynamic environments [11].

In summary, the transition from cloud to edge computing represents not only an architectural shift but also a paradigm change in how services are provisioned, managed, and optimized. In this new paradigm, real-time service migration emerges as a central mechanism for achieving agility, scalability, and resilience in edge networks, especially under latency-sensitive conditions. This paper presents a comprehensive survey of real-time service migration in edge environments, analyzing the driving factors, key challenges, enabling technologies, and representative solutions across diverse application domains.

1.2. Service Migration in Edge Networks

In recent years, service migration has emerged as a critical research focus in the context of edge networks, attracting increasing attention from both academia and industry. Unlike traditional cloud computing environments, where services are deployed and executed in centralized, static data centers, edge environments are distributed, mobile, and resource-constrained, thus demanding more agile and real-time service adaptation. In particular, the need to maintain low latency and uninterrupted service continuity under dynamic conditions underscores the importance of real-time service migration. Before delving into the mechanisms and challenges, two fundamental questions must be addressed.

1.2.1. What Is Service Migration in Edge Networks?

Service migration in edge networks refers to the process of dynamically relocating service instances, along with their computational states and execution contexts, across heterogeneous edge nodes in response to changing network conditions, user mobility, resource availability, and application demands. Unlike traditional service configuration, which focuses on initial deployment and static orchestration, service migration emphasizes runtime adaptability and continuity, ensuring that services remain responsive, efficient, and reliable under dynamic edge environments. In this paper, “real-time” service migration is defined as the process of relocating service instances within a time frame that satisfies the latency requirements of the specific application or end users. This notion of “real-time” is application-specific and depends on the latency tolerance determined by the service context. For instance, latency-sensitive scenarios such as autonomous driving or real-time video streaming require timely migration to avoid perceptible service degradation or operational instability. The goal is to perform migration quickly enough to maintain continuous, responsive service delivery in edge environments. To fully understand service migration, it is essential to clarify several related concepts and components that collectively define its scope and mechanisms:

- Service Resources: These are the computing, storage, and networking assets distributed across the edge network that support service execution and migration. Unlike static allocation, service migration enables the temporal reallocation of these resources to optimize utilization and responsiveness. Typical resources include CPU/GPU cycles, memory, bandwidth, and cache, which are often subject to spatial and temporal constraints [12,13].

- Service Instances: These refer to the actual running units of services (e.g., containers, virtual machines (VMs), microservices) deployed on edge devices or servers. During migration, these instances are transferred—either via live migration, checkpoint restart, or state rehydration—to new nodes without disrupting service continuity [14,15].

- Participants: Multiple entities collaborate in service migration, including mobile end devices (e.g., smartphones, sensors, vehicles), edge servers (e.g., base stations, fog nodes), and cloud platforms. Coordination models may follow device–edge, edge–edge, or edge–cloud topologies. Effective migration depends on synchronization and negotiation among these participants [16,17].

- Objectives: Service migration aims to address several optimization goals, including minimizing response latency, avoiding overloaded nodes, maintaining service availability under user mobility, reducing energy consumption, and enhancing overall QoS. In latency-critical or mission-critical applications such as autonomous driving or remote healthcare, timely migration is key to meeting service-level agreements (SLAs) [18,19].

- Actions: The core operations involved in service migration include monitoring node loads and user positions, evaluating migration triggers (e.g., SLA violations, mobility prediction, energy thresholds), transferring the service state, and reinitializing execution at the target node. This also involves handling dependencies, preserving data integrity, and updating routing or session states [8,20].

- Methodologies: Various methodologies have been developed to enable intelligent and efficient service migration. These include heuristic and metaheuristic optimization, deep reinforcement learning for adaptive decision-making, and container-based orchestration technologies such as Kubernetes or KubeEdge. Moreover, distributed cooperative mechanisms, often supported by blockchain or federated learning, are used to ensure scalability and decentralization in multi-domain edge environments [19,21].
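The "Actions" item above (monitor, evaluate triggers, transfer, reinitialize) can be sketched as a single decision cycle. This is a minimal illustration, not a method from any cited work: the `NodeStatus` fields, the two triggers (CPU overload and a latency limit), and the default thresholds are all assumptions chosen for readability, and the actual state transfer is left as a comment.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class NodeStatus:
    name: str
    cpu_load: float    # fraction of CPU in use, 0.0 to 1.0 (hypothetical metric)
    latency_ms: float  # measured user-to-node latency (hypothetical metric)


def should_migrate(current: NodeStatus, cpu_limit: float, sla_ms: float) -> bool:
    """Trigger evaluation: overload or a latency (SLA) violation."""
    return current.cpu_load > cpu_limit or current.latency_ms > sla_ms


def pick_target(candidates: List[NodeStatus], cpu_limit: float,
                sla_ms: float) -> Optional[NodeStatus]:
    """Lowest-latency candidate that itself respects both limits."""
    feasible = [n for n in candidates
                if n.cpu_load <= cpu_limit and n.latency_ms <= sla_ms]
    return min(feasible, key=lambda n: n.latency_ms, default=None)


def migration_step(current: NodeStatus, candidates: List[NodeStatus],
                   cpu_limit: float = 0.8, sla_ms: float = 20.0) -> NodeStatus:
    """One monitor -> evaluate -> select cycle; returns the node to use next."""
    if not should_migrate(current, cpu_limit, sla_ms):
        return current
    target = pick_target(candidates, cpu_limit, sla_ms)
    # State transfer, re-initialisation, and routing updates would go here.
    return target if target is not None else current
```

If no candidate satisfies both limits, the sketch keeps the service where it is rather than migrating to a node that would violate the same constraints.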

1.2.2. Why Is Service Migration Necessary in Edge Computing Environments?

As edge computing evolves to support vast numbers of heterogeneous and mobile devices, the demand for real-time, adaptive, and context-aware service provisioning has grown rapidly. Static service deployment strategies are often inadequate for addressing the dynamic nature of edge workloads and user mobility. Against this backdrop, real-time service migration emerges as a key enabler for maintaining continuous, efficient, and high-quality service delivery across distributed edge environments. Its importance can be viewed from the following three perspectives:

- Users: Edge networks connect billions of geographically distributed devices—including stationary sensors, mobile phones, autonomous vehicles, and drones—each with diverse and evolving QoS needs. For example, autonomous driving scenarios require ultra-low-latency access to decision-making services, while battery-powered IoT devices prioritize energy-efficient offloading and minimal data transmission. As users move across network boundaries, static placements can lead to service delays or disruptions. Real-time service migration supports location-aware, demand-responsive relocation of services, ensuring seamless and low-latency experiences even under dynamic mobility patterns [22,23].

- Service Providers: The edge ecosystem includes various commercial stakeholders such as network operators, infrastructure providers, and third-party application vendors. These providers should balance performance, cost, and resource constraints while delivering high service quality. Static placement of services often leads to unbalanced loads, underutilized resources, or SLA violations in hotspots. Real-time service migration enables dynamic reassignment of services based on current traffic, resource availability, and user distribution. This enhances efficiency, reduces energy costs, and aligns with revenue-driven strategies such as demand-aware scaling and pricing differentiation [24,25]. In today’s MEC settings, AI-driven and cooperative migration frameworks have demonstrated success in reducing latency and increasing operational gains.

- Edge Network Infrastructure: Edge computing infrastructure is inherently decentralized and heterogeneous, often consisting of micro-data centers, access points, vehicular nodes, and even end devices with opportunistic computing capabilities. These resources exhibit varying computational power, energy availability, and connectivity quality. Many edge resources—such as parked autonomous vehicles or idle roadside units (RSUs)—remain underutilized unless they are actively integrated into the service ecosystem. Real-time service migration serves as the orchestrator, redistributing workloads across these fragmented units based on real-time availability. Through migration-aware scheduling, edge systems can proactively or reactively bypass overloaded nodes and utilize transient capacity, improving both efficiency and resilience across the network [26,27].

1.3. Contribution and Organization

This article provides a comprehensive survey of recent research on service migration in edge computing networks, with a particular focus on key migration motivations, namely, overload mitigation and resource rebalancing, user mobility and location awareness, energy efficiency and device lifespan management, and latency optimization and QoS enhancement, as well as enabling techniques, application scenarios, and future challenges. To offer readers a structured overview of the field, this article makes contributions in five main areas:

- Architecture, Basic Model, Benchmark Datasets, and Open Platforms (Section 2): We present four representative edge computing architectures, i.e., cloud–edge–end, edge–edge, cloud–edge fusion, and edge–device collaboration, and analyze their respective support for real-time service migration. We also introduce four analytical models (network model, latency model, energy consumption model, and utility model) that collectively provide a theoretical foundation for service migration decision-making and performance evaluation.

- Migration Motivation (Section 3): We identify and elaborate on four primary motivations for real-time service migration in edge environments: (i) overload mitigation and resource rebalancing; (ii) user mobility and location awareness; (iii) energy efficiency and device lifespan management; and (iv) latency optimization and QoS enhancement. These motivations reflect the practical challenges faced by edge systems and drive the design of adaptive migration strategies.

- Key Techniques for Service Migration (Section 4): We categorize and examine six mainstream technical approaches for enabling real-time service migration: (i) approximate algorithms, (ii) heuristic algorithms, (iii) game-theoretic models, (iv) reinforcement learning, (v) deep learning, and (vi) deep reinforcement learning. We analyze how each technique contributes to efficient migration decisions under constraints such as delay, energy, load imbalance, and mobility uncertainty.

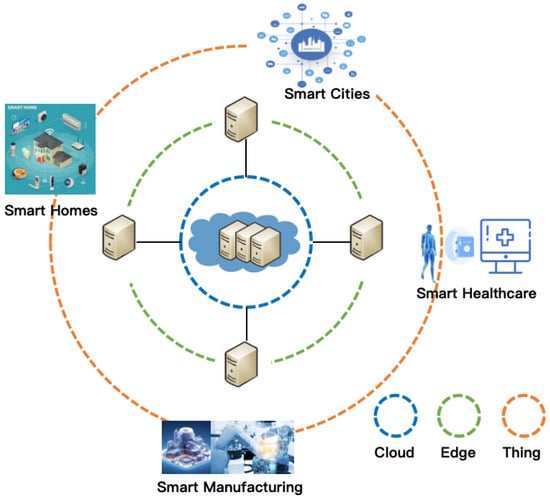

- Service Migration Application Scenarios (Section 5): We explore the deployment and effectiveness of real-time service migration in four key application domains: smart cities, smart homes, smart manufacturing, and smart healthcare. These scenarios demonstrate how timely and adaptive migration supports system scalability, responsiveness, and contextual awareness in real-world edge environments.

- Challenges and Future Directions (Section 6): We highlight critical open issues: inaccurate or delayed migration decisions that limit service awareness; scheduling and coordination challenges in large-scale heterogeneous edge networks; lack of comprehensive security and privacy protection during service migration; lack of adaptive and context-aware autonomous migration mechanisms; challenges and opportunities of AI-driven service migration; migration in the age of 6G: ultra-dense and high-mobility networks; and sustainable and energy-aware service migration.

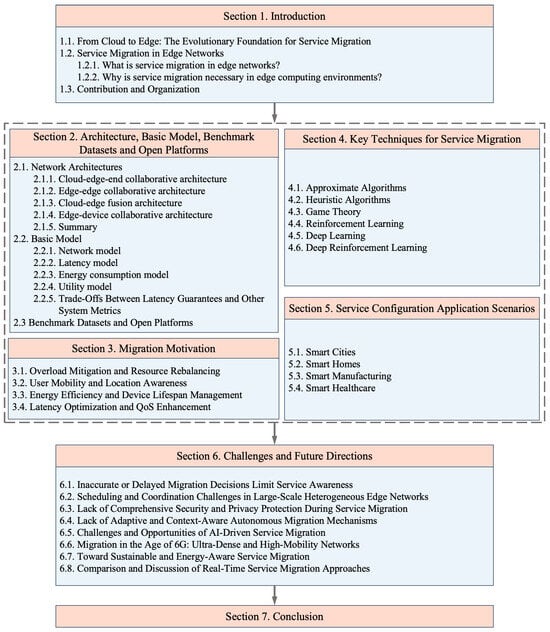

Although this survey does not follow the PRISMA guidelines for systematic reviews, we have curated a representative and focused set of recent studies. The selection was guided by three main principles: (i) technical relevance to real-time service migration in edge environments; (ii) publication quality, including peer-reviewed papers from high-impact venues; and (iii) empirical rigor, as demonstrated by the presence of evaluation, modeling, or implementation results. To facilitate a comprehensive understanding of this survey’s structural framework, Figure 1 schematically illustrates its overall organization.

Figure 1. Road map of this survey.

To ensure the consistency and relevance of our survey, we adopted the following criteria for including or excluding specific service migration strategies:

- The strategy must support real-time or low-latency requirements, which are fundamental for delay-sensitive edge applications. Given our focus on real-time migration, this serves as the primary inclusion criterion.

- The strategy must be applicable to edge computing environments, such as MEC, IoT, and other distributed edge infrastructures, where computational offloading and proximity-aware scheduling are essential.

- The strategy should be compatible with the system models introduced in Section 2. This ensures comparability and analytical consistency across reviewed methods.

- The strategy must address practical challenges such as overload mitigation, user mobility support, energy efficiency, and QoS enhancement—all of which are elaborated in Section 3.

- The strategy should adopt one of the key technical approaches reviewed in Section 4, such as heuristic optimization, approximation methods, game-theoretic models, or reinforcement learning. We selected representative works from each category to reflect methodological diversity.

- The strategy should align with the architectural patterns outlined in Section 2.1. Only strategies where migration decisions and executions are primarily performed at the edge layer are considered, excluding those solely based on centralized cloud-side control.

2. Architecture, Basic Model, Benchmark Datasets, and Open Platforms

2.1. Network Architectures

As IoT services become increasingly latency-sensitive, high-throughput, and dynamic, traditional cloud-centric infrastructures face growing challenges. Edge computing has emerged as a key paradigm for addressing these issues by moving computation and data storage closer to the data source. In this context, service provisioning is no longer limited to static deployment but is evolving toward dynamic, context-aware, and migration-enabled frameworks. This section explores four representative architectures that underpin modern service migration systems, with a focus on how each supports efficient service migration.

2.1.1. Cloud–Edge–End Collaborative Architecture

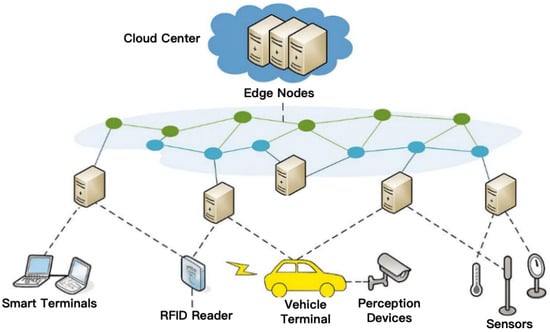

This classical architecture partitions computing responsibilities across three layers: cloud, edge, and end devices (as illustrated in Figure 2). The cloud layer is responsible for macro-level orchestration, historical data analysis, and global decision-making. The edge layer enables near-source processing and real-time response, while the end layer, comprising sensors and mobile devices, conducts lightweight data acquisition and preliminary computation.

Figure 2. Cloud–edge–end collaborative architecture.

Service migration in this hierarchical model is typically performed from the cloud to the edge to reduce latency or from the edge back to the cloud to relieve local node overload. A notable feature is its support for vertical service migration, which facilitates adaptive offloading based on task priority, data size, or network conditions.

To support such vertical migration, containerization and virtualization are adopted to encapsulate services in lightweight, portable units. Policies based on latency thresholds, bandwidth usage, and node energy status determine when and where migrations occur.
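A threshold policy of the kind described above might look like the following sketch. The function name, the metric names, and all default thresholds are hypothetical, chosen only to show how latency, bandwidth, and energy status can jointly decide the direction of a vertical migration.

```python
def vertical_decision(location: str, latency_ms: float, edge_cpu_load: float,
                      edge_battery: float, uplink_mbps: float,
                      latency_limit_ms: float = 50.0, cpu_limit: float = 0.85,
                      battery_floor: float = 0.2,
                      min_uplink_mbps: float = 10.0) -> str:
    """Return 'migrate_to_edge', 'migrate_to_cloud', or 'stay'.

    All thresholds are illustrative assumptions, not values from the survey.
    """
    # The edge node is a usable host only if it is neither overloaded
    # nor running low on energy.
    edge_healthy = edge_cpu_load < cpu_limit and edge_battery > battery_floor
    # Cloud -> edge: pull the service down when latency is too high.
    if location == "cloud" and latency_ms > latency_limit_ms and edge_healthy:
        return "migrate_to_edge"
    # Edge -> cloud: push the service up to relieve the edge node, but only
    # if the uplink can carry the service state in reasonable time.
    if location == "edge" and not edge_healthy and uplink_mbps >= min_uplink_mbps:
        return "migrate_to_cloud"
    return "stay"
```

For example, `vertical_decision("cloud", 80, 0.4, 0.9, 50)` selects the edge because the observed latency exceeds the limit while the edge node has spare CPU and energy.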

2.1.2. Edge–Edge Collaborative Architecture

In edge–edge collaborative architectures, services are not solely coordinated via the cloud. Instead, multiple edge nodes form a lateral cooperative network. Such an architecture is particularly advantageous for highly dynamic or mobile applications like vehicular networks and unmanned aerial vehicle (UAV) systems, where continuous connectivity to the cloud is impractical.

Service migration in this framework occurs horizontally between the edge nodes. Migration decisions are made based on real-time load balancing, resource availability, and proximity to the data source. Predictive models based on user mobility patterns or traffic forecasts help optimize migration timing.

This lateral migration requires a decentralized orchestration strategy, commonly enabled by distributed RL, gossip protocols, or blockchain-based service registries. Inter-edge synchronization mechanisms are crucial to prevent service inconsistency or migration delays.
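To illustrate how mobility prediction can inform the timing of a horizontal migration, the sketch below extrapolates a user's position one step ahead and picks the edge node nearest to the predicted position. Linear extrapolation stands in for the predictive models mentioned above and is purely an assumption; the node names and coordinates are hypothetical.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]


def predict_position(p_prev: Point, p_now: Point, horizon_steps: int = 1) -> Point:
    """Linear extrapolation: assume the user keeps the velocity of the last step."""
    vx, vy = p_now[0] - p_prev[0], p_now[1] - p_prev[1]
    return (p_now[0] + vx * horizon_steps, p_now[1] + vy * horizon_steps)


def nearest_node(position: Point, nodes: Dict[str, Point]) -> str:
    """Name of the edge node closest (Euclidean distance) to a position."""
    return min(nodes, key=lambda name: math.dist(position, nodes[name]))


def proactive_target(p_prev: Point, p_now: Point, nodes: Dict[str, Point],
                     horizon_steps: int = 1) -> str:
    """Node that will be nearest at the predicted position, not the current one."""
    return nearest_node(predict_position(p_prev, p_now, horizon_steps), nodes)
```

With nodes at `(0, 0)` and `(100, 0)` and a user moving from `(30, 0)` to `(45, 0)`, the currently nearest node is the first one, while the predicted position `(60, 0)` is closer to the second, so migration can begin before the handoff occurs.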

2.1.3. Cloud–Edge Fusion Architecture

The cloud–edge fusion architecture blurs the boundaries between the cloud and the edge by treating them as an integrated computing fabric. Services are dynamically decomposed, with components concurrently running in both layers depending on computational demands, latency constraints, and resource availability.

Service migration in this architecture is highly flexible and hybrid, supporting both vertical and horizontal movements. For instance, in a video analytics pipeline, preprocessing may occur at the edge, while deep feature extraction and long-term pattern analysis are migrated to the cloud.

Such collaborative service execution relies on fine-grained service decomposition, container orchestration platforms such as Kubernetes with edge extensions, and elastic scaling. Shared state management across layers ensures service integrity during migrations.

This architecture not only enables cooperative service execution but also supports dynamic service migration across heterogeneous cloud–edge infrastructures. Several recent studies have proposed real-time service migration strategies tailored for hybrid cloud–edge environments. For example, one study introduced a data- and computation-intensive service adaptation method based on service migration, which combines greedy algorithms with the Non-dominated Sorting Genetic Algorithm II (NSGA-II) to optimize placement and reduce communication overhead during migration. Similarly, a game-theoretic distributed migration strategy has been proposed for dynamic network reallocation, in which lightweight containerized services are adaptively reassigned based on temporal and spatial resource demands.

These approaches demonstrate how real-time service migration can be efficiently achieved within a cloud–edge fusion architecture, improving quality of service, reducing latency, and ensuring better resource utilization in dynamic and heterogeneous environments.

2.1.4. Edge–Device Collaborative Architecture

With the increasing computational power of terminal devices, the edge–device collaborative architecture has gained traction. In this model, end devices such as smartphones, AR glasses, or embedded sensors actively participate in service execution.

Service migration between edge nodes and end devices enables device-aware migration, a strategy where lightweight services or early-stage processing are pushed to capable devices. For instance, in mobile AR applications, scene recognition modules can be migrated to a user’s smartphone to reduce network dependency.

Migration is guided by RL agents on the edge nodes, which continuously evaluate device status such as CPU load, battery level, and location. Because end devices are only semi-trusted, services are often sandboxed and encrypted to protect privacy.

2.1.5. Summary

The architectural diversity inherent in edge computing reflects the system-of-systems nature of IoT environments, where heterogeneous components should seamlessly interact across dynamic and distributed layers. As Fortino et al. [28] emphasize, the IoT can be conceptualized as an integrated system of systems, which demands robust methodologies and frameworks to coordinate services across multiple computing domains. The four architectures discussed above offer complementary strategies for addressing this complexity and for supporting scalable, context-aware, and latency-sensitive service configuration and migration:

- Cloud–Edge–End: Global orchestration and stable vertical migration.

- Edge–Edge: High-frequency, localized lateral migration for dynamic contexts.

- Cloud–Edge Fusion: Fine-grained hybrid service placement and joint orchestration.

- Edge–Device: Maximizes responsiveness and privacy via terminal computation.

All these architectures aim to enhance QoS, reduce latency, and optimize resource utilization in heterogeneous IoT-driven edge networks.

2.2. Basic Model

The notations used in the paper and their descriptions are summarized in Table 1.

Table 1. Notations and descriptions.

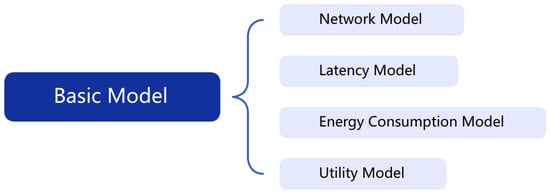

We elaborate on the basic models, including Network Model, Latency Model, Energy Consumption Model, and Utility Model, as shown in Figure 3.

Figure 3. Basic model.

2.2.1. Network Model

In edge computing environments, efficient service configuration and scheduling are foundational to ensuring QoS under heterogeneous and resource-constrained conditions. The core objective of the network model is to determine how service tasks should be distributed across the device layer (e.g., mobile or IoT terminals), edge layer (e.g., MEC servers, base stations), and cloud layer so as to optimize system performance in terms of delay, energy, reliability, and resource utilization.

A complex service request $R$ is typically decomposed into a set of interrelated subtasks $T = \{t_1, t_2, \ldots, t_n\}$, each with distinct computational demands, data characteristics, and latency sensitivity. Each subtask $t_i$ is characterized by three key attributes:

- $d_i$: Data volume required for processing or transmission.

- $c_i$: Computational demand (e.g., CPU cycles).

- $\tau_i$: Latency constraint or deadline for subtask execution.

To formulate the assignment of tasks, we define a binary scheduling variable $x_{ij}$ such that

$$x_{ij} = \begin{cases} 1, & \text{if subtask } t_i \text{ is assigned to node } j, \\ 0, & \text{otherwise.} \end{cases} \tag{1}$$

This binary decision variable is used extensively in service deployment problems, where node $j$ can be a device, edge node, or cloud server [29]. The goal is to ensure that each subtask is assigned to a computational node whose resources and network connectivity are sufficient to meet both its processing and communication demands.
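As a small illustration of the binary scheduling variable, the sketch below checks whether a candidate assignment matrix is feasible. It assumes, for the sake of example, that each subtask must be placed on exactly one node and that each node has a single scalar capacity; the function name and the capacity constraint are hypothetical simplifications, not part of the model stated above.

```python
from typing import List


def is_valid_assignment(x: List[List[int]], cycles: List[float],
                        capacity: List[float]) -> bool:
    """Check a binary assignment matrix, where x[i][j] == 1 iff subtask i runs on node j.

    cycles[i]   : computational demand of subtask i
    capacity[j] : assumed total demand that node j can absorb
    """
    n_nodes = len(capacity)
    for row in x:
        # Each row must be binary, cover every node, and select exactly one node.
        if len(row) != n_nodes or any(v not in (0, 1) for v in row) or sum(row) != 1:
            return False
    for j in range(n_nodes):
        # Total demand placed on node j must not exceed its capacity.
        load = sum(cycles[i] for i, row in enumerate(x) if row[j] == 1)
        if load > capacity[j]:
            return False
    return True
```

For three subtasks with demands `[5, 3, 4]` and the matrix `[[1, 0], [0, 1], [0, 1]]`, node 0 carries a load of 5 and node 1 a load of 7, so the assignment is feasible for capacities `[6, 8]` but not for `[6, 6]`.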

Furthermore, in real-world service scenarios, such as multi-stage IoT data analytics, AR, or industrial control systems, subtasks are often not independent. Instead, they exhibit strict inter-task dependencies where a task can only start execution after the completion of one or more prerequisite tasks. These dependency relationships can be effectively captured by a directed acyclic graph (DAG), denoted as $G = (V, E)$, where each vertex $v_i \in V$ represents a subtask $t_i$, and each directed edge $(v_i, v_k) \in E$ implies that subtask $t_k$ depends on the output of $t_i$.

DAG-based task modeling has been widely adopted in edge computing, especially for complex workflows in video processing pipelines, smart healthcare monitoring, and vehicular networks. For instance, in a real-time video surveillance service, subtasks may include frame capture, preprocessing, object detection, and result aggregation, each sequentially dependent on prior stages. DAG scheduling ensures correctness in execution order while optimizing makespan or latency.
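The video surveillance example can be expressed directly as a dependency graph. The sketch below uses Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+) to derive a valid execution order; the stage names are taken from the example above, and the mapping format (task to set of prerequisites) is the one that class expects.

```python
from graphlib import TopologicalSorter

# Each key is a subtask; its value is the set of subtasks it depends on.
dependencies = {
    "preprocessing": {"frame_capture"},
    "object_detection": {"preprocessing"},
    "result_aggregation": {"object_detection"},
}

# static_order() yields every task after all of its prerequisites.
execution_order = list(TopologicalSorter(dependencies).static_order())
print(execution_order)
```

Because this pipeline is a simple chain, only one valid order exists. For DAGs with parallel branches, several orders are valid, and a scheduler is free to choose among them to reduce makespan.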

In this context, the network model must solve a joint problem of task-to-node assignment and inter-node scheduling under dependency constraints. This introduces several practical challenges:

- Resource Heterogeneity: Edge nodes vary in computing power, storage, and connectivity.

- Mobility and Dynamism: Task execution environments may change due to user mobility or fluctuating load.

- Dependency-Aware Scheduling: Inter-task communication delays must be considered in dependent subtasks.

To address these challenges, several task scheduling strategies have been proposed, including heuristic approaches (e.g., earliest finish time, minimum critical path), graph partitioning methods, and learning-based schedulers. For example, a context-aware dynamic scheduling method has been proposed for DAG-based service graphs in edge–cloud collaborative networks, and a multi-level dependency inference mechanism has further been introduced that allows runtime reconfiguration of the DAG under uncertain task arrivals.
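As a rough sketch of the earliest-finish-time idea mentioned above, the following greedy list scheduler walks the DAG in topological order and places each subtask on the node where it would finish soonest. It is a deliberately simplified illustration: inter-node communication delay is ignored, each node runs one task at a time, and the names `cycles`, `deps`, and `speeds` are assumptions rather than notation from any cited scheduler.

```python
from graphlib import TopologicalSorter
from typing import Dict, Set, Tuple


def eft_schedule(cycles: Dict[str, float], deps: Dict[str, Set[str]],
                 speeds: Dict[str, float]) -> Tuple[Dict[str, str], Dict[str, float]]:
    """Greedy earliest-finish-time placement.

    cycles : task -> CPU cycles required
    deps   : task -> set of predecessor tasks
    speeds : node -> cycles processed per second
    Returns (placement, finish_time) dictionaries.
    """
    node_free = {n: 0.0 for n in speeds}   # time at which each node becomes idle
    finish: Dict[str, float] = {}
    placement: Dict[str, str] = {}
    graph = {t: deps.get(t, set()) for t in cycles}
    for task in TopologicalSorter(graph).static_order():
        # A task is ready once all of its predecessors have finished.
        ready = max((finish[p] for p in graph[task]), default=0.0)

        def finish_on(node: str) -> float:
            return max(ready, node_free[node]) + cycles[task] / speeds[node]

        best = min(speeds, key=finish_on)
        finish[task] = finish_on(best)
        node_free[best] = finish[task]
        placement[task] = best
    return placement, finish
```

Being greedy, the result depends on the order in which independent tasks are visited, so it is a heuristic rather than an optimal schedule.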

By integrating DAG models with real-time resource state and network metrics, the network model provides a formal basis for dynamic, distributed, and latency-aware task allocation. This modeling framework lays the foundation for subsequent latency, energy, and utility optimization discussed in the following subsections.

2.2.2. Latency Model

In edge computing environments, latency is a core performance metric that directly affects the responsiveness and reliability of time-sensitive applications such as autonomous driving, real-time video analytics, and industrial control. Accurately modeling latency is therefore essential for task scheduling, resource allocation, and service migration decisions.

The total latency for executing a subtask $t_i$ on node $j$ is commonly decomposed into two additive components, computation latency and communication latency:

$$T_{ij} = T_{ij}^{\mathrm{comp}} + T_{ij}^{\mathrm{comm}} = \frac{c_i}{f_j} + \frac{d_i}{B_j} \tag{2}$$

Here, $c_i$ is the computational requirement of task $t_i$, $f_j$ is the processing capacity of node $j$, $d_i$ is the volume of data to transmit, and $B_j$ is the available bandwidth. These parameters are heterogeneous across nodes due to differing hardware and networking capabilities.

This latency decomposition is widely adopted in the mobile edge computing literature, and aligns with empirical observations in 5G-enabled IoT platforms. For instance, Li et al. [30] experimentally showed that computation delay is dominant for CPU-intensive tasks, whereas communication latency becomes critical in bandwidth-constrained scenarios such as UAV swarms or remote sensing networks.
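Reading the two components as computation time (computational requirement divided by processing capacity) and transmission time (data volume divided by bandwidth), the decomposition can be evaluated numerically. The node parameters below are made-up values chosen only to show how the dominant component shifts between a CPU-heavy task and a data-heavy one.

```python
from typing import Dict, Tuple


def task_latency(cycles: float, cpu_hz: float,
                 data_bits: float, bandwidth_bps: float) -> float:
    """Computation latency plus communication latency, in seconds."""
    return cycles / cpu_hz + data_bits / bandwidth_bps


def fastest_node(cycles: float, data_bits: float,
                 nodes: Dict[str, Tuple[float, float]]) -> str:
    """nodes maps a name to (cpu_hz, bandwidth_bps); returns the lowest-latency node."""
    return min(nodes, key=lambda n: task_latency(cycles, nodes[n][0],
                                                 data_bits, nodes[n][1]))


# Hypothetical nodes: the edge has a slower CPU but a faster link than the cloud.
nodes = {"edge": (4e9, 16e6), "cloud": (20e9, 4e6)}

data_heavy = fastest_node(cycles=2e9, data_bits=8e6, nodes=nodes)
cpu_heavy = fastest_node(cycles=40e9, data_bits=1e6, nodes=nodes)
print(data_heavy, cpu_heavy)
```

With these numbers the data-heavy task is served faster at the edge (1.0 s versus 2.1 s), while the CPU-heavy task is served faster in the cloud (2.25 s versus about 10.06 s).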

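In practice, latency is not only a function of node capabilities but is also influenced by dynamic factors such as background workload, link congestion, and user mobility. For tasks with dependencies (e.g., DAG-modeled workflows), the end-to-end delay should also account for waiting time and data transfer latency between predecessor and successor tasks:

$$T_k^{\mathrm{fin}} = \max_{t_i \in \mathrm{pred}(t_k)} \left( T_i^{\mathrm{fin}} + T_{i,k}^{\mathrm{trans}} \right) + T_{kj} \tag{3}$$

where $T_k^{\mathrm{fin}}$ is the completion time of subtask $t_k$, $\mathrm{pred}(t_k)$ is its set of predecessors (the maximum is taken as zero when this set is empty), $T_{i,k}^{\mathrm{trans}}$ is the data transfer latency from $t_i$ to $t_k$, and $T_{kj}$ is the latency of executing $t_k$ on its assigned node $j$. The end-to-end delay is the largest completion time over all subtasks.

This reflects the critical-path-based latency formulation adopted in delay-aware DAG scheduling algorithms. To optimize latency in such settings, researchers have developed multi-hop service placement models, predictive mobility-aware routing, and computation offloading strategies that proactively reassign tasks to nodes with lower execution and transmission latency.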
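A critical-path computation of this kind can be sketched as follows, assuming the placement is already fixed so that each subtask has a known execution time and each dependency edge has a known transfer time. The dictionary names and the example numbers are illustrative assumptions.

```python
from graphlib import TopologicalSorter
from typing import Dict, Set, Tuple


def end_to_end_latency(exec_time: Dict[str, float],
                       transfer_time: Dict[Tuple[str, str], float],
                       deps: Dict[str, Set[str]]) -> float:
    """Length of the critical path through a task DAG.

    exec_time     : task -> execution latency on its assigned node
    transfer_time : (predecessor, successor) -> data transfer latency
    deps          : task -> set of predecessor tasks
    """
    graph = {t: deps.get(t, set()) for t in exec_time}
    finish: Dict[str, float] = {}
    for task in TopologicalSorter(graph).static_order():
        # A task waits for its slowest predecessor, including the transfer.
        ready = max((finish[p] + transfer_time.get((p, task), 0.0)
                     for p in graph[task]), default=0.0)
        finish[task] = ready + exec_time[task]
    return max(finish.values())


exec_time = {"capture": 1.0, "detect": 3.0, "track": 2.0, "aggregate": 1.0}
deps = {"detect": {"capture"}, "track": {"capture"},
        "aggregate": {"detect", "track"}}
transfer = {("capture", "detect"): 0.5, ("capture", "track"): 0.5,
            ("detect", "aggregate"): 0.25, ("track", "aggregate"): 2.0}
total = end_to_end_latency(exec_time, transfer, deps)
print(total)
```

In this example the `track` branch computes faster than `detect` but has the slower transfer into `aggregate`, so it determines the end-to-end delay. This is the kind of effect that makes inter-task communication relevant to migration decisions.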

By integrating real-time bandwidth sensing, queue length estimation, and edge load profiling, advanced latency models can support adaptive service migration. This is particularly important in applications requiring end-to-end latency guarantees (e.g., haptic control, telemedicine), where even minor fluctuations may violate SLAs.

2.2.3. Energy Consumption Model

In edge computing systems, energy efficiency is a critical design objective, especially under constraints imposed by battery-powered IoT devices and mobile edge nodes. Accurately modeling energy consumption is essential for optimizing task offloading, scheduling, and service migration decisions in real-world scenarios.

The total energy $E_{i,j}$ consumed by a task $i$ executed on node $j$ consists of two main components, computation energy and communication energy:

$$E_{i,j} = E_{i,j}^{\mathrm{comp}} + E_{i,j}^{\mathrm{comm}}, \qquad E_{i,j}^{\mathrm{comp}} = \kappa_j C_i f_j^{2}$$

$$E_{i,j}^{\mathrm{comm}} = P_j \cdot \frac{D_i}{B_j} \tag{6}$$

In the computation term, $\kappa_j$ is the energy coefficient dependent on the hardware characteristics of node $j$, such as CPU type and cooling efficiency. The term $\kappa_j C_i f_j^{2}$ represents the dynamic energy consumed by the processor when executing $C_i$ CPU cycles at frequency $f_j$. This model is consistent with the dynamic voltage and frequency scaling (DVFS) principle, under which the energy per cycle grows with the square of the operating frequency, and which is widely implemented in modern mobile and embedded processors [31].

In real deployments, edge servers, mobile devices, and cloud nodes exhibit heterogeneous $\kappa_j$ values. For instance, Li et al. [29] observed that terminal nodes such as smartphones exhibit significantly higher energy cost per CPU cycle than fixed edge servers, which justifies prioritizing local execution only for low-complexity tasks.

In Equation (6), communication energy is modeled as a linear function of transmission time, which is determined by the data size $D_i$ and bandwidth $B_j$. $P_j$ is the average transmission power of node $j$, which may vary depending on its radio technology (e.g., Wi-Fi, 5G, LoRa). This model assumes a constant transmit power during data transfer, a simplification that aligns with most empirical studies on wireless edge networks.
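The following sketch evaluates both energy components for a single task, assuming the quadratic frequency dependence and constant transmit power described in this subsection. The function names and all numeric values (energy coefficient, cycle count, power, bandwidth) are illustrative assumptions, not measurements.

```python
def computation_energy(kappa: float, cycles: float, freq_hz: float) -> float:
    """Dynamic CPU energy in joules: kappa * cycles * frequency^2."""
    return kappa * cycles * freq_hz ** 2


def communication_energy(tx_power_w: float, data_bits: float,
                         bandwidth_bps: float) -> float:
    """Transmit energy in joules: constant power * transmission time."""
    return tx_power_w * (data_bits / bandwidth_bps)


# Hypothetical task and node parameters.
kappa, cycles = 1e-27, 1e9
e_fast = computation_energy(kappa, cycles, 2e9)   # node clocked at 2 GHz
e_slow = computation_energy(kappa, cycles, 1e9)   # same node at 1 GHz
e_comm = communication_energy(0.5, 8e6, 20e6)     # 0.5 W over a 20 Mbit/s link
total_energy = e_fast + e_comm
```

Halving the frequency cuts the computation energy by a factor of four in this model, at the price of doubling the computation latency, which is the basic tension that energy-aware migration policies have to manage.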

Advanced models may further refine this by incorporating link quality indicators such as the signal-to-noise ratio (SNR) or packet error rate (PER). For example, adaptive modulation and coding schemes may adjust dynamically to trade off energy with reliability.

From a system-wide perspective, the total energy consumption is the summation of energy consumed by all subtasks across all layers. Optimizing this metric is vital in applications such as environmental sensing or smart wearables, where energy conservation directly prolongs operational lifespan.

Recent studies have proposed multi-objective scheduling strategies that jointly minimize latency and energy, often expressed as the energy-delay product (EDP). One such study introduced a lightweight EDP-aware migration policy that reportedly achieved over 35% energy savings in UAV edge systems without compromising response time. Similarly, RL agents have been trained to minimize long-term energy costs under workload uncertainty.

In summary, energy modeling at the edge requires capturing the quadratic impact of CPU frequency on computation energy and the linear dependence of transmission energy on transmission time, which is itself inversely proportional to bandwidth. Such models lay the foundation for green computing strategies in resource-constrained edge environments.

2.2.4. Utility Model

In real-time edge computing systems, service orchestration often involves balancing multiple conflicting performance goals such as minimizing latency, reducing energy consumption, maximizing task success rate, and ensuring QoS. To address this, a utility function is typically defined to quantitatively assess overall system performance and guide decision-making.

The utility function can be expressed as a weighted combination of key metrics:

$$U = \alpha \cdot Q + \beta \cdot S - \gamma \cdot \bar{T} - \delta \cdot E$$

where the following definitions are provided:

- $Q$: An aggregate QoS score based on service availability, reliability, and responsiveness.

- $S$: Task success rate, i.e., the ratio of completed to requested tasks.

- $\bar{T}$: Average task latency.

- $E$: Total energy consumption.

- $\alpha$, $\beta$, $\gamma$, and $\delta$: Scenario-specific weights.

This formulation allows system designers to trade off between performance metrics according to the application context. For instance, autonomous driving systems prioritize latency and reliability by assigning larger weights to the corresponding terms, whereas smart home scenarios may prefer energy efficiency and cost and therefore weight the energy term more heavily [30].
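To make the role of the weights concrete, the sketch below scores two candidate placements under two weight profiles. It assumes that all four metrics are normalized to [0, 1] and that latency and energy enter with a negative sign because they are costs; the `Metrics` class, the candidate values, and the weight profiles are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metrics:
    qos: float       # aggregate QoS score in [0, 1]
    success: float   # completed / requested tasks, in [0, 1]
    latency: float   # normalized average task latency in [0, 1]
    energy: float    # normalized total energy consumption in [0, 1]


def utility(m: Metrics, w_qos, w_success, w_latency, w_energy) -> float:
    """Weighted utility: benefits add, costs (latency, energy) subtract."""
    return (w_qos * m.qos + w_success * m.success
            - w_latency * m.latency - w_energy * m.energy)


# Hypothetical candidate placements for one service.
candidates = {
    "nearby_node": Metrics(qos=0.9, success=0.95, latency=0.2, energy=0.8),
    "distant_node": Metrics(qos=0.8, success=0.90, latency=0.6, energy=0.3),
}

# Hypothetical weight profiles: (QoS, success rate, latency, energy).
driving_weights = (0.3, 0.2, 0.4, 0.1)   # latency-critical
home_weights = (0.2, 0.2, 0.1, 0.5)      # energy-sensitive


def best(weights):
    """Return the candidate with the highest utility under the given weights."""
    return max(candidates, key=lambda name: utility(candidates[name], *weights))
```

With these illustrative numbers, the latency-critical profile selects the nearby node and the energy-sensitive profile selects the distant one, even though the candidate metrics are identical in both cases.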

Utility-based models form the foundation of many optimization frameworks, including the following:

- Pareto-Based Multi-Objective Optimization: Used when no single solution dominates all objectives (e.g., NSGA-II, multi-objective evolutionary algorithms (MOEAs)).

- RL: Agents learn optimal migration/scheduling policies by maximizing long-term utility over dynamic environments.

- Heuristic/Metaheuristic Search: Methods like genetic algorithms, simulated annealing, or ant colony optimization are used to explore the utility landscape efficiently.

In recent work, researchers have proposed a bi-objective utility function based on the EDP and employed a Deep Q-Network (DQN)-based learning agent to maximize service utility under dynamic task arrivals and user mobility. Such models have been successfully applied in smart city platforms, UAV swarms, and mobile health applications.

Therefore, the utility function serves not only as a performance metric but also as a core design principle for service migration algorithms, enabling the system to adaptively allocate resources under diverse operational constraints.

2.2.5. Trade-Offs Between Latency Guarantees and Other System Metrics

In real-time edge service migration, latency is often treated as a primary optimization objective due to the stringent response time requirements of applications such as autonomous driving and real-time video analytics. However, enforcing strict latency guarantees often introduces trade-offs with other critical system metrics, including energy consumption, operational cost, and reliability.

First, optimizing for minimal latency typically involves deploying services to nodes closest to end users or rapidly migrating services upon user mobility. This low-latency objective often results in increased service migration frequency, leading to higher energy consumption on edge nodes and mobile devices. Additionally, the need for more frequent computation offloading or redundant service instances to meet latency constraints increases bandwidth usage and processing overhead, ultimately raising infrastructure cost and system complexity.

Second, minimizing latency may conflict with energy-efficient scheduling strategies. For instance, offloading tasks to underutilized but distant nodes could save energy but induce higher delays. Conversely, using powerful nearby nodes ensures low latency but drains energy faster, reducing sustainability in resource-constrained environments.

Third, latency-optimized strategies may occasionally sacrifice reliability. Real-time migration that favors latency may not always consider node failure risks or resource instability, potentially leading to service interruptions.

Therefore, a balanced design is crucial. For latency-critical applications (e.g., autonomous vehicles), latency must be prioritized even at the cost of energy or cost efficiency. In contrast, for delay-tolerant scenarios (e.g., periodic data aggregation in smart homes), system designers may lean toward optimizing cost or energy while relaxing latency constraints.

A comprehensive service migration model should integrate latency as one of multiple objectives, with weights dynamically adjusted based on application requirements and environmental context. Some recent studies propose RL or Pareto-based multi-objective optimization frameworks to adaptively balance latency and other metrics in dynamic edge environments.

2.3. Benchmark Datasets and Open Platforms

To support reproducible experimentation and to bridge the gap between theoretical models and real-world deployment, several studies have introduced benchmark datasets and open platforms tailored for service migration in edge computing environments. These resources play a critical role in validating the effectiveness of migration policies under realistic mobility, network, and resource conditions.

Wang et al. [32] conducted one of the earliest comprehensive surveys on service migration in mobile edge computing, emphasizing the importance of leveraging empirical datasets, such as GPS traces from urban vehicles and mobile users, to evaluate latency and continuity under dynamic mobility patterns. Expanding on this idea, Wang et al. [33] formulated the service migration problem as a Markov decision process (MDP) and evaluated their solution using real-world taxi traces from San Francisco. Their experiments demonstrated how realistic mobility patterns can influence the decision boundaries of migration policies and highlighted the benefit of data-driven evaluation in capturing spatiotemporal user behaviors.

In a more recent study, Liu et al. [34] proposed a deep reinforcement learning (DRL)-based framework to jointly optimize service migration and resource allocation in edge-enabled IoT systems. The effectiveness of the approach was evaluated using real-world urban taxi mobility traces, demonstrating how realistic user mobility patterns impact the adaptability and efficiency of migration strategies. This work further highlights the importance of benchmark datasets in capturing the dynamic and context-sensitive nature of edge computing environments.

Yuan et al. [35] proposed a joint optimization framework for vehicular service migration and mobility control, and they used large-scale vehicular traffic simulations to emulate realistic road conditions and service demands. By incorporating routing constraints and edge resource limitations into their test scenarios, their work illustrated the complex interplay between migration strategies and user trajectory planning—offering a valuable benchmark for future research.

In terms of implementation, Chen et al. [36] introduced an edge cognitive computing architecture that includes a dynamic service migration mechanism based on user behavior cognition. They also developed an edge cognitive computing (ECC)-based test platform that supports real-time migration and load adaptation, enabling elastic and personalized service delivery. This practical system was evaluated using user interaction data and demonstrated measurable gains in latency reduction and energy efficiency—making it a rare example of an open experimental platform supporting real-world service migration scenarios.

Together, these works provide both methodological guidance and practical tools for the community, illustrating how real datasets, mobility traces, and cognitive platforms can be combined to develop, test, and benchmark service migration solutions under diverse and dynamic edge environments.

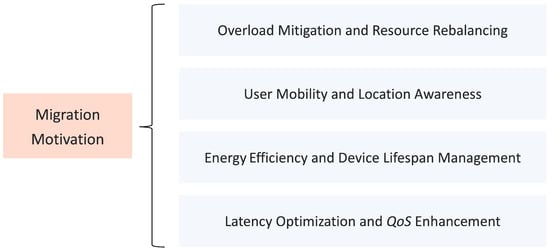

3. Migration Motivation

We elaborate on the key aspects of service migration motivation, including Overload Mitigation and Resource Rebalancing, User Mobility and Location Awareness, Energy Efficiency and Device Lifespan Management, and Latency Optimization and QoS Enhancement, as shown in Figure 4.

Figure 4. Service migration motivation.

3.1. Overload Mitigation and Resource Rebalancing

In edge computing networks, localized overload frequently arises due to highly dynamic user behavior, bursty traffic patterns, or spatially uneven service demands. Without timely mitigation, overloaded nodes suffer from degraded QoS, prolonged response times, and potential service failures. Service migration serves as an effective strategy to redistribute workloads toward underutilized edge nodes, thereby maintaining system performance and improving user experience.
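A threshold-triggered redistribution of this kind can be sketched as a simple greedy planner. This is an illustrative baseline rather than any of the cited mechanisms: the utilization threshold, the lightest-service-first rule, and the node and service names are all assumptions.

```python
def utilization(services, capacity):
    """Fraction of a node's capacity used by its hosted services."""
    return sum(services.values()) / capacity


def plan_migrations(placement, capacity, threshold=0.8):
    """Return (service, source, target) moves that relieve overloaded nodes.

    placement: {node: {service: load}}; capacity: {node: capacity}.
    """
    placement = {n: dict(s) for n, s in placement.items()}  # work on a copy
    moves = []
    for source in sorted(placement):
        while utilization(placement[source], capacity[source]) > threshold:
            # Move the lightest service first to keep each migration cheap.
            service = min(placement[source], key=placement[source].get)
            load = placement[source][service]
            # A target must stay at or below the threshold after the move.
            targets = [
                n for n in placement
                if n != source
                and (sum(placement[n].values()) + load) / capacity[n] <= threshold
            ]
            if not targets:
                break  # no feasible target; leave the remaining overload
            target = min(
                targets, key=lambda n: utilization(placement[n], capacity[n])
            )
            placement[target][service] = placement[source].pop(service)
            moves.append((service, source, target))
    return moves


# Hypothetical cluster: n1 starts at 90% utilization.
placement = {
    "n1": {"s1": 50, "s2": 25, "s3": 15},
    "n2": {"s4": 20},
    "n3": {"s5": 60},
}
capacity = {"n1": 100, "n2": 100, "n3": 100}
moves = plan_migrations(placement, capacity)
```

The planner ignores migration cost and latency to the user; in practice those terms would be folded into the target selection.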

A broad body of research has explored overload-triggered service migration mechanisms. Gao et al. [37] proposed a time-aware model that migrates services based on real-time load and response delay to reduce latency. Liang et al. [38] introduced a joint optimization framework, leveraging game theory to dynamically schedule service migration and bandwidth allocation in multi-cell edge environments. Yuan et al. [35] extended this idea with an MDP-based model to determine optimal migration policies under multi-user interference and mobility uncertainty.

Container-level service migration has also received attention for its granularity and responsiveness. Yang et al. [39] designed a task-aware migration mechanism using checkpointed containers to minimize cold-start delays and enable load-sensitive relocation of microservices. Kaur et al. [40] provided a survey on container migration strategies across cloud, fog, and edge systems, highlighting how predictive load monitoring and adaptive migration policies can improve performance and energy efficiency.

In addition, Gao et al. [37] presented a time-segmented cloud–edge collaboration framework that dynamically reconfigures edge services across spatial and temporal dimensions to alleviate overload and improve resource balance in large-scale infrastructures. Wang et al. [33] analyzed service migration trends from a holistic perspective, identifying congestion mitigation as a primary driver and emphasizing the integration of AI-driven orchestration frameworks to enable proactive migration. Li et al. [41] emphasized the importance of hybrid orchestration mechanisms that combine centralized global controllers with distributed local agents to address overload scenarios. Federated learning (FL) has also emerged as a promising enabler. Caro et al. [42] developed a privacy-preserving federated migration framework that enables edge nodes to collaboratively learn overload handling policies without raw data exchange. Building upon these developments, Bozkaya-Aras [43] introduced a digital twin-assisted migration framework that replicates the real-time operational state of IoT edge systems. By incorporating predictive analytics with bipartite graph optimization, the framework proactively reallocates services to balance workloads, reduce energy consumption, and minimize latency. This approach demonstrates how real-time resource sensing and system-level modeling can significantly enhance overload mitigation strategies, particularly under dynamic and traffic-intensive conditions.

Overall, service migration for overload mitigation and resource rebalancing plays a crucial role in maintaining edge system stability, improving responsiveness, and optimizing resource utilization in dynamic environments. As edge infrastructures become increasingly heterogeneous and demand-intensive, intelligent and adaptive migration strategies will remain a core mechanism for balancing workloads and sustaining service quality.

3.2. User Mobility and Location Awareness

In MEC, user mobility significantly impacts the effectiveness of service delivery. As users traverse different network regions—such as vehicles moving between RSUs, pedestrians switching between access points, or drones navigating dynamic airspace—the static deployment of edge services often results in increased latency, service discontinuity, and degraded QoE. To address this, service migration driven by mobility and location awareness aims to dynamically adapt service placement in response to user movements, enabling persistent low-latency access and seamless user experience.

Recent research underscores the importance of mobility-aware service migration, particularly in vehicular networks and IoT-rich urban environments. Jia et al. [44] proposed a Lyapunov optimization-based MEC framework for Internet of Vehicles (IoV), where computing resources are dynamically allocated and services are migrated across RSUs to minimize communication delay and handoff overhead. Labriji et al. [22] further designed a dynamic migration model tailored for vehicular MEC environments, where RSU loads and vehicle positions are continuously monitored to facilitate predictive disruption-free service handover.

To better align migration decisions with user behavior, trajectory prediction techniques are widely adopted. Maleki et al. [45] leveraged machine learning models to predict short-term user positions and proactively trigger migration events before users leave a given edge node’s coverage, thus reducing service drop rates. Filiposka et al. [46] developed a real-time mobility-aware resource management scheme that adjusts task allocation based on both current locations and historical movement traces, enhancing service stability in urban deployments. However, uncertainty in mobility patterns—especially in non-deterministic or multi-modal transportation settings—still poses challenges for the accuracy and robustness of these migration strategies. Service migration is also increasingly extended to heterogeneous and aerial edge computing environments. For instance, Liu et al. [47] investigated dynamic task offloading in multi-UAV edge networks, where services are adaptively assigned to UAVs in proximity to moving users, improving both energy efficiency and responsiveness. Meanwhile, Zhang et al. [48] examined QoS-driven mobility-aware and dependence-aware service chain adaptation, where interdependent modules are dynamically reallocated in accordance with both user movement and latency constraints between modules.
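The idea of triggering migration before a user leaves coverage can be illustrated with a deliberately simple predictor. The sketch below swaps the learned trajectory models used in the cited work for constant-velocity extrapolation from the last two position samples; node coordinates, the coverage radius, and the look-ahead horizon are hypothetical.

```python
import math


def predict_position(p_prev, p_curr, steps_ahead=1):
    """Constant-velocity extrapolation from the last two position samples."""
    vx, vy = p_curr[0] - p_prev[0], p_curr[1] - p_prev[1]
    return (p_curr[0] + vx * steps_ahead, p_curr[1] + vy * steps_ahead)


def migration_target(p_prev, p_curr, current_node, nodes, radius, steps_ahead=3):
    """Return the node to migrate to, or None if the user stays in coverage."""
    predicted = predict_position(p_prev, p_curr, steps_ahead)
    if math.dist(predicted, nodes[current_node]) <= radius:
        return None  # still covered at the prediction horizon
    # Otherwise pick the node closest to the predicted position.
    return min(nodes, key=lambda n: math.dist(predicted, nodes[n]))


# Hypothetical roadside units 100 m apart, each covering a 60 m radius.
nodes = {"rsu_a": (0.0, 0.0), "rsu_b": (100.0, 0.0)}

fast_user = migration_target((30.0, 0.0), (40.0, 0.0), "rsu_a", nodes, radius=60.0)
slow_user = migration_target((10.0, 0.0), (15.0, 0.0), "rsu_a", nodes, radius=60.0)
```

The fast user is predicted to be 70 m from the serving unit within three steps, so migration is triggered early, while the slow user stays put. A real system would also need to handle the prediction uncertainty discussed in this subsection.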

To safeguard user privacy during mobility-aware orchestration, FL has been introduced. Wang et al. [49] proposed an asynchronous FL-based framework that enables vehicles to collaboratively learn location popularity models and optimize caching decisions without exchanging raw mobility data. Building on this, Wu et al. [50] integrated FL with DRL to develop a proactive mobility-aware caching and migration system that effectively reduces service interruption under rapid mobility conditions.

In summary, mobility-aware and location-aware service migration is essential for delivering reliable and responsive services in MEC, especially in highly dynamic or privacy-sensitive environments.

3.3. Energy Efficiency and Device Lifespan Management

In edge computing systems, where distributed edge nodes often operate under stringent energy and hardware limitations, maintaining long-term service reliability requires not only efficient task allocation but also proactive workload redistribution. Service migration, when driven by energy-awareness and hardware health considerations, becomes an essential mechanism to offload stress from battery-depleted, thermally saturated, or aging devices. This not only helps reduce the risk of abrupt service termination but also enhances the overall sustainability of edge infrastructures.

Recent studies have increasingly highlighted the importance of energy-efficient service migration. Li et al. [13] developed an online decision-making framework that triggers service migration when node-level energy consumption exceeds adaptive thresholds, enabling reduced energy footprints across the system without degrading service quality. Similarly, Zhou et al. [51] investigated energy-aware migration strategies for dense multi-user networks, demonstrating that offloading computation from heavily loaded base stations to neighboring energy-sufficient nodes can significantly extend device operational time and lower network-wide energy cost.

Service migration also plays a vital role in managing thermal stress and preventing hardware wear. As prolonged high-load execution increases thermal cycling, researchers have proposed thermal-aware migration to shift workloads away from overheating units. For example, Toumi et al. [52] proposed a scheduling strategy that integrates thermal monitoring with energy metrics to decide migration timing in industrial IoT scenarios. Additionally, Ning et al. [53] designed a lightweight imitation learning-based mechanism to migrate services between mobile edge nodes in real time, ensuring minimal energy consumption and extended hardware uptime, particularly under fluctuating workloads and constrained power budgets.

In mobility-heavy edge environments such as vehicular or drone-based systems, migration decisions must also take into account dynamic energy availability across moving nodes. Niu et al. [54] proposed a meta-RL approach that adapts service scheduling according to residual energy, node aging characteristics, and user demand density, achieving higher overall efficiency compared to static heuristics. Furthermore, Ma et al. [55] integrated energy consumption prediction into a trajectory-aware migration policy, reducing unnecessary retransmission and improving the energy–delay tradeoff in user-centric edge applications.

Finer-grained strategies have emerged at the microservice level. Tocze and Nadjm-Tehrani’s work [56] on distributed microservice placement formulates an integer linear programming (ILP) model to jointly optimize energy consumption and latency for edge service request placement. Their framework takes into account the resource heterogeneity across edge nodes, varying microservice communication patterns, and the stochastic nature of service arrivals. By using workload-aware prediction and request batching, their solution enables proactive scheduling that reduces redundant migrations and limits energy-intensive task duplications.

Overall, energy efficiency and device lifespan management form one of the most pragmatic motivations for service migration in edge computing, especially as edge nodes scale in number, diversity, and mission-critical responsibilities. Migration offers a viable path to shift computation away from vulnerable nodes toward stable, energy-rich environments, ultimately contributing to more resilient, cost-effective, and sustainable edge ecosystems.

3.4. Latency Optimization and QoS Enhancement

In latency-critical applications such as autonomous driving, virtual reality, and industrial control, the delay in service response can significantly degrade system performance and user experience. MEC aims to meet these demands by offering computation closer to users; however, fluctuating workloads, user mobility, and dynamic network states often disrupt this goal. Service migration emerges as a vital mechanism for dynamically adjusting service placement, ensuring low latency and consistent QoS.

Recent studies have increasingly emphasized latency-aware and collaborative service migration frameworks. Zeng et al. [57] proposed a two-stage collaborative microservice migration strategy for DAG-based applications, utilizing DRL and network flow optimization to reduce end-to-end latency in MEC. Their approach effectively distributes microservices across edge clusters, addressing both workload imbalance and latency-sensitive dependencies. Similarly, Zhang et al. [58] introduced the quality-of-service-aware edge–cloud service migration framework, which performs dynamic service migration guided by QoS metrics and mobility patterns, adapting to time-varying latency constraints across edge–cloud infrastructures. To anticipate latency degradation before it occurs, predictive migration models have been widely explored. Ma et al. [59] developed a forecasting-based placement scheme using Deep Learning (DL) to anticipate network congestion and proactively reallocate services to minimize potential response delays. Likewise, Peng et al. [60] designed a transfer RL-based approach to handle computing and communication costs in vehicular edge networks, ensuring low-latency service continuity even under high mobility and workload surges.

In highly dynamic environments, probabilistic and context-aware models improve adaptability. Xu et al. [61] introduced a probabilistic delay- and mobility-aware migration framework (PDMA) that uses trajectory uncertainty modeling and real-time delay profiling to trigger migration events. Additionally, in large-scale edge deployments, Chi et al. [62] proposed a multi-criteria decision-making model that jointly considers network load, latency violations, and node reliability to select optimal migration targets for microservices in ultra-large MEC infrastructures. Moreover, low-latency service migration also benefits from contextual scheduling. Saha et al. [63] explored a scheduling mechanism based on contextual information such as link quality, service criticality, and temporal load fluctuation to drive proactive service relocation. Their model demonstrated improved QoS stability and reduced average migration delay.

Together, these advancements reflect a shift toward more intelligent, proactive, and fine-grained latency-sensitive service migration mechanisms. By integrating predictive analytics, learning-based decision models, and real-time context awareness, MEC systems can sustain high QoS standards in increasingly heterogeneous and mobile network environments.

4. Key Techniques for Service Migration

In edge-native computing environments, dynamic resource availability, task heterogeneity, and user mobility necessitate intelligent, adaptive service migration techniques. To address these challenges, this section systematically presents six representative approaches, emphasizing their real-time decision-making capabilities, deployment rationality, and suitability under constrained edge scenarios.

4.1. Approximate Algorithms

In the context of edge computing, service migration should respond to highly dynamic environments characterized by constrained resources, heterogeneous device capabilities, and fluctuating user demands. Approximate optimization algorithms have emerged as effective tools for tackling the inherent complexity of multi-objective migration problems in such environments. These methods offer a favorable trade-off between computational efficiency and solution quality, making them suitable for latency-sensitive applications at the edge.

In this work, we adopt NSGA-II to solve a tri-objective migration optimization problem that balances delay, energy consumption, and system load imbalance. NSGA-II constructs an approximation of the Pareto-optimal set of migration strategies by maintaining solution diversity while converging toward the Pareto front, which is particularly advantageous in multi-service edge scenarios with dynamic interaction topologies. Compared with deterministic or exhaustive search methods, NSGA-II significantly reduces computational overhead, thereby supporting real-time reconfiguration under volatile system loads.
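The Pareto-dominance test at the heart of NSGA-II can be sketched in a few lines. The snippet covers only the non-dominated filtering step, not the full algorithm (there is no crowding distance, crossover, or mutation here), and the candidate plans with their (delay, energy, load imbalance) values are hypothetical.

```python
def dominates(a, b):
    """True if a is no worse than b in every objective and strictly better
    in at least one (all objectives are minimized)."""
    return (all(x <= y for x, y in zip(a, b))
            and any(x < y for x, y in zip(a, b)))


def pareto_front(plans):
    """Keep only the plans that no other plan dominates."""
    return {
        name: objectives
        for name, objectives in plans.items()
        if not any(dominates(other, objectives) for other in plans.values())
    }


# Hypothetical migration plans: (delay, energy, load imbalance).
plans = {
    "p1": (10.0, 5.0, 0.3),
    "p2": (12.0, 4.0, 0.2),
    "p3": (11.0, 6.0, 0.4),  # worse than p1 in every objective
    "p4": (9.0, 7.0, 0.5),
}
front = pareto_front(plans)
```

The surviving plans are mutually incomparable, so choosing among them still requires a preference, for example a scenario-specific weighting of the three objectives.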

Inspired by the operational scale and concurrency of IoT applications, swarm intelligence algorithms such as particle swarm optimization (PSO) and artificial bee colony (ABC) are further employed to explore decentralized solution spaces. For example, based on the methodology proposed in [64], PSO enables rapid exploration of high-dimensional migration states by simulating the social behavior of agents. ABC, on the other hand, enhances global search ability through division of exploration and exploitation phases. These algorithms are well-suited for non-convex and nonlinear optimization problems that are frequently encountered in real-world edge networks.

In addition, recent work has demonstrated the effectiveness of parallel multi-objective approximate algorithms in IoT service composition, where the energy-aware integration of temporal service flows can be optimized under shared constraints. Building on this insight, we extend the use of approximate models to the migration domain, adapting their structural advantages to reduce the frequency and cost of unnecessary migrations while maximizing system-wide performance consistency.

The principal strength of these approximate models lies in their scalability and adaptability. Heuristic-enhanced approximations can maintain near-optimal task scheduling under dynamically detected event boundaries. Our system design therefore incorporates these approximate methods not merely as auxiliary optimizers but as core enablers of real-time decision-making in migration control, capable of supporting distributed edge networks with minimal tuning effort.

4.2. Heuristic Algorithms

In latency-sensitive edge computing scenarios, where real-time responsiveness and low-overhead decision-making are essential, heuristic algorithms provide practical and lightweight solutions for dynamic service migration. These methods rely on problem-specific knowledge and rule-based logic to generate near-optimal results within constrained time budgets, making them ideal for environments with limited computational capacity and high service volatility.

This work integrates a hybrid heuristic framework that combines offline initialization with online learning. Specifically, we adopt a two-stage method in which the initial service deployment is constructed using a minimum spanning tree (MST) over service dependency graphs, ensuring a low-latency communication baseline among interconnected service modules. On top of this static topology, we deploy a Q-learning-based online decision module to adaptively trigger migration in response to environmental changes [65]. This reinforcement-enhanced heuristic balances exploitation of known good placements with exploration of new migration paths, facilitating continuous optimization during system operation.
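The offline stage can be illustrated with a standard MST construction over a service dependency graph. The sketch uses Kruskal's algorithm with a union-find structure as one possible choice (the source does not state which MST algorithm is used), and the service names and edge weights, interpreted as communication latencies, are hypothetical.

```python
def minimum_spanning_tree(nodes, edges):
    """Kruskal's algorithm. edges: iterable of (weight, u, v) tuples.
    Returns the list of edges in the minimum spanning tree."""
    parent = {n: n for n in nodes}

    def find(n):
        # Follow parent pointers to the set representative (path halving).
        while parent[n] != n:
            parent[n] = parent[parent[n]]
            n = parent[n]
        return n

    tree = []
    for weight, u, v in sorted(edges):
        root_u, root_v = find(u), find(v)
        if root_u != root_v:      # the edge joins two separate components
            parent[root_u] = root_v
            tree.append((weight, u, v))
    return tree


# Hypothetical service dependency graph; weights are link latencies in ms.
services = ["api", "auth", "cache", "db"]
dependencies = [
    (2, "api", "auth"),
    (5, "api", "db"),
    (1, "api", "cache"),
    (3, "cache", "db"),
    (4, "auth", "db"),
]
mst = minimum_spanning_tree(services, dependencies)
mst_latency = sum(weight for weight, _, _ in mst)
```

The resulting tree keeps every service reachable over the cheapest set of links, which gives the online stage a low-latency starting topology to adapt from.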

To further improve responsiveness under bursty workloads, we implement a time-window-based greedy scheduling mechanism. This approach collects and analyzes short-term system metrics such as CPU usage, memory availability, and link bandwidth within sliding time windows. Based on this historical state profile, the algorithm selects target nodes for service migration by evaluating marginal resource gains and local utility scores. Compared to static rule sets, this dynamic heuristic enables early identification of potential hot spots and mitigates performance bottlenecks before they escalate.
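A stripped-down version of such a windowed greedy selector is sketched below. It tracks only CPU utilization, whereas the mechanism described here also considers memory and bandwidth; the class name, window size, and sample values are illustrative assumptions.

```python
from collections import deque


class WindowedMonitor:
    """Keeps the most recent `size` utilization samples for each node."""

    def __init__(self, size=5):
        self.size = size
        self.samples = {}

    def record(self, node, cpu_util):
        # deque(maxlen=...) silently drops the oldest sample when full.
        self.samples.setdefault(node, deque(maxlen=self.size)).append(cpu_util)

    def average(self, node):
        window = self.samples[node]
        return sum(window) / len(window)

    def greedy_target(self, exclude=()):
        """Pick the node with the lowest windowed average utilization."""
        candidates = [n for n in self.samples if n not in exclude]
        return min(candidates, key=self.average)


monitor = WindowedMonitor(size=3)
for util in (0.9, 0.2, 0.2, 0.2):   # an old spike, then a quiet period
    monitor.record("edge_a", util)
for util in (0.5, 0.5, 0.5):
    monitor.record("edge_b", util)

target = monitor.greedy_target()
fallback = monitor.greedy_target(exclude=("edge_a",))
```

Because the window forgets the early spike on `edge_a`, that node is selected as the migration target; a longer window would react more slowly but be less sensitive to noise.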

The effectiveness of such hybrid heuristics has been demonstrated in recent edge-centric scheduling frameworks where real-time task adaptation to detected events significantly reduces average processing delay [66]. Moreover, these frameworks show that heuristic selection strategies guided by service execution history and task completion feedback can outperform static placement under variable request patterns and constrained device availability.

In our implementation, heuristic models act as lightweight controllers embedded within each edge node. Their low algorithmic complexity ensures negligible latency overhead, while their rule-adaptable architecture enables fast convergence to high-performing migration plans. This makes them particularly suitable for fine-grained service orchestration in scenarios involving mobile users, constrained bandwidth, or time-sensitive applications such as video analytics and industrial monitoring.

4.3. Game Theory

In decentralized edge computing environments, service migration decisions often involve multiple autonomous nodes or services, each seeking to optimize its own performance under shared resource constraints. Game-theoretic approaches offer a powerful modeling framework to capture these interactive and potentially conflicting objectives. By formulating migration as a strategic decision process among rational agents, such models support the design of stable and self-organizing service placement strategies without relying on centralized control.

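In this work, we construct a non-cooperative game model in which each service instance acts as a self-interested player aiming to minimize its individual migration cost [67]. The cost function of service $i$ (the negative of its utility) integrates delay, energy consumption, and migration overhead:

$$C_i(a_i, a_{-i}) = \lambda_1 T_i(a_i, a_{-i}) + \lambda_2 E_i(a_i, a_{-i}) + \lambda_3 M_i(a_i)$$

where $a_i$ is the target node chosen by service $i$, $a_{-i}$ denotes the choices of all other services, $T_i$, $E_i$, and $M_i$ are the resulting delay, energy consumption, and migration overhead, and $\lambda_1$, $\lambda_2$, and $\lambda_3$ are weighting coefficients.

Each service selects a target node such that no unilateral move can further reduce its cost, resulting in a Nash equilibrium. Compared with purely heuristic policies, the game-theoretic formulation can guarantee convergence to stable configurations under suitable conditions (for example, when the game admits a potential function), even with asynchronous updates. Furthermore, decentralized task allocation models based on potential games and evolutionary game theory can improve system efficiency while preserving individual rationality.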
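How services reach such an equilibrium can be illustrated with best-response dynamics on a toy congestion game. The cost used here, a per-service base delay plus a penalty proportional to the number of services on the chosen node, is a simplifying assumption that omits the energy and migration-overhead terms; it is chosen because it yields a potential game in which best responses are known to converge. All names and values are hypothetical.

```python
def cost(service, node, assignment, base_delay, congestion=1.0):
    """Cost of `service` on `node`: its own delay plus a load-dependent penalty."""
    others = sum(1 for s, n in assignment.items() if n == node and s != service)
    return base_delay[service][node] + congestion * (others + 1)


def best_response_dynamics(assignment, base_delay, nodes, max_rounds=100):
    """Let services take turns moving to their cheapest node until none moves."""
    assignment = dict(assignment)
    for _ in range(max_rounds):
        changed = False
        for service in sorted(assignment):
            best = min(nodes, key=lambda n: cost(service, n, assignment, base_delay))
            current = cost(service, assignment[service], assignment, base_delay)
            if cost(service, best, assignment, base_delay) < current:
                assignment[service] = best
                changed = True
        if not changed:
            return assignment  # no service can improve unilaterally
    return assignment


nodes = ["n1", "n2"]
base_delay = {
    "s1": {"n1": 1.0, "n2": 2.0},
    "s2": {"n1": 1.0, "n2": 1.5},
    "s3": {"n1": 1.0, "n2": 3.0},
}
start = {"s1": "n1", "s2": "n1", "s3": "n1"}   # everything crowds onto n1
equilibrium = best_response_dynamics(start, base_delay, nodes)
```

Starting from a fully congested node, one service relocates and the remaining two then have no incentive to move, which is exactly the unilateral-stability condition that defines a Nash equilibrium.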

To enhance global resource utilization, we further integrate cooperative game principles by introducing incentive-compatible mechanisms such as reputation-based credits and resource sharing rewards. These mechanisms encourage edge nodes to participate in collaborative scheduling and migration, particularly in federated or heterogeneous edge clusters [68]. Drawing on this framework, we design a token-based exchange model where nodes gain credits by accepting external migration requests and spend them when offloading their own services. This balances load across the network while maintaining fairness and autonomy.
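The credit flow of such a token-based exchange can be sketched as a simple ledger. This is an illustrative assumption about how the bookkeeping might look, not the mechanism of [68]; the class name `CreditLedger`, the flat per-migration `price`, and the initial balance are hypothetical.

```python
class CreditLedger:
    """Tracks migration credits: hosts earn them, offloading nodes spend them."""

    def __init__(self, nodes, initial_credits=5):
        # Every node starts with the same (assumed) credit endowment.
        self.balance = {node: initial_credits for node in nodes}

    def can_offload(self, node, price=1):
        """A node may offload only if it can pay the migration price."""
        return self.balance[node] >= price

    def settle(self, source, host, price=1):
        """Transfer `price` credits from the offloading node to the host.

        Returns True if the migration is paid for, False if the source
        lacks credits (in which case no balances change).
        """
        if source == host:
            raise ValueError("source and host must be different nodes")
        if not self.can_offload(source, price):
            return False
        self.balance[source] -= price
        self.balance[host] += price
        return True
```

Because credits are only transferred and never created, the total stays constant, and a node that has exhausted its balance must first host services for others before it can offload again.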

The use of game-theoretic models offers several advantages for real-time edge migration. First, it enables distributed execution and local decision-making, significantly reducing migration latency and coordination overhead. Second, it accounts for dynamic system states and strategic behaviors, allowing robust adaptation to varying traffic, node availability, and service priorities. Third, game-theoretic frameworks naturally extend to multi-agent and multi-tenant scenarios, supporting scalability in complex edge infrastructures.

By embedding game-theoretic agents within the service migration controller, our architecture ensures that migration decisions are both resource-aware and strategically sound. The combination of non-cooperative equilibrium seeking and cooperative incentive alignment enables intelligent workload reallocation that respects both system-wide objectives and local node preferences, making it well-suited for heterogeneous, large-scale edge deployments.

4.4. Reinforcement Learning

To address the sequential and uncertain nature of service migration in dynamic edge computing environments, RL offers an effective framework for modeling migration decisions as MDPs [69]. Unlike heuristic or rule-based methods, RL enables the system to autonomously learn optimal migration strategies through trial-and-error interactions with the environment, making it particularly suitable for environments characterized by user mobility, fluctuating workloads, and partial observability.

In our architecture, we formulate the service migration problem as a continuous-time Markov decision process (CTMDP), where the system state s encapsulates node resource usage, bandwidth availability, and user request queues. The reward function penalizes high delay and energy consumption:
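One plausible form of such a reward, given here as an assumption for illustration, is the negative weighted sum of the delay and energy incurred by taking migration action $a$ in state $s$. The symbols $D$, $E$, $w_d$, and $w_e$ are hypothetical notation:

```latex
r(s, a) = -\big( w_d \, D(s, a) + w_e \, E(s, a) \big), \qquad w_d, w_e \ge 0
```

Larger weights make the agent more averse to the corresponding cost, so the ratio $w_d / w_e$ sets the trade-off between latency and energy.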

To solve the CTMDP, we employ temporal-difference (TD) learning to update the state-value function iteratively:
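In its standard discrete-time form, TD(0), the update reads as follows, with learning rate $\alpha$ and discount factor $\gamma$. This is the textbook rule and is shown as an assumption about the intended notation; a continuous-time variant would typically replace $\gamma$ with a discount that depends on the sojourn time $\tau$ between decisions, such as $e^{-\beta \tau}$:

```latex
V(s_t) \leftarrow V(s_t) + \alpha \big[ r_{t+1} + \gamma \, V(s_{t+1}) - V(s_t) \big]
```

The bracketed term is the TD error: the gap between the current estimate and a one-step bootstrapped target.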

This approach enables online policy refinement in response to newly observed system feedback, enhancing the model’s real-time adaptability.
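A tabular version of the TD update can be sketched in a few lines. This is a minimal illustration of standard TD(0), assuming discrete, hashable states; the function name `td0_update` and the default values of `alpha` and `gamma` are hypothetical.

```python
from collections import defaultdict


def td0_update(values, state, reward, next_state, alpha=0.1, gamma=0.9):
    """Apply one TD(0) step to the state-value table and return the TD error."""
    # One-step bootstrapped target minus the current estimate.
    td_error = reward + gamma * values[next_state] - values[state]
    # Move the estimate a fraction alpha toward the target.
    values[state] += alpha * td_error
    return td_error


# Unseen states default to a value of 0.0.
values = defaultdict(float)
```

Each observed transition (state, reward, next state) triggers one call, so the value table is refined online as new feedback arrives.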

The effectiveness of RL in edge service migration has been validated in prior work, where dynamic scheduling policies trained with tabular Q-learning or TD learning significantly outperform static policies under non-stationary conditions. In particular, these works highlight RL’s ability to handle diverse QoS metrics, including task urgency, queue latency, and node-specific failure rates [69].

To further improve convergence speed and policy robustness, our system integrates historical replay buffers and adaptive learning rates. The replay buffer stores past state transitions and reward signals, enabling more stable and sample-efficient learning, especially in bursty service scenarios where instantaneous data may not be representative. Additionally, we initialize policies using heuristic priors to reduce exploration time in early deployment phases.
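The two mechanisms mentioned above can be sketched as follows. This is a minimal illustration under stated assumptions: the buffer is a fixed-capacity FIFO with uniform sampling, and the learning rate follows an inverse-time decay. The names `ReplayBuffer` and `decayed_learning_rate`, and the schedule itself, are hypothetical choices rather than the ones used in the surveyed systems.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-capacity store of past transitions; the oldest are evicted first."""

    def __init__(self, capacity):
        self._transitions = deque(maxlen=capacity)

    def push(self, state, action, reward, next_state):
        self._transitions.append((state, action, reward, next_state))

    def sample(self, batch_size, rng=random):
        """Draw up to `batch_size` distinct transitions uniformly at random."""
        k = min(batch_size, len(self._transitions))
        return rng.sample(list(self._transitions), k)

    def __len__(self):
        return len(self._transitions)


def decayed_learning_rate(step, alpha0=0.5, decay=0.01):
    """Inverse-time decay: large steps early on, smaller steps as training proceeds."""
    return alpha0 / (1.0 + decay * step)
```

Sampling from stored history rather than only the latest transition smooths out bursts, since a single unrepresentative interval no longer dominates the update.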

Compared to approximate or game-theoretic approaches, RL offers several key advantages in edge computing contexts:

- Online Adaptation: RL dynamically adjusts migration decisions in response to real-time feedback, accommodating non-deterministic workloads and mobility patterns.

- Policy Generalization: Once trained, the learned policy can be reused or fine-tuned across similar edge environments, reducing retraining costs.

- QoS Awareness: RL inherently supports multi-objective optimization, enabling fine-grained control over delays, energy, and resource utilization.

These properties make RL an attractive component for intelligent migration control. In our design, RL agents are deployed at edge orchestrators, interacting with local node monitors and network profilers to continuously refine migration behavior. As edge environments grow in complexity and scale, RL provides the flexibility and autonomy needed to maintain performance and responsiveness under evolving operating conditions.

4.5. Deep Learning

In edge computing environments characterized by dynamic user demands and fluctuating resource availability, DL serves as a critical predictive engine for intelligent service migration. Rather than relying on static heuristics or reactive rules, DL empowers the system with foresight—enabling proactive migration decisions that anticipate changes in workload intensity, service reliability, and node availability.

This work incorporates three classes of DL models tailored to distinct contextual sensing tasks. Convolutional neural networks (CNNs) are employed to encode service state matrices, capturing spatial correlations in resource occupancy and communication bandwidth. These structured encodings are then fed into long short-term memory (LSTM) networks, which excel in learning temporal patterns in time-series traces such as CPU loads, memory usage, and request rates [70]. Furthermore, to model the complex topological dependencies among distributed edge nodes, graph convolutional networks (GCNs) are utilized, which construct migration-affinity graphs based on latency, bandwidth, and workload similarity, facilitating global coordination during large-scale service relocation.

The system integrates DL-based prediction modules in a hybrid learning-control loop. When offline, these models are trained using historical logs and event traces extracted from the cloud–edge system, with feature extraction driven by sliding-window analysis and variance-aware sampling. When online, the models operate periodically, issuing preemptive migration signals when predicted resource bottlenecks or service disruptions exceed a learned threshold. This predictive mechanism helps maintain service continuity under volatile traffic, especially in scenarios involving mobile users or bursty sensor streams.
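The online half of this loop, sliding-window feature extraction followed by a thresholded trigger, can be sketched without any DL library. In the sketch below a naive last-value-plus-trend forecast stands in for the trained model, purely as a placeholder; the function names and the fixed `threshold` are hypothetical, whereas the text describes a learned threshold.

```python
def sliding_windows(trace, width):
    """Split a time series into overlapping windows of `width` samples."""
    return [trace[i:i + width] for i in range(len(trace) - width + 1)]


def naive_forecast(window):
    """Placeholder predictor: extrapolate the last observed step by one interval.

    A trained model (e.g., an LSTM) would replace this function.
    """
    trend = window[-1] - window[-2] if len(window) > 1 else 0.0
    return window[-1] + trend


def preemptive_signals(trace, width, threshold):
    """Return one boolean per window: True when the forecast exceeds the threshold."""
    return [naive_forecast(w) > threshold for w in sliding_windows(trace, width)]
```

With a CPU utilization trace that climbs from 0.2 to 0.8, the signal fires only on the last window, where the extrapolated load crosses the threshold before the bottleneck actually occurs.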

While deep models introduce computational overhead, they are deployed as auxiliary modules—decoupled from the core real-time control loop and updated asynchronously. In this architecture, migration decisions are enacted by lightweight agents that reference DL outputs without incurring their full runtime cost. Such decoupling ensures that predictive accuracy is retained while the system adheres to stringent delay constraints imposed by edge applications like smart surveillance and industrial control.

The adoption of DL in our migration framework marks a shift from reactive adaptation to anticipatory intelligence. By embedding learning-driven predictions into edge orchestration, the system achieves enhanced resilience and adaptability across diverse operating conditions. Empirical results from recent IoT scheduling systems further confirm that DL-enabled prediction reduces unnecessary migrations by up to 38% while maintaining SLA compliance, offering a compelling trade-off between foresight and efficiency.

4.6. Deep Reinforcement Learning

In highly dynamic and resource-constrained edge environments, where service placement decisions should respond to uncertain workloads, user mobility, and volatile network conditions, DRL offers a powerful solution framework. By combining deep neural networks with sequential decision-making logic, DRL enables edge systems to learn adaptive service migration policies directly from experience, without relying on fixed rules or explicit performance models.

In this work, we employ DRL models to capture the long-term effects of migration actions in Markovian edge environments. Specifically, deep Q-networks (DQNs) are adopted to approximate state-action value functions based on system states such as CPU load, node availability, and bandwidth utilization. The agent iteratively refines its migration policy by minimizing the TD error between its predicted action values and bootstrapped targets computed from observed rewards [71]. To mitigate instability during training, we use experience replay buffers and periodically updated target networks.
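For reference, the standard DQN training objective that combines these ingredients is the mean squared TD error over transitions sampled from the replay buffer $\mathcal{B}$, with online parameters $\theta$ and periodically copied target parameters $\theta^{-}$. This is the textbook form, shown as an assumption about the intended notation rather than as the exact loss of [71]:

```latex
L(\theta) = \mathbb{E}_{(s, a, r, s') \sim \mathcal{B}}
\Big[ \big( r + \gamma \max_{a'} Q(s', a'; \theta^{-}) - Q(s, a; \theta) \big)^{2} \Big]
```

Holding $\theta^{-}$ fixed between updates keeps the target from shifting with every gradient step, which is the source of the stabilizing effect.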

To address the limitations of discrete action spaces in DQN, we further implement an actor–critic structure. In this design, the actor network generates continuous migration actions, while the critic evaluates action-value estimates and provides gradient-based feedback. This architecture, aligned with recent advances such as deep deterministic policy gradient (DDPG) and twin delayed deep deterministic policy gradient (TD3), is particularly suitable for multi-node resource scheduling where fine-grained migration control is required under latency and energy constraints.

In large-scale edge deployments, service migration often involves multiple decentralized entities. We thus extend our model to multi-agent DRL (MADRL), where each edge node functions as an autonomous agent. The MADRL system supports centralized training with decentralized execution, enabling agents to learn coordinated policies while preserving local autonomy [72]. This is particularly valuable in vehicular and federated edge scenarios, where communication overhead must be minimized and partial observability is common.

The integration of DRL into our service migration architecture enables predictive, adaptive, and energy-aware orchestration. Agents continuously refine their behavior based on environmental feedback, learning to avoid overloading hotspots, reduce inter-node latency, and maximize overall system utility. Experimental results from multi-service edge scheduling scenarios demonstrate that DRL-based migration can reduce average response latency by over 30% while simultaneously lowering energy usage and SLA violation rates compared to heuristic and static approaches.

By endowing edge infrastructure with DRL-enhanced decision logic, the system transitions from reactive response to autonomous optimization—supporting long-term service sustainability in highly heterogeneous and dynamic operational environments.

5. Service Migration Application Scenarios

Service migration plays a pivotal role in optimizing the performance and efficiency of modern computing paradigms, particularly in edge computing and IoT environments. Below, we summarize its applications in four key domains: smart cities, smart homes, smart manufacturing, and smart healthcare (as illustrated in Figure 5).

Figure 5. Service migration application scenarios.

5.1. Smart Cities

With the rapid advancement of technologies such as IoT, cloud computing, edge computing, and wireless communications, smart cities have evolved from theoretical frameworks into practical, interconnected ecosystems. These technologies empower urban infrastructure to deliver intelligent, efficient, and real-time services that significantly enhance residents’ quality of life. However, as data volumes surge due to pervasive IoT deployment, traditional cloud-centric architectures encounter limitations, including high latency, bandwidth constraints, and heightened privacy risks. To mitigate these issues, edge computing combined with service migration has emerged as a promising solution that pushes processing closer to data sources, reducing response times and offloading core cloud resources [73].

Service migration enables dynamic relocation of computing tasks across distributed edge nodes in response to changing contexts, loads, and mobility. In traffic management systems, for instance, migrating traffic optimization algorithms between edge nodes near congested intersections allows real-time responsiveness and adaptive load balancing. Similarly, in smart surveillance applications, video analytics tasks can be relocated to local edge servers for rapid anomaly detection, improving both timeliness and data sovereignty. To optimize this migration process, Xu et al. [74] proposed a trust-aware IoT service provisioning strategy, integrating evolutionary algorithms with multi-criteria decision-making to balance load, energy consumption, and privacy. In healthcare and public services, real-time service migration ensures uninterrupted operation even under user mobility. Ganesan [75] proposed a VM migration strategy for mobile cloud computing in healthcare applications, leveraging ant colony optimization to minimize latency and optimize resource utilization under dynamic user movement. Their model highlights the importance of predictive migration strategies in achieving quality-of-service guarantees in time-sensitive environments.

Further, Kientopf et al. [73] developed a service management platform under a fog computing architecture to support autonomous service migration. Their platform leverages network metrics such as the expected transmission count (ETX) and round trip time (RTT) to position services near active users in dense IoT environments, significantly reducing latency compared to centralized deployments. These insights reveal the potential of service-aware edge architectures in scaling smart city systems efficiently. Moreover, the smart city operating system (SCOS) [76] proposed by Vögler et al. provides a cloud-native framework for seamless migration and deployment of smart city services. With its microservice-based architecture and support for service mobility, SCOS enables applications to dynamically shift across edge, cloud, and hybrid infrastructures, ensuring resilience and agility in urban service delivery.
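To make the role of the two metrics concrete, the sketch below scores candidate hosts by a weighted combination of normalized ETX and RTT and picks the lowest. This is an illustrative assumption, not the placement logic of the platform in [73]: the scoring rule, the weight `rtt_weight`, and the function names are hypothetical. The ETX definition used, the reciprocal of the product of forward and reverse delivery ratios, is the commonly cited one.

```python
def etx(forward_delivery, reverse_delivery):
    """Expected transmission count of a link from its delivery ratios in (0, 1]."""
    if not (0 < forward_delivery <= 1 and 0 < reverse_delivery <= 1):
        raise ValueError("delivery ratios must lie in (0, 1]")
    return 1.0 / (forward_delivery * reverse_delivery)


def pick_host(candidates, rtt_weight=0.5):
    """Choose the node with the lowest combined, normalized ETX/RTT score.

    `candidates` maps node name -> (etx_value, rtt_ms), both measured
    toward the currently active users.
    """
    max_etx = max(e for e, _ in candidates.values())
    max_rtt = max(r for _, r in candidates.values())

    def score(node):
        e, r = candidates[node]
        # Normalize each metric by its maximum so the weights are comparable.
        return (1.0 - rtt_weight) * e / max_etx + rtt_weight * r / max_rtt

    return min(candidates, key=score)
```

With equal weights, a nearby fog node with moderate ETX and RTT is preferred over both a lossy neighbor and a distant cloud instance, which matches the intuition of placing services close to active users.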

In summary, real-time service migration in edge networks enhances the responsiveness, scalability, and sustainability of smart cities. By dynamically reallocating computing services based on contextual factors—such as mobility, resource load, and network conditions—urban systems can maintain low-latency operation, support dynamic user demands, and uphold data governance standards. This adaptive migration paradigm forms a cornerstone of future-proof smart city infrastructure.

5.2. Smart Homes

The proliferation of smart home devices and the increasing demands for real-time, low-latency responses challenge traditional cloud-centric architectures due to constraints such as network congestion, limited bandwidth, and privacy concerns. Edge computing, particularly through service migration strategies, offers a viable solution by enabling services to dynamically relocate among local devices, edge gateways, and nearby servers based on contextual factors like user behavior, network conditions, and device status [77].