Abstract

The pine wood nematode (PWN), one of the globally significant forest diseases, has driven the demand for precise detection methods. Recent advances in satellite remote sensing technology, particularly ultra-high-resolution optical imagery, have opened new avenues for identifying PWN-infected trees. In order to systematically evaluate the ability of ultra-high-resolution optical remote sensing and the influence of spatial and spectral resolution in detecting PWN-infected trees, this study utilized a U-Net network model to identify PWN-infected trees using three remote sensing datasets of the ultra-high-resolution multispectral imagery from Beijing 3 International Cooperative Remote Sensing Satellite (BJ3N), with a panchromatic band spatial resolution of 0.3 m and six multispectral bands at 1.2 m; the high-resolution multispectral imagery from the Beijing 3A satellite (BJ3A), with a panchromatic band resolution of 0.5 m and four multispectral bands at 2 m; and unmanned aerial vehicle (UAV) imagery with five multispectral bands at 0.07 m. Comparison of the identification results demonstrated that (1) UAV multispectral imagery with 0.07 m spatial resolution achieved the highest accuracy, with an F1 score of 89.1%. Next is the fused ultra-high-resolution BJ3N satellite imagery at 0.3 m, with an F1 score of 88.9%. In contrast, BJ3A imagery with a raw spatial resolution of 2 m performed poorly, with an F1 score of only 28%. These results underscore that finer spatial resolution in remote sensing imagery directly enhances the ability to detect subtle canopy changes indicative of PWN infestation. (2) For UAV, BJ3N, and BJ3A imagery, the identification accuracy for PWN-infected trees showed no significant differences across various band combinations at equivalent spatial resolutions. This indicates that spectral resolution plays a secondary role to spatial resolution in detecting PWN-infected trees using ultra-high-resolution optical imagery. (3) The 0.3 m BJ3N satellite imagery exhibits low false-detection and omission rates, with F1 scores comparable to higher-resolution UAV imagery. This indicates that a spatial resolution of 0.3 m is sufficient for identifying PWN-infected trees and is approaching a point of saturation in a subtropical mountain monsoon climate zone. In conclusion, ultra-high-resolution satellite remote sensing, characterized by frequent data revisit cycles, broad spatial coverage, and balanced spatial-spectral performance, provides an optimal remote sensing data source for identifying PWN-infected trees. As such, it is poised to become a cornerstone of future research and practical applications in detecting and managing PWN infestations globally.

1. Introduction

The pine wood nematode (PWN, Bursaphelenchus xylophilus), the causative agent of pine wilt disease, ranks among the world’s most destructive forest pathogens due to its rapid spread, high mortality rates, and severe economic impacts [,]. Since its first detection in Nanjing, China, in 1982, PWN has devastated over one billion pine trees nationwide, incurring losses exceeding hundreds of billions of yuan []. Early detection of PWN-infected trees remains critical for containing outbreaks, as timely and accurate identification of PWN-infected trees is important for prevention and control [].

Traditional methods for identifying PWN-infected trees have relied on labor-intensive field surveys. These approaches lack the capacity for rapid, large-scale monitoring of PWN infestation dynamics and often lead to delayed interventions that hinder effective disease management [,,,]. Remote sensing technology has emerged as a primary investigative tool for detecting PWN-infected trees due to its cost-effectiveness, operational efficiency, and streamlined data-collection capabilities [,,]. Early identification of PWN-infected trees through satellite remote sensing mainly depended on medium-resolution satellites, such as Landsat, which utilized the spectral differences between the canopies of infected and healthy pine trees [,]. Franklin et al. [] achieved approximately 73% detection accuracy for PWN-infected trees using single-date 30 m resolution Landsat imagery. Skakun et al. [] enhanced PWN identification accuracy by to 78% by analyzing multi-temporal Landsat data in the Prince George Forest Region of British Columbia, Canada. However, mixed-pixel effects in medium-resolution imagery obscure sparse infections, limiting practical utility.

Advances in high-resolution imagery (≤1 m) have improved detection precision. Wang et al. [] utilized Gaofen-2 satellite imagery with 1 m spatial resolution and four spectral bands, achieving an MIoU of 68.36%. Poona and Ismail [] aimed to explore the utility of transformed high spatial resolution QuickBird imagery, which has a 0.6 m spatial resolution and four spectral bands, combined with artificial neural networks (ANNs), to detect and map trees infested with PWN, achieving a kappa coefficient of 0.65. Takenaka et al. [] employed WorldView-3 imagery with 0.5 m spatial resolution to construct 18 vegetation indices for identifying PWN-infected trees in the Matsumoto region of central Japan, achieving a total precision of 72%. Despite these improvements, resolution thresholds for reliable PWN detection remain undefined, and spectral-spatial tradeoffs are poorly quantified.

The recent deployment of ultra-high-resolution satellites, with a resolution of less than 0.5 m, presents significant potential. The Beijing 3 International Cooperative Remote Sensing Satellite (BJ3N), launched on 29 April 2021, is currently the highest-resolution commercial remote sensing satellite. It offers a 0.3 m panchromatic band and six spectral bands with a 1.2 m resolution, as well as pansharpened 0.3 m fused multispectral images. Such capabilities promise unprecedented precision in detecting subtle canopy changes indicative of early PWN infestation. However, despite this promising potential, the application of ultra-high-resolution optical imagery for PWN detection remains unexplored, and no study has yet systematically evaluated its performance against established platforms like UAVs.

To address this research gap, the study conducted a comprehensive comparison of ultra-high-resolution BJ3N imagery with high-resolution Beijing 3A satellite (BJ3A) imagery, which has a 0.5 m spatial resolution for the panchromatic band and 2 m for the four multispectral bands, and UAV multispectral imagery with a 0.07 m spatial resolution for five multispectral bands. The objectives are to systematically evaluate the capabilities of ultra-high-resolution remote sensing images and the impact of spatial and spectral resolution on the detection of PWN-infected trees, and to identify the optimal data sources that can balance spatial resolution, spectral capabilities, coverage, and practical applicability, ultimately enhancing the efficiency of future PWN monitoring.

2. Materials and Methods

2.1. Study Area

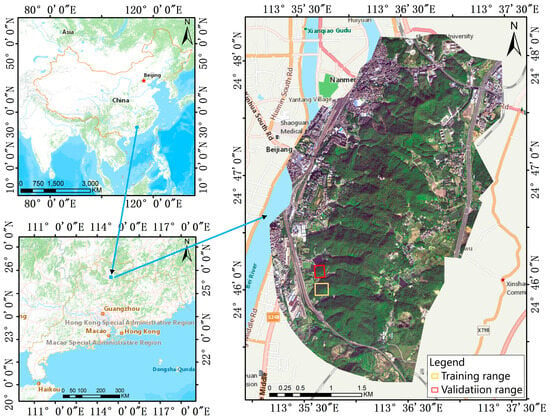

The study area is located in Shaoguan, Guangdong Province, China (geographical coordinates 113°35′ to 113°37′ E, 24°45′–24°48′ N), as shown in Figure 1. The study area lies within a subtropical mountain monsoon climate zone, characterized by an average annual temperature of approximately 20 °C, an average annual rainfall of 1600 mm, and an annual frost-free period of about 310 days. These climatic conditions are conducive to the proliferation of the pine bark beetle, leading to a particularly severe PWN outbreak in the area. The area covers approximately 5 km2, with elevations ranging from 60 to 255 m. Forest coverage reaches around 90%, dominated by species such as horsetail pine, fir, and camphor. A schematic overview of the study area is provided in Figure 1.

Figure 1.

The location of the study area in Shaoguan City, Guandong Province, China.

2.2. Data

The data used in this study included ultra-high-resolution BJ3N satellite imagery and high-resolution Beijing 3A (BJ3A) satellite multispectral imagery, as well as very-high-resolution unmanned aerial vehicle (UAV) multispectral imagery.

2.2.1. BJ3N

The BJ3N satellite was launched on 29 April 2021, as a state-of-the-art, ultra-high-resolution optical remote sensing satellite, jointly developed by China 21st Century Space Technology Co., Ltd. (Beijing, China) and Airbus. It acquires imagery at a spatial resolution of 0.3 m in the panchromatic band and 1.2 m in the multispectral bands, ranking among the highest-resolution commercial remote sensing currently in operation. The main parameters of BJ3N are shown in Table 1.

Table 1.

BJ3N satellite parameters.

The imagery of BJ3N for the study area was acquired on 8 December 2021. The processing applied to the BJ3N data included calibration, atmospheric correction, and orthorectification.

To fully leverage the spatial information of BJ3N data and enhance the spatial resolution of its multispectral bands, this study adopts the Gram–Schmidt pansharpening method for data fusion. This approach establishes a transformation by simulating a panchromatic band, enabling the effective incorporation of spatial details while minimizing spectral distortion.

2.2.2. BJ3A

The BJ3A satellite was launched on 11 June 2021 and was developed by China 21st Century Space Technology Co., Ltd. It is known for its “three supers” feature, which includes ultra-high agility, stability, and precision. The satellite is equipped with a high-resolution, wide-swath panchromatic and multispectral bi-directional camera system, providing imagery at spatial resolutions of 0.5 m for the panchromatic band and 2 m for the multispectral bands. The main parameters of BJ3A are shown in Table 2.

Table 2.

BJ3A satellite parameters.

The imagery of BJ3A for the study area was acquired on 6 December 2021. The data preprocessing procedure applied to the BJ3A imagery was consistent with that used for the BJ3N data.

2.2.3. UAV

The multispectral UAV imagery for the study area was acquired using a DJI P4 Multispectral RTK (DJI, Shenzhen, China). The UAV is equipped with an integrated MicaSense RedEdge multispectral sensor and a high-precision real-time kinematic (RTK) module, which provides centimeter-level positioning accuracy to support the generation of high-accuracy geotagged images. The sensor captures imagery in five spectral bands within the visible to red-edge and infrared spectrum. The main characteristics of the multispectral sensor are given in Table 3.

Table 3.

Characteristics of the multispectral sensor.

The multispectral UAV remote sensing data were acquired on December 18, 2021, at around 11 a.m. local time. The weather conditions during the flight were adequate with enough solar illumination, calm wind with a slight breeze, and no clouds. The raw imagery was processed using DJI Zhitu software 3.0.0, to produce a digital orthophoto map of the study area with a spatial resolution of 0.07 m.

2.3. Sample Plotting

The samples were divided into a training set and a validation set, which were collected from two spatially segregated areas within the study area to ensure independence, as illustrated in Figure 1.

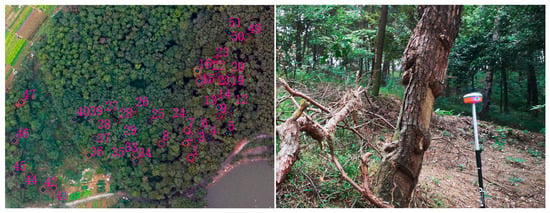

2.3.1. Training Set

Pine trees infected with PWN disease typically exhibit visible symptoms. As the infection progresses, the needles of affected trees transition from green to yellowish-brown, then reddish-brown, and eventually dark reddish-brown upon complete death []. These color shifts are accompanied by changes in the reflectance of specific wavelength bands, causing the spectral characteristics of the infected trees to deviate from those of healthy vegetation. These spectral changes provide a robust theoretical foundation for detecting PWN-infected trees using optical remote sensing imagery []. Notably, healthy pine trees can be easily distinguished from those infected with PWN in the visible light spectrum []. Based on these symptomatic color features, this study establishes visual interpretation markers for identifying PWN-infected trees.

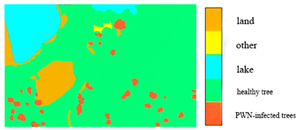

Given the diversity of features within the forest area and the influence of light and shadow, this study selects a sub-region with a high concentration of PWN-infected trees as a common training sample area for all three types of remote sensing data. The sample area was characterized by five main feature types: PWN-infected trees, healthy trees, lake, land, and other. Corresponding labels for these features were manually delineated on the BJ3A, BJ3N, and UAV imagery through visual interpretation. These labeled datasets were then used for model training. The image data and corresponding labels for the selected sample areas are presented in Table 4.

Table 4.

Sample area and label drawing results of BJ3A images, BJ3N images, and UAV imagery.

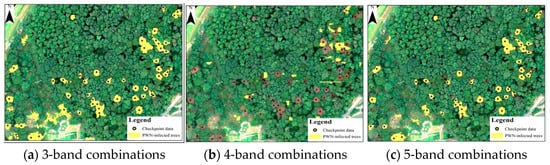

2.3.2. Validation Sets

To evaluate the accuracy of the BJ3A, BJ3N, and UAV imagery in detecting PWN-infected trees, a field survey was conducted to collect the geographic coordinates of 51 PWN-infected trees using a GNSS receiver as validation sets (Figure 2).

Figure 2.

Checkpoint data imagery. The numbers in the figures are the numbers of Validation Sets.

2.4. Research Methodology

2.4.1. U-Net Network Model

Semantic segmentation networks are widely used deep learning models for identifying PWN-infected trees in high-resolution remote sensing imagery [,,]. Previous studies have demonstrated the effectiveness of U-Net in this context. For example, Ye et al. [] compared the performance of U-Net and SVM algorithms for identifying PWN-infected trees using Landsat imagery, with U-Net achieving an accuracy of 0.60, compared to 0.21 for SVM. Similarly, Han et al. [] evaluated several deep learning algorithms and found that the U-Net model yielded the highest accuracy for detecting PWN outbreaks in high-resolution imagery. Based on these findings, the U-Net model was selected as the most suitable approach for extracting PWN-infected trees in this study.

The U-Net network model [], initially introduced in 2015 at the International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), is a classic architecture for semantic segmentation. It builds upon the Fully Convolutional Network (FCN) by incorporating convolutional layers, max-pooling layers, inverse convolutional layers, and ReLU nonlinear activation functions. It utilizes a symmetric U-shaped architecture, consisting of two main components: the encoder and the decoder. The encoder, situated on the left, performs downsampling to extract imagery details, whereas the decoder on the right executes upsampling to restore these details, thus enabling the accurate localization of the target []. This model exhibits strong segmentation capabilities, effectively fusing both high- and low-dimensional information. It can be trained with relatively limited data, yielding a robust model for edge extraction []. The network ultimately produces a feature map with the same resolution as the input image.

2.4.2. Accuracy Evaluation Method

To evaluate model performance, this study employs precision (P), recall (R), and the F1 score []. These accuracy metrics are defined as follows:

where TP denotes the number of correctly identified infected trees, FP denotes the number of falsely identified trees, and FN denotes the number of undetected infected trees.

P, also known as the positive predictive value, reflects the proportion of true positives among all predicted positives, indicating the accuracy of the prediction model. A higher P value suggests a greater likelihood of correct predictions, and consequently, better model performance. R, or sensitivity, measures the proportion of actual infected trees correctly identified by the model. A higher R value indicates a better ability to correctly predict the true positives. The F1 score is the harmonic mean of precision and recall, balancing the tradeoff between the two metrics. Since precision and recall are often inversely related, improving one typically sacrifices the other. Thus, the F1 score provides a comprehensive measure of model performance, where a higher value of F1 indicates an optimal balance between precision and recall, reflecting a more accurate and reliable model.

3. Results and Analyses

3.1. Experimental Setup

The U-Net network model was conducted in Matlab software R2023b, on a computer equipped with an AMD Ryzen 7 5800H CPU, 16 GB of memory, and an NVIDIA GeForce RTX 3050 Ti graphics card. A set of training options was configured through programming, and the Stochastic Gradient Descent with Momentum (SGDM) optimization algorithm was used for training with momentum. During the training process, the base learning rate was set to 0.01, with 2000 iterations per epoch. Each iteration used a mini-batch size of 8, and MaxEpochs was set to 1. The BJ3A, BJ3N, and UAV image data were paired with the corresponding sample training set. In each iteration of every training epoch, mini-batches consisting of eight image patches of size 128 × 128 pixels were fed into the network. To avoid excessive memory usage by large images and effectively augment the available training data, 16,000 mini-batches were processed per epoch.

3.2. Identifying PWN-Infected Trees Using BJ3N Imagery

3.2.1. Using Multispectral Imagery with Raw Spatial Resolution of 1.2 m

The BJ3N satellite image comprises six raw multispectral bands at a resolution of 1.2 m, including red (R), green (G), blue (B), near-infrared (NIR), red-edge, and deep-blue. Four different band combinations were sequentially used as input to the training samples: the three-band (R, G, B), four-band (R, G, B, NIR), five-band (R, G, B, NIR, Red-edge), and six-band (R, G, B, NIR, Red-edge, Deep-blue) combinations. For each combination, a corresponding U-Net model was constructed under the specified training environment and parameters, incorporating the respective labeled data. The models’ accuracies were evaluated using validation sets, as presented in Table 5.

Table 5.

The accuracy of identifying PWN-infected trees using the BJ3N satellite’s multispectral bands with a raw spatial resolution of 1.2 m.

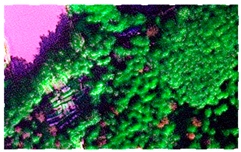

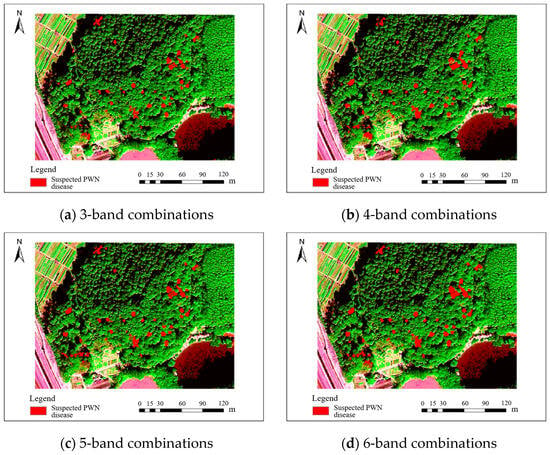

Four raw band combinations of the BJ3N satellite at 1.2 m resolution showed low accuracy in identifying PWN-infected trees, and these identification results are illustrated in Figure 3. The three-band combination with red (R), green (G), and blue (B) achieved the highest F1 score of 66.6%. The four-band combination (R, G, B, and NIR) and the five-band combination (R, G, B, NIR, and Red-edge) yielded F1 scores of 50.5% and 50.4%, respectively, while the six-band combination (R, G, B, NIR, Red-edge, and Deep-blue) performed the worst, with an F1 score of only 24%.

Figure 3.

Results of BJ3N satellite with 0.5 m raw spatial resolution for identifying PWN-infected trees in this study area.

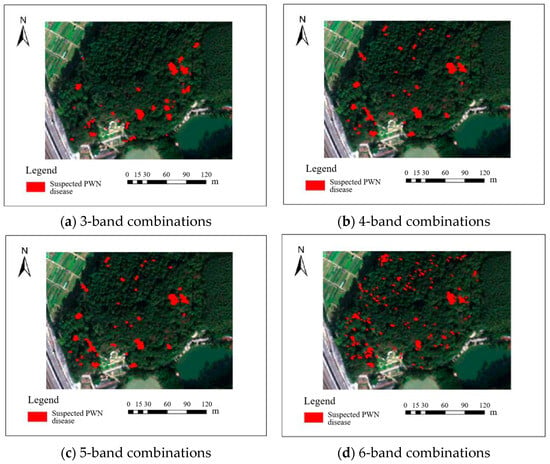

3.2.2. Using Multispectral Imagery with a Fused Spatial Resolution of 0.3 m

The BJ3N satellite’s 0.3 m panchromatic band and six 1.2 m multispectral bands were fused using the pansharpening fusion method to generate six fused multispectral bands at a spatial resolution of 0.3 m. Like raw spatial resolution multispectral imagery, the fused three-band (R, G, B), fused four-band (R, G, B, NIR), fused five-band (R, G, B, NIR, Red-edge), and fused six-band (R, G, B, NIR, Red-edge, Deep-blue) combinations were subsequently employed as input to the training samples for constructing U-Net models to identify PWN-infected trees. The models were trained under the predefined environment and parameters, incorporating the corresponding labeled data. The models’ accuracy was evaluated using validation sets, as presented in Table 5.

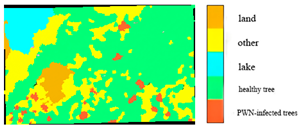

The accuracy of identifying PWN-infected trees using the fused 0.3 m BJ3N multispectral imagery was significantly higher than that achieved with the raw 1.2 m data, with only minor differences observed among the various band combinations. Among these, the fused five-band combination (R, G, B, NIR, Red-edge) achieved the highest F1 value of 88.9%, followed by the fused three-band (R, G, B) combination with an F1 value of 85.1%, and the fused six-band (R, G, B, NIR, Red-edge, Deep-blue) combination with an F1 value of 84.3%. The fused four-band (R, G, B, NIR) combination demonstrated the lowest precision, with an F1 value of 81.9%. All these identification results are illustrated in Figure 4.

Figure 4.

Results of BJ3N satellite with 0.3 m fused spatial resolution for identifying PWN-infected trees in this study area.

3.3. Identifying PWN-Infected Trees Using BJ3A Imagery

3.3.1. Using Multispectral Imagery with a Raw Spatial Resolution of 2 m

The BJ3A satellite image comprises four raw multispectral bands with a spatial resolution of 2 m, including R, G, B, and NIR. During the training process, three-band combinations (R/G/B) and four-band combinations (R/G/B/NIR) serve as input variables for the U-Net model. The model was built using the predefined training environment and parameters, along with the corresponding labeled data. The models’ accuracy was evaluated using the test samples, as summarized in Table 6.

Table 6.

The accuracy of identifying PWN-infected trees using the BJ3A satellite’s multispectral bands with a raw spatial resolution of 2 m.

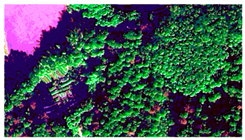

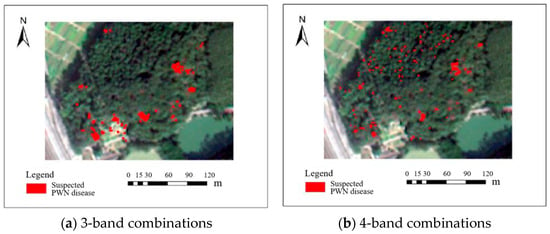

Two raw band combinations of the BJ3A satellite at 2 m resolution showed limited accuracy in identifying PWN-infected trees, with F1 scores of 58% for the three-band (R, G, B) and 28% for the four-band (R, G, B, NIR) combination. All these identification results are illustrated in Figure 5.

Figure 5.

Results of the BJ3A satellite with 2 m raw spatial resolution for identifying PWN-infected trees in this study area.

3.3.2. Using Multispectral Imagery with a Fused Spatial Resolution of 0.5 m

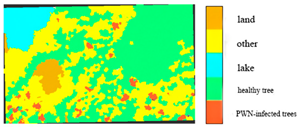

The pansharpening fusion technique was applied to combine the 0.5 m panchromatic band with the four 2 m multispectral bands from the BJ3A satellite, resulting in four fused multispectral bands at 0.5 m resolution. The fused three-band (R, G, B) and fused four-band (R, G, B, NIR) combinations were subsequently employed as input to the training samples for constructing U-Net models to identify PWN-infected trees. The models were trained under the predefined environment and parameters, incorporating the corresponding labeled data. The models’ accuracy was evaluated using validation sets, as presented in Table 6, and the identification results are illustrated in Figure 6.

Figure 6.

Results of the BJ3A satellite with 0.5 m fused spatial resolution for identifying PWN-infected trees in this study area.

The accuracy of identifying PWN-infected trees was significantly improved by using 0.5 m fused band combinations of the BJ3A satellite, compared with the raw band combinations. Notably, the F1 score for the fused three-band combination of R, G, and B reached 86.3%. The fused four-band combination of R, G, B, and NIR exhibited a similar performance, with an F1 score of 85.8%, indicating comparable performance between the two band combinations in identifying PWN-infected trees.

3.4. Identifying PWN-Infected Trees Using UAV Multispectral Imagery

The UAV multispectral imagery used in this study has five spectral bands: R, G, B, NIR, and Red-edge. The three-band (R, G, B), four-band (R, G, B, NIR), and five-band (R, G, B, NIR, Red-edge) combinations were subsequently employed as input to the training samples for constructing U-Net models to identify PWN-infected trees. The models were trained under the predefined environment and parameters, incorporating the corresponding labeled data. The accuracy of each model was assessed based on the validation sets, as summarized in Table 7.

Table 7.

The accuracy of identifying PWN-infected trees using the UAV multispectral bands with a spatial resolution of 0.7 m.

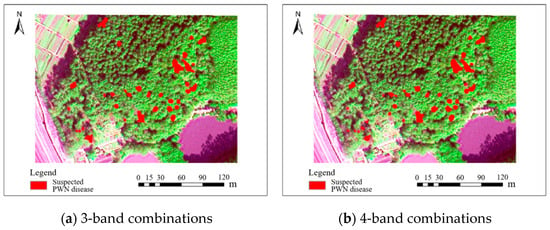

Comparison with BJ3N and BJ3A satellite imagery, 0.07 m UAV multispectral imagery achieved the highest accuracy in identifying PWN-infected trees. Among UAV band combinations, there were few differences in the identification accuracy. The five-band combinations of R, G, B, NIR, and Red-edge demonstrated the highest accuracy, achieving an F1 score of 89.1%. Both the four-band combinations of R, G, B, and NIR and the three-band combinations of R, G, and B exhibited similar performance, with F1 scores of 87.7% and 88.5%, respectively. All these identification results are illustrated in Figure 7.

Figure 7.

Results of UAV multispectral bands with a spatial resolution of 0.7 m for identifying PWN-infected trees in this study area.

4. Discussion

4.1. Effect of Spatial Resolution of Remote Sensing Images on Identification of PWN-Infected Trees

When comparing the F1 accuracy of BJ3N, BJ3A, and UAV band combination in identifying PWN-infected trees separately, it was found that there was a clear relationship between spatial resolution and identifying accuracy. The UAV imagery at 0.07 m resolution achieved the highest F1 score of 89.1%, followed by the fused BJ3N imagery at 0.3 m and the fused BJ3A imagery at 0.5 m, with F1 scores of 88.9% and 88.5%, respectively. In comparison, the raw-resolution BJ3N imagery at 1.2 m and raw-resolution BJ3A imagery at 2.0 m attained maximum F1 scores of only 66.6% and 58.0%, respectively. These results demonstrate that higher spatial resolution in remote sensing imagery leads to improved accuracy in identifying PWN-infected trees. Ota et al. [] also found that spatial resolution has a certain impact on tree species identification. The accuracy of identifying tree species using 4 m high-resolution remote sensing images is significantly higher than that using 30 m coarse resolution images. However, there is not much difference in the accuracy of identifying tree species using 25 m and 30 m resolution remote sensing images. Similarly, Zhang et al. [] examined a sample set of semantic segmentation model input sizes and found that increasing the resolution of images enhances the recognition accuracy of each category of the semantic segmentation model, further supporting the importance of spatial resolution in remote sensing-based detection tasks.

Bolch et al. [] demonstrated that increasing the spatial resolution of hyperspectral UAV imagery improved the detection accuracy of small invasive aquatic plant patches, though at a significantly higher cost, highlighting the need to balance detection accuracy with resolution in practical applications. Similarly, Saltiel et al. [] discovered that enhancing image spatial resolution from 22.8 cm to 7.8 cm only marginally improved wetland vegetation recognition accuracy using a deep learning semantic segmentation model, while substantially increasing processing time and reducing coverage. These findings align with the study, in which the F1 accuracy for identifying PWN-infected trees using fused 0.3 m BJ3N multispectral imagery differed by only 0.2% from that achieved with 0.07 m UAV multispectral imagery.

In contrast, Müllerová et al. [] resampled 0.05 m UAV imagery into 0.5 m Pleiades remote sensing image data and found that spatial resolution had little effect on the identification of tree species. However, the study reveals that for the interval of spatial resolution between 0.07 m UAV images and 0.5 m satellite remote sensing images, there is a certain impact on recognizing tree species, with the F1 value decreasing by 2.8%.

Based on the above discussion, it is evident that 0.3 m ultra-high-resolution may represent the optimal choice for detecting PWN-infected trees in a subtropical mountain monsoon climate zone.

4.2. Effect of Spectral Features of Remote Sensing Imagery on Identification of PWN-Infected Trees

Based on the analysis of 0.07 m UAV imagery, the identification accuracy achieved with the three-band combination (R, G, B) was comparable to that of the four-band and five-band combinations across precision, recall, and F1-score metrics. A similar pattern was observed for both BJ3N and BJ3A imagery, where no significant differences in identification accuracy were found among the various band combinations at same spatial resolutions. Collectively, these findings indicate that the number of spectral bands has a negligible impact on the accuracy of identifying PWN-infected trees, and that a limited number of bands can be sufficient to achieve high performance.

This phenomenon is supported by Lopatin et al. [], who compared UAV RGB and hyperspectral imagery for detecting three invasive species in the forests of south-central Chile. Their study demonstrated that the recognition accuracy of RGB imagery using only three bands exceeded that of hyperspectral imagery with 41 bands for two of the invasive species. This result challenges the conventional assumption that a higher number of spectral bands necessarily leads to improved classification accuracy, suggesting instead that increasing spectral resolution does not always enhance species identification outcomes. Therefore, the number of spectral features is not a critical factor in identifying PWN-infected trees, and a three-band combination (R, G, B) can be sufficient for effective detection using high-resolution remote sensing imagery.

5. Conclusions

This study investigates the identification of PWN-infected trees using ultra-high-resolution optical remote sensing satellite images. By comparing the accuracy of identifying PWN-infected trees using BJ3N, as well as BJ3N and UAV imagery, it was found that the higher the spatial resolution, the higher the accuracy of identifying PWN-infected trees. The significance of spectral features in identifying PWN-infected trees varies among different spatial resolution remote sensing images. Nevertheless, the impact of differences in spectral resolution diminishes for identifying PWN-infected trees at higher spatial resolutions. The ultra-high-resolution BJ3N remote sensing imagery with a spatial resolution of 0.3 m exhibited lower false detection and missed detection rates when identifying trees affected by pine wilt nematode (PWN), indicating that a spatial resolution of 0.3 m is sufficient for detecting PWN-affected trees and is the closest to the resolution saturation point in a subtropical mountain monsoon climate zone. Therefore, ultra-high-resolution optical remote sensing satellite imagery clearly demonstrates the optimal resolution for detecting PWN-infected trees, while also offering advantages such as repeatable data acquisition and extensive spatial coverage. Moreover, ultra-high-resolution remote sensing can be applied to forest disease monitoring and prevention strategies to enhance the practical value of research.

Author Contributions

Conceptualization, C.W., L.Q. and P.X.; formal analysis, Z.N. and C.W.; funding acquisition, C.W. and L.Q.; investigation, Z.N. and X.M. (Xianjin Meng); methodology, Z.N. and X.M. (Xuelian Meng); software, Z.N.; supervision, Z.N. and L.Q.; validation, Z.N. and X.M. (Xuelian Meng); visualization, C.W. and L.Q.; writing—original draft, Z.N. and K.Q.; writing—review and editing, Z.N., L.Q., P.X., X.M. (Xuelian Meng), X.M. (Xianjin Meng), K.Q. and C.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China (Grant No. 42471340, 42474045).

Data Availability Statement

The data supporting the study findings are available on request from the corresponding author. The data are not publicly available due to the authors’ plan to conduct a series of follow-up studies based on this dataset.

Conflicts of Interest

Author Ziqi Nie was employed by the company Aerial Photogrammetry and Remote Sensing Group Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| PWN | Pine wood nematode |

| BJ3N | Beijing 3 International Cooperative Remote Sensing Satellite |

| BJ3A | Beijing 3A satellite |

| UAV | Unmanned aerial vehicle |

| ANNs | artificial neural networks |

| SGDM | Stochastic Gradient Descent with Momentum |

References

- Ye, J. Epidemic Status of Pine Wilt Disease in China and Its Prevention and Control Techniques and Counter Measures. Sci. Silvae Sin. 2019, 55, 1–10. [Google Scholar] [CrossRef]

- Suzuki, K. Pine Wilt Disease—A Threat to Pine Forests in Europe. In The Pinewood Nematode, Bursaphelenchus xylophilus; Mota, M., Vieira, P., Eds.; BRILL: Leiden, The Netherlands, 2004; pp. 25–30. ISBN 978-90-474-1309-7. [Google Scholar] [CrossRef]

- Qin, J.; Wang, B.; Wu, Y.; Lu, Q.; Zhu, H. Identifying Pine Wood Nematode Disease Using UAV Images and Deep Learning Algorithms. Remote Sens. 2021, 13, 162. [Google Scholar] [CrossRef]

- Giordan, D.; Manconi, A.; Tannant, D.D.; Allasia, P. UAV: Low-Cost Remote Sensing for High-Resolution Investigation of Landslides. In Proceedings of the 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 26–31 July 2025; IEEE: Milan, Italy, 2015; pp. 5344–5347. [Google Scholar] [CrossRef]

- Li, M.; Li, H.; Ding, X.; Wang, L.; Wang, X.; Chen, F. The Detection of Pine Wilt Disease: A Literature Review. Int. J. Mol. Sci. 2022, 23, 10797. [Google Scholar] [CrossRef]

- Wulder, M.; Dymond, C.C.; White, J.C.; Erickson, R.D. Detection, Mapping, and Monitoring of the Mountain Pine Beetle. In The Mountain Pine Beetle: A Synthesis of Biology, Management, and Impacts on Lodgepole Pine; Natural Resources Canada, Canadian Forest Service, Pacific Forestry Centre: Victoria, BC, Canada, 2006; ISBN 978-0-662-42623-3. [Google Scholar]

- Kim, S.-R.; Lee, W.-K.; Lim, C.-H.; Kim, M.; Kafatos, M.; Lee, S.-H.; Lee, S.-S. Hyperspectral Analysis of Pine Wilt Disease to Determine an Optimal Detection Index. Forests 2018, 9, 115. [Google Scholar] [CrossRef]

- Zhou, X.; Liao, L.; Cheng, D.; Chen, X.; Huang, Q. Extraction of the individual tree infected by pine wilt disease using unmanned aerial vehicle optical imagery. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, XLIII-B3-2020, 247–252. [Google Scholar] [CrossRef]

- Zhang, Z.; Wang, B.; Chen, W.; Wu, Y.; Qin, J.; Chen, P.; Sun, H.; He, A. Recognition of Abnormal Individuals Based on Lightweight Deep Learning Using Aerial Images in Complex Forest Landscapes: A Case Study of Pine Wood Nematode. Remote Sens. 2023, 15, 1181. [Google Scholar] [CrossRef]

- Zhang, B.; Ye, H.; Lu, W.; Huang, W.; Wu, B.; Hao, Z.; Sun, H. A Spatiotemporal Change Detection Method for Monitoring Pine Wilt Disease in a Complex Landscape Using High-Resolution Remote Sensing Imagery. Remote Sens. 2021, 13, 2083. [Google Scholar] [CrossRef]

- Kim, J.-B.; Jo, M.-H.; Kim, I.-H.; Kim, Y.-K. A Study on the Extraction of Damaged Area by Pine Wood Nematode Using High Resolution IKONOS Satellite Images and GPS. J. Korean Soc. For. Sci. 2003, 92, 362–366. [Google Scholar]

- White, J.; Wulder, M.; Brooks, D.; Reich, R.; Wheate, R. Detection of Red Attack Stage Mountain Pine Beetle Infestation with High Spatial Resolution Satellite Imagery. Remote Sens. Environ. 2005, 96, 340–351. [Google Scholar] [CrossRef]

- Franklin, S.E.; Wulder, M.A.; Skakun, R.S.; Carroll, A.L. Mountain Pine Beetle Red-Attack Forest Damage Classification Using Stratified Landsat TM Data in British Columbia, Canada. Photogramm. Eng. Remote Sens. 2003, 69, 283–288. [Google Scholar] [CrossRef]

- Skakun, R.S.; Wulder, M.A.; Franklin, S.E. Sensitivity of the Thematic Mapper Enhanced Wetness Difference Index to Detect Mountain Pine Beetle Red-Attack Damage. Remote Sens. Environ. 2003, 86, 433–443. [Google Scholar] [CrossRef]

- Wang, J.; Zhao, J.; Sun, H.; Lu, X.; Huang, J.; Wang, S.; Fang, G. Satellite Remote Sensing Identification of Discolored Standing Trees for Pine Wilt Disease Based on Semi-Supervised Deep Learning. Remote Sens. 2022, 14, 5936. [Google Scholar] [CrossRef]

- Poona, N.K.; Ismail, R. Discriminating the Occurrence of Pitch Canker Infection in Pinus Radiata Forests Using High Spatial Resolution QuickBird Data and Artificial Neural Networks. In Proceedings of the 2012 IEEE International Geoscience and Remote Sensing Symposium, Munich, Germany, 22–27 July 2012; IEEE: Munich, Germany, 2012; pp. 3371–3374. [Google Scholar] [CrossRef]

- Takenaka, Y.; Katoh, M.; Deng, S.; Cheung, K. Detecting forests damaged by pine wilt disease at the individual tree level using airborne laser data and worldview-2/3 images over two seasons. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, XLII-3/W3, 181–184. [Google Scholar] [CrossRef]

- Vollenweider, P.; Günthardt-Goerg, M.S. Diagnosis of Abiotic and Biotic Stress Factors Using the Visible Symptoms in Foliage. Environ. Pollut. 2005, 137, 455–465. [Google Scholar] [CrossRef] [PubMed]

- Wang, H.; Shu, Q.; Wu, Q.; Liu, Y.; Ji, Y. Application of Imaging Spectral Remote Sensing Techniques in Monitoring of Forestry Disease and Insect Pests. China Plant Prot. 2018, 38, 24–28. [Google Scholar] [CrossRef]

- Zhang, S.; Qin, J.; Tang, X.; Wang, Y.; Huang, J.; Song, Q.; Min, J. Spectral Characteristics and Evaluation Model of Pinus massoniana Suffering from Bursaphelenchus xylophilus Disease. Spectrosc. Spectr. Anal. 2019, 39, 865–872. [Google Scholar] [CrossRef]

- Huang, J.; Lu, X.; Chen, L.; Sun, H.; Wang, S.; Fang, G. Accurate Identification of Pine Wood Nematode Disease with a Deep Convolution Neural Network. Remote Sens. 2022, 14, 913. [Google Scholar] [CrossRef]

- Zhu, X.; Wang, R.; Shi, W.; Yu, Q.; Li, X.; Chen, X. Automatic Detection and Classification of Dead Nematode-Infested Pine Wood in Stages Based on YOLO v4 and GoogLeNet. Forests 2023, 14, 601. [Google Scholar] [CrossRef]

- Ye, W.; Lao, J.; Liu, Y.; Chang, C.-C.; Zhang, Z.; Li, H.; Zhou, H. Pine Pest Detection Using Remote Sensing Satellite Images Combined with a Multi-Scale Attention-UNet Model. Ecol. Inform. 2022, 72, 101906. [Google Scholar] [CrossRef]

- Han, Z.; Hu, W.; Peng, S.; Lin, H.; Zhang, J.; Zhou, J.; Wang, P.; Dian, Y. Detection of Standing Dead Trees after Pine Wilt Disease Outbreak with Airborne Remote Sensing Imagery by Multi-Scale Spatial Attention Deep Learning and Gaussian Kernel Approach. Remote Sens. 2022, 14, 3075. [Google Scholar] [CrossRef]

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Munich, Germany, 5–9 October 2015; Springer International Publishing: Cham, Switzerland, 2015; Volume 9351, pp. 234–241. [Google Scholar] [CrossRef]

- Zhu, Y.; Xu, Q.; Yang, J.; Mo, H. Full Convolution Neural Network Based Building Extraction Approach from High Resolution Aerial Image. Geomat. World 2020, 27, 101–106. [Google Scholar] [CrossRef]

- Zhou, Z. Machine Learning; Tsinghua University Press: Beijing, China, 2016. [Google Scholar]

- Ota, T.; Mizoue, N.; Yoshida, S. Influence of Using Texture Information in Remote Sensed Data on the Accuracy of Forest Type Classification at Different Levels of Spatial Resolution. J. For. Res. 2011, 16, 432–437. [Google Scholar] [CrossRef]

- Bolch, E.A.; Hestir, E.L.; Khanna, S. Performance and Feasibility of Drone-Mounted Imaging Spectroscopy for Invasive Aquatic Vegetation Detection. Remote Sens. 2021, 13, 582. [Google Scholar] [CrossRef]

- Saltiel, T.M.; Dennison, P.E.; Campbell, M.J.; Thompson, T.R.; Hambrecht, K.R. Tradeoffs between UAS Spatial Resolution and Accuracy for Deep Learning Semantic Segmentation Applied to Wetland Vegetation Species Mapping. Remote Sens. 2022, 14, 2703. [Google Scholar] [CrossRef]

- Müllerová, J.; Brůna, J.; Bartaloš, T.; Dvořák, P.; Vítková, M.; Pyšek, P. Timing Is Important: Unmanned Aircraft vs. Satellite Imagery in Plant Invasion Monitoring. Front. Plant Sci. 2017, 8, 887. [Google Scholar] [CrossRef]

- Lopatin, J.; Dolos, K.; Kattenborn, T.; Fassnacht, F.E. How Canopy Shadow Affects Invasive Plant Species Classification in High Spatial Resolution Remote Sensing. Remote Sens. Ecol. Conserv. 2019, 5, 302–317. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).