Abstract

This paper provides an overview of collaborative robotics towards manufacturing applications. Over the last decade, the market has seen the introduction of a new category of robots—collaborative robots (or “cobots”)—designed to physically interact with humans in a shared environment, without the typical barriers or protective cages used in traditional robotics systems. Their potential is undisputed, especially regarding their flexible ability to make simple, quick, and cheap layout changes; however, it is necessary to have adequate knowledge of their correct uses and characteristics to obtain the advantages of this form of robotics, which can be a barrier for industry uptake. The paper starts with an introduction of human–robot collaboration, presenting the related standards and modes of operation. An extensive literature review of works published in this area is undertaken, with particular attention to the main industrial cases of application. The paper concludes with an analysis of the future trends in human–robot collaboration as determined by the authors.

1. Introduction

Traditional industrial robotic systems require heavy fence guarding and peripheral safety equipment that reduce flexibility while increasing costs and required space. The current market, however, asks for reduced lead times and mass customization, thus imposing flexible and multi-purpose assembly systems [1]. These needs are particularly common for small- and medium-sized enterprises (SMEs). Collaborative robots (or cobots [2]) represent a natural evolution that can solve existing challenges in manufacturing and assembly tasks, as they allow for a physical interaction with humans in a shared workspace; moreover, they are designed to be easily reprogrammed even by non-experts in order to be repurposed for different roles in a continuously evolving workflow [3]. Collaboration between humans and cobots is seen as a promising way to achieve increases in productivity while decreasing production costs, as it combines the ability of a human to judge, react, and plan with the repeatability and strength of a robot.

Several years have passed since the introduction of collaborative robots in industry, and cobots have now been used in many different applications; furthermore, collaboration with traditional robots is also being researched, as it takes advantage of those devices’ power and performance. Therefore, we believe that it is the proper time to review the state of the art in this area, with a particular focus on industrial case studies and the economic convenience of these systems. A literature review is a suitable way to identify current approaches to Human–Robot Collaboration (HRC), to better understand the capabilities of collaborative systems, and to highlight existing gaps on the basis of the future work proposed in the reviewed papers.

The paper is organized as follows: After a brief overview of HRC methods, Section 2 provides an overview of the economic advantages of the collaborative systems, with a brief comparison with traditional systems. Our literature review analysis is presented in Section 3, and Section 4 contains a discussion of the collected data. Lastly, Section 5 concludes the work.

Background

Although cobots have spread only recently, the concept was introduced in 1996 by J. Edward Colgate and Michael Peshkin [2,4]. These devices were passive and operated by humans, and are quite different from modern cobots, which are better represented by lightweight robots such as the KUKA LBR iiwa, developed since the 1990s by KUKA Roboter GmbH and the Institute of Robotics and Mechatronics at the German Aerospace Center (DLR) [5], or by the first commercial collaborative robot, a UR5 model produced by the Danish company Universal Robots and sold in 2008 [6].

First of all, we believe that it is important to distinguish the different forms of collaboration, since the term is often used ambiguously.

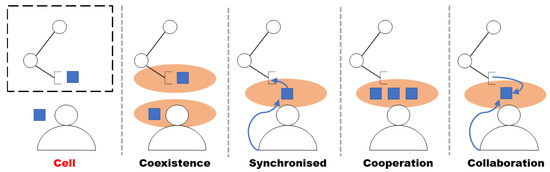

Müller et al. [7] proposed a classification for the different methodologies in which humans and cobots can work together, as summarized in Figure 1, where the final state shows a collaborative environment.

Figure 1.

Types of use of a collaborative robot.

- Coexistence, when the human operator and cobot are in the same environment but generally do not interact with each other.

- Synchronised, when the human operator and cobot work in the same workspace, but at different times.

- Cooperation, when the human operator and cobot work in the same workspace at the same time, though each focuses on separate tasks.

- Collaboration, when the human operator and the cobot must execute a task together; the action of the one has immediate consequences on the other, thanks to special sensors and vision systems.

It should be noted that neither this classification nor the terminology used is unique, and others may be found in the literature [8,9,10,11].
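The four types of use listed above can be read as a small decision tree. The following is a minimal sketch of that reading; the function name `classify` and its three boolean inputs are illustrative assumptions, not part of the classification by Müller et al. [7].

```python
from enum import Enum


class InteractionType(Enum):
    COEXISTENCE = "coexistence"
    SYNCHRONISED = "synchronised"
    COOPERATION = "cooperation"
    COLLABORATION = "collaboration"


def classify(shared_workspace: bool, same_time: bool, shared_task: bool) -> InteractionType:
    """Map three yes/no questions onto the four types of use (hypothetical helper)."""
    if not shared_workspace:
        # Same environment, but human and cobot generally do not interact.
        return InteractionType.COEXISTENCE
    if not same_time:
        # Same workspace, used at different times.
        return InteractionType.SYNCHRONISED
    if not shared_task:
        # Same workspace and time, but each works on a separate task.
        return InteractionType.COOPERATION
    # Human and cobot execute one task together.
    return InteractionType.COLLABORATION


print(classify(shared_workspace=True, same_time=True, shared_task=False).value)
```

Ordering the checks from the weakest to the strongest form of interaction means that a cell is labelled collaborative only when all three conditions hold.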

To provide definitions and guidelines for the safe and practical use of cobots in industry, several standards have been proposed. Collaborative applications are part of the general scope of machinery safety regulated by the Machinery Directive, which defines the Essential Health and Safety Requirements (EHSR; RESS in the Italian abbreviation). For further documentation, we refer to [12].

The reference standards as reported in the Machinery Directive are:

- UNI EN ISO 12100:2010 “Machine safety, general design principles, risk assessment, and risk reduction”.

- UNI EN ISO 10218-2:2011 “Robots and equipment for robots, Safety requirements for industrial robots, Part 2: Systems and integration of robots”.

- UNI EN ISO 10218-1:2012 “Robots and equipment for robots, Safety requirements for industrial robots, Part 1: Robots”.

In an international setting, the technical specification ISO/TS 15066:2016 “Robots and robotic devices, Collaborative Robots” is dedicated to the safety requirements of the collaborative methods envisaged by the Technical Standard UNI EN ISO 10218-2:2011.

According to the international standards UNI EN ISO 10218-1 and 10218-2, and as explained more fully in ISO/TS 15066:2016, four classes of safety requirements are defined for collaborative robots:

- Safety-rated monitored stop (SMS) is used to cease robot motion in the collaborative workspace before an operator enters the collaborative workspace to interact with the robot system and complete a task. This mode is typically used when the cobot mostly works alone, but occasionally a human operator can enter its workspace.

- Hand-guiding (HG), where an operator uses a hand-operated device, located at or near the robot end-effector, to transmit motion commands to the robot system.

- Speed and separation monitoring (SSM), where the robot system and operator may move concurrently in the collaborative workspace. Risk reduction is achieved by maintaining at least the protective separation distance between operator and robot at all times. During robot motion, the robot system never gets closer to the operator than the protective separation distance. When the separation distance decreases to a value below the protective separation distance, the robot system stops. When the operator moves away from the robot system, the robot system can resume motion automatically according to the requirements of this clause. When the robot system reduces its speed, the protective separation distance decreases correspondingly.

- Power and force limiting (PFL), where the robot system shall be designed to adequately reduce risks to an operator by not exceeding the applicable threshold limit values for quasi-static and transient contacts, as defined by the risk assessment.
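The speed and separation monitoring behaviour described above can be sketched in a few lines. This is a simplified constant-speed illustration, assuming a protective separation distance made up of the operator's travel during the robot's reaction and stopping time, the robot's own travel while reacting and braking, and fixed allowances. All names and numeric values below are illustrative assumptions, not values taken from ISO/TS 15066 or from the reviewed papers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SsmParams:
    """Illustrative parameters (assumed values, not from the standard)."""
    human_speed: float = 1.6         # m/s, assumed operator approach speed
    reaction_time: float = 0.1       # s, assumed robot system reaction time
    stopping_time: float = 0.3       # s, assumed robot stopping time
    intrusion_distance: float = 0.2  # m, assumed allowance for body-part intrusion
    uncertainty: float = 0.1         # m, assumed combined position uncertainty


def protective_distance(robot_speed: float, p: SsmParams = SsmParams()) -> float:
    """Simplified protective separation distance in metres."""
    human_travel = p.human_speed * (p.reaction_time + p.stopping_time)
    robot_reaction_travel = robot_speed * p.reaction_time
    # Assumes linear deceleration to rest, so the mean braking speed is half the initial speed.
    robot_stopping_travel = 0.5 * robot_speed * p.stopping_time
    return (human_travel + robot_reaction_travel + robot_stopping_travel
            + p.intrusion_distance + p.uncertainty)


def ssm_command(separation: float, robot_speed: float, p: SsmParams = SsmParams()) -> str:
    """Return 'stop' when the measured separation is below the protective distance."""
    return "stop" if separation < protective_distance(robot_speed, p) else "run"


for speed in (1.0, 0.25):
    print(speed, round(protective_distance(speed), 3), ssm_command(1.1, speed))
```

With these assumed values, an operator 1.1 m away forces a stop at a robot speed of 1.0 m/s but not at 0.25 m/s, which illustrates why reducing the robot speed shrinks the protective separation distance.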

Collaborative modes can be adopted even when using traditional industrial robots; however, several safety devices, e.g., laser sensors and vision systems, or controller alterations are required. Thus, a commercial cobot that does not require further hardware costs and setup can be a more attractive solution for industry.

Lastly, cobots are designed with particular features that distinguish them considerably from traditional robots, defined by Michalos et al. [13] as technological and ergonomic requirements. Furthermore, they should be equipped with additional features with respect to traditional robots, such as force and torque sensors, force limits, vision systems (cameras), laser systems, anti-collision systems, recognition of voice commands, and/or systems to coordinate the actions of human operators with their motion. For a more complete overview, we refer to [8,13]. Table A1 shows the characteristics of some of the most popular cobots, with a brief overview of some kinematic schemes in Table A2.

2. Convenience of Collaborative Robotics

The choice towards human–robot collaborative systems is mainly dictated by economic motivations, occupational health (ergonomics and human factors), and efficient use of factory space. Another advantage is the simplification in the robot programming for the actions necessary to perform a task [14]. In addition, learning by demonstration is a popular feature [15].

Furthermore, the greatest advantage of collaborative systems is their flexibility: in theory, since collaborative cells do not require rigid safety systems, they could be relocated to other parts of a plant more easily and quickly; therefore, they could adapt well to cases in which the production layout needs to change continuously [16]. However, it should be noted that high-risk applications have to be constrained as in any traditional system, which restricts this flexibility.

Collaborative systems can also achieve lower direct unit production costs: [17] observed that a higher degree of collaboration has a high impact on throughput; moreover, depending on the assembly process considered, the throughput can be higher than in traditional systems.

Table 1 provides a comparison between collaborative and traditional systems for four different jobs: assembly (the act of attaching two or more components), placement (the act of positioning each part in the proper position), handling (the manipulation of the picked part), and picking (the act of taking from the feeding point). In order to adapt to market needs, a manual assembly system could be used, though this can lead to a decrease in productivity due to variations in quality and fluctuations in labor rates [18]. Comparing the human operator capabilities to automated systems, it is clear that the performance of manual assembly is greatly influenced by ergonomic factors, which restrict the product weight and the accuracy of the human operator [19]. Therefore, these restrictions limit the capabilities of human operators in the handling and picking tasks of heavy/bulky parts. These components can be manipulated with handling systems such as jib cranes: These devices could be considered as large workspace-serving robots [20], used for automated transportation of heavy parts. However, to the authors’ knowledge, there are no commercial end-effectors that allow these systems to carry out complex tasks, such as assembly or precise placing, since they are quite limited in terms of efficiency and precision [21].

Table 1.

Qualitative evaluation of the most suitable solutions for the main industry tasks.

Traditional robotic systems [22] bridge this gap, offering manipulators with both high payload (e.g., the FANUC M-2000 series, with a payload of 2.3 t [23]) and high repeatability. However, the flexibility and dexterity required for complex assembly tasks could be too expensive, or even impossible, to achieve with traditional robotic systems [24]. This gap can be closed by collaborative systems, since they combine the capabilities of a traditional robot with the dexterity and flexibility of the human operator. Collaborative robots are especially advantageous for assembly tasks, particularly if the task is executed with a human operator. They are also suitable for pick and place applications, though a traditional robot or a handling system can offer better results in terms of speed, precision, and payload.

3. Literature Review

This literature review analyses works from 2009–2018 that involved collaborative robots for manufacturing or assembly tasks. Reviewed papers needed to include a practical experiment involving a collaborative robot undertaking a manufacturing or assembly task; we ignored those that only considered the task in simulation. This criterion was adopted because, often, only practical experiments with real hardware can highlight both the challenges and advantages of cobots.

For this literature review, three search engines were used to collect papers within our time period, selected using the following boolean string: ((collaborative AND robot) OR cobot OR cobotics) AND (manufacturing OR assembly). The period 2009–2018 was chosen because it is only in the last 10 years that collaborative robots have been available on the market.

- ScienceDirect returned 124 results, from which 26 were found to fit our literature review criteria after reading the title and abstract.

- IEEE Xplore returned 234 results, from which 44 were found to fit our literature review criteria after reading the title and abstract.

- Web of Science returned 302 results, from which 62 were found to fit our literature review criteria after reading the title and the abstract.

Of all these relevant results, 16 were duplicated results, leaving us with 113 papers to analyze. Upon a complete read-through of the papers, 41 papers were found to fully fit our criteria and have been included in this review. It should be noted that in the analysis regarding industry use cases, only 35 papers are referenced, as 6 papers were focused on the same case study as others and did not add extra information to our review.
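The search and de-duplication steps can be illustrated with a short sketch. It assumes that the boolean string is applied to whole words in a title or abstract and that duplicates are detected by normalized title; the actual search engines apply their own matching rules (for example stemming, so that "robots" may match "robot"), and the function names here are hypothetical.

```python
import re


def matches_query(text: str) -> bool:
    """Evaluate ((collaborative AND robot) OR cobot OR cobotics) AND (manufacturing OR assembly)."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    topic = {"collaborative", "robot"} <= words or bool({"cobot", "cobotics"} & words)
    domain = bool({"manufacturing", "assembly"} & words)
    return topic and domain


def deduplicate(titles: list[str]) -> list[str]:
    """Keep the first occurrence of each title, ignoring case, spacing, and punctuation."""
    seen: set[str] = set()
    unique: list[str] = []
    for title in titles:
        key = " ".join(re.findall(r"[a-z0-9]+", title.lower()))
        if key not in seen:
            seen.add(key)
            unique.append(title)
    return unique


hits = [
    "A collaborative robot for engine assembly",
    "A Collaborative Robot for Engine Assembly.",
    "Cobot-assisted manufacturing cell",
    "Collaborative filtering for assembly instructions",
]
print(deduplicate([t for t in hits if matches_query(t)]))
```

In this toy list, the last title is rejected because it contains neither "robot" nor a cobot term, and the second title is dropped as a duplicate of the first.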

The following parameters were studied for all of these works, as summarized in Table A3: the robot used, control system, application, objectives, key findings, and suggested future work. These were chosen for the following reasons. The robot choice is important, as it highlights which systems are successfully implemented for collaborative applications. The control system is interesting to analyze, as it dictates both safety and performance considerations of the task. Furthermore, when a human is in the control loop, the control system choice is specific to the manner of human–machine interaction; by seeing which methods are more popular and successfully implemented, we can identify trends and future directions. We characterized control systems as vision systems (such as cameras and laser sensors), position systems (such as encoders, which are typical of traditional industrial robots), impedance control systems (through haptic interfaces), admittance control systems (taking advantage of the cobot torque sensors or voltage measurement), audio systems (related to voice commands and used for voice/speech recognition), and other systems (those that were not easily classified, or that were introduced in only one instance).

The application represents the task given to the cobot, which we believe allows a better understanding to be made regarding the capabilities of collaborative robots. These tasks were divided into assembly (when the cobot collaborates with the operator in an assembly process), human assistance (when the cobot acts as an ergonomic support for the operator, e.g., movable fixtures, quality control, based on vision systems), and lastly, machine tending (when the cobot performs loading/unloading operations).

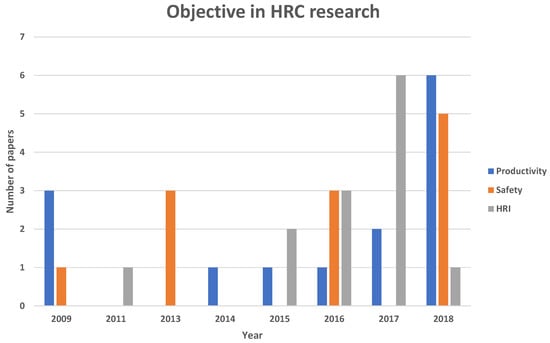

Furthermore, we divided the objectives into three main topics: productivity, representing the studies focused on task allocation, quality increase, and reduction of cycle time; safety, which includes not only strictly safety-related topics such as collision avoidance, but also improvements in human ergonomics and reduction of mental stress; and HRI (Human–Robot Interaction), which is focused on the development of new HRI methodologies, e.g., voice recognition. It should be noted that the proposed subdivision is by no means univocal; interesting examples are [25,26,27]. These works were classified as safety studies because, even though the proposed solutions maintain a high level of productivity, they act on HRC safety.

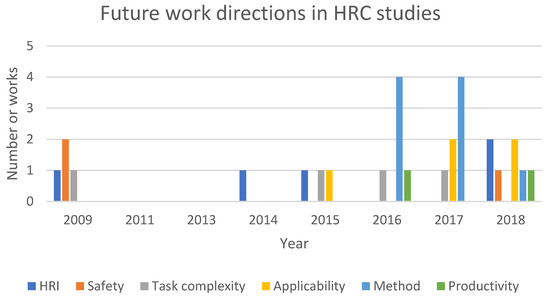

The key findings were not grouped, since we believe they depend on the specific study and are too varied; however, they have been summarized in Table A3. Key findings were useful to present the capabilities of the collaborative systems and what HRC studies have achieved. They were included in our analysis in order to identify common solutions. Future work has been grouped into: HRI (works that focus on increasing HRI knowledge and design), safety (works that focus on increasing operator safety when working with the cobot), productivity (works that focus on increasing the task productivity in some manner), task complexity (works that focus on increasing the complexity of the task for a particular application), applicability (works that focus on increasing the scope of the work to be used for other industrial applications), and method (works that focus on enhancing the method of HRI via modeling, using alternative robots, or applying general rules and criteria to the design and evaluation process). From these groupings, we can identify ongoing challenges that still need to be solved in the field; by seeing what researchers identify as future work for industrial uptake, we can find trends across the industry in the directions on which research is focused. Our analysis of these parameters is presented in Section 4.

4. Discussion: Trends and Future Perspective

4.1. Trends in the Literature

By examining the literature as summarized in Table A3, we can identify several trends in the use of cobots in industrial settings. It should be noted that, for some of the considered studies in Table A3, we could not identify all of our parameters as specified in Section 3; thus, they are not considered in this specific discussion.

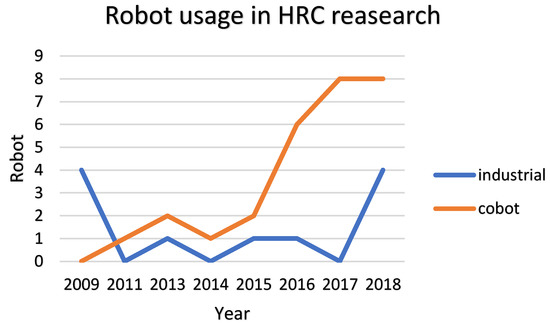

Although early research utilized traditional industrial robots (Figure 2), the subsequent spread of cobots led to several studies based on the DLR LWR-III (2011), followed by the upgraded KUKA iiwa (from 2016 to 2018), ABB YuMi (also called FRIDA) in 2017 and 2018, and Universal Robots from 2014 to 2018. Several researchers applied the collaborative methods to industrial robots, usually due to their increased performance and widespread availability; however, the disadvantage of this choice is the increase in cost and complexity due to the inclusion of several external sensors and the limited HRC methodologies available. A relationship between the kinematics of the cobot and the application was not explicitly considered, since we believe that other parameters, such as the presence of force sensors in each axis, influenced the cobot choice made in these papers. However, it should be noted that the kinematics, specifically the number of axes, was a feature considered in [28], whereas future works are focused on verifying their findings with kinematically redundant robots [29] or utilizing the redundancy to achieve better stiffness in hand-guiding [30].

Figure 2.

Robot usage in selected human–robot collaboration studies in the period 2009–2018.

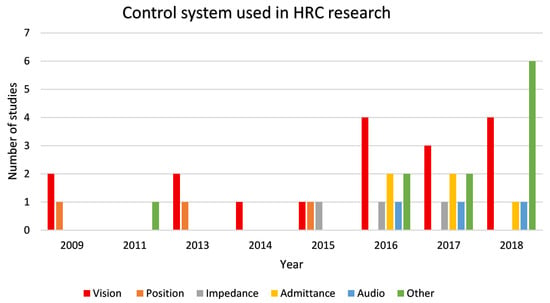

Figure 3 presents the different control systems in the selected human–robot collaboration (HRC) studies. Position control systems were only used for traditional industrial robots, often using extra vision systems for safety reasons. Due to the inherent compliance of cobots, impedance control was more commonly chosen for these systems, though in many cases where an inherently compliant cobot was used, vision was also included for feedback [31,32,33]. Robot compliance can often be a trade-off with robot precision, so including a separate channel for feedback to monitor collisions and increase safety can be a useful method of maintaining manipulation performance. Vision is indeed the prevalent sensor used in HRC studies, partly due to the flexibility and affordability of the systems, especially when using depth cameras such as Microsoft Kinect cameras. It is interesting to note that in recent years, Augmented Reality (AR) systems, such as the Microsoft HoloLens, have been used more in HRC research, as they are able to provide information to the operator without obscuring their view of the assembly process. In one study, a sensitive skin was incorporated into the cobot to provide environmental information and maintain the operator’s safety. As these skins become more widely studied and developed, we could see this feedback control input become more common, though challenges such as response time must still be solved [34].

Figure 3.

Control systems used in selected human–robot collaboration studies in the period 2009–2018: In red, the number of vision systems; in orange, position-controlled systems (used especially for traditional industrial robots); in gray, the cases for impedance control (e.g., through haptic interfaces); in yellow, admittance control (e.g., through torque sensors); in blue, audio systems (for voice/speech recognition); and green for other systems.

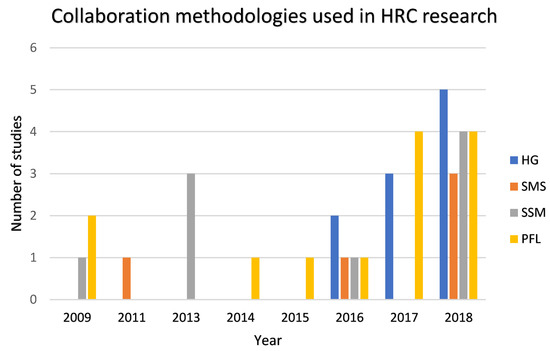

The considered studies used the aforementioned robots, both traditional industrial robots and cobots, with different collaborative methodologies. Early studies were focused on SSM and PFL methodologies; we believe this focus is due to the need for safety and flexibility in traditional robotic systems and the early spread of cobots. Since 2016 and the introduction of ISO/TS 15066:2016, the considered research sample began to study other methodologies, especially the HG method, which, as shown in Figure 4, has become prevalent in recent years. The HG method is indeed a representative function of collaborative robots [30], since it allows even unskilled users to interact with and program the cobot, which can allow some degree of flexibility, even if the robot moves only along predefined directions, without the need for expensive algorithms [35]. It should be noted that the HG method could also be employed with traditional industrial robots, such as a COMAU NJ130 [36]: this allows one to take advantage of the robot’s characteristics, such as high speed and power, and increase the system’s flexibility.

Figure 4.

Collaboration methods used in selected human–robot collaboration in the period 2009–2018: In blue, hand guiding (HG); in orange, safety-rated monitored stop (SMS); in gray, speed and separation monitoring (SSM); in yellow, power and force limiting (PFL).

As stated previously, the collaborative mode depends on the considered application. Figure 5 depicts the considered tasks over the last decade. The most studied task is assembly, likely due to the required flexibility in the task, which makes traditional robotic systems too expensive or difficult to implement. However, the task of production also requires flexibility, and could greatly benefit from collaborative applications. Likely, until the fundamental challenges of setting up collaborative workcells are solved for the easier tasks of assembly, we will not see many case studies targeting production.

Figure 5.

Tasks assigned to the robot in selected collaborative applications in research in the period 2009–2018: In blue, assembly tasks; in orange, the tasks used to assist the operator, e.g., handover of parts, quality control tasks, or machine tending, i.e., loading and/or unloading.

In our review, 35 papers presented unique case studies of industrial applications. Two industries seem to drive this research—the automotive industry accounted for 22.85% of studies, and the electronics industry a further 17.14%. Interestingly, research for the automotive industry only began after 2015, and will likely continue to drive research in this area.
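The reported shares can be checked against the 35 case studies with a short calculation. This is a sketch under the assumption that each percentage corresponds to a whole number of papers; the counts it recovers (8 and 6) are inferred from the percentages and are not stated in the text.

```python
def implied_count(share_percent: float, total: int) -> int:
    """Nearest whole number of papers matching a reported percentage (hypothetical helper)."""
    return round(share_percent / 100 * total)


TOTAL_CASE_STUDIES = 35
reported_shares = {"automotive": 22.85, "electronics": 17.14}

for industry, share in reported_shares.items():
    count = implied_count(share, TOTAL_CASE_STUDIES)
    recomputed = 100 * count / TOTAL_CASE_STUDIES
    print(f"{industry}: {count}/{TOTAL_CASE_STUDIES} = {recomputed:.2f}%")
# automotive: 8/35 = 22.86%
# electronics: 6/35 = 17.14%
```

Under this assumption, the automotive figure of 22.85% appears to be truncated rather than rounded, since 8/35 rounds to 22.86%.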

HRC studies present several objectives that can be grouped into three main topics. Figure 6 depicts the focus of HRC studies in the last decade. It is interesting to note that the first phase of HRC study [37,38,39,40,41] was more focused on increasing the production and safety aspects of HRC, at least in a manufacturing context. As the research progressed, an increasing number of studies were focused on HRI methodologies, becoming a predominant objective in 2017. The ostensible reduction in 2018 should not mislead us to believe that HRI studies were abandoned in that year: As stated before, the presented classification is not univocal, thus studies such as [42,43,44] could also be considered HRI studies.

Figure 6.

Main topics or objectives in HRC studies. The objectives were divided into productivity studies (blue), safety studies (orange), e.g., ergonomics and collision avoidance, HRI (Human–Robot Interaction) studies (gray), e.g., development or improvement of HRI methodologies.

The key findings of these studies highlight challenge areas that research has successfully addressed, or even solved, when cobots are used for industrial tasks. Multiple studies reported an increase in task performance, e.g., by reducing completion time and minimizing error [25,37,38,43], as well as a better understanding of the operator space [29,31,32,41] and higher precision of workpiece manipulation [28,30,45]. Thematic areas of research intent can be identified, such as increasing and quantifying the trust of the operator in the robotic system [29,46,47], as well as improving safety by minimizing collisions [40].

The directions of future work identified in the literature are summarized in Figure 7. Historically, researchers aimed to increase the HRI relevance of their work, with an additional focus on higher safety requirements and more complex tasks. In recent years, the scope of future work has expanded, with researchers focusing on more complex methods that improve the performance of their systems, whether by applying their method to different application fields or to more complex tasks. This is likely due to the prevalence of new cobots and sensing methodologies coming onto the market, maturing algorithms, and experience in designing collaborative workcells.

Figure 7.

Future work topics from HRC studies. The work was divided into directions of HRI (dark blue), safety (orange), task complexity (gray), applicability (yellow), method (light blue), and productivity (green).

Many of the reviewed works highlight future work in terms of the method they used, whether it be by increasing the complexity of their modeling of the operator and/or environment [48], or using different metrics to evaluate performance [33,49,50] and task choice [51]. Others believe that expanding their research setup to other application areas is the next step [31,45,52]. In our view, these works can be achieved without any step change in existing technology or algorithms; rather, they require more testing time. To increase safety, productivity, and task performance, researchers will need to improve planners [39,53], environment and task understanding [28,40,54,55], operator intention understanding [38], and ergonomic cell setups [37,56]. To improve HRI systems, common future work focuses on increasing the robots’ and operators’ awareness of the task and environment by object recognition [44] and integrating multi-modal sensing in an intuitive manner for the operator [3,32,36].

In essence, this future direction focuses on having better understanding of the scene—whether this is what the operator intends to do, what is happening in the environment, or the status of the task. Researchers propose solving this by using more sensors and advanced algorithms, and fusing this information in a way that is easy to use and intuitive for the operator to understand. These systems will inherently lead to better safety, as unexpected motions will be minimized, leading consequently to more trust and uptake. We can expect that many of these advances can come from other areas of robotics research, such as learning by demonstration through hand-guiding or simulation techniques that make it easy to teach a robot a task, and advances in computer vision and machine learning for object recognition and semantic mapping. Other reviews, such as [8], identify similar trends, namely those of improved modeling and understanding, better task planning, and adaptive learning. It will be very interesting to see how this technology is incorporated into the industrial setting to take full advantage of the mechanics and control of cobots and the HRI methodologies of task collaboration.

4.2. Trend of the Market

We believe that the current market should also be presented in order to better place our literature review in the manufacturing context. According to [57], the overall collaborative robot market is estimated to grow from 710 million USD in 2018 to 12,303 million USD by 2025, at a compound annual growth rate (CAGR) of 50.31% during the forecast period. However, the International Federation of Robotics (IFR), while acknowledging an increase in robot adoption with over 66% of new sales in 2016, expects that market adoption may proceed at a somewhat slower pace over the forecast timeframe [58]. Nevertheless, they suggest that the fall in robot prices [59] has led to a growing market for cobots. In particular, small- and medium-sized enterprises (SMEs), which represent almost 70% of the global number of manufacturers [60] and previously could not afford robotic applications due to the high capital costs, are now adopting cobots, as these require less expertise and lower installation expenses; this confirms a trend presented in scientific works [3].
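The growth rate quoted from [57] can be reproduced from the two market sizes with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch below assumes seven compounding years (2018 to 2025); the function name `cagr` is illustrative.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.5 means 50% per year)."""
    return (end_value / start_value) ** (1 / years) - 1


# Market sizes in million USD, as quoted from [57].
rate = cagr(710.0, 12303.0, 2025 - 2018)
print(f"CAGR 2018-2025: {rate:.1%}")
```

The result is approximately 50.3% per year, in line with the quoted 50.31%; the small difference is presumably due to rounding of the market sizes.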

Finally, [57] highlights that, among cobots of different payloads, those with a payload capacity of up to 5 kg were preferred; indeed, they held the largest market size in 2017, and a similar trend is expected to continue from 2018 to 2025. This market preference for lightweight robots, which are safer but do not offer the high speed and power typically associated with industrial robots [36,61], restrains the HRC possibilities in the current manufacturing scenario. We believe that, without proper regulation, the current market will continue to mark a dividing line between heavy-duty tasks and HRC methods.

5. Conclusions

Human–robot collaboration is a new frontier for robotics, and the human–robot synergy will constitute a relevant factor in industry for improving production lines in terms of performance and flexibility. This will only be achieved with systems that are fundamentally safe for human operators, intuitive to use, and easy to set up. This paper has provided an overview of the current standards related to Human–Robot Collaboration, showing that it can be applied in a wide range of different modes. The state of the art was presented and the kinematics of several popular cobots were described. A literature analysis was carried out and 41 papers, presenting 35 unique industrial case studies, were reviewed.

Within the context of manufacturing applications, we focused on the control systems, the collaboration methodologies, and the tasks assigned to the cobots in HRC studies. From our analysis, we can identify that the research is largely driven by the electronics and automotive industries, but as cobots become cheaper and easier to integrate into workcells, we can expect SMEs from a wide range of industrial applications to lead their adoption. Objectives, key findings, and future research directions are also identified, the latter highlighting ongoing challenges that still need to be solved. We can expect that many of the advances needed in the identified directions could come from other areas of robotics research; how these will be incorporated into the industrial setting will lead to new challenges in the future.

Author Contributions

Conceptualization, E.M. and G.R.; Methodology, E.M. and G.R.; Formal analysis, E.M., R.M., and E.G.G.Z.; Investigation, E.M., R.M. and E.G.G.Z.; Data curation, E.M., R.M., and E.G.G.Z.; Writing—original draft preparation, E.M., R.M., and E.G.G.Z.; Writing—review and editing, M.F., E.M., R.M., G.R., and E.G.G.Z.; Supervision, M.F. and G.R.; Project administration, M.F. and G.R.; Funding acquisition, G.R.

Funding

This research was funded by University of Padua—Program BIRD 2018—Project no. BIRD187930, and by Regione Veneto FSE Grant 2105-55-11-2018.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Tables

Table A1.

List of characteristics of some of the most used cobots for different kinematics.

| Brand | Product Name | Payload (Range) [kg] | Reach (Range) [mm] | Repeatability [mm] | Internal Sensors & Control System | No. of Axes | Kinematics |
|---|---|---|---|---|---|---|---|
| ABB (CHE-SWE) | YuMi (dual arm IRB 14000, single arm IRB 14050) | 0.5 for each arm | 500–559 | ±0.02 | Position control (encoder) and voltage measurement | 7 for each arm | // |
| Comau (ITA) | AURA | 170 | 2800 | ±0.1 | Position control (encoder), sensitive skin, laser scanner (optional) | 6 | // |
| Fanuc (JPN) | CR-4iA, CR-7iA, CR-7iA/L, CR-14iA/L, CR-15iA, CR-35iA | 4–35 | 550–1813 | ±0.01–0.03 | Force sensor (base) | 6 | Spherical wrist |
| Kawasaki (JPN) | duAro1, duAro2 | 2–3 for each arm | 760–785 | ±0.05 | Position controlled | 4 for each arm | Dual-arm SCARA (duAro1) |
| Kuka (DEU) | iisy, iiwa 7 R800, iiwa 14 R820 | 3–14 | 600–820 | ±0.1–0.15 (iisy not yet defined) | Torque sensor in each axis and position control (iiwa) | 6 (iisy), 7 (iiwa) | Spherical wrist (and shoulder for iiwa) |
| Omron (JPN)/Techman (TWN) | TM5 700, TM5 900, TM12, TM14 | 4–14 | 700–1300 | ±0.05 (TM5), ±0.1 | 2D wrist camera, position control (encoder), voltage measurement | 6 | Three parallel axes |
| Precise Automation (USA) | PP100, PF3400, PAVP6, PAVS6 | 2–7 | 432–770 or 685–1270 | ±0.02–0.1 | Position control (encoder), voltage measurement | 4 (Cartesian, SCARA), 6 (spherical wrist) | Cartesian, SCARA, spherical wrist |
| Rethink Robotics (USA/DEU) | Baxter, Sawyer | 4 (2 for each arm for Baxter) | 1260 for each arm | ±0.1 | Torque sensors in each axis, position control | 7 for each arm | // |
| Stäubli | TX2-40, TX2(touch)-60, TX2(touch)-60L, TX2(touch)-90, TX2(touch)-90L, TX2touch-90XL | 2.3–20 | 515–1450 | ±0.02–0.04 | Position control, sensitive skin (touch) | 6 | Spherical wrist |
| Universal Robots (DNK) | UR3, UR3e, UR5, UR5e, UR10, UR10e, UR16e | 3–16 | 500–1300 | ±0.03–0.1 | Position control (encoder), voltage measurement, multi-axis force/torque load cell (e-series) | 6 | Three parallel axes |
| Yaskawa (JPN) | HC10, HC10DT | 10 | 1200 | ±0.1 | Torque sensors in each axis, position control | 6 | // |

Table A2.

Denavit–Hartenberg parameters and singularity configurations for the considered kinematic schemes.

| Kinematic Scheme | Denavit–Hartenberg Parameters | Singularity Configurations |
|---|---|---|
| Six axes with spherical wrist | [figure] | Wrist: J4 and J6 aligned; Shoulder: Wrist aligned with J1; Elbow: Wrist coplanar with J2 and J3 |
| Six axes with three parallel axes | [figure] | Wrist: J6 // J4; Shoulder: Intersection of J5 and J6 coplanar with J1 and J2; Elbow: J2, J3, and J4 coplanar |
| Six axes with offset wrist | [figure] | Wrist: J5 ≈ 0° or 180° ± 15°; Shoulder: Wrist point near the yellow column (300 mm radius); Elbow: J3 ≈ 0° or 180° ± 15° |
| Seven axes with spherical joints | [figure] | Wrist motion: J6 = 0 & J4 = 90°; Shoulder motion: J2 = 0 & J3 = ±90°; Elbow motion: J5 = ±90° & J6 = 0 |
| Seven axes without spherical joints | [figure] | Wrist: J5 // J7; Shoulder: Wrist point near J1 direction; Elbow: J3 // J5 |
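As an illustration of how the joint-angle conditions in Table A2 might be used in practice, the following minimal Python sketch flags poses of a six-axis offset-wrist arm that lie close to the wrist or elbow singularity. It assumes that "≈ 0° or 180° ± 15°" means "within 15° of either 0° or 180°"; the function names and the joint ordering (J1 to J6, in degrees) are hypothetical and not taken from any vendor API. The shoulder condition is omitted because it depends on the Cartesian wrist position, which would require the forward kinematics of the specific robot.

```python
def angular_distance_deg(a, b):
    """Smallest absolute difference between two angles, in degrees (0 to 180)."""
    return abs((a - b + 180.0) % 360.0 - 180.0)


def near_offset_wrist_singularities(joints_deg, tol_deg=15.0):
    """Return the singularities (by name) that a pose is close to.

    joints_deg: six joint angles J1..J6 in degrees (hypothetical ordering).
    tol_deg:    tolerance band around 0 and 180 degrees (assumed from Table A2).
    """
    j3, j5 = joints_deg[2], joints_deg[4]
    hits = []
    # Wrist singularity: J5 close to 0 or 180 degrees.
    if min(angular_distance_deg(j5, 0.0), angular_distance_deg(j5, 180.0)) <= tol_deg:
        hits.append("wrist")
    # Elbow singularity: J3 close to 0 or 180 degrees.
    if min(angular_distance_deg(j3, 0.0), angular_distance_deg(j3, 180.0)) <= tol_deg:
        hits.append("elbow")
    return hits
```

A path planner could call such a check on each waypoint and slow down or re-route when the returned list is non-empty; the 15° band is a design parameter and would need tuning for a given robot and task.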

Table A3.

Literature review analysis.

| Author | Year | Robot Used | Control System | Collaboration Methods | Application | Objective of the Study | Key Findings | Future Works |
|---|---|---|---|---|---|---|---|---|
| J. T. C. Tan et al. [37] | 2009 | Industrial robot | | | Assembly | Productivity: Information display for operator support | Development and implementation of an information support system, which leads to a reduction in assembly time. | HRI: Studies on the position of the LCD TV (information source) in order to improve information reception. |
| T. Arai et al. [38] | 2009 | Industrial robot | Vision | Power and Force limiting | Assembly | Productivity: Improve efficiency through devices that support the operator | HRC doubles productivity in comparison with manual assembly; reduction of human errors up to no defects | Safety: Monitoring operator’s fatigue and intention |
| J. T. C. Tan et al. [39] | 2009 | Industrial robot | Vision | Power and Force limiting | Assembly | Productivity: Optimize working efficiency, improving quality and productivity | Safety analysis led to an increased distance and a reduction in the robot speed; mental strain due to working with robots reduced | Task complexity: Analysis of the improvements obtained with HRC to order tasks. |
| C. Lenz et al. [40] | 2009 | Industrial robot | Position | Speed and separation monitoring | Assembly | Safety: HRC with traditional industrial robots, with focus on safety | Collision avoidance method based on restricted robot movements and virtual force fields | Safety: Estimation and tracking of human body pose to avoid collision without the use of any markers. |
| T. Ende et al. [41] | 2011 | DLR LWR-III 3 | Gesture recognition | | Human assistant | Productivity: Gather gestures for HRC from humans | Eleven gestures present recognition rate over 80%; recognition problem when torso position is part of the gesture | N/A |
| H. Ding et al. [25] | 2013 | ABB FRIDA (YuMi) | Vision | Speed and separation monitoring | Assembly | Safety: Collaborative behavior with operator safety and without productivity losses due to emergency stops | Speed reduction applied based on the distance between human arm and Kinect position avoids emergency stops | N/A |
| H. Ding et al. [26] | 2013 | ABB FRIDA (YuMi) | Vision | Speed and separation monitoring | Assembly | Safety: Multiple operators in collaborative behavior with operators safety concern and without productivity losses | Development of a finite state automaton; speed reduction improves the uptime while respecting safety constraints | N/A |
| A. M. Zanchettin et al. [27] | 2013 | Industrial robot | Position | Speed and separation monitoring | Quality control | Safety: Compromise between safety and productivity; Adaptable robot speed | Development of a safety-oriented path-constrained motion planning, tracking operator, and reducing robot speed | N/A |
| K. P. Hawkins et al. [32] | 2014 | Universal Robots UR10 | Vision | Power and Force limiting | Assembly | Productivity: Robots need to anticipate human actions even with task or sensor ambiguity | Compromises between human wait times and confidence in the human action detection | HRI: impact of the system on the operator’s sense of fluency, i.e., synchronization between cobot and operator |
| K. R. Guerin et al. [3] | 2015 | Universal Robots UR5 | Impedance | | Machine tending | Productivity: Robot assistant with set of capabilities for typical SMEs | Test of machine tending: 82% of parts taken from machine (due to bad weld or bad grasp) | HRI: Test ease of use and focus on HRC, gesture recognition for learning |
| I. D. Walker et al. [46] | 2015 | Industrial robot | Position | | Human assistant | HRI: Trust not considered in handoffs; derive model for robot trust on operator | The robot pose changes according to trust in the human, reducing impact forces in case of low trust | Applicability: Effectiveness of the approach in SME scenarios |
| T. Hamabe et al. [54] | 2015 | Kawada HIRO | Vision | Power and Force limiting | Assembly | HRI: Learn task from human demonstration | Human and robot roles fixed, due to limits in robot’s manipulation capabilities; changing task order increases time due to recognition system | Task complexity: Complete set of scenarios assumed, cobot should be able to recognize new tasks autonomously |
| S. M. M. Rahman et al. [29] | 2016 | Kinova MICO 2-finger | Vision | | Assembly | HRI: Derive model for robot trust on human; trust-based motion planning for handover tasks | pHRI gets better with trust for contextual information transparent to human; increase: 20% safety, 30% handover success, 6.73% efficiency | Method: Apply the proposed method with kinematically redundant robot |
| S. M. M. Rahman et al. [49] | 2016 | Rethink Baxter | Vision | | Assembly | Productivity: Autonomous error detection with human’s intervention | Regret-based method leads to improvement of fluency, due to an increase in synchronization, reduction in mean cognitive workload and increase in human trust compared to a Bayesian approach | Method: Different objective criteria for the regret-based approach to evaluate HRC performance |
| L. Rozo et al. [48] | 2016 | WAM robot/KUKA LWR iiwa | Impedance/admittance | Hand-guiding | Assembly | HRI: Robotic assistant needs to be easily reprogrammed, thus programming by demonstration | Model adapts to changes in starting and ending point, task, and control mode; with high compliance, the robot cannot follow trajectory | Method: Estimation of the damping matrix for the spring damper model; study how interaction forces can change the robot behaviors |
| A. M. Zanchettin et al. [62] | 2016 | ABB FRIDA (YuMi) | Vision | Speed and separation monitoring | Assembly | Safety: Collision avoidance strategy: Decrease the speed of the cobot | Speed reduction method based on minimum distance; distance threshold adaptable to the programmed speed; continuous speed scaling | N/A |
| A. Cherubini et al. [63] | 2016 | KUKA LWR4+ | Admittance | Safety rated monitored stop | Assembly | Safety: Collaborative human–robot application to assemble a car homokinetic joint | Framework that integrates many state-of-the-art robotics components, applied in real industrial scenarios | Productivity: Deploying the proposed methodologies on mobile manipulator robots to increase flexibility |
| H. Fakhruldeen et al. [51] | 2016 | Rethink Baxter | Vision and audio recognition | Power and Force limiting | Assembly | HRI: Implementation of a self-built planner in a cooperative task where the cobot actively collaborates | API development combining an object-oriented programming scheme with a Prolog meta interpreter to create these plans and execute them | Method: add cost to action evaluation, add non-productive actions, action completion percentage should be considered |
| S. Makris et al. [64] | 2016 | Industrial robot | AR system | Hand-guiding | Human assistant | Safety: Development of an AR system in aid of operators in human–robot collaborative environment to reduce mental stress | The proposed system minimizes the time required for the operator to access information and send feedback; it decreases the stoppage and enhances the training process | Task complexity: Application in other industrial environments |
| B. Whitsell et al. [28] | 2017 | KUKA LBR iiwa | Impedance/admittance | Hand-guiding/Power and Force Limiting | Assembly | HRI: Cooperate in everyday environment; robots need to adapt to human; haptics should adapt to the operator ways | 100% correct placement of a block in 1440 trials; robot can control a DOF if the operator does not control it (95.6%); lessening the human responsibility by letting the robot control an axis reduces the completion time | Applicability: Adapt variables to environment, e.g., task and robot coordinate system not aligned |
| J. Bös et al. [65] | 2017 | ABB YuMi | Admittance | Power and Force limiting | Assembly | Productivity: Increase assembly speed without reducing the flexibility or increasing contact forces using iterative learning control (ILC) | Increase acceleration by applying Dynamic Movement Primitives to an ILC, reduce contact forces by adding a learning controller. Stochastic disturbances do not have a long term effect; task duration decreases by 40%, required contact force by 50% | Method: Study and theoretical proof on stability in the long term |
| M. Wojtynek et al. [33] | 2017 | KUKA LBR iiwa | Vision | Hand-guiding | Assembly | Productivity: Create a modular and flexible system; abstraction of any equipment | Easy reconfiguration, without complex programming | Method: Introduce metrics for quantitative measurement of HRC |
| B. Sadrfaridpour et al. [47] | 2017 | Rethink Baxter | Human tracking system | Power and Force limiting | Assembly | HRI: Combination of pHRI and sHRI in order to predict human behavior and choose robot path and speed | Augmenting physical/social capabilities increases one subjective measure (trust, workload, usability); assembly time does not change | N/A |
| I. El Makrini et al. [56] | 2017 | Rethink Baxter | Vision | Power and Force limiting | Assembly | HRI: HRC based on natural communication; framework for the cobot to communicate | The framework is validated; more intuitive HRI | Task Complexity: Adapt robot to user; adjust parts position based on user’s height |
| P. J. Koch et al. [52] | 2017 | KUKA LWR4+ | Admittance | | Screwing for maintenance | HRI: Cobot development: Focus on intuitive human–robot interface | HR interface, simple for user reconfiguration. Steps in order to transform a mobile manipulator into a cobot | Applicability: Expand to several industrial maintenance tasks |
| M. Haage et al. [66] | 2017 | ABB YuMi | Vision | | Assembly | HRI: Reduce the time and required expertise to setup a robotized assembly station | A web-based HRI for assisting human instructors to teach assembly tasks in a straightforward and intuitive manner | N/A |
| P. Gustavsson et al. [50] | 2017 | Universal Robots UR3 | Impedance and audio recognition | Hand-guiding/Power and Force Limiting | Assembly | HRI: Joint Speech recognition and a haptic control in order to obtain an intuitive HRC | Developed a simplified HRI responsive to vocal commands, that guides the user in the progress of the task with haptics | Method: Test if haptic control can be used to move the robot with linear motions; an automatic way of logging the accuracy |
| M. Safeea et al. [30] | 2017 | KUKA LBR iiwa | Admittance | Hand-guiding/Safety rated Monitored Stop | Assembly | Safety: Precise and intuitive hand guiding | Possible to hand-guide the robot with accuracy, with no vibration, and in a natural and intuitive way | Method: Utilizing the redundancy of iiwa to achieve better stiffness in hand-guiding |
| W. Wang et al. [45] | 2018 | Industrial robot | Gesture recognition | Hand-guiding/Power and Force Limiting | Assembly | Productivity: Easier reconfiguration of the cobot using a teaching-by-demonstration model | 95% of accuracy (higher than previous methods based on vision); Lower inefficient time | Applicability: Use multimodal information to investigate different applications |
| N. Mendes et al. [31] | 2018 | KUKA LBR iiwa | Vision | Speed and separation monitoring | Assembly | HRI: Need for a flexible system with simple and fast interface | Gesture is intuitive but delays process; constrained flexibility | Applicability: Expand use case to several industrial fields |
| K. Darvish et al. [42] | 2018 | Rethink Baxter | Vision | Power and Force limiting | Assembly | Productivity: Increase robot adaptability integrated in the FlexHRC architecture by an online task planner | Planning and task representation require little time (less than 1% of total); the simulation follows the real data very well | N/A |
| A. Zanchettin et al. [43] | 2018 | ABB YuMi | Vision | Power and Force limiting | Assembly | Productivity: Predict human behavior in order to increase the robot adaptability | Task time decreased by 17% | N/A |
| G. Pang et al. [34] | 2018 | ABB YuMi | Sensitive skin | Safety rated monitored stop/Speed and separation monitoring | Test of collision | Safety: Cobot perceives stimulus only by its torque sensors, not guaranteeing collision avoidance | Integration on cobot of sensitive skin; delay in the system reaction | Method: Reduce contact area of the sensitive skin; test with multisensing systems |
| V. V. Unhelkar et al. [53] | 2018 | Universal Robots UR10 | Vision | Speed and separation monitoring | Human assistant | Productivity: Cobots can be successful, but they have restricted range: Mobile cobots for delivering parts | Prediction of long motion (16 s) with a prediction time horizon up to 6 s; in simulation, reduced safety rated monitored stops, increasing task efficiency | Safety: Recognize unmodeled motion and incremental planners |
| V. Tlach et al. [55] | 2018 | Industrial robot | Admittance | Hand-guiding/Safety rated Monitored Stop | Assembly | Productivity: Design of collaborative tasks in an application | The method is flexible to the type of product | Productivity: Improve methods for recognizing objects |
| S. Heydaryan et al. [35] | 2018 | KUKA LBR iiwa 14 R820 | Vision/admittance | Hand-guiding/Safety rated Monitored Stop | Assembly | Safety: Task allocation to ensure safety of the operator, increase productivity by increasing ergonomics | Assembly time of 203 s in SMS, but the robot obstructs the access to some screws; proposed a hand-guided solution (210 s) | N/A |
| G. Michalos et al. [36] | 2018 | Industrial robot | Admittance | Hand-guiding | Assembly | Safety: Implementation of a robotic system for HRC assembly | Development of HRC assembly cell with high payload industrial robots and human operators. | HRI: Improve human immersion in the cell. Integrate all the sensing and interaction equipment |
| V. Gopinath et al. [61] | 2018 | Industrial robot | AR system | | Assembly | Safety: Development of a collaborative assembly cell with large industrial robots | Development of two work stations | N/A |
| A. Blaga et al. [44] | 2018 | Rethink Baxter | Vision and audio recognition | Power and Force limiting | Assembly | Productivity: Improve the possibilities of the integration of Augmented Reality in collaborative tasks to shorten lead times | AR and HRC were integrated into a unitary system, meant to ease a worker’s daily tasks regarding the visualization of the next possible assembly step | HRI: Using object recognition combined with 3D printing, along with the latest HMD devices |

References

- Barbazza, L.; Faccio, M.; Oscari, F.; Rosati, G. Agility in assembly systems: A comparison model. Assem. Autom. 2017, 37, 411–421. [Google Scholar] [CrossRef]

- Colgate, J.E.; Edward, J.; Peshkin, M.A.; Wannasuphoprasit, W. Cobots: Robots for Collaboration with Human Operators. In Proceedings of the 1996 ASME International Mechanical Engineering Congress and Exposition, Atlanta, GA, USA, 17–22 November 1996; pp. 433–439. [Google Scholar]

- Guerin, K.R.; Lea, C.; Paxton, C.; Hager, G.D. A framework for end-user instruction of a robot assistant for manufacturing. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 6167–6174. [Google Scholar]

- Peshkin, M.A.; Colgate, J.E.; Wannasuphoprasit, W.; Moore, C.A.; Gillespie, R.B.; Akella, P. Cobot architecture. IEEE Trans. Robot. Autom. 2001, 17, 377–390. [Google Scholar] [CrossRef]

- DLR—Institute of Robotics and Mechatronics. History of the DLR LWR. Available online: https://www.dlr.de/rm/en/desktopdefault.aspx/tabid-12464/21732_read-44586/ (accessed on 30 November 2019).

- Universal Robots. Low Cost and Easy Programming Made the UR5 a Winner. Available online: https://www.universal-robots.com/case-stories/linatex/ (accessed on 30 November 2019).

- Müller, R.; Vette, M.; Geenen, A. Skill-based dynamic task allocation in Human-Robot-Cooperation with the example of welding application. Procedia Manuf. 2017, 11, 13–21. [Google Scholar] [CrossRef]

- Wang, L.; Gao, R.; Váncza, J.; Krüger, J.; Wang, X.V.; Makris, S.; Chryssolouris, G. Symbiotic human-robot collaborative assembly. CIRP Ann. 2019, 68, 701–726. [Google Scholar] [CrossRef]

- Müller, R.; Vette, M.; Mailahn, O. Process-oriented task assignment for assembly processes with human-robot interaction. Procedia CIRP 2016, 44, 210–215. [Google Scholar] [CrossRef]

- Wang, X.V.; Kemény, Z.; Váncza, J.; Wang, L. Human–robot collaborative assembly in cyber-physical production: Classification framework and implementation. CIRP Ann. 2017, 66, 5–8. [Google Scholar] [CrossRef]

- Krüger, J.; Lien, T.K.; Verl, A. Cooperation of human and machines in assembly lines. CIRP Ann. 2009, 58, 628–646. [Google Scholar]

- Gaskill, S.; Went, S. Safety issues in modern applications of robots. Reliab. Eng. Syst. Saf. 1996, 53, 301–307. [Google Scholar] [CrossRef]

- Michalos, G.; Makris, S.; Tsarouchi, P.; Guasch, T.; Kontovrakis, D.; Chryssolouris, G. Design considerations for safe human-robot collaborative workplaces. Procedia CIrP 2015, 37, 248–253. [Google Scholar] [CrossRef]

- Gravel, D.P.; Newman, W.S. Flexible robotic assembly efforts at Ford Motor Company. In Proceeding of the 2001 IEEE International Symposium on Intelligent Control (ISIC’01) (Cat. No. 01CH37206), Mexico City, Mexico, 5–7 September 2001; pp. 173–182. [Google Scholar]

- Zhu, Z.; Hu, H. Robot learning from demonstration in robotic assembly: A survey. Robotics 2018, 7, 17. [Google Scholar]

- Fechter, M.; Foith-Förster, P.; Pfeiffer, M.S.; Bauernhansl, T. Axiomatic design approach for human-robot collaboration in flexibly linked assembly layouts. Procedia CIRP 2016, 50, 629–634. [Google Scholar] [CrossRef]

- Faccio, M.; Bottin, M.; Rosati, G. Collaborative and traditional robotic assembly: A comparison model. Int. J. Adv. Manuf. Technol. 2019, 102, 1355–1372. [Google Scholar] [CrossRef]

- Edmondson, N.; Redford, A. Generic flexible assembly system design. Assem. Autom. 2002, 22, 139–152. [Google Scholar] [CrossRef]

- Battini, D.; Faccio, M.; Persona, A.; Sgarbossa, F. New methodological framework to improve productivity and ergonomics in assembly system design. Int. J. Ind. Ergon. 2011, 41, 30–42. [Google Scholar] [CrossRef]

- Sawodny, O.; Aschemann, H.; Lahres, S. An automated gantry crane as a large workspace robot. Control Eng. Pract. 2002, 10, 1323–1338. [Google Scholar] [CrossRef]

- Krüger, J.; Bernhardt, R.; Surdilovic, D.; Spur, G. Intelligent assist systems for flexible assembly. CIRP Ann. 2006, 55, 29–32. [Google Scholar] [CrossRef]

- Rosati, G.; Faccio, M.; Carli, A.; Rossi, A. Fully flexible assembly systems (F-FAS): A new concept in flexible automation. Assem. Autom. 2013, 33, 8–21. [Google Scholar] [CrossRef]

- FANUC Italia, S.r.l. M-2000—The Strongest Heavy Duty Industrial Robot in the Marker. Available online: https://www.fanuc.eu/it/en/robots/robot-filter-page/m-2000-series (accessed on 30 November 2019).

- Hägele, M.; Schaaf, W.; Helms, E. Robot assistants at manual workplaces: Effective co-operation and safety aspects. In Proceedings of the 33rd ISR (International Symposium on Robotics), Stockholm, Sweden, 7–11 October 2002; Volume 7. [Google Scholar]

- Ding, H.; Heyn, J.; Matthias, B.; Staab, H. Structured collaborative behavior of industrial robots in mixed human-robot environments. In Proceedings of the 2013 IEEE International Conference on Automation Science and Engineering (CASE), Madison, WI, USA, 17–20 August 2013; pp. 1101–1106. [Google Scholar]

- Ding, H.; Schipper, M.; Matthias, B. Collaborative behavior design of industrial robots for multiple human-robot collaboration. In Proceedings of the IEEE ISR 2013, Seoul, Korea, 24–26 October 2013; pp. 1–6. [Google Scholar]

- Zanchettin, A.M.; Rocco, P. Path-consistent safety in mixed human-robot collaborative manufacturing environments. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 1131–1136. [Google Scholar]

- Whitsell, B.; Artemiadis, P. Physical human–robot interaction (pHRI) in 6 DOF with asymmetric cooperation. IEEE Access 2017, 5, 10834–10845. [Google Scholar] [CrossRef]

- Rahman, S.M.; Wang, Y.; Walker, I.D.; Mears, L.; Pak, R.; Remy, S. Trust-based compliant robot-human handovers of payloads in collaborative assembly in flexible manufacturing. In Proceedings of the 2016 IEEE International Conference on Automation Science and Engineering (CASE), Fort Worth, TX, USA, 21–25 August 2016; pp. 355–360. [Google Scholar]

- Safeea, M.; Bearee, R.; Neto, P. End-effector precise hand-guiding for collaborative robots. In Iberian Robotics Conference; Springer: Berlin, Germany, 2017; pp. 595–605. [Google Scholar]

- Mendes, N.; Safeea, M.; Neto, P. Flexible programming and orchestration of collaborative robotic manufacturing systems. In Proceedings of the 2018 IEEE 16th International Conference on Industrial Informatics (INDIN), Porto, Portugal, 18–20 July 2018; pp. 913–918. [Google Scholar]

- Hawkins, K.P.; Bansal, S.; Vo, N.N.; Bobick, A.F. Anticipating human actions for collaboration in the presence of task and sensor uncertainty. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 2215–2222. [Google Scholar]

- Wojtynek, M.; Oestreich, H.; Beyer, O.; Wrede, S. Collaborative and robot-based plug & produce for rapid reconfiguration of modular production systems. In Proceedings of the 2017 IEEE/SICE International Symposium on System Integration (SII), Taipei, Taiwan, 11–14 December 2017; pp. 1067–1073. [Google Scholar]

- Pang, G.; Deng, J.; Wang, F.; Zhang, J.; Pang, Z.; Yang, G. Development of flexible robot skin for safe and natural human–robot collaboration. Micromachines 2018, 9, 576. [Google Scholar] [CrossRef]

- Heydaryan, S.; Suaza Bedolla, J.; Belingardi, G. Safety design and development of a human-robot collaboration assembly process in the automotive industry. Appl. Sci. 2018, 8, 344. [Google Scholar] [CrossRef]

- Michalos, G.; Kousi, N.; Karagiannis, P.; Gkournelos, C.; Dimoulas, K.; Koukas, S.; Mparis, K.; Papavasileiou, A.; Makris, S. Seamless human robot collaborative assembly—An automotive case study. Mechatronics 2018, 55, 194–211. [Google Scholar] [CrossRef]

- Tan, J.T.C.; Zhang, Y.; Duan, F.; Watanabe, K.; Kato, R.; Arai, T. Human factors studies in information support development for human-robot collaborative cellular manufacturing system. In Proceedings of the RO-MAN 2009—The 18th IEEE International Symposium on Robot and Human Interactive Communication, Toyama, Japan, 27 September–2 October 2009; pp. 334–339. [Google Scholar]

- Arai, T.; Duan, F.; Kato, R.; Tan, J.T.C.; Fujita, M.; Morioka, M.; Sakakibara, S. A new cell production assembly system with twin manipulators on mobile base. In Proceedings of the 2009 IEEE International Symposium on Assembly and Manufacturing, Suwon, Korea, 17–20 November 2009; pp. 149–154. [Google Scholar]

- Tan, J.T.C.; Duan, F.; Zhang, Y.; Watanabe, K.; Kato, R.; Arai, T. Human-robot collaboration in cellular manufacturing: Design and development. In Proceedings of the 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems, Saint Louis, MO, USA, 10–15 October 2009; pp. 29–34. [Google Scholar]

- Lenz, C.; Rickert, M.; Panin, G.; Knoll, A. Constraint task-based control in industrial settings. In Proceedings of the 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems, Saint Louis, MO, USA, 10–15 October 2009; pp. 3058–3063. [Google Scholar]

- Ende, T.; Haddadin, S.; Parusel, S.; Wüsthoff, T.; Hassenzahl, M.; Albu-Schäffer, A. A human-centered approach to robot gesture based communication within collaborative working processes. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; pp. 3367–3374. [Google Scholar]

- Darvish, K.; Bruno, B.; Simetti, E.; Mastrogiovanni, F.; Casalino, G. Interleaved Online Task Planning, Simulation, Task Allocation and Motion Control for Flexible Human-Robot Cooperation. In Proceedings of the 2018 27th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Nanjing, China, 27–31 August 2018; pp. 58–65. [Google Scholar]

- Zanchettin, A.; Casalino, A.; Piroddi, L.; Rocco, P. Prediction of human activity patterns for human-robot collaborative assembly tasks. IEEE Trans. Ind. Inf. 2018, 15, 3934–3942. [Google Scholar] [CrossRef]

- Blaga, A.; Tamas, L. Augmented Reality for Digital Manufacturing. In Proceedings of the 2018 26th Mediterranean Conference on Control and Automation (MED), Akko, Israel, 1–4 July 2018; pp. 173–178. [Google Scholar]

- Wang, W.; Li, R.; Diekel, Z.M.; Chen, Y.; Zhang, Z.; Jia, Y. Controlling Object Hand-Over in Human–Robot Collaboration Via Natural Wearable Sensing. IEEE Trans. Human-Mach. Syst. 2018, 49, 59–71. [Google Scholar] [CrossRef]

- Walker, I.D.; Mears, L.; Mizanoor, R.S.; Pak, R.; Remy, S.; Wang, Y. Robot-human handovers based on trust. In Proceedings of the 2015 IEEE Second International Conference on Mathematics and Computers in Sciences and in Industry (MCSI), Sliema, Malta, 17 August 2015; pp. 119–124. [Google Scholar]

- Sadrfaridpour, B.; Wang, Y. Collaborative assembly in hybrid manufacturing cells: An integrated framework for human–robot interaction. IEEE Trans. Autom. Sci. Eng. 2017, 15, 1178–1192. [Google Scholar] [CrossRef]

- Rozo, L.; Calinon, S.; Caldwell, D.G.; Jimenez, P.; Torras, C. Learning physical collaborative robot behaviors from human demonstrations. IEEE Trans. Robot. 2016, 32, 513–527. [Google Scholar] [CrossRef]

- Rahman, S.M.; Liao, Z.; Jiang, L.; Wang, Y. A regret-based autonomy allocation scheme for human-robot shared vision systems in collaborative assembly in manufacturing. In Proceedings of the 2016 IEEE International Conference on Automation Science and Engineering (CASE), Fort Worth, TX, USA, 21–25 August 2016; pp. 897–902. [Google Scholar]

- Gustavsson, P.; Syberfeldt, A.; Brewster, R.; Wang, L. Human-robot collaboration demonstrator combining speech recognition and haptic control. Procedia CIRP 2017, 63, 396–401. [Google Scholar] [CrossRef]

- Fakhruldeen, H.; Maheshwari, P.; Lenz, A.; Dailami, F.; Pipe, A.G. Human robot cooperation planner using plans embedded in objects. IFAC-PapersOnLine 2016, 49, 668–674. [Google Scholar] [CrossRef]

- Koch, P.J.; van Amstel, M.K.; Dȩbska, P.; Thormann, M.A.; Tetzlaff, A.J.; Bøgh, S.; Chrysostomou, D. A skill-based robot co-worker for industrial maintenance tasks. Procedia Manuf. 2017, 11, 83–90. [Google Scholar] [CrossRef]

- Unhelkar, V.V.; Lasota, P.A.; Tyroller, Q.; Buhai, R.D.; Marceau, L.; Deml, B.; Shah, J.A. Human-aware robotic assistant for collaborative assembly: Integrating human motion prediction with planning in time. IEEE Robot. Autom. Lett. 2018, 3, 2394–2401. [Google Scholar] [CrossRef]

- Hamabe, T.; Goto, H.; Miura, J. A programming by demonstration system for human-robot collaborative assembly tasks. In Proceedings of the 2015 IEEE International Conference on Robotics and Biomimetics (ROBIO), Zhuhai, China, 6–9 December 2015; pp. 1195–1201.

- Tlach, V.; Kuric, I.; Zajačko, I.; Kumičáková, D.; Rengevič, A. The design of method intended for implementation of collaborative assembly tasks. Adv. Sci. Technol. Res. J. 2018, 12, 244–250.

- El Makrini, I.; Merckaert, K.; Lefeber, D.; Vanderborght, B. Design of a collaborative architecture for human-robot assembly tasks. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 1624–1629.

- MarketsandMarkets™ Research Private Ltd. Collaborative Robots Market by Payload Capacity (Up to 5 kg, Up to 10 kg, Above 10 kg), Industry (Automotive, Electronics, Metals & Machining, Plastics & Polymer, Food & Agriculture, Healthcare), Application, and Geography—Global Forecast to 2023. Available online: https://www.marketsandmarkets.com/Market-Reports/collaborative-robot-market-194541294.html (accessed on 30 November 2019).

- International Federation of Robotics (IFR). Robots and the Workplace of the Future. 2018. Available online: https://ifr.org/papers (accessed on 30 November 2019).

- Barclays Investment Bank. Technology’s Mixed Blessing. 2017. Available online: https://www.investmentbank.barclays.com/our-insights/technologys-mixed-blessing.html (accessed on 30 November 2019).

- Tobe, F. Why Co-Bots Will Be a Huge Innovation and Growth Driver for Robotics Industry. 2015. Available online: https://spectrum.ieee.org/automaton/robotics/industrial-robots/collaborative-robots-innovation-growth-driver (accessed on 30 November 2019).

- Gopinath, V.; Ore, F.; Grahn, S.; Johansen, K. Safety-Focussed Design of Collaborative Assembly Station with Large Industrial Robots. Procedia Manuf. 2018, 25, 503–510.

- Zanchettin, A.M.; Ceriani, N.M.; Rocco, P.; Ding, H.; Matthias, B. Safety in human-robot collaborative manufacturing environments: Metrics and control. IEEE Trans. Autom. Sci. Eng. 2015, 13, 882–893.

- Cherubini, A.; Passama, R.; Crosnier, A.; Lasnier, A.; Fraisse, P. Collaborative manufacturing with physical human–robot interaction. Robot. Comput. Integr. Manuf. 2016, 40, 1–13.

- Makris, S.; Karagiannis, P.; Koukas, S.; Matthaiakis, A.S. Augmented reality system for operator support in human–robot collaborative assembly. CIRP Ann. 2016, 65, 61–64.

- Bös, J.; Wahrburg, A.; Listmann, K.D. Iteratively Learned and Temporally Scaled Force Control with application to robotic assembly in unstructured environments. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 3000–3007.

- Haage, M.; Piperagkas, G.; Papadopoulos, C.; Mariolis, I.; Malec, J.; Bekiroglu, Y.; Hedelind, M.; Tzovaras, D. Teaching assembly by demonstration using advanced human robot interaction and a knowledge integration framework. Procedia Manuf. 2017, 11, 164–173.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).