Abstract

Users of mobile applications consider quality certification especially important, particularly in an e-health context. However, if an app is certified, will user satisfaction be greater? This paper is intended to help answer that question. With user satisfaction taken as a determinant of service quality, the purpose of the paper is to understand the extent to which the certification of mHealth apps influences user experience, measured through online reviews and downloads. An empirical study was carried out with 50 mHealth apps (21 certified, 29 non-certified) selected by convenience sampling. Mahalanobis distance matching was used to make the two groups comparable. A comparative content analysis was performed on 6188 user comments and on app downloads. Non-parametric tests were preferred because the variables analyzed had a categorical structure. A log-linear model and advanced contingency tables were used to examine the relationships among more than two variables. The results show differences between the two samples (certified and non-certified mHealth apps). However, quality certification labels as a guarantee of the quality of apps do not have a modulating effect on the relationship between online reviews and downloads. The model showed a statistically significant partial association between “certification” and “online user reviews” (Z = 2.174; sig = 0.042) and between “download” and “online user reviews” (Z = 2.387; sig = 0.017).

1. Introduction

The exponential growth of mobile health (“mHealth”) applications (“apps”) in recent years has not been accompanied by mandatory control or regulation processes to mitigate risk or to guarantee quality [1,2,3,4]. These apps can collect a variety of personal data and, because they are designed to influence health, it is important to ensure their safety, validity, reliability, privacy, and security. In most countries, medical device regulation applies only to a subset of high-risk mHealth apps that have well-defined medical purposes. However, most mHealth apps available on the market target a wide range of health-related issues (monitoring, control, medical advice, and so on), while still being considered non-medical. In addition, some apps have been evaluated based on specific regulation procedures, validation or accreditation systems, or standards. Initiatives by the United States’ Food and Drug Administration, the American Medicines Agency, and the European Union are clear examples of this. These bodies have already begun to regulate and set minimum quality criteria for mHealth apps. These processes allow the quality of mHealth apps to be measured. Compliance with certain requirements (usability, privacy, security, safety, and so on) helps to guarantee proper use and integration into medical practice; otherwise, because they collect a variety of personal data, the apps may be indirectly responsible for misinformed self-care [5]. Through quality assurance, the fulfilment of the purpose of the apps, such as monitoring, goal-setting and schedule management, gathering health-related information, and managing health conditions, can be guaranteed [6]. This helps to satisfy the needs of users (e.g., patients, health professionals, or the general public), who in many cases do not possess media and technology literacy skills.

For many people, the certification of a product or service is synonymous with quality, and health apps are no exception. Certification should therefore result in a positive evaluation by users based on their personal experiences. However, even when an app is included in a repository or catalogue that guarantees compliance with different requirements widely recognised in the literature [7], and is tested by specific accreditation programs for accessibility, usability, reliability, privacy, security, and so on, if it does not meet users’ needs, their perception of the app’s quality will differ. Consumer satisfaction indicates the degree to which products and services meet or exceed customer expectations [8] and is the result of service quality evaluation [9]. In the present research, we consider consumer satisfaction as the overall affective response based on the use of apps and/or related attributes. This can change after each use of the apps. The users of the app constitute the key element; they are the ultimate “testers” in evaluating the service offered by the devices.

The purpose of this study is to evaluate quality certification and its impact on user experience in mHealth apps. We explore the extent to which user experience differs according to whether an mHealth app is certified or not. User satisfaction is the foundation of quality. Therefore, it is expected that the user experience variables (online user reviews and downloads) will discriminate certified apps from non-certified ones.

The paper is organised as follows. First, a literature review of quality certification and online user comments is conducted. The methodology is then described, and the results presented and discussed. The conclusion includes some of the paper’s limitations, and finally a number of suggestions are made for relevant future research.

2. Theoretical Background and Related Work

Two areas of research are particularly relevant to the current study. The first covers the certification programs or regulation systems that verify app quality. The second is online user review evaluation.

2.1. Apps and Certified Quality

The term “quality” itself hinders the development of any work in this area. The subjectivity of the concept, and therefore the lack of a universally accepted definition, may be the main reason for this obstacle. In addition, the features or attributes that have traditionally been used to evaluate products and services are either insufficient or inappropriate in today’s digital environment. The products and services to be evaluated are different in the health field. Here, the technology enables more information to be gathered, and at less cost. Furthermore, the process takes place in real time.

This situation perhaps makes it difficult to establish a system or standard that allows the regulation of a verification process that could guarantee accessibility, security, and so on. However, quality criteria and measurements have recently been introduced, and these can be used by users and academics to evaluate the quality of apps.

Although there is still no consensus on the matter, the United States Food and Drug Administration (FDA) and the American Medicines Agency launched a guide with the objective of regulating and setting minimum quality criteria for health apps, proposing a series of recommendations that give guidelines on which apps would be on track and which ones would not. Likewise, it indicates that the mHealth apps that function as medical devices and that therefore might “put the patient at risk” will be regulated by the FDA. For its part, the European Union has created a directory of mHealth apps to “support patients to find useful and reliable apps.”

In addition, alternatives have been introduced to guarantee basic quality and safety standards for mHealth applications. In the United States, for example, a system similar to the one used to accredit and certify laboratory tests is laid out in the Clinical Laboratory Improvement Amendment [10]. In Europe, there are companies such as the Société Générale de Surveillance (SGS) that are dedicated to testing and verifying products, including medical devices. These certify health software which is considered safe for use by applying the new health software products security brand [11]. In addition, in the United Kingdom, the National Health Service (NHS) Applications Library helped users find reliable health and wellness applications that had been certified as clinically safe and secure. In fact, several studies examining mHealth apps have used NHS certification as a criterion for inclusion, and recommended users access NHS-certified applications [3,12,13,14]. However, the library was shut down in 2015 due to security concerns [15].

A number of other mHealth app certification initiatives have been taken to integrate mobile health in the medical field with a view to guaranteeing safety and quality. One was the Health App Certification Program (HACP seal). This was developed by Happtique, a mobile health solutions company focused on applications targeting the United States market. It was suspended in December 2013, after the CEO of a health IT firm published a blog post exposing security issues with two apps Happtique had certified as secure. However, several papers have used Happtique-certified apps, and have recommended the use of Happtique-certified applications [2,16,17].

Users in six different countries (Estonia, Germany, Portugal, Slovenia, the United Kingdom, and Spain) have the option of using accredited health apps from either private organisations or public entities [18]. Amongst them, the United Kingdom has a more formalised process developed by the government. In Spain, there are two certification models, Appsalut and Appsaludable, both developed by public health agencies (in the Catalonian and Andalusian regions, respectively), that judge the quality and safety of health apps. These are free and available for all public or private apps, Spanish or otherwise. The importance of these badges justifies the selection criteria of apps analyzed in several studies [19,20,21,22,23,24,25]. This is evidence of the interest in security seals, which allow people to place more confidence in new technological solutions. Llorens-Vernet and Miró (2020) provide a standard for mHealth apps based on the information that is available in published studies, guidelines, and standards [4].

Despite all these initiatives, greater transparency and knowledge of the benefits and limitations of using these digital tools are necessary. Table 1 summarises some of the initiatives discussed above.

Table 1.

Accreditation initiatives for mHealth apps.

All initiatives have the same limitations, in that accreditation should ensure compliance with industry standard levels of encryption and authentication. Besides, the regulators should consider establishing standards for accreditation processes and be ready to intervene if accreditation programs cannot manage risks effectively. A general agreement is necessary to regulate the use of health applications with full guarantee and quality [26].

2.2. Online User Reviews

The emergence of new technologies enables the collection of large amounts of data about app users. As an alternative to conventional methods, studies from different fields have shown that user-generated content (including online user reviews) can be used as an information source to understand customer preferences and demands [27]. Usually, online consumer reviews are provided by customers who have had direct (and usually recent) experience with a product or service; they are reported in multiple forms including online ratings (e.g., number of stars) and online reviews (e.g., personal opinion in text format) [28,29,30].

The data are used for a variety of purposes. The form of interaction with the app, the user’s profile and behaviour, activity, downloads, location, and so on, are amongst the variables most commonly analyzed in the evaluation of apps. It may be argued that this type of analysis is essential if apps are to be improved. In addition, mobile app reviews are valuable repositories of information, because they are written by the users themselves. Their analysis can therefore reveal possible defects and aspects that might be improved. An app can also be classified according to the positive or negative connotations expressed in the language of its reviews. Hence, the evaluation and comparison of mHealth apps based on the opinions of their users has become a research objective, with the results used for different purposes. User reviews contain a wealth of information about user experience and expectations and may provide valuable information for making decisions about applications and their features [31,32]. For research, the results help to increase the understanding of how customer expectations are formed in online settings [33].

A great number of papers have been published on this topic, and a range of approaches have been taken. Specialist journals have shown their interest by presenting articles that examine qualitative feedback based on the analysis of user reviews [34,35,36,37]. Several researchers have studied the utility for users of mHealth apps aimed at certain diseases. These address unmet needs and determine users’ expectations [38,39,40]. A number of researchers have examined the perception of users of mHealth apps [1,41,42,43]. All these studies further prove the degree of interest in the subject.

On the other hand, today, the integration of the public in research and development in health care is considered essential for the advancement of innovation. This is a paradigmatic shift away from the traditional assumption that solely health care professionals are able to devise, develop, and disseminate novel concepts and solutions in health care [44]. The active participation of other groups is essential.

Thus, app reviews given by users usually contain active, heterogeneous, and real-life user experience of a mobile app, which can be useful for improving its quality [32,45]. The participation of users through online reviews yields very valuable information about the application: what is wrong, what could be improved, what is missing, what is superfluous, and so on. Developers can take advantage of this feedback to prioritise the new features to be developed according to what their audience expects. Furthermore, it has been shown that platforms, online communities, and applications are an important intermediary for patient empowerment [44,46]. All of this demonstrates the importance of open innovation in the analysis of mobile health applications. User reviews and the improvements that follow from them are a type of user-led innovation and thus an important source of innovation [47]. Open innovation models bring added value by making use of technical potential and ensuring the growth of economic value [48,49,50,51]. This could benefit healthcare delivery and improve individual well-being and the overall quality of life of the population.

In general, stakeholders need to be involved in the development and implementation of eHealth via co-creation processes, and design should be mindful of vulnerable groups and eHealth illiteracy [52].

Another related area of research is the relationship between user online reviews and downloads. Some authors have studied the connection between app downloads and users’ perception of quality [31]. There is considerable disparity here; some studies claim there is a direct link between these variables while others do not. For example, Chen et al. (2014), Duan et al. (2008) and Liu (2006) argue that user ratings do not influence choice, and therefore downloads [53,54,55]. Chen and Liu (2011) demonstrate that apps at the top of the download rankings are not the ones with the best user ratings [56].

However, other authors, such as Harman et al. (2012) [57], who analyzed more than 30,000 Blackberry apps, have identified a strong correlation between app ratings and the number of downloads. Chevalier and Mayzlin (2006) show this to be the case [58]. It should be kept in mind that, although many users trust other users’ ratings to decide whether to use a particular app, sometimes misleading comments and false reviews can lead to poor decisions. Automated systems exist to identify these, but their use is limited and they are not fully developed. Computer models such as AR-Miner perform exhaustive analyses of user reviews to provide app developers with clear and concise information about user opinions so they can subsequently improve the product [53]. The variety of opinions of a particular app may sometimes be the consequence of a failure to analyze user reviews uniformly across studies [59].

Attempts to understand the relationship between online reviews and downloads have not been made within a regulatory framework. The present study adds to the existing literature by providing a new view of the quality of apps that is linked directly to the user experience, which is mediated through online reviews and downloads [60]. The following objectives are proposed in order to achieve the general purpose of this study: (1) verify whether there are any significant differences between certified and non-certified apps; and (2) determine whether the apps’ quality label affects the user experience, according to the type of application (certified and non-certified).

Under these considerations the following hypotheses are proposed:

Hypothesis 1a (H1a).

There are differences in online user reviews between certified and non-certified apps.

Hypothesis 1b (H1b).

There are user online review differences between apps with respect to variables (user, developer, stars rating and categories).

Hypothesis 1c (H1c).

There are differences in downloads between certified and non-certified apps.

Hypothesis 2a (H2a).

Online user reviews discriminate certified apps from non-certified ones.

Hypothesis 2b (H2b).

Downloads discriminate certified apps from non-certified ones.

Hypothesis 2c (H2c).

The quality labels affect the relationship between downloads and online user feedback.

3. Materials and Methods

3.1. Sample

First, we compiled a list of accredited and non-accredited apps. All AppSaludable-accredited apps were selected for inclusion in the study. We also chose a set of unaccredited Google Play applications with significant recognition by users; each of these had to meet two criteria: classification in the medical category, and inclusion in at least two of the three existing rankings (top apps, top grossing, or trending). The end result was a set of 55 apps (22 certified and 33 non-certified). To reduce selection bias in the sample of non-certified apps and to ensure an appropriate comparison, a matching technique based on Mahalanobis distances was used [61]. Although the sample is not random, the final sample retains 50 apps with comparable recognition; only 5 of the original apps were left out.
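The matching step can be illustrated with a minimal sketch. The paper does not report which covariates or which matching rule were used, so everything below is an assumption made for illustration: two hypothetical covariates per app, a pooled covariance matrix, and a simple caliper rule that keeps any app with at least one counterpart in the other group within a given Mahalanobis distance. The sketch is written in Python with the standard library only; the study itself was carried out in R.

```python
from statistics import mean

def covariance_2d(points):
    """Sample covariance of (x, y) pairs, returned as (s_xx, s_xy, s_yy)."""
    mx = mean(p[0] for p in points)
    my = mean(p[1] for p in points)
    n = len(points) - 1
    sxx = sum((p[0] - mx) ** 2 for p in points) / n
    syy = sum((p[1] - my) ** 2 for p in points) / n
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / n
    return sxx, sxy, syy

def mahalanobis(a, b, cov):
    """Mahalanobis distance between two 2-D points for a 2x2 covariance."""
    sxx, sxy, syy = cov
    det = sxx * syy - sxy ** 2
    dx, dy = a[0] - b[0], a[1] - b[1]
    # d^2 = diff^T * inverse(cov) * diff, written out for the 2x2 case
    d2 = (syy * dx * dx - 2 * sxy * dx * dy + sxx * dy * dy) / det
    return d2 ** 0.5

def match_within_caliper(certified, non_certified, caliper):
    """Keep apps that have at least one counterpart within the caliper.

    Both arguments map an app name to its (covariate_1, covariate_2) pair.
    The caliper rule is an assumption, not the procedure used in the paper.
    """
    cov = covariance_2d(list(certified.values()) + list(non_certified.values()))
    kept_cert, kept_non = set(), set()
    for c_name, c_vec in certified.items():
        for n_name, n_vec in non_certified.items():
            if mahalanobis(c_vec, n_vec, cov) <= caliper:
                kept_cert.add(c_name)
                kept_non.add(n_name)
    return kept_cert, kept_non

# Made-up covariate values, for illustration only
certified_apps = {"c1": (1.0, 1.0), "c2": (2.0, 2.0)}
other_apps = {"n1": (1.0, 1.5), "n2": (9.0, 0.0)}
kept_certified, kept_others = match_within_caliper(certified_apps, other_apps, 1.8)
```

With these made-up values, the app `n2` lies far from both certified apps and is dropped, which mirrors how a small number of apps can be excluded from an initial sample.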

The AppSaludable Quality Seal is the first Spanish seal that recognises quality and safety of health apps. It is free and open to all public or private apps, both Spanish and from other countries.

Apps that pass the validation process of the Andalusian Agency for Healthcare Quality (ACSA) are awarded the AppSaludable Quality Seal.

The certification process is mainly based on a self-assessment of the app in accordance with the recommendations included in the guide, and on an assessment carried out by a committee of the Agency’s experts in order to identify possible improvements. Once the seal is awarded, the app becomes part of a list of mobile health apps with recognised safety and quality. The seal remains in force while the app is active or until its content and functions change. Those responsible for the app commit themselves to a review of the process by ACSA when the app undergoes significant changes.

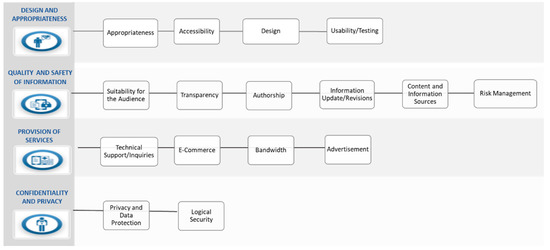

The quality seal is based on 31 recommendations, grouped into four broad categories containing 16 criteria and published in the Guide for Recommendations on the Design, Use, and Assessment of Health Apps [62] (see Figure 1).

Figure 1.

Recommendations on design, use and assessment of health apps. Source: Andalusian Agency for Healthcare Quality.

Appendix A contains the complete list of recommendations for the design, use and evaluation of health applications.

This certification program is part of the Safety and Quality Strategy in Mobile Health Apps (ACSA). It is a dynamic and integrated process that relies on the collaboration of those users (developers, professionals, patients and the general public) who want to share suggestions or ideas to contribute to this project, through the open innovation project “Collaborate With Us”.

3.2. Data Analysis and Collection

To analyze the apps, we collected descriptive information (e.g., price, platform, certification date, and so on), as well as technical details (e.g., developer, sharing capabilities, and so on). Information was also gathered on the target user group and other aspects of the apps that may have been of interest. These data domains could be adapted to include or exclude specific content areas as needed. Next, web scraping was carried out to convert the unstructured web data into a structured format that could be easily accessed and used. For this study we used the R language to scrape the reviews [63]. We used the rvest and RSelenium packages in R and scraped the following data from each app: app name; date; user’s name; stars; review; likes; reply. All comments recorded for the 50 apps were collected from Google Play. The total number amounted to 6188 user reviews, and all were analyzed. The strengths of researching customer textual reviews are that they can show customer consumption experiences, highlight the product and service attributes customers care about, and provide customers’ perceptions in a detailed way through the open-structure form [64].

Before we performed the text mining, we cleaned all the text to make the results more accurate [65,66]. Our goal at this stage was to create independent terms (i.e., words) from the data file before counting their frequency. To achieve this, we used the tm and SnowballC packages, which support all text mining functions. We removed websites, emojis, punctuation, numbers, whitespace, and stop words from the data set. After the cleaning process, we were left with 13,881 independent terms across the online reviews. These were stored in a matrix (called a term matrix) that logs the number of times each term appears in our clean data set. We used sentiment analysis for data labelling. This was carried out automatically in R using a set of the most frequently occurring positive words to extract the meaning from the reviews and to define their polarity. Words were weighted according to their frequency of occurrence: the more often a word appeared in the reviews overall, the greater its weight, and the term was then considered a strong candidate for inclusion in the content analysis [67]. The set of positive words selected amounted to 29 terms, and we rated each review according to the presence or absence of positive words. We rated a total of 2509 comments as positive.
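The cleaning and labelling steps described above can be sketched as follows. This is only an illustration in Python using the standard library, not the R code used in the study (which relied on tm and SnowballC). The stop-word list and the positive-word lexicon are hypothetical placeholders; the paper's 29 positive terms are not listed. Stemming is omitted, and the sketch keeps only unaccented Latin letters.

```python
import re
from collections import Counter

# Hypothetical placeholders: the study's actual lists are not given in the text
STOP_WORDS = {"the", "a", "an", "is", "it", "to", "and", "i", "this", "of"}
POSITIVE_WORDS = {"excellent", "easy", "intuitive", "recommend", "useful"}

def clean(review):
    """Lower-case a review, strip web addresses and non-letters, drop stop words."""
    text = review.lower()
    text = re.sub(r"https?://\S+|www\.\S+", " ", text)  # remove web addresses
    text = re.sub(r"[^a-z\s]", " ", text)  # remove emojis, punctuation, numbers
    return [word for word in text.split() if word not in STOP_WORDS]

def term_counts(reviews):
    """Count how often each cleaned term occurs across all reviews."""
    counts = Counter()
    for review in reviews:
        counts.update(clean(review))
    return counts

def is_positive(review):
    """Label a review as positive if it contains at least one positive word."""
    return any(word in POSITIVE_WORDS for word in clean(review))
```

A call such as `term_counts(reviews).most_common(30)` would then list the most frequent terms, from which a positive-word lexicon could be selected by hand.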

After that, we created an app quartile ranking based on a ratio, Q. We defined the ratio as the total number of positive words in the app’s positive comments divided by the total number of words in all positive comments. Finally, each app was assigned to a quartile group depending on the value of that ratio. Thus, Q4 contained the apps with the highest values of the ratio, and Q1 those with the lowest.
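As a small illustration of the ranking step, the sketch below computes the ratio from two word counts and assigns a quartile label. The cut-off values are the ones reported alongside Figure 2 in Section 4.2; the function names and the example counts are hypothetical, and the code is Python rather than the R used in the study.

```python
# Upper bounds of each group, as reported with Figure 2 (Section 4.2)
Q_UPPER_BOUNDS = [(0.015, "Q1"), (0.038, "Q2"), (0.114, "Q3"), (0.75, "Q4")]

def q_ratio(positive_words, total_words):
    """Positive words divided by total words; 0.0 when there are no words."""
    return positive_words / total_words if total_words else 0.0

def quartile(ratio):
    """Return the quartile label whose interval contains the ratio."""
    for upper, label in Q_UPPER_BOUNDS:
        if ratio <= upper:
            return label
    raise ValueError("ratio lies above the highest reported bound (0.75)")

# Made-up counts: 12 positive words out of 100 words gives a ratio of 0.12
example_group = quartile(q_ratio(12, 100))
```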

To contextualise the study, descriptive analyses of the variables were carried out using the data obtained. Two-sample t-tests and chi-square tests were used to assess the differences between certified and non-certified apps based on online user reviews and downloads. To check for possible relationships between the variables, non-parametric chi-square tests were carried out.
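For readers less familiar with these tests, the two statistics can be sketched as below. This is a generic textbook formulation in Python (standard library only), assuming a pooled-variance Student's t statistic and a Pearson chi-square test of independence; it is not the code used in the study, and the numbers are made up.

```python
from math import sqrt
from statistics import mean, variance

def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df

def pooled_t(sample_a, sample_b):
    """Student's two-sample t statistic (equal variances assumed) and its df."""
    na, nb = len(sample_a), len(sample_b)
    pooled_var = ((na - 1) * variance(sample_a)
                  + (nb - 1) * variance(sample_b)) / (na + nb - 2)
    t = (mean(sample_a) - mean(sample_b)) / sqrt(pooled_var * (1 / na + 1 / nb))
    return t, na + nb - 2

# Made-up counts (rows: two groups of apps; columns: two review categories)
chi2, chi2_df = chi_square_independence([[10, 20], [20, 10]])
# Made-up samples
t_stat, t_df = pooled_t([1, 2, 3], [2, 3, 4])
```

The resulting statistic is compared with the critical value for the corresponding degrees of freedom (for example, 3.841 for a chi-square test with 1 degree of freedom at the 5% level).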

With regard to categorical data, a log-linear model was applied to study the possible modulating effect of the certification process on the relationship between user reviews and downloads. This model has been suggested in the literature as a way of determining the relationship structures of multi-dimensional contingency tables [68]. The log-linear conditional independence model was chosen to model the association among the three variables because it was the model with the best fit; it applies when one variable (Y) is associated with each of the other two (X and Z), while those two are independent of each other once Y is taken into account. The model (XY, YZ) is expressed as:

$$\ln F_{ijk} = \mu + \lambda_i^{X} + \lambda_j^{Y} + \lambda_k^{Z} + \lambda_{ij}^{XY} + \lambda_{jk}^{YZ}$$

where:

- X → 1, 2, …, i

- Y → 1, 2, …, j

- Z → 1, 2, …, k

- $F_{ijk}$ = expected frequency for the ijk cell in the contingency table

- $\mu$ = constant term

- $\lambda_i^{X}$ = main effect of the X variable

- $\lambda_j^{Y}$ = main effect of the Y variable

- $\lambda_k^{Z}$ = main effect of the Z variable

- $\lambda_{ij}^{XY}$ = second-order interaction effect of variables X and Y

- $\lambda_{jk}^{YZ}$ = second-order interaction effect of variables Y and Z
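For the (XY, YZ) conditional independence model, the fitted cell frequencies have a well-known closed form: the X-by-Y margin times the Y-by-Z margin, divided by the Y margin. The sketch below uses that form to compute fitted counts and the likelihood-ratio statistic G² with J(I−1)(K−1) degrees of freedom. It is an illustration in Python under these textbook assumptions, not the software procedure used in the study, and the counts are made up.

```python
from math import log

def fit_conditional_independence(table):
    """Fit the (XY, YZ) model to counts stored as table[i][j][k] (X=i, Y=j, Z=k).

    Returns the fitted counts, the likelihood-ratio statistic G^2 and its
    degrees of freedom.
    """
    I, J, K = len(table), len(table[0]), len(table[0][0])
    # Two-way margins over Z and over X, and the one-way margin of Y
    n_ij = [[sum(table[i][j]) for j in range(J)] for i in range(I)]
    n_jk = [[sum(table[i][j][k] for i in range(I)) for k in range(K)]
            for j in range(J)]
    n_j = [sum(n_jk[j]) for j in range(J)]
    fitted = [[[n_ij[i][j] * n_jk[j][k] / n_j[j] if n_j[j] else 0.0
                for k in range(K)] for j in range(J)] for i in range(I)]
    g2 = 0.0
    for i in range(I):
        for j in range(J):
            for k in range(K):
                observed = table[i][j][k]
                if observed > 0:  # empty cells contribute nothing to G^2
                    g2 += 2 * observed * log(observed / fitted[i][j][k])
    df = J * (I - 1) * (K - 1)
    return fitted, g2, df

# Made-up 2 x 2 x 2 counts in which X and Z are independent within each Y level
example = [[[2, 4], [1, 1]], [[3, 6], [5, 5]]]
fitted_counts, g_squared, g_df = fit_conditional_independence(example)
```

A small G² relative to its degrees of freedom indicates that the conditional independence model reproduces the observed table well.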

4. Results: Analysis and Discussion

4.1. Sample Characteristics

The study found a variety of mHealth apps, which were classified by type of user, category, developer, and type of communication (bidirectional or unidirectional). Table 2 summarizes the main characteristics of the 50 mHealth apps.

Table 2.

Details of h-apps (n = 50) (frequency).

4.2. Hypotheses Testing

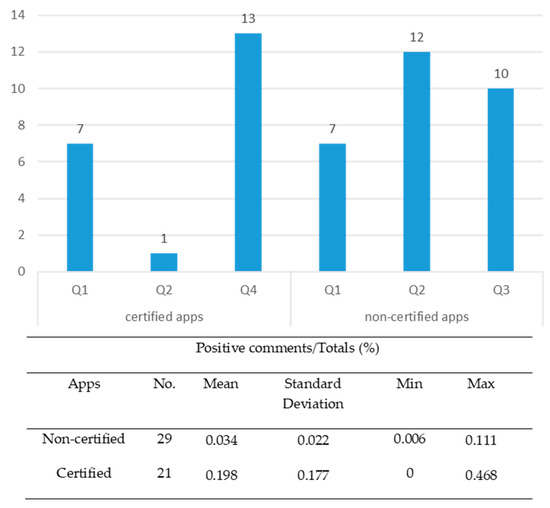

Figure 2 shows the distribution of apps according to the ranking established by user ratings in online reviews (0 ≤ Q1 ≤ 0.015; 0.015 < Q2 ≤ 0.038; 0.038 < Q3 ≤ 0.114; 0.114 < Q4 ≤ 0.75) and descriptive statistical summaries.

Figure 2.

Distribution of apps according to the user opinion ranking (n = 50 apps).

The results of Student’s t-test for the two samples (certified apps and non-certified apps) showed a statistically significant difference (t = 4.22; p < 0.001). The H1a hypothesis is therefore confirmed.

This significant difference highlights the effectiveness of the application quality certification processes. It is likely explained by the seal’s requirements in areas that matter to users, such as the quality and security of information (requirements 5–15, see Appendix A) and privacy and data protection (requirements 21–31, see Appendix A).

In addition, the chi-square tests carried out to assess the possible relationships between the different variables (user, developer, category, and stars) and user comments showed a significant relationship for all the variables except the category of functions (see Table 3). The results therefore confirm the H1b hypothesis except for the category variable.

Table 3.

Chi-square tests.

The higher percentage of h-apps used by professionals (a group assumed to hold a more objective opinion about the applicability of an h-app) among certified apps may explain the relationship between the type of user and online comments. While the satisfaction of general public users and patients is of great importance, it carries less weight with regard to the scientific applicability of an h-app.

Certified apps, whose main developers are tech companies and healthcare workers, get the highest proportion of positive reviews (Q4). This demonstrates the importance of technical and health aspects for users. These aspects provide a high level of usability, which in the case under study is guaranteed through compliance with requirements such as accessibility, design, authorship, and updating or revision of information, among others (see Appendix A).

Opinions such as the following are a clear example:

“It’s excellent. The design of the app makes it easy to use. The interface is very intuitive. I recommend it”.

Furthermore, as expected, and in line with other studies [64,69], the more stars an app has, the more positive comments it receives from users. In this study, the chi-square test results show a significant difference in the star ratings. The fact that certified apps gather the highest positive response rates (Q4) suggests that star ratings reliably reflect the user’s experience and support the validity of the reviews posted. This could be because the certification program guarantees an adequate mechanism for handling user feedback. Consequently, this contributes to a high level of user satisfaction and, in turn, to a growing number of positive reviews.

Finally, in relation to functionality, apps that deal with general health and well-being show a higher proportion of positive comments. By contrast, apps for managing health receive the lowest proportion of positive comments.

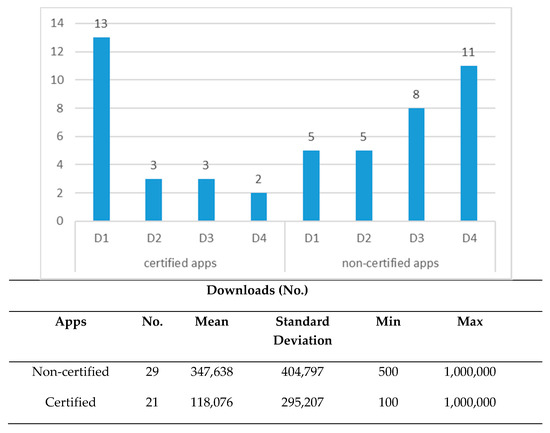

Regarding H1c hypothesis, Figure 3 shows the distribution of apps according to classification by downloads (D1 ≤ 10,000; 10,000 < D2 ≤ 50,000; 50,000 < D3 ≤ 100,000; D4 > 100,000) and descriptive statistical summaries.

Figure 3.

Distribution of apps according to download behaviour (n = 50 apps).

The results of Student’s t-test of the two samples (certified and non-certified apps) showed a statistically significant difference (t = 2.32; p = 0.025) confirming the H1c hypothesis.

In contrast with online reviews, the average number of downloads was significantly higher in the group of non-certified applications. This does not imply that users consciously opt for downloading non-certified apps. It can be explained by the fact that the sample of non-certified apps was made up of the top apps on Google Play and, obviously, these generate a greater number of downloads. Both user downloads and user ratings offer an arbitrary indicator of the popularity, acceptability, and satisfaction with apps. In addition, other factors could explain these results, for example the fact that the number of apps used by professional users (supposedly a smaller group) was much higher in the certified group.

To test the H2a hypothesis, a cross-tabulation was performed, which revealed an association between online user reviews and app quality certification (chi-square = 41.570; p < 0.001). Although the percentages can help us to intuit possible association patterns, the corrected standardised residuals allow us to determine precisely the relationship between both variables [70] (see Table 4). Thus, for the apps in categories Q2 and Q3, the proportion of non-certified apps was significantly higher than that of certified apps (2.900 and 3.000 > 1.96, respectively; 95% confidence level); and for Q4, the proportion of certified apps was significantly higher than that of non-certified apps (4.9 > 1.96). Of the highest-ranked apps (Q4), 100% were certified. These represented 61.9% of the total certified apps.

Table 4.

Contingency table user reviews and quality seal.
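The corrected (adjusted) standardised residuals used to read the contingency tables can be computed as sketched below. The formula is the standard one: the difference between observed and expected counts, divided by the square root of the expected count times one minus the row proportion times one minus the column proportion. The code is an illustrative Python sketch with made-up counts, not the study's data or software output.

```python
from math import sqrt

def adjusted_residuals(table):
    """Adjusted standardised residual for every cell of an r x c count table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    residuals = []
    for i, row in enumerate(table):
        residual_row = []
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            spread = expected * (1 - row_totals[i] / n) * (1 - col_totals[j] / n)
            residual_row.append((observed - expected) / sqrt(spread))
        residuals.append(residual_row)
    return residuals

# Made-up counts (rows: two groups of apps; columns: two review categories)
example_residuals = adjusted_residuals([[10, 20], [20, 10]])
```

A cell whose residual exceeds 1.96 in absolute value is over- or under-represented at the 95% confidence level, which is the criterion applied in the text.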

Besides, the results of the chi-square tests and the statistics associated with the cross tables also showed a significant association between certification and downloads (chi-square = 103.447; df = 8; p < 0.001), confirming the H2b hypothesis. The analysis of corrected standardised residuals shows that, among apps with fewer downloads (D1), the proportion of certified apps was significantly higher (5.2 > 1.96; 95% confidence level); for those in D3, the proportion of non-certified apps was significantly higher than that of certified apps (2.9 > 1.96); and in the most downloaded group (D4), non-certified apps were also significantly over-represented (4.1 > 1.96) (see Table 5).

Table 5.

Contingency table downloads and quality seal.

Additionally, as was stated previously, the log-linear conditional independence model was used to analyze the association between the three variables (downloads, online reviews, and certification) and to test the H2c hypothesis. The download variable was converted to dichotomous with two categories, D1 (≤100,000 downloads) and D2 (>100,000 downloads). Goodness of fit results can be seen in Table 6.

Table 6.

Goodness of fit results for the model.

The model design is: constant + certification * user online reviews + user online reviews * download. Table 7 shows the parameter estimates and standardised errors.

Table 7.

Model parameter estimates.
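For reference, the model design stated above can be written in standard log-linear notation. This is a sketch that assumes the usual hierarchical convention, in which each interaction term in the design also brings in its main effects; the indices i, j, and k (certification, online user reviews, and downloads, respectively) and the symbol m for the expected cell frequency are notation introduced here.

```latex
\log m_{ijk} = \lambda
  + \lambda^{C}_{i} + \lambda^{Q}_{j} + \lambda^{D}_{k}
  + \lambda^{CQ}_{ij} + \lambda^{QD}_{jk}
```

The absence of a certification-by-downloads term and of a three-way term is what makes this a conditional independence model: given the review category, certification and downloads are treated as unrelated.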

The partial association tests showed that the statistically significant parameters were the relationship between “certification” and “online user reviews” and the relationship between “downloads” and “online user reviews.” When the Z values of the two-way interaction terms were examined, “download” and “online user reviews” had the highest Z value (2.387; the dependency was between [online user reviews (Qi) = 4] and [downloads (Di) = 0]). Therefore, it was the most important factor in determining the frequencies in the contingency table. The more the number of positive comments increased, the more the probability of a download increased, regardless of whether the app was certified or not. Additionally, the model showed a statistically significant association between “certification” and “online user reviews” ([online user reviews (Qi) = 3] and [certification = 0]; Z = 2.174; sig = 0.042).
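As a brief illustration of how a Z value of this kind relates to a significance level, the sketch below computes a Wald-type Z statistic (estimate divided by its standard error) and the corresponding two-sided p-value. It assumes a standard normal reference distribution, which may differ from the procedure used by the statistical software in the study; the function names are introduced here for illustration only.

```python
import math

def wald_z(estimate, std_error):
    """Z statistic for a parameter estimate and its standard error."""
    return estimate / std_error

def two_sided_p_from_z(z):
    """Two-sided p-value under a standard normal reference distribution."""
    return math.erfc(abs(z) / math.sqrt(2))

# Z value reported for the download x online user reviews term.
p_download_reviews = two_sided_p_from_z(2.387)
print(round(p_download_reviews, 3))
```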

5. Conclusions

This paper is a preliminary analysis of the impact of mHealth app certification on user experience. It complements previous research on studies of the association between user opinions and app downloads, the differentiating element being an analysis of the role played by a quality seal that guarantees the quality and safety of certain mHealth apps.

The first finding is the significant difference between the samples of apps analyzed with regard to user reviews and download behaviour. This could be due to the particular features of each group of apps, which have different users and functionality. The sample selection criterion could also affect these results. With respect to possible differences in the user experience revealed by online reviews, the results show a significant association between the type of user and the developers of the app; this confirms the results of previous studies. It is also noteworthy that the highest proportion of positive comments belongs to certified apps, which demonstrates the effectiveness of the self-evaluation and external evaluation process.

Another important finding concerns the relationship between user experience and certified app quality. Although the quality of what is offered (i.e., the product or service) must prevail if the user experience is to be satisfactory, the existence of a quality mark is not a significant or relevant factor. This conclusion is based on the joint evaluation of user comments and app downloads using a log-linear conditional independence model. The model showed a statistically significant partial association between “certification” and “online user reviews” (Z = 2.174; sig = 0.042) and between “download” and “online user reviews” (Z = 2.387; sig = 0.017).

5.1. Implications: Open Innovation and Certification in mHealth Application Industry

Taking the results of our investigation as a whole, it is possible to consider their practical implications and make some recommendations. First, questions about the relevance of the app quality validation process are a reminder that it needs to be modified or revised to ensure that users perceive a satisfactory level of quality (usability, security, reliability, and so on). Therefore, new quality and security seals for mHealth apps need to be developed so that people have greater confidence in them. Moreover, these seals should cover the design, development, and deployment stages. Second, as is widely accepted, the clearer and simpler a technology is, the easier and more useful it will be for users, improving their user experience [50]. User reviews related to this aspect can provide valuable information for quality assurance agencies involved in validation to improve their accreditation processes. In this way, users become active participants in open innovation projects for health apps.

In this context, embracing efficient, equitable, and accountable values in a culture for open innovation dynamics is important for overcoming cultural barriers, in addition to legal and institutional barriers, in public organizations. The growing culture of multi-stakeholder collaboration in this sector will allow for more active collaboration with external parties, and open innovation in public administration could be used to explore collective action to improve healthcare management for the benefit of all the agents involved (patients, professionals, healthcare industry, public administrations, etc.) [71]. This model is followed by the AppSaludable seal, which is open to any interested agent who wishes to share a suggestion or idea; our work is an example of academic involvement as a concerned party. In this field, the pioneering mobile health project of the European mHealth Hub is a reference in innovation and knowledge in mobile health for the European territories of the World Health Organization.

In light of all the above, the quadruple helix model sets the direction in which open innovation policy makers should move [50]. This model identifies four main players engaged in the application of open innovation: university, government, industry, and society. Therefore, within the overall health context, open innovation policy makers should focus on providing sufficient information feedback loops to open innovation systems and, at the same time, take action to strengthen the open innovation culture of the organization.

Obviously, in the digital age, all of this contributes to the progressive shift from the traditional knowledge management approach to a network approach to knowledge exchange.

In short, apps have come to the health field to stay and to evolve towards a new model of medicine: mHealth, which will help patients and doctors to improve well-being indices and achieve greater efficiency in prevention, diagnosis, and treatment. Research in mHealth can ensure that important social, behavioral, and environmental data are used to understand the determinants of health, improve health outcomes, and prevent the development of disorders. This new area of research has the potential to be a transformative force because it is dynamic and based on a continuous process of data entry and evaluation.

5.2. Limitations and Further Research

Finally, this study has limitations that should be considered when interpreting the results. We would like to note these limitations come mainly from the sampling methodology. To eliminate selection and analysis bias, the initial sample was adjusted. A matching technique was used to test the significant differences between the groups and thus guarantee an appropriate comparison between them.

A second limitation is that the study is based on users’ experience and does not distinguish between “aware users” and “non-aware users” with regard to the accreditation of apps. Some aware users probably download an app because the quality distinction offers them a greater guarantee and security, so their preferences and expectations may be completely different. A study that considers this distinction could provide useful information for a deeper analysis of user satisfaction. It would also require a methodology suited to that aim, which is beyond the scope of this paper.

Logically, the lack of trust associated with the risks of using an app plays a part in the user’s choice of application, and the existence of a quality seal can mitigate this perceived risk [72]. However, the seal or quality distinction of an app is not among the most influential factors in the decision to download it. In a Redbox Mobile study of mobile app searches, based on responses from 1500 mobile users from the US, China, UK, Spain, Germany, and Italy, the most influential factor was price, followed by other relevant factors such as a clear description, the score that the application has obtained, and its reviews [73]. Some of these factors are included in the App Store Optimization OFF-METADATA factors, which are those that developers do not control directly and that depend on the reaction of the market and on the marketing carried out for the application [74]. These are the most important factors that influence Google’s and Apple’s positioning algorithms in their app stores, and they directly affect users’ installation decisions. Once again, a quality distinction or seal is not a relevant factor, which supports the view that the culture and implementation of quality, trust, or safety certification are at an early stage, with a long way to go.

Despite these limitations, this study provides a framework for others working to create sustainable health solutions in the developing world.

Author Contributions

Conceptualization, A.G.; Data curation, A.G.; Formal analysis, A.G., A.J. and P.S.; Methodology, A.G., A.J. and P.S.; Software, A.J. and P.S.; Supervision, A.G.; Validation, A.J. and P.S.; Visualization, A.J. and P.S.; Writing—original draft, A.G., A.J. and P.S.; Writing—review & editing, A.G., A.J. and P.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Guide of Recommendations on Design, Use and Assessment of Health Apps

Design and Appropriateness

Recommendation 1. The health App clearly defines its functional reach and its purpose, identifying the target groups of information and the aims pursued regarding these groups.

Recommendation 2. The health App follows the Principles of Universal Design, as well as reference accessibility standards and recommendations.

Recommendation 3. The health App follows the recommendations, patterns and directives included in the official manuals of the different platforms.

Recommendation 4. The health App has been tested by potential users before its availability to the public.

Quality and Safety of Information

Suitability for the Audience

Recommendation 5. The health App adapts itself to its target audience.

Transparency

Recommendation 6. The health App offers transparent information about its owners’ identity and location.

Recommendation 7. The health App offers information about its funding sources, promotion and sponsorship, as well as about possible conflicts of interests.

Authorship

Recommendation 8. The health App identifies the authors of its content and their professional qualification.

Information Update/Revisions

Recommendation 9. The health App includes the date of the last revision made in the published material.

Recommendation 10. The health App warns of those updates which modify or influence the functioning of health-related content, as well as other sensitive data.

Content and Information Sources

Recommendation 11. The health App is based on one or more reliable information sources, and takes into account the available scientific evidence.

Recommendation 12. The health App offers concise information about the procedure used in order to select its content.

Recommendation 13. The health App is based on ethical principles and values.

Risk Management

Recommendation 14. The possible risks for patient safety caused by the use of the health App are identified.

Recommendation 15. The known risks and adverse events (near misses) are analysed, and appropriate actions are initiated.

Provision of Services

Technical Support/Inquiries

Recommendation 16. The health App has a support system about its use.

Recommendation 17. The health App offers a contact mechanism for technical support with an assured and fixed response time.

E-Commerce

Recommendation 18. The health App informs about the terms and conditions on its products and services’ commercialisation.

Bandwidth

Recommendation 19. The health App makes an efficient use of communications bandwidth.

Advertisement

Recommendation 20. The health App warns of the use of advertisement mechanisms and allows deactivating or skipping it.

Confidentiality and Privacy

Privacy and Data Protection

Recommendation 21. Before downloading and installing, the health App informs about the kind of user’s data to be collected and the reason, about the access policies and data treatment, and about possible commercial agreements with third parties.

Recommendation 22. The health App clearly describes the terms and conditions about recorded personal data.

Recommendation 23. The functioning of the health App preserves privacy in the recorded information, collects express consents granted by users, and warns of risks coming from the use of online mobile health Apps.

Recommendation 24. The health App ensures pertinent security measures when users’ health information or sensitive data has to be collected or exchanged.

Recommendation 25. The health App informs the users when it has access to other resources of the device, to users’ accounts and to profiles in social networks.

Recommendation 26. The health App ensures the right of access to recorded information and the updates regarding changes in its privacy policy.

Recommendation 27. The health App has measures regarding minors’ protection in accordance with the current legislation.

Logical Security

Recommendation 28. The health App presents neither any known vulnerability nor any type of malicious code.

Recommendation 29. The health App describes the security procedures established in order to avoid unauthorised access to personal data collected, as well as to limit the access by third parties.

Recommendation 30. The health App has encryption mechanisms for the storage and exchange of information, as well as mechanisms for passwords management.

Recommendation 31. When the health App uses services from the Cloud (cloud computing), the terms and conditions of those services are declared, and the pertinent security measures are ensured.

Source: Andalusian Agency for Healthcare Quality, 2012. Complete List of Recommendations on Design, Use and Assessment of Health Apps. Available online: http://www.calidadappsalud.com/en/listado-completo-recomendaciones-app-salud/

References

- BinDhim, N.F.; Hawkey, A.; Trevena, L. A systematic review of quality assessment methods for smartphone health apps. Telemed. e-Health 2015, 21, 97–104. [Google Scholar] [CrossRef] [PubMed]

- Boulos, M.N.K.; Brewer, A.C.; Karimkhani, C.; Buller, D.B.; Dellavalle, R.P. Mobile medical and health apps: State of the art, concerns, regulatory control and certification. Online J. Public Health Inform. 2014, 5, 229. [Google Scholar] [CrossRef]

- Huckvale, K.; Prieto, J.T.; Tilney, M.; Benghozi, P.-J.; Car, J. Unaddressed privacy risks in accredited health and wellness apps: A cross-sectional systematic assessment. BMC Med. 2015, 13, 1–13. [Google Scholar] [CrossRef] [PubMed]

- Llorens-Vernet, P.; Miró, J. Standards for Mobile Health–Related Apps: Systematic Review and Development of a Guide. JMIR mHealth uHealth 2020, 8, e13057. [Google Scholar] [CrossRef] [PubMed]

- Kanthawala, S.; Joo, E.; Kononova, A.; Peng, W.; Cotten, S. Folk theorizing the quality and credibility of health apps. Mob. Media Commun. 2018, 7, 175–194. [Google Scholar] [CrossRef]

- Krebs, P.; Duncan, D.T. Health app use among US mobile phone owners: A national survey. JMIR mHealth uHealth 2015, 3, e101. [Google Scholar] [CrossRef]

- Stoyanov, S.R.; Hides, L.; Kavanagh, D.J.; Zelenko, O.; Tjondronegoro, D.; Mani, M. Mobile app rating scale: A new tool for assessing the quality of health mobile apps. JMIR mHealth uHealth 2015, 3, e27. [Google Scholar] [CrossRef]

- Payne, A.; Frow, P. Strategic Customer Management: Integrating Relationship Marketing and CRM; Cambridge University Press: Cambridge, UK, 2013. [Google Scholar]

- Oliver, R.L. Satisfaction: A Behavioral Perspective on the Consumer, 2nd ed.; M.E. Sharpe: New York, NY, USA, 2010. [Google Scholar]

- Larson, R.S. A path to better-quality mhealth Apps. JMIR mHealth uHealth 2018, 6, e10414. [Google Scholar] [CrossRef]

- Hughes, O. Safety Stamp for Health Apps to Bring More Trust to New Solutions. Digitalhealth. 2018. Available online: https://www.digitalhealth.net/2018/06/safety-stamp-health-apps/ (accessed on 17 January 2020).

- Boudreaux, E.D.; Waring, M.; Hayes, R.B.; Sadasivam, R.S.; Mullen, S.; Pagoto, S. Evaluating and selecting mobile health apps: Strategies for healthcare providers and healthcare organizations. Transl. Behav. Med. 2014, 4, 363–371. [Google Scholar] [CrossRef]

- Wicks, P.; Chiauzzi, E. ‘Trust but verify’—Five approaches to ensure safe medical apps. BMC Med. 2015, 13, 205. [Google Scholar] [CrossRef]

- McMillan, B.; Hickey, E.; Patel, M.; Mitchell, C. Quality assessment of a sample of mobile app-based health behavior change interventions using a tool based on the National Institute of Health and Care Excellence behavior change guidance. Patient Educ. Couns. 2016, 99, 429–435. [Google Scholar] [CrossRef] [PubMed]

- Kao, C.-K.; Liebovitz, D.M. Consumer mobile health Apps: Current state, barriers, and future directions. PM&R 2017, 9, S106–S115. [Google Scholar] [CrossRef]

- Powell, A.C.; Landman, A.B.; Bates, D.W. In search of a few good apps. JAMA 2014, 311, 1851–1852. [Google Scholar] [CrossRef] [PubMed]

- Zanni, G.R. Medical apps worth having. Consult. Pharm. 2013, 28, 322–324. [Google Scholar] [CrossRef] [PubMed]

- Deshpande, S.; Rigby, M.; Blair, M. The limited extent of accreditation mechanisms for websites and mobile applications in Europe. Stud. Health Technol. Inform. 2019, 262, 158–161. [Google Scholar] [PubMed]

- Castro, M.L.; Huerta, F.L.; May, A.L.H. Aplicaciones médicas en dispositivos móviles. Interconectando Saberes 2018, 6, 101–109. [Google Scholar] [CrossRef]

- Grau, I.; Kostov, B.; Gallego, J.; Iii, F.G.; Fernández-Luque, L.; Sisó-Almirall, A. Método de valoración de aplicaciones móviles de salud en español: El índice iSYScore. SEMERGEN Med. Fam. 2016, 42, 575–583. [Google Scholar] [CrossRef]

- Moreno, J.M.; Moreno, O.A.M.; Castelló, S.M.; Castelló, F.M.; Rodriguez, L.M.; Garví, O.M. Análisis de la calidad y seguridad de la información de aplicaciones móviles en prevención terciaria. Farm. Comunitarios 2015, 7, 23–26. [Google Scholar] [CrossRef]

- Pérez-Alcántara, P.; Herrera-Usagre, M. Improving health empowerment and evidence-based decision-making using collective intelligence and self-management health system: Em-Phasys project. In Proceedings of the 5th International Conference on Technological Ecosystems for Enhancing Multiculturality, Cádiz, Spain, 18–20 October 2017; pp. 1–4. [Google Scholar]

- Mira, J.J.; Carrillo, I.; Fernández, C.; Vicente, M.A.; Guilabert, M.; Elliott, P.; Lorenzo, S. Design and testing of the safety agenda mobile app for managing health care managers’ patient safety responsibilities. JMIR mHealth uHealth 2016, 4, e131. [Google Scholar] [CrossRef]

- Scott, K.M.; Richards, D.; Londos, G. Assessment criteria for parents to determine the trustworthiness of maternal and child health apps: A pilot study. Health Technol. 2018, 8, 63–70. [Google Scholar] [CrossRef]

- De La Vega, R.; Miró, J. mHealth: A strategic field without a solid scientific soul. A systematic review of pain-related Apps. PLoS ONE 2014, 9, e101312. [Google Scholar] [CrossRef] [PubMed]

- Car, J. Accreditation of Health and Wellness Apps. Harvard Global Health Institute. 2016. Available online: https://www.oecd.org/sti/ieconomy/4%20-%20Josip%20Car.pdf (accessed on 26 June 2020).

- Chau, M.; Xu, J. Business intelligence in blogs: Understanding consumer interactions and communities. MIS Q. 2012, 36, 1189. [Google Scholar] [CrossRef]

- Flanagin, A.J.; Metzger, M.J. Trusting expert- versus user-generated ratings online: The role of information volume, valence, and consumer characteristics. Comput. Hum. Behav. 2013, 29, 1626–1634. [Google Scholar] [CrossRef]

- Jin, J.; Ji, P.; Gu, R. Identifying comparative customer requirements from product online reviews for competitor analysis. Eng. Appl. Artif. Intell. 2016, 49, 61–73. [Google Scholar] [CrossRef]

- Sparks, B.A.; So, K.K.F.; Bradley, G.L. Responding to negative online reviews: The effects of hotel responses on customer inferences of trust and concern. Tour. Manag. 2016, 53, 74–85. [Google Scholar] [CrossRef]

- Genc-Nayebi, N.; Abran, A. A systematic literature review: Opinion mining studies from mobile app store user reviews. J. Syst. Softw. 2017, 125, 207–219. [Google Scholar] [CrossRef]

- Palomba, F.; Linares-Vásquez, M.; Bavota, G.; Oliveto, R.; Di Penta, M.; Poshyvanyk, D.; De Lucia, A. Crowdsourcing user reviews to support the evolution of mobile apps. J. Syst. Softw. 2018, 137, 143–162. [Google Scholar] [CrossRef]

- Picazo-Vela, S. The Effect of Online Reviews on Customer Satisfaction: An Expectation Disconfirmation Approach; Southern Illinois University: Carbondale, IL, USA, 2010. [Google Scholar]

- Ahmed, I.; Ahmad, N.S.; Ali, S.; Ali, S.; George, A.; Danish, H.S.; Uppal, E.; Soo, J.; Mobasheri, M.H.; King, D.; et al. Medication adherence apps: Review and content analysis. JMIR mHealth uHealth 2018, 6, e62. [Google Scholar] [CrossRef] [PubMed]

- Frie, K.; Hartmann-Boyce, J.; Jebb, S.; Albury, C.; Nourse, R.; Aveyard, P.; Bardus, M.; Brinker, T.; Lin, P.-H. Insights from google play store user reviews for the development of weight loss Apps: Mixed-method analysis. JMIR mHealth uHealth 2017, 5, e203. [Google Scholar] [CrossRef]

- Mendiola, M.F.; Kalnicki, M.; Lindenauer, S. Valuable features in mobile health apps for patients and consumers: Content analysis of apps and user ratings. JMIR mHealth uHealth 2015, 3, e40. [Google Scholar] [CrossRef]

- Switsers, L.; Dauwe, A.; Vanhoudt, A.; Van Dyck, H.; Lombaerts, K.; Oldenburg, J. Users’ perspectives on mhealth self-management of bipolar disorder: Qualitative focus group study. JMIR mHealth uHealth 2018, 6, e108. [Google Scholar] [CrossRef] [PubMed]

- Nicholas, J.; Fogarty, A.S.; Boydell, K.; Christensen, H. The reviews are in: A qualitative content analysis of consumer perspectives on apps for bipolar disorder. J. Med. Internet Res. 2017, 19, e105. [Google Scholar] [CrossRef] [PubMed]

- Stawarz, K.; Preist, C.; Tallon, D.; Wiles, N.J.; Coyle, D. User experience of cognitive behavioral therapy apps for depression: An analysis of app functionality and user reviews. J. Med. Internet Res. 2018, 20, e10120. [Google Scholar] [CrossRef] [PubMed]

- Thornton, L.; Quinn, C.; Birrell, L.; Guillaumier, A.; Shaw, B.; Forbes, E.; Deady, M.; Kay-Lambkin, F. Free smoking cessation mobile apps available in Australia: A quality review and content analysis. Aust. N. Z. J. Public Health 2017, 41, 625–630. [Google Scholar] [CrossRef] [PubMed]

- Anderson, K.; Burford, O.; Emmerton, L. Mobile health apps to facilitate self-care: A qualitative study of user experiences. PLoS ONE 2016, 11, e0156164. [Google Scholar] [CrossRef] [PubMed]

- Nicolai, M.; Pascarella, L.; Palomba, F.; Bacchelli, A. Healthcare android apps: A tale of the customers’ perspective. In Proceedings of the 3rd ACM SIGSOFT International Workshop on App Market Analytics, Tallinn, Estonia, 27 August 2019; pp. 33–39. [Google Scholar]

- Peng, W.; Kanthawala, S.; Yuan, S.; Hussain, S.A. A qualitative study of user perceptions of mobile health apps. BMC Public Health 2016, 16, 1–11. [Google Scholar] [CrossRef]

- Bullinger, A.C.; Rass, M.; Adamczyk, S.; Möslein, K.; Sohn, S. Open innovation in health care: Analysis of an open health platform. Health Policy 2012, 105, 165–175. [Google Scholar] [CrossRef]

- Khalid, M.; Shehzaib, U.; Asif, M. A case of mobile App reviews as a crowdsource. Int. J. Inf. Eng. Electron. Bus. 2015, 7, 39–47. [Google Scholar] [CrossRef]

- Yoo, Y.; Henfridsson, O.; Lyytinen, K. Research commentary—the new organizing logic of digital innovation: An agenda for information systems research. Inf. Syst. Res. 2010, 21, 724–735. [Google Scholar] [CrossRef]

- Chatterji, A.K. Spawned with a silver spoon? Entrepreneurial performance and innovation in the medical device industry. Strat. Manag. J. 2009, 30, 185–206. [Google Scholar] [CrossRef]

- Davey, S.M.; Brennan, M.; Meenan, B.J.; McAdam, R. Innovation in the medical device sector: An open business model approach for high-tech small firms. Technol. Anal. Strat. Manag. 2011, 23, 807–824. [Google Scholar] [CrossRef]

- Chesbrough, H.W.; Garman, A.R. How open innovation can help you cope in lean times. Harv. Bus. Rev. 2009, 87. Available online: https://hbr.org/2009/12/how-open-innovation-can-help-you-cope-in-lean-times (accessed on 6 June 2020). [CrossRef]

- Yun, J.J.; Liu, Z. Micro- and macro-dynamics of open innovation with a quadruple-helix model. Sustainability 2019, 11, 3301. [Google Scholar] [CrossRef]

- Peter, L.; Hajek, L.; Maresova, P.; Augustynek, M.; Penhaker, M. Medical devices: Regulation, risk classification, and open innovation. J. Open Innov. Technol. Mark. Complex. 2020, 6, 42. [Google Scholar] [CrossRef]

- Van Der Kleij, R.M.; Kasteleyn, M.J.; Meijer, E.; Bonten, T.N.; Houwink, E.J.; Teichert, M.; Van Luenen, S.; Vedanthan, R.; Evers, A.; Car, J.; et al. SERIES: eHealth in primary care. Part 1: Concepts, conditions and challenges. Eur. J. Gen. Pract. 2019, 25, 179–189. [Google Scholar] [CrossRef] [PubMed]

- Chen, N.; Lin, J.; Hoi, S.C.; Xiao, X.; Zhang, B. AR-miner: Mining informative reviews for developers from mobile app marketplace. In Proceedings of the 36th International Conference on Software Engineering, Hyderabad, India, 31 May–7 June 2014; pp. 767–778. [Google Scholar] [CrossRef]

- Duan, W.; Gu, B.; Whinston, A.B. The dynamics of online word-of-mouth and product sales—An empirical investigation of the movie industry. J. Retail. 2008, 84, 233–242. [Google Scholar] [CrossRef]

- Liu, Y. Word of mouth for movies: Its dynamics and impact on box office revenue. J. Mark. 2006, 70, 74–89. [Google Scholar] [CrossRef]

- Chen, M.; Liu, X. Predicting popularity of online distributed applications: ITunes app store case analysis. In Proceedings of the 2011 iConference, Seattle, WA, USA, 8–11 February 2011; Association for Computing Machinery (ACM): New York, NY, USA, 2011; pp. 661–663. [Google Scholar]

- Harman, M.; Jia, Y.; Zhang, Y. App store mining and analysis: MSR for app stores. In Proceedings of the 2012 9th IEEE Working Conference on Mining Software Repositories (MSR), Zurich, Switzerland, 2–3 June 2012; pp. 108–111. [Google Scholar]

- Chevalier, J.A.; Mayzlin, D. The effect of word of mouth on sales: Online book reviews. J. Mark. Res. 2006, 43, 345–354. [Google Scholar] [CrossRef]

- Zhou, W.; Duan, W. Online user reviews, product variety, and the long tail: An empirical investigation on online software downloads. Electron. Commer. Res. Appl. 2012, 11, 275–289. [Google Scholar] [CrossRef]

- Sharma, T.; Bashir, M. Privacy apps for smartphones: An assessment of users’ preferences and limitations. In International Conference on Human-Computer Interaction; Springer: Cham, Switzerland, 2020; pp. 533–546. [Google Scholar] [CrossRef]

- Mahalanobis, P.C. On tests and measures of groups divergence. J. Asiat. Sociol. Bengal 1930, 26, 541–588. [Google Scholar]

- Andalusian Agency for Healthcare Quality. Complete List of Recommendations on Design, Use and Assessment of Health Apps. 2012. Available online: http://www.calidadappsalud.com/en/listado-completo-recomendaciones-app-salud/ (accessed on 12 January 2020).

- Jackman, S. Data from Web into R. Political Methodol. 2006, 14, 11–16. [Google Scholar]

- Zhao, Y.; Xu, X.; Wang, M. Predicting overall customer satisfaction: Big data evidence from hotel online textual reviews. Int. J. Hosp. Manag. 2019, 76, 111–121. [Google Scholar] [CrossRef]

- Van Der Meer, T.G. Automated content analysis and crisis communication research. Public Relat. Rev. 2016, 42, 952–961. [Google Scholar] [CrossRef]

- Grimmer, J.; Stewart, B.M. Text as data: The promise and pitfalls of automatic content analysis methods for political texts. Political Anal. 2013, 21, 267–297. [Google Scholar] [CrossRef]

- Erlingsson, C.; Brysiewicz, P. A hands-on guide to doing content analysis. Afr. J. Emerg. Med. 2017, 7, 93–99. [Google Scholar] [CrossRef]

- Yurt-Öncel, S.; Erdugan, F. Kontenjans Tablolarının Analizinde Log-Lineer Modellerin Kullanımı Ve Sigara Bağımlılığı Üzerine Bir Uygulama. SAÜ Fen Bil. Der. 2015, 19, 221–235. [Google Scholar] [CrossRef]

- Ye, B.H.; Luo, J.M.; Vu, H.Q. Spatial and temporal analysis of accommodation preference based on online reviews. J. Destin. Mark. Manag. 2018, 9, 288–299. [Google Scholar] [CrossRef]

- Haberman, S.J. The analysis of residuals in cross-classified tables. Biometrics 1973, 29, 205. [Google Scholar] [CrossRef]

- Yun, J.J.; Zhao, X.; Jung, K.; Yigitcanlar, T. The culture for open innovation dynamics. Sustainability 2020, 12, 5076. [Google Scholar] [CrossRef]

- Malik, A.; Suresh, S.; Sharma, S. Factors influencing consumers’ attitude towards adoption and continuous use of mobile applications: A conceptual model. Procedia Comput. Sci. 2017, 122, 106–113. [Google Scholar] [CrossRef]

- Freier, A. 55% of Consumers Download an App from a Paid Advert. Businessofapps. 2019. Available online: https://www.businessofapps.com/news/55-of-consumers-download-an-app-from-a-paid-advert/ (accessed on 5 October 2020).

- Strzelecki, A. Application of developers’ and users’ dependent factors in app store optimization. Int. J. Interact. Mob. Technol. (iJIM) 2020, 14, 91–106. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).