Abstract

In the era of artificial intelligence (AI), generative AI tools like ChatGPT 4.5 have made it much easier to obtain answers to questions, diminishing the importance of memorizing declarative knowledge while increasing the significance of the procedural knowledge required in problem-solving. However, conventional approaches such as paper-based assessments still focus on declarative knowledge and are difficult to adapt to the challenges of the AI era. This study explores an innovative approach to the assessment of procedural knowledge, with a specific emphasis on gamification. It combines an experimental research method, a case study, and a questionnaire survey. A total of 151 undergraduate students were recruited and randomly assigned to an experimental group and a control group. We compared performance outcomes between a gamification-based assessment and a paper-based assessment. The results confirmed the effectiveness of the gamification-based assessment, demonstrating its superiority over the paper-based assessment in assessing procedural knowledge. The findings are applicable not only to assessing knowledge for emergency situations such as fire safety but also to assessing procedural knowledge across various academic subjects within educational institutions.

1. Introduction

In the AI era, new technologies have made it easier to obtain answers to questions, especially generative AI like ChatGPT 4.5, which can efficiently generate results. Research from University College London has revealed the intrinsic mechanisms of reasoning in large language models, emphasizing the importance of procedural knowledge in building AI’s reasoning capabilities. It points out that future AI development will place greater emphasis on constructing reasoning abilities through procedural knowledge to better solve complex problems [1]. The importance of memorizing declarative knowledge has decreased, while the significance of the procedural knowledge required in problem-solving has increased [2]. Procedural knowledge refers to algorithms or operational steps in specific contexts [3]. It is step-based knowledge used in the process of problem-solving, focusing on the “how-to” aspect [4]. Due to its operational, procedural, and dynamic characteristics, procedural knowledge is essential for problem exploration. In the AI era, procedural knowledge is of great value for human–machine communication and collaboration. Using AI tools such as ChatGPT 4.5 starts with entering a prompt, and prompts that include specific steps are rated as excellent because they help the AI work more efficiently. Algorithms can be understood as the steps of problem-solving [5]; they are one of the core driving forces behind the development of AI and can be categorized as typical procedural knowledge. Both algorithm design and program design necessitate the application of procedural knowledge.

At present, a large number of assessments still mainly test memorized declarative knowledge. Such assessments are difficult to adapt to the challenges of the AI era, underscoring an urgent need for innovative knowledge assessment methodologies. Paper-based assessment (PBA) is a static assessment method that mainly assesses declarative knowledge [6], and it has limitations in assessing dynamic procedural knowledge. Conventional PBA lacks the time constraints of real-life situations, and answers can be revised back and forth many times, so it is difficult to assess the ability to apply knowledge in emergency situations, especially for safety-related procedural knowledge such as fire rescue and fire escape. New assessment methods that facilitate the evaluation of procedural knowledge are therefore needed.

There are two academic interpretations of gamification assessment, game-based assessment and gamification-based assessment [7,8,9,10], both of which are abbreviated as GBA. Based on the distinction between gamification and games, we argue that gamification-based assessment is more consistent with the concept of gamification, a point discussed further in Section 2.3; in this paper, GBA refers to gamification-based assessment. This paper aims to validate the efficacy of GBA in assessing procedural knowledge, explore gamified design approaches, especially for emergency situations, and make up for the limitations of traditional PBA in assessing procedural knowledge. It also seeks to pioneer knowledge assessment strategies suitable for the AI era. For the literature review, we searched Web of Science, Scopus, Elsevier, Wiley, Google Scholar, ProQuest, and CNKI using thematic keywords such as “gamification OR Gamification-Based Assessment” and “assessment approach OR Knowledge Assessment OR Procedural Knowledge Assessment”. A total of approximately 270 relevant papers were retrieved. Judging by the number of publications, research on gamification and GBA increased gradually from 2013 to 2024. More and more scholars are paying attention to gamification, which is widely applied in education, human–computer interaction, computer science, psychology, business, emergency preparedness, human resources, and other fields. Science and Nature have published several papers on games and gamification [11,12,13,14]. For instance, scientists at the University of Washington applied gamification to the study of protein structure by designing the scientific game Foldit, which attracted thousands of players to explore protein structures and led to the discovery of new ones; the game players and scientists are co-authors of the paper published in Nature.
In 2022, the Open University of the UK and the Open University of Catalonia jointly released the report “Innovative Pedagogies”, which once again recommended the method of gamification [15]. In terms of gamification assessment, academics and industries have recognized the limitations of conventional PBA, and well-known enterprises such as PwC, Deloitte, KPMG, and Accenture have changed PBA to GBA in talent recruitment. Overall, the development of current educational assessment and knowledge assessment technology has shifted from focusing on results to focusing on processes, from selection to development, from a passive acceptance of assessment to active participation, developing diversified and personalized assessments, improving students’ autonomy, and emphasizing the contextualization and authenticity of assessments. GBA reflects this trend in the development of knowledge assessment. However, there still remains a significant gap in the literature regarding GBA that specifically addresses procedural knowledge assessment, with insufficient comparative research examining the efficacy of GBA versus traditional PBA from the perspective of procedural knowledge assessment. Limited exploration exists regarding the gamification design of assessment item types tailored to different knowledge categories. Particularly inadequate is the exploration of procedural knowledge assessment in emergency scenarios, especially within safety education domains, where GBA methodologies remain neglected.

In this study, participants (N = 151) were undergraduates recruited from nine different schools across the entire university, ensuring multidisciplinary representation. This included students from three major categories of disciplines within the university, mainly including engineering, liberal arts, and art and design disciplines. The participant pool included students from freshman to senior year. All the participants were randomly divided into an experimental group and a control group. The experiment was conducted using fire safety knowledge assessment as a case study. The differences in procedural knowledge between the two groups were compared using GBA and PBA, verifying the effectiveness of GBA in assessing procedural knowledge and exploring the design method for assessing procedural knowledge in fire emergency situations. The primary objective of this study is to develop knowledge assessment methods and theories tailored for the AI era based on the gamification approach, thereby fostering participants’ ability to apply what they have learned. The research results and methods of the paper are applicable not only to the assessment of procedural knowledge within fire and traffic safety domains but also to the enhancement of participants’ learning experiences. The new methods can improve emergency response capabilities and bolster the efficacy of safety education across various fields. The findings of this study can be applied to the assessment of subject knowledge, including the assessment of procedural knowledge in universities, high schools, and elementary schools, and it can also be used in the field of e-learning.

2. Related Work

2.1. Knowledge Classification

Knowledge is organized and structured information [16]. Michael Polanyi classified knowledge into explicit and tacit knowledge from a philosophical perspective. However, this classification offers little specific guidance for education and knowledge assessment, and scholars have subsequently proposed more specific classifications. A representative example is J.R. Anderson, who categorized knowledge into declarative knowledge and procedural knowledge according to the way it is represented [17]; procedural knowledge is a kind of algorithm or a set of operational steps and strategies used in a specific situation [18,19,20]. Unlike Polanyi’s classification, the classifications of scholars such as J.R. Anderson and R.E. Mayer include procedural knowledge, defined as knowledge of skills, and treat strategic knowledge as a form of procedural knowledge. We propose that procedural knowledge is characterized by a structured flow governed by specific steps and rules, emphasizing a sequential progression.

Knowledge pertaining to fire rescue, fire evacuation, and traffic safety encompasses a substantial amount of procedural knowledge, which is essential for the development of effective emergency response capabilities. Declarative knowledge is “static” narrative knowledge that mainly answers the “what”, as displayed in Table 1. Declarative knowledge emphasizes memorization, while procedural knowledge is dynamic knowledge based on the operational steps of a situation. Declarative knowledge is relatively fixed and can be expressed in words, so it can be learned and tested through text-based work [21]. Procedural knowledge, however, is more difficult to express symbolically and can only be presented through specific activities, which requires assignments to be somewhat active [22]. Because procedural knowledge is dynamic, it is best assessed through interactive and dynamic assessment methods, and GBA has this attribute.

Table 1.

Comparative analysis of declarative and procedural knowledge.

The current classification of knowledge largely neglects affective knowledge and fails to explore the relationship between procedural knowledge and affective knowledge. Affective knowledge pertains to the value and meaning associated with a given context, and it exerts a pivotal influence on the decision-making processes and selection criteria related to procedural knowledge. In the AI era, affective knowledge serves to guide AI technologies towards ethical practices and holds significant value. The classification of knowledge should emphasize the intrinsic connections among its various types: declarative knowledge forms the foundation for the application of procedural knowledge [23], while affective knowledge influences the judgment and selection of procedural knowledge. All types of knowledge thus function as an interconnected whole.

2.2. Gamification

Pelling was the first to explicitly use the term “gamification”. Deterding et al. proposed a definition of gamification as the use of game design elements in non-game contexts [24]; the definition emphasizes the use of game elements rather than the use of complete games. Furthermore, Werbach and Hunter expanded the definition to the use of game elements and game design techniques in non-game contexts [25]; this definition emphasizes the three elements of game elements, game design techniques, and non-game contexts. Werbach offers a gamification MOOC on Coursera, promoting the dissemination and application of gamification. He indicates that the flow theory serves as the psychological foundation for gamification. The flow theory, proposed by psychologist Csikszentmihalyi, describes a mental state of focused engagement, complete immersion, and enjoyment in an activity. Clear goals, immediate feedback, and balance between challenge and skill are the three conditions for achieving flow experience [26]. The flow theory has become an important theoretical foundation for gamification design. Gamification reflects the use of game elements and game thinking and is an expansion of the influence of games in real-world contexts, expanding the value of games in the social arena and focusing on their impact on reality. Game elements are not used in isolation, and different game elements work together to serve the needs of the real world, thereby forming a gamification system. The starting point of gamification originates from the needs of the real world and has a wider range of uses. Gamification design is to break the “magic circle” of games. Gamification is developed on the basis of games, and the influence of games enhances the impact of gamification. Both gamification and games focus on fun, but gamification transcends the entertainment layer and relates to the real world, focusing more on the significance of reality and the impact on the real world.

Gamification is widely applied in the field of education. Kapp applied gamification to learning and offered a comprehensive framework for implementing it [27]. He argued that gamification enhances students’ motivation and engagement in learning. Building on research into educational gamification, scholars have developed different classifications of gamification. Kapp categorized gamification into content gamification and structural gamification [27]. Content gamification refers to the direct integration of game elements into the instructional content, imbuing the learning process itself with game-like characteristics that enhance engagement, while structural gamification uses external game mechanics to incentivize learning behaviors without altering the instructional content, motivating learners to achieve predetermined objectives. Furthermore, he proposed that gamification should be designed according to different types of knowledge; this concept provides a theoretical basis for the application of gamification in knowledge assessment. However, there is a lack of more specific and systematic research focusing on gamified design for procedural knowledge. Lieberoth proposed a framework for differentiating deep gamification and shallow gamification [28]. Deep gamification embeds game mechanics into the core structure of an activity to foster intrinsic motivation in the learning process, while shallow gamification adds game elements (e.g., points, badges, and leaderboards) to non-game activities without altering their core structure, relying on extrinsic rewards and surface-level framing. Additionally, Pishchanska and Karmanova et al. have introduced a classification that encompasses light gamification [29,30], which involves using game elements in the educational process without significantly altering the original primary objective and basic mechanism.
These taxonomies elucidate the differential impacts of varying levels of gamification design on learning outcomes. Despite these different classifications, and given the diversity and flexibility of gamification, we propose that the concepts of content gamification, structural gamification, deep gamification, and light gamification can be integrated in accordance with the learning objectives. Gamification has also been applied in the field of emergency preparedness. Arinta and Emanuel provided empirical evidence for the effectiveness of gamification in enhancing community preparedness for flood emergencies [31]. Vancini et al. demonstrated that the incorporation of gamification in first aid training can lead to more effective skill acquisition and better preparedness for real-life emergency situations [32]. DiCesare et al. developed a novel gamification exercise to improve emergency medical service (EMS) performance in critical care scenarios, demonstrating its effectiveness in promoting teamwork among EMS staff [33]. These studies have recognized the value of gamification in emergency preparedness; however, there remains a lack of research on the impact of gamification design on procedural knowledge within emergency contexts, where procedural knowledge is critical to life safety. Furthermore, a persistent gap exists in research that integrates gamification with the learning and assessment of procedural knowledge for emergency preparedness.

In summary, as shown in the development timeline of gamification (Figure 1), gamification has gradually evolved into various types. Having diversified from learning to assessment, it has been widely applied in multiple fields such as education, human–computer interaction, psychology, emergency preparedness, human resources, and business, becoming an interdisciplinary domain. The concept of GBA has developed on this basis.

2.3. Gamification-Based Assessment

The development of gamification has promoted the integration of gamification and assessment. Armstrong et al. argued that the gamification of assessment involves integrating game elements into non-game contexts to enhance assessment effectiveness, and that it refers to a broader set of tools than situational judgment tests (SJTs) and badges [34]. They also emphasized the flexibility and diversity of the assessment approach. Computer technology and machine learning algorithms have given rise to adaptive gamified assessments. Zhang and Huang explored the impact of such assessments on learners in a blended learning environment and found that they can significantly improve learners’ motivation and language proficiency [35]. Swacha and Kulpa introduced a game-like online assessment tool that leverages the fear of failure to improve student engagement and reduce stress, aiming to provide a more effective and immersive assessment experience that moves beyond the shallow gamification approaches commonly found in existing educational systems [36]. Mislevy et al. state that game-based assessment refers to the explicit use of information from the game or surrounding activities to ground inferences about players’ capabilities [37]. The term “Gamification-Based Assessment” was used by Sudsom and Phongsatha and by Pitoyo et al. Sudsom and Phongsatha compared gamification-based assessment and formative-based assessment in terms of motivation for learning and memory retention in a training setting [10]. Blaik and Mujcic argued that game-based assessment is a more immersive type of assessment [38]. Pitoyo et al. stated that gamification-based assessment had a positive washback effect on students’ learning [39]. However, these studies did not give a clear definition of the term “Gamification-Based Assessment”.
We propose that gamification-based assessment is a new approach that integrates game elements into traditional assessment frameworks without changing the core tasks and objectives of the assessment itself, with the core content being the assessment material; this kind of approach represents the application of gamification theory in the field of assessment. The distinction between gamification-based assessment and game-based assessment primarily arises from the difference between gamification and games. The GBA that we focus on in our research refers to gamification-based assessment.

GBA is the application of game elements, digital game technology, and gamification thinking in the assessment field. The game elements added to GBA can stimulate the interest of the participants, increase the attractiveness of the assessment, and improve the motivation of users to participate. GBA does not refer to an independent assessment game; rather, it adds game elements, game thinking, and game mechanisms to existing assessment techniques to improve the effectiveness of conventional assessment. Because of their obvious “test” format, conventional talent assessment techniques are prone to inducing test anxiety, which can significantly affect assessment results by interfering with the subject’s recall and cognitive performance, impairing concentration and lowering test performance, and thus leading to an underestimation of the subject’s true capabilities. Lowman argued that gamification assessment technology was an innovative tool for identifying, attracting, and retaining talent in the context of the internet era, which provided new ideas for collecting information about individual potential and quality and improving organizational attractiveness and employee retention [40].

Figure 1.

The development timeline of gamification [24,27,28,34,35,39,41].

As a new form of assessment, GBA has garnered academic recognition for its multifaceted benefits, and some well-known enterprises have explored its use in talent recruitment [41,42,43,44]. Deloitte, PwC, and Accenture have used GBA to select candidates for interviews. Chinese companies such as Tencent, ByteDance, and Trip have also begun to use GBA in their talent recruitment processes. These companies recognize the value of GBA and apply it to assess character, behavior, and personality in a relaxed state. These assessments are based on light gamification, embedding game elements into conventional test questions to form question-based games, with multiple-choice questions as the main question type. However, their main purpose is not to assess knowledge: they lack a focus on knowledge itself, and their outcomes have no direct correlation with the scores of the answers. In recent years, scholars have also studied the use of Kahoot and Quizizz to conduct GBA in schools. Wang integrated game elements such as points and leaderboards into Kahoot, compared students’ academic performance before and after gamification, and found that the incorporation of competitive game elements generally increased students’ motivation and engagement [45]. Zhang and Huang applied Quizizz to explore the impact of adaptive gamified assessment on learners in blended learning [35]. Maryo applied Quizizz to the assessment of English learning [46].

The above analysis demonstrates that GBA has been applied extensively in corporate and educational settings. Scholars from various academic disciplines have investigated the value of gamification and GBA from multiple perspectives (Table 2). While Kapp recognized the necessity and significance of differentiated gamification design based on various knowledge types, providing a theoretical basis for the application of gamification in knowledge assessment, a research gap remains in applying gamification to the assessment of procedural knowledge. Armstrong et al. primarily studied GBA for talent recruitment in human resources, where evaluation centers on character, behavior, and personality dimensions and neglects a systematic examination of knowledge content.

Table 2.

Literature research on gamification and GBA.

In summary, the current research gaps fall into the following three points: (1) There is a lack of focused research on GBA targeting procedural knowledge, with insufficient comparative research examining the efficacy of GBA versus traditional PBA from the perspective of procedural knowledge assessment. (2) Limited exploration exists regarding the gamification design of assessment item types tailored to different knowledge categories and knowledge content. (3) The exploration of procedural knowledge assessment in emergency scenarios is particularly inadequate, especially within safety education domains such as fire safety, where GBA methodologies are insufficiently applied. Addressing these gaps constitutes the primary research objective of this paper.

3. Case Study: GBA for Procedural Knowledge in Firefighting Scenarios

3.1. Research Hypothesis

The main research hypothesis of this paper is that GBA is a more effective approach to knowledge assessment than conventional PBA for evaluating procedural knowledge, especially procedural knowledge for emergencies such as fire rescue and fire escape, and that GBA can significantly enhance students’ motivation and engagement in the assessment of procedural knowledge. The theoretical foundation of this hypothesis is the flow theory, which emphasizes that immediate feedback is a critical condition for achieving a state of flow [26]. This flow experience can positively affect users’ motivation [48]. GBA provides stronger immediate feedback than PBA, which is more conducive to helping participants enter a state of flow. Consequently, it enhances participants’ motivation and engagement, ultimately improving the overall effectiveness of the assessment. Based on the research hypothesis, we chose the GBA of procedural knowledge in firefighting scenarios as a research case. Fire safety knowledge contains a large amount of procedural knowledge, which is critical during fire emergencies. The ability to master and apply this knowledge is closely related to life safety, which makes it a representative case. Procedural knowledge is key to cultivating emergency response ability, yet conventional PBA makes it difficult to assess participants’ emergency response and improvisation abilities in firefighting scenarios. GBA helps to make up for the limitations of PBA, to enhance participants’ ability to use procedural knowledge in emergency situations, and to provide a new way to assess procedural knowledge.

3.2. Research Methodology

3.2.1. Participants

A total of 151 participants were recruited for this study. They were undergraduates from nine different schools at Guangzhou Maritime University, Guangdong province, China. In order to improve multidisciplinary representation, the sample comprised students from three major categories of disciplines within the university, which mainly included engineering, liberal arts, and art and design disciplines. The participant pool included students from freshman to senior year of the university, aged between 18 and 23 years, and the mean age was 20 years (SD = 1.2). All participants had no prior experience with the GBA system. They were randomly assigned to the experimental group (N = 77) and the control group (N = 74). Participants received gifts of stationery and books as compensation for their time and effort. Written informed consent was obtained from participants prior to the experiment, and the information of the participants was anonymized to safeguard their privacy. The research protocol was reviewed and approved by the university’s Human Subjects Committee (approval number: GDUTXS20250012).
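The text does not state how the random assignment was carried out. As a minimal sketch, assuming a seeded shuffle followed by a fixed split, the 77/74 allocation could be reproduced as follows; the participant IDs, the seed, and the function name `assign_groups` are hypothetical.

```python
import random


def assign_groups(participant_ids, n_experimental, seed=0):
    """Shuffle IDs with a fixed seed, then split them into two groups."""
    rng = random.Random(seed)          # fixed seed (assumed) for reproducibility
    shuffled = list(participant_ids)   # copy so the input list is not modified
    rng.shuffle(shuffled)
    return shuffled[:n_experimental], shuffled[n_experimental:]


# 151 hypothetical participant IDs: P001 ... P151
ids = [f"P{i:03d}" for i in range(1, 152)]
experimental, control = assign_groups(ids, n_experimental=77)
print(len(experimental), len(control))
```

Fixing the seed makes the allocation auditable; any other documented randomization procedure would serve the same purpose.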

3.2.2. Procedure

Based on the research objective and hypothesis of this study, we systematically studied five representative and professional fire safety textbooks and drew fire safety knowledge maps by refining, classifying, and summarizing the fire safety knowledge into four major categories, namely fire safety awareness, fire prevention knowledge, fire extinguishing knowledge, and escape knowledge. Then, these four categories of knowledge were assessed separately.

Based on the study of fire safety textbooks and the fire safety knowledge maps, we constructed both PBA and GBA question banks using computer software. Thirty questions were selected for each assessment, so that the number of questions and the knowledge points covered by the PBA and GBA were kept consistent. Three experts in the field of fire protection were invited to check and evaluate all the questions in the PBA and GBA to ensure the professional accuracy of the content. We increased the proportion of procedural knowledge, improved the rationality of knowledge coverage, designed questions based on knowledge types, and visualized the knowledge content of the questions in the PBA. We subsequently designed the GBA using the BODOUDOU platform developed by CODEAGES, China, which serves as a gamification design platform and system, incorporating game thinking and game elements. The main game elements include points, badges, leaderboards, progress bars, countdowns, and pictures, which together form an organic gamification system. The types of questions were designed based on the study of fire safety knowledge, and the GBA was divided into four major levels: fire awareness, fire prevention knowledge, firefighting knowledge, and escape knowledge. Before the formal experiment, 10 undergraduates were invited to pilot the assessment and check whether it contained semantic problems. In the experiment, the students were randomly divided into the experimental group (77 students) and the control group (74 students), as presented in Figure 2.

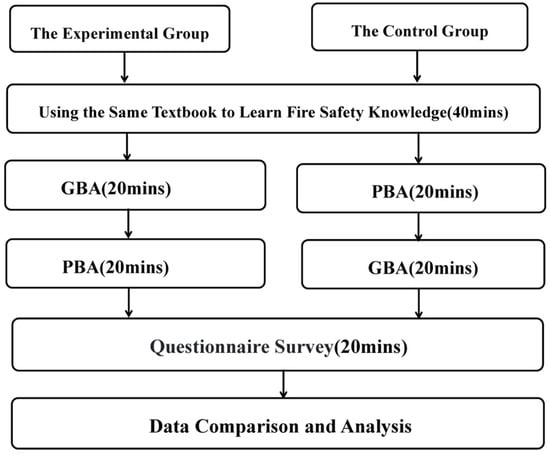

Figure 2.

Overall experimental flow chart.

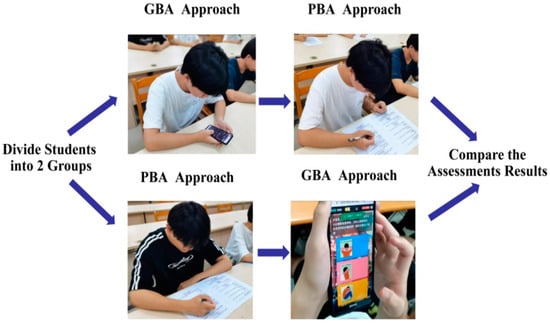

The two groups of participants first used the same fire safety textbook to learn fire safety knowledge, so that both groups started from the same level of fire safety knowledge. A crossover design was then used: the experimental group was assessed first with the GBA and then with the PBA, while the control group was assessed first with the PBA and then with the GBA. The participants took the GBA on their mobile phones, as shown in Figure 3. After both groups had been assessed, a questionnaire was administered to all the participants to investigate their experiences of taking part in the GBA and PBA. The questionnaire consisted of 29 questions: 24 five-point Likert scale questions and 5 questions on demographic variables (see Appendix A). A total of 151 questionnaires were sent out; after eliminating questionnaires with very short response times and those with the same option selected for every item, 120 valid questionnaires remained. A statistical analysis of the assessment scores and questionnaire data was carried out using SPSS 29, and graphs were plotted using Origin. In-depth interviews were conducted with 25 students after the experiment to further understand their experiences.
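The questionnaire screening described above can be sketched as a simple filter. The 60-second threshold, the `Response` record, and the sample data are assumptions for illustration only; the cut-off actually used is not reported.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Response:
    respondent_id: str          # hypothetical identifier
    seconds_taken: float        # total time spent on the questionnaire
    likert_answers: List[int]   # answers to the 24 scale questions, each 1..5


def is_valid(response: Response, min_seconds: float = 60.0) -> bool:
    """Reject responses completed too quickly or with identical answers throughout."""
    too_fast = response.seconds_taken < min_seconds
    straight_lined = len(set(response.likert_answers)) == 1
    return not (too_fast or straight_lined)


# Illustrative data only, not taken from the study.
responses = [
    Response("R1", 240.0, [4, 5, 3, 4] * 6),
    Response("R2", 25.0, [4, 5, 3, 4] * 6),   # answered too fast
    Response("R3", 300.0, [3] * 24),          # same option for every item
]
valid = [r for r in responses if is_valid(r)]
print([r.respondent_id for r in valid])
```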

Figure 3.

Subgroup cross-assessment experiments.

3.3. Research Results

Both GBA and PBA use a percentage scoring system, with scores of 90 or above considered excellent, 80 or above considered good, 70 or above considered average, and 60 or above considered passing. Table 3 shows that the experimental group achieved an average score of 73.5 in the PBA, with an excellent rate of 35% and a pass rate of 87%. In contrast, the control group’s average PBA score was 58.7, with no excellent scores and a 53% pass rate.
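The scoring bands above translate directly into a threshold lookup. The sketch below is illustrative: the label "failing" for scores under 60 and the helper `rate` are assumptions, and the sample scores are not study data.

```python
def grade(score: float) -> str:
    """Map a percentage score to the band described in the text."""
    if score >= 90:
        return "excellent"
    if score >= 80:
        return "good"
    if score >= 70:
        return "average"
    if score >= 60:
        return "passing"
    return "failing"  # assumed label; the text does not name this band


def rate(scores, threshold: float) -> float:
    """Percentage of scores at or above a threshold (e.g., 60 for a pass rate)."""
    return 100.0 * sum(s >= threshold for s in scores) / len(scores)


sample = [95, 82, 74, 61, 55]  # illustrative scores only
print([grade(s) for s in sample])
print(rate(sample, 60))
```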

Table 3.

Comparison of fire safety knowledge assessment scores.

The experimental group’s average PBA score was 14.8 points higher than the control group’s, with an excellent and good rate that was 35 percentage points higher and a pass rate that was 34 percentage points higher, indicating that prior participation in the GBA improved performance in the PBA. The experimental group’s PBA scores were also higher than the control group’s GBA scores, by 7.4 points on average. Within the experimental group, the average PBA score was 13.5 points higher than the average GBA score; within the control group, the average GBA score was 7.3 points higher than the average PBA score. The gain between the first and second assessment was therefore larger in the experimental group than in the control group.
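The differences quoted above can be cross-checked from the two PBA means given in the text. In the sketch below, the GBA means are not quoted from Table 3; they are back-calculated from the stated within-group differences, so a derived value may differ from a reported one by about 0.1 because the published figures are rounded.

```python
# Means quoted in the text (PBA only).
exp_pba = 73.5   # experimental group, PBA
ctrl_pba = 58.7  # control group, PBA

# Within-group differences quoted in the text.
exp_pba_minus_gba = 13.5   # experimental group: PBA mean minus GBA mean
ctrl_gba_minus_pba = 7.3   # control group: GBA mean minus PBA mean

# Back-calculated GBA means (derived here, not quoted from Table 3).
exp_gba = round(exp_pba - exp_pba_minus_gba, 1)
ctrl_gba = round(ctrl_pba + ctrl_gba_minus_pba, 1)

# Between-group comparisons discussed in the text.
between_group_pba_gap = round(exp_pba - ctrl_pba, 1)
exp_pba_vs_ctrl_gba = round(exp_pba - ctrl_gba, 1)

if __name__ == "__main__":
    print("implied experimental GBA mean:", exp_gba)
    print("implied control GBA mean:", ctrl_gba)
    print("PBA gap between groups:", between_group_pba_gap)
    print("experimental PBA minus control GBA:", exp_pba_vs_ctrl_gba)
```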

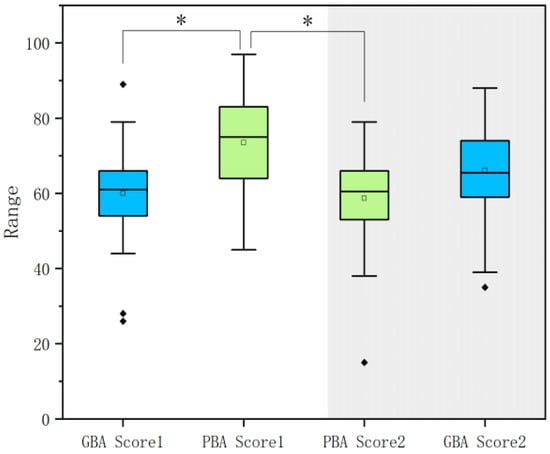

We plotted a box plot of the fire safety knowledge assessment scores of the experimental and control groups, as depicted in Figure 4. GBA Score1 and PBA Score1 are the two scores of the experimental group, and GBA Score2 and PBA Score2 (gray background) are the two scores of the control group. Figure 4 also labels the results of the t-tests performed on each group’s scores. GBA Score1 is significantly different from PBA Score1 (p < 0.001), and there is a significant difference between PBA Score1 and PBA Score2 (p < 0.001). The box plot further indicates a significant improvement in PBA performance after taking part in the GBA, thus validating the research hypothesis.
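The text does not state which t-test variant was used. Under the crossover design, one plausible reading is a paired test for the within-group comparison (GBA Score1 vs. PBA Score1) and an independent-samples test for the between-group comparison (PBA Score1 vs. PBA Score2). The sketch below computes only the test statistic and degrees of freedom for both cases, using Welch’s form for the independent test as an assumption; the scores are made up for illustration, and a p-value would additionally require the t distribution from a statistics package.

```python
import math
import statistics


def paired_t(first: list[float], second: list[float]) -> tuple[float, int]:
    """Paired t statistic and degrees of freedom for second minus first."""
    if len(first) != len(second):
        raise ValueError("paired samples must have equal length")
    diffs = [b - a for a, b in zip(first, second)]
    n = len(diffs)
    standard_error = statistics.stdev(diffs) / math.sqrt(n)
    return statistics.mean(diffs) / standard_error, n - 1


def welch_t(x: list[float], y: list[float]) -> tuple[float, float]:
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    nx, ny = len(x), len(y)
    vx, vy = statistics.variance(x) / nx, statistics.variance(y) / ny
    t = (statistics.mean(x) - statistics.mean(y)) / math.sqrt(vx + vy)
    df = (vx + vy) ** 2 / (vx ** 2 / (nx - 1) + vy ** 2 / (ny - 1))
    return t, df


if __name__ == "__main__":
    # Hypothetical scores, not the study data.
    gba_score1 = [55, 62, 58, 65, 60]
    pba_score1 = [70, 75, 72, 80, 71]
    pba_score2 = [56, 61, 59, 63, 54]
    print("within group:", paired_t(gba_score1, pba_score1))
    print("between groups:", welch_t(pba_score1, pba_score2))
```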

Figure 4.

Analysis of the assessment scores of the experimental and control groups. Note: * p < 0.05.

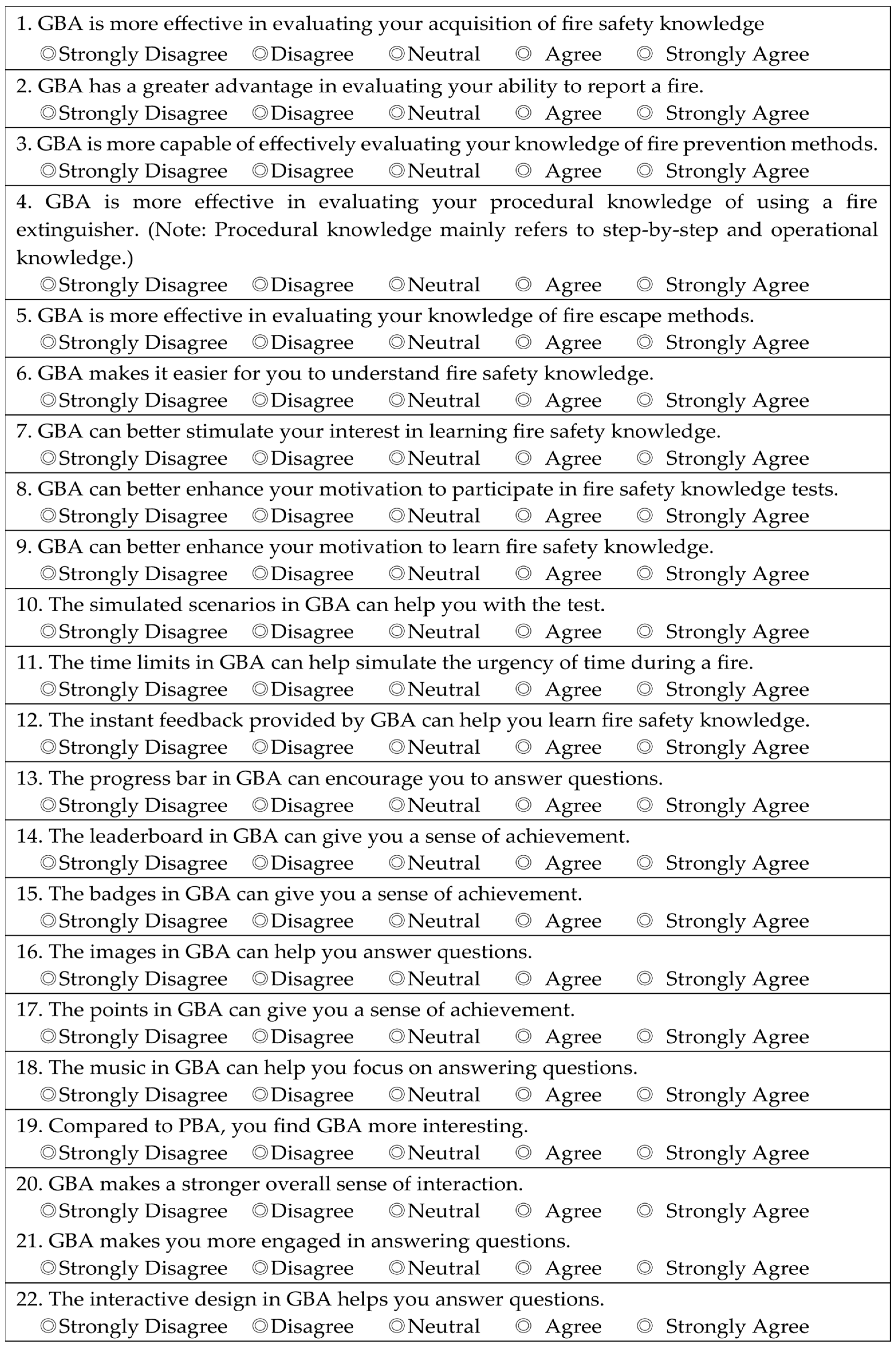

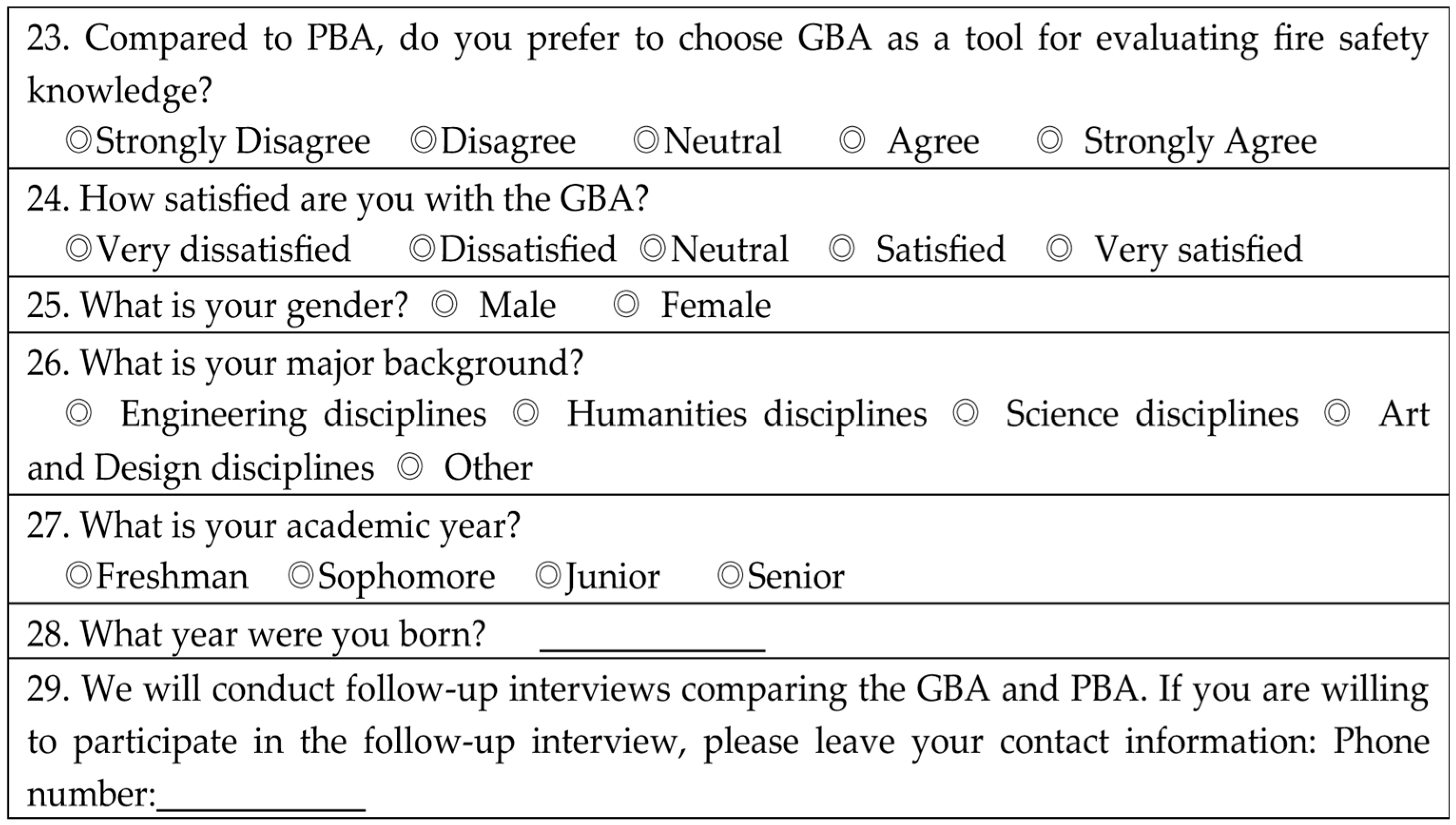

After completing the above experiment, we conducted a questionnaire survey to investigate the participants’ experiences and evaluations of the GBA and PBA. First, the reliability and validity of the questionnaire were tested, as shown in Table 4. The Cronbach’s alpha coefficient of the questionnaire was 0.85, indicating good reliability and a high degree of internal consistency. The KMO value was 0.922, indicating adequate sampling, and Bartlett’s test of sphericity was significant (p < 0.001); together, these two indicators show that the data are suitable for factor analysis. Exploratory factor analysis yielded four dimensions, which is consistent with the dimensions preset in the questionnaire: knowledge learning, game elements, motivation, and experiences. The knowledge learning dimension includes step knowledge, strategy knowledge, cognitive load, and application ability. The game elements are time, feedback, points, badges, leaderboards, progress bars, pictures, music, interactions, and contexts; the design of the GBA incorporates these ten game elements, which together constitute the gamification system. Time is the answer time for each question, feedback is the immediate feedback on the respondents’ answers in the GBA, picture is the visualization design for knowledge in the GBA, music mainly refers to the background music in the GBA, and interaction refers to the software-based human–computer interaction design in the GBA. Motivation is mainly categorized into the motivation to participate in the firefighting knowledge test and the motivation to learn firefighting knowledge; experiences include satisfaction, fun, and interest. We statistically analyzed the 120 valid questionnaires, and Table 5 presents the average scores and standard deviations of the 24 scale questions.
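For readers unfamiliar with the reliability coefficient reported above, Cronbach’s alpha for k items is k/(k − 1) multiplied by one minus the ratio of the summed item variances to the variance of the respondents’ total scores. The sketch below implements this standard formula; the function name and the small response matrix are illustrative and are not the study data (the study’s value was computed in SPSS).

```python
import statistics


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items matrix.

    responses[r][i] is respondent r's answer to item i.
    """
    k = len(responses[0])
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item across respondents.
    item_variances = [statistics.variance(col) for col in zip(*responses)]
    # Sample variance of each respondent's total score.
    total_variance = statistics.variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


if __name__ == "__main__":
    # Hypothetical five-point Likert answers: 5 respondents, 4 items.
    answers = [
        [5, 4, 5, 4],
        [4, 4, 4, 3],
        [3, 3, 4, 3],
        [5, 5, 5, 5],
        [2, 3, 2, 3],
    ]
    print(round(cronbach_alpha(answers), 3))
```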

Table 4.

Questionnaire reliability, KMO, and Bartlett’s test of sphericity.

Table 5.

Statistical data of scores for each question in the questionnaire.

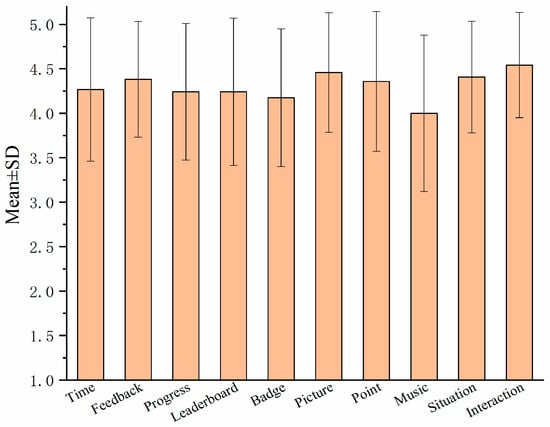

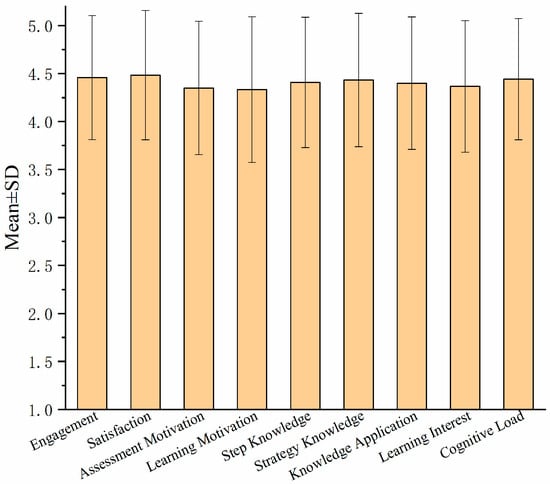

To further analyze the scores of each dimension, we plotted bar charts, as shown in Figures 5 and 6, where the vertical axis shows the average score given by the respondents and the error bars represent the standard deviation (SD). Figure 5 shows that, among the game elements, interaction received the highest score (4.542 ± 0.5926), followed by pictures (4.458 ± 0.6723) and immediate feedback (4.383 ± 0.6506). Music received the lowest score (4.0 ± 0.8793).
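The mean ± SD values above summarize the five-point ratings for each game element. A minimal sketch of that summary and ranking is shown below; the ratings are invented for illustration, and the use of the sample standard deviation is an assumption, since the text does not say whether the sample or population form was reported.

```python
import statistics


def summarize(ratings: dict[str, list[int]]) -> list[tuple[str, float, float]]:
    """Return (element, mean, sample SD) tuples sorted by mean, highest first."""
    rows = [
        (element, statistics.mean(values), statistics.stdev(values))
        for element, values in ratings.items()
    ]
    return sorted(rows, key=lambda row: row[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical ratings on a 1-5 scale, not the study data.
    example = {
        "music": [4, 3, 5, 4, 4],
        "interaction": [5, 5, 4, 5, 4],
        "feedback": [4, 5, 4, 4, 5],
    }
    for element, mean, sd in summarize(example):
        print(f"{element}: {mean:.3f} ± {sd:.4f}")
```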

Figure 5.

Scores for each game element in the questionnaire.

Figure 6.

Scores on the knowledge learning dimension and the experiential dimension.

Figure 6 presents the scores for the knowledge learning and experience dimensions. The knowledge learning dimension includes variables such as cognitive load, and the experience dimension includes variables such as satisfaction, which refers to the participants’ satisfaction with the GBA. As shown in Figure 6, satisfaction received the highest score (4.483 ± 0.6734) and engagement the second highest (4.458 ± 0.6469), which indicates that the participants’ satisfaction and engagement with the GBA were high.

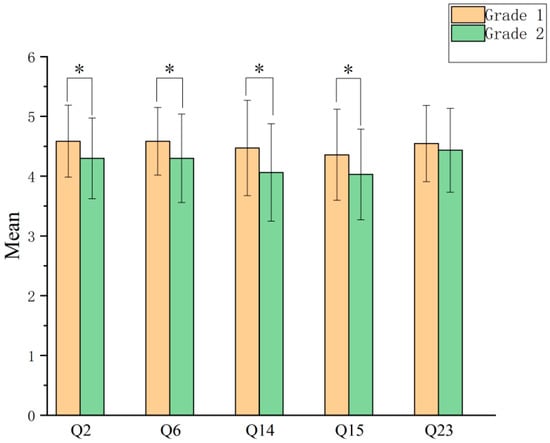

This study conducted a one-way ANOVA on the variable of grade level, as depicted in Figure 7. There were significant differences in the responses to questions 2, 6, 14, and 15 between first-year and second-year students. Specifically, first-year students had higher average scores for questions 2, 6, 14, and 15, indicating that they recognized the GBA as being more effective in learning fire safety knowledge and improving the application of that knowledge. They also felt that the leaderboards and badges in the GBA enhanced their sense of self-efficacy.
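As a reminder of what the one-way ANOVA computes, the F statistic is the ratio of the between-group mean square to the within-group mean square. The sketch below implements this standard calculation for any number of groups; the grade-level ratings are hypothetical, and the p-value (which SPSS reports) would additionally require the F distribution.

```python
import statistics


def one_way_anova(groups: list[list[float]]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    # Variation of group means around the grand mean.
    ss_between = sum(
        len(g) * (statistics.mean(g) - grand_mean) ** 2 for g in groups
    )
    # Variation of observations around their own group mean.
    ss_within = sum(
        sum((x - statistics.mean(g)) ** 2 for x in g) for g in groups
    )
    df_between, df_within = k - 1, n_total - k
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within


if __name__ == "__main__":
    # Hypothetical answers to one questionnaire item, by grade level.
    first_year = [5, 4, 5, 5, 4]
    second_year = [4, 3, 4, 4, 3]
    print(one_way_anova([first_year, second_year]))
```

With only two groups, as in a first-year versus second-year comparison, F equals the square of the pooled-variance t statistic, so the two tests lead to the same conclusion.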

Figure 7.

One-way ANOVA for grade level. Note: * p < 0.05.

4. Discussion

The primary objective of this study was to verify the validity of the GBA for assessing procedural knowledge and to compensate for the shortcomings of the PBA through a gamification approach. Werbach et al. argued that immediate feedback is a core element of gamification [25,49], and it is a key feature of the GBA. Participants receive immediate confirmation of whether each answer is correct, and the progress bar lets them track the number of remaining questions in real time. Immediate feedback helps participants enter a state of flow, and upon completing all tasks in the GBA, students immediately receive their scores and rankings, which helps maintain the flow experience [50] and enhances both concentration and learning outcomes [51]. Conversely, the PBA lacks immediate feedback, which students perceive as inefficient and tedious, and this impedes effective knowledge acquisition during the assessment. The leaderboard in the GBA ranks the whole class openly and in real time, which creates a sense of competition: the top ten students are displayed publicly, and the top three are awarded virtual prizes. Similarly to Lopez-Barreiro’s research, we found that leaderboards and prizes stimulate students’ competitive spirit and give players a stronger sense of achievement, which further enhances participation [52]. These findings are consistent with the research objective of this study.
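The leaderboard rule described above (top ten shown publicly, top three awarded virtual prizes) can be sketched in a few lines. This is an illustrative reconstruction, not the authors’ software: the function name, the dictionary layout, and the alphabetical tie-break are assumptions.

```python
def leaderboard(
    scores: dict[str, float], shown: int = 10, prized: int = 3
) -> list[dict]:
    """Rank players by score and flag the prize winners.

    Ties are broken alphabetically by player name (an assumption;
    the paper does not describe tie handling).
    """
    ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return [
        {"rank": rank, "player": name, "score": score, "prize": rank <= prized}
        for rank, (name, score) in enumerate(ranked[:shown], start=1)
    ]


if __name__ == "__main__":
    # Hypothetical class scores.
    class_scores = {"Ann": 90, "Bo": 95, "Cy": 80, "Di": 85, "Eli": 70}
    for entry in leaderboard(class_scores):
        print(entry)
```

Recomputing the list each time a participant finishes would give the real-time ranking the text describes.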

Immediate feedback is also reflected in the time constraints within the GBA. In authentic fire rescue and escape scenarios, timing is critical for life safety. To reflect real-life emergency situations, every question in the GBA enforces a strict time limit, and participants lose the chance to answer a question once the limit is exceeded. This approach is more conducive to cultivating students’ emergency preparedness skills, which aligns with the perspective discussed by Vancini et al. [32] regarding gamification in emergency preparedness. In contrast, traditional PBA has no time limit for individual questions, so participants can repeatedly modify their answers; this does not match the real conditions of fire extinguishing and escape and makes it difficult to cultivate students’ emergency response ability. PBA also cannot evaluate response speed for individual questions, whereas the GBA scoring system integrates both response time and accuracy, thereby reflecting answering speed as well as correctness.

Visualization design is an important technique and strategy in gamification design. In the GBA, procedural knowledge is visualized through software-based design: each procedural step is decomposed and paired with pictures, which makes the knowledge easier to understand and supports the learning of firefighting knowledge during the assessment. For example, performing cardiopulmonary resuscitation during fire escape involves a series of complex steps. Breaking down each step visually, from judging awareness to chest compressions and artificial respiration, simplifies the procedure so that students can grasp complex procedural knowledge at a glance, which reduces their cognitive load. In contrast, conventional PBA relies on written expression, which is less effective in conveying complex procedural knowledge and increases cognitive demands when a question requires several procedural steps in its answer.
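The time-limited scoring described above (a per-question limit, with the score reflecting both accuracy and response time) could take many forms, and the paper does not give its formula. The sketch below shows one hypothetical rule: a timed-out or wrong answer earns nothing, and a correct answer earns a base share plus a bonus proportional to the time remaining. The function name, the 100-point base, and the 0.5 speed weight are all assumptions.

```python
def question_points(
    correct: bool,
    seconds_used: float,
    time_limit: float,
    base_points: float = 100.0,
    speed_weight: float = 0.5,
) -> float:
    """Hypothetical scoring rule combining accuracy and response time."""
    if time_limit <= 0:
        raise ValueError("time_limit must be positive")
    if seconds_used > time_limit:
        return 0.0  # the chance to answer is lost once the limit is exceeded
    if not correct:
        return 0.0
    remaining_fraction = 1.0 - seconds_used / time_limit
    # A fixed share for correctness plus a share that rewards speed.
    return base_points * ((1.0 - speed_weight) + speed_weight * remaining_fraction)


if __name__ == "__main__":
    print(question_points(True, 5.0, 20.0))    # fast and correct
    print(question_points(True, 19.0, 20.0))   # slow but correct
    print(question_points(True, 21.0, 20.0))   # timed out
    print(question_points(False, 5.0, 20.0))   # wrong answer
```

Setting `speed_weight` to 0 reduces the rule to accuracy-only scoring with a cutoff, which makes the trade-off between speed and correctness explicit.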

Confucius famously stated “The one who knows is not as good as the one who likes, and the one who likes is not as good as the one who enjoys”. Enjoyment is a fundamental characteristic of digital games. Elements such as points, badges, leaderboards, and progress bars collectively enhance the enjoyment of the GBA. This heightened enjoyment helps to sustain motivation, consistent with the findings of Zhang, Huang, Katinka, and Yang [35,53,54]. We conducted in-depth interviews with experts responsible for fire education in universities and found that universities need to conduct fire safety knowledge assessments almost every year, and that conventional assessments such as the PBA are commonly used. However, participants are reluctant to take part in such assessments and even resist them, so the lack of motivation is a key issue that hinders the advancement of fire safety education. Compared with the research of Sudsom and Phongsatha on the impact of GBA on learning motivation [10], this study further finds that the GBA improves both assessment motivation and learning motivation. Figure 5 illustrates that interaction achieved the highest score; interactivity is an essential characteristic of games [55] and a key feature that distinguishes the GBA from the PBA. In the GBA, participants interact with the digital game interface on their mobile devices by using their fingers to sort pictures and tap options.

Based on this interactive approach, this study transforms traditional sorting questions into gamified sequencing items and questions through gamification design. These are more appropriate for assessing procedural knowledge than the conventional choice questions of the PBA and are especially suitable for procedural knowledge that contains multiple steps. For instance, the usage steps for fire extinguishers, fire hydrants, and smoke masks require three or more sequential actions. Participants in the GBA pass the level by dragging the pictures that represent these actions into the correct order with their fingers, which enhances the interactivity of the knowledge assessment. We conducted in-depth interviews with 25 students and found that conventional PBA is commonly perceived as synonymous with “examination”, leading to heightened anxiety among students. In contrast, participation in the GBA redefines students’ roles as players, framing knowledge assessment as an engaging game rather than a formal examination, thus reducing examination anxiety. This validates the perspectives on reducing test anxiety articulated by Swacha, Kulpa, and Pitoyo [36,47]. Questionnaire data indicate that approximately 96% of students express a preference for the GBA. The experimental group engaged with the GBA before participating in the PBA. The GBA’s immediate feedback, visual design, gamified elements, enjoyment factors, and interactive frameworks collectively enhanced knowledge acquisition, particularly in procedural comprehension, thereby facilitating the integration of assessment and learning. Therefore, as evidenced in Figure 4, the experimental group’s PBA scores significantly surpass those of the control group, corroborating our research hypothesis. Furthermore, the one-way ANOVA revealed that first-year students exhibited the highest satisfaction levels with the GBA, suggesting that fire safety education and the application of the GBA should begin in the first year of university.
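The gamified sequencing item described above (arranging pictures of the actions into the correct order to pass a level) amounts to comparing a submitted order with a reference order. The sketch below is illustrative only: the function names are hypothetical, and the four steps follow the widely taught pull, aim, squeeze, sweep sequence for a portable extinguisher rather than the item bank used in the study, which the paper does not list.

```python
from typing import Optional, Sequence


def passes_level(submitted: Sequence[str], correct: Sequence[str]) -> bool:
    """The level is passed only if every step is in the right place."""
    return list(submitted) == list(correct)


def first_wrong_position(
    submitted: Sequence[str], correct: Sequence[str]
) -> Optional[int]:
    """Index of the first misplaced step, or None if the order is right.

    Useful for immediate feedback on where the sequence went wrong.
    """
    for index, (got, expected) in enumerate(zip(submitted, correct)):
        if got != expected:
            return index
    if len(submitted) != len(correct):
        return min(len(submitted), len(correct))
    return None


if __name__ == "__main__":
    # Illustrative step labels, not the study's actual item content.
    correct_order = ["pull_pin", "aim_at_base", "squeeze_handle", "sweep_side_to_side"]
    attempt = ["pull_pin", "squeeze_handle", "aim_at_base", "sweep_side_to_side"]
    print(passes_level(attempt, correct_order))
    print(first_wrong_position(attempt, correct_order))
```

An all-or-nothing check matches the pass-the-level framing in the text; a partial-credit variant would be a design choice beyond what the paper describes.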

In contrast to Kapp’s research, this study not only proposes the design of gamification based on distinct knowledge types but also extends its application by exploring how to gamify the assessment of procedural knowledge. The gamification framework developed in this research incorporates Kapp’s concepts of content gamification and structural gamification [27]. For instance, customized levels were designed according to specific fire safety knowledge content, and diverse question types were developed based on knowledge categorization. This approach revealed that gamified sequencing items and questions are more effective than the multiple-choice questions of traditional PBA in evaluating procedural knowledge. Furthermore, this study exemplifies the implementation of the light gamification concept in Pishchanska et al.’s study [29], which seeks to balance educational rigor and entertainment value while preserving core assessment objectives and content integrity. The findings validate the practical utility of light gamification and demonstrate that GBA can strategically integrate content gamification, structural gamification, and light gamification principles according to specific assessment goals. The main difficulty in designing a GBA is coordinating the relationship between knowledge and game elements: the focus should remain on the knowledge content, with the game elements kept under moderate control. Deep gamification may weaken the knowledge component, and a lighter approach is more suitable for knowledge assessments with a larger number of questions and knowledge points. This study thus corroborates Armstrong’s assertions regarding the inherent flexibility and multidimensional adaptability of GBA [34].

5. Conclusions

The experimental results of this study indicate that the score increase in the experimental group was larger than that in the control group. After participating in the GBA, the rate of participants achieving excellent and good scores increased by 34% in the experimental group, which demonstrates the effectiveness of the GBA. The analysis of the questionnaire completed by 120 participants reveals that the three highest-scoring game elements are, in order, interactivity (4.542 ± 0.5926), picture (4.458 ± 0.6723), and immediate feedback (4.383 ± 0.6506). The interviews with participants further indicated that satisfaction and engagement levels in the GBA are higher than those in the PBA, and that participants widely perceive the gamified sequencing items and questions in the GBA as more suitable for assessing procedural knowledge than the multiple-choice questions in the PBA. Together, these results validate the main hypothesis of this study.

Therefore, GBA is an innovative assessment approach that is particularly advantageous for evaluating procedural knowledge in emergency contexts such as firefighting, and it addresses the limitations of PBA in this regard. PBA allows multiple revisions of responses, whereas GBA imposes a time constraint on each task and prohibits answer modifications, thereby enhancing real-time feedback mechanisms. This framework mirrors real-life scenarios more closely than PBA, particularly in its capacity to assess how participants apply procedural knowledge. Because procedural knowledge in emergency scenarios directly relates to life safety, it is especially well suited to assessment by the GBA. The new approach can serve as a valuable complement to conventional assessment methodologies, and the findings of this research can be applied to the assessment of procedural knowledge in emergency situations, offering significant value for safety education.

Moreover, the main objective of knowledge assessment is not only to assess knowledge but also to enhance learning, thereby improving the overall learning outcomes. GBA can effectively promote the integration of assessment and learning. The gamification approach facilitated participants’ learning of procedural knowledge. The importance of procedural knowledge is more evident in emergency situations related to firefighting. In the AI era, the significance of memorizing declarative knowledge has decreased, whereas the importance of procedural knowledge has increased. GBA remains a new assessment approach, and there is still a certain technical threshold for educators. With the rapid advancement of computer science in the AI era, computer software will lower the technical barriers of GBA; this progress is expected to further enhance the sense of interaction and immersion in the assessment. As a result, GBA is anticipated to be an innovative assessment approach for procedural knowledge in the AI era.

However, this study has the following limitations. First, the duration of the experiment was short; future work will track students’ learning outcomes by re-evaluating the same cohort after a certain period in order to validate the durability of the GBA’s effectiveness. Second, the sample size was limited; future research will expand the sample to enhance the generalizability of the findings. Future plans also include applying the methodology of this paper to the assessment of procedural knowledge related to traffic safety. Further systematic research is required to elucidate new pathways for the assessment of safety knowledge and safety education.

Author Contributions

Writing—original draft, K.C.; methodology, experiment, literature search, data collection, and data analysis, K.C.; software and formal analysis, S.Y.; conceptualization, study design, and supervision, D.L.; investigation and data analysis, Z.C. and H.A.; visualization and investigation, Y.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Humanities and Social Sciences Planning Fund Project of the Ministry of Education of China, grant number 23YJA760014.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Institutional Review Board of Guangdong University of Technology (protocol code GDUTXS20250012, 1st December).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data used in this study are available upon request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Survey on User Experience in Gamification-Based Assessment of Fire Safety Knowledge (Questionnaire)

Dear Student,

This questionnaire aims to investigate your experience with Gamification-Based Assessment (GBA) of fire safety knowledge compared to Paper-Based Assessment (PBA). It does not involve any personal privacy. Your responses will be strictly confidential and used solely for academic research purposes. To ensure the validity of the data, please complete the questionnaire independently and provide honest feedback based on your true experiences. Thank you for your cooperation!

Note: The following multiple-choice questions are all single-choice questions.

Note: This questionnaire is published through the Questionnaire Star digital platform, and students fill it out on their mobile devices.

References

1. Ruis, L.; Mozes, M.; Bae, J.; Kamalakara, S.R.; Talupuru, D.; Locatelli, A.; Kirk, R.; Rocktäschel, T.; Grefenstette, E.; Bartolo, M. Procedural Knowledge in Pretraining Drives Reasoning in Large Language Models. arXiv 2024, arXiv:2411.12580.

2. Cope, B.; Kalantzis, M.; Searsmith, D. Artificial intelligence for education: Knowledge and its assessment in AI-enabled learning ecologies. Educ. Philos. Theory 2020, 53, 1229–1245.

3. Stefanutti, L. On the assessment of procedural knowledge: From problem spaces to knowledge spaces. Br. J. Math. Statist. Psychol. 2019, 72, 185–218.

4. Saks, K.; Ilves, H.; Noppel, A. The Impact of Procedural Knowledge on the Formation of Declarative Knowledge: How Accomplishing Activities Designed for Developing Learning Skills Impacts Teachers’ Knowledge of Learning Skills. Educ. Sci. 2021, 11, 598.

5. Rani, R.; Jain, S.; Garg, H. A review of nature-inspired algorithms on single-objective optimization problems from 2019 to 2023. Artif. Intell. Rev. 2024, 57, 126.

6. Judith, D.; Katharina, K.G.; Prindle, J.P.; Kröhne, U.; Goldhammer, F.; Schmiedek, F. Paper-Based Assessment of the Effects of Aging on Response Time: A Diffusion Model Analysis. J. Intell. 2017, 5, 12.

7. Freitas, S.d.; Uren, V.; Kiili, K.; Ninaus, M.; Petridis, P.; Lameras, P.; Dunwell, I.; Arnab, S.; Jarvis, S.; Star, K. Efficacy of the 4F Feedback Model: A Game-Based Assessment in University Education. Information 2023, 14, 99.

8. Liu, Q.; Hong, X. Do Chinese Preschool Children Love Their Motherland? Evidence from the Game-Based Assessment. Behav. Sci. 2024, 14, 959.

9. Raghavan, M.; Sherifal, Q. A review of online reactions to game-based assessment mobile applications. Int. J. Sel. Assess. 2022, 30, 14–26.

10. Sudsom, U.; Phongsatha, T. The Comparative Analysis of Forgetting and Retention Strategies in Gamification-Based Assessment and Formative-Based Assessment: Their Impact on Motivation for Learning. Int. J. Sociol. Anthropol. Sci. Rev. 2024, 4, 273–286.

11. Perolat, J.; De Vylder, B.; Hennes, D.; Tarassov, E.; Strub, F.; De Boer, V.; Muller, P.; Connor, J.; Burch, N.; Anthony, T.; et al. Mastering the game of Stratego with model-free multiagent reinforcement learning. Science 2022, 378, 990–996.

12. Meta Fundamental AI Research Diplomacy Team (FAIR); Bakhtin, A.; Brown, N.; Dinan, E.; Farina, G.; Flaherty, C.; Fried, D.; Goff, A.; Gray, J.; Hu, H.; et al. Human-level play in the game of Diplomacy by combining language models with strategic reasoning. Science 2022, 378, 1067–1074.

13. Sørensen, J.J.W.H.; Pedersen, M.K.; Munch, M.; Haikka, P.; Jensen, J.H.; Planke, T.; Andreasen, M.G.; Gajdacz, M.; Mølmer, K.; Lieberoth, A.; et al. Editorial Expression of Concern: Exploring the quantum speed limit with computer games. Nature 2016, 532, 210–213.

14. Koepnick, B.; Flatten, J.; Husain, T.; Ford, A.; Silva, D.-A.; Bick, M.J.; Bauer, A.; Liu, G.; Ishida, Y.; Boykov, A.; et al. De novo protein design by citizen scientists. Nature 2019, 570, 390–394.

15. Kukulska-Hulme, A.; Bossu, C.; Charitonos, K.; Coughlan, T.; Ferguson, R.; FitzGerald, E.; Gaved, M.; Guitert, M.; Herodotou, C.; Maina, M.; et al. Innovating Pedagogy: Open University Innovation Report; The Open University: Milton Keynes, UK, 2022; pp. 15–17.

16. Cooper, P. Data, information and knowledge. AI.&ICM 2010, 11, 505–506.

17. Anderson, L.W.; Krathwohl, D.R. A Taxonomy for Learning, Teaching, and Assessing: A Revision of Bloom’s Taxonomy of Educational Objectives; Longman: New York, NY, USA, 2001; pp. 52–128.

18. Adegbenro, J.B.; Olugbara, O.O. Investigating Computer Application Technology Teachers’ Procedural Knowledge and Pedagogical Practices in ICT-Enhanced Classrooms. Afr. Educ. Rev. 2019, 16, 1394516.

19. Calle, O.; Antúnez, A.; Ibáñez, S.J.; Feu, S. Analysis of Tactical Knowledge for Learning an Alternative Invasion Sport. Educ. Sci. 2024, 14, 1136.

20. Richter-Beuschel, L.; Grass, I.; Bögeholz, S. How to Measure Procedural Knowledge for Solving Biodiversity and Climate Change Challenges. Educ. Sci. 2018, 8, 190.

21. Nousiainen, M.; Koponen, I.T. Pre-Service Teachers’ Declarative Knowledge of Wave-Particle Dualism of Electrons and Photons: Finding Lexicons by Using Network Analysis. Educ. Sci. 2020, 10, 76.

22. Liu, H.; Li, D. Understanding homework: Reflections and enlightenment on homework from the perspective of knowledge classification. Contemp. Educ. Sci. 2020, 25–29.

23. Lohse, K.R.; Healy, A.F. Exploring the contributions of declarative and procedural information to training: A test of the procedural reinstatement principle. J. Appl. Res. Mem. Cogn. 2012, 1, 65–72.

24. Deterding, S.; Dixon, D.; Khaled, R.; Nacke, L. From game design elements to gamefulness: Defining gamification. In Proceedings of the MindTrek’11: Proceedings of the 15th International Academic MindTrek Conference: Envisioning Future Media Environments, Tampere, Finland, 28–30 September 2011; pp. 9–15.

25. Werbach, K.; Hunter, D. For the Win: How Game Thinking Can Revolutionize your Business; Wharton Digital Press: Philadelphia, PA, USA, 2012; pp. 12–27.

26. Csikszentmihalyi, M. Flow: The Psychology of Optimal Experience; Harper & Row: New York, NY, USA, 1990; ISBN 978-00-609204-3-2.

27. Kapp, K.M. The Gamification of Learning and Instruction: Game-Based Methods and Strategies for Training and Education; Pfeiffer: Hoboken, NJ, USA, 2012; ISBN 978-1-118-09634-5.

28. Lieberoth, A. Shallow Gamification: Testing Psychological Effects of Framing an Activity as a Game. Games Cult. 2015, 10, 229–248.

29. Pishchanska, V.; Altukhova, A.; Prusak, Y.; Kovmir, N.; Honcharov, A. Gamification of education: Innovative forms of teaching and education in culture and art. Rev. Tecnol. Inform. Comun. Educ. 2022, 16, 119–133.

30. Karmanova, E.V.; Shelemetyeva, V.A. Hard and Light Gamification in Education: Which One to Choose? Inform. Educ. 2020, 1, 20–27.

31. Arinta, R.R.; Emanuel, A.W. Effectiveness of gamification for flood emergency planning in the disaster risk reduction area. Int. J. Eng. Pedagog. 2020, 10, 108–124.

32. Vancini, R.L.; Russomano, T.; Andrade, M.S.; de Lira, C.A.B.; Knechtle, B.; Herbert, J.S. Gamification vs. Teaching First Aid: What Is Being Produced by Science in the Area? Health Nexus 2023, 1, 71–82.

33. DiCesare, D.; Scheveck, B.; Adams, J.; Tassone, M.; Diaz-Cruz, V.I.; Van Dillen, C.; Ganti, L.; Gue, S.; Walker, A. The Pit Crew Card Game: A Novel Gamification Exercise to Improve EMS Performance in Critical Care Scenarios. Int. J. Emerg. Med. 2025, 18, 3–5.

34. Armstrong, M.; Ferrell, J.Z.; Collmus, A.B.; Landers, R.N. Correcting Misconceptions About Gamification of Assessment: More Than SJTs and Badges. Ind. Organ. Psychol. 2016, 9, 671–677.

35. Zhang, Z.; Huang, X. Exploring the Impact of the Adaptive Gamified Assessment on Learners in Blended Learning. Educ. Inf. Technol. 2024, 29, 21869–21889.

36. Swacha, J.; Kulpa, A. A Game-Like Online Student Assessment System. In Harnessing Opportunities: Reshaping ISD in the Post-COVID-19 and Generative AI Era (ISD2024 Proceedings); Marcinkowski, B., Przybylek, A., Jarzębowicz, A., Iivari, N., Insfran, E., Lang, M., Linger, H., Schneider, C., Eds.; University of Gdańsk: Gdańsk, Poland, 2024; ISBN 978-83-972632-0-8.

37. Mislevy, R.J.; Corrigan, B.; Oranje, A.; DiCerbo, K.; Bauer, M.I.; Von Davier, A.; John, M. Psychometrics and Game-Based Assessment. In Technology and Testing: Improving Educational and Psychological Measurement; Drasgow, F., Gierl, M.J., Eds.; Taylor & Francis, Routledge: New York, NY, USA, 2016; pp. 44–46.

38. Blaik, J.; Mujcic, S. Gamifying Recruitment: The Psychology of Game-Based Assessment. InPsych 2021, 5. Available online: https://psychology.org.au/for-members/publications/inpsych/2021/april-may-issue-2/the-psychology-of-game-based-assessment (accessed on 2 December 2024).

39. Pitoyo, M.D.; Sumardi, S.; Asib, A. Gamification-Based Assessment: The Washback Effect of Quizizz on Students’ Learning in Higher Education. Int. J. Lang. Educ. 2020, 4, 1–10.

40. Lowman, G. Moving Beyond Identification: Using Gamification to Attract and Retain Talent. Ind. Organ. Psychol. 2016, 70, 678–681.

41. Obaid, I.; Farooq, M.S.; Abid, A. Gamification for recruitment and job training: Model, taxonomy, and challenges. IEEE Access 2020, 8, 65164–65178.

42. Kirovska, Z.; Josimovski, S.; Kiselicki, M. Modern trends of recruitment-introducing the concept of gamification. J. Sustain. Dev. 2020, 10, 55–65.

43. Vardarlier, P. Gamification in human resources management: An agenda suggestion for gamification in HRM. Res. J. Bus. Manag. 2021, 8, 129–139.

44. Varghese, J.; Deepa, R. Gamification as an Effective Employer Branding Strategy for Gen Z. NHRD Netw. J. 2023, 16, 269–279.

45. Wang, A.I. The wear out effect of a game-based student response system. Comput. Educ. 2015, 82, 217–227.

46. Maryo, F.A.; Pujiastuti, E. Gamification in EFL Class using Quizizz as an Assessment Tool. Proc. Ser. Phys. Form. Sci. 2022, 3, 75–80.

47. Pitoyo, M.D.; Sumardi; Asib, A. Gamification based assessment: A Test Anxiety Reduction through Game Elements in Quizizz Platform. Int. Online J. Educ. Teach. 2019, 6, 456–471.

48. Hsu, C.L.; Lu, H.P. Why do people play on-line games? An extended TAM with social influences and flow experience. Inf. Manag. 2004, 41, 853–868.

49. Dawson, P.; Henderson, M.; Mahoney, P.; Phillips, M.; Ryan, T.; Boud, D.; Molloy, E. What makes for effective feedback: Staff and student perspectives. Assess. Eval. High. Educ. 2019, 44, 25–36.

50. Bressler, D.M.; Shane Tutwiler, M.; Bodzin, A.M. Promoting student flow and interest in a science learning game: A design-based research study of School Scene Investigators. Educ. Technol. Res. Dev. 2021, 69, 2789–2811.

51. Liu, S.; Ma, G.; Tewogbola, P.; Gu, X.; Gao, P.; Dong, B.; He, D.; Lai, W.; Wu, Y. Game principle: Enhancing learner engagement with gamification to improve learning outcomes. J. Workpl. Learn. 2023, 35, 450–462.

52. Lopez-Barreiro, J.; Alvarez-Sabucedo, L.; Garcia-Soidan, J.L.; Santos-Gago, J.M. Towards a Blockchain Hybrid Platform for Gamification of Healthy Habits: Implementation and Usability Validation. Appl. Syst. Innov. 2024, 7, 60.

53. Katinka, V.D.K. Gamification as a Sustainable Source of Enjoyment During Balance and Gait Exercises. Front. Psychol. 2019, 10, 294.

54. Yang, W.; Fang, M.; Xu, J.; Zhang, X.; Pan, Y. Exploring the Mediating Role of Different Aspects of Learning Motivation between Metaverse Learning Experiences and Gamification. Electronics 2024, 13, 1297.

55. Nah, F.F.-H.; Eschenbrenner, B.; Claybaugh, C.C.; Koob, P.B. Gamification of Enterprise Systems. Systems 2019, 7, 13.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).