Abstract

To defeat AI-based steganalysis systems, various techniques using adversarial example attack methods have been reported. In these techniques, adversarial stego images are generated using adversarial attack algorithms and steganography embedding algorithms sequentially and independently. However, this approach can be inefficient because both algorithms independently insert perturbations into a cover image, and the steganography embedding algorithm could significantly lower the undetectability or indistinguishability of adversarial attacks. To address this issue, we propose an innovative adversarial stego image generation method (ASIGM) that fully integrates the two separate algorithms by using the Jacobian-based Saliency Map Attack (JSMA). JSMA, one of the representative l0 norm-based adversarial example attack methods, is used to compute a set of pixels in the cover image that increases the probability of being classified as the non-stego class by the steganalysis model. The reason for this calculation is that if a secret message is inserted into the limited set of pixels in such a way, noise is only required for message embedding, and even misclassification of the target steganalysis model can be achieved without additional noise insertion. The experimental results demonstrate that our proposed ASIGM outperforms two representative steganography methods (WOW and ADS-WOW).

1. Introduction

Steganography refers to the art and science of hiding communication by secretly embedding data in plain cover media without arousing an eavesdropper’s suspicion [1]. One of the most preferred cover media is an image (cover image), and, in particular, a high-resolution image provides abundant places (pixels) for hiding data in it [2]. In general, an image steganography algorithm takes a cover image and a secret message as inputs and then generates a stego image that appears indistinguishable from a cover image to the human eyes. Meanwhile, steganography and cryptography are clearly different according to their purposes in that cryptography randomly scrambles a message (plaintext) in a way that the scrambled message (ciphertext) cannot be understood by eavesdroppers, while steganography hides a message into a cover medium in a way that the existence of the message itself cannot be recognized. As a result, a ciphertext might arouse suspicion by an eavesdropper (warden), while an invisible message created with a steganographic method will not [3].

On the other hand, image steganalysis is a technique that detects a stego image with a hidden message [4]. Therefore, steganalysis deals with a binary classification problem in which a particular image is classified into either the stego image class or the non-stego image class. In early studies, machine-learning (ML)-based models were presented, and most of them considered statistical characteristics of images to detect stego images [5,6]. Recently, thanks to the rapid advancement of deep-learning (DL) technology, advanced steganalysis models based on DL have been actively proposed, and they showed very high-classification accuracy. In particular, Convolutional Neural Network (CNN)-based steganalysis models (such as YeNet, XuNet, and SRNet) significantly improved detection and classification performance [7,8,9,10].

Meanwhile, the emergence of CNN-based steganalysis has become a new challenge to attackers who want to use steganography techniques for their covert communication. Consequently, attackers have focused on devising new steganography methods to avoid CNN-based steganalysis models. One promising approach is to exploit an inherent limitation, which is that DL-based models are known to be vulnerable to adversarial example attacks [11,12,13]. In this approach, an adversarial stego image (ASI) is created in the following two independent steps: (1) converting a cover image to an adversarial image to avoid a target CNN steganalysis model; and (2) generating a stego image by hiding a secret message in the adversarial image. However, this approach can be inefficient in that the latter step can significantly lower the performance of the former step in terms of undetectability (by a target DL steganalysis model) and indistinguishability (with cover images). It can even break the attackers' final goal (successful stealthy delivery of a secret message to a recipient): since each step independently inserts some perturbation into a cover image, the embedded secret message can be compromised, which leads to the failure of extracting the hidden message from the stego image.

Therefore, to address the above limitations of the existing approach, we propose an innovative adversarial stego image generation method (ASIGM) that fully integrates two independent steps to improve undetectability and indistinguishability. Specifically, ASIGM first finds a set of pixels SP that can increase the probability of misclassifying a cover image as a non-stego image class by a target DL model when some perturbation is added to SP and then embeds a secret message to a subset of SP as required. We designed our proposed method based on the Jacobian-based Saliency Map Attack (JSMA) [14], which is a representative adversarial attack method.

The main contributions of this study can be summarized as follows:

- We propose an innovative adversarial stego image generation method (ASIGM) that combines the two independent processes (adversarial example attack and steganography embedding) into one single process to improve the undetectability and indistinguishability of attack against CNN image steganalysis models.

- We develop and implement the proposed ASIGM by using the Jacobian-based Saliency Map Attack (JSMA), one of the representative l0 norm-based adversarial example attack methods, such that ASIGM converts a particular cover image to an adversarial stego image that can avoid a target CNN steganalysis model such as YeNet.

- To validate our idea, we conduct extensive experiments to compare ASIGM with two existing steganography methods, Wavelet Obtained Weights (WOW) [15] and Adversarial Distortion Steganography (ADS) [16], on the state-of-the-art steganalysis model YeNet. Our experimental results show that, in terms of the Missed Detection Rate (MDR), ASIGM outperformed WOW and ADS-WOW by up to 97.2%p and 17.7%p, respectively. In addition, ASIGM outperformed WOW and ADS-WOW in terms of Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index Measure (SSIM) between the cover image and the stego image.

The rest of the paper is organized as follows. In Section 2, we briefly overview the background knowledge and related studies. In Section 3, we describe the proposed adversarial stego image generation method (ASIGM). In Section 4, we conduct comparative experiments to validate our idea and show the performance improvement of ASIGM over other methods. Finally, we conclude the study with future research directions in Section 5.

2. Background and Related Works

2.1. Image Steganography

Steganography is the art and science of using a digital communication object in such a way that it conceals the existence of secret information. In general, communication media (or communication carriers) are digital files or data (e.g., images, audio, video, text, and network protocols). In image steganography in particular, the cover image denotes a plain image carrier used to hide secret data, and the stego image denotes the output image that conceals the secret data [17].

The most basic type of steganography is designed by using the Least-significant Bit (LSB)-based approach. Each pixel of an image contains color information. In grayscale format, a single-color channel is represented by 8 bits and, thus, the LSB steganography method hides a message in the least-significant bit of each pixel within a single channel. On the other hand, in the case of the RGB format with three color channels, the LSB steganography method hides a message in the least-significant bit of each pixel in each channel. The change in color due to the alteration of the least-significant bit is extremely subtle, making it difficult for the human visual system to distinguish. As a result, a third party that does not know the fact of concealment recognizes the cover image and the stego image as the same image.
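As a minimal sketch of the LSB approach described above, the following Python snippet hides a bit string in the least-significant bits of a list of 8-bit grayscale values and reads it back. The function names `embed_lsb` and `extract_lsb`, the sample pixel values, and the choice to use the first pixels in order are illustrative assumptions, not part of any method discussed in this paper.

```python
def embed_lsb(pixels, bits):
    """Return a copy of `pixels` whose first len(bits) values carry `bits` in their LSB."""
    if len(bits) > len(pixels):
        raise ValueError("message is longer than the number of available pixels")
    stego = list(pixels)
    for k, bit in enumerate(bits):
        # Clear the least-significant bit, then set it to the message bit.
        stego[k] = (stego[k] & ~1) | bit
    return stego


def extract_lsb(pixels, n_bits):
    """Read back the least-significant bits of the first n_bits pixels."""
    return [p & 1 for p in pixels[:n_bits]]


# Hypothetical 8-bit grayscale values and an 8-bit message.
cover = [154, 155, 200, 37, 0, 255, 128, 64]
message = [0, 1, 1, 0, 1, 0, 1, 0]

stego = embed_lsb(cover, message)
recovered = extract_lsb(stego, len(message))
max_change = max(abs(c - s) for c, s in zip(cover, stego))
print(stego, recovered, max_change)
```

Each pixel changes by at most one intensity level, which is why the cover image and the stego image are hard to tell apart visually.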

To improve the security of steganography techniques, content-adaptive steganography methods have recently been devised by developing basic LSB methods. The content-adaptive method embeds a secret message into a visually complex area in the cover image so that the generated stego image becomes more similar to the cover image from the human visual system. Representative content-adaptive steganography methods in the spatial domain include Wavelet Obtained Weights (WOW) [15], Spatial Universal Wavelet Relative Distortion (S-UNIWARD) [18], and High pass, Low pass, and Low pass (HILL) [19].

2.2. Steganalysis Using Deep Learning: CNN-Based Steganalysis Model

As a countermeasure to steganography, research has been conducted on steganalysis, which refers to a technique aimed at detecting stego images from the defender’s perspective. In general, steganalysis deals with the binary classification problem such that it classifies a particular image into stego or non-stego. Therefore, machine learning (ML), or deep learning (DL) that shows excellent performance in various classification problems, has been actively used for advancing steganalysis.

Accordingly, an early study proposed utilizing statistical characteristics of images such as an ML-based Spatial Rich Model (SRM) [20]. This involved statistically measuring the changes in the medium that inevitably occur when using steganography to hide information and detecting the presence of hidden information [5,6]. However, the limitation of this approach was the requirement for demanding hand-crafted feature extraction. On the other hand, DL is making major advances in solving problems that have resisted the best attempts of the artificial intelligence (AI) community for many years [21]. Unlike ML, DL involves automatic feature extraction using neural networks. Consequently, various CNN-based models have been proposed in the field of image steganalysis, and there is a significant advancement compared to the previous studies in terms of detection accuracy. Representative CNN-based steganalysis models include XuNet [8], YeNet [9], and SRNet [10].

2.3. Adversarial Example Attacks Fooling Deep-Learning Models

Deep neural networks are powerful machine-learning models that achieve excellent performance on visual and speech recognition problems. Meanwhile, it was found that applying an imperceptible non-random perturbation to a test image can induce the DL model’s false classification [22]. In particular, adversarial example images (or adversarial examples in this study) refer to crafted images generated by inserting subtle perturbation into an input image so that it is difficult for the human visual system to recognize them. Thus, DL-based image classification models cannot classify such adversarial examples correctly into their ground-truth labels. Therefore, when an attacker performs an adversarial example attack, it becomes possible to manipulate the DL model to cause misclassification according to the attacker’s intent. The study by Szegedy et al. has sparked widespread interest in adversarial example attacks.

In adversarial example attacks, distance metrics are used to quantify the similarity between input data and adversarial examples. This is related to minimizing both the attacker’s cost to generate adversarial examples and the detectability of adversarial examples by the human visual system. These metrics play the role of constraints that limit the transformation of adversarial examples within a certain distance from an input image. In other words, the distance metric is the most important factor determining the size and shape of the perturbation.

Distance can be measured in various ways, such as l∞, l0, and l2 Norm, which are generalized as lp Norm [23].

First, l∞ Norm attack limits the maximum change in pixel values among the altered pixels during adversarial example generation. By this approach, l∞ Norm attack generates imperceptible perturbation. The most representative method implementing the l∞ Norm attack is Fast Gradient Sign Method (FGSM) [24].

Next, l0 Norm attack aims to minimize the number of changed pixels during adversarial example generation. l0 Norm-based attack methods have the characteristic of inserting perturbation into specific pixels, not all pixels. Thus, they generate perturbation that is less noticeable to the human eye. A notable method of l0 Norm attack is the Jacobian-based Saliency Map Attack (JSMA) [14].

Last, the l2 Norm attack aims to minimize the Euclidean distance (or the shortest distance) between the input image and the adversarial image. This is the most common distance calculation method, computed as the square root of the sum of the squared pixel changes. By minimizing this value, l2 Norm-based attacks effectively limit the perturbation to a very subtle scale. DeepFool [25] is a representative l2 Norm-based attack method.
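To make the three distance metrics concrete, the short Python sketch below computes the l0, l2, and l∞ norms of the perturbation between an input and an adversarial example. The helper name `perturbation_norms` and the four-pixel sample values are hypothetical and chosen only for illustration.

```python
import math


def perturbation_norms(original, adversarial):
    """Return (l0, l2, l_inf) of the pixel-wise difference between two images."""
    delta = [a - o for o, a in zip(original, adversarial)]
    l0 = sum(1 for d in delta if d != 0)       # number of changed pixels
    l2 = math.sqrt(sum(d * d for d in delta))  # Euclidean distance
    linf = max(abs(d) for d in delta)          # largest single-pixel change
    return l0, l2, linf


# Hypothetical flattened images: two of the four pixels are modified.
original = [10, 20, 30, 40]
adversarial = [10, 23, 30, 36]

l0, l2, linf = perturbation_norms(original, adversarial)
print(l0, l2, linf)
```

An l0-constrained attack keeps the first value small, whereas l2- and l∞-constrained attacks keep the second and third values small, typically at the price of touching many more pixels.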

In this study, we designed our adversarial stego image generation method (ASIGM) based on the l0 Norm attack because of the following reasons. For adversarial example generation, l∞ Norm and l2 Norm create imperceptible perturbation by limiting the size of the perturbation, making it difficult to visually identify. Consequently, perturbation is inserted into almost the entire image. In contrast, the l0 Norm restricts the number of changed pixels to make the perturbation less noticeable to the human eye. As a result, unlike l∞ Norm or l2 Norm, l0 Norm inserts perturbation into specific pixels rather than all pixels.

In this study, we proposed an innovative adversarial stego image generation method that completely integrates the two noise insertion steps of the adversarial example attack and steganography into a single process and used JSMA as the core component of our method to insert a certain amount of perturbation only into specific pixels.

In accordance with this, we briefly introduce JSMA as follows [14].

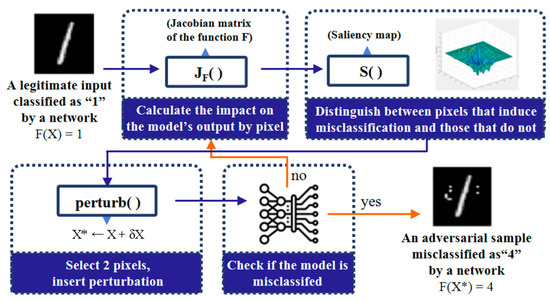

JSMA is an l0 Norm-based attack that aims to identify which pixels in an input image should be altered to change the output of a DL model. Thus, to induce misclassification by the DL model, JSMA inserts perturbation into the minimum number of pixels rather than the entire image. The working steps of JSMA are depicted in Figure 1. The first step is to compute the Jacobian matrix that represents the forward derivative for a particular sample image X. The forward derivative computes gradients that are similar to those computed for backpropagation, but there are two important distinctions: It takes the derivative of the learning network directly, rather than of its cost function, and it differentiates with respect to the input features rather than the network parameters. Consequently, instead of propagating gradients backwards, it propagates them forward. This allows us to find input components that lead to significant changes in network outputs. Afterward, the Jacobian matrix is mapped to a saliency map, and perturbation is inserted by selecting the pixel with the highest value in the saliency map. This process is repeated until successful misclassification, or until the maximum number of alterable pixels is reached.

Figure 1. The working steps of JSMA.

2.4. Existing Studies on Steganography Methods with Adversarial Example Attacks

The objective of adversarial example attacks on a typical DL model is to induce misclassification. In contrast, adversarial example attacks on steganalysis models consider not only inducing their misclassification but also preserving the hidden message from stego images. This distinction arises because the attack loses its meaning if the hidden message is destroyed. We overview representative existing studies on steganography methods using adversarial example attacks as below.

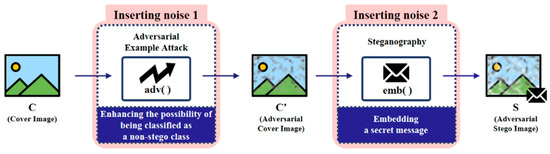

The basic approach to preserve hidden messages while bypassing a steganalysis model is to first perform an adversarial example attack to increase the probability of being classified as a non-stego class and then insert a hidden message. Specifically, the first step is to prepare a cover image to serve as the medium for hidden communication. The next step is to perform an adversarial example attack on this cover image to increase the probability of being classified as the non-stego class. The resulting image is referred to as an adversarial cover image. The last step is to embed a secret message in the adversarial cover image using steganography. Through this process, an adversarial stego image is generated.

Adversarial Distortion Steganography (ADS) is the first study to propose and implement such an approach [16]. It repetitively applies the Fast Gradient Sign Method (FGSM), which is one of the representative l∞ Norm-based attack methods, to the cover image, creating an enhanced (adversarial) cover. Subsequently, it embeds a secret message into this adversarial cover image to generate the final adversarial stego image. The adversarial stego image produced in this manner is able to not only evade steganalysis models but also allow for the extraction of hidden messages. However, this approach is inefficient in that it requires repetitive adversarial example attacks, and it lowers the overall quality of the final image.

Afterwards, ADVersarial EMBedding (ADV-EMB) was proposed, which divides a cover image into two parts and performs only steganographic embedding in the first part and an adversarial example attack in the second part [26]. ADV-EMB was designed under the framework of distortion minimization, adjusting the costs of image element modifications according to the gradients back-propagated from the target CNN steganalyzer.

Among the above two approaches, the ADS-like approach became more mainstream in subsequent studies, rather than ADV-EMB. This is because constructing an adversarial cover image has a more obvious misclassification ability than adjusting the distortion cost [27].

After that, attempts to generate adversarial stego images using certain types of deep-learning networks, such as Siamese networks or GANs, have followed. Adversarial Cover Generator for Image Steganography with noise residual feature-preserving (ACGIS) uses the Siamese network to train an adversarial cover generator [27]. The Siamese network consists of twin blocks with a shared structure and parameters. In the ACGIS, the cover is divided in half, and each half is input into one of the twin blocks for training. The trained generator can then produce multiple adversarial covers, allowing for various combinations with steganography methods.

In addition, there have been various studies aiming to use Generative Adversarial Networks (GAN). GAN consists of two competing networks: a discriminator, which functions as a classification model, and a generator, which is trained to produce fake data that can deceive the discriminator [28]. Several studies using GAN have also been conducted in the fields of steganography and steganalysis, and the first study was SSGAN (Secure Steganography Based on Generative Adversarial Networks) [29]. Recently, a network architecture for image steganography with channel attention mechanisms based on GAN was proposed, which tunes channel-wise features in the deep representation of images dynamically by exploiting channel interdependencies [30]. Also, a method of combining Evolutionary GAN with steganography has been proposed to find the optimal architecture and hyperparameters of the generator [2]. In 2025, UAPs (Universal Adversarial Perturbations) using GAN were proposed, which generate perturbations that can be applied to all images without the need to design image-specific perturbations [31].

3. ASIGM: Adversarial Stego Image Generation Method Based on JSMA

3.1. Key Idea Behind ASIGM

To achieve the goals of evading classification models and preserving stego messages simultaneously, existing studies have focused on effectively dividing and applying adversarial example attacks and steganography embedding to one image. In previous studies, the attack procedure first applies an adversarial example attack on cover images to increase the probability of them being classified as a non-stego class, thereby creating adversarial cover images. Afterward, secret messages are embedded into these adversarial cover images using steganography, resulting in the final creation of adversarial stego images. Figure 2 illustrates the basic procedure for generating adversarial stego images in previous studies, especially the ADS-like approach.

Figure 2. The basic procedure for generating adversarial stego images in previous studies.

However, this approach has two shortcomings. First, it might significantly degrade the attack performance in terms of detection avoidance. Even if the adversarial example attack increases the probability of the cover image being classified as non-stego, the subsequent embedding of a stego message could outweigh this effect, potentially leading to its classification as stego. Second, the amount of distortion to obtain an adversarial stego image might be unnecessarily large. Since both adversarial example attacks and steganography are applied to the single image separately, noise is inserted twice.

To address the issues arising from this two-step noise insertion process, we study an innovative way to accomplish the two objectives of the attack (embedding secret messages and evading steganalysis models) in a single process by considering the process similarities of inserting noise into an image in both the steganography algorithm and the adversarial example attack algorithm. For the rest of this section, we design an innovative adversarial stego image generation method (ASIGM) that performs the noise insertion processes of both techniques in a single step.

3.2. Working Steps

The proposed method (ASIGM) generates an adversarial stego image that bypasses the CNN-based steganalysis model while concealing a secret message with only one noise insertion. To this end, the proposed method operates in the following two main steps (Step 1 and Step 2).

- Step 1 (Selecting a set of pixels to hide a secret message): ASIGM calculates a group of pixels desirable for concealing a secret message in a prepared cover image. For this step, we use the modified version of JSMA to effectively find such pixels. Step 1 is implemented by Module 1 in Section 3.3.1.

- Step 2 (Embedding a secret message): ASIGM embeds a secret message into the pixels chosen from the pixel set obtained in Step 1. As a result, an adversarial stego image is generated. Step 2 is implemented by Module 2 in Section 3.3.2.

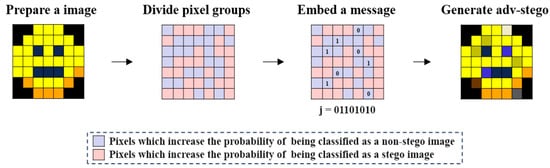

If the adversarial stego image generated through this procedure is classified as a non-stego image when input into the target steganalysis model, it is considered successful in generating an adversarial stego image. The attack scenario and working steps of the proposed method are shown in Figure 3.

Figure 3. Attack scenario and working steps of ASIGM. The hidden message (01101010₂) is embedded only into the blue pixel group to increase the probability of being classified as a non-stego image.

3.3. Design

In this part, we design two modules (Module 1 and Module 2) that conduct the two main steps of our proposed method introduced in Section 3.2.

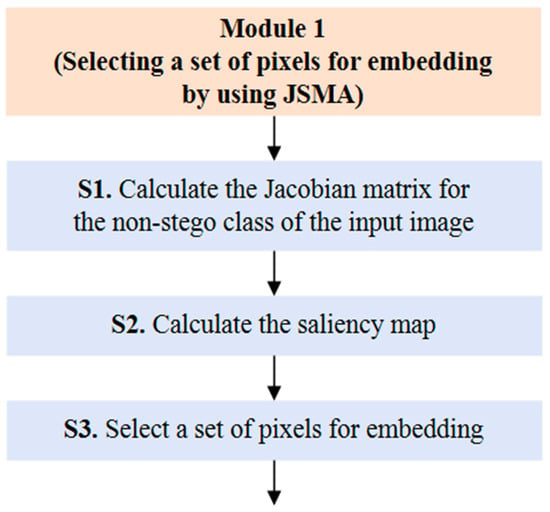

3.3.1. Design of Module 1: Selecting a Set of Pixels for Embedding by Using JSMA

Module 1 is performed in the following three sub-steps (S1–S3), and each sub-step works as shown in Figure 4. In Module 1, JSMA is used to compute a set of pixels in the cover image that increases the probability of being classified as the non-stego class by the target steganalysis model. If we can obtain enough pixels by this approach, we can avoid introducing additional noise insertion by embedding a secret message only into the pixels. If sufficient pixels cannot be obtained due to the cover image, a larger cover image or additional cover images can be used.

Figure 4. The execution process of Module 1.

- Sub-step 1 (S1): Calculating the Jacobian matrix for the non-stego class of the input image

The first sub-step S1 calculates the Jacobian matrix to reinforce the predicted class of the image as the non-stego class. To do so, it computes the forward derivative of the network output with respect to each pixel, as shown in (1):

$$J_F(X) = \frac{\partial F(X)}{\partial X} = \left[ \frac{\partial F_j(X)}{\partial x_i} \right]_{i,j} \tag{1}$$

In the equation, F is the function learned by the network during the training phase, X is the input (i.e., a cover image), and Y is the prediction class of the network. $J_F$ is the Jacobian matrix of the function F (i.e., the forward derivative), $x_i$ is the i-th pixel in the cover image X, and j indexes the prediction classes of Y. If the target class is designated as non-stego, the Jacobian matrix is calculated by considering how each pixel affects the probability of being classified as a non-stego class.
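The forward derivative in (1) can be illustrated on a toy model. The sketch below replaces the CNN steganalysis model with a hypothetical two-class linear softmax classifier (class 0 = non-stego, class 1 = stego), for which the Jacobian has a closed form; the weights `W`, bias `b`, and three-pixel input `x` are made-up values. For a real network such as YeNet, the same quantity would normally be obtained with automatic differentiation rather than by hand.

```python
import math


def softmax(z):
    """Numerically stable softmax over a list of logits."""
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def predict(W, b, x):
    """Toy stand-in for F: class probabilities of a linear softmax classifier."""
    logits = [sum(w * xi for w, xi in zip(row, x)) + bj for row, bj in zip(W, b)]
    return softmax(logits)


def forward_derivative(W, b, x):
    """Return J with J[i][j] = dF_j(x) / dx_i for the toy classifier."""
    p = predict(W, b, x)
    n_classes, n_pixels = len(W), len(x)
    J = []
    for i in range(n_pixels):
        # Probability-weighted average of the weights attached to pixel i.
        mean_w = sum(p[k] * W[k][i] for k in range(n_classes))
        J.append([p[j] * (W[j][i] - mean_w) for j in range(n_classes)])
    return J


# Hypothetical parameters: row 0 scores non-stego, row 1 scores stego.
W = [[0.5, -1.0, 0.2], [-0.3, 0.8, 0.1]]
b = [0.0, 0.0]
x = [0.2, 0.4, 0.6]

J = forward_derivative(W, b, x)
for i, row in enumerate(J):
    print(i, row)
```

Because the two class probabilities sum to one, each row of `J` sums to zero: a pixel that raises the non-stego probability lowers the stego probability by the same amount in this toy setting.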

- Sub-step 2 (S2): Calculating the saliency map

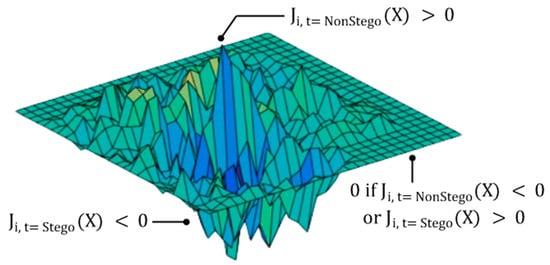

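The saliency map S(X, t) distinguishes pixels that increase and decrease the probability of being classified as non-stego using the Jacobian matrix. Figure 5 illustrates the saliency map, and it is calculated as (2):

$$S(X,t)[i] = \begin{cases} 0, & \text{if } J_{it}(X) < 0 \text{ or } \sum_{j \neq t} J_{ij}(X) > 0 \\ J_{it}(X)\,\left| \sum_{j \neq t} J_{ij}(X) \right|, & \text{otherwise} \end{cases} \tag{2}$$

Figure 5. The illustration of saliency map.

In (2), i denotes the input pixel and $J_{ij}(X)$ denotes $\partial F_j(X) / \partial x_i$. Thus, $J_{it}(X)$ is the Jacobian entry for the target class t, and $\sum_{j \neq t} J_{ij}(X)$ denotes the Jacobian entries of all the other classes, except the target class t. The steganalysis deals with the binary classification problem that classifies an input image into a non-stego or stego class. If the target class is designated as non-stego, the remaining class is only stego. Therefore, by removing the summation $\sum_{j \neq t}$, (2) can be simplified as (3):

$$S(X,t)[i] = \begin{cases} 0, & \text{if } J_{i,\text{non-stego}}(X) < 0 \text{ or } J_{i,\text{stego}}(X) > 0 \\ J_{i,\text{non-stego}}(X)\,\left| J_{i,\text{stego}}(X) \right|, & \text{otherwise} \end{cases} \tag{3}$$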

In (3), the first line means setting the value of the saliency map to 0 when the forward derivative for the non-stego class of a specific pixel is a negative value or the forward derivative for the stego class is a positive value. The second line means setting the value of the saliency map for the corresponding pixel as the result of multiplying the two forward derivatives if it does not meet the condition of the first line. At this point, since the forward derivative for the stego class is a negative value, it is multiplied by its absolute value. This calculation is performed for all pixels to complete the saliency map.

- Sub-step 3 (S3): Selecting a set of pixels for embedding a secret message

S3 selects a pixel set by choosing pixels with non-zero values from the saliency map. Thus, it makes a pixel set using only the pixels that increase the probability of being classified as the non-stego class. The size of the selected pixel set must be equal to or greater than the length of the secret message.
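Sub-steps S2 and S3 can be sketched in a few lines of Python. The function below applies the two-case rule described for (3) to per-pixel forward derivatives and then keeps the pixels whose saliency value is non-zero. The function name `saliency_map` and the four sample derivative values are hypothetical; in practice the derivatives would come from the target steganalysis model.

```python
def saliency_map(d_non_stego, d_stego):
    """Per-pixel saliency for the binary (non-stego vs. stego) case.

    d_non_stego[i] and d_stego[i] are the forward derivatives of the
    non-stego and stego outputs with respect to pixel i.
    """
    saliency = []
    for a, b in zip(d_non_stego, d_stego):
        if a < 0 or b > 0:
            # The pixel does not reinforce the non-stego class.
            saliency.append(0.0)
        else:
            saliency.append(a * abs(b))
    return saliency


# Hypothetical forward derivatives for four pixels.
d_non_stego = [0.30, -0.10, 0.20, 0.05]
d_stego = [-0.30, 0.10, 0.40, -0.05]

s = saliency_map(d_non_stego, d_stego)
# S3: keep only pixels with a non-zero saliency value.
selected = [i for i, v in enumerate(s) if v > 0]
print(s, selected)
```

Here pixels 0 and 3 are kept, while pixel 1 (negative non-stego derivative) and pixel 2 (positive stego derivative) are discarded.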

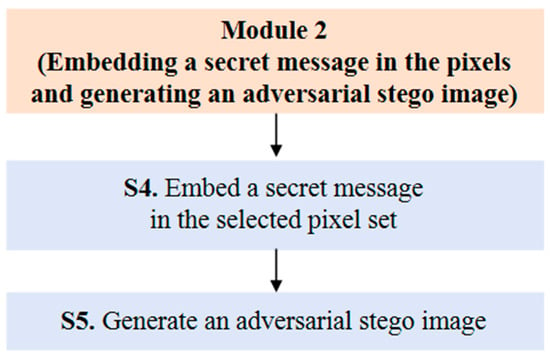

3.3.2. Design of Module 2: Embedding a Secret Message in the Pixels and Generating an Adversarial Stego Image

Module 2 is performed in the following two sub-steps (S4 and S5), and each sub-step works as shown in Figure 6.

Figure 6. The execution process of Module 2.

- Sub-step 4 (S4): Embedding a secret message in the selected pixel set

When a pixel set is selected in the previous step, the secret message is embedded only into the pixel set. In this manner, the two noise insertion processes for the adversarial example attack and the steganography technique can be completely combined into one single process. The adversarial example attack induces misclassification of the network in a way that it increases or decreases the intensity of the pixel, and steganography embeds a secret message by manipulating the least significant bit of the pixel to 0 or 1 depending on the content of the message. Thus, if the secret message is embedded by confining it to a set of pixels calculated using an adversarial example attack, this results in an increase in the intensity of the set of pixels that reinforce the non-stego class.
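A minimal sketch of sub-step S4, assuming plain LSB replacement as the embedding rule and a flattened grayscale image: message bits are written only into the pixels of the selected set, so no other pixel is modified. The function name `embed_in_selected`, the pixel values, and the selected indices are illustrative assumptions. The sketch does not control whether a pixel's intensity goes up or down, and it does not address how the receiver learns the pixel set.

```python
def embed_in_selected(cover, selected, bits):
    """Write `bits` into the LSBs of the pixels listed in `selected`."""
    if len(selected) < len(bits):
        raise ValueError("selected pixel set is smaller than the message")
    stego = list(cover)
    for idx, bit in zip(selected, bits):
        # Replace the least-significant bit of the selected pixel.
        stego[idx] = (stego[idx] & ~1) | bit
    return stego


# Hypothetical flattened cover image, pixel set from Module 1, and message.
cover = [120, 121, 122, 123, 124, 125]
selected = [0, 3, 4]
bits = [1, 0, 1]

stego = embed_in_selected(cover, selected, bits)
changed = [i for i, (c, s) in enumerate(zip(cover, stego)) if c != s]
print(stego, changed)
```

Every modified index belongs to the selected set, which is the property that lets a single noise insertion serve both the embedding and the evasion goals.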

- Sub-step 5 (S5): Generating an adversarial stego image

The generated adversarial stego image is finally input into the target steganalysis model. If the model misclassifies the generated image into the non-stego class, the attack is considered a success. Conversely, if the model classifies the image into the stego class, the attack is considered a failure. We will show that our proposed method can achieve a very high attack success performance in terms of the Missed Detection Rate (MDR) later in Section 4.

3.4. Algorithms

Algorithm 1 shows the entire process of ASIGM as we described in previous sections. ASIGM takes a benign cover image X, a secret message M, and target steganalysis model F as the input and then generates an adversarial stego image X* such that F(X*) = Y.

| Algorithm 1: ASIGM (Adversarial Stego Image Generation Method Based on JSMA) |
| --- |
| Input: |
| X: cover image |
| Y: target class (= non-stego class) |
| F: function learned by the target steganalysis model during training |
| Γ: full set of pixels in the cover image X |
| M: a secret message |
| Output: |
| X*: an adversarial stego image |
| 1: X* ← X |
| 2: SET selected_Γ |
| 3: compute forward derivative JF(X*) |
| 4: selected_Γ ← saliency_map(JF(X*), Γ, Y, len(M)) |
| 5: embed M into selected_Γ |
| 6: return X* |

In Algorithm 1, to obtain X*, forward derivative JF(X*) and saliency map should be calculated. To this end, Algorithm 2 calculates the saliency map, which is called to select a pixel set for embedding in Algorithm 1. In the saliency map, the forward derivative for the non-stego class of pixel p is assigned to α, and the forward derivative for the stego class is assigned to β, respectively. If α is greater than 0 and β is less than 0 (i.e., if pixel p increases the probability of being classified as a non-stego class), it is included in the pixel set for embedding. At this point, pixels are selected in the unit of N for each calculation of the saliency map. For example, when N = 1000 and the length of the secret message M = 10,000 bits, the calculation of the saliency map is conducted 10 times.

| Algorithm 2: Saliency map to select a pixel set for embedding |
| --- |
| Input: |
| JF(X*): forward derivative (Jacobian matrix) of an input image |
| Γ: full set of pixels in a cover image X |
| t: target class |
| len(M): length of a secret message |
| Output: |
| selected_Γ: the set of pixels selected using the saliency_map |
| 1: SET selected_Γ |
| 2: SET N // a unit of pixels to select |
| 3: iteration = len(M)/N // the number of calculations for Saliency Map |
| 4: for i ← 0 to (iteration − 1) do |
| 5: for j ← 0 to (N − 1) do |
| 6: α ← ∂F_t(X*)/∂x_p, β ← Σ_{j≠t} ∂F_j(X*)/∂x_p // for a pixel p ∈ Γ |
| 7: if α > 0 and β < 0 then |
| 8: add pixel p to selected_Γ |
| 9: return selected_Γ |
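One possible reading of Algorithm 2 is sketched below in Python. It is not the authors' implementation: it assumes that the forward derivatives are given as fixed per-pixel lists, that each round picks up to N not-yet-selected pixels with α > 0 and β < 0, that pixels with larger α·|β| are preferred, and that the number of rounds is rounded up when len(M) is not a multiple of N. The name `select_pixel_set` and the sample values are hypothetical.

```python
def select_pixel_set(d_target, d_other, message_len, unit):
    """Select pixels in rounds of `unit`, keeping only those with alpha > 0 and beta < 0."""
    selected = []
    chosen = set()
    n_rounds = -(-message_len // unit)  # ceil(len(M) / N)
    for _ in range(n_rounds):
        # Candidates: unselected pixels that reinforce the target (non-stego) class.
        candidates = [
            p for p in range(len(d_target))
            if p not in chosen and d_target[p] > 0 and d_other[p] < 0
        ]
        # Assumption: visit candidates in descending order of alpha * |beta|.
        candidates.sort(key=lambda p: d_target[p] * abs(d_other[p]), reverse=True)
        for p in candidates[:unit]:
            selected.append(p)
            chosen.add(p)
    return selected


# Hypothetical derivatives for six pixels; message of 4 bits, N = 2.
d_target = [0.3, -0.1, 0.2, 0.05, 0.4, 0.1]
d_other = [-0.3, 0.1, 0.4, -0.05, -0.1, -0.2]

selected = select_pixel_set(d_target, d_other, message_len=4, unit=2)
print(selected)
```

With N = 2 and a 4-bit message, two rounds are run and four qualifying pixels are returned, mirroring the N = 1000, len(M) = 10,000 example in which the saliency map is calculated 10 times.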

4. Experiments

4.1. Purpose and Procedures

The main purpose of the experiments is to evaluate the performance of our proposed method by comparing with existing methods in terms of the following three performance metrics: attack performance, the similarity between a cover image and an adversarial stego image, and time taken for generating an adversarial stego image.

The detailed experimental setup and procedures are as follows:

- Experimental Platform and Program: For the experimental platform, we used Google Colab Pro (Intel Xeon CPU 2.20 GHz and NVIDIA A100 with Ubuntu 20.04.6 LTS). All programs for the experiments were written in Python v.3.10.12.

- Preparing Image Dataset: For the cover image dataset to train the target steganalysis model, we used the BOSSbase v.1.01 [32] and BOWS2 [33] datasets, which are commonly used in steganography and steganalysis studies. Each dataset contains 10,000 grayscale images with a resolution of 512 × 512. For our experiments, these images were resized to 256 × 256 to ensure the optimal performance of the models by making them the same as the dataset configuration used in the referenced studies.

- Generating Adversarial Stego Images: The adversarial stego images using the proposed method were generated by Algorithm 1 and Algorithm 2, as presented in Section 3. N in Algorithm 2 was set to 1000. We also utilized the Adversarial Robustness Toolbox (ART) v1.0.0 [34], an open-source library provided by IBM, as the baseline implementation of JSMA.

- Constructing the Target Steganalysis Model: For the target CNN-based image steganalysis model, we chose YeNet because its performance has been proven on the datasets most used in steganography and steganalysis research, such as BOSSbase, which has made it the most widely used steganalysis model in existing studies. To train the model, 4000 images were randomly selected from the BOSSbase dataset, and all 10,000 images from the BOWS2 dataset were used. In addition, 1000 BOSSbase images were used for validation and 5000 for testing. This is the same setup as in the paper that proposed YeNet [9], chosen to ensure the optimal performance of the model. Additionally, we used adversarial stego images generated through each attack method. Because the experimental environment is set up identically for each attack method, the performance of the proposed method can be compared directly with that of the existing methods.

- Performance Testing and Experimental Results Analysis: For performance comparison, we compared our proposed method with two existing methods, WOW [15] and ADS [16]. For intuitive comparison, we used ADS-WOW because it is the most basic attack method that performs adversarial example attacks and steganography embedding separately without using any other networks. In addition, as the comparison baseline, we used WOW, which is a basic content-adaptive steganography method without evasion capabilities against the steganalysis model.
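The dataset split described above can be sketched as follows. This is an illustrative sketch only: the image identifiers, the fixed `seed`, and the function name are assumptions introduced here, and only the split sizes (4000 + 10,000 for training, 1000 for validation, 5000 for testing) come from the text.

```python
import random

def split_datasets(boss_ids, bows_ids, seed=0):
    """Split image identifiers into train / validation / test sets.

    boss_ids: identifiers of the 10,000 BOSSbase images.
    bows_ids: identifiers of the 10,000 BOWS2 images.
    seed: hypothetical fixed seed so the random split is repeatable.
    """
    rng = random.Random(seed)
    boss = list(boss_ids)
    rng.shuffle(boss)                      # random selection from BOSSbase
    train = boss[:4000] + list(bows_ids)   # 4000 BOSSbase + all BOWS2
    val = boss[4000:5000]                  # 1000 BOSSbase images
    test = boss[5000:10000]                # 5000 BOSSbase images
    return train, val, test
```

Shuffling once and slicing keeps the three BOSSbase subsets disjoint, so no test image is seen during training.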

4.2. Metrics for Evaluation and Comparison

For performance evaluation and comparison, we use the following three metrics in our experiments: (1) attack performance, (2) similarity between cover images and adversarial stego images, and (3) time taken for adversarial stego image generation.

4.2.1. Attack Performance

To evaluate adversarial stego image generation methods, it is common to use the Missed Detection Rate (MDR), False Alarm Rate (FAR), and Error Rate (ER). MDR is the rate at which adversarial stego images are misclassified as cover images, that is, how well they deceive the target steganalysis model, while FAR is the rate at which cover images are misclassified as stego images. ER is the average of MDR and FAR. Among these, we use MDR to evaluate the attack performance because ASIGM achieves both evasion of the classification model and message hiding with a single process of noise insertion, eliminating the intermediate step of generating adversarial cover images before creating adversarial stego images. Measuring FAR is therefore not meaningful here. MDR can be considered the attack success rate in this study and is calculated by (4):

$$\mathrm{MDR} = \frac{FN}{TP + FN} \tag{4}$$

In the equation, True Positive (TP) refers to a case in which the steganalysis model correctly classifies the stego image as the stego image, and False Negative (FN) refers to a case in which the model misclassifies the stego image as a cover image.
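As a small illustration, MDR can be computed from ground-truth labels and model predictions as the share of stego images classified as cover, FN / (TP + FN). The label encoding (1 for stego, 0 for cover) and the function name are assumptions made for this sketch.

```python
def missed_detection_rate(labels, predictions, stego=1):
    """Fraction of stego images that the model classifies as cover.

    labels / predictions: per-image class labels; `stego` marks the
    stego class (the 0/1 encoding is an assumption of this sketch).
    """
    pairs = list(zip(labels, predictions))
    tp = sum(1 for y, p in pairs if y == stego and p == stego)
    fn = sum(1 for y, p in pairs if y == stego and p != stego)
    if tp + fn == 0:
        raise ValueError("no stego images in the evaluation set")
    return fn / (tp + fn)

# Three of four stego images evade detection.
print(missed_detection_rate([1, 1, 1, 1], [0, 0, 0, 1]))  # 0.75
```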

4.2.2. Similarity Between Cover Images and Adversarial Stego Images

To check the similarity between cover images and adversarial stego images, we use two metrics that are commonly used in the literature: PSNR and SSIM.

- Peak Signal-to-Noise Ratio (PSNR)

PSNR is the ratio between the maximum possible power of a signal and the power of the corrupting noise that affects the fidelity of its representation. It is mainly used to evaluate information loss in image quality. PSNR is measured in dB and is calculated as in Equation (5):

$$\mathrm{PSNR} = 10 \log_{10}\left(\frac{MAX_I^{2}}{MSE}\right) \tag{5}$$

MAX_I is the maximum pixel value of the corresponding image, which is 255 for an 8-bit grayscale image. The Mean Squared Error (MSE) is the mean of the squared differences between corresponding pixel values of the original image and the image containing noise. The smaller the MSE, the larger the PSNR value; therefore, the better the image quality, the larger the PSNR value. By this formula, PSNR takes a value between 0 and ∞, and two images are generally considered highly similar if the PSNR is 30 dB or higher [35].
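The PSNR definition above can be written as a short NumPy function. This is a minimal sketch using the standard formula 10·log10(MAX_I² / MSE); the function and parameter names are chosen here for illustration.

```python
import numpy as np

def psnr(cover, stego, max_value=255.0):
    """PSNR in dB between two images of the same shape."""
    cover = np.asarray(cover, dtype=np.float64)
    stego = np.asarray(stego, dtype=np.float64)
    mse = np.mean((cover - stego) ** 2)    # mean squared pixel difference
    if mse == 0:
        return float("inf")                # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))
```

Converting to `float64` before subtracting avoids the wrap-around that would occur when differencing `uint8` arrays directly.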

- Structural Similarity Index (SSIM)

SSIM is an indicator that evaluates the similarity between two images in a way similar to the human visual system. For two images x and y, SSIM is calculated as

$$\mathrm{SSIM}(x, y) = l(x, y) \cdot c(x, y) \cdot s(x, y) \tag{6}$$

In (6), l represents luminance, c represents contrast, and s represents structure, so image quality is evaluated in these three aspects. SSIM ranges from −1 to 1, and the closer it is to 1, the more similar the two images are [36]. Typically, an SSIM value above 0.9 is considered to indicate high similarity between the two images.
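For illustration, the sketch below computes a simplified, global SSIM over the whole image. It is an assumption-laden simplification: the standard metric is evaluated over local windows and averaged, whereas this version uses a single window covering the full image, with the conventional stabilizing constants (0.01·L)² and (0.03·L)². It is not the implementation used in the experiments.

```python
import numpy as np

def global_ssim(x, y, max_value=255.0):
    """Single-window SSIM over whole images (simplified sketch)."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    c1 = (0.01 * max_value) ** 2           # stabilizes the luminance term
    c2 = (0.03 * max_value) ** 2           # stabilizes contrast/structure
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)
```

Identical images give a value of 1, and strongly anti-correlated images give a negative value, consistent with the −1 to 1 range stated above.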

4.2.3. Adversarial Stego Image Generation Time (ASIGT)

To measure the speed of each adversarial stego image generation method, we use the adversarial stego image generation time (ASIGT), which measures the time required to generate one adversarial stego image by a particular adversarial stego image generation method.
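One way to measure ASIGT is to time the generation call over a set of cover images and average the result. In this sketch, `generate` is a hypothetical callable standing in for any of the compared generation methods.

```python
import time

def average_generation_time(generate, covers):
    """Average wall-clock seconds needed to process one cover image.

    generate: hypothetical callable that maps a cover image to an
              adversarial stego image.
    covers:   iterable of cover images.
    """
    count = 0
    start = time.perf_counter()
    for cover in covers:
        generate(cover)
        count += 1
    elapsed = time.perf_counter() - start
    if count == 0:
        raise ValueError("no cover images were provided")
    return elapsed / count
```

`time.perf_counter` is used because it is a monotonic, high-resolution clock intended for measuring short durations.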

4.3. Results and Analysis

4.3.1. Attack Performance: Missed Detection Rate (MDR)

Table 1 shows the MDR for YeNet for each embedding method, and the experimental results are as follows.

Table 1.

MDR (%) of each method.

First, the proposed method (ASIGM) showed a higher MDR than both ADS-WOW and WOW. It is not surprising that WOW has the lowest MDR, ranging from 0 to 10%, since it is a basic steganography embedding method without evasion capabilities against the classification model, and we used it as the comparison baseline. ADS-WOW, which is designed with adversarial example attacks, demonstrated a considerably higher MDR than WOW, ranging from 78% to 85%. The MDR of our proposed method was 97.9% and 96.1% when bpp = 0.2 and 0.4, respectively. Specifically, the maximum improvement in MDR was 95.2 percentage points compared to WOW and up to 17.7 percentage points compared to ADS-WOW when bpp = 0.4. The difference in MDR between ADS-WOW and ASIGM arises from the difference in noise insertion steps and algorithms. ADS-WOW first generates an adversarial cover image and then embeds the message. If the impact of embedding is greater than the reinforcement strength, it fails to induce misclassification. In contrast, our ASIGM performs both evasion of the steganalysis model and message embedding in a single process of noise insertion. This approach eliminates the possibility that a subsequent embedding step changes the classification result.

Second, all methods showed a higher MDR at the lower payload (bpp = 0.2). bpp (bits per pixel) is the amount of hidden data divided by the total number of pixels, so the higher the bpp, the more message data are hidden in the stego image. A low bpp therefore produces fewer alterations that the steganalysis model can detect.
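To make the payload sizes concrete, the sketch below converts bpp into a number of message bits for the 256 × 256 images used in the experiments. The function name is an assumption, and fractional bits are truncated.

```python
def payload_bits(bpp, height=256, width=256):
    """Number of whole message bits that a given bpp corresponds to."""
    return int(bpp * height * width)

for bpp in (0.2, 0.4):
    print(bpp, payload_bits(bpp))
# 0.2 13107
# 0.4 26214
```

Doubling the payload from 0.2 to 0.4 bpp doubles the number of embedded bits, which is consistent with the lower MDR observed at the higher payload.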

4.3.2. Similarity Between Cover Images and Adversarial Stego Images

Table 2 and Table 3 show the PSNR scores and the SSIM scores of the adversarial stego images generated by embedding methods, respectively. We explain experimental results in turn as follows.

Table 2.

Average PSNR (dB) scores of adversarial stego images generated by each method.

Table 3.

SSIM scores of adversarial stego images generated by each method.

First, our ASIGM outperformed ADS-WOW in PSNR, which means that ASIGM can generate adversarial stego images that are much closer to the cover images than those of ADS-WOW. Since our proposed method inserts only as much noise as the length of the secret message requires, its PSNR scores were much higher than those of ADS-WOW, which was designed using FGSM based on the l∞ norm and inserts noise into all pixels. Our ASIGM has a slightly lower PSNR than WOW; however, as explained above, WOW has a very low attack performance (MDR) against YeNet.

Second, WOW and the proposed method showed similar SSIM scores, slightly higher than those of ADS-WOW. Two images are generally considered similar when SSIM is above 0.9. The SSIM scores of all methods were 0.99 or higher, and there was no significant difference among them.

Although SSIM was introduced to provide an improved measure of perceived difference between two images, the experimental results show that the SSIM values for all three methods (WOW, ADS-WOW, and ASIGM) were above 0.99, indicating that all methods generated perceptually very similar images. As such, SSIM did not provide significant discriminatory value compared to PSNR in this context. On the other hand, the PSNR values presented in Table 2 show more noticeable differences between the methods. PSNR values above 30 dB are generally considered good, and while all methods performed well in terms of perceptual quality, the PSNR results indicate that ASIGM generated adversarial stego images with a quality much closer to the original cover images. Specifically, ASIGM achieved a PSNR of 61.18, which is much higher than the 49.45 of ADS-WOW, which suggests that our ASIGM introduced less distortion into the image while achieving a higher attack performance.

In summary, according to the PSNR and SSIM results, we consider that our ASIGM outperforms the existing ADS-WOW and does not significantly lower the perceptual quality of cover images.

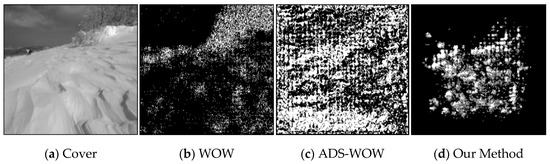

Additionally, Figure 7 visually shows the noise inserted when creating an image with each method. This comparison reveals the difference in the morphological characteristics of each noise, and the differences in the noise patterns help clarify the distinct attack objectives pursued by each method. In the case of WOW, the noise is inserted mainly around the edges of the objects in the image so that the distortion is not easily noticeable by the human eye. In ADS-WOW, on the other hand, the adversarial example attack is based on FGSM with the l∞ norm, which results in noise being distributed more uniformly across the entire image. This method is less concerned with perceptual distortion and more focused on altering the image in a way that maximizes the attack’s impact on the steganalysis model. ASIGM, which is based on JSMA as introduced in Section 2.3, inserts noise primarily into the pixels that are most likely to influence the steganalysis classifier’s decision, thus targeting the specific regions of the image that are crucial for classification. This explains the bias towards certain pixels in the noise distribution observed in our method.

Figure 7.

An original cover image and noises inserted by methods when bpp = 0.4. (a) shows the original cover image, and (b–d) show noises inserted by WOW, ADS-WOW, and our method, respectively.
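A noise map of the kind compared in Figure 7 can be obtained as the signed pixel-wise difference between a stego image and its cover. The sketch below is a generic illustration with assumed function names, not the code used to produce the figure.

```python
import numpy as np

def noise_map(cover, stego):
    """Signed per-pixel difference, stego minus cover."""
    # int16 avoids the wrap-around that uint8 subtraction would cause.
    return np.asarray(stego, dtype=np.int16) - np.asarray(cover, dtype=np.int16)

def change_rate(cover, stego):
    """Fraction of pixels that differ between cover and stego."""
    diff = noise_map(cover, stego)
    return float(np.count_nonzero(diff) / diff.size)
```

A method that perturbs every pixel yields a change rate near 1, whereas a method that only modifies the pixels carrying message bits yields a rate that grows with the payload.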

4.3.3. Adversarial Stego Image Generation Time (ASIGT)

Table 4 shows the adversarial stego image generation time (ASIGT) of the proposed method and ADS-WOW and the results are as follows.

Table 4.

Adversarial stego image generation time for each method (in seconds).

First, the proposed method generated adversarial stego images much faster than ADS-WOW. One drawback of the original JSMA is its very high time complexity. Whereas the original JSMA recomputes the saliency map for every two pixels, we modified JSMA so that the saliency map is computed once per 1000 pixels. This change is possible because the original JSMA aims to deceive the DL model by perturbing as few pixels as possible, whereas the proposed method must insert as much noise as the length of the secret message requires. Selecting more than 1000 pixels at once would reduce the computational cost further, but the MDR may decrease. Therefore, the number of pixels selected per saliency map computation involves a trade-off between the MDR and the computational complexity of our method.
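The effect of the selection unit on the number of saliency map computations can be estimated with simple arithmetic. The sketch below assumes that one pixel is selected per message bit and that the iteration count len(M)/N from Algorithm 2 is rounded up; both are assumptions made for illustration.

```python
import math

def saliency_computations(message_len, unit):
    """Saliency-map computations needed to select message_len pixels."""
    return math.ceil(message_len / unit)

pixels = 256 * 256
for bpp in (0.2, 0.4):
    message_len = int(bpp * pixels)        # assumed: one pixel per bit
    per_pair = saliency_computations(message_len, 2)
    per_1000 = saliency_computations(message_len, 1000)
    print(bpp, per_pair, per_1000)
# 0.2 6554 14
# 0.4 13107 27
```

Under these assumptions, selecting 1000 pixels per saliency map reduces the number of Jacobian evaluations by roughly a factor of 500 compared with selecting two pixels at a time.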

Second, ADS-WOW required nearly the same time to generate one adversarial stego image regardless of the bpp value, but the ASIGT of our method grows as bpp increases. ADS-WOW always inserts noise into the entire image, so the amount of noise inserted is not significantly affected by the length of the message. The proposed method, on the other hand, inserts an amount of noise equal to the size of the message. Accordingly, the longer the message, the greater the amount of noise inserted.

Additionally, the suitability of the ASIGM method for real-time processing depends on the specific application requirements. The proposed method generates adversarial stego images in an average time of approximately 0.6 s. Therefore, for applications that can accommodate this generation time, the method may be considered suitable for real-time processing.

5. Conclusions and Future Works

In this paper, we proposed an innovative adversarial stego image generation method (ASIGM) based on JSMA to avoid detection by CNN-based steganalysis models. In our proposed method, JSMA’s saliency map is computed for a cover image to distinguish pixels that increase the probability of being classified as the non-stego class from pixels that increase the probability of being classified as the stego class. We then restrict message embedding to the pixels that increase the probability of being classified as the non-stego class. The adversarial stego image generated by this process contains the hidden message but is classified as a non-stego image by the steganalysis model. Therefore, the proposed method is an efficient and effective way to perform misclassification induction and message insertion simultaneously with only one step of pixel distortion. In experiments, we evaluated the attack performance of the generated adversarial stego images, their similarity to the cover images, and the adversarial stego image generation time, demonstrating that the proposed method is superior to the existing methods.

Our future research directions are as follows. First, we will focus on enhancing the effectiveness of our method with higher payloads. As discussed in Section 4.3.1, both our method and existing approaches experience a decline in performance at higher bpp, as embedding more data increases detectability by steganalysis models. By addressing this challenge, we aim to enhance the robustness of our method so that it better maintains performance even with larger payloads. Second, we will focus on enhancing the method’s resistance to various types of attacks. Specifically, we aim to improve the robustness of ASIGM against geometric and transformation attacks (e.g., rotation, scaling, cropping, and flipping), noise attacks (e.g., Gaussian noise and salt-and-pepper noise), lossy compression (e.g., JPEG), and frequency-domain transformations (e.g., DCT), further strengthening its effectiveness in practical scenarios. Third, we will extend our study by applying our combined approach to adversarial attacks based on other norm distances and conduct experiments to compare their performance in terms of undetectability and indistinguishability. Fourth, we will consider a scenario where the message is embedded across multiple images instead of a single image. With the rise of cloud technology, cloud storage has become a prominent solution for managing large collections of digital images, which offers a new approach for steganography to embed secret information across multiple images [37]. Last, we will extend the proposed method from grayscale images to color images and then carry out attacks on color image steganalysis. To do this, we will examine state-of-the-art color image steganography methods, such as ACMP (Amplifying Channel Modification Probabilities) [38], and color image steganalysis models, such as UCNet [39].

Author Contributions

Conceptualization, M.K. and Y.C.; Methodology, Y.C.; Software, M.K. and H.P.; Validation, M.K. and H.P.; Resources, M.K. and H.P.; Data curation, M.K. and H.P.; Writing—original draft, M.K.; Writing—review & editing, Y.C. and G.Q.; Visualization, M.K.; Supervision, Y.C.; Project administration, Y.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are available on request from the corresponding author due to a patent application.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Provos, N.; Honeyman, P. Hide and seek: An introduction to steganography. IEEE Secur. Priv. 2003, 1, 32–44.

- Martín, A.; Hernández, A.; Alazab, M.; Jung, J.; Camacho, D. Evolving Generative Adversarial Networks to improve image steganography. Expert Syst. Appl. 2023, 222, 119841.

- Johnson, N.F.; Jajodia, S. Exploring Steganography: Seeing the Unseen. Computer 1998, 31, 26–34.

- Chaumont, M. Deep learning in steganography and steganalysis. Digit. Media Steganography 2020, 321–349.

- Li, B.; He, J.; Huang, J.; Shi, Y.-Q. A Survey on Image Steganography and Steganalysis. J. Inf. Hiding Multimed. Signal Process. 2011, 2, 142–172. Available online: https://www.jihmsp.org/2011/vol2/JIH-MSP-2011-03-005.pdf (accessed on 14 January 2025).

- Qian, Y.; Dong, J.; Wang, W.; Tan, T. Deep Learning for Steganalysis via Convolutional Neural Networks. Media Watermarking Secur. Forensics 2015, 9409, 171–180.

- Reinel, T.-S.; Raúl, R.-P.; Gustavo, I. Deep Learning Applied to Steganalysis of Digital Images: A Systematic Review. IEEE Access 2019, 7, 68970–68990.

- Xu, G.; Wu, H.-Z.; Shi, Y.-Q. Structural design of convolutional neural networks for steganalysis. IEEE Signal Process. Lett. 2016, 23, 708–712.

- Ye, J.; Ni, J.; Yi, Y. Deep learning hierarchical representations for image steganalysis. IEEE Trans. Inf. Forensics Secur. 2017, 12, 2545–2557.

- Boroumand, M.; Chen, M.; Fridrich, J. Deep Residual Network for Steganalysis of Digital Images. IEEE Trans. Inf. Forensics Secur. 2019, 14, 1181–1193.

- Akhtar, N.; Mian, A. Threat of Adversarial Attacks on Deep Learning in Computer Vision: A Survey. IEEE Access 2018, 6, 14410–14430.

- Zhang, L.; Jiang, C.; Chai, Z.; He, Y. Adversarial attack and training for deep neural network based power quality disturbance classification. Eng. Appl. Artif. Intell. 2024, 127, 107245.

- Amini, S.; Heshmati, A.; Ghaemmaghami, S. Fast adversarial attacks to deep neural networks through gradual sparsification. Eng. Appl. Artif. Intell. 2024, 127, 107360.

- Papernot, N.; McDaniel, P.; Jha, S. The limitations of deep learning in adversarial settings. In Proceedings of the 2016 IEEE European Symposium on Security and Privacy (EuroS&P), Saarbruecken, Germany, 21–24 March 2016; pp. 372–387.

- Holub, V.; Fridrich, J. Designing Steganographic Distortion Using Directional Filters. In Proceedings of the 2012 IEEE International Workshop on Information Forensics and Security (WIFS), Costa Adeje, Spain, 2–5 December 2012; pp. 234–239.

- Zhang, Y.; Zhang, W.; Chen, K.; Liu, J.; Liu, Y.; Yu, N. Adversarial examples against deep neural network based steganalysis. In Proceedings of the 2018 6th ACM Workshop on Information Hiding and Multimedia Security (IH&MMSec ’18), Innsbruck, Austria, 20–22 June 2018; pp. 67–72.

- Hussain, M.; Wahab, A.W.A.; Idris, Y.I.B.; Ho, A.T.; Jung, K.-H. Image steganography in spatial domain: A survey. Signal Process. Image Commun. 2018, 65, 46–66.

- Holub, V.; Fridrich, J.; Denemark, T. Universal distortion function for steganography in an arbitrary domain. EURASIP J. Inf. Secur. 2014, 2014, 1.

- Li, B.; Wang, M.; Huang, J.; Li, X. A new cost function for spatial image steganography. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; pp. 4206–4210.

- Fridrich, J.; Kodovsky, J. Rich Models for Steganalysis of Digital Images. IEEE Trans. Inf. Forensics Secur. 2012, 7, 868–882.

- Lecun, Y.; Bengio, Y.; Hinton, G. Deep Learning. Nature 2015, 521, 436–444.

- Szegedy, C.; Zaremba, W.; Sutskever, I.; Bruna, J.; Erhan, D.; Goodfellow, I.; Fergus, R. Intriguing properties of neural networks. arXiv 2013.

- Vorobeychik, Y.; Kantarcioglu, M. Adversarial Machine Learning; Morgan & Claypool: San Rafael, CA, USA, 2018.

- Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and harnessing adversarial examples. arXiv 2014.

- Moosavi-Dezfooli, S.-M.; Fawzi, A.; Frossard, P. Deepfool: A simple and accurate method to fool deep neural networks. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2574–2582.

- Tang, W.; Li, B.; Tan, S.; Barni, M.; Huang, J. CNN-based adversarial embedding for image steganography. IEEE Trans. Inf. Forensics Secur. 2019, 14, 2074–2087.

- Yang, J.; Liao, X. ACGIS: Adversarial Cover Generator for Image Steganography with Noise Residuals Features-Preserving. Signal Process. Image Commun. 2023, 113, 116927.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144.

- Shi, H.; Dong, J.; Wang, W.; Qian, Y.; Zhang, X. SSGAN: Secure steganography based on generative adversarial networks. Pac. Rim Conf. Multimed. 2017, 534–544.

- Tan, J.; Liao, X.; Liu, J.; Cao, Y.; Jiang, H. Channel Attention Image Steganography With Generative Adversarial Networks. IEEE Trans. Netw. Sci. Eng. 2021, 9, 888–903.

- Liu, L.; Liu, X.; Wang, D.; Yang, G. Enhancing image steganography security via universal adversarial perturbations. Multimed Tools Appl. 2025, 84, 1303–1315.

- Bas, P.; Filler, T.; Pevný, T. Break our steganographic system: The ins and outs of organizing BOSS. Int. Workshop Inf. Hiding 2011, 59–70.

- Bas, P.; Furon, T. BOWS-2. 2007. Available online: https://data.mendeley.com/datasets/kb3ngxfmjw/1 (accessed on 14 January 2025).

- Nicolae, M.-I.; Mathieu, S.; Tran, M.N.; Buesser, B.; Rawat, A.; Wistuba, M.; Zantedeschi, V.; Baracaldo, N.; Chen, B.; Ludwig, H.; et al. Adversarial Robustness Toolbox v1.0.0. arXiv 2019.

- Saeed, A.; Islam, M.B.; Islam, M.K. A Novel Approach for Image Steganography Using Dynamic Substitution and Secret Key. Am. J. Eng. Res. 2013, 2, 118–126. Available online: https://www.ajer.org/papers/v2(9)/Q029118126.pdf (accessed on 14 January 2025).

- Ndajah, P.; Kikuchi, H.; Yukawa, M.; Watanabe, H.; Muramatsu, S. SSIM image quality metric for denoised images. In Proceedings of the 3rd WSEAS International Conference on Visualization, Imaging and Simulation, VIS’10, Faro, Portugal, 3–5 November 2010; pp. 53–57. Available online: https://dl.acm.org/doi/abs/10.5555/1950211.1950221 (accessed on 14 January 2025).

- Liao, X.; Yin, J.; Chen, M.; Qin, Z. Adaptive Payload Distribution in Multiple Images Steganography Based on Image Texture Features. IEEE Trans. Dependable Secur. Comput. 2022, 19, 897–911.

- Liao, X.; Yu, Y.; Li, B.; Li, Z.; Qin, Z. A New Payload Partition Strategy in Color Image Steganography. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 685–696.

- Wei, K.; Luo, W.; Tan, S.; Huang, J. Universal Deep Network for Steganalysis of Color Image Based on Channel Representation. IEEE Trans. Inf. Forensics Secur. 2022, 17, 3022–3036.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).