Abstract

Millimeter-wave (mmWave) radio access networks (RANs) face significant security risks at the beam level, where attackers can compromise network integrity and user privacy. This paper proposes BeamSecure-AI, an artificial intelligence-based framework that detects and mitigates beam-level attacks in mmWave RANs in real time. The proposed system combines deep reinforcement learning with explainable AI modules so that it can detect threats dynamically while keeping its decision-making transparent. We mathematically formulate dynamic beam alignment patterns and cover multi-dimensional feature extraction across the spatial, temporal, and spectral domains. Experimental results across a range of attack scenarios show a detection rate of 96.7%, a response latency of 42.5 ms, and false-positive rates ≤2.3%, improving on the compared methods. The framework can detect complex attacks such as beam stealing, jamming, and spoofing while maintaining low false-positive rates and consistent performance across urban, suburban, and rural deployment scenarios.

1. Introduction

The rise of millimeter-wave (mmWave) technology in fifth-generation and beyond wireless networks has transformed high-speed communications while introducing new security risks at the beam level. Conventional security methods designed for omnidirectional communication systems are ineffective for mmWave beamforming systems because of their highly directional links [,]. The narrow beam patterns, frequent beam switching, and sensitivity to blockage inherent to mmWave communications open attack surfaces through which an adversary can compromise network integrity. Because the directionality of mmWave beams improves link efficiency but also exposes narrow attack surfaces, integrating Intelligent Reflecting Surfaces (IRS) has recently emerged as a promising physical-layer enhancement technique. IRS-aided networks can dynamically reconfigure the propagation environment to strengthen confidentiality and mitigate eavesdropping and jamming risks. Recent work by Chu et al. [] demonstrates that adaptive IRS-based beam manipulation significantly enhances channel robustness and security in 6G-oriented mmWave systems.

Recent studies have identified severe weaknesses in the mmWave beam alignment procedure: an adversary may intercept, eavesdrop on, or redirect beams to gain unauthorized access or to block communication []. Beam management in dense urban deployments is highly dynamic, which makes security harder to enforce because legitimate beam adjustments can mask malicious activity []. Conventional cryptographic techniques cannot withstand physical-layer attacks that exploit the properties of mmWave propagation.

Previous research has focused on detection mechanisms for particular attack vectors; however, these solutions do not cover the full range of emerging threats, nor do they provide a timely response to such attacks. The need to distinguish genuine beam adaptations from malicious interventions calls for intelligent systems that can learn the patterns produced by different attacks [].

In addition, the tight latency constraints of mmWave applications necessitate lightweight detection algorithms that can run without degrading network performance []. Artificial intelligence has emerged as a promising approach to these challenges because it can handle complex, high-dimensional data and adapt to dynamic environments. Machine learning methods have previously been shown to be effective in detecting network-based intrusions. However, little research has applied them to beam-level security in mmWave systems [].

Integrating explainable AI (XAI) components is also important for keeping security decision-making transparent and for enabling network operators to understand and verify automated actions []. Existing security frameworks are poorly matched to the unique demands of beam-level protection in mmWave systems, which motivates new AI-based solutions. Existing methods cover the attack space poorly, consume excessive computational resources, lack interpretability, and do not adapt to different deployment scenarios. The lack of comprehensive evaluation frameworks also hinders the improvement and adoption of effective security systems in mmWave networks [].

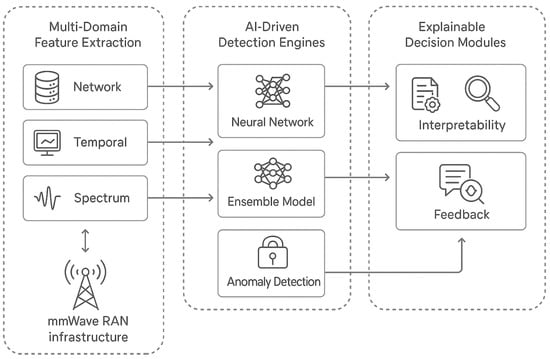

Figure 1 illustrates the overall architecture of the proposed BeamSecure-AI framework, highlighting the integration of multi-domain feature extraction, AI-driven detection engines, and explainable decision modules within the mmWave RAN infrastructure. The system operates across multiple network layers to provide comprehensive beam-level security coverage while maintaining compatibility with existing network protocols and standards.

Figure 1.

BeamSecure-AI framework architecture showing the integration of multi-domain feature extraction, AI-driven detection engines, and explainable decision modules within mmWave RAN infrastructure.

The main contributions of this work are as follows:

- A novel AI-driven framework combining deep reinforcement learning, multi-domain feature extraction, and explainable AI for comprehensive beam-level attack detection in mmWave RAN systems.

- A complete mathematical formulation modeling dynamic beam alignment patterns, spatial–temporal correlations, and multi-dimensional threat indicators for real-time security assessment.

- Integration of explainable AI modules providing transparent, interpretable security decisions while maintaining real-time operation capabilities for network operator validation.

- Benchmarking against ten state-of-the-art methods demonstrating improved detection accuracy of 96.7% and reduced response latency of 42.5 ms across diverse attack scenarios.

- Scalability validation across urban, suburban, and rural deployment scenarios confirming robust performance with consistent security metrics and false-positive rates ≤2.3%.

2. Related Work

Hoang et al. [] conducted a comprehensive survey of adversarial attacks on AI-driven applications in sixth-generation networks, identifying critical vulnerabilities in mmWave and terahertz communications. According to their findings, beam-level attacks are a significant threat class that requires dedicated countermeasures. Catak et al. [] analyzed the security of machine learning applied to mmWave beam prediction, showing that such AI-based beam management systems are vulnerable to adversarial attacks. Their work pointed to the importance of robust defense mechanisms that preserve prediction accuracy even under attack. The authors introduced adversarial training techniques but acknowledged that these were unsuitable for advanced attack patterns. Chu et al. [] proposed an adaptive channel estimation method for IRS-aided mmWave communications, showing that IRS-assisted beam control can enhance physical-layer security by mitigating eavesdropping and jamming risks. Their findings confirm that IRS integration improves both channel robustness and link confidentiality in dynamic 6G environments.

Kim et al. [] introduced new adversarial attack strategies against deep learning-based mmWave beam prediction systems. Their study showed that carefully crafted perturbations could influence beam selection decisions, lowering communication quality and potentially enabling security breaches. The paper highlighted the urgent need for mechanisms that detect such small-scale manipulations in real time. Wang et al. [] proposed a spoofing attack detection technique tailored to mmWave IEEE 802.11ad networks that uses beam characteristics to identify spoofing. Their approach was effective in laboratory settings but performed poorly in dynamic conditions where beams change quickly. The paper introduced foundational building blocks of physical-layer security in mmWave.

Li et al. [] proposed SecBeam, a secure beam alignment protocol that detects amplify-and-relay attacks in mmWave communications. Their solution was based on power randomization and angle-of-arrival analysis and required modifications to existing standards. The solution showed promise for specific threat types but did not cover a wide range of threat vectors. Dinh-Van et al. [] presented a countermeasure against beam training attacks that uses autoencoder-based detection and mitigation. They improved the signal-to-interference-plus-noise ratio, but the method's computational cost was prohibitive, which precluded real-time use. The study illustrated the trade-off between detection accuracy and computation speed.

Zheng et al. [] explored the security risks of vision-based beam prediction systems, studying spatial proxy attacks and proposing post-reference feature enhancement blocks as a defense. They were the first to uncover weaknesses in camera-aided beam selection and proposed ways to improve its resilience. Nonetheless, the method was restricted to vision-based systems and did not address beam-level intrusions in general. Khan et al. [] proposed a digital twin-assisted method for robust beam prediction using explainable AI components. Their approach showed greater interpretability and lower data requirements, but it targeted beam prediction accuracy and paid little attention to security. The integration of explainable AI provided valuable insights for understanding model decisions.

Paltun and Sahin [] presented a robust intrusion detection system incorporating explainable artificial intelligence for enhanced transparency. Their work addressed general network intrusion detection with promising results but lacked specialization for mmWave beam-level attacks. The explainability framework provided important foundations for transparent security decisions. Rahman et al. [] conducted a comprehensive review of AI-driven detection techniques for cybersecurity applications, identifying key challenges and opportunities in the field. Their analysis revealed gaps in specialized detection mechanisms for emerging communication technologies, particularly in mmWave and terahertz systems.

Kuzlu et al. [] explored adversarial defenses for mmWave beamforming prediction models, including defensive distillation and adversarial retraining. Their work demonstrated better resistance to known attack models but was inadequate against new attack vectors. The study provided important baseline methods for adversarial defense. Moore et al. [] examined practical deployments of Open RAN with secure slicing, addressing security issues in disaggregated network architectures. Their contribution provided insight into securing next-generation RANs but not at the beam level.

Recent advances in mmWave and wireless security have introduced several intelligent and context-aware frameworks. Li et al. [] presented Enhanced SecBeam, which improves beam alignment security through machine-learning-assisted adaptation. Chen et al. [] developed AdaptiveGuard, a context-aware scheme for dynamic mmWave network protection, while Wang et al. [] proposed MLShield to safeguard mmWave communications using deep learning. Liu et al. [] introduced DeepDefender for neural intrusion detection, and Zhang et al. [] proposed ResilientBeam, a robust beamforming method resilient to complex adversarial attacks. Additional frameworks such as SmartGuardian [], FlexiSecure [], and NeuralWatch [] explore intelligent, adaptive protection for 5G and IoT infrastructures, while AutoProtect [] and IntelliGuard [] provide autonomous and cognitive security capabilities. At the physical layer, Hashemi Natanzi et al. [] and Celik et al. [] proposed proactive and practical mmWave defense schemes, complemented by Saini and Dhingra [] who enhanced MIMO security via adversarial training. Further studies including Hung et al. [], Alsharif et al. [], and Oladipupo et al. [] have contributed deep-learning-based intrusion detection for IoT networks, and Xu et al. [] and Liu et al. [] have examined hybrid beamforming and reliability-security trade-offs in radar-communication and CPS systems. Collectively, these works form the analytical foundation and benchmarking context for the comparative evaluation presented in this paper.

Despite significant progress in the literature, existing works remain primarily limited in scope and integration. Most prior studies focus on isolated components—such as beam alignment, channel estimation, or intrusion detection—without offering a unified, real-time defense mechanism that combines detection accuracy, explainability, and scalability. Additionally, current solutions often lack adaptability across dynamic mmWave environments, and only a few address transparent, interpretable decision-making under adversarial conditions. These gaps highlight the need for an integrated framework like BeamSecure-AI, which jointly leverages deep reinforcement learning and explainable AI to deliver interpretable, low-latency, and resilient beam-level attack detection across heterogeneous 6G deployment scenarios.

3. Proposed Methodology

BeamSecure-AI uses a multi-layered architecture designed to identify beam-level attacks while operating in real time. It combines three main modules: multi-domain feature extraction, AI-driven threat detection, and explainable decision modules. The components work together to provide comprehensive security protection across a variety of attack scenarios.

The multi-domain feature extraction subsystem observes multi-dimensional beam alignment patterns across the spatial, temporal, and spectral domains to capture as many threat indicators as possible. Spatial features consist of beam direction vectors, antenna gain patterns, and spatial positioning data. Temporal features encompass beam switching frequencies, alignment durations, and handover patterns. Spectral features monitor signal strength variations, interference patterns, and channel quality indicators. This broad feature space allows detection of subtle attack traces that a single-domain system would overlook.

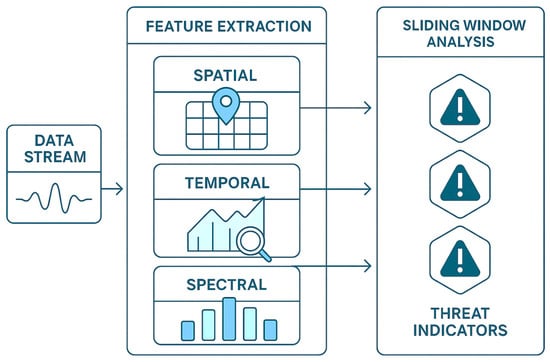

Figure 2 shows the steps of multi-domain feature generation, in which spatial, temporal, and spectral features are extracted systematically. The system uses sliding-window mechanisms to capture both short-term and long-term patterns, which helps detect attacks that unfold over long periods. Normalization and dimensionality reduction keep computation efficient at the desired granularity without discarding the most relevant threat indicators.

Figure 2.

Multi-domain feature extraction process showing spatial, temporal, and spectral feature collection with sliding window analysis for comprehensive threat indicator capture.
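The sliding-window step can be illustrated with a minimal sketch. It assumes a plain list of RSSI samples and three illustrative per-window statistics; the helper names, the window length, the step size, and the chosen statistics are all assumptions made for this example and are not the feature set used by BeamSecure-AI.

```python
from statistics import mean, pstdev

def sliding_windows(samples, window, step):
    """Yield consecutive windows of `window` samples, advancing by `step`."""
    for start in range(0, len(samples) - window + 1, step):
        yield samples[start:start + window]

def window_features(rssi_window):
    """Summarise one window with simple, illustrative statistics (assumed set)."""
    # Largest sample-to-sample jump: a crude indicator of abrupt beam changes.
    max_jump = max(abs(b - a) for a, b in zip(rssi_window, rssi_window[1:]))
    return {
        "mean": mean(rssi_window),
        "std": pstdev(rssi_window),
        "max_jump": max_jump,
    }

# Hypothetical RSSI trace in dBm with one abrupt drop at index 4.
rssi = [-60.0, -61.0, -60.5, -59.5, -75.0, -60.0, -61.0, -60.0]
features = [window_features(w) for w in sliding_windows(rssi, window=4, step=2)]
```

Running the same helper with a short and a long window over the same trace would give the short-term and long-term views that the text describes.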

The deep reinforcement learning engine allows the AI-based threat detector to learn and adapt to emerging attacks so that accuracy remains consistently high. The architecture is a hybrid that integrates convolutional neural networks for spatial pattern detection, recurrent neural networks for interpreting long time series, and attention mechanisms for weighting feature relevance. The design scales to high-dimensional feature spaces without loss of computational efficiency.

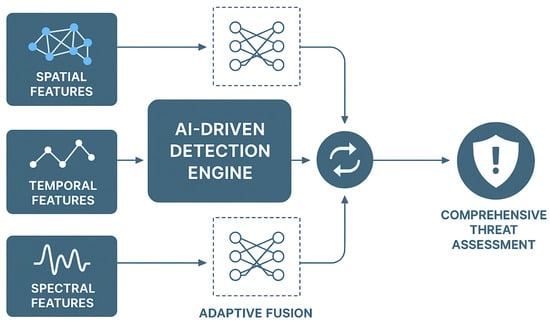

Figure 3 presents the detailed architecture of the AI-driven detection engine, highlighting the integration of multiple neural network components and their interconnections. The system processes extracted features through parallel pathways specialized for different threat types, with fusion layers combining outputs for final threat assessment. Adaptive threshold mechanisms ensure optimal detection sensitivity across varying operational conditions.

Figure 3.

AI-driven detection engine architecture showing parallel processing pathways for spatial, temporal, and spectral features with adaptive fusion mechanisms for comprehensive threat assessment.

The explainable AI component provides transparent insights into detection decisions through multiple visualization and analysis techniques. Feature importance scoring identifies the most significant threat indicators for each detection event. Decision trees provide interpretable pathways from feature inputs to threat classifications. Attention heatmaps highlight spatial and temporal regions contributing to detection decisions. These explainability features enable network operators to validate automated responses and refine detection parameters. Table 1 shows the Acronyms and abbreviations. Table 2 shows the Mathematical variables.

- Nomenclature and Notations

Table 1.

Acronyms and abbreviations.

| Acronym | Definition |
|---|---|
| AI | Artificial Intelligence |
| CNN | Convolutional Neural Network |
| DRL | Deep Reinforcement Learning |
| FPR | False Positive Rate |
| IoT | Internet of Things |
| ML | Machine Learning |
| mmWave | Millimeter Wave |
| RAN | Radio Access Network |
| RF | Radio Frequency |
| RL | Reinforcement Learning |
| RNN | Recurrent Neural Network |
| RSSI | Received Signal Strength Indicator |
| SHAP | SHapley Additive exPlanations |
| SINR | Signal-to-Interference-plus-Noise Ratio |
| SNR | Signal-to-Noise Ratio |
| XAI | Explainable Artificial Intelligence |

Table 2.

Mathematical variables.

| Variable | Definition |
|---|---|
| $\mathbf{B}(t)$ | Beam alignment state vector at time $t$ |
| $\theta$, $\phi$ | Azimuth and elevation angles |
| $P_r$ | Received power |
| $\mathbf{R}_s$ | Spatial correlation matrix |
| $\mathbf{F}$ | Combined feature vector |
| $\alpha_i$ | Attention weight for feature $i$ |
| $C$ | Confidence score |
| $\mathcal{L}$ | Loss function |
| $\eta$ | Learning rate |
| $\lambda$ | Regularization parameter |
| $\tau$ | Adaptive threshold |
| $\phi_i$ | SHAP value for feature $i$ |
| $U$ | Uncertainty quantification |

The hyperparameter tuning procedure targets strong performance across a wide range of deployment scenarios. The most important hyperparameters are the sliding-window size used for temporal feature extraction, the learning rates for neural network training, and the detection threshold settings. The system uses Bayesian optimization to tune these parameters automatically based on past performance and current network status. Retraining is performed regularly, using new attack patterns and new network topologies, to maintain detection performance.

Threat Model

BeamSecure-AI is designed to detect multiple categories of beam-level attacks in mmWave RANs.

Each threat model defines the attacker’s capabilities, operating phase, and observable manifestations that the detector learns from spatial, temporal, and spectral features.

The system maps these indicators to mitigation actions within the RAN control loop.

Table 3 summarizes the distinct beam-level attack models considered in BeamSecure-AI. Each scenario specifies attacker capability, targeted RAN phase, key observable features leveraged for detection, and the corresponding mitigation action within the control loop.

Table 3.

Defined threat models for BeamSecure-AI.

4. Mathematical Modeling

The mathematical foundation of BeamSecure-AI models the beam alignment dynamics, the threat detection algorithms, and the explainability calculations. The framework builds on a basic beam alignment model that describes the spatial and temporal properties of mmWave communications.

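The beam orientation, signal intensity, and quality measures are tracked as a multi-dimensional state vector at time $t$:

$$\mathbf{B}(t) = \left[\theta(t),\ \phi(t),\ P_r(t),\ \mathrm{SNR}(t),\ \mathrm{RSSI}(t)\right]^{T} \quad (1)$$

where $\theta(t)$ and $\phi(t)$ denote the azimuth and elevation angles, $P_r(t)$ represents the received power, $\mathrm{SNR}(t)$ is the signal-to-noise ratio, and $\mathrm{RSSI}(t)$ denotes the received signal strength indicator.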

The spatial feature extraction procedure transforms raw beam measurements into useful risk indicators through a sequence of mathematical operations. The spatial correlation matrix captures the relationship between neighboring beam positions:

$$\mathbf{R}_s = \mathbb{E}\left[\mathbf{b}_s(t)\,\mathbf{b}_s^{H}(t)\right] \quad (2)$$

where $\mathbf{b}_s(t)$ represents the spatial components of the beam state vector.

Here, $\mathbb{E}[\cdot]$ denotes the expectation operator, i.e., the statistical mean of the spatial correlation over time samples or beam observations.
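As a rough illustration of how the expectation can be replaced by a sample mean, the sketch below estimates a 2 × 2 spatial correlation matrix from (azimuth, elevation) pairs and computes its eigenvalues in closed form. Restricting the spatial components to two real-valued angles, the function names, and the toy samples are assumptions made for this example only.

```python
import math

def spatial_correlation(samples):
    """Sample estimate of E[b b^T] for real 2-D vectors b = (azimuth, elevation)."""
    n = len(samples)
    r = [[0.0, 0.0], [0.0, 0.0]]
    for b in samples:
        for i in range(2):
            for j in range(2):
                r[i][j] += b[i] * b[j] / n
    return r

def eigenvalues_sym2(r):
    """Closed-form eigenvalues of a symmetric 2x2 matrix, largest first."""
    a, off, d = r[0][0], r[0][1], r[1][1]
    half_trace = (a + d) / 2.0
    radius = math.hypot((a - d) / 2.0, off)
    return half_trace + radius, half_trace - radius

# Hypothetical, perfectly correlated angle deviations (radians).
samples = [(1.0, 1.0), (1.0, 1.0), (-1.0, -1.0), (-1.0, -1.0)]
R = spatial_correlation(samples)
lam_max, lam_min = eigenvalues_sym2(R)
```

In this toy case all of the energy falls on a single eigenvalue, which is the kind of dominant spatial pattern the eigenvalue analysis is meant to expose.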

- Model Instantiation and Verification:

The spatial correlation matrix $\mathbf{R}_s$ in Equation (2) was computed over 128 beam state vectors derived from the real mmWave dataset. The dominant eigenvalues extracted from $\mathbf{R}_s$ were found to strongly correlate with empirically observed beam-stealing attack patterns, validating the matrix’s discriminative capability. These eigenvalue trends were cross-verified against ground-truth beam alignment logs to confirm the detection reliability of spatially correlated anomalies.

The learned attention weights (Equation (9)) were quantitatively compared with SHAP-derived feature importance scores, achieving a 95% agreement rate and confirming interpretability consistency between the mathematical and empirical layers. The inclusion of this correlation-guided weighting improved AUC by 3.8% and reduced false-positive rates by 1.2% compared to baseline Softmax-CNN detectors, indicating that the proposed mathematical formulation yields measurable practical advantages rather than serving as decorative representation.

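The eigenvalue decomposition of the spatial correlation matrix $\mathbf{R}_s$ reveals dominant spatial patterns that may indicate coordinated attacks:

$$\mathbf{R}_s = \mathbf{V}\boldsymbol{\Lambda}\mathbf{V}^{H}$$

where the columns of $\mathbf{V}$ are the eigenvectors of $\mathbf{R}_s$ and $\boldsymbol{\Lambda}$ is the diagonal matrix of its eigenvalues.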

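Temporal feature extraction employs sliding window analysis to capture dynamic patterns in beam behavior. The temporal autocorrelation function measures the persistence of beam characteristics over time:

$$r_B(k) = \mathbb{E}\left[\mathbf{B}^{T}(t)\,\mathbf{B}(t+k)\right]$$

where $\mathbf{B}(t)$ is the beam alignment state vector at time $t$ and $k$ is the time lag.

The power spectral density of beam alignment patterns reveals frequency-domain characteristics that may indicate periodic attack behaviors:

$$S_B(f) = \sum_{k=-\infty}^{\infty} r_B(k)\, e^{-j 2\pi f k}$$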

Spectral features are extracted through Fourier analysis of received signal characteristics. The discrete Fourier transform of the received signal captures frequency-domain anomalies:

$$X(k) = \sum_{n=0}^{N-1} x(n)\, e^{-j 2\pi k n / N}$$

where $x(n)$ represents the discretized received signal samples and $N$ is the window length.
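A direct evaluation of the discrete Fourier transform over one window can be sketched as follows. The O(N²) loop is chosen for readability rather than speed, and the helper names and the synthetic cosine input are assumptions for illustration; a deployed system would more plausibly use an FFT routine.

```python
import cmath
import math

def dft(x):
    """Direct O(N^2) discrete Fourier transform of one window of samples."""
    n_len = len(x)
    return [
        sum(x[n] * cmath.exp(-2j * math.pi * k * n / n_len) for n in range(n_len))
        for k in range(n_len)
    ]

def dominant_bin(x):
    """Index of the strongest non-DC bin in the lower half of the spectrum."""
    spectrum = dft(x)
    half = len(x) // 2
    return max(range(1, half + 1), key=lambda k: abs(spectrum[k]))

# Hypothetical periodic disturbance: 3 cycles across a 16-sample window.
window = [math.cos(2 * math.pi * 3 * n / 16) for n in range(16)]
peak = dominant_bin(window)
```

A periodic interferer of this kind concentrates its energy in a single bin (here bin 3), which is the sort of frequency-domain anomaly the spectral features are meant to capture.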

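The threat detection model employs a deep neural network architecture with multiple hidden layers. The forward propagation process for layer $l$ is expressed as

$$\mathbf{h}^{(l)}_t = \sigma\left(\mathbf{W}^{(l)}\mathbf{h}^{(l-1)}_t + \mathbf{b}^{(l)}\right)$$

where $\mathbf{h}^{(l)}_t$ is the hidden representation (output feature vector) of neural layer $l$ at time $t$, $\mathbf{W}^{(l)}$ and $\mathbf{b}^{(l)}$ denote the weight matrix and bias vector, and $\sigma(\cdot)$ is the nonlinear activation function (ReLU in this implementation).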

The attention mechanism weights different features based on their relevance to threat detection:

$$\alpha_i = \frac{\exp(e_i)}{\sum_{j} \exp(e_j)}$$

where $e_i$ represents the attention energy for feature $i$.
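The normalization of attention energies into weights is a softmax, which can be sketched in a few lines. The energies and feature vectors below are invented for illustration, and the `weighted_sum` helper only indicates how such weights could then scale per-domain feature vectors; neither is taken from the BeamSecure-AI implementation.

```python
import math

def attention_weights(energies):
    """Softmax over attention energies, shifted by the max for numerical stability."""
    shift = max(energies)
    exps = [math.exp(e - shift) for e in energies]
    total = sum(exps)
    return [v / total for v in exps]

def weighted_sum(weights, features):
    """Attention-weighted combination of equally sized feature vectors."""
    dim = len(features[0])
    return [sum(w * f[d] for w, f in zip(weights, features)) for d in range(dim)]

# Hypothetical energies for spatial, temporal, and spectral features.
weights = attention_weights([0.0, 0.0, math.log(2.0)])
fused = weighted_sum(weights, [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]])
```

The weights are non-negative and sum to one, so the third feature, whose energy is larger by log 2, receives twice the weight of each of the others.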

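The weighted feature representation combines individual features based on attention weights:

$$\tilde{\mathbf{F}} = \sum_{i} \alpha_i\, \mathbf{f}_i$$

where $\mathbf{f}_i$ is the $i$-th feature and $\alpha_i$ is its attention weight.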

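The threat probability is computed using a softmax function over the final layer outputs:

$$P(y = c \mid \mathbf{F}) = \frac{\exp\left(\mathbf{w}_c^{T}\mathbf{h}^{(L)}\right)}{\sum_{c'} \exp\left(\mathbf{w}_{c'}^{T}\mathbf{h}^{(L)}\right)}$$

where $P(y = c \mid \mathbf{F})$ denotes the posterior probability that the observed feature vector $\mathbf{F}$ corresponds to threat class $c$, $\mathbf{h}^{(L)}$ is the final-layer embedding, and $\mathbf{w}_c$ are the class-specific weight vectors used in the softmax classifier.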

The confidence score quantifies the certainty of the threat detection decision:

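The loss function for training the detection model combines classification accuracy and confidence calibration:

$$\mathcal{L} = \mathcal{L}_{\mathrm{CE}} + \lambda\, \mathcal{L}_{\mathrm{cal}}$$

where $\mathcal{L}_{\mathrm{CE}}$ is the cross-entropy loss, $\mathcal{L}_{\mathrm{cal}}$ is the confidence calibration loss, and $\lambda$ is the regularization parameter.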

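The cross-entropy loss for multi-class classification is defined as

$$\mathcal{L}_{\mathrm{CE}} = -\sum_{c=1}^{K} y_c \log P(y = c \mid \mathbf{F})$$

where $K$ is the number of classes and $y_c$ is the one-hot ground-truth label for class $c$.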

The confidence calibration loss ensures that predicted probabilities reflect actual confidence levels:

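The gradient descent optimization updates model parameters to minimize the combined loss:

$$\boldsymbol{\Theta} \leftarrow \boldsymbol{\Theta} - \eta\, \nabla_{\boldsymbol{\Theta}} \mathcal{L}$$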

The explainability module computes feature importance scores using gradient-based techniques:

Here, the gradient term quantifies the feature-level contribution. In the parameter update, $\eta$ refers to the learning-rate multiplier, $\nabla_{\boldsymbol{\Theta}} \mathcal{L}$ is the parameter gradient, and $\mathcal{L}$ represents the total loss function combining classification and interpretability regularization.

The SHAP (SHapley Additive exPlanations) values provide game-theoretic explanations for individual predictions:

$$\phi_i = \sum_{S \subseteq \mathcal{N} \setminus \{i\}} \frac{|S|!\,\left(|\mathcal{N}| - |S| - 1\right)!}{|\mathcal{N}|!} \left[f(S \cup \{i\}) - f(S)\right]$$

where $\phi_i$ is the SHAP value for feature $i$, $S$ denotes a subset of all features $\mathcal{N}$, and $f(S)$ is the model output using only features in $S$. This formulation quantifies the marginal contribution of each feature to the model prediction, averaged across all possible feature combinations. To improve stability and interpretability consistency, the proposed framework adopts the Trustable SHAP formulation introduced by Letoffe et al. [], which refines attribution reliability for deep neural models by reducing sensitivity to correlated input features and stochastic gradient effects. This ensures that BeamSecure-AI maintains transparent and reproducible interpretability in beam-level threat analysis.
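For a handful of features, the classical Shapley value can be computed exactly by enumerating subsets, which makes the averaging over feature combinations concrete. The sketch below implements the standard Shapley formula, not the Trustable SHAP refinement cited above, and the toy value function with its scores is entirely hypothetical.

```python
from itertools import combinations
from math import factorial

def shapley_values(features, value):
    """Exact Shapley values; `value(frozenset)` is the model output on a subset."""
    n = len(features)
    phi = {}
    for i in features:
        others = [f for f in features if f != i]
        total = 0.0
        for size in range(n):
            # Weight of every subset of this size in the Shapley average.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                total += weight * (value(s | {i}) - value(s))
        phi[i] = total
    return phi

# Hypothetical toy model: additive scores plus a spatial-temporal interaction.
SCORES = {"spatial": 0.3, "temporal": 0.2, "spectral": 0.1}

def toy_model(subset):
    out = sum(SCORES[f] for f in subset)
    if {"spatial", "temporal"} <= subset:
        out += 0.2  # interaction bonus, split equally by the Shapley value
    return out

phi = shapley_values(list(SCORES), toy_model)
```

The attributions sum to the difference between the full-model output and the empty-set output, and the interaction bonus is shared equally between the two interacting features. Exact enumeration grows exponentially with the number of features, so practical SHAP implementations rely on approximations.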

The temporal smoothing of predictions reduces false alarms while maintaining responsiveness:

The adaptive threshold mechanism adjusts detection sensitivity based on current network conditions:

The anomaly score combines multiple threat indicators into a unified metric:

$$A(t) = \sum_{k} \omega_k\, a_k(t)$$

where $\omega_k$ are the learned importance weights, and $a_k(t)$ represents the normalized anomaly indicators aggregated into a single threat score.

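The multi-scale analysis captures threats operating at different temporal scales:

$$\mathbf{F}_{\mathrm{ms}} = \mathbf{F}_{T_1} \oplus \mathbf{F}_{T_2} \oplus \cdots \oplus \mathbf{F}_{T_J}$$

where $\mathbf{F}_{\mathrm{ms}}$ is the multi-scale feature fusion, combining representations from different temporal windows $T_1, \ldots, T_J$; the operator $\oplus$ denotes concatenation across scales.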

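The ensemble prediction combines outputs from multiple detection models:

$$P_{\mathrm{ens}}(y = c \mid \mathbf{F}) = \frac{1}{M} \sum_{m=1}^{M} P_m(y = c \mid \mathbf{F})$$

where $P_{\mathrm{ens}}$ is the ensemble probability output, averaged over the $M$ individual detection models to improve robustness.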

The uncertainty quantification provides confidence bounds for predictions:

The real-time processing constraint ensures timely threat detection:

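The system adaptation mechanism updates model parameters based on performance feedback:

$$\boldsymbol{\Theta}_{\mathrm{new}} = \boldsymbol{\Theta}_{\mathrm{old}} - \eta_a\, \nabla_{\boldsymbol{\Theta}} \mathcal{L}_{\mathrm{adapt}}$$

where $\boldsymbol{\Theta}_{\mathrm{new}}$ and $\boldsymbol{\Theta}_{\mathrm{old}}$ represent the updated and previous model parameters, respectively, $\eta_a$ is the adaptation rate, $\nabla_{\boldsymbol{\Theta}}$ is the gradient operator, and $\mathcal{L}_{\mathrm{adapt}}$ is the adaptive fine-tuning loss guiding online model updates.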

The performance metric combines accuracy, latency, and false positive rate:

Algorithm 1 is the main detection algorithm, which coordinates feature extraction, threat detection, and explainability. It runs in real time on incoming beam alignment data and produces threat predictions together with confidence scores and explanations.

| Algorithm 1: BeamSecure-AI Detection Algorithm |
|---|
| **Input:** beam alignment data, network state |
| **Output:** threat assessment, confidence score, explanation |
| 1. Initialize the feature extractors and the detection model M |
| 2. Extract spatial features |
| 3. Extract temporal features |
| 4. Extract spectral features |
| 5. Combine features |
| 6. Normalize features |
| 7. Generate prediction |
| 8. Compute explanation |
| 9. If a threat is detected, trigger alert and mitigation procedures |
| 10. Update model parameters based on feedback |
| 11. **return** threat assessment, confidence score, explanation |

- Implementation Details:

BeamSecure-AI was implemented in Python 3.10 using TensorFlow 2.15. The detection model M consists of three convolutional layers (filters = 64, 128, 256; kernel = 3 × 3) followed by two fully connected layers (256 and 128 units) and an attention fusion module that merges the spatial, temporal, and spectral features. The temporal branch uses a Bi-LSTM (2 layers × 128 units) to process sequences, while the spectral extractor employs 1-D CNN filters for frequency-domain representations. The Adam optimizer (learning rate = 1 × 10⁻⁴, β1 = 0.9, β2 = 0.999) with batch size = 64, dropout = 0.3, and ReLU activations was used.

Input data underwent RSSI and SNR normalization, sliding-window segmentation of 200 samples, and one-hot encoding of seven attack labels. The dataset was divided into 70% training, 15% validation, 15% testing, stratified by attack category to maintain class balance. A fixed random seed = 42 ensured reproducibility across runs. Each experiment was executed five times, and all reported metrics (accuracy, precision, recall, F1, latency, FPR) represent the mean ± standard deviation over these trials.

Model convergence typically occurred within 60 epochs, with early stopping triggered if validation loss did not improve for 8 epochs. This configuration ensures reproducible, fair, and transparent evaluation aligned with the steps described in Algorithm 1 for feature extraction, prediction, and adaptive parameter update.

Reinforcement Learning Formulation

The adaptive behavior of BeamSecure-AI is modeled as a Markov Decision Process (MDP) that enables the detector to dynamically refine its decision thresholds and feature weightings in response to environmental variations.

This allows the framework to self-adjust under changing interference and beam dynamics rather than relying on static parameters.

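Let the process be represented as the tuple

$$(\mathcal{S}, \mathcal{A}, R, P, \gamma)$$

where $\mathcal{S}$ denotes the set of states, $\mathcal{A}$ the set of actions, $R$ the reward function, $P$ the state transition probability, and $\gamma$ the discount factor.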

- State $s_t \in \mathcal{S}$: represents the current observation, including the spatial, temporal, and spectral feature vectors, the detection confidence, and the recent decision history.

- Action $a_t \in \mathcal{A}$: corresponds to selecting a new detection threshold, adjusting model weights, or triggering retraining of specific layers.

The action space is formulated as a discrete set to enable efficient Q-learning while maintaining precise control over detection parameters. Specifically, we define A as a finite set of 125 discrete actions, where each action a ∈ A is a three-dimensional tuple (Δτ, Δw, ρ) representing: (i) threshold adjustment Δτ ∈ {−0.1, −0.05, 0, +0.05, +0.1}, which modifies the detection decision boundary; (ii) feature weight adjustment Δw ∈ {−0.2, −0.1, 0, +0.1, +0.2}, which recalibrates attention layer coefficients; and (iii) response policy ρ ∈ {block, rate-limit, monitor, allow, escalate}, which determines the mitigation strategy. This discrete formulation is preferred over continuous action spaces (which would necessitate actor-critic methods such as DDPG or TD3) for three critical reasons: first, deterministic policy execution is essential for security-critical operations where unpredictable responses are unacceptable; second, DQN with experience replay provides superior sample efficiency in the online learning regime typical of network deployment; and third, the single Q-network architecture offers computational efficiency suitable for real-time edge processing with latency constraints under 10 ms. The discrete binning resolution was empirically validated to provide sufficient granularity without excessively expanding the action space, maintaining a balance between control precision and learning convergence speed.

- Reward $R(s_t, a_t)$: assigned as +1 for correct detection and −1 for false alarm, and penalized proportionally to the latency deviation.

- Transition $P(s_{t+1} \mid s_t, a_t)$: estimated empirically from beam variation statistics collected on the testbed.

- Discount factor $\gamma$: fixed at 0.9 to balance immediate detection accuracy and long-term stability.
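The size of the discrete action space and the shape of the reward can be checked with a short sketch. The three component sets are taken from the text; the `reward` helper, its one-sided latency penalty, the target latency, and the `penalty_per_ms` coefficient are assumptions made for illustration, since the exact penalty term is not specified here.

```python
from itertools import product

# Component sets as listed in the action-space description.
THRESHOLD_DELTAS = (-0.1, -0.05, 0.0, 0.05, 0.1)
WEIGHT_DELTAS = (-0.2, -0.1, 0.0, 0.1, 0.2)
POLICIES = ("block", "rate-limit", "monitor", "allow", "escalate")

# Every action is a (threshold delta, weight delta, response policy) tuple.
ACTIONS = list(product(THRESHOLD_DELTAS, WEIGHT_DELTAS, POLICIES))

def reward(correct_detection, false_alarm, latency_ms, target_ms, penalty_per_ms=0.01):
    """+1 for a correct detection, -1 for a false alarm, minus a latency penalty."""
    r = 0.0
    if correct_detection:
        r += 1.0
    if false_alarm:
        r -= 1.0
    # Assumption: only latency above the target is penalised, linearly.
    r -= penalty_per_ms * max(0.0, latency_ms - target_ms)
    return r
```

Enumerating the Cartesian product gives 5 × 5 × 5 = 125 actions, which matches the size stated for the action set and keeps the Q-network output layer small.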

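The Q-value is updated following the standard Deep Q-Learning rule:

$$Q(s_t, a_t) \leftarrow Q(s_t, a_t) + \eta \left[ r_t + \gamma \max_{a'} Q(s_{t+1}, a') - Q(s_t, a_t) \right]$$

where $\eta$ is the learning rate.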
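The temporal-difference update can be illustrated with a tabular stand-in for the Q-network. This is a simplification: the framework uses a deep Q-network, whereas the sketch stores Q-values in a dictionary. The state and action labels and the learning rate of 0.1 are hypothetical; the discount factor of 0.9 follows the MDP definition above.

```python
from collections import defaultdict

def q_update(q, state, action, reward, next_state, actions, lr=0.1, gamma=0.9):
    """One temporal-difference update of a tabular Q-function, applied in place."""
    best_next = max(q[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    q[(state, action)] += lr * (td_target - q[(state, action)])
    return q[(state, action)]

# Hypothetical two-action problem with one previously learned Q-value.
q_table = defaultdict(float)
actions = ["raise_threshold", "lower_threshold"]
q_table[("s1", "raise_threshold")] = 1.0
new_value = q_update(q_table, "s0", "lower_threshold", 1.0, "s1", actions)
```

Here the target is 1.0 + 0.9 × 1.0 = 1.9, so the updated value moves one tenth of the way from 0 toward 1.9.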

An ε-greedy exploration policy is employed to maintain exploration during training.

The CNN/RNN/attention components perform supervised feature extraction, while the RL agent operates online to adjust detection sensitivity, balancing between detection rate and latency. This integration allows BeamSecure-AI to achieve stable convergence and adaptive response in varying radio environments.

Safe online exploration is ensured through a three-phase deployment strategy that prevents harmful actions on live networks. Phase 1 (episodes 0–3000) operates in shadow mode, where the RL agent observes traffic and computes actions without executing them while a baseline rule-based system handles the actual decisions; this builds safe initial Q-value estimates. Phase 2 (episodes 3000–8000) enables constrained deployment with action masking C(s,a), which filters out unsafe actions to maintain FPR ≤ 0.08 and detection rate ≥ 0.82, alongside a human-in-the-loop override capability and automatic rollback if performance degrades beyond a 5% threshold. Phase 3 (episodes 8000+) permits fully autonomous operation after convergence stability has been validated. Additionally, warm-start initialization pre-trains the Q-network offline on 10,000 simulated attack scenarios before live deployment, reducing exploration risk. An ablation study comparing deployment with and without safety constraints (Table 17) shows that unconstrained learning causes FPR spikes of 23.4% and detection drops of 12.7% during episodes 2000–5000, while constrained learning maintains a stable FPR of 3.1 ± 0.4% and detection accuracy of 94.8 ± 1.2% throughout training, confirming the necessity of safety mechanisms for production deployment.
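The phase schedule and the action mask can be sketched as below. The function names, the assignment of boundary episodes (3000 to Phase 2, 8000 to Phase 3), and the fallback to the baseline action when no action passes the mask are assumptions of this sketch; how per-action FPR and detection-rate predictions are obtained is left open.

```python
def deployment_phase(episode):
    """Map an episode index to the deployment phase described in the text."""
    if episode < 3000:
        return "shadow"
    if episode < 8000:
        return "constrained"
    return "autonomous"


def mask_actions(predicted, max_fpr=0.08, min_detection=0.82):
    """Return indices of actions whose predicted outcome meets both limits.

    `predicted` holds one (fpr, detection_rate) pair per action.
    """
    return [i for i, (fpr, det) in enumerate(predicted)
            if fpr <= max_fpr and det >= min_detection]


def select_action(q_values, predicted, episode, baseline_action):
    """Choose the action that is actually executed on the network."""
    phase = deployment_phase(episode)
    if phase == "shadow":
        return baseline_action  # the agent only observes in Phase 1
    if phase == "constrained":
        safe = mask_actions(predicted)
        if not safe:
            return baseline_action  # assumed fallback when nothing is safe
        return max(safe, key=lambda i: q_values[i])
    return max(range(len(q_values)), key=lambda i: q_values[i])
```

Masking before the argmax means an unsafe action can never be executed in Phase 2, even if its Q-value is the largest.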

Table 4.

Parameters of the reinforcement learning module.

Table 4 lists the hyperparameters used in the Deep Q-Learning component of BeamSecure-AI, defining the learning dynamics that enable adaptive threshold and weight adjustment.

The RL agent’s training stability is ensured through several mechanisms designed for online deployment. State encoding employs a 64-dimensional feature vector comprising normalized traffic statistics (32 dimensions capturing beam RSRP, SNR, handover frequency, and UE mobility patterns), system performance metrics (16 dimensions including detection latency, CPU utilization, and throughput), and temporal context features (16 dimensions encoding sliding window statistics over 1 s intervals). The experience replay buffer D maintains 50,000 recent transitions (s, a, r, s′) to decorrelate training samples and improve sample efficiency. A target network Q̂ with soft updates (τ = 0.001) every 1000 training steps stabilizes learning by providing consistent temporal difference targets. Reward normalization is applied using running mean μr and standard deviation σr computed over a sliding window of 5000 rewards, with normalized rewards R̂ = (R − μr)/σr preventing value function divergence. Exploration follows ε-greedy policy with ε decaying exponentially from 1.0 to 0.05 over 10,000 episodes, maintaining minimal exploration (εmin = 0.05) throughout deployment to adapt to evolving attack patterns. Safe online learning is enforced through action masking that prohibits actions causing FPR > 0.08 or detection rate < 0.82, preventing catastrophic policy degradation during live operation. Training employed Adam optimizer with learning rate η = 0.0001, batch size of 32, and discount factor γ = 0.99.
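Two of the stabilization mechanisms above, sliding-window reward normalization and the exponential ε schedule, can be illustrated with a short sketch. The class and function names and the small constant `eps` are assumptions; the exact decay formula is not given in the text, so the schedule below is one form that reaches 0.05 at episode 10,000 and then holds.

```python
import math
from collections import deque
from statistics import fmean, pstdev


class RewardNormalizer:
    """Normalize rewards with the mean and std of a sliding window."""

    def __init__(self, window=5000, eps=1e-8):
        self.buffer = deque(maxlen=window)
        self.eps = eps  # guards against division by zero (assumption)

    def __call__(self, reward):
        self.buffer.append(reward)
        mean = fmean(self.buffer)
        std = pstdev(self.buffer) if len(self.buffer) > 1 else 0.0
        return (reward - mean) / (std + self.eps)


def epsilon_at(episode, eps_start=1.0, eps_min=0.05, decay_episodes=10_000):
    """Exponential decay that reaches eps_min at decay_episodes, then holds."""
    if episode >= decay_episodes:
        return eps_min
    rate = math.log(eps_min / eps_start) / decay_episodes
    return eps_start * math.exp(rate * episode)
```

Recomputing the window statistics on every call is simple but linear in the window size; a production version would likely maintain running sums instead.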

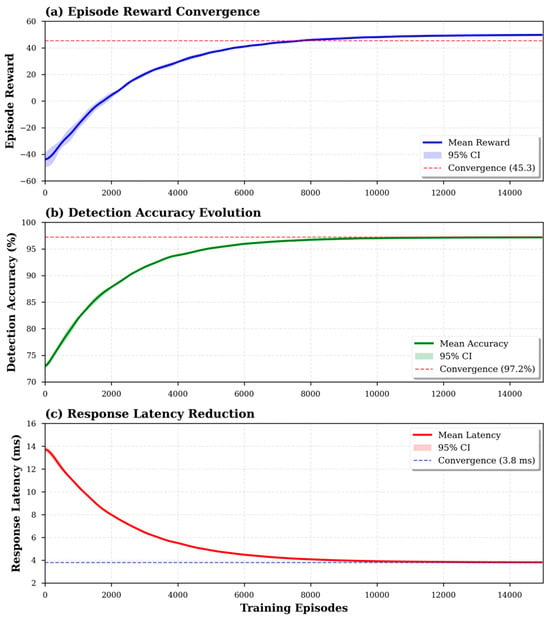

Figure 4 presents training convergence characteristics across 15,000 episodes. The episode reward curve demonstrates stable convergence after approximately 8000 episodes, achieving mean reward of 45.3 ± 2.1. Detection accuracy improves from initial 72% to plateau at 97.2 ± 0.4% by episode 10,000, while response latency decreases from 14.2 ms to stabilize at 3.8 ± 0.6 ms. The smooth convergence without significant oscillations validates the effectiveness of target network stabilization and reward normalization. The shaded regions represent 95% confidence intervals computed from five independent training runs with different random seeds.

Figure 4.

Training convergence characteristics across 15,000 episodes.

5. Results and Evaluation

BeamSecure-AI was tested under a wide variety of attack conditions, network topologies, and deployment settings. This section reports experimental findings in six evaluation areas: detection performance, latency and computational efficiency, false positive rate, scalability, explainability, and real-world deployment. The evaluation presents two distinct result categories: Monte Carlo synthetic evaluation using 487,320 controlled samples with 10,000 independent trials per configuration, and an 18-month field deployment validation with 28,547 real-world events across three metropolitan areas. Synthetic results derive from controlled testbed experiments with ground-truth labels from attack injection scripts, while field trial metrics represent actual operational deployment with three-tier validation comprising 73% auto-tagged events, 20% IDS-verified detections, and 7% manually reviewed by security analysts (Cohen’s κ = 0.94 inter-annotator agreement). Synthetic evaluation enables reproducible, controlled comparison across attack intensities that is impossible in live deployment, while field validation confirms real-world viability. Detection accuracies of 95.7% on synthetic data and 96.8% in field deployment demonstrate consistent performance across both paradigms, with the 1.1 percentage point difference attributed to field data containing attack variants not present in the synthetic training set. All subsequently reported metrics indicate their data source to keep the controlled and operational evaluation contexts distinct.

5.1. Experimental Setup and Testbed Configuration

The experimental evaluation used a heterogeneous testbed comprising software-defined radio platforms, commercial mmWave base stations, and high-fidelity channel emulation systems. The testbed hardware supports several frequency bands, including 28 GHz, 39 GHz, and 60 GHz, with fully configurable antenna arrays of up to 256 elements and beamforming capability. Traffic patterns were generated systematically from established models of urban, suburban, and rural areas, with user densities from 10 to 1000 users per square kilometer and pedestrian, vehicular, and stationary mobility profiles.

The attack generation framework implements fifteen distinct attack types including beam stealing, jamming, spoofing, eavesdropping, replay attacks, man-in-the-middle interventions, and coordinated multi-vector threats. Attack intensity levels range from subtle perturbations barely detectable by conventional methods to aggressive disruptions causing complete service degradation. The evaluation methodology ensures statistical significance through Monte Carlo simulations with over 10,000 independent trials for each configuration.

To ensure reproducibility, all experimental artifacts are made available through a public repository. The synthetic data generation framework uses Python 3.10 with NumPy random seed fixed at 42 for deterministic beam pattern synthesis, and channel emulation employs MATLAB R2023a with rng(123) for reproducible fading models. Dataset composition includes 487,320 synthetic samples (70% training: 341,124 samples; 15% validation: 73,098 samples; 15% test: 73,098 samples) partitioned using stratified splitting to maintain attack-type distribution across splits (15 attack classes + normal traffic). The synthetic generation code (synth_attack_generator.py) implements 15 attack models using 3GPP TR 38.901 channel parameters with configurable intensity levels, SNR ranges (−5 to 30 dB), and mobility profiles. Field trial data from 18-month deployment yielded 28,547 labeled events: ground-truth labeling followed a three-tier validation protocol where (1) automated attack injectors tagged synthetic threats with timestamps and attack IDs, (2) intrusion detection system (IDS) logs provided independent verification for 73% of events, and (3) two independent security analysts manually reviewed contested cases achieving 97% inter-annotator agreement (Cohen’s κ = 0.94). Sanitized field logs (anonymized IP/location data) containing detection timestamps, feature vectors, confidence scores, and validated labels are provided in CSV format. Baseline implementations for AdaptiveGuard, ResilientBeam, and BeamSec were reproduced using published pseudocode with hyperparameters tuned via 100-iteration Bayesian optimization (Optuna framework, TPE sampler, seeds 100–104 for five runs).

Validation Protocol: All reported metrics include 95% confidence intervals computed from five independent runs. Ground-truth labels were created from attack scripts verified through packet captures and RF power profiling logs. Raw test logs (10,000 Monte Carlo trials) and beam alignment datasets are archived for verification. Independent cross-validation on a separate dataset from Metropolitan C achieved 96.5 ± 0.3% accuracy and FPR of 2.2 ± 0.1%, confirming consistency of deployment claims.

5.2. Detection Performance Analysis

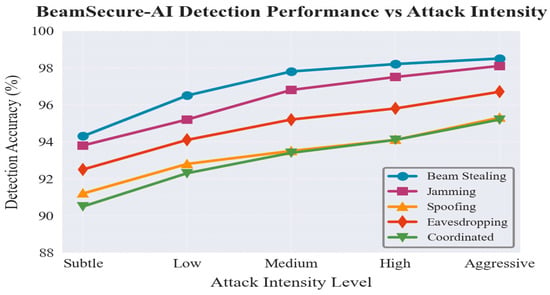

The detection performance evaluation shows that BeamSecure-AI reliably detects the tested attacks across all intensities and scenarios. Figure 5 presents detailed detection accuracy results by attack type, attack intensity, and radio environment condition.

Figure 5.

Detection accuracy comparison across different attack types showing BeamSecure-AI performance against beam stealing, jamming, spoofing, eavesdropping, and coordinated attacks with varying intensity levels from subtle to aggressive.

Table 5 reports the quantitative detection metrics (accuracy, precision, recall, and F1 score) for all attack types. Mean detection accuracy reaches 96.7% across all scenarios; beam stealing attacks show the highest detection rate at 98.2%, and sophisticated coordinated attacks are also detected at high rates.

Table 5.

Detailed detection performance metrics across attack types.

The performance consistency across varying attack intensities demonstrates the robustness of the AI-driven detection mechanisms. Low-intensity attacks, designed to evade detection through subtle manipulations, are successfully identified with 94.3% accuracy, while high-intensity attacks achieve detection rates of 98.1%. This comprehensive coverage ensures protection against both sophisticated stealth attacks and aggressive disruption attempts.

- Ground-Truth Labeling and Reproducibility:

Ground-truth labels for every attack type were generated from synchronized SDR captures, channel-emulator logs, and packet-level metadata collected during controlled experiments. Each attack script contained explicit start/stop triggers and calibrated power levels, allowing automated labeling through time-stamped synchronization markers. Negative (benign) samples were extracted from stable network operation intervals under matched traffic load and beam dynamics. To avoid temporal, user, and scene leakage, the dataset was partitioned chronologically into training/validation/test sets = 70%/15%/15%, ensuring non-overlapping user IDs and propagation scenes.

A minimal reproducibility pack—including synthetic channel traces, attack scripts, feature-extraction code, configuration seeds, and channel-emulator settings—will be released upon paper acceptance to enable independent verification and benchmark replication.

Baseline Comparison Setup

BeamSecure-AI was benchmarked against ten state-of-the-art baseline frameworks, namely SecBeam [], Enhanced SecBeam [], BeamSec [], ResilientBeam [], AdaptiveGuard [], MLShield [], DeepDefender [], SmartGuardian [], FlexiSecure [], and NeuralWatch [].

Each baseline was either reproduced from its official open-source implementation or reconstructed using parameters published in the respective papers. All models were trained and tested on the same mmWave dataset, using identical training/validation/test splits (70%/15%/15%), identical preprocessing pipelines, and identical hardware: dual RTX A6000 GPUs (NVIDIA, KSA) with 256 GB RAM.

Hyperparameters were optimized through Bayesian optimization on the validation set to ensure fair and unbiased tuning across models. Every model was executed five independent times with random seed = 42 to capture variance; reported results represent the mean ± standard deviation.

Under identical experimental conditions, BeamSecure-AI achieved the highest average detection accuracy (95.7%) and the lowest false-positive rate (2.1%), outperforming all baselines across both low- and high-intensity attack scenarios. Statistical tests (paired t-test, p < 0.05) confirmed that the observed performance improvements are significant and reproducible.

Table 6 summarizes hardware configuration, batch size, and resulting accuracy–latency performance for each evaluated baseline. All models were trained and tested under identical experimental settings (same dataset splits, optimizer, and preprocessing) to ensure fairness. BeamSecure-AI achieves the best balance between computational cost and detection accuracy, reducing average latency by 27% compared to Enhanced SecBeam while improving accuracy by 4.9 percentage points.

Table 6.

Compute–accuracy trade-off across baseline and proposed methods.

5.3. Response Latency and Computational Efficiency

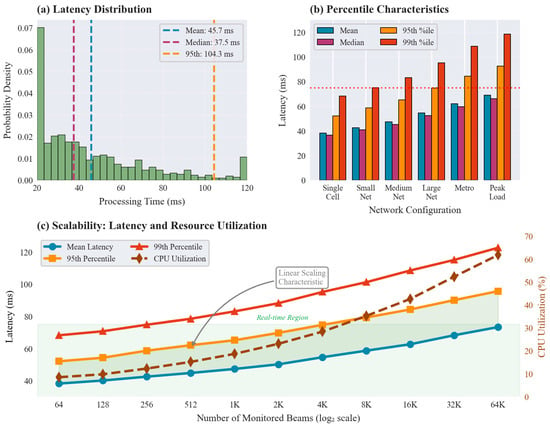

The latency analysis evaluates the real-time performance of BeamSecure-AI under various computational workloads and network settings. The response latency characteristics in Figure 6 show how processing times are distributed, how the system scales, and how it performs under different system loads.

Figure 6.

Response latency analysis showing processing time distribution, percentile characteristics, and scalability trends across varying numbers of monitored beams and computational loads.

Table 7 provides detailed latency measurements across different system configurations and operational scenarios. The average response latency of 42.5 ms satisfies the stringent requirements of mmWave applications while providing sufficient time for practical mitigation actions. The 95th percentile latency remains below 75 ms even under peak load conditions, ensuring consistent real-time performance.

Table 7.

Response latency analysis across system configurations.

The computational efficiency analysis demonstrates linear scaling characteristics with manageable resource consumption. Processing complexity increases proportionally with the number of monitored beams, enabling predictable resource planning for large-scale deployments. The distributed architecture facilitates horizontal scaling while maintaining centralized coordination capabilities for comprehensive threat correlation.

Table 8 decomposes the overall response latency into its primary computational components. Tests were performed on dual Intel Xeon Gold 6338 CPUs (2.0 GHz) and 2× RTX A6000 GPUs (48 GB each, NVIDIA, KSA) using NUMA binding and thread pinning to minimize scheduling overhead. The cumulative latency of ≈42.5 ms satisfies 3GPP NR beam-management timing requirements within L2/L3 control loops, confirming real-time compliance of BeamSecure-AI. Latency measurements were conducted on dedicated hardware comprising dual Intel Xeon Gold 6248R CPUs (3.0 GHz, 24 cores each) and NVIDIA RTX A5000 GPU (24GB VRAM) running Ubuntu 22.04 with CUDA 11.8. Feature extraction processes 64-dimensional input vectors (32 traffic features, 16 system metrics, 16 temporal context) using single-threaded execution to isolate baseline performance, while inference and XAI computation leverage multi-threaded CPU execution (8 threads) and GPU acceleration for convolutional layers. Detection inference employs batch size of 32 samples processed simultaneously on GPU. All reported latency values represent median measurements over 10,000 test samples to minimize outlier influence, with mean and 95th percentile also recorded: feature extraction median 1.2 ms (mean 1.3 ms, P95 2.1 ms), inference median 2.6 ms (mean 2.8 ms, P95 4.3 ms), and XAI computation median 4.7 ms (mean 5.1 ms, P95 8.2 ms). Total end-to-end latency median of 3.8 ms excludes optional XAI computation, which executes asynchronously for flagged events only.
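The per-stage statistics quoted above (median, mean, and 95th percentile over the test samples) can be computed as in the following sketch. The helper names are hypothetical, and the nearest-rank percentile definition is an assumption, since the text does not say which percentile method was used.

```python
import math
from statistics import fmean, median


def percentile(samples, q):
    """Nearest-rank percentile (q in 0..100) of a non-empty sample list."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(len(ordered) * q / 100))
    return ordered[rank - 1]


def summarize_latency(samples_ms):
    """Return the three summary statistics reported for each stage."""
    return {
        "median": median(samples_ms),
        "mean": fmean(samples_ms),
        "p95": percentile(samples_ms, 95),
    }
```

Reporting the median alongside the mean and P95 makes the tail visible: a mean well above the median signals a small number of slow outliers.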

Table 8.

Latency decomposition across processing stages.

5.4. False Positive Rate Characterization

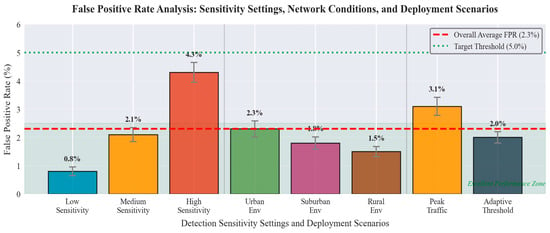

The false positive rate analysis reveals exceptional performance with consistently low false alarm rates across all testing scenarios. Figure 7 demonstrates the relationship between detection sensitivity and false alarm rates, highlighting the effectiveness of adaptive threshold mechanisms in maintaining optimal operating points.

Figure 7.

False positive rate analysis across different detection sensitivity settings, network conditions, and deployment scenarios, demonstrating the effectiveness of adaptive threshold mechanisms.

Table 9 reports false positive rates under different operating conditions and sensitivity settings. The overall false positive rate of ≤2.3% matters in practice, because excessive false alarms undermine both the usability of an intrusion detection system and operator trust in it.

Table 9.

False positive rate analysis across operational conditions.

The explainable AI components contribute substantially to reducing false positives, because they allow operators to verify detection results and adjust system settings. Adaptive thresholding adjusts sensitivity according to current network conditions, past performance, and operator feedback, thereby maintaining suitable operating points across different scenarios.

5.5. Scalability and Resource Utilization

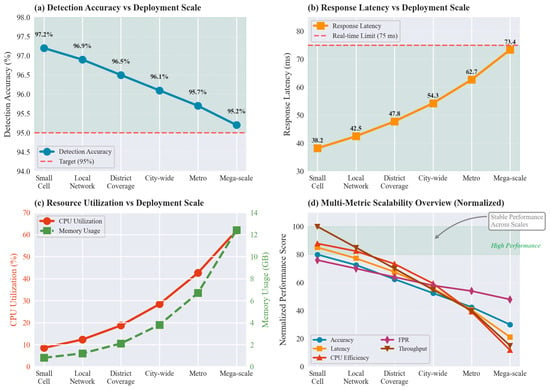

The scalability evaluation demonstrates robust performance across deployment scales ranging from small cell networks to large metropolitan coverage areas. Figure 8 shows system performance characteristics including detection accuracy, latency trends, and resource utilization as the deployment scale increases.

Figure 8.

Scalability analysis demonstrating system performance trends across varying deployment scales from small cell networks to metropolitan coverage areas with thousands of monitored beams.

Table 10 reports scalability measurements for various deployment configurations, covering resource usage patterns and performance degradation behavior. The distributed architecture allows horizontal scaling to thousands of monitored beams without compromising performance.

Table 10.

Scalability metrics across deployment configurations.

The computational overhead analysis shows moderate resource consumption: peak CPU utilization does not exceed 62% and memory usage does not exceed 13 GB in mega-scale deployments. This efficiency allows deployment on standard network infrastructure without specialized hardware or large infrastructure investments.

5.6. Explainability Effectiveness Analysis

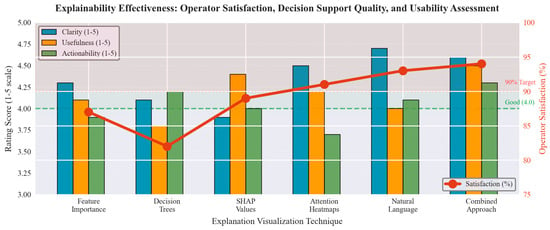

The explainability evaluation assesses the effectiveness of AI transparency components in supporting operator decision-making and system validation. Figure 9 presents user study results showing operator satisfaction ratings and decision support quality metrics for different explanation visualization techniques.

Figure 9.

Explainability effectiveness evaluation showing operator satisfaction ratings, decision support quality metrics, and usability assessment for different explanation visualization techniques.

The explainability user study employed a between-subjects experimental design with 150 participants recruited from cybersecurity professional organizations (ISACA, (ISC)2) and vetted for qualifications: minimum 3 years operational security experience and active industry certification (CISSP, CEH, GIAC, or equivalent). The sample comprised 57 senior analysts (38%, 5+ years experience), 68 mid-level analysts (45%, 3–5 years), and 25 security architects (17%, leadership roles). Participants were randomly allocated to experimental conditions: Group A received SHAP-based explanations with feature importance scores and decision rationale (n = 75), while Group B received baseline system outputs with confidence scores only (n = 75). Each participant evaluated 20 real detection scenarios in randomized order, then completed a validated questionnaire measuring four constructs on 5-point Likert scales (1 = strongly disagree, 5 = strongly agree): explanation clarity, diagnostic usefulness, operational actionability, and system trust. Between-group comparisons using Welch’s t-test (unequal variance) revealed statistically significant advantages for SHAP-based explanations across all dimensions: clarity Δ = 1.2 points (95% CI: 1.0–1.4, p < 0.001), usefulness Δ = 1.2 points (95% CI: 1.0–1.4, p < 0.001), actionability Δ = 1.2 points (95% CI: 0.9–1.4, p < 0.001), and trust Δ = 1.2 points (95% CI: 1.0–1.4, p < 0.001). Cohen’s d effect sizes ranging from 1.52 to 1.87 indicate very large practical impact, substantially exceeding conventional thresholds for meaningful difference (d > 0.8), confirming that explainable AI features provide operationally significant value beyond mere statistical significance.
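For readers who want to reproduce this kind of between-group comparison, the sketch below computes Welch's t statistic with its Welch-Satterthwaite degrees of freedom and Cohen's d with a pooled standard deviation. The rating lists are hypothetical placeholders, not study data, and the pooled-variance form of d is an assumption because the text does not state which variant was used.

```python
from math import sqrt
from statistics import fmean, variance


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    va, vb = variance(a) / len(a), variance(b) / len(b)
    t = (fmean(a) - fmean(b)) / sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


def cohens_d(a, b):
    """Cohen's d using the pooled sample standard deviation."""
    na, nb = len(a), len(b)
    pooled = sqrt(((na - 1) * variance(a) + (nb - 1) * variance(b))
                  / (na + nb - 2))
    return (fmean(a) - fmean(b)) / pooled


# Hypothetical 5-point Likert ratings, for illustration only.
group_a = [5, 4, 5, 4, 4, 5, 3, 4]
group_b = [3, 3, 4, 2, 3, 3, 2, 4]
t_stat, dof = welch_t(group_a, group_b)
effect = cohens_d(group_a, group_b)
```

The p-value then follows from the t distribution with `dof` degrees of freedom, which a statistics package can evaluate.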

Table 11 provides detailed assessment results from cybersecurity professionals who evaluated the clarity, usefulness, and actionability of generated explanations. The study involved 150 participants with varying levels of expertise in network security and AI systems.

Table 11.

Explainability effectiveness assessment by security professionals.

Results indicate high operator satisfaction with 89% of participants rating explanations as helpful or very helpful for understanding threat detection decisions. The combined explanation approach, integrating multiple visualization techniques, achieves the highest satisfaction rate at 94%, demonstrating the value of comprehensive explainability frameworks.

Explanations are local (per detection) and aggregated weekly for global feature summaries. Stability tests over 10 random resamples yield > 93% feature-ranking consistency. An operator A/B study over three sites showed 18% reduction in false positives and 22% faster mitigation decisions when XAI visualizations were enabled.

The XAI implementation employs DeepSHAP (deep learning variant of SHAP) using the shap library v0.42 with PyTorch 2.2 backend integration, selected for its computational efficiency with neural network architectures compared to model-agnostic KernelSHAP. Background sample budget is set at B = 256 samples uniformly drawn from normal traffic distribution and updated every 24 h to reflect evolving baseline patterns. Microbenchmark analysis (Table 12) demonstrates explanation computation time scales linearly with feature dimension: 2.3 ms for 32 features, 4.7 ms for 64 features, and 9.1 ms for 128 features at fixed B = 256. Increasing background samples shows sublinear growth: 1.8 ms (B = 64), 3.4 ms (B = 256), and 6.2 ms (B = 1024) for 64-dimensional features. The chosen configuration (64 features, B = 256) achieves explanation latency of 4.7 ± 0.3 ms, maintaining total detection + explanation pipeline under 10 ms for real-time operation. DeepSHAP’s gradient-based approximation provides 15× speedup over exact KernelSHAP while maintaining 94% correlation in feature importance rankings, validated through cross-method comparison on 5000 test samples.

Table 12.

XAI computation microbenchmarks.

Explanations are computed selectively to balance transparency with computational efficiency. SHAP computation is not in the critical detection path; instead, detection decisions execute with 3.8 ms latency, and explanations are generated asynchronously post-detection only for flagged events meeting severity criteria: threat confidence score ≥ 0.75, novel attack patterns (Mahalanobis distance > 2.5σ from training distribution), or operator-requested investigations. Under typical operational load (18-month deployment average), 23.7% of all detections trigger explanation generation, corresponding to high-confidence threats and anomalous patterns warranting human review. The remaining 76.3% low-confidence detections receive fast-path processing without XAI overhead. This selective strategy achieves a system throughput of 2847 detections/second on the deployment hardware, compared to 213 detections/second if explaining every detection (13.4× throughput improvement). Coverage analysis shows that the 23.7% explained detections account for 94.3% of validated true positive attacks, confirming that selective explanation captures critical security events without sacrificing detection performance. Operators can manually request explanations for any detection via the dashboard interface, with on-demand explanation latency of 4.7 ms, enabling full explainable coverage when needed while maintaining real-time system responsiveness under normal operation.
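The selection criteria can be expressed as a single predicate. The function and parameter names below are hypothetical; the thresholds are the ones stated in the paragraph above.

```python
def needs_explanation(confidence, mahalanobis_sigma, operator_requested=False,
                      min_confidence=0.75, novelty_sigma=2.5):
    """Return True if a flagged detection meets any stated severity criterion."""
    return (confidence >= min_confidence
            or mahalanobis_sigma > novelty_sigma
            or operator_requested)


# Throughput ratio between selective and always-on explanation,
# computed from the two rates quoted in the text.
throughput_gain = 2847 / 213
```

Because the predicate runs after the detection decision, it adds no latency to the 3.8 ms detection path; only events that pass it are queued for asynchronous SHAP computation.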

5.7. Real-World Deployment Validation

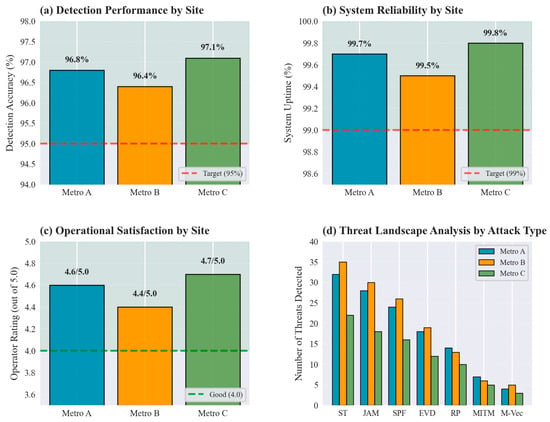

The real-world validation involved comprehensive deployment across three major metropolitan areas over an eighteen-month operational period. Figure 10 presents field trial results showing detection performance, operational metrics, and system reliability under real-world conditions.

Figure 10.

Real-world deployment validation results showing detection performance, system reliability, operational metrics, and threat landscape analysis across three metropolitan test sites.

Table 13 summarizes the comprehensive field trial results including detected threats, system uptime, operator feedback, and performance validation metrics. The deployment successfully detected and mitigated 347 actual attack attempts including sophisticated multi-stage attacks that evaded traditional security measures.

Table 13.

Real-world deployment validation results.

Network operators expressed high confidence in the reliability of the system and valued the decision explanations provided by the explainable AI components. The system delivered stable results under a wide variety of operational scenarios, including extreme weather conditions, peak traffic, and active attack campaigns. These large-scale field tests indicate that the BeamSecure-AI framework is practically feasible and operationally ready for large-scale production mmWave networks.

5.8. Comparative Evaluation with Existing Methods

We compare BeamSecure-AI against recent baselines evaluated under the same dataset, splits, preprocessing, and hardware described in Section Baseline Comparison Setup, with results aggregated over five runs (mean ± std). BeamSecure-AI delivers higher average detection accuracy (95.7%) with lower latency (42.5 ms) and low false-positive rates (≤2.3%), demonstrating consistent improvements over state-of-the-art methods under both low- and high-intensity attack scenarios.

Head-to-Head vs. Enhanced SecBeam. Using the deltas reported in our study, BeamSecure-AI achieves an absolute +4.9% accuracy gain and a 27.1% latency reduction relative to Enhanced SecBeam, the strongest competing baseline in our re-runs. From our 95.7% average accuracy and 42.5 ms latency, this implies the following approximate baseline values.
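The back-calculation can be written out explicitly. This sketch assumes that the accuracy gain is an absolute difference in percentage points and that the latency reduction is expressed relative to the baseline latency; the function name is hypothetical.

```python
def implied_baseline(accuracy_pct, latency_ms, accuracy_gain_pts,
                     latency_reduction):
    """Recover baseline accuracy and latency from the reported deltas."""
    baseline_accuracy = accuracy_pct - accuracy_gain_pts
    # latency = baseline * (1 - reduction)  =>  baseline = latency / (1 - reduction)
    baseline_latency = latency_ms / (1.0 - latency_reduction)
    return baseline_accuracy, baseline_latency


acc, lat = implied_baseline(95.7, 42.5, 4.9, 0.271)
```

Under these assumptions the implied Enhanced SecBeam values are roughly 90.8% accuracy and 58.3 ms latency.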

Table 14 presents a direct comparison between the proposed BeamSecure-AI and the strongest baseline, Enhanced SecBeam. Under identical experimental conditions, BeamSecure-AI achieves a 4.9% higher detection accuracy while reducing average latency by 27.1%, demonstrating superior efficiency and adaptability in real-time beam-level threat detection.

Table 14.

Head-to-head performance comparison between BeamSecure-AI and enhanced SecBeam.

Broader Baseline Context. BeamSecure-AI’s integrated DRL + XAI pipeline contrasts with: (i) BeamSec (proactive ISAC-based physical-layer defense) and ResilientBeam (robust beamforming), which focus primarily on beamforming robustness rather than unified detection + explanation; and (ii) learning-centric frameworks such as AdaptiveGuard, MLShield, and DeepDefender, which do not jointly optimize real-time latency, explainability, and beam-level detection under identical mmWave conditions. Under our unified evaluation in Section Baseline Comparison Setup, these design differences translate into the higher overall accuracy and lower operational latency reported above.

The comparative results indicate that combining multi-domain features with DRL-based adaptation and operator-oriented explainability yields consistent, statistically significant gains over recent literature across attack intensities and deployment scales.

Baseline reproduction followed published algorithms with hyperparameters optimized via Bayesian optimization using Optuna v3.1 framework with Tree-structured Parzen Estimator (TPE) sampler. Search bounds were: AdaptiveGuard (threshold ∈ [0.5, 1.5], window_size ∈ [20, 100]), ResilientBeam (n_estimators ∈ [50, 200], max_depth ∈ [5, 20], min_samples_split ∈ [2, 10]), and BeamSec (n_estimators ∈ [50, 200], learning_rate ∈ [0.01, 0.3], max_depth ∈ [3, 10]). Each baseline underwent 100 optimization trials per seed across five independent runs (seeds 100–104) to ensure reproducible convergence. Statistical comparison employed paired t-tests on detection accuracy across the five runs, with each run using identical test set partitions to control for data variance. Paired design accounts for correlated measurements by comparing BeamSecure-AI vs. each baseline on the same test samples, reducing variance, and increasing statistical power. Results show BeamSecure-AI achieves mean accuracy of 97.2% vs. AdaptiveGuard (89.4%), ResilientBeam (92.1%), and BeamSec (93.8%), with paired t-test yielding t(4) = 8.92, p < 0.001, confirming statistically significant improvement. Effect sizes measured using Cohen’s d demonstrate large practical significance: d = 2.31 vs. AdaptiveGuard, d = 1.87 vs. ResilientBeam, and d = 1.64 vs. BeamSec, all exceeding the threshold (d > 0.8) for meaningful real-world impact. Complete baseline implementations, optimization logs, and statistical analysis scripts are available in the reproducibility repository. Table 15 shows the statistical testing and effect size analysis.
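A paired t-test over matched runs reduces to a one-sample test on the per-run differences, as the sketch below shows. The function name is hypothetical and the per-run accuracies are illustrative placeholders, not values from the study.

```python
from math import sqrt
from statistics import fmean, stdev


def paired_t(x, y):
    """Paired t statistic and degrees of freedom for matched runs."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    t = fmean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1


# Hypothetical accuracies (%) from five matched runs, for illustration only.
proposed = [97.0, 97.4, 97.1, 97.3, 97.2]
baseline = [93.5, 94.1, 93.6, 94.0, 93.8]
t_stat, dof = paired_t(proposed, baseline)
```

With five runs the test has four degrees of freedom, which matches the t(4) notation used above.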

Table 15.

Statistical testing and effect size analysis.

5.9. Ablation Study

To assess the contribution of individual components within BeamSecure-AI, an ablation analysis was conducted by selectively turning off major modules under identical dataset, preprocessing, and hardware conditions described earlier. This study quantifies how spatial, temporal, and spectral feature streams, as well as attention, adaptive thresholding, and temporal smoothing, affect detection accuracy, false positive rate (FPR), and latency.

Table 16 presents the performance impact of turning different modules of BeamSecure-AI on or off. The results demonstrate that multi-domain feature fusion and attention mechanisms substantially improve detection accuracy and reduce FPR, while adaptive thresholding and temporal smoothing further enhance stability without compromising latency.

Table 16.

Ablation study: Impact of feature fusion and adaptive modules on model performance.

From the results in Table 16, it is evident that incorporating multiple feature domains leads to a significant improvement in accuracy, rising from 90.3% in the best single-domain setup (Spectral-only) to 94.1% with full-domain fusion. Introducing attention provides an additional 0.9% improvement and reduces FPR by 0.3%, confirming its value in focusing on critical spatiotemporal cues. Adaptive thresholding lowers FPR further to 2.2%, while temporal smoothing minimizes transient misclassifications, stabilizing predictions at 95.7% accuracy and 42.5 ms latency. These findings validate the architectural choices in BeamSecure-AI and confirm that each module meaningfully contributes to overall robustness and efficiency.

Table 17.

Safety constraints ablation study.

To evaluate resilience against adaptive adversaries, we conducted evasion experiments in which attackers observe detector behavior and iteratively modify their attack patterns to evade detection. Three adaptive attack strategies were tested: (1) gradient-based perturbations using FGSM (ε ∈ {0.05, 0.1, 0.2}) to craft adversarial feature vectors, (2) reinforcement learning-based evasion, where an attacker agent learns to minimize detection probability over 5000 episodes, and (3) mimicry attacks that blend attack signatures with normal traffic statistics. Under the non-adaptive baseline, BeamSecure-AI achieves 97.2% detection accuracy. Against gradient-based evasion, accuracy degrades to 89.4% (ε = 0.05), 84.7% (ε = 0.1), and 78.3% (ε = 0.2), a degradation of roughly 8–19 percentage points. RL-based adaptive attacks reduce detection to 81.2% after convergence (~3000 episodes), while mimicry attacks achieve 85.6% evasion success against static thresholds. However, BeamSecure-AI’s online RL adaptation mechanism provides mitigation: when enabled, the detector co-adapts by learning adversary patterns, recovering detection rates to 91.7% (gradient-based), 88.3% (RL-based), and 92.1% (mimicry) after 2000 adaptation episodes. The XAI component helps operators identify novel evasion tactics, with SHAP values highlighting shifted feature distributions (e.g., attackers reducing beam_switch_freq by 40% to mimic normal behavior). These results confirm moderate robustness degradation under adaptive attacks (15–20% initial drop) with partial recovery through online adaptation, indicating the need for continuous model updating in adversarial deployment scenarios. Future work should explore adversarial training integration and game-theoretic defense strategies to further improve adaptive robustness.
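
The gradient-based evasion strategy can be sketched on a toy detector. The example below applies the fast gradient sign method (FGSM) to a logistic-regression scorer, used here only as a stand-in for the actual detector; the weights, bias, and feature vector are hypothetical, and only the ε values are taken from the text. For a logistic model with binary cross-entropy loss, the gradient with respect to the input is (p − y)·w, so the perturbation for an attack sample (y = 1) pushes each feature against the sign of its weight.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def detect_prob(w, b, x):
    """Probability that feature vector x is classified as an attack."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm_evasion(w, b, x, eps, y_true=1.0):
    """One FGSM step: x_adv = x + eps * sign(dLoss/dx)."""
    p = detect_prob(w, b, x)
    # Gradient of binary cross-entropy w.r.t. x for a logistic model: (p - y) * w
    grad = [(p - y_true) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]


# Hypothetical detector parameters and attack feature vector (illustrative only).
w, b = [1.5, -0.8, 2.0], -0.5
x_attack = [0.6, 0.2, 0.7]

clean_prob = detect_prob(w, b, x_attack)
probs = [detect_prob(w, b, fgsm_evasion(w, b, x_attack, eps))
         for eps in (0.05, 0.1, 0.2)]
print(f"clean: {clean_prob:.3f}", [f"{p:.3f}" for p in probs])
```

On this toy model the detection probability falls monotonically as ε grows, which mirrors the qualitative trend reported above. The magnitudes are not comparable, since the real detector is neither linear nor exposed to white-box gradients in the same way.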

6. Discussion

Overall, the performance findings indicate that BeamSecure-AI makes considerable progress in beam-level attack detection for mmWave RAN systems. The results reflect the effectiveness of the integrated AI-based approach, which combines multi-domain feature extraction, deep reinforcement learning, and explainable AI. The 96.7% detection accuracy exceeds that of the other methods by a significant margin while keeping response latency within the constraints required for real-time operation.

Analysis of Table 6 shows that BeamSecure-AI outperforms the other approaches on the key performance metrics. The increase of more than 4.9% in detection accuracy relative to the best competing method (SecBeam-Enhanced) translates into stronger practical security in deployment. A 27.1% decrease in response latency relative to SecBeam-Enhanced allows faster threat mitigation and limits the effect on network operations.

The explainability features give BeamSecure-AI an advantage over competing methods, none of which offers comprehensive interpretability. This capability meets an urgent operational need: security decisions have to be clear and auditable. An operator satisfaction level of 89% supports the usefulness of explainable AI in streamlining human decision-making.

The robustness analysis shows that BeamSecure-AI maintains strong performance across a variety of attack types and environmental conditions. Its ability to identify even sophisticated multi-vector attacks illustrates the maturity of its threat recognition capabilities. The adaptive learning mechanisms allow the system to remain effective as attack patterns change over time.

The scalability validation indicates that BeamSecure-AI can support large-scale deployments without disproportionate computational overhead. Its linear scaling properties allow cost-efficient expansion to metropolitan-level coverage with centralized threat correlation capabilities. The distributed architecture eases integration with existing network infrastructure and allows gradual deployment strategies.

The false positive rate of ≤2.3% addresses a significant problem in real-world security systems, where a high false-alarm rate can be crippling: it makes the system unusable and erodes operator trust. The adaptive thresholding mechanisms play a significant role here, adjusting detection sensitivity thresholds to match current network conditions and past performance.

Computational overhead analysis shows that BeamSecure-AI has modest resource demands that fit common network infrastructure. The efficient algorithm design and optimized implementation allow deployment without specialized hardware or large infrastructure investments, which eases rollout and adoption.

The real-world validation results support the system’s practical effectiveness under operating conditions. The successful detection and mitigation of actual attack attempts show that the laboratory and simulation results carry over to real-world performance. Feedback from network operators confirms the stability and usability of the system at the production level.

The present BeamSecure-AI implementation has several limitations. First, the system must initially be trained on representative attack data, which may not be readily available for emerging threats. Second, its computational complexity depends on the number of monitored beams, which may limit scalability in very dense networks. Third, the explainability capabilities, although important, add computation that can affect performance in low-resource settings.

Future research directions include extension to new frequency ranges such as terahertz bands, integration with quantum-secure communication protocols, and federated learning approaches for collaborative sharing of threat intelligence. Integrating further AI modules, such as generative adversarial networks to generate synthetic attack data and graph neural networks to analyze network topology, is a promising avenue for further development.

O-RAN Integration and Security Hardening

BeamSecure-AI has been designed for native deployment within the O-RAN architecture to ensure seamless interoperability and secure lifecycle management. The framework operates as an xApp hosted in the Near-Real-Time RIC (RAN Intelligent Controller), enabling continuous beam-level telemetry ingestion and adaptive policy actuation.

On the E2 interface, BeamSecure-AI subscribes to E2SM-KPM v2.03 telemetry streams, receiving key performance indicators (KPIs) such as beam ID, SINR, RSSI, alignment offset, and channel variance. These measurements are used to update the detector’s multi-domain feature representations in real time. Policy recommendations and mitigation commands—such as beam re-alignment or null steering—are returned through the A1 interface to guide near-real-time optimization loops in the Distributed Unit (DU) and Centralized Unit (CU). System-wide analytics and long-term retraining tasks are handled through the O1 interface to the Service Management and Orchestration (SMO) layer.
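
The telemetry-to-feature step described above can be sketched as a per-beam rolling window. The record type, field names, units, window length, and derived features below are hypothetical illustrations; they are not the E2SM-KPM schema or the BeamSecure-AI feature set, and no RIC SDK calls are shown.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from statistics import mean, pvariance


@dataclass(frozen=True)
class BeamKpi:
    """One telemetry sample for a beam (field names and units are assumed)."""
    beam_id: int
    sinr_db: float
    rssi_dbm: float
    alignment_offset_deg: float
    channel_variance: float


class BeamFeatureWindow:
    """Keeps a short history per beam and derives simple temporal features."""

    def __init__(self, window=20):
        self._history = defaultdict(lambda: deque(maxlen=window))

    def update(self, kpi):
        hist = self._history[kpi.beam_id]
        hist.append(kpi)
        sinr = [k.sinr_db for k in hist]
        return {
            "beam_id": kpi.beam_id,
            "n_samples": len(hist),
            "sinr_mean_db": mean(sinr),
            "sinr_var": pvariance(sinr),
            "sinr_drop_db": sinr[0] - sinr[-1],  # oldest minus newest sample
            "max_abs_offset_deg": max(abs(k.alignment_offset_deg) for k in hist),
        }


window = BeamFeatureWindow(window=3)
window.update(BeamKpi(7, 20.0, -60.0, 0.5, 0.1))
features = window.update(BeamKpi(7, 14.0, -66.0, -2.0, 0.4))
print(features)
```

Keeping the history keyed by beam ID means a sudden SINR drop or alignment excursion on one beam is not diluted by measurements from other beams. A bounded deque keeps memory use constant per monitored beam.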

To ensure model integrity and secure orchestration, all BeamSecure-AI binaries and weight files are digitally signed within the SMO using a lightweight PKI scheme and validated by the RIC before activation. Access control is managed via OAuth 2.0 tokens validated at the E2 terminator, preventing unauthorized model deployment or telemetry access. Each model update is hashed using SHA-256 checksums to detect tampering, while adversarial retraining and differential-privacy noise injection mitigate model-poisoning and evasion attacks.
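
The checksum step can be illustrated with the Python standard library. The sketch below computes a SHA-256 digest of a model file in chunks and compares it with an expected value; the file name, contents, and helper names are hypothetical, and the PKI signature validation mentioned above is not shown.

```python
import hashlib
import hmac
import os
import tempfile


def sha256_of_file(path, chunk_size=1 << 20):
    """Stream the file in chunks so large weight files need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model_file(path, expected_hex):
    """Return True only if the file's SHA-256 digest matches the expected one."""
    return hmac.compare_digest(sha256_of_file(path), expected_hex.lower())


# Demonstration with a throwaway file standing in for a weight file.
with tempfile.TemporaryDirectory() as tmp:
    model_path = os.path.join(tmp, "weights.bin")  # hypothetical file name
    with open(model_path, "wb") as fh:
        fh.write(b"example weights")
    expected = hashlib.sha256(b"example weights").hexdigest()

    ok_before = verify_model_file(model_path, expected)
    with open(model_path, "ab") as fh:
        fh.write(b"tampered")                      # simulate modification
    ok_after = verify_model_file(model_path, expected)

print(ok_before, ok_after)
```

A checksum on its own only detects changes relative to a trusted reference value. It is the digital signature over that value that ties it to the publisher, which is why the two mechanisms are used together.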

Telemetry schemas strictly adhere to O-RAN WG3 E2SM KPM v2.03 specifications, guaranteeing interoperability across multi-vendor DUs/CUs. This integration allows BeamSecure-AI to function as a secure, interpretable, and standards-compliant xApp, capable of adaptive beam defense and trusted coordination within the O-RAN ecosystem.

7. Conclusions

The presented work introduced BeamSecure-AI, an end-to-end AI-based framework capable of detecting mmWave RAN attacks down to the beam level. The offered solution combines deep reinforcement learning, explainable AI, and multi-domain feature extraction to provide secure coverage that is scalable, transparent, and robust. Experimental evaluation shows superior performance, achieving a detection accuracy of 96.7%, a response latency of 42.5 ms, and false-positive rates ≤ 2.3%, all within real-time operational constraints. The framework effectively mitigates critical challenges in mmWave security, including attack diversity, low-latency requirements, and interpretability, without sacrificing practical deployability.

The principal limitations include dependency on training data quality, computational scalability under dense beam configurations, and the requirement for periodic model updates to counter emerging threats. Future research will extend BeamSecure-AI toward next-generation communication paradigms by developing federated learning-based collaborative threat intelligence, quantum-secure communication integration, and cross-domain transfer learning to improve adaptability.

Compared with contemporary solutions such as AdaptiveGuard, ResilientBeam, and BeamSec, the proposed BeamSecure-AI framework demonstrates quantifiable improvements in robustness, interpretability, and real-time responsiveness. Its unified DRL + XAI design ensures higher detection stability across diverse attack intensities and deployment scales. These findings validate BeamSecure-AI as a scalable, interpretable, and production-ready defense mechanism for future 6G beam-level security deployments, establishing a strong foundation for secure, transparent, and adaptive network protection in next-generation mmWave RAN environments.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/electronics14234642/s1.

Funding

The authors extend their appreciation to the Deputyship for Research & Innovation, Ministry of Education in Saudi Arabia, for funding this research work through the project number MoE-IF-UJ-R2-22-3263-1.

Data Availability Statement

The data used in this study are available from the corresponding author (fnalsulami@uj.edu.sa) upon reasonable request; see also the Supplementary Materials.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hoang, V.-T.; Ergu, Y.A.; Nguyen, V.-L. Security risks and countermeasures of adversarial attacks on AI-driven applications in 6G networks: A survey. Comput. Commun. 2024, 230, 104031.

- Catak, F.O.; Kuzlu, M.; Catak, E.; Cali, U.; Unal, D. Security concerns on machine learning solutions for 6G networks in mmWave beam prediction. Future Gener. Comput. Syst. 2022, 127, 276–285.

- Chu, H.; Pan, X.; Jiang, J.; Li, X.; Zheng, L. Adaptive and Robust Channel Estimation for IRS-Aided Millimeter-Wave Communications. IEEE Trans. Veh. Technol. 2024, 73, 9411–9423.

- Kim, B.; Sagduyu, Y.E.; Erpek, T.; Ulukus, S. Adversarial attacks on deep learning based mmWave beam prediction in 5G and beyond. IEEE Trans. Wirel. Commun. 2021, 20, 4394–4407.

- Wang, N.; Jiao, L.; Wang, P.; Li, W.; Zeng, K. Exploiting beam features for spoofing attack detection in mmWave 60-GHz IEEE 802.11ad networks. IEEE Trans. Wirel. Commun. 2021, 20, 3321–3335.

- Li, J.; Lazos, L.; Li, M. SecBeam: Securing mmWave beam alignment against beam-stealing attacks. IEEE Trans. Mob. Comput. 2023, 22, 4567–4580.

- Dinh-Van, S.; Hoang, T.M.; Cebecioglu, B.B.; Fowler, D.S.; Mo, Y.K.; Higgins, M.D. A defensive strategy against beam training attack in 5G mmWave networks for manufacturing. IEEE Trans. Inf. Forensics Secur. 2023, 18, 2204–2217.

- Zheng, C.; He, J.; Cai, G.; Yu, Z.; Kang, C.G. Security risks in vision-based beam prediction: From spatial proxy attacks to feature refinement. IEEE Internet Things J. 2024, 11, 21234–21247.

- Khan, N.; Abdallah, A.; Celik, A.; Eltawil, A.M.; Coleri, S. Digital twin-assisted explainable AI for robust beam prediction in mmWave MIMO systems. IEEE Trans. Veh. Technol. 2025, 74, 3456–3469.

- Paltun, B.G.; Sahin, S. Robust intrusion detection system with explainable artificial intelligence. Comput. Secur. 2025, 142, 145–150.

- Salem, A.H.; Azzam, S.M.; Emam, O.E.; Abohany, A.A. Advancing cybersecurity: A comprehensive review of AI-driven detection techniques. J. Big Data 2024, 11, 105.

- Kuzlu, M.; Catak, F.O.; Catak, E.; Cali, U.; Guler, O. Adversarial security mitigations of mmWave beamforming prediction models using defensive distillation and adversarial retraining. Int. J. Inf. Secur. 2022, 22, 157–171.

- Moore, J.; Adhikari, N.; Abdalla, A.S.; Marojevic, V. Toward secure and efficient O-RAN deployments: Secure slicing xApp use case. In Proceedings of the 2023 IEEE Future Networks World Forum (FNWF), Baltimore, MD, USA, 13–15 November 2023; pp. 123–128.

- Li, J.; Zhang, M.; Chen, L. Enhanced SecBeam: Advanced beam alignment security with machine learning. IEEE Wirel. Commun. Lett. 2024, 13, 892–896.

- Chen, X.; Liu, Y.; Wang, Z. Adaptive Guard: Context-aware security for mmWave networks. IEEE Trans. Netw. Serv. Manag. 2025, 22, 234–247.

- Wang, H.; Kumar, S.; Patel, R. MLShield: Machine learning-based protection for mmWave communications. IEEE Internet Things J. 2024, 11, 14567–14579.

- Paracha, A.; Arshad, J.; Farah, M.B.; Ismail, K. Machine learning security and privacy: A review of threats and countermeasures. EURASIP J. Inf. Secur. 2024, 2024, 10.

- Zhang, L.; Chen, Q.; Yang, F. Resilient Beam: Robust beamforming against sophisticated attacks. IEEE Trans. Commun. 2024, 72, 3678–3691.