Abstract

The integration of artificial intelligence (AI) into the Internet of Things (IoT) has revolutionized industries, enabling smarter decision-making through real-time data analysis. However, the inherent complexity and opacity of many AI models pose significant challenges to trust, accountability, and safety in critical applications such as healthcare, industrial automation, and cybersecurity. Explainable AI (XAI) addresses these challenges by making AI-driven decisions transparent and interpretable, empowering users to understand, validate, and act on algorithmic outputs. This paper examines the pivotal role of XAI in IoT development, synthesizing advancements, challenges, and opportunities across domains. Key issues include the computational demands of XAI methods on resource-constrained IoT devices, the diversity of data types requiring adaptable explanation frameworks, and vulnerabilities to adversarial attacks that exploit transparency. Through healthcare IoT, predictive maintenance, and smart homes, we show how XAI bridges the gap between complex algorithms and human-centric usability, for instance by clarifying medical diagnoses or justifying equipment failure alerts. We discuss multiple XAI implementations for IoT, such as lightweight XAI for edge devices and hybrid models combining rule-based logic with deep learning. This paper advocates for XAI as a cornerstone of trustworthy IoT ecosystems, ensuring transparency without compromising efficiency. As IoT continues to shape industries and daily life, XAI will remain essential to fostering accountability, safety, and public confidence in automated systems.

1. Introduction

The Internet of Things (IoT) has emerged as a cornerstone of modern technological advancement, connecting billions of devices—from wearable health monitors to industrial sensors—to collect, analyze, and act on data in real time. This connectivity has enabled unprecedented efficiency in sectors such as healthcare, agriculture, manufacturing, and urban infrastructure. For instance, smart factories leverage IoT sensors to predict equipment failures, while precision agriculture systems automate irrigation based on soil moisture and weather forecasts. Yet, as IoT systems grow more autonomous and data-driven, a critical challenge has come to the forefront: the lack of transparency in the artificial intelligence (AI) models that power them.

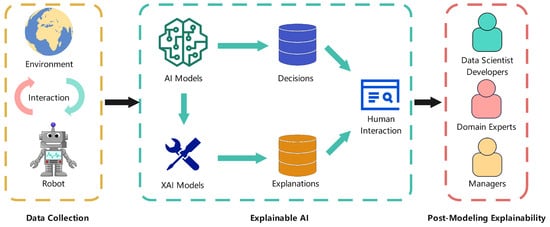

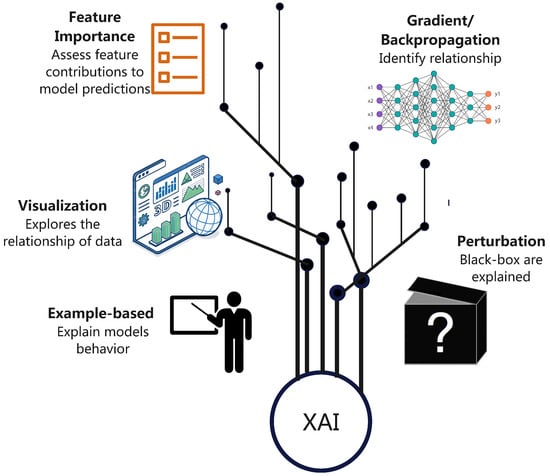

Many AI algorithms, particularly deep learning models, function as “black boxes”, producing decisions without clear explanations. In healthcare IoT, for example, a system might flag a patient’s irregular sleep patterns as a depression risk, but clinicians cannot act confidently without understanding why. Similarly, in industrial settings, an AI might shut down a conveyor belt due to suspected faults, but engineers need to know which sensor readings triggered the alert to address the root cause. This lack of transparency undermines trust, complicates troubleshooting, and raises ethical concerns, especially in applications where errors can lead to safety risks, financial losses, or legal liabilities [1].

This is where Explainable AI (XAI) helps. XAI increases the transparency of machine learning models, avoiding the problems of “black box” models by letting humans understand how a model reached a decision. As seen in Figure 1, data is taken from the environment and given to the model. The AI model makes a decision, such as whether an intrusion is taking place if the AI is part of an intrusion detection system (IDS). The XAI component then takes the decision and, as post-modeling explainability, produces an explanation that humans can understand.

Figure 2 shows the XAI methodologies. Perturbation analyzes how a model’s decisions change when its inputs are modified. The example-based methodology explains model behavior by comparing decisions with similar reference instances. Visualization examines the relationships between the data used in the model. Back-propagation-based methods trace the relationships between data that the model has learned. Finally, feature importance identifies the weight that individual features carry in a model’s predictions.

Figure 1. Overview of XAI.

Figure 2. XAI Methodologies.

Figure 1 illustrates the overall workflow of XAI. Data is first collected through interactions between a model and its environment. AI models use this data to generate decisions, while XAI models produce accompanying explanations. Both the decisions and explanations are presented to humans through an interface. Finally, different stakeholders, including but not limited to data scientists, domain experts, and managers, interpret the output and rely on these explanations to understand, validate, and trust the AI system.

Despite its promise, integrating XAI into IoT systems faces significant hurdles. First, IoT devices often operate under strict resource constraints such as limited processing power, memory, and energy. This makes it difficult to deploy computationally intensive XAI methods like Shapley Additive Explanations (SHAP) or Local Interpretable Model-agnostic Explanations (LIME). Second, the diversity of IoT data (e.g., time-series sensor readings, images, text) demands adaptable explanation frameworks. A one-size-fits-all approach fails because explaining a medical diagnosis is completely different from justifying a cybersecurity alert. Third, the rise in adversarial attacks targeting XAI itself, such as manipulating explanations to hide malicious activity, poses new security risks.
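To make the computational burden of Shapley-based explanations concrete, the following is a minimal sketch of an exact Shapley value computation in plain Python. The toy payoff function, its weights, and the interaction term are hypothetical and chosen only for illustration; SHAP itself relies on approximations precisely because the exact computation below enumerates every feature coalition.

```python
from itertools import combinations
from math import factorial

def exact_shapley(value_fn, n_features):
    """Exact Shapley values; value_fn maps a frozenset of feature indices to a payoff."""
    phi = [0.0] * n_features
    evaluations = 0
    for i in range(n_features):
        others = [j for j in range(n_features) if j != i]
        for size in range(n_features):
            # Weight of a coalition of this size in the Shapley average
            weight = (factorial(size) * factorial(n_features - size - 1)
                      / factorial(n_features))
            for subset in combinations(others, size):
                s = frozenset(subset)
                phi[i] += weight * (value_fn(s | {i}) - value_fn(s))
                evaluations += 2  # two model evaluations per coalition
    return phi, evaluations

# Hypothetical toy payoff: additive sensor score plus one interaction term
WEIGHTS = [2.0, 1.0, 0.5]

def toy_value(coalition):
    score = sum(WEIGHTS[j] for j in coalition)
    if 0 in coalition and 1 in coalition:
        score += 1.0  # interaction between features 0 and 1
    return score

phi, evaluations = exact_shapley(toy_value, 3)
print(phi, evaluations)
```

The number of payoff evaluations grows as n · 2^n in this naive form, which is why exact attribution quickly becomes impractical on constrained devices as the feature count rises.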

The importance of XAI in IoT extends beyond technical necessity. Regulatory bodies, including the European Union and the U.S. Federal Trade Commission, now mandate transparency in AI systems affecting human safety or rights [2]. For example, the EU’s AI Act requires “high-risk” IoT applications, such as medical devices or critical infrastructure, to provide auditable explanations for their decisions [2]. Similarly, industries are increasingly adopting XAI to comply with ethical standards and build consumer trust.

However, current implementations often fall short of expectations. Many XAI methods prioritize simplicity over accuracy, offering superficial explanations that obscure critical details. For instance, a feature importance chart might highlight “network traffic volume” as a factor in a cybersecurity alert but fail to clarify how specific packet sizes or protocols contributed to the decision. This “checkbox compliance” risks creating a false sense of security, where systems meet regulatory requirements without delivering meaningful transparency.

This paper reviews recent advancements in XAI for IoT systems, discussing methodologies, applications, and challenges across diverse domains. To establish a solid foundation for this review, we began by gathering a diverse range of scholarly resources on Explainable AI for IoT over the past five years. We aimed to capture the most current and significant developments in the field. We conducted a broad search across major academic databases and journals, focusing on publications that connected key themes of AI interpretability with IoT applications. The identified literature was then carefully reviewed and synthesized to ensure this paper provides a comprehensive overview of the latest methodologies, diverse applications, and emerging challenges. Our contributions focus on:

- We analyze surveys covering XAI frameworks in IoT ecosystems, the various ways XAI is being implemented in cybersecurity, as well as challenges, legislative policies, the current state of XAI in the market, ethical issues involving XAI, XAI taxonomies, and enhancing the security of XAI.

- We discuss various models that focus on using XAI in cybersecurity. We analyze the models using XAI to build efficient intrusion detection systems. We then discuss how XAI is being used to enhance security in the Internet of Medical Things. In Table A1, we list each dataset used in the models discussed. We list their type, their contributions to the models for which they are used, and the impact the datasets have on those models.

- We look at how XAI is being used with machine learning in a variety of fields, such as smart homes and agriculture. We discuss the benefits, core challenges, and ethical considerations of implementing XAI. We discuss ways to address various challenges in implementing secure and understandable XAI. By decentralizing model training and explanation generation, protecting explanation methods from being exploited, ensuring adaptable explanations, and using real-world datasets to train the models, we can create a secure XAI model that people can understand.

This paper synthesizes advancements, challenges, and opportunities in XAI for IoT, drawing on peer-reviewed studies across domains. We emphasize practical insights, such as the balance of explanation granularity with computational costs, and discuss ethical considerations, including avoiding bias in automated decisions. By addressing these issues, developers and policymakers can ensure IoT systems are not only intelligent but also trustworthy, equitable, and human-centric. As IoT continues to permeate daily life, XAI will remain indispensable in fostering a future where technology empowers users through clarity, not complexity.

This paper is organized as follows: Section 1 introduces the topic and our contributions. Section 2 reviews the current trend of XAI. Section 3 describes the development of XAI in IoT cybersecurity. Section 4 presents XAI applications with machine learning methods. Section 5 discusses ways to address various challenges in implementing secure and understandable XAI. Section 6 concludes this paper.

2. Current Trend of XAI

The rapid expansion of the Internet of Things (IoT) across domains such as healthcare, industry, and finance has amplified the need for transparent and interpretable artificial intelligence systems. XAI frameworks offer a solution by enhancing the visibility and trustworthiness of AI-driven decisions within interconnected IoT environments. This section explores the integration of XAI in IoT ecosystems, including specialized branches like the Internet of Medical Things (IoMT) and Industrial IoT (IIoT), and examines existing research that addresses the challenges of decentralization, real-time decision-making, and human-AI collaboration. It also highlights ongoing efforts to develop lightweight, interoperable, and human-centric XAI models capable of functioning effectively under the computational and privacy constraints inherent to IoT infrastructures.

2.1. XAI Frameworks for IoT Ecosystems

The integration of XAI into IoT networks—such as IoMT (Internet of Medical Things), IIoT (Industrial IoT), and IoHT (Internet of Health Things)—addresses transparency challenges in critical domains like healthcare, finance, and industrial automation. Ref. [1] provides a comprehensive survey of XAI frameworks, emphasizing Distributed Deep Reinforcement Learning (DDRL) for decentralized decision-making in IoT environments. The paper identifies gaps in real-time deployment, interoperability, and human-AI collaboration, advocating for lightweight XAI models tailored to resource-constrained IoT devices. Figure 2 illustrates several core methodologies used in XAI. Feature importance methods assess how individual input features contribute to a model’s predictions. Gradient and backpropagation techniques identify the internal relationships learned by neural networks. Visualization approaches explore patterns in the data and help reveal how models behave. Example-based methods explain model decisions by comparing them to similar reference instances. Perturbation techniques analyze how black-box models respond when inputs are intentionally modified. Together, these methods offer multiple ways to interpret and understand XAI systems.
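As an illustration of the perturbation methodology described above, the sketch below replaces one input feature at a time with a baseline value and records how much the model output changes. The toy model, the feature meanings, and the baseline values are assumptions made for this example and do not come from the surveyed works.

```python
def occlusion_importance(model, x, baseline):
    """Change in model output when each feature is replaced by its baseline value."""
    reference = model(x)
    scores = []
    for i in range(len(x)):
        perturbed = list(x)
        perturbed[i] = baseline[i]  # intentionally modify a single input
        scores.append(reference - model(perturbed))
    return scores

def toy_model(x):
    # Hypothetical black box over (packet_rate, payload_entropy, session_duration)
    return 0.8 * x[0] + 0.1 * x[1] * x[1] - 0.05 * x[2]

x = [10.0, 4.0, 20.0]
baseline = [2.0, 4.0, 20.0]  # assumed "normal" operating point
scores = occlusion_importance(toy_model, x, baseline)
print(scores)
```

Only the first feature differs from the baseline here, so it receives all of the attribution. The method needs one extra model call per feature, which keeps it cheap compared with coalition-based approaches.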

Ref. [3] expands on IoT application-layer protocols (e.g., MQTT, CoAP, HTTP) and their security mechanisms, highlighting a case study where SHAP enhances transparency in intrusion detection for MQTT-based IoT networks. Challenges include limited computational resources, sparse datasets for training robust AI models, and adversarial risks such as evasion attacks. The authors propose AI-enabled IoT (AIoT) as a future model, combining scalable AI models with XAI that preserves the user’s privacy. Ref. [4] critiques the slow adoption of XAI in IoT despite its theoretical benefits, identifying unresolved issues like balancing interpretability with performance, handling heterogeneous data streams, and adapting XAI to edge computing constraints. Ref. [5] focuses on XAI-driven anomaly detection for IoT, where traditional methods lack transparency. Techniques like LIME and attention mechanisms generate human-readable explanations for anomalies, but challenges persist in dynamic environments (e.g., concept drift) and computational overhead.

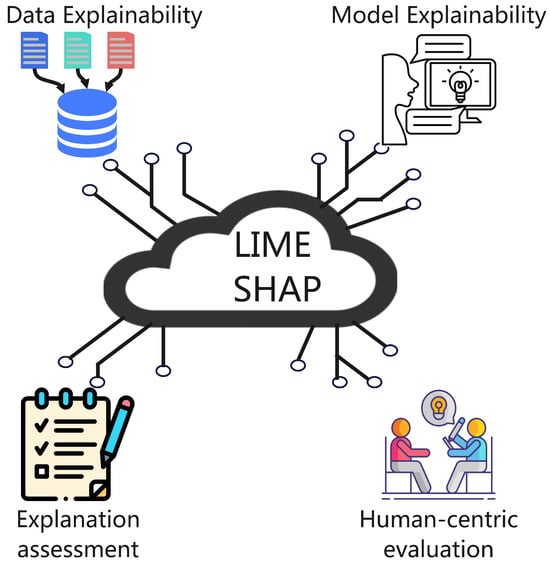

Ref. [6] provides a detailed exploration of XAI methodologies, offering a structured framework to understand how AI models generate interpretable explanations. The survey organizes XAI into four key axes:

- Data explainability: This involves visualizing and interpreting data shifts in IoT sensor streams, enabling users to understand how input data influences model decisions.

- Model explainability: This axis distinguishes between intrinsic methods (e.g., decision trees, which are inherently interpretable) and post-hoc techniques (e.g., LIME or SHAP, which explain black-box models after training).

- Explanation assessment: This focuses on evaluating explanations using metrics like faithfulness (how accurately the explanation reflects the model’s decision process) and stability (the consistency of explanations across similar inputs).

- Human-centric evaluation: This emphasizes tailoring explanations to the expertise and needs of different users, such as network engineers requiring technical details or medical professionals needing simplified, actionable insights.
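The explanation assessment axis can be sketched with two simple checks. The code below is an illustrative assumption rather than the metric definitions used in [6]: stability is approximated as the largest change in an attribution vector across slightly perturbed inputs, and faithfulness as the drop in model output after the top-attributed feature is replaced by a baseline (a deletion test).

```python
import random

def occlusion_attribution(model, x, baseline):
    """Simple attribution: output change when a feature is set to its baseline."""
    reference = model(x)
    scores = []
    for i in range(len(x)):
        perturbed = list(x)
        perturbed[i] = baseline[i]
        scores.append(reference - model(perturbed))
    return scores

def stability(model, x, baseline, noise=0.01, trials=20, seed=0):
    """Largest L1 distance between explanations of x and of nearby inputs."""
    rng = random.Random(seed)
    base = occlusion_attribution(model, x, baseline)
    worst = 0.0
    for _ in range(trials):
        neighbor = [v + rng.uniform(-noise, noise) for v in x]
        attr = occlusion_attribution(model, neighbor, baseline)
        worst = max(worst, sum(abs(a - b) for a, b in zip(base, attr)))
    return worst

def deletion_drop(model, x, baseline, attributions, k=1):
    """Output drop after replacing the k top-attributed features with baseline."""
    top = sorted(range(len(x)), key=lambda i: abs(attributions[i]), reverse=True)[:k]
    deleted = [baseline[i] if i in top else v for i, v in enumerate(x)]
    return model(x) - model(deleted)

def toy_model(x):
    # Hypothetical linear scorer used only to exercise the metrics
    return 3.0 * x[0] + 1.0 * x[1]

x, baseline = [1.0, 1.0], [0.0, 0.0]
attributions = occlusion_attribution(toy_model, x, baseline)
instability = stability(toy_model, x, baseline)
drop = deletion_drop(toy_model, x, baseline, attributions, k=1)
print(attributions, instability, drop)
```

A small instability value and a large deletion drop both suggest that the explanation tracks the model's behavior; the thresholds that count as "small" or "large" are application-specific.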

Figure 3 illustrates key strategies of XAI, emphasizing how different components contribute to making AI systems more transparent and trustworthy. Prominent post-hoc explanation techniques such as LIME and SHAP are core tools for generating interpretable insights from complex models. The figure also shows the four parts of XAI listed above: data explainability, which focuses on understanding and validating the data used for training; model explainability, which concerns making the internal mechanisms and decisions of AI models more interpretable; explanation assessment, which involves evaluating the quality, fidelity, and usefulness of generated explanations; and human-centric evaluation, which ensures that explanations are comprehensible, meaningful, and aligned with user needs. Together, these components represent an integrated framework for developing and assessing interpretable AI systems. Ref. [7] builds on this by categorizing XAI methods into additional taxonomies, such as model-specific approaches (e.g., decision rules for linear models) and feature-based techniques (e.g., SHAP values that quantify feature contributions). Meanwhile, ref. [8] delves into deep learning explainability, highlighting techniques like attention mechanisms and saliency maps, which identify important regions in input data (e.g., highlighting suspicious code segments in malware detection). However, the paper notes trade-offs between interpretability and model performance, as simpler explanations may oversimplify complex decision processes.

Figure 3. XAI Strategies.

2.2. Privacy Vulnerabilities and Adversarial Threats to XAI

XAI’s transparency introduces privacy and adversarial risks. Ref. [7] evaluates XAI taxonomies against privacy attacks:

- Model-agnostic methods (e.g., LIME) make black-box models vulnerable to membership inference attacks.

- Backpropagation-based techniques (e.g., Grad-CAM) are susceptible to model inversion attacks, exposing sensitive training data.

- Perturbation-based methods (e.g., LIME) resist inference attacks but struggle with data drift.

Privacy-enhancing techniques like differential privacy degrade explanation quality by adding noise, while federated learning increases vulnerability to model inversion. Ref. [9] systematizes threats under adversarial XAI (AdvXAI), including:

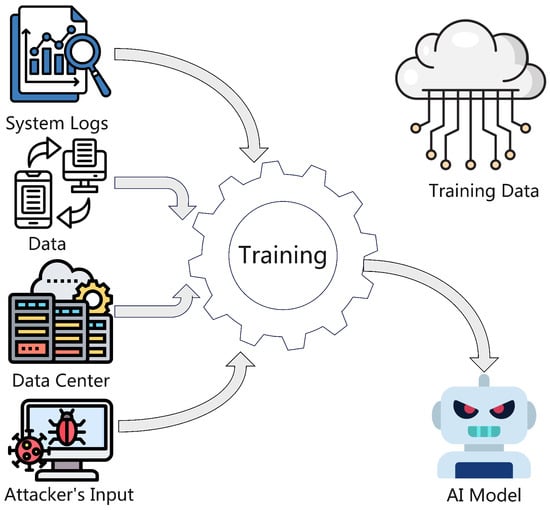

- Data poisoning: Corrupting training data to manipulate explanations. As shown in Figure 4, the attacker corrupts training data drawn from system logs, streaming data, and the data center, producing a vulnerable XAI model.

Figure 4. AI Poisoning Diagram.

- Adversarial examples: Crafting inputs to mislead models and their explanations.

- Model manipulation: Altering parameters to produce biased explanations.

Defenses include explanation aggregation (ensembling multiple XAI methods) and robustness regularization. Ref. [10] extends this analysis to 5G/B5G networks, advocating for pre-model (transparent design), in-model (attention layers), and post-model (LIME) explainers to counter cross-layer threats like adversarial attacks on RAN protocols.
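A minimal sketch of explanation aggregation follows. It assumes each explainer returns one attribution per feature; the attributions are normalized and then combined with a per-feature median. The choice of the median (rather than a mean) and the example attribution vectors are assumptions for illustration, not the specific scheme from [9].

```python
from statistics import median

def normalize(attributions):
    """Scale attributions so their absolute values sum to one."""
    total = sum(abs(a) for a in attributions)
    return [a / total for a in attributions] if total else list(attributions)

def aggregate_explanations(explanations):
    """Per-feature median across several explainers' normalized attributions."""
    normalized = [normalize(e) for e in explanations]
    return [median(column) for column in zip(*normalized)]

# Hypothetical attributions for three features from three explainers;
# the third explainer is assumed to have been manipulated by an attacker.
explainer_a = [0.6, 0.3, 0.1]
explainer_b = [0.5, 0.4, 0.1]
explainer_c = [0.0, 0.1, 0.9]

combined = aggregate_explanations([explainer_a, explainer_b, explainer_c])
top_feature = max(range(len(combined)), key=lambda i: combined[i])
print(combined, top_feature)
```

Because a single manipulated explainer cannot move the median far, the aggregated ranking still points at the first feature, whereas the manipulated explanation on its own would have pointed at the third.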

2.3. Enhancing IoT Security with Emerging Technologies

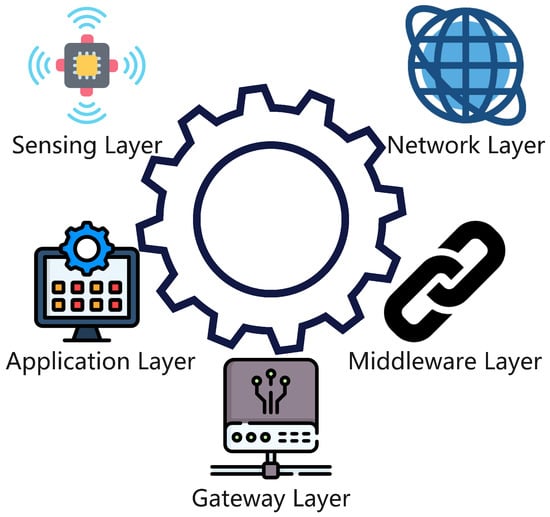

Beyond XAI, IoT security benefits from complementary technologies. Ref. [11] reviews blockchain for immutable audit trails in device authentication and edge computing for localized XAI to reduce latency. Challenges include model drift in dynamic environments and adversarial attacks on federated learning systems. Ref. [12] analyzes ML/DL advancements for IoT threat detection, noting that while CNNs excel in malware image detection, their complexity hinders explainability. Hybrid models (e.g., CNNs with rule-based systems) and synthetic data generation are proposed to address dataset scarcity. Refs. [3,5] advocate for lightweight, scalable AI models tailored to IoT’s resource constraints. Figure 5 shows the various layers that make up the architecture of the IoT framework. First, the sensing layer gathers data from the environment. Second, the network layer facilitates connections between devices on an IoT network. Third, the middleware layer manages the devices and networks. Fourth, the gateway layer collects data from the sensors and sends the data to the cloud. Finally, the application layer is the layer users interact with.

Figure 5. IoT Framework Architecture.

2.4. Legislative Policies, Ethical Concerns, and Market Realities

Regulatory frameworks struggle to address XAI’s technical complexities. Ref. [2] critiques the EU’s AI Act and GDPR for mandating explainability without implementation guidelines, leading to “checkbox compliance” with ineffective XAI. The October 2023 U.S. Executive Order on AI safety is similarly criticized for lacking actionable details. The paper identifies five XAI flaws:

- Robustness: Inconsistent explanations for similar inputs.

- Adversarial attacks: Explanations manipulated to hide bias.

- Partial explanations: Overemphasizing irrelevant features (e.g., blaming benign IP addresses for intrusions).

- Data/concept drift: Outdated explanations in dynamic environments.

- Anthropomorphization: Assigning human intent to AI decisions.

Market issues include companies marketing “explainable” AI without transparency about their methods.

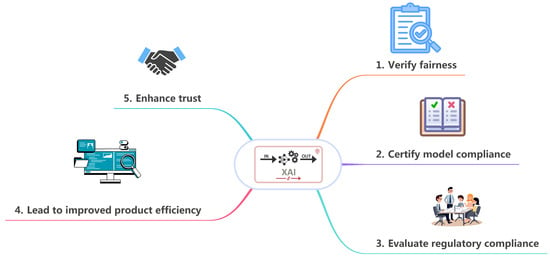

Figure 6 shows different scenarios in which XAI is used and the purpose it serves in each. First, users who design models use XAI to ensure the fairness of the model; without it, they would have no way of knowing whether the model was making fair decisions. Second, administrative staff use XAI to certify model compliance, confirming that the model meets the standards set by a government or a business. Third, management uses XAI to evaluate regulatory compliance; like administrative staff, management checks that the model meets all standards set by governments and businesses. Fourth, data scientists use XAI to improve product efficiency. Finally, users and experts use XAI to improve their trust in a model. Without XAI, the AI would be a “black box” with no guarantee that the model makes good decisions.

Figure 6. XAI in Different Scenarios.

Ref. [13] examines XAI in autonomous vehicles, where ethical dilemmas (e.g., the trolley problem) require auditable decision trails. Techniques like layer-wise relevance propagation (LRP) map sensor inputs to steering decisions, aiding debugging and compliance. Refs. [14,15] stress Responsible AI, advocating for XAI to integrate fairness (e.g., bias detection in fraud prediction) and accountability (e.g., tracing misdiagnoses in healthcare IoT). Ref. [15] proposes a novel XAI definition centered on the “audience” (developers vs. end-users) and “purpose” (debugging vs. regulatory compliance).

3. IoT Cybersecurity

The IoT and IIoT have transformed industries through interconnected smart systems, enabling real-time monitoring, automation, and data-driven decision-making. However, this connectivity exposes critical vulnerabilities, as billions of devices from medical sensors to industrial controllers become targets for cyberattacks. Traditional machine and deep learning models used in intrusion detection often operate as “black boxes”, prioritizing accuracy over transparency. In high-stakes areas like healthcare and manufacturing, where misinterpreted alerts can jeopardize safety or compliance, this lack of transparency undermines trust and actionable responses.

XAI is pivotal in cybersecurity for demystifying AI-driven decisions in intrusion detection, malware analysis, and threat prioritization. Ref. [16] explores explainable intrusion detection systems (IDS) for IoT, emphasizing the need to maintain detection accuracy while providing granular explanations (e.g., feature importance, attack patterns). The paper warns of adversarial evasion techniques targeting XAI itself and suggests hybrid models combining rule-based systems with XAI [17].

- Malware detection: Signature-based (interpretable rule sets), anomaly-based (SHAP for outlier justification), and heuristic-based (behavioral analysis).

- Zero-day vulnerabilities: XAI traces model decisions to unknown attack patterns.

- Crypto-jacking: Highlighted as an under-researched area where XAI could map mining behaviors to network logs.

These evasion techniques are shown in Figure 7 as the three main threats to XAI that need to be addressed. These issues need to be solved in order to ensure that the XAI model is secure and not vulnerable to attackers. Ref. [18] bridges a research gap by surveying XAI in intrusion detection, malware detection, and spam filtering, contrasting intrinsically interpretable models (e.g., decision trees) with post-hoc techniques like LIME. A key finding is that oversimplified explanations may mislead users, showing the necessity of context-aware XAI. Ref. [19] links XAI to reducing alert fatigue in Security Operations Centers (SOCs), where explanations prioritize threats by clarifying confidence scores or highlighting malicious code segments. However, the paper warns of XAI security risks, such as model inversion attacks exploiting explanation methods. Ref. [8] ties the “black-box problem” to eroding trust in AI-driven cyber defenses, advocating for counterfactual explanations (e.g., “What-if” scenarios for phishing detection) and prototype-based comparisons to known attack patterns.

Figure 7. XAI in Cybersecurity.

3.1. XAI with Intrusion Detection in IoT/IIoT

As the number of connected devices in homes, industries, and healthcare grows, so do risks such as hacking and data breaches. AI helps detect these threats, but its decisions are often hard to understand, making it difficult for experts to trust or act on its warnings. XAI solves this by showing how and why threats are detected such as highlighting unusual patterns in device behavior or network traffic. This clarity helps experts fix problems faster and ensures safety in critical areas like hospitals or factories, where mistakes can have serious consequences. However, making AI both accurate and easy to explain remains a challenge, especially as attacks become more complex.

Transformer-based autoencoders [20] leverage self-attention mechanisms to model temporal dependencies in IoT network traffic, achieving state-of-the-art anomaly detection. SHAP is employed to interpret feature contributions, such as packet header anomalies or protocol mismatches, providing granular explanations for flagged events. Similarly, reconstruction error-driven autoencoders [21] train on benign and adversarial data, using SHAP to quantify deviations in metrics like source IP frequency or payload entropy. These frameworks show XAI’s ability to bring unsupervised learning and human-understandable diagnostics together.
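The idea of explaining a reconstruction-error alert feature by feature can be sketched as follows. This is not the SHAP-based procedure of [20,21]: the trained autoencoder is replaced by a deliberately simple stand-in that "reconstructs" a sample by clipping each feature to the range seen in benign data, and the per-feature squared error serves as a simpler attribution. All feature names and values are hypothetical.

```python
def fit_envelope(benign_rows):
    """Learn the (min, max) range of each feature from benign traffic."""
    return [(min(col), max(col)) for col in zip(*benign_rows)]

def reconstruct(row, envelope):
    """Stand-in for an autoencoder: clip each feature to its benign range."""
    return [min(max(v, lo), hi) for v, (lo, hi) in zip(row, envelope)]

def explain_anomaly(row, envelope, names):
    """Return total reconstruction error and features ranked by their share of it."""
    recon = reconstruct(row, envelope)
    errors = [(v - r) ** 2 for v, r in zip(row, recon)]
    ranked = sorted(zip(names, errors), key=lambda pair: pair[1], reverse=True)
    return sum(errors), ranked

names = ["src_ip_frequency", "payload_entropy"]  # hypothetical feature names
benign = [[10.0, 3.0], [12.0, 3.5], [11.0, 3.2]]
envelope = fit_envelope(benign)

total_error, ranked = explain_anomaly([11.0, 7.5], envelope, names)
print(total_error, ranked)
```

With a real autoencoder, `reconstruct` would be the model's forward pass; the ranking step stays the same and gives an operator a first indication of which metric deviated.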

DDoS attack detection benefits uniquely from XAI’s transparency. For instance, ref. [22] proposes a method to identify network-layer DDoS attacks by analyzing anomalous traffic patterns (e.g., UDP/ICMP flood rates) with SHAP, achieving 97% detection efficiency which surpasses traditional threshold-based systems by 14%. This approach not only flags attacks but also explains how specific features such as packet size distribution correlate with malicious behavior, enabling targeted mitigation.

Ensemble techniques, such as Decision Trees, XGBoost, and Random Forests [23], achieve over 96% accuracy in multi-class attack classification. Their black-box nature is mitigated through post-hoc XAI tools like LIME and ELI5, which reveal critical features such as session duration, TCP flag patterns, or DNS query volumes. For IIoT environments, ensemble models paired with SHAP [24] identify unauthorized PLC access attempts by correlating anomalies with operational thresholds (e.g., sensor voltage fluctuations), ensuring compliance with industrial safety protocols.

Recurrent neural networks (RNNs), particularly LSTMs and GRUs, are widely adopted for sequential data analysis. In [25], the IRNN Framework integrates the Marine Predators Algorithm for feature selection, optimizing LSTM performance on NSL-KDD by prioritizing metrics like HTTP error rates or SYN packet counts. In [26], the SPIP Framework combines SHAP, Permutation Feature Importance (PFI), and Individual Conditional Expectation (ICE) to explain LSTM decisions, achieving 98.2% detection accuracy for zero-day attacks on UNSW-NB15. Notably, while CNNs are slightly better than DNNs in raw accuracy (e.g., 99.1% vs. 98.7% on NSL-KDD [27]), DNNs are preferred for their computational efficiency and compatibility with SHAP’s additive feature attribution.
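Of the three techniques named for SPIP, Individual Conditional Expectation (ICE) is the simplest to sketch: one feature of a single sample is swept over a grid while the others are held fixed, and the model output is recorded at each grid point. The toy scoring function and feature layout below are assumptions for illustration and are unrelated to the LSTM models in [26].

```python
def ice_curve(model, row, feature_index, grid):
    """Model output for one sample as a single feature is varied over a grid."""
    curve = []
    for value in grid:
        modified = list(row)
        modified[feature_index] = value
        curve.append(model(modified))
    return curve

def toy_model(x):
    # Hypothetical attack score that saturates as the SYN packet count grows
    return min(1.0, x[0] / 200.0)

sample = [50.0, 3.0]  # assumed layout: (syn_packet_count, http_error_rate)
grid = [0.0, 100.0, 200.0, 400.0]
curve = ice_curve(toy_model, sample, 0, grid)
print(curve)
```

Plotting one such curve per sample shows whether a feature affects all samples in the same way or only a subset of them.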

Adversarial attacks, including noise injection and gradient-based perturbations, exploit model vulnerabilities in IoT systems. The TXAI-ADV Framework [28] evaluates ML/DL models (KNN, SVM, DNNs) under adversarial conditions, using SHAP to identify manipulated features such as distorted packet inter-arrival times. Countermeasures include robust feature engineering like filtering high-entropy payloads and adversarial training, which improves F1-scores by 12–15% on the CIC IoT 2023 dataset.
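A gradient-based perturbation can be illustrated on a small logistic-regression detector. The sketch below applies a single sign-gradient step (in the style of the fast gradient sign method) to increase the log-loss for the true label. The weights, input, and step size are hypothetical, and the models evaluated in [28] are considerably more complex.

```python
import math

def predict(weights, bias, x):
    """Probability of the 'attack' class under logistic regression."""
    z = sum(w * v for w, v in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def sign(value):
    return (value > 0) - (value < 0)

def sign_gradient_step(weights, bias, x, label, epsilon):
    """Perturb x by epsilon along the sign of the log-loss gradient."""
    p = predict(weights, bias, x)
    # For logistic regression, d(log-loss)/dx_i = (p - label) * w_i
    gradient = [(p - label) * w for w in weights]
    return [v + epsilon * sign(g) for v, g in zip(x, gradient)]

weights, bias = [2.0, -1.0], -1.0  # hypothetical trained detector
x = [1.0, 0.5]                     # sample correctly flagged as an attack
x_adv = sign_gradient_step(weights, bias, x, label=1, epsilon=0.5)

p_clean = predict(weights, bias, x)
p_adv = predict(weights, bias, x_adv)
print(x_adv, p_clean, p_adv)
```

Comparing feature attributions for `x` and `x_adv` is one way an analyst could spot which inputs were manipulated; adversarial training would add samples such as `x_adv`, with their true labels, back into the training set.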

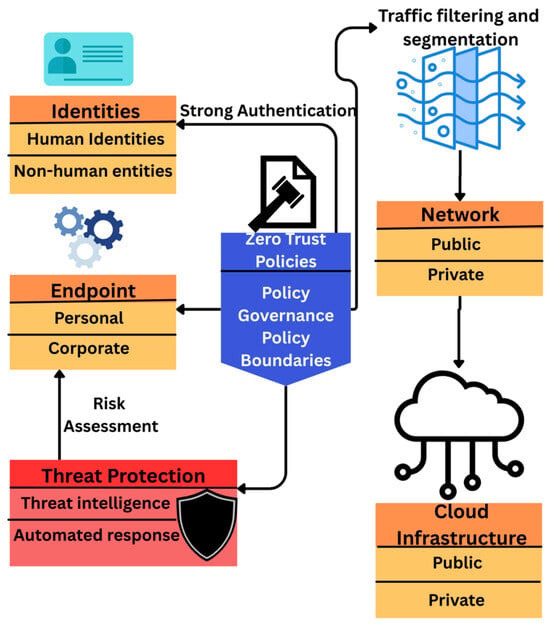

Domain-specific innovations show XAI’s versatility. In marine IoT, zero-trust frameworks combine SHAP/LIME with human-in-the-loop feedback to filter false alarms like fake distress signals in maritime environments, reducing false positives by 22% compared to conventional IDS [29]. For smart cities, blockchain-authenticated Bidirectional GRU networks [30] achieve 99.53% accuracy on CICIDS2017, with SHAP clarifying how features like SSL show validity or login attempt frequency drive detection.

In industrial settings, where operational continuity and safety are vital, tailored XAI solutions address unique cybersecurity challenges. A novel deep learning framework [31] combining Convolutional Neural Networks (CNNs) and Autoencoder-LSTM architectures detects anomalies in IIoT networks by extracting temporal-spatial features from gas sensor array (GSA) data. This hybrid model employs a two-step sliding window process: first, CNNs capture spatial correlations (e.g., sensor voltage patterns), while the Autoencoder-LSTM layer models temporal dependencies (e.g., pressure fluctuations over time). The framework achieves exceptional metrics (99.35% accuracy, 98.86% precision) on industrial datasets, surpassing standalone CNNs, LSTMs, and hybrid benchmarks. SHAP analysis reveals that sensor redundancy and command-response latency are critical features for anomaly detection, enabling plant operators to prioritize maintenance or isolate compromised nodes. In IIoT, TRUST XAI [32] uses factor analysis to derive latent variables (e.g., PLC command sequences) from raw sensor data, outperforming LIME in interpretability for WUSTL-IIoT datasets.

Figure 8 shows the areas in which zero-trust policies need to be implemented. Strong authentication policies are needed for identities and endpoint security, together with a strong policy for risk assessment of endpoints. On the network, traffic filtering and segmentation need to be put in place.
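The two-step sliding window process mentioned for the CNN and Autoencoder-LSTM framework can be sketched as below. How [31] configures the two steps is not specified here, so this is an assumed interpretation: short windows of raw samples are cut first (inputs for a spatial model), and consecutive short windows are then grouped into sequences (inputs for a temporal model). Window sizes and step values are hypothetical.

```python
def sliding_windows(items, window, step):
    """Overlapping windows of a list; trailing items that do not fill a window are dropped."""
    return [items[i:i + window] for i in range(0, len(items) - window + 1, step)]

readings = list(range(10))  # stand-in for a gas sensor time series

# Step 1: short windows of raw samples, e.g. inputs for the CNN stage
short_windows = sliding_windows(readings, window=4, step=2)

# Step 2: sequences of consecutive short windows, e.g. inputs for the LSTM stage
sequences = sliding_windows(short_windows, window=2, step=1)

print(len(short_windows), len(sequences))
print(sequences[0])
```

Overlap between windows controls the trade-off between temporal resolution and the number of samples the downstream model has to process.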

Figure 8. XAI Processing.

Modular IDS architectures have also gained traction. For example, ref. [33] proposes a six-module framework (data capturing, flow generation, preprocessing, detection, explainability, notification) tested on the CIC-IoT 2022 and IEC 60870-5-104 datasets. SHAP provides both global explanations (e.g., “HTTP errors are critical for ransomware detection”) and local insights (e.g., “This MQTT packet’s payload entropy triggered an alert”), achieving 98.9% precision across attack scenarios.
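The relationship between local and global explanations can be shown with a linear model, for which Shapley-style attributions have a closed form under the assumption of independent features: each feature contributes its weight times its deviation from the feature mean. A global importance score is then the mean absolute local attribution. The weights and data below are hypothetical and unrelated to the framework in [33].

```python
def local_attributions(weights, means, x):
    """Closed-form attribution for a linear model: w_i * (x_i - mean_i)."""
    return [w * (v - m) for w, v, m in zip(weights, x, means)]

def explain_dataset(weights, rows):
    """Return per-sample (local) attributions and their mean absolute value (global)."""
    means = [sum(col) / len(col) for col in zip(*rows)]
    local = [local_attributions(weights, means, row) for row in rows]
    global_scores = [
        sum(abs(sample[i]) for sample in local) / len(local)
        for i in range(len(weights))
    ]
    return local, global_scores

weights = [0.5, 2.0]  # hypothetical model over (http_error_count, payload_entropy)
rows = [[0.0, 1.0], [4.0, 1.0], [2.0, 4.0]]

local, global_scores = explain_dataset(weights, rows)
print(local[2])       # why the third sample scored as it did
print(global_scores)  # which feature matters most overall
```

The local vector answers "why this packet", while the global vector answers "what the detector relies on in general"; both are derived from the same per-sample attributions.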

Despite advancements, significant challenges persist. Real-time scalability remains an issue, as frameworks like SPIP [26] achieve sub-second latency for LSTM-based IDS but struggle with high-speed IIoT networks such as 10 Gbps industrial Ethernet. Explanation consistency is another concern; while SHAP demonstrates superior robustness over LIME in adversarial settings [7,18], the lack of standardized metrics for faithfulness (e.g., explanation accuracy) hinders cross-study comparisons. Human-centric design gaps also emerge, with user surveys [34] revealing a disconnect between technical XAI outputs and operator needs, underscoring the demand for context-aware visualizations such as traffic heatmaps or natural language summaries. Data imbalance further hinders detection, as rare attack classes such as APT in IoTID20 [35] remain undetected despite SMOTE oversampling [35], necessitating hybrid sampling-XAI pipelines. Regulatory compliance adds another layer of complexity, with GDPR and NIST mandates requiring auditable AI. This need is only met by blockchain-XAI hybrids at the moment [30], which provide immutable explanation logs.
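For readers unfamiliar with SMOTE, the core interpolation step can be sketched in a few lines: each synthetic sample is placed at a random point on the segment between a minority-class sample and one of its nearest minority neighbors. This is a simplified illustration with hypothetical data, not the reference implementation or the configuration used in [35].

```python
import random

def squared_distance(a, b):
    return sum((p - q) ** 2 for p, q in zip(a, b))

def smote(minority, n_new, k=2, seed=0):
    """Create n_new synthetic samples by interpolating between minority neighbors."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # k nearest minority-class neighbors of the chosen sample
        neighbors = sorted(
            (p for p in minority if p is not base),
            key=lambda p: squared_distance(base, p),
        )[:k]
        neighbor = rng.choice(neighbors)
        gap = rng.random()  # position along the segment base -> neighbor
        synthetic.append([a + gap * (b - a) for a, b in zip(base, neighbor)])
    return synthetic

rare_attack_samples = [[1.0, 1.0], [2.0, 1.0], [1.0, 2.0]]  # hypothetical
synthetic = smote(rare_attack_samples, n_new=4)
print(synthetic)
```

Because new points only fill in the region already spanned by the rare class, oversampling cannot add information about attack behavior that the original samples do not contain, which is consistent with the limitation noted above.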

Future research must address these gaps through unified frameworks, resilience against adversarial attacks, and edge-cloud collaboration. Cross-domain frameworks like XAI-IoT [36] could evolve to support heterogeneous IoT protocols like ZigBee, LoRaWAN and edge-device constraints, while explanation-aware defense mechanisms such as perturbation-resistant SHAP variants may reduce model evasion attacks [28]. Frameworks like [37] demonstrate the value of combining global and local explanations like RuleFit’s “IF-THEN” rules paired with SHAP’s feature attribution to enhance analyst trust, but broader adoption requires standardized benchmarks. Federated XAI architectures could distribute workloads between edge devices (local LIME) and cloud servers (global SHAP), balancing speed and depth. Community-driven benchmarks like XAI-IoT 2.0 should standardize evaluation criteria such as explanation latency, operator trust, and regulatory compliance to foster reproducibility.

3.2. XAI in Internet of Medical Things (IoMT)

The integration of IoMT into healthcare has revolutionized patient monitoring and data-driven diagnostics. However, the sensitive nature of medical data demands robust security and interpretability. To address these challenges, recent research has focused on combining blockchain and XAI for secure, transparent IoMT ecosystems. For instance, the XAI-Driven Intelligent IoMT Secure Data Management Framework [38] leverages blockchain to ensure tamper-proof data transfer while employing XAI to demystify decision-making processes, increasing trust among healthcare providers.

Beyond data management, threat detection in IoMT networks is critical. The XSRU-IoMT model [39] introduces bidirectional Simple Recurrent Units (SRUs) with skip connections to identify malicious activities in real time. Evaluated on the ToN-IoT dataset, XSRU-IoMT outperforms traditional RNN variants like GRU and LSTM, achieving higher detection accuracy while maintaining computational efficiency—a vital feature for resource-constrained medical devices [39].

Despite its benefits, XAI introduces unintended vulnerabilities. Ref. [40] reveals how adversarial tools like DiCE and ZOO exploit XAI explanations to manipulate Random Forest (RF)-based intrusion detection systems. By generating counterfactuals and adversarial samples, attackers disguised Okiru malware attacks in the Aposemat IoT-23 [40] dataset, compromising RF models. This paradox underscores the need for robust XAI frameworks that balance transparency with resistance to exploitation.

Broadly, XAI’s fusion with IoT extends beyond domain-specific applications. A unified XAI-IoT framework [41] transforms sensor data into Gramian Angular Field images, which are processed via VGG16 for tasks like prediction and intrusion detection. Optimized with genetic algorithms, this approach highlights XAI’s versatility in interpreting complex IoT data patterns while maintaining model efficiency.
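The Gramian Angular Field encoding mentioned above can be illustrated with a minimal sketch. It assumes the summation variant (GASF), in which a series is rescaled to [-1, 1], each value is mapped to an angle φ = arccos(x), and the image entry is cos(φᵢ + φⱼ). The source does not state which variant [41] uses, and the function name and sample readings are hypothetical.

```python
import math

def gramian_angular_field(series):
    """Return the Gramian Angular Summation Field of a 1-D series as a list of rows."""
    lo, hi = min(series), max(series)
    if hi == lo:
        raise ValueError("series must contain at least two distinct values")
    # Rescale to [-1, 1] so that every value is a valid cosine.
    scaled = [(2 * x - hi - lo) / (hi - lo) for x in series]
    # Clamp against floating-point overshoot before taking arccos.
    phi = [math.acos(max(-1.0, min(1.0, s))) for s in scaled]
    # Entry (i, j) encodes the temporal correlation cos(phi_i + phi_j).
    return [[math.cos(a + b) for b in phi] for a in phi]

# Hypothetical temperature samples from a single sensor (illustrative only).
readings = [20.1, 20.4, 21.0, 22.3, 21.7]
gaf = gramian_angular_field(readings)
```

The resulting square matrix is symmetric and can be treated as a single-channel image; feeding it to a CNN such as VGG16 would additionally require resizing and channel replication, which this sketch omits.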

While not directly IoT-centric, the jailbreaking of LLMs such as GPT and BLOOM through prompt manipulation raises concerns for AI-driven IoT security. As LLMs continue to support IoT decision-making, their susceptibility to generating harmful outputs via jailbreaks poses systemic risks [42]. This emerging threat calls for rigorous detection mechanisms and underscores the broader cybersecurity implications of explainability in AI systems.

4. XAI Applications with Machine Learning

The integration of XAI with ML has become increasingly essential in the development of intelligent Internet of Things (IoT) systems. By providing transparency and interpretability, XAI enhances trust, accountability, and informed decision-making across diverse IoT applications. As these systems grow in complexity and autonomy, explainability ensures that human operators can understand, validate, and effectively manage AI-driven outcomes. This section presents key applications of XAI-enabled ML within the IoT landscape, highlighting its role in advancing transparency, reliability, and ethical system design. Figure 9 shows the areas in which XAI is being applied: in healthcare cybersecurity, it helps detect threats and protect sensitive patient information; in industrial IoT, it reduces false alarms and increases engineer confidence; and in agriculture, it supports tasks such as automating irrigation.

Figure 9. XAI Applications.

In healthcare, IoT systems increasingly monitor behavioral patterns to infer mental health states. Ref. [43] proposes an MLP-based framework that analyzes kitchen sensor data like appliance usage and meal preparation frequency to detect depression. The XAI layer generates feature importance scores, enabling medical professionals to validate predictions against patient histories. For instance, irregular meal times and reduced appliance activity are flagged as depression indicators. This work highlights XAI’s role in transforming passive surveillance into proactive, ethical healthcare tools by prioritizing transparency and user agency.

4.1. Emergency Response and Public Safety

High-stakes scenarios like disaster response demand both speed and accountability. Ref. [44] combines CNNs for visual analysis of drone-captured disaster imagery with signal-processing models to detect SOS signals (e.g., irregular heartbeats). The XAI component employs saliency maps to highlight critical image regions (e.g., collapsed structures) and attention mechanisms to classify SOS signals. By exposing decision logic, the system reduces automation bias among responders. However, real-time explanation delivery remains a challenge, prompting exploration of federated learning for privacy-preserving distributed networks.

4.2. Smart Home Automation and User-Centric Design

Smart homes face adoption barriers due to opaque automation. Ref. [45] introduces an agent-based IoT architecture where devices act as autonomous agents governed by reinforcement learning. These agents generate natural-language explanations (e.g., “Thermostat adjusted based on historical occupancy”) and adapt explanation granularity to context—concise alerts during emergencies versus detailed logs for routine operations. This approach bridges the trust gap by aligning system behavior with user expectations, though balancing simplicity and technical accuracy requires further research.

4.3. Industrial IoT and Predictive Maintenance

Predictive maintenance (PdM) in industrial IoT benefits from XAI’s ability to clarify predictions. Ref. [46] proposes Balanced K-Star, a method addressing class imbalance in PdM datasets. It achieves 98.75% accuracy in fault prediction and uses logical decision trees to trace failures to variables like excessive torque. Complementing this, ref. [47] introduces GSX, a framework combining CNN-LSTM models with Gumbel-Sigmoid-based feature selection to explain sensor-derived fault predictions (e.g., vibration anomalies). Both studies emphasize how XAI reduces false alarms and enhances engineers’ confidence in maintenance decisions.

4.4. Sensor Reliability and Edge Computing

Low-cost IoT sensors are prone to faults in harsh environments. Ref. [48] presents XAI-LCS, an edge-compatible method using XGBoost and SHAP values to diagnose sensor issues like bias or drift. For example, humidity-induced temperature sensor errors are flagged with reports like “Sensor #3 deviation: 2.5 °C (SHAP score: 0.92)”. While effective in remote applications such as wildfire detection, challenges persist in distinguishing sensor faults from environmental anomalies, necessitating advanced feature selection algorithms.

4.5. Agricultural IoT and Sustainability

In agriculture, IoT systems must justify automation to gain farmer trust. Ref. [49] details “Vital,” an XAI-driven platform integrating soil moisture sensors and weather APIs to automate irrigation. Decision trees link actions to environmental thresholds (e.g., activating sprinklers when soil moisture falls below 25%), while SMS alerts explain decisions like “Irrigation triggered due to forecasted drought.” This transparency bridges data-driven automation with traditional farming intuition, promoting sustainable practices in climate-vulnerable regions.
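The threshold-based decision logic described above can be sketched as a rule that returns both an action and its justification. This is an illustrative sketch only, not the implementation of the platform in [49]: the 25% threshold and the drought message come from the text, while the function name, the `Decision` type, and the rule ordering are assumptions.

```python
from dataclasses import dataclass

MOISTURE_THRESHOLD = 25.0  # percent; the example threshold quoted in the text

@dataclass
class Decision:
    irrigate: bool
    explanation: str

def decide_irrigation(soil_moisture_pct, drought_forecast):
    """Return an irrigation decision together with a human-readable reason."""
    # Rule 1 (assumed to take priority): soil is already too dry.
    if soil_moisture_pct < MOISTURE_THRESHOLD:
        return Decision(
            True,
            f"Irrigation triggered: soil moisture {soil_moisture_pct:.1f}% "
            f"is below {MOISTURE_THRESHOLD:.0f}%.",
        )
    # Rule 2: soil is adequate now, but a drought is forecast.
    if drought_forecast:
        return Decision(True, "Irrigation triggered due to forecasted drought.")
    # Default: no rule fired, so explain why nothing happened.
    return Decision(
        False,
        f"No irrigation: soil moisture {soil_moisture_pct:.1f}% "
        f"is at or above {MOISTURE_THRESHOLD:.0f}%.",
    )

dry = decide_irrigation(18.0, drought_forecast=False)
forecast = decide_irrigation(40.0, drought_forecast=True)
idle = decide_irrigation(40.0, drought_forecast=False)
```

Because every branch returns its own explanation, the message sent to the farmer always corresponds to the rule that actually fired.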

4.6. IoT Security in Emerging 6G Networks

Ref. [50] addresses the critical challenge of securing IoT systems in next-generation 6G networks by leveraging XAI methodologies. The authors propose a framework combining XGBoost with SHAP and LIME to enhance both security and transparency. Tested on the CICIoT 2023 dataset, the model achieved 95.59% accuracy in detecting network intrusions, with recursive feature elimination (RFE) further improving performance to 97.02%. The XAI components (SHAP and LIME) provide granular insights into feature contributions, such as identifying abnormal packet sizes or irregular traffic patterns as key indicators of malicious activity. This work underscores XAI’s dual role in 6G-enabled IoT: improving security model efficacy while demystifying decisions for network administrators. By translating complex threat detection logic into interpretable outputs, the framework fosters trust in automated security systems, a necessity as IoT expands into ultra-low-latency 6G environments.

In summary, the integration of XAI within IoT cybersecurity represents a critical advancement toward transparent, trustworthy, and efficient threat detection. By revealing the reasoning behind AI-driven intrusion and anomaly detection, XAI enhances human understanding, accelerates response times, and reduces false alarms in complex networked environments. Despite substantial progress, challenges persist in achieving real-time scalability, explanation consistency, and resilience against adversarial manipulation. Future research must prioritize standardized evaluation metrics, lightweight explainability methods for edge devices, and context-aware visualization tools that bridge the gap between technical outputs and operator decision-making. Ultimately, XAI serves as the foundation for secure, interpretable, and human-centric AI in the evolving IoT landscape.

5. Discussion

5.1. Bridging Interpretability with Real-World Utility

Explainable AI (XAI) has proven instrumental in transforming opaque AI models into tools that support actionable decision-making across IoT domains.

In critical applications such as healthcare, industrial automation, and cybersecurity, XAI serves not only as a post-hoc tool for interpretability but as an operational enabler.

For instance, in the Internet of Medical Things (IoMT), monitoring frameworks using multilayer perceptrons and SHAP can help clinicians trace mental health predictions to behavioral indicators such as diminished appliance usage or irregular sleeping patterns.

Industrial IoT (IIoT) systems benefit from XAI-enhanced predictive maintenance pipelines, where explainability supports operational efficiency by identifying root causes of equipment failure. Deep learning models—such as CNN-LSTM hybrids—can be paired with SHAP to map fault predictions to high-vibration zones or thermal anomalies in sensor arrays, enabling targeted interventions and minimizing costly downtime.

In cybersecurity, XAI reduces operator burden in Security Operations Centers (SOCs) by clarifying the rationale behind threat classifications. For example, decision-tree-based IDS frameworks equipped with LIME can highlight payload entropy or packet timing anomalies in DDoS traffic.

Beyond interpretation, explainability plays a key role in triage prioritization, supporting incident response teams in identifying the most critical alerts based on interpretable metrics such as confidence scores or protocol irregularities.

Moreover, blockchain-integrated XAI frameworks further support forensic traceability and regulatory auditability by maintaining immutable logs of explanations.
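The idea of an immutable explanation log can be illustrated with a simple hash chain, in which each entry stores the SHA-256 digest of its own record combined with the previous entry's digest. This is a minimal sketch under stated assumptions: it is tamper-evident rather than a full blockchain (there is no consensus or distribution), and the record fields and function names are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder predecessor for the first entry

def _digest(record, prev_hash):
    """Hash a record together with the hash of the entry before it."""
    payload = json.dumps(record, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def append_entry(chain, record):
    """Append an explanation record, linking it to the current chain tip."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    chain.append(
        {"record": record, "prev_hash": prev_hash, "hash": _digest(record, prev_hash)}
    )

def verify_chain(chain):
    """Recompute every link; any edited record or broken link returns False."""
    prev_hash = GENESIS_HASH
    for entry in chain:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(entry["record"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True

# Hypothetical explanation records for two alerts (illustrative values).
log = []
append_entry(log, {"alert": "ddos", "top_feature": "packet_rate", "attribution": 0.61})
append_entry(log, {"alert": "benign", "top_feature": "payload_entropy", "attribution": 0.12})
intact = verify_chain(log)
```

Altering any stored attribution after the fact changes the recomputed digest, so `verify_chain` would return `False`; this is the property an auditor relies on when reviewing past explanations.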

5.2. Core Challenges: Technical, Operational, and Sociotechnical Constraints

Despite progress, the integration of XAI into IoT systems presents multi-layered challenges:

- Resource Constraints at the Edge: Many IoT devices operate under severe constraints in memory, computation, and energy availability. Traditional XAI methods like SHAP and LIME are computationally expensive, often necessitating centralized inference.

- Adversarial Exploitation of Transparency: Explanations can be reverse-engineered by adversaries to manipulate outputs or infer sensitive training data. Membership inference, model inversion, and explanation-guided evasion attacks are increasingly documented. For instance, adversarial inputs crafted to evade IDS models have leveraged explanation outputs to modify payload entropy or mimic benign protocol structures.

- Superficial Compliance with Regulatory Frameworks: Current explainability tools often satisfy compliance checkboxes without delivering truly actionable insights. A feature importance chart stating “network traffic volume” as a cause for alert lacks depth unless it contextualizes the decision using features such as protocol type, connection duration, and correlation with threat intelligence.

- Human–AI Mismatch in Explanation Design: Explanation design often neglects user roles. Security analysts may need packet-level visibility, while non-expert stakeholders require natural language justifications.
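The computational cost noted in the first bullet can be made concrete with an exact Shapley value computation, the quantity that SHAP approximates. The sketch below enumerates every coalition of the remaining features for each feature, so the number of model evaluations grows exponentially with the feature count. The linear scoring function, its weights, and the instance values are hypothetical and chosen only so that the result is easy to check by hand.

```python
from itertools import combinations
from math import factorial

def shapley_values(value_fn, n_features):
    """Exact Shapley values; value_fn maps a frozenset of feature indices to a score."""
    phi = [0.0] * n_features
    for i in range(n_features):
        others = [j for j in range(n_features) if j != i]
        for size in range(len(others) + 1):
            # Standard Shapley weight for coalitions of this size.
            weight = (
                factorial(size) * factorial(n_features - size - 1) / factorial(n_features)
            )
            for subset in combinations(others, size):
                s = frozenset(subset)
                # Marginal contribution of feature i when added to coalition s.
                phi[i] += weight * (value_fn(s | {i}) - value_fn(s))
    return phi

# Hypothetical linear score with a zero baseline: absent features contribute nothing.
WEIGHTS = [0.5, 0.3, 0.2]
INSTANCE = [2.0, 1.0, 4.0]

def coalition_score(subset):
    return sum(WEIGHTS[j] * INSTANCE[j] for j in subset)

phi = shapley_values(coalition_score, 3)
```

For this additive toy model each attribution equals weight times value, and the attributions sum to the full score. With n features the loop evaluates n · 2^(n-1) marginal contributions, which is why practical explainers rely on sampling or model-specific shortcuts and why exact computation is rarely feasible on constrained edge hardware.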

5.3. Toward Scalable, Secure, and Human-Centric Explainability

Addressing these challenges requires a multidimensional research and development agenda:

- Federated and Edge-Aware Explainability: By decentralizing model training and explanation generation, federated learning enables local explanations (e.g., LIME) for immediate alerts, while cloud-based SHAP provides richer global insight.

- Adversarially-Robust XAI Pipelines: Explanation aggregation, adversarial training, and robust attribution masking can shield explanation methods from exploitation.

- Context-Aware, Role-Specific Interface Design: Explanation outputs must adapt to urgency and expertise level. In smart homes, natural-language explanations may suffice; in factories, layered visualizations may be needed. Multimodal interfaces—combining text, graphs, and heatmaps—can help bridge this divide.

- Standardized Benchmarks and Auditable Metrics: There is a need for evaluation metrics such as faithfulness, latency, and stability, as well as real-world benchmarks like CICIDS2017 in [30] or NSL-KDD in [25,26,32].
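Two of the metrics named in the last bullet can be sketched in a few lines. The definitions below are one possible formulation among several used in the literature, not a standard: faithfulness is measured as the drop in model output after the most-attributed features are replaced by a baseline, and stability as the L1 distance between attributions for an input and a slightly perturbed copy. The linear model and its attributions are hypothetical.

```python
def deletion_faithfulness(model, x, attributions, k, baseline=0.0):
    """Drop in model output after masking the k most-attributed features."""
    ranked = sorted(range(len(x)), key=lambda i: abs(attributions[i]), reverse=True)
    masked = list(x)
    for i in ranked[:k]:
        masked[i] = baseline
    # A faithful explanation should produce a large drop for small k.
    return model(x) - model(masked)

def stability(explain, x, x_perturbed):
    """L1 distance between attributions of two nearby inputs (lower is steadier)."""
    a, b = explain(x), explain(x_perturbed)
    return sum(abs(p - q) for p, q in zip(a, b))

# Hypothetical linear scorer with input-times-weight attributions.
W = [0.5, 0.3, 0.2]

def model(x):
    return sum(w * v for w, v in zip(W, x))

def explain(x):
    return [w * v for w, v in zip(W, x)]

x = [2.0, 1.0, 4.0]
drop_top1 = deletion_faithfulness(model, x, explain(x), k=1)
drift = stability(explain, x, [2.0, 1.1, 4.0])
```

Explanation latency, the third metric, could be obtained by timing the call to `explain` with `time.perf_counter`; reporting all three on a shared dataset would make results from different studies directly comparable.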

5.4. Ethical and Governance Considerations

Explainability is essential for transparency, but alone it does not guarantee ethical or responsible AI. Poorly implemented XAI can obscure bias, erode accountability, or give users a false sense of security. This is particularly concerning in high-stakes domains like healthcare, finance, and security, where automated decisions directly impact human well-being.

- Bias Auditing and Fairness: XAI systems must surface disparities in model behavior across demographic groups. For example, IoMT applications trained on skewed data may underperform for underrepresented patients. Explanations should reveal such imbalances and guide model refinement toward fairness.

- Traceability and Accountability: Explanations should link decisions to model states, inputs, and environmental context to enable meaningful auditing. Blockchain-based logging frameworks can preserve immutable records of decisions and their justifications, supporting post hoc investigation.

- Participatory Design: Stakeholder involvement through co-design, interviews, and usability studies ensures explanations are relevant and understandable. Engaging end-users, domain experts, and policymakers improves the likelihood of trust and adoption.

- Legal and Regulatory Alignment: XAI must meet requirements of frameworks like the EU AI Act and GDPR, which demand “meaningful information” about algorithmic logic. This calls for both technical clarity and accessible formats, including natural-language summaries and just-in-time user interfaces.

- Mitigating Misuse: Explanations may be selectively presented or misunderstood, especially if oversimplified. Developers must communicate limitations and uncertainties clearly, avoiding cherry-picking or deceptive presentation.

- Sociotechnical Responsibility: XAI should be integrated into broader systems of documentation, oversight, and continuous feedback. In critical contexts, explanations must address not just “what” the system did, but “why”, and under what conditions, limitations, and assumptions.

Ultimately, explainability must be a foundational design principle, embedded throughout the development lifecycle. Ethical XAI is not just about opening black boxes; it is about ensuring that what is revealed is meaningful, fair, and usable by the people affected.

The integration of Explainable AI into IoT systems presents a promising yet complex frontier. Across healthcare, industrial automation, and cybersecurity, XAI enhances interpretability, operational transparency, and trust—enabling domain experts to understand, validate, and act on model outputs. However, the road to trustworthy automation is hindered by challenges such as resource-constrained edge deployments, adversarial exploitation of explanations, and the superficial compliance of many explainability implementations.

This paper advocates for the development of scalable, secure, and human-centric XAI frameworks that balance technical feasibility with ethical integrity. Federated architectures and lightweight explainers offer potential solutions for edge environments, while adversarially-robust pipelines and multimodal interfaces can improve resilience and usability. Standardized benchmarks and stakeholder-involved design processes are essential for ensuring meaningful transparency across user types and domains.

Finally, ethical considerations must guide every phase of XAI development. From bias auditing and regulatory alignment to participatory design and misuse prevention, explainability must be framed as a core principle of responsible AI—not merely a technical accessory. By embedding XAI within broader sociotechnical systems of accountability, IoT ecosystems can evolve into transparent, equitable, and resilient infrastructures for human-centered decision support.

6. Conclusions

In conclusion, this review has synthesized recent advancements in Explainable AI (XAI) for IoT systems, underscoring its critical role in bridging the gap between complex AI decision-making and the need for transparency in sectors like healthcare, cybersecurity, and smart infrastructure. We have discussed a range of XAI methodologies and their applications, highlighting both their transformative potential and the significant challenges that remain—particularly concerning computational constraints, data diversity, and security vulnerabilities. As IoT ecosystems continue to evolve and integrate deeper into the fabric of daily life, the demand for interpretable, trustworthy, and ethically sound AI will only intensify.

Future progress hinges on developing resource-efficient XAI techniques for the edge, creating robust standards against adversarial attacks, and fostering a human-centric approach to design. By continuing to address these challenges, researchers and practitioners can ensure that the intelligent systems of tomorrow are not only powerful but also accountable, equitable, and ultimately, worthy of our trust.

Author Contributions

Conceptualization, J.M. and J.G.; methodology, J.M. and W.D.; validation, J.M., J.G. and W.D.; formal analysis, J.M. and J.G.; investigation, J.M., J.G. and W.D.; resources, Y.L., Q.W. and J.W.; data curation, J.M. and W.D.; writing—original draft preparation, J.M., J.G. and W.D.; writing—review and editing, J.M., J.G., W.D., Y.L., Q.W. and J.W.; visualization, J.G. and W.D.; supervision, Y.L., Q.W. and J.W.; project administration, Y.L. and Q.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Table A1. Datasets and Performance Benchmarking.

| Dataset | Scope | Key Contributions | Accuracy/XAI Impact |

|---|---|---|---|

| NSL-KDD | General intrusion detection | Benchmark for LSTM/GRU models [25,26]; SHAP identifies SYN flood patterns; evaluates TRUST XAI model [32] | Over 95% TPR/TNR; 18% faster than DL-IDS; TRUST XAI model reaches an average accuracy of 98% |

| CICIDS2017 | Smart city attacks | Validates blockchain-GRU hybrid [30]; SHAP highlights SSL/TLS anomalies | 99.53% accuracy; 14% lower FPR than SOTA |

| N-BaIoT | Botnet detection | XGBoost with SMOTE achieves 100% binary accuracy [35]; SHAP exposes IoT device jitter; Used to test proposed model and compare to other algorithms [41] | 99% precision; 20% faster inference than CNN; Shows that the proposed method outperforms other algorithms such as RNN-LF |

| GSA-IIoT | Industrial sensor anomalies | Validates CNN-Autoencoder-LSTM [31]; SHAP explains sensor redundancy | 99.35% accuracy; 98.86% precision |

| WUSTL-IIoT | IIoT attacks | TRUST XAI [32] identifies PLC command spoofing via latent variables | N/A (qualitative interpretability focus) |

| ToN IoT | Multi-class attacks | Random Forest/AdaBoost [51] classify 17 attack types; LIME explains DNS tunneling; evaluate efficiency of framework and train IDS [24]; Train framework [26]; Evaluate XSRU-IoMT [39] | >99% accuracy; 12% improvement over SVM; systems have above 99% accuracy; higher precision; shows that the model is more robust than other detection models such as the GRU model |

| BoT-IoT | Botnet analysis | Sequential backward selection [52]; SHAP reduces feature space by 40% | 99% precision; 30% lower computational overhead |

| UNSW-NB15 | Network attacks | Train framework [26]; evaluate TRUST XAI model [32] | Higher recall and accuracy; TRUST XAI model reaches an average accuracy of 98% |

| CICIoT2023 | IoT attacks | Used to create a novel dataset to test TXAI-ADV [28]; Test model [29]; train proposed model [50] | 96% accuracy rate; 99% accuracy; 95.59% accuracy |

| Traffic Flow Dataset | Test anomaly detection algorithms | Tests model effectiveness [20] | 96.28% recall metric |

| USB-IDS | Network intrusion detection | Test model effectiveness [21,22] | 84% accuracy with benign data and 100% accuracy with attack data; 98% detection accuracy |

| 2023 Edge-IIoTset | IoT attacks | Tests model [29] | 92% accuracy |

| GSP Dataset | Anomaly detection | tests proposed model [31] | 99.35% accuracy |

| CIC IoT Dataset 2022 | IoT attacks | evaluate the proposed IDS [33] | Shows that the IDS can detect attacks effectively |

| IEC 60870-5-104 Intrusion Dataset | Cyberattacks | evaluate the proposed IDS [33] | Shows that the IDS can detect attacks effectively |

| IoTID20 Dataset | IoT attacks | train model [35] | 85-100% accuracy depending on the type of attack |

| Aposemat IoT-23 Dataset (IoT-23 Dataset) | IoT attacks | Okiru malware and samples from the dataset used to train the model [40] | Shows that detection models can be fooled with counterfactual explanations |

| IPFlow | IoT Network Devices | Used to test proposed method and compare to other algorithms [41] | Shows that the proposed method outperforms other algorithms such as RNN-LF |

| PTB-XL Dataset | Electrocardiogram data | evaluate the two proposed models [44] | 96.21% accuracy on 1D CNN and 95.53% accuracy on GoogLeNet |

| AI4I2020 Dataset | Predictive maintenance | Evaluate balanced K-Star Method [46] | Shows that the balanced K-Star method has 98.75% accuracy |

| Electrical fault detection dataset | Fault detection | Tested with various models such as GRU and is then compared to the proposed model (GSX) [47] | Shows that the GSX model outperforms other models |

| Invisible Systems LTD dataset | Fault prediction | Tested with various models such as GRU and is then compared to the proposed model (GSX) [47] | Shows that the GSX model outperforms other models |

References

- Jagatheesaperumal, S.K.; Pham, Q.V.; Ruby, R.; Yang, Z.; Xu, C.; Zhang, Z. Explainable AI over the Internet of Things (IoT): Overview, State-of-the-Art and Future Directions. IEEE Open J. Commun. Soc. 2022, 3, 2106–2136.

- Chung, N.C.; Chung, H.; Lee, H.; Brocki, L.; Chung, H.; Dyer, G. False Sense of Security in Explainable Artificial Intelligence (XAI). arXiv 2024, arXiv:2405.03820.

- Quincozes, V.E.; Quincozes, S.E.; Kazienko, J.F.; Gama, S.; Cheikhrouhou, O.; Koubaa, A. A survey on IoT application layer protocols, security challenges, and the role of explainable AI in IoT (XAIoT). Int. J. Inf. Secur. 2024, 23, 1975–2002.

- Kök, İ.; Okay, F.Y.; Muyanlı, Ö.; Özdemir, S. Explainable Artificial Intelligence (XAI) for Internet of Things: A Survey. IEEE Internet Things J. 2023, 10, 14764–14779.

- Eren, E.; Okay, F.Y.; Özdemir, S. Unveiling anomalies: A survey on XAI-based anomaly detection for IoT. Turk. J. Electr. Eng. Comput. Sci. 2024, 32, 358–381.

- Ali, S.; Abuhmed, T.; El-Sappagh, S.; Muhammad, K.; Alonso-Moral, J.M.; Confalonieri, R.; Guidotti, R.; Del Ser, J.; Díaz-Rodríguez, N.; Herrera, F. Explainable Artificial Intelligence (XAI): What we know and what is left to attain Trustworthy Artificial Intelligence. Inf. Fusion 2023, 99, 101805.

- Spartalis, C.N.; Semertzidis, T.; Daras, P. Balancing XAI with Privacy and Security Considerations. In Proceedings of the Computer Security. ESORICS 2023 International Workshops, The Hague, The Netherlands, 25–29 September 2023; Springer: Cham, Switzerland, 2024.

- Masud, M.T.; Keshk, M.; Moustafa, N.; Linkov, I.; Emge, D.K. Explainable Artificial Intelligence for Resilient Security Applications in the Internet of Things. IEEE Open J. Commun. Soc. 2024, 6, 2877–2906.

- Baniecki, H.; Biecek, P. Adversarial attacks and defenses in explainable artificial intelligence: A survey. Inf. Fusion 2024, 107, 102303.

- Senevirathna, T.; La, V.H.; Marchal, S.; Siniarski, B.; Liyanage, M.; Wang, S. A Survey on XAI for 5G and Beyond Security: Technical Aspects, Challenges and Research Directions. IEEE Commun. Surv. Tutor. 2024, 27, 941–973.

- Sahu, S.K.; Mazumdar, K. Exploring security threats and solutions Techniques for Internet of Things (IoT): From vulnerabilities to vigilance. Front. Artif. Intell. 2024, 7, 1397480.

- Bharati, S.; Podder, P. Machine and Deep Learning for IoT Security and Privacy: Applications, Challenges, and Future Directions. Secur. Commun. Netw. 2022, 2022, 8951961.

- Hussain, F.; Hussain, R.; Hossain, E. Explainable Artificial Intelligence (XAI): An Engineering Perspective. arXiv 2021, arXiv:2101.03613.

- Saeed, W.; Omlin, C. Explainable AI (XAI): A systematic meta-survey of current challenges and future opportunities. Knowl.-Based Syst. 2023, 263, 110273.

- Barredo Arrieta, A.; Díaz-Rodríguez, N.; Del Ser, J.; Bennetot, A.; Tabik, S.; Barbado, A.; Garcia, S.; Gil-Lopez, S.; Molina, D.; Benjamins, R.; et al. Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion 2020, 58, 82–115.

- Moustafa, N.; Koroniotis, N.; Keshk, M.; Zomaya, A.Y.; Tari, Z. Explainable Intrusion Detection for Cyber Defences in the Internet of Things: Opportunities and Solutions. IEEE Commun. Surv. Tutor. 2023, 25, 1775–1807.

- Capuano, N.; Fenza, G.; Loia, V.; Stanzione, C. Explainable Artificial Intelligence in CyberSecurity: A Survey. IEEE Access 2022, 10, 93575–93600.

- Zhang, Z.; Hamadi, H.A.; Damiani, E.; Yeun, C.Y.; Taher, F. Explainable Artificial Intelligence Applications in Cyber Security: State-of-the-Art in Research. IEEE Access 2022, 10, 93104–93139.

- Charmet, F.; Tanuwidjaja, H.C.; Ayoubi, S.; Gimenez, P.F.; Han, Y.; Jmila, H.; Blanc, G.; Takahashi, T.; Zhang, Z. Explainable artificial intelligence for cybersecurity: A literature survey. Ann. Telecommun. 2022, 77, 789–812.

- Saghir, A.; Beniwal, H.; Tran, K.; Raza, A.; Koehl, L.; Zeng, X.; Tran, K. Explainable Transformer-Based Anomaly Detection for Internet of Things Security. In Proceedings of the Seventh International Conference on Safety and Security with IoT, Bratislava, Slovakia, 24–26 October 2023.

- Kalutharage, C.S.; Liu, X.; Chrysoulas, C. Explainable AI and Deep Autoencoders Based Security Framework for IoT Network Attack Certainty (Extended Abstract). In Proceedings of the Attacks and Defenses for the Internet-of-Things, Copenhagen, Denmark, 30 September 2022. [Google Scholar]

- Kalutharage, C.S.; Liu, X.; Chrysoulas, C.; Pitropakis, N.; Papadopoulos, P. Explainable AI-Based DDOS Attack Identification Method for IoT Networks. Computers 2023, 12, 32. [Google Scholar] [CrossRef]

- Tabassum, S.; Parvin, N.; Hossain, N.; Tasnim, A.; Rahman, R.; Hossain, M.I. IoT Network Attack Detection Using XAI and Reliability Analysis. In Proceedings of the 2022 25th International Conference on Computer and Information Technology (ICCIT), Cox’s Bazar, Bangladesh, 17–19 December 2022. [Google Scholar]

- Shtayat, M.M.; Hasan, M.K.; Sulaiman, R.; Islam, S.; Khan, A.U.R. An Explainable Ensemble Deep Learning Approach for Intrusion Detection in Industrial Internet of Things. IEEE Access 2023, 11, 115047–115061. [Google Scholar] [CrossRef]

- Djenouri, Y.; Belhadi, A.; Srivastava, G.; Lin, J.C.W.; Yazidi, A. Interpretable intrusion detection for next generation of Internet of Things. Comput. Commun. 2023, 203, 192–198. [Google Scholar] [CrossRef]

- Keshk, M.; Koroniotis, N.; Pham, N.; Moustafa, N.; Turnbull, B.; Zomaya, A.Y. An explainable deep learning-enabled intrusion detection framework in IoT networks. Inf. Sci. 2023, 639, 119000. [Google Scholar] [CrossRef]

- Sharma, B.; Sharma, L.; Lal, C.; Roy, S. Explainable artificial intelligence for intrusion detection in IoT networks: A deep learning based approach. Expert Syst. Appl. 2024, 238, 121751. [Google Scholar] [CrossRef]

- Ojo, S.; Krichen, M.; Alamro, M.A.; Mihoub, A. TXAI-ADV: Trustworthy XAI for Defending AI Models against Adversarial Attacks in Realistic CIoT. Electronics 2024, 13, 1769. [Google Scholar] [CrossRef]

- Nkoro, E.C.; Njoku, J.N.; Nwakanma, C.I.; Lee, J.M.; Kim, D.S. Zero-Trust Marine Cyberdefense for IoT-Based Communications: An Explainable Approach. Electronics 2024, 13, 276. [Google Scholar] [CrossRef]

- Kumar, R.; Javeed, D.; Aljuhani, A.; Jolfaei, A.; Kumar, P.; Islam, A.K.M.N. Blockchain-Based Authentication and Explainable AI for Securing Consumer IoT Applications. IEEE Trans. Consum. Electron. 2024, 70, 1145–1154. [Google Scholar] [CrossRef]

- Khan, I.A.; Moustafa, N.; Pi, D.; Sallam, K.M.; Zomaya, A.Y.; Li, B. A New Explainable Deep Learning Framework for Cyber Threat Discovery in Industrial IoT Networks. IEEE Internet Things J. 2022, 9, 11604–11613. [Google Scholar] [CrossRef]

- Zolanvari, M.; Yang, Z.; Khan, K.; Jain, R.; Meskin, N. TRUST XAI: Model-Agnostic Explanations for AI With a Case Study on IIoT Security. IEEE Internet Things J. 2023, 10, 2967–2978. [Google Scholar] [CrossRef]

- Siganos, M.; Radoglou-Grammatikis, P.; Kotsiuba, I.; Markakis, E.; Moscholios, I.; Goudos, S.; Sarigiannidis, P. Explainable AI-based Intrusion Detection in the Internet of Things. In Proceedings of the 18th International Conference on Availability, Reliability and Security, Benevento, Italy, 29 August–1 September 2023; Association for Computing Machinery: New York, NY, USA, 2023. [Google Scholar] [CrossRef]

- Gaspar, D.; Silva, P.; Silva, C. Explainable AI for Intrusion Detection Systems: LIME and SHAP Applicability on Multi-Layer Perceptron. IEEE Access 2024, 12, 30164–30175. [Google Scholar] [CrossRef]

- Muna, R.K.; Hossain, M.I.; Alam, M.G.R.; Hassan, M.M.; Ianni, M.; Fortino, G. Demystifying machine learning models of massive IoT attack detection with Explainable AI for sustainable and secure future smart cities. Internet Things 2023, 24, 100919. [Google Scholar] [CrossRef]

- Namrita Gummadi, A.; Napier, J.C.; Abdallah, M. XAI-IoT: An Explainable AI Framework for Enhancing Anomaly Detection in IoT Systems. IEEE Access 2024, 12, 71024–71054. [Google Scholar] [CrossRef]

- Houda, Z.A.E.; Brik, B.; Khoukhi, L. “Why Should I Trust Your IDS?”: An Explainable Deep Learning Framework for Intrusion Detection Systems in Internet of Things Networks. IEEE Open J. Commun. Soc. 2022, 3, 1164–1176. [Google Scholar] [CrossRef]

- Liu, W.; Zhao, F.; Nkenyereye, L.; Rani, S.; Li, K.; Lv, J. XAI Driven Intelligent IoMT Secure Data Management Framework. IEEE J. Biomed. Health Inform. 2024, 1–12.

- Khan, I.A.; Moustafa, N.; Razzak, I.; Tanveer, M.; Pi, D.; Pan, Y.; Ali, B.S. XSRU-IoMT: Explainable simple recurrent units for threat detection in Internet of Medical Things networks. Future Gener. Comput. Syst. 2022, 127, 181–193.

- Pawlicki, M.; Pawlicka, A.; Kozik, R.; Choraś, M. Explainability versus Security: The Unintended Consequences of xAI in Cybersecurity. In Proceedings of the 2nd ACM Workshop on Secure and Trustworthy Deep Learning Systems, Singapore, 2–20 July 2024; Association for Computing Machinery: New York, NY, USA, 2024; pp. 1–7.

- Djenouri, Y.; Belhadi, A.; Srivastava, G.; Lin, J.C.W. When explainable AI meets IoT applications for supervised learning. Clust. Comput. 2022, 26, 2313–2323.

- Rao, A.; Vashistha, S.; Naik, A.; Aditya, S.; Choudhury, M. Tricking LLMs into Disobedience: Formalizing, Analyzing, and Detecting Jailbreaks. arXiv 2024, arXiv:2305.14965.

- García-Magariño, I.; Muttukrishnan, R.; Lloret, J. Human-Centric AI for Trustworthy IoT Systems With Explainable Multilayer Perceptrons. IEEE Access 2019, 7, 125562–125574.

- Knof, H.; Bagave, P.; Boerger, M.; Tcholtchev, N.; Ding, A.Y. Exploring CNN and XAI-based Approaches for Accountable MI Detection in the Context of IoT-enabled Emergency Communication Systems. In Proceedings of the 13th International Conference on the Internet of Things, Nagoya, Japan, 7–10 November 2023; Association for Computing Machinery: New York, NY, USA, 2024; pp. 50–57.

- Dobrovolskis, A.; Kazanavičius, E.; Kižauskienė, L. Building XAI-Based Agents for IoT Systems. Appl. Sci. 2023, 13, 4040.

- Ghasemkhani, B.; Aktas, O.; Birant, D. Balanced K-Star: An Explainable Machine Learning Method for Internet-of-Things-Enabled Predictive Maintenance in Manufacturing. Machines 2023, 11, 322.

- Mansouri, T.; Vadera, S. A Deep Explainable Model for Fault Prediction Using IoT Sensors. IEEE Access 2022, 10, 66933–66942.

- Sinha, A.; Das, D. XAI-LCS: Explainable AI-Based Fault Diagnosis of Low-Cost Sensors. IEEE Sensors Lett. 2023, 7, 6009304.

- Tsakiridis, N.L.; Diamantopoulos, T.; Symeonidis, A.L.; Theocharis, J.B.; Iossifides, A.; Chatzimisios, P.; Pratos, G.; Kouvas, D. Versatile Internet of Things for Agriculture: An eXplainable AI Approach. In Proceedings of the Artificial Intelligence Applications and Innovations, Neos Marmaras, Greece, 5–7 June 2020; Maglogiannis, I., Iliadis, L., Pimenidis, E., Eds.; Springer: Cham, Switzerland, 2020; pp. 180–191.

- Kaur, N.; Gupta, L. Enhancing IoT Security in 6G Environment With Transparent AI: Leveraging XGBoost, SHAP and LIME. In Proceedings of the 2024 IEEE 10th International Conference on Network Softwarization (NetSoft), Saint Louis, MO, USA, 24–28 June 2024; pp. 180–184.

- Tasnim, A.; Hossain, N.; Tabassum, S.; Parvin, N. Classification and Explanation of Different Internet of Things (IoT) Network Attacks Using Machine Learning, Deep Learning and XAI. Bachelor’s Thesis, Brac University, Dhaka, Bangladesh, 2022.

- Kalakoti, R.; Bahsi, H.; Nõmm, S. Improving IoT Security With Explainable AI: Quantitative Evaluation of Explainability for IoT Botnet Detection. IEEE Internet Things J. 2024, 11, 18237–18254.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).