Real-Time Occluded Target Detection and Collaborative Tracking Method for UAVs

Abstract

1. Introduction

Key Points of the Article

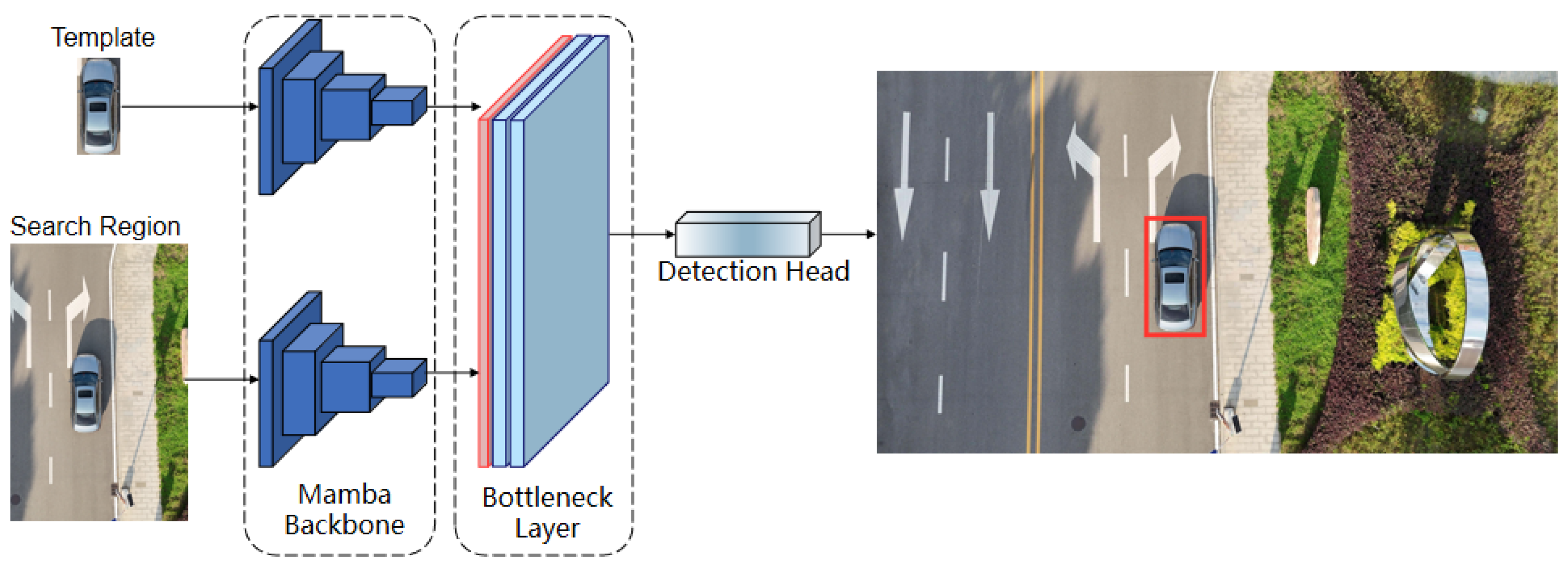

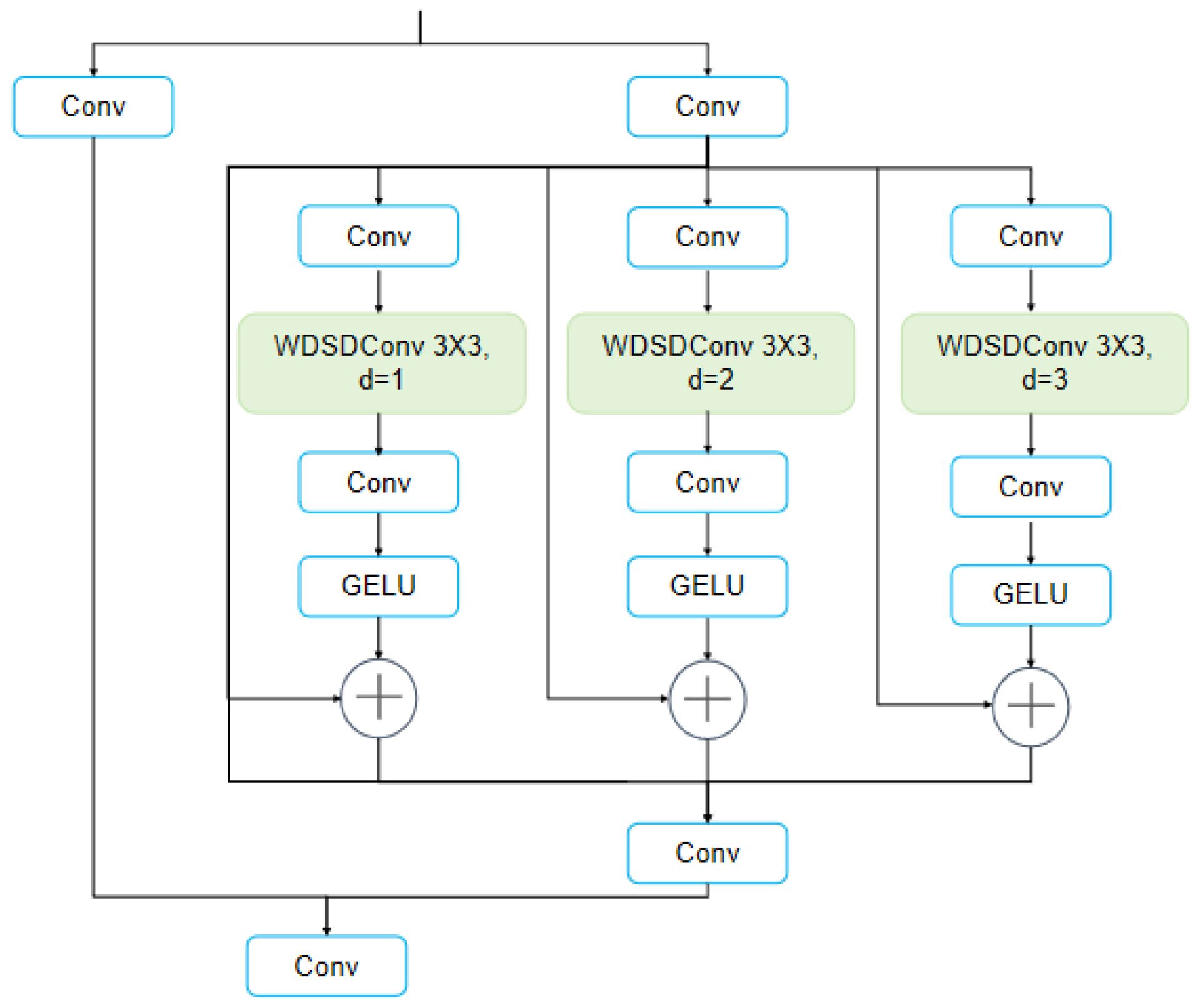

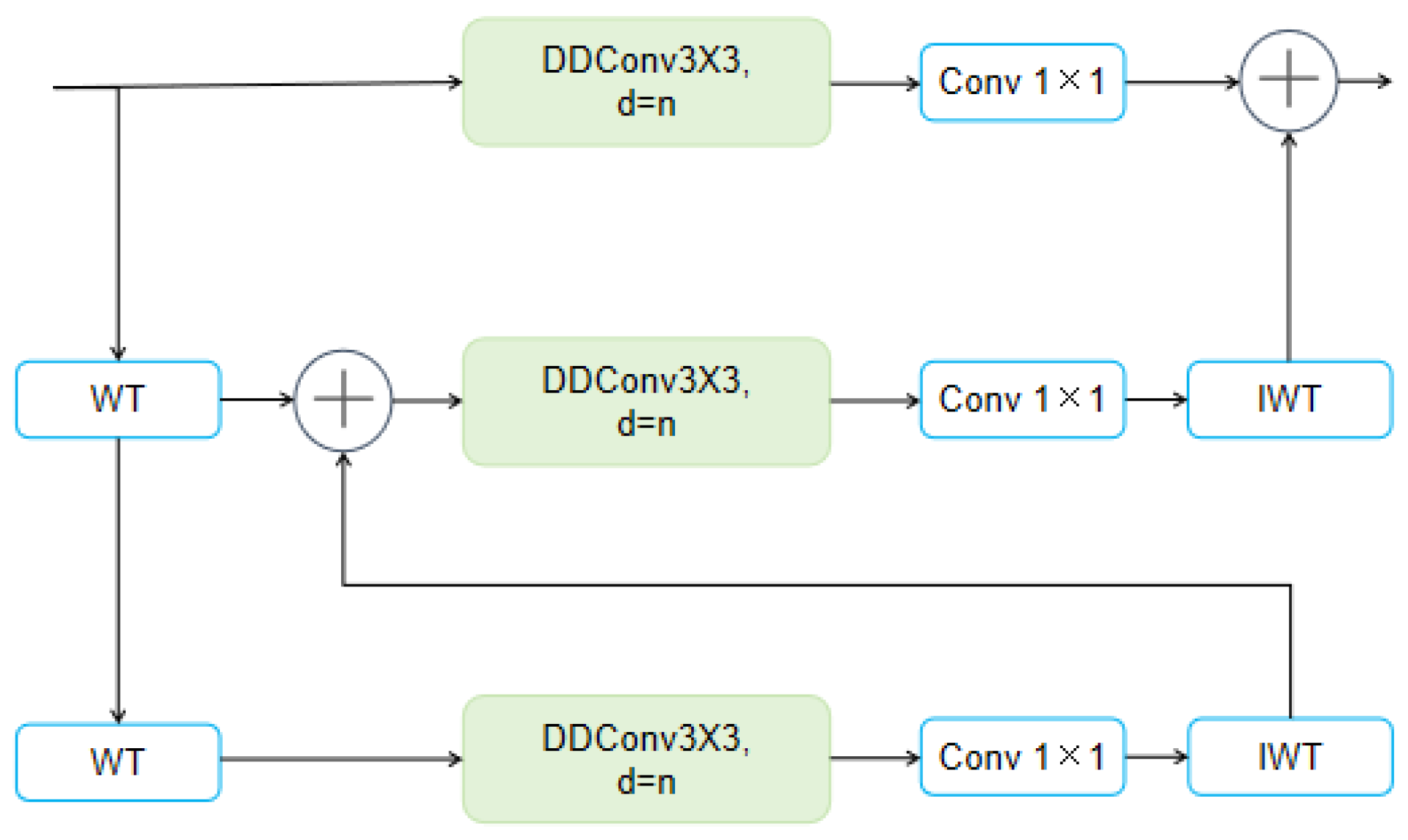

- To address contour discontinuity and degraded feature discriminability under heavy occlusion, an occlusion-robust tracking methodology is proposed. It uses a Mamba backbone integrated with a DWRFEM that strengthens local feature extraction. Wavelet Depthwise Separable Dilated Convolutions (WDSDConv) fuse multi-scale contextual information, preserving the overall target contour while sharpening the detail of occluded edges. In parallel, a dual-branch feature refinement framework incorporates the DiTAC trainable activation function to increase nonlinear representational capacity, and bottleneck layers built on Kolmogorov-Arnold Networks (KAN) amplify target saliency. Together, these components substantially mitigate occlusion-induced feature degradation and keep tracking robust under severe occlusion.
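Wavelet convolutions (Finder et al., listed in the references) enlarge the receptive field by decomposing a feature map with a wavelet transform and convolving the resulting subbands: the low-frequency subband carries the coarse contour, while the high-frequency subbands carry edge detail. A minimal sketch of only that decomposition step follows, as a single-level 2D Haar transform on a plain nested list. It assumes a Haar basis (a common choice for such layers); the depthwise, separable, and dilated convolutions that WDSDConv applies on top are not reproduced.

```python
def haar_dwt2(x):
    """Single-level orthonormal 2D Haar transform of an even-sized grid.

    Returns (ll, dw, dh, dd), each half the height and width of x:
    ll = approximation (coarse contour), dw = change along width (vertical
    edges), dh = change along height (horizontal edges), dd = diagonal detail.
    """
    h, w = len(x), len(x[0])
    if h % 2 or w % 2:
        raise ValueError("height and width must be even")
    ll, dw, dh, dd = [], [], [], []
    for i in range(0, h, 2):
        r_ll, r_dw, r_dh, r_dd = [], [], [], []
        for j in range(0, w, 2):
            a, b = x[i][j], x[i][j + 1]          # top row of the 2x2 block
            c, d = x[i + 1][j], x[i + 1][j + 1]  # bottom row of the 2x2 block
            r_ll.append((a + b + c + d) / 2)
            r_dw.append((a - b + c - d) / 2)
            r_dh.append((a + b - c - d) / 2)
            r_dd.append((a - b - c + d) / 2)
        ll.append(r_ll)
        dw.append(r_dw)
        dh.append(r_dh)
        dd.append(r_dd)
    return ll, dw, dh, dd


def haar_idwt2(ll, dw, dh, dd):
    """Exact inverse of haar_dwt2: rebuilds the full-resolution grid."""
    h, w = len(ll), len(ll[0])
    x = [[0.0] * (2 * w) for _ in range(2 * h)]
    for i in range(h):
        for j in range(w):
            s, p, q, r = ll[i][j], dw[i][j], dh[i][j], dd[i][j]
            x[2 * i][2 * j] = (s + p + q + r) / 2
            x[2 * i][2 * j + 1] = (s - p + q - r) / 2
            x[2 * i + 1][2 * j] = (s + p - q - r) / 2
            x[2 * i + 1][2 * j + 1] = (s - p - q + r) / 2
    return x
```

On the 4x4 ramp [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]], the approximation subband is [[7, 11], [23, 27]], the diagonal subband is all zeros, and haar_idwt2 returns the original grid exactly. Because each subband pixel summarizes a 2x2 input block, a 3x3 kernel applied to a subband spans a 6x6 region of the input.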

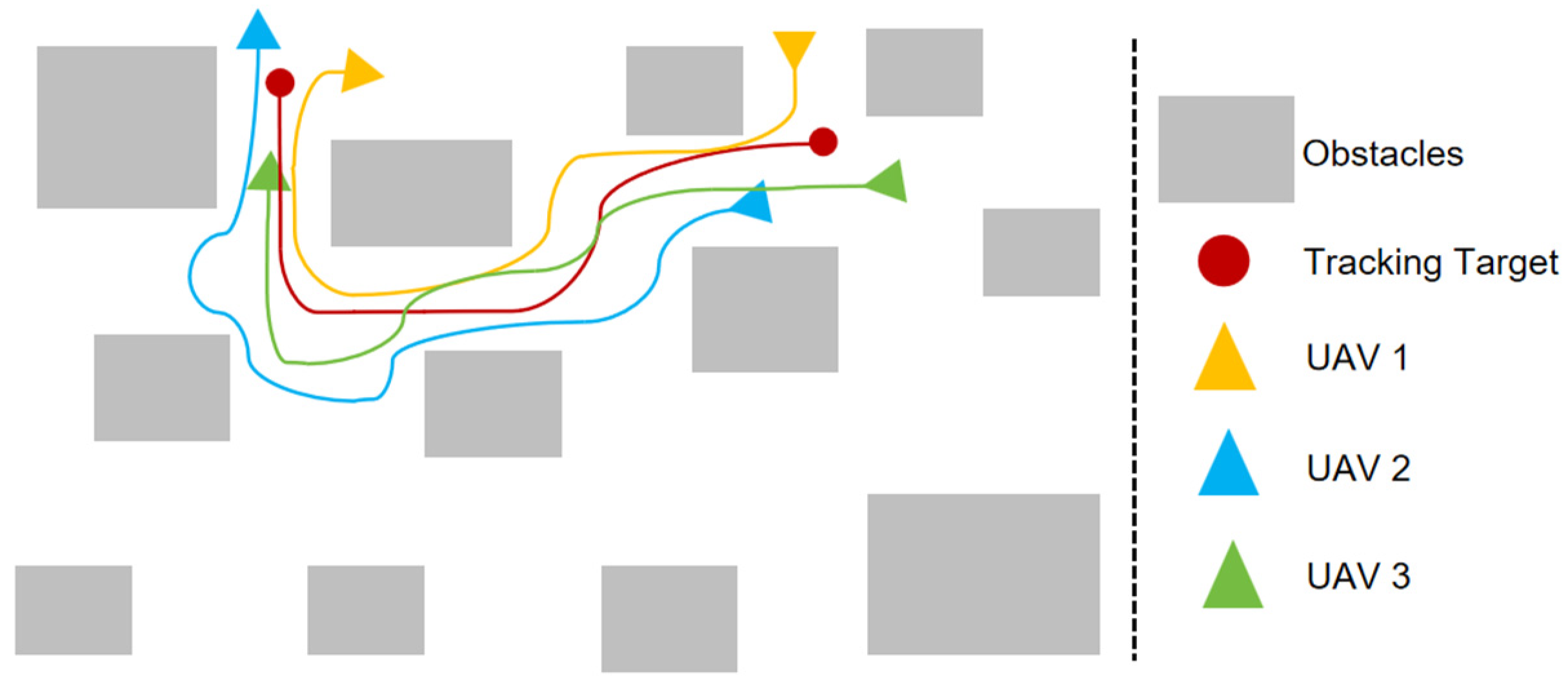

- To overcome tracking interruptions caused by the limited field of view of a single drone and by complete occlusion, a multi-UAV collaborative tracking framework is introduced. First, cooperative localization intersects the lines of sight from multiple UAVs to the target, reducing positioning uncertainty. Next, an adaptive spherical sampling algorithm dynamically generates occlusion-free viewpoint distributions, scaling its sampling to the surrounding obstacle density, so that the target stays within the collective visual coverage. Finally, trajectory optimization uses the Crested Porcupine Optimizer (CPO) to generate smooth flight paths, while real-time yaw adjustments keep the target centered in each UAV's field of view.
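The ray-intersection step can be illustrated with the standard least-squares formulation: each UAV contributes a line through its own position along a unit bearing vector toward the target, and the estimate is the point that minimizes the summed squared perpendicular distances to all lines. This is a generic sketch, not the paper's exact formulation. It assumes each UAV already knows its position and a bearing vector (for example, from its camera pose and the detected pixel), and the yaw helper assumes yaw is measured counterclockwise from the +x axis.

```python
import math


def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def intersect_rays(origins, directions):
    """Least-squares point closest to several 3D lines.

    Solves (sum_i P_i) p = sum_i P_i o_i, where P_i = I - u_i u_i^T projects
    onto the plane perpendicular to the unit bearing u_i of UAV i.
    """
    A = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for o, d in zip(origins, directions):
        n = math.sqrt(sum(v * v for v in d))
        u = [v / n for v in d]
        for r in range(3):
            for c in range(3):
                p_rc = (1.0 if r == c else 0.0) - u[r] * u[c]
                A[r][c] += p_rc
                b[r] += p_rc * o[c]
    det = _det3(A)
    if abs(det) < 1e-12:
        raise ValueError("bearings are (nearly) parallel; position is unobservable")
    solution = []
    for k in range(3):  # Cramer's rule, one coordinate at a time
        A_k = [row[:] for row in A]
        for r in range(3):
            A_k[r][k] = b[r]
        solution.append(_det3(A_k) / det)
    return solution


def yaw_to_target(uav_pos, target_pos):
    """Yaw angle (rad) that points the UAV heading at the target in the x-y plane."""
    return math.atan2(target_pos[1] - uav_pos[1], target_pos[0] - uav_pos[0])
```

With three UAVs whose bearings all pass through a target at (5, 5, 0), intersect_rays recovers that point to floating-point precision; with noisy bearings it returns the least-squares compromise, and additional non-parallel views generally tighten it.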

2. Related Works

2.1. Single-Object Tracking

2.2. UAV Tracking Control

2.3. Motivation of the Article

3. Occlusion-Robust Target Tracking Methodology

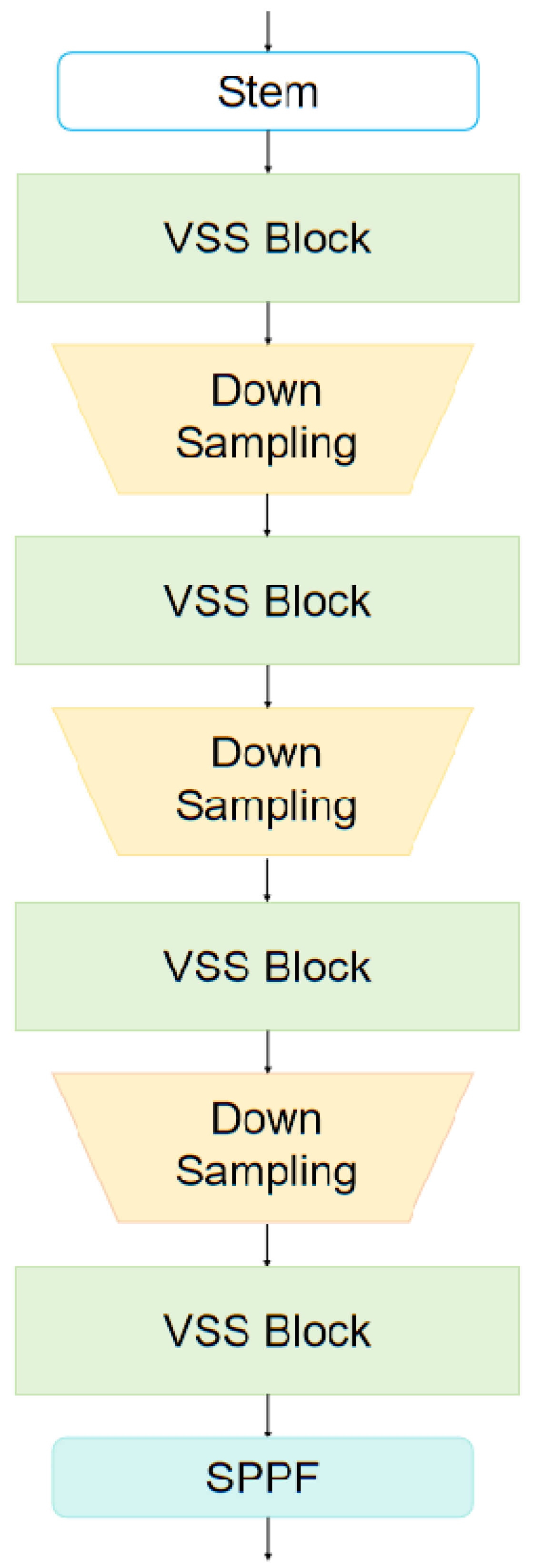

3.1. Mamba Backbone

3.1.1. VSS Block
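The VSS block comes from VMamba (Liu et al., listed in the references), whose core is a 2D selective scan: the feature map is flattened along four routes (row-major, column-major, and both reversed), each sequence passes through a state space model whose step size and input/output maps depend on the current input, and the four outputs are merged back onto the grid. The sketch below is a toy version under stated assumptions: one channel, a one-dimensional state, parameters shared across the four routes, and the simplified discretization h_t = exp(Δ_t a) h_{t-1} + Δ_t B_t x_t used in Mamba-style implementations. The real block's linear projections, depthwise convolution, normalization, and gating are omitted.

```python
import math


def softplus(v):
    """Numerically safe softplus, used to keep the step size positive."""
    return v if v > 20.0 else math.log1p(math.exp(v))


def selective_scan(xs, a=-1.0, w_delta=0.5, w_b=1.0, w_c=1.0):
    """Single-channel selective SSM over a 1D sequence.

    delta_t, B_t and C_t all depend on the current input x_t, which is what
    makes the scan "selective"; a < 0 keeps the recurrence stable.
    """
    h, ys = 0.0, []
    for x in xs:
        delta = softplus(w_delta * x)  # input-dependent step size
        b_t, c_t = w_b * x, w_c * x    # input-dependent input/output maps
        h = math.exp(delta * a) * h + delta * b_t * x
        ys.append(c_t * h)
    return ys


def cross_scan(grid):
    """Flatten an H x W grid along four routes."""
    h, w = len(grid), len(grid[0])
    row_major = [grid[i][j] for i in range(h) for j in range(w)]
    col_major = [grid[i][j] for j in range(w) for i in range(h)]
    return [row_major, col_major, row_major[::-1], col_major[::-1]]


def cross_merge(seqs, h, w):
    """Map the four scanned sequences back to grid positions and sum them."""
    row, col, row_rev, col_rev = seqs
    n = h * w
    out = [[0.0] * w for _ in range(h)]
    for k in range(n):
        i, j = divmod(k, w)    # grid position of row-major index k
        out[i][j] += row[k] + row_rev[n - 1 - k]
        j2, i2 = divmod(k, h)  # grid position of column-major index k
        out[i2][j2] += col[k] + col_rev[n - 1 - k]
    return out


def ss2d(grid):
    """Toy 2D selective scan: cross-scan, per-route SSM, cross-merge."""
    h, w = len(grid), len(grid[0])
    return cross_merge([selective_scan(s) for s in cross_scan(grid)], h, w)
```

The four routes let every position accumulate context from all four directions while each individual scan remains linear in sequence length.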

3.1.2. MixPooling SPPF
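SPPF, as popularized by YOLOv5, chains three stride-1 max-pooling layers (kernel 5) and concatenates the input with the three pooled maps, approximating spatial pyramid pooling at several receptive-field sizes. The MixPooling variant named in this heading is sketched below under an assumption: each max-pool is replaced by a fixed convex blend alpha * max + (1 - alpha) * average, since the exact mixing rule used in this work (see Zhong et al. in the references) is not given here. The surrounding 1x1 convolutions are omitted, and a single-channel map stands in for a feature tensor.

```python
def mix_pool2d(x, k=5, alpha=0.5):
    """Stride-1, size-preserving pooling that blends max and average.

    Windows are clipped at the borders (equivalent to -inf padding for the
    max branch and count_include_pad=False for the average branch).
    """
    h, w, r = len(x), len(x[0]), k // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            win = [x[p][q]
                   for p in range(max(0, i - r), min(h, i + r + 1))
                   for q in range(max(0, j - r), min(w, j + r + 1))]
            out[i][j] = alpha * max(win) + (1 - alpha) * sum(win) / len(win)
    return out


def mixpool_sppf(x, k=5, alpha=0.5):
    """SPPF-style pyramid: three chained poolings, stacked with the input.

    Returns the four maps that would be concatenated channel-wise.
    """
    y1 = mix_pool2d(x, k, alpha)
    y2 = mix_pool2d(y1, k, alpha)
    y3 = mix_pool2d(y2, k, alpha)
    return [x, y1, y2, y3]
```

Setting alpha = 1 recovers plain max pooling and alpha = 0 plain average pooling, so the blend interpolates between emphasizing the strongest response and preserving context.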

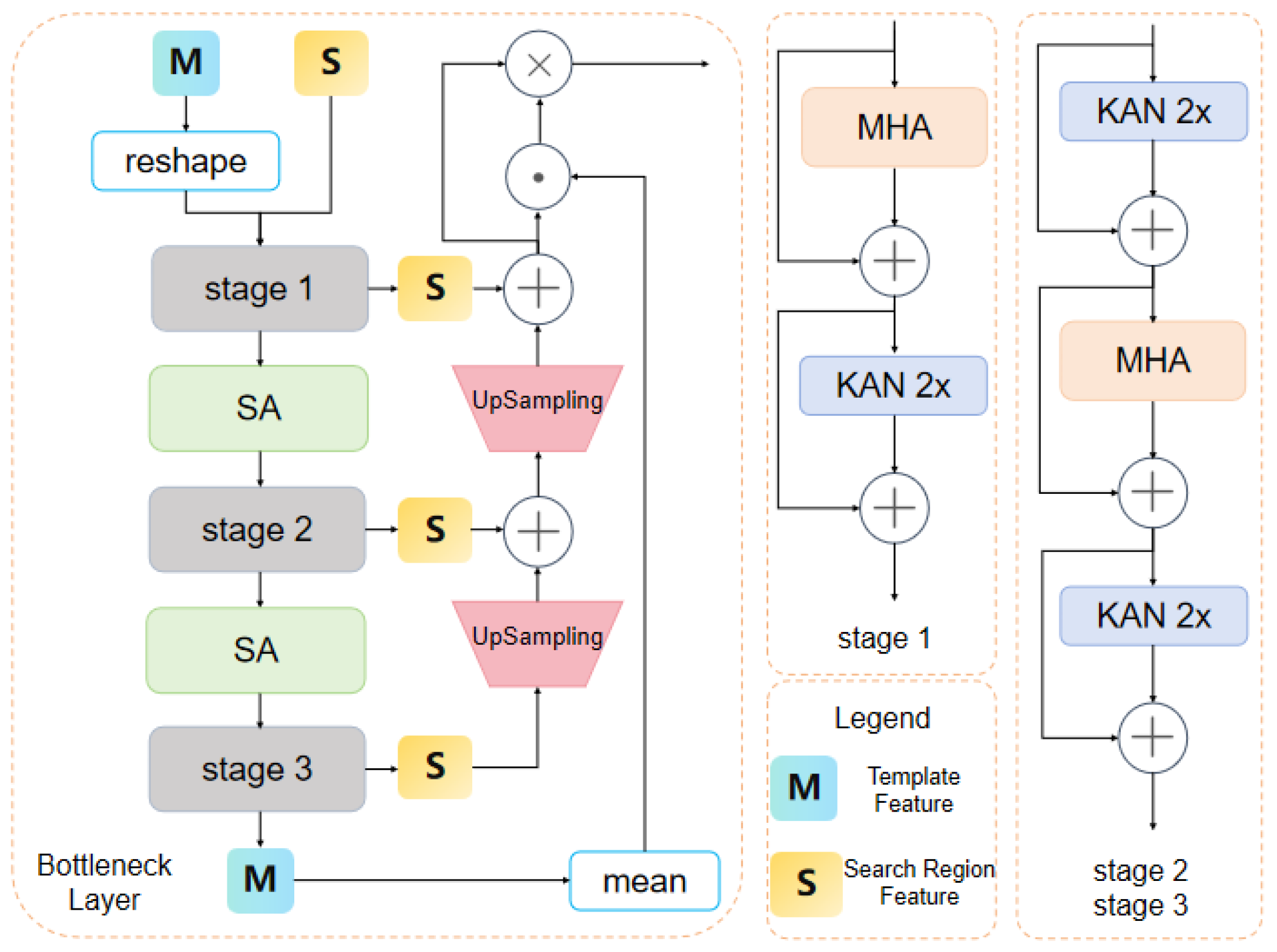

3.2. Bottleneck Layer

3.2.1. Feature Extraction with MHA
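Multi-head attention (MHA) splits the feature dimension into heads, runs scaled dot-product attention, softmax(QK^T / sqrt(d_h)) V, independently in each head, and concatenates the results. How the bottleneck forms queries, keys, and values from the backbone features is not described here, so this sketch takes them as given and omits the learned projection matrices; it illustrates the mechanism rather than this paper's exact layer.

```python
import math


def softmax(scores):
    """Softmax with max-subtraction for numerical stability."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def multi_head_attention(q, k, v, n_heads):
    """Scaled dot-product attention run independently on n_heads slices.

    q: list of query vectors; k, v: equally long lists of key/value vectors.
    The feature dimension must be divisible by n_heads.
    """
    d = len(q[0])
    if d % n_heads:
        raise ValueError("feature dimension must be divisible by n_heads")
    dh = d // n_heads
    out = [[0.0] * d for _ in q]
    for h in range(n_heads):
        lo, hi = h * dh, (h + 1) * dh  # this head's slice of the features
        for qi, qv in enumerate(q):
            scores = [sum(a * b for a, b in zip(qv[lo:hi], kv[lo:hi])) / math.sqrt(dh)
                      for kv in k]
            weights = softmax(scores)
            for c in range(lo, hi):
                out[qi][c] = sum(wt * vv[c] for wt, vv in zip(weights, v))
    return out
```

When all keys are identical the weights are uniform and each head returns the mean of its value slice; a strongly aligned key instead concentrates the weight on a single value.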

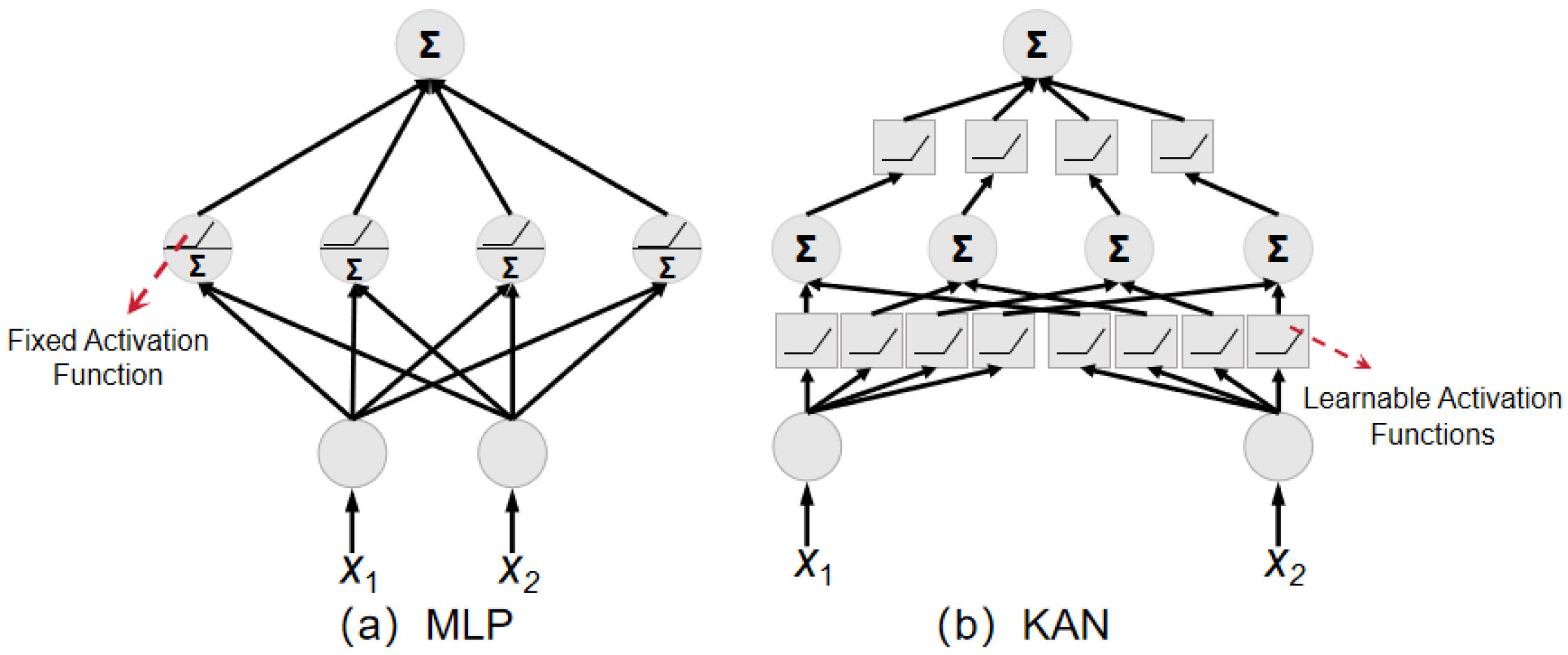

3.2.2. Feature Enhancement and Fusion with KAN
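Kolmogorov-Arnold Networks (Liu et al., in the references) place a learnable univariate function on every input-output edge and let each output sum those edge functions, instead of applying a fixed activation at the nodes. The original formulation writes each edge function as a SiLU base term plus a B-spline expansion (cubic by default). The sketch below keeps that structure but, for brevity, uses degree-1 (hat-function) splines on a fixed uniform grid. How the KAN layers are wired into this bottleneck is not specified here, so only a single layer's forward pass is shown.

```python
import math


def silu(v):
    """SiLU base function, v * sigmoid(v)."""
    return v / (1.0 + math.exp(-v))


def hat_basis(v, grid):
    """Degree-1 B-spline (hat) basis values at v on a uniform knot grid."""
    step = grid[1] - grid[0]
    return [max(0.0, 1.0 - abs(v - g) / step) for g in grid]


def kan_layer(xs, grid, coef, w_base):
    """Forward pass of one KAN layer.

    coef[j][i][k]: weight of basis k on the edge from input i to output j.
    w_base[j][i]: weight of the SiLU base term on that edge.
    Output j is sum_i phi_ji(x_i), with phi_ji(v) = w_base * silu(v) + spline(v).
    """
    outputs = []
    for coef_j, w_base_j in zip(coef, w_base):
        total = 0.0
        for x, coef_ji, wb in zip(xs, coef_j, w_base_j):
            spline = sum(c * b for c, b in zip(coef_ji, hat_basis(x, grid)))
            total += wb * silu(x) + spline
        outputs.append(total)
    return outputs
```

Training would adjust coef and w_base by gradient descent; setting the spline coefficients equal to the knot positions makes an edge reproduce the identity inside the grid, which is a quick sanity check.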

4. Multi-Perspective Collaborative Tracking with Multi-UAV

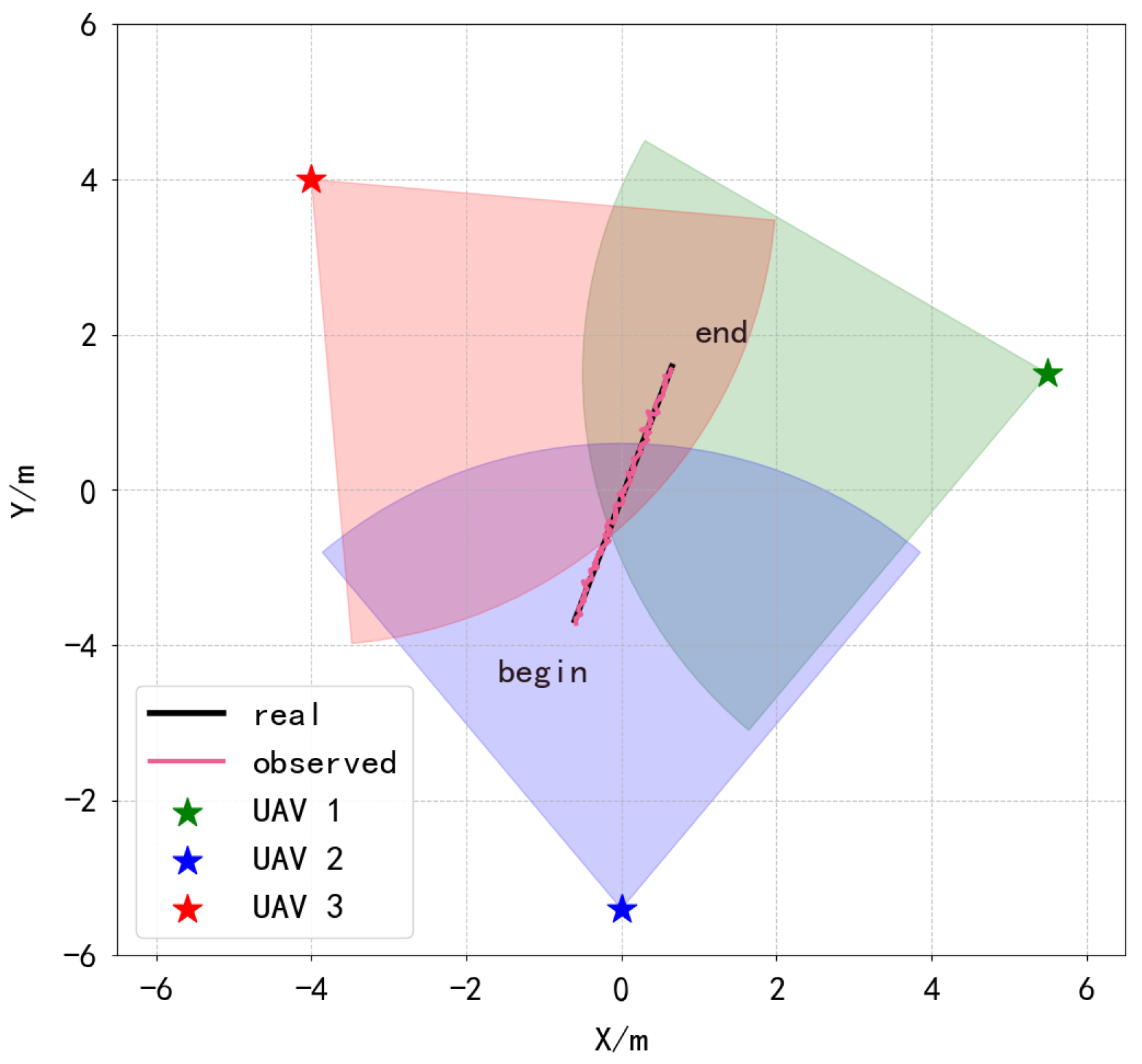

4.1. Collaborative Target Localization with Multi-UAV

4.2. Spherical Visibility Sampling with Viewpoint Optimization

4.2.1. Line-of-Sight Reachability Detection
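Step 5 of Algorithm 1 keeps a sampling point only if the ray between it and the target is unobstructed. The obstacle representation is not spelled out beyond an obstacle set and a grid map resolution, so the sketch below assumes obstacles are stored as a set of occupied voxel indices at that resolution and marches along the segment in half-voxel steps. This sampled test can miss a voxel whose corner the segment barely clips; an exact voxel traversal (such as the Amanatides-Woo algorithm) closes that gap.

```python
import math


def voxel_of(p, res):
    """Integer voxel index containing point p at grid resolution res."""
    return tuple(math.floor(c / res) for c in p)


def line_of_sight(target, viewpoint, occupied, res):
    """True if the segment target -> viewpoint crosses no occupied voxel.

    occupied: set of (ix, iy, iz) voxel indices (assumed representation).
    Sampling starts one step away from the target so the target's own voxel
    is not treated as an occluder.
    """
    length = math.dist(target, viewpoint)
    n = max(1, math.ceil(length / (0.5 * res)))
    for s in range(1, n + 1):
        t = s / n
        p = [a + t * (b - a) for a, b in zip(target, viewpoint)]
        if voxel_of(p, res) in occupied:
            return False
    return True
```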

4.2.2. Visibility Volume Fusion

4.2.3. Adaptive Sampling Optimization

| Algorithm 1: Spherical-sampling-based visible region generation and viewpoint optimization algorithm |
|---|
| Input: target position point, obstacle set, maximum observation distance, UAV count m, base sampling count, density sensitivity coefficient, grid map resolution. |
| Output: observation point collection. |
| 1: Initialize the visible point set; |
| 2: Compute the adaptive sample count N; |
| 3: FOR i = 1 to N do |
| 4: Generate a sampling point on the sphere centered at the target via spherical parametrization; |
| 5: IF the ray between the target and the sampling point is unobstructed THEN |
| 6: Add the sampling point to the visible point set; |
| 7: END IF |
| 8: END FOR |
| 9: Project the visible point set onto the tangent plane to obtain a 2D point set; |
| 10: Compute the convex hull of the 2D point set; |
| 11: Merge the angular intervals of the convex hull vertices to generate the visible regions; |
| 12: IF m ≤ number of visible regions THEN |
| 13: Generate observation points at the centroids of the visible regions and store them in the observation point collection; |
| 14: ELSE |
| 15: Use Particle Swarm Optimization (PSO) to allocate m observation points within the visible regions and store them in the observation point collection; |
| 16: END IF |
| 17: RETURN the observation point collection; |
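Steps 2 to 10 of Algorithm 1 can be sketched as follows. The spherical parametrization and the formula for the adaptive sample count are not given here, so both are assumptions: points are spread with a Fibonacci lattice on a sphere of radius r around the target, and the hypothetical rule N = round(N0 · (1 + κ·ρ)) grows the sample count with a normalized obstacle density ρ in [0, 1]. The convex hull uses Andrew's monotone-chain algorithm on the 2D points obtained after projection; the projection itself is omitted because its tangent point is not specified in the algorithm as shown.

```python
import math


def adaptive_sample_count(n0, kappa, density):
    """Hypothetical rule: more samples where obstacles are denser (density in [0, 1])."""
    return max(1, round(n0 * (1.0 + kappa * density)))


def fibonacci_sphere(center, radius, n):
    """n nearly uniform points on a sphere (Fibonacci lattice)."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    cx, cy, cz = center
    points = []
    for i in range(n):
        z = 1.0 - 2.0 * (i + 0.5) / n  # evenly spaced heights in (-1, 1)
        ring = math.sqrt(1.0 - z * z)  # radius of the horizontal circle at z
        phi = i * golden_angle
        points.append((cx + radius * ring * math.cos(phi),
                       cy + radius * ring * math.sin(phi),
                       cz + radius * z))
    return points


def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices counterclockwise."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]
```

The Fibonacci lattice gives near-uniform coverage for any N without the polar clustering of a naive latitude-longitude grid, which keeps the visibility test equally dense in every direction.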

4.3. Multi-UAV Trajectory Planning

| Algorithm 2: Multi-UAV path planning via the Crested Porcupine Optimizer (CPO) |
|---|
| Input: start point set, observation point set, threat model, weight vector w. |
| Output: optimal path set. |
| 1: Initialize the crested porcupine path parameter population; |
| 2: FOR iter = 1 to MaxIter do |
| 3: FOR each individual in the population do |
| 4: Compute its path via Dubins curves; |
| 5: Calculate its cost; |
| 6: Apply the constraint penalty, scaled by a penalty coefficient; |
| 7: END FOR |
| 8: Determine the leader as the individual with the minimum cost; |
| 9: FOR each individual in the population do |
| 10: IF rand() is below the exploration threshold THEN |
| 11: Random exploration, using a step size and a randomly chosen individual; |
| 12: ELSE |
| 13: Threat-driven adjustment, using two weights and a term derived from threat intelligence; |
| 14: END IF |
| 15: END FOR |
| 16: Update the population by combining the incumbent population with the newly generated individuals; |
| 17: END FOR |
| 18: RETURN the leader solution, converted to the path set. |
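The control flow of Algorithm 2 (evaluate penalized costs, pick a leader, branch between random exploration and a guided move, then merge incumbents with offspring) can be sketched on a deliberately small problem: one UAV, one intermediate waypoint, and circular threat zones. This is a generic population-based loop that mirrors the algorithm's structure, not the Crested Porcupine Optimizer's actual update equations. Straight-line legs stand in for Dubins curves, the guided move simply pulls toward the leader, threats enter only through the penalty term, and every parameter value is hypothetical.

```python
import math
import random


def seg_point_dist(p, a, b):
    """Shortest distance from 2D point p to segment a-b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    len2 = dx * dx + dy * dy
    t = 0.0 if len2 == 0 else ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / len2
    t = max(0.0, min(1.0, t))
    return math.hypot(p[0] - (a[0] + t * dx), p[1] - (a[1] + t * dy))


def path_cost(waypoint, start, goal, threats, penalty=100.0):
    """Length of start -> waypoint -> goal plus a penalty for threat-zone incursion."""
    legs = [(start, waypoint), (waypoint, goal)]
    length = sum(math.dist(a, b) for a, b in legs)
    violation = sum(max(0.0, radius - seg_point_dist(center, a, b))
                    for center, radius in threats for a, b in legs)
    return length + penalty * violation


def optimize_waypoint(start, goal, threats, bounds, pop_size=30, iters=200,
                      p_explore=0.3, step=1.0, seed=0):
    """Population search for one waypoint, following Algorithm 2's skeleton."""
    rng = random.Random(seed)
    (x_lo, y_lo), (x_hi, y_hi) = bounds

    def cost(p):
        return path_cost(p, start, goal, threats)

    def clip(p):
        return (min(max(p[0], x_lo), x_hi), min(max(p[1], y_lo), y_hi))

    pop = [(rng.uniform(x_lo, x_hi), rng.uniform(y_lo, y_hi)) for _ in range(pop_size)]
    for _ in range(iters):
        leader = min(pop, key=cost)
        children = []
        for p in pop:
            if rng.random() < p_explore:
                # Random exploration: Gaussian step plus a pull toward a random peer.
                q = rng.choice(pop)
                r = rng.random()
                child = (p[0] + step * rng.gauss(0, 1) + r * (q[0] - p[0]),
                         p[1] + step * rng.gauss(0, 1) + r * (q[1] - p[1]))
            else:
                # Guided move toward the leader with a small jitter.
                r = rng.random()
                child = (p[0] + r * (leader[0] - p[0]) + 0.1 * step * rng.gauss(0, 1),
                         p[1] + r * (leader[1] - p[1]) + 0.1 * step * rng.gauss(0, 1))
            children.append(clip(child))
        # Elitist merge of incumbents and offspring keeps the best pop_size.
        pop = sorted(pop + children, key=cost)[:pop_size]
    best = min(pop, key=cost)
    return best, cost(best)
```

Because the merge is elitist, the best cost never increases between iterations; the straight path through a threat centered on the start-goal line costs far more than a detour around it, so the search is pushed off that line.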

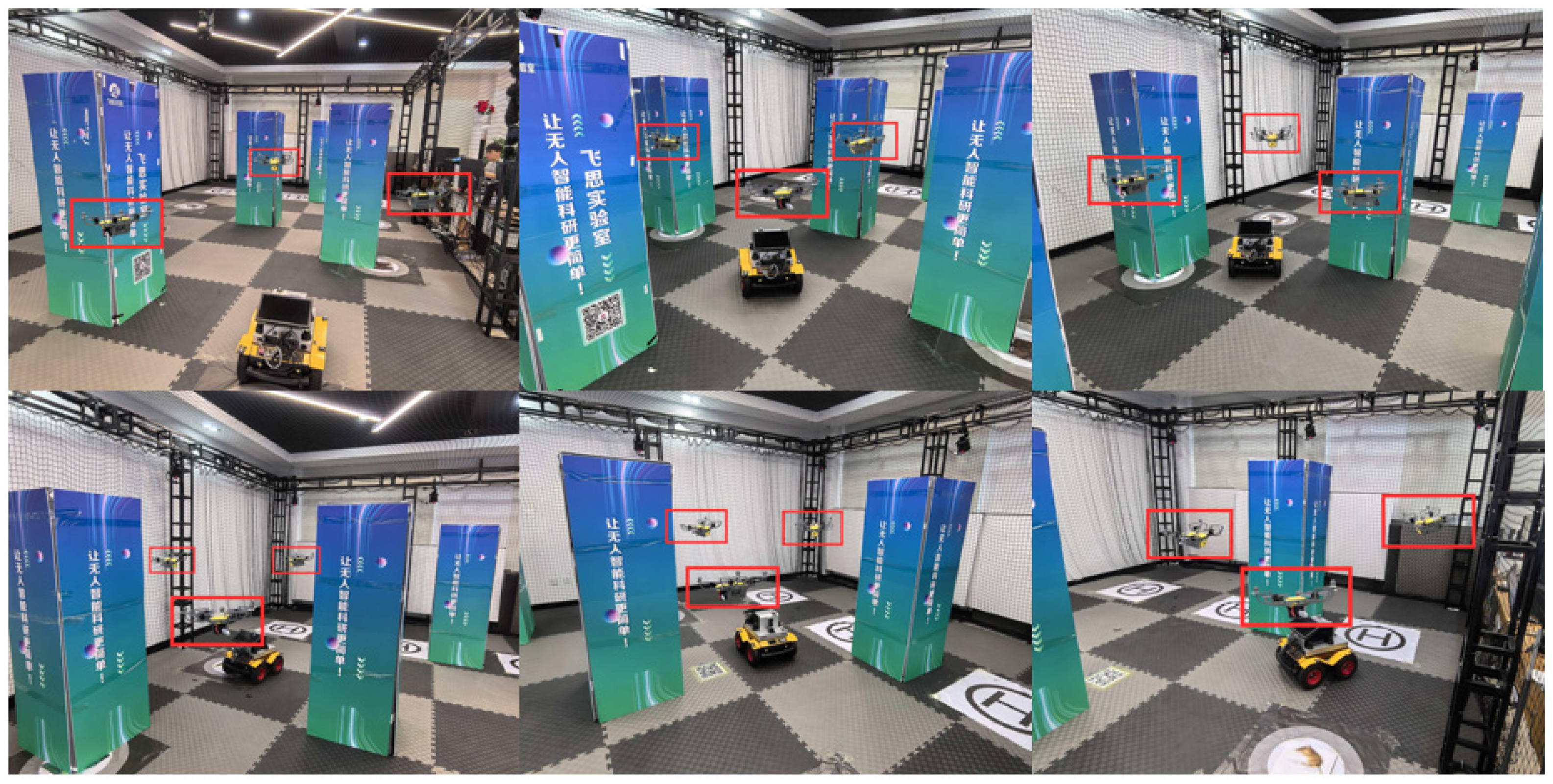

5. Experiments

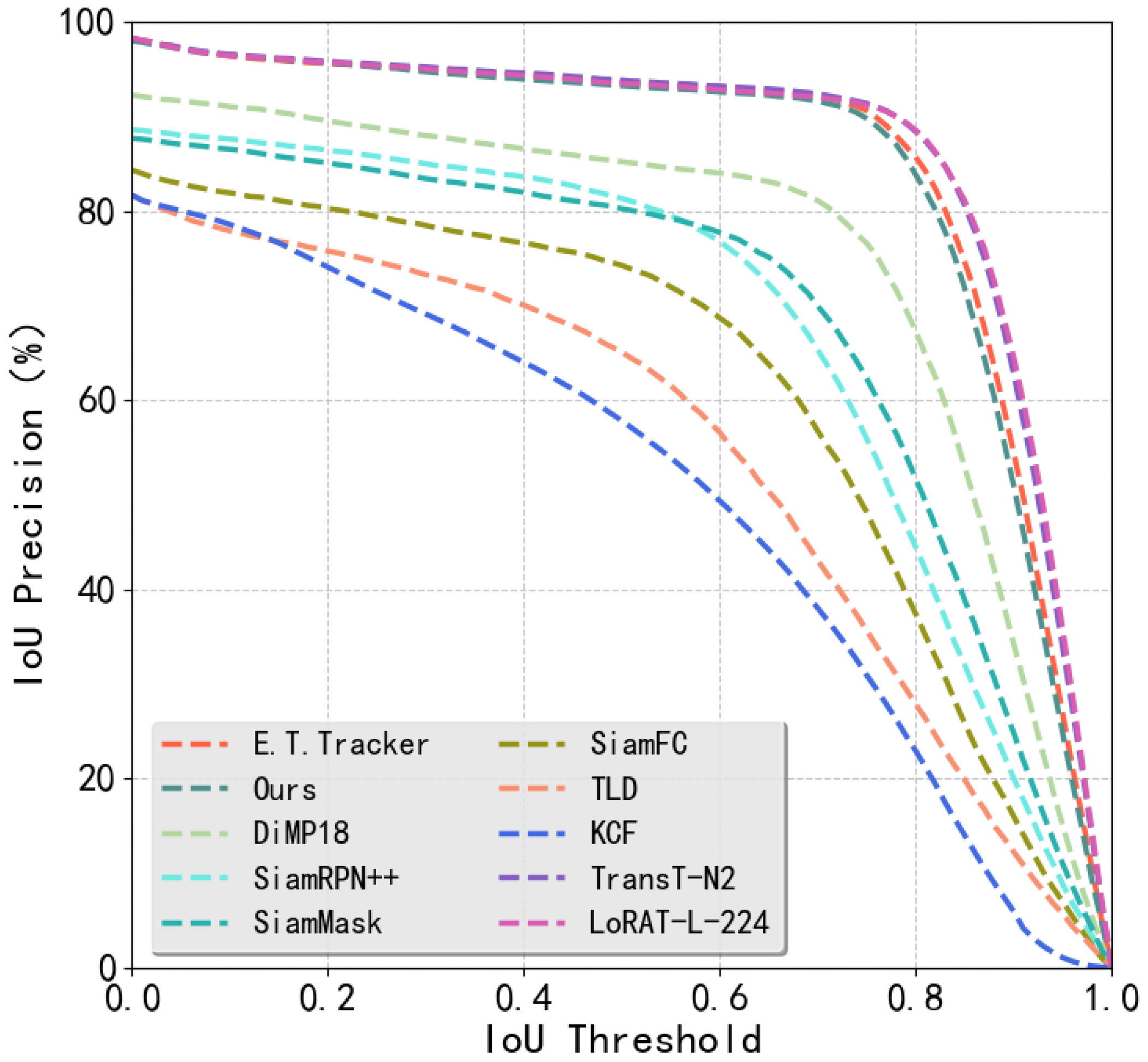

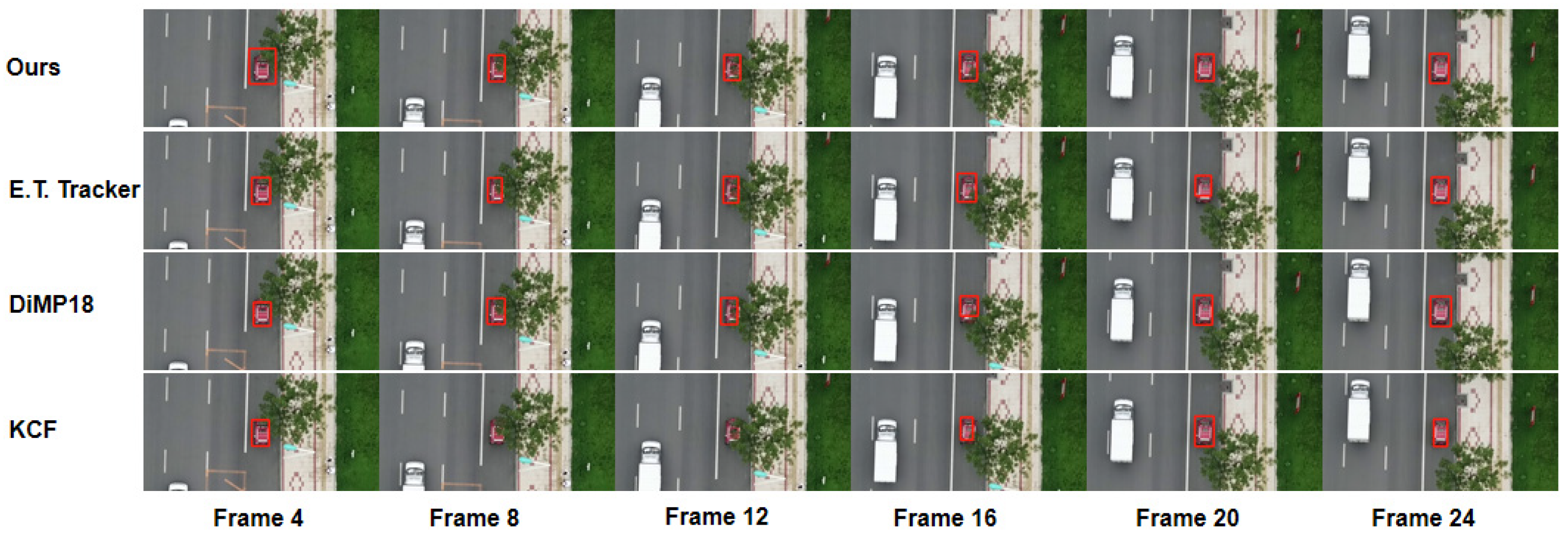

5.1. Evaluation of Occlusion-Robust Target Tracking Methodology

5.2. Evaluation of Multi-UAV Collaborative Tracking Methodology

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- San, C.T.; Kakani, V. Smart Precision Weeding in Agriculture Using 5IR Technologies. Electronics 2025, 14, 2517.

- Li, J.; Hua, Y.; Xue, M. MSO-DETR: A Lightweight Detection Transformer Model for Small Object Detection in Maritime Search and Rescue. Electronics 2025, 14, 2327.

- Ouyang, Y.; Liu, W.; Yang, Q.; Mao, X.; Li, F. Trust Based Task Offloading Scheme in UAV-Enhanced Edge Computing Network. Peer-to-Peer Netw. Appl. 2021, 14, 3268–3290.

- Dong, L.; Liu, Z.; Jiang, F.; Wang, K. Joint Optimization of Deployment and Trajectory in UAV and IRS-Assisted IoT Data Collection System. IEEE Internet Things J. 2022, 9, 21583–21593.

- Jiang, F.; Peng, Y.; Wang, K.; Dong, L.; Yang, K. MARS: A DRL-Based Multi-Task Resource Scheduling Framework for UAV with IRS-Assisted Mobile Edge Computing System. IEEE Trans. Cloud Comput. 2023, 11, 3700–3712.

- Jiang, F.; Wang, K.; Dong, L.; Pan, C.; Xu, W.; Yang, K. AI Driven Heterogeneous MEC System with UAV Assistance for Dynamic Environment: Challenges and Solutions. IEEE Netw. 2020, 35, 400–408.

- Chen, G.; Zhu, P.; Cao, B.; Wang, X.; Hu, Q. Cross-Drone Transformer Network for Robust Single Object Tracking. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 4552–4563.

- Yeom, S. Thermal Image Tracking for Search and Rescue Missions with a Drone. Drones 2024, 8, 53.

- Chinthi-Reddy, S.R.; Lim, S.; Choi, G.S.; Chae, J.; Pu, C. DarkSky: Privacy-Preserving Target Tracking Strategies Using a Flying Drone. Veh. Commun. 2022, 35, 100459.

- Wang, K.; Yu, X.; Yu, W.; Li, G.; Lan, X.; Ye, Q.; Jiao, J.; Han, Z. ClickTrack: Towards Real-Time Interactive Single Object Tracking. Pattern Recogn. 2025, 161, 111211.

- Lopez-Sanchez, I.; Moreno-Valenzuela, J. PID Control of Quadrotor UAVs: A Survey. Annu. Rev. Control. 2023, 56, 100900.

- Song, Y.; Scaramuzza, D. Policy Search for Model Predictive Control with Application to Agile Drone Flight. IEEE Trans. Robot. 2022, 38, 2114–2130.

- Saleem, O.; Kazim, M.; Iqbal, J. Robust Position Control of VTOL UAVs Using a Linear Quadratic Rate-Varying Integral Tracker: Design and Validation. Drones 2025, 9, 73.

- Dang, Z.; Sun, X.; Sun, B.; Guo, R.; Li, C. OMCTrack: Integrating Occlusion Perception and Motion Compensation for UAV Multi-Object Tracking. Drones 2024, 8, 480.

- Chang, Y.; Zhou, H.; Wang, X.; Shen, L.; Hu, T. Cross-Drone Binocular Coordination for Ground Moving Target Tracking in Occlusion-Rich Scenarios. IEEE Robot. Autom. Lett. 2020, 5, 3161–3168.

- Hansen, J.G.; de Figueiredo, R.P. Active Object Detection and Tracking Using Gimbal Mechanisms for Autonomous Drone Applications. Drones 2024, 8, 55.

- Meibodi, F.A.; Alijani, S.; Najjaran, H. A Deep Dive into Generic Object Tracking: A Survey. arXiv 2025, arXiv:2507.23251.

- Bolme, D.S.; Beveridge, J.R.; Draper, B.A.; Lui, Y.M. Visual Object Tracking Using Adaptive Correlation Filters. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 2544–2550.

- Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. Exploiting the Circulant Structure of Tracking-by-Detection with Kernels. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 702–715.

- Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-Speed Tracking with Kernelized Correlation Filters. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 583–596.

- Yang, S. A Novel Study on Deep Learning Framework to Predict and Analyze the Financial Time Series Information. Future Gener. Comput. Syst. 2021, 125, 812–819.

- Zhang, P.; Liu, X.; Li, W.; Yu, X. Pharmaceutical Cold Chain Management Based on Blockchain and Deep Learning. J. Internet Technol. 2021, 22, 1531–1542.

- Shi, D.; Zheng, H. A Mortality Risk Assessment Approach on ICU Patients Clinical Medication Events Using Deep Learning. Comput. Model. Eng. Sci. 2021, 128, 161–181.

- Tong, Y.; Sun, W. The Role of Film and Television Big Data in Real-Time Image Detection and Processing in the Internet of Things Era. J. Real-Time Image Process. 2021, 18, 1115–1127.

- Zhou, W.; Zhao, Y.; Chen, W.; Liu, Y.; Yang, R.; Liu, Z. Research on Investment Portfolio Model Based on Neural Network and Genetic Algorithm in Big Data Era. EURASIP J. Wirel. Commun. Netw. 2020, 2020, 228.

- Zeng, Y.; Ouyang, S.; Zhu, T.; Li, C. E-Commerce Network Security Based on Big Data in Cloud Computing Environment. Mob. Inf. Syst. 2022, 2022, 9935244.

- Tao, R.; Gavves, E.; Smeulders, A.W. Siamese Instance Search for Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1420–1429.

- Li, B.; Yan, J.; Wu, W.; Zhu, Z.; Hu, X. High Performance Visual Tracking with Siamese Region Proposal Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8971–8980.

- Chen, X.; Yan, B.; Zhu, J.; Wang, D.; Yang, X.; Lu, H. Transformer Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 8126–8135.

- Lin, L.; Fan, H.; Zhang, Z.; Wang, Y.; Xu, Y.; Ling, H. Tracking Meets LoRA: Faster Training, Larger Model, Stronger Performance. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 300–318.

- Kopyt, A.; Narkiewicz, J.; Radziszewski, P. An Unmanned Aerial Vehicle Optimal Selection Methodology for Object Tracking. Adv. Mech. Eng. 2018, 10, 1–12.

- Gong, K.; Cao, Z.; Xiao, Y.; Fang, Z. Abrupt-Motion-Aware Lightweight Visual Tracking for Unmanned Aerial Vehicles. Vis. Comput. 2021, 37, 371–383.

- Lin, C.; Zhang, W.; Shi, J. Tracking Strategy of Unmanned Aerial Vehicle for Tracking Moving Target. Int. J. Control Autom. Syst. 2021, 19, 2183–2194.

- Lee, K.; Chang, H.J.; Choi, J.; Heo, B.; Leonardis, A.; Choi, J.Y. Motion-Aware Ensemble of Three-Mode Trackers for Unmanned Aerial Vehicles. Mach. Vis. Appl. 2021, 32, 54.

- Campos-Martínez, S.-N.; Hernández-González, O.; Guerrero-Sánchez, M.-E.; Valencia-Palomo, G.; Targui, B.; López-Estrada, F.-R. Consensus Tracking Control of Multiple Unmanned Aerial Vehicles Subject to Distinct Unknown Delays. Machines 2024, 12, 337.

- Zhou, Z.; Hu, J.; Chen, B.; Shen, X.; Meng, B. Target Tracking and Circumnavigation Control for Multi-Unmanned Aerial Vehicle Systems Using Bearing Measurements. Actuators 2024, 13, 323.

- Zhang, C.; Wang, Y.; Zheng, W. Multi-UAVs Tracking Non-Cooperative Target Using Constrained Iterative Linear Quadratic Gaussian. Drones 2024, 8, 326.

- Upadhyay, J.; Rawat, A.; Deb, D. Multiple Drone Navigation and Formation Using Selective Target Tracking-Based Computer Vision. Electronics 2021, 10, 2125.

- Liu, Y.; Li, X.; Wang, J.; Wei, F.; Yang, J. Reinforcement-Learning-Based Multi-UAV Cooperative Search for Moving Targets in 3D Scenarios. Drones 2024, 8, 378.

- Liu, Y.; Tian, Y.; Zhao, Y.; Yu, H.; Xie, L.; Wang, Y.; Ye, Q.; Jiao, J.; Liu, Y. VMamba: Visual State Space Model. Adv. Neural Inf. Process. Syst. 2024, 37, 103031–103063.

- Finder, S.E.; Amoyal, R.; Treister, E.; Freifeld, O. Wavelet Convolutions for Large Receptive Fields. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 363–380.

- Zhong, S.; Wen, W.; Qin, J. Mix-Pooling Strategy for Attention Mechanism. arXiv 2022, arXiv:2208.10322.

- Kang, B.; Chen, X.; Wang, D.; Peng, H.; Lu, H. Exploring Lightweight Hierarchical Vision Transformers for Efficient Visual Tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 9612–9621.

- Chelly, I.; Finder, S.E.; Ifergane, S.; Freifeld, O. Trainable Highly-Expressive Activation Functions. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 200–217.

- Liu, Z.; Wang, Y.; Vaidya, S.; Ruehle, F.; Halverson, J.; Soljačić, M.; Hou, T.Y.; Tegmark, M. KAN: Kolmogorov-Arnold Networks. arXiv 2024, arXiv:2404.19756.

- Liu, Z.; Shang, Y.; Li, T.; Chen, G.; Wang, Y.; Hu, Q.; Zhu, P. Robust Multi-Drone Multi-Target Tracking to Resolve Target Occlusion: A Benchmark. IEEE Trans. Multimed. 2023, 25, 1462–1476.

| Model | FPS | AP |
|---|---|---|
| ResNet18 | 85 | 79.1% |
| ResNet50 | 35 | 83.8% |
| Mamba | 95 | 84.1% |

| Model | FPS | AP |
|---|---|---|
| DWConv | 103 | 81.1% |
| DWRFEM | 95 | 84.1% |

| Model | FPS | AP |
|---|---|---|
| None | 95 | 82.4% |
| SPPF | 95 | 84.1% |

| Model | FPS | AP |
|---|---|---|
| Hardswish | 95 | 82.9% |
| DiTAC | 95 | 84.1% |

| Model | FPS | AP |
|---|---|---|
| MLP | 95 | 83.2% |
| KAN | 95 | 84.1% |

| Metric | Ours | E.T.Tracker | KCF | DiMP18 | TransT-N2 | LoRAT-L-224 |
|---|---|---|---|---|---|---|
| AP | 82.9% | 83.1% | 47.9% | 71.3% | 84.5% | 84.6% |

| Metric | Value |
|---|---|
| Frame Rate | 39 FPS |
| TPU Utilization | 32.8% |
| CPU Utilization | 19.8% |
| TPU Memory Usage | 624 MB |
| CPU Memory Usage | 542 MB |

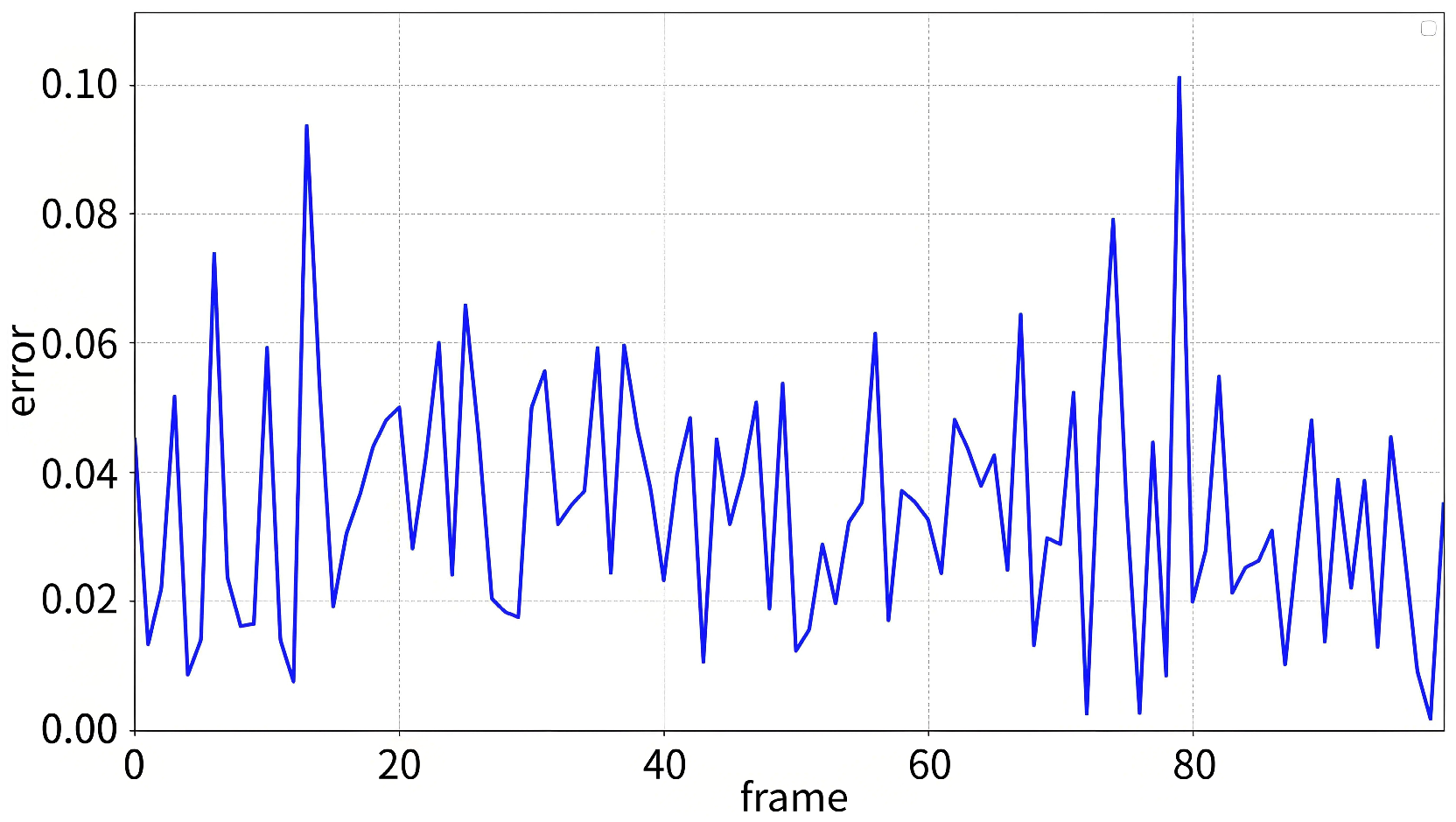

| Tracking Loss Rate | Average Positioning Error |
|---|---|
| 3.7% | 0.031 m |

| Route | Total Time | CPO | PSO |
|---|---|---|---|
| 1 | 58 s | 79 ms | 58 ms |
| 2 | 70 s | 77 ms | 53 ms |
| 3 | 60 s | 78 ms | 58 ms |
| 4 | 53 s | 74 ms | 52 ms |
| 5 | 59 s | 78 ms | 51 ms |
| 6 | 63 s | 70 ms | 47 ms |
| 7 | 55 s | 80 ms | 44 ms |
| 8 | 64 s | 71 ms | 56 ms |
| 9 | 50 s | 80 ms | 55 ms |
| 10 | 58 s | 78 ms | 42 ms |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ai, Y.; Li, R.; Xiang, C.; Liang, X. Real-Time Occluded Target Detection and Collaborative Tracking Method for UAVs. Electronics 2025, 14, 4034. https://doi.org/10.3390/electronics14204034

Ai Y, Li R, Xiang C, Liang X. Real-Time Occluded Target Detection and Collaborative Tracking Method for UAVs. Electronics. 2025; 14(20):4034. https://doi.org/10.3390/electronics14204034

Chicago/Turabian Style: Ai, Yandi, Ruolong Li, Chaoqian Xiang, and Xin Liang. 2025. "Real-Time Occluded Target Detection and Collaborative Tracking Method for UAVs" Electronics 14, no. 20: 4034. https://doi.org/10.3390/electronics14204034

APA Style: Ai, Y., Li, R., Xiang, C., & Liang, X. (2025). Real-Time Occluded Target Detection and Collaborative Tracking Method for UAVs. Electronics, 14(20), 4034. https://doi.org/10.3390/electronics14204034