Abstract

Online advertising is vital for reaching target audiences and promoting products. In 2020, US online advertising revenue increased by 12.2% to $139.8 billion. The industry is projected to reach $487.32 billion by 2030. Artificial intelligence has improved click-through rates (CTR), enabling personalized advertising content by analyzing user behavior and providing real-time predictions. This review examines the latest CTR prediction solutions, particularly those based on deep learning, over the past three years. This timeframe was chosen because CTR prediction has rapidly advanced in recent years, particularly with transformer architectures, multimodal fusion techniques, and industrial applications. By focusing on the last three years, the review highlights the most relevant developments not covered in earlier surveys. This review classifies CTR prediction methods into two main categories: CTR prediction techniques employing text and CTR prediction approaches utilizing multivariate data. The methods that use multivariate data to predict CTR are further categorized into four classes: graph-based methods, feature-interaction-based techniques, customer-behavior approaches, and cross-domain methods. The review also outlines current challenges and future research opportunities. The review highlights that graph-based and multimodal methods currently dominate state-of-the-art CTR prediction, while feature-interaction and cross-domain approaches provide complementary strengths. These key takeaways frame open challenges and emerging research directions.

1. Introduction

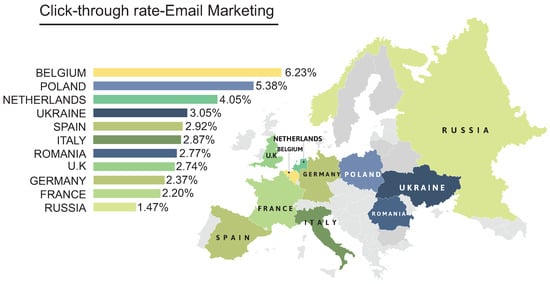

Over the past decade, rapid advancements in information technology have considerably changed the advertising and marketing industry [1]. Advertisers increasingly use various technological methods to promote their products and services more effectively and in a timely manner. This shift has resulted in the widespread use of online advertising as a crucial modern marketing communication tool [2]. Due to the increased amount of time that people of various socioeconomic levels spend on the internet, online advertising is used in e-commerce advertising and as a means of distribution. There are several key reasons why the internet has become an important advertising medium. These include the shift of television viewers to the internet, the increased time people spend on mobile devices and tablets, and the widespread availability of advanced technology and communication networks. Consequently, advertisers increasingly use social networking sites such as Facebook, Twitter, and YouTube to promote their products, as they recognize the value of online advertising as an essential tool for reaching potential customers [3]. Figure 1 shows the effect of online advertisements distributed via the internet to GetResponse customers from January to December 2023 [4], specifically through email, and the click-through rate (CTR) for these ads in various European countries.

Figure 1.

The email CTR in various European countries.

Online advertising is currently regarded as the most effective method of reaching a target audience personally. By leveraging users’ pages on various platforms, online advertising has become crucial for promoting products, raising awareness, and influencing purchase decisions. While direct sales may not occur through these platforms, marketers recognize their importance in building customer relationships and maximizing ad visibility and exposure. Hence, online advertising has emerged as a dominant force within the industry. Online advertising revenue in the US rose from $124.6 billion to $139.8 billion in 2020, a 12.2 percent increase from 2019 [5]. The online advertising industry is projected to reach 487.32 billion by 2030 [6].

The rise of artificial intelligence (AI) in advertising presents tremendous potential and opportunities. It allows for more targeted, efficient, and effective advertising. AI has improved the CTR, which measures the ratio of users clicking on an ad to the total number of users viewing the ad [7]. This metric is crucial in evaluating the effectiveness of online advertising [8]. The CTR directly impacts the success of online businesses, making it a fundamental measure of online advertising quality for these companies [9]. AI creates personalized and pertinent advertising content by analyzing extensive data, comprehending user behavior, and providing real-time predictions. This AI capability eliminates the need for traditional advertising methods, thereby enhancing the effectiveness, efficiency, and appeal of online advertising [10,11].

Despite this rapid progress, existing surveys on CTR prediction remain fragmented and limited in scope. Some prior works provide broad overviews of recommendation systems or advertising technologies. Still, they do not specifically synthesize the advances in CTR prediction driven by deep learning across multiple data modalities. Some surveys focus on specific aspects of CTR prediction, such as feature interactions or graph models, which creates gaps in understanding the connections between methods. Without a comprehensive review, researchers may duplicate efforts, miss opportunities, and struggle to identify challenges. Therefore, this review is necessary to make more contributions, highlight the state-of-the-art, and provide a forward-looking perspective that can guide both academic research and industrial practice in CTR prediction. This survey focuses on CTR prediction research from the last three years. As deep learning advances—especially in transformer architectures, multimodal data integration, and distributed training—traditional methods are becoming outdated. Earlier surveys do not cover the recent innovations in the field. By highlighting trends from the most recent three years, this review offers an updated perspective that complements existing surveys.

The contributions of this survey can be summarized as follows:

- This paper addresses gaps in previous surveys, emphasizes the need for an updated perspective and reviews state-of-the-art CTR prediction methods (those that demonstrate competitive or superior performance on widely used public datasets using standard metrics and/or those validated in industrial-scale deployments) published in the last three years as well as those that were not covered by previous surveys (e.g., [5,12,13,14]). By focusing on developments from the last three years, it highlights key advancements that provide valuable insights for researchers and practitioners.

- We expand existing knowledge by outlining a taxonomy of CTR prediction methods, which are divided into two main categories: text-based methods and multivariate data-based methods. The multivariate data-based methods are further classified into four groups: graph-based approaches, feature interaction methods, customer behavior techniques, and cross-domain methods. This layered classification highlights methodological complementarities and clarifies how different techniques address distinct challenges in CTR prediction.

- We provide a synthesized state-of-the-art summary that identifies graph-based and multimodal methods as the leading approaches, highlights complementary strengths of feature-interaction and cross-domain techniques, and distills key takeaways to guide future CTR research. For instance, graph-based models consistently demonstrate superior scalability on industrial-scale datasets. At the same time, multimodal methods show clear benefits from combining text, image, and behavioral signals.

- Finally, we identify open challenges and forward-looking research directions, including multimodal integration, transfer learning for cold-start and generalization challenges, meta-learning, and distributed edge computing, thereby providing a roadmap for future work that builds upon and extends the current CTR prediction methods.

The paper is organized as follows. Section 3 introduces the background of digital marketing and the CTR. Section 2 describes the methodology used to identify the works covered in this review. Section 4 presents the background of click-through prediction. Section 5 explores and analyzes state-of-the-art CTR prediction methods. Section 6 introduces the challenges facing current CTR prediction methods and recommends future research directions for overcoming these challenges. Section 7 concludes the paper.

2. Research Methodology

We outline the methodology for identifying works in this review to ensure transparency and reproducibility. We searched multiple academic databases, including IEEE Xplore, ACM Digital Library, SpringerLink, ScienceDirect, and Google Scholar. The searches were restricted to peer-reviewed journal articles and conference proceedings published between January 2021 and July 2024, aligning with our focus on the last three years. We employed combinations of the following keywords and Boolean strings: “click-through rate prediction” or “CTR prediction” and (“deep learning” or “neural network” or “transformer” or “graph neural network” or “multimodal” or “recommendation systems”). To capture domain-specific contributions, we also used refinements such as “CTR and advertising,” “CTR and feature interaction,” and “CTR and cross-domain.”

Inclusion criteria required that studies: proposed or evaluated a CTR prediction method, used deep learning or graph-based models, provided empirical validation using public datasets (e.g., Criteo, Avazu, MovieLens, Frappe) or industrial-scale data, and were written in English. We excluded purely theoretical works without experimental validation, non-peer-reviewed preprints, and studies focused only on traditional machine learning that were not relevant to current state-of-the-art methods. This search strategy yielded an initial set of over 300 papers. After removing duplicates and applying inclusion/exclusion criteria, we selected approximately 110 studies that form the basis of this review.

3. Background on Digital Marketing and the Click-Through Rate

A business’s ultimate ad and marketing goal is to accurately deliver information to potential consumers. In the mass marketing era, ads were designed to target the entire market without any differentiation because manufacturers needed more means to obtain multidimensional consumer information and the computational ability to analyze such data. This behavior has resulted in problems associated with high investment waste and low accuracy [15]. Thus, identifying potential customers and delivering tailored ads to those consumers has become essential in marketing. Over the past century, marketing professionals have continuously pursued precision in marketing and advertising strategies, including corner shopkeepers, direct response, and database-driven marketing.

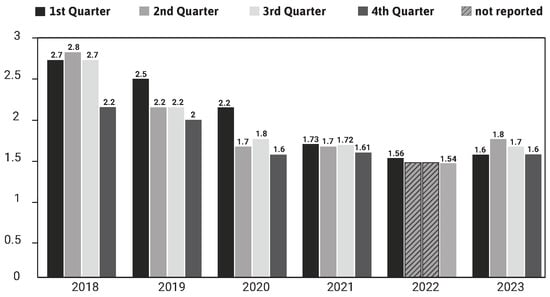

With the internet rapidly expanding and e-commerce becoming more prevalent, companies have implemented numerous methods to gather multidimensional consumer information. The rapid advancement of data analysis techniques, such as big data, machine learning, and cognitive computing, has enabled companies to categorize various customer types and profile users accurately [16]. Consequently, companies can deliver product information directly to potential customers through targeted marketing, leading to increased effectiveness and precision [17]. Various ads exist, including images, basic cards, videos, basic and optional cards, and advertiser mentions in interactive ads. Users typically are shown one or more ads and may click on an ad if a product interests them. The ad generally shows only partial information about the product, such as an image, price, and brand. Detailed information is revealed only when the customer clicks on the ad. Therefore, the CTR is a vital metric for assessing ad effectiveness. Figure 2 shows the CTR for each quarter over the past five years, which is a series compiled by Statista from underlying industry sources from the first quarter of 2018 to the second quarter of 2024 [18]. The CTR is decreasing over time, indicating that focusing on targeted customers is essential for improvement.

Figure 2.

The CTR for every quarter from 2018 to 2023.

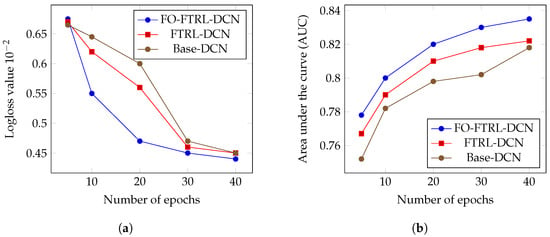

The CTR is crucial in business valuation across various applications, including online advertising, recommender systems, and product search. Generally, CTR prediction is vital for accurately estimating the likelihood of a user clicking or interacting with a suggested item. A slight increase in prediction accuracy for large user base applications will undoubtedly lead to a considerable increase in overall revenue. For example, researchers from Microsoft and Google have indicated that even a slight improvement of 1% in logloss or area under the curve is considered significant in real CTR prediction problems [19]. When solving CTR prediction problems, the data are usually large scale and highly sparse. They include numerous categorical features of different characteristics. For example, app recommendations on Google Play consist of billions of samples and millions of features [20]. Constructing a model that significantly enhances CTR prediction accuracy is a formidable task. The significance of CTR and its distinctive challenges have attracted the attention of numerous researchers from both industry and academia. CTR models have evolved from simple conventional machine learning models to more complex deep learning models.

4. Click-Through Prediction

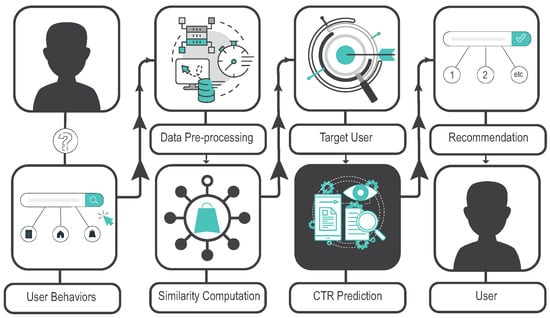

Figure 3 illustrates the typical CTR framework. It begins when a customer visits a website or app. The customer’s behavior, including their navigation of the interface and clicks on specific items, is monitored and recorded for further analysis. This behavior is then preprocessed to highlight meaningful features, making it suitable for machine learning training. Once the training is complete, a customer profile is created for the target user and integrated into the CTR prediction model. The suggested ads are subsequently presented to the target customer. This section presents an overview of CTR prediction, which can be classified into models that utilize multivariate data and models that employ text to predict click-through.

Figure 3.

The typical framework for predicting the click-through rate.

4.1. CTR Prediction Utilizing Multivariate Data

CTR prediction aims to predict the probability of a customer clicking on a given ad. Improving the accuracy of CTR prediction is undeniably a formidable research challenge. Compared with research problems that involve only one data type (e.g., texts or images), data in CTR prediction are multivariate and generally tabular. They consist of categorical, numerical, and other multivalued features. Furthermore, CTR prediction problems involve many samples, and the feature space is highly sparse. A CTR prediction model typically includes the following main components [19].

4.1.1. Embedding Features

Generally, CTR prediction input samples consist of three feature groups: item profile, user profile, and context information. Each feature group contains several fields. Item profile consists of various features, such as category, brand, tags, item ID, seller, and price. User profile comprises personal features such as gender, age, occupation, city, and interests. Context information includes other details such as position, weekday, hour, and slot ID.

Each field in these feature groups contains a different type of data, such as numeric, categorical, or multivalued data (e.g., a single item might have more than one tag). Features are very sparse and require preprocessing via one-hot or multihot encoding, which leads to a high-dimensional feature space. Thus, applying feature embedding is a common practice for mapping high-dimensional features to low-dimensional dense vectors. The embedding strategy utilizing three groups of features can be summarized as follows.

- Numeric Features: A numeric feature field i has various alternatives for feature embedding, such as the following:

- 1.

- Numeric values can be contained in discrete features by manually creating numeric values, such as grouping the age of users by age categories (e.g., grouping users aged between 40 and 59 as middle adulthood) or training them using tree-based algorithms such as decision trees. These features can then be embedded and represented as categorical features.

- 2.

- The embedding of the normalized scalar value is set, where is the intercommunicated embedding vector of the field i features and e is the embedding dimension.

- 3.

- Rather than retaining each value in one vector or allocating a given vector to each numeric field, there are other solutions, such as applying a numeric feature embedding technique to automatically incorporate the numeric feature and calculate the embedding via a meta embedding matrix [21].

- Categorical Features: A categorical feature j is assigned a one-hot feature vector and possesses an embedding , where is the embedding matrix, which is represented as , where e is the embedding dimension and w is the vocabulary size.

- Multivalued Features: A multivalued feature u has several features, each representing a sequence. Each feature embedding can be represented as , and the sequence element’s one-hot encoded vector can be denoted as , where x represents the maximal length of the sequence. The mean pooling or sum pooling can aggregate the embedding to an e-dimensional vector. Other enhancements can be applied through sequential models, such as target attention, which aggregate multivalued behavior sequence features [22,23].

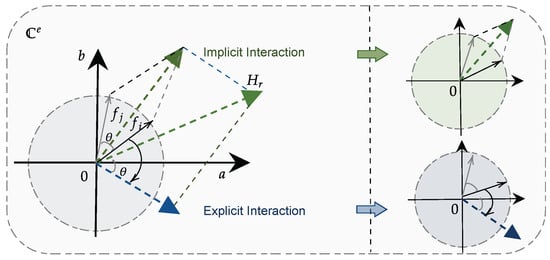

4.1.2. Feature Interaction

Applying a prediction model in CTR after embedding features is straightforward; however, feature interactions (i.e., conjunctions between features) are crucial for improving prediction performance. Pairwise feature conjunctions can be captured utilizing inner products through factorization machines (FMs) [24]. Due to the success of FMs, many researchers have concentrated on capturing the conjunctions among features from various perspectives, including inner and outer products [25,26], cross-networks [27], bi-interactions [28], explicit and implicit feature interactions [29], graph neural networks [30], convolutions [31] and circular convolutions [32], bilinear interactions [33], self-attention [34], and hierarchical attention [35,36]. Several researchers have recently explored ways to merge inner and outer feature interactions with fully connected layers.

4.1.3. Evaluation Metrics

CTR prediction is a binary classification task (i.e., there are two classes: click or not); therefore, the common performance metric used is cross-entropy loss, which is denoted as:

where the training set consists of S samples and has the ground truth labels l and an estimated click probability . The estimated click probability can be represented as , where denotes the function of the model and i represents the input features. The sigmoid function that maps to can be denoted as . The core concept of predicting CTR relies on creating the model function and optimizing the parameters from the training data .

Another standard metric utilized to assess the likelihood of a randomly selected positive instance being classified as larger than a randomly chosen negative instance is the area under the ROC curve (AUC). A higher AUC is directly correlated with superior CTR prediction performance. This metric serves as a strong indicator of the predictive model’s accuracy and effectiveness. Importantly, as the user base increases, an increase in the AUC of 0.001 is significant for the CTR prediction task when deployed in industries [37].

4.1.4. Statistical Information on Datasets

Most of the surveyed research papers evaluate their methods using two publicly available datasets: Criteo, Avazu, MovieLens-1M [38], and Frappe. These datasets include real click logs in production and comprise tens of millions of instances, enabling CTR prediction models to be trained and efficiently deployed in industrial settings. Table 1 lists the statistical information of these datasets.

Table 1.

Statistics of three well-known CTR prediction datasets.

The Criteo dataset captures data on advertisement clicks gathered over a period spanning more than a week. The dataset contains 13 numerical and 26 categorical feature fields. The number of samples is approximately 49 million. The Avazu dataset consists of advertisement clicks obtained over a period of approximately ten days. The dataset contains 23 categorical feature fields and approximately 40 million samples. The MovieLens-1M dataset consists of seven feature fields and approximately 740,000 instances. The frappe dataset consists of 288,609 and 10 feature fields.

4.1.5. Prediction Models

The CTR prediction task is known to involve large-scale data. Thus, logistic regression (LR) [39] is commonly applied or utilized as a baseline model because of its simplicity and computational efficiency. Other enhanced conventional machine learning models, such as FM, field-aware FM, and gradient boosting, are adopted for CTR prediction tasks to improve computational efficiency and nonlinear expressiveness [40]. Recently, deep learning has been integrated into CTR prediction to enhance performance. Methods using recurrent neural networks (RNNs) can capture user behavior and directly extract user interests from time sequence data instead of aggregating interest vectors. For example, a model based on an RNN [41] predicts user preferences from their past click-through behavior. Additionally, an attentive capsule network (ACN) technique [42] was developed to address users’ diverse interests. To enhance performance, targeting advertisement segment groups is a highly effective technique for efficiently delivering customized ads to specific segments [43].

4.1.6. Imbalanced Data Issue

In machine learning, class imbalance is a common issue [44]. When there is an imbalanced distribution of classes in the dataset, the machine-learning model tends to favor the majority class samples over the minority class samples. Therefore, this behavior can lead to a biased output favoring the dominant class. Due to the model’s disregard for the class with fewer samples, the reliability of its performance is questionable. Thus, considerable research proposals have recently concentrated on class imbalance because of the negative effect that class imbalance might have on learning and prediction processes.

In the CTR prediction task, class imbalance techniques are mature enough and can be categorized into three categories: algorithm-level techniques, sampling methods, and ensemble methods.

Algorithm-level techniques are methods that isolate the classifier from the skewed distribution or when traditional machine learning models are adjusted and bound to a cost variable or weight [45]. Other studies have focused on addressing the issue of class imbalance at the algorithm level [46].

Sampling methods employ several steps during training to scale the class distribution by tightening samples from the majority class or appending more samples to the minority class [47]. The sampling methods are typically applied during the data preprocessing phase. These techniques involve redistributing training data from multiple classes through resampling [48]. To balance the imbalanced class, it is necessary to alter the data layout; hence, some studies resample the data to modify the instances of the analog distribution to enhance the model’s performance [49]. Resampling methods can be categorized into three classes:

- 1.

- Undersampling strategies maintain meaningful data for the learning process while ignoring instances from the majority class until each class number of instances is approximately equal. Nevertheless, when undersampling is applied to a dataset, it is expected that certain meaningful instances for the training model may be overlooked. Various undersampling methods utilize several filtering fundamentals, including the following:

- (a)

- Random undersampling is a straightforward technique that randomly discards samples from the majority class, effectively balancing the dataset [50].

- (b)

- The edited nearest neighbor (ENN) technique checks each sample against the others via k-nearest neighbors (KNN), discarding improperly identified instances and updating the dataset with the remaining samples [51].

- (c)

- The neighborhood cleaning rule (NCR) is a technique that considers the three nearest neighbors for each sample. It removes the sample that belongs to the majority class and is misclassified by its three nearest neighbors. Furthermore, the samples of the majority class that are close to an instance of the minority class and are misclassified by their three nearest neighbors are eliminated [52].

- (d)

- Near miss is a technique that selects majority instances based on their proximity to minority instances, specifically those with the least average distances from the three nearest minority samples [53].

- (e)

- The instance hardness threshold (IHT) identifies which samples from the majority class are most likely to be misclassified or redundant during training and excludes them to balance the dataset [54].

- (f)

- The Tomek links method relies on a pair of instances that belong to distinct classes and that are neighbors. These are the links for which the method considers noisy or boundary samples; thus, the boundary sample that belongs to the majority class is removed [55].

- (g)

- One-sided selection (OSS) uses the one-nearest neighbor (1-NN) technique to identify misclassified samples from both distributions (i.e., the minority class and the majority class). The Tomek links method eliminates noisy and borderline majority class samples [56]. The condensed nearest neighbor rule is then employed to remove instances that belong to the majority class, are redundant, and are distant from the decision board to form a constituent subset of the dataset.

- 2.

- Oversampling techniques provide an equal class distribution while preserving class borders; these techniques are responsible for constructing new instances from the minority class to balance the dataset. Due to the replication or synthesis of samples, these techniques are susceptible to overfitting [57]. Oversampling techniques include the following:

- (a)

- Random oversampling is the oldest oversampling technique. It replicates random instances from the minority class to equalize or approximates the majority class [58].

- (b)

- The synthetic minority oversampling technique (SMOTE) is a well-known oversampling approach. It is based on the hypothesis that the feature space of minority class samples is similar. For each minority class sample , SMOTE identifies its k nearest neighbors, and then one of the searched neighbors is randomly chosen as ; these two types of samples and are called seed instances. The method then generates a random number between . A newly synthesized instance is artificially assembled as:In contrast to the random oversampling method, SMOTE can effectively prevent overfitting.

- (c)

- Borderline SMOTE is a technique that identifies the borderline instances repeatedly misclassified by their nearest neighbors and then applies the SMOTE technique to them [59].

- (d)

- Adaptive synthetic sampling is another oversampling method that distributes the minority class samples based on their learning difficulty, constructing more synthetic instances for the minority samples that are harder to learn. Thus, the method enhances the learning concerning the data distributions in two ways:

- Decreasing the bias created by the imbalanced class is crucial for ensuring accurate results.

- The classification decision boundary is adaptively shifted toward difficult-to-learn instances, thus enhancing the learning performance.

- 3.

- Hybrid sampling methods merge oversampling and undersampling techniques to extrapolate a balanced dataset. Although these approaches demonstrate their effectiveness in a way that surpasses individual oversampling/undersampling techniques, they may still exhibit the drawbacks of these methods, such as the loss of significant information in undersampling and overfitting in oversampling. To address these drawbacks, two effective approaches have been introduced:

- (a)

- SMOTE-ENN method is introduced to perform linear interpolation between neighboring minority class instances to produce new minority class instances, effectively resolving the issue of substantial data overlap compared with random oversampling [60]. The k nearest neighboring instance of the same class C is found for each sample that belongs to the minority class and can be represented as . Then, i samples can be selected from class C and represented as . Random linear interpolation is achieved on the lines between and . Thus, the synthetic instance can be represented as:where is the synthetic sample, o is a random coefficient between 0 and 1, and is a vector that contains the synthetic samples.

- (b)

- SMOTE-Tomek is a hybrid approach that combines an oversampling method (SMOTE) and an undersampling method (Tomek links) to avoid the drawbacks of both methods [61]. The approach uses SMOTE to address overfitting issues when increasing the minority class instances. It applies Tomek links to reduce noise by removing samples that consist of pairs of data points from different classes [62].

Ensemble methods are employed to address data internally and adjust the category distribution of the instance. These techniques utilize data-level methods to change the learning process internally via well-known classifiers. This behavior prevents the model from disproportionately favoring the majority class during prediction. The notable resampling ensemble methods can be categorized as follows:

- 1.

- Balanced random forest constructs a balanced dataset from which each tree is assembled. In particular, a bootstrap instance is drawn from the minority class in each iteration. The same number of cases is randomly drawn with replacements from the majority class. A classification tree is generated from the data without pruning (i.e., to the maximum size). Finally, the final prediction is made by aggregating the ensemble (i.e., a certain number of trees) predictions [63].

- 2.

- Balanced bagging uses random undersampling and bagging to address imbalanced data. After resampling each data subgroup, balanced bagging employs integrated estimators.

- 3.

- Easy ensemble uses the AdaBoost algorithm to train multiple classifiers on a proportional subset of the data. The outputs from each classifier are then combined to create an ensemble method [64]. For the majority class samples and the minority class samples , the undersampling technique randomly selects a portion from . The selected subset of samples is usually equal to the minority samples (i.e., ). Thus, various subsets are independently sampled from the majority training set . A classifier constructed by AdaBoost is trained utilizing each subset and the complete set of the minority class . The constructed classifiers are combined to make the final decision. The learning process is as follows:where is the number of iterations, are the weak classifiers, are the corresponding weights, and is the ensemble threshold. This learning process continues until and then outputs the ensemble, which is denoted as follows:An easy ensemble trains the data in an unsupervised manner.

- 4.

- The balance cascade is similar to the easy ensemble. However, it investigates in a supervised fashion. Once the first classifier completes the training process, if a given instance is correctly classified by as part of , then it is likely that is redundant in the majority class. Therefore, some majority class instances would be removed to balance the dataset.

- 5.

- Random undersampling boost utilizes both boosting and sampling to balance the data. This method uses the random undersampling technique in each round of boosting [65]. The algorithm is given a set of samples S, represented as , which includes a minority class . Initially, the algorithm initializes each instance weight as , where z is the number of instances in the training. The H weak learners are trained in an iterative manner as follows. During training, the algorithm applies random undersampling in each iteration to the majority class samples until a specific percentage of the new subset of data , which belongs to the minority class, is obtained. The new subset of data has a new weight distribution . The base learner then receives and to construct the weak hypotheses . The pseudo-loss is then calculated for the original data and weight distribution as follows:The update parameter for the weight is then calculated as:Then, the upcoming iteration weight distribution is revised as follows:The upcoming iteration weight distribution is normalized as follows:Once the iterations are completed, the final hypothesis is acquired as a weighted vote of the weak hypotheses as follows:

4.2. CTR Prediction Utilizing Text

A commercial search engine entices user clicks by pairing search queries with multiple ads (a.k.a. sponsored search). Recent studies have suggested the use of deep learning models to detect similarities between texts and explore the application of deep learning in web searches. Additionally, many studies have suggested implementing the learning process at the word or character level to predict customers’ click-through behaviors [66].

4.2.1. Sponsored Search

Sponsored search is a feature that displays advertisements next to organic search results. The sponsored search ecosystem comprises three crucial components: the user, the search platform, and the advertiser. The search platform aims to display the advertisement that best matches the user’s intent [67]. The key concepts of the ecosystem can be interpreted in the following way:

- Query: the text entered by the user into the search engine website’s search textbox.

- Keyword: the text provided by the advertiser to match a user’s query that includes relevant product-related words.

- Title: the sponsored advertisement label chosen by the advertiser to capture the user’s attention.

- Landing page: the web page the user reaches after clicking on the corresponding advertisement, where the product is featured [68].

- Match type: an option provided to advertisers to specify how closely a user’s query should match the keyword. There are typically four match types: broad, exact, phrase, and contextual [69].

- Campaign: a set of ads with similar settings, including location targeting and budgets, that are often employed to classify products.

- Impression: an instance of a displayed advertisement by a given user that is usually saved in a log file along with other information and is available at runtime.

- Click: indicates whether a user clicks an impression. It is saved in the same log file as the impression and is available at runtime.

- Click-through rate: the total number of clicks divided by the total number of impressions.

- Click prediction: a crucial capability of the search platform. It forecasts the likelihood of a user clicking on an advertisement for a specific query.

Sponsored search is one of many web-scale applications. This approach remains challenging to implement due to the richness of the problem space, the various feature types, and the small data volume. However, features play an integral role in designating sponsored searches.

- 1.

- Sponsored search features: is available at runtime once an impression is clicked (i.e., an advertisement is displayed) and is provided offline to train the model. Features comprise two types:

- (a)

- Individual features: Each feature in the dataset is represented as a vector. Text features such as they query, keyword, and title should be converted into a suitable format for the model, such as a tri-letter gram with a given number of dimensions. Categorical features such as match type could be represented using a one-hot encoder, where, for example, the exact match could be represented as , the phrase match represented as , the broad match represented as , and the contextual match represented as . The sponsored search paradigm contains millions of campaigns, and each campaign usually has a unique ID, so converting the campaigns’ IDs into a one-hot vector (e.g., named campaign ID) would significantly increase the model’s size. Thus, using a pair of companion features to solve this issue is better. One feature consists of campaign IDs; the other represents only the top (e.g., 20,000 campaigns) with the highest number of clicks (the 20,000th cell starts with the remaining campaigns starting from an index of 0); the second feature should be a one-hot vector. Different campaigns can be represented by different numerical features that reflect the corresponding campaign’s data, such as the CTR. In some contexts, such features are called counting features in CTR prediction research. The features explained here are sparse features except for the counting features.

- (b)

- Combinatorial features: Given features and , a combinatorial feature can be represented as . Both sparse representation and dense representation are common in combinatorial features. For example, the product of the campaign ID and match type feature (campaign ID × match type) could be represented as sparse and placed into a one-hot vector of 80,004 (i.e., 20,001 × 4). An instance of a dense representation is the total number of advertisement clicks for a combination of campaign and match types. The dense representation dimension in this situation is identical to its sparse counterpart.

- 2.

- Semantic features: The raw text features (e.g., query) are mapped into semantic space features using a machine learning architecture (e.g., the DNN model) [70]. The raw text features are fed into the model as a high-dimensional term vector, such as a document, before the normalization step or counts of terms found in the query are applied. The model produces a vector in a semantic feature space with low dimensions. A web document is ranked by the model as follows:

- (a)

- It is utilized to map term vectors to their semantic vector counterparts.

- (b)

- It calculates the relevance score between a document and a query using the cosine similarity of their semantic vectors.

Let us denote v as the input (i.e., term vector), o as the output (i.e., semantic vector), as the hidden layers (i.e., usually located between the input and output layers in a neural network), as the n-th weight matrix, and as the n-th bias. Therefore, the web document ranking would be performed through the model as follows:where the function is used at the output layer as an activation function, and the hidden layers are represented as:The score of semantic relevance between a given query q and a specific document d can be estimated as follows:where is the query term vector and is the document vector. Therefore, when a user enters a query into the search engine text box, the documents are saved by their semantic relevance scores.The term vector size can be visualized as bag-of-word features. It is identical to the vocabulary employed to index the web document collection. In real-world web search tasks, the vocabulary size is significantly high. Thus, when the term vector is used as input, the input layer size of the neural network becomes uncontrollable, making it inefficient to train the model and deduce a meaningful interpretation of the input. Thus, various methods have been implemented at the word and character levels to address this issue, as discussed in the following subsection.

4.2.2. Word-Level and Character Level CTR

The research community has investigated the following two alternatives to address the issues that face text-level models.

- 1.

- Some research conveys the learning process from the text level to the word level to address the issues encountered by text-level models. The task is similar to that of text-level models (i.e., word-level models (e.g., convolutional neural networks (CNNs)) trained to learn the clicked and nonclicked sponsored impressions and output the prediction of the thought query) [66].These models process word vectors and are pretrained via external sources such as Wikipedia [71] or search logs. The search for the best way to train word vectors is still an intriguing open issue. Successful proposals with similarities in the processing phase have been introduced. Let us denote a given word as w, the word vector dimension as , the length of the fixed query as , and the length of the fixed advertisement as . Therefore, the query matrix dimension is , and the advertisement matrix dimension is .

- 2.

- The character-level prediction models process characters instead of texts or words. More formally, the input character is denoted as c, and the length of the fixed query is represented as ; thus, queries can be defined as a binary of size , which is considered a matrix of one-hot encodings. The query sequence is composed of characters. Each character in the input matrix corresponds to a tuple of size . A particular tuple consists of only one unique entry. The objective is to set the entry to 1 at the position corresponding to the dimension implied by the supposed character at the query while setting all other entries of the same tuple to 0. Therefore, the query matrix includes a value of 1, which denotes the query length ; hence, it also indicates the number of tuples in the matrix. Thus, the character in the query is encoded by the tuple in the matrix. On the one hand, if the length of the query is less than , the zero-padding mechanism is employed to fill the remaining tuples with zeroes.On the other hand, if the length of the query is more significant than , the characters that appear after the character of the query are eliminated. An identical concept is applied to represent advertisements. The textual advertisement encapsulates three essential elements: the advertisement’s display uniform resource locator (URL), description, and title. These elements are initially combined into a unique sequence and then encoded into a matrix containing one-hot encodings of size .

5. CTR Prediction Methods

CTR prediction methods can be categorized into two main categories: CTR prediction that utilizes text and CTR prediction that uses multivariate data. A comparison of these two categories reveals their respective strengths and weaknesses. Text-based models effectively leverage semantic relationships present in user queries and behavioral data, rendering them particularly advantageous in personalized contexts and cold-start situations. Nevertheless, these models encounter challenges such as out-of-vocabulary issues, data sparsity, and increased latency during inference, especially when handling large and evolving text corpora. In contrast, multivariate data-driven models—especially graph-based and feature-interaction-based methods—are highly scalable, can efficiently process large-scale categorical and numeric features, and typically deliver lower inference times due to the structured nature of their inputs. These models demonstrate superior performance in large-scale industrial applications that require both efficiency and speed, particularly when managing substantial volumes of data. However, their effectiveness may be compromised in situations that necessitate thorough feature engineering or precise relationship modeling. Furthermore, they may exhibit reduced efficacy in contexts where structured data is either minimal or noisy.

5.1. Retrieval of Items for Search Engine Methods That Utilize Text

This subsection reviews the approaches that predict CTR that utilizes text. Table 2 compares these approaches with various metrics. Grbovic et al. [72] investigate the semantic embedding for ads and queries by mining a search session that consists of clicks on advertisements, queries, search links, skipped ads, and dwell times and proposes an approach called the search embedding model (search2vec). They leveraged cosine similarity between the acquired embeddings to assess the similarity between a query and an advertisement. The main issue of the proposed method is its reliance on the whole query level and advertisement identifier level, which means that the technique cannot differentiate two queries with similar content if they appear in the same context in search sessions. The method also suffers from the out-of-vocabulary issue, as many search queries are new, and advertisers continually update their advertisements. Other approaches, such as DeepIntent [73], a method that combines attention and RNNs to bind queries and ads to real-valued vectors, have tried to solve these issues. The authors utilize the cosine similarity mechanism to investigate the query and ad vector likenesses. The authors apply their method at the word level, making their approach less sensitive to the issue of out-of-vocabulary words.

Table 2.

Comparison of the approaches that utilize NLP models to predict the CTR using texts.

Regarding the sponsored search task, Huang et al. [70] propose n-gram-based word hashing letter trigram-based word hashing with DNN (L-WH DNN), which differs from traditional one-hot vector encoding, as it represents a query or document using a vector with reduced dimensionality. Compared with character-level one-hot encodings, this method has much higher dimensionality. The dimension of the character-level encodings corresponds to the input (i.e., the number of characters) multiplied by the alphabet size. Therefore, it is much lower than the vector dimension that utilizes letter trigrams. Additionally, unlike character-level encoding, word hashing encoding sacrifices sequence information. Shen et al. [74] utilize queries of word-n-gram representations to learn the query-document similarity using a convolutional latent semantic model (CLSM). The authors convert individual words or word sequences into low-dimensional vectors. They then use a max pooling operation to select the highest neuron activation value across all word and word sequence features. The authors also make effective use of negative sampling on search click logs. This approach enhances the quality and relevance of the data, leading to more accurate results.

Alves Gomes et al. [75] introduce a two-stage method that includes a customer behavior embedding representation and an RNN. The authors first utilize customer activity data to train a self-supervised skip-gram embedding; the output of this phase is employed to encode the consumer sequences in the second phase. The production of the second phase is utilized to train the model. This method differs from traditional methods, which use comprehensive end-to-end models to train and predict click-through. The authors evaluate their method via an industrial use case and a publicly available benchmark, i.e., the Amazon review 2018 (can be found at https://nijianmo.github.io/amazon/index.html, accessed on 18 September 2025) dataset [78]. The experimental results demonstrate the effectiveness of their approach compared with state-of-the-art methods such as the multimodal adversarial representation network (MARN) [79] and TIEN [80] in terms of the F1 score. The proposed method yields an F1 score of 79% on the public dataset, outperforming the baseline models by more than 7%. This result indicates that applying an extensive end-to-end model to present an accurate method is not always necessary; some light and well-developed models outperform complicated and computationally intensive models. The exceptional performance of this approach is attributed to the representation of the customer, which is derived from self-supervised pretrained behavior embeddings. However, this method relies on obtaining personal information that is difficult to acquire due to legal and government restrictions. Moreover, traditional approaches that use manually designed features can be easily understood by experts in the field. Therefore, end-to-end methods and decoupled models such as this approach are difficult to explain because of the black-box nature of deep learning architectures. Thus, there is a need to incorporate eXplainable AI (XAI) with such approaches to increase explainability and interoperability. Cui et al. [76] introduce a recommendation system that uses the sentiment knowledge graph attention network (ASKAT). The authors obtain aspectual sentiment features from reviews via an enhanced aspect-based sentiment analysis technique. They develop a collaborative knowledge mapping method that enhances sentiment to make the most of the information gathered from reviews. They generate an innovative graph attention network equipped with sentiment-aware attention capabilities, enabling the effective collection of neighbor information. The authors evaluated their method with three publicly available datasets: Amazon book (can be found at: http://jmcauley.ucsd.edu/data/amazon, accessed on 31 July 2025), Movie (can be found at: https://developer.imdb.com/non-commercial-datasets/, accessed on 31 July 2025), and Yelp (can be found at https://www.yelp.com/dataset, accessed on 31 July 2025). The experimental evaluation demonstrates the superiority in terms of accuracy and personalized recommendation of the proposed method over the baseline state-of-the-art methods, such as the knowledge graph attention (KGAT) network [81], knowledge-based embeddings for explainable recommendation [82], knowledge graph convolutional networks (KGCNs) [83], and the LightGCN [84], on two recommendation tasks (i.e., CTR prediction and top-k recommendation).

Edizel et al. [66] introduce two methods (i.e., at the word and character levels named deep word match (DWM) and deep character match (DCM), respectively) based on a CNN and use text content to forecast the CTR of a query-ad pair. The proposed method utilizes texts represented in the query-ad pair, feeds them into the architecture as input, and outputs the CTR prediction. The authors conclude that the character-level method is more effective than the word-level method because it learns the language representation when trained on sufficient data. The authors evaluate their two methods via a commercial search engine’s real-world data of 1.5 billion query ad pairs. The proposed methods are compared with baseline methods such as feature-engineered logistic regression (FELR) [85] and Search2Vec [72] and outperform them in terms of AUC. Geng et al. [77] present an approach named behavior aggregated hierarchical encoding (BAHE) to improve the efficiency of LLM-based CTR prediction. The proposed approach dismantles the customer behavior encoding from the interactions of interbehavior. The authors use pretrained shallow layers to avoid duplicating the encoding of the same user behaviors. These layers extract detailed user behaviors from long sequences and store them in a database for later use. Hence, deeper trainable layers in large language models facilitate complex behavior interactions, thus creating comprehensive user embeddings. This difference permits the independent training of high-level customer representations from low-level behavior encoding, thus significantly lowering computational complexity. Then, the authors combine the refined customer and processed item embeddings in the CTR model to calculate the CTR scores. The experimental results show a significant improvement (i.e., five times) over similar approaches, including the unified framework for CTR prediction (Uni-CTR) [86], fine-grained feature-level alignment between ID-based models and pretrained language models (FLIP) [87], and the knowledge augmented recommendation (KAR) framework [88], particularly when using longer customer sequences for training time and memory usage compared with CTR models based on LLMs. The authors successfully implemented this approach in a real-world operational environment. This implementation has enabled them to achieve daily updates of 50 million CTR data points on 8 A100 GPUs. Consequently, this advancement has made large language models (LLMs) a viable and practical option for industrial CTR prediction.

Text-based approaches (e.g., Search2Vec [72], DeepIntent [73], BAHE [77]) highlight the importance of leveraging semantic embeddings of queries, ads, and reviews. These approaches are efficient in resolving the cold-start concerns of sparse settings. However, they encounter issues regarding scalability when dealing with rapidly changing ad corpora and out-of-vocabulary problems. The key takeaway is that text-only solutions remain valuable for semantic personalization but must often be combined with other modalities or structural data for robust industrial performance.

5.2. Showing Ads or Product Solutions Using Multivariate Data

This section surveys solutions that utilize various data types to predict the CTR. These solutions can be classified into graph-based approaches, feature-interaction-based methods, customer behavior techniques, and cross-domain approaches.

5.2.1. CTR Prediction Utilizing Graph Models

This subsection presents solutions that utilize graph models to predict the CTR. A comparison of these approaches using different criteria is shown in Table 3. Liu et al. [89] introduce an approach based on graph convolutional neural networks named graph convolutional network interaction (GCN-int). This approach facilitates the learning of the hard-to-comprehend interaction between various features, offers a decent interaction representation across high-order features, and enhances the explainability of feature interaction. The method is evaluated on two public datasets (i.e., Criteo and Avazu) and a customized dataset comprising internet protocol television (IPTV) movie recommendation records. The experimental results demonstrate the effectiveness of the proposed method in terms of accuracy and efficiency compared with existing methods, such as the attentional factorization machine (AFM) [90] and DeepCrossing [67]. However, the proposed method omits the weights of interactions between features and instead performs feature interactions with identical weight values. Zhang et al. [91] propose a graph fusion reciprocal recommender (GFRR) approach, which can learn reciprocal information circulation across customers to predict pair matching. This approach can also learn structural information about customers’ historical behaviors and is based on a graph neural network (GNN). Compared with previous reciprocal recommender systems (RSSs) that concentrate only on reply prediction, this approach focuses on both transmit and response signals. Additionally, the authors present negative instance mining to investigate the impact of various kinds of instances on recommendation precision in real-world settings. The authors validate their approach on a real-world dataset, which yielded decent results compared with those of other previous works, such as latent factor for reciprocal recommender (LFRR) systems [92] and deep feature interaction embedding (DFIE) [93], with prediction results of 73.15% for the AUC and 26.01% for the average precision, good response prediction results of 68.95% for the AUC and 23.02% for the average precision, and proper fusion reciprocal prediction results of 71.26% for the AUC and 23.95% for the average precision. However, the only information utilized is the user’s profile and historical behavior; hence, user embeddings could be enriched with more information, such as social networks and interest features, to improve the recommender system.

Table 3.

Comparison of the approaches that utilize graph models to predict the CTR using multivariate data.

Existing methods usually overcome the cold start and sparsity issues in collaborative filtering using side information such as knowledge graphs and social networks. Yang et al. [94] address these limitations similarly: they introduce a knowledge-enhanced user multi-interest modeling (KEMIM) approach to act as a recommender system. The authors initially use a historical interaction between customers and items, which serves as the main component of the knowledge graph. They then create a customer’s specific interests and use the connection path to broaden their potential interests by leveraging the relationships within the knowledge graph. They analyze changes in customer interest and use a capability to understand the customer’s attention to each past interaction and potential interest. The authors subsequently concatenate the customer’s interests with the attribute features to address the cold start issue in an effective manner. The framework consists of structured data from a knowledge graph, which can describe the user’s characteristics in detail and provide understandable recommendation results to users. The framework is evaluated extensively on three publicly available datasets for two distinctive research problems: top-k recommendation and CTR prediction. The experimental results show that the method outperforms the state-of-the-art models on two datasets (i.e., Book-crossing [102] and Last.FM), including knowledge-enhanced recommendation with feature interaction and intent-aware attention networks (FIRE) [103], hierarchical knowledge and interest propagation networks (HKIPNs) [104], and collaborative guidance for personalized recommendation (CG-KGR) [105]. However, the performance of this method could be improved, particularly knowledge extraction in the knowledge graph-based recommender system, and an explainable recommendation model could be introduced. Li et al. [95] introduce a graph factorization machine (GraphFM) to illustrate features in the graph configuration. Specifically, the authors create a capability that chooses meaningful feature interactions and designates these interactions as edges between features. The framework then incorporates the FM’s interaction function into the GNN, represented by the feature aggregation mechanism. The feature aggregation mechanism uses stacking layers to model the arbitrary-order feature interactions on the graph-structured features. The authors validate their method with three public datasets (i.e., Criteo, Avazu, and MovieLens-1M) and compare it with several previous methods, such as higher-order FMs (HOFMs) [106], adaptive factorization networks (AFNs) [107] and FM2 [108]. The proposed method outperforms the other techniques in terms of logloss and AUC.

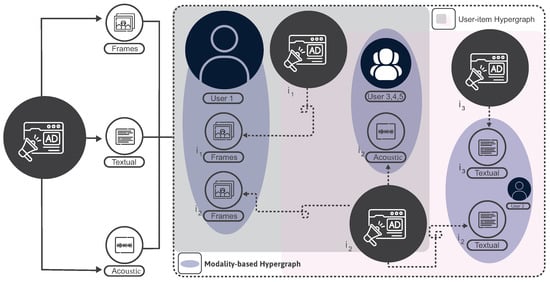

The surge in multimodel sharing platforms such as TikTok has ignited heightened fascination with online microvideos. These concise videos contain diverse multimedia elements, including visual, textual, and auditory components. Thus, merchants can enhance the user experience by incorporating microvideos into their advertising strategies to their advantage. In many CTR prediction studies, item representations rely on unimodal content. A few studies concentrate on feature representations in a multimodal fashion; one of these approaches is hypergraph CTR (HyperCTR), which was proposed by [96]. The hypergraph neural network inspired the hypergraph CTR approach. A hypergraph is an extension of an edge in graph theory [109,110] that can link more than two edges. It guides feature representation learning in a multimodal manner (i.e., textual, acoustic, and frames) by leveraging temporal user-item interactions to comprehend user preferences. Figure 4 depicts an example of applying the proposed method, where users and have interacted with various microvideos, for example, videos and . A microvideo (e.g., ) might be watched by more than one user (e.g., , , ) because of the exciting soundtracks. A group-aware hypergraph can be created using these signals, consisting of various users interested in the same item. This interaction enables the proposed framework to connect multiple item nodes on a single edge via hyperedges. The hypergraph’s unique ability to utilize degree-free hyperedges allows it to capture pairwise connections and high-order data correlations effectively. This ability facilitates CTR prediction for microvideo items, as it can generate model-specific representations of users and microvideos to capture user preferences efficiently. The authors also develop a mutual network for time-aware user-item pairs to learn the correlation of intrinsic data [111] (this approach is inspired by the success of self-supervised learning (SSL) [112]), which addresses multimodal information. This enrichment process aims to enrich each user-item representation with the generated interest-based user and item hypergraphs. The authors validate their proposed technique with three publicly available datasets: Kuaishou [113], Micro-Video 1.7 [114], and MovieLens-20M. The proposed method is compared with several state-of-the-art techniques, such as user behavior retrieval for CTR prediction (UBR4CTR) [115] and automatic feature interaction selection (AutoFIS) [116]. The results demonstrate its superiority over these methods.

Figure 4.

The constructed hypergraphs for user preferences.

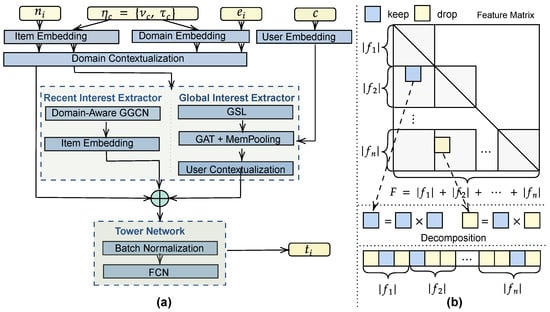

As shown in Figure 5a, Ariza-Casabona et al. [97] propose a multidomain graph-based recommender (MAGRec), which uses graph neural networks to learn a multidomain representation of sequential customers’ interplays. Specifically, the customer c, the chosen user history representation , the target item domain , and the target item itself are fed as inputs into the model. The graph comprises edge features, denoted by target and source domains, and node features, denoted by item embeddings. The authors employ temporal intradomain and interdomain interaction capabilities that act as contextual information and are equipped with their method. In a specific multidomain environment, the relationships are efficiently captured via two graph-based sequential representations that work simultaneously: a general sequence representation for long-term interest and a domain-guided representation for recent user interest. The proposed method effectively addresses the negative knowledge transfer issue and improves the sequential representation. The method is evaluated on the Amazon review dataset [78], which outperforms baseline approaches such as the full graph neural network (FGNN) [117] and multigate mixture-of-experts (MMoE) [118].

Figure 5.

(a) The mechanism used to capture global and local customer preferences utilizing a multidomain customer history graph representation. (b) The technique utilized to unify feature selection and its corresponding interaction to either include or exclude the feature from the feature set.

Sang et al. [98] introduce a framework named the adaptive graph interaction network (AdaGIN), which consists of three mechanisms: a multisemantic feature interaction module (MFIM), a graph neural network-based feature interaction module (GFIM), and a negative feedback-based search (NFS). The purpose of the MFIM is to obtain information from various semantic domains, while the purpose of integrating the GFIM is to combine information across features and evaluate their significance explicitly. The framework uses the NFS capability to employ negative feedback to increase model complexity. The proposed method is validated on four publicly available datasets: Avazu, Frappe (which can be found at http://baltrunas.info/research-menu/frappe, accessed on 31 July 2025), Criteo, and MovieLens-1M (can be found at https://grouplens.org/datasets/movielens, accessed on 31 July 2025). The extensive evaluation proves that the proposed approach is more effective than previous methods in terms of logloss and AUC.

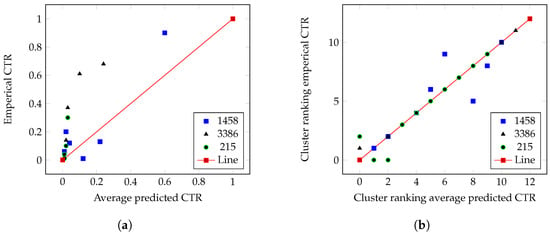

Shih et al. [99] introduce a new evaluation metric called the cluster-aware ranking-based bidding strategy (CARBS). This metric evaluates the worth of each bid request by comparing it to a cluster of similar bid requests via a measure called the cluster expected win rate (CEWR). Bid requests with similar predicted CTRs are grouped into clusters using a two-step clustering mechanism to consolidate matching information. The CARBS sets a clear affordability threshold and prioritizes spending to ensure optimal efficiency and cluster ranking to spend the budget wisely and efficiently. The results of the CARBS are evaluated with CEWR, which proves that it can correlate and that its performance is better than that of inaccurate individual CTR predictions. The authors also introduce a bidding strategy based on reinforcement learning to modify the bid request expected win rate (BEWR). It is a hybrid mechanism that combines CEWR and the dynamic market to derive the final bid prices. As shown in the figure, the authors evaluate their method with three real advertising campaigns, confirming its effectiveness. In Figure 6a, the correlations between the average predicted CTR after utilizing the clustering techniques proposed by [119,120] are depicted alongside their empirical CTR counterparts for three advertising campaigns (1458, 3386, and 215). Ideally, if the average predicted CTR equals its empirical counterpart, the data points indicating clusters will lie on the diagonal dashed line. However, it is evident that in most cases, the predicted CTRs differ significantly from their empirical counterparts, suggesting that the CTR predictions are not correlated with the actual environments. In Figure 6b, the correlations between the average predicted CTR after applying the proposed clustering method are shown alongside their empirical CTR counterparts on the same advertisement campaigns, which proves the effectiveness of the method, as most data points lie on the ideal line. Even in a hard-to-predict campaign with an exceptionally tight budget, the AUC is 0.73, representing an improvement of approximately 33% and indicating the effectiveness of this approach.

Figure 6.

Cluster rankings using the average predicted CTR shown on the x-axis against cluster rankings using the empirical CTR shown on the y-axis. (a) The correlations between the average predicted CTR after utilizing the clustering techniques proposed by [119,120] are depicted alongside their empirical CTR counterparts for three ad campaigns. (b) The correlations between the average predicted CTR after applying the clustering method proposed by [99] are depicted alongside their empirical CTR counterparts for the three advertisement campaigns.

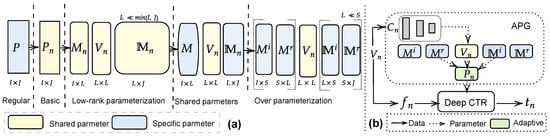

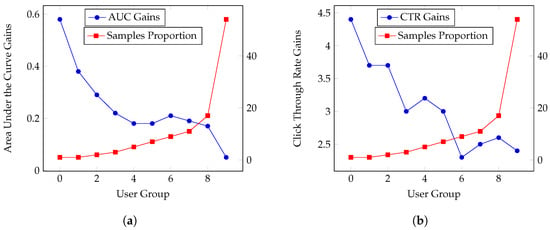

Conventional CTR models utilize deep learning to train the model statically, and the network architecture parameters are identical across all the samples. Hence, these models face challenges in characterizing each sample, as they may stem from diverse underlying distributions. This limitation significantly impacts the CTR model’s representation capability, resulting in suboptimal outcomes. Yan et al. [101] developed a new universal module known as adaptive parameter generation (APG), which aims to address this issue by dynamically generating parameters for CTR models based on different samples. As shown in Figure 7a, when the authors add certain parameters, the model captures specific patterns for distinctive samples, particularly long-tailed samples. This figure analyzes the effects of different samples when these parameters are used. The participants were divided into ten groups based on frequency. The number of participants in each group is the same, and the frequency increases from the first group to the tenth group. More formally, the basic version of the method dynamically generates parameters via the distinct condition ; hence, , where G denotes the adaptive parameter generation network. The produced parameters are subsequently fed into the deep CTR models, which are represented as , where is the neural network and represents the input features. The authors introduce three types of techniques to design different conditions (i.e., groupwise, mixedwise, and selfwise). Once the conditions are obtained, the framework utilizes an MLP as to produce parameters that rely on the three conditions, where are the adaptive parameters, is the input-aware condition, and e is the reshape operation responsible for reshaping the vectors generated by the multilayer perceptron (MLP) into a matrix form. Consequently, the CTR model that utilizes APG can be represented as , where denotes the activation function. This basic version is time and memory inefficient and not particularly effective in pattern recognition. Thus, the authors present three versions to solve these issues: low-rank parameterization, shared parameters, and overparameterization. Low-rank parameterization uses the low-rank subspace to optimize the task. The authors suggest that the adaptive parameters contain a low intrinsic rank; thus, they suggest representing the parameters of the weight matrix as a low-rank matrix. This matrix is generated by three different matrices (i.e., ), and the low rank can be represented as . In the shared parameters version, the framework decomposes the weight matrix into three submatrices (i.e., , , and ). The authors introduce the overparameterized version, which enlarges the capacity of the model by increasing the number of shared parameters. Two matrices are introduced in this version to replace the shared parameters proposed in the previous version, i.e., , where i is the i-th hidden layer, and . Figure 7b depicts the final APG framework without the decomposed feed-forwarding mechanism. The authors then evaluate the performance via AUC and CTR gains for distinctive groups. As shown in Figure 8a,b, the authors conclude that because group nine represents the participants that have the highest frequency, even though they represent only 10% of all participants, this group generates more than half of the total samples. The parameters contribute more to the performance of low-frequency participants (for example, participants in group zero), as they result in higher CTR and AUC gains. Therefore, these parameters allow low-frequency samples to adequately represent their features, leading to improved performance. This approach allows for adapting model parameters to better fit the characteristics of diverse data samples, potentially improving the model’s performance across various scenarios. The authors conducted multiple experiments to evaluate their proposed technique and incorporated the technique as a capability in several deep learning models to enhance performance. The evaluation demonstrated the effectiveness of the proposed technique in significantly improving the CTR of the deep models. Additionally, the proposed method reduced time costs by 38.7% and memory usage by 96.6% compared with a deep CTR model. Furthermore, the model was deployed in a real environment, resulting in a 3% increase in CTR and a 1% gain in revenue per mile (RPM).

Figure 7.

Different versions of APG are shown in (a), and a simplified version of the final APG framework is shown in (b).

Figure 8.

The area under the curve and the CTR gains for sample proportions in different user groups. (a) The area under the curve gains for sample proportions in different user groups. (b) The CTR gains for sample proportions in different user groups.

Graph-based approaches (e.g., GCN-int [89], GraphFM [95], HyperCTR [96]) represent the current state-of-the-art in handling large-scale structured data. They are highly effective in capturing high-order feature interactions, modeling user-item relations in non-Euclidean spaces, and delivering strong performance on industrial datasets such as Criteo and Avazu. Their scalability and predictive accuracy make them highly deployable, though preprocessing pipelines and graph construction can be complex. A clear trend is the integration of multimodal signals into graph frameworks, enabling richer representations of users and items.

Table 4 illustrates representative graph-based CTR methods. Graph-based CTR models are effective for large, structured datasets, such as Criteo and Avazu, where feature interactions significantly impact prediction accuracy. They are ideal for industrial applications that require high AUC and the handling of sparse features. However, practitioners must weigh their computational cost and engineering complexity against simpler baselines. Graph-based models have some limitations. They require extensive preprocessing and graph construction, with some models, such as HyperCTR, necessitating a substantial amount of GPU hours, which limits their scalability for real-time use. Additionally, many of these models assume uniform or static weights for feature interactions. This assumption may not be valid in the context of dynamic industrial environments, where conditions can change rapidly.

Table 4.

Summary of representative graph-based CTR methods.

The rise of multimodal data—such as text, images, and behavioral signals—is transforming CTR prediction. Traditional text-only approaches struggle to fully capture user intent. Recent studies demonstrate that integrating different data types enables models to learn more effective representations by communicating sentiment through text, showcasing product attractiveness with images, and representing interaction dynamics through behavioral logs. For example, ASKAT [76] leverages graph attention networks to combine textual sentiment features with user interaction data, whereas BAHE [77] aggregates multimodal behavioral patterns (e.g., search logs, mini-program visits, and item titles) at an industrial scale, significantly reducing redundancy in representation learning. Similarly, HyperCTR [96] uses hypergraph neural networks to merge textual, acoustic, and visual frame-level features for microvideo CTR prediction, attaining considerable improvements in AUC and log loss on datasets like Kuaishou and MovieLens.

Multimodal frameworks show that combining different modalities offers complementary benefits. They enhance content understanding by merging visual and textual embeddings, while sequential behavior models effectively manage temporal dependencies.This holistic approach strengthens CTR models, making them more robust against data sparsity, addressing cold-start challenges, and enhancing personalization in recommendations. However, the integration of these modalities also introduces computational complexities, which require extensive parallel training and sophisticated fusion techniques, such as attention-based late fusion and cross-modal transformers. Future directions point toward end-to-end multimodal representation learning with eXplainable AI (XAI) components to enhance interpretability, scalability, and industrial deployability.

5.2.2. Cross-Domain CTR Prediction Methods

This subsection introduces the approaches that transfer knowledge across domains to predict the CTR. Table 5 compares these approaches via different assessment measures. Liu et al. [121] introduced a groundbreaking approach to continual transfer learning (CTL), a field that has received relatively limited attention from researchers. CTL focuses on transferring knowledge from a source domain that evolves over time to a target domain that also changes dynamically. By addressing this underexplored aspect of transfer learning, this work (i.e., CTNet) has the potential to significantly advance how knowledge can be effectively conveyed and utilized in evolving environments. The main idea of this approach is to process the representations of the source domain as transferred knowledge for target domain CTR prediction. Thus, the target and source domain parameters are continuously reused and retained during knowledge transfer. This approach outperforms other methods, such as the knowledge extraction and plugging (KEEP) [122] method and progressive layered extraction (PLE) [123]. It has been evaluated via extensive offline experiments, where it yielded significant enhancements. It is now utilized online at Taobao (a Chinese e-commerce platform).

An et al. [124] introduce the disentangle-based distillation framework for cross-domain recommendation (DDCDR), a cutting-edge approach operating at the representational level. This approach is based on the teacher-student knowledge distillation theory. The proposed method first creates a teacher model that operates across different domains. This model undergoes adversarial training side by side with a domain discriminator. Then, a student model is constructed for the target domain. The trained domain discriminator detaches the domain-shared representations from the domain-specific representations. The teacher model effectively directs the domain-shared feature learning process, whereas contrastive learning approaches significantly enrich the domain-specific features. The method is evaluated thoroughly on two publicly available datasets (i.e., Douban and Amazon) and a real-world dataset (i.e., Ant Marketing). The evaluation phase demonstrates the method’s effectiveness, which achieved a new state-of-the-art performance compared with previous methods such as the collaborative cross-domain transfer learning (CCTL) framework [125] and disentangled representations for cross-domain recommendation (DisenCDR) [126]. The deployment of the technique on an e-commerce platform proves the efficiency of the method, which yields improvements of 0.33% and 0.45% compared with the baseline models in terms of unique visitor CTRs in two different recommendation scenarios.

Table 5.

Comparison of the cross-domain approaches to CTR prediction using multivariate data.

Table 5.

Comparison of the cross-domain approaches to CTR prediction using multivariate data.

| Model | Key Idea | Dataset | Performance | Limitations (+)/Advantages (−) |

|---|---|---|---|---|

| CTNet [121] | Processed the source domain | Taobao production: three | 0.7474 AUC and | − The homogeneous features |

| representations as transferred | domains (A, B, and C); | 0.6888 GAUC from | might affect this method’s | |

| knowledge for the target | the aim is to validate | domain A to B; 0.7451 | performance in practice (e.g., | |

| domain; thus, the source and | the framework’s transfer | AUC and 0.7040 GAUC | heterogeneous input features | |

| target domain parameters are | effectiveness from A to B | from domain A to C | which indicate two domain have | |

| continuously reused and retained | and A to C. A dataset size | different feature fields, e.g., | ||

| during knowledge transfer | is 150B, B dataset | image retrieval technique relies | ||

| size is 2B, and C | on image features while | |||

| dataset size is 1B. | text retrieval method relies on | |||

| features preprocessed from text) | ||||

| DDCDR [124] | Based on the teacher-student | Douban (consists of 1.5 M | 0.6350, 0.6602, and | + The approach filters useful |

| knowledge distillation, it constructs | samples), Amazon (consists | 0.8096 AUC on | information for transfer, | |