1. Introduction

The global ceramics industry has consistently maintained strong market vitality, with applications spanning daily-use ceramics, architectural ceramics, and high-end artistic wares. Driven by increasing cultural consumption, active international trade, and growing demand for personalized design, the traditional ceramics market continues to expand, demonstrating substantial development prospects. This prosperity not only reflects the economic value of ceramics but also underscores their significance as carriers of cultural heritage and symbols of identity [1].

Nevertheless, behind this flourishing market lies a critical bottleneck: a shortage of highly skilled professionals. Mastery of traditional ceramic craftsmanship requires long-term training, encompassing both systematic theoretical knowledge and extensive practical experience [2]. However, the limited number of experts and the slow pace of talent cultivation cannot meet the growing market demand for innovative design and practical guidance. Consequently, the industry struggles to balance heritage preservation with modern innovation and faces product homogenization, slow craft iteration, and an insufficient talent supply.

In this context, there is an urgent need for novel, high-quality, scalable, and reliable support tools that sustain cultural continuity while promoting industrial innovation. Artificial intelligence, particularly large language models (LLMs), offers promising potential to address these challenges [3,4]. By integrating vast amounts of domain-specific knowledge, LLMs can significantly lower the learning barrier, provide expert-level intelligent consultation, and accelerate the dissemination of knowledge and skills [5,6]. More importantly, with their multimodal capabilities, LLMs can not only process textual ceramic knowledge but also analyze and generate ceramic images, thereby facilitating personalized design innovation that reconciles traditional aesthetics with contemporary market demands [7,8,9].

Current research shows that although LLMs have achieved significant success in general knowledge question answering and image generation, their ability to extend to and operate in highly specialized vertical domains remains limited [10,11,12]. To better serve the ceramics industry, LLMs must possess a comprehensive understanding of, and in-depth analytical capability for, specialized ceramic knowledge [13].

To address these limitations and bridge this gap for large models in the ceramics domain, we constructed EvalCera, the first ceramic knowledge evaluation dataset, to comprehensively assess the professional capabilities of current OpenAI models (the models behind ChatGPT) in the ceramics field. The evaluation covers ceramic domain knowledge as well as the understanding and generation of art ceramic images.

At the same time, we developed ChatCAS, an interactive multi-agent studio for ceramics domain consultation, multimodal analysis, and creative image generation. The system comprises three core components. First, we trained a ceramics domain question answering model, CeramicGPT, which possesses highly specialized knowledge of ceramics and performs particularly well on Yaozhou porcelain. In related Q&A tasks, this model achieved an accuracy of 80.24%, a relative improvement of approximately 19.33% over GPT-4o. Second, we constructed an art ceramic image recognition module driven by GPT-4o. Through a purpose-designed agent, the module can identify the production stage depicted in a ceramic image, determine which kiln system the ceramic belongs to, and provide expert-level interpretation. In related image recognition tasks, it achieved an accuracy of 80.52%, a relative improvement of 23.27% over GPT-4o alone. Third, we developed an art ceramic image generation module, also driven by GPT-4o. Through an agent-based architecture, it can generate personalized creative ceramic images based on user requirements, with particularly strong performance on creative designs for Yaozhou porcelain. To facilitate reproducibility and further research, we have released the datasets, code, and models as open source at https://github.com/HanYongyi/HYY (accessed on 18 September 2025).
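The margins quoted above are consistent with relative rather than absolute gains. Assuming the GPT-4o baselines are its EvalCera (A) and (B) accuracies reported in Section 3.1.1 (67.25% and 65.32%), a short calculation reproduces them to within rounding. The helper name `improvement` is ours, not part of the released code.

```python
from typing import Tuple


def improvement(new_acc: float, base_acc: float) -> Tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in percent).

    Both accuracies are given in percent, e.g. 80.24 for 80.24%.
    """
    if base_acc <= 0:
        raise ValueError("baseline accuracy must be positive")
    absolute = new_acc - base_acc
    relative = 100.0 * absolute / base_acc
    return absolute, relative


# Assumed pairing: CeramicGPT vs GPT-4o on EvalCera (A), and the image module
# vs GPT-4o on EvalCera (B), using the GPT-4o figures from Section 3.1.1.
for label, new, base in [("Q&A", 80.24, 67.25), ("image recognition", 80.52, 65.32)]:
    abs_gain, rel_gain = improvement(new, base)
    print(f"{label}: +{abs_gain:.2f} pp absolute, +{rel_gain:.2f}% relative")
```

Reporting both numbers side by side avoids ambiguity: under this pairing, the same results correspond to absolute gaps of 12.99 and 15.20 percentage points.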

The main contributions of this paper are as follows:

We constructed and open-sourced EvalCera, the first multimodal evaluation dataset in the ceramics domain, which includes (A) multiple-choice and true/false questions on specialized ceramic knowledge, covering topics ranging from general knowledge of ceramic production to the historical and cultural background of ceramics; (B) multiple-choice questions for image analysis in the ceramics domain, including identification of the geographic origin of ceramics and recognition of different stages in the production process; and (C) subjective questions evaluating image generation capabilities, including creative generation tasks focused on the five famous kilns of China.

We evaluated the professional capabilities of OpenAI’s latest multimodal models in the ceramics domain through EvalCera, using both objective assessments and subjective evaluations, and analyzed their performance in specialized vertical domains based on the results.

We developed CeramicGPT, the first large language model tailored for the ceramics field, which can intelligently handle knowledge-based Q&A in the ceramics field, and we open-sourced its training dataset.

We built ChatCAS, the first multi-agent system for the ceramics domain, driven by CeramicGPT and GPT-4o. It maps tasks onto the various stages of a ceramic studio and completes them collaboratively through role-playing and consensus on task goals. ChatCAS can effectively handle comprehensive tasks in the ceramics field, including Q&A, image analysis, and image generation.

The remainder of this paper is organized as follows:

Section 2 introduces the proposed methodology, including the construction of the EvalCera dataset, the development of CeramicGPT, and the multi-agent framework ChatCAS.

Section 3 presents the experimental setup and evaluation results, analyzing the performance of general-purpose LLMs, CeramicGPT, and ChatCAS in the ceramics domain.

Section 4 discusses the main findings, potential applications, and limitations of this study.

Finally, Section 5 concludes the paper and outlines directions for future work.

2. Method

2.1. Evaluation Dataset

This section introduces EvalCera, our LLM evaluation set for the ceramics domain, including its sources and construction process. EvalCera consists of three parts, Ceramic Knowledge, Ceramic Image Analysis, and Ceramic Image Generation, which together are used to comprehensively evaluate the performance of LLMs in the ceramics domain.

2.1.1. Ceramic Knowledge

To evaluate the level of expertise that existing LLMs possess in the field of ceramics, we have developed a multiple-choice and true/false question bank titled EvalCera (A): Ceramic Knowledge. This evaluation covers a broad range of topics from fundamental ceramic production to ceramic history and cultural context. The question bank is divided into three major modules: General Knowledge of Ceramic Production, Ceramic Craft and Technology, and Ceramic History and Culture. By employing diverse question formats, we aim to comprehensively assess the model’s mastery, understanding, and reasoning ability in this specialized domain [14].

The General Knowledge of Ceramic Production module focuses on core processes, such as raw material selection, forming methods, drying, and firing [15]. It evaluates the learner’s overall grasp of the entire ceramic production workflow. The questions cover topics such as the mineralogy of kaolin and flux agents, glaze chemical formulations, diagnosis and prevention of common defects, and foundational concepts.

The Ceramic Craft and Technology module emphasizes decorative techniques and technological innovations [16], including blue-and-white porcelain, famille rose, high-temperature colored glazes, carving and piercing, integrated forming, and digital printing. Through contextualized multiple-choice and true/false questions, it examines the learner’s understanding of traditional craft parameters and modern operational details.

The Ceramic History and Culture module focuses on the historical development and cultural significance of ceramics [17]. The question sources encompass the styles of renowned kilns from various dynasties, the appreciation of iconic vessel forms, the influence of cross-cultural trade, folkloric applications, and contemporary artistic expressions. This module aims to assess the learner’s deep understanding of ceramic aesthetics, social functions, and cultural value.

A total of 9450 questions are included, 70% of which are multiple-choice and 30% true/false. The questions are drawn from sources such as ceramic arts and crafts curriculum exams, national occupational qualification tests, actual test questions from the Tongchuan City Ceramic Vocational Competition, and practical teaching cases compiled by Li Jinping, a provincial-level ceramic arts and crafts master. Each of the three major modules is further subdivided into 12 specific categories, as shown in Figure 1a.
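To make the scoring concrete, the sketch below assumes a simple record per question (the field names are hypothetical, not the released schema) and computes overall and per-module accuracy, the metric used in Section 3. At the stated 70/30 split, the 9450 items correspond to 6615 multiple-choice and 2835 true/false questions.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class KnowledgeItem:
    """One EvalCera (A) question (hypothetical field names)."""
    qid: str
    module: str   # e.g. "Ceramic History and Culture"
    qtype: str    # "mc" (multiple choice) or "tf" (true/false)
    answer: str   # gold label: option letter for "mc", "T"/"F" for "tf"


def split_counts(total: int, mc_share: float) -> Tuple[int, int]:
    """Number of multiple-choice and true/false items for a given share."""
    mc = round(total * mc_share)
    return mc, total - mc


def accuracy_by_module(
    items: List[KnowledgeItem], predictions: Dict[str, str]
) -> Tuple[float, Dict[str, float]]:
    """Overall and per-module accuracy; a missing prediction counts as wrong."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for item in items:
        pred = predictions.get(item.qid, "").strip().upper()
        total[item.module] += 1
        correct[item.module] += int(pred == item.answer.upper())
    per_module = {m: correct[m] / total[m] for m in total}
    overall = sum(correct.values()) / max(sum(total.values()), 1)
    return overall, per_module
```

Normalizing case and whitespace before comparison keeps trivial formatting differences in model replies from being counted as errors.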

2.1.2. Ceramic Image Analysis

The images used in this study primarily come from the collections of ceramic museums, the Baidu Baike image database (https://image.baidu.com/ (accessed on 20 January 2025)), and practical teaching cases compiled by Li Jinping. We manually selected high-quality images that clearly display the key elements required for judgment and annotated them by hand. The test questions include identifying the origin of ceramics (such as Ru kiln, Yaozhou kiln, Guan kiln, Ding kiln, Cizhou kiln, Jun kiln, Jingdezhen kiln, and Ge kiln [18]) and recognizing different stages of ceramic craftsmanship (such as throwing, trimming, glazing, and firing [19]). These multiple-choice questions are designed to comprehensively evaluate the ability of LLMs to understand and analyze images in the ceramics field. In total, 160 annotated image-based items form EvalCera (B), as shown in Figure 1b.
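Image items of this kind are typically sent to a multimodal chat model with the image inlined as a base64 data URL, and the free-text reply must then be mapped back to an option letter before accuracy can be computed. The following is a minimal sketch assuming the OpenAI Chat Completions message format (`image_url` content parts); the prompt wording and the answer-parsing rules are our own assumptions, not the paper’s protocol.

```python
import base64
import re
from typing import Dict, List, Optional

# Bare answers such as "B", "(B)", "B." or "B)".
_BARE = re.compile(r"\(?([A-H])[.)]?")
# Phrases such as "Answer: B" or "the answer is B" (keyword matched case-insensitively).
_PHRASE = re.compile(r"(?i:answer)\s*(?:is|:)?\s*\(?([A-H])\b")


def build_image_mcq_messages(
    image_bytes: bytes, question: str, options: Dict[str, str], mime: str = "image/jpeg"
) -> List[dict]:
    """Chat Completions-style user message carrying the image as a data URL."""
    data_url = f"data:{mime};base64," + base64.b64encode(image_bytes).decode("ascii")
    option_lines = "\n".join(f"{key}. {text}" for key, text in sorted(options.items()))
    prompt = f"{question}\n{option_lines}\nAnswer with a single option letter."
    return [{
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            {"type": "image_url", "image_url": {"url": data_url}},
        ],
    }]


def parse_option_letter(reply: str, valid: str = "ABCD") -> Optional[str]:
    """Extract the chosen option letter, or None if the reply is unparseable."""
    text = reply.strip()
    match = _BARE.fullmatch(text) or _PHRASE.search(text)
    if match and match.group(1) in valid:
        return match.group(1)
    return None
```

Unparseable replies return `None` and can then be scored as incorrect, which keeps the accuracy metric conservative.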

2.1.3. Ceramic Image Generation

Based on the five famous kilns of China, we constructed 72 subjective image generation items for EvalCera (C). As illustrated in Figure 1c, to enable systematic evaluation, the prompts are organized by the source of the creative elements into three categories: (i) anime elements (e.g., ninja attire, Sharingan, Rasengan, and hand signs from Naruto; the four-dimensional pocket, bamboo-copter, and time machine from Doraemon; Pikachu, electric symbols, Poké Balls, and evolution stones from Pokémon; as well as the Wind Fire Wheels, Hun Tian Ling, and lotus pedestal from Nezha); (ii) natural elements (e.g., floral motifs such as lotus, peony, and chrysanthemum; bamboo–plum–orchid–chrysanthemum sets; cloud and ruyi patterns; mountains, rivers, and animal forms); and (iii) modern cultural elements (e.g., emoji/sticker sets, meme-style compositions, pixelated QR codes, and kaomoji). Each prompt requires integrating traditional ceramic aesthetics with the specified elements to generate ceramic designs that are both visually appealing and practically manufacturable.
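As an illustration of how such items can be assembled, the sketch below composes a prompt from a kiln, an element source, and a list of elements. It assumes the conventional Song-dynasty list of the five famous kilns (Ru, Guan, Ge, Jun, and Ding) and uses wording of our own; it is not the released EvalCera (C) template.

```python
from typing import Sequence

# Conventional Song-dynasty list of the "five famous kilns"; assumed here to be
# the set EvalCera (C) draws on.
FIVE_FAMOUS_KILNS = ("Ru", "Guan", "Ge", "Jun", "Ding")
ELEMENT_SOURCES = ("anime", "natural", "modern cultural")


def build_generation_prompt(kiln: str, source: str, elements: Sequence[str]) -> str:
    """Compose one hypothetical EvalCera (C)-style image generation prompt."""
    if kiln not in FIVE_FAMOUS_KILNS:
        raise ValueError(f"unknown kiln: {kiln}")
    if source not in ELEMENT_SOURCES:
        raise ValueError(f"unknown element source: {source}")
    if not elements:
        raise ValueError("at least one creative element is required")
    return (
        f"Design a ceramic piece in the style of {kiln} kiln ware that incorporates "
        f"{source} elements: {', '.join(elements)}. "
        "Keep the kiln's characteristic glaze, form and decoration, and make the "
        "design practically manufacturable (stable foot, plausible wall thickness "
        "and proportions)."
    )
```

Keeping the manufacturability requirement in every prompt mirrors the Functional Plausibility dimension used later to score the generated images.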

2.2. CeramicGPT

CeramicGPT was first obtained by incremental (continued) pre-training of Qwen2-7B-Instruct [20] on a diverse corpus comprising one billion tokens of specialized ceramic texts, filtered Chinese and English Wikipedia content, and other sources. This approach preserves general capabilities while injecting domain-specific knowledge. We then constructed the Ceramic SFT Dataset, which contains single-turn and multi-turn dialogs and is divided into four types: knowledge-based, task-oriented, negative samples, and multi-turn dialog. It was generated with assistance from GPT-4 and refined through expert human review. For fine-tuning, we first conducted rapid experiments on smaller models using low-rank adaptation techniques such as LoRA [21,22] and DoRA [23], and then performed full-parameter supervised fine-tuning (SFT) [24] on the base model. This section introduces the curated pre-training corpus and the Ceramic SFT Dataset prepared for CeramicGPT.
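For readers unfamiliar with low-rank adaptation, the following NumPy sketch shows the core idea of LoRA: the pretrained weight `W0` stays frozen and only a rank-`r` update `B @ A`, scaled by `alpha / r`, is trained. `B` is zero-initialized, so the adapted layer initially matches the base layer exactly. DoRA, which additionally decomposes the weight into magnitude and direction, is not shown, and the rank and scaling values are illustrative defaults rather than the settings used for CeramicGPT.

```python
import numpy as np


class LoRALinear:
    """Linear layer with a frozen weight W0 plus a trainable low-rank update.

    Forward pass: y = x W0^T + (alpha / r) * x A^T B^T, i.e. W = W0 + (alpha / r) B A.
    """

    def __init__(self, w0: np.ndarray, r: int = 8, alpha: float = 16.0, seed: int = 0):
        d_out, d_in = w0.shape
        rng = np.random.default_rng(seed)
        self.w0 = w0                                    # frozen pretrained weight
        self.a = rng.normal(0.0, 0.01, size=(r, d_in))  # down-projection, trainable
        self.b = np.zeros((d_out, r))                   # up-projection, zero-initialized
        self.scale = alpha / r

    def __call__(self, x: np.ndarray) -> np.ndarray:
        # x has shape (batch, d_in); the low-rank path never forms a d_out x d_in matrix.
        return x @ self.w0.T + self.scale * (x @ self.a.T) @ self.b.T

    def merged_weight(self) -> np.ndarray:
        # After training, the update can be folded into W0 for zero-overhead inference.
        return self.w0 + self.scale * self.b @ self.a

    def num_trainable(self) -> int:
        return self.a.size + self.b.size
```

Because the update can be merged into `W0` after training, the adapter adds no inference latency, and the trainable parameter count for a `d_out × d_in` layer drops from `d_out * d_in` to `r * (d_in + d_out)`.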

2.2.1. Pretrain Dataset

Ceramic Arts Domain Corpus. This primary corpus was constructed from multiple authoritative and domain-representative sources and serves as the core input to pre-training. Key reference texts include A History of Chinese Ceramics, General Terminology of Craft Ceramics, and World Ceramics, among other foundational works. In addition, we extracted ceramic-related knowledge from the Open Chinese Knowledge Graph Data (1.4 billion triples in total) using relevance-based filtering. All data sources were processed through chunking, knowledge extraction, and deduplication [25]. The final curated corpus comprises approximately 1.01 billion tokens and forms the backbone of the domain-specific pre-training dataset.
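The chunking and deduplication steps can be sketched as follows. This is a minimal illustration assuming fixed-size character windows with overlap and exact-match deduplication on normalized text (NFKC plus whitespace collapsing); the window sizes are hypothetical, and the pipeline actually used for the corpus may differ.

```python
import hashlib
import unicodedata
from typing import List


def chunk_text(text: str, size: int = 512, overlap: int = 64) -> List[str]:
    """Split text into fixed-size character windows that overlap by `overlap`."""
    if not 0 <= overlap < size:
        raise ValueError("require 0 <= overlap < size")
    if not text:
        return []
    step = size - overlap
    # Start a new window only while the previous one has not reached the end.
    return [text[start:start + size] for start in range(0, max(len(text) - overlap, 1), step)]


def _normalize(chunk: str) -> str:
    # NFKC folds full-width forms; whitespace runs collapse to single spaces.
    return " ".join(unicodedata.normalize("NFKC", chunk).split())


def dedup_exact(chunks: List[str]) -> List[str]:
    """Keep the first occurrence of each chunk, comparing normalized SHA-256 digests."""
    seen = set()
    kept = []
    for chunk in chunks:
        digest = hashlib.sha256(_normalize(chunk).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            kept.append(chunk)
    return kept
```

Character-based windows suit mixed Chinese and English text, where whitespace tokenization is unreliable; a token-based window would be a natural alternative when the tokenizer is fixed.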

Wikipedia-cn-20250123-filtered. To enhance general language understanding while preserving domain specificity, we incorporated a refined subset of Chinese Wikipedia. Based on the Wikipedia snapshot from 23 January 2025, we applied a series of preprocessing steps: removal of non-article namespaces (e.g., Template, Category, Help), heuristic filtering of low-quality or irrelevant entries, and exclusion of sensitive or controversial content. Language normalization, including simplified–traditional conversion and terminology alignment, was also conducted. The remaining 14,265 high-quality articles were then processed using the same segmentation, knowledge extraction, and text generation pipeline, ensuring consistency across all components of the dataset.
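Namespace filtering is more robust when keyed on page metadata than on localized title prefixes such as “Template:”. In MediaWiki XML dumps, each `<page>` carries an `<ns>` element (0 for the main article namespace), and redirects carry a `<redirect>` element. The sketch below, using Python’s standard `xml.etree.ElementTree.iterparse`, streams a dump and keeps only main-namespace, non-redirect pages; the heuristic quality filtering, sensitive-content exclusion, and simplified–traditional conversion steps are not shown.

```python
import io
import xml.etree.ElementTree as ET
from typing import BinaryIO, Iterator, Tuple


def _local(tag: str) -> str:
    """Strip the XML namespace, e.g. '{http://...}page' -> 'page'."""
    return tag.rsplit("}", 1)[-1]


def iter_articles(stream: BinaryIO) -> Iterator[Tuple[str, str]]:
    """Yield (title, wikitext) for main-namespace, non-redirect pages of a dump."""
    for _, elem in ET.iterparse(stream, events=("end",)):
        if _local(elem.tag) != "page":
            continue
        title, ns, text, is_redirect = None, None, "", False
        for child in elem.iter():
            name = _local(child.tag)
            if name == "title":
                title = child.text
            elif name == "ns":
                ns = child.text
            elif name == "redirect":
                is_redirect = True
            elif name == "text":
                text = child.text or ""
        if ns == "0" and not is_redirect:
            yield title, text
        elem.clear()  # release the page subtree to bound memory on large dumps


def iter_articles_from_bytes(data: bytes) -> Iterator[Tuple[str, str]]:
    """Convenience wrapper for in-memory dump fragments."""
    return iter_articles(io.BytesIO(data))
```

Streaming with `iterparse` avoids loading a multi-gigabyte dump into memory; for very large dumps, the cleared page elements can also be detached from the root to reclaim the remaining memory.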

Multilingual Wikipedia Corpus. To foster multilingual generalization and enrich semantic diversity, we integrated the English and Chinese segments of the multilingual Wikipedia (https://dumps.wikimedia.org/, retrieved on 23 January 2025) [26]. This content was cleaned and filtered in the same way as the Chinese Wikipedia subset. The processed texts underwent sequential steps of text segmentation, knowledge extraction, vector-based deduplication, and text regeneration [27,28,29]. This portion of the dataset supports the generalization capacity of language models beyond the ceramic arts domain.
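Vector-based deduplication removes near-duplicates that exact hashing misses, such as lightly reworded passages. A minimal greedy version over precomputed embeddings is sketched below; the embedding model and the 0.95 cosine threshold are assumptions, not values reported in this work.

```python
from typing import List

import numpy as np


def vector_dedup(embeddings: np.ndarray, threshold: float = 0.95) -> List[int]:
    """Greedy near-duplicate removal over embedding rows.

    A row is kept unless its cosine similarity to some already-kept row is
    >= threshold. Returns the indices of kept rows in input order.
    """
    x = np.asarray(embeddings, dtype=float)
    norms = np.linalg.norm(x, axis=1, keepdims=True)
    x = x / np.clip(norms, 1e-12, None)  # unit-normalize; guard against zero vectors
    kept: List[int] = []
    for i in range(x.shape[0]):
        # Dot products of unit vectors are cosine similarities.
        if kept and float(np.max(x[kept] @ x[i])) >= threshold:
            continue
        kept.append(i)
    return kept
```

This pairwise scan is quadratic in the number of passages; at corpus scale, an approximate nearest-neighbour index would normally replace the inner comparison.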

2.2.2. SFT Dataset

To train CeramicGPT, we constructed a high-quality custom dataset, the Ceramic SFT Dataset, comprising 100,000 single-turn Q&A pairs and 4000 multi-turn dialog samples. The dataset was curated from the domain-specific pre-training data. The original corpus was collaboratively annotated by Li Jinping and GPT-series models and then extensively restructured and optimized to ensure professional accuracy and quality [30]. The Ceramic SFT Dataset has been released as open source. Its primary sources include reconstructed samples derived from real-world ceramic dialog records, knowledge-based question–answer samples, dialog scenarios designed by ceramic experts, and general supplementary data intended to strengthen the foundational competence of the model. After processing and categorization, the dataset was organized into four subsets: the Knowledge Dataset, Task Dataset, Negative Sample Dataset, and Multi-turn Dialog Dataset.

Knowledge Dataset. The knowledge-based Q&A samples in arts and crafts ceramics are constructed with a structured approach. First, specialized texts related to arts and crafts ceramics are carefully selected. The GPT-4o model [31] analyzes the content to extract key concepts and terminology. Based on these distilled core concepts, accurate, high-quality Q&A pairs are generated to comprehensively reflect the professional knowledge system of the arts and crafts ceramics domain [32]. These Q&A pairs are then adapted to various real-life scenarios, such as exhibition interpretation, education, and professional consultation. The sample content is further expanded to enhance linguistic richness and expressiveness while strictly ensuring professionalism and factual accuracy.

Task Dataset. Each sample in the task-oriented Q&A dataset is clearly structured into three parts: an instruction, optional background information or supporting materials (input), and a standard task response (output). The instructions are generated in various formats and expressions. All output content undergoes strict manual review and professional evaluation to ensure correctness, relevance, and practicality. The finalized task-oriented data samples are organized in JSON format for fine-tuning training of the CeramicGPT model.
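A task sample in this instruction/input/output layout, and its conversion into a user/assistant exchange for chat-style fine-tuning, might look like the sketch below. The field names follow the three parts described above; storing samples as JSON Lines and the way `input` is appended to the instruction are assumptions.

```python
import json
from typing import Dict, List

REQUIRED_FIELDS = ("instruction", "output")


def validate_task_sample(sample: Dict[str, str]) -> None:
    """Raise ValueError unless the sample has a non-empty instruction and output."""
    for key in REQUIRED_FIELDS:
        value = sample.get(key)
        if not isinstance(value, str) or not value.strip():
            raise ValueError(f"missing or empty field: {key}")
    if not isinstance(sample.get("input", ""), str):
        raise ValueError("field 'input' must be a string when present")


def to_chat_messages(sample: Dict[str, str]) -> List[Dict[str, str]]:
    """Convert an instruction/input/output record into a user/assistant exchange."""
    validate_task_sample(sample)
    user = sample["instruction"]
    if sample.get("input"):
        # Optional background material is appended after the instruction.
        user = f"{user}\n\n{sample['input']}"
    return [
        {"role": "user", "content": user},
        {"role": "assistant", "content": sample["output"]},
    ]


def load_jsonl(text: str) -> List[Dict[str, str]]:
    """Parse one JSON object per non-empty line."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]
```

Validating samples before conversion catches empty outputs that slipped through review, which would otherwise teach the model to produce empty answers.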

Negative Sample Dataset. The negative sample dataset is created by extracting questions from the existing knowledge Q&A set and generating multiple possible answers using the model. These generated answers are then professionally reviewed, and those that are unclear, inaccurate, or misleading are clearly labeled, thereby forming high-quality negative samples. This process supports training the model’s error recognition capabilities, effectively enhancing its reasoning and error-detection performance in the ceramics domain.
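One way to store the reviewed candidates is sketched below: answers flagged as unclear, inaccurate, or misleading become labeled negatives, each paired with a correct answer to the same question when the review produced one. The record layout and verdict labels are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

NEGATIVE_VERDICTS = {"unclear", "inaccurate", "misleading"}


@dataclass
class ReviewedAnswer:
    question: str
    answer: str
    verdict: str  # reviewer label: "correct" or one of NEGATIVE_VERDICTS


def build_negative_samples(reviewed: List[ReviewedAnswer]) -> List[Dict[str, Optional[str]]]:
    """Turn reviewed candidate answers into labeled negative samples.

    Each negative keeps the reviewer's reason and, when available, a correct
    answer to the same question as a reference.
    """
    reference: Dict[str, str] = {}
    for r in reviewed:
        if r.verdict not in NEGATIVE_VERDICTS and r.verdict != "correct":
            raise ValueError(f"unknown verdict: {r.verdict}")
        if r.verdict == "correct":
            reference.setdefault(r.question, r.answer)  # first correct answer wins
    return [
        {
            "question": r.question,
            "answer": r.answer,
            "label": "negative",
            "reason": r.verdict,
            "reference": reference.get(r.question),
        }
        for r in reviewed
        if r.verdict in NEGATIVE_VERDICTS
    ]
```

Keeping the reviewer’s reason alongside each negative makes it possible to train or evaluate error recognition separately for vague and for factually wrong answers.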

Multi-turn Dialog Dataset. The multi-turn dialog dataset is generated by simulating expert-level professional exchanges within the field of arts and crafts ceramics using the GPT-4o model. Specifically, key topics and hot issues within the domain’s knowledge system are identified, and the model is guided to generate logically coherent, contextually relevant, and in-depth interactive dialog around these topics. This enhances the model’s ability to handle complex scenarios and multi-turn interactions.

2.3. ChatCAS

Despite rapid advances in general-purpose multi-agent frameworks such as AutoGen [33] and CAMEL [34], systematic adaptation to the ceramics domain is still lacking. On the one hand, image-generation models receive little domain-specific guidance, which makes it difficult to produce high-quality ceramic images consistently. On the other hand, there is no effective way to organize multi-turn reasoning in ceramic models, so domain knowledge cannot flow smoothly into executable decisions. To close this gap, we propose ChatCAS, in which agent roles and handoffs map directly onto real ceramic production stages (raw materials, forming, trimming, glazing, firing, and inspection) rather than onto generic “assistant/critic” pairs. Based on this mapping, ChatCAS guides image generation and provides an end-to-end path that translates knowledge and reasoning into practical decisions.

This section describes our proposed collaboration framework, ChatCAS, powered by CeramicGPT and GPT-4o. The symbols used in this subsection are summarized in Table 1. An overview of the pipeline and an illustrative example are shown in Figure 2. The ChatCAS framework has two components: task assignment driven by CeramicGPT, and question answering powered by CeramicGPT and GPT-4o. These components are further divided into five sub-stages: task assignment, analysis of recommendations, voting, discussion and modification, and final summarization. Each stage corresponds to a production step in ceramics, ensuring that user intent is translated into transparent and reliable decisions. The specific mechanisms of each stage are elaborated in the following subsections.

2.3.1. Task Assignment

The core objective of this stage is to assign and document tasks accurately so that ChatCAS can meet the specific needs of users in the ceramics field. Accurate task assignment requires a deep understanding of user intent. Building on this understanding, combining human–computer interaction with systematic task allocation improves the efficiency and accuracy of subsequent ceramic problem solving. This stage involves three predefined agent roles: the front-desk agent, the leader agent, and a pool of employee agents corresponding to different stages of the ceramic production process.

Front-desk agent: responsible for interacting with users and collecting information through multiple rounds of conversation. After collecting this information, the front-desk agent analyzes and summarizes the user’s questions and the conversation history.

Leader agent: tasked with allocating work by selecting the most appropriate candidate agents from the employee agent pool to address user queries. The leader agent collaborates with the front-desk agent to devise a reliable and systematic task allocation strategy.

Employee agents: a pool of agents corresponding to different stages of the ceramic production process (Steps 1–8 in Figure 2), such as raw material preparation, batching and mixing, molding, repairing, glazing, firing, cooling, and inspection. Each agent possesses specialized expertise, and together they form a diversified expert team.

The specific allocation is carried out by the leader agent. Based on the summary $s$ generated by the front-desk agent, together with the assignment guidance prompt $P_{\mathrm{assign}}$ and the task-specific prompt $P_{\mathrm{task}}$, it selects the $n$ most suitable candidate agents from the employee agent pool $\mathcal{E}$ to construct the set $\mathcal{A} \subseteq \mathcal{E}$ (symbol definitions are provided in Table 1). For example, when a user inputs the query “I want to design a Yaozhou porcelain with Pikachu elements,” the front-desk agent generates a summary $s$, and the leader agent selects the “molding” and “glazing” agents, resulting in $\mathcal{A} = \{e_{\mathrm{molding}}, e_{\mathrm{glazing}}\}$. The task allocation procedure can then be formalized as follows:

$$\mathcal{A} = \mathrm{Leader}\left(s, P_{\mathrm{assign}}, P_{\mathrm{task}}; \mathcal{E}\right), \qquad \mathcal{A} \subseteq \mathcal{E}, \quad |\mathcal{A}| = n$$

Here, $s$ is a summary generated by the front-desk agent, encapsulating the initial understanding and analysis of the query. $P_{\mathrm{assign}}$ is an assignment guidance prompt that supplies expert-level descriptions of each employee agent’s background, helping the language model make intelligent task assignments. $P_{\mathrm{task}}$ is a task-specific instruction prompt that generates instructions tailored to the particular domain or problem.
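In practice, the leader agent’s reply has to be turned back into a valid agent set before the next stage. The sketch below assumes the leader is asked to answer with a JSON list of agent names; the prompt layout and the helper names are hypothetical, and the eight pool entries follow the stages listed for the employee agents.

```python
import json
from typing import List, Sequence

# The eight employee agents (Steps 1-8 in Figure 2), written as lowercase keys.
EMPLOYEE_POOL = (
    "raw material preparation", "batching and mixing", "molding", "repairing",
    "glazing", "firing", "cooling", "inspection",
)


def build_leader_prompt(summary: str, assign_guidance: str, task_prompt: str, n: int) -> str:
    """Combine the summary and the two prompts into one leader-agent prompt (hypothetical layout)."""
    roster = ", ".join(EMPLOYEE_POOL)
    return (
        f"{assign_guidance}\n\nAvailable agents: {roster}\n\n{task_prompt}\n\n"
        f"Case summary: {summary}\n\n"
        f"Reply with a JSON list of at most {n} agent names."
    )


def parse_assignment(reply: str, n: int, pool: Sequence[str] = EMPLOYEE_POOL) -> List[str]:
    """Recover the selected agent set from the leader's reply.

    Unknown names and duplicates are dropped and the result is capped at n,
    so a malformed reply degrades to a smaller (possibly empty) set.
    """
    start, end = reply.find("["), reply.rfind("]")
    if start == -1 or end < start:
        return []
    try:
        names = json.loads(reply[start:end + 1])
    except json.JSONDecodeError:
        return []
    selected: List[str] = []
    for name in names:
        key = name.strip().lower() if isinstance(name, str) else None
        if key in pool and key not in selected:
            selected.append(key)
        if len(selected) == n:
            break
    return selected
```

Validating against a fixed pool prevents the leader from inventing agents that do not exist; an empty result can be used as a signal to re-prompt the leader.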

2.3.2. Analysis of Recommendations

After task assignment, each participating employee agent analyzes the query based on its domain expertise and generates relevant recommendations. Given the summary $s$ and the domain-specific query $q_d$, each selected employee agent $e_d$, functioning as an expert in domain $d$, generates the analysis outcome $r_d$ based on the prompt $P_{\mathrm{ana}}$. The analysis is formalized as follows:

$$r_d = e_d\left(s, q_d; P_{\mathrm{ana}}\right), \qquad d \in \mathcal{D}$$

Here, $P_{\mathrm{ana}}$ is the analysis prompt for the domain-specific query $q_d$, guiding each agent in generating recommendations, and $\mathcal{D}$ is the set of predefined domains, i.e., all domains covered by the system, each established to accomplish the tasks at hand.
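The analysis stage is a fan-out: each selected agent receives the shared summary plus its own domain-specific query. The sketch below treats an agent as any prompt-to-reply callable (for example, a wrapper around a CeramicGPT or GPT-4o call); the prompt template and function names are our own assumptions.

```python
from typing import Callable, Dict

# Hypothetical: an agent is any callable mapping a prompt string to a reply string,
# e.g. a thin wrapper around a CeramicGPT or GPT-4o chat call.
Agent = Callable[[str], str]

ANALYSIS_TEMPLATE = (
    "You are the {domain} specialist in a ceramics studio.\n"
    "Case summary: {summary}\n"
    "Question for your stage: {query}\n"
    "Give concrete, stage-specific recommendations."
)


def run_analysis(summary: str, queries: Dict[str, str], agents: Dict[str, Agent]) -> Dict[str, str]:
    """Ask each selected domain agent for its recommendations.

    `queries` maps a domain (e.g. "glazing") to its domain-specific query; every
    domain must have a matching agent. Returns domain -> recommendation text.
    """
    missing = set(queries) - set(agents)
    if missing:
        raise KeyError(f"no agent for domains: {sorted(missing)}")
    return {
        domain: agents[domain](ANALYSIS_TEMPLATE.format(domain=domain, summary=summary, query=query))
        for domain, query in queries.items()
    }
```

Because the agents are independent at this stage, the calls could also be issued concurrently; the sequential loop keeps the sketch simple.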

2.3.3. Voting Step

The voting step is a critical part of decision-making in ChatCAS, during which agents collaboratively evaluate a proposed solution. Once an employee agent (e.g., the molding agent) completes its proposed plan, the other agents vote on its suitability. If the total voting weight in favor of the solution exceeds a predefined threshold, the solution is approved; otherwise, the system proceeds to the Discussion and Modification phase. This mechanism ensures that decisions incorporate diverse perspectives and are supported by the appropriate domain expertise.
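A minimal version of the weighted vote is sketched below. It assumes that the proposing agent abstains, that agents without an explicit weight count 1.0, and that the threshold applies to the share of total voting weight; the text above specifies only that the weight in favor must exceed a predefined threshold.

```python
from typing import Dict, Tuple


def weighted_vote(
    proposer: str,
    ballots: Dict[str, bool],
    weights: Dict[str, float],
    threshold: float = 0.5,
) -> Tuple[bool, float]:
    """Approve the proposer's plan if the weight share in favor exceeds threshold.

    The proposer does not vote on its own plan; agents missing from `weights`
    get weight 1.0. Returns (approved, share_in_favor).
    """
    voters = {agent: ok for agent, ok in ballots.items() if agent != proposer}
    total = sum(weights.get(agent, 1.0) for agent in voters)
    if total <= 0:
        return False, 0.0
    in_favor = sum(weights.get(agent, 1.0) for agent, ok in voters.items() if ok)
    share = in_favor / total
    return share > threshold, share  # strict ">" follows "exceeds"
```

Returning the share as well as the decision lets the Discussion and Modification phase compare how close competing alternatives came to approval.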

2.3.4. Discussion and Modification

If the proposed solution does not pass the voting step, the system enters the Discussion and Modification phase. Here, agents work together to refine the solution based on feedback from the vote. The system generates alternative solutions, which the agents then discuss and evaluate; the agents suggest improvements, and after several rounds of discussion the best alternative is chosen. This phase focuses on collaboration, ensuring that the final solution is both feasible and well supported. If no consensus is reached after a set number of rounds, the most viable alternative is selected as the final solution.
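The loop below sketches this phase under stated assumptions: `vote` and `revise` are hypothetical callables standing in for the agents, the best alternative in each round is the one with the highest approval share, and “most viable” on timeout is interpreted as the highest share seen in any round.

```python
from typing import Callable, List, Tuple

# Hypothetical interfaces: vote(solution) -> (passed, share_in_favor, feedback);
# revise(solution, feedback) -> alternative solutions proposed by the agents.
VoteFn = Callable[[str], Tuple[bool, float, str]]
ReviseFn = Callable[[str, str], List[str]]


def discuss_and_modify(
    solution: str, vote: VoteFn, revise: ReviseFn, max_rounds: int = 3
) -> Tuple[str, bool, int]:
    """Iteratively revise a rejected solution until one passes or rounds run out.

    Returns (final_solution, reached_consensus, rounds_used). Without consensus,
    the candidate with the highest approval share seen so far is returned.
    """
    passed, share, feedback = vote(solution)
    if passed:
        return solution, True, 0
    best, best_share = solution, share
    current = solution
    rounds = 0
    for rounds in range(1, max_rounds + 1):
        alternatives = revise(current, feedback)
        if not alternatives:
            break
        # Score every alternative and carry the best-supported one forward.
        scored = [(alt, *vote(alt)) for alt in alternatives]
        current, passed, share, feedback = max(scored, key=lambda t: t[2])
        if passed:
            return current, True, rounds
        if share > best_share:
            best, best_share = current, share
    return best, False, rounds
```

Capping the number of rounds bounds the cost of a disagreement, while the fallback guarantees the workflow always ends with a concrete proposal for the summarization stage.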

2.3.5. Collate and Summarize

The manager agent assumes a pivotal role at the terminal stage of the collaborative workflow, where it systematically summarizes and integrates the execution of tasks and the outcomes of inter-agent collaboration. Specifically, the manager agent distills and synthesizes the discussions conducted at each stage as well as the diverse recommendations proposed by expert agents. Furthermore, based on voting mechanisms and negotiation outcomes, it identifies the most feasible and scientifically sound solutions.

The final recommendation report not only provides a complete record of the workflow (from task decomposition and role assignment to solution iteration) but also explains the rationales and trade-offs underlying key decisions. This design ensures transparency and traceability in decision-making while strengthening users’ understanding of and trust in the system’s outputs. Beyond immediate decision support, the report also serves as a knowledge repository for subsequent accumulation and reuse, enabling rapid transfer and refinement in similar tasks and thereby enhancing both the interpretability and the long-term value of the system.
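To make the report’s role as a traceable record concrete, the sketch below keeps one entry per stage and serializes the whole report to JSON for later reuse. The class and field names are hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class StageRecord:
    stage: str    # e.g. "task assignment", "analysis", "voting", "discussion"
    agent: str
    content: str


@dataclass
class FinalReport:
    """Hypothetical container for the manager agent's final recommendation report."""
    query: str
    assigned_agents: List[str]
    records: List[StageRecord] = field(default_factory=list)
    decision: str = ""
    rationale: str = ""

    def log(self, stage: str, agent: str, content: str) -> None:
        self.records.append(StageRecord(stage, agent, content))

    def to_json(self) -> str:
        # ensure_ascii=False keeps Chinese text readable in the stored report.
        return json.dumps(asdict(self), ensure_ascii=False, indent=2)
```

Storing reports as plain JSON keeps them searchable, so past decisions can be retrieved as context when a similar task arrives.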

3. Experiments

In this section, we evaluate the professional capabilities of OpenAI’s latest multimodal models in the ceramics domain using EvalCera and analyze their performance in specialized vertical applications based on the results. We then present the training process of CeramicGPT and evaluate the performance of both CeramicGPT and ChatCAS on EvalCera.

3.1. Performance of Existing LLMs in the Ceramics Domain

We adopted accuracy as the primary evaluation metric. First, we assessed the proficiency of state-of-the-art large models in ceramics-related knowledge using EvalCera (A). Next, we evaluated their ability to understand and analyze ceramic images on EvalCera (B). Finally, we conducted a subjective scoring assessment to evaluate their capability in generating ceramic images.

3.1.1. Performance of OpenAI Models in EvalCera (A&B)

We tested the ceramics domain knowledge of o1-mini, o3-mini, GPT-4o, and GPT-4o-mini on EvalCera (A) via API calls. As shown in Figure 3a, o3-mini performed best, with an accuracy of 80.22%, slightly higher than o1-mini’s 78.23%. In contrast, GPT-4o and its lightweight version GPT-4o-mini achieved lower accuracies of 67.25% and 60.91%, respectively. These findings indicate that, despite GPT-4o’s powerful general reasoning and comprehension capabilities, its performance in specialized domains such as ceramics remains limited, particularly without targeted fine-tuning. This highlights the importance of domain adaptation for LLMs in professional tasks and further motivates this study. To reach higher levels of expertise, general-purpose models may require deeper fine-tuning or the integration of domain-specific expert knowledge. Similarly, on EvalCera (B), o3-mini again outperformed the others with an accuracy of 72.11%, followed by o1-mini at 66.58%, while GPT-4o and GPT-4o-mini trailed with accuracies of 65.32% and 57.25%, respectively. These results further underscore the value of targeted fine-tuning and raise important considerations for deploying LLMs in specialized professional settings.

3.1.2. Performance of Existing LLMs in EvalCera (C)

We designed a set of subjective evaluation criteria for ceramic image generation, referred to as EvalCera (C), to assess the image generation capabilities of existing LLMs. The evaluation is divided into four dimensions:

Aesthetic Quality assesses the visual appeal, balance, and artistic harmony of the generated ceramic images. This includes shape, texture, proportion, and color coordination as perceived by experienced ceramic designers.

Cultural Relevance evaluates the extent to which the image reflects traditional or contemporary ceramic styles, motifs, and symbolism, and whether it is rooted in relevant cultural contexts [35].

Creativity measures the originality, innovation, and uniqueness of the design, including unexpected forms, patterns, or conceptual approaches that go beyond standard templates.

Functional Plausibility considers whether the generated object appears realistically manufacturable and usable as a ceramic item, with appropriate structural features (e.g., base, handle, spout) and accurate physical proportions.

Each dimension is rated on a scale from 1 to 10 (1–2 poor, 3–4 fair, 5–6 adequate, 7–8 good, 9–10 excellent). The total score for each image is the sum of the four dimensions, with a maximum possible score of 40 points. We invited a five-member panel (two ceramic design experts, two art historians, and one lay user) to independently evaluate images generated by different models on these four dimensions. The evaluation results reveal noticeable shortcomings of current models in several areas. As shown in Figure 3b, o3-mini performs relatively consistently in detail representation; however, its scores on cultural relevance and creativity are noticeably lower, at only 6 and 7 points, respectively. This suggests that the model has not yet effectively acquired or integrated professional knowledge of ceramic culture. In contrast, GPT-4o performs in a more balanced way across all four dimensions, while Doubao and Qwen-Image generally receive lower scores, with particularly pronounced weaknesses in functional plausibility and cultural relevance.
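The scoring rule above can be sketched as follows: each rater’s four 1–10 scores are validated and summed to a total out of 40, and a panel’s ratings for one image are aggregated. Averaging across the five raters is our assumption; the text does not state how individual ratings were combined.

```python
from statistics import mean
from typing import Dict, List

DIMENSIONS = ("aesthetic_quality", "cultural_relevance", "creativity", "functional_plausibility")
# Upper bound of each rating band on the 1-10 scale.
BANDS = ((2, "poor"), (4, "fair"), (6, "adequate"), (8, "good"), (10, "excellent"))


def total_score(ratings: Dict[str, int]) -> int:
    """Sum one rater's four dimension scores (maximum 40)."""
    for dim in DIMENSIONS:
        value = ratings[dim]
        if not (isinstance(value, int) and 1 <= value <= 10):
            raise ValueError(f"{dim} must be an integer in 1..10, got {value!r}")
    return sum(ratings[dim] for dim in DIMENSIONS)


def band(score: int) -> str:
    """Verbal label for a single 1-10 dimension score."""
    if not 1 <= score <= 10:
        raise ValueError(f"score out of range: {score}")
    return next(label for upper, label in BANDS if score <= upper)


def panel_summary(panel: List[Dict[str, int]]) -> Dict[str, float]:
    """Average each dimension and the total over all raters for one image."""
    summary = {dim: mean(r[dim] for r in panel) for dim in DIMENSIONS}
    summary["total"] = mean(total_score(r) for r in panel)
    return summary
```

Reporting per-dimension means alongside the total preserves the diagnostic detail (for example, low cultural relevance) that a single summed score would hide.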

3.2. Performance of CeramicGPT

This section describes the training process and results of CeramicGPT, which we have open-sourced. CeramicGPT is the first large model in the field of decorative ceramic arts and a leading large model for ceramic text understanding and reasoning.

In our experiments, we selected four models as base models for SFT training: Qwen2-7B-Chat, Qwen2-7B-Instruct, InternLM2-Chat-7B, and ChatGLM3-6B-32K [36]. We used our self-constructed domain-specific dataset, Ceramic-SFT, to investigate the strengths and weaknesses of different base models and fine-tuning methods in the specialized ceramic field. All comparative experiments used identical hyperparameter settings. Initial evaluations were performed with LoRA fine-tuning across all models. Among these models, Qwen2-7B showed the strongest domain-specific learning and was selected as the reference model for a further comparison of five training strategies. We used vLLM to deploy and run inference on the local models; the deployment configurations are detailed in Table 2, and the average throughput was around 80 tokens per second for each local model.

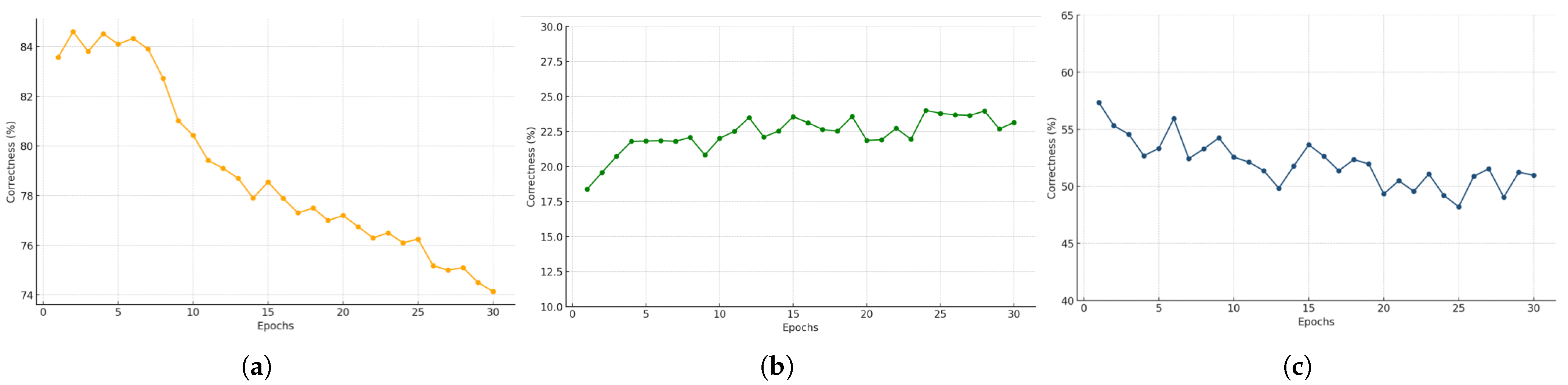

To examine model behavior before large-scale training, we randomly drew 5000 examples from the existing SFT dataset for a smaller-scale initial experiment. The experimental parameters, which support the assessment of how model performance evolves during training, are detailed in Table 3. After each epoch, the updated model was saved and tested on subsets of samples drawn from the Ceramic-SFT dataset. These evaluation results were used to plot forgetting and memory curves, which illustrate the model's learning and forgetting trends as the number of training epochs increases. We also assessed the model's general capabilities at each epoch on the C-Eval dataset; the related results are presented in Figure 4.
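The memory and forgetting curves are not defined formally in this section. One plausible per-example definition, which is our assumption rather than the authors' stated procedure, records which evaluation samples each epoch's checkpoint answers correctly: the memory rate is the fraction answered correctly, and the forgetting rate is the fraction that were correct at the previous epoch but are wrong now. The function name `memory_and_forgetting` is hypothetical.

```python
from typing import List, Sequence, Tuple


def memory_and_forgetting(correct: Sequence[Sequence[bool]]) -> Tuple[List[float], List[float]]:
    """Per-epoch memory and forgetting rates from per-example correctness.

    correct[e][i] is True if checkpoint e answered evaluation example i correctly.
    memory[e]     = fraction of examples answered correctly at epoch e.
    forgetting[e] = fraction of examples correct at epoch e-1 but wrong at epoch e
                    (defined as 0.0 for the first epoch).
    """
    if not correct:
        return [], []
    n = len(correct[0])
    if n == 0 or any(len(row) != n for row in correct):
        raise ValueError("every epoch must score the same non-empty example set")
    memory = [sum(row) / n for row in correct]
    forgetting = [0.0]
    for prev, curr in zip(correct, correct[1:]):
        forgotten = sum(1 for p, c in zip(prev, curr) if p and not c)
        forgetting.append(forgotten / n)
    return memory, forgetting
```

Plotting `memory` and `forgetting` against the epoch index gives the two curves; tracking the same fixed example subset across epochs is what makes the forgetting count meaningful.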

These curves show that training on the specialized domain dataset causes some knowledge forgetting. From the eighth epoch onward, forgetting becomes more pronounced and is accompanied by a substantial drop in the model's general capabilities. Meanwhile, the model's gains in specialized domain knowledge diminish from the tenth epoch onward, with further changes becoming small. These observations suggest that the optimal number of training epochs for specialized domain datasets lies between eight and ten. This insight can guide future training strategies by balancing the acquisition of specialized knowledge against the preservation of general capabilities, thereby mitigating the adverse effects of overtraining. Based on these experiments, we set the number of training epochs to 10.
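The epoch choice above was made by inspecting the curves. The same trade-off can be written as a simple stopping rule; the sketch below is a hypothetical illustration, and the thresholds `min_gain` and `max_general_drop` are assumptions that would have to be set per task, not values from this study.

```python
from typing import Sequence


def select_epoch(
    domain: Sequence[float],
    general: Sequence[float],
    general_baseline: float,
    min_gain: float,
    max_general_drop: float,
) -> int:
    """Return the last 1-based epoch worth keeping under a simple stopping rule.

    domain[e-1] and general[e-1] are the scores after epoch e. Training is
    considered worthwhile while (a) the domain score still improves by at least
    min_gain over the previous epoch and (b) the general score has not fallen
    more than max_general_drop below the pre-fine-tuning baseline.
    """
    if len(domain) != len(general) or not domain:
        raise ValueError("need matching, non-empty score lists")
    best = 0  # 0 means even the first epoch violates the rule
    for e in range(1, len(domain) + 1):
        drop = general_baseline - general[e - 1]
        # The first epoch has no predecessor, so its gain is treated as unbounded.
        gain = domain[e - 1] - domain[e - 2] if e > 1 else float("inf")
        if drop > max_general_drop or gain < min_gain:
            break
        best = e
    return best
```

A stricter `max_general_drop` stops training earlier and favors general capability; a looser one favors domain gains.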

To evaluate both general-purpose and domain-specific capabilities, we conducted a comparative assessment on the C-Eval benchmark and the domain-specific EvalCera (A) dataset; the results are reported in Table 4. Among the non-fine-tuned baselines, GPT-4o achieved the best performance on both evaluations, DeepSeek-V2 performed the worst, and the remaining baselines differed relatively little. In the LoRA-tuned cohort, each base model underwent supervised fine-tuning (SFT) with low-rank adaptation on our high-quality ceramics dataset, and Qwen2-7B-Ceramic attained the best overall performance. Across both settings, GPT-4o remains the overall top performer, whereas DeepSeek-V2 is the overall worst. The fine-tuned models showed no statistically significant decrease relative to their original C-Eval scores.
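LoRA freezes a pretrained weight matrix W0 and learns a low-rank update, so the adapted layer computes h = W0·x + (α/r)·B·A·x, with A of shape r × d_in and B of shape d_out × r. The pure-Python sketch below illustrates the mechanism only; the rank, scaling factor α, and initialization scale are illustrative assumptions, not the settings in Table 5, and real training would use a framework such as PyTorch together with an adapter library.

```python
import random
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of an m x n and an n x p matrix."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def mat_add(a: Matrix, b: Matrix, scale: float = 1.0) -> Matrix:
    """Element-wise a + scale * b."""
    return [[x + scale * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


class LoRALinear:
    """Frozen weight W0 (d_out x d_in) plus a trainable low-rank update B @ A."""

    def __init__(self, w0: Matrix, rank: int, alpha: float, seed: int = 0) -> None:
        d_out, d_in = len(w0), len(w0[0])
        rng = random.Random(seed)
        self.w0 = w0  # frozen pretrained weight
        # A: r x d_in, small random init; B: d_out x r, zero init.
        self.a = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.b = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def forward(self, x: Matrix) -> Matrix:
        """x holds column vectors (d_in x n); returns W0 x + (alpha / r) B A x."""
        base = matmul(self.w0, x)
        update = matmul(self.b, matmul(self.a, x))
        return mat_add(base, update, self.scale)

    def merged_weight(self) -> Matrix:
        """Fold the update into W0 so inference needs no extra matmuls."""
        return mat_add(self.w0, matmul(self.b, self.a), self.scale)


def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters of A and B for one adapted matrix."""
    return rank * (d_in + d_out)
```

Because B starts at zero, the adapted layer initially reproduces the base model exactly, and after training the update can be merged into W0 at no inference cost. Only r·(d_in + d_out) parameters per adapted matrix are trained; for a 4096 × 4096 matrix at rank 8 that is 65,536 instead of 16,777,216.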

We performed SFT on two A800 GPUs, and all local model inference was conducted on a single A800 GPU. Training used our self-constructed fine-tuning dataset. The learning rate, batch size, and maximum context length used during SFT are listed in Table 5. We chose a relatively low learning rate to improve domain-specific performance while limiting the loss of general capabilities. To further strengthen general-domain proficiency, we incorporated a general supplementary dataset of 500,000 entries, detailed in Table 6, conducted mixed-dataset SFT, and evaluated its effectiveness. Finally, we compared the post-SFT performance of models pre-trained on different datasets.

With reference to the ChatHome experiments, we trained Qwen2-7B-Chat on a mixed dataset with a 1:5 ratio between the SFT dataset and the general supplementary dataset; the evaluation showed no significant improvement in either domain-specific or overall performance. We then used the Qwen2-7B-Instruct model for incremental pre-training and full fine-tuning.
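A 1:5 mixture like the one described above can be assembled as sketched below. Sampling the general data without replacement, shuffling the combined set, and the default seed of 42 are our assumptions; the function name `mix_datasets` is hypothetical.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def mix_datasets(
    domain: Sequence[T], general: Sequence[T], general_per_domain: int, seed: int = 42
) -> List[T]:
    """Combine domain data with general data at a 1:general_per_domain ratio."""
    rng = random.Random(seed)  # fixed seed so the mixture is reproducible
    k = general_per_domain * len(domain)
    if k > len(general):
        raise ValueError(f"need {k} general samples, only {len(general)} available")
    # Draw general samples without replacement, then interleave both sources
    # so that each training batch sees domain and general data.
    mixed = list(domain) + rng.sample(list(general), k)
    rng.shuffle(mixed)
    return mixed
```

Fixing the seed keeps the mixture identical across runs, which matters when comparing training strategies on the same data.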

Table 7 summarizes the comparative results of this process. We ultimately selected the Pretrain + SFT (Mix_data) training strategy to fine-tune Qwen2-7B-Instruct, yielding the final model, CeramicGPT.
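The two stages of the Pretrain + SFT strategy differ mainly in which tokens contribute to the loss. In a common setup (our assumption; this section gives no implementation details), incremental pre-training supervises every token of raw ceramic text, while SFT masks the prompt so that only the response is learned. The sketch uses -100, the default `ignore_index` of PyTorch's `CrossEntropyLoss`; the one-position shift for next-token prediction is assumed to happen inside the model, as in Hugging Face Transformers causal-LM classes. The function `build_example` is hypothetical.

```python
from typing import List, Tuple

IGNORE_INDEX = -100  # default ignore_index of torch.nn.CrossEntropyLoss


def build_example(
    prompt_ids: List[int], response_ids: List[int], stage: str
) -> Tuple[List[int], List[int]]:
    """Return (input_ids, labels) for one training example.

    stage="pretrain": plain causal language modeling; every token is a target.
    stage="sft": only response tokens are targets; prompt tokens are masked out.
    """
    input_ids = prompt_ids + response_ids
    if stage == "pretrain":
        labels = list(input_ids)
    elif stage == "sft":
        labels = [IGNORE_INDEX] * len(prompt_ids) + list(response_ids)
    else:
        raise ValueError(f"unknown stage: {stage!r}")
    return input_ids, labels
```

For pre-training on raw text, the whole passage can be passed as `prompt_ids` with an empty `response_ids`.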

3.3. Performance of ChatCAS

We first used EvalCera (A&B) to assess how well ChatCAS, with its operating mechanism, handles ceramic-related knowledge and image understanding. We then conducted a subjective evaluation of ChatCAS's ability to generate ceramic images.

3.3.1. Performance of ChatCAS Models in EvalCera (A&B)

As shown in Figure 5a, ChatCAS clearly outperforms the other models on both tasks, reaching an accuracy of 82.65% on EvalCera (A) and 80.52% on EvalCera (B). The ceramics domain knowledge supplied by CeramicGPT helps ChatCAS process and interpret the task data more accurately, improving both its understanding and its accuracy. Furthermore, the system mechanism we constructed allows ChatCAS to leverage the knowledge acquired through extensive domain-specific fine-tuning, which yields substantial improvements on complex ceramics-related tasks.
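Assuming EvalCera (A) and (B) are scored as multiple-choice accuracy with options A to D (the option format is not stated in this section), a figure such as 82.65% can be computed by extracting a choice letter from each model response and comparing it with the gold answer. The regex-based extractor below is a hypothetical, deliberately simple sketch.

```python
import re
from typing import Optional, Sequence

# A choice letter A-D not glued to other Latin letters, so "Answer: C" yields "C"
# and Chinese text such as "答案是B。" yields "B".
_CHOICE = re.compile(r"(?<![A-Za-z])([A-D])(?![A-Za-z])")


def extract_choice(response: str) -> Optional[str]:
    """Return the first standalone option letter in a response, or None."""
    match = _CHOICE.search(response)
    return match.group(1) if match else None


def accuracy(gold: Sequence[str], responses: Sequence[str]) -> float:
    """Fraction of responses whose extracted letter equals the gold answer."""
    if len(gold) != len(responses) or not gold:
        raise ValueError("need matching, non-empty lists")
    hits = sum(extract_choice(r) == g for g, r in zip(gold, responses))
    return hits / len(gold)
```

The first-match rule is fragile: an English response that begins with the article "A" would be read as option A, so a production scorer would constrain the output format or parse more strictly.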

3.3.2. Performance of ChatCAS Models in EvalCera (C)

Figure 6 shows example outputs of three different models for prompts combining Yaozhou ceramics with Naruto, Nezha, and Pikachu elements. We tested the image generation capabilities of ChatCAS using the same subjective evaluation method; the results, shown in the figure, indicate that ChatCAS substantially improves image generation. First, the expert prompts supplied by CeramicGPT make the generated images better match the background of the specified kiln, avoiding the hallucination seen in earlier generations, where the image did not correspond to the kiln. This stems from CeramicGPT's ceramics expertise and precise prompt support, which enable ChatCAS to generate images that more accurately reflect the actual kiln background.

Second, the multi-agent collaborative discussion mechanism of ChatCAS further enhances image quality. Under this mechanism, multiple agents discuss in real time during generation and progressively adjust and optimize the process, so that each step of image generation aligns with the design requirements. In Figure 6, elements such as Naruto and Nezha's Wind Fire Wheels and Hun Tian Ling are accurately and skillfully integrated into images built on the Yaozhou ware Fengming ewer. This collaborative mechanism improves both the accuracy of image generation and the handling of details, ultimately producing more refined and practically suitable images.
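The collaborative discussion is described only at a high level here. The sketch below is a hypothetical critique-and-refine loop, not ChatCAS's actual implementation: each agent reviews the current image prompt and either approves it or proposes a revision, and the loop stops once a strict majority approves. In ChatCAS the agents would be LLM calls (for example to CeramicGPT or GPT-4o); here they are toy functions, and all names are illustrative.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Critique = Tuple[bool, str]                # (approves the draft?, suggested revision)
Agent = Callable[[str], Critique]          # reviews a draft prompt
Refiner = Callable[[str, List[str]], str]  # merges suggestions into a new draft


@dataclass
class DiscussionResult:
    prompt: str
    rounds: int
    approved: bool
    transcript: List[List[Critique]] = field(default_factory=list)


def discuss(draft: str, agents: List[Agent], refine: Refiner, max_rounds: int = 3) -> DiscussionResult:
    """Critique-and-refine loop that stops once a strict majority approves."""
    transcript: List[List[Critique]] = []
    for round_idx in range(1, max_rounds + 1):
        critiques = [agent(draft) for agent in agents]
        transcript.append(critiques)
        approvals = sum(1 for ok, _ in critiques if ok)
        if 2 * approvals > len(agents):
            return DiscussionResult(draft, round_idx, True, transcript)
        if round_idx < max_rounds:
            # Only dissenting agents' suggestions drive the next revision.
            suggestions = [s for ok, s in critiques if not ok and s]
            draft = refine(draft, suggestions)
    # No majority within max_rounds; return the last draft that was reviewed.
    return DiscussionResult(draft, max_rounds, False, transcript)
```

Requiring a strict majority rather than unanimity keeps a single overly strict agent from blocking convergence, while `max_rounds` bounds the cost of persistent disagreement.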

As shown in Figure 5b, ChatCAS achieved the best overall performance in the subjective evaluation of ceramic image generation. It scored 8 in both Aesthetic Quality and Cultural Relevance, 7 in Creativity, and a full score of 10 in Functional Plausibility. These results indicate that, under the guidance of CeramicGPT, the generated ceramic images not only show superior visual appeal and cultural alignment but also consistently depict structurally sound and practically applicable designs.
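This section does not say how per-dimension scores are combined across raters or into an overall ranking. A minimal sketch, assuming a 0 to 10 scale per dimension, averaging over raters, and an unweighted mean across the four dimensions for the overall score (all assumptions), is given below. Under that assumption, the four scores reported above would average to 8.25.

```python
from typing import Dict, List

DIMENSIONS = ("Aesthetic Quality", "Cultural Relevance", "Creativity", "Functional Plausibility")


def aggregate_scores(ratings: List[Dict[str, float]]) -> Dict[str, float]:
    """Mean score per dimension across raters, plus an unweighted 'Overall' mean."""
    if not ratings:
        raise ValueError("need at least one rating")
    result: Dict[str, float] = {}
    for dim in DIMENSIONS:
        values = [r[dim] for r in ratings]  # KeyError if a rater skipped a dimension
        if any(not 0 <= v <= 10 for v in values):
            raise ValueError(f"{dim} scores must lie in [0, 10]")
        result[dim] = sum(values) / len(values)
    result["Overall"] = sum(result[d] for d in DIMENSIONS) / len(DIMENSIONS)
    return result
```

A weighted overall score (for example, emphasizing Functional Plausibility for production use) would only change the last line.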

4. Discussion

This study presents an initial academic exploration of building LLMs tailored to the ceramics domain and developing ceramics-specific intelligent agents. Our experimental results highlight the advantages of CeramicGPT and ChatCAS and demonstrate how LLMs can empower the ceramics field. Nevertheless, several limitations must be acknowledged. First, EvalCera (B) contains only 160 image samples, which is insufficient to fully capture the complexity of diverse kiln systems and production stages. Second, the three-way classification schema of EvalCera (C) remains preliminary and may suffer from category overlap; sample expansion, expert and user studies, and inter-rater consistency analysis are needed to improve its robustness. Third, ChatCAS has so far been validated only on ceramics-specific tasks; systematic evaluation of boundary cases, cross-stage reasoning, and cross-domain applications is still lacking. Finally, only five users were invited for the qualitative evaluation; although this lends the assessment some authority, a broader and more diverse reviewer group is needed to reduce bias.
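For the inter-rater consistency analysis mentioned above, Fleiss' kappa is one standard choice when several raters assign each item to one of a fixed set of categories, such as the three categories of EvalCera (C). The paper does not commit to a specific metric, so the sketch below is one illustrative option. It computes κ = (P̄ − P_e)/(1 − P_e), where P̄ is the mean observed agreement per item and P_e is the agreement expected by chance from the overall category proportions.

```python
from typing import Sequence


def fleiss_kappa(counts: Sequence[Sequence[int]]) -> float:
    """Fleiss' kappa for N items, each rated by the same number n of raters.

    counts[i][j] = number of raters who put item i into category j.
    """
    n_items = len(counts)
    if n_items == 0:
        raise ValueError("need at least one item")
    n_raters = sum(counts[0])
    if n_raters < 2 or any(sum(row) != n_raters for row in counts):
        raise ValueError("every item needs the same number (>= 2) of ratings")
    n_cats = len(counts[0])
    if any(len(row) != n_cats for row in counts):
        raise ValueError("every item needs a count for every category")
    # Observed agreement: share of agreeing rater pairs per item, averaged over items.
    p_bar = sum(
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1)) for row in counts
    ) / n_items
    # Chance agreement from the overall category proportions.
    total = n_items * n_raters
    p_e = sum((sum(row[j] for row in counts) / total) ** 2 for j in range(n_cats))
    if p_e == 1.0:
        raise ValueError("kappa is undefined when all ratings fall in one category")
    return (p_bar - p_e) / (1.0 - p_e)
```

A κ of 1 indicates perfect agreement and 0 indicates chance-level agreement; with only five raters the estimate carries wide uncertainty, which reinforces the case for a larger reviewer pool.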

Overall, this study validates the value of integrating domain-specific data, specialized models, and process-oriented multi-agent collaboration in the ceramics domain. The research project has been implemented at the Yaozhou porcelain Livestreaming Base in Tongchuan City, Shaanxi Province, China, to address three challenges in transmitting Yaozhou porcelain, an intangible cultural heritage and part of Tongchuan's city IP: disrupted inheritance, insufficient innovation, and inadequate integration with cultural consumption. The livestreaming platform collects personalized requests and comments from viewers in real time, and our LLM rapidly generates multiple corresponding customized ceramic patterns and design proposals. Once a customer confirms and places an order, production begins immediately. This framework integrates technological innovation, cultural dissemination, and market demand, establishing a new business model for ceramics.

5. Conclusions

This study makes significant progress in applying LLMs to the ceramics domain. By developing EvalCera, the first specialized dataset for ceramic knowledge, image analysis, and image generation, we assessed the capabilities of state-of-the-art models and revealed their limitations in the ceramics field. In response, we introduced CeramicGPT, a domain-specific LLM that outperformed general models in ceramic knowledge and image recognition tasks. We also developed ChatCAS, a multi-agent system powered by CeramicGPT and GPT-4o. Evaluation results show that our model and agents achieve the best performance on the EvalCera (A) knowledge and (B) image understanding tasks as well as the (C) image generation task.

In future work on datasets, we plan to expand the scale of EvalCera, develop a more fine-grained taxonomy of creative categories, involve a larger and more diverse group of experts, and adopt inter-rater reliability metrics to enhance evaluation credibility. For ChatCAS, we will improve task allocation, voting, and discussion processes through strategy optimization and ablation studies, introduce confidence estimation and human–AI collaboration mechanisms to enhance efficiency and reliability, and develop ChatCAS 2.0 to further optimize ceramic design generation and extend the multi-agent framework to other specialized domains.

Author Contributions

Conceptualization, Y.H. and D.L.; methodology, Y.H. and D.L.; data curation, Y.R. and Z.L.; writing–original draft, Y.H. and D.L.; writing–review and editing, L.S., J.L. and Z.L.; visualization, Y.H. and Y.R.; supervision and project administration, L.S. Y.H. and D.L. contributed equally to this work. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partially supported by the National Natural Science Foundation of China (Program No. 62471280), which provided essential funding for the theoretical framework, experimental validation, and the overall execution of the project.

Data Availability Statement

This study introduces the first domain-specific resources for ceramics, including the evaluation dataset EvalCera, the fine-tuning dataset Ceramic-SFT, and the large language model CeramicGPT. These resources are described in the manuscript, and some of them have been released as open source. To ensure full reproducibility, we have also made public the data split scripts, random seed settings, and detailed hardware configurations in our GitHub repository (https://github.com/HanYongyi/HYY, accessed on 18 September 2025). The repository also explicitly documents the copyright status and data-cleaning standards for images collected from museums and Baidu Baike. Further inquiries can be directed to the corresponding author.

Acknowledgments

The authors would like to express their sincere gratitude to Jinping Li for his valuable support in compiling ceramic craft cases and question banks, and to the Yaozhou porcelain Livestreaming Base for providing practical scenarios and resources during the application testing stage.

Conflicts of Interest

Author J.L. is employed by Tongchuan Damenli Porcelain Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

1. Zhang, J.; Ren, T. A conceptual model for ancient Chinese ceramics based on metadata and ontology: A case study of collections in the Nankai University Museum. J. Cult. Herit. 2024, 66, 20–36.

2. White, E.; Adu-Ampong, E.A. In the potter’s hand: Tourism and the everyday practices of authentic intangible cultural heritage in a pottery village. J. Herit. Tour. 2024, 19, 781–800.

3. Bewersdorff, A.; Hartmann, C.; Hornberger, M.; Seßler, K.; Bannert, M.; Kasneci, E.; Kasneci, G.; Zhai, X.; Nerdel, C. Taking the next step with generative artificial intelligence: The transformative role of multimodal large language models in science education. Learn. Individ. Differ. 2025, 118, 102601.

4. Sun, L.; Liu, D.; Wang, M.; Han, Y.; Zhang, Y.; Zhou, B.; Ren, Y.; Zhu, P. Taming unleashed large language models with blockchain for massive personalized reliable healthcare. IEEE J. Biomed. Health Inform. 2025, 29, 4498–4511.

5. Han, Y.; Liu, D.; Sun, L.; Li, J.; Cao, W.; Yi, R.; Zhu, P. When ChatGPT Meets Ceramics: How Far Are We? 2024. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=5088915 (accessed on 18 September 2025).

6. Chkirbene, Z.; Hamila, R.; Gouissem, A.; Devrim, U. Large language models (LLM) in industry: A survey of applications, challenges, and trends. In Proceedings of the 2024 IEEE 21st International Conference on Smart Communities: Improving Quality of Life Using AI, Robotics and IoT (HONET), Doha, Qatar, 3–5 December 2024; pp. 229–234.

7. Long, S.; Tan, J.; Mao, B.; Tang, F.; Li, Y.; Zhao, M.; Kato, N. A survey on intelligent network operations and performance optimization based on large language models. IEEE Commun. Surv. Tutor. 2025; early access.

8. Smajić, A.; Karlović, R.; Bobanović Dasko, M.; Lorencin, I. Large Language Models for Structured and Semi-Structured Data, Recommender Systems and Knowledge Base Engineering: A Survey of Recent Techniques and Architectures. Electronics 2025, 14, 3153.

9. Bhattacharya, M.; Pal, S.; Chatterjee, S.; Lee, S.S.; Chakraborty, C. Large language model to multimodal large language model: A journey to shape the biological macromolecules to biological sciences and medicine. Mol. Ther. Nucleic Acids 2024, 35, 102255.

10. Liang, X.; Wang, D.; Zhong, H.; Wang, Q.; Li, R.; Jia, R.; Wan, B. Candidate-Heuristic In-Context Learning: A new framework for enhancing medical visual question answering with LLMs. Inf. Process. Manag. 2024, 61, 103805.

11. Di Sciullo, A.M. Towards Reliable and Efficient Natural Language Processing in Emergent Technologies. Electronics 2025, 14, 2922.

12. Du, J.; Li, B.; Chen, Z.; Shen, L.; Liu, P.; Ran, Z. Knowledge-Augmented Zero-Shot Method for Power Equipment Defect Grading with Chain-of-Thought LLMs. Electronics 2025, 14, 3101.

13. Chang, Y.; Wang, X.; Wang, J.; Wu, Y.; Yang, L.; Zhu, K.; Chen, H.; Yi, X.; Wang, C.; Wang, Y.; et al. A survey on evaluation of large language models. ACM Trans. Intell. Syst. Technol. 2024, 15, 39.

14. Cai, Y.; Wang, L.; Wang, Y.; de Melo, G.; Zhang, Y.; Wang, Y.; He, L. MedBench: A large-scale Chinese benchmark for evaluating medical large language models. In Proceedings of the AAAI Conference on Artificial Intelligence 2024, Vancouver, BC, Canada, 20–28 February 2024; Volume 38, pp. 17709–17717.

15. Webber, K.G.; Clemens, O.; Buscaglia, V.; Malič, B.; Bordia, R.K.; Fey, T.; Eckstein, U. Review of the opportunities and limitations for powder-based high-throughput solid-state processing of advanced functional ceramics. J. Eur. Ceram. Soc. 2024, 44, 116780.

16. Feng, X.; Yu, L.; Tu, W.; Chen, G. Craft representation network and innovative heritage: The Forbidden City’s cultural and creative products in a complex perspective. Libr. Hi Tech 2025, 43, 711–745.

17. Wang, M. The Role of Ming Dynasty Ceramics in the Development of Vietnamese Ceramic Traditions: An Archaeological Perspective. Int. J. Hist. Archaeol. 2024, 28, 1–33.

18. Yang, J. Utilization of Song Dynasty Ceramic Elements Within Contemporary Packaging Design. J. China-ASEAN Stud. 2024, 5, 1–17.

19. Moyer, I.E.; Bourgault, S.; Frost, D.; Jacobs, J. Throwing Out Conventions: Reimagining Craft-Centered CNC Tool Design through the Digital Pottery Wheel. In Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 11–16 May 2024; pp. 1–22.

20. Quan, S.; Tang, T.; Yu, B.; Yang, A.; Liu, D.; Gao, B.; Tu, J.; Zhang, Y.; Zhou, J.; Lin, J. Language models can self-lengthen to generate long texts. arXiv 2024, arXiv:2410.23933.

21. Mao, Y.; Ge, Y.; Fan, Y.; Xu, W.; Mi, Y.; Hu, Z.; Gao, Y. A survey on LoRA of large language models. Front. Comput. Sci. 2025, 19, 197605.

22. Zhang, Z.; Zhang, S.; An, Z.; Li, Z.; Zhang, C. Analogical Reasoning with Multimodal Knowledge Graphs: Fine-Tuning Model Performance Based on LoRA. Electronics 2025, 14, 3140.

23. Wang, L.; Chen, S.; Jiang, L.; Pan, S.; Cai, R.; Yang, S.; Yang, F. Parameter-efficient fine-tuning in large language models: A survey of methodologies. Artif. Intell. Rev. 2025, 58, 227.

24. Li, Z.; Wu, D.; Wang, S.; Su, Z. API-guided dataset synthesis to finetune large code models. Proc. ACM Program. Lang. 2025, 9, 786–815.

25. Bender, E.M.; Friedman, B. Data statements for natural language processing: Toward mitigating system bias and enabling better science. Trans. Assoc. Comput. Linguist. 2018, 6, 587–604.

26. Feith, T.; Arora, A.; Gerlach, M.; Paul, D.; West, R. Entity Insertion in Multilingual Linked Corpora: The Case of Wikipedia. arXiv 2024, arXiv:2410.04254.

27. Peng, C.; Xia, F.; Naseriparsa, M.; Osborne, F. Knowledge graphs: Opportunities and challenges. Artif. Intell. Rev. 2023, 56, 13071–13102.

28. Kandpal, N.; Wallace, E.; Raffel, C. Deduplicating training data mitigates privacy risks in language models. In Proceedings of the International Conference on Machine Learning, PMLR 2022, Baltimore, MD, USA, 17–23 July 2022; pp. 10697–10707.

29. Hu, Z.Z.; Leng, S.; Lin, J.R.; Li, S.W.; Xiao, Y.Q. Knowledge extraction and discovery based on BIM: A critical review and future directions. Arch. Comput. Methods Eng. 2022, 29, 335–356.

30. Zheng, B.; Liu, F.; Zhang, M.; Zhou, T.; Cui, S.; Ye, Y.; Guo, Y. Image captioning for cultural artworks: A case study on ceramics. Multimed. Syst. 2023, 29, 3223–3243.

31. Wu, Y.; Hu, X.; Fu, Z.; Zhou, S.; Li, J. GPT-4o: Visual perception performance of multimodal large language models in piglet activity understanding. arXiv 2024, arXiv:2406.09781.

32. Yu, X.F.; Gan, L.; He, Y. Construction of Knowledge Map of Ceramic Art Design. Packag. Eng. 2022, 43, 247–256.

33. Wu, Q.; Bansal, G.; Zhang, J.; Wu, Y.; Li, B.; Zhu, E.; Jiang, L.; Zhang, X.; Zhang, S.; Liu, J.; et al. AutoGen: Enabling next-gen LLM applications via multi-agent conversations. In Proceedings of the First Conference on Language Modeling 2024, Philadelphia, PA, USA, 7–9 October 2024.

34. Li, G.; Hammoud, H.; Itani, H.; Khizbullin, D.; Ghanem, B. CAMEL: Communicative agents for “mind” exploration of large language model society. Adv. Neural Inf. Process. Syst. 2023, 36, 51991–52008.

35. Chen, J. Cultural Heritage and Artistic Innovation of Decorative Patterns: A Case Study of Contemporary Fiber Art. Mediterr. Archaeol. Archaeom. 2025, 25, 256–266.

36. Team GLM; Zeng, A.; Xu, B.; Wang, B.; Zhang, C.; Yin, D.; Zhang, D.; Rojas, D.; Feng, G.; Zhao, H.; et al. ChatGLM: A family of large language models from GLM-130B to GLM-4 All Tools. arXiv 2024, arXiv:2406.12793.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).