Abstract

The visual cryptography scheme (VCS) is a fundamental image encryption technique that divides a secret image into two or more shares, such that the original image can be revealed by superimposing a sufficient number of shares. A major limitation of conventional VCS methods is pixel expansion, wherein the generated shares and reconstructed image are typically at least twice the size of the original. Additionally, thin lines or curves that are only one pixel wide in the original image often appear distorted or duplicated in the reconstructed version, a distortion known as the thin-line problem (TLP). To eliminate the reliance on predefined codebooks inherent in traditional VCS, Kafri introduced the random grid visual cryptography scheme (RG-VCS). This paper introduces novel algorithms that are among the first to explicitly address the TLP in the context of random grid based schemes, a problem that existing methods typically overlook. A comprehensive visual and numerical comparison was conducted against existing algorithms that do not explicitly handle the TLP. The proposed methods introduce adaptive encoding strategies that preserve fine image details, fully resolving TLP-2 and TLP-3 and partially addressing TLP-1. Experimental results show an average improvement of 18% in SSIM and 13% in contrast over existing approaches. Statistical t-tests confirm the significance of these enhancements, demonstrating the effectiveness of the proposed algorithms.

1. Introduction

The foundational principles of the deterministic visual cryptography scheme () were first formally introduced by Naor and Shamir []. As outlined in [], the core concept of the scheme involves dividing a secret image into two or more random shares, each of which individually does not reveal any information about the original image. When the appropriate shares are overlaid (where “overlaying” refers to the application of the logical OR operation), the hidden image becomes visible. Given a secret image (SI) as input, a typical scheme generates n shares (images) that satisfy two key conditions: (1) any combination of k or more of the n shares can reconstruct the SI, and (2) any subset of fewer than k shares reveals no information about the SI.

The size of the revealed image (RI) and the shares in the deterministic visual cryptography scheme () is typically at least twice that of the secret image (SI), although the visual quality of the RI remains generally high. The primary limitation of the deterministic scheme is the pixel expansion problem. To address this issue, Yang [] proposed a non-expansion method known as the probabilistic size-invariant visual cryptography scheme (), which eliminates pixel expansion but results in RIs with reduced visual quality. Subsequently, Hou and Tu [] introduced a multipixel size-invariant visual cryptography scheme () that incorporates both variance and contrast considerations. Consequently, the multipixel scheme generates higher-quality RIs than the probabilistic scheme.

Further advancements were made by Yan [] and Sun [], who improved by applying forward and backward operations between the revealed and secret images to optimize the halftoning techniques and encryption process. However, their algorithms are limited to grayscale images, specifically to , restricting their applicability to random grid VCS (), which is discussed later in this paper. In addition, their methods face challenges with thin lines, which are common in binary images. Wu [] later proposed algorithms aimed at enhancing the contrast of the .

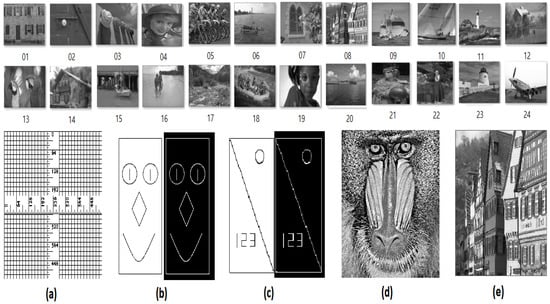

Fine details, such as maps and shapes represented by thin lines, frequently appear in SIs. A thin line is defined as a line or curve that is one pixel thick, either black on a white background or white on a black background (Figure 1a–c). When is applied to such SIs, the resulting RI often suffers from ambiguity, and thin lines become difficult to discern because of low visual quality. This issue was initially termed the thin-line problem (TLP-1). Two additional problems were later identified in the context of the : TLP-2, in which certain parts of the RI lack vertical or horizontal lines, and TLP-3, in which thin vertical or horizontal lines in the RI appear thickened. Further details on these issues are provided in Section 3.

Figure 1.

The data set used to analyze the proposed algorithms was labeled 1 to 24, starting with the top left image. The last row (a–e) contains the original images that serve as visual references to compare the proposed algorithms with other algorithms.

Liu et al. [] evaluated four algorithms for this purpose. The third algorithm is particularly susceptible to TLP-3. The fourth algorithm, on the other hand, addresses all three problems (TLP-1, TLP-2, and TLP-3), but its security guarantees are weaker than conventional standards. Furthermore, some images produced by the fourth algorithm lacked the visual clarity achieved by the , partly due to residual effects of TLP-1. Despite these improvements, the algorithms still depend on codebooks.

The scheme without a codebook was first proposed by Kafri []. However, it is subject to the same limitation as , namely, the generation of low-quality reconstructed images (RIs). To address this issue, Hu [] proposed algorithms aimed at improving the visual quality in random grids. In 2024, Wafy [] introduced two algorithms that enhanced the visual quality of random grids; however, these did not effectively resolve the thin-line problem. To the best of the author’s knowledge, the thin-line problem (TLP) has not previously been addressed within the context of random grid visual cryptography schemes (). This paper introduces two novel algorithms that explicitly address the TLP within this framework. The proposed algorithms offer the following improvements:

- Effectively solve TLP-2 and TLP-3, and partly solve TLP-1.

- Enhance the contrast of the reconstructed images for both grayscale and binary images.

- Improve the local contrast and local pixel correlation, thus contributing to the overall visual quality and structural consistency of the generated random grids.

The remainder of this paper is organized as follows. Section 2 provides traditional definitions and background on the , , , and . Section 3 introduces two proposed algorithms. The experimental results and analyses are presented in Section 4. Finally, Section 5 concludes the paper and offers directions for future research.

2. Background and Definitions

Algorithms in the visual cryptography scheme () can be broadly classified into three categories: deterministic visual cryptography schemes (), probabilistic size-invariant (), and random grid (). Within the , we further distinguish between two types: with pixel expansion () and multipixel size-invariant (). Both the and rely on Boolean matrices, commonly referred to as codebooks, whereas the operates without the need for codebooks. In this paper, pixel values are represented such that white is denoted by 0 and black by 1.

Definition 1

(). The two collections of Boolean matrices, for example (both matrices have the same dimension, ), are if they satisfy the following two conditions:

1. Security: Let be the two functions that count the frequencies of rows in and , respectively. Then, .

2. Contrast: Assume that and are nonnegative integers that satisfy and . is satisfied if any k of the n rows executes the operation for any S in .

Example 1.

Suppose . Let and be two collections.

- For each pixel in the secret image (), if the pixel is black (resp. white), randomly select matrix (resp. ).

- Place the first row of (resp. ) in the first share and the second row of (resp. ) in the second share. Because two pixels represent each secret pixel in each share, the revealed image (RI) is twice the size of the secret image.

- A two-pixel block (a row of matrix a) does not reveal whether it comes from or , because every row of a matrix () has an equal number of ones and zeros. Recovering the secret image from a single share would require trials, where z is the image size. In addition, the random selection of matrix a ensures that the shares cannot be distinguished from one another.
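The procedure in Example 1 can be sketched in a few lines of Python. The sketch assumes the standard two-subpixel (2, 2) codebook, in which a white pixel is encoded by two identical rows and a black pixel by two complementary rows; the names `C0`, `C1`, `encrypt_row`, and `stack` are illustrative only and are not taken from the cited works.

```python
import random

# Assumed codebook (standard two-subpixel (2, 2) construction).
# Each matrix has one row per share; 0 = white, 1 = black.
C0 = [[[0, 1], [0, 1]], [[1, 0], [1, 0]]]  # white pixel: identical rows
C1 = [[[0, 1], [1, 0]], [[1, 0], [0, 1]]]  # black pixel: complementary rows

def encrypt_row(secret_row, rng):
    """Encode one row of secret pixels into two expanded share rows."""
    share1, share2 = [], []
    for pixel in secret_row:
        a = rng.choice(C1 if pixel == 1 else C0)  # random matrix selection
        share1.extend(a[0])  # first row of the matrix goes to share 1
        share2.extend(a[1])  # second row of the matrix goes to share 2
    return share1, share2

def stack(s1, s2):
    """Overlay two shares with the logical OR operation."""
    return [x | y for x, y in zip(s1, s2)]

rng = random.Random(0)
secret = [0, 1, 1, 0]
s1, s2 = encrypt_row(secret, rng)
revealed = stack(s1, s2)
```

Under this assumption, every black secret pixel becomes two black subpixels after stacking, every white secret pixel becomes one black and one white subpixel, and each share is twice as wide as the secret row.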

The aforementioned algorithm was designed specifically for binary images. However, subsequent algorithms, including our proposed algorithms, employ a halftoning technique to convert grayscale images into binary format before applying the visual cryptography process. Consequently, the proposed algorithms are also applicable to grayscale images.
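The text does not name the halftoning technique that was used. As one common possibility, the following sketch applies Floyd–Steinberg error diffusion, which is an assumption made here for illustration and not necessarily the author’s choice. The function name `floyd_steinberg` and the 0–255 input range are likewise hypothetical; the output follows the paper’s convention of 0 for white and 1 for black.

```python
def floyd_steinberg(gray):
    """Halftone a grayscale image given as rows of values in [0, 255].

    Returns rows of 0 (white) and 1 (black).
    """
    h, w = len(gray), len(gray[0])
    buf = [[float(v) for v in row] for row in gray]  # working copy
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            old = buf[y][x]
            new = 255.0 if old >= 128 else 0.0       # quantize to white/black
            out[y][x] = 0 if new == 255.0 else 1
            err = old - new                           # diffuse the error
            if x + 1 < w:
                buf[y][x + 1] += err * 7 / 16
            if y + 1 < h:
                if x > 0:
                    buf[y + 1][x - 1] += err * 3 / 16
                buf[y + 1][x] += err * 5 / 16
                if x + 1 < w:
                    buf[y + 1][x + 1] += err * 1 / 16
    return out
```

A uniformly white input stays white, a uniformly black input stays black, and a mid-gray input yields a mixture of black and white pixels whose density approximates the gray level.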

The pixel expansion problem in was addressed by Yang [], although this solution resulted in low visual quality. The global contrast between the black and white pixels was statistically preserved across the entire image, but the local contrast distribution exhibited considerable variance.

To enhance the local contrast within small regions, such as a block of adjacent pixels (two pixels or more), Tu and Hou [] proposed an algorithm that improves visual quality without introducing pixel expansion in .

Definition 2

(). Let be a secret image, and we have shares . The block , with size m and b is the number of black pixels in the block , Matrix M is randomly selected from or according to the following rule:

Then, the matrix M is distributed on shares . m in this algorithm must be a square matrix.

Suppose that block consists of two pixels in the secret image (SI), one of which is black and the other white, that is . When applying Tu’s algorithm, block is encoded using either or , resulting in a loss of either black or white pixels. By contrast, according to Liu’s algorithm, a pixel block containing b black pixels is encrypted exactly b times using .

Definition 3

(Liu’s algorithm ). Let and , two matrices, and be the basis matrices of a . The next procedure encrypts one block, . Specify b as the number of black pixels in the block, and m as the block size and pixel expansion of the corresponding . Specify the number of blocks that have already undergone encryption and have b black pixels; is the block of the secret image.

- for

- for ( is the total number of blocks in the secret image)

- Encrypt by after randomly permuting its columns if

- Else encrypt using after randomly rearranging its columns

- end

- end

The issue with Tu’s algorithm is partially addressed by the algorithm presented above but remains unresolved.

For all algorithms discussed above, a codebook containing matrices and was employed. To overcome this limitation, Shyu [,,] introduced a modified version of the Kafri [] algorithm which is compatible with a random grid VCS () and does not require a codebook.

Definition 4

(Shyu [] ). An scheme consists of two shares, constructed as follows:

1. The first share, denoted as , is generated using a uniform random distribution.

2. The second share, denoted as , is constructed based on the secret image and the first share . Let represent a pixel in the secret image at position , with corresponding pixels and . All , , and are of size . For all and , the pixel values of are defined as:

where denotes the logical negation of the value h.

Shyu proved the following security condition.

Theorem 1

(Shyu [] ). Shyu’s construction satisfies the security condition for , which requires that:

- 1.

- ;

- 2.

- .

For all and , where and .

According to Shyu’s construction, if a pixel is black and the corresponding pixel in the first share is white (respectively, black), then based on Equation (3), the pixel will be black (respectively, white). Consequently, the pixel in the revealed image, computed as (⊗ is OR operation), will always be black. This guarantees that a black pixel in the secret image will always appear as black in the reconstructed image , with a probability of 1.

On the other hand, if is white, then , meaning both and are identical. Since is generated using a uniform random distribution, the resulting pixel will be white with probability and black with probability . Therefore, the probability that a white pixel in is reconstructed as white in is .

This analysis confirms that Equation (3) satisfies the contrast condition required for a valid .
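The behavior described in the preceding paragraphs can be checked with a minimal sketch. It assumes the rule stated in the prose: the second grid copies the first grid where the secret pixel is white and negates it where the secret pixel is black. The names `rg_encrypt`, `stack`, `r1`, and `r2` are illustrative and not Shyu’s notation.

```python
import random

def rg_encrypt(secret, rng):
    """secret: 2-D list with 0 = white, 1 = black. Returns two random grids."""
    # First grid: every pixel is drawn uniformly at random.
    r1 = [[rng.randint(0, 1) for _ in row] for row in secret]
    # Second grid: copy r1 for a white secret pixel, negate r1 for a black one.
    # With 0/1 values, XOR with the secret pixel does exactly this.
    r2 = [[g ^ s for g, s in zip(grow, srow)] for grow, srow in zip(r1, secret)]
    return r1, r2

def stack(a, b):
    """Overlay two grids with the logical OR operation."""
    return [[x | y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

rng = random.Random(1)
secret = [[1] * 200, [0] * 200]   # one all-black row, one all-white row
r1, r2 = rg_encrypt(secret, rng)
revealed = stack(r1, r2)

black_row_all_black = all(p == 1 for p in revealed[0])
white_fraction = revealed[1].count(0) / len(revealed[1])
```

In this sketch the black row is reconstructed as entirely black, whereas roughly half of the pixels in the white row remain white, which matches the probabilities discussed above.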

Shyu [] introduced the definitions of two contrast measurements used in the .

Definition 5.

(Contrast) All white (resp. black) pixels in the SI as (resp. ) and (resp. ) is the corresponding pixels in RI. Let be a probability function.

Additionally, Shyu introduced the following practical definition of contrast, derived from experimental data.

Definition 6

(Experimental Contrast). Let the total number of white pixels in the RI (revealed image) be and z be the size of the RI. Experimental contrast (EC):

Shyu’s algorithm distributes the black and white pixels uniformly, which guarantees global contrast; however, the resulting contrast is low, and the local contrast varies significantly.

In 2018, Hu [] modified Shyu’s algorithm for ; however, for the case of , Hu’s algorithm is essentially identical to Shyu’s algorithm. By refining the share selection methods, Wafy [] (2024) introduced two algorithms that incorporated the concept of an encrypted pixel block within a random grid for the first time. In comparison, Hu’s algorithm is less effective than Wafy’s.

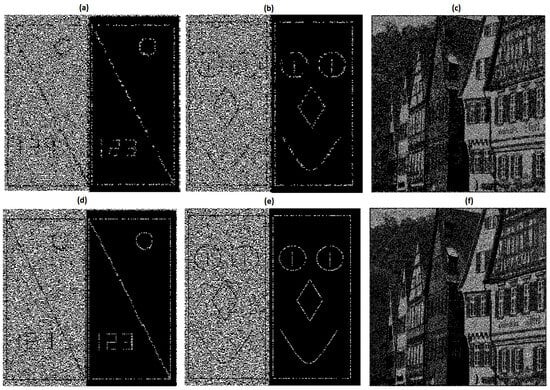

Wafy’s algorithm produces the results shown in Figure 2a–c and Figure 3a–c. In these figures, parts of the horizontal and vertical lines are missing from the revealed image (RI), as shown in Figure 2a,b and Figure 3a,b, which indicates the presence of issue TLP-2. This problem affects the local correlation between the secret image (SI) and RI. Additionally, some horizontal lines in the RI are thicker than their corresponding lines in the SI, illustrating the occurrence of TLP-3.

Figure 2.

The top row shows the Wafy [] (Algorithm 1) for in (a–c). The bottom row shows the Algorithm 2 for in (d,e) and the Algorithm 3 in (f).

Figure 3.

(a,b) represent Wafy [] (Algorithm 1) for and in (c). The bottom row shows Algorithm 2 for in (d,e) and Algorithm 3 for in (f).

The proposed algorithm addresses the local contrast problem with two modifications: First, a uniform distribution is applied to each block of pixels rather than to individual pixels, as in the previous approaches. In addition, both algorithms satisfy Shyu’s security condition. However, in this study, we specifically adhered to Shyu’s security condition as outlined above.

Algorithm 1 Wafy []

Input: An SI is , where a pixel .

Output: n random grids .

- Step 1: When two adjacent pixels, denoted as and , exhibit mixed connectivity, where one pixel is black and the other is white, they are randomly reassigned to either black or white to ensure consistency in the pixel representation.

- Step 2: Randomly select block size m for block , where m can take values of 1, 2, or 4, corresponding to block sizes of one pixel (), two pixels (), or four pixels (), respectively.

- Step 3: A matrix a is randomly selected from the set , as defined in Equation (1).

- Step 4: If pixels, then matrix a is distributed . In the case where , column d is randomly selected from matrix a and is distributed over share . When , a single element is randomly selected from the matrix a and assigned to region .

- Step 5: Repeat steps 2–4 above to include all pixels in (first share).

- Step 6: Repeat steps 2–5 to generate the remaining shares .

- Step 7: For . , where ⊕ is XOR logical operation, end

- Step 8: Randomly permute pixels , and subsequently distribute them to .

- Step 9: Repeat steps 7–8 above to include all pixels in .

3. Proposed Algorithms

This section introduces the first two algorithms that address the thin-line problem in while ensuring high visual quality.

Let the probabilities of selecting a block with be denoted as , respectively. The stacking operation (OR-logical operation) ⊗ for shares is defined as

Algorithm 2 guarantees a high-quality visual experience by enhancing the sharpness of thin lines.

Algorithm 2

Input: An SI is , where a pixel .

Output: n shares (random grid) of size .

- Step 1: Select a block containing b black pixels with probability , .

- Step 2: Randomly select blocks subject to constraints .

- Step 3: Distribute blocks across shares .

- Step 4: To include every pixel in , repeat steps 1–3 above.

- Step 5: For , where ⊕ is XOR logical operation, end

- Step 6: Randomly permute the pixels , and assign them to shares .

- Step 7: Repeat steps 5–6 above to include all pixels in .

For both the illustrative example and performance analysis of this algorithm, a block size of 2 was used; that is, was applied. Consequently, three probabilities, , , and corresponding to blocks , , and , respectively, were considered. The following example demonstrates how to determine the values of these probabilities and illustrates Steps 1–3 of Algorithm 2.

Example 2.

This example demonstrates two scenarios: first, the encryption scheme , and second, the encryption scheme . Consider a two pixels block , where b is black pixels in . For the encryption scheme,

- Let denote the result of stacking and , that is . Let be the third share, defined as , where represents the secret image (SI). Block in , for , can take one of three possible forms: , , or , each selected randomly with probabilities , , and , respectively. For each b, block can be generated from a subset of a set of 16 pairs, where each pair consists of two blocks, from and from , such that .

- Specifically, block in can be generated by stacking block (i.e., ) from with block (i.e., ) from . Among the 16 possible combinations, only one pair results in . Consequently, the probability of obtaining in is given by . Thus, the pair consisting of and is assigned to shares and , respectively, with a probability .

- Block , which contains one black and one white pixel, can be generated from six possible block pairings. Two of these pairings produce it directly, namely ⊗ and ⊗ . In the remaining four pairings, one block has one white pixel and the other block has two white pixels: ⊗ , ⊗ , ⊗ , or ⊗ . As there are six equally likely pairings, the probability of selecting a block is . When a block is chosen, one of these six pairings is randomly assigned to shares and .

- Block , consisting of two black pixels, is selected with probability , similar to the selection process for the previous blocks.
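The pair counts quoted in this example can be reproduced by brute-force enumeration. The sketch below assumes that each of the two stacked shares takes one of the four possible two-pixel blocks, which is our reading of the “16 pairs” mentioned above; the variable names are illustrative. The count for the all-black block is derived from the enumeration and is not quoted from the text.

```python
from fractions import Fraction
from itertools import product

# The four possible two-pixel blocks; 0 = white, 1 = black.
blocks = [(0, 0), (0, 1), (1, 0), (1, 1)]

def stack(u, v):
    """Overlay two blocks with the logical OR operation."""
    return tuple(a | b for a, b in zip(u, v))

# Group the 16 ordered pairs by the number b of black pixels after stacking.
pairs_by_b = {0: [], 1: [], 2: []}
for u, v in product(blocks, repeat=2):
    pairs_by_b[sum(stack(u, v))].append((u, v))

# Probability of each stacked block type if all 16 pairs are equally likely.
prob = {b: Fraction(len(pairs), 16) for b, pairs in pairs_by_b.items()}
```

The enumeration yields one pair for the all-white block and six pairs for the block with one black pixel, in agreement with the counts given in the example; the remaining nine pairs produce the all-black block.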

In the encryption scheme denoted as , the generation of shares, specifically (), is performed as follows:

- Block can be constructed exclusively from three component blocks, , , and , similar to , with a probability of .

- The block is selected with a probability of .

- The block is selected with probability .

Algorithm 2 demonstrates superior performance compared to all previously proposed algorithms, as it preserves thin lines without any loss and maintains a high visual quality, as illustrated in Figure 2d. Furthermore, Algorithm 2 achieves an enhanced local contrast relative to the other algorithms.

However, in certain scenarios, Algorithm 3 exhibits a better performance than Algorithm 2 and offers the advantage of reduced computational complexity.

Algorithm 3

Input: An SI is , where pixel .

Output: n shares (random grid) of size .

- Step 1: Distribute a block with probability , where on subject to constraint .

- Step 2: To ensure that every pixel is included in , repeat Step 1 as described above.

- Step 3: Repeat Steps 1 and 2 as outlined above to generate shares .

- Step 4: For , where ⊕ is XOR logical operation, end

- Step 5: Randomly permute the pixels , and assign them to the shares .

- Step 6: Repeat steps 4–5 above to include all pixels in .

In the following example and throughout the analysis of Algorithm 3, a block size of 3 (i.e., ) is employed, whereas Algorithm 2 utilizes a block size of 2. This highlights the flexibility of the proposed algorithms in accommodating even and odd block sizes.

The probabilities used in the experimental results were and ; however, these values are not fixed. Readers may adjust them, for instance by setting and , to explore different outcomes.

The results corresponding to Algorithm 3 are shown in Figure 2f and Figure 3f. Steps 1–3 of Algorithm 3 are demonstrated in the following example:

Example 3.

Consider the encryption of using Algorithm 3, where the block to be utilized is denoted as . The encryption of shares proceeds as follows:

- Step 1

- Select a block, from among the possible (representing three white pixels), and , according to the respective probabilities subject to constraints .

- Step 2

- Assume that the selected block is .

- Step 3

- Distribute block across shares accordingly.

The constraints and ensure a key security property: for any pixel x in shares , the probability that x is white is equal to the probability that it is black, that is,

According to Shyu’s security condition in Theorem 1, it is sufficient to prove that the shares satisfy the following: for any combination of pixels in the respective shares , the probability that the corresponding pixel in share , is black or white is equal, i.e.,

Theorem 2.

Algorithm 3 satisfies the security condition in Theorem 1.

Proof.

Let denote the selection probabilities for the blocks , where each block contains b black pixels and white pixels, for . According to Algorithm 3, the selection probabilities are symmetric such that for all b. This symmetry implies that for every block containing b black and white pixels, there exists a corresponding block containing black and b white pixels, both selected with equal probability.

The total number of black pixels across both blocks and is m, and similarly, the total number of white pixels is also m. Therefore, for every pixel , where , the probability that x is black is equal to the probability that it is white, i.e.,

This guarantees that no information regarding the original pixel values ( denotes the secret image) is revealed by any individual share. Consequently, the fundamental security condition of the visual cryptography scheme was proven. □
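The counting argument in the proof can be illustrated numerically. The sketch below assumes that, within a chosen block, the black pixels occupy uniformly random positions, so that a given pixel is black with probability b/m when the block contains b black pixels. The probability values are hypothetical and are not the ones used in the experiments.

```python
from fractions import Fraction

def black_probability(p):
    """p[b] is the probability of choosing a block with b black pixels,
    for b = 0..m with m = len(p) - 1. Returns P(a given pixel is black),
    assuming black pixels sit at uniformly random positions in the block."""
    m = len(p) - 1
    assert sum(p) == 1
    return sum(p_b * Fraction(b, m) for b, p_b in enumerate(p))

# Hypothetical symmetric probabilities for m = 3, i.e. p[b] == p[m - b].
symmetric = [Fraction(1, 8), Fraction(3, 8), Fraction(3, 8), Fraction(1, 8)]

# A deliberately asymmetric choice, shown only for contrast.
skewed = [Fraction(1, 2), Fraction(1, 4), Fraction(1, 8), Fraction(1, 8)]

p_black_symmetric = black_probability(symmetric)
p_black_skewed = black_probability(skewed)
```

With the symmetric choice, a pixel is black with probability exactly 1/2, as the proof requires; with the asymmetric choice the probability is 7/24, so the share would be biased toward white.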

4. Results

Figure 1a–d serve as benchmarks for the evaluation of all algorithms, including both the proposed and previous algorithms. Lines are depicted in Figure 1b,c, which consist of various geometries, including curvature lines (circles); small lines; and vertical, horizontal, and diagonal lines. Specifically, the lines in Figure 1b are uniformly one pixel thick. A further challenge arises from the contrasting nature of the image, where the lines on the right side of the figure are white on a black background, whereas the line on the left side is adjacent to the right side, thus presenting additional visual complexity. The ruler shown in Figure 1a illustrates the proximity between the vertical and horizontal lines, compounded by blurred lines. In comparison, Figure 1b shows a greater number of curved lines than Figure 1c.

4.1. Feasibility Analysis

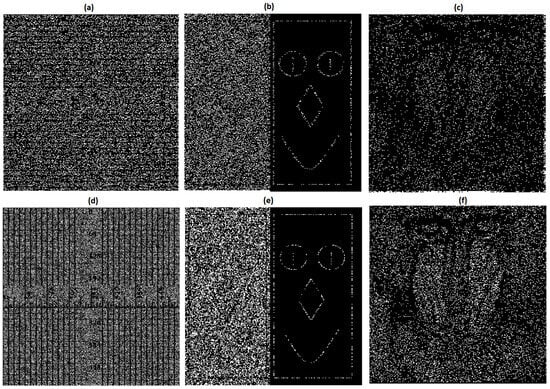

Visual evidence supporting the security of the proposed algorithm is presented in Figure 4a–f, which displays the shares generated by Algorithm 2. Notably, none of these four shares reveal any information regarding the secret image.

Figure 4.

Shares of the Algorithm 3, , for are shown by (a–c), and the stack over and is shown by (d). Algorithm 2 shares for are shown by (e,f).

In this study, we compared the performance of our algorithm with that of Wafy’s Algorithm 1 (2024) [], which has demonstrated superior performance compared with other algorithms in the literature.

The output of Algorithm 2, as shown in Figure 2d,e, was compared with the results of Wafy’s Algorithm 1, as depicted in Figure 2a,b. The white areas on the left side of Figure 2d,e are clearer than the corresponding areas in Figure 2a,b. Furthermore, the ruler in Figure 2d appears clearer than that in Figure 2a, with the numbers being more distinct.

The proposed Algorithm 2 enhances the local contrast (Figure 2d–f) relative to Wafy’s Algorithm 1 (Figure 2a–c) without requiring the removal of specific black and white pixels, a step that Wafy’s Algorithm 1 requires. Moreover, Algorithm 2 effectively preserves the local correlations.

When evaluating the output of Algorithm 3, as shown in Figure 3f, it is evident that this method outperforms Wafy’s Algorithm 1, as shown in Figure 3c. In comparison, Wafy’s Algorithm 1, as shown in Figure 3a,b, failed to accurately depict parts of the diagonal line, right vertical line, and portions of the left vertical line, along with part of the circle on the left (with a white background). Additionally, Figure 2a from Wafy’s Algorithm 1 shows a complete loss of both the numbers and vertical lines. In contrast, the output of Algorithm 2 (Figure 2d,e) preserves all elements, leaving no parts missing. Algorithm 3, as shown in Figure 3f, also offers superior clarity compared with Wafy’s Algorithm 1 as shown in Figure 3c.

4.2. Experiment Comparison

The quality of thin lines in reconstructed images can be assessed by determining whether the line thickness matches that of the secret image, measuring the number of pixels lost in horizontal, vertical, or curved structures, and evaluating the overall clarity of the image. However, no existing metric fully captures all these criteria.

The Contrast Degradation Ratio [] is simple to compute but ignores local structural information and is insensitive to thin-line artifacts. The Edge-Preservation Measure [] focuses on boundary sharpness but is sensitive to noise and less effective for textured thin lines. The Pixel Error Rate (PER) [] directly compares pixel values but penalizes small spatial shifts excessively and ignores perceptual similarity. The Structural Similarity Index (SSIM), which accounts for local correlation, contrast, and luminance, is generally the most effective for assessing overall image quality.

Since the proposed algorithms are specifically designed to preserve thin-line structures and enhance the quality of the reconstructed image, SSIM was selected as the primary evaluation metric in our experiments.

The Structural Similarity Index (SSIM) is a widely recognized metric for assessing the perceived quality of digital images and videos by evaluating their similarity. The SSIM is computed with reference to an assumed distortion-free, uncompressed image. This metric evaluates the image quality by combining comparisons of local correlation, local contrast, and local luminance between two images into a single value. The SSIM was calculated using a Gaussian filter applied to both the smoothed revealed image (RI) and smoothed secret image (SI). Detailed information regarding the SSIM computation can be found in []. Elmer [] demonstrated that the SSIM provides the most accurate measurement for comparing original and distorted images. In addition to the SSIM, we employed experimental contrast (EC), as defined in Definition 6, to further assess the performance of our proposed algorithms.
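To make the three components of the SSIM concrete, the following sketch evaluates the standard SSIM formula over a single window that covers the whole image. This is a simplification: it is not the Gaussian-windowed computation used in the experiments, and the function name `global_ssim` is hypothetical. The stabilizing constants use the customary values 0.01 and 0.03 scaled by the data range.

```python
def global_ssim(x, y, data_range=1.0):
    """Single-window SSIM between two equal-length flat lists of pixel values."""
    n = len(x)
    c1 = (0.01 * data_range) ** 2      # stabilizes the luminance term
    c2 = (0.03 * data_range) ** 2      # stabilizes the contrast/structure term
    mx = sum(x) / n                    # mean luminance of each image
    my = sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / n   # variances (contrast)
    vy = sum((b - my) ** 2 for b in y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n  # correlation
    return ((2 * mx * my + c1) * (2 * cov + c2)) / (
        (mx ** 2 + my ** 2 + c1) * (vx + vy + c2)
    )

pattern = [0, 1, 0, 1, 1, 0, 1, 0]
inverted = [1 - p for p in pattern]
same_score = global_ssim(pattern, pattern)
inverted_score = global_ssim(pattern, inverted)
```

An image compared with itself scores 1, whereas an image compared with its negative scores below zero because the covariance term becomes negative. A windowed SSIM averages this quantity over local neighborhoods, which is what makes it sensitive to missing or thickened thin lines.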

The dataset used in this study consisted of 24 images from [], with Figure 1 serving as the basis for comparing the performance of our proposed algorithms with that of existing algorithms. Among the existing algorithms tested, Wafy’s Algorithm 1 outperformed the others. Consequently, we compared Algorithms 2 and 3 with Wafy’s Algorithm 1 using both the SSIM and contrast metrics on the same 24 images.

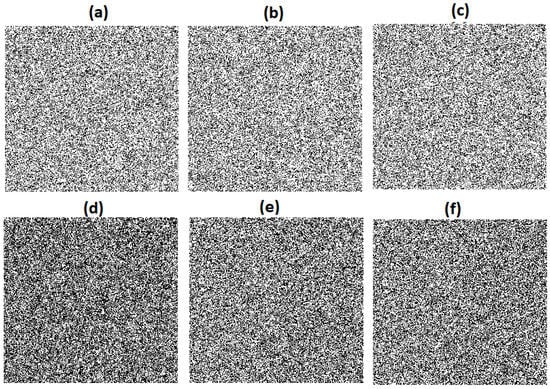

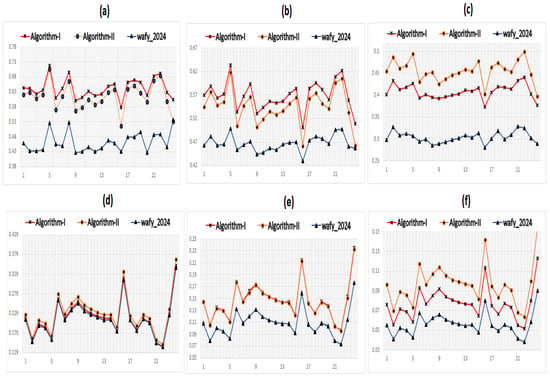

Concerning the SSIM, both the proposed algorithms (Algorithms 2 and 3) outperformed Wafy’s Algorithm 1, as shown in the top row of Figure 5a–c, corresponding to , and , respectively. The lower SSIM scores observed in Wafy’s Algorithm 1 are primarily attributed to the reduced local correlation, which results from the loss of image details and the presence of missing lines.

Figure 5.

Comparison between the proposed algorithms and Wafy’s Algorithm 1 []. The top row (subfigures (a–c)) presents the SSIM results for , , and , respectively. The bottom row (subfigures (d–f)) shows the corresponding contrast comparisons for the same schemes.

The bottom row of Figure 5 presents the corresponding contrast comparison, in which the proposed algorithms again exhibit superior performance. Although the difference in the experimental contrast (EC) for the scheme is relatively small, the SSIM difference is more pronounced, as SSIM captures both local correlation and contrast.

Overall, both the SSIM and contrast evaluations reveal that the performance gap between the proposed algorithms and Wafy’s Algorithm 1 widens as the number of stacking shares k increases. Moreover, for and , Algorithm 2 achieved higher SSIM scores than Algorithm 3, despite their contrast values being nearly identical. This improvement is due to Algorithm 2’s ability to preserve more image details by maintaining white pixels after stacking shares (), thus enhancing the local correlation.

However, when the number of shares is greater than three, Algorithm 2 begins to lose its local contrast and correlation. On the contrary, Algorithm 3 maintains the local contrast more effectively, leading to higher SSIM scores as the number of stacking shares increases. Specifically, in the scheme, Algorithm 3 outperformed Algorithm 2 in both the SSIM (Figure 5c) and contrast (Figure 5f). This finding is further supported by the visual comparison shown in Figure 3f (Algorithm 3) and Figure 3c (Algorithm 2), where Algorithm 3 provides a clearer and more detailed output than Algorithm 2 in the .

Table 1 presents the average Structural Similarity Index Measure (SSIM) computed over a dataset of 24 images, comparing the proposed algorithms with Wafy’s Algorithm 1 [] for the schemes , , and . The results in the table support the conclusions drawn from Figure 5.

Table 1.

Comparison between Wafy’s Algorithm 1 and the proposed algorithms based on the average SSIM and average EC values over a dataset of 24 images.

A t-test was conducted to determine whether there is a statistically significant difference between the SSIM scores of Algorithm 2 and Wafy’s Algorithm 1. The minimum p-value obtained across all schemes was , indicating a significant difference in SSIM scores between the two algorithms. Similarly, for contrast, the minimum p-value was , with the exception of the (2,2) scheme, where the test did not detect a significant difference. Comparable results were observed for Algorithm 3. The minimum p-value for the SSIM was , and for contrast, it was , confirming that the differences were also statistically significant.
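The text does not state which variant of the t-test was applied. Because both algorithms are evaluated on the same 24 images, a paired test is one natural choice, and the sketch below computes the paired t statistic under that assumption. The scores are hypothetical placeholder values, not measurements from this study, and the function name `paired_t` is illustrative.

```python
from math import sqrt
from statistics import mean, stdev

def paired_t(a, b):
    """Paired t statistic and degrees of freedom for matched samples a and b."""
    d = [x - y for x, y in zip(a, b)]      # per-image differences
    n = len(d)
    t = mean(d) / (stdev(d) / sqrt(n))     # stdev is the sample std. deviation
    return t, n - 1

# Hypothetical per-image SSIM scores for two methods on four images.
proposed = [0.30, 0.35, 0.28, 0.40]
baseline = [0.25, 0.31, 0.27, 0.33]
t_stat, dof = paired_t(proposed, baseline)
```

The p-value is then obtained from the Student t distribution with the returned degrees of freedom, for example from a statistical table or a statistics library.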

4.3. Computational Complexity

The computational complexity is a critical factor in real-world applications. Therefore, the proposed algorithms were compared with Wafy’s Algorithm 1 [], as presented in Table 2. All the algorithms exhibit linear time complexity (), where represents the image dimensions. Among them, the second proposed algorithm (Algorithm 3) demonstrated the best performance in terms of execution time, as it required fewer computational steps than Algorithm 2 and Wafy’s Algorithm 1.

Table 2. Runtime comparison between Wafy’s Algorithm 1 and the two proposed algorithms. Experiments were conducted using MATLAB 2016 on a system with an Intel Core i7 processor (2.20 GHz) and 16 GB RAM.
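To make the linear-time claim concrete, the following sketch shows why random-grid encoding can run in time proportional to the number of pixels: each pixel is visited once and handled with constant work. It implements the classic (2,2) random-grid construction attributed to Kafri and Keren, not the paper's Algorithm 2 or Algorithm 3, and assumes the convention 1 = black (opaque), 0 = white (transparent). The function names are hypothetical.

```python
import random


def kafri_random_grid_2_2(secret, rng=None):
    """Encode a binary secret image into two random-grid shares.

    Assumed convention: 1 = black, 0 = white. One pass, O(1) work per pixel.
    """
    rng = rng or random.Random()
    share1, share2 = [], []
    for row in secret:
        # First share: an independent fair coin flip for each pixel.
        r1 = [rng.randint(0, 1) for _ in row]
        # Second share: copy for a white secret pixel, complement for black.
        r2 = [b if s == 0 else 1 - b for s, b in zip(row, r1)]
        share1.append(r1)
        share2.append(r2)
    return share1, share2


def stack(share_a, share_b):
    """Superimpose two shares; stacking is modeled as a pixel-wise logical OR."""
    return [[p | q for p, q in zip(ra, rb)] for ra, rb in zip(share_a, share_b)]
```

In this sketch, every black secret pixel is black in the stacked result, while a white secret pixel stacks to the random value of the first share, which is the source of the contrast between the two regions.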

5. Conclusions

This paper proposes two novel algorithms for random grid visual cryptography schemes, denoted as and , specifically developed to address the thin-line problem (TLP). Although the TLP has been previously examined in the context of multi-secret-image visual cryptography schemes (), which are known to suffer from codebook-related issues, it has not yet been investigated within the framework of .

With respect to the thin-line problems (TLP-1, TLP-2, and TLP-3), the proposed algorithms successfully resolved TLP-2 and TLP-3 and offered partial improvements for TLP-1. Moreover, the algorithms demonstrated enhanced visual quality for both binary and grayscale images. A comprehensive visual and quantitative evaluation was conducted to assess their performance, and the results were benchmarked against existing algorithms. The findings indicate that the proposed approaches outperform the previous algorithms in terms of overall performance.

Future work will aim to further enhance the visual quality of the reconstructed images, particularly by more effectively addressing TLP-1, and may focus on extending the proposed algorithms through formal parameter optimization within the framework of weak security. This would involve developing strategies to systematically tune algorithmic parameters based on quantitative measurements of share randomization. The objective would be to maximize the randomness score of the shares while simultaneously achieving optimal SSIM values, with particular attention to preserving the distribution of gray levels and maintaining fine structural details in the reconstructed image.

The author wishes to express gratitude to the reviewers for their valuable comments.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The author declares no conflicts of interest.

References

- Naor, M.; Shamir, A. Visual cryptography. In Advances in Cryptology, EUROCRYPT '94; De Santis, A., Ed.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 1995; Volume 950, pp. 1–12.

- Yang, N. New visual secret sharing schemes using probabilistic method. Pattern Recognit. Lett. 2004, 25, 481–494.

- Hou, Y.; Tu, S. A visual cryptographic technique for chromatic images using multi-pixel encoding method. J. Res. Pract. Inf. Technol. 2005, 37, 179–191.

- Yan, B.; Xiang, Y.; Hua, G. Improving the visual quality of size-invariant visual cryptography for grayscale images: An analysis-by-synthesis (AbS) approach. IEEE Trans. Image Process. 2019, 28, 896–911.

- Sun, R.; Fu, Z.; Yu, B. Size-invariant visual cryptography with improved perceptual quality for grayscale image. IEEE Access 2020, 8, 163394–163404.

- Wu, X.; Fang, J.; Yan, W. Contrast optimization for size invariant visual cryptography scheme. IEEE Trans. Image Process. 2023, 32, 2174–2189.

- Feng, L.; Teng, G.; ChuanKun, W.; Lina, Q. Improving the visual quality of size invariant visual cryptography scheme. J. Vis. Commun. Image Represent. 2005, 23, 331–342.

- Kafri, O.; Keren, E. Encryption of pictures and shapes by random grids. Opt. Lett. 1987, 12, 377–379.

- Shyu, S. Visual cryptograms of random grids for general access structures. IEEE Trans. Circuits Syst. Video Technol. 2013, 23, 414–424.

- Wafy, M. Random grid visual cryptography scheme based on block encoding. Int. J. Inf. Technol. 2024, 17, 2513–2521.

- Shyu, S. Visual cryptograms of random grids for threshold access structures. Theor. Comput. Sci. 2015, 565, 30–49.

- Shyu, S. Image encryption by random grids. Pattern Recognit. 2007, 40, 1014–1031.

- Hu, H.; Shen, G.; Fu, Z.; Yu, B. Improved contrast for threshold random-grid-based visual cryptography. KSII Trans. Internet Inf. Syst. 2018, 12, 3401–3420.

- Ding, K.; Ma, K.; Wang, S.; Simoncelli, E.P. Comparison of full-reference image quality models for optimization of image processing systems. Int. J. Comput. Vis. 2021, 129, 1258–1281.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Elmer, J. Quality Assessment Halftone Images. Ph.D. Thesis, Linköpings Universitet, Linköping, Sweden, 2023.

- Available online: https://www.kaggle.com/datasets/sherylmehta/kodak-dataset (accessed on 1 June 2024).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).