Abstract

The collaborative construction of large-scale, diverse datasets is crucial for developing high-performance machine learning models. However, such collaboration faces significant challenges, including ensuring data security, protecting participant privacy, maintaining dataset quality, and aligning economic incentives among multiple stakeholders. Effective risk management is therefore essential to systematically identify, assess, and mitigate the risks associated with data collaboration. This study proposes a federated blockchain-based framework that manages multiparty dataset collaboration securely and transparently and explicitly incorporates risk management practices. The framework involves six core entities, namely the key distribution center (KDC), researcher (RA), data owner (DO), consortium blockchain, dataset evaluation platform, and InterPlanetary File System (IPFS) decentralized storage, which together support secure, privacy-preserving, and high-quality dataset collaboration. The framework uses blockchain technology to guarantee the traceability and immutability of data transactions and integrates token-based incentives that encourage data contributors to provide high-quality datasets. To mitigate dataset quality risks, we introduce a categorical dataset quality assessment method that evaluates datasets through label reordering. We validate this approach on both a publicly available UCI dataset and a privately constructed dataset. Finally, we implement the proposed management system on a consortium blockchain infrastructure and benchmark its performance against existing methods, demonstrating improvements in security, reliability, risk mitigation, and incentive alignment in dataset collaboration.

1. Introduction

1.1. Overview

The principle “garbage in, garbage out” is widely cited in the machine learning community, where dataset quality is typically the most important factor in determining the effectiveness of a machine learning system [1]. Without sufficient high-quality training data, high-performance machine learning models are out of reach. In recent years, large models (e.g., GPT [2], BERT [3]) have demonstrated strong capabilities and potential in various fields. Training these models relies on large-scale, diverse datasets, which requires multiple parties to contribute original data and break down data silos. Collaborative machine learning, represented by federated learning [4], is considered an attractive paradigm for addressing this problem. Federated learning enables multiple participants to train models collaboratively while preserving data privacy, thereby supporting better models for business decisions and economic gains. Such collaborative data sharing has been successfully applied in healthcare [5], asset valuation [6], and smart agriculture [7]. However, participants benefit from federated learning only if all parties share the same business requirements and have a genuine interest in accessing other participants’ data, a condition that does not hold for many machine learning tasks. For instance, an e-commerce platform may need to aggregate customer order data from merchants in different sectors, such as digital products, apparel, and beauty, to jointly train a general product recommendation system. 
However, because digital product, apparel, and beauty companies operate in distinct industries and their data attributes differ substantially, these participants may be unwilling to share enterprise-specific data through collaborative training, since they gain little from one another’s data. To address such situations, we propose a generic blockchain-based solution.

1.2. Key Challenges

Building high-quality ML datasets with multiparticipant collaboration is not an easy task, and various issues need to be addressed. The main challenges are listed below.

- (1)

- Data Security and Privacy: While some data can be made publicly available, other data must remain confidential. For instance, disclosing enterprise order information together with customers’ addresses, contact details, and other private data could significantly damage a company’s reputation. Existing centralized data management does not give data owners sufficient confidence in its security, and because it relies on a central entity, a single point of failure is difficult to avoid [8]. Additionally, when data are stored on conventional cloud servers, the absence of technical safeguards (e.g., data identification and traceability) can lead to ambiguous copyright and information leakage.

- (2)

- No Generic or Fair Incentives: Data collection is costly, and the collected data often hold significant commercial value. In the absence of a shared business need, participants have little incentive to contribute their datasets unconditionally. Furthermore, any incentive mechanism must keep all economic rewards open, transparent, and traceable to prevent a crisis of confidence arising from potential fraud [9].

- (3)

- Lack of Reliable Dataset Quality Assessment Methods: Low-quality datasets often degrade the performance of machine learning models [9,10]. Incorporating datasets without thoroughly assessing their quality is likely to cause model failure and waste valuable resources, including time and money. Most conventional approaches to dataset quality evaluation remain theoretical, offering only a superficial assessment along a limited set of dimensions such as accuracy, completeness, and timeliness, which limits their reliability.

1.3. Solution

- (1)

- Addressing Challenge 1: Blockchain technology addresses the trust and security issues of centralized systems [10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26]. As a distributed ledger, it eliminates the single point of failure [10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26]. We establish trust among participants through cryptographic techniques and consensus protocols, which guarantee the immutability and traceability of all data transactions recorded on the chain. Furthermore, smart contracts govern all data operations, from invocation to storage, and record them on the blockchain, directly mitigating the ambiguous copyright and information leakage risks that are prevalent in conventional cloud storage.

- (2)

- Addressing Challenge 2: Our framework incorporates a token-based incentive mechanism built on smart contracts that encourages participation and provides fair and transparent economic rewards to data contributors. Each token reward generates a corresponding transaction credential on the blockchain that can be audited at any time, keeping the entire process open and traceable, building confidence, and preventing disputes over rewards.

- (3)

- Addressing Challenge 3: To counter the “garbage in, garbage out” risk and the resulting model underperformance, we move beyond superficial, theoretical quality metrics and introduce a practical method for assessing the quality of categorical datasets based on label reordering. The method uses the intrinsic strength of the association between a dataset’s features and its labels as its assessment metric. It provides a robust tool for scrutinizing participant-provided datasets so that only high-quality data are accepted and rewarded, which also mitigates the risk of participants obtaining token rewards for subpar datasets and prevents the waste of valuable resources.

1.4. Our Contributions

Building on blockchain [10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26], we implement a secure, practical, privacy-preserving, and rewarding dataset sharing scheme and propose a reliable quality assessment method for categorical datasets. The main contributions of this paper are as follows.

- (1)

- To address the challenges of data security, privacy, and the lack of fair incentives [27,28,29,30], and in response to data providers’ concerns about the security and privacy of their datasets, we propose a blockchain-based scheme for decentralized trust management and secure usage control. The scheme handles all data operations and management through smart contracts and includes a fair and equitable incentive system based on participants’ credit values, thereby encouraging the provision of high-quality datasets.

- (2)

- To overcome the lack of reliable dataset quality assessment methods [31,32,33,34,35,36], we propose a two-part quality evaluation method for categorical datasets based on label reordering, consisting of a correlation coefficient test and a performance evaluation test. The abstract question of how strongly features and labels are associated is made measurable by observing the trends in correlation coefficients, model performance, and feature contributions as labels are reordered. This comprehensive evaluation of categorical datasets helps ensure that each participant provides high-quality data.

- (3)

- To validate the feasibility and effectiveness of the proposed solutions, we conducted extensive experiments. We simulated a complete evaluation process using the UCI Dry Bean Dataset as a high-quality sample and the self-constructed Toy Dataset as a low-quality sample, showing that the proposed categorical dataset quality evaluation method correctly judges dataset quality. Furthermore, we developed a complete dataset management scheme on the consortium blockchain platform FISCO BCOS and compared its performance with existing schemes, confirming the feasibility of our approach.
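The label-reordering idea behind contribution (2) can be illustrated with a small sketch. It assumes a single numeric feature, uses the correlation ratio η² as a stand-in for the paper’s correlation coefficient (the exact coefficient is not specified in this section), and shuffles a growing proportion of the labels; the helper names and the synthetic data are hypothetical. For a dataset whose feature genuinely depends on the labels, the association should fall as more labels are reordered, whereas a dataset with no feature–label association stays near zero throughout.

```python
import random
from statistics import fmean
from typing import List, Sequence


def correlation_ratio(feature: Sequence[float], labels: Sequence[int]) -> float:
    """Correlation ratio eta^2: share of the feature's variance explained by the labels."""
    grand_mean = fmean(feature)
    total = sum((v - grand_mean) ** 2 for v in feature)
    if total == 0:
        return 0.0
    groups = {}
    for value, label in zip(feature, labels):
        groups.setdefault(label, []).append(value)
    between = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups.values())
    return between / total


def reorder_labels(labels: Sequence[int], fraction: float, rng: random.Random) -> List[int]:
    """Shuffle the labels among a randomly chosen `fraction` of positions (hypothetical helper)."""
    reordered = list(labels)
    k = round(fraction * len(reordered))
    positions = rng.sample(range(len(reordered)), k)
    values = [reordered[i] for i in positions]
    rng.shuffle(values)
    for i, v in zip(positions, values):
        reordered[i] = v
    return reordered


def association_trend(feature, labels, fractions=(0.0, 0.25, 0.5, 0.75, 1.0), seed=0):
    """Feature-label association after reordering each proportion of the labels."""
    rng = random.Random(seed)
    return [correlation_ratio(feature, reorder_labels(labels, f, rng)) for f in fractions]


# Synthetic example: three classes, one informative and one uninformative feature.
data_rng = random.Random(42)
labels = [i % 3 for i in range(300)]
strong = [label + data_rng.gauss(0, 0.1) for label in labels]  # tracks the label
weak = [data_rng.gauss(0, 1) for _ in labels]                  # ignores the label

print([round(v, 3) for v in association_trend(strong, labels)])
print([round(v, 3) for v in association_trend(weak, labels)])
```

In this sketch a steep decline from a high starting value suggests a strong feature–label link, while a flat trend near zero suggests labels that carry little information about the feature; how the paper turns such trends into an accept/reject decision is not shown here.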

The paper is organized as follows. Section 2 outlines the work related to the key aspects of the paper. The details of our proposed scheme are presented in Section 3. The feasibility of the proposed scheme is demonstrated in Section 4 by simulating the complete dataset quality evaluation process with self-built datasets and public datasets and by performing performance evaluations of the main operations. Finally, Section 5 concludes the paper.

2. Related Work

2.1. Blockchain-Based Secure Data Sharing

Blockchain was first described by [37] in 2008 as the distributed ledger underlying the Bitcoin cryptocurrency. Owing to its attractive features, blockchain technology is widely used in healthcare, the Internet of Things, and smart infrastructure [38]. Blockchain enables decentralized data sharing and immutably records data transactions between data providers and data requesters. At the same time, data exchanges can be monitored, and transaction histories are kept across nodes in a distributed, leaderless manner.

To enable effective data sharing and privacy protection for intelligent transportation devices in intelligent transportation systems (ITSs), a blockchain-based multi-keyword search protocol for ITS data was proposed that improves search efficiency and privacy protection [39]. To enable the sharing of personal health records among patients, doctors, and trusted medical device platforms, a module chaining-based PHRE management and sharing scheme was proposed that provides evidence for validating information indexes through zero-knowledge proofs [40]. For the realistic scenario of asset transfer between enterprises, a secure and effective provable data possession scheme was proposed that ensures data integrity during transfer through the special data structure of blockchain [41]. Silva et al. [42] proposed a blockchain-based hierarchical data sharing framework (BHDSF) that provides fine-grained access control and efficient encrypted retrieval for secure IoT data sharing. To strengthen privacy protection during data sharing, Sim et al. [43] proposed an edge-closed chain authorization secure data access control scheme that enforces fair accountability in smart grid data sharing.

Although the above methods secure data sharing, most users will not share their data unconditionally, a problem several researchers have already noted. Spanaki et al. [44] proposed incentivizing users to participate in collaborative machine learning tasks by evaluating participants’ rewards based on Shapley values and the information gained on model parameters, but they discussed only the incentive scheme, not participants’ privacy protection. Wang et al. [45] constructed a blockchain-based privacy-preserving and rewarding private data sharing scheme (BPRPDS) for the Internet of Things (IoT), using deniable ring signatures and Monero to exclude disputes arising from anonymous private data sharing transactions. Wang et al. [46] designed an efficient, privacy-preserving, quality-aware incentive scheme based on blockchain, building a knowledge discovery protocol with lightweight encryption and task allocation strategies; the scheme also improves system efficiency by rewarding computing nodes appropriately. However, these incentive schemes do not consider users’ historical contributions or reputation values, which can compromise users’ rights and interests.

2.2. Dataset Quality Evaluation

Traditional dataset quality evaluation methods [47] are primarily based on dimensions such as accuracy, completeness, validity, consistency, and reliability. However, most of these evaluations remain theoretical and are difficult to measure concretely in practice. Wen et al. [48] argue that the specific usage scenarios of a dataset must be considered when assessing its quality across dimensions and that different domains require distinct evaluation methods. In light of this, we summarize several approaches to dataset quality assessment. Wu et al. [49] assessed categorical datasets from three perspectives, namely data adequacy, class balance, and labeling accuracy, but their main contribution is improving dataset quality, and they do not discuss quality assessment in depth. Xuan et al. [50] evaluated the quality of an image dataset by analyzing its annotation files from six perspectives, such as annotation completeness, validity of annotation categories, and uniqueness of annotation categories; each indicator is compared with a threshold, and an evaluation report is produced. Xue et al. [51], aiming to improve the automatic classification of biological cell images with deep learning, improved dataset quality by removing noisy data and increasing the dataset’s volume.

Several studies have begun to evaluate dataset quality from the perspective of the intrinsic connections within the data. Yang et al. [52] proposed a method for assessing the quality of autonomous network datasets based on a permutation test: the dataset is divided into an observation part and a label part, the labels are permuted in different proportions, and dataset quality is judged from each model’s performance and the resulting p-values. However, the method was validated only on binary classification datasets, so its suitability for multiclass datasets remains unverified. You and Zhang’s team [53,54] discussed the quality of network traffic datasets in terms of the intrinsic connection between data quality and label quality.
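A label-permutation test of the kind described for Yang et al. [52] can be sketched in a few lines. This is a generic illustration, not that paper’s implementation: the score is the resubstitution accuracy of a simple nearest-centroid classifier (an assumption chosen to keep the sketch dependency-free), and the p-value uses the common (count + 1)/(n + 1) estimate. Because the classifier handles any number of classes, the same procedure applies unchanged to multiclass data.

```python
import random
from statistics import fmean
from typing import Sequence, Tuple


def centroid_accuracy(X: Sequence[Sequence[float]], y: Sequence[int]) -> float:
    """Resubstitution accuracy of a nearest-centroid classifier (illustrative score only)."""
    classes = sorted(set(y))
    centroids = {}
    for c in classes:
        rows = [x for x, label in zip(X, y) if label == c]
        centroids[c] = [fmean(column) for column in zip(*rows)]

    def predict(x):
        return min(classes, key=lambda c: sum((a - b) ** 2 for a, b in zip(x, centroids[c])))

    return sum(predict(x) == label for x, label in zip(X, y)) / len(y)


def label_permutation_test(X, y, n_permutations: int = 200, seed: int = 0) -> Tuple[float, float]:
    """Return (observed score, permutation p-value) for the feature-label association."""
    rng = random.Random(seed)
    observed = centroid_accuracy(X, y)
    permuted = list(y)
    at_least_as_good = 0
    for _ in range(n_permutations):
        rng.shuffle(permuted)  # break any feature-label link while keeping class counts
        if centroid_accuracy(X, permuted) >= observed:
            at_least_as_good += 1
    return observed, (at_least_as_good + 1) / (n_permutations + 1)


data_rng = random.Random(1)
y = [i % 3 for i in range(150)]
X_good = [[c + data_rng.gauss(0, 0.2), 2 * c + data_rng.gauss(0, 0.2)] for c in y]
X_bad = [[data_rng.gauss(0, 1), data_rng.gauss(0, 1)] for _ in y]

print(label_permutation_test(X_good, y))  # high accuracy, small p-value
print(label_permutation_test(X_bad, y))   # accuracy near chance, p-value typically large
```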

3. Scheme Overview

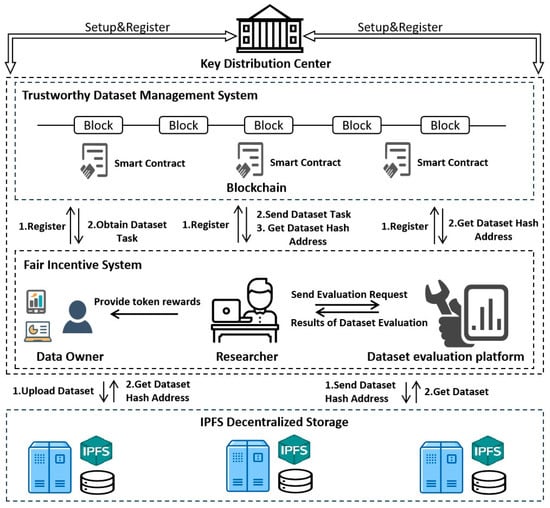

Our proposed blockchain-based scheme for constructing high-quality ML datasets has the architecture shown in Figure 1, which consists of six entities: the key distribution center (KDC), researcher (RA), data owner (DO), consortium blockchain, dataset evaluation platform, and IPFS decentralized storage. Some notations used in the scheme are shown in Table 1.

Figure 1.

Architecture of the proposed model.

Table 1.

Summary of blockchain-based secure data sharing approaches.

- Key distribution center (KDC): usually a trusted third party that distributes corresponding certificates and keys for registered users after they complete the corresponding registration.

- Data owner (DO): an individual or company that owns the dataset required by the RA and may be an honest participant who provides a high-quality dataset or a malicious participant who provides a poor dataset.

- Researcher (RA): usually the leader of a machine learning project that needs many high-quality datasets for model training and is willing to pay a fee to the DO for access to the datasets.

- Blockchain: Blockchain is an entity that protects the privacy and security of datasets. By deploying well-designed smart contracts, DOs can set up access policies for datasets on the blockchain, and RAs can obtain the desired datasets by paying a certain number of tokens, both of which remain anonymous throughout the entire data-sharing transaction. We use consortium blockchain to ensure better privacy and scalability of the proposed scheme.

- Decentralized storage: The InterPlanetary File System (IPFS) is a decentralized storage system based on content addressing, which ensures the security of dataset storage while relieving the storage pressure on the blockchain.

- Dataset evaluation platform: provides a feasible quality evaluation method for categorized datasets. Through this platform, the RA can conduct quality checks on datasets provided by DOs and determine whether to reward them with corresponding tokens based on the results of the evaluation.

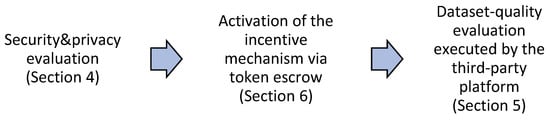

We first built a secure and trustworthy dataset management system based on a federated blockchain, which decentralizes the security management of all uploaded datasets and ensures that all operations on datasets are traceable. Second, we provided a reliable categorized dataset quality evaluation method in the dataset evaluation platform to ensure that the datasets obtained by RAs were of high quality. Lastly, we established a fair incentive system to protect the basic interests of all the DOs who provide high-quality datasets. As shown in Figure 2, we elaborate on our proposed scheme in detail, covering aspects such as the dataset management system, categorized dataset quality evaluation method, and incentive system. Our security framework adheres to NIST SP 800-53 audit trail standards [55] and ISO/IEC 27001:2022 access control requirements [56]. PBFT consensus (Section 7.1) and attribute-based encryption (Section 4.3) were implemented following Hyperledger Fabric’s security guidelines [57].

Figure 2.

Secure incentive-driven data quality assessment pipeline.

4. Trustworthy Dataset Management System

4.1. Design Goals

Our goal was to develop a blockchain-based dataset management system that ensures robust security and trustworthiness, alleviating concerns of dataset owners regarding the integrity of their provided datasets while also preventing any unauthorized manipulation. We guaranteed the reliability of user identities, devised secure storage schemes for uploaded datasets, and enforced stringent access controls for all interactions with the datasets.

4.2. Reliable Identity Information

In contrast to the publicly accessible and transparent nature of a public blockchain, this scheme employs a consortium blockchain that implements a stringent participant access system, requiring authentication and real-name management for all participants.

Initially, the KDC initializes the system while the blockchain deploys the smart contracts. Data owners (DOs), researchers (RAs), and third-party dataset quality evaluation platforms (DEs) register with both the KDC and the blockchain. To complete registration and obtain a unique global identity ID, DOs intending to provide datasets and RAs seeking to acquire datasets must furnish their username, password (pswd), contact information CI, and status S.

CI refers to the user’s trusted contact information, such as an email address, phone number, or social media account, and S represents the user’s status, such as RA or DO. All entities participating in the system must provide this basic information during initial registration before they can access subsequent services. At the same time, the system generates a pseudonymous identity for each registered user to protect their real identity: a registered user securely submits their real identity to the system and selects a hash key with which a proprietary pseudonymous identity is computed.

Each pseudonymous identity is valid only within a specified time interval. The system then stores the real identity and the corresponding pseudonym in its membership list, where no party can tamper with them, and a random number is chosen as the secret value.
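A keyed-hash pseudonym bound to a validity interval can be sketched as follows. The section does not give the exact pseudonym formula, so this sketch assumes HMAC-SHA256 over the real identity and the interval bounds; the function names, the one-day interval, and the dictionary used as the membership list are hypothetical.

```python
import hashlib
import hmac
import secrets
import time
from typing import Dict, Optional, Tuple


def make_pseudonym(real_id: str, hash_key: bytes, valid_from: int, valid_until: int) -> str:
    """HMAC-SHA256 of the real identity and its validity interval (assumed construction)."""
    message = f"{real_id}|{valid_from}|{valid_until}".encode()
    return hmac.new(hash_key, message, hashlib.sha256).hexdigest()


def is_pseudonym_valid(valid_from: int, valid_until: int, now: Optional[int] = None) -> bool:
    """A pseudonym is usable only inside its [valid_from, valid_until) interval."""
    now = int(time.time()) if now is None else now
    return valid_from <= now < valid_until


# Membership list kept by the system: pseudonym -> (real identity, validity interval).
membership: Dict[str, Tuple[str, Tuple[int, int]]] = {}

user_key = secrets.token_bytes(32)   # the hash key chosen by the user
start = int(time.time())
end = start + 24 * 3600              # hypothetical one-day validity
pid = make_pseudonym("alice@example.com", user_key, start, end)
membership[pid] = ("alice@example.com", (start, end))
print(pid, is_pseudonym_valid(start, end))
```

Without the hash key, an observer who sees only the pseudonym cannot recompute it from a guessed identity, while the system can still resolve it through the membership list.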

The KDC is an authoritative third-party organization responsible for generating unique private key and public key pairs for each legitimate participating user upon completion of the initialization process in the key generation algorithm.

Let $G_1$ and $G_2$ be two groups of the same prime order $q$, where $G_1$ is an additive group and $G_2$ is a multiplicative group. Let $e: G_1 \times G_1 \rightarrow G_2$ be a map that satisfies the following conditions.

- (1)

- Bilinear: For all $P, Q \in G_1$ and all $a, b \in \mathbb{Z}_q^*$, $e(aP, bQ) = e(P, Q)^{ab}$.

- (2)

- Nondegenerate: The map does not send all pairs in $G_1 \times G_1$ to the identity in $G_2$; consequently, if $P$ is a generator of $G_1$, then $e(P, P)$ is a generator of $G_2$.

- (3)

- Computable: For any $P, Q \in G_1$, there exists an efficient algorithm to compute $e(P, Q)$.
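The three properties can be checked mechanically on a deliberately tiny, insecure example. Here $G_1$ is modeled as the additive group $\mathbb{Z}_{11}$ with generator 1, $G_2$ as the order-11 subgroup of $\mathbb{Z}_{23}^*$ generated by 4, and $e(a, b) = 4^{ab} \bmod 23$. These toy parameters are assumptions for illustration: the map is bilinear, nondegenerate, and computable, but discrete logarithms in it are trivial, so real schemes instead use pairings on pairing-friendly elliptic curves.

```python
Q_ORDER = 11   # prime order q of both groups
P_MOD = 23     # 23 = 2 * 11 + 1, so Z_23^* has a subgroup of order 11
GT_GEN = 4     # 4 = 2^2 generates that order-11 subgroup


def pair(a: int, b: int) -> int:
    """Toy bilinear map e: Z_11 x Z_11 -> <4> inside Z_23^* (insecure)."""
    return pow(GT_GEN, (a * b) % Q_ORDER, P_MOD)


def is_bilinear() -> bool:
    """Check e(aP, bQ) == e(P, Q)^(ab) for every P, Q in G1 and a, b in Z_q^*."""
    for P in range(Q_ORDER):
        for Q in range(Q_ORDER):
            for a in range(1, Q_ORDER):
                for b in range(1, Q_ORDER):
                    lhs = pair(a * P % Q_ORDER, b * Q % Q_ORDER)
                    rhs = pow(pair(P, Q), a * b, P_MOD)
                    if lhs != rhs:
                        return False
    return True


def is_nondegenerate() -> bool:
    """e(P, P) for the generator P = 1 must itself generate the order-q group G2."""
    g = pair(1, 1)
    return len({pow(g, k, P_MOD) for k in range(Q_ORDER)}) == Q_ORDER


print(is_bilinear(), is_nondegenerate())  # True True
```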

A bilinear mapping is admissible when it satisfies the above three properties, and we define the following two secure cryptographic hash functions:

After successful registration, each participant receives a public–private key pair. The dataset provider selects a random number as its private key and computes the corresponding public key from it, yielding the dataset provider’s public–private key pair. Similarly, the researcher chooses a random number as its private key and computes the corresponding public key, yielding the researcher’s public–private key pair. Users must store their private key (SK) securely while making their public key (PK) publicly available.
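The scheme’s own signature and verification equations are pairing based. As a stand-in, the sketch below shows the same key-pair, sign, and verify flow with a plain Schnorr signature in a small prime-order subgroup of $\mathbb{Z}_p^*$ ($p = 2039$, $q = 1019$, $g = 4$). These parameters are toy-sized assumptions with no real security, and the message format is hypothetical.

```python
import hashlib
import secrets
from typing import Tuple

P = 2039   # safe prime: P = 2 * Q + 1
Q = 1019   # prime order of the subgroup
G = 4      # generator of the order-Q subgroup (a quadratic residue other than 1)


def keygen() -> Tuple[int, int]:
    """Private key x in Z_q^*, public key X = g^x mod p."""
    x = secrets.randbelow(Q - 1) + 1
    return x, pow(G, x, P)


def challenge(commitment: int, message: bytes) -> int:
    """Hash of the commitment and message, kept as a full 256-bit integer."""
    return int.from_bytes(hashlib.sha256(commitment.to_bytes(2, "big") + message).digest(), "big")


def sign(x: int, message: bytes) -> Tuple[int, int]:
    k = secrets.randbelow(Q - 1) + 1   # fresh nonce for every signature
    r = pow(G, k, P)
    e = challenge(r, message)
    s = (k + x * e) % Q
    return e, s


def verify(pub: int, message: bytes, signature: Tuple[int, int]) -> bool:
    e, s = signature
    # g^s * X^(-e) = g^(k + x*e) * g^(-x*e) = g^k = r
    r = pow(G, s, P) * pow(pub, (-e) % Q, P) % P
    return challenge(r, message) == e


sk, pk = keygen()
msg = b"DatasetID=42|action=upload"
sig = sign(sk, msg)
print(verify(pk, msg, sig))                  # True
print(verify(pk, b"tampered message", sig))  # False
```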

The implementation steps are given in Algorithm 1. First, the system verifies that the user’s ID exists; if it is valid, the user is added to the corresponding set according to their attribute information and assigned a blockchain address derived from their public key $PK_u$. When the user engages in a transaction, a signature is generated for the transmitted message to ensure message integrity and authentication. Authentication succeeds if the following equation holds:

The correctness of a single verification is as follows:

| Algorithm 1. User setup and registration |
|---|
| **Require:** List of RegisteredUsers, List of AppliedUsers |
| **Ensure:** Blockchain address $Addr_{u.ID}$ for valid users |
| 1: for each user in RegisteredUsers do |
| 2: userInfo ← user.UploadInfo(username, pswd, CI, S) |
| 3: if Verify(userInfo) is false then |
| 4: return Registration Failed |
| 5: else |
| 6: $x, y$ ← RandomlyChooseFrom($\mathbb{Z}_q^*$) |
| 7: $PK_u$ ← $(P^x, h^y, Z = P^x h^y)$ |
| 8: $SK_u$ ← $(x, y)$ |
| 9: SendToUser(user, $SK_u$, $PK_u$, GenerateUniqueID()) |
| 10: end if |
| 11: end for |
| 12: |
| 13: for each user in AppliedUsers do |
| 14: if user.ID not in ID.list then |
| 15: return Join Network Failed |
| 16: else // user ID is in ID.list; generate the address |
| 17: $Addr_{u.ID}$ ← GenerateAddress($PK_u$, SID) |
| 18: return $Addr_{u.ID}$ |
| 19: end if |
| 20: end for |
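Algorithm 1 can be prototyped as ordinary Python to make its control flow concrete. This is a sketch under stated assumptions: the toy group parameters, the field checks in the hypothetical `verify_info`, the SHA-256-based `generate_address`, and the random hex string used as the unique ID are all illustrative, and an invalid registration is skipped rather than aborting the whole loop as `return Registration Failed` does in the listing. Only public keys are kept in the system’s ID list; private keys go to the user.

```python
import hashlib
import secrets
from typing import Dict, List, Optional, Tuple

P_MOD, Q = 2039, 1019   # toy safe prime and subgroup order (insecure, illustration only)
G, H = 4, 9             # two generators of the order-Q subgroup (P and h in Algorithm 1)
VALID_STATUS = {"RA", "DO", "DE"}


def verify_info(info: dict) -> bool:
    """Hypothetical Verify(userInfo): all fields present and S is a known role."""
    return all(info.get(k) for k in ("username", "pswd", "CI", "S")) and info["S"] in VALID_STATUS


def keygen() -> Tuple[Tuple[int, int], Tuple[int, int, int]]:
    """Lines 6-8: x, y from Z_q^*, SK_u = (x, y), PK_u = (P^x, h^y, P^x * h^y)."""
    x, y = secrets.randbelow(Q - 1) + 1, secrets.randbelow(Q - 1) + 1
    px, hy = pow(G, x, P_MOD), pow(H, y, P_MOD)
    return (x, y), (px, hy, px * hy % P_MOD)


def register(registered_users: List[dict]):
    """First loop of Algorithm 1: returns the public ID list and what is sent to each user."""
    id_list: Dict[str, Tuple[int, int, int]] = {}
    issued: Dict[str, tuple] = {}
    for info in registered_users:
        if not verify_info(info):
            continue  # registration fails for this user only
        sk, pk = keygen()
        uid = secrets.token_hex(8)                 # stands in for GenerateUniqueID()
        id_list[uid] = pk                          # public record kept by the system
        issued[info["username"]] = (uid, sk, pk)   # SendToUser(user, SK_u, PK_u, ID)
    return id_list, issued


def generate_address(pk: Tuple[int, int, int], sid: str) -> str:
    """Hypothetical GenerateAddress: first 20 bytes of SHA-256 over PK_u and SID."""
    return "0x" + hashlib.sha256(f"{pk}|{sid}".encode()).hexdigest()[:40]


def join_network(uid: str, sid: str, id_list: Dict[str, Tuple[int, int, int]]) -> Optional[str]:
    """Second loop of Algorithm 1 for one applicant; None means Join Network Failed."""
    if uid not in id_list:
        return None
    return generate_address(id_list[uid], sid)


users = [
    {"username": "alice", "pswd": "pw1", "CI": "alice@example.com", "S": "DO"},
    {"username": "bob", "pswd": "pw2", "CI": "bob@example.com", "S": "RA"},
    {"username": "mallory", "pswd": "", "CI": "m@example.com", "S": "DO"},  # fails Verify
]
id_list, issued = register(users)
alice_id = issued["alice"][0]
print(join_network(alice_id, "DO", id_list))
```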

4.3. Secure Dataset Storage

The value of datasets relies on their integrity and authenticity, which are crucial for both DOs and RAs. For DOs, it is imperative to avoid providing datasets that can be manipulated, deleted, or falsified. Similarly, any machine learning research based on erroneous data poses significant risks for RAs. Hence, ensuring the secure storage of datasets becomes paramount.

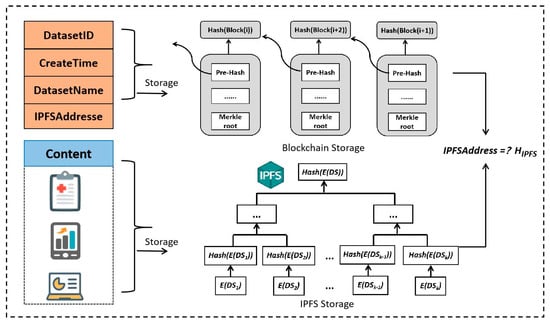

To ensure dataset privacy, the system first assigns a predetermined keyword w to the dataset DS uploaded by the owner. It then computes the hash H(w) and generates the encrypted dataset E(DS) with the AES encryption algorithm under key k. Because on-chain storage is limited, the encrypted dataset is stored through an IPFS service provider using the structure depicted in Figure 3.

Figure 3.

Blockchain group model.

We term the overall storage structure the blockchain group model; it is illustrated in Figure 3. The model uses a hybrid on-chain and off-chain storage strategy to achieve both security and efficiency. The encrypted dataset content E(DS) is stored off-chain on the IPFS network. As shown in the lower part of Figure 3, the encrypted dataset is split into fixed-size blocks ($E(DS_1)$, $E(DS_2)$, …), whose hash values are organized in a Merkle tree-like structure within IPFS to ensure data integrity. The root hash of this structure serves as the unique IPFS address ($H_{IPFS}$) of the entire dataset. The metadata and transaction records are stored on-chain. As depicted in the upper part of Figure 3, each block in the blockchain contains essential information such as the DatasetID, DatasetName, CreateTime, and, crucially, the IPFSAddress. This on-chain information acts as an immutable and traceable pointer to the off-chain data, and because each blockchain block is linked to its predecessor via a hash, the integrity of the entire transaction history is guaranteed.
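The split, hash, and root pipeline described here can be sketched as follows. It is a simplification under stated assumptions: block identifiers are plain SHA-256 hex digests and the tree is a binary Merkle tree, whereas IPFS encodes CIDs with multihash and multibase and builds its own DAG layout, so the root computed here is not a real IPFS address. The sketch shows the property the scheme relies on: changing a single bit of any block changes the root, which is what lets the on-chain IPFSAddress pin the off-chain content.

```python
import hashlib
from typing import List


def split_blocks(data: bytes, block_size: int) -> List[bytes]:
    """Split E(DS) into fixed-size blocks E(DS_1), E(DS_2), ... (the last may be shorter)."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def block_id(block: bytes) -> str:
    """Simplified content identifier: hex SHA-256 of the block (real CIDs add multihash/multibase)."""
    return hashlib.sha256(block).hexdigest()


def merkle_root(leaf_ids: List[str]) -> str:
    """Pairwise-hash leaf digests up to a single root; an odd last node is paired with itself."""
    if not leaf_ids:
        raise ValueError("no blocks")
    level = [bytes.fromhex(h) for h in leaf_ids]
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])
        level = [hashlib.sha256(level[i] + level[i + 1]).digest() for i in range(0, len(level), 2)]
    return level[0].hex()


ciphertext = bytes(range(256)) * 10     # 2560-byte stand-in for the AES output E(DS)
blocks = split_blocks(ciphertext, 1024)
root = merkle_root([block_id(b) for b in blocks])

tampered = bytearray(ciphertext)
tampered[2100] ^= 0x01                  # flip one bit in the third block
tampered_root = merkle_root([block_id(b) for b in split_blocks(bytes(tampered), 1024)])
print(len(blocks), root != tampered_root)  # 3 True
```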

The encrypted dataset E(DS) is divided into fixed-size data blocks $E(DS_1), E(DS_2), \ldots$, and a content identifier (CID) is generated for each block by hashing its content:

$$CID_i = H(E(DS_i))$$

The data blocks and their CIDs are stored across nodes in the IPFS network, while the original dataset file retains the CID of each block. On the IPFS agent side, an access tree $\mathcal{T}$ is generated from the formulated access policy A. For each node $x$ of the access tree, a polynomial $q_x$ is assigned, starting from the root node R and proceeding from top to bottom.
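The top-down polynomial assignment over the access tree follows the threshold secret-sharing pattern familiar from ciphertext-policy attribute-based encryption (CP-ABE). The sketch assumes that each internal node has a threshold $k_x$ and a polynomial of degree $k_x - 1$, that children are indexed 1, 2, …, and that arithmetic is over the prime field $\mathbb{Z}_{2^{127}-1}$; the tree, attribute names, and helper functions are hypothetical. Unlike a real CP-ABE scheme, which uses leaf shares only inside group exponents, the sketch keeps them as plain integers so that recovery of $q_R(0) = s$ by an authorized attribute set can be checked directly.

```python
import secrets
from dataclasses import dataclass, field
from typing import List, Optional, Set, Tuple

PRIME = 2**127 - 1  # Mersenne prime used as the field modulus


@dataclass
class Node:
    threshold: int = 1                                    # k_x (1 = OR, number of children = AND)
    children: List["Node"] = field(default_factory=list)
    attribute: Optional[str] = None                       # set only on leaf nodes
    share: Optional[int] = None                           # q_x(0), filled in for leaves


def eval_poly(coeffs: List[int], x: int) -> int:
    return sum(c * pow(x, i, PRIME) for i, c in enumerate(coeffs)) % PRIME


def assign_polynomials(node: Node, constant: int) -> None:
    """Top-down: q_x(0) = constant; each child gets q_child(0) = q_x(index(child))."""
    if node.attribute is not None:
        node.share = constant
        return
    coeffs = [constant] + [secrets.randbelow(PRIME) for _ in range(node.threshold - 1)]
    for index, child in enumerate(node.children, start=1):
        assign_polynomials(child, eval_poly(coeffs, index))


def lagrange_at_zero(points: List[Tuple[int, int]]) -> int:
    """Interpolate the polynomial through `points` and evaluate it at x = 0."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (-xj) % PRIME
                den = den * (xi - xj) % PRIME
        total = (total + yi * num * pow(den, -1, PRIME)) % PRIME
    return total


def recover(node: Node, attributes: Set[str]) -> Optional[int]:
    """Bottom-up: rebuild q_x(0) if the attribute set satisfies the subtree, else None."""
    if node.attribute is not None:
        return node.share if node.attribute in attributes else None
    points = []
    for index, child in enumerate(node.children, start=1):
        value = recover(child, attributes)
        if value is not None:
            points.append((index, value))
        if len(points) == node.threshold:
            return lagrange_at_zero(points)
    return None


# Hypothetical policy: (role:RA OR role:DE) AND dept:vision
tree = Node(threshold=2, children=[
    Node(threshold=1, children=[Node(attribute="role:RA"), Node(attribute="role:DE")]),
    Node(attribute="dept:vision"),
])
s = secrets.randbelow(PRIME)  # the secret s with q_R(0) = s
assign_polynomials(tree, s)
print(recover(tree, {"role:RA", "dept:vision"}) == s)  # True
print(recover(tree, {"role:RA"}))                      # None: policy not satisfied
```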

When defining the polynomials, let $d_x$ denote the degree (highest term) of $q_x$. For the root, a secret number $s$ is randomly selected such that $q_R(0) = s$, and the remaining coefficients of $q_R$ are chosen at random. For every other node $x$ in the access tree $\mathcal{T}$, the constant term is fixed by its parent:

$$q_x(0) = q_{parent(x)}(index(x))$$

where $index(x)$ denotes the index of node $x$ among the children of its parent in the access tree. The other coefficients of $q_x$ are then selected at random to complete the polynomial of that node. The encrypted ciphertext of the dataset DS is:

The storage index hash of the encrypted dataset and the ciphertext are returned to the system. After receiving them, the system selects a random number and performs the following computation:

It then obtains the key ciphertext CT:

After the DO stores the dataset content into IPFS, it uploads the returned IPFS storage address IPFSAddress, DatasetID, DatasetName, CreateTime to the blockchain network:

generating trusted depository information to ensure the traceability of the stored data:

The storage structure of the blockchain is illustrated in Figure 3: each block contains the hash value of its preceding block, so any attempt to tamper with the data in a block results in a hash mismatch. To alter data recorded on the chain without detection, an unauthorized user would need to control a large share of the consensus nodes (a 51% attack in proof-of-work systems, or more than one-third of the nodes under PBFT), which is highly improbable. Furthermore, because cryptographic hash functions are collision resistant, it is computationally infeasible to find two distinct files with the same hash value. These properties make forgery computationally infeasible and ensure the integrity and authenticity of the data on the chain.
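The tamper-evidence argument can be demonstrated with a minimal hash chain of depository records. The sketch assumes JSON-serialized records carrying the DatasetID, DatasetName, CreateTime, and IPFSAddress fields named in this section, with SHA-256 block hashes; the record values are hypothetical placeholders. It deliberately ignores consensus and replication, which are what make rewriting the whole chain impractical on a real consortium network.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64


def block_hash(prev_hash: str, record: Dict) -> str:
    payload = json.dumps({"prev_hash": prev_hash, "record": record}, sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()


def append_block(chain: List[Dict], record: Dict) -> None:
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({"prev_hash": prev_hash, "record": record, "hash": block_hash(prev_hash, record)})


def verify_chain(chain: List[Dict]) -> bool:
    """Recompute every hash and check that each block points at its predecessor."""
    expected_prev = GENESIS
    for block in chain:
        if block["prev_hash"] != expected_prev:
            return False
        if block["hash"] != block_hash(block["prev_hash"], block["record"]):
            return False
        expected_prev = block["hash"]
    return True


chain: List[Dict] = []
append_block(chain, {"DatasetID": "ds-001", "DatasetName": "orders",
                     "CreateTime": "2024-01-15T08:00:00Z", "IPFSAddress": "<placeholder-root-hash>"})
append_block(chain, {"DatasetID": "ds-002", "DatasetName": "reviews",
                     "CreateTime": "2024-01-16T09:30:00Z", "IPFSAddress": "<placeholder-root-hash>"})
print(verify_chain(chain))  # True

chain[0]["record"]["DatasetName"] = "forged"  # edit an on-chain record
print(verify_chain(chain))  # False: block 0's hash no longer matches
chain[0]["hash"] = block_hash(chain[0]["prev_hash"], chain[0]["record"])  # attacker re-hashes block 0
print(verify_chain(chain))  # False: block 1 still points at the old hash
```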

4.4. Access Control

As previously mentioned, machine learning datasets typically involve a substantial amount of sensitive information and possess significant economic value. Consequently, the data owner aims to restrict access to these datasets and requires all users to obtain approval from the data owner before accessing them. Therefore, this scheme implements a stringent access control regime while ensuring traceability through trusted authorization information.

The data owner (DO) formulates the access policy based on the attribute sets provided during user registration. To obfuscate this policy, the DO also constructs an interference attribute set M and selects a subset of its attributes to form an interference policy. Through Boolean-equivalent substitution, the interference policy is merged into the correct access policy, and the result serves as the final access policy. Because the substitution is Boolean-equivalent, any user who satisfies the access policy prespecified by the dataset owner also satisfies the final access policy. To decrypt an encrypted dataset during an access request, users additionally require their own private keys.
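The section does not specify which Boolean-equivalent substitution is used. One option that meets the stated guarantee is the absorption law $A \lor (A \land I) \equiv A$, where $A$ is the real policy and $I$ the interference policy. The sketch below, with hypothetical attribute names, checks by truth table that the merged policy admits exactly the same attribute sets as $A$, whereas naively conjoining $I$ would not.

```python
from itertools import product
from typing import Callable, FrozenSet, Sequence

Policy = Callable[[FrozenSet[str]], bool]

UNIVERSE = ["role:RA", "dept:vision", "org:partner", "decoy:x1", "decoy:x2"]


def real_policy(attrs: FrozenSet[str]) -> bool:
    return "role:RA" in attrs and ("dept:vision" in attrs or "org:partner" in attrs)


def interference_policy(attrs: FrozenSet[str]) -> bool:
    # Built from decoy attributes drawn from the interference set M.
    return "decoy:x1" in attrs or "decoy:x2" in attrs


def final_policy(attrs: FrozenSet[str]) -> bool:
    # Absorption law: A or (A and I) is equivalent to A.
    return real_policy(attrs) or (real_policy(attrs) and interference_policy(attrs))


def naive_policy(attrs: FrozenSet[str]) -> bool:
    # Not Boolean-equivalent: would lock out legitimate users lacking decoy attributes.
    return real_policy(attrs) and interference_policy(attrs)


def equivalent(p1: Policy, p2: Policy, universe: Sequence[str]) -> bool:
    """Compare two policies on every subset of the attribute universe."""
    for bits in product([False, True], repeat=len(universe)):
        attrs = frozenset(a for a, keep in zip(universe, bits) if keep)
        if p1(attrs) != p2(attrs):
            return False
    return True


print(equivalent(real_policy, final_policy, UNIVERSE))  # True
print(equivalent(real_policy, naive_policy, UNIVERSE))  # False
```

A truth-table check grows exponentially with the number of attributes, so it is only practical for small illustrative policies like this one.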

After receiving a dataset access request, the system computes the corresponding access private key SK according to the attributes registered by each type of user. First, a random number is selected and used to compute a component that verifies the attributes of the accessing user. Similarly, a corresponding random number is chosen for each attribute and used to compute the user’s private key SK:

where D is used for key decryption and the remaining components are used for user information verification. Subsequently, SK is encrypted to obtain E(SK), and a deadline for user access is set. Finally, both E(SK) and the deadline are uploaded to the blockchain. The user initiating the dataset access request queries this information by invoking the corresponding smart contract interface and uses the private key obtained during preregistration to decrypt E(SK) and recover SK.

The RA initiates access requests to datasets provided by the DO based on specific machine learning task requirements:

The blockchain account address $Addr_{d}$ corresponds to the DO who uploaded the dataset, $Addr_{r}$ is the blockchain account address of the researcher requesting access, $SID$ denotes the attribute identifier of the RA, and $Timestamp_{r}$ is the time at which the researcher initiated the request. The request is also signed with the private key distributed by the KDC:

where is the access structure specific to this ciphertext that forms the trusted depository information for this researcher’s access to the dataset:

The trusted access information established by the RA for the dataset is securely transmitted to the corresponding DO. Only RAs that have made the required prepayment are granted access to the dataset. In advance, the DO records the identity of each authorized RA in the ciphertext of the dataset and grants authorization to the RA’s address through a smart contract. When an RA initiates an access request, a contract check is triggered; Algorithm 2 outlines the verification process.

| Algorithm 2. Access verification process |
| --- |
| Require: Requester’s Address requesterAddr, Data Owner’s Address ownerAddr, Requester’s Attribute ID SID, datasetID |
| Ensure: IPFSStorageAddress or an error message |
| 1: if IsOnAllowedList(SID) is false then |
| 2: return Error: No Access Privileges |
| 3: else |
| 4: GenerateAccessAuthorization(requesterAddr, ownerAddr); |
| 5: if IsAuthorized(requesterAddr) and HasCompletedPrepayment(requesterAddr) is true then |
| 6: GenerateAcceptAuthorization(ownerAddr); |
| 7: IPFSAddress, CT2 ← RetrieveDatasetInfo(datasetID); |
| 8: SendToUser(requesterAddr, IPFSAddress, CT2); |
| 9: GenerateSendAuthorization(ownerAddr); |
| 10: φ ← e(C, g^β D_0^{1/d}); |
| 11: if VerifyDecryptionKey(CT, H2(φ)) is true then |
| 12: return IPFSAddress |
| 13: else |
| 14: return Error: Decryption Failed |
| 15: end if |
| 16: else |
| 17: return Error: Address not Authorized or Prepayment Incomplete |
| 18: end if |
| 19: end if |
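The control flow of Algorithm 2 can be sketched in Python as follows. This is a minimal sketch under stated assumptions: the contract state is a plain in-memory object, the pairing computation and key check (steps 10 and 11) are abstracted into a caller-supplied `verify_decryption_key` callback, and every class, field, and function name here is hypothetical rather than the authors’ contract interface.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Set, Tuple

@dataclass
class AccessContract:
    """Hypothetical in-memory stand-in for the access-control smart contract."""
    allowed_attribute_ids: Set[str]
    authorized_addresses: Set[str]
    prepaid_addresses: Set[str]
    datasets: Dict[str, Tuple[str, bytes]]  # datasetID -> (IPFS address, CT2)
    log: list = field(default_factory=list)  # trusted depository records

    def verify_access(self, requester: str, owner: str, sid: str, dataset_id: str,
                      verify_decryption_key: Callable[[bytes], bool]) -> str:
        if sid not in self.allowed_attribute_ids:                      # steps 1-2
            return "Error: No Access Privileges"
        self.log.append(("AccessAuthorization", requester, owner))     # step 4
        if requester not in self.authorized_addresses or requester not in self.prepaid_addresses:
            return "Error: Address not Authorized or Prepayment Incomplete"  # step 17
        self.log.append(("AcceptAuthorization", owner))                # step 6
        ipfs_address, ct2 = self.datasets[dataset_id]                  # step 7
        self.log.append(("SendToUser", requester))                     # step 8
        self.log.append(("SendAuthorization", owner))                  # step 9
        # Steps 10-11: the pairing-based key check is abstracted as a callback.
        if verify_decryption_key(ct2):
            return ipfs_address                                        # step 12
        return "Error: Decryption Failed"                              # step 14
```

Keeping the cryptographic check behind a callback separates the authorization bookkeeping, which maps naturally onto contract storage, from the pairing arithmetic, which a real deployment would delegate to a cryptographic library.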

If the DO acknowledges the RA that initiated the access request, trusted depository information recording that the DO has granted access is first generated as evidence for subsequent tracing of the transaction:

The IPFS storage address of the complete dataset is then sent to the RA over a secure channel, and trusted depository information recording the transmission of this hash address is generated:

Upon receiving a request for the complete dataset through the IPFS storage address, the system must authenticate the user’s access rights and then perform the privacy-preserving calculations:

The result is sent to the blockchain smart contract for verification. If the user’s attribute set satisfies the access policy, the system then checks whether the request falls within the designated access period. For valid access, the key cipher CT and the IPFS storage address associated with the dataset are provided; otherwise, the attempt fails. Decryption of the dataset retrieved from IPFS proceeds recursively over the access tree. For each child node y in the access tree, p(y) is defined as the set of all nodes on the path from y to the root node R. For a given node x, a set is defined that contains all sibling nodes of x (including x itself). For the nodes in this set, the following holds:

The recursion is evaluated up to the root node R of the access tree. If the user’s attributes satisfy the access policy, the corresponding encrypted dataset E(DS) is obtained through hash indexing and sent to the accessing user via a secure channel. Upon receipt, the key cipher CT is tested: a verification value is computed using the private key SK, and if the verification equation holds, decryption is correct; otherwise, decryption fails.
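The recursive decryption over the access tree rests on threshold secret sharing with Lagrange interpolation. The sketch below shows that mechanism in the clear over a prime field: a secret is split top-down across a threshold tree and recovered bottom-up from the leaves whose attributes the user holds. This is a deliberate simplification, not the scheme itself: in CP-ABE the same interpolation is carried out in the exponent of bilinear-pairing values, which this toy omits, and the tree layout, field prime, and attribute names are assumptions.

```python
import random

P = 2**61 - 1  # a Mersenne prime used as a toy field modulus

class Node:
    """Threshold gate (k-of-n) or, if `attribute` is set, a leaf."""
    def __init__(self, threshold=1, children=(), attribute=None):
        self.threshold, self.children, self.attribute = threshold, list(children), attribute
        self.share = None

def share_secret(node, secret, rng):
    """Give node x the value q_x(0) = secret; child i receives q_x(i), i starting at 1."""
    node.share = secret
    coeffs = [secret] + [rng.randrange(P) for _ in range(node.threshold - 1)]
    for index, child in enumerate(node.children, start=1):
        value = sum(c * pow(index, k, P) for k, c in enumerate(coeffs)) % P
        share_secret(child, value, rng)

def lagrange_at_zero(i, indices):
    """Lagrange coefficient of point i evaluated at x = 0, modulo P."""
    num, den = 1, 1
    for j in indices:
        if j != i:
            num = num * (-j) % P
            den = den * (i - j) % P
    return num * pow(den, -1, P) % P

def recover(node, attrs):
    """Recursive analogue of node decryption: q_x(0), or None if x is unsatisfied."""
    if node.attribute is not None:
        return node.share if node.attribute in attrs else None
    found = {}
    for index, child in enumerate(node.children, start=1):
        value = recover(child, attrs)
        if value is not None:
            found[index] = value
    if len(found) < node.threshold:
        return None
    chosen = sorted(found)[: node.threshold]
    return sum(found[i] * lagrange_at_zero(i, chosen) for i in chosen) % P

# Hypothetical 2-of-3 policy: doctor, cardiology, (hospital_A AND researcher).
rng = random.Random(1)
tree = Node(2, [Node(attribute="doctor"), Node(attribute="cardiology"),
                Node(2, [Node(attribute="hospital_A"), Node(attribute="researcher")])])
secret = 123456789
share_secret(tree, secret, rng)
```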

5. Reliable Method for Quality Evaluation of Categorized Dataset

The quality of a dataset is a multifaceted concept, traditionally evaluated across several key dimensions such as accuracy, completeness, consistency, and timeliness. While these dimensions provide a crucial, static snapshot of data quality, they often fall short in assessing a dataset’s suitability for a specific machine learning task, which heavily depends on the predictive power of its features.

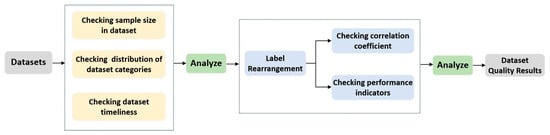

To address this gap, we propose a novel and dynamic method for evaluating categorized datasets that focuses on the intrinsic relationship between features and labels. Our approach, based on label rearrangement, moves beyond static checks to provide a practical, empirical measure of a dataset’s utility for model training. The complete evaluation process is illustrated in Figure 4.

Figure 4.

Dataset evaluation process.

Before performing the label rearrangement test, we conduct an initial check of the overall dataset in terms of the total number of samples, the class distribution, and the timeliness of the data.
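The initial check can be expressed as a simple gate applied before any rearrangement. In the minimal sketch below, the thresholds (minimum sample count, maximum majority-to-minority class ratio, and maximum data age) and the function name are hypothetical placeholders; the paper does not state the values it uses.

```python
from collections import Counter
from datetime import date

def initial_check(labels, collected_on, today,
                  min_samples=1000, max_imbalance=3.0, max_age_days=5 * 365):
    """Return a list of problems found; an empty list means the dataset passes."""
    problems = []
    if len(labels) < min_samples:
        problems.append("insufficient samples")
    counts = Counter(labels)
    if len(counts) < 2:
        problems.append("fewer than two classes")
    elif max(counts.values()) / min(counts.values()) > max_imbalance:
        problems.append("imbalanced classes")
    if (today - collected_on).days > max_age_days:
        problems.append("outdated data")
    return problems
```

Returning the full list of problems, rather than stopping at the first failure, lets a submitter see every reason a dataset was rejected.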

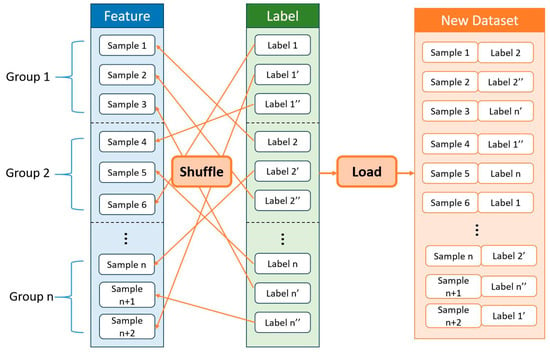

The label rearrangement process is illustrated in Figure 5. The dataset under evaluation is partitioned into Group 1, Group 2, Group 3, …, Group n according to the class labels to be predicted. In the evaluation procedure, the proportions of labels to be rearranged are set to 1%, 5%, 10%, 25%, and 50%. For each proportion, the corresponding share of samples is drawn from each group, and their feature values are randomly exchanged with those of samples from other groups, which is equivalent to exchanging their labels, to generate a new dataset.

Figure 5.

Label rearrangement.
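A minimal sketch of the rearrangement step, assuming integer class labels in a NumPy array: from each class group it draws the given fraction of samples and randomly permutes the labels among all drawn samples, so labels move across groups while the overall class counts stay unchanged. The function name `rearrange_labels` is hypothetical, and the paper does not specify whether a drawn sample may keep its own class by chance; in this sketch it can.

```python
import numpy as np

def rearrange_labels(y, fraction, rng):
    """Permute the labels of a stratified `fraction` of samples (hypothetical helper)."""
    y = np.asarray(y)
    picked = []
    for c in np.unique(y):
        members = np.flatnonzero(y == c)          # indices of group c
        k = int(round(fraction * members.size))   # share drawn from this group
        if k > 0:
            picked.append(rng.choice(members, size=k, replace=False))
    y_new = y.copy()
    if picked:
        picked = np.concatenate(picked)
        y_new[picked] = rng.permutation(y[picked])  # labels cross group boundaries
    return y_new
```

Because the drawn labels are only permuted, the class distribution checked in the initial inspection is preserved in every rearranged dataset.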

A high-quality categorized dataset should exhibit a well-defined correspondence between its features and labels, with a strong positive correlation indicating the effectiveness of the features in explaining or predicting the labels. To assess this correspondence, we employed a correlation coefficient test using the Spearman correlation coefficient as an evaluation index. The algorithm for conducting the correlation coefficient test is presented in Algorithm 3.

| Algorithm 3. Correlation coefficient test |
| --- |
| Require: originalDataset, List of rearrangement percentages |
| Ensure: “Proceed to Performance Test” or “Dataset is Low Quality” |
| 1: initialCoefficients ← CalculateSpearmanCoefficients(originalDataset) //A map of feature to coefficient, e.g., {feature_j: ρ_j} |
| 2: rearrangedCoefficients ← an empty map; |
| 3: for each percentage in percentages do |
| 4: tempCoefficients ← an empty list |
| 5: for i from 1 to T do //Perform T trials for statistical stability |
| 6: rearrangedDataset ← RearrangeLabels(originalDataset, percentage) |
| 7: trialCoeffs ← CalculateSpearmanCoefficients(rearrangedDataset) |
| 8: Add(trialCoeffs) to tempCoefficients |
| 9: end for |
| 10: meanCoefficients ← CalculateMean(tempCoefficients); |
| 11: rearrangedCoefficients[percentage] ← meanCoefficients |
| 12: end for |
| 13: |
| 14: //Compare coefficients for each feature j |
| 15: for each feature_j in initialCoefficients do |
| 16: ρ_j ← initialCoefficients[feature_j] |
| 17: ρ_1% ← rearrangedCoefficients[1%][feature_j] |
| 18: ρ_5% ← rearrangedCoefficients[5%][feature_j] |
| 19: … //and so on for other percentages |
| 20: if not (ρ_j > ρ_1% > ρ_5% > …) then |
| 21: return Dataset is Low Quality |
| 22: end if |
| 23: end for |
| 24: return Proceed to Performance Test |
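Algorithm 3 can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions: Spearman’s ρ is computed as the Pearson correlation of average ranks (the usual tie-aware definition), `rearrange_labels` is a hypothetical stratified label permutation, and coefficients are compared in absolute value, a deviation we assume because a feature may correlate negatively with an integer-coded label.

```python
import numpy as np

def average_ranks(values):
    """1-based ranks; tied values share the average of their ranks."""
    _, inverse, counts = np.unique(values, return_inverse=True, return_counts=True)
    highest = np.cumsum(counts)                       # highest rank in each tie group
    return (highest - (counts - 1) / 2.0)[inverse.ravel()]

def spearman(x, y):
    """Spearman's rho as the Pearson correlation of average ranks."""
    return float(np.corrcoef(average_ranks(x), average_ranks(y))[0, 1])

def rearrange_labels(y, fraction, rng):
    """Hypothetical RearrangeLabels: permute the labels of a stratified sample."""
    picked = []
    for c in np.unique(y):
        members = np.flatnonzero(y == c)
        k = int(round(fraction * members.size))
        if k:
            picked.extend(rng.choice(members, size=k, replace=False))
    picked = np.array(picked, dtype=int)
    y_new = y.copy()
    y_new[picked] = rng.permutation(y[picked])
    return y_new

def correlation_test(X, y, percentages, trials=10, seed=0):
    """Return (verdict, table); row 0 holds the original |rho_j|, row k the k-th percentage."""
    rng = np.random.default_rng(seed)
    features = range(X.shape[1])
    rows = [[abs(spearman(X[:, j], y)) for j in features]]
    for p in percentages:
        trial_rows = []
        for _ in range(trials):                       # T trials for statistical stability
            y_r = rearrange_labels(y, p, rng)
            trial_rows.append([abs(spearman(X[:, j], y_r)) for j in features])
        rows.append(np.mean(trial_rows, axis=0))      # mean coefficient per feature
    table = np.array(rows)
    decreasing = np.all(np.diff(table, axis=0) < 0)   # strict decrease for every feature
    verdict = "Proceed to Performance Test" if decreasing else "Dataset is Low Quality"
    return verdict, table
```

For a feature that genuinely tracks the label, permuting a fraction f of the labels shrinks the expected correlation roughly by a factor of (1 − f), so the mean coefficients fall steadily as the percentage grows.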

Assume that the dataset under evaluation consists of n samples, with the observed values of the feature variable and the label variable denoted $x_i$ and $y_i$, respectively. The corresponding ranks are then determined as follows:

Spearman’s correlation coefficient between a feature and the label is calculated as:

$$\rho = 1 - \frac{6\sum_{i=1}^{n} d_i^{2}}{n(n^{2}-1)}$$

where n denotes the sample size, $d_i$ denotes the difference between the rank of the feature variable and the rank of the label variable for the ith sample, and $\sum_{i=1}^{n} d_i^{2}$ is the sum of the squared rank differences over all samples.
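For tie-free data, the rank-difference formula ρ = 1 − 6Σd_i² / (n(n² − 1)) can be checked by hand. The sketch below, an illustration rather than part of the method, ranks two small hypothetical samples: for x = (10, 20, 30, 40, 50) and y = (1, 3, 2, 5, 4), the rank differences are (0, −1, 1, −1, 1), so Σd_i² = 4 and ρ = 1 − 24/120 = 0.8. With tied values (which are unavoidable for class labels) the formula is only an approximation, and ρ is then usually computed as the Pearson correlation of average ranks.

```python
def ranks(values):
    """1-based ranks for values without ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    r = [0] * len(values)
    for rank, i in enumerate(order, start=1):
        r[i] = rank
    return r

def spearman_rank_difference(x, y):
    """Spearman's rho via the rank-difference formula (exact only without ties)."""
    n = len(x)
    d_squared = sum((rx - ry) ** 2 for rx, ry in zip(ranks(x), ranks(y)))
    return 1 - 6 * d_squared / (n * (n ** 2 - 1))

rho = spearman_rank_difference([10, 20, 30, 40, 50], [1, 3, 2, 5, 4])
```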

Assuming the dataset consists of m features, the Spearman correlation coefficient between each feature of the original dataset and the label must first be calculated before label rearrangement testing. The feature-label correlation coefficients are then calculated repeatedly at each label rearrangement ratio: all datasets used for a given ratio share the same rearrangement percentage but differ in rearrangement order. By generating t such datasets for each percentage, we obtain t Spearman correlation coefficients per feature and take their mean as the final correlation coefficient. Herein, $\rho_j$ denotes the Spearman’s correlation coefficient between feature j and the label:

For each feature in the dataset, we compute the feature-label correlation coefficients at label rearrangement percentages of 1%, 5%, 10%, 25%, and 50%. In a high-quality dataset, label disruptions that break the inherent correspondence between features and labels lead to a decrease in statistical correlation.

If the features and labels of the original dataset are only weakly correlated or lack correspondence, label rearrangement has a negligible effect on their statistical correlation.

However, observing the change in feature correlation coefficients before and after label exchange is not, by itself, sufficient to conclude that the decrease is caused by the loss of correspondence between features and labels. If the original dataset is reasonably labeled, different labels correspond to different feature distributions. After label rearrangement, samples of the same category receive different random labels and the feature distribution within each category is disrupted, which greatly reduces the information carried by the labels themselves and may also lower the feature correlation coefficient. To resolve this ambiguity, we supplement the correlation test with a performance test of common classifiers on the rearranged datasets; the complete procedure is shown in Algorithm 4.

To ensure the objectivity of the performance test, we drew on published work and selected four commonly used ML classification algorithms, namely k-nearest neighbor (KNN), support vector machine (SVM), decision tree (DT), and random forest (RF), as the classifier pool for the performance evaluation.

Because class imbalance has already been ruled out during the initial check, accuracy is adopted as the standard performance metric for subsequent evaluations. First, the original dataset is used to compute the mean accuracy $M_{initial}$ of the four classical classifiers (KNN, SVM, DT, and RF) as the baseline performance coefficient. As with the correlation coefficients, accuracy is then measured on t datasets generated with the same label rearrangement ratio but different orderings, yielding t performance coefficients per ratio; their average is taken as the final performance coefficient for that rearrangement ratio.
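The trend check at the core of the performance test can be sketched as follows. To keep the example dependency-free, two simple NumPy classifiers (1-nearest-neighbour and nearest-centroid) stand in for the KNN, SVM, DT, and RF pool; in a real evaluation those estimators would be plugged in instead. The 30% holdout split, all function names, and the stratified label-permutation helper are assumptions made for illustration.

```python
import numpy as np

def rearrange_labels(y, fraction, rng):
    """Hypothetical helper: permute the labels of a stratified `fraction` of samples."""
    picked = []
    for c in np.unique(y):
        members = np.flatnonzero(y == c)
        k = int(round(fraction * members.size))
        if k:
            picked.extend(rng.choice(members, size=k, replace=False))
    picked = np.array(picked, dtype=int)
    y_new = y.copy()
    y_new[picked] = rng.permutation(y[picked])
    return y_new

def one_nn(X_tr, y_tr, X_te):
    """Stand-in classifier: label of the single nearest training sample."""
    d = ((X_te[:, None, :] - X_tr[None, :, :]) ** 2).sum(axis=2)
    return y_tr[np.argmin(d, axis=1)]

def nearest_centroid(X_tr, y_tr, X_te):
    """Stand-in classifier: label of the closest class mean."""
    classes = np.unique(y_tr)
    centroids = np.stack([X_tr[y_tr == c].mean(axis=0) for c in classes])
    d = ((X_te[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return classes[np.argmin(d, axis=1)]

def mean_accuracy(X, y, pool, rng, test_share=0.3):
    """Accuracy averaged over the classifier pool on one random holdout split."""
    idx = rng.permutation(len(y))
    n_test = int(test_share * len(y))
    test, train = idx[:n_test], idx[n_test:]
    return float(np.mean([np.mean(clf(X[train], y[train], X[test]) == y[test]) for clf in pool]))

def performance_trend(X, y, pool, percentages, trials=10, seed=0):
    """Return (is_strictly_decreasing, [M_initial, M_p1, M_p2, ...])."""
    rng = np.random.default_rng(seed)
    perf = [np.mean([mean_accuracy(X, y, pool, rng) for _ in range(trials)])]
    for p in percentages:
        perf.append(np.mean([mean_accuracy(X, rearrange_labels(y, p, rng), pool, rng)
                             for _ in range(trials)]))
    return bool(np.all(np.diff(perf) < 0)), [float(v) for v in perf]
```

Because the rearranged labels are used for both training and testing, accuracy tends to fall as the share of permuted labels grows whenever features and labels genuinely correspond.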

The performance coefficients are obtained sequentially for label permutation percentages of 1%, 5%, 10%, 25%, and 50%. For a high-quality dataset with a strong feature-label correlation, both the feature-label correlation and the classifier performance are expected to decrease as the permutation percentage increases. The performance metrics are then quantified comprehensively:

The contribution of each feature to the degradation in model performance, $D_j$, should also be calculated. It can be approximated by summing the product of the feature’s weight, $W_j$, and its correlation degradation degree, that is, the drop in its Spearman coefficient after label rearrangement:

If a larger decrease in correlation is observed to correspond to a greater contribution to model performance degradation, the decline in the correlation coefficient, i.e., the deterioration in dataset quality, can be attributed to the loss of correspondence between features and the randomized labels. It can then be inferred that the labels and features of the original dataset are strongly positively correlated, reflecting a high-quality feature-label correspondence.

| Algorithm 4. Performance testing |
| --- |
| Require: originalDataset, a classifierPool, List of rearrangement percentages |
| Ensure: “High-Quality Dataset” or “Low-Quality Dataset” |
| 1: //Phase 1: Establish baseline |
| 2: M_initial ← CalculateMeanPerformance(originalDataset, classifierPool) |
| 3: W ← CalculateFeatureWeights(originalDataset, classifierPool) //A map {feature_j: weight W_j} |
| 4: ρ_initial ← CalculateSpearmanCoefficients(originalDataset) //From Algorithm 3 |
| 5: //Phase 2: Evaluate performance on rearranged datasets |
| 6: M_rearranged ← an empty map |
| 7: ρ_rearranged ← an empty map //To store mean coefficients from Algorithm 3 |
| 8: for each percentage in percentages do |
| 9: meanPerf ← CalculateMeanPerformance(RearrangedDatasets(percentage), classifierPool) |
| 10: M_rearranged[percentage] ← meanPerf |
| 11: ρ_rearranged[percentage] ← GetMeanSpearmanCoefficients(percentage) //Assumed available from Algorithm 3 |
| 12: end for |
| 13: //Phase 3: Final verification |
| 14: if IsDecreasing(M_initial, M_rearranged) is false then |
| 15: return Low-Quality Dataset //Performance should decrease as data quality degrades |
| 16: end if |
| 17: for each feature_j in W do //Contribution of each feature to the performance drop |
| 18: corrDegradation_j ← ρ_initial[feature_j] − ρ_rearranged[50%][feature_j] //50% rearrangement as an example |
| 19: D_j ← corrDegradation_j × W[feature_j] |
| 20: end for |
| 21: if IsProportional(D, W) is true then //Features with higher weights should contribute more |
| 22: return High-Quality Dataset |
| 23: else |
| 24: return Low-Quality Dataset |
| 25: end if |
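Phase 3 of Algorithm 4 reduces to a few array operations. The sketch below computes D_j = (ρ_j − ρ_j at 50% rearrangement) × W_j as in Algorithm 4. The paper does not define IsProportional, so here it is assumed to mean that D and W rank the features in the same order; this is one possible reading, and all function names are hypothetical.

```python
import numpy as np

def feature_contributions(rho_initial, rho_rearranged, weights):
    """D_j = (rho_j before rearrangement - mean rho_j after rearrangement) * W_j."""
    degradation = np.asarray(rho_initial, dtype=float) - np.asarray(rho_rearranged, dtype=float)
    return degradation * np.asarray(weights, dtype=float)

def is_proportional(D, W):
    """Hypothetical IsProportional: D and W must rank the features in the same order."""
    return bool(np.array_equal(np.argsort(D, kind="stable"), np.argsort(W, kind="stable")))

def phase3_verdict(performance_decreasing, rho_initial, rho_50, weights):
    """Final verification step of the performance test."""
    if not performance_decreasing:
        return "Low-Quality Dataset"
    D = feature_contributions(rho_initial, rho_50, weights)
    return "High-Quality Dataset" if is_proportional(D, weights) else "Low-Quality Dataset"
```

A rank-based comparison avoids choosing an arbitrary numeric tolerance for "proportional", at the cost of ignoring how large the contributions are.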

At runtime, the algorithms execute in the following order: Algorithm 2 (access control), Algorithm 5 (incentive lock), Algorithms 3 and 4 (quality assessment), and Algorithm 5 again (final settlement).

6. Fair Incentive System

6.1. Incentive Principles

To incentivize more honest participants to provide high-quality datasets, it is crucial to offer sufficient financial rewards for those DOs that consistently deliver such datasets. In this regard, we propose a fair incentive scheme based on reputation value, and the detailed implementation of this scheme is presented in Algorithm 5.

| Algorithm 5. Reward method |
| --- |
| Input: Addr_r, Addr_d, S_ID, PA_Pre, Dataset_ID |
| Output: Payment Result |
| 1: RA pays corresponding advance to DO; |
| 2: if RAPayment < SpecifiedAmount then |
| 3: return Please Pay Advance Payment; |
| 4: else |
| 5: Generate Prepay-Aut_r; |
| 6: Check Addr_r for authorization; |
| 7: if Addr_r ∉ Addr_List then |
| 8: return Address not Authorized; |
| 9: else //Addr_r is in Addr_List (user is authorized) |
| 10: Send IPFS address to user; |
| 11: Generate Send-Aut_d; |
| 12: if IsHighQualityDataset(Dataset_ID) then //Outcome of Algorithms 3 and 4 |
| 13: Calculate US_cv; |
| 14: Generate Fullpay-Aut_r; |
| 15: else //Dataset is not high quality |
| 16: Generate Refund-Aut_r; |
| 17: return Dataset is not a High Quality Dataset; |
| 18: end if |
| 19: end if |
| 20: end if |
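Algorithm 5 behaves like a small escrow state machine. The Python sketch below mirrors its branches under stated assumptions: balances are plain integers held in an in-memory object, the quality verdict is passed in as a boolean produced by the quality assessment, and the credit-value update (US_cv) is left as a comment because its formula is given separately. All class, method, and field names, as well as the "Full Payment Completed" result string, are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set

@dataclass
class RewardEscrow:
    """Hypothetical in-memory stand-in for the reward smart contract."""
    specified_amount: int
    authorized: Set[str]
    escrow: Dict[str, int] = field(default_factory=dict)  # Addr_r -> locked advance
    records: List[tuple] = field(default_factory=list)    # trusted depository entries

    def settle(self, addr_r: str, addr_d: str, payment: int, high_quality: bool) -> str:
        if payment < self.specified_amount:                     # steps 2-3
            return "Please Pay Advance Payment"
        self.escrow[addr_r] = payment
        self.records.append(("Prepay-Aut_r", addr_r, payment))  # step 5
        if addr_r not in self.authorized:                       # steps 6-8
            return "Address not Authorized"
        self.records.append(("Send-Aut_d", addr_d))             # steps 10-11
        if high_quality:                                        # step 12
            # Step 13: the credit value US_cv would be updated here.
            self.records.append(("Fullpay-Aut_r", addr_r, addr_d, self.escrow.pop(addr_r)))
            return "Full Payment Completed"
        self.records.append(("Refund-Aut_r", addr_r, self.escrow.pop(addr_r)))
        return "Dataset is not a High Quality Dataset"
```

As in Algorithm 5, an unauthorized address leaves its advance locked; a deployed contract would need an explicit policy for releasing such funds.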

6.2. Credit Value Evaluation

The credit value reflects how well a user performs its tasks. In this scheme, we apply the following evaluation criteria.

- (1) Increase credit value: If the DO provides a dataset whose quality exceeds the dataset quality standard set by the system, it receives a higher reputation value as the number of its high-quality dataset submissions increases.

- (2) Reduce credit value: (a) If the DO accepts a task sent by the system but uploads a dataset that is unrelated to the task, or whose content is suspected of violating the law, its credit value is reduced. (b) If a DO repeatedly provides datasets below the quality standard despite being required to provide relevant datasets, its credit value is likewise reduced. (c) If a DO repeatedly engages in malicious pricing by offering prices significantly higher than the pricing threshold, its account is terminated once this misdeed has been committed more than three times.

Initially, all users start with an equal reputation value; however, through a series of dataset transactions, honest and trustworthy DOs accumulate a higher reputation value, while those engaging in malicious behavior receive a lower reputation value. This facilitates the selection of the most suitable sellers by RAs during dataset transactions.

The credit value is determined by two primary factors: the performance of the data owner (DO) before the current task and its performance in the current task. The first factor is the historical creditworthiness value of the dataset provider.

The historical reputation value is quantified as the ratio between the cumulative value of the datasets the DO provided before this task and the total value of all datasets in the system. The second factor is the reputation value of the datasets provided for the current task, where i ranges from 1 to n and n is the total number of datasets in the system:

where an indicator variable takes the value 1 for a high-quality dataset and 0 otherwise, and a second variable represents the value of the current dataset. The user’s reputation score for each task can be calculated as follows:

The final reputation value combines these two parts, where t is the number of misdeeds and m is the weighting ratio between the two parts of the reputation value.

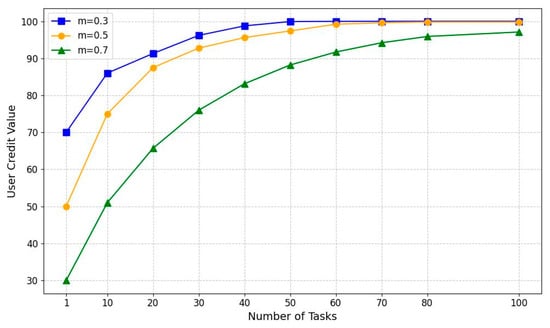

To illustrate how the weights of the historical and current-task reputation components are determined, we assigned a value of 100 to each dataset offered and assumed all DOs to be honest (i.e., t = 0). The ratio m was set to 0.3, 0.5, and 0.7, and the same task was repeated 100 times for each setting; the results are presented in Figure 6.

Figure 6.

Ratio m effect on credit value.

When m = 0.3, the historical component carries too little weight and more emphasis is placed on the DO’s current-task performance, so the credit values of all users converge quickly after a small number of tasks and are poorly differentiated. This lack of differentiation is unfair to experienced DOs who have earned their current reputation value by consistently providing high-quality datasets over a larger number of tasks. Therefore, when tuning the parameter m, increasing the weight assigned to the historical component preserves fairness within the scheme while incentivizing DOs to deliver high-quality datasets over the long term.

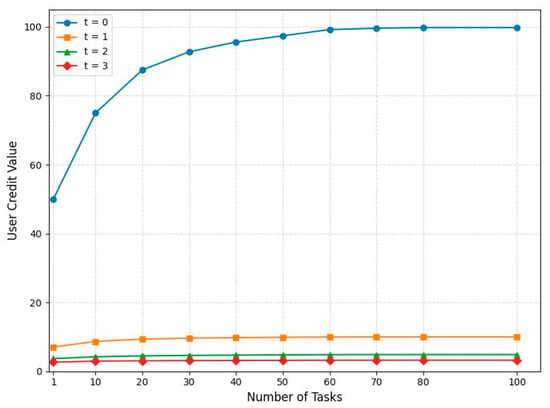

The impact of malicious behavior by the DO on its credit value is illustrated in Figure 7. In this experiment, we set the scale factor m to 0.5 and repeated the same task 100 times, examining the variation in reputation value for four scenarios: t = 0 (honest user), t = 1, t = 2, and t = 3. Figure 7 shows that once a DO engages in malicious behavior, its reputation value declines significantly. Even if the DO subsequently provides high-quality datasets, it cannot regain the reputation value of an honest DO. The scheme therefore imposes stringent requirements on DOs, as any malicious behavior has an irreversible negative impact on their credit value.

Figure 7.

Effect of the number of offenses on credit value.

6.3. Reward Acquisition

All participants must complete the registration process described above to obtain reliable identity information. The system sends the DO a task specifying the dataset requirements, including the start and end times of the task and the minimum quality requirement for the dataset. To prevent uncontrolled increases in dataset prices and maintain order on the trading platform, we establish a pricing threshold for the dataset value:

The pricing of datasets should not exceed this threshold. Here, represents the total number of datasets provided by the DO, m denotes the number of dataset categories included in this upload, and signifies the value assigned to each dataset within different categories, which is calculated using . In this equation, corresponds to the total number of dataset providers who have completed uploading this job, while refers to the count of users who possess datasets belonging to that specific category.

While the interests of the RA are safeguarded, the interests of the DO as the seller must also be protected. Therefore, before the transaction for the complete dataset is executed, the RA must make an advance payment of no less than the specified amount of tokens as a credit guarantee. Once this payment succeeds, a license is issued to the researcher through a smart contract, which operates as follows:

- (1) Verify the amount paid by the RA; if the specified amount is reached, proceed to the next step; otherwise, terminate the transaction.

- (2) Generate the permit (Pmt) for the RA. The permit contains a unique permit identifier, the usage rights (e.g., download or browse) together with the expiration time of the license, and the permit timestamp $Timestamp_{p}$. At the same time, credible deposit information is generated that records the specific amount of the advance payment made by the RA.

After the RA makes the advance payment, the system generates two random numbers: the first proves that the system, acting as an intermediary, has successfully received the dataset, and the second proves that the dataset has passed the quality check. The user keeps both numbers strictly confidential, and the DO publishes the corresponding value. The RA must deposit enough funds to cover the fees required at later stages. The DO first sends a signed message to the intermediary, which forwards the message to the corresponding RA. The tuple is then sent to the third-party dataset quality evaluation platform; once the dataset is confirmed to be of high quality, the system encrypts the Pmt with the RA’s public key to obtain PL and sends (hp, PL) to the RA. The RA obtains the dataset by accessing the specified IPFS storage address and pays the DO the full promised amount as a reward, generating the complete payment voucher. The voucher is broadcast in the blockchain network, and the corresponding trusted depository record is generated as follows:

where the corresponding term represents the specific advance that the RA is required to pay. If an RA fails to fulfill this payment obligation, the administrator has the authority to settle the RA’s account balance to ensure fairness within the incentive system. Subsequently, upon receiving the corresponding payment message, the RA verifies the signature and sends a confirmation message to the system.

The scenario above describes a DO uploading high-quality datasets. However, unscrupulous DOs may still provide datasets whose quality falls below the acceptable threshold. In such cases, the DO is required to reimburse the advance payment previously made by the RA. The evaluation outcome assigned by the impartial third-party dataset quality evaluation platform serves as evidence for generating the corresponding refund certificate:

7. Security Analysis of Our Scheme

7.1. Validation and Consensus

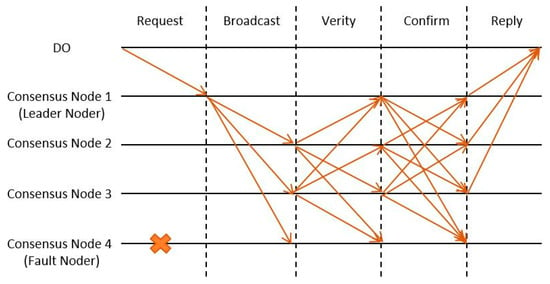

When a DO sends transactions to the blockchain, we use practical Byzantine fault tolerance (PBFT) as the consensus protocol to ensure the consistency of the blockchain ledger. The consensus protocol process of our scheme is shown in Figure 8.

Figure 8.

PBFT-based consensus process of the proposed scheme. (Note: The ‘X’ mark indicates a faulty node.)

Initially, the DO transmits the transaction to the leader node. The leader node then disseminates the transaction to the other consensus nodes, which verify the storage proof contained in the transaction. Each consensus node proceeds as follows:

- (1) Calculate .

- (2) Calculate .

After completing the above calculations, verify to see if the equation holds true:

If the verification succeeds, it indicates that IPFS holds the ciphertext of the DO-uploaded dataset at the claimed hash address. Each consensus node then multicasts the transaction together with its signature so that the nodes validate one another. If a consensus node receives valid confirmation messages from more than two-thirds of the nodes, the transaction is considered valid and written to a new block. Finally, the consensus nodes return the outcome to the DO that initiated the transaction.
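The “more than two-thirds” rule can be made concrete. With n consensus nodes, PBFT tolerates f = ⌊(n − 1)/3⌋ Byzantine nodes, and the smallest vote count strictly exceeding 2n/3 is ⌊2n/3⌋ + 1, which equals 2f + 1 when n = 3f + 1. The helper names below are illustrative and are not part of any blockchain platform’s API.

```python
def max_faulty(n: int) -> int:
    """Largest number of Byzantine nodes PBFT tolerates among n nodes."""
    return (n - 1) // 3

def commit_threshold(n: int) -> int:
    """Smallest number of valid confirmations that is strictly more than 2n/3."""
    return (2 * n) // 3 + 1

def is_committed(valid_confirmations: int, n: int) -> bool:
    """True if the confirmations exceed two-thirds of the n consensus nodes."""
    return 3 * valid_confirmations > 2 * n
```

For example, with four nodes one faulty node is tolerated and three confirmations suffice; in general, the n − f honest nodes alone always reach the threshold, so faulty nodes cannot block a valid transaction by withholding votes.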

7.2. Dataset Interaction Security

Controllability: A specified RA can use its key to transform an encrypted dataset under one access structure into a re-encrypted dataset under another access structure only if its key, which embeds the RA-specific random number, satisfies the access structure. A non-specified RA lacks this random number and therefore cannot transform the encrypted dataset into the corresponding access structure. Consequently, a non-specified RA cannot decrypt and access the dataset, which guarantees the controllability of dataset interaction.

Correctness: If both the DO and the RA execute the protocol honestly, verification of the RA against the encrypted ciphertext succeeds, which validates the accuracy of the dataset accessed on the chain.

In addition, the original dataset stored in IPFS and referenced on the chain is assigned a CID derived from its content. Any malicious tampering with the dataset changes its CID, so the tampered content can no longer be retrieved under the original CID, which ensures the integrity of the original dataset.
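The tamper-evidence argument relies on content addressing: the identifier is derived from the bytes themselves. The sketch below uses a bare SHA-256 hex digest as a stand-in for an IPFS CID; real CIDs additionally encode a multihash and multibase prefix and chunk large files, so `content_id` and the in-memory store are simplifications, not the IPFS API.

```python
import hashlib

def content_id(data: bytes) -> str:
    """Simplified content identifier: SHA-256 digest of the raw bytes."""
    return hashlib.sha256(data).hexdigest()

store = {}  # hypothetical in-memory stand-in for IPFS storage

def put(data: bytes) -> str:
    """Store data under its own content identifier."""
    cid = content_id(data)
    store[cid] = data
    return cid

def get_verified(cid: str) -> bytes:
    """Fetch data and reject it if it no longer hashes to the requested identifier."""
    data = store[cid]
    if content_id(data) != cid:
        raise ValueError("content does not match its identifier")
    return data
```

Any single-byte change produces a different identifier, and content substituted under an old identifier fails the re-hash check on retrieval.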

7.3. Privacy Protection

The key access process consists of two main components: (1) embedding a random number into the private key of the DO; and (2) encrypting this random number under the access structure. Both components can be performed independently by the DO without relying on a third party. Decryption of the encrypted dataset relies on R0. Likewise, the DO can use R0 to determine whether the dataset is encrypted, which prevents unauthorized entities from decrypting it and avoids privacy breaches caused by unauthorized RAs, thus ensuring data confidentiality.

To better protect the privacy of users, we also ensure the anonymity of DO and RA during the transaction of all datasets:

- (1) In the anonymous purchase phase, the payment address associated with the RA’s public key is a random point on .

- (2) In the anonymous license implementation, the RA generates a signature . T is a random point on . are n-1 randomly selected points on , and is calculated: Since is randomly selected by the RA, the signature does not reveal any information about the RA’s identity.

- (3) In the anonymized data acquisition phase, the RA acquires data through a private address on the blockchain. The private address is independent of the RA’s identity.

8. Experimental Evaluations

8.1. Dataset Introduction

Dry Bean Dataset (high-quality dataset) [58]: This publicly available dataset comprises high-resolution images of 13,611 grains of seven different types of dry beans. Features such as shape, type, and structure were considered according to market standards. A computer vision system was used to segment the bean images and extract features, yielding a total of 16 features: 12 dimensional features and 4 shape features [52].

Toy Dataset (low-quality dataset): We used the classical categorical dataset Iris [59] as the base dataset and intentionally degraded its quality by randomizing the corresponding feature values. The four features, namely SepalLengthCm, SepalWidthCm, PetalLengthCm, and PetalWidthCm, were expanded to 10,000 samples through random data generation, with values retained to one decimal place within the ranges 4–7, 2–4, 1–7, and 0–3, respectively.
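A Toy Dataset of this kind could be generated as sketched below. Two choices here are assumptions, since the paper does not state them: values are sampled uniformly within each range, and labels are drawn uniformly at random from the three Iris species. Because features and labels are generated independently, any feature-label correlation in such data arises by chance alone.

```python
import numpy as np

RANGES = {"SepalLengthCm": (4, 7), "SepalWidthCm": (2, 4),
          "PetalLengthCm": (1, 7), "PetalWidthCm": (0, 3)}
SPECIES = np.array(["Iris-setosa", "Iris-versicolor", "Iris-virginica"])

def make_toy_dataset(n=10_000, seed=0):
    """Random features rounded to one decimal place, with independently drawn labels."""
    rng = np.random.default_rng(seed)
    X = np.column_stack([np.round(rng.uniform(lo, hi, size=n), 1) for lo, hi in RANGES.values()])
    y = rng.choice(SPECIES, size=n)
    return X, y
```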

8.2. Validation of Quality Assessment Methods for Categorized Dataset

To validate the efficacy of the proposed label rearrangement-based method for assessing dataset quality, we selected the Dry Bean Dataset from UCI’s public repository as a representative example of high-quality datasets, and utilized our self-constructed Toy Dataset to exemplify low-quality datasets.

A preliminary assessment of both datasets showed that neither had an insufficient sample size or imbalanced classes, so both proceeded to the label rearrangement test.

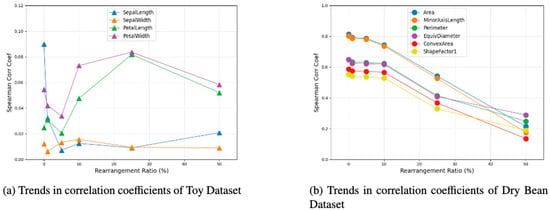

After the initial check, the labels of the Toy Dataset and the Dry Bean Dataset were rearranged at percentages of 1%, 5%, 10%, 25%, and 50%, as described previously, producing ten datasets with distinct rearrangement orders for each percentage. A correlation coefficient test was then performed: the Spearman correlation coefficient of every feature was computed on each of the ten rearranged datasets, and the mean of these coefficients was taken as the final correlation coefficient of that feature for each of the five rearrangement percentages. Table 4 presents the Spearman correlation coefficients for each feature of the Toy Dataset, and Table 5 presents those of the Dry Bean Dataset.

Table 2.

Summary of traditional dataset quality evaluation methods.

Table 3.

Description of major notations.

Figure 9a,b show trends in feature correlation coefficients under different reordering ratios more intuitively. It is worth noting that the Dry Bean Dataset comprises a total of 16 features; however, due to space constraints in this paper, we have selected the 6 features with the highest correlation coefficients for presentation.

Figure 9.

Trends in correlation coefficients.

The Spearman correlation coefficient tables and trend graphs show that the correlation coefficients of the Toy Dataset features vary only minimally, within the range 0 to 0.07, and Figure 9a shows that this variation is irregular: the coefficients both rise and fall as the label rearrangement proportion increases. In contrast, the correlation coefficients of the Dry Bean Dataset features change substantially, decreasing consistently as the rearrangement proportion increases and dropping sharply at 50%, as Figure 9b shows.

From this analysis, we conclude that the Toy Dataset is not a high-quality dataset, whereas the Dry Bean Dataset satisfies the conditions of the correlation coefficient test and must therefore undergo the performance test to reach a final evaluation.

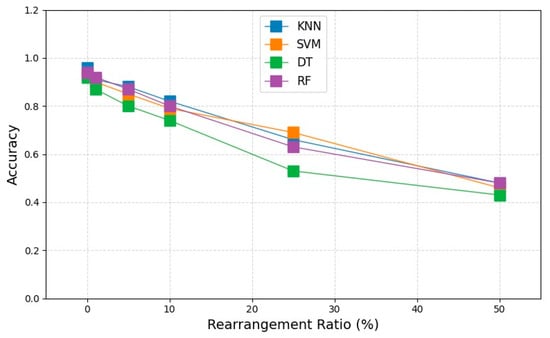

As in the correlation coefficient test, we generated 10 datasets with different rearrangement orders for each rearrangement percentage and used them as the rearranged datasets in our experiments. Each rearranged dataset was used to measure the accuracy of four classical classifiers, namely k-nearest neighbor (KNN), support vector machine (SVM), decision tree (DT), and random forest (RF), and the mean of these accuracies gives the performance metric for each of the five rearrangement percentages. The results are illustrated in Figure 10.

Figure 10.

Classifier performance on Dry Bean Dataset.

Using the feature weights in the classifier pool, presented in Table 6, together with the corresponding Spearman’s correlation coefficients obtained from the correlation coefficient test, we can readily determine each feature’s contribution to model performance degradation.

Table 4.

Toy Dataset Spearman coefficients (Iris features).

By analyzing the Spearman’s correlation coefficients of the geometric features in the Dry Bean Dataset, presented in Table 5, we can observe how the correlation of each feature changes.

Table 5.

Dry Bean Dataset Spearman coefficients (geometric features).

To determine the influence of different features on the classifiers, we refer to the feature weights for the four classifiers in the pool, shown in Table 6.

Table 6.

Weights of features on 4 classifiers in classifier pool.

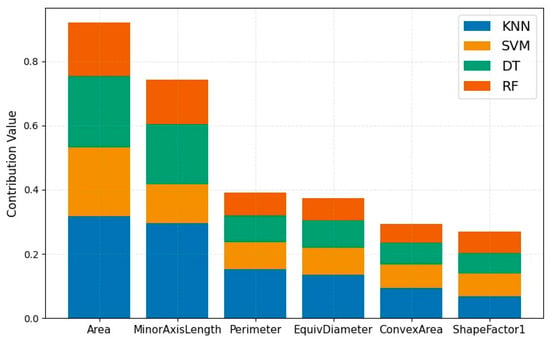

Because the classifiers differ in nature, the feature weights were computed with a different method for each classifier. To make the degradation of model performance easier to read in the histogram, we scaled the SVM feature values down by a factor of 10. This rescaling does not affect the interpretation of the results. Multiplying all of one model's values by the same constant preserves their relative order, and our analysis compares feature contributions only within the same model. The resulting contribution of each feature to model performance degradation is illustrated in Figure 11.

Figure 11.

Contribution of features to model performance degradation.
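The contribution calculation described above can be illustrated with a small sketch. The exact rule for combining weights and Spearman sums is not spelled out here. This sketch therefore assumes, purely for illustration, that a feature's contribution under a given classifier is its weight multiplied by its Spearman coefficient sum. The weights and sums in the example are invented placeholders, not the values in Table 6. The sketch also shows why dividing the SVM values by 10 for display is harmless: applying a constant factor to one model does not change that model's feature ranking. `feature_contributions` and `ranking` are hypothetical names.

```python
def feature_contributions(weights, spearman_sums, display_scale=None):
    """Contribution of each feature to performance degradation, per classifier.

    weights:        {classifier: {feature: weight}} (placeholder for Table 6)
    spearman_sums:  {feature: sum of Spearman coefficients from the correlation test}
    display_scale:  optional {classifier: factor}, applied for plotting only
    """
    display_scale = display_scale or {}
    result = {}
    for clf, feature_weights in weights.items():
        factor = display_scale.get(clf, 1.0)
        # ASSUMPTION: contribution = weight x Spearman sum (illustrative rule only).
        result[clf] = {
            f: factor * w * spearman_sums[f] for f, w in feature_weights.items()
        }
    return result


def ranking(contrib):
    """Feature names ordered from largest to smallest contribution."""
    return sorted(contrib, key=contrib.get, reverse=True)


if __name__ == "__main__":
    # Invented placeholder numbers, NOT the values from Table 6.
    weights = {
        "SVM": {"Area": 8.0, "MinorAxisLength": 5.0, "Extent": 1.0},
        "RF": {"Area": 0.30, "MinorAxisLength": 0.25, "Extent": 0.05},
    }
    sums = {"Area": 2.0, "MinorAxisLength": 1.5, "Extent": 0.2}
    raw = feature_contributions(weights, sums)
    shown = feature_contributions(weights, sums, display_scale={"SVM": 0.1})
    # Scaling every SVM value by the same factor leaves the SVM ranking unchanged.
    print(ranking(raw["SVM"]), ranking(shown["SVM"]))
```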

Figure 11 shows that when the proportion of label rearrangement reaches 50%, features such as Area and MinorAxisLength exhibit a large drop in Spearman's correlation. These features also cause a much larger decline in model performance than the other features do. This confirms that the main cause of the reduced correlation coefficient is the loss of correspondence between the features and the randomly reordered labels, which reflects a deterioration in dataset quality.

Based on the complete evaluation process described above, we conclude that the Dry Bean Dataset satisfies the conditions required of a high-quality categorical dataset, whereas the Toy Dataset is not a high-quality dataset. This result agrees with the ground truth we set in advance, in which the Dry Bean Dataset was designated high-quality and the Toy Dataset low-quality. It thus supports the feasibility of the proposed method.

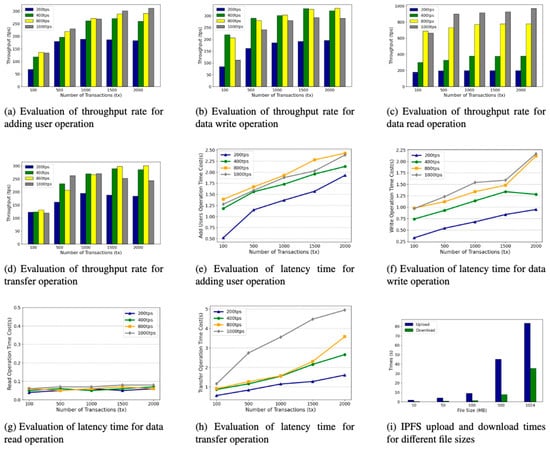

Experiments were conducted on a two-core, 4 GB Ubuntu 18.04 machine, and the proposed scheme was developed on the permissioned blockchain platform FISCO BCOS. The performance evaluation focuses on four key functions of the scheme: (1) user addition; (2) participants sending dataset hash addresses; (3) RAs obtaining dataset hash addresses; and (4) monetary transfers between RAs and DOs. Caliper-Benchmarks was used as the performance testing tool. We varied the number of simulated transactions from 100 to 2000 and measured the corresponding throughput and average latency at sending rates of 200, 400, 800, and 1000 tps. We also measured IPFS upload and download times for files of different sizes. The results are presented in Figure 12.

Figure 12.

Results of performance evaluation. (a) Evaluation of throughput rate for adding user operation, (b) evaluation of throughput rate for data-write operation, (c) evaluation of throughput rate for data-read operation, (d) evaluation of throughput rate of transfer operation, (e) evaluation of time cost for adding user operation, (f) evaluation of time cost for data-write operation, (g) evaluation of time cost of data-read operation, (h) evaluation of time cost of transfer operation, (i) IPFS upload and download times for different file sizes.
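The following sketch makes the two reported metrics concrete by computing throughput and average latency for one benchmark round from per-transaction timestamps. It assumes definitions in the spirit of the Hyperledger performance metrics that Caliper reports. Throughput is taken as committed transactions divided by the time from the first submission to the last commit. Latency is taken as commit time minus submit time. The `TxRecord` type and the `summarize` and `fixed_rate_round` functions are hypothetical and are not part of Caliper's API.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TxRecord:
    submit_time: float  # seconds, when the client sent the transaction
    commit_time: float  # seconds, when the transaction was observed as committed
    success: bool


def summarize(records):
    """Return (throughput in tps, average latency in s) for one benchmark round."""
    committed = [r for r in records if r.success]
    if not committed:
        return 0.0, 0.0
    start = min(r.submit_time for r in records)
    end = max(r.commit_time for r in committed)
    throughput = len(committed) / (end - start)
    avg_latency = mean(r.commit_time - r.submit_time for r in committed)
    return throughput, avg_latency


def fixed_rate_round(n_tx, send_rate, latency):
    """Simulated round: n_tx transactions sent at send_rate tps, each taking `latency` s."""
    return [TxRecord(i / send_rate, i / send_rate + latency, True) for i in range(n_tx)]


if __name__ == "__main__":
    tps, lat = summarize(fixed_rate_round(n_tx=100, send_rate=200, latency=0.5))
    print(f"throughput={tps:.1f} tps, avg latency={lat:.2f} s")
```

In this simulated round, the achieved throughput (about 100 tps) is well below the 200 tps sending rate because the fixed 0.5 s latency dominates such a short run. Throughput measured this way therefore depends on the number of transactions as well as on the sending rate.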

8.3. Blockchain Network Performance

For the add-user operation, throughput peaks at about 1500 transactions and dips slightly as the number of transactions increases further, at all sending rates. Transaction latency increases gradually with transaction volume. As Figure 12e shows, it peaks at approximately 2.43 s at a sending rate of 800 tps.

Figure 12 presents a comprehensive performance evaluation of the key operations in our proposed scheme. For transaction-based operations such as adding a user (a,e), writing data (b,f), and transferring assets (d,h), throughput scales well with the number of transactions, generally peaking around 1500 transactions before stabilizing or decreasing slightly. Correspondingly, latency for these operations increases with higher transaction volumes and sending rates, and the transfer operation exhibits the highest delay under heavy load (~4.95 s). Notably, the data-read operation (c,g) is exceptionally efficient, maintaining a stable, near-instantaneous response time (~0.06 s) regardless of load. Finally, IPFS upload and download times for the off-chain storage component (i) scale reasonably with file size, and a 1024 MB file is processed in an acceptable time. Collectively, these results indicate that our system is robust, scalable, and efficient across the range of simulated loads, supporting its practical feasibility.

Overall, for workloads of up to 2000 transactions, the throughput of the four primary operations remains stable and the latency stays within an acceptable range, meeting our design objectives.

8.4. Performance Comparison

8.4.1. Comparison Dimensions and Strategy

To ensure a systematic evaluation, we have focused on four key aspects that are critical to a trustworthy data-sharing system:

- (1) End-to-end latency of open (write + read) and transfer operations.

- (2) Stable throughput under a constant load of 100 tps.

- (3) Storage-integrity guarantees: hash-based vs. PDP-based mechanisms.

- (4) Privacy-preservation techniques: ABAC, ring signatures, and public-chain pseudonymity [57].
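A hash-based integrity guarantee, the first mechanism in dimension (3), can be illustrated with a toy registry. This is a simplified sketch under stated assumptions. A Python dictionary stands in for the on-chain record, and a raw SHA-256 digest stands in for the content address. Real IPFS content identifiers (CIDs), by contrast, are multihash-encoded and computed over the chunked file DAG. `HashRegistry` is a hypothetical name. PDP-based schemes work differently: the verifier checks possession by challenging randomly sampled blocks, without downloading and re-hashing the whole file.

```python
import hashlib


class HashRegistry:
    """Toy stand-in for an on-chain mapping from dataset ID to content digest."""

    def __init__(self):
        self._digests = {}

    def register(self, dataset_id: str, data: bytes) -> str:
        """Record the SHA-256 digest of a dataset; entries are append-only."""
        if dataset_id in self._digests:
            raise ValueError(f"{dataset_id} is already registered")
        digest = hashlib.sha256(data).hexdigest()
        self._digests[dataset_id] = digest
        return digest

    def verify(self, dataset_id: str, data: bytes) -> bool:
        """True only if the retrieved bytes hash to the registered digest."""
        return self._digests.get(dataset_id) == hashlib.sha256(data).hexdigest()


if __name__ == "__main__":
    registry = HashRegistry()
    registry.register("ds1", b"a,b\n1,2\n")
    print(registry.verify("ds1", b"a,b\n1,2\n"), registry.verify("ds1", b"a,b\n1,3\n"))
```

Any single-byte change to the retrieved file makes verification fail. This is the property that the hash-based approach relies on, at the cost of hashing the full file on every check.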

8.4.2. Experimental Setup and Metric Collection

All experiments were executed on a single four-core, 8 GB Ubuntu 20.04 machine with Hyperledger Caliper 0.5.0. We generated workloads ranging from 100 to 1000 transactions at a fixed sending rate of 100 tps. Each configuration was run five times, and we report the mean ± 95% confidence interval.
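The reporting convention of a mean with a 95% confidence interval over five runs can be computed with the Student t distribution. The sketch below is a minimal illustration that assumes a two-sided t interval. With five runs there are four degrees of freedom, so the half-width is 2.776 × s / √5, where s is the sample standard deviation. The run values in the example are hypothetical, and `mean_ci95` is an illustrative name.

```python
from math import sqrt
from statistics import mean, stdev

# Two-sided 95% Student-t critical values t_{0.975, df}, keyed by degrees of freedom.
T_975 = {1: 12.706, 2: 4.303, 3: 3.182, 4: 2.776, 5: 2.571,
         6: 2.447, 7: 2.365, 8: 2.306, 9: 2.262}


def mean_ci95(runs):
    """Return (mean, half-width) of the 95% confidence interval over repeated runs."""
    df = len(runs) - 1
    if df not in T_975:
        raise ValueError("this table covers 2 to 10 runs")
    half_width = T_975[df] * stdev(runs) / sqrt(len(runs))
    return mean(runs), half_width


if __name__ == "__main__":
    latencies = [0.98, 1.03, 1.01, 0.97, 1.04]  # hypothetical latencies (s) from five runs
    m, h = mean_ci95(latencies)
    print(f"{m:.3f} s ± {h:.3f} s")
```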

8.4.3. Results and Analysis

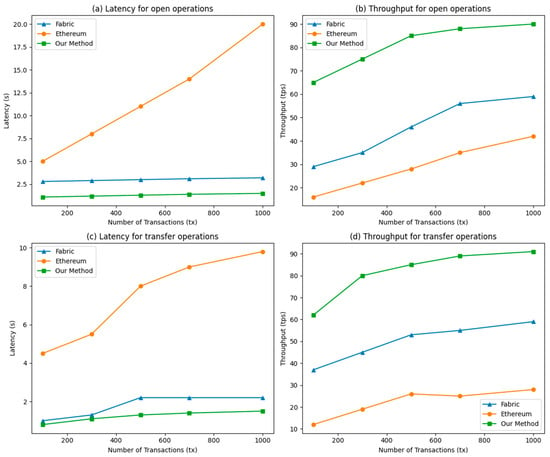

Most existing blockchain-based secure data-sharing schemes are built either on the Ethereum public chain or on the Hyperledger Fabric consortium chain. In this section, we compare our proposed scheme with Fabric-based schemes [57] and Ethereum-based schemes [48]. For a more comprehensive comparison, we use the open-source Ethereum client Hyperledger Besu to simulate the Ethereum public chain. The evaluation covered two operations at a fixed sending rate of 100 tps: open (a complete write followed by a read) and transfer. For the performance test, we used 100, 300, 500, 800, and 1000 experimental transactions. Figure 13a shows the end-to-end latency (the first comparison dimension), and Figure 13b shows the stable throughput under a constant load of 100 tps. Figure 13c shows the storage-integrity guarantees (hash-based vs. PDP-based mechanisms), and Figure 13d shows the privacy-preservation techniques (ABAC, ring signatures, and public-chain pseudonymity) [48].

Figure 13.

Performance comparison of three types of methods.

Figure 13 shows that the latency of both open and transfer operations in the Ethereum-based data-sharing schemes [60,61] is substantially higher than that of the Fabric-based scheme [57] and of our proposed scheme. This gap also widens as the transaction volume grows. Extrapolating this trend, we expect the latency gap between the Ethereum-based scheme and the two consortium-chain schemes to become even larger beyond 1000 transactions, although this range was not tested. Compared with the Fabric-based scheme [57], our scheme shows similar latency for open and transfer operations, approximately 1 s for both. However, our scheme achieves clearly higher throughput. The maximum stable throughput of [57] is approximately 56 tps for the open operation and 59 tps for the transfer operation. In contrast, our scheme reaches 93 tps and 91 tps, respectively, which is roughly 66% and 54% higher. These results indicate that our scheme is more efficient than the compared schemes under the tested loads.

8.5. Summary of Results

The experimental evaluations in this section have validated the feasibility and effectiveness of our proposed framework from multiple perspectives. The key results are summarized as follows.

- (1) Effectiveness of quality assessment: Our label rearrangement method successfully distinguished between high-quality and low-quality datasets. The high-quality Dry Bean Dataset showed a clear, predictable decline in feature correlation and model performance as label randomness increased, while the low-quality Toy Dataset exhibited irregular and weak correlations, confirming the method's reliability.

- (2) System performance and scalability: The performance benchmarks were conducted on the FISCO BCOS platform and demonstrated the system's robustness. For key operations such as user addition, data write/read, and transfers, the network achieved stable throughput (e.g., up to ~301 tps for transfers) and acceptable latency under loads of up to 2000 transactions. The data-read operation was exceptionally efficient, and the IPFS proved capable of storing large files within reasonable timeframes.

- (3) Comparative advantage: In a direct performance comparison with schemes based on the Hyperledger Fabric and Ethereum platforms, our proposed solution showed a clear advantage. While maintaining low latency comparable to that of the Fabric-based scheme, our method achieved significantly higher throughput for both open and transfer operations, confirming its superior efficiency and scalability.

Collectively, these results provide strong empirical support for the security, efficiency, and reliability of our blockchain-based data management method.

9. Conclusions

In this paper, we addressed the critical problem of constructing high-quality, large-scale machine learning datasets through multiparty collaboration, which is often hindered by risks related to security, incentives, and data quality. We have proposed a comprehensive, blockchain-based framework to mitigate these risks, and our work successfully achieves the following.

- (1) Overcoming data security, privacy, and incentive challenges: To address the lack of trust in centralized systems and the absence of fair incentives, our solution provides a secure and reliable data management system. By leveraging a consortium blockchain, the IPFS, and smart contracts, we guarantee the traceability and immutability of all data transactions, alleviating provider concerns. Furthermore, our reputation-based incentive mechanism ensures that honest participants who provide high-quality datasets are rewarded, promoting sustainable collaboration.

- (2) Establishing a reliable dataset quality assessment method: To counter the risk of "garbage in, garbage out" stemming from unreliable quality assessment, we introduced and validated a novel method based on label rearrangement. This practical approach allowed us to robustly evaluate the intrinsic quality of categorical datasets, ensuring that only high-quality data were used for model training. Our experiments confirmed its effectiveness in distinguishing between high- and low-quality data samples.

In conclusion, our proposed scheme provides an integrated solution that enhances security, reliability, and incentive alignment in collaborative data projects. Although the framework meets its primary objectives, we have identified areas for future enhancement. The classifier pool in our quality evaluation could be expanded to include more complex models and larger datasets. Additionally, the incentive scheme could be further fortified by incorporating principles from game theory to prevent sophisticated misuse, such as fraudulent secondary data submissions. We believe that these future directions will build upon the solid foundation established by this work.

Author Contributions

Conceptualization, C.H. and T.Z.; Methodology, C.H.; Formal analysis, Y.W.; Software, Y.W. and F.H.; Validation, Y.W. and F.H.; Visualization, F.H.; Writing—original draft, C.H. and Y.W.; Writing—review and editing, T.Z. and Y.M.; Supervision, T.Z. and Y.M. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the National Key Research and Development Program of China (Grant No. 2022YFB3104300).

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

Authors Chuan He, Yunfan Wang, Tao Zhang and Yuanyuan Ma were employed by the company China Electric Power Research Institute Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

- Buterin, V. A next-generation smart contract and decentralized application platform. White Pap. 2014, 37, 2-1.

- Camacho, J.; Wasielewska, K. Dataset quality assessment in autonomous networks with permutation testing. In Proceedings of the NOMS 2022—IEEE/IFIP Network Operations and Management Symposium (2022), Budapest, Hungary, 25–29 April 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–4.

- Camacho, J.; Wasielewska, K.; Fuentes-García, M.; Rodríguez-Gómez, R. Quality in/quality out: Assessing data quality in an anomaly detection benchmark. arXiv 2023, arXiv:2305.19770.

- Chen, H.; Pipeta, L.F.; Ding, J. Construction and evaluation of a high-quality corpus for legal intelligence using semiautomated approaches. IEEE Trans. Reliab. 2022, 71, 657–673.

- Ding, J.; Li, X.; Kang, X.; Gudivada, V.N. A case study of the augmentation and evaluation of training data for deep learning. J. Data Inf. Qual. (JDIQ) 2019, 11, 1–22.