Abstract

LLMs have created new possibilities for machines to learn from data and produce content without human intervention. We address the question of whether they provide reliable and efficient Natural Language Processing. We differentiate Generative Grammar Language Models from Large Language Models. We take Structure Dependency to be a first principle of language, a prerequisite to Semantic Compositionality, applying in conjunction with Principles of Efficient Computation to generate structured content. We discuss the behavior of ChatGPT-3.5, GPT-4o mini and Grok 3 in response to complex queries including pronominal Binding, Coreference, and structural ambiguity and point to the relevance of deep syntactic and semantic principles for LLMs. We outline consequences for the development of reliable and efficient Natural Language Processing systems in emergent technologies.

Keywords:

Large Language Models; ChatGPT; Grok; Generative Grammar Language Model; I-language; E-language; Structure Dependency; Language First Principles; Principles of Efficient Computation; Semantic Compositionality; pronominal Binding; Coreference; structural ambiguity; Natural Language Processing; Asymmetry Recovering Parser

1. Introduction

We ask whether LLMs, such as ChatGPT and Grok, provide reliable and efficient Natural Language Processing (NLP). We take reliability to be a function of usefulness and efficiency to be the product of a relationship between computational costs and accurate outputs. We approach this question from the perspective of human language science and related computational implementations.

The purpose of this paper is to clarify principles of modern linguistics and identify conceptual pathways to integrate them into LLMs, leading to further research and applications. Our findings provide initial support for this line of inquiry based on prompting LLMs with a few representative examples of the syntactic and semantic complexity typical of natural language. Systematic testing with diverse examples under controlled conditions and the use of statistical analysis to evaluate LLMs’ performance fall outside the scope of this paper.

In this framework, this article compares Generative Grammar Language Models (GGLMs) developed in Generative Grammar since the seminal work of [1,2,3] to LLMs developed in Machine Learning since the work done by [4,5,6]. These models rely on different assumptions regarding natural language and how it is learned and processed by humans and machines.

Anchored in biolinguistics [7,8,9,10,11], GGLMs provide explanatory theories of human language, specifically of its acquisition, evolution, and use. GGLMs are based on mathematical linguistics [1] and neurosciences [12,13] to model systems according to language science rather than behavioral science [14,15]. Behavioral science assumes that language, like behavior, is shaped by association, conditioning, and simple induction, along with analogy and general learning procedures; see [14] for discussion. In GGLMs, Natural Language Processing (NLP) relies on efficient parsing of linguistic expressions based on a small set of operations that recover their structure at the phrasal level [16,17,18] and below [19,20,21]. This structure provides the basis for further computation related to specific NLP tasks.

Anchored in generative artificial intelligence, LLMs rely on Deep Learning and Artificial Neural Networks [22,23,24,25] to train statistical and probabilistic models on very large corpora. In LLMs, calculations of statistical and co-occurrence probability of words within sequences are used to identify patterns and predict the next word in a text. The meaning of linguistic expressions relies on Distributional Semantics, which assumes that words that occur in the same distributional context tend to have similar meanings [26,27,28,29]. More recently, Generative Pre-trained Transformer (GPT) models, relying on advanced artificial neural networks, have been used for NLP [30]. This is the case, for example, for ChatGPT, released in 2022, which has attracted a great deal of attention for its impressive performance in providing replies to incoming prompts.
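The distributional assumption described above can be made concrete with a minimal sketch. It assumes a three-sentence toy corpus, sentence-wide co-occurrence counts, and cosine similarity; the corpus and the function names are hypothetical illustrations and do not reproduce how any particular LLM is trained.

```python
from collections import Counter, defaultdict
from math import sqrt

# Toy corpus (hypothetical); real models are trained on far larger corpora.
corpus = [
    "cat drinks milk",
    "dog drinks water",
    "car needs fuel",
]

def cooccurrence_vectors(sentences):
    """Map each word to counts of the words it shares a sentence with."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split()
        for i, word in enumerate(tokens):
            for j, context in enumerate(tokens):
                if i != j:
                    vectors[word][context] += 1
    return vectors

def cosine(u, v):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(u[k] * v[k] for k in u if k in v)
    norm = sqrt(sum(x * x for x in u.values())) * sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0

vectors = cooccurrence_vectors(corpus)
sim_cat_dog = cosine(vectors["cat"], vectors["dog"])  # both occur with "drinks"
sim_cat_car = cosine(vectors["cat"], vectors["car"])  # no shared context
```

In this sketch, cat and dog come out as more similar than cat and car only because they share a context word; no syntactic structure enters the computation.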

Reliability issues have been reported with ChatGPT, since the program may generate unreliable replies to incoming text. For example, when ChatGPT is prompted with data it has not been pre-trained on, it may provide responses that are probable but not factual; this is the so-called “hallucination” problem [31,32,33]. The program may also forget or perform poorly on a new task after being trained on a previous one; this is the so-called “catastrophic forgetting” problem [34,35]. These issues have been attributed to the data used to train the model in the first case and to continuous finetuning on task-specific data in the second case, as well as to issues related to reasoning. Remedial strategies are under investigation, including Chain of Thought [36], Humans in the Loop [37,38], and User Intent Taxonomies [39]. Efficiency issues arise with the very large size of the pre-training datasets used by the model, which may contain hundreds of billions of tokens, as well as the large number of parameters adjusted through gradient descent, which require very large amounts of computational power, with very high associated costs [40,41].

In addition to reliability and efficiency issues, other works point to the fact that ChatGPT is not sensitive to the properties of human language, including creativity [42,43], that it does not model language and how humans acquire it [44], that it is truth-agnostic and fails to pass the Turing Test [45], and to the fact that LLMs cannot constitute theories of language [46].

Building on previous work [47,48], this paper points to further foundational shortcomings of ChatGPT, which we attribute to the absence from LLMs of modelling of first principles of natural language and principles reducing complexity, and we propose conceptual pathways for their integration.

The organization of this paper is as follows. First, we identify core properties of GGLMs, focusing on Structure Dependency, its implementation in a parser, and its role as a prerequisite to the Semantic Compositionality of natural language expressions. Second, we identify core properties of LLMs and point to shortcomings of ChatGPT and Grok in processing complex queries including pronominal Binding, Coreference, and structural ambiguity, basing this analysis on a few representative examples. We also point to issues related to evaluation metrics currently used to measure LLMs’ performance. Lastly, we point to GGLM-related insights that could be operationalized in LLMs.

2. GGLMs

2.1. I-Language and E-Language

Nearly all processes associated with our capacity for language are inaccessible to our consciousness. Nonetheless, theories of human language can be defined with increasing levels of explanatory capacity by adopting a Newtonian view of science. Explanatory theories provide the simplest explanation for the fact that children cross-linguistically acquire the language that they are exposed to in a short period of time based on limited evidence, as well as for the fact that novel natural language expressions can be produced and understood without previous learning. From this viewpoint, the human capacity for language cannot be reduced to an acquired behavior.

Assuming that the human capacity for language is innate, the language internal to the mind (I-language) is distinguished from the externalized language (E-language) [49]. Given a theory of I-language, it is possible to model the innate human capacity for language as a generative system capable of accounting for the discrete infinity of language and its acquisition by children, notwithstanding the poverty of the stimulus [2,3]. According to this model, human languages cannot be described by enumerating all their instances in a finite corpus or learned by extensive training on large quantities of data. Instead, this model provides explanatory theories of human language consisting of a small set of recursive operations that generate the underlying structure of linguistic expressions. The systematic analysis of representative examples, including minimal pairs, led to the discovery of underlying deep principles of language.

Several theories of I-language have been formulated through the development of Generative Grammar. We refer to them as Generative Grammar Language Models (GGLMs). They include the Standard Theory [3], the Principles and Parameters Theory [50], and the Minimalist Program [51,52]. Each model simplifies the technical apparatus required to generate the form articulating the interpretation of linguistic expressions. GGLMs aim to provide the simplest theory of language that can account for its universal properties, the apparent diversity of languages, language acquisition by children, and the effect of factors reducing complexity [53,54]. The following sections point to core properties of these models.

2.2. Overall Architecture and Syntactic Operations

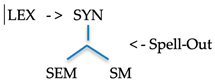

According to GGLMs, human language can be thought of as a system relating meaning to sensory form based on finite means. The modular architecture of the grammar (see (1)) includes a Lexicon (LEX) in which underivable lexical items are listed with their features. An array of lexical items and their features constitutive of linguistic expressions is selected from LEX and transferred to the syntactic workspace (SYN), where the syntactic derivations take place. Spell-Out is a point in the derivation at which syntactic structures are passed to the semantic (SEM) and sensorimotor (SM) interfaces and transferred to the external systems. This architecture, as adopted in the current GGLM, is simpler than the complex models that have been used previously.

| (1) |  |

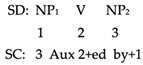

Through the development of GGLMs, previously complex syntactic operations have been replaced by simpler ones. For example, in the Standard Theory [3], the syntactic component includes the Base and the Transformational Component. The operations of the Base take the form of rewriting rules such that only one category precedes the rewrite operator “->” and one or more categories may follow it; see (2). The transformations are applied to a Structural Description (SD) to derive a Structural Change (SC), as illustrated in (3) with the PASSIVE transformation, which involves several operations, including displacement, insertion and affixation. In the Minimalist Program [51,52,53], syntactic operations are reduced to a single recursive set-formation operation; see (4).

| (2) | S -> NP VP | ||

| | NP -> Det N | ||

| | VP -> V NP | ||

| (3) |  | ||

| (4) | Merge (x, y), where x and y are syntactic objects. | ||
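The contrast between the rewriting rules in (2) and the single operation in (4) can be sketched as follows. This is a minimal illustration under simplifying assumptions: Merge is modelled as unordered set formation over strings, without labels or functional heads, and the helper names `expand` and `depth` are hypothetical.

```python
# Rewriting rules from (2): one category on the left, one or more on the right.
RULES = {
    "S": ["NP", "VP"],
    "NP": ["Det", "N"],
    "VP": ["V", "NP"],
}

def expand(category):
    """Rewrite a category until only categories without a rule remain."""
    if category not in RULES:
        return [category]
    result = []
    for child in RULES[category]:
        result.extend(expand(child))
    return result

def merge(x, y):
    """Merge(x, y) as in (4): form the unordered set {x, y}."""
    return frozenset({x, y})

def depth(obj):
    """Number of Merge applications on the longest path down to a lexical item."""
    if isinstance(obj, str):
        return 0
    return 1 + max(depth(member) for member in obj)

# Recursive application: the output of Merge is itself a syntactic object.
object_dp = merge("the", "professor")
vp = merge("knows", object_dp)
subject_dp = merge("this", "student")
clause = merge(subject_dp, vp)
```

Three category-specific rules are needed to derive the preterminal string, whereas the same operation, applied to its own output, builds the whole hierarchy.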

Simplicity in the number and the form of the operations of I-language is preferable according to Occam’s Razor: of two formal systems with equivalent descriptive capacity, the one with the simpler grammar has more explanatory value. The preference for simplicity is also motivated by the fact that children acquire language notwithstanding the poverty of the stimulus [2,3], as well as by the early emergence of language in time, as is known based on archeological records [54]. In this framework, First Principles of I-language interact with Principles of Efficient Computation external to I-language, reducing complexity; we now turn to these first principles.

2.3. First Principle of Language: Structure Dependency

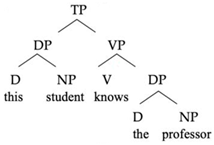

According to the principle of Structure Dependency (see (5)), the operations of I-language apply to structures, not to sequences of words. Given Structure Dependency, linguistic expressions are hierarchically structured expressions and not sequences of words. For example, the sentence in (6a) can be represented in terms of oriented graphs including lower nominal (NP) and verbal (VP) constituents, which are dominated by higher functional constituents, including DP and TP. Lower syntactic projections host argument-structure configurations, while higher functional structures host modification and operator-variable structures.

| (5) | Structure Dependency |

| | I-language operations are structure-dependent. |

| (6) | a. This student knows the professor. |

| b. | |

|

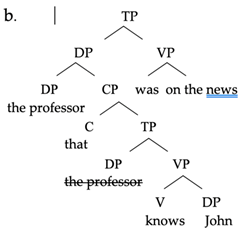

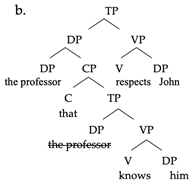

Structure Dependency is at play across the board in language, including in the generation of syntactic constituents in specific syntactic positions and their displacements to higher syntactic positions. For example, in (7b), the DP the professor in the lower TP position is displaced to the higher DP position, leaving in its initial position a covert copy, represented as a strikethrough.

| (7) | a. The professor that knows John was on the news. |

|

Without Structure Dependency, it would not be possible to differentiate possible from impossible linguistic expressions, including ungrammatical (*) expressions such as *student this professor the likes and arbitrary sequences of words. Experimental results support Structure Dependency’s status as a First Principle of I-language. If this were not the case, it would not be possible to explain why intonational phrases, rather than perceptual breaks such as pauses, influence syntactic attachments in syntactic disambiguation; see [55,56,57,58], among other works.

2.4. Principles of Efficient Computation

Principles of I-language apply in conjunction with Principles of Efficient Computation, which are external to I-language and contribute to reducing the complexity of syntactic computation. Several economy conditions on syntactic derivations have been proposed in GGLMs. One of them is Phase theory; see (8) [59,60].

| (8) | Phase Theory: Derivations proceed by phases. |

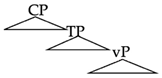

A phase is a unit of computation and interpretation that optimizes the efficiency of the cyclic transfer of syntactic constituents to the external systems. Phases are typically complete propositional structures (CP) and complete argument structures (vP) that are sent to the external systems at Spell-Out for semantic interpretation on the one hand and linearization on the other; these may require separate operations [61,62].

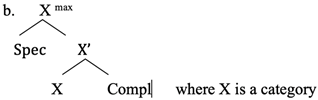

The internal structure of a phase includes a Head, a Complement and an Edge, the latter being the only position from which displacement can take place, a constraint that reduces the complexity of displacements. Derivation by phase builds on X-bar Theory (see (9)), as proposed in the Principles and Parameters framework [49,50,63]. According to X-bar Theory, syntactic projections are endocentric. Each head X, irrespective of its category, projects higher syntactic levels (X′, …, Xmax), including a Complement (Compl) and a Specifier (Spec). The Compl of X is selected by X and generated in the X′ projection, whereas the Spec hosts the subject. Adjuncts are not selected by X and are generated in a higher position in its projection chain.

| (9) | a. X-bar Theory: Every category projects more than one syntactic level, according to the following schema. |

|

X-bar Theory subsumes all category-specific phrase-structure rules and thus contributes to the computational efficiency of syntactic derivations. Moreover, it is supported by experimental results from language-acquisition studies [64]. In this framework, the syntactic representation of a linguistic expression takes the form of an oriented graph, where formal relations hold between its constituents. A central relation that has been extensively investigated is the asymmetrical c-command relation, where “c” stands for constituent; see (10), from [33,65].

| (10) | a. | C-command: X c-commands Y if X and Y are categories, X excludes Y, and every category that dominates X dominates Y. |

| | b. | Asymmetric c-command: X asymmetrically c-commands Y if X c-commands Y and Y does not c-command X. |
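The definitions in (10) can be checked mechanically on a small tree. The sketch below assumes the X-bar configuration described in the text (a Specifier, and an X′ level containing the Head X and its Complement), encodes nodes by their address in the tree, and treats every node, including X′, as a category for the purposes of domination. These encoding choices and the function names are illustrative assumptions.

```python
# X-bar configuration as nested lists: [label, child, child, ...].
# Labels are assumed to be unique so that they can serve as dictionary keys.
XP = ["XP", ["Spec"], ["X'", ["X"], ["Compl"]]]

def addresses(tree, here=()):
    """Map each node label to its address: child positions from the root."""
    found = {tree[0]: here}
    for position, child in enumerate(tree[1:]):
        found.update(addresses(child, here + (position,)))
    return found

def dominates(a, b):
    """a properly dominates b if a's address is a proper prefix of b's."""
    return len(a) < len(b) and b[:len(a)] == a

def c_commands(a, b):
    """(10a): a excludes b, and every node that dominates a also dominates b."""
    if a == b or dominates(a, b) or dominates(b, a):
        return False
    parent = a[:-1]  # if the parent dominates b, so do all higher nodes
    return b[:len(parent)] == parent

def asymmetrically_c_commands(a, b):
    """(10b): a c-commands b and b does not c-command a."""
    return c_commands(a, b) and not c_commands(b, a)

pos = addresses(XP)
spec_over_head = asymmetrically_c_commands(pos["Spec"], pos["X"])
head_over_spec = c_commands(pos["X"], pos["Spec"])
```

Under this encoding, the Specifier asymmetrically c-commands both the Head and the Complement, while the Head and the Complement c-command each other.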

This relationship is at play across the board in syntax, including in the structural relation between a displaced constituent and its unexpressed copies. To illustrate briefly, in (9b) the Specifier asymmetrically c-commands the Head and the Compl, but the inverse is not true. Structural asymmetries are also observed with displacements of constituents in question-formation from embedded contexts. The examples in (11) illustrate the fact that the displacement of a constituent from an embedded clause is possible from the Spec position (11b) but not from an Adjunct position (11c). The examples in (12) illustrate that while it is possible to front a modal, such as can, from a root position (12b), it is not possible to do so from a center-embedded clause, as in (12c).

| (11) | a. | [CP [TP you asked [CP who behaved how]]] |

| | b. | [CP who [TP do you ask [CP ~~who~~ behaved how]]] |

| | c.* | [CP how [TP do you ask [CP who behaved ~~how~~]]] |

| (12) | a. | [TP [DP a man [CP that can walk]] can run] |

| | b. | [CP can [TP [DP a man [CP that can walk]] ~~can~~ run]] |

| | c.* | [CP can [TP [DP a man [CP that ~~can~~ walk]] can run]] |

Thus, I-language operations apply to constituent structures and not to words or linear sequences of words; see also [66,67,68,69]. Structural asymmetry reduces complexity and thus contributes to computational efficiency. See [70,71,72,73] for its pervasive role in language.
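The contrast in (12) can be reproduced with a minimal sketch that compares a linear fronting rule with a structure-dependent one. The nested-list encoding of (12a) and the two function names are assumptions made for illustration; the bracketing is deliberately coarse.

```python
# (12a) as [label, child, ...]; strings are words, lists are constituents.
SENTENCE = ["TP", ["DP", "a", "man", ["CP", "that", "can", "walk"]], "can", "run"]

def flatten(node):
    """Return the words of a constituent in linear order."""
    words = []
    for child in node[1:]:
        if isinstance(child, str):
            words.append(child)
        else:
            words.extend(flatten(child))
    return words

def front_linear(tree, modal="can"):
    """Linear rule: front the first occurrence of the modal in the word string."""
    words = flatten(tree)
    i = words.index(modal)
    return [modal] + words[:i] + words[i + 1:]

def front_structural(tree, modal="can"):
    """Structure-dependent rule: front the modal that is a daughter of the root clause."""
    label, *children = tree
    i = children.index(modal)
    remainder = [label] + children[:i] + children[i + 1:]
    return [modal] + flatten(remainder)

linear_result = " ".join(front_linear(SENTENCE))
structural_result = " ".join(front_structural(SENTENCE))
```

The linear rule yields the ill-formed string in (12c), can a man that walk can run, while the structure-dependent rule yields (12b), can a man that can walk run.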

2.5. Structure-Dependent Semantic Compositionality

In this framework, Structure Dependency is a prerequisite for the semantic interpretation of linguistic expressions, including heads, arguments, and adjuncts. Syntactic conditions in (13) and (14) impose a biconditional relation between arguments and syntactic positions ((13) [50]) and between arguments and the head that selects them ((14) [74]).

| (13) | Theta Theory | ||

| a. | Each argument must saturate a theta position. | ||

| b. | Each theta position must be saturated by an argument. | ||

| (14) | Argument Criterion | ||

| a. | Each argument is introduced by a single argument-introducing head. | ||

| b. | Each argument-introducing head introduces a single argument. | ||
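The biconditional imposed by (13) amounts to a one-to-one pairing of arguments and theta positions, which can be sketched as a simple check. The sketch reads (13a) as requiring exactly one theta position per argument; that reading, the position names `external` and `internal`, and the function name are assumptions made for illustration.

```python
def satisfies_theta_criterion(arguments, theta_positions, links):
    """Check (13a) and (13b) over a list of (argument, theta position) links."""
    linked_arguments = [argument for argument, _ in links]
    linked_positions = [position for _, position in links]
    # (13a): each argument saturates a theta position (read here as exactly one).
    every_argument_once = all(linked_arguments.count(a) == 1 for a in arguments)
    # (13b): each theta position is saturated by an argument (exactly one).
    every_position_once = all(linked_positions.count(p) == 1 for p in theta_positions)
    return every_argument_once and every_position_once

KNOW = ["external", "internal"]  # theta positions assumed for the verb know

complete = satisfies_theta_criterion(
    ["this student", "the professor"], KNOW,
    [("this student", "external"), ("the professor", "internal")],
)
missing_argument = satisfies_theta_criterion(
    ["this student"], KNOW,
    [("this student", "external")],
)
extra_argument = satisfies_theta_criterion(
    ["this student", "the professor", "the book"], KNOW,
    [("this student", "external"), ("the professor", "internal")],
)
```

Only the first configuration passes: the second leaves the internal position unsaturated, and the third contains an argument that saturates no position.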

Argument saturation, predicate modification, and operator–variable binding are applied to a syntactic structure to derive the semantic interpretation of the whole structure compositionally, that is, according to the interpretation of its parts in their syntactic arrangement. See [75,76] for a featural approach to semantic interpretation and [77] for a type-theoretical approach to semantics.

For example, in the simplified syntactic representation of (6a) above, this student knows the professor, the verbal predicate know has two arguments, namely an internal argument the professor and an external argument this student, which occupy different syntactic positions. The former is located inside the verbal projection (VP), and the latter is outside the VP. This structural asymmetry contributes to semantic interpretation, since altering the structural position of these constituents, as in the professor knows this student, results in a different semantic interpretation, including truth value and semantic implicatures. Thus, notwithstanding the fact that they may include the same words, linguistic expressions may not be semantically equivalent. The semantic compositionality of linguistic expressions builds on their syntactic compositionality, which also contributes to the efficiency of the mapping of syntactic structures to their semantic interpretation at the SEM interface. Syntax is nonetheless autonomous with respect to semantics, as evidenced by Chomsky’s example colorless green ideas sleep furiously, which is syntactically well formed but not semantically interpretable. Given that each lexical item is associated with a set of features, including its categorial and semantic features, the semantic interpretation of the expressions it participates in is derived compositionally, as is the case for expressions such as interesting novel hypotheses spread rapidly.
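The role of structural position in interpretation can be illustrated with a minimal sketch of argument saturation by function application. The model `KNOWS`, which stipulates who knows whom, and the function names are hypothetical; the sketch only shows that the same words yield different truth values depending on which constituent saturates the internal and the external argument.

```python
# Hypothetical model: the set of (knower, known) pairs that hold.
KNOWS = {("this student", "the professor")}

def knows(internal_argument):
    """Verb meaning: saturate the internal argument first, then the external one."""
    def verb_phrase(external_argument):
        return (external_argument, internal_argument) in KNOWS
    return verb_phrase

def interpret(subject, verb, obj):
    """Compose bottom-up along the structure [subject [verb object]]."""
    verb_phrase = verb(obj)      # VP: the verb combines with its internal argument
    return verb_phrase(subject)  # clause: the VP combines with the external argument

sentence_a = interpret("this student", knows, "the professor")
sentence_b = interpret("the professor", knows, "this student")
```

In this model, this student knows the professor is true and the professor knows this student is false, although both contain the same words.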

2.6. Structural Asymmetry of Binding vs. Coreference

Pronouns do not have independent referents, unlike proper nouns and definite descriptions. They must be co-indexed with an antecedent with independent reference and matching morphological features, such as gender, number, and person. The conditions A and B of the Binding Theory in (15) apply under asymmetrical c-command in the local domain of a pronominal, such as he, she, him, and her, or an anaphor, such as himself or herself [50]. Binding Theory is structure-dependent: if x is bound by y, then y asymmetrically c-commands x. This is not the case for Coreference.

| (15) | Binding Theory | |

| A. | An anaphor must be bound. | |

| B. | A pronominal must be free. | |

According to condition B in (15), a pronominal is free in its local domain. However, the pronominal can be co-indexed with a constituent outside of its local domain. This is the case in (16), where the pronominal him is in a relative clause modifying the subject, i.e., the professor. Given its syntactic position, this pronominal must be free in its local domain, i.e., its reference is not determined by the DP the professor. However, it can be co-indexed, and thus share a referential index, with a nominal constituent located outside of its local domain, such as John in (16), or be coreferential with a different entity in the broader discourse.

| (16) | a. | The professor that knows him respects John. |
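The two conditions of Binding Theory can be sketched as a check over co-indexed DPs. The sketch assumes that each DP is annotated with its address in the tree, a referential index, and the address of its local domain (taken here to be the smallest clause containing it); the addresses chosen for (16a) and all names in the code are illustrative assumptions, not the output of a parser.

```python
from typing import NamedTuple

class DP(NamedTuple):
    form: str
    address: tuple  # child positions from the root of the tree
    index: str      # referential index
    kind: str       # "r-expression", "pronominal" or "anaphor"
    domain: tuple   # address of the smallest clause containing the DP

def c_commands(a, b):
    """a c-commands b: neither dominates the other and a's parent dominates b."""
    if a == b or a[:len(b)] == b or b[:len(a)] == a:
        return False
    parent = a[:-1]
    return b[:len(parent)] == parent

def locally_bound(x, dps):
    """x is co-indexed with a c-commanding DP inside x's local domain."""
    return any(
        y.index == x.index
        and y.address[:len(x.domain)] == x.domain
        and c_commands(y.address, x.address)
        for y in dps if y is not x
    )

def well_formed(x, dps):
    """An anaphor must be locally bound; a pronominal must be locally free."""
    if x.kind == "anaphor":
        return locally_bound(x, dps)
    if x.kind == "pronominal":
        return not locally_bound(x, dps)
    return True

# [TP John_i [VP likes him_i / himself_i]]
john = DP("John", (0,), "i", "r-expression", ())
him = DP("him", (1, 1), "i", "pronominal", ())
himself = DP("himself", (1, 1), "i", "anaphor", ())

# (16a): [TP [DP the professor_k [CP that knows him_i]] [VP respects John_i]]
professor = DP("the professor", (0,), "k", "r-expression", ())
him_16 = DP("him", (0, 1, 1, 1), "i", "pronominal", (0, 1))
john_16 = DP("John", (1, 1), "i", "r-expression", ())
```

With these annotations, him co-indexed with John in John likes him is ruled out, himself is accepted, and him in (16a) may share an index with John because John lies outside the relative clause and does not c-command the pronominal.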

Furthermore, pronominals can be interpreted without being expressed overtly. This is the case for implicit arguments represented as unexpressed pronouns PRO [78] and pro [79]. PRO and pro are also subject to the structure-dependent Theory of Control, including obligatory and non-obligatory Control. PRO is generated in the external argument position (Subject) of embedded infinitival clauses and is subject to Control Theory. With non-obligatory Control verbs such as want, the interpretation of PRO is determined by the closest possible antecedent located in the main clause, as in [John_i wants [PRO_i to leave]] and [John wants Paul_i [PRO_i to leave]]. Obligatory Control verbs such as promise and allow impose lexical constraints on control relations: [Mary_i promised Susy [PRO_i to leave]] and [Mary allowed Susy_i [PRO_i to leave]].
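The control patterns in the bracketed examples above can be summarized in a small lookup. The sketch assumes that promise lexically requires the subject as controller, that allow requires the object, and that for verbs without such a requirement (such as want) the closest possible antecedent is the object when one is present and the subject otherwise. The table and function name are hypothetical.

```python
# Lexical control properties, taken from the examples in the text.
CONTROL = {"promise": "subject", "allow": "object"}

def controller_of_pro(verb, subject, obj=None):
    """Return the antecedent of PRO in [subject verb (obj) [PRO to ...]]."""
    requirement = CONTROL.get(verb)
    if requirement == "subject":
        return subject
    if requirement == "object":
        return obj
    # No lexical requirement (e.g. want): take the closest possible antecedent,
    # approximated here as the object if there is one, otherwise the subject.
    return obj if obj is not None else subject

want_intransitive = controller_of_pro("want", "John")
want_with_object = controller_of_pro("want", "John", "Paul")
promise_case = controller_of_pro("promise", "Mary", "Susy")
allow_case = controller_of_pro("allow", "Mary", "Susy")
```

The four calls correspond to the four examples in the text and return John, Paul, Mary, and Susy, respectively.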

Pro is generated in the subject position of tensed clauses in pro-drop languages, such as Italian but not French, e.g., parlerà francese (It.) vs. *parlera français (Fr.) (Lit. he/she will speak French). Pro is also generated in object position in English and Romance languages and interpreted as [human] and [generic]. The interpretation of null object Pro depends on the properties of its selecting verb, e.g., eat, drink and study. Interpreted unexpressed constituents can also be part of age- and time-telling expressions, as in He is one (YEAR OLD) and It is one (HOUR O'clock), where the interpretation of the unpronounced constituent is context-dependent; see [80].

Unexpressed interpreted constituents, including the covert copies of displaced constituents, null pronouns, and classifiers, are pervasive in natural languages. They are not processed by ChatGPT, since LLMs process overt sequences of words to predict the next one. This has consequences for their reliability, as further discussed below.

2.7. Computational Implementations of GGLMs

Computational implementations have been proposed through the development of GGLMs, including Principles and Parameters Parsing [81,82] and Minimalist Parsing [83,84,85,86].

Given the central role of asymmetrical relations in syntax, such as the asymmetrical c-command relation, the following paragraphs illustrate the fine-grained syntactic structures generated by the Asymmetry-Recovering Parser [87,88,89,90]. This parser implements the core Structure Dependency Principle of syntactic operations, including structure-building and displacement operations, as well as their interaction with Principles of Efficient Computation. These include X-bar projections with more than one layer of projection (see [74]) and the displacement of constituents from lower to higher syntactic projections, leaving covert copies in their extraction sites.

See (17) for a simplified bracketed representation of the trace generated by the Asymmetry-Recovering Parser for the wh-question who saw Mary? It illustrates the displacement of the wh-word who from the lower functional argument structure vP to the upper functional structures vP, TP, and CP. The trace provides an articulated syntactic structure enabling fine-grained compositional semantic interpretation.

| (17) | [CPwh who C [TP |

|

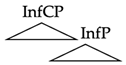

In addition to the recovery of the copies of displaced constituents, the parser also recovers the content of covert constituents, including PRO and Pro. As mentioned previously, in languages such as English, PRO occupies the subject position of an infinitive clause, and Pro may occupy the complement position of the verb embedded under a control verb such as want, as in John wants to study; see (18). The parser also recovers the antecedents of covert pronominals.

| (18) | [John wants [PRO to study Procons]] |

| |_____^ |___^ |

The simplified output of the parser in (19) includes the generation of covert pronominals PRO and Pro in their appropriate syntactic positions. PRO is generated in the Specifier position of the infinitive clause (InfP), and Procons is generated in the complement position of the verb study. It also shows the displacement of PRO from the Specifier of its infinitival clause (InfP) to the Specifier of its Complementizer (InfC).

| (19) | [TP [DP John D [VP wants V [InfCP PRO toInf [InfP [DP |

|

Given the Principles of Control Theory, and since want is a non-obligatory Control verb, the interpretation of PRO is controlled by the DP John, which is its closest possible antecedent located in the Specifier position of the main clause in (19). The interpretation of Procons is determined by the properties of the local verb that selects it, namely study.

The interaction of Principles of I-language with Principles reducing complexity in syntactic parsing provides the configurational basis for fine-grained compositional semantics. See [48] for active, passive and wh-question traces generated by the parser.

2.8. The Brain and Language-Acquisition Studies

Experimental results from neurosciences provide validation for GGLMs. It has been established that Broca’s area supports the processing of syntax in general, with Brodmann areas BA 44/45 being the neural correlate [91,92]. Neurobiological results provide support for the role of networks involving the temporal cortex and the inferior frontal cortex that support syntactic processes in natural language processing. These findings are in line with the results reported for syntactic embedding and displacement. Humans are predetermined to compute linguistic recursion and complex hierarchical structures [93,94]. Results from experiments using electrophysiological measurements indicate that syntactic processes in language processing precede the assignment of grammatical and semantic relations in a sentence [12,95]. See also [13] for a discussion of the contributions of neurosciences to the biology of language.

Results from first language acquisition studies also bring support for GGLMs. According to [96], problems with identifying the antecedent of pronouns in children around the ages of 3 and 4 can be attributed to delays in the integration of language-specific and language-independent complexity-reducing mechanisms. Other studies also support the view that children’s “mistakes” are not violations of structure-dependency [97]. Results from eye-tracking experiments show that children recognize functional categories very early [98] and that recursive constituent structures are produced and understood very early in the child’s language-acquisition path [64]. As they are genetically endowed with I-language Structure Dependency and rely on principles of Efficient Computation, children’s path to language acquisition is simplified.

2.9. Section Summary

In this section, we focused on core principles of I-language, which apply in conjunction with Principles of Efficient Computation to reduce derivational complexity. We showcased the results of the Asymmetry-Recovering Parser. The latter is used in NLP to process structure-dependent semantic relations, including the relations between constituents lacking independent referents, such as pronouns. We also pointed to results from brain studies and studies of first-language acquisition that provide experimental support for GGLMs.

3. LLMs

In this section, we focus on LLMs, differentiate them from GGLMs, and point to shortcomings of different versions of ChatGPT and Grok in terms of their ability to provide reliable information when prompted with queries including pronominals in root and embedded sentences, as well as structural ambiguity.

3.1. Basic Properties

LLMs are very large computer programs based on Deep Learning, which is a type of machine learning that uses Artificial Neural Networks [23,99,100]. This method is based on the idea that an artificial system can learn from the data it has been exposed to, use statistical and probabilistic models to identify patterns in the data, and make decisions without human intervention.

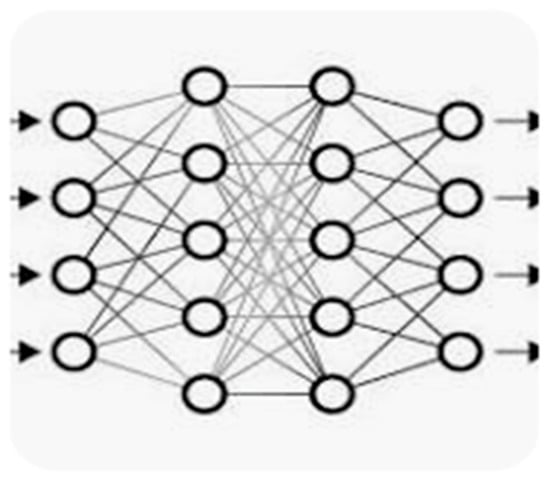

LLMs are complex computer programs. They rely on a relatively large number (about 100k) of sub-word units or tokens extracted from large linear sequences of text from the Internet and combine these tokens according to statistical information on their co-occurrence frequencies to predict the next word. They include several rules and millions to billions of parameters. They are very large Deep Learning Models with Artificial Neural Networks (see Figure 1) that are pre-trained on very large corpora, which may reach trillions of tokens/words in size. LLMs are said to simulate information processing by the human brain’s neuronal system.
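Next-word prediction from co-occurrence frequencies can be illustrated with a minimal bigram sketch. It is a deliberately reduced stand-in: it counts adjacent whitespace-separated words in a hypothetical toy corpus, whereas LLMs use sub-word tokens, neural networks, and much longer contexts.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, which words immediately follow it."""
    counts = defaultdict(Counter)
    tokens = text.split()
    for previous, current in zip(tokens, tokens[1:]):
        counts[previous][current] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of word, or None if word is unseen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Toy corpus (hypothetical).
corpus = "the student knows the professor . the student likes the book ."
model = train_bigrams(corpus)
prediction = predict_next(model, "the")
```

Here the follows student twice and professor and book once each, so student is predicted; the prediction depends only on linear adjacency in the training text.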

Figure 1. Artificial Neural Network. Inspired by neuronal networks, ANN architecture typically consists of interconnected nodes, which are organized into layers: an input layer, one or more hidden layers, and an output layer. These layers work together to process information, with connections between nodes having associated weights that determine the strength of influence. The network learns by adjusting these weights during training, enabling it to perform various tasks like classification, regression, and pattern recognition.

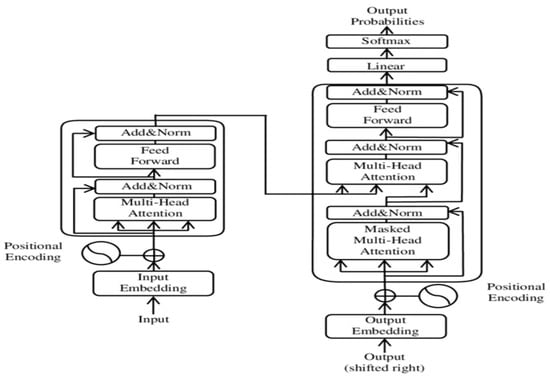

LLM architectures include Transformers [101,102], which enabled earlier seq-to-seq models, used for translation, to predict the next word; see Figure 2. Transformers can perform multiple NLP tasks, including tokenization, part-of-speech tagging, summarization, and sentiment analysis [103].

Figure 2. Transformer. In deep learning, a transformer is an architecture based on the multi-head attention mechanism, in which text is converted to numerical representations called tokens and each token is converted into a vector via lookup from a word-embedding table. At each layer, each token is then contextualized within the scope of the context window with other (unmasked) tokens via a parallel multi-head attention mechanism, allowing the signals for key tokens to be amplified and those for less important tokens to be diminished.

Transformers use a sequence-to-sequence architecture; that is, they take a sequence of words as input and produce another sequence of words as output. This requires positional embedding to identify the position of each element in the sequence. Self-attention used in a Transformer encoder is bidirectional; consequently, each hidden state can see the full sequence. BERT’s masked language modelling achieved good results in predicting masked words in a document. T5 is a multi-task text-to-text model. Transformers trained on massive amounts of data such as GPT-3, GPT-4o mini, and GPTX are said to achieve human-like behavior for different language modelling tasks, including resolution of pronominal anaphora. The Transformer architecture is entirely based on Attention, which provides a solution to the memory and long-term-dependency problems of Recurrent Neural Network architectures. Attention heads are said to improve sequence-based processing of subject-predicate relations and pronominal anaphora. BERT and its variants, including ALBERT and RoBERTa, help to achieve so-called “state of the art” performance in NLP.
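The difference between bidirectional self-attention and the masked attention used for left-to-right generation can be shown with a minimal sketch of scaled dot-product attention. It is written in plain Python and makes several simplifying assumptions: a single head, no learned projection matrices, and three hypothetical two-dimensional token vectors used directly as queries, keys, and values.

```python
from math import exp, sqrt

def softmax(scores):
    """Turn a list of scores into weights that sum to 1."""
    peak = max(scores)
    exps = [exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values, causal=False):
    """Scaled dot-product attention; returns (outputs, weights)."""
    scale = sqrt(len(keys[0]))
    outputs, weights = [], []
    for i, q in enumerate(queries):
        # Bidirectional: every position is visible. Causal: only positions <= i.
        visible = range(i + 1) if causal else range(len(keys))
        scores = [sum(a * b for a, b in zip(q, keys[j])) / scale for j in visible]
        row = softmax(scores) + [0.0] * (len(keys) - len(scores))
        weights.append(row)
        outputs.append([
            sum(w * v[d] for w, v in zip(row, values))
            for d in range(len(values[0]))
        ])
    return outputs, weights

# Three hypothetical token vectors.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
_, bidirectional = self_attention(tokens, tokens, tokens)
_, causal = self_attention(tokens, tokens, tokens, causal=True)
```

In the bidirectional case the first token assigns non-zero weight to all three positions; with the causal mask it can attend only to itself. In both cases the weights are computed over positions in a sequence, not over constituents.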

With LLMs, natural language expressions take the form of sequences of machine-readable units, e.g., tokens and words separated by blanks and other machine-readable markers. Words are sequences of tokens, sentences are sequences of words, and texts are sequences of sentences. This contrasts with GGLMs, where lexical items and their features are combined by a small set of operations to generate hierarchical syntactic structures, which provide the skeleton for their compositional semantic interpretation. The fine-grained syntactic structures of natural language expressions are not modelled with LLMs, even though recent research aims to recover syntactic constituent structure at the Attention layer of Transformers, a point to which we come back below.

In current AI work, Machine Learning NLP is considered to outperform humans in narrowly defined structured tasks such as internet search, named-entity recognition, topic detection, and question answering. However, the next section points to shortcomings of ChatGPT-3.5, GPT-4o mini, and Grok 3.

3.2. ChatGPT

ChatGPT is an LLM-based conversational AI program trained on a very large amount of data from the Internet. It attempts to produce a “reasonable continuation” to incoming prompts. According to [104,105], ChatGPT is also efficient with discrete data, such as artificial languages used for programming. However, research on LLMs shows that it hallucinates when dealing with formal languages as well, and the accuracy of its products on some benchmarks is quite poor; see [106], among other works. The architecture of ChatGPT actualizes only the decoder part of the original Transformer model. It differs from BERT, which processes text bidirectionally, from right to left and from left to right. This is not the case for ChatGPT, which uses a decoder-only Transformer model; the encoder is not necessary for text generation. As mentioned previously, ChatGPT’s reliability is limited. Several works point to factual errors, incoherent results, and “hallucinations” generated by the chatbot [107,108,109]. These limitations have been attributed to the lack of general and domain-specific inference capacities in LLMs, and recent research aims to enhance these capacities by focusing on reasoning and causal inference [110,111,112]. However, more basic NLP limitations may originate from the lack of modelling principles at play in language syntax and compositional semantics in LLMs.
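The contrast between bidirectional encoder self-attention and decoder-only attention can be illustrated with attention masks. The following is a minimal sketch in plain Python; the function names `bidirectional_mask` and `causal_mask` are illustrative assumptions and do not come from any particular library or from the systems discussed here.

```python
from typing import List


def bidirectional_mask(n: int) -> List[List[bool]]:
    """Encoder-style mask: every position may attend to every position."""
    return [[True] * n for _ in range(n)]


def causal_mask(n: int) -> List[List[bool]]:
    """Decoder-only mask: position i may attend only to positions j <= i."""
    return [[j <= i for j in range(n)] for i in range(n)]


# Toy token sequence (hypothetical whitespace tokenization).
tokens = ["The", "candidate", "respects", "him"]

for name, mask in (
    ("bidirectional", bidirectional_mask(len(tokens))),
    ("causal", causal_mask(len(tokens))),
):
    print(name)
    for token, row in zip(tokens, mask):
        visible = [t for t, allowed in zip(tokens, row) if allowed]
        print(f"  {token:>10} attends to {visible}")
```

In both cases, the mask is defined over linear positions in the token sequence; neither mask encodes hierarchical syntactic structure.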

3.3. Prompting

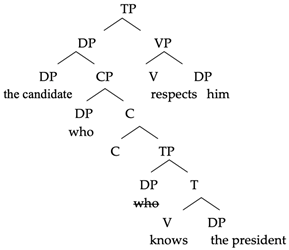

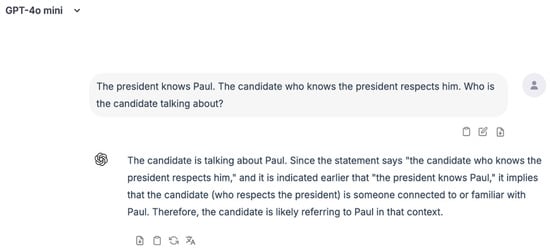

In this section, we test different versions of ChatGPT on pronominal anaphora resolution with the following prompt: The president knows Paul. The candidate who knows the president respects him. Who is the candidate talking about? This prompt is syntactically complex, as it includes a center-embedded relative clause, who knows the president; see (20). Its semantic interpretation is also complex, as the reference of the pronominal him can be flexible.

| (20) |  |

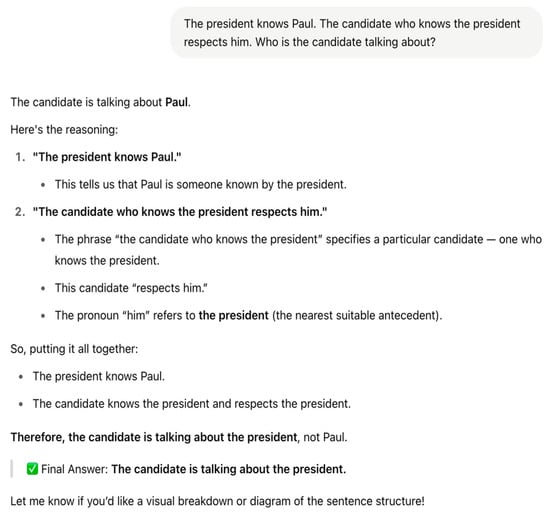

The selection of this prompt is indicative of whether or not LLMs such as ChatGPT-3.5, GPT-4o mini, and Grok-3 are sensitive to the structure-dependent Principle A of the Binding Theory and to the difference between Binding and Coreference. As mentioned previously, according to Principle A of the Binding Theory, the pronominal him in (20) is free in its c-command domain, i.e., it cannot be bound by the candidate, in the upper TP. However, it can be coreferential with another nominal constituent outside of its local domain, namely with the president in the lower TP, or with another nominal constituent mentioned previously in the discourse, which is also part of the first sentence of the prompt, namely Paul. ChatGPT-3.5’s reply in (Figure 3) indicates no sensitivity to Principle A of the Binding Theory or to the contrast between Binding and Coreference.

Figure 3.

Snapshot of ChatGPT-3.5 reply to the prompt “The president knows Paul. The candidate who knows the president respects him. Who is the candidate talking about?”. The program provides paraphrases for the two first sentences of the query and concludes that “The candidate is talking about the president” without mentioning the alternative interpretation, where “the candidate” is talking about someone else.

In the first step of its reasoning, the program converts the first sentence of the prompt into a passive. In the second step, it eliminates the relative clause “who knows the president” and generates a sentence, “the candidate respects him”, that is not part of the prompt, and incorrectly takes him to refer to the president, based on it being “the closest suitable antecedent” to the pronominal. In the “putting it all together” step, the program transforms the relative clause in the second sentence of the prompt into a coordinate structure. This leads to the Final Answer: “the candidate is talking about the president”. This answer is incomplete, since the candidate could also be talking about Paul or about another person not mentioned in the prompt, but part of a larger context.

Prompting GPT-4o mini, which is an advanced version of ChatGPT-3.5, does not improve the results significantly; see (Figure 4). This is also the case for other LLMs, as we illustrate in (Figure 5) with Grok-3.

Figure 4.

GPT-4o mini’s reply to the same prompt “The president knows Paul. The candidate who knows the president respects him. Who is the candidate talking about?” is also incomplete. The program, which is an updated version of ChatGPT-3.5, identifies “Paul” as the most likely antecedent for the pronoun based on the “familiarity” of “Paul” to “the candidate” brought about by the verb “know” in the first sentence of the prompt. Like ChatGPT-3.5, it does not point to other possible antecedents for the pronoun.

Figure 5.

Grok 3’s reply to the prompt “The president knows Paul. The candidate who knows the President respects him. Who is the candidate talking about?” provides “reasoning” that consists of paraphrasing the query with other words. The program also provides a partial answer to the query, as “the candidate” could be talking about “Paul” or about someone else mentioned in the previous discourse.

ChatGPT-3.5 and GPT-4o mini, as well as Grok 3, rely on a string-linear notion of “closeness” between constituents and not on the structure-dependent notion of “local domain”; additionally, they do not differentiate Binding from Coreference. As mentioned previously, Binding Theory applies when there is an asymmetrical c-command relation between pronominals and nominal expressions. This is not the case for Coreference, which is a free association of referential indexes to nominal expressions. The structure-dependent principles of the Binding Theory limit the range of possible antecedents of pronominals. While a pronominal is free in its local domain, it can be coreferential with a nominal expression located outside of that domain. The Binding Theory relies on asymmetrical c-command, a structure-dependent relation between nodes in syntactic trees, whereas Coreference is a free attribution of referential indexes to pronouns and their potential antecedents.
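The difference between string-linear closeness and structure-dependent c-command can be made concrete with a small sketch. The tree below is a simplified, hand-built bracketing of The candidate who knows the president respects him; its labels, the `Node` class, and the helper functions are illustrative assumptions, not the output of a GGLM parser.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(eq=False)
class Node:
    label: str
    children: List["Node"] = field(default_factory=list)
    parent: Optional["Node"] = field(default=None, repr=False)

    def __post_init__(self) -> None:
        for child in self.children:
            child.parent = self


def dominates(a: Node, b: Node) -> bool:
    """True if a properly dominates b."""
    node = b.parent
    while node is not None:
        if node is a:
            return True
        node = node.parent
    return False


def c_commands(a: Node, b: Node) -> bool:
    """a c-commands b: neither dominates the other, and the first
    branching node dominating a also dominates b."""
    if a is b or dominates(a, b) or dominates(b, a):
        return False
    node = a.parent
    while node is not None and len(node.children) < 2:
        node = node.parent
    return node is not None and dominates(node, b)


def local_tp(node: Node) -> Optional[Node]:
    """Smallest TP dominating node (used here as its local domain)."""
    cur = node.parent
    while cur is not None and cur.label != "TP":
        cur = cur.parent
    return cur


def pronounced_words(node: Node) -> List[Node]:
    """Leaves in linear order, skipping the silent trace 't'."""
    if not node.children:
        return [] if node.label == "t" else [node]
    return [w for child in node.children for w in pronounced_words(child)]


# Hypothetical simplified tree for the second sentence of the prompt.
him = Node("him")
n_pres = Node("president")
dp_pres = Node("DP", [Node("the"), n_pres])
rel_tp = Node("TP", [Node("t"), Node("VP", [Node("knows"), dp_pres])])
n_cand = Node("candidate")
dp_cand = Node("DP", [Node("the"), Node("NP", [n_cand, Node("CP", [Node("who"), rel_tp])])])
root = Node("TP", [dp_cand, Node("VP", [Node("respects"), him])])

heads = {"the candidate": (dp_cand, n_cand), "the president": (dp_pres, n_pres)}
words = pronounced_words(root)

# String-linear heuristic: the head noun nearest to the pronoun.
linear_closest = min(heads, key=lambda k: words.index(him) - words.index(heads[k][1]))

# Structure-dependent check: a DP that c-commands the pronoun inside the
# pronoun's local TP is excluded as a binder; other DPs may corefer.
status = {}
for name, (dp, _) in heads.items():
    is_local_binder = c_commands(dp, him) and dominates(local_tp(him), dp)
    status[name] = "excluded as local binder" if is_local_binder else "coreference possible"

print("linear closest:", linear_closest)
for name, result in status.items():
    print(f"{name}: {result}")
```

Under these assumptions, the linear heuristic selects the president as the only antecedent, whereas the structural check excludes only the candidate and leaves the president, as well as discourse antecedents such as Paul, available through Coreference.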

Because ChatGPT and other LLMs rely on surface string-linear properties of natural language expressions, the difference between the structure-dependent Binding Theory and Coreference is not at play in these models. Consequently, Binding vs. Coreference processing neither narrows down the set of possible anaphoric relations between pronouns and their potential antecedents nor contributes to providing more reliable answers to queries including pronominals. While additional work is needed to validate this point on systematic experimental grounds, our observations lead us to question the reliability of at least some LLMs with respect to pronominal anaphora resolution; these LLMs may thus fail to provide useful and complete replies to prompts including pronominals and more than one potential antecedent.

3.4. Emergence of Structure

Attempts have been made to predict syntactic constituent structure using artificial neural networks trained by self-supervision. This is the case for [113], which reports progress with respect to previous attempts using supervised learning on a treebank of sentences that had been hand-labeled for constituent structure. However, a closer look at their assumptions and results suggests that further work is needed, as we point out in the following paragraphs.

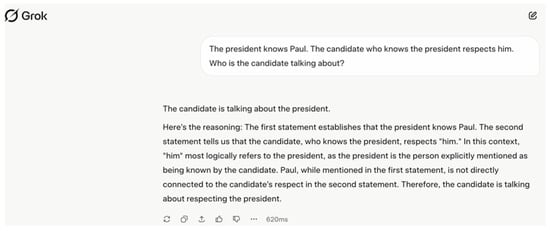

A basic assumption in this framework is that knowledge of linguistic structure can be learned by large artificial networks that are trained via self-supervision, that is, by a model that tries to predict the next word in a word context of a given length. However, the resulting representation for the sentence in (21a), namely (21b), is not a syntactic constituent structure. Namely, it does not provide syntactic categories, unexpressed copies of displaced constituents, or syntactic asymmetries, including the argument–adjunct asymmetry. The representation in (21b) can be derived with Dependency Grammar or Relational Grammar [114,115], but not with Generative Grammar.

| (21) | a. | The chef who ran to the store was out of food. |

| b. |  |

The rich articulate hierarchical structure provided by GGLMs is central to the interpretation of linguistic expressions. The limitations of artificial neural networks in recovering “emerging linguistic structure” can be attributed to their attempt to generate syntactic structure without access to the interaction of principles intrinsic to language and Principles of Efficient Computation that reduce complexity.

Other works aim to induce dependency and constituency structure at the same time and to transform constituent dependencies (Dependency Grammar) into directed phrase-structure trees (Phrase-Structure Grammar) [116]. The proposed parsing framework can jointly generate a dependency graph and a phrase-structure tree. It integrates the induced dependency relations into the Transformer in a differentiable manner, through a novel dependency-constrained self-attention mechanism. The parsing network assumed takes word embeddings as input and feeds them into several convolution layers.

These studies suggest that transformer-based models can capture certain syntactic patterns through attention mechanisms. However, such syntactic patterns capture regularities for simple structures but do not generalize to more complex structures. The full range of structure-dependent asymmetries that articulate the semantic interpretation of natural language expressions is not recovered by the parsing network. These include head-complement, modifier-head and subject-predicate asymmetries, along with interpreted covert constituents, be they silent copies of displaced constituents or silent pronominal PRO and Pro.

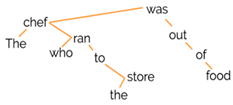

Deep Neural Networks are not pre-programmed to represent partial structural descriptions of linguistic expressions, as observed in [15,117], nor are they pre-programmed to represent complex syntactic structures. Recent research provides evidence that the emergent syntactic representations in neural networks are not robust [118]. Context-free (e.g., GloVe) and, in some cases, context-dependent representations from LLMs (e.g., BERT) are equally brittle to syntax-preserving perturbations. Furthermore, ChatGPT-3.5 and other language models produce substitutions that do not retain the original sentence’s syntactic coherence. Moreover, performance metrics such as accuracy do not measure the quality of the model in terms of its ability to robustly represent complex linguistic structures. For example, recent experimental studies on the processing of relative clause ambiguity in different languages [119] report that LLMs neither handle complex syntactic structure nor achieve human-like comprehension. The results of the experiment indicate that LLMs exhibit a preference for local (low) attachment over non-local (high) attachment, irrespective of language-specific attachment patterns. See (22) from ([118] p. 3484), which presents simplified representations of relative-clause attachment.

| (22) |  |

LLMs’ lack of flexibility in human language processing and comprehension is also observed with ambiguous complex nominal structures, as we show in Section 4. These complex nominals, whose surface properties appear to be simpler than those of relative clauses, also highlight LLMs’ limitations in handling complex structures and human-like interpretations.

3.5. Section Summary

We prompted ChatGPT-3.5, GPT-4o mini, and Grok-3 with representative complex queries including a pronominal in a relative clause and more than one potential antecedent for that pronoun. The replies were not reliable, notwithstanding slight improvements in the program update. We also discussed attempts to induce syntactic structure at the attention layer of Transformers, a point to which we come back in Section 5. In the next section, we identify issues related to LLMs’ evaluation metrics for NLP and propose introducing structure-dependent dimensions to their evaluation. We also discuss representative cases in which at least some LLMs fall short with respect to processing structural ambiguity.

4. Benchmarking ChatGPT

In this section, we focus on current metrics to evaluate the performance of ChatGPT on specific NLP tasks in the light of GGLM’s assumptions on natural language, its acquisition, its parsing, and its interpretation. This section speaks to the recent BIG-Bench suite for measuring the performance of ChatGPT and its successors [120]. We point to shortcomings of current metrics used to assess the performance of LLM-based NLP systems from the viewpoint of GGLMs, which is anchored in the Biolinguistics Program. Firstly, the sample size required to evaluate the performance of an LLM-based chatbot may reach hundreds of thousands of data points. This contrasts with human evaluation of the question–answer pairs. Secondly, attempts to modify GPT prompts in hopes of improving the chatbot’s replies also contrast with human behavior. This can be attributed to the fact that LLMs predict the next word based on the data on which they have been pre-trained. This again contrasts with humans’ biological capacity to generate and process novel expressions without prior training. See [31] on the limits of Benchmarking LLM-based chatbots on different NLP tasks and on the idea that new benchmarks such as Massive Multitask Language Understanding (MMLU) cannot measure machine intelligence.

Standard metrics of performance used in NLP, including ROUGE_1, ROUGE_2, BLEU, BERT.precision, BERT.recall, and BERT_F1, rely on the overlap, in terms of number of words (unigrams, bigrams, and n-grams), between the inference and the ground truth. ROUGE and BLEU measure the similarity in word-matching between the ground truth and the inferences, while BERT scores take into consideration semantic similarity. The greater the value, the greater the similarity. However, the use of similarity metrics to evaluate ChatGPT performance does not measure the performance of the chatbot with respect to its capacity to process the structure-dependent asymmetries underlying linguistic expressions, features that are essential to articulating their semantic interpretation.
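The insensitivity of overlap-based metrics to structure can be illustrated with a deliberately simplified n-gram overlap score. The sketch below is not the official ROUGE or BLEU implementation; the functions `ngrams` and `overlap_f1` and the example sentences are assumptions introduced for illustration only.

```python
from collections import Counter


def ngrams(text: str, n: int) -> Counter:
    """Multiset of word n-grams, using lowercased whitespace tokens."""
    tokens = text.lower().split()
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def overlap_f1(candidate: str, reference: str, n: int = 1) -> float:
    """F1 over clipped n-gram matches between candidate and reference."""
    cand, ref = ngrams(candidate, n), ngrams(reference, n)
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


reference = "the candidate respects the president"
role_swap = "the president respects the candidate"  # subject and object exchanged

print("unigram F1:", overlap_f1(role_swap, reference, n=1))
print("bigram F1: ", overlap_f1(role_swap, reference, n=2))
```

In this toy case, the unigram score is 1.0 and the bigram score is 0.75, although the two sentences assign opposite roles to the candidate and the president. A high overlap score is thus compatible with a reversal of the subject-predicate asymmetry.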

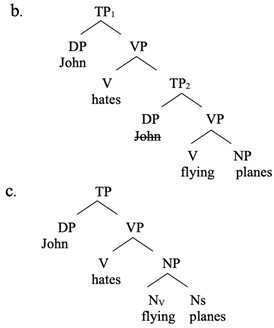

As expected, ChatGPT fails to recover structural ambiguity, the fact that certain linguistic expressions, such as (23a), have more than one semantic interpretation. With GGLMs, each interpretation is based on a different syntactic structure; see the simplified representations in (23b) and (23c). The difference is that in (23b), flying planes is a verbal structure (VP) in an embedded clause (TP2), whereas in (23c), it is a nominal structure (NP) in a root clause (TP).

| (23) | a. John hates flying planes. |

The structural difference between (23b) and (23c) corresponds to a different semantic interpretation. In (23b), flying is analyzed as a gerundive -ing nominal and is de-sentential (TP), whereas in (23c), it shares properties with derived nominals and can be listed in the LEX as a nominal compound. Gerundive nominals (Nv) may refer to event types and have the semantics of events, whereas this is not the case for derived nominals, as the contrast in (24) illustrates.
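The point that one word sequence can correspond to two structures can be sketched with nested tuples. The two bracketings below are simplified approximations based on the description of (23b) and (23c) in the text, not reproductions of the original representations; covert constituents are omitted, and the labels `Mod` and `N` in the nominal analysis are hypothetical.

```python
from typing import List, Tuple, Union

Tree = Tuple[Union[str, tuple], ...]  # (label, child, ...); a child is a Tree or a word


def words(tree: Tree) -> List[str]:
    """Linear yield of a tree."""
    out: List[str] = []
    for child in tree[1:]:
        out.extend([child] if isinstance(child, str) else words(child))
    return out


def labels_for(tree: Tree, span: List[str]) -> List[str]:
    """Labels of all constituents whose yield is exactly span, outermost first."""
    found = [tree[0]] if words(tree) == span else []
    for child in tree[1:]:
        if not isinstance(child, str):
            found.extend(labels_for(child, span))
    return found


# Verbal analysis: "flying planes" is a VP inside an embedded clause (TP2).
verbal = ("TP", ("DP", "John"),
          ("VP", ("V", "hates"),
           ("TP2", ("VP", ("V", "flying"), ("NP", "planes")))))

# Nominal analysis: "flying planes" is an NP in the root clause.
nominal = ("TP", ("DP", "John"),
           ("VP", ("V", "hates"),
            ("NP", ("Mod", "flying"), ("N", "planes"))))

span = ["flying", "planes"]
print(words(verbal) == words(nominal))  # identical word sequence
print(labels_for(verbal, span))
print(labels_for(nominal, span))
```

The two trees have the same yield, so a model operating on the word sequence alone receives identical input for both interpretations; only the constituent labels over flying planes distinguish them.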

| (24) | a. John’s perfectly flying planes surprised everyone. (gerundive nominal) |

| b. John’s private flying planes surprised everyone. (derived nominal) |

Gerundive -ing nominals are formed from propositions, including a subject and a predicate; derived nominals have the internal structure of a nominal compound. In addition, there is an intermediate class of -ing nominals sharing several properties with derived nominals, as discussed in [121]. This is the case for flying planes, as the contrast between (24a) and (24b) illustrates. For example, in (24a), the event nominal interpretation of the complex nominal is overtly manifested by the subject-oriented adverbial modifier perfectly, whereas in (24b), the derived nominal interpretation is overtly manifested by the object-oriented adjectival modifier private. See also [122] for further discussion.

LLMs are trained on very large language data (mainly from the Internet) and are optimized to capture statistical co-occurrence patterns over sequences of tokens (i.e., words and parts thereof) and use Distributional Semantics to access word meaning in texts. The latter is a usage-based model of meaning, according to which the linguistic distribution of lexical items in similar contexts plays a key role in characterizing their semantic behavior. However, Distributional Semantics provides neither the semantics of words themselves nor the semantics of syntactic constituents, which jointly determine the semantics of sentences according to their syntactic arrangement. ChatGPT-3.5 does not seem to rely on Structure Dependency or related Semantic Compositionality, which could account for shortcomings in processing structural ambiguity and supporting flexible interpretation.

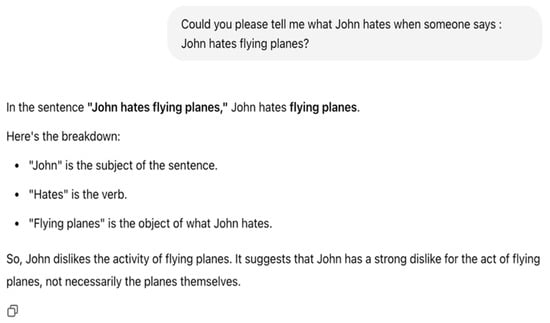

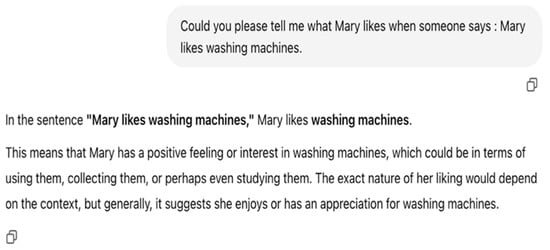

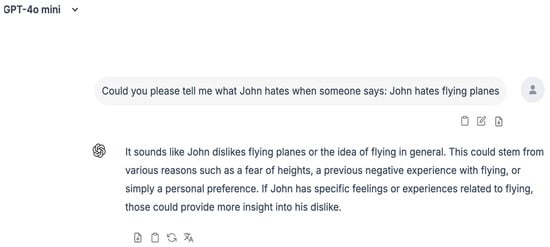

The results of prompting ChatGPT-3.5 with the queries in (Figure 6 and Figure 7) indicate that the program does not provide more than one interpretation in response to a structurally ambiguous prompt. Furthermore, using the same queries with GPT-4o mini does not improve the results; see (Figure 8 and Figure 9). Moreover, while Grok-3’s replies to the same queries are more elaborate, they still provide partial replies to ambiguous prompts; see (Figure 10 and Figure 11).

Figure 6.

ChatGPT-3.5’s reply to the prompt “Could you please tell me what John hates when someone says: John hates flying planes” breaks down the query into sequences of words identified as subject, verb and object. As an LLM, ChatGPT-3.5 computes probability distributions over word sequences to generate the next word. It does not rely on deep semantic properties of the verb “hates”, describing the emotional state of the experiencer “John” and the cause of the emotion. However, the program relies on widespread stereotype biases [123,124] and favors the event-nominal reading in this case, as the last two lines of the program’s reply indicate.

Figure 7.

ChatGPT-3.5’s reply to the prompt “Could you please tell me what Mary likes when someone says: Mary likes washing machines” identifies alternative causes of the emotion experienced by “Mary” brought about by the Psych verb “like”. The program requests additional context but still favors a concrete interpretation over an event-nominal reading for the complex nominal “washing machines”.

Figure 8.

GPT-4o mini’s reply to the prompt “Could you please tell me what John hates when someone says: John hates flying planes” enumerates possible causes for John’s emotion with respect to “flying planes” stemming from the Psych verb “hate” and requests additional information without considering possible event and concrete readings for the complex nominal.

Figure 9.

GPT-4o mini’s reply to the prompt “Could you please tell me what Mary likes when someone says: Mary likes washing machines” provides further possible causes of Mary’s emotion without mentioning possible event or concrete readings for the complex nominal. The narrative of the reply, however, suggests that widespread stereotype biases might be at play.

Figure 10.

Grok 3’s reply to the prompt “Could you please tell me what John hates when someone says: John hates flying planes” indicates that the program selects an event interpretation for the complex nominal and requests additional information from the context. It provides alternative causes for John’s dislike in the form of inferences and asks for additional information. Here, again, the flexible interpretation of “flying planes” is not at play in the reasoning.

Figure 11.

Grok 3’s reply to the prompt “Could you please tell me what Mary likes when someone says: Mary likes washing machines” lists four different causes for the emotional state experienced by Mary. In this case, the program favors a concrete interpretation for the complex nominal “washing machines” in the query including the psychological attitude verb “like”; this interpretation is based on distributional co-occurrence probabilities of the constitutive words of the query, Distributional Semantics, and stereotypes.

The results of prompting ChatGPT-3.5, GPT-4o mini, and Grok 3 with syntactically and semantically complex examples do not indicate sensitivity to the structural ambiguity underlying the flexible interpretation of complex nominals such as “washing machines” and “flying planes”. Structure-dependent principles may provide more reliable NLP systems and add novel dimensions to the evaluation metrics of different tasks, including structural ambiguity detection and pronominal anaphora resolution. Further work is needed to evaluate these results and the implications thereof in a systematic experimental framework, including quantitative data comparing LLM and GGLM performance on targeted NLP tasks.

5. An Apparent Paradox and Potential Paths for Integration from Theory to Applications

The apparent paradox between the theoretical limitations and practical success of LLMs requires clarification. This paradox can be attributed to basic foundational differences between GGLMs and LLMs, encompassing theoretical, methodological and applicative domains. As acknowledged in the previous sections of this paper, there have been recent efforts within the LLM community to incorporate syntactic bias and structural awareness into large-scale models. However, several works, including [117,118], point to the fact that the emergence of structure in LLMs lacks robustness in handling syntactic complexity and does not preserve the integrity of constituent structures, conceived as syntactic patterns.

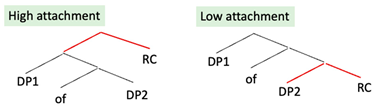

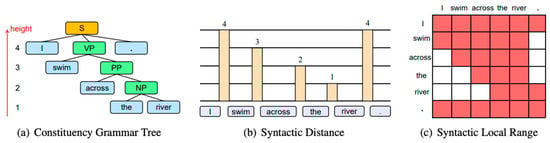

Drawing from the comparison with GGLMs, specific strategies could be operationalized to improve NLP in LLMs. A promising strategy would be to develop a hybrid architecture that integrates a GGLM-based parser in the pipeline and limits the attention mask to the syntactic local ranges of each constituent, as formalized in terms of the asymmetrical c-command relation in GGLMs. Access to a GGLM parser would provide better parsing quality than learning syntactic structures from data, given that reliability also depends on the quality and scale of the training dataset. This strategy would also have the merit of extending the syntax-guided localized self-attention mechanism in Transformers discussed in [125], which is based on simplified Constituency Grammar Trees for simple sentences such as I swim across the river (see Figure 12, from ([124] p. 2335)), to the full-fledged constituency grammar trees generated by GGLMs. The latter have the generative capacity to parse simple and complex sentences, including recursive asymmetrical layers of constituents and their covert constituents.

Figure 12.

(a) The constituency tree for the example sentence “I swim across the river”. (b) Its syntactic distances. (c) The attention mask reflecting the syntactic local ranges of each word. For example, rather than attending to the whole sentence, “across” encourages attention towards swim, the, and river while suppressing the others.
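The idea of restricting attention to a syntactic local range can be sketched as follows. This is a toy construction and does not reproduce the algorithm of the cited work: the tree is hand-written without preterminal nodes, and the `levels` parameter, which sets how many constituents above a word define its local range, is a hypothetical simplification standing in for syntactic distances.

```python
from typing import List, Tuple


def word_ranges(tree) -> List[Tuple[str, list]]:
    """For each word, collect the [start, end) spans of its ancestors,
    outermost first."""
    out: List[Tuple[str, list]] = []

    def walk(node, start: int, path: list) -> int:
        span = [start, start]          # end index is filled in after the children
        here = path + [span]
        pos = start
        for child in node[1:]:
            if isinstance(child, str):
                out.append((child, here))
                pos += 1
            else:
                pos = walk(child, pos, here)
        span[1] = pos
        return pos

    walk(tree, 0, [])
    return out


def local_mask(tree, levels: int = 2):
    """Boolean mask: word i may attend to word j only if j lies inside the
    constituent found `levels` nodes above word i (clamped at the root)."""
    info = word_ranges(tree)
    n = len(info)
    mask = []
    for _, path in info:
        lo, hi = path[max(0, len(path) - levels)]
        mask.append([lo <= j < hi for j in range(n)])
    return [w for w, _ in info], mask


# Hypothetical simplified constituency tree for "I swim across the river".
tree = ("S", "I", ("VP", "swim", ("PP", "across", ("NP", "the", "river"))))

tokens, mask = local_mask(tree, levels=2)
for token, row in zip(tokens, mask):
    print(f"{token:>7} -> {[t for t, allowed in zip(tokens, row) if allowed]}")
```

With `levels=2`, the range of “across” covers swim, across, the, and river and excludes I. Increasing `levels` widens each range toward the full syntactic structure.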

However, limiting the attention map to the syntactic range of each constituent would require extending the localized self-attention mechanism according to GGLM Principles. This includes Principle A of the Binding Theory, at play in pronominal anaphora resolution, along with the core principle of Structure Dependency, including in the detection of structural ambiguity. In the first case, as exemplified in this paper with the candidate who knows the president respects him, the localized self-attention mechanism should be extended to the full syntactic structure. Likewise, the detection of the structural ambiguity of phrasal constituents, such as flying planes, would also require the LLM to extend the localized self-attention mechanism to higher levels of syntactic structure. These include preceding adjunct modifiers and the complete propositional structure that the complex nominal participates in, including the subject, as discussed in Section 4. Furthermore, a compositional semantic processor would be added to the parsing architecture, enabling LLMs to process Coreference relations, and, more broadly, to go beyond Distributional Semantics.

Thus, from a conceptual perspective, the enhancement of LLMs with a hybrid architecture that incorporates GGLM parsers driven by deep syntactic principles, as well as a compositional semantic processor, could enhance LLMs’ performance, improving their NLP capacities across different applications.

6. Overall Summary and Consequences for NLP

The distinction between I-language and E-language was used as a starting point to differentiate GGLMs from LLMs. We identified conceptual, methodological, and computational differences between these models. We focused on the I-language first principle of Structure Dependency, established in GGLMs, and its role in processing naturalistic linguistic expressions that include center embedding in sentences, covert constituents, pronominal Binding vs. Coreference, and structural ambiguity. These expressions are challenging for LLMs. A problem with center embedding in sentences is that it breaks the contiguity of sequences of words. A problem with pronominals is that their antecedents are located outside of their local domain. A problem with structural ambiguity is that a given sequence of words may have multiple interpretations, which cannot be disentangled by distributional semantics. However, interpreted but unexpressed constituents, pronominal Binding and Coreference, and structural ambiguity are processed by GGLM parsers. The emergence of syntactic structure in Intelligent Machines is paving the way for the development of NLP systems in which the semantic compositionality of natural language expressions builds on access to I-language principles that operate in conjunction with Principles Reducing Complexity.

This study provides insights into LLMs’ behaviors based on a very small set of syntactically complex and interpretably flexible representative examples. Further work is needed to run experiments using controlled prompting methods and statistical analysis supporting our preliminary observations. Thorough evaluation requires testing across diverse examples, control for prompt engineering effects, comparison with human performance on the same tasks, and systematic variation of the test cases. Notwithstanding these limitations, our proposal to integrate GGLM-based parsers at the attention layer of LLMs and use GGLM principles as part of LLM benchmarks may lead to more reliable and efficient applications.

Funding

This work was supported by grants to the Major Collaborative Research Initiative on Interface Asymmetry (SSHRC 214-2003-1003) and the Research Program on Dynamic Interfaces III (FQRSC 137253103690), awarded to Anna Maria Di Sciullo, Department of Linguistics at the University of Quebec in Montreal (www.interfaceasymmetry.uqam.ca, www.biolinguistics.uqam.ca, accessed on 9 July 2025).

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Acknowledgments

A preliminary version of this paper was presented at SoMeT 2023. Many thanks to the organizers of this conference for their questions and comments and to the Guest Editorial Team for their invitation to contribute to this special issue on Emergent Technologies. Intelligent Software Methodologies, Tools, and Techniques (Selected Papers from SoMeT 2023). Many thanks to the anonymous reviewers for their comments and suggestions and to Vincent Di Sciullo for lively discussions throughout the writing of this paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Chomsky, N. Three Models for the Description of Language. IEEE Trans. Inf. Theory 1956, 2, 113–124. [Google Scholar] [CrossRef]

- Chomsky, N. Syntactic Structures; Current Issues in Linguistic Theory; Werner Hildebrand: Berlin, Germany, 1957. [Google Scholar]

- Chomsky, N. Aspect of the Theory of Syntax; MIT Press: Cambridge, MA, USA, 1965. [Google Scholar]

- Hebb, D.O. The Organization of Behavior: A Neuropsychological Theory; John Wiley & Sons: New York, NY, USA, 1949. [Google Scholar]

- Rosenblatt, F. The perceptron: A probabilistic model for information storage and organization in the brain. Psychol. Rev. 1958, 65, 386–408. [Google Scholar] [CrossRef] [PubMed]

- Bishop, C.M. Pattern Recognition and Machine Learning; Springer: Berlin/Heidelberg, Germany, 2006; ISBN 0-387-31073-8. [Google Scholar]

- Chomsky, N. Linguistic Contributions to the Study of Mind. In Language and Mind; Harcourt, Brace & World: New York, NY, USA, 1968. [Google Scholar]

- Chomsky, N. Of Minds and Language. Biolinguistics 2007, 1, 9–27. [Google Scholar] [CrossRef]

- Chomsly, N. The Biolinguistic Program: Where Does It Stand Today; MIT: Cambridge, MA, USA, 2008. [Google Scholar]

- Di Sciullo, A.M.; Piattelli-Palmarini, M.; Wexler, K.; Berwick, R.C.; Boeckx, C.; Jenkins, L.; Uriagereka, J.; Stromswold, K.; Lai-Shen Cheng, L.; Heidi Harley, H.; et al. The Biological nature of Human Language. Biolinguistics 2010, 4, 4–34. [Google Scholar] [CrossRef]

- Di Sciullo, A.M.; Jenkins, L. Biolinguistics and the Human Language Faculty. Language 2016, 92, e205–e236. [Google Scholar] [CrossRef]

- Friederici, A. The Brain Basis of Language Processing: From Structure to Function. Physiol. Rev. 2011, 91, 1357–1392. [Google Scholar] [CrossRef] [PubMed]

- Friederici, A. Language in Our Brain; MIT Press: Cambridge, MA, USA, 2017. [Google Scholar]

- Chomsky, N. A Review of B.F. Sinner’s Verbal Behavior. Language 1959, 35, 26–58. [Google Scholar] [CrossRef]

- Lenneberg, E. Biological Foundation of Language; Wiley: New York, NY, USA, 1967. [Google Scholar]

- Berwick, R.C.; Weinberg, A.S. Parsing efficiency, Computational Complexity, and the evaluation of grammatical theories. Linguist. Inq. 1982, 13, 165–191. [Google Scholar]

- Barton, G.E.; Berwick, R.C.; Ristad, E.S. Computational Complexity and Natural Language; MIT Press: Cambridge, MA, USA, 1987. [Google Scholar]

- Berwick, R.C. Natural Language, Computational Complexity, and Generative Capacity. Comput. Artif. Intell. 1989, 8, 423–441. [Google Scholar]

- Di Sciullo, A.M.; Fong, S. Efficient parsing for word structure. In Proceedings of the 6th Natural Language Processing Pacific Rim Symposium (NLPRS-2001), Tokyo, Japan, 27–30 November 2001; pp. 741–748. [Google Scholar]

- Di Sciullo, A.M.; Fong, S. Asymmetry, Zero Morphology and Tractability. Lang. Inf. Comput. 2001, 15, 61–72. [Google Scholar]

- Di Sciullo, A.M.; Fong, S. UG and External Systems; Di Sciullo, A.M., Delmonte, R., Eds.; John Benjamins: Amsterdam, The Netherlands, 2005; pp. 247–268. [Google Scholar]

- Bengio, Y. Artificial Neural Networks and Their Application to Sequence Recognition. Ph.D. Thesis, McGill University, Montreal, QC, Canada, 1991. [Google Scholar]

- Bengio, Y.; Ducharme, R.; Vincent, P.; Jauvin, C. A Neural Probabilistic Language Model. J. Mach. Learn. Res. 2003, 3, 1137–1155. [Google Scholar]

- Bengio, Y. Neural Net Language Models. Scholarpedia 2008, 3, 3881. [Google Scholar] [CrossRef]

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016. [Google Scholar]

- Harris, Z.S. Distributional Structure. WORD 1954, 10, 146–162. [Google Scholar] [CrossRef]

- Harris, Z.S. Mathematical Structures of Language; John Wiley & Sons: New York, NY, USA, 1968. [Google Scholar]

- Harris, Z.S. Language and Information; Columbia University Press: New York, NY, USA, 1988. [Google Scholar]

- Harris, Z.S. A Theory of Language and Information: A Mathematical Approach; Clarendon Press: Oxford, UK, 1991. [Google Scholar]

- Zhao, W.X.; Zhou, K.; Li, J.; Tang, T.; Wang, X.; Hou, Y.; Min, Y.; Zhang, B.; Zhang, J.; Dong, Z.; et al. A Survey of Large Language Models. arXiv 2023, arXiv:2303.18223. [Google Scholar] [PubMed]

- Roitblat, H.L. Algorithms Are Not Enough: Creating General Intelligence; MIT Press: Cambridge, MA, USA, 2020. [Google Scholar]

- Bender, E.M.; Koller, A. Climbing towards NLU: On meaning, form, and understanding in the Age of Data. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 5185–5198. [Google Scholar]

- Bender, E.M.; Gebru, T.; McMillan-Major, A.; Shmitchell, S. On the dangers of stochastic parrots: Can language models be too big? In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, FAccT ’21, Virtual, 3–10 March 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 610–623. [Google Scholar]

- Carta, A.; Cossu, A.; Errica, A.; Bacciu, D. Catastrophic Forgetting in Deep Graph Networks: A Graph Classification Benchmark. Front. Artif. Intell. 2022, 5, 824655. [Google Scholar] [CrossRef] [PubMed]

- Huang, J.; Cui, L.; Wang, A.; Yang, C.; Liao, X.; Song, L.; Yao, J.; Su, J. Mitigating Catastrophic Forgetting in Large Language Models with Self-Synthesized Rehearsal. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Bangkok, Thailand, 11–16 August 2024; Association for Computational Linguistics: Stroudsburg, PA, USA, 2024; pp. 1416–1428. [Google Scholar]

- Chen, L.; Zaharia, M.; Zou, J. How is ChatGPT’s Behavior Changing over Time? arXiv 2023, arXiv:2307.09009. [Google Scholar] [CrossRef]

- Amirizaniani, M.; Yao, J.; Lavergne, A.; Okada, E.S.; Chadha, A.; Roosta, T.; Shah, C. LLM Auditor: Developing a Framework for Auditing Large Language Models Using Human-in-the-Loop. arXiv 2024, arXiv:2402.09346. [Google Scholar]

- Shah, C. From Prompt Engineering to Prompt Science with Human in the Loop. arXiv 2024, arXiv:2401.04122. [Google Scholar] [CrossRef]

- Shah, C.; White, R.W.; Andersen, R.; Buscher, G.; Counts, S.; Das, S.S.S.; Montazer, A.; Ivannan, S.M.C.; Neville, J.; Ni, X.; et al. Using Large Language Models to Generate, Validate and Apply User Intent Taxonomies. In ACM Transactions on the Web; Association for Computing Machinery: New York, NY, USA, 2023. [Google Scholar] [CrossRef]

- Treviso, M.; Lee, J.-U.; Ji, T.; van Aken, B.; Cao, Q.; Ciosici, M.R.; Hassid, M.; Heafield, K.; Hooker, S.; Raffel, C.; Martins, P.H.; et al. Efficient Methods for Natural Language Processing: A Survey. Trans. Assoc. Comput. Linguist. 2023, 11, 826–860. [Google Scholar] [CrossRef]

- Ding, T.; Chen, T.; Zhu, H.; Jiang, J.; Zhong, Y.; Zhou, J.; Wang, G.; Zhu, Z.; Zharkov, I.; Liang, L. The Efficiency Spectrum of Large Language Models: An Algorithmic Survey. arXiv 2024, arXiv:2312.00678. [Google Scholar] [CrossRef]

- Thorp, H.H. ChatGPT is Fun but not an Author. Science 2023, 379, 313. [Google Scholar] [CrossRef] [PubMed]

- Kenney, N.N. A Brief Analysis of the Architecture, Limitations, and Impacts of Chat GPT; Georgia Institute of Technology: Atlanta, GA, USA, 2023. [Google Scholar]

- Bolhuis, J.J.; Crain, S.; Fong, S.; Moro, A. Three Reasons why AI doesn’t Model Human Language. Nature 2024, 627, 289. [Google Scholar] [CrossRef] [PubMed]

- Moro, A.; Greco, M.; Cappa, S.F. Large languages, impossible languages and human brains. Cortex 2023, 167, 82–85. [Google Scholar] [CrossRef] [PubMed]

- Kodner, J.; Payne, S.; Heinz, J. Why Linguistics Will Thrive in the 21st Century: A Reply to Piantadosi. arXiv 2023, arXiv:2308.0322. [Google Scholar]

- Di Sciullo, A.M. Knowledge of Language and knowledge science. In New Trends in Intelligent Software Methodologies, Tools and Techniques; Fujita, H., Herrera-Viedma, E., Eds.; IOS Press: Granada, Spain, 2018; pp. 858–869. [Google Scholar] [CrossRef]

- Di Sciullo, A.M. Knowledge of Language and Natural Language processing. In New Trends in Intelligent Software Methodologies, Tools and Techniques; Fujita, H., Guizzi, G., Eds.; IOS Press: Granada, Spain, 2023. [Google Scholar] [CrossRef]

- Chomsky, N. Knowledge of Language: Its Nature, Origin, and Use; Praeger Publishers: New York, NY, USA, 1986. [Google Scholar]

- Chomsky, N. Lectures on Government and Binding; Foris: Dordrecht, The Netherlands, 1981. [Google Scholar]

- Chomsky, N. The Minimalist Program; MIT Press: Cambridge, MA, USA, 1995. [Google Scholar]

- Chomsky, N. Three factors in language design. Linguist. Inq. 2005, 36, 1–22. [Google Scholar] [CrossRef]

- Chomsky, N. Minimalism: Where are we Now, and Where can we Hope to Go. Gengo Kenkyu 2021, 160, 1–41. [Google Scholar]

- Chomsky, N. The language capacity: Architecture and evolution. Psychon. Bull. Rev. 2017, 24, 200–203. [Google Scholar] [CrossRef] [PubMed]

- Stack, C.H.; Watson, D.G. Pauses and Parsing: Testing the Role of Prosodic Chunking in Sentence Processing. Languages 2023, 8, 157. [Google Scholar] [CrossRef]

- Steedman, M. Surface Structure, Intonation, and Focus. In Natural Language and Speech; Springer: Berlin/Heidelberg, Germany, 1991; pp. 21–38. [Google Scholar]

- Wagner, M.; Watson, D.G. Experimental and theoretical advances in prosody: A review. Lang. Cogn. Process. 2010, 25, 905–945. [Google Scholar] [CrossRef] [PubMed]

- Watson, D.G.; Gibson, E. Intonational phrasing and constituency in language production and comprehension. Stud. Linguist. 2005, 59, 279–300. [Google Scholar] [CrossRef]

- Chomsky, N. Derivation by Phase. In Ken Hale: A Life in Language; Kenstowicz, M., Ed.; MIT Press: Cambridge, MA, USA, 2001; pp. 1–52. [Google Scholar]

- Chomsky, N. On Phases. In Foundational Issues in Linguistic Theory: Essays in Honor of Jean-Roger Vergnaud; Freidin, R., Otero, C.P., Zubizarreta, M.L., Eds.; MIT Press: Cambridge, MA, USA, 2008; pp. 133–166. [Google Scholar]

- Uriagereka, J. Multiple Spell-Out. In Working Minimalism; Epstein, S.D., Hornstein, N., Eds.; MIT Press: Cambridge, MA, USA, 1999; pp. 251–282. [Google Scholar] [CrossRef]

- Uriagereka, J. C-Command. In Spell-Out and the Minimalist Program; OUP: Oxford, UK, 2011; pp. 121–151. [Google Scholar] [CrossRef]

- Stowell, T.A. Origins of Phrase Structure. Ph.D. Thesis, Department of Linguistics and Philosophy, MIT, Cambridge, MA, USA, 1981. [Google Scholar]

- Friedmann, N.; Belletti, A.; Rizzi, L. Growing trees: The acquisition of the left periphery. Glossa 2021, 6, 131. [Google Scholar] [CrossRef]

- Kayne, R. The Antisymmetry of Syntax; MIT Press: Cambridge, MA, USA, 1994. [Google Scholar]

- Huang, C.-T.J. Logical Relations in Chinese and the Theory of Grammar. Ph.D. Dissertation, MIT, Cambridge, MA, USA, 1982. [Google Scholar]

- Huang, C.-T.J. The Syntax of Chinese; Cambridge University Press: Cambridge, UK, 2009. [Google Scholar]

- Nunes, J.; Uriagereka, J. Cyclicity and Extraction Domains. Syntax 2000, 3, 20–43. [Google Scholar] [CrossRef]

- Di Sciullo, A.M.; Paul, I.; Somesfalean, S. The Clause Structure of Extraction Asymmetries. In Asymmetry in Grammar: Volume 1: Syntax and Semantics; Di Sciullo, A.M., Ed.; John Benjamins: Amsterdam, The Netherlands, 2003; pp. 279–299. [Google Scholar] [CrossRef]

- Di Sciullo, A.M. Asymmetry in Morphology; MIT Press: Cambridge, MA, USA, 2005. [Google Scholar]

- Di Sciullo, A.M. Asymmetry and the Language Faculty. Riv. Lett. Spec. Ed. Anniv. Syntactic Struct. 2017, 13, 88–107. [Google Scholar] [CrossRef]

- Di Sciullo, A.M. Maximize Asymmetry, Minimize Externalization, and Interface Asymmetries. In Keynotes from the International Conference on Explanation and Prediction in Linguistics CEP; Kosta, P., Schlund, K., Eds.; Potsdam Linguistic Investigations Recherches Linguistiques: Potsdam, Germany, 2021; pp. 79–104. [Google Scholar]

- Boskovic, Z. Generalized Asymmetry. In Keynotes from the International Conference on Explanation and Prediction in Linguistics: Formalist and Functionalist Approaches; Kosta, P., Schlund, K., Eds.; Peter Lang: Frankfurt am Main, Germany, 2021; pp. 15–77. [Google Scholar]

- Collins, C. Principles of Argument Structure; MIT Press: Cambridge, MA, USA, 2024. [Google Scholar]

- Katz, J.; Postal, P.M. An Integrated Theory of Linguistic Descriptions; MIT Press: Cambridge, MA, USA, 1964. [Google Scholar]

- Pietroski, P.M. Meaning before Truth. In Contextualism in Philosophy: Knowledge, Meaning, and Truth; OUP: Oxford, UK, 2023; pp. 255–302. [Google Scholar]

- Heim, I.; Kratzer, A. Semantics in Generative Grammar; Blackwell Textbooks in Linguistics; Blackwell Publishers: Malden, MA, USA, 1998. [Google Scholar]

- Chomsky, N.; Lasnik, H. Filters and Control. Linguist. Inq. 1977, 8, 425–504. [Google Scholar][Green Version]

- Rizzi, L. Null Objects in Italian and the Theory of pro. Linguist. Inq. 1986, 17, 501–557. [Google Scholar][Green Version]

- Kayne, R.S. Movement and Silence; Oxford University Press: New York, NY, USA, 2005. [Google Scholar]

- Fong, S. Computational Properties of Principle-Based Grammatical Theories. Ph.D. Thesis, MIT, Cambridge, MA, USA, 1991. [Google Scholar]

- Fong, S.; Berwick, R.C. Isolating Cross-linguistic Parsing Complexity with a Principles-and-Parameters Parser: A Case Study of Japanese and English. In COLING Vol.2: The 14th International Conference on Computational Linguistics; Association for Computational Linguistics: Stroudsburg, PA, USA, 1992; pp. 631–637. [Google Scholar]

- Stabler, E. Computational perspectives on minimalism. In Oxford Handbook of Linguistic Minimalism; Boeckx, C., Ed.; Oxford Academic: Oxford, UK, 2011; pp. 617–642. [Google Scholar]

- Berwick, R.; Stabler, E. (Eds.) Minimalist Parsing; Oxford University Press: Oxford, UK, 2019. [Google Scholar]

- Fong, S.; Ginsburg, J. Towards a Minimalist Machine. In Minimalist Parsing; Berwick, R., Stabler, E., Eds.; OUP: Oxford, UK, 2019. [Google Scholar]

- Ginsburg, J.; Fong, S. Combining Linguistic Theory on a Minimalist Machine. In Minimalist Parsing; Digital Press; OUP: Oxford, UK, 2019. [Google Scholar]

- Di Sciullo, A.M. Parsing asymmetries. In Natural Language Processing—NLP 2000; Christodoulakis, D.N., Ed.; Springer Computer Science Press: Berlin/Heidelberg, Germany, 2000; pp. 24–39. [Google Scholar]

- Di Sciullo, A.M.; Gabrini, P.; Batori, C.; Somesfalean, S. Asymmetry, the Grammar, and the Parser, Linguistica e modelli tecnologici di ricerca. In Proceedings of the Atti del XL Congresso Internazionale di Studi della Società Linguistica Italiana, Vercelli, Italy, 21–23 September 2006; pp. 477–494. [Google Scholar]

- Di Sciullo, A.M. Asymmetry Theory and Asymmetry Based Parsing. In Communications in Computer and Information Science; Fujita, H., Guizzi, G., Eds.; Springer: New York, NY, USA, 2015; Volume 532, pp. 252–268. [Google Scholar][Green Version]

- Di Sciullo, A.M. Argument Structure Parsing. In Papers in Natural Language Processing; Ralli, A., Grigoriadou, M., Philokyprou, G., Christodoulakis, D., Eds.; Diavlos Press: Athens, Greece, 2017; pp. 55–77. [Google Scholar]

- Grodzinsky, Y.; Friederici, A. Neuroimaging of Syntax and Syntactic Processing. Curr. Opin. Neurobiol. 2006, 16, 240–246. [Google Scholar] [CrossRef]

- Makuuchi, M.; Bahlmann, J.; Anwander, A.; Friederici, A. Segregating the Core Computational Faculty of Human Language from Working Memory. Proc. Natl. Acad. Sci. USA 2009, 106, 8362–8367. [Google Scholar] [CrossRef] [PubMed]

- Friederici, A.; Bahlmann, J.; Friedrich, R.; Makuuchi, M. The Neural Basis of Recursion and Complex Syntactic Hierarchy. Biolinguistics 2011, 5, 87–104. [Google Scholar] [CrossRef]

- Grodzinsky, Y.; Pieperhoff, P.; Thompson, C. Stable Brain Loci for the Processing of Complex Syntax: A Review of the Current Neuroimaging Evidence. Cortex 2021, 142, 252–271. [Google Scholar] [CrossRef] [PubMed]

- Friederici, A.D.; Weissenborn, J. Mapping Sentence Form Onto Meaning: The Syntax-Semantic Interface. Brain Res. 2007, 1146, 50–58. [Google Scholar] [CrossRef] [PubMed]

- Di Sciullo, A.M.; Bautista, C.A. The Delay of Condition B Effect and Its Absence in Certain Languages. Lang. Speech 2008, 51, 77–100. [Google Scholar] [CrossRef] [PubMed]

- Guasti, M.T. Universal Grammar Approaches to Language Acquisition. In Language Acquisition; Foster-Cohen, S., Ed.; Palgrave Macmillan: London, UK, 2009; pp. 87–108. [Google Scholar]

- Gavarró, A.; Zhu, J. Functional categories in very early acquisition. Linguist. Anal. 2020, 42, 583–600. [Google Scholar]

- Socher, R.; Bengio, Y.; Manning, C. Deep learning for NLP. In Proceedings of the 50th Annual Meeting of the Association for Computational Linguistics: Tutorial Abstracts, Jeju Island, Republic of Korea, 8 July 2012. [Google Scholar]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep Learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef] [PubMed]

- Bahdanau, D.; Cho, K.; Bengio, Y. Neural Machine Translation by Jointly Learning to Align and Translate. arXiv 2014, arXiv:1409.0473. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. arXiv 2017, arXiv:1706.03762. [Google Scholar]