Abstract

While collections of documents are often annotated with hierarchically structured concepts, the benefits of these structures are rarely taken into account by classification techniques. Within this context, hierarchical text classification methods are devised to take advantage of the labels’ organization to boost classification performance. In this work, we aim to deliver an updated overview of the current research in this domain. We begin by defining the task and framing it within the broader text classification area, examining important shared concepts such as text representation. Then, we dive into details regarding the specific task, providing a high-level description of its traditional approaches. We then summarize recently proposed methods, highlighting their main contributions. We also provide statistics for the most commonly used datasets and describe the benefits of using evaluation metrics tailored to hierarchical settings. Finally, a selection of recent proposals is benchmarked against non-hierarchical baselines on five public domain-specific datasets. These datasets, along with our code, are made available for future research.

1. Introduction

Text classification (TC) is one of the most widely researched tasks within the natural language processing (NLP) community [1]. In short, TC methods are supervised learning algorithms whose objective is to map documents (i.e., pieces of text) to a predefined set of labels. The most common classification setting in practice is multiclass, where only one label (i.e., class) can be associated with each document; when there are only two labels to choose from, the task is called binary classification. In contrast, multilabel classification allows every document to be labeled with multiple categories. In either case, TC has many practical applications, such as topic labeling, sentiment analysis, and named entity recognition [2].

From a theoretical point of view, binary classification is the most generic classification scenario, as long as the categories are stochastically independent [3]. If this is the case, a problem over a set of classes $C$ can be translated into $|C|$ independent binary problems, one per class. However, there are many practical scenarios in which this is not the case; hierarchical text classification (HTC) is one of these. HTC is a sub-task of TC, as well as part of the wider hierarchical multilabel classification (HMC). Vens et al. [4] define HMC as a classification task where (i) instances may belong to multiple classes simultaneously, and (ii) the classes are organized within a hierarchy. Thus, as labels do indeed depend on each other (as made explicit by the hierarchy), the simplifying independence assumption cannot be made.
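As a minimal illustration of the decomposition into $|C|$ binary problems (commonly known as binary relevance), the Python sketch below turns multilabel annotations into one target vector per class. The function name and the toy labels are hypothetical.

```python
def binary_relevance_targets(doc_labels, classes):
    """Split multilabel annotations into one independent binary target vector per class."""
    # targets[c][i] is 1 if document i carries label c, else 0
    return {c: [int(c in labels) for labels in doc_labels] for c in classes}

# Hypothetical toy annotations for three documents
classes = ["sports", "tennis", "politics"]
doc_labels = [{"sports", "tennis"}, {"politics"}, {"sports"}]
targets = binary_relevance_targets(doc_labels, classes)
# targets["sports"] -> [1, 0, 1]; targets["tennis"] -> [1, 0, 0]
```

Each vector would then train its own binary classifier, and nothing in this setup prevents a model from predicting “tennis” while rejecting “sports”: this is exactly the kind of dependency that the independence assumption ignores in hierarchical settings.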

1.1. What Is Hierarchical Text Classification?

The distinguishing property of HTC tasks is that their labels are organized in a multi-level hierarchy, in which each label can be seen as a node that may have many children. In such settings, exploiting the hierarchical structure is key to achieving well-performing classification methods.

This type of label organization is quite common; real-world classification datasets often contain a large number of categories explicitly organized into a class hierarchy or taxonomy. Moreover, this arrangement of information can sometimes be found even in corpora for which the hierarchy was not initially devised. For example, a set of documents categorized by their topics can usually be organized within large macro-areas that encompass subsets of related subjects (for instance, the “sports” macro category can contain both “tennis” and “football”). Indeed, hierarchies allow for intuitive modeling of what could instead be complex relationships among labels (such as the is-a and part-of relationships).
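The is-a relationship above can be encoded with a simple parent map. The sketch below assumes a hypothetical toy taxonomy (tree-shaped, one parent per label) and shows two operations that hierarchical methods commonly need: expanding a label set with all of its ancestors, and checking that a predicted set never contains a label without its ancestors.

```python
# Hypothetical toy taxonomy: each label maps to its parent (None marks a top-level label)
PARENT = {"sports": None, "tennis": "sports", "football": "sports", "politics": None}

def ancestors(label, parent=PARENT):
    """Return the chain of ancestors of `label`, closest first."""
    chain = []
    while parent[label] is not None:
        label = parent[label]
        chain.append(label)
    return chain

def close_upwards(labels, parent=PARENT):
    """Add every ancestor of every label (a document about tennis is also about sports)."""
    closed = set(labels)
    for label in labels:
        closed.update(ancestors(label, parent))
    return closed

def is_consistent(labels, parent=PARENT):
    """True if no label appears without all of its ancestors."""
    return all(set(ancestors(label, parent)) <= set(labels) for label in labels)
```

Real taxonomies may be directed acyclic graphs in which a label has several parents; the tree assumption here only keeps the sketch short.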

Thus, thanks to the increased availability of TC datasets that integrate hierarchical structure in their labels, as well as a general interest in industrial applications that utilize TC, a large number of new methods for HTC have been proposed in recent years. Indeed, HTC has many practical applications beyond classic TC, such as International Classification of Diseases (ICD) medical coding [5,6], legal document concept labeling [7], patent labeling [8], IT ticket classification [9], and more.

The strength of these “hierarchical classifiers” comes from their ability to leverage the dependency between labels to boost their classification performance. This is particularly important when considering the wider task of multilabel TC, which, in general, can be quite difficult, and even more so when dealing with large sets of labels that contain many similar or related labels [10]. Moreover, HTC methods tend to exhibit better generalization when faced with new classes compared to their non-hierarchical counterparts. Newly introduced classes are often subcategories of larger, pre-existing macro-categories. Consequently, these hierarchical methods retain some of their knowledge from the parent nodes of these newly introduced categories.

1.2. Related Work

Text classification is one of the most active research areas within NLP, and many surveys and reviews have been published in recent years. These cover a wide range of techniques used in NLP, from traditional methods to the latest deep learning applications [1,2,11,12,13]. However, these works cover the broader TC field and do not cover HTC specifically (or mention it very briefly). In the following paragraphs, we instead present some notable works within the field of HTC. We briefly touch on seminal works that describe this field, while also highlighting recently published reviews that perform an analysis of the existing methods.

One of the first works to directly address HTC is that of Koller and Sahami [14]. The authors already highlight some of the most notable characteristics of HTC, such as the inadequacy of flat classifiers as opposed to their hierarchical counterparts (which we describe in Section 3.1) and the proliferation of topic hierarchies. Sun and Lim [15] similarly address the difficulties tied to utilizing flat approaches in hierarchical settings, as well as discussing the issues related to standard performance metrics. As we will discuss in Section 3.3, classification metrics such as precision, recall, and accuracy assume independence between categories, which might give a skewed representation of a classifier’s real performance (e.g., misclassifying a child node while correctly predicting its parent should be considered better than an entirely incorrect classification). In a subsequent work, Sun et al. [16] propose a specification language to describe hierarchical classification methods to facilitate the description and creation of HTC systems.

More recently, Silla and Freitas [17] give a precise definition of hierarchical classification and propose a unifying framework to categorize this task across different domains (i.e., not limited to text). They provide a comprehensive overview of this research area, including a conceptual and empirical comparison between different hierarchical classification approaches, as well as some remarks on the usage of specialized evaluation metrics. Stein et al. [18], on the other hand, address HTC directly, evaluating traditional and neural models on a hierarchical task. In particular, the authors gauge how different word embedding strategies (which we outline in Section 2.1) affect several HTC methods, measuring the results with both standard and specialized metrics. Like other authors, they also argue that traditional “flat” classification metrics are inadequate in hierarchical settings. Lastly, Defiyanti et al. [19] provide a review of a sub-class of hierarchical methods, namely, global (big-bang) approaches (described in Section 3.1). The authors detail various algorithms using the big-bang approach, mainly focusing on applications in bioinformatics and text classification.

1.3. Contributions

In this work, we propose an analysis of the current research trends related to HTC, performing a systematic search of all papers that have been published in the last 5 years (i.e., between 2019 and 2023). We deem this range effective for analyzing recent work while also providing a way to limit the scope. We collect papers querying the keywords “hierarchical text classification”, “hierarchical multilabel”, and “multilevel classification” in Google Scholar (https://scholar.google.com, accessed on 17 March 2024), PapersWithCode (https://paperswithcode.com, accessed on 17 March 2024), Web of Science (https://webofscience.com, accessed on 17 March 2024), and DBLP (https://dblp.org, accessed on 17 March 2024). We complement our search results by searching with the query “hierarchical AND "text classification"” on Scopus (https://www.scopus.com, accessed on 17 March 2024).

Moreover, in our experimental section, we report the performance of a set of recent proposals, as well as several baselines, on five datasets. Three of these datasets are commonly utilized in the literature, while two of them are newly proposed versions of existing collections. The main contributions of this work can be summarized as follows:

- We provide an extensive review of the state of current research regarding HTC;

- We explore the NLP background of text representation and the various neural architectures being utilized in recent research;

- We analyze HTC specifically, providing an analysis of common approaches to this paradigm and its evaluation measures;

- We summarize a considerable number of recent proposals for HTC, spanning between 2019 and 2023. Among these, we dive deeper into the discussion of several methods and how they approach the task;

- We test a set of baselines and recent proposals on five benchmark HTC datasets that are representative of five different domains of applications;

- We release our code (https://gitlab.com/distration/dsi-nlp-publib/-/tree/main/htc-survey-24, accessed on 17 March 2024) and dataset splits for public usage in research. The datasets are available on Zenodo [20], including two new benchmark datasets derived from existing collections;

- Lastly, we summarize our results and discuss current research challenges in the field.

1.4. Structure of the Article

The rest of this article is organized as follows. Section 2 introduces the main NLP topics and recent advancements relevant to the latest proposals in HTC. Section 3 then dives into the specifics of HTC, describing the various approaches found in the literature and hierarchical evaluation measures. Section 4 summarizes recently proposed methods, analyzing in more detail a subset of them we wish to explore in the experimental section. This section also introduces the most popular datasets utilized in HTC research. The experimental part of this survey begins in Section 5, which outlines the datasets utilized and the methods being benchmarked, as well as a discussion of the results. The manuscript then moves towards its end in Section 6, which briefly explores current research challenges and research directions in the specific field of HTC, and draws its conclusions in Section 7.

2. NLP Background

Given the significant advancements in NLP over the last few years, our discussion begins with an updated overview of the NLP topics that lay the foundation of any HTC method. First, we describe text representation and classification from a generic point of view, highlighting some of the most prominent approaches to the extraction of features from text. Then, to provide sufficient background for recent methods discussed throughout this article, we highlight some of the most notable neural architectures being utilized in the literature as of now.

2.1. Text Representation and Classification

The interpretation of text in a numerical format is the fundamental first step of any application that processes natural language. How text is viewed and represented has changed drastically in the last few decades, moving from relatively simple statistics-based word counts to more semantically and syntactically meaningful vectorized representations [21,22,23]. A richer text representation translates into more meaningful features, which—if utilized adequately—lead to massive improvements in downstream task performance. In this section, we overview the main approaches to text representation, highlighting major milestones and how they differ from one another.

2.1.1. Text Segmentation

First, bodies of text must be segmented into meaningful units, a process called tokenization for historical reasons [10]. The most intuitive unit is the word, though it is far from the only atomic unit of choice for this task. Indeed, segmentation is non-trivial for several reasons. For instance, vocabularies (i.e., the set of unique words in a corpus of documents) might be too large and sparse when segmenting into words. Moreover, some languages do not have explicit word boundary markers (such as the spaces used in English).

This is a vast and interesting topic of its own, and we point readers to the work by Mielke et al. [24] for further information on it. Very briefly, recent methods rely on sub-word tokenization, which broadly operates on the assumption that common words should be kept in the vocabulary as whole tokens, while rarer words should be split into “sub-word” tokens. This yields smaller, denser vocabularies and has been successfully applied in many recent models. Examples of sub-word tokenization approaches include Byte-pair Encoding [25], WordPiece [26], and SentencePiece [27]. Even more recently, some authors have argued for different forms of decomposition, such as ones utilizing the underlying bytes [28], or even visual modeling based on the graphical representation of text [29].
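To make the sub-word idea concrete, here is a minimal sketch of the merge-learning loop commonly used for Byte-pair Encoding in NLP: starting from characters, the most frequent adjacent symbol pair (weighted by word frequency) is merged repeatedly. The `</w>` end-of-word marker, the tie-breaking rule, and the toy word counts are assumptions made for this example; production tokenizers add many details (pre-tokenization, byte fallback, special tokens).

```python
from collections import Counter

def get_pair_counts(vocab):
    """Count adjacent symbol pairs; vocab maps a word (tuple of symbols) to its frequency."""
    counts = Counter()
    for symbols, freq in vocab.items():
        for a, b in zip(symbols, symbols[1:]):
            counts[(a, b)] += freq
    return counts

def merge_pair(pair, vocab):
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged = {}
    for symbols, freq in vocab.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        merged[tuple(out)] = merged.get(tuple(out), 0) + freq
    return merged

def learn_bpe(word_freqs, num_merges):
    # Start from characters plus an (assumed) end-of-word marker
    vocab = {tuple(word) + ("</w>",): f for word, f in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        counts = get_pair_counts(vocab)
        if not counts:
            break
        # Most frequent pair; ties broken by the pair itself so the result is deterministic
        best = max(counts, key=lambda p: (counts[p], p))
        vocab = merge_pair(best, vocab)
        merges.append(best)
    return merges, vocab

merges, vocab = learn_bpe({"low": 5, "lower": 2, "newest": 6, "widest": 3}, num_merges=3)
```

With these counts, the three merges fold the frequent suffix “est” (plus the end marker) into a single token, so “newest” and “widest” share it while rarer character sequences stay split.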

2.1.2. Weighted Word Counts

Some of the more traditional and widely utilized approaches in the past are based on word occurrence statistics, effectively ignoring sentence structure and word semantics entirely. The most common example is that of the Bag-Of-Words (BoW) representation; in it, documents are simply represented as a count of their composing words, which are then often weighted with normalizing terms such as the well-studied Term Frequency-Inverse Document Frequency (TF-IDF) [21]. Briefly, TF-IDF measures word relevancy by weighting a word positively by how frequently it appears in a document (TF), but also negatively by how many documents in the corpus contain it (IDF). This way, words that frequently appear in a document are highlighted, but only if they do not appear in many other documents as well (as this negates their discriminative power).
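A minimal TF-IDF sketch follows. Many weighting variants exist; this one assumes whitespace tokenization, raw term counts for TF, and the unsmoothed $\log(N/\mathrm{df}(t))$ for IDF, where $N$ is the number of documents and $\mathrm{df}(t)$ the number of documents containing term $t$.

```python
import math
from collections import Counter

def tf_idf(docs):
    """Return one {term: weight} dict per document (raw TF times unsmoothed log IDF)."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    df = Counter()
    for tokens in tokenized:
        df.update(set(tokens))              # document frequency: count each term once per doc
    weights = []
    for tokens in tokenized:
        tf = Counter(tokens)                # raw term frequency within this document
        weights.append({t: c * math.log(n_docs / df[t]) for t, c in tf.items()})
    return weights

docs = ["the match ended in a draw", "the tennis match was long", "the election results"]
weights = tf_idf(docs)
```

Because “the” occurs in every document, its IDF is $\log 1 = 0$ and it receives no weight, while “tennis”, which occurs in a single document, gets a weight of $\log 3$, higher than that of “match”, which two documents share.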

2.1.3. Word Embeddings

Weighted word counts such as TF-IDF weighted BoW fail to capture any type of semantic and syntactic property of text. A major milestone towards more meaningful representations is the development of word embeddings, initially popularized by works such as Word2Vec [30,31] and GloVe [22]. These vectorial representations of text are learned through unsupervised language modeling tasks; briefly, language modeling refers to building statistical models that predict words from their context, and it has been studied for many decades [32]. The resulting language models (LMs) are useful in a variety of tasks, one of which is indeed the extraction of meaningful word and sentence representations.

The LMs utilized to develop word embeddings are based on shallow neural networks pre-trained on massive corpora of documents, allowing them to develop meaningful vectorial representations for words. The underlying semantic properties of these representations have often been exemplified through vector arithmetic operations, such as the classic example of $v_{\text{king}} - v_{\text{man}} + v_{\text{woman}} \approx v_{\text{queen}}$. At a practical level, these vectors (i.e., word embeddings) can then be utilized as input features for downstream algorithms (e.g., classifiers), leading to vast improvements in terms of performance.
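The vector-arithmetic example can be reproduced with a nearest-neighbor search by cosine similarity. The 3-dimensional vectors below are hand-picked toy values (not learned embeddings) chosen so that the analogy holds; with real embeddings such as Word2Vec or GloVe the result is only approximate, and the query words are usually excluded from the candidates, as done here.

```python
import numpy as np

# Hand-picked toy vectors (NOT learned embeddings); dimensions loosely read as
# [royalty, male, female] purely for illustration
emb = {
    "king":  np.array([0.9, 0.8, 0.1]),
    "queen": np.array([0.9, 0.1, 0.8]),
    "man":   np.array([0.1, 0.8, 0.1]),
    "woman": np.array([0.1, 0.1, 0.8]),
    "apple": np.array([0.0, 0.3, 0.3]),
}

def cosine(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def analogy(a, b, c, emb):
    """Return the word closest to emb[a] - emb[b] + emb[c], excluding a, b, and c."""
    target = emb[a] - emb[b] + emb[c]
    candidates = [w for w in emb if w not in {a, b, c}]
    return max(candidates, key=lambda w: cosine(emb[w], target))

answer = analogy("king", "man", "woman", emb)
```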

2.1.4. Contextualized Language Models

Word embeddings have sometimes been described as “static”, as their early iterations produced representations that were unable to disambiguate the meaning of a word with multiple meanings (i.e., polysemous words) [1]. An embedding for such a word, then, would be an average of its multiple meanings, leading to an inevitable loss of information. While much research has been dedicated to the addition of context to word embeddings (with notable results such as ELMo [33]), the introduction of the Transformer architecture [23] (see Section 2.2.3) was certainly the most pivotal moment towards the development of contextualized LMs.

In layman’s terms, these models contextualize word embeddings by studying their surrounding words in a sentence (the “context”) [34,35,36,37,38]. A notable difference between Transformer-based LMs and previous approaches is the lack of recurrence in their architectures, which instead use the attention mechanism [39,40] as their main component. This was a drastic change, considering that recurrent neural networks (RNNs) [41,42] were the go-to approach for text interpretation before the introduction of purely attention-based models, as they are particularly effective when dealing with sequential data. However, Transformers are much more parallelizable and have also been shown to scale positively with increased network depth, something that is not true for RNNs [43]. Therefore, larger and larger Transformer-based LMs can be built (both in terms of training data and model parameters), a practice that has seen widespread use in the most recent literature. Many recent models are now referred to as large language models (LLMs) because of the massive number of parameters they contain. Examples of this trend include GPT-3 (175 billion parameters) [38], LLaMA (65 billion parameters) [44], GShard (600 billion parameters) [45], and Switch-C (1.6 trillion parameters) [46]. More recently, some LLMs have been called foundation LMs because their broad few-shot capabilities allow them to be adapted to several tasks [47]. Within this context, researchers have been active in proposing LLMs for HTC tasks [48].

2.1.5. Classification

In terms of classification, traditional text representations such as TF-IDF have been widely utilized as input features for traditional classification methods such as decision trees [49,50], support vector machines [51,52], and probabilistic graphical models (e.g., naive Bayes and hidden Markov models) [53,54]. Word embeddings have also been paired with these classifiers, but they have seen much greater use with specialized neural network architectures such as convolutional neural networks (CNNs) [55,56,57,58] and RNNs [59,60,61]. Transformer-based LMs such as the Bidirectional Encoder Representations from Transformers (BERT) [34] and Generative Pre-trained Transformer (GPT) [36], on the other hand, have showcased outstanding classification results by passing the contextualized embeddings through a simple feed-forward layer. The ease of adaptation of these models has highlighted the importance of developing well-crafted representations for text. For a more in-depth description of classification approaches in NLP, we refer readers to Gasparetto et al. [1].

2.2. Notable Neural Architectures

Much of the progress achieved in the development of meaningful text representation is attributable to neural networks, and deep learning in particular. While earlier approaches discussed (such as Word2Vec and GloVe) were initially based on shallow multilayer perceptrons [22,30], better results were later obtained with larger and deeper networks (i.e., more layers, more parameters). In this section, we briefly overview some of the most influential neural architectures and mention notable applications in the field of text representation.

2.2.1. Recurrent Neural Networks

RNNs [41] are networks particularly well-suited to environments that utilize sequential data, such as text or time series. This particular architecture allows these networks to retain a certain amount of “memory”, making them able to extract latent relationships between elements within sequences. In practice, this is performed by utilizing information from prior inputs to influence the current input and output of the network.

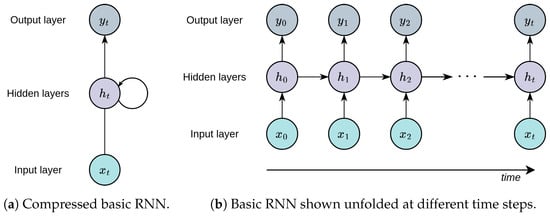

Simple RNNs for text processing are fed a sequence of word embeddings, which are processed sequentially. At each time step, the network receives both the next word vector and the hidden state of the previous time step (Figure 1). Unfortunately, because of their structure, standard RNN architectures are vulnerable to gradient-related issues, such as vanishing and exploding gradients [62]. In response to this issue, these architectures are frequently enhanced with gating mechanisms, the most popular being long short-term memory (LSTM) [63] and gated recurrent unit (GRU) [64]. Briefly, these gates allow the network to control which and how much information to retain, so as to enable the modeling of long-term dependencies. RNNs that utilize these gates are often referred to by the acronym of the gate itself, i.e., LSTM networks and GRU networks.

Figure 1.

Exemplification of a simple RNN structure.

A simple RNN [65] can be defined as in the equation below, where $h_t$ and $x_t$ are the hidden state and input vector at time $t$, respectively, while $W_h$ and $W_x$ are learnable weight matrices:

$$h_t = \sigma\left(W_h h_{t-1} + W_x x_t + b\right) \tag{1}$$

Here, $\sigma$ is an activation function, typically tanh or Rectified Linear Unit (ReLU), and $b$ is a bias term. The output at each time step is usually derived from the hidden state of the last RNN layer at that time step, for instance, through a learnable output matrix $W_y$:

$$y_t = W_y h_t \tag{2}$$
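The simple RNN recurrence above can be written directly in NumPy. This is a sketch for a single layer with $\sigma = \tanh$ and random, untrained weights; the parameter names mirror the symbols in the text and the sizes are arbitrary.

```python
import numpy as np

def rnn_forward(xs, W_h, W_x, b, W_y):
    """Simple RNN: h_t = tanh(W_h h_{t-1} + W_x x_t + b), y_t = W_y h_t."""
    h = np.zeros(W_h.shape[0])                 # h_0: initial hidden state
    hidden, outputs = [], []
    for x in xs:                               # tokens are consumed strictly in order
        h = np.tanh(W_h @ h + W_x @ x + b)     # new state mixes previous state and current input
        hidden.append(h)
        outputs.append(W_y @ h)                # per-step output computed from the hidden state
    return np.stack(hidden), np.stack(outputs)

rng = np.random.default_rng(0)
d_in, d_h, d_out, T = 4, 3, 2, 5
params = (rng.normal(size=(d_h, d_h)), rng.normal(size=(d_h, d_in)),
          np.zeros(d_h), rng.normal(size=(d_out, d_h)))
xs = rng.normal(size=(T, d_in))                # stand-in for 5 word embeddings
H, Y = rnn_forward(xs, *params)
```

The explicit Python loop is the point of the sketch: each step needs the previous hidden state, which is why RNNs are hard to parallelize across time steps.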

In the context of text representation, RNNs based on encoder–decoder architectures have been widely utilized to extract meaningful textual representations [66]. Briefly, an encoder–decoder structure can be understood as an architecture by which inputs are mapped to a compressed yet meaningful representation (contained in the hidden states between encoder and decoder). This representation should ideally capture the most relevant features of the input and can then be decoded for a variety of different tasks (e.g., translation). Autoencoders [67] are a particular class of encoder–decoder network trained to reconstruct their own input; they are particularly useful in creating efficient representations of unlabeled data.

In this context, the hidden states between the encoder and the decoder make for a semantically meaningful and compact representation of input words. The introduction of bidirectionality (processing the sequence both left-to-right and right-to-left) in RNNs has also proved beneficial and has been used to achieve notable results, such as the aforementioned ELMo [33], a bidirectional LSTM-based language model that marked one of the first milestones towards the development of contextualized word embeddings. Nonetheless, RNNs have inherent limitations because they process data sequentially, which makes them ill-suited for parallelization. Furthermore, despite the improvements introduced by LSTMs and GRUs, RNNs still struggle with long sequences because of memory constraints and their tendency to forget earlier parts of the sequence [62].

2.2.2. Convolutional Neural Networks

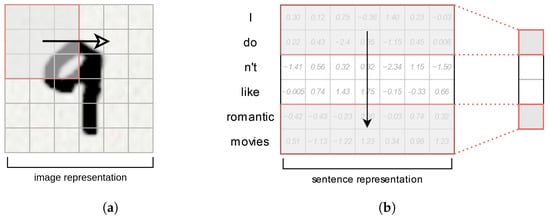

CNNs [68] are well-known neural architectures originally devised for computer vision applications. However, these networks have since been extended to other fields, achieving excellent results in NLP tasks as well [55,58]. The core structural element of CNNs is the convolutional layer, which applies a feature detector (kernel or filter) across subsets of the input (i.e., the convolution operation) to extract features. While this has a more intuitive interpretation in computer vision (where the filter moves across the image to search for features), the same reasoning can be applied to text. Intuitively, convolution as applied to images can be thought of as computing, for each pixel, a weighted sum of the values in its neighborhood; the general idea of the process is outlined in Figure 2a. If we consider a vectorial representation of text (i.e., word embeddings), applying a filter as wide as the embedding size (a common approach) allows us to search for features across groups of consecutive words in the sentence, as shown in Figure 2b. CNNs often use pooling operators (such as max or average) to reduce the size of the learned feature maps, as well as to summarize information.

Figure 2.

Exemplification of the application of convolutional filters in images and text. (a) A convolutional filter (top left) sliding across a digit from the MNIST dataset [69]. (b) A convolutional filter sliding across the vectorial representation of a sentence. Here, the filter is sliding in the conventional reading direction.
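The text case in Figure 2b can be sketched as a 1D convolution whose filters span $k$ consecutive words and the full embedding width, followed by max-over-time pooling. The function names, the ReLU nonlinearity, and the random weights are illustrative assumptions.

```python
import numpy as np

def text_conv1d(E, filters, bias):
    """E: (n_words, d) embeddings; filters: (n_filters, k, d), each as wide as the embedding."""
    n, d = E.shape
    n_f, k, d_f = filters.shape
    assert d_f == d, "each filter spans the full embedding width"
    feature_maps = np.empty((n_f, n - k + 1))
    for i in range(n - k + 1):
        window = E[i:i + k]                    # k consecutive word vectors
        # Elementwise product with each filter, summed over words and embedding dims
        feature_maps[:, i] = np.tensordot(filters, window, axes=([1, 2], [0, 1])) + bias
    return np.maximum(feature_maps, 0.0)       # ReLU

def max_over_time(feature_maps):
    """Keep the strongest response of each filter along the sentence."""
    return feature_maps.max(axis=1)

rng = np.random.default_rng(0)
E = rng.normal(size=(7, 5))                    # 7 words, 5-dimensional embeddings
filters = rng.normal(size=(3, 2, 5))           # 3 filters covering 2 words each
fmap = text_conv1d(E, filters, np.zeros(3))
pooled = max_over_time(fmap)
```

Each filter yields one feature map of length $n - k + 1$, and max pooling keeps its strongest response, so the pooled vector has one entry per filter regardless of sentence length.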

Various works have tested the efficacy of CNNs, especially as feature extractors on word embeddings [58]. An obvious upside of these architectures is their speed (as they are much more parallelizable than RNNs). Thus, CNNs produce efficient yet effective latent representations that can be used to solve a variety of tasks (e.g., classification). Recent works have also revitalized interest in CNNs for NLP by introducing temporal CNNs, which extend CNNs so that they capture high-level temporal information [70,71].

2.2.3. Transformer Networks and the Attention Mechanism

As previously mentioned, one of the most influential neural architectures introduced in recent years—especially in terms of text processing—is the Transformer architecture [23]. The foundational framework is that of an encoder–decoder with a variable number of encoder and decoder blocks. In Section 2.1.4, we briefly touched upon the main innovation introduced by Transformers, which is the complete lack of recurrence as a learning mechanism. Instead, Transformers model context dependency between words entirely through the attention mechanism [39,40], which is outlined in the remaining part of this section.

Attention is, in essence, a weighting strategy devised to learn how different components contribute to a result. It was initially proposed in the machine translation domain [39] as an alignment mechanism that matched each word of the output (translated sentence) to the respective words in the input sequence (original sentence). The rationale behind this is that, when translating a sentence, a good translation can only be obtained by looking at the context of words and paying attention to specific words.

Vaswani et al. [23] used this mechanism in the Transformer architecture to allow the model to process all input tokens simultaneously, rather than sequentially, as was the case in previous recurrent networks. Input sequences are fed to the Transformer encoder at the same time, and a positional encoding scheme is used in the first layers of the encoder and decoder to inject ordering information into the word embeddings. This ensures that word order is not lost, e.g., that the same word appearing at two different positions in a sentence receives a different input representation. Then, in all the remaining layers, Transformers use self-attention layers to learn dependencies between tokens. The layers are “self”-attentive, as each token pays attention to every other token in a sentence, which is the main learning mechanism that allows for the disposal of recurrence. Indeed, since tokens in the input sequence are processed simultaneously, the encoders can look at surrounding tokens in the same sentence and produce context-dependent token representations [1].
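One concrete positional encoding scheme is the sinusoidal one proposed by Vaswani et al., where even dimensions use $\sin(pos/10000^{2i/d})$ and odd dimensions the matching cosine. The sketch below assumes an even model dimension $d$; learned positional embeddings are a common alternative.

```python
import numpy as np

def sinusoidal_positional_encoding(n_positions, d_model):
    """Return an (n_positions, d_model) matrix; row `pos` is added to the embedding at `pos`."""
    assert d_model % 2 == 0, "this sketch assumes an even model dimension"
    positions = np.arange(n_positions)[:, None]        # (n, 1)
    dims = np.arange(0, d_model, 2)[None, :]           # even dimension indices 2i
    angles = positions / np.power(10000.0, dims / d_model)
    pe = np.zeros((n_positions, d_model))
    pe[:, 0::2] = np.sin(angles)                       # even dimensions
    pe[:, 1::2] = np.cos(angles)                       # odd dimensions
    return pe

pe = sinusoidal_positional_encoding(10, 8)
```

Adding row `pos` of this matrix to a word embedding gives the same word different inputs at different positions, which is the property described in the paragraph above.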

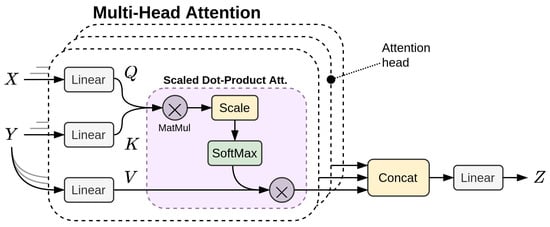

Running several self-attention heads in parallel and combining their outputs produces a multi-head attention (MHA) layer, whose structure is shown in Figure 3. Vaswani et al. [23] argue that having multiple attention heads in the layer enables the model to pay attention to different information in distinct feature spaces and at different positions. Within each head, the input sequences are projected with linear transformations into three different representations, which the authors name $Q$ (queries), $K$ (keys), and $V$ (values), following the naming convention used in information retrieval (as in Equation (3)):

$$Q = X_Q W^Q, \qquad K = X_{KV} W^K, \qquad V = X_{KV} W^V \tag{3}$$

where $W^Q$, $W^K$, and $W^V$ are the learned weight matrices, $X_Q$ is the sequence from which the queries are computed, and $X_{KV}$ is the sequence from which the keys and values are computed. In the Transformer architecture, $K$ and $V$ are always generated from the same sequence (as we will discuss, $Q$ is used differently in the encoder and decoder parts). The transformed sequences are then used to compute the scaled dot-product attention, as follows:

$$\operatorname{Attention}(Q, K, V) = \operatorname{softmax}\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V \tag{4}$$

where $d_k$ is the dimension of the keys.

Figure 3.

The multi-head attention layer used in the Transformer architecture.

While many definitions of attention exist in the literature, the authors decided to use the scaled dot-product, mainly for efficiency reasons. The product of the query and key matrices is scaled by $1/\sqrt{d_k}$ to improve the stability of the gradient computation. At an intuitive level, we may envision a word, represented by its query $q$, as being queried for similarity/relatedness against the keys $K$, finally obtaining the relevant word representation by multiplying the resulting weights by $V$.
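The scaled dot-product attention described above fits in a few lines of NumPy. This sketch handles a single head without masking or batching; subtracting the row maximum before exponentiating is a standard numerical-stability trick that leaves the softmax unchanged.

```python
import numpy as np

def scaled_dot_product_attention(Q, K, V):
    """Q: (n_q, d_k), K: (n_k, d_k), V: (n_k, d_v) -> output (n_q, d_v) and weights (n_q, n_k)."""
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)                  # query-key similarities, scaled by 1/sqrt(d_k)
    scores -= scores.max(axis=-1, keepdims=True)     # stability; softmax is shift-invariant
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)   # row-wise softmax: each query's weights sum to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
Q, K, V = rng.normal(size=(3, 4)), rng.normal(size=(5, 4)), rng.normal(size=(5, 2))
out, weights = scaled_dot_product_attention(Q, K, V)
```

Each output row is a convex combination of the value vectors, weighted by how well that query matches each key.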

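The final result of the MHA layer is the concatenation of all $h$ heads multiplied by the matrix $W^O$, which reduces the output to the desired dimension $d_{\text{model}}$:

$$\operatorname{MHA} = \operatorname{Concat}\left(\mathrm{head}_1, \ldots, \mathrm{head}_h\right) W^O, \qquad \mathrm{head}_i = \operatorname{Attention}\left(Q_i, K_i, V_i\right) \tag{5}$$

where $Q_i$, $K_i$, and $V_i$ are computed as in Equation (3) with the $i$-th head's own projection matrices.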
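A multi-head layer runs one scaled dot-product attention per head, each with its own projections, then concatenates the heads and applies $W^O$. The sketch assumes $d_k = d_v = d_{\text{model}}/h$, stores the per-head matrices in Python lists, and uses random untrained weights; the argument names `X_q` and `X_kv` (query sequence and key/value sequence) are our own. Real implementations fuse the heads into batched tensor operations.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))    # subtract row max for stability
    return e / e.sum(axis=-1, keepdims=True)

def multi_head_attention(X_q, X_kv, W_Q, W_K, W_V, W_O):
    """W_Q, W_K, W_V: lists holding one projection matrix per head; W_O: (h * d_v, d_model)."""
    heads = []
    for Wq, Wk, Wv in zip(W_Q, W_K, W_V):
        Q, K, V = X_q @ Wq, X_kv @ Wk, X_kv @ Wv       # per-head linear projections
        A = softmax(Q @ K.T / np.sqrt(K.shape[-1]))    # scaled dot-product weights
        heads.append(A @ V)                            # (n_q, d_v)
    return np.concatenate(heads, axis=-1) @ W_O        # concatenate heads, project to d_model

rng = np.random.default_rng(0)
n, d_model, h = 6, 8, 2
d_k = d_model // h
X = rng.normal(size=(n, d_model))
W_Q = [rng.normal(size=(d_model, d_k)) for _ in range(h)]
W_K = [rng.normal(size=(d_model, d_k)) for _ in range(h)]
W_V = [rng.normal(size=(d_model, d_k)) for _ in range(h)]
W_O = rng.normal(size=(h * d_k, d_model))
out = multi_head_attention(X, X, W_Q, W_K, W_V, W_O)   # self-attention: X_q = X_kv = X
```

Passing a different sequence as `X_kv` turns the same function into the encoder–decoder attention used in decoder blocks.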

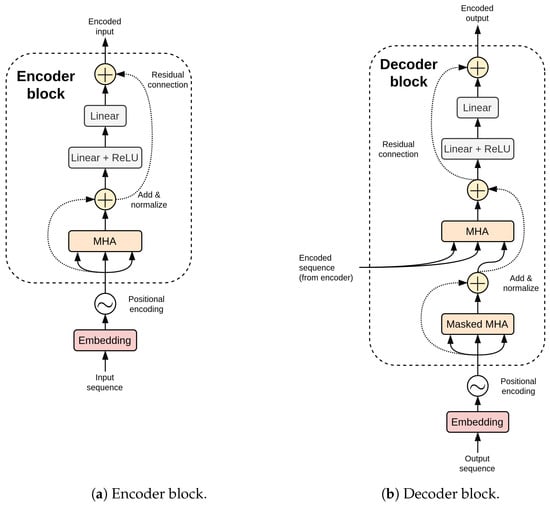

In encoder blocks, $Q$, $K$, and $V$ are all generated by the input sequence and have the same size; the general structure of an encoder block can be seen in Figure 4a. In decoder blocks, $Q$ comes from the previous decoder layer, while $K$ and $V$ come from the output of the associated encoder. These blocks structurally differ from encoders in the presence of another MHA layer, which precedes the standard one and is introduced to mask future tokens in a sentence (Figure 4b). This allows the decoder to be “autoregressive” during training, as it otherwise would trivially look forward in a sentence to obtain the result.

Figure 4.

Transformer encoder–decoder architecture.
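The masking in the decoder's self-attention can be sketched by setting the scores of future positions to $-\infty$ before the softmax, so that their weights become exactly zero. This is a single-head sketch with random weights and hypothetical names.

```python
import numpy as np

def causal_self_attention(X, W_q, W_k, W_v):
    """Masked (decoder-style) self-attention: position i attends only to positions <= i."""
    n = X.shape[0]
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    scores = Q @ K.T / np.sqrt(K.shape[-1])
    future = np.triu(np.ones((n, n), dtype=bool), k=1)   # True strictly above the diagonal
    scores = np.where(future, -np.inf, scores)           # exp(-inf) = 0 after the softmax
    weights = np.exp(scores - scores.max(axis=-1, keepdims=True))
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ V, weights

rng = np.random.default_rng(0)
X = rng.normal(size=(5, 4))
W_q, W_k, W_v = (rng.normal(size=(4, 4)) for _ in range(3))
out, weights = causal_self_attention(X, W_q, W_k, W_v)
```

A useful consequence, easy to check, is that the outputs for the first positions do not change when later tokens are appended, which keeps left-to-right generation consistent with training.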

With the widespread usage of Transformer blocks with MHA, researchers have studied the features extracted at each layer. Interestingly, some of these works found that encoding blocks at different depths may focus on linguistic features at different levels: syntactic features are mostly extracted in the first blocks, while deeper layers progressively focus on semantic features [72,73]. This “layer specialization” phenomenon suggests that stacking attention layers creates expressive representations that combine syntactic and semantic information.

The attention mechanism served as the basis of various derived attention schemes. For example, in the TC (including HTC) domain, hierarchical attention networks (HANs), proposed by Yang et al. [74], have been widely used [75,76,77]. Their idea is to apply attention hierarchically at different levels of granularity. In many applications, the mechanism is first applied to words to produce more informative sentence representations, and then to sentences to produce better document representations. This method adapts particularly well to long texts, since it mirrors the hierarchical structure of written documents, in which words form sentences and sentences form documents.
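The two-level pooling of HANs can be sketched as follows, following the word/sentence attention formulation of Yang et al.: each element's vector is passed through a one-layer MLP, scored against a learned context vector $u$, and the softmax-weighted sum becomes the next-level representation. For brevity, this sketch omits the bidirectional GRU encoders that HANs apply before each attention step and uses random weights; all names are hypothetical.

```python
import numpy as np

def attention_pool(H, W, b, u):
    """HAN-style attention pooling: H is (n, d); returns a (d,) summary and the weights."""
    U = np.tanh(H @ W.T + b)              # one-layer MLP representation of each element
    scores = U @ u                        # similarity with the learned context vector u
    alpha = np.exp(scores - scores.max())
    alpha /= alpha.sum()                  # attention weights over the elements
    return alpha @ H, alpha

def han_document_vector(doc, word_params, sent_params):
    """doc: list of sentences, each an (n_words, d) array of (already encoded) word vectors."""
    sent_vecs = np.stack([attention_pool(S, *word_params)[0] for S in doc])  # words -> sentences
    return attention_pool(sent_vecs, *sent_params)                           # sentences -> document

rng = np.random.default_rng(0)
d, d_a = 5, 3
word_params = (rng.normal(size=(d_a, d)), np.zeros(d_a), rng.normal(size=d_a))
sent_params = (rng.normal(size=(d_a, d)), np.zeros(d_a), rng.normal(size=d_a))
doc = [rng.normal(size=(k, d)) for k in (4, 6, 2)]   # 3 sentences of 4, 6, and 2 words
doc_vec, sent_alpha = han_document_vector(doc, word_params, sent_params)
```

The sentence-level weights `sent_alpha` indicate which sentences the document vector relies on most, which is one reason HANs are considered comparatively interpretable.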

2.2.4. Graph Neural Networks

The ubiquity of graph structures in most domains has sparked much interest in the application of neural networks directly to graph representations. In the NLP domain, a body of text can be represented as a graph of words, where connections represent relations that are potentially semantic or grammatical. As a simple example, a sentence could be represented by word nodes, and adjacent words in the sentence would be linked by an edge. Entire documents can also be linked together in a graph, for instance, in citation networks, or simply considering their relatedness [1,78].
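The simple sentence graph described above (word nodes, edges between adjacent words) can be built with a dictionary of neighbor sets. This sketch assumes whitespace tokenization, undirected edges, and one node per distinct word, so repeated words share a node; other designs (e.g., sliding co-occurrence windows, or one node per token occurrence) are equally common.

```python
from collections import defaultdict

def adjacency_graph(sentence):
    """Map each distinct word to the set of words it appears next to."""
    tokens = sentence.lower().split()
    graph = defaultdict(set)
    for a, b in zip(tokens, tokens[1:]):
        if a != b:                 # skip self-loops from repeated adjacent words
            graph[a].add(b)        # undirected edge: store it in both directions
            graph[b].add(a)
    return dict(graph)

g = adjacency_graph("the cat sat on the mat")
```

Because both occurrences of “the” map to one node, that node collects the neighbors of both positions.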

In the particular context of hierarchical classification, graphs of labels have often been used to propagate hierarchy information between connected labels, with connections usually representing parent–child relations.

Message Passing

The principle behind graph processing models is generalized by the message passing neural network [79]. In this model, a message passing phase, lasting $T$ time steps, is used to update node and edge representations by propagating information along edges. First, for a graph $G = (V, E)$, the message $m_u^{t+1}$ at time $t+1$ is computed for each node $u \in V$, depending on the previous values of the nodes $h^t$ and edges $e$. Therefore, for a node $u$,

$$m_u^{t+1} = \sum_{v \in N(u)} M_t\left(h_u^t, h_v^t, e_{uv}\right) \tag{6}$$

where $M_t$ is a function learned by a neural network, the function $N(u)$ gives the neighbor nodes of $u$, and $h$ are node embeddings (representations). Equation (6) uses the sum operation to aggregate the multiple messages coming from the neighbors of $u$, although it could be replaced with other permutation-invariant operations, such as the average or minimum. Once the messages have been computed, the node embeddings are updated using an update function $U_t$, which is also learned by a neural network:

$$h_u^{t+1} = U_t\left(h_u^t, m_u^{t+1}\right) \tag{7}$$

Analogously, Equations (6) and (7) could be adapted to compute the message starting from neighbor edges instead of nodes and to update the edge representation accordingly. Finally, in the readout phase, values from all nodes are aggregated by summing them together and the output is used for a graph-level classification task.
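A minimal sketch of Equations (6) and (7) on a toy undirected graph is shown below. The learned functions $M_t$ and $U_t$ are replaced by single random linear layers (with ReLU and tanh activations, respectively) shared across time steps, edge features $e_{uv}$ are omitted, and the readout is the plain sum described above; all of these are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 4
# Undirected toy graph given as adjacency lists: node -> neighbors.
neighbors = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3], 3: [2]}
H = rng.normal(size=(4, d))              # h_u^0: initial node embeddings

# Stand-ins for the learned functions M_t and U_t: one random linear layer each,
# shared across time steps; edge features e_uv are omitted for brevity.
W_msg = rng.normal(size=(2 * d, d))
W_upd = rng.normal(size=(2 * d, d))

def message(h_u, h_v):
    return np.maximum(0.0, np.concatenate([h_u, h_v]) @ W_msg)   # M(h_u, h_v)

def update(h_u, m_u):
    return np.tanh(np.concatenate([h_u, m_u]) @ W_upd)           # U(h_u, m_u)

T = 2
for t in range(T):
    # Eq. (6): sum the messages from all neighbors (a permutation-invariant op).
    M = np.stack([sum(message(H[u], H[v]) for v in neighbors[u]) for u in neighbors])
    # Eq. (7): update every node from its previous state and its aggregated message.
    H = np.stack([update(H[u], M[u]) for u in neighbors])

graph_repr = H.sum(axis=0)               # readout: sum over all nodes
print(graph_repr.shape)
```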

Graph Convolution

The concept of message passing lends itself to the definition of a convolution operation for graphs that can be used within graph convolutional neural networks (GCNs) [80]. In the literature, two main categories of GCNs are typically distinguished: spatial-based and spectral-based. Similarly to the conventional convolution operation over an image, spatial-based GCNs define graph convolutions based on the graph topology, while spectral-based methods are based on the graph’s spectral representation [78,81,82]. In this paragraph, we will only discuss the former type, which is more closely related to the message-passing concept.

While many different definitions of convolution have been proposed, a simple spatial convolution operator on a graph can be defined as:

$$h_u' = \bigodot_{v \in N(u)} f(h_v) \tag{8}$$

in which ⨀ is a permutation-invariant operation and $f$ is a function applied to each neighbor’s representation. The result of this operation can be used to update each node representation, as exemplified in Figure 5. When ⨀ is the sum and $f(h_v) = h_v W$, which is a simple linear transformation of the representation of neighbor nodes, this operation (with an added bias term) can be defined in matrix form as:

$$H' = A H W + b \tag{9}$$

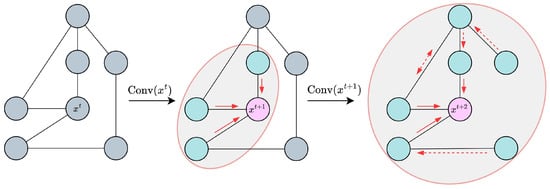

Figure 5.

The propagation effect operated by two sequential graph convolutions concerning node x. The red arrows showcase the information flow toward the target node.

In the equation above, $A \in \{0, 1\}^{n \times n}$ is the adjacency matrix of a graph with $n$ nodes, $H, H' \in \mathbb{R}^{n \times d}$ contain the nodes’ representations of dimensionality $d$, and $W \in \mathbb{R}^{d \times d}$ and $b \in \mathbb{R}^{d}$ are the weight matrix and bias term, respectively (the bias is added to every row). The multiplication by the adjacency matrix guarantees that only neighbor nodes contribute to the updated representation $H'$. Consequently, if multiple convolutions are stacked, the message from each node can propagate further in the graph. However, if too many layers are used, large portions of the graph could end up having similar node representations, an issue often referred to as oversmoothing [83].
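The propagation effect of stacked convolutions (Figure 5) can be checked numerically with a sketch of Equation (9). To stay close to the equation, it uses a raw adjacency matrix without self-loops and no non-linearity; practical GCNs usually add a non-linearity and often normalize $A$ (e.g., with self-loops and degree normalization). Perturbing a single node shows how far its message travels after one and after two layers.

```python
import numpy as np

def gcn_layer(A, H, W, b):
    """Spatial graph convolution in the form of Eq. (9): sum neighbor
    features (A @ H), then apply a shared linear map W and a bias b."""
    return A @ H @ W + b

# Path graph 0 - 1 - 2 - 3 (symmetric adjacency, no self-loops).
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
rng = np.random.default_rng(0)
d = 3
H0 = rng.normal(size=(4, d))
W1, W2 = rng.normal(size=(d, d)), rng.normal(size=(d, d))
b = np.zeros(d)

def two_layers(H):
    H1 = gcn_layer(A, H, W1, b)
    return H1, gcn_layer(A, H1, W2, b)

H1, H2 = two_layers(H0)

# Perturb node 2 only, then check whether node 0's representation changes.
H0_perturbed = H0.copy()
H0_perturbed[2] += 1.0
H1_p, H2_p = two_layers(H0_perturbed)
print(np.allclose(H1[0], H1_p[0]))  # True: node 2 is two hops away from node 0
print(np.allclose(H2[0], H2_p[0]))  # False: after two layers its message has arrived
```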

As a natural extension of the operation defined in Equation (9), $A$ can be weighted to reflect the importance of neighbors, for instance, by multiplying each entry with edge weights. The attention mechanism can also be used to learn how much each node should contribute to its neighbors’ representations. An example of this is graph attention networks (GATs) [84], which use a multi-head attention mechanism, similar in spirit to the Transformer’s MHA, to weight each neighbor’s contribution when computing the hidden state of a node.

2.2.5. Capsule Networks

Much recent literature explores the use of capsule networks (CNs) [85] to find structure in complex feature spaces. These networks group perceptrons (the base units of feed-forward networks) into capsules, which can essentially be interpreted as groups of standard neurons. Each capsule specializes in the identification of a specific type of entity, like an object part or, more generally, a concept. Since a capsule is a group of neurons, its output is a vector instead of a scalar: its length represents the probability that an entity exists in the given input, while its orientation encodes the properties of the entity, such as its spatial features.

Capsules were first applied to object recognition [86] to address shortcomings of CNNs, such as the lack of rotational invariance. They have since been proposed for NLP applications as well. For instance, TC datasets often present labels that can be grouped into related meta-classes sharing common concepts. A hierarchy of topics may include a “Sports” macro-topic, with “Swimming”, “Football”, and “Rugby” as sub-topics. However, the latter two sports are more similar to each other, both being “team sports” and “ball sports”, something that is not made explicit by the hierarchy. CNs have been applied to HTC tasks for their ability to model latent concepts and, hence, capture the latent structure present in the label space. A better understanding of the labels’ organization, such as modeling the “team sports” and “ball sports” concepts, is expected to improve decision-making during classification [87,88].

Capsules

As briefly mentioned above, a capsule is a unit specialized in the following tasks:

- Recognizing the presence of a single entity (e.g., deciding how likely it is that an object, or part of one, is present in an image);

- Computing a vector that describes the instantiation parameters of the entity (e.g., the spatial orientation of an object in an image or the local word ordering and semantics of a piece of text [88]).

As a result, capsules can specialize and estimate parameters about the selected entities. In contrast, neurons in standard MLPs can only output scalar values, which cannot encapsulate such a richness of information. Within CNs, capsules are organized in layers, and the output of each child capsule is fed to all capsules in the next layer (i.e., parent capsules) using weighted connections that depend on the level of agreement between child capsules. Higher layers tend to specialize in recognizing higher-level entities, estimating their probability using the information about sub-parts that is propagated by lower-level capsules [89]. The output of each capsule is routed to the next layer through the dynamic routing mechanism.

Dynamic Routing

Because each layer of capsules specializes in recognizing specific entities, subsequent layers should make sense of the information extracted by previous layers and use it to recognize more complex entities. However, not all entities detected in lower-level capsules may be relevant. Hence, a routing-by-agreement method was proposed to regulate the flow of information to higher layers.

The general principle behind the routing-by-agreement algorithm known as dynamic routing [89] is that connections between capsules in layer $l$ and a capsule $j$ in layer $l+1$ should be weighted depending on how much the capsules in $l$ collectively agree on the output of capsule $j$. When many of them agree, it means that all the entities they recognized can be part of the composite entity recognized by capsule $j$, and as such, their output should be sent mostly to capsule $j$.

The routing algorithm updates the routing weights (i.e., the connections between capsules in different layers) so that they reflect the agreement between them. Let $l$ and $l+1$ be two subsequent layers of a CN, each made up of several capsules. Predictions made by capsule $i \in l$ about the output of capsule $j \in l+1$ are computed as:

$$\hat{u}_{j|i} = W_{ij} u_i \tag{10}$$

where $u_i$ is the activation vector of capsule $i$ and matrix $W_{ij}$ is the learned transformation matrix that encodes the part-to-whole relationship between pairs of capsules in two subsequent layers. A distinct weight matrix $W_{ij}$ is used to store the connection weights between each capsule $i$ and capsule $j$. The agreement is measured using the dot product, and the routing logits $b_{ij}$ (initialized to zero) are updated as in the equation below:

$$b_{ij} \leftarrow b_{ij} + \hat{u}_{j|i} \cdot v_j \tag{11}$$

where output $v_j$ represents the probability that the entity recognized by capsule $j$ is present in the current input and, therefore, should be consistent with the information extracted by lower-level capsules (i.e., the “guesses”), which is encoded in vector $\hat{u}_{j|i}$. Hence, the output of a capsule is obtained from the weighted sum $s_j$ of the predictions made by all capsules in the previous layer $l$. Note that, since the length of the activation vector must represent a probability, the squash function is used to shrink it into the $[0, 1)$ range:

$$s_j = \sum_i c_{ij} \hat{u}_{j|i}, \qquad v_j = \frac{\lVert s_j \rVert^2}{1 + \lVert s_j \rVert^2} \frac{s_j}{\lVert s_j \rVert} \tag{12}$$

During each iteration, the coupling coefficients (or routing weights) $c_{ij}$ are computed as follows:

$$c_{ij} = \frac{\exp(b_{ij})}{\sum_k \exp(b_{ik})} \tag{13}$$

This process ensures that the capsules in $l$ whose predictions agree more with the output of a capsule $j$ in $l+1$ send a stronger signal to it than the capsules that made a different prediction. Moreover, as Equation (12) shows, the output of the capsules in subsequent layers depends on the predictions made in the previous layers. This means that the higher the number of capsules that agree on the most likely prediction of some capsule in the next layer (i.e., $v_j$), the more they can influence the output of that capsule.

The routing process is repeated a fixed number of times before the algorithm proceeds to the next layer and stops when all the connections between capsules are weighted. Finally, this routing mechanism has been recently improved using the expectation-maximization algorithm [90] to overcome some of the limitations of the former approach.
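The routing procedure of Equations (10) to (13) can be sketched in NumPy as follows. The layer sizes, the random initialization, and the choice of three iterations are illustrative assumptions; a small constant is added inside the square root of the squash function to avoid division by zero.

```python
import numpy as np

def squash(s, axis=-1, eps=1e-9):
    """Eq. (12): keep the direction of s but shrink its length into [0, 1)."""
    sq_norm = np.sum(s ** 2, axis=axis, keepdims=True)
    return (sq_norm / (1.0 + sq_norm)) * s / np.sqrt(sq_norm + eps)

def dynamic_routing(u, W, n_iters=3):
    """Routing-by-agreement between two capsule layers.

    u: (n_in, d_in)                activation vectors u_i of the lower-layer capsules
    W: (n_in, n_out, d_out, d_in)  transformation matrices W_ij
    Returns the output vectors v_j (n_out, d_out) and the coupling coefficients c_ij.
    """
    u_hat = np.einsum('ijab,ib->ija', W, u)       # Eq. (10): u_hat[i, j] = W_ij @ u_i
    b = np.zeros(u_hat.shape[:2])                 # routing logits b_ij start at zero
    for _ in range(n_iters):
        c = np.exp(b - b.max(axis=1, keepdims=True))
        c /= c.sum(axis=1, keepdims=True)         # Eq. (13): softmax over parents j
        s = np.einsum('ij,ija->ja', c, u_hat)     # s_j = sum_i c_ij * u_hat[i, j]
        v = squash(s)                             # Eq. (12)
        b = b + np.einsum('ija,ja->ij', u_hat, v) # Eq. (11): agreement via dot product
    return v, c

rng = np.random.default_rng(0)
u = rng.normal(size=(6, 8))               # 6 child capsules with 8-dim outputs
W = rng.normal(size=(6, 3, 4, 8)) * 0.1   # 3 parent capsules with 4-dim outputs
v, c = dynamic_routing(u, W)
print(np.linalg.norm(v, axis=1))          # lengths in [0, 1): existence probabilities
```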

3. Hierarchical Text Classification

As a sub-task of the broader TC area, HTC methods have much in common with standard text classifiers. In this section, we will outline the main aspects that differentiate HTC from standard classification approaches and how they can be leveraged to achieve a higher classification accuracy in the presence of a taxonomy of labels.

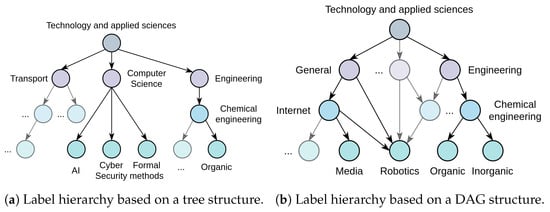

3.1. Types of Hierarchical Classification

Standard classifiers focus on what the HTC literature defines as flat classification, in which categories are treated in isolation (i.e., as having no relationship with one another) [15,16]. In contrast, HTC deals with documents whose labels are organized in structures that resemble a tree or a directed acyclic graph (DAG) [91,92]. In these structures, each node contains a label to be assigned, as in Figure 6. Methods able to work with both trees and DAGs can be devised, though a simpler technique is to “unroll” or “flatten” sub-nodes with multiple parents in DAGs, thus obtaining a tree-like representation. For this reason, this article (and much of the HTC literature) focuses on hierarchies with a tree structure.
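One way to “unroll” a DAG into a tree is to duplicate every node (together with its sub-hierarchy) once for each path that reaches it from the root, identifying each copy by its path. The sketch below is an illustrative assumption of how this can be done, not a procedure prescribed by the cited works; note that the number of copies can grow quickly when many nodes have multiple parents.

```python
def unroll_dag(parents, root):
    """Turn a DAG into a tree by duplicating every node once per root-to-node path.

    parents: dict mapping each non-root node to the list of its parents.
    Returns a dict mapping tree nodes (tuples = root-to-node paths) to their children.
    """
    children = {}
    for node, ps in parents.items():
        for p in ps:
            children.setdefault(p, []).append(node)

    tree = {}

    def visit(path):
        kids = [path + (c,) for c in children.get(path[-1], [])]
        tree[path] = kids
        for k in kids:
            visit(k)

    visit((root,))
    return tree

# "Machine Learning" has two parents, so it (and its subtree) is duplicated.
parents = {
    "CS": ["root"], "Statistics": ["root"],
    "Machine Learning": ["CS", "Statistics"],
    "Deep Learning": ["Machine Learning"],
}
tree = unroll_dag(parents, "root")
leaves = [path for path, kids in tree.items() if not kids]
print(leaves)
```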

Figure 6.

Hierarchically structured labels (inspired by Wang et al. [91]). Both forms are frequent in practice, though labels organized in a DAG require adaptation of either the method or the structure.

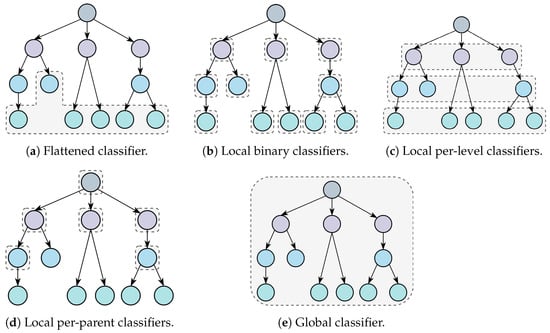

HTC approaches can be divided into two groups: local and global approaches [93]. Local approaches (sometimes called “top-down”) are defined as such because they “dissect” the hierarchy, constructing multiple local classifiers that each work with a subset of the node labels. While more informed than flat classifiers (which ignore the hierarchy), they inevitably lose hierarchical information, as the aggregation of these classifiers tends to ignore the holistic structural information of the taxonomic hierarchy. Depending on the chosen approach, the information available about the hierarchy can be partial or absent [94]. Local approaches (Figure 7b–d) have been criticized because of their structural issues, the most notable one being that they may easily propagate misclassifications [95,96]. Furthermore, these models are often large in terms of trainable parameters and may easily develop exposure bias because of the lack of holistic structural information [97]. We discuss this in more detail later; in short, exposure bias arises because, at test time, lower-level classifiers rely on the predictions of upper-level classifiers, whereas during training they are usually fed ground-truth labels, creating a discrepancy between the two phases.

Figure 7.

The most common approaches to HTC, exemplified on a tree-like hierarchy. Flattened classifiers (a) lose all hierarchical information, while local classifiers (b–d) can incorporate some of this information. Global classifiers (e) aim to fully exploit the label structure.

Global methods aim to address these shortcomings and are also frequently less computationally expensive, as they rely on a single classifier [17]. While the definition of global classifiers is deliberately generic, any single classification algorithm that directly takes the hierarchy into account can be considered global. It is also worth noting that global and local approaches can be combined [98,99]. Figure 7 showcases the main approaches to HTC, which we now briefly summarize.

3.1.1. Flattened Classifiers

The flat classification approach (Figure 7a) reduces the task to a multiclass (or multilabel) classification problem, therefore discarding hierarchical information entirely. Typically, only leaf nodes are considered, and the labels at higher levels of the hierarchy are inferred from the ancestors of the predicted leaves [88,94].

3.1.2. Local Classifiers

Local classification approaches are generally divided into three categories that differ in how they dissect the hierarchy. First, local per-node (binary) approaches (Figure 7b) consider each label as a separate class, disregarding the hierarchy completely. This approach is among the simplest and resembles a one-versus-rest approach [14]. The individual classifiers receive hierarchical information through the specific training and testing procedures, which we outline in Section 3.1.4. Local per-level methods (Figure 7d) assign a classifier to each relevant category level, tasked with deciding for that level alone. Finally, local per-parent methods (Figure 7d) assign a classifier to each parent node, tasked with assigning a sample to one of the node’s children, thereby capturing part of the sample’s path through the hierarchy.

3.1.3. Global Classifiers

A global (or big-bang) [19] classification approach (Figure 7e) makes use of the entire hierarchy to make the final classification decision. It is common (though not strictly necessary) for global classifiers to perform actual classification on a flattened representation; hierarchy information is therefore achieved through structural bias (i.e., the architecture of the model) [17].

3.1.4. Training and Testing Local Classifiers

Unlike standard (flat) classification approaches, local classifiers usually require specialized procedures for training and testing, both in terms of the actual methods and in the definition of positive and negative examples, as outlined in the following paragraphs.

Testing

Testing phases are usually characterized by a “top-down” flow of information, which is the preferred strategy for all local approaches. The system performs a prediction at the first, most generic level, then forwards the decision to the children of the predicted class. As mentioned, this makes these approaches vulnerable to error propagation (where a mistake at higher levels inevitably leads to mistakes at lower ones), unless a specialized procedure is put in place to avoid it.

Local classifiers can be used independently, therefore lending themselves naturally to multilabel scenarios. However, this might lead to class-membership inconsistency (by which a child node disagrees with a parent classification); therefore, top-down approaches are usually utilized.

Training

As local classifiers are defined at different levels of the hierarchy, examples may need to be altered so that the objective makes sense at the local level. Several policies may be utilized to create these subsets of examples, each differing in how “inclusive” it is. For instance, a decision can be made on whether or not to include samples labeled with “AI” as positive examples for classifiers at the higher “Computer Science” level in Figure 6a (while everything else is considered as a negative example). We point interested readers to Silla and Freitas [17] for an in-depth overview of these policies.
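The choice described above can be sketched for the binary local classifier attached to a node. The two options below (our own simplified labels, “exclusive” and “inclusive”) differ only in whether examples labeled with a descendant of the node, such as “AI” for “Computer Science”, count as positives; everything else becomes a negative. The toy hierarchy and documents are made up for illustration.

```python
def descendants(node, children):
    """All nodes below `node` in a tree given as parent -> children lists."""
    out = []
    for c in children.get(node, []):
        out.append(c)
        out.extend(descendants(c, children))
    return out

def local_training_set(node, examples, children, inclusive=True):
    """Build positive/negative examples for the binary classifier of `node`.

    inclusive=False: only examples labeled exactly `node` are positives.
    inclusive=True:  examples labeled `node` or any of its descendants are positives.
    All remaining examples are negatives.
    """
    accepted = {node} | (set(descendants(node, children)) if inclusive else set())
    pos = [x for x, y in examples if y in accepted]
    neg = [x for x, y in examples if y not in accepted]
    return pos, neg

children = {"root": ["Computer Science", "Biology"],
            "Computer Science": ["AI", "Databases"]}
examples = [("doc1", "AI"), ("doc2", "Databases"),
            ("doc3", "Computer Science"), ("doc4", "Biology")]

print(local_training_set("Computer Science", examples, children, inclusive=False))
# (['doc3'], ['doc1', 'doc2', 'doc4'])
print(local_training_set("Computer Science", examples, children, inclusive=True))
# (['doc1', 'doc2', 'doc3'], ['doc4'])
```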

It is also possible to allow for different classification algorithms in different nodes, an approach that is often attributed to per-parent approaches [17]. To do this, training data may be further split into sub-train and validation sets, and the best decider for each node is selected dynamically.

3.2. Non-Mandatory Leaf Node Prediction and Blocking

In hierarchical classification datasets, it may not always be the case that all prediction targets correspond to leaves. Many authors distinguish between mandatory and non-mandatory leaf node prediction (MLNP, NMLNP) [15,100]. Quite simply, in NMLNP scenarios, the classification method should be able to consider stopping the classification at any level of the hierarchy, regardless of whether it is a leaf node or not. The term applies to both tree- and DAG-structured hierarchies. In this section, we briefly outline how the different types of HTC approaches can deal with the latter, more complex case.

3.2.1. Flattened Classifiers

The flat classification approach simplifies the problem to a standard, non-hierarchical classification problem. In that sense, if the target is restricted to the leaf nodes of the structure, methods that follow this paradigm are unable to deal with NMLNP by design [17]. It is possible to naively extend the classification targets to include all possible labels, though this would make the task much harder since flattened classifiers do not have any inherent information about the hierarchy. As we will discuss in the following paragraphs, it is possible to inject hierarchical information whilst maintaining the general classification target and algorithm, which some global approaches do [101].

3.2.2. Local Classifiers

To deal with NMLNP, local approaches must implement a blocking mechanism, so that inference may be stopped at any level of the hierarchy. A simple way to do this is by utilizing a threshold on the confidence of each classifier; if during top-down prediction the confidence does not meet the requirement, the inference process is halted [102].
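Top-down inference with per-parent local classifiers and a confidence threshold can be sketched as below. The classifiers are hypothetical stand-ins returning fixed probabilities, and a single global threshold is assumed. Raising the threshold makes inference stop earlier, which is the behavior that can lead to the blocking problem.

```python
def top_down_predict(x, children, classifiers, threshold=0.5, root="root"):
    """Top-down inference with local per-parent classifiers and a confidence
    threshold acting as a blocking mechanism (non-mandatory leaf prediction).

    children:    dict parent -> list of child labels
    classifiers: dict parent -> function(x) returning {child: probability}
    Returns the predicted path, from the first level downwards.
    """
    path, node = [], root
    while children.get(node):                # stop when a leaf is reached
        probs = classifiers[node](x)
        best = max(probs, key=probs.get)
        if probs[best] < threshold:          # not confident enough: block here
            break
        path.append(best)
        node = best                          # forward the decision to its children
    return path

# Hypothetical hierarchy and classifiers with fixed outputs, for illustration only.
children = {"root": ["Sports", "Food"], "Sports": ["Football", "Rugby"]}
classifiers = {
    "root":   lambda x: {"Sports": 0.9, "Food": 0.1},
    "Sports": lambda x: {"Football": 0.55, "Rugby": 0.45},
}
print(top_down_predict("match report", children, classifiers))                 # ['Sports', 'Football']
print(top_down_predict("match report", children, classifiers, threshold=0.6))  # ['Sports']
```

Note that a wrong decision at the root would send the example down the wrong branch, which is the error propagation issue mentioned earlier.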

An issue with such thresholds is that they may lead to incorrect early stopping of classification. Sun et al. [103] define this as the blocking problem, which refers to any case in which a low confidence rating of a parent classifier mistakenly hints that an example does not belong to its actual macro-class. As a consequence, the example will never reach the classifier for its appropriate sub-class. The authors propose some blocking reduction methods, which generally act by reducing the thresholds of inner classifiers or by allowing lower-level classifiers to have a second look at rejected examples.

3.2.3. Global Classifiers

Global classifiers utilize a single, usually complex algorithm that integrates the hierarchy into its internal reasoning. As mentioned, it is common to base global classifiers on an existing flat classification approach and modify it to take into account the class hierarchy. Global classifiers can also be used in NMLNP scenarios, though specific strategies might be needed in this case to enforce class-membership consistency in predictions. Likewise, it is also possible to integrate a top-down prediction approach (which would be internal to the algorithm) to avoid this issue.

3.3. Evaluation Measures

As HTC problems are inherently multiclass (or multilabel), many researchers choose to utilize standard evaluation metrics widely adopted in classification scenarios. As mentioned, however, many authors argue that these measures are inappropriate [15,17,18,104,105,106]. Intuitively, these arguments are based on the shared belief that ignoring the hierarchical structure in the evaluation of a model is wrong because of the concept of mistake severity; in other words, a model that makes “better mistakes” should be preferred [107]. This follows from two considerations. Firstly, predicting a label that is structurally close to the ground truth should be less penalizing than predicting a distant one. Secondly, errors in the upper levels of the hierarchy are inherently worse (e.g., misclassifying “football” as “rugby” is comparatively better than misclassifying “sport” as “food”). These considerations also make sense when considering real-world applications of HTC, such as ICD coding [5,6] and legal document concept labeling [7]. A better mistake entails that most ancestor nodes in the prediction path were correct, meaning that most of the macro categorizations of the sample were accurate. In most scenarios, a mistake of this type is preferable to one in which the macro category is wrong, the latter of which might have severe consequences. Moreover, understanding the severity and type of a model’s errors can aid during the development process and possibly lead to new strategies to prevent them.

In this section, we will provide an outline of the most common evaluation metrics utilized in HTC, both standard and hierarchical. For a more in-depth analysis of these metrics, as well as of the issues they present and how to address them, we refer to Kosmopoulos et al. [105].

3.3.1. Standard Metrics

The most common performance measures utilized in “flat” classification are derived from the classic information retrieval notions of accuracy ($\mathrm{Acc}$), precision ($\mathrm{Pr}$), and recall ($\mathrm{Re}$). As in any other supervised learning task, we consider the truthfulness of a model’s predictions against the ground truth derived from the dataset. Formally, let $D = \{(x_i, y_i)\}_{i=1}^{n}$ be a set of labeled training examples, where $x_i$ is an input example and $y_i \in \{0, 1\}^{|L|}$ is the associated label vector, with $L$ being the set of label indices (therefore, $|L|$ is the number of categories). For each label $\lambda \in L$, we can calculate category-specific metrics by considering positive (P) and negative (N) predictions for each example. A prediction is considered true (T) if it agrees with the ground truth and false (F) otherwise, yielding the counts $TP_\lambda$, $FP_\lambda$, $TN_\lambda$, and $FN_\lambda$.

Accuracy measures the ratio of correct predictions over the total number of predictions. Precision is instead a measure of correctness, quantifying the proportion of true positives among the positive predictions made, while recall is a measure of completeness, quantifying the proportion of actual positives captured by the model. Notably, the latter two metrics focus on the impact of false predictions: precision is penalized by false positives and recall by false negatives. For a given category, accuracy, precision, and recall are defined as follows (Equation (14)):

$$\mathrm{Acc} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad \mathrm{Pr} = \frac{TP}{TP + FP}, \qquad \mathrm{Re} = \frac{TP}{TP + FN} \tag{14}$$

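Precision and recall do not effectively measure classification performance in isolation [3]. Therefore, a combination of the two is commonly utilized. The F-measure ($F_\beta$) is the most popular of these combinations, providing a single score according to a user-defined relative importance of precision and recall (i.e., $\beta$). Normally, $\beta = 1$, resulting in the harmonic mean of the two measures (Equation (15)):

$$F_\beta = \frac{(1 + \beta^2)\,\mathrm{Pr} \cdot \mathrm{Re}}{\beta^2\,\mathrm{Pr} + \mathrm{Re}}, \qquad F_1 = \frac{2\,\mathrm{Pr} \cdot \mathrm{Re}}{\mathrm{Pr} + \mathrm{Re}} \tag{15}$$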

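Accuracy extends naturally to multiclass settings, but it cannot weigh the contribution of each class differently. Precision and recall can be averaged in different ways; macro averaging considers all class contributions equally (Equation (16)), while micro averaging treats all examples equally (Equation (17)):

$$\mathrm{Pr}^{M} = \frac{1}{m} \sum_{\lambda \in L} \frac{TP_\lambda}{TP_\lambda + FP_\lambda}, \qquad \mathrm{Re}^{M} = \frac{1}{m} \sum_{\lambda \in L} \frac{TP_\lambda}{TP_\lambda + FN_\lambda} \tag{16}$$

$$\mathrm{Pr}^{\mu} = \frac{\sum_{\lambda \in L} TP_\lambda}{\sum_{\lambda \in L} \left(TP_\lambda + FP_\lambda\right)}, \qquad \mathrm{Re}^{\mu} = \frac{\sum_{\lambda \in L} TP_\lambda}{\sum_{\lambda \in L} \left(TP_\lambda + FN_\lambda\right)} \tag{17}$$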

where m is the number of categories. Micro-averaging may be useful when the class imbalance is severe and needs to be accounted for in the measurements. Support-weighted metrics are also an alternative in such cases. F-measures may be micro- or macro-averaged as well, utilizing the corresponding averaged versions of precision and recall.
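The following NumPy sketch computes Equations (14) to (17) from binary label matrices (rows are examples, columns are labels). The toy data are made up for illustration. Macro F1 is computed here from the macro-averaged precision and recall, as described above; some libraries instead average per-class F1 scores, which generally gives a different value.

```python
import numpy as np

def confusion_counts(Y_true, Y_pred):
    """Per-label TP, FP, FN, TN from binary (n_examples, n_labels) matrices."""
    tp = np.sum((Y_true == 1) & (Y_pred == 1), axis=0)
    fp = np.sum((Y_true == 0) & (Y_pred == 1), axis=0)
    fn = np.sum((Y_true == 1) & (Y_pred == 0), axis=0)
    tn = np.sum((Y_true == 0) & (Y_pred == 0), axis=0)
    return tp, fp, fn, tn

def f_beta(p, r, beta=1.0):
    # Eq. (15); defined as 0 when both precision and recall are 0.
    denom = beta ** 2 * p + r
    return 0.0 if denom == 0 else (1 + beta ** 2) * p * r / denom

def safe_div(a, b):
    # Element-wise a / b, returning 0 where b == 0.
    return np.divide(a, b, out=np.zeros_like(a, dtype=float), where=b > 0)

def macro_micro(Y_true, Y_pred):
    tp, fp, fn, tn = confusion_counts(Y_true, Y_pred)
    label_acc = (tp + tn) / (tp + fp + fn + tn)       # Eq. (14), per label
    # Eq. (16): average per-label scores (every label weighs the same).
    macro_p = safe_div(tp, tp + fp).mean()
    macro_r = safe_div(tp, tp + fn).mean()
    # Eq. (17): pool the counts first (every individual decision weighs the same).
    micro_p = tp.sum() / (tp.sum() + fp.sum())
    micro_r = tp.sum() / (tp.sum() + fn.sum())
    return {"label_acc": label_acc,
            "macro_p": macro_p, "macro_r": macro_r, "macro_f1": f_beta(macro_p, macro_r),
            "micro_p": micro_p, "micro_r": micro_r, "micro_f1": f_beta(micro_p, micro_r)}

Y_true = np.array([[1, 0, 0], [0, 1, 0], [1, 0, 1], [0, 0, 1]])
Y_pred = np.array([[1, 0, 0], [0, 0, 0], [1, 1, 0], [0, 0, 1]])
res = macro_micro(Y_true, Y_pred)
print({k: np.round(v, 3) for k, v in res.items()})
```

On this toy data, the rare second label (never predicted correctly) drags the macro scores down more than the micro scores.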

3.3.2. Hierarchical Metrics

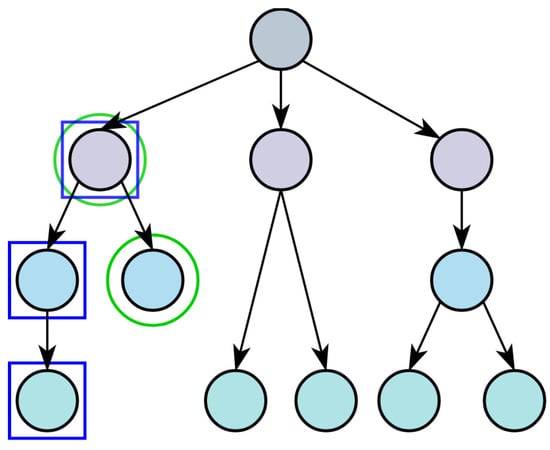

As outlined before, standard metrics lack the capability of reflecting the relationships that exist among classes. In this context, Sun and Lim [15] propose to solve this issue by introducing hierarchical metrics based on category similarity and distance. Category similarity evaluates the cosine similarity of the feature vectors representing predicted and true categories, while category distance considers the number of links between the two in the hierarchy structure. While interesting, these measures have practical issues, which Kiritchenko et al. [104] outline (such as inapplicability to DAGs and multilabel tasks). Instead, they propose to extend traditional precision and recall. To do this, they augment the set of predicted and true labels to include all their ancestors (Figure 8) and calculate the metrics on the augmented sets.

Figure 8.

Tree hierarchy with predicted (squares) and ground truth (circles) labels, with the ancestors of each highlighted. As the two sets have a node in common, the misprediction should be considered less severe.

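Formally, let $\hat{Y}_{aug}$ be the augmented set of predictions, containing the most specific predicted classes and all of their ancestors, and $Y_{aug}$ the augmented set of the most specific ground truth class(es) and all of their ancestors. Then, hierarchical metrics (h-metrics) can be defined as follows (Equation (18)):

$$hP = \frac{\lvert \hat{Y}_{aug} \cap Y_{aug} \rvert}{\lvert \hat{Y}_{aug} \rvert}, \qquad hR = \frac{\lvert \hat{Y}_{aug} \cap Y_{aug} \rvert}{\lvert Y_{aug} \rvert}, \qquad hF = \frac{2 \cdot hP \cdot hR}{hP + hR} \tag{18}$$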

However, Kosmopoulos et al. [105] observe that this approach overpenalizes errors in which nodes have many ancestors. Specifically, false positives predicted at lower levels of the hierarchy strongly decrease the precision metric, while recall tends to be boosted, since adding ancestors is likely to increase the number of true positives (false positives are ignored). Drawing from the lowest common ancestor (LCA) concept defined in graph theory [108], they propose LCA-based measures as a solution. Briefly, and in the context of tree structures, the LCA of two nodes can be defined as the lowest (furthest from the root) node that is an ancestor of both. Therefore, these new measures are defined similarly to the one in Equation (18), but with the expanded sets defined in terms of LCA rather than on full node ancestries [18]. Despite these issues, the hierarchical metrics of Equation (18) are still considered effective measures across a broad range of scenarios [17].
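A sketch of the ancestor-augmented h-metrics of Equation (18) follows, for a tree given as a child-to-parent dictionary. The root is excluded from the augmented sets (otherwise every prediction would share it with the ground truth), and counts are pooled over all examples before dividing; both are assumptions of this sketch. The example mirrors the football/rugby case: a sibling mistake still earns partial credit, whereas a mistake in a different branch earns none.

```python
def ancestors(label, parent):
    """The label together with all of its ancestors, excluding the root."""
    out = set()
    while label in parent:          # the root has no entry in `parent`
        out.add(label)
        label = parent[label]
    return out

def augment(labels, parent):
    aug = set()
    for label in labels:
        aug |= ancestors(label, parent)
    return aug

def hierarchical_prf(pred_sets, true_sets, parent):
    """Eq. (18) with intersection and set sizes summed over all examples."""
    inter = pred_total = true_total = 0
    for pred, true in zip(pred_sets, true_sets):
        P, T = augment(pred, parent), augment(true, parent)
        inter += len(P & T)
        pred_total += len(P)
        true_total += len(T)
    hp, hr = inter / pred_total, inter / true_total
    hf = 2 * hp * hr / (hp + hr) if hp + hr else 0.0
    return hp, hr, hf

# Hypothetical child -> parent map.
parent = {"Sports": "root", "Football": "Sports", "Rugby": "Sports",
          "Food": "root", "Pasta": "Food"}
print(hierarchical_prf([{"Rugby"}], [{"Football"}], parent))  # (0.5, 0.5, 0.5)
print(hierarchical_prf([{"Pasta"}], [{"Football"}], parent))  # (0.0, 0.0, 0.0)
```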

Some authors report metrics that explicitly evaluate the difference, in terms of path distances through the hierarchy, between class predictions and ground truth labels. In particular, Sainte Fare Garnot and Landrieu [109] define the average hierarchical cost (AHC) of a set of predictions. Given two labels $a, b \in L$, define $d(a, b)$ as the number of edges that separate the two labels in the hierarchy tree. In turn, for a set of labels $S$, let $d(a, S)$ be the minimum distance between node $a$ and the set, i.e., $d(a, S) = \min_{s \in S} d(a, s)$. If predictions and ground truth labels are expressed as vectors (as before), the AHC can be defined as (Equation (19)):

In practice, this measure evaluates how “far off” the predictions were from the closest common ancestor (and, in this respect, it is not too dissimilar from LCA-based measures). As a consequence, a low AHC indicates that mistakes were, on average, not too distant from the ground truth.
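The tree distance $d(a, b)$ and the set distance $d(a, S)$ used by the AHC can be computed by climbing from both labels to their lowest common ancestor, as sketched below (again with a hypothetical child-to-parent dictionary). Only these building blocks are shown; how they are aggregated into the final score is given by Equation (19).

```python
def path_to_root(label, parent):
    """[label, its parent, ..., root] for a tree given as a child -> parent map."""
    path = [label]
    while path[-1] in parent:
        path.append(parent[path[-1]])
    return path

def tree_distance(a, b, parent):
    """d(a, b): number of edges between a and b, via their lowest common ancestor."""
    pa, pb = path_to_root(a, parent), path_to_root(b, parent)
    depth_in_pb = {node: i for i, node in enumerate(pb)}
    for i, node in enumerate(pa):     # first node of pa that also lies on pb = LCA
        if node in depth_in_pb:
            return i + depth_in_pb[node]
    raise ValueError("labels are not in the same tree")

def distance_to_set(a, S, parent):
    """d(a, S) = min over s in S of d(a, s)."""
    return min(tree_distance(a, s, parent) for s in S)

parent = {"Sports": "root", "Football": "Sports", "Rugby": "Sports",
          "Food": "root", "Pasta": "Food"}
print(tree_distance("Rugby", "Football", parent))                 # 2 (siblings)
print(tree_distance("Rugby", "Pasta", parent))                    # 4 (via the root)
print(distance_to_set("Rugby", {"Football", "Pasta"}, parent))    # 2
```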

3.3.3. Other Metrics

Depending on the particular aspect of HMC being tackled, other types of metrics, often borrowed from neighboring disciplines, may be warranted. In this section, we briefly introduce them.

Some works report rank-based evaluation metrics, which are widely used in the more general context of multilabel classification [110,111]. Among these, the two most commonly utilized are precision and normalized discounted cumulative gain at $k$ ($P@k$, $nDCG@k$). Briefly, given a model’s prediction (i.e., a probability vector across labels), we can sort the labels in descending order based on the output probabilities. Then, $P@k$ indicates the fraction of correct predictions in the top-$k$ labels of this sorted list, whereas $nDCG@k$ measures the ranking quality (i.e., how high correct labels are ranked). For a more precise description of these metrics, which are often utilized in recommendation systems and, more generally, in information retrieval, see Marcuzzo et al. [112].
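Both ranking metrics can be sketched for a single example as follows, using binary relevance and the common $1/\log_2(\text{rank}+1)$ discount. Other nDCG variants exist, so this is one possible definition rather than the one used by any specific work; the scores and labels are made up.

```python
import numpy as np

def precision_at_k(scores, y_true, k):
    """Fraction of the k highest-scored labels that are correct."""
    top = np.argsort(-scores)[:k]
    return y_true[top].sum() / k

def ndcg_at_k(scores, y_true, k):
    """DCG of the top-k ranking divided by the best achievable DCG (binary gains)."""
    top = np.argsort(-scores)[:k]
    discounts = 1.0 / np.log2(np.arange(2, k + 2))     # 1/log2(rank + 1), rank = 1..k
    dcg = np.sum(y_true[top] * discounts[:len(top)])
    n_rel = int(min(y_true.sum(), k))                  # ideal ranking: all correct labels first
    idcg = np.sum(discounts[:n_rel])
    return dcg / idcg if idcg > 0 else 0.0

scores = np.array([0.10, 0.80, 0.30, 0.60, 0.05])   # model probabilities for 5 labels
y_true = np.array([1, 1, 0, 0, 0])                  # labels 0 and 1 are correct
print(precision_at_k(scores, y_true, 3))  # one correct label among the top 3
print(ndcg_at_k(scores, y_true, 3))       # < 1: label 0 is ranked too low
```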

For methods able to categorize labels at different levels of the hierarchy separately, many authors choose to showcase the accuracy score at each separate level, as well as a single overall score [87,113]. The overall score is the one obtained by classifying the last level of the hierarchy given the (possibly incorrect) predictions of the parent classes. In some cases, authors utilize subset accuracy, where all labels must match the ground truth exactly.

Lastly, some authors utilize the Hamming loss [114,115] metric for HTC. This measure evaluates the fraction of misclassified instance-label pairs, i.e., true labels that were not predicted or predicted labels that were not in the ground truth. A comprehensive review of these metrics, as well as less commonly utilized ones, may be found in Zhang and Zhou [116].
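Hamming loss, subset accuracy, and per-level accuracy can be sketched in a few lines. The multilabel toy matrices and the two-level label paths below are made-up examples, and per-level accuracy assumes that every sample has exactly one path of fixed depth.

```python
import numpy as np

def hamming_loss(Y_true, Y_pred):
    """Fraction of (example, label) pairs that are wrong: missed or spurious labels."""
    return np.mean(Y_true != Y_pred)

def subset_accuracy(Y_true, Y_pred):
    """Fraction of examples whose predicted label set matches the ground truth exactly."""
    return np.mean(np.all(Y_true == Y_pred, axis=1))

def per_level_accuracy(true_paths, pred_paths):
    """Accuracy at each hierarchy level for single-path predictions (root excluded)."""
    depth = len(true_paths[0])
    return [np.mean([t[lvl] == p[lvl] for t, p in zip(true_paths, pred_paths)])
            for lvl in range(depth)]

Y_true = np.array([[1, 0, 1, 0],
                   [0, 1, 0, 0],
                   [1, 0, 0, 1]])
Y_pred = np.array([[1, 0, 1, 0],
                   [0, 1, 1, 0],
                   [1, 0, 0, 0]])
print(hamming_loss(Y_true, Y_pred))     # 2 wrong pairs out of 12
print(subset_accuracy(Y_true, Y_pred))  # only the first example is exact

true_paths = [("Sports", "Football"), ("Food", "Pasta")]
pred_paths = [("Sports", "Rugby"), ("Food", "Pasta")]
print(per_level_accuracy(true_paths, pred_paths))  # first level always right, second half right
```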

4. Hierarchical Text Classification Methods

In this section, we provide an overview of approaches devised to solve HTC tasks in the 2019–2023 period. Section 4.1 introduces the main classes of methods and Section 4.2 lists all relevant works we found in our literature review. Finally, a subset of these is analyzed in more detail in Section 4.3.

4.1. Overview of Approaches

In this section, we provide a high-level overview of notable approaches for HTC that differentiate themselves from more standard approaches for TC and that we find to be popular within the literature. The categories presented are not hard partitions, but rather emergent categories with possible overlap.

Sequence-to-sequence approaches, as the name suggests, re-frame HTC as a Seq2Seq problem, where the input is the text to classify and the output is the sequence of labels, so as to better model the local label hierarchy [117]. Target labels for each text are flattened to a linear label sequence with a specific order via either sorting, depth-first search, or breadth-first search. Each prediction step generates the next label based on both the input text and the previously generated label; this way, the model can capture the level and path dependency information among labels. Several frameworks have been proposed utilizing this approach [118,119], many arguing for improved label generation and better use of co-occurrence information [120,121,122].
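Label linearization can be sketched as a traversal of the hierarchy that keeps only the labels of the current sample. This assumes the label set is consistent with the hierarchy (every label’s ancestors are also present); the hierarchy and labels are made up for illustration.

```python
from collections import deque

def linearize(labels, children, root="root", order="dfs"):
    """Flatten a sample's label set into a target sequence for a Seq2Seq model,
    visiting the hierarchy depth-first or breadth-first."""
    seq = []
    frontier = deque([root])
    while frontier:
        # BFS takes from the left (queue); DFS takes from the right (stack).
        node = frontier.popleft() if order == "bfs" else frontier.pop()
        if node != root and node in labels:
            seq.append(node)
        kids = [c for c in children.get(node, []) if c in labels]
        # For DFS, push children in reverse so they are popped in their original order.
        frontier.extend(kids if order == "bfs" else reversed(kids))
    return seq

children = {"root": ["Sports", "Science"],
            "Sports": ["Football", "Rugby"],
            "Science": ["Physics"]}
labels = {"Sports", "Football", "Rugby", "Science", "Physics"}
print(linearize(labels, children, order="dfs"))  # ['Sports', 'Football', 'Rugby', 'Science', 'Physics']
print(linearize(labels, children, order="bfs"))  # ['Sports', 'Science', 'Football', 'Rugby', 'Physics']
```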

Graph-based approaches encode the hierarchy with specialized structure encoders, which allow for better utilization of the mutual information among labels. Tree-based and graph-based approaches have been proposed to encode the hierarchy, with both showcasing good results [97,123]. Several methods apply GCNs to the hierarchy of labels, often jointly with the encoding of the text to enrich the representation [124]. Additional techniques are often applied to improve label correlation and to better model their co-occurrence [125,126,127,128]. Some authors, such as Ning et al. [129], argue that graph-based approaches may neglect the directed nature of the tree hierarchy and thus introduce unidirectional message-passing constraints to improve graph embedding. Other works utilize GCNs to reinforce feature sharing among labels of the same level [130]. While graph NNs, and GCNs in particular, are by far the most popular architectures in these approaches, some authors utilize Transformer-based approaches such as Graphormers [91]. All in all, graph-based approaches have obtained excellent results, and the integration of structural information in classification frameworks has proved very successful.

KG-based approaches argue that the integration of external knowledge can enhance classification. They attempt to incorporate this knowledge into the model using graph-based representations, known as knowledge graphs (KGs). A KG comprises nodes that represent real-world concepts or entities. The relationships between these concepts are depicted by edges, which can vary in type depending on the relationship they represent [112]. Well-established examples of KGs in the NLP community include DBpedia [131] and ConceptNet [132]. These methods, while technically a subset of the earlier mentioned graph-based approaches, distinguish themselves by utilizing KGs to encode external knowledge, as opposed to problem-specific data. KG-based methodologies typically utilize KGs to generate knowledge-aware embeddings. Several techniques have been proposed to encode entity–relation–entity triples [133,134]. These embeddings encapsulate the relationships between entities and effectively augment the representation with data that would not be accessible in a task-specific setting, thereby enhancing generalizability across different tasks [135]. Although only a few studies have attempted to use KGs specifically for HTC, we consider them a promising research direction, given their success in broader TC and NLP applications.

Finally, alternative tuning procedures for LMs are being heavily researched in NLP and have shown promising results in HTC as well. Prompt-tuning aims to narrow the gap between the pre-training and fine-tuning phases by wrapping the input in a natural language template and converting the classification task into an MLM task. For instance, an input text x may be wrapped as “x is [MASK]” (hard prompt). Virtual template words may also be used, allowing the LM both to learn to predict the “[MASK]” token and to tune the virtual template words (soft prompt). A verbalizer then maps the predicted word to the final class [136], and custom verbalizers for HTC have also been developed [137]. Prefix-tuning, on the other hand, tunes and stores only a tiny set of parameters compared with full fine-tuning while maintaining comparable performance. To do this, it learns prefix vectors associated with the embedding layer (and, in recent works, with each LM layer) to better fit the target domain, while the rest of the LM's parameters remain frozen. Specific frameworks for HTC have been developed that train prefix vectors by taking their topological relationships in the hierarchy into account [138].
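The hard-prompt and verbalizer steps can be sketched in a few lines of plain Python. In this sketch the masked-LM call is replaced by a hypothetical dictionary of scores at the [MASK] position. The helper names and the choice to average label-word scores are our assumptions; actual verbalizers may aggregate scores differently.

```python
def wrap_hard_prompt(text, mask_token="[MASK]"):
    """Hard prompt: wrap the input in a fixed natural-language template."""
    return f"{text} is {mask_token}"

def verbalize(mask_scores, verbalizer):
    """Map LM scores at the [MASK] position to a class label.

    mask_scores: dict word -> score predicted by an MLM at [MASK] (assumed given).
    verbalizer: dict label -> list of label words; a label's score is the mean
    score of its words, and the best-scoring label is returned.
    """
    label_scores = {
        label: sum(mask_scores[w] for w in words) / len(words)
        for label, words in verbalizer.items()
    }
    return max(label_scores, key=label_scores.get)

prompt = wrap_hard_prompt("The match ended 2-1 after extra time")
# Hypothetical [MASK] scores; a real system would obtain them from a masked LM.
scores = {"sports": 3.0, "football": 2.0, "politics": 0.5, "election": 1.0}
verbalizer = {"Sports": ["sports", "football"], "Politics": ["politics", "election"]}
predicted = verbalize(scores, verbalizer)
```

A hierarchy-aware verbalizer would additionally tie label words across levels, for example by scoring a child only among the children of the predicted parent.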

4.2. Recent Proposals

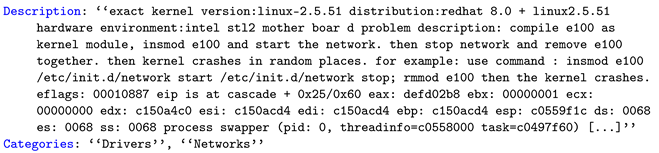

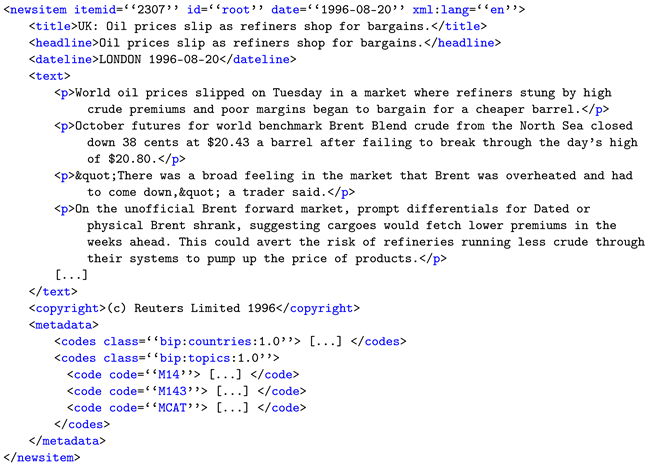

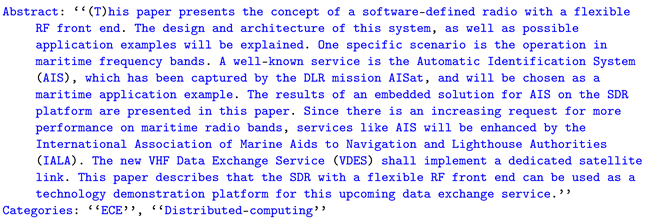

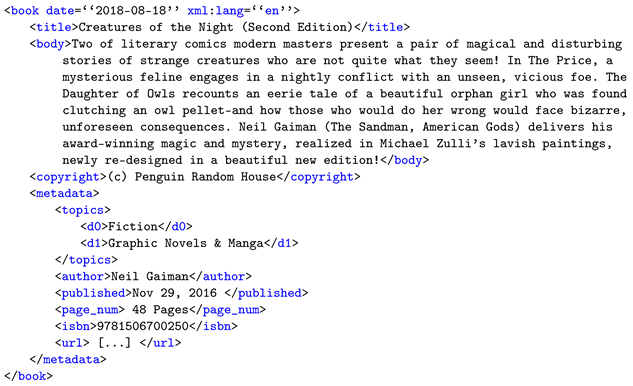

Table 1, Table 2, Table 3 and Table 4 display the results of our search for the latest applications of HTC to the NLP domain. While we do not discuss them, it should be mentioned that other domains of application of HMC have much in common with HTC, and one could also draw inspiration from such works (for example, protein function prediction in functional genomics) [139,140,141,142].

Table 1.

Hierarchical text classification methods. Names for models are reported when authors provide them. ✗ and ✓ stand for code missing and code available, respectively.

Table 2.

Hierarchical text classification methods. Names for models are reported when authors provide them. Base models marked as “n/a” represent works that propose new losses, metrics, or training regimes rather than new methods. ✗ and ✓ stand for code missing and code available, respectively.

Table 3.

Hierarchical text classification methods. Names for models are reported when authors provide them. Base models marked as “n/a” represent works that propose new losses, metrics, or training regimes rather than new methods. ✗ and ✓ stand for code missing and code available, respectively.

Table 4.

Hierarchical text classification methods. Names for models are reported when authors provide them. ✗ and ✓ stand for code missing and code available, respectively.

4.3. Analyzed Methods

As the number of works is too large to describe each of the proposals in Table 1, Table 2, Table 3 and Table 4 exhaustively, we analyze a subset of them selected on two criteria: (i) the authors provide a code implementation, and (ii) the methods were tested on the two most common public datasets (RCV1 and WOS). These are also the methods we considered when gathering implementations for the experimental part of this survey.

4.3.1. HTrans

In their work, Banerjee et al. [148] propose Hierarchical Transfer Learning (HTrans), a framework to improve the performance of a local per-node classification approach. The general intuition is that knowledge can be passed to lower-level classifiers by initializing them with the parameters of their parent classifiers. They use a bidirectional GRU-based RNN enhanced with an attention mechanism as the text encoder, followed by a fully connected network that acts as a decoder and produces the class probability. Word embeddings are initialized with pre-trained GloVe embeddings. One such model, with binary output, is trained for each node in the hierarchy tree, and child nodes share parameters with the classifiers of their ancestor nodes. This can be seen as a “hard” sharing approach, which uses fine-tuning to pass an inductive bias from parent to child nodes. Inference follows a standard top-down approach. The authors perform an ablation study by removing parameter sharing and attention, and they also compare their results with a multilabel model initialized with weights from the binary classifiers, showing solid improvements when their proposed enhancements are included.
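The following sketch illustrates the two mechanisms described above: warm-starting each node's binary classifier from its parent's trained parameters, and top-down inference. The GRU/attention encoder and the real training loop are abstracted away behind the hypothetical `train_fn` and `score_fn` callables. The toy "training" rule and the 0.5 threshold are assumptions made only to keep the example runnable, and this is not the authors' implementation.

```python
import numpy as np

class Node:
    def __init__(self, name, children=()):
        self.name = name
        self.children = list(children)
        self.params = None  # parameters of this node's binary classifier

def train_top_down(node, train_fn, parent_params=None):
    """Train every child classifier, warm-starting it from its parent's trained parameters."""
    for child in node.children:
        child.params = train_fn(child.name, init=parent_params)
        train_top_down(child, train_fn, child.params)

def predict_top_down(node, x, score_fn, threshold=0.5):
    """Top-down inference: only descend into children whose binary classifier fires."""
    labels = []
    for child in node.children:
        if score_fn(child.params, x) >= threshold:
            labels.append(child.name)
            labels.extend(predict_top_down(child, x, score_fn, threshold))
    return labels

# Toy stand-ins (hypothetical): "training" just adds a fixed per-label update
# to the initial parameters, and the classifier is a logistic unit.
UPDATES = {"A": np.array([2.0, 0.0]), "A1": np.array([0.0, 2.0]), "B": np.array([-2.0, 0.0])}

def toy_train(name, init=None):
    start = np.zeros(2) if init is None else init.copy()
    return start + UPDATES[name]

def sigmoid_score(params, x):
    return 1.0 / (1.0 + np.exp(-params @ x))

root = Node("root", [Node("A", [Node("A1")]), Node("B")])
train_top_down(root, toy_train)
labels = predict_top_down(root, np.array([1.0, 1.0]), sigmoid_score)
```

Because "A1" starts from the parameters learned for "A", it inherits its parent's decision boundary before being adapted, which is the intuition behind the hard sharing scheme.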

4.3.2. HiLAP

Mao et al. [99] tackle the issues that arise from the mismatch between training and inference in local HTC approaches. As a solution, they propose a reinforcement learning approach that models the HTC task as a Markov decision process. The task consists of learning a policy that decides which label to assign an object to as well as when to stop the assignment process (allowing for NMLNP). In other words, such a policy allows the algorithm to “move” between labels or “stop” when necessary. Though theoretically extendable to any neural encoder, the authors use TextCNN [58] with GloVe vectors and BoW features as the document encoder to produce fixed-size embeddings. They also create randomly initialized embeddings for each label, producing an “action embedding” matrix, since each label defines an action for the agent. The encoded document is concatenated with the embedding of the currently assigned label to produce the current state vector. After passing through a two-layer fully connected network with ReLU activation, the state vector is multiplied by the action embedding matrix to determine the probabilities of all possible actions. Each document starts at the root node, and the assignment stops when the “stop” action is selected as the most probable. The reward is defined as the change in overall F1 score between the previous and the current time step. The proposed Hierarchical Label Assignment Policy (HiLAP) yields excellent results, in particular in terms of the consistency of parent-child assignments with respect to the class hierarchy.
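The policy computation described above can be sketched in NumPy as follows. Several details are our own simplifications. The same label embeddings serve both the state and the action matrix, a separate "stop" embedding is appended as the last action, and an optional mask restricts the admissible actions. We also assume a per-step reward equal to the change in F1 score between consecutive steps. All names are hypothetical.

```python
import numpy as np

def action_probs(doc_vec, label_emb, stop_emb, current, W1, b1, W2, b2, allowed=None):
    """Policy over actions given the current label assignment.

    State = [document encoding ; embedding of the current label], passed through a
    two-layer fully connected network (ReLU in between) and multiplied by the action
    embedding matrix (one row per label plus a final 'stop' row).
    allowed: optional boolean mask over actions (e.g., children of `current` + stop).
    """
    state = np.concatenate([doc_vec, label_emb[current]])
    h = W2 @ np.maximum(W1 @ state + b1, 0.0) + b2
    actions = np.vstack([label_emb, stop_emb[None, :]])
    logits = actions @ h
    if allowed is not None:
        logits = np.where(allowed, logits, -np.inf)  # forbid masked actions
    logits = logits - logits.max()                   # numerical stability
    p = np.exp(logits)
    return p / p.sum()

def f1(pred, gold):
    pred, gold = set(pred), set(gold)
    tp = len(pred & gold)
    if tp == 0:
        return 0.0
    precision, recall = tp / len(pred), tp / len(gold)
    return 2 * precision * recall / (precision + recall)

def step_reward(prev_labels, curr_labels, gold):
    """Assumed per-step reward: change in F1 caused by the latest action."""
    return f1(curr_labels, gold) - f1(prev_labels, gold)

rng = np.random.default_rng(0)
doc_dim, k, hidden, n_labels = 4, 3, 5, 4
label_emb = rng.normal(size=(n_labels, k))
stop_emb = rng.normal(size=k)
W1, b1 = rng.normal(size=(hidden, doc_dim + k)), np.zeros(hidden)
W2, b2 = rng.normal(size=(k, hidden)), np.zeros(k)
allowed = np.array([False, True, True, False, True])  # children 1, 2 of root 0, plus stop
p = action_probs(rng.normal(size=doc_dim), label_emb, stop_emb, 0, W1, b1, W2, b2, allowed)
reward = step_reward(["A"], ["A", "A1"], {"A", "A1"})
```

Rewarding the change in F1 credits each individual move by how much it improves the current label set.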

4.3.3. MATCH

The authors of MATCH [165] propose to boost multilabel classification performance by learning a text representation enriched with document metadata and by adding regularization objectives that exploit the label hierarchy. The first component of their architecture is a metadata-aware pre-training scheme that jointly learns word and metadata embeddings, taking into account the proximity between documents and their related metadata and labels. A modified Transformer encoder is then used to compute document representations: to cope with large label spaces, several special tokens ([CLS]) are prepended to input sequences, followed by the metadata tokens and the document’s words. The representations of all [CLS] tokens are then concatenated and passed into a fully connected layer with a sigmoid activation function. An L2 regularization (hypernymy regularization) is applied to the classification layer (i.e., to its weight matrix), forcing the parameters of each label to be similar to those of its parents. Summing over every label l and each of its parent labels l′, the regularization term is expressed as:

$$\mathcal{J}_{\text{param}} = \sum_{l \in \mathcal{L}} \sum_{l' \in \phi(l)} \frac{1}{2} \left\lVert \mathbf{w}_l - \mathbf{w}_{l'} \right\rVert_2^2$$

where $\mathcal{L}$ is the set of labels, $\phi(l)$ is the set of parent labels of $l$, and $\mathbf{w}_l$ denotes the parameters of label $l$. The final predictions are also regularized to penalize the model when the probability of a parent label is smaller than that of its child label. The penalty is summed over all documents $d \in \mathcal{D}$, as in the equation below:

$$\mathcal{J}_{\text{output}} = \sum_{d \in \mathcal{D}} \sum_{l \in \mathcal{L}} \sum_{l' \in \phi(l)} \max\left(0,\; p_{dl} - p_{dl'}\right)$$

where $p_{dl}$ represents the probability that document $d$ belongs to child class $l$. The authors report the results of an ablation study confirming that both the metadata embedding strategy and the hierarchy-aware regularization benefit the classification task.
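Both hierarchy-aware penalties described for MATCH can be computed directly with NumPy. The first is a parameter-space term that pulls each label's weight vector toward its parents' vectors. The second is an output-space hinge that fires whenever a child label receives a higher probability than its parent. The sketch below uses hypothetical function names and a toy three-level chain of labels.

```python
import numpy as np

def param_regularizer(W, parents):
    """Parameter-space penalty: 0.5 * ||w_l - w_parent||^2 summed over (label, parent) pairs.

    W: (num_labels, dim) classification-layer weights, one row per label.
    parents: dict mapping a label index to the list of its parent indices.
    """
    return sum(0.5 * np.sum((W[l] - W[p]) ** 2) for l, ps in parents.items() for p in ps)

def output_regularizer(P, parents):
    """Output-space penalty: max(0, p_child - p_parent) summed over documents and pairs.

    P: (num_docs, num_labels) predicted probabilities.
    """
    return sum(np.maximum(0.0, P[:, l] - P[:, p]).sum() for l, ps in parents.items() for p in ps)

parents = {1: [0], 2: [1]}  # label 0 -> label 1 -> label 2
W = np.array([[0.0, 0.0], [1.0, 1.0], [3.0, 1.0]])
P = np.array([[0.9, 0.5, 0.7], [0.2, 0.6, 0.1]])
j_param = param_regularizer(W, parents)
j_output = output_regularizer(P, parents)
```

In training, such terms would be added to the classification loss with tunable weights. Their values are not reported here and would have to be chosen by the practitioner.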

4.3.4. HiAGM

Zhou et al. [97] propose an end-to-end hierarchy-aware global model (HiAGM) that leverages fine-grained hierarchy information and aggregates label-wise text features. Intuitively, they aim to complement traditional text encoders with a hierarchy-aware structure encoder (the structure being the label hierarchy). As structure encoders, the authors test a TreeLSTM and a GCN adapted to hierarchical structures. Moreover, they propose two frameworks: one based on multilabel attention (HiAGM-LA) and one based on text feature propagation (HiAGM-TP). HiAGM-LA uses the attention mechanism to enhance label representations in a bidirectional, hierarchical fashion, taking the structure encoder's node outputs as hierarchy-aware label representations. HiAGM-TP, on the other hand, is based on text feature propagation in a serial dataflow: text features are used as direct inputs to the structure encoder, propagating information throughout the hierarchy. For multilabel classification, the binary cross entropy (BCE) loss is used, together with the parameter-space (hypernymy-style) regularization term described for MATCH.
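The label-wise aggregation idea behind HiAGM-LA can be illustrated with a simplified single-head dot-product attention. Here each hierarchy-aware label vector attends over the token features produced by the text encoder. This is a sketch under our own assumptions (function name, dot-product scoring, a shared dimension `d`), and HiAGM's exact attention formulation may differ.

```python
import numpy as np

def label_attention(token_feats, label_reprs):
    """Label-wise text features via attention (simplified sketch).

    token_feats: (T, d) token-level text features from the text encoder.
    label_reprs: (L, d) hierarchy-aware label representations (assumed to come
    from a structure encoder such as a GCN over the label hierarchy).
    Returns (L, d): for each label, an attention-weighted sum of token features.
    """
    scores = label_reprs @ token_feats.T                  # (L, T) label-token affinities
    scores = scores - scores.max(axis=1, keepdims=True)   # numerical stability
    alpha = np.exp(scores)
    alpha = alpha / alpha.sum(axis=1, keepdims=True)      # softmax over tokens
    return alpha @ token_feats

tokens = np.array([[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]])
labels = np.array([[20.0, 0.0], [0.0, 20.0]])
feats = label_attention(tokens, labels)
```

Each row of `feats` is a label-specific view of the document, which a classifier can then score independently per label.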

4.3.5. RLHR

The approach of Liu et al. [167], which tackles zero-shot HTC, includes a reinforcement learning (RL) agent trained to generate deduction paths, i.e., the possible paths from the root label to a child label, in order to introduce hierarchy reasoning. The reward depends on the correctness of the predicted paths: the agent is rewarded positively only when a predicted path is a sub-path of the ground-truth set of paths. Moreover, the authors design a rollback algorithm that overcomes the inefficiencies of previous solutions and allows the model to correct inconsistencies in the predicted set of labels at inference time. The zero-shot task is formulated as a deterministic Markov decision process over label hierarchies. BERT and DistilBERT are used as base models and are pre-trained on a binary classification task with a negative sampling strategy: training examples are created by pairing each document with one of its labels (a positive example) as well as with some irrelevant labels (negative examples). Starting from this pre-trained model, a policy is then learned to further tune the model on the binary classification task.
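The two ingredients above can be sketched in plain Python: building binary document-label examples through negative sampling, and a path-level reward. We interpret "sub-path" as a prefix of a gold root-to-label path. That interpretation, the ±1 reward values, one positive pair per gold label, and all function names are assumptions made for illustration.

```python
import random

def make_binary_pairs(docs, all_labels, num_neg, seed=0):
    """Build (text, label, target) examples for binary document-label matching.

    docs: list of (text, set_of_gold_labels). Each gold label yields one positive
    pair, plus up to num_neg negative pairs with randomly sampled irrelevant labels.
    """
    rng = random.Random(seed)
    pairs = []
    for text, gold in docs:
        negatives = [label for label in all_labels if label not in gold]
        for label in sorted(gold):
            pairs.append((text, label, 1))
            for neg in rng.sample(negatives, min(num_neg, len(negatives))):
                pairs.append((text, neg, 0))
    return pairs

def path_reward(pred_path, gold_paths, positive=1.0, negative=-1.0):
    """Reward a root-to-label path only if it is a sub-path (prefix) of some gold path."""
    pred = tuple(pred_path)
    for gold in gold_paths:
        if tuple(gold[:len(pred)]) == pred:
            return positive
    return negative

docs = [("a goal in the final minute", {"Sports", "Football"})]
pairs = make_binary_pairs(docs, ["Sports", "Football", "Politics", "Elections"], num_neg=1)
gold_paths = [["Root", "Sports", "Football"]]
```

Here `pairs` contains two positive and two negative examples, and a partial path such as Root → Sports is rewarded because it lies on a gold path.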

4.3.6. HiMatch

In HiMatch [124], the HTC task is framed as a semantic matching problem, and the method jointly learns embeddings for documents and labels as well as a metric for matching them. The proposed architecture first uses a text encoder akin to the one used by HiAGM. Then, a label encoder produces label embeddings enriched with dependencies among labels; it uses the same GCN architecture as the text encoder, and label vectors are initialized with BERT’s pre-trained embeddings. The document and label representations are fed to the label semantic matching component, which projects text and labels into a common feature space using two independent two-layer feed-forward networks. A cross-entropy objective is used for training, with two regularization terms. The first forces documents and their labels to share a similar representation, measured in terms of mean squared error. The second penalizes a small semantic distance between a document and incorrect labels, using a triplet margin loss with Euclidean distance. All components are trained jointly, and the authors report improved performance over a BERT-based model fine-tuned on multilabel classification.
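The two matching regularizers can be sketched as follows. Documents and labels are projected by separate two-layer feed-forward networks. An MSE term then pulls a document toward a gold label, while a triplet margin loss with Euclidean distance pushes it away from an incorrect one. The function names, the margin of 1.0, and the use of a single positive and negative label are assumptions, and the toy example uses identity projections purely for readability.

```python
import numpy as np

def two_layer_ffn(x, W1, b1, W2, b2):
    """Projection into the shared matching space: Linear -> ReLU -> Linear."""
    return np.maximum(x @ W1 + b1, 0.0) @ W2 + b2

def matching_regularizers(doc, pos_label, neg_label, doc_proj, label_proj, margin=1.0):
    """Return (MSE between doc and gold label, triplet margin loss vs. an incorrect label)."""
    d = two_layer_ffn(doc, *doc_proj)           # document projection
    lp = two_layer_ffn(pos_label, *label_proj)  # gold label projection
    ln = two_layer_ffn(neg_label, *label_proj)  # incorrect label projection
    mse = np.mean((d - lp) ** 2)
    triplet = max(0.0, np.linalg.norm(d - lp) - np.linalg.norm(d - ln) + margin)
    return mse, triplet

identity = (np.eye(2), np.zeros(2), np.eye(2), np.zeros(2))  # toy projections
mse, triplet = matching_regularizers(
    np.array([1.0, 0.0]), np.array([1.0, 0.0]), np.array([1.0, 0.5]), identity, identity)
```

In the toy case, the incorrect label lies only 0.5 away from the document, which is inside the margin, so the triplet term is still active.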

4.3.7. HE-AGCRCNN

The use of CNs has also been proposed for HTC tasks. Aly et al. [88], for instance, adapt a CN to exploit label co-occurrence, correcting the final predictions to include all ancestors of a predicted label so that predictions are consistent. More recently, Peng et al. [170] discuss the strengths and drawbacks of several popular neural networks used for text processing, including CNNs, RNNs, GCNs, and CNs. They propose to combine them in a single architecture (AGCRCNN) so that it can better capture long- and short-range dependencies as well as both sequential and non-consecutive semantics. In their work, documents are modeled using a graph of words that retains word-ordering information: after lemmatization and stop-word removal, each word is represented as a node with its position in the document set as an attribute. A sliding window is passed over the document, and edges are created between nodes, weighted by the number of times the two words co-occur across all sliding windows. For each document, they extract a sub-graph whose nodes are the document’s words with the highest closeness centrality (a measure of a word’s importance in a document), together with their neighbor nodes, up to a fixed number. The nodes are then mapped to Word2Vec embeddings, yielding a 3D representation that is fed to the attentional capsule recurrent CNN module. This module is composed of two blocks, each containing a convolutional layer to learn higher-level semantic features and an LSTM layer with an attention mechanism to learn local sequential features specific to each sub-graph. Finally, a CN with a dynamic routing mechanism is used for multilabel classification. The authors perform an ablation study comparing several variations of their architecture, as well as previously proposed deep learning classifiers, showing better results for their proposed model on two datasets.
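The graph-of-words construction and the centrality-based sub-graph selection can be sketched in plain Python. To stay dependency-free, the sketch computes unweighted closeness centrality with breadth-first search and omits the positional node attributes. The window size, tie-breaking rule, and function names are our assumptions rather than details from the original work.

```python
from collections import defaultdict, deque

def graph_of_words(tokens, window=3):
    """Co-occurrence graph: edge weight = number of sliding windows containing both words.

    Assumes tokens are already lemmatized with stop words removed.
    """
    weights = defaultdict(int)
    for start in range(max(1, len(tokens) - window + 1)):
        win = tokens[start:start + window]
        pairs = {frozenset((a, b)) for i, a in enumerate(win) for b in win[i + 1:] if a != b}
        for pair in pairs:
            weights[pair] += 1
    return weights

def closeness(adj):
    """Unweighted closeness centrality: (reachable nodes - 1) / sum of BFS distances."""
    scores = {}
    for src in adj:
        dist = {src: 0}
        queue = deque([src])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        total = sum(dist.values())
        scores[src] = (len(dist) - 1) / total if total else 0.0
    return scores

def select_subgraph(weights, top_k, max_nodes):
    """Keep the top_k most central words, then add their neighbors up to max_nodes."""
    adj = defaultdict(set)
    for pair in weights:
        a, b = tuple(pair)
        adj[a].add(b)
        adj[b].add(a)
    scores = closeness(adj)
    selected = sorted(adj, key=lambda w: (-scores[w], w))[:top_k]
    for word in list(selected):
        for nb in sorted(adj[word]):
            if len(selected) >= max_nodes:
                return selected
            if nb not in selected:
                selected.append(nb)
    return selected

weights = graph_of_words("a b c d e".split(), window=2)
nodes = select_subgraph(weights, top_k=1, max_nodes=3)
```

On the toy path graph a–b–c–d–e, the middle word "c" is the most central, so the selected sub-graph is "c" plus its two neighbors.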

4.3.8. CLED

As another example of applying CNs to HTC, Wang et al. [87] propose Concept-based Label Embedding via Dynamic routing (CLED), in which a CN is used to extract concepts from text documents. Concepts can be shared between parent and child classes and can thus support classification based on hierarchical relations. The top-n keywords of each document are used as concepts and encoded with GloVe word embeddings. A clustering procedure initializes the concept embeddings with the cluster centers. Dynamic routing is then used to learn the concept embeddings, with agreement measured only between capsules representing parent and child classes.
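The concept initialization step can be sketched with k-means. The text above only says "a clustering procedure", so the choice of k-means, the deterministic initialization from the first k vectors, the fixed iteration count, and the function name are all our assumptions. The resulting centroids would then serve as starting concept embeddings for dynamic routing.

```python
import numpy as np

def kmeans_concept_init(keyword_vecs, k, iters=10):
    """Initialize k concept embeddings as k-means centroids of keyword embeddings.

    keyword_vecs: (N, d) embeddings of documents' top-n keywords (e.g., GloVe).
    Uses the first k vectors as initial centroids to keep the sketch deterministic.
    """
    X = np.asarray(keyword_vecs, dtype=float)
    centroids = X[:k].copy()
    for _ in range(iters):
        sq_dist = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)  # (N, k)
        assign = sq_dist.argmin(axis=1)
        for j in range(k):
            members = X[assign == j]
            if len(members):  # keep the old centroid if a cluster empties
                centroids[j] = members.mean(axis=0)
    return centroids

vecs = [[0, 0], [10, 10], [0, 1], [10, 11]]
concepts = kmeans_concept_init(vecs, k=2)
```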

4.3.9. ICD-Reranking