Abstract

This paper introduces a Python framework for developing Deep Reinforcement Learning (DRL) in the open-source Godot game engine to support sim-to-real (Sim2Real) research. The framework communicates and interfaces with the Godot game engine to perform DRL. Within Godot, users set up their environment and define its constraints, motion, interactive objects, and the actions to be performed; the framework then interfaces with the engine to execute those actions. It can be further extended to perform domain randomization and enhance overall learning by increasing the complexity of the environment. Unlike proprietary physics or game engines, Godot provides extensive developmental freedom under an open-source licence. By combining Godot’s built-in node-based environment system and flexible user interface with the proposed Python framework, developers can extend its features to develop deep learning applications. Research performed on Sim2Real using this framework has provided insight into the factors that affect the reality gap and has demonstrated the effectiveness of the framework in Sim2Real applications and research.

1. Introduction

In recent years, the field of Deep Reinforcement Learning (DRL) has undergone significant development in artificial intelligence and robotics. Hinton et al. [1] in 2016 proposed “Deep Learning” (DL), which is a branch of Machine Learning and Artificial Intelligence (AI) that can learn from the given data. DRL combines DL and reinforcement learning (RL), where a computational agent attempts to make decisions through trial and error while adjusting its learning policies. DRL uses deep neural networks to train agents that are capable of making complex decisions to adapt to dynamic environments. The agent can learn from the environment and optimize its behavior based on rewards and punishments to focus on its overall learning. However, the success of DRL methods is often hindered by a lack of quality or sufficient training data (sample inefficiency), particularly when the tasks involve real-world scenarios and applications. Developing advanced robotic systems in the real world is often challenging because the required training data can be dangerous or difficult to gather [2]. Therefore, creating virtual environments for Sim2Real transfer is important for offering a safe, scalable, and cost-effective platform for training, testing, and evaluating intelligent systems while minimizing the risks and costs associated with real-world applications.

To address these challenges, an emerging method for generating quality training datasets is to utilize virtual environments. As an increasing number of DRL agents are being trained and used for real-world applications, such as robotics, manufacturing, and warehousing, it is important to have quality training datasets or access to high-fidelity virtual environments in which to perform simulations. A lack of quality training data can cause a “reality gap”, a term for the difference between the source (simulated) and target (real) domains. According to [3,4], Sim2Real techniques are currently used to transfer learning from a simulated environment to a physical environment. Virtual environments have opened new possibilities for the development of advanced robotic systems that do not require real-world training datasets, physical platforms, or planned simulation scenarios. Advanced game engines and physics engines, such as Unreal Engine, Unity, and MuJoCo, are used in such applications. Virtual environments provide an inexpensive method for gathering the necessary data, performing advanced simulations, and creating advanced DRL models.

Currently, many Software Development Kits (SDKs), plugins, game engines, and physics engines have been developed to aid simulated training or to add capabilities to existing game engines. Most of these implementations rely on high-end physics or game engines, which poses the following challenges.

1. Complexity and overhead: Game engines are complex and designed to handle a wide range of tasks related to rendering, simulations, physical interactions, and user interactions. This complexity can introduce unnecessary overhead during DRL tasks, potentially slowing the learning process. In addition, integrating DRL algorithms or workflows with high-end game engines can be challenging.

2. Limited customization and closed source: As most game engines are designed for specific purposes, using them to perform simulations requires extending their features through APIs. However, most high-end game engines are proprietary and closed-source, which limits the user's ability to modify the core codebase, extend features via APIs, and access low-level functionality.

3. Resource intensiveness: High-end game engines can be resource-intensive, requiring powerful GPUs, CPUs, and significant computational resources to handle complex scenes. This can cause scalability challenges, particularly when access to high-end computational clusters is limited or expensive. In addition, performing DRL alongside the simulation could affect the overall learning owing to the delayed execution of actions and state observations.

4. Trade-off between realism and simplicity: Most modern game engines are designed to simulate realistic, immersive environments. However, DRL research requires simplicity and control over the simulation rather than realism. Simplifying the environment allows more control and customization options to be tailored to the specific requirements of reinforcement learning algorithms. Most existing virtual environments and physics engines require researchers to follow a specific structure to begin performing DRL, which restricts the ability to tailor the research.

This paper presents a methodology for utilizing the open-source Godot game engine to train DRL agents. Godot provides the flexibility to overcome the above-mentioned limitations, together with a user-friendly interface and a custom programming language called GDScript, which is closely modelled after Python. The developed Python framework interfaces with both the Godot game engine instance and the DRL algorithm. For demonstration, a three-degrees-of-freedom (3-DoF) Stewart platform was built in Godot to perform DRL. Additionally, the framework was utilized to perform Sim2Real transfer learning on a real-world 3-DoF Stewart platform. To overcome the reality gap and other domain-related inconsistencies, domain randomization (DR) was introduced.

The paper is organized as follows: In Section 2, related works, such as other physics engines, game engines, and techniques that were utilized to improve the overall simulated learning, are presented. This provides an overview of the current frameworks and environments used by the research community. Section 3 provides an overview of the Godot game engine, its unique capabilities, and the features that can be used for DRL. Section 4 outlines the design aspects of the Python framework, communication protocols, and the general structure of the implementations. Section 5 presents the framework used for the design and development of a 3-DoF Stewart platform on a Godot game engine to perform DRL. Finally, in Section 6, we conclude this paper and provide steps for future development.

2. Related Work

There has been significant research and development in utilizing virtual environments to generate training data for advanced DRL models. DRL models require large amounts of high-quality training data. Because real-world data are often dangerous to collect or simply inaccessible, a virtual environment provides a practical alternative. However, there are many challenges in simulating the real world, such as simulation fidelity, real-world physical conditions, the dynamic nature of real environments, hardware limitations, sensor and physics simulations, computational complexity, and the domain gap (reality gap). Many researchers have used existing game engines to perform DRL, whereas others have developed state-of-the-art physics engines. Additionally, they have utilized techniques such as Sim2Real, DR, and domain adaptation (DA) to augment simulated environments. These techniques increased the overall learning and fidelity of the trained DRL model.

Many DRL frameworks have been developed to achieve state-of-the-art RL. These include SampleFactory [5], OpenAI Gym [6], Google’s Dopamine [7], Keras RL [8], and RLlib [9]. These frameworks utilize their built-in environments and API structures for learning. In addition, a few implementations utilize the Godot game engine to perform DRL. One notable implementation is “Godot Reinforcement Learning Agents” [10], which was developed as a Godot plugin and a Python package called godot-rl. The plugin allows users to create custom reinforcement learning environments. Currently, this library provides wrappers for multiple RL frameworks such as StableBaselines3 [11], CleanRL [12], SampleFactory, and Ray RLlib, which allows these frameworks to interface with the Godot game engine through the godot-rl Python library. The library also offers features for creating virtual sensors that can be used within the training environment. It utilizes the PyTorch open-source machine-learning framework. The implementation employs a TCP client–server mechanism for communication with multiple virtual instances, both locally and in distributed settings.

Similarly, another library, GodotAI Gym [13], enables the conversion of existing Godot virtual environments to compatible OpenAI Gym environments to train RL models using PyTorch. This library communicates with the Godot game engine through a shared memory to perform training. Since the framework is designed to be similar to the OpenAI Gym architecture, similar environments can be easily developed within the Godot engine. CST-Godot [14] is a framework designed to facilitate the integration of cognitive architectures within the Godot game engine. This framework provides a seamless interface between game engines and cognitive agents. This implementation provides a general framework that can be used to design Web-based RL-agents. Compared with CST-Godot, the proposed framework is more tailored towards Sim2Real learning applications. The proposed framework incorporates Sim2Real techniques, such as DR, to enhance the overall learning.

The GodotRL agent library was in its early development stage and was developed concurrently with the initial research phase of the current study. Similar to GodotRL, our research employs a TCP client–server connection to facilitate communication with each virtual instance for distributed learning. This setup enables the execution of additional training steps, such as DR, to enhance the overall learning process required for Sim2Real applications. Unlike GodotRL, the current study was designed specifically for DRL in Sim2Real applications and incorporates techniques such as DR to address the reality gap. Additionally, this research uses the TensorFlow and Keras machine learning libraries to develop and train the DRL models.

To develop high-fidelity virtual environments and bridge the reality gap to overcome limitations during DRL, many studies have proposed the use of game engines. Since most modern AAA game engines have been developed to simulate the real world, they consist of advanced physics engines and techniques to accurately reproduce real-world lighting and perform high-fidelity simulations. Game engines such as Unreal Engine [15,16] and Unity [17] are widely used in DRL development. Additionally, GPU-based simulators such as the NVIDIA Flex [18], MuJoCo [19,20], and RAWSim-O [21] have been developed specifically for DRL applications. These simulators are specifically designed to provide extensive support for accurate real-world physical simulations. The key feature of these simulators is their support for rigid/deformable bodies, phase transitions, particles, fluid, cloth, rope, adhesion, and gas simulations. Additionally, RAWSim-O is a simulation framework specifically designed to simulate a robotic fulfilment center for a large warehouse. This allows for efficient simulation and training, order pickup, order delivery, and fulfilment.

Domain randomization (DR) is a technique used in DRL applications to augment learning and reduce discrepancies between the source and target domains. It is commonly used to bridge the reality gap. When a DRL model is trained in a virtual environment, the training environment cannot capture all the nuances of the real world, which creates a substantial difference between the two environments. Studies such as [22,23] demonstrate the use of DR to improve training quality by introducing variability into the environment. With DR, environmental variables and properties such as textures, lighting conditions, and physical properties can be randomized to expose the DRL agent to different environmental conditions and scenarios. A technique created by OpenAI, called Automated Domain Randomization (ADR) [24], was used to augment the training of a dexterous robotic hand to solve a Rubik’s cube, with training carried out in a virtual environment. This allowed the researchers to expose the model to increasingly challenging environmental conditions. That work demonstrated that a DRL agent trained in a virtual environment with ADR exhibits signs of meta-learning. Additionally, it showed that the agent can learn advanced control policies and vision-based state estimations. Sergey et al. [25] demonstrated a photo-realistic DR technique to further augment the learning fidelity.

These advancements have resulted in the creation of more realistic virtual environments that can simulate complex real-world scenarios with a high accuracy. The use of virtual environments has enabled the development of advanced DRL models that can be used in various applications such as autonomous vehicles, robotics, and gaming. The potential benefits of utilizing virtual environments to generate training data are immense and can lead to significant advancements in machine learning. In this paper, Godot, an open-source game engine, was used to perform advanced DRL for Sim2Real applications.

2.1. Deep Reinforcement Learning

In traditional RL, an agent interacts with an environment by making sequential decisions at discrete time steps [26]. DRL combines reinforcement learning and deep learning to train an agent through trial and error, which enables the agent to learn complex policies for the environment without requiring explicit guidance. In this implementation, DRL was used to create an agent that learns by itself through trial and error. The main goal of an agent in a virtual environment is to maximize the reward by executing the correct sequence of actions [27]. OpenAI et al. [28], in their research on training OpenAI Five to play Dota 2, demonstrated the successful application of DRL to learning control policies from high-dimensional input spaces. Learning involves balancing exploration, where the agent tries new actions to discover the environment, against exploitation, where it chooses actions that are known to yield a higher reward. A trade-off exists between the two. Rewards are returned based on the actions performed in the environment and indicate how well the agent performed in selecting an action. Over time, DRL agents learn which actions to perform to optimize the reward.
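The exploration/exploitation trade-off described above is often handled with an epsilon-greedy rule. The following is a minimal sketch of that general technique, not the selection rule used in this work; the function names and the linear decay schedule are illustrative assumptions.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick an action index: explore with probability epsilon, else exploit."""
    if rng.random() < epsilon:
        # Explore: choose any action uniformly at random.
        return rng.randrange(len(q_values))
    # Exploit: choose the action with the highest estimated value.
    return max(range(len(q_values)), key=lambda a: q_values[a])


def decayed_epsilon(step, start=1.0, end=0.05, decay_steps=10_000):
    """Linearly anneal epsilon from start to end over decay_steps steps."""
    fraction = min(step / decay_steps, 1.0)
    return start + fraction * (end - start)
```

Early in training a large epsilon favours exploration; as epsilon decays, the agent increasingly exploits the actions it has learned to value.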

2.2. Domain Randomization

DR is a widely used technique in the field of DRL, particularly in the context of Sim2Real, where it is commonly used to mitigate the reality gap. The core objective of DR is to subject DRL agents to a diverse set of environments and scenarios. Through a controlled range of simulated variability, it is possible to optimize the policies of the DRL agent to enable its adaptation to real-world conditions. In this case, the environment is created in a game engine, which allows the randomization of attributes such as simulated fidelity, physics dynamics, lighting conditions, specular highlights, textures, and object positions and orientations [22]. These augmentations in the virtual environment create complexity and diversity, allowing the agent to adjust its behavior progressively over time [29].
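As a rough illustration of sampling within a controlled range, the sketch below draws one set of environment parameters per episode. The parameter names and ranges are hypothetical placeholders and are not the values used in this work.

```python
import random

# Hypothetical parameter ranges (assumptions for illustration only).
DR_RANGES = {
    "light_intensity": (0.5, 2.0),
    "camera_offset_x": (-0.1, 0.1),
    "marble_mass": (0.01, 0.05),
}


def sample_randomization(ranges, rng):
    """Draw one value per parameter, uniformly within its (low, high) range."""
    return {name: rng.uniform(low, high) for name, (low, high) in ranges.items()}


rng = random.Random(0)  # seeded so that a training run can be reproduced
params = sample_randomization(DR_RANGES, rng)
```

The resulting dictionary could then be sent to the virtual environment at the start of an episode so that each episode is trained under slightly different conditions.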

3. Godot Game Engine

3.1. Overview of the Game Engine

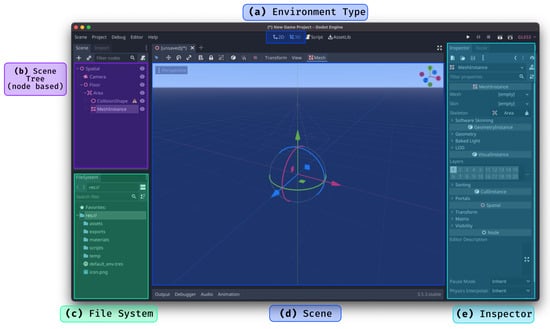

Godot is an open-source game engine that provides cross-platform game development in both 2D and 3D. It has extensive scripting capabilities in multiple languages, performance optimization, network features, and a node-based scene system for creating levels and environments. The Godot game engine provides extensive optimization and support for deploying games on multiple platforms, including the web. Figure 1 outlines Godot’s game engine user interface. Godot’s UI provides direct access to scene builders, file systems, and node inspectors for building high-fidelity gaming environments with ease.

Figure 1.

Godot game engine user-interface.

3.2. Features and Capabilities

The Godot game engine provides many features and capabilities that are ideal for advanced game development and tools for creating both 2D and 3D games. In addition, it provides features suitable for building more complex environments for machine learning.

1. Multi-platform support: Godot supports multiple platforms including Windows, macOS, Linux, Android, and HTML5 (web). This allows developers to create training environments for their own operating systems.

2. Open source: Godot is fully open source and is released under the MIT license. This enables developers and researchers to create applications without legal constraints. Godot can be customized because the source code is publicly available.

3. Scripting: Godot supports multiple programming languages. It has a built-in scripting language called GDScript, which is similar to Python. Additionally, it supports other languages, such as C#, C++, and VisualScript. This provides a wide range of programming languages for development.

4. Physics engine: Godot includes both 2D and 3D physics engines. By default, Godot uses the high-fidelity Bullet physics engine. In addition, it contains a lower-fidelity physics engine called GodotPhysics.

5. Networking: The Godot engine provides extensive support for both client–server and peer-to-peer networking for building multiplayer games.

6. Node-based scene system: The Godot engine allows environments or scenes to be constructed using a node-based system that creates a hierarchy between nodes.

The above features provide an excellent starting point for creating a virtual environment for DRL learning. The ability to select different physics engines, networking features, and multi-platform support provides accessibility for controlling the simulation.

With the Godot game engine, some of the limitations posed by high-end game engines, such as complexity, overhead (due to high resource usage), and limited customization due to closed-source code, can be overcome. First, Godot’s open-source nature allows great flexibility and customization, which addresses the limitations imposed by closed-source code in other game engines. Developers have full access to the engine’s source code and can modify and tailor it to suit their requirements. Additionally, Godot is designed to be lightweight and efficient, minimizing the overhead and resource usage compared to other high-end engines. Depending on the use case, Godot supports both 2D and 3D simulations while allowing the user to select CPU- or GPU-based physics simulations to further fine-tune the resource usage. This makes it particularly suitable for developers working on projects with limited hardware and resources. Overall, Godot’s combination of openness, efficiency, and user-friendly design makes it an excellent candidate for reinforcement learning applications.

4. Methodology

The following section outlines how the Godot game engine was modified and utilized to perform DRL. The built-in scripting features of the Godot game engine were used extensively for interfacing with simulated environments. A custom GDScript is used to create a WebSocket connection over TCP to communicate with the game instance. Additionally, a Python framework was developed to interface with the game engine and train the DRL model. The rest of this section describes the proposed framework, the setup of the environment, and the experimental setup of the 3-DoF Stewart platform used for Sim2Real DRL.

4.1. Structure of the Framework

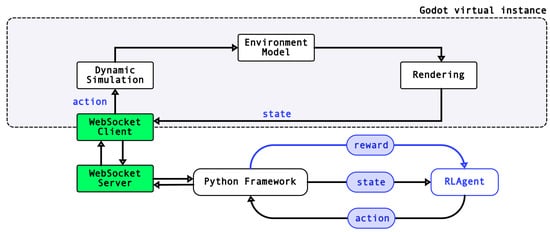

Figure 2 shows the overall structure of the designed DRL framework using the Godot game engine. The Python framework defines the DRL model and communicates with the virtual environment exported from the game engine. For training, the DRL agent must interact with the virtual environment to make state observations, perform actions, evaluate the agent’s performance, and assign rewards. The framework structure is defined as follows:

Figure 2.

3-DoF Stewart platform on Godot game engine.

Environment: The scenario or virtual environment in which the DRL model is trained. It consists of interactive elements, objects, collision meshes, and real-world scenarios that have been virtually developed. The environment depends on the research task and is developed using Godot’s level design features. Interactions and the manner in which objects behave in the virtual system can be defined by applying kinematic, static, or rigid body properties.

RLAgent: The RL agent performs actions in the environment, makes state observations regarding environmental conditions, and updates its policies based on the reward received. Observations are made through the WebSocket connection established between the Python framework and the virtual instance. The agent is defined by the goal it must achieve; for example, in the Stewart platform, the goal is to balance the marble at the center of the moving platform by precisely controlling the three axes. To achieve this, the agent should be able to observe the current position and direction of motion of the marble and predict where the marble should be.

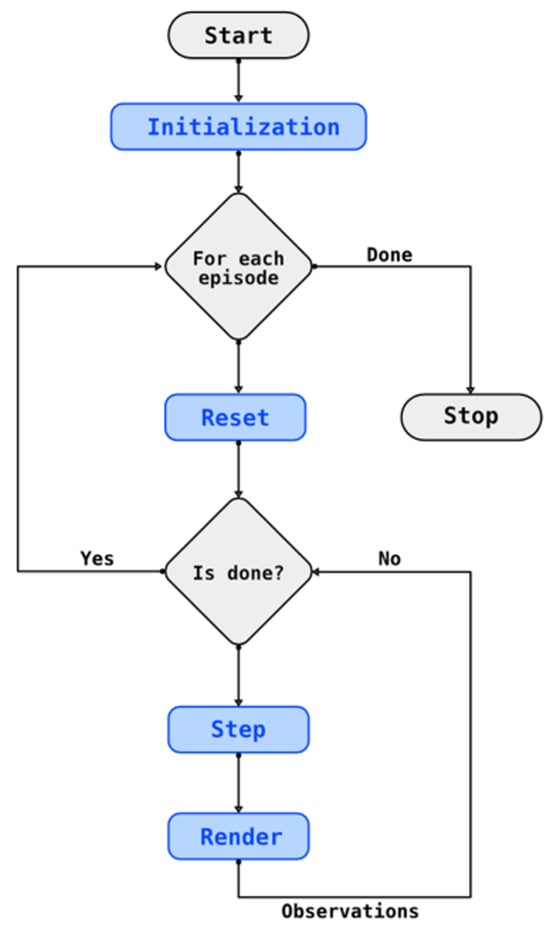

As shown in Figure 3, the environment was first initialized. This initializes the communication ports between the framework and game instance. The Godot virtual environment was exported as a standalone application. A custom GDScript (tcpserver.gd) was created to accept arguments from the Python framework during the initial launch to establish the environment and WebSocket for communication. The Python framework sends requests to virtual instances to step through the simulation, perform domain randomization, and reset the environment when RLAgent fails to reach the goal. This process is repeated until the RLAgent reaches a specific reward level or goal.

Figure 3.

Flow diagram developed to perform DRL [29].
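The request cycle described above (step, randomize, reset) could be carried by simple JSON messages. The sketch below is illustrative only: the wire format is not specified here, so the command names, field names, and newline framing are all assumptions.

```python
import json


def encode_command(command, **payload):
    """Serialize one request as a single newline-terminated JSON line."""
    message = {"command": command, "payload": payload}
    return (json.dumps(message) + "\n").encode("utf-8")


def decode_reply(raw):
    """Parse one newline-terminated JSON reply into a dictionary."""
    return json.loads(raw.decode("utf-8").strip())


# Hypothetical requests the training loop might send to a virtual instance.
step_request = encode_command("step", action=2)
randomize_request = encode_command("randomize", light_intensity=1.4)
reset_request = encode_command("reset")
```

A reply such as `{"observation": [...], "reward": 0.5, "done": false}` would be decoded with `decode_reply` before being passed to the agent.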

4.1.1. Establishing the WebSocket Connection

For DRL to take place, the Python training script needs to communicate with the virtual instance. When the training script is started, it initiates a handshake and spawns multiple instances of the application, each with a unique TCP port for communication. A custom GDScript (tcpserver.gd) was created to accept arguments passed through the Command Line Interface (CLI) at launch. These arguments define the WebSocket port used for communication, the environmental conditions, and the type of DR to be performed. The WebSocket port is the TCP port on which the new virtual instance communicates. Each virtual instance is opened in a new subprocess so that the framework retains control over it and can handle errors raised by the child process. This allows DRL to be performed across multiple instances of the virtual environment in a distributed manner, using the available resources.
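A minimal sketch of how such instances might be launched from Python is given below. The executable path and the `--port` and `--randomization` flag names are hypothetical; the actual arguments accepted by tcpserver.gd are not listed here.

```python
import subprocess


def assign_ports(base_port, count):
    """Give each virtual instance its own TCP port, counting up from base_port."""
    return [base_port + index for index in range(count)]


def build_launch_command(executable, port, randomization="none"):
    """Build the argument list for one instance (flag names are assumptions)."""
    return [executable, f"--port={port}", f"--randomization={randomization}"]


def launch_instances(executable, base_port, count, randomization="none"):
    """Start each instance in its own subprocess, keyed by its port."""
    processes = {}
    for port in assign_ports(base_port, count):
        command = build_launch_command(executable, port, randomization)
        processes[port] = subprocess.Popen(command)
    return processes
```

Keeping the `Popen` handles lets the parent script poll each child for an unexpected exit and terminate or restart it if needed.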

4.1.2. Interfacing with the Virtual Environment

Godot scripts (GDScripts) can be used to define the behavior and functionality of an object in a scene or level. In addition, they extend the features of the virtual environment by allowing external access. As mentioned, Godot allows the use of different scripting languages such as GDScript, C#, and C++. GDScripts can be attached to a node, connected to signals, and auto-loaded with the environment. When a script is attached to a node, it can control the specific attributes, behaviors, and aspects of that node.

Godot signals provide a mechanism to communicate with other objects in a given scene. Some events are object-specific, whereas others are based on external interactions from user inputs, game logic, physics, collisions between objects, or custom actions. Specific scripts can be attached for these events. State observations can be performed when an event is fired.

Additionally, events can be attached to a global node, which allows access to all the children in the node tree. Godot allows the properties and attributes of the selected element or environment object to be changed based on the node type. Using the payload received from the WebSocket connection, the properties of these nodes are modified to perform randomization. In addition, a script attached to the camera node can capture the current view of the platform to make observations. Similarly, actions performed by the RLAgent are applied to the environment via the event broadcast feature to trigger a predefined action logic.

4.1.3. Scalability and Fault Tolerance

Scalability and fault tolerance are critical aspects in the design of a robust and efficient framework for DRL. Since Godot supports both CPU- and GPU-based simulations (with slight differences in robustness and performance), instances can be deployed on any number of machines. Because the framework uses WebSockets to communicate, these instances can be deployed in a distributed manner, with the framework assigning a unique TCP port to each instance. To track errors or failures during the DRL process, continuous logging of progress was implemented. The logs maintain a consistent record of the current progress and of any errors thrown by the virtual instance or by the Python framework. The Python script responsible for initializing the WebSocket connection with the Godot game engine includes commands to step through the simulation. Godot provides a method to pause the simulation, so the simulation advances one step at a time and waits until the next set of commands arrives via the initialized connection. If the connection fails during training, the framework simply retries the connection, and once the connection is re-established, the simulation continues where it left off.
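The retry behaviour described above can be sketched as a small helper. This is an illustrative outline rather than the framework's code: the function name, the attempt limit, and the fixed delay are assumptions, and a plain TCP connection stands in for the WebSocket handshake.

```python
import socket
import time


def connect_with_retry(host, port, attempts=5, delay=1.0,
                       connect=socket.create_connection):
    """Try to connect up to `attempts` times, waiting `delay` seconds between tries."""
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            return connect((host, port))
        except OSError as error:
            # The instance may still be starting up or may have dropped the link.
            last_error = error
            if attempt < attempts:
                time.sleep(delay)
    raise ConnectionError(
        f"could not reach {host}:{port} after {attempts} attempts"
    ) from last_error
```

Because the simulation is paused between commands, a reconnect of this kind does not lose simulation state; the next step request simply resumes the episode.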

In addition, to prevent a loss of progress during training, the framework saves periodic checkpoints at a set interval. This allows the training to continue where it left off if a complete failure occurs. Whenever the model achieves a new best performance on a validation set or on another performance metric, the framework saves it as the best-performing model. This ensures that the best version observed during training is retained. Saving the model periodically also captures the rapid improvements that occur over time during the exploration phase. Each new training session saves its models with a timestamp to avoid overwriting previous models. TensorBoard was used to observe the progress of the training during and after the exploration process. During long training runs, this provides insight into the overall progress and allows adjustments to hyperparameters and environments as needed.
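The checkpointing policy above (periodic saves, a separate best model, and a timestamped session folder) can be outlined as follows. The class name, directory layout, and file names are hypothetical; the sketch only decides where to save and leaves the actual model serialization to the training code.

```python
from datetime import datetime
from pathlib import Path


class CheckpointTracker:
    """Decide which checkpoint paths to write after each episode."""

    def __init__(self, directory, interval, session_stamp=None):
        # A per-session timestamp keeps new runs from overwriting old models.
        stamp = session_stamp or datetime.now().strftime("%Y%m%d-%H%M%S")
        self.directory = Path(directory) / stamp
        self.interval = interval
        self.best_score = float("-inf")

    def paths_to_save(self, episode, score):
        """Return the paths the model should be saved to for this episode."""
        paths = []
        if episode % self.interval == 0:
            paths.append(self.directory / f"checkpoint-{episode:06d}")
        if score > self.best_score:
            self.best_score = score
            paths.append(self.directory / "best")
        return paths
```

In a training loop, each returned path would be passed to the model's save routine, so that both the periodic checkpoint and the best model are written when both conditions hold.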

4.1.4. Challenges and Difficulties

During the development of the Python framework, a significant challenge arose in establishing the communication between the DRL model and virtual instance. Initially, the virtual environment was exported to a browser-based platform, where actions against the virtual environment were performed using Selenium, which is a Python package for browser automation. The state observations were obtained by capturing a browser screen for basic training. However, browser-based solutions exhibit limitations in terms of fidelity, performance, graphics quality, and platform compatibility, which often result in input latency. Some of these limitations stem from browser-imposed constraints, such as restrictions on the number of concurrent physical objects or limitations on hardware acceleration.

To address these challenges, WebSockets were employed for communication. A dedicated GDScript was developed to facilitate WebSocket client-server support. For distributed training, multiple virtual instances were utilized, necessitating that each instance be assigned a unique TCP port for communication. Leveraging Godot’s ability to pass arguments via the terminal during the launch, a unique TCP port was sent to each instance to establish communication.

5. Experimentation

5.1. Deep Reinforcement Learning on a 3-DoF Stewart Platform

The Python framework and Godot game engine were used to perform Sim2Real on a Stewart platform to overcome the reality gap. In [29], a Godot environment was used to train the DRL algorithm and perform DR to increase the fidelity of the model. The trained DRL model was then used to manipulate a real-world 3-DoF Stewart platform.

First, a Stewart platform was designed using 3D CAD software to ensure that the virtual and physical platforms were identical. Each designed part was then exported either for 3D printing to create the physical environment or for import into the Godot game engine to build the virtual environment.

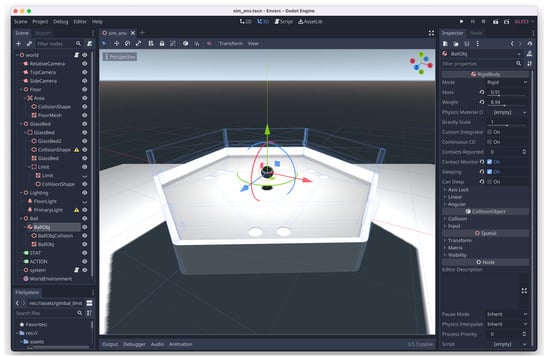

To create the virtual environment, components designed in Autodesk Fusion 360 were imported into the Godot game engine in OBJ format. The imported objects (MeshInstance nodes) can then be assigned physical attributes. Figure 4 shows the developed 3-DoF Stewart platform in the Godot game engine. Godot’s collision shapes and body type attributes were used to define how each part interacts with the environment, with inputs from the DRL agent, and with external perturbations during the simulation. The body type allows parts of the Stewart platform to be defined as rigid, static, or kinematic bodies. Without a defined body type, the marble will not interact with the platform. Setting the servo arms to the kinematic body type allows linear motion to be applied to the base platform, resulting in pitch and roll motion. The servo arms were directly controlled by the inputs from the DRL agent. Additionally, using Godot’s environment properties, the object mass, weight, and gravity scale can be manipulated in the environmental physics.

Figure 4.

3-DoF Stewart platform on Godot game engine.

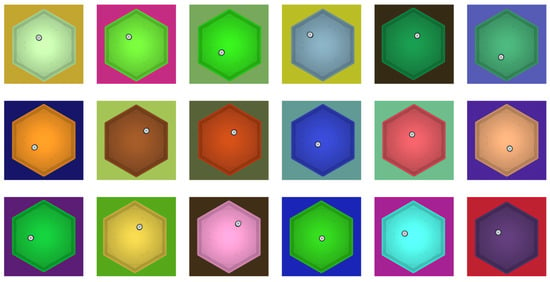

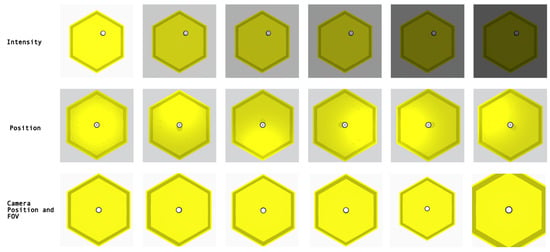

Virtual cameras and event-based triggers were used to make state observations. Additionally, the position and relative velocity of the marble were determined by accessing its position using custom GDScripts. To perform DR, custom GDScripts were attached to the environment nodes to manipulate different aspects of those nodes. The goal of DR is to increase the variability of the virtual environment and thereby expose the agent to a diverse set of environmental conditions. To this end, different randomizations were introduced. Table 1 lists these randomizations and their value ranges. Together, the randomizations were designed to cover all aspects of the virtual environment, so that the DRL agent learns only the essential features of the environment. Figure 5 and Figure 6 show previews of these randomizations in the virtual environment captured through the virtual camera.

Table 1.

Defined environmental properties for DR.

Figure 5.

Domain randomization applied through changing the background colour to increase the variation [29].

Figure 6.

DR applied to change the lighting position, intensity and camera position [29].
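
The per-episode randomization described in this section can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the framework's actual code: the property names and value ranges in `DR_RANGES` are hypothetical placeholders (the real ones are those listed in Table 1), and the step that passes the sampled values to the scripts attached to the Godot nodes is omitted.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Range:
    """Closed interval [low, high] from which a value is drawn uniformly."""
    low: float
    high: float

    def sample(self, rng: random.Random) -> float:
        return rng.uniform(self.low, self.high)


# Hypothetical properties and ranges, for illustration only.
# The actual randomized properties and ranges are those given in Table 1.
DR_RANGES = {
    "light_intensity": Range(0.5, 2.0),
    "light_position_x": Range(-1.0, 1.0),
    "camera_offset_y": Range(-0.05, 0.05),
    "background_hue": Range(0.0, 1.0),
}


def sample_environment(rng: random.Random) -> dict:
    """Draw one randomized configuration, e.g. at the start of an episode."""
    return {name: r.sample(rng) for name, r in DR_RANGES.items()}


if __name__ == "__main__":
    rng = random.Random(0)  # seeded so that a run can be reproduced
    for episode in range(3):
        print(episode, sample_environment(rng))
```

Sampling every property independently at the start of each episode is one simple choice; it keeps each training episode visually and physically different while the task itself stays the same.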

5.1.1. Defining the Action Space

For reinforcement learning (RL), it is important to define all possible actions that an agent can take in the given environment, because the agent must make a sequence of decisions to maximize its reward. In the Stewart platform, the goal of the agent is to guide the marble towards the center of the platform, which maximizes the reward. Depending on the DRL algorithm, the agent balances exploration and exploitation to determine the actions that yield the highest reward, and this balance is important for effective learning. There are two types of action space: discrete and continuous. In a discrete action space, the agent selects actions from a finite set, whereas in a continuous action space, the agent’s action can be a real-valued number within a certain range. In this environment, the actions were designed to pitch and roll the base of the Stewart platform to guide the marble. To move the marble, the platform must tilt forward, backward, left, and right, with the base center as the pivot point. The action space is therefore discrete, because the platform is moved by selecting one of these specific actions. The action space is defined in the Python framework, and each action in the virtual Godot environment is bound to a specific keypress. In this environment, the actions are bound to the left, right, up, and down keys on the keyboard. Additionally, the “V” and “R” keys are used to reset the position of the platform and the environment.
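
The discrete action space and its key bindings can be sketched as below. This is an illustrative sketch rather than the framework's own definition: the `Action` enum, the key-name strings, and the assignment of a particular key to a particular tilt direction are assumptions, and the epsilon-greedy rule in `select_action` is only one common way of balancing exploration and exploitation, not necessarily the rule used by the algorithms evaluated here.

```python
import random
from enum import IntEnum


class Action(IntEnum):
    """Discrete actions: tilt the platform base about its center."""
    FORWARD = 0
    BACKWARD = 1
    LEFT = 2
    RIGHT = 3


# The text binds the four tilt actions to four directional keys and uses
# "V" and "R" for resets. The key-name strings and the pairing of each key
# with a tilt direction are assumptions made for this sketch.
ACTION_KEYS = {
    Action.FORWARD: "up",
    Action.BACKWARD: "down",
    Action.LEFT: "left",
    Action.RIGHT: "right",
}
RESET_KEYS = ("v", "r")


def select_action(q_values, epsilon, rng):
    """Epsilon-greedy choice over the discrete action set (assumed rule)."""
    if rng.random() < epsilon:
        # Explore: pick any action uniformly at random.
        return Action(rng.randrange(len(Action)))
    # Exploit: pick the action with the highest estimated value.
    best = max(range(len(q_values)), key=q_values.__getitem__)
    return Action(best)


if __name__ == "__main__":
    rng = random.Random(0)
    action = select_action([0.1, 0.7, 0.2, 0.0], epsilon=0.1, rng=rng)
    print(action.name, "->", ACTION_KEYS[action])
```

Keeping the mapping from actions to keys in a single dictionary means the agent code only deals with action indices, while the binding to the Godot input events can change without touching the learning logic.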

5.1.2. Results

This framework was used to facilitate earlier research conducted by the authors: to evaluate techniques for Sim2Real RL [30] and to show the effectiveness of DR and induced noise in bridging the reality gap [2]. Two DRL algorithms were used for the evaluation: Actor-Critic and Deep Q-Learning. That work evaluated the performance of the two DRL algorithms in both virtual and physical environments under different training conditions. Multiple training sessions were conducted with different environmental parameters while optimizing the hyperparameters for each algorithm. The following is a summary of the observations made in the previous research conducted using this framework. Table 2 shows the optimized hyperparameters used for training and making observations.

Table 2.

Hyperparameters for RL algorithms [29].

For the evaluation, each algorithm was measured over 20 runs in both the virtual and physical environments. Each iteration was performed under different environmental conditions to determine the change in success rate. Table 3 shows the environmental conditions tested against each DRL algorithm. The tests were performed with no randomization, with randomized marble position, with randomized camera position, with and without DR, and with induced noise. In each episode, the framework was used to increase the complexity of the environment and thereby induce variability. Observations were made through the virtual camera in the virtual environment and the physical camera in the physical environment.

Table 3.

The performance results obtained using the framework, demonstrating the application of DRL using Actor-Critic (A) and Q-Learning (B) within different environment conditions. Adapted from [29].

The results in Table 3 demonstrate the effectiveness of using DR and induced noise during the training process to enhance performance in the physical environment. The agent reached the maximum observed reward by balancing the marble on the Stewart platform. The success rate observed in training was determined by the number of steps the agent took to position the marble at the center of the platform. The positional and observational data received by the framework are used to determine the position of the marble relative to the Stewart platform. For all experiments in the virtual and physical environments, the marble was placed randomly. Based on the observations, Q-Learning without randomizations performed poorly due to the inconsistencies between the virtual and physical environments, demonstrating the importance of environmental fidelity during training. Q-Learning used the positional and camera data to make decisions. On the other hand, the Actor-Critic model, which leverages only pre-processed image data to track the marble position and mitigate external environmental noise, excelled in both virtual and physical environments. The maximum rewards observed with Actor-Critic and Q-Learning were 9942 and 7944, respectively.

Additionally, the results obtained from the framework were compared with a similar implementation of a robot arm developed by Vacaro et al. [31], who trained IMPALA RL using the Unity game engine. IMPALA RL with target, camera, and color randomization achieved a success rate of 91.33% in the virtual environment and 87.67% in the physical environment. Meanwhile, the Actor-Critic model trained in the Godot environment achieved a success rate of 99.49% in the virtual environment and 81.88% in the physical environment. Q-Learning with DR and induced noise achieved 89.55% in the virtual environment and 78.56% in the physical environment. With DR and induced noise added during the DRL process, the Actor-Critic model trained on the framework therefore outperformed IMPALA RL in the virtual environment, while IMPALA RL retained the higher success rate in the physical environment.

The reduced performance in the physical environment is attributed to factors such as gear backlash, servo noise, friction in the 3D-printed arms and joints, and other environmental variables that had not been accounted for when modelling the environment for training. Nevertheless, the integration of the framework with the Godot game engine provides valuable insights into techniques for overcoming the reality gap.

6. Conclusions and Future Work

The development of DRL has led to significant advancements in the field of advanced intelligent robotic systems. One of the major challenges in applying DRL to real-world scenarios is the lack of high-quality training data. One proposed approach is to use a common game or physics engine so that the agent can learn in a safe, controlled environment. However, existing game/physics engines require extensive hardware and resources, and are often complex to use. In addition, these platforms are proprietary and require licensing for their use and development.

To overcome these issues, this paper introduces a custom framework for using the open-source Godot game engine to perform DRL. The Godot game engine provides a versatile and accessible platform that can be used to train DRL models. This framework was used in a recent study [29] to bridge the reality gap between virtual and physical environments using a 3-DoF Stewart platform. That research demonstrates the versatility and efficacy of using the Godot game engine in DRL applications.

Future work will focus on improving the overall fidelity of the simulations, utilizing external sensor data for real-world applications, optimizing scalability, and creating a standalone library for use within the Godot game engine. The current version of this framework is compatible with Godot version 3.0, and future work will also attempt to migrate the codebase to Godot 4.0.

Author Contributions

Writing—review, editing and original draft preparation, M.R.; supervision, review and editing, Q.H.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Computer Code and Software for this research are available at https://github.com/ma-he-sh/GodotSim2RealResearch.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Hinton, G.E.; Osindero, S.; Teh, Y.W. A Fast Learning Algorithm for Deep Belief Nets. Neural Comput. 2006, 18, 1527–1554.

2. Ranaweera, M.; Mahmoud, Q. Bridging Reality Gap Between Virtual and Physical Robot through Domain Randomization and Induced Noise. In Proceedings of the Canadian Conference on Artificial Intelligence, Virtual Online, 27 May 2022; Available online: https://caiac.pubpub.org/pub/kzx3gl4e (accessed on 12 January 2024).

3. Wang, J.; Chen, Y.; Feng, W.; Yu, H.; Huang, M.; Yang, Q. Transfer Learning with Dynamic Distribution Adaptation. ACM Trans. Intell. Syst. Technol. 2020, 11, 1–25.

4. Ranaweera, M.; Mahmoud, Q.H. Virtual to Real-World Transfer Learning: A Systematic Review. Electronics 2021, 10, 1491.

5. Petrenko, A.; Huang, Z.; Kumar, T.; Sukhatme, G.; Koltun, V. Sample Factory: Egocentric 3D Control from Pixels at 100000 FPS with Asynchronous Reinforcement Learning. arXiv 2020, arXiv:2006.11751.

6. Brockman, G.; Cheung, V.; Pettersson, L.; Schneider, J.; Schulman, J.; Tang, J.; Zaremba, W. OpenAI Gym. arXiv 2016, arXiv:1606.01540.

7. Castro, P.S.; Moitra, S.; Gelada, C.; Kumar, S.; Bellemare, M.G. Dopamine: A Research Framework for Deep Reinforcement Learning. arXiv 2018, arXiv:1812.06110.

8. Plappert, M. Keras-rl. 2016. Available online: https://github.com/keras-rl/keras-rl (accessed on 12 January 2024).

9. Liang, E.; Liaw, R.; Moritz, P.; Nishihara, R.; Fox, R.; Goldberg, K.; Gonzalez, J.E.; Jordan, M.I.; Stoica, I. RLlib: Abstractions for Distributed Reinforcement Learning. arXiv 2018, arXiv:1712.09381.

10. Beeching, E.; Debangoye, J.; Simonin, O.; Wolf, C. Godot Reinforcement Learning Agents. arXiv 2021, arXiv:2112.03636.

11. Raffin, A.; Hill, A.; Gleave, A.; Kanervisto, A.; Ernestus, M.; Dormann, N. Stable-Baselines3: Reliable Reinforcement Learning Implementations. J. Mach. Learn. Res. 2021, 22, 1–8.

12. Huang, S.; Dossa, R.F.J.; Ye, C.; Braga, J. CleanRL: High-quality Single-file Implementations of Deep Reinforcement Learning Algorithms. arXiv 2021, arXiv:2111.08819.

13. Derevyanko, G. Lupoglaz/GodotAIGym. 2019. Available online: https://lupoglaz.github.io/GodotAIGym/ (accessed on 12 January 2024).

14. Morais, G.; Loron, I.; Coletta, L.F.; da Silva, A.A.; Simões, A.; Gudwin, R.; Costa, P.D.P.; Colombini, E. CST-Godot: Bridging the Gap Between Game Engines and Cognitive Agents. In Proceedings of the 2022 21st Brazilian Symposium on Computer Games and Digital Entertainment (SBGames), Natal, Brazil, 24–27 November 2022; pp. 1–6.

15. Bakhmadov, M.; Fridheim, M. Combining Reinforcement Learning and Unreal Engine’s AI-Tools to Create Intelligent Bots. Bachelor Thesis, NTNU, May 2020. Available online: https://ntnuopen.ntnu.no/ntnu-xmlui/handle/11250/2672159 (accessed on 12 January 2024).

16. Boyd, R.A.; Barbosa, S.E. Reinforcement Learning for All: An Implementation Using Unreal Engine Blueprint. In Proceedings of the 2017 International Conference on Computational Science and Computational Intelligence (CSCI), Las Vegas, NV, USA, 14–16 December 2017; pp. 787–792.

17. Ward, T.; Bolt, A.; Hemmings, N.; Carter, S.; Sanchez, M.; Barreira, R.; Noury, S.; Anderson, K.; Lemmon, J.; Coe, J.; et al. Using Unity to Help Solve Intelligence. arXiv 2020, arXiv:2011.09294.

18. Kar, A.; Prakash, A.; Liu, M.Y.; Cameracci, E.; Yuan, J.; Rusiniak, M.; Acuna, D.; Torralba, A.; Fidler, S. Meta-Sim: Learning to Generate Synthetic Datasets. arXiv 2019, arXiv:1904.11621.

19. Todorov, E.; Erez, T.; Tassa, Y. MuJoCo: A physics engine for model-based control. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October 2012; pp. 5026–5033.

20. Gu, S.; Holly, E.; Lillicrap, T.; Levine, S. Deep reinforcement learning for robotic manipulation with asynchronous off-policy updates. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 3389–3396.

21. Merschformann, M.; Xie, L.; Li, H. RAWSim-O: A Simulation Framework for Robotic Mobile Fulfillment Systems, 8th ed.; Bundesvereinigung Logistik (BVL) e.V: Bremen, Germany, 2018.

22. Tobin, J.; Fong, R.; Ray, A.; Schneider, J.; Zaremba, W.; Abbeel, P. Domain Randomization for Transferring Deep Neural Networks from Simulation to the Real World. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24 September 2017; pp. 23–30.

23. Park, S.; Kim, J.; Kim, H.J. Zero-Shot Transfer Learning of a Throwing Task via Domain Randomization. In Proceedings of the 2020 20th International Conference on Control, Automation and Systems (ICCAS), Busan, Republic of Korea, 13–16 October 2020; pp. 1026–1030.

24. OpenAI; Akkaya, I.; Andrychowicz, M.; Chociej, M.; Litwin, M.; McGrew, B.; Petron, A.; Paino, A.; Plappert, M.; Powell, G.; et al. Solving Rubik’s Cube with a Robot Hand. arXiv 2019, arXiv:1910.07113.

25. Zakharov, S.; Ambrus, R.; Guizilini, V.; Kehl, W.; Gaidon, A. Photo-realistic Neural Domain Randomization. arXiv 2022, arXiv:2210.12682.

26. Zhao, W.; Queralta, J.P.; Westerlund, T. Sim-to-Real Transfer in Deep Reinforcement Learning for Robotics: A Survey. In Proceedings of the 2020 IEEE Symposium Series on Computational Intelligence (SSCI), Canberra, Australia, 1–4 December 2020; pp. 737–744.

27. Jiang, H.; Wang, H.; Yau, W.Y.; Wan, K.W. A Brief Survey: Deep Reinforcement Learning in Mobile Robot Navigation. In Proceedings of the 2020 15th IEEE Conference on Industrial Electronics and Applications (ICIEA), Kristiansand, Norway, 9–13 November 2020; pp. 592–597.

28. Berner, C.; Brockman, G.; Chan, B.; Cheung, V.; Dębiak, P.; Dennison, C.; Farhi, D.; Fischer, Q.; Hashme, S.; Hesse, C.; et al. Dota 2 with Large Scale Deep Reinforcement Learning. arXiv 2019, arXiv:1912.06680.

29. Ranaweera, M.; Mahmoud, Q.H. Bridging the Reality Gap Between Virtual and Physical Environments Through Reinforcement Learning. IEEE Access 2023, 11, 19914–19927.

30. Ranaweera, M.; Mahmoud, Q. Evaluation of Techniques for Sim2Real Reinforcement Learning. In The International FLAIRS Conference Proceedings; Open Journal System: Gainesville, FL, USA, 2023; Volume 36.

31. Vacaro, J.; Marques, G.; Oliveira, B.; Paz, G.; Paula, T.; Staehler, W.; Murphy, D. Sim-to-Real in Reinforcement Learning for Everyone. In Proceedings of the 2019 Latin American Robotics Symposium (LARS), 2019 Brazilian Symposium on Robotics (SBR) and 2019 Workshop on Robotics in Education (WRE), Rio Grande do Sul, Brazil, 23–25 October 2019; pp. 305–310.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).