Abstract

Most online action detection methods formulate the task as a (K + 1)-class classification problem, where the additional category represents the ‘background’ class. However, learning the ‘background’ class and handling the resulting data imbalance remain common challenges in online action detection. To address these issues, we propose an online action detection framework that adds a pathway between the feature extractor and the online action detection model. Specifically, we present a configuration that retains the feature distinctions among target actions and fuses them with the final decision of the Long Short-Term Transformer (LSTR), improving its (K + 1)-class classification. Experimental results show that the proposed method achieves a mean Average Precision (mAP) of 71.2% on the Thumos14 dataset, outperforming the 69.5% achieved by the original LSTR method.

1. Introduction

Online action detection [1] plays an important role in computer vision for applications such as smart surveillance and human–computer interaction [2,3]. Unlike action recognition, which focuses solely on trimmed or pre-edited videos, online action detection involves the identification and localization of actions in real-time video streams as they unfold, enabling real-time decision-making even when the information is incomplete.

An online action detector architecture typically includes a feature extractor for processing input videos and a detection model that generates probabilities for target action classes, along with an additional ‘background’ class, thereby constituting a (K + 1) classification task. Note that the ‘background’ class is the key to localizing the actions within a video stream. Due to the significance of online action detection, several popular models have been extensively developed in recent years, such as TRN [4], GateHUB [5], and LSTR [6].

There are two tasks closely related to online action detection: action recognition and temporal action localization. Action recognition [7,8] is the simplest of these: the goal is to classify a trimmed video segment into one of the predefined target actions, so each segment contains exactly one action. However, for untrimmed videos, in which multiple actions may occur simultaneously or there may be intervals without any action, action recognition alone is insufficient. Temporal action localization [9,10] adds the challenge of identifying the time interval of each action in untrimmed videos, which may contain different types of actions occurring simultaneously. This setting more accurately reflects real-world scenarios.

Unfortunately, both tasks are typically performed offline: predictions are made only after the entire video has been observed, which limits their use in real-time applications. Online action detection addresses this limitation by making a prediction at each moment using only past and current information, rather than information from the whole video. In other words, online action detection requires the model to identify ongoing actions in a live video stream, even when an action has been only partially observed.

There are two well-known datasets for online action detection: Thumos14 [11] and TVSeries [1]. Many prominent online action detection models, which predominantly use image-based approaches, have been validated on these datasets; for this reason, this paper does not discuss skeleton-based methods. De Geest et al. [1] were the first to introduce the task of online action detection and provided the TVSeries dataset. In the early stages of the field, 3D ConvNets [7] were employed for spatio-temporal feature modeling across multiple frames, similar to their early use in action recognition. However, 3D ConvNets struggle to capture temporal correlations beyond their receptive fields. This led to the proposal of a two-stream feedback network with LSTM [12] to model temporal dependencies [13]. Inspired by human judgment, in which current actions are often recognized based on anticipated future states, TRN [4] used an LSTM model to predict future information and combined it with previously observed data for recognition. Because these approaches rely on RNN models, they have difficulty modeling long-term dependencies effectively due to the limited interaction between features. In recent years, Transformers have achieved significant breakthroughs in NLP and image recognition, and OadTR [14] was accordingly proposed, using Transformers instead of RNNs. Beyond enabling interactions between stored features, Transformers can also learn how past features influence the current prediction.

Finally, building on these foundations, LSTR [6] divided information into short-term memory and long-term memory and used Transformers to construct encoders and decoders, thus avoiding the limitations associated with RNNs [12,15,16,17].

While previous models have addressed many challenges in online action detection, this paper focuses on two under-explored issues in the field: the data imbalance caused by the background class, and the similarity in features between the background class and the target classes. Both significantly hinder training. The background class differs fundamentally from the target actions, but most prior methods, such as LSTR [6], OadTR [14], and TRN [4], treat it as a regular class alongside the target actions and directly perform a (K + 1)-class classification, where K denotes the number of target actions. However, in most real-world scenarios, the ‘background’ class occupies more frames than the target actions in video streams, leading to a data imbalance problem. Furthermore, because background frames are numerous and diverse, the ‘background’ class easily overlaps in feature space with similar target actions. To address data imbalance and feature similarity, this paper proposes a novel modeling approach based on the LSTR model that mitigates the training difficulties posed by these two issues.

In the video domain, addressing data imbalance remains relatively unexplored. In contrast, the image domain benefits from data augmentation techniques that can effectively tackle this issue. However, traditional image-domain strategies are not directly applicable to video data because of its temporal nature. CutMix [18], a commonly used image-domain augmentation technique, cuts a rectangular patch from one image and pastes it onto another, mixing the labels in proportion to the patch area. VideoMix [19] adapted CutMix [18] to the video domain. However, recent online action detection approaches typically use the same dataset and the same feature extractor during training to ensure a fair comparison of model performance, so the training data consist of offline-extracted features rather than raw frames, and pixel-level augmentation cannot be applied directly. Moreover, creating meaningful augmented action videos requires careful design of video processing operations such as random erasing, cropping, translation, or overlay, which limits the adoption of data augmentation in the video domain.

Another approach relevant to our research is GateHUB [5], which employs focal loss to address the data imbalance issue, referred to as the “background suppression objective” in that work. Focal loss [20] is commonly used to mitigate data imbalance by down-weighting the contribution of easy examples, so that the model focuses on harder examples. Inspired by this suppression mechanism, we designed our framework with an additional pathway that serves a similar function. Unlike GateHUB, which relies solely on the training loss to tackle this problem, our method strengthens action predictions through an additional classifier, allowing the overall framework to make more stable predictions and achieve state-of-the-art performance on the Thumos14 dataset.

To the best of our knowledge, this work is the first to highlight these two challenges in online action detection. The proposed framework substantially mitigates the training difficulties they pose. The contributions of this work are as follows:

- Identifying two specific challenges in online action detection: data imbalance caused by the background class, and classification difficulty due to the feature similarity between the background class and the target actions.

- Proposing a framework for online action detection to mitigate the negative impacts of data imbalance and classification difficulty resulting from feature similarity between the background class and the target actions.

- Developing a framework for online action detection where an additional action classifier is incorporated into the well-known model LSTR [6].

- Demonstrating through experimental results that the proposed framework for online action detection significantly improves performance on the Thumos14 dataset.

2. Motivations

While the aforementioned methods address some challenges in online action detection to a certain extent, they share a common limitation: they do not explicitly distinguish the ‘background’ class. Instead, a (K + 1)-class classifier is typically trained. Specifically, Equation (1) shows the output y of a typical online action detection model:

$$y = \left[P(1),\, P(2),\, \ldots,\, P(K),\, P(\text{background})\right] \tag{1}$$

where K is the number of target actions, P(i) is the probability of action i occurring, and P(background) denotes the probability of the union of all non-target actions. The background class is therefore unique: it covers everything that is not a target action, as shown in Equation (2).

$$P(\text{background}) = P\Big(\bigcup_{j \notin \{1, \ldots, K\}} j\Big) \tag{2}$$

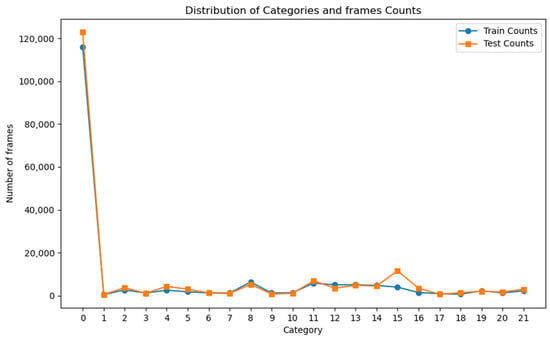

Based on this problem formulation, two primary challenges emerge. The first challenge is data imbalance. In online action detection, labels are assigned at the frame level, so every frame has a label. The number of background labels far exceeds those of the other classes, as shown in Figure 1, which plots the distribution of frames per category in the Thumos14 dataset, where category 0 represents the background class. The background class contains approximately 120,000 frames, while every other category has fewer than 20,000 frames. This indicates a severe imbalance between the background category and the other categories, which makes model training particularly challenging. Moreover, in real-world scenarios the background class typically constitutes the majority of frame labels, so although this imbalance may accurately reflect real-world data distributions, it still causes training difficulties. Common approaches to handling data imbalance, such as oversampling and undersampling, are difficult to apply in online action detection, unlike in image classification. Because consecutive frames are temporally related, frames cannot be arbitrarily added or removed, and this interdependence adds to the complexity of the task.

Figure 1.

Distribution of frames per category in the Thumos14 dataset, where category 0 represents the background class.
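The per-category counting behind Figure 1, and the resulting imbalance ratio, can be sketched in a few lines of Python. This is a minimal illustration that assumes frame labels are available as a flat list of integer class ids with 0 for background; `frame_class_stats` is a hypothetical helper and the toy labels are made up. With the approximate figures quoted above (about 120,000 background frames versus fewer than 20,000 for any target class), the same ratio would exceed 6.

```python
from collections import Counter


def frame_class_stats(frame_labels, background_id=0):
    """Count frames per class and return how many times larger the
    background class is than the largest target-action class."""
    counts = Counter(frame_labels)
    largest_target = max(c for cls, c in counts.items() if cls != background_id)
    return counts, counts[background_id] / largest_target


# Toy per-frame labels (illustrative only, NOT the real Thumos14 annotations);
# class 0 is background, following the convention of Figure 1.
labels = [0] * 12 + [1] * 2 + [2] + [3]
counts, ratio = frame_class_stats(labels)
print(counts[0], ratio)  # 12 6.0
```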

The second challenge is that the feature differences between classes are sometimes marginal. In classification tasks, the difficulty of classification depends on how distinct the features of different classes are. For example, the classification difficulty of category i can be represented by the intersection of the set of features of category i (denoted as $F_i$) with the set of features of the other categories, that is, the union of the feature sets of all other target actions and the feature set of the background category, as shown in Equation (3):

$$F_i \cap \Big( \bigcup_{j \in \mathcal{T},\, j \neq i} F_j \;\cup\; F_{\mathrm{bg}} \Big) \tag{3}$$

where $F_j$ is the set of features of action j, $\mathcal{T}$ is the set of target action indices, and $F_{\mathrm{bg}}$ is the set of background features. As defined in Equation (2), anything that is not a target action is classified as background. Therefore, depending on the dataset, the background category may include any action other than the target actions, so its features encompass the features of all possible actions. Consequently, the challenge in online action detection lies not only in distinguishing a target action from the other target actions but also in distinguishing it from all possible actions. Equation (3) can therefore be rewritten as Equation (4):

$$F_i \cap \Big( \bigcup_{j \in \mathcal{U},\, j \neq i} F_j \Big) \tag{4}$$

where $\mathcal{U}$ represents the set of all possible action indices. The larger the intersection, the more difficult the classification. Note that $F_{\mathrm{bg}}$ includes the features of all possible actions, and even frames with no action are classified as background. Therefore, the feature differences between classes can be marginal for the model, making classification in online action detection particularly challenging.

Based on these two observations, we find that training a simple K-class classifier that classifies only the target actions, without the background class, reduces classification difficulty compared with a (K + 1)-class classifier. The classification difficulty of the K-class classifier, without the background category, can be represented as shown in Equation (5):

$$F_i \cap \Big( \bigcup_{j \in \mathcal{T},\, j \neq i} F_j \Big) \tag{5}$$

where $F_j$ is the set of features of action j and $\mathcal{T} = \{1, \ldots, K\}$ is the set of target action indices.

First, this approach avoids the data imbalance issue. Second, its classification difficulty depends solely on the features of the selected target actions. Therefore, this paper proposes first training a K-class classifier and then fusing the information it provides with the original model to arrive at better predictions.
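To make the comparison between Equations (3) and (5) concrete, the toy example below represents each class's features as a small Python set and measures the size of the intersection. Treating features as discrete set elements and using the intersection size as the difficulty measure are simplifying assumptions for illustration only; real features are continuous vectors, and `overlap_size` is a hypothetical helper.

```python
def overlap_size(target_features, other_feature_sets):
    """Size of F_i ∩ (union of the other feature sets): a toy proxy for the
    classification difficulty expressed by Eqs. (3)-(5)."""
    union = set().union(*other_feature_sets)
    return len(target_features & union)


# Hypothetical discrete "features" for two target actions and the background.
F = {1: {"run", "track", "jump"}, 2: {"water", "dive", "jump"}}
F_bg = {"run", "crowd", "water", "walk"}  # background mixes many kinds of frames

i = 1
other_targets = [F[j] for j in F if j != i]
with_bg = overlap_size(F[i], other_targets + [F_bg])  # (K + 1)-class view, Eq. (3)
without_bg = overlap_size(F[i], other_targets)        # K-class view, Eq. (5)
print(with_bg, without_bg)  # 2 1
```

Dropping the background set removes the shared "run" feature, so the overlap for class 1 shrinks, which is the intuition behind training the K-class classifier.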

For illustrative purposes, this paper uses the LSTR model as the base and integrates a pre-trained K-class classifier to enhance detection accuracy. Experimental results demonstrate that for target actions without background, the K-class classifier outperforms the original model in making predictions. Furthermore, when applied to all classes, including the background, incorporating the information generated by the K-class classifier into the original model yields better accuracy than direct prediction using the original model alone.

3. Proposed Framework and Loss

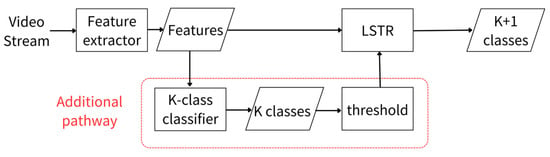

To address the above-mentioned challenges, this paper proposes a framework for online action detection, shown in Figure 2, which incorporates an additional pathway between the feature extractor and the LSTR. Because the K-class classifier in this pathway is trained without the background class, it avoids the two aforementioned challenges, and fusing its information with the original model leads to improved predictions.

Figure 2.

The proposed framework for online action detection with an additional action classifier.

3.1. Model Architecture

When a video stream is fed into the feature extractor, the collected input features at time t can be represented as Equation (6):

$$X_t = \{x_{t-\tau+1}, \ldots, x_{t-1}, x_t\} \tag{6}$$

where τ represents the number of input frames, t represents the current time, and $x_i$ represents the feature of the i-th frame. According to the official settings in [4], the original dimension of $x_i$ is set to 2048 for the LSTR model. As shown in Figure 2, we employ a K-class action classifier within the additional pathway. We use K-class classification because it provides more robust and accurate training than a (K + 1)-class classifier, thereby alleviating the data imbalance issue. We then use this classifier as a secondary feature extractor to derive K probabilities for the target actions. The output of the K-class classifier can be represented as shown in Equation (7):

$$p = \left[P(1 \mid A),\, P(2 \mid A),\, \ldots,\, P(K \mid A)\right] = [p_1, p_2, \ldots, p_K] \tag{7}$$

where K is the number of target actions, A is the event that any target action occurs, and $p_i = P(i \mid A)$ is the predicted probability of target action i.
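A streaming model only ever sees the last τ frame features. A minimal sketch of maintaining $X_t$ from Equation (6) with a fixed-length buffer is shown below; `FeatureWindow` is a hypothetical helper, not part of the LSTR code base, and the 4-dimensional vectors stand in for real features.

```python
from collections import deque


class FeatureWindow:
    """Hold the most recent tau per-frame features, i.e. X_t in Eq. (6)."""

    def __init__(self, tau):
        self.buffer = deque(maxlen=tau)  # the oldest frame is dropped automatically

    def push(self, feature):
        """Add the feature of the current frame t and return X_t (oldest first)."""
        self.buffer.append(feature)
        return list(self.buffer)


window = FeatureWindow(tau=3)
for t in range(5):
    X_t = window.push([float(t)] * 4)  # 4-dim stand-in for a 2048-dim feature
print([x[0] for x in X_t])  # [2.0, 3.0, 4.0]
```

Before the buffer fills, $X_t$ holds fewer than τ entries; how padding is handled in that case is not covered by this sketch.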

Next, we apply an empirically determined threshold: if the input is greater than 0.05, the output is 1; otherwise, the output is 0, as shown in Equation (8):

$$T(x) = \begin{cases} 1, & x > 0.05 \\ 0, & \text{otherwise} \end{cases} \tag{8}$$

To determine the optimal threshold value, we initially tested a threshold of 0.5 and then decreased it in steps of 0.05; the lowest value tested was 0.01. Based on these experiments, a threshold of 0.05 was selected as optimal.
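Equation (8) and the threshold sweep described above can be written as follows. This is a sketch; the evaluation performed for each candidate threshold is not detailed in the text and is omitted here.

```python
def threshold(p, tau=0.05):
    """Eq. (8): 1 if the K-class probability is strictly greater than tau, else 0."""
    return 1 if p > tau else 0


# Candidate thresholds described above: 0.5 down to 0.05 in steps of 0.05, then 0.01.
# Integer hundredths avoid accumulating floating-point error in the step.
candidates = [k / 100 for k in range(50, 4, -5)] + [0.01]

print(threshold(0.30), threshold(0.05), threshold(0.02))  # 1 0 0
```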

In neural networks, the outputs of the last fully connected layer are often referred to as logits. We therefore denote the output of the fully connected layer in the original model as O, as shown in Equation (9):

$$O = [o_1, o_2, \ldots, o_K, o_{K+1}] \tag{9}$$

where $o_i$ represents the logit of the original model for the i-th class, and $o_{K+1}$ corresponds to the background class. Next, we feed the output p of the K-class classifier into the threshold function in Equation (8) to obtain a reference vector $r = [T(p_1), \ldots, T(p_K)]$. We then perform element-wise multiplication between this reference vector and the logits O of the original model to obtain the output of the proposed model, as shown in Equation (10):

$$O' = [T(p_1),\, T(p_2),\, \ldots,\, T(p_K),\, 1] * O \tag{10}$$

where * denotes element-wise multiplication. The background entry of the reference vector is set to 1 because the K-class classifier cannot predict the probability of the background class. Finally, applying softmax yields the predicted output, as shown in Equation (11):

$$\hat{y} = \operatorname{softmax}(O') \tag{11}$$

The purpose of this approach is to suppress actions that the K-class classifier judges highly unlikely to occur (reference value 0) without altering the original logits of the other classes (reference value 1). As the experimental results will show, the K-class classifier, trained without background frames, classifies target actions better than the (K + 1)-class classifier. However, its probabilities rest on the assumption that the input contains only target actions: as shown in Equation (7), because the K-class classifier was trained only on data containing target actions, its outputs are conditioned on a target action occurring. In online action detection, this assumption does not hold. Thus, the numerical probabilities from the K-class classifier cannot be applied directly to the logits of the original model. Instead, we use a threshold function that compares each probability against the threshold, disregarding its actual value. This avoids directly combining the probabilities of the K-class classifier with the logits of the (K + 1)-class classifier.
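The gating in Equations (8) to (11) can be sketched for a single frame in plain Python. This is a minimal illustration, not the authors' implementation; the logits and probabilities are made-up values, and the background is assumed to be the last of the K + 1 entries.

```python
import math


def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def fuse(logits, k_probs, tau=0.05):
    """Eqs. (8)-(11): gate the K target logits of the (K + 1)-class model with
    thresholded K-class probabilities; the background logit (last) keeps gate 1."""
    if len(logits) != len(k_probs) + 1:
        raise ValueError("expected K + 1 logits and K probabilities")
    r = [1.0 if p > tau else 0.0 for p in k_probs] + [1.0]  # reference vector
    gated = [ri * oi for ri, oi in zip(r, logits)]           # element-wise product
    return softmax(gated)


# K = 2 target actions plus background (last entry).
logits = [4.0, 1.0, 2.0]   # the original model favours target action 1
k_probs = [0.01, 0.99]     # the K-class classifier rules action 1 out
print([round(v, 3) for v in softmax(logits)])
print([round(v, 3) for v in fuse(logits, k_probs)])
```

As Equation (10) is written, a gated class receives logit 0 rather than $-\infty$, so its probability is lowered only relative to competing logits that are larger than 0.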

3.2. Loss Function

In the architecture above, when the threshold outputs 0 for a certain action, multiplying it with the original logit (Equation (10)) yields 0 regardless of the logit's value. Consequently, the model's final output is not influenced by that original logit. Since most neural networks are trained by gradient descent, gradients must be computed to perform these updates. From the perspective of backpropagation, whenever the threshold outputs 0 for a certain action, the error term propagated to the preceding layers for that action is also 0, so the weights related to that action are not adjusted, as illustrated by the gradients in Equation (12).

Backpropagation computes these gradients efficiently using the chain rule. The partial derivative of the logits of the proposed model, O′, with respect to the original logits O is given by Equation (12):

$$\frac{\partial O'_i}{\partial O_i} = \begin{cases} T(p_i), & 1 \le i \le K \\ 1, & i = K + 1 \end{cases} \tag{12}$$

where $T(p_i)$ is the thresholded prediction of the K-class classifier for the i-th action and $i = K + 1$ is the background class. Whenever $T(p_i)$ is 0, the logit of the i-th action receives no gradient during that iteration. For example, suppose a frame does not contain the action of playing basketball. If the K-class classifier determines that this frame does not depict playing basketball, i.e., $T(p_i) = 0$, then even if the original model confidently predicts playing basketball, the final output of the proposed model will still indicate that it is not playing basketball. Although the final prediction for this frame is correct, the original LSTR model is not penalized for its incorrect prediction. This could lead to the model becoming overly reliant on the K-class classifier.
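The zero-gradient effect behind Equation (12) can be checked numerically. The sketch below uses the basketball example: finite differences of the fused cross-entropy show that the gated logit receives no gradient, while the ungated logits do. Values are hypothetical, and the background is assumed to be the last entry.

```python
import math


def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def loss_orig(logits, r, target):
    """Cross-entropy of softmax(r * O) for one frame (the fused output only)."""
    gated = [ri * oi for ri, oi in zip(r, logits)]
    return -math.log(softmax(gated)[target])


def numeric_grad(f, x, eps=1e-6):
    """Central finite differences of f at x."""
    grad = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += eps
        down[i] -= eps
        grad.append((f(up) - f(down)) / (2 * eps))
    return grad


O = [5.0, 0.5, 1.0]  # original model wrongly favours action 0 ("playing basketball")
r = [0.0, 1.0, 1.0]  # K-class classifier rejects action 0; background gate is 1
target = 2           # ground truth: background
g = numeric_grad(lambda o: loss_orig(o, r, target), O)
print(g[0])  # 0.0: no gradient reaches the logit of the gated action
```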

To address the lack of updates when $T(p_i)$ outputs 0, we introduce an additional loss component alongside the original Cross-Entropy (CE) loss. The purpose of this additional loss is to prevent the (K + 1)-class classifier from becoming overly reliant on the predictions of the K-class classifier. The Cross-Entropy loss function is defined in Equation (13):

$$\mathrm{CE}(y, \hat{y}) = -\frac{1}{N} \sum_{n=1}^{N} \sum_{c=1}^{C} y_{n,c} \log \hat{y}_{n,c} \tag{13}$$

where N is the number of samples, C is the number of classes, $y_{n,c}$ is the ground-truth label for sample n and class c, and $\hat{y}_{n,c}$ is the predicted probability for sample n and class c. The original loss computes the Cross-Entropy between the ground truth and our predicted values, as shown in Equation (14):

$$\mathcal{L}_{\text{orig}} = \mathrm{CE}\big(y, \operatorname{softmax}(O')\big) \tag{14}$$

Additionally, we design an extra loss based on Cross-Entropy that measures the error between the ground truth and the probabilities obtained by passing the original logits O directly through softmax, as shown in Equation (15):

$$\mathcal{L}_{\text{extra}} = \mathrm{CE}\big(y, \operatorname{softmax}(O)\big) \tag{15}$$

Finally, we obtain the proposed loss by adding the original loss in Equation (14) and the newly designed loss in Equation (15) and dividing the sum by 2, as shown in Equation (16), to maintain a learning rate similar to that of the original LSTR model:

$$\mathcal{L} = \frac{\mathcal{L}_{\text{orig}} + \mathcal{L}_{\text{extra}}}{2} \tag{16}$$
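Equations (13) to (16) translate directly into code. The sketch below averages the fused and extra cross-entropy terms over a small batch with one-hot labels; `proposed_loss` is a hypothetical name, and a real implementation would operate on PyTorch tensors rather than lists.

```python
import math


def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def cross_entropy(prob_rows, targets):
    """Eq. (13) with one-hot labels: mean over N samples of -log p[n][y_n]."""
    return -sum(math.log(p[y]) for p, y in zip(prob_rows, targets)) / len(targets)


def proposed_loss(logit_rows, ref_rows, targets):
    """Eq. (16): average of the fused loss (14) and the extra loss (15)."""
    fused = [softmax([r * o for r, o in zip(rr, oo)])
             for oo, rr in zip(logit_rows, ref_rows)]
    plain = [softmax(oo) for oo in logit_rows]
    l_orig = cross_entropy(fused, targets)   # Eq. (14): CE on softmax(O')
    l_extra = cross_entropy(plain, targets)  # Eq. (15): CE on softmax(O)
    return (l_orig + l_extra) / 2


# Two frames, K = 2 target actions + background (last column).
O = [[5.0, 0.5, 1.0], [0.2, 3.0, 0.1]]
R = [[0.0, 1.0, 1.0], [1.0, 1.0, 1.0]]
y = [2, 1]
print(proposed_loss(O, R, y))
```

When every gate is 1, the two terms coincide and the combined loss reduces to ordinary cross-entropy.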

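To analyze the impact of the proposed loss, we examine its gradient, which determines the weight updates, as we did earlier. For a single sample, the partial derivative of the proposed loss with respect to O can be expressed as Equation (17):

$$\frac{\partial \mathcal{L}}{\partial O_i} = \frac{1}{2}\Big[ r_i\big(\hat{y}_i - y_i\big) + \big(q_i - y_i\big) \Big] \tag{17}$$

where $r_i$ is the i-th entry of the reference vector in Equation (10) ($r_i = T(p_i)$ for $i \le K$ and $r_{K+1} = 1$), $\hat{y} = \operatorname{softmax}(O')$ is the fused prediction, and $q = \operatorname{softmax}(O)$ is the prediction of the original logits.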

When the situation described above occurs, that is, when $T(p_i)$ outputs 0 for a certain action, the corresponding gradient $\partial O'_i / \partial O_i$ is also 0, as shown in Equation (12). With the original loss alone, the weights related to that action would therefore not be updated, despite the discrepancy between the prediction and the ground truth. With the proposed loss, however, even when $T(p_i)$ is 0, the extra loss term still propagates this discrepancy.

With the addition of the newly designed extra loss (Equation (15)), even when the threshold output is 0, the gradient of this loss with respect to the logits still propagates back to update the earlier layers of the network. This reduces the cases in which no update is triggered even though the predicted logits for an action deviate significantly from the true label.

The proposed extra loss (Equation (15)) is a crucial component designed for cases in which the thresholded output of the K-class classifier is 0, i.e., the classifier judges that a target action is not present. In such cases, with the original loss alone, the fused output is dominated by this zero value, regardless of the contribution of the (K + 1)-class classifier, so training becomes overly dependent on the K-class classifier. The proposed loss mitigates this issue and prevents the model from relying solely on the K-class classifier's predictions. In summary, the additional loss term ensures that the model can still make stable predictions when the K-class classifier predicts no action within the K classes, because backpropagation through this term remains valid during training.
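To check the gradient in Equation (17), the single-frame sketch below compares the analytic expression with finite differences, reusing the basketball example. It assumes one-hot labels and a single sample (N = 1); for a batch, each term is additionally divided by N. The helper names are hypothetical.

```python
import math


def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def proposed_loss(logits, r, target):
    """Single-frame version of Eq. (16)."""
    fused = softmax([ri * oi for ri, oi in zip(r, logits)])
    plain = softmax(logits)
    return 0.5 * (-math.log(fused[target]) - math.log(plain[target]))


def analytic_grad(logits, r, target):
    """Eq. (17) for one frame: 0.5 * [r_i (y_hat_i - y_i) + (q_i - y_i)]."""
    y_hat = softmax([ri * oi for ri, oi in zip(r, logits)])
    q = softmax(logits)
    y = [1.0 if i == target else 0.0 for i in range(len(logits))]
    return [0.5 * (ri * (yh - yi) + (qi - yi))
            for ri, yh, qi, yi in zip(r, y_hat, q, y)]


def numeric_grad(f, x, eps=1e-6):
    """Central finite differences of f at x."""
    grad = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += eps
        down[i] -= eps
        grad.append((f(up) - f(down)) / (2 * eps))
    return grad


O, r, target = [5.0, 0.5, 1.0], [0.0, 1.0, 1.0], 2
g_exact = analytic_grad(O, r, target)
g_check = numeric_grad(lambda o: proposed_loss(o, r, target), O)
print(g_exact[0] > 0)  # True: the gated logit now receives a gradient
```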

4. Experimental Results

The previous section detailed the fusion of information extracted by the K-class classifier with the original online action detection model architecture. In this section, we first introduce the dataset, metrics, and training configurations used. We then discuss how we trained the K-class classifier and validated that its accuracy for the K target actions surpasses that of the (K + 1)-class classifier. Following this, we describe the training configuration for the proposed model using the fusion method outlined in the previous section. Additionally, we briefly describe two other fusion methods that we experimented with. Finally, we present experimental results on the well-known Thumos14 dataset, comparing the proposed method with other state-of-the-art models in the field of online action detection.

4.1. Computational Platforms

To evaluate the proposed system, we conducted experiments on an Intel Core i7-7700 processor (Intel Corporation, Santa Clara, CA, USA) running at 3.6 GHz and an NVIDIA GeForce RTX 3090 graphics card (NVIDIA Corporation, Santa Clara, CA, USA). The experiments were run with Python 3.8, PyTorch, and the NVIDIA CUDA 11.7 library.

4.2. Datasets

4.2.1. Thumos14

The Thumos14 dataset is a large-scale video dataset that includes 1010 videos for validation and 1574 videos for testing from 20 classes. Among these, 220 validation videos and 212 test videos have temporal annotations. The dataset mainly consists of sports-related actions, such as high jumps and diving. A potential limitation of Thumos14 is that the distinct visual features of different sports movements make them easy to distinguish. As a result, the features extracted within each action class tend to be highly repetitive, making the dataset more reflective of a controlled or closed-world scenario. In such cases, classifiers may rely heavily on environment-specific features, reducing the challenge of distinguishing between actions. For instance, “high jump” clips are typically recorded in specific venues, while “weightlifting” actions are commonly performed on mats. If a test video contains a high jump performed on a mat, the classifier could mistakenly categorize it as “weightlifting”, relying on the shared environment-specific feature (the mat) rather than the actual movement. Consequently, when models trained on Thumos14 are transferred to other datasets, this reliance on environment-specific features can lead to domain shift problems, in which the classifier struggles to generalize to different contexts or action settings. However, since Thumos14 is the most widely used dataset for online action detection, we selected it for our experiments.

4.2.2. Kinetics-400

Kinetics-400 contains 400 human action classes, with at least 400 video clips for each action. Each clip lasts around 10 s and is taken from a different YouTube video. The actions are human-focused and cover a broad range of classes, including human–object interactions such as playing instruments and human–human interactions such as shaking hands. As most recent online action detection approaches [4,5,6] use the same feature extractor pretrained on Kinetics-400, we adopted this setting and selected Kinetics-400 for pre-training our feature extractor.

4.3. Evaluation Metric

Following previous work [1,6,14], we evaluate online action detection performance using per-frame mean Average Precision (mAP). For each class, the frames are first ranked by their confidence scores from high to low. The precision of a class at cut-off n in this list is calculated as:

$$\mathrm{Precision}(n) = \frac{TP(n)}{TP(n) + FP(n)}$$

where TP(n) and FP(n) are the numbers of true positive and false positive frames at cut-off n, respectively. The average precision of a class is then defined as:

$$AP = \frac{1}{P} \sum_{n} \mathrm{Precision}(n)\, I(n)$$

where I(n) is an indicator function that equals 1 if frame n is a true positive and 0 otherwise, and P is the total number of positive frames. The mean of the AP across all classes (mAP) is the final performance metric for an online action detection method.
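The two definitions above can be implemented directly. The sketch assumes per-frame confidence scores and binary ground-truth flags for one class; which classes enter the mean is left to the caller, and the helper names are hypothetical.

```python
def average_precision(scores, is_positive):
    """Per-frame AP for one class: rank frames by confidence (high to low) and
    average Precision(n) over the ranks n where a true positive occurs."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    total_pos = sum(is_positive)
    if total_pos == 0:
        return 0.0
    tp = fp = 0
    ap_sum = 0.0
    for idx in order:
        if is_positive[idx]:
            tp += 1
            ap_sum += tp / (tp + fp)  # Precision(n) * I(n), with I(n) = 1
        else:
            fp += 1
    return ap_sum / total_pos


def mean_ap(per_class_scores, per_class_labels):
    """mAP: mean of the per-class APs."""
    aps = [average_precision(s, l) for s, l in zip(per_class_scores, per_class_labels)]
    return sum(aps) / len(aps)


# One toy class: frames ranked 1st and 3rd are positives -> AP = (1/1 + 2/3) / 2.
print(average_precision([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 0]))
```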

We selected mAP as the evaluation metric because it is the most widely used metric for online action detection tasks. For example, both the paper [1] and the official documentation for Thumos14 use mAP for evaluation. Similar to the F1 score, mAP is effective in assessing the ability of online action detection frameworks to handle data imbalance, ensuring that minority classes are not overlooked due to imbalanced training data. Additionally, mAP accounts for confidence levels, making it one of the best choices for evaluating multi-class action recognition tasks, as it assesses both recall and precision for each class.

4.4. Implementation Details and Evaluation

We used the same LSTR [6] method to train the K-class classifier, with the training configuration largely following that of LSTR. As is common in image tasks, we first pre-trained our feature extractors on a large action dataset. In the field of action recognition, dual-stream inputs, including RGB frames and optical flow, are commonly employed. Accordingly, we utilized two feature extractors to process the RGB frames and optical flow data separately.

For pre-training, we chose the Kinetics-400 dataset [7]. As backbones, we used ResNet-50 [21] to extract features from the RGB frames and BN-Inception [22] for the optical flow, which was computed using the TV-L1 method. As in LSTR, we directly used the features provided by TeSTra [23], whose feature extractors were pre-trained in this manner. These features were obtained by processing the Thumos14 videos with the trained feature extractors and were used for training in this study.

After obtaining the training data, we removed all frames labeled as background, since the K-class classifier is not designed to classify the background class. This effectively transforms the online action detection task into an action recognition task while still maintaining its online nature. We adjusted two hyperparameters of the LSTR model, setting the long memory to 0 and the working memory to 2. This configuration was used to train our K-class classifier, as summarized in Table 1.
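Removing background frames can be sketched as follows. One assumption is made here that the text does not specify: runs of consecutive target-action frames are kept as separate segments, so that a temporal window never straddles a removed background gap. Labels are also shifted from 1..K to 0..K-1, assuming background is class 0 as in Figure 1. `target_segments` is a hypothetical helper.

```python
def target_segments(features, labels, background_id=0):
    """Split a labelled frame sequence into runs of consecutive target-action
    frames, dropping background frames and remapping labels 1..K -> 0..K-1."""
    segments, current = [], []
    for f, y in zip(features, labels):
        if y == background_id:
            if current:  # a background frame closes the current run
                segments.append(current)
                current = []
        else:
            current.append((f, y - 1))
    if current:
        segments.append(current)
    return segments


labels = [0, 3, 3, 0, 0, 1, 1, 1, 0]
feats = list(range(len(labels)))  # stand-ins for per-frame feature vectors
segs = target_segments(feats, labels)
print([[y for _, y in s] for s in segs])  # [[2, 2], [0, 0, 0]]
```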

Table 1.

The training configuration differences between the (K + 1)-class classifier and the K-class classifier.

After training, we compared this model with the original LSTR model, which was trained on the full, untrimmed videos, in terms of mAP for the K target actions. We found that, using the same LSTR model for training, the K-class classifier outperformed the (K + 1)-class classifier for the K target actions, as demonstrated in Table 2.

Table 2.

Performance comparison of K-class classifier and (K + 1)-class classifier via LSTR on the Thumos14 dataset, focusing only on target actions and excluding the background class.

Next, we combined the K-class classifier and the (K + 1)-class classifier using the fusion method described in the previous section and retrained the combined model. For simplicity, we used the same offline-extracted features provided by TeSTra [23] to train the (K + 1)-class classifier. The training configuration followed the standard LSTR [6] setup, using the Adam optimizer [24] with a weight decay of . The model was optimized with a batch size of 16 and trained for 25 epochs, with the threshold set to 0.05. We compared the performance of the proposed method on Thumos14 with several state-of-the-art models, including TRN [4], LSTR [6], OadTR [14], Colar [25], and WOAD [26]. We tested both the original loss (Equation (14)) and the proposed loss (Equation (16)), as reported in Table 3.

Table 3.

Performance comparison with state-of-the-art methods on the Thumos14 dataset.

As shown in the bottom row of Table 3, the proposed method with the proposed loss (Equation (16)) achieves the highest performance, with an mAP of 71.2%. The proposed method with the original loss (Equation (14)) also performs strongly, reaching an mAP of approximately 70.6%, which still exceeds the compared state-of-the-art approaches. These results highlight the effectiveness of retaining feature distinctions among target actions and fusing them with the fully connected layer of the LSTR, which improves (K + 1)-class classification performance for online action detection.

Because our method adds a K-class classifier to the original online action detection model, we also analyzed its impact on computational cost by measuring the FLOPs of the LSTR model with and without the additional action classifier. The FLOPs for LSTR are taken from [5], while those of our method were calculated independently. The results are shown in Table 4.

Table 4.

Comparison of the GFLOPs between the original LSTR ((K + 1)-class classifier) and the proposed method.
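The sketch below shows how fully connected layers contribute to FLOP totals such as those in Table 4. It assumes each multiply-accumulate counts as two FLOPs and ignores bias additions. Profiling tools disagree on this convention (some report multiply-accumulates as "FLOPs"), so absolute numbers depend on the counter used. The layer sizes are hypothetical and do not describe the actual model.

```python
from typing import Sequence


def linear_flops(in_features: int, out_features: int, tokens: int = 1) -> int:
    """FLOPs of a fully connected layer applied to `tokens` input vectors.

    Assumed convention: each multiply-accumulate counts as 2 FLOPs
    (one multiply, one add); bias additions are not counted separately.
    """
    return 2 * in_features * out_features * tokens


def mlp_gflops(layer_sizes: Sequence[int], tokens: int = 1) -> float:
    """Total GFLOPs of a stack of linear layers, e.g. [1024, 512, 20]."""
    total = sum(linear_flops(a, b, tokens) for a, b in zip(layer_sizes, layer_sizes[1:]))
    return total / 1e9


# Hypothetical sizes: a 1024-d feature feeding a (K + 1) = 21-way head versus
# an extra K = 20-way head (THUMOS'14 has 20 action classes).
base_head = linear_flops(1024, 21)
extra_head = linear_flops(1024, 20)
print(base_head, extra_head)  # 43008 40960
```

The same bookkeeping applies layer by layer to a full model. Attention layers also add the cost of the query-key products and the weighted sum over values.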

4.5. Other Methods Attempted

The model described above is the final version of our method. Besides the threshold-based approach, we explored two other ways to combine the two models. Both were motivated by the goal of letting the original model learn to incorporate information from the K-class classifier rather than relying on a manually set threshold.

In the first method, we linearly transformed the K-class classifier’s output into K values, where K is the number of target actions. We then applied a sigmoid function, multiplied this with the original logits, and finally applied a softmax. Essentially, the key difference from the final model is expressed as follows:

where the sigmoid function is applied to L(x), the linear transformation of the K-class classifier's output. We chose not to linearly predict the probability of the background class because we believe that a model trained on a dataset without a background class cannot accurately predict information about that class.
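The first alternative can be sketched as follows. This is a minimal illustration under assumptions, not the exact implementation:

- The background class sits at index 0 of the (K + 1)-class logits and is left ungated, consistent with not predicting the background from the K-class output.
- The linear transformation L(x) is written out with hypothetical parameters `weight` and `bias`.

```python
import math
from typing import List, Sequence


def sigmoid(x: float) -> float:
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def linear(x: Sequence[float], weight: Sequence[Sequence[float]],
           bias: Sequence[float]) -> List[float]:
    # Hypothetical L(x) = W x + b, with one row of W per output value.
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def sigmoid_gated_fusion(oad_logits: Sequence[float], k_class_out: Sequence[float],
                         weight: Sequence[Sequence[float]], bias: Sequence[float],
                         background: int = 0) -> List[float]:
    """Scale each action logit by sigmoid(L(x)); the background logit is left as is."""
    gates = [sigmoid(g) for g in linear(k_class_out, weight, bias)]
    action_indices = [i for i in range(len(oad_logits)) if i != background]
    assert len(gates) == len(action_indices), "need one gate per target action"
    fused = list(oad_logits)
    for gate, i in zip(gates, action_indices):
        fused[i] = oad_logits[i] * gate
    return softmax(fused)


# Hypothetical K = 2 example with an identity L(x).
probs = sigmoid_gated_fusion(
    oad_logits=[0.0, 2.0, 2.0],        # background, action 1, action 2
    k_class_out=[10.0, -10.0],         # K-class output strongly favours action 1
    weight=[[1.0, 0.0], [0.0, 1.0]],
    bias=[0.0, 0.0],
)
print(max(range(len(probs)), key=probs.__getitem__))  # 1
```

Because the gate multiplies raw logits, it pulls them toward zero. For a negative logit this raises that class's relative score, so gating logits behaves differently from gating probabilities.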

In the second method, we drew on the attention and self-attention mechanisms [27], using the QKV (Query, Key, Value) formulation. Our goal was to let the model learn how to integrate the relationship between the K-class and (K + 1)-class classifiers through attention. We used the output of the (K + 1)-class classifier as the Query and the output of the K-class classifier as the Key, so that the Value associated with a Key is emphasized when the Query and Key are similar. For the Value, we linearly transformed the output of the (K + 1)-class classifier into K dimensions. We did not use the K-class classifier's output directly as the Value because, as mentioned earlier, we believe its predicted probabilities reflect relative magnitudes rather than actual values. The formula for the QKV attention mechanism is shown below:
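For reference, the scaled dot-product attention of [27] computes $\mathrm{Attention}(Q, K, V) = \mathrm{softmax}(QK^{\top}/\sqrt{d_k})\,V$. Here K denotes the key matrix, not the number of classes, and $d_k$ is the key dimension. The sketch below implements this standard formula in plain Python. It does not show how Query, Key, and Value are built from the two classifiers' outputs; it simply assumes they are given as lists of vectors in which Query and Key share dimension $d_k$.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries: Sequence[Vector], keys: Sequence[Vector],
                                 values: Sequence[Vector]) -> List[List[float]]:
    """softmax(Q K^T / sqrt(d_k)) V, computed one query row at a time."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)  # one weight per key, summing to 1
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs


# A query that matches the first key puts almost all weight on the first value.
out = scaled_dot_product_attention(
    queries=[[10.0, 0.0]],
    keys=[[10.0, 0.0], [0.0, 10.0]],
    values=[[1.0, 0.0], [0.0, 1.0]],
)
print([round(x, 3) for x in out[0]])  # [1.0, 0.0]
```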

As Table 5 shows, both approaches are somewhat effective but slightly underperform the threshold-based method. We nevertheless believe their design ideas merit further investigation, although they may require more complex tuning or different loss designs to reach their best performance. In contrast, the threshold-based method is simple and intuitive, making it a practical way to manually tune online action detection performance. Exploring more effective ways to fuse the outputs of the K-class and (K + 1)-class classifiers is therefore a key focus of our future research.

Table 5.

Performance comparison of the three proposed fusion methods with state-of-the-art methods on the Thumos14 dataset.

5. Conclusions

This paper addresses two significant challenges in online action detection: data imbalance caused by the background class, and the similarity in features between the background and target classes. We proposed a framework that incorporates an additional K-class classifier to mitigate these issues. Experimental results show that the proposed method improves mAP on the Thumos14 dataset over existing models, from 69.5% for the original LSTR to 71.2%. While the K-class classifier improves accuracy, future work will focus on optimizing the fusion between the K-class and (K + 1)-class classifiers. We also plan to explore alternative loss functions and model adjustments to further improve classification performance.

The general applicability of our method to other online action detection models also merits further investigation. Future work will involve testing our approach on different models and datasets to validate its broader effectiveness.

Author Contributions

Conceptualization, M.-H.H. and C.-C.H.; implementation, M.-H.H.; validation, Y.-H.C. and Y.-T.W.; writing—original draft preparation, M.-H.H. and S.-K.H.; writing—review and editing, Y.-T.W. and C.-C.H.; visualization, M.-H.H.; supervision, C.-C.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the “Higher Education Sprout Project” of National Taiwan Normal University and the Ministry of Education (MOE), Taiwan; and in part by the National Science and Technology Council, Taiwan, under Grant NSTC 113-2221-E-003-008 and Grant NSTC 113-2221-E-032-016.

Data Availability Statement

The data can be shared upon request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- De Geest, R.; Gavves, E.; Ghodrati, A.; Li, Z.; Snoek, C.; Tuytelaars, T. Online Action Detection. In Proceedings of the Computer Vision–ECCV 2016, Amsterdam, The Netherlands, 11–14 October 2016; pp. 269–284.

- Tsai, J.-K.; Hsu, C.-C.; Wang, W.-Y.; Huang, S.-K. Deep Learning-based Real-time Multiple-person Action Recognition System. Sensors 2020, 20, 4758.

- Hwang, P.-J.; Hsu, C.-C.; Wang, W.-Y. Development of a Mimic Robot-learning from Demonstration Incorporating Object Detection and Multiaction Recognition. IEEE Consum. Electron. Mag. 2020, 9, 79–87.

- Xu, M.; Gao, M.; Chen, Y.-T.; Davis, L.; Crandall, D. Temporal Recurrent Networks for Online Action Detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 5531–5540.

- Chen, J.; Mittal, G.; Yu, Y.; Kong, Y.; Chen, M. GateHUB: Gated History Unit with Background Suppression for Online Action Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 19925–19934.

- Xu, M.; Xiong, Y.; Chen, H.; Li, X.; Xia, W.; Tu, Z.; Soatto, S. Long Short-term Transformer for Online Action Detection. Adv. Neural Inf. Process. Syst. 2021, 34, 1086–1099.

- Carreira, J.; Zisserman, A. Quo Vadis, Action Recognition? A New Model and the Kinetics Dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6299–6308.

- Li, K.; Wang, Y.; Li, Y.; Wang, Y.; He, Y.; Wang, L.; Qiao, Y. Unmasked Teacher: Towards Training-Efficient Video Foundation Models. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–6 October 2023; pp. 19891–19903.

- Lin, T.; Liu, X.; Li, X.; Ding, E.; Wen, S. BMN: Boundary-matching Network for Temporal Action Proposal Generation. In Proceedings of the International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 3888–3897.

- Lin, T.; Zhao, X.; Su, H.; Wang, C.; Yang, M. BSN: Boundary Sensitive Network for Temporal Action Proposal Generation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Idrees, H.; Zamir, A.R.; Jiang, Y.-G.; Gorban, A.; Laptev, I.; Sukthankar, R.; Shah, M. The THUMOS Challenge on Action Recognition for Videos “in the Wild”. Comput. Vis. Image Underst. 2017, 155, 1–23.

- Hochreiter, S.; Schmidhuber, J. Long Short-term Memory. Neural Comput. 1997, 9, 1735–1780.

- De Geest, R.; Tuytelaars, T. Modeling Temporal Structure with LSTM for Online Action Detection. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 1549–1557.

- Wang, X.; Zhang, S.; Qing, Z.; Shao, Y.; Zuo, Z.; Gao, C.; Sang, N. OadTR: Online Action Detection with Transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 7565–7575.

- Chung, J.; Gulcehre, C.; Cho, K.H.; Bengio, Y. Empirical Evaluation of Gated Recurrent Neural Networks on Sequence Modeling. arXiv 2014, arXiv:1412.3555.

- Elman, J.L. Finding Structure in Time. Cogn. Sci. 1990, 14, 179–211.

- Schuster, M.; Paliwal, K.K. Bidirectional Recurrent Neural Networks. IEEE Trans. Signal Process. 1997, 45, 2673–2681.

- Yun, S.; Han, D.; Oh, S.J.; Chun, S.; Choe, J.; Yoo, Y. CutMix: Regularization Strategy to Train Strong Classifiers with Localizable Features. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6023–6032.

- Yun, S.; Oh, S.J.; Heo, B.; Han, D.; Kim, J. VideoMix: Rethinking Data Augmentation for Video Classification. arXiv 2020, arXiv:2012.03457.

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the International Conference on Machine Learning (ICML), Lille, France, 6–11 July 2015; pp. 448–456.

- Zhao, Y.; Krähenbühl, P. Real-time Online Video Detection with Temporal Smoothing Transformers. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 485–502.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

- Yang, L.; Han, J.; Zhang, D. Colar: Effective and Efficient Online Action Detection by Consulting Exemplars. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 3160–3169.

- Gao, M.; Zhou, Y.; Xu, R.; Socher, R.; Xiong, C. WOAD: Weakly Supervised Online Action Detection in Untrimmed Videos. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 1915–1923.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).