Abstract

Due to the randomness of participants’ movement and the selfishness and dishonesty of individuals in crowdsensing, the quality of the sensing data collected by the server platform is uncertain. A reasonable incentive mechanism is therefore needed to keep the quality of sensing data stable. Most existing incentive mechanisms for data quality in crowdsensing are based on traditional economics, which holds that a participant’s decision to complete a task depends on whether the benefit of the task exceeds the cost of completing it. However, behavioral economics shows that people are affected by costs they have already invested, which biases their decisions. Unlike existing research on incentive mechanisms, this paper considers the impact of sunk cost on user decision-making. An incentive mechanism based on sunk cost, called IMBSC, is proposed to motivate participants to improve data quality. IMBSC stimulates the sunk cost effect of participants through an effort sensing reference factor and a withhold factor, prompting them to improve their own data quality. The effectiveness of IMBSC is verified through simulation experiments from three aspects: platform utility, participant utility, and the number of tasks completed. The simulation results show that, compared with a system without IMBSC, platform utility increases by more than 100%, the average utility of participants increases by about 6%, and task completion increases by more than 50%.

1. Introduction

Crowdsensing refers to forming a sensing network from people’s existing mobile devices and publishing sensing tasks to individuals or groups in the network for completion, thereby helping professionals or the public to collect data, analyze information, and share knowledge [1]. At present, crowdsensing is applied in many aspects of real life, such as traffic monitoring [2], environmental monitoring [3], and road condition monitoring [4]. Many problems remain in these applications. In the process of completing a sensing task, participants spend personal time and consume the resources of their terminal equipment, such as data traffic, battery power, and computing resources. Participants therefore expect an economic reward as compensation for this resource consumption, and the server platform pays appropriate rewards to incentivize them to take part in sensing tasks. There may also be malicious participants in the crowdsensing system who hope to obtain more reward at a small cost or who directly submit false data. In addition, participants may submit data of unsatisfactory quality because of limited time, the low accuracy of the sensing module in the terminal device, or their own negligence. To solve these problems, it is necessary to design a reasonable mechanism that motivates participants to submit high-quality data.

At present, many scholars have studied incentive mechanisms for data quality [5,6,7,8,9,10], but most of this work is based on traditional economics. It assumes that users aim to maximize their individual future utility when making decisions, and consider only future costs and benefits [11]. However, more and more studies in behavioral economics show that people are affected by costs they have already invested, which biases their decisions; such past investment is the sunk cost. Sunk cost refers to a cost that people have already invested and cannot recover, and the sunk cost effect refers to the fact that people choose to continue their previous behavior in order to avoid losses [12]. Sunk costs play an important role in participants’ decision-making and are widespread in daily life, for example in commodity consumption and market investment. It is therefore meaningful to introduce the sunk cost effect into the design of incentive mechanisms for crowdsensing [13].

Unlike existing research on incentive mechanisms [5,6,7,8,9,10], this paper considers the impact of sunk cost on user decision-making and proposes an incentive mechanism for improving data quality based on the sunk cost effect. Taking participants as the incentive object, the reverse auction as the carrier, and data quality as the incentive goal, the mechanism uses an effort sensing reference factor and a withhold factor to motivate participants to perform sensing tasks and submit high-quality sensing data. Participants evaluate their own effort level through the effort sensing reference factor provided by the server platform. The server platform further withholds part of the participants’ reward through the withhold factor to stimulate their sunk cost effect. In order to win the withheld reward, participants resubmit high-quality data to the platform, which ultimately improves data quality.

The remainder of the study is organized as follows. Section 2 introduces the incentive mechanism for data quality in crowdsensing and sunk cost effect. In Section 3, the Incentive Mechanism based on Sunk Cost (IMBSC) is presented in detail. In Section 4, simulations verify the effectiveness of IMBSC in improving task completion quality.

2. Related Work

2.1. Research on Incentive Mechanism for Data Quality in Crowdsensing

At present, research on crowdsensing incentive mechanisms mainly focuses on social benefits, protecting the privacy of participants, task allocation algorithms, ensuring the authenticity of the incentive mechanism, and ensuring data quality. To incentivize participants to submit high-quality data, Yang Di et al. [5] proposed a user reputation evaluation mechanism that combines the reputation score fed back by the requester to the participant, the objective time reliability, and the reliability of the provided data size. They designed an online incentive algorithm based on reputation updates by considering the update mechanism of user history and display reputation records. Yang Jing et al. [6] proposed to analyze the historical trust status of participants through their degree of willingness and the data quality of historical tasks, evaluate their historical reputation, and dynamically update their reputation value in subsequent tasks. The server platform selects participants according to the reputation value, so as to collect high-quality sensing data accurately and in real time. Dai et al. [7] proposed to guarantee data quality in two ways: constraining the physical distance between participants and tasks, and using a linear model to calculate whether the quality sum of participants meets the quality threshold. Peng et al. [8] proposed to evaluate data quality with an expectation maximization algorithm that combines maximum likelihood and Bayesian inference, so as to reward participants fairly and appropriately and further incentivize users to submit high-quality data. Wen et al. [9] designed a probability model for indoor positioning scenarios to evaluate data reliability, and paid participants according to the number of data requesters. This not only controlled data quality, but also effectively motivated participants to submit high-quality data the next time.
Research on participant reputation and research on quality guarantees both mostly evaluate the reputation or quality of participants from historical data, but these studies ignore an important feature of historical data, namely the relationship between data points in the time series. When the time characteristics of historical data are ignored, the resulting evaluation of reputation or quality also loses its time characteristics. Wang et al. [10] proposed to model the quality of participants as a latent time series based on a linear dynamical system, using the Expectation Maximization (EM) algorithm to periodically estimate the participant hyper-parameters and ensure the accuracy of quality assessment. Ref. [14] evaluated the user’s task performance through data accuracy and response time, with the user’s task reward depending on that performance. The mechanism thus not only motivates users to participate in the sensing task, but also ensures the completion quality of the task. Ref. [15] made use of the diversity of inherently inaccurate data from many users to aggregate crowd-sensed data, improving the accuracy of data aggregation, and paid users according to the quality of the data submitted by each individual and the truth value of the aggregated data. Ref. [16] proposed an allocation algorithm that effectively uses and manages idle resources to obtain maximum benefits and rewards users in proportion to the quality of task completion, which improves both the participation rate and the data quality of users. The reward paid by the platform to users is determined by the quality of the data they provide. Ref. [17] proposed a payment mechanism, named Theseus, that deals with users’ strategic behavior and incentivizes high-effort sensing from users. It ensured that, at the Bayesian Nash Equilibrium of the non-cooperative game induced by Theseus, all participating users spend their maximum possible effort on sensing, which improves their data quality.

2.2. Sunk Cost Effect

The sunk cost effect [12] refers to the fact that people choose to continue their previous behavior in order to avoid losses. There are many sources [13] of the sunk cost effect, and we mainly use the mental account in the cognitive mechanism [18]. The mental account refers to people’s psychological estimation of the outcome, that is, people’s psychological measurement of gains and losses. “Gains” refers to the obtained utility, and “Losses” refers to the total amount paid by people in the process of consumption, that is, people’s sunk cost. The mental account reaches equilibrium only if people’s gain utility is greater than their sunk costs. Generally, the mental account value function is used to represent people’s gains and losses [18], as shown in Formula (1).

where represents the reference point of people. It can be seen from Formula (1) that when people judge their gains and losses, they are based on the reference point, rather than the absolute value of gains and losses. Sunk cost effect has been studied in many fields, for example, Ref. [19] studies the influence of psychological distance on sunk cost, Ref. [20] studies the sunk cost effect in engineering change in engineering management, and Ref. [21] studies the positive and negative effects of sunk cost effect on consumers.
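As an illustration of a reference-dependent value function, the following minimal sketch assumes the common Kahneman-Tversky form: a power function of the distance from the reference point, with losses scaled by a loss aversion factor. Formula (1) is not reproduced here, so this form is an assumption rather than the authors' exact definition. The function name and parameter names are hypothetical; the default values 0.88 and 2.25 are the classical values that the experimental setup in Section 4.1 cites from reference [23].

```python
def mental_account_value(outcome, reference=0.0, risk_attitude=0.88, loss_aversion=2.25):
    """Value of an outcome judged relative to a reference point.

    Assumed form (not taken from the paper's Formula (1)):
      gains  -> (outcome - reference) ** risk_attitude
      losses -> -loss_aversion * (reference - outcome) ** risk_attitude
    """
    delta = outcome - reference
    if delta >= 0:
        # Gain relative to the reference point: concave power function.
        return delta ** risk_attitude
    # Loss relative to the reference point: scaled up by loss aversion.
    return -loss_aversion * ((-delta) ** risk_attitude)


# The same absolute outcome is a gain or a loss depending on the reference point.
gain = mental_account_value(10.0, reference=0.0)
loss = mental_account_value(10.0, reference=20.0)
```

Under these assumptions, a loss of a given size weighs more heavily than a gain of the same size, which is the asymmetry the mental account relies on.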

As shown above, the sunk cost effect has been studied in many fields, but it has hardly been applied to incentive mechanisms for crowdsensing. In this paper, the sunk cost effect is introduced into the incentive mechanism of crowdsensing to motivate participants to improve their own data quality, and thereby raise the data quality level of the whole crowdsensing system.

3. Design of IMBSC

This part first gives the system model, and then mainly explains the design process of IMBSC.

3.1. System Model

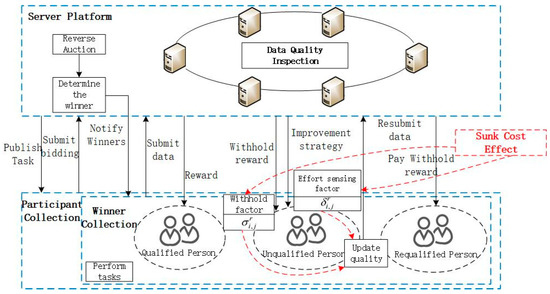

As shown in Figure 1, the IMBSC system model mainly includes two parts: server platform and participants. For the server platform, it is hoped that more high-quality data can be obtained at a lower cost, thereby improving the utility of the server platform. IMBSC will focus on this aspect of the incentive mechanism, by setting some parameters and using the sunk cost effect to influence the decision-making of participants and improve the data quality.

Figure 1. IMBSC system model.

In IMBSC, one run (that is, one task completion process) includes three stages: task publishing, selection reward payment, and final reward payment. Once the final reward payment stage of one run is completed, the task publishing stage of the next run begins. As shown in Figure 1, in the task publishing stage, the platform first publishes the task. Then, users submit bidding information based on the task information and their own capabilities. Next, the platform selects the winning participants through the reverse auction according to their bidding information. After that, each winning participant performs the task and submits the sensing data. The platform assesses the quality of the submitted data. Participants who meet the quality threshold are paid their reward, and participants who do not meet the quality threshold are paid a certain proportion of the reward. Those who fall short of the quality threshold evaluate their own effort according to the effort sensing reference factor and are affected by sunk costs. In order to obtain the rest of the reward, they continue to participate in the task and improve the quality of their sensing data. The platform pays the remaining reward once their data quality reaches the threshold.

Next, the process of each stage is elaborated in detail.

Task publishing stage: The server platform publishes task set and task budget set to participants in specific areas according to task characteristics, that is, the th task has a task budget . In addition to the public task budget, the server platform also has a private task value attribute , that is, the th task has a task value . The th participant evaluates the tasks suitable for completion according to the task information given by the server platform and his own ability level, and submits a binary group to the server platform. indicates the set of tasks that participants want to complete, and for any , there is . represents the set of bidding from participant corresponding to task set . represents the set of bidding information submitted by all participants.

Selection reward payment stage: After receiving the bidding information submitted by the participants, the server platform selects appropriate participants as winners according to the bidding information, forming a winner set. Participants who are not selected do not belong to the winner set and are not required to perform tasks. Each winner executes the task and submits data to the server platform, which evaluates the data quality. A winner whose data quality exceeds the quality threshold is called a quality achiever; conversely, a winner whose data quality does not exceed the quality threshold is called a quality underachiever. The server platform pays quality achievers the reward stated in their bidding information, and pays quality underachievers a certain proportion of the reward according to their bidding information and data quality. The server platform also feeds back an effort sensing reference factor to quality underachievers, who evaluate their own effort according to this factor. Quality underachievers then feel dissatisfied with the gap between the proportion of reward they receive at this stage and their own effort [13], which stimulates their sunk cost effect. In order to recover sunk costs and obtain the remaining proportion of the reward, they continue to perform tasks, improve the quality of their data, and resubmit data to the server platform.

Final reward payment stage: The server platform inspects the quality of the resubmitted data. A quality underachiever whose resubmitted data reach the quality threshold is called a quality re-achiever, and the server platform pays quality re-achievers the remaining proportion of the reward. Conversely, quality underachievers whose resubmitted data still do not meet the quality threshold receive no compensation at this stage.

Some parameters used in this paper are listed in Table 1.

Table 1. Common parameters and their meanings.

3.2. The Influence of Sunk Cost Effect on Participants’ Decision-Making

3.2.1. Effort Sensing Reference Factor

The platform judges whether the data submitted by the participant for the first time is qualified according to the task quality threshold . If the quality of the data submitted for the first time in the r-th run by participant satisfy that , the participants is only paid . Then, the platform will send data quality and a percentage to the participants whose quality of the data submitted for the first time is not qualified, and indicates how many other participants the quality of the data submitted by the participant for the first time exceeds. The purpose is that participants can use as a reference to evaluate their own efforts. The reason we do not directly send the real percentage is that when the participant’s self-perceived effort is higher, the possibility of participant choosing to continue to improve the data quality to complete the task is higher. Appropriately increasing the effort sensing reference factor given to the participants will motivate the initial participants to improve their effort sensing. So is private to the platform. In the Definition 1, the real proportion is defined.

Definition 1 (Real proportion ).

is the proportion of participants in the winner set who submitted data quality smaller than for the first time. The calculation formula of is shown in Formula (2):

where is the set of winners in the r-th run, is the set of winners whose data quality submitted for first time is smaller than , and . Then we will define effort sensing factor in Definition 2.
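Definition 1 can be illustrated with a minimal sketch that counts, for one participant, the share of winners whose first-submission quality is strictly lower. Formula (2) is not reproduced here, so the choice of the full winner set as the denominator is an assumption, and the function and variable names are hypothetical.

```python
def real_proportion(first_qualities, participant):
    """Share of winners whose first-submission quality is below the participant's.

    first_qualities: dict mapping each winner to the quality of the data
    it submitted for the first time in the current run.
    Assumption: the denominator is the size of the whole winner set.
    """
    own_quality = first_qualities[participant]
    # Winners whose first-submission quality is strictly smaller.
    outperformed = [w for w, q in first_qualities.items() if q < own_quality]
    return len(outperformed) / len(first_qualities)


# Hypothetical run with four winners.
qualities = {"a": 0.2, "b": 0.5, "c": 0.8, "d": 0.9}
proportion_c = real_proportion(qualities, "c")  # "c" outperforms "a" and "b"
```

Because the platform keeps this real proportion private, only the adjusted effort sensing reference factor derived from it is sent back to the participant.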

Definition 2 (Effort sensing reference factor ).

is the proportion of participants whose data quality satisfy in the r-th run, which submitted data quality for the first time more than , and it is given by the platform. It means the platform provides a reference for perceptions of self-effort to participant whose data quality is not qualified, and participant will evaluate his effort in collecting data for the first time based on the proportion of platform feedback. When is smaller than 0.5, the feedback is too small even though has been increased, so motivation to participants is weak. For participants continuing complete task, when is smaller than 0.5, the platform directly sets as a constant above 0.5. is calculated as Formula (3):

when , we set as a constant to ensure the continuity of . Since is a proportion, its value range is [0, 1]. Since is also a proportion, its value range must be a subset of [0, 1]. As Definition 2 shown, always satisfies . We will use Theorem 1 to prove that defined by Formula (3) satisfies the above two requirements, so as to illustrate the rationality of the formula.

Theorem 1.

When , value range of is a subset of [0, 1], and .

Proof of Theorem 1.

First, let us prove that when , the value range of is a subset of [0, 1].

Case1: When , , so the value range of equal to is a subset of [0, 1].

Case2: is increasing, when , and when , , and when , . So the value range of is , when , and . So the value range of is a subset of [0, 1].

Then, let us prove that when , we always have .

Case1: When , , obviously .

Case2: let us proof that when , we always have . To proof that when , we always have , we only need to proof that is always satisfied for . Let , and . To calculate extremum of , let , and we have . By derivative calculation, monotonically decreases on , and , so when , it is always satisfied that , which means when , it is always satisfied that . In summary, we always have , when .

Proven. □

Definition 3 (Withhold factor ).

In r-th run, when is smaller than , the platform will withhold the reward of the participant , and only pay . When participant submit data for second time and its quality reach , then the platform will pay the rest . is calculated as Formula (4):

where, is a constant, and . is set to exclude participants whose is smaller in , so that only if participant satisfies , is qualified to get paid. The platform evaluates the quality of the data submitted for the first time by participant by , and when doesn’t reach quality threshold , the platform return based on to participant , and participant judge his self effort by . For task , as and participant receive bigger, participant will think that the more effort he puts in to perform the task, the more payment he should get after submitting the data. is based on , so with getting bigger, is also getting bigger. We will proof in Theorem 2 that for task , when submitted by participant is fixed, the greater the proportion of participants he outperforms, the more payment it is, that is to prove for task , when and are fixed, we have .

Theorem 2.

For task , when and are fixed and , we have .

Proof of Theorem 2.

Because , we just need to consider the situation that the max part in Formula (4) is equal to , and and are fixed, so it obviously we just need to proof for .

(1) When , is a constant, then with increasing, is decreasing which means is increasing. Therefore, when , with increasing, is increasing, which means .

(2) It is shown in Theorem 1 that when , is decreasing, which means with increasing, is decreasing and according to Formula (3) it is obviously when , with increasing, is increasing, so is increasing, which means .

(3) We proofed , when and , and we need to proof that the left-side and right-side of satisfy that the left-side is smaller than right-side. When , it is shown in (1) that is smaller than . When , it is shown in (2) that . Therefore, the left-side of is smaller than right-side. Combining (1) (2) (3), for task we have , when and are fixed.

Proven. □

Combining Theorem 2 and Formula (3), we have that the feedback is bigger, the more payment participant receive, when and are fixed, which is in line with economic principles. Although we didn’t mention the relationship between and , it is obviously that as increases, also tends to increase, because the increase of also increases the probability that will increase, and it is shown in Formula 4 that the increase of can also act directly on .

3.2.2. Data Quality Update Based on Sunk Cost Effect

As mentioned in Section 3.2.1, the platform uses the quality threshold required by the task requester to judge whether the quality of the data submitted by the participant is qualified, that is , at that time, is deemed qualified, otherwise, is deemed unqualified. For each of the unqualified participants in the set , the platform will withhold payment , feedback and give an additional opportunity to submit data. will judge my own quality and effort based on and , and decide whether to use this extra opportunity to recover the withheld reward.

For in , the completion quality of the second uploaded data is not only related to itself, but also affected by and the sunk cost effect. If is used to express the quality of the second submission data affected by the sunk cost effect, there is Formula (5).

among them, , represents the internal reference weight, and represents the degree of influence of the sunk cost effect on . When , it means that the sunk cost effect has a smaller impact on , and vice versa, it has a greater impact. From the perspective of traditional economics, the cost paid by participants is , but participants will be affected by the effort sensing factor we provide when evaluating the value of their own efforts, overjudge their own efforts, and think that the sunk cost is . This section will use Theorem 3 to illustrate how sunk costs affect participants.

Theorem 3.

In the rth run, when and , there is .

Proof of Theorem 3.

First, let , after simplification, . Among them, the internal reference weight , ’s first data quality , and can be obtained according to Formula (3). Therefore, , that is , the proof is completed.

Proven. □

Theorem 3 shows that is affected by and overestimates its own effort cost. In turn, it is easier to trigger the psychological sunk cost effect of , focus ’s mental focus on the cost paid, and promote to improve the quality of the second data. It can be obtained from the proof process, when , is obtained. When , indicates that did not submit any data or have any valid effort cost, so the sunk cost effect naturally did not exist. In most cases, the sunk cost effect can improve the data quality of ().

3.3. Incentive Mechanism Design Based on Sunk Cost Effect

With the reverse auction as the carrier and the sunk cost effect of participants in decision-making fully considered, an incentive mechanism based on the sunk cost effect is designed. A run is divided into three sequential stages: the task publishing stage, the selection reward payment stage based on the sunk cost effect, and the final reward payment stage based on the sunk cost effect.

3.3.1. Task Publishing

The process of the task publishing stage is shown in Algorithm 1.

In the task publishing stage, the server platform publishes the tasks and their values on the platform (lines 1–4). Participants register on the platform, choose suitable tasks according to their own preferences and abilities, evaluate the cost of performing those tasks, and send the selected task set and bidding information to the server platform (lines 5–11).

| Algorithm 1: Task publishing |
| --- |
| 1: FOR DO |
| 2: |
| 3: Releasing on the platform |
| 4: End FOR |
| 5: FOR DO |
| 6: Registering on the platform |
| 7: Selecting the tasks which are willing to execute |
| 8: Evaluating value |
| 9: |
| 10: Submitting to platform |
| 11: End FOR |

The selection reward payment stage can be divided into two parts: participant selection, and reward payment based on the sunk cost effect. In participant selection, the server platform selects suitable participants to complete the task according to the bidding information submitted by all participants; these participants are called winners. In reward payment based on the sunk cost effect, the winner performs the task and submits the data to the server platform, and the server platform then pays the winner a reward according to the data quality. At the same time, feedback information is given to those who fail to meet the quality standard to motivate them to improve data quality and resubmit data.

3.3.2. Participant Selection

In the participant selection stage, the server platform first arranges the tasks and generates winners according to the order of the tasks. When generating winners, arrange the participants in the order , and select the first few participants as the winners of the task.

Definition 4 (Task winner conditions).

For a certain task in the task set , the server platform sorts all participants who participate in the bidding task in descending order , and if there is the smallest participant that satisfies the Formula (6), then this participant is considered as a task the winner.

where represents the number of winners for task .

3.3.3. Reward Payment Stage Based on Sunk Cost Effect

Based on the participant selection, the server platform can get a set of winners. For any one , the server platform will pay it according to the data quality and bidding submitted for the first time.

The process of the whole selection payment stage is shown in Algorithm 2.

| Algorithm 2: Reward selection payment |
| --- |
| 1: Input: , , , , , |
| 2: Output: |
| 3: Sort all in descending order of |
| 4: FOR DO |
| 5: Sort all in ascending order of |
| 6: Find the smallest such that |
| 7: IF such exists THEN |
| 8: FOR all s.t DO |
| 9: |
| 10: |
| 11: End FOR |
| 12: End IF |
| 13: End FOR |
| 14: FOR DO |
| 15: performs his tasks |
| 16: submits his data to platform |
| 17: End FOR |
| 18: The platform tests the quality of the data |
| 19: FOR DO |
| 20: IF THEN |
| 21: Pay according to Definition 3 |
| 22: |
| 23: Give the and |
| 24: ELSE |
| 25: Pay the |
| 26: End IF |
| 27: End FOR |

Algorithm 2 describes the participant selection and reward payment process in one run. In participant selection, the server platform first sorts the tasks in the task set from large to small according to (line 3). The server platform then selects winners for each task in turn (lines 4–13). According to sorting the participants participating in the bidding task from large to small, it judges whether there are any previous participants satisfying Definition 4 (lines 5–6), and generates the winners for the task , if any exist (lines 7–13). The next step is the payment stage (lines 14–27): winners are paid according to Definition 3, and feedback information is sent to those who fail to meet the quality standard.

In the final reward settlement stage, after receiving the feedback information, those who fail to meet the quality standard are motivated to improve their data quality and submit data to the server platform again. When the resubmitted data meet the quality threshold, the server platform pays out the reward withheld from those who re-qualify. The process of the final reward settlement stage is shown in Algorithm 3.

| Algorithm 3: Final reward settlement |
| --- |
| 1: Input: |
| 2: FOR DO |
| 3: continues to perform his tasks and improve quality |
| 4: resubmits his data to platform |
| 5: End FOR |
| 6: The platform tests the quality of the data |
| 7: FOR DO |
| 8: IF THEN |
| 9: Pay the |
| 10: End IF |
| 11: End FOR |

According to the selection reward payment and the final reward settlement, when submits the first data quality , gets paid by as a reward, otherwise, will be obtained. When the resubmitted data quality of satisfies , the remaining reward will be obtained by , otherwise, no reward will be obtained. Then the reward of in the rth run can be obtained as Formula (7):

where represents the set of tasks for which participant is selected as the winner.
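The two-stage settlement described above can be sketched for a single task as follows. This is a minimal illustration, not the authors' Formula (7): it assumes that the withheld share is a fraction of the bid, that the withhold factor has already been computed by the platform (Formula (4) is not reproduced here), and that "reaching" the threshold means greater than or equal. All names are hypothetical.

```python
def run_reward(bid, first_quality, second_quality, threshold, withhold):
    """Return (selection_payment, final_payment) for one task in one run.

    bid:            reward requested in the participant's bidding information
    first_quality:  quality of the first submission
    second_quality: quality of the resubmission, or None if none was made
    threshold:      quality threshold of the task
    withhold:       assumed fraction of the bid held back, in [0, 1]
    """
    if first_quality >= threshold:
        # Quality met on the first submission: the bid is paid at once.
        return bid, 0.0
    # Quality not met: only part of the bid is paid in the selection stage.
    selection_payment = (1.0 - withhold) * bid
    if second_quality is not None and second_quality >= threshold:
        # Resubmission meets the threshold: the withheld part is released.
        return selection_payment, withhold * bid
    # Resubmission missing or still below threshold: nothing more is paid.
    return selection_payment, 0.0


# Hypothetical participant who falls short at first and then re-qualifies.
payments = run_reward(bid=10.0, first_quality=0.5, second_quality=0.85,
                      threshold=0.8, withhold=0.25)
```

Summing both payments over every task a participant wins gives that participant's total reward for the run.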

3.4. Utility Analysis

This section analyzes the effectiveness of IMBSC in terms of participants and server platform. For participants, IMBSC encourages participants to improve their data quality by effort sensing reference factor and withhold factor . And through the quality threshold , it makes realize that the reason why they cannot get rewards is that the quality of the data they submitted is not up to standard, and there will be no other psychological burden. For the server platform, IMBSC improves the effectiveness of the server platform by encouraging participants to improve data quality and complete more tasks.

3.4.1. Participant Utility

According to the process of incentive mechanism described in Section 3.3, the server platform provides different payment schemes according to the data quality of the tasks completed by the winners. In the rth run, when the task is completed, For participant who meet and , according to the theory of utility = benefit-cost in traditional economics, she can obtain the following utility when completing the task :

However, according to the sunk cost effect of behavioral economics, the participants can overestimate their own efforts by changing effort sensing factor, and the withhold factor detains part of the participants’ compensation, so that the psychological focus of the participants will focus on the sunk cost. When the quality of the data submitted by the participant for the second time is , they will receive sinking compensation . The return of this part of the reward will make the participant psychologically magnify the value of this part of the reward. So for the rth run, when task is completed, the utility of the participants meeting and is:

where is the value amplification factor and is the risk factor. represents the reward obtained in the payment selection stage and represents the cost of completing the task . refers to the reward that is withheld after the first data submission and obtained again after the second data submission. represents the value degree of reward to participant . The larger is, the greater the participant ‘ perceived effort is, the greater the value of is.

The above analyzes the utility of a participant whose data meets the quality threshold only after resubmission. Participants whose data quality meets the quality threshold on the first submission are not affected by the effort sensing reference factor and withhold factor fed back by the server platform, so their utility is benefit - cost. Participants whose data quality falls below the threshold on both submissions have improved their data quality under the influence of the effort sensing reference factor and withhold factor, but they can learn from the quality threshold given by the server platform that they are not paid because their submitted data quality is below standard. They therefore bear no other psychological burden, and their utility is also benefit - cost. So, in the rth run, when the task is completed, the utility of the winner is:

The utility of a participant in the rth run is the sum of her utilities over all tasks for which she is selected as the winner. Therefore, the utility of the participant in the rth run is:

where indicates the participant is the winner of task.
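As a rough illustration of the case analysis above, the following Python sketch sums per-task utilities over the tasks a participant wins in one run. It is a sketch under stated assumptions, not the paper's implementation: the `TaskOutcome` record, the function names, and the caller-supplied `sunk_cost_utility` function are hypothetical, and the paper's own formula for the sunk-cost case is deliberately not hard-coded.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class TaskOutcome:
    """One task of one participant in a single run (hypothetical record layout)."""
    reward: float     # reward obtained in the payment selection stage
    cost: float       # cost of completing the task
    withheld: float   # reward withheld after the first submission
    is_winner: bool   # True if the participant was selected as winner of the task
    first_ok: bool    # first submission met the quality threshold
    second_ok: bool   # resubmission met the quality threshold


def task_utility(o: TaskOutcome,
                 sunk_cost_utility: Callable[[TaskOutcome], float]) -> float:
    """Utility of one task, following the three cases discussed in the text."""
    if not o.is_winner:
        return 0.0  # tasks not won contribute nothing to the run utility
    if not o.first_ok and o.second_ok:
        # Sunk-cost case: the formula is an assumption supplied by the caller.
        return sunk_cost_utility(o)
    # Qualified at once, or unqualified twice: traditional benefit - cost.
    return o.reward - o.cost


def run_utility(outcomes: Iterable[TaskOutcome],
                sunk_cost_utility: Callable[[TaskOutcome], float]) -> float:
    """Sum of the task utilities of one participant in one run."""
    return sum(task_utility(o, sunk_cost_utility) for o in outcomes)
```

A caller would pass its own model of the sunk-cost case, for example a placeholder such as `lambda o: o.reward - o.cost + 1.5 * o.withheld`, where the weight 1.5 is purely illustrative.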

3.4.2. Platform Utility

IMBSC focuses on data quality, which must therefore be taken into account when calculating platform effectiveness. For any participant whose data quality falls below the quality threshold, a task that she alone has performed cannot be regarded as completed. So the platform utility of IMBSC is:

where represents the union of tasks performed by all participants in set that meet , and represents the sum of the values of all tasks in the set , as given in Formula (7).
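The quality-aware accounting described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions: the dictionary layout, the names `completed_tasks` and `platform_utility`, the use of one quality score per participant, and the choice to subtract the total payments from the total task value are all hypothetical. What the text itself states is only that tasks count as completed when they are performed by participants who meet the quality threshold.

```python
from typing import Dict, Set


def completed_tasks(performed: Dict[str, Set[str]],
                    quality: Dict[str, float],
                    threshold: float) -> Set[str]:
    """Union of the tasks performed by participants who meet the threshold."""
    done: Set[str] = set()
    for participant, tasks in performed.items():
        if quality[participant] >= threshold:
            done |= tasks
    return done


def platform_utility(performed: Dict[str, Set[str]],
                     quality: Dict[str, float],
                     threshold: float,
                     task_value: Dict[str, float],
                     payments: Dict[str, float]) -> float:
    """Value of the completed tasks minus total payments (assumed form)."""
    done = completed_tasks(performed, quality, threshold)
    total_value = sum(task_value[t] for t in done)
    return total_value - sum(payments.values())
```

With two participants where only one meets the threshold, a task performed solely by the unqualified participant adds no value, while a task shared with the qualified participant still counts once.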

4. Simulation Experiment and Result Analysis

4.1. Experimental Setup

In this section, we compare IMBSC with the STATIC mechanism, which does not introduce sunk cost. STATIC uses the same reverse-auction selection algorithm as IMBSC to select the winner set. Once the winner set is selected, STATIC pays the winners whose data quality meets the quality threshold and does not use the effort sensing reference factor or the withhold factor to influence their data quality.

To ensure a fair comparison, IMBSC and STATIC use the same experimental environment and parameters. For the value of , this section adopts 0.85, the same value as in reference [22]. The loss aversion factor and the risk attitude factor take the classical values 2.25 and 0.88 from reference [23]. Both mechanisms are simulated in Java. All experimental results are averaged over 1000 runs. Other experimental parameter settings are listed in Table 2.
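The values 2.25 and 0.88 are the parameter estimates of the value function in cumulative prospect theory [23]. As a reminder of what they control, the sketch below implements that standard value function in Python. The constant and function names are illustrative, and how IMBSC combines these factors with the withheld reward is defined by the paper's own formulas, which this sketch does not attempt to reproduce.

```python
LOSS_AVERSION = 2.25   # classical loss aversion estimate from [23]
RISK_ATTITUDE = 0.88   # classical curvature (risk attitude) estimate from [23]


def prospect_value(x: float) -> float:
    """Subjective value of a gain (x >= 0) or a loss (x < 0)."""
    if x >= 0:
        # Gains are valued concavely: diminishing sensitivity.
        return x ** RISK_ATTITUDE
    # Losses are valued with the same curvature but weighted more heavily.
    return -LOSS_AVERSION * (-x) ** RISK_ATTITUDE
```

Under these parameters a loss weighs 2.25 times as much as a gain of the same size, which is the asymmetry that a withheld reward is meant to exploit.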

Table 2. Experimental Parameters.

4.2. Experimental Results

To verify the effectiveness of IMBSC, this section analyzes the server platform utility, the average utility of participants, and the number of tasks completed through simulation experiments. When examining the impact of the number of participants on the server platform utility and the average utility of participants, the number of tasks is fixed at 200. When examining the impact of the number of tasks on these two metrics, the number of participants is fixed at 200.

4.2.1. Server Platform Utility

The server platform utility measures how reasonable the revenue and expenditure of the incentive mechanism are. Formula (12) gives the server platform utility for a single run. This section evaluates the server platform utility as the average of the utilities over all runs.
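Averaging a per-run metric over repeated runs can be sketched as below. The authors' simulation is written in Java and is not shown in the paper, so this Python helper is only an assumed outline: `run_once` stands for a hypothetical function that executes one run of a mechanism and returns the metric of interest, and the fixed seed is an illustrative choice for reproducibility.

```python
import random
from statistics import mean
from typing import Callable


def average_over_runs(run_once: Callable[[random.Random], float],
                      runs: int = 1000,
                      seed: int = 0) -> float:
    """Average the metric returned by run_once over the given number of runs."""
    rng = random.Random(seed)  # one seeded generator shared by all runs
    return mean(run_once(rng) for _ in range(runs))
```

Passing the same seed to both mechanisms would let IMBSC and STATIC be evaluated on identically generated scenarios, in line with the requirement that they share the same experimental environment.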

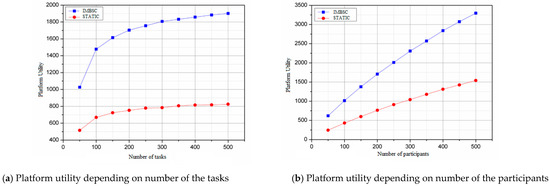

Figure 2 shows how the server platform utility of the two mechanisms changes with the number of tasks and the number of participants. Figure 2a shows that the server platform utility of both mechanisms increases with the number of tasks. As the number of tasks increases, participants have more tasks to choose from, so more tasks are completed and the utility of the server platform increases. Once the number of tasks is large enough, the utility grows more slowly: the number of participants is fixed and each participant has limited energy, so the number of tasks that participants can complete in each round is limited. When the number of tasks is large, the number of tasks completed by participants saturates and the growth of the server platform utility slows. Figure 2b shows that the server platform utility of both mechanisms also increases with the number of participants. As the number of participants increases, competition among participants intensifies, more participants are available for each task, and tasks are more likely to be completed. The server platform utility of IMBSC is higher than that of STATIC, mainly because IMBSC uses the effort sensing reference factor and the withhold factor to motivate winners to improve their data quality. When winners who initially fail to meet the quality standard resubmit higher-quality data, some previously unfinished tasks become complete, which improves the server platform utility.

Figure 2. Platform utility depending on the number of tasks and participants.

4.2.2. Average Utility of Participants

The average utility of participants reflects, to some extent, the feasibility of the incentive mechanism. In this section, it is the average utility of the participants in the winner set. Formula (11) gives the utility of a participant for a single run.

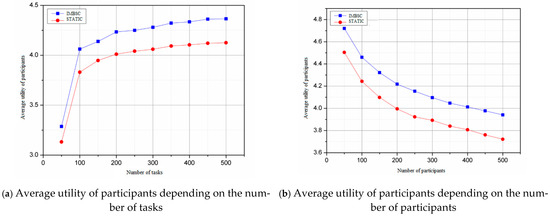

Figure 3 shows how the average utility of the participants of the two mechanisms changes with the number of tasks and the number of participants. The average utility of the participants under IMBSC is again higher than under STATIC. Assume that , STATIC will only pay the reward to the participants whose data quality is unqualified for the first time. When the data that participants submit the second time is qualified, the remaining reward is paid, which improves the average utility of the participants, so the average utility under IMBSC remains higher than under STATIC. According to Figure 3a, the average utility of the participants under both mechanisms increases with the number of tasks. More tasks provide more opportunities to perform tasks, so each participant performs more tasks and the average utility rises. According to Figure 3b, the average utility of the participants under both mechanisms decreases as the number of participants increases. With more participants, the participants become saturated relative to the available task execution opportunities, so each participant performs fewer tasks on average and the average utility falls.

Figure 3. Average utility of participants.

4.2.3. Number of Tasks Completed

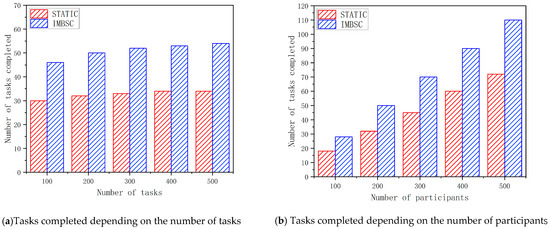

Figure 4 shows how the number of tasks completed varies with the number of tasks and the number of participants. IMBSC completes more tasks than STATIC. Suppose a participant executes a task and the task is not completed. When the participant improves her data quality and resubmits data of qualifying quality, the task is completed and the number of completed tasks increases by 1, so IMBSC completes more tasks than STATIC. In Figure 4a, the number of tasks completed increases with the number of tasks, because more tasks lead participants to perform more tasks. The increase is small because, for the given number of participants, the number of tasks is close to saturation, so adding tasks cannot significantly increase the number completed. In Figure 4b, the number of tasks completed also increases with the number of participants. The number of tasks is saturated while the number of participants is not, so adding participants allows more tasks to be completed.

Figure 4. Number of tasks completed.
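The resubmission argument behind Figure 4 can be made concrete with a small Python sketch. It rests on assumptions that are not spelled out in this section: the nested dictionary layout, the function name `count_completed`, and the rule that a task counts as completed once at least one submission for it reaches the quality threshold are all hypothetical simplifications.

```python
from typing import Dict, Optional

# task -> participant -> data quality of that participant's submission
Quality = Dict[str, Dict[str, float]]


def count_completed(first: Quality,
                    threshold: float,
                    second: Optional[Quality] = None) -> int:
    """Count tasks with at least one submission meeting the threshold.

    Passing only `first` mimics a mechanism without resubmission;
    passing `second` as well also considers the resubmitted data.
    """
    completed = 0
    for task, by_participant in first.items():
        best = max(by_participant.values(), default=0.0)
        if second is not None:
            # Resubmissions can only raise the best quality seen for a task.
            best = max([best, *second.get(task, {}).values()])
        if best >= threshold:
            completed += 1
    return completed
```

For instance, if one task has a qualifying first submission and another only reaches the threshold after resubmission, the count is 1 without resubmissions and 2 with them, which mirrors the one-task gain described in the text.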

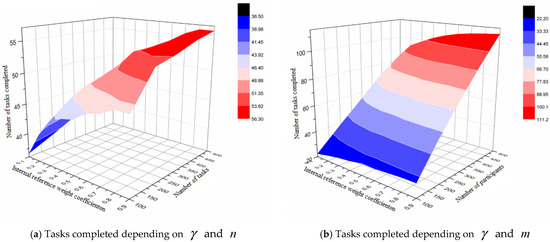

Figure 5 shows the influence of the internal reference weight factor on the number of tasks completed. According to Figure 5a, the number of tasks completed increases with the number of tasks and with the internal reference weight factor. According to Figure 5b, the number of tasks completed increases with the number of participants and with the internal reference weight factor. Because this factor reflects how strongly participants are driven to improve their data quality, a larger value yields a greater quality improvement and therefore more completed tasks. The results in Figure 5 are thus consistent with the theory in Formula (5).

Figure 5. Impact of the internal reference weight factor on the number of tasks completed.

5. Summary

This paper designs IMBSC, a crowdsensing incentive mechanism based on the sunk cost effect, to motivate participants to improve the quality of sensing data. IMBSC is built on a reverse auction and, through the effort sensing reference factor and the withhold factor, urges participants to perform tasks and submit high-quality sensing data. Participants evaluate their own effort through the effort sensing reference factor provided by the server platform, and the server platform withholds part of their reward through the withhold factor to stimulate the psychological sunk cost effect. Participants under the sunk cost effect resubmit data to the server platform to win back the withheld reward, which improves the quality of the sensing data. The effectiveness of IMBSC is verified through simulation experiments from three aspects: the server platform utility, the average utility of participants, and the number of tasks completed. The simulation results show that, compared with the system without the IMBSC mechanism, the platform utility increases by more than 100%, the average utility of participants increases by about 6%, and task completion increases by more than 50%. This paper studies how the sunk cost effect improves the data quality of participants within one run and does not examine its impact on the next run. In fact, for participants who have not received the withheld reward after the second data submission, the sunk cost effect carries over into the next run. Future work can focus on how the sunk cost effect affects the data quality of participants across multiple runs.

Author Contributions

K.W., Z.C. and L.Z. designed the project and drafted the manuscript, as well as collected the data. J.L. and B.L. wrote the code and performed the analysis. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Science and Technology Project of State Grid Ningxia Electric Power Co., Ltd. Information and Communication Branch (Research on Key Technologies of Anomaly Detection of Electric Power Information Equipment Based on Deep Learning). The contract number is SGNxxt00xxJS2200078.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Capponi, A.; Fiandrino, C.; Kantarci, B.; Foschini, L.; Kliazovich, D.; Bouvry, P. A Survey on Mobile Crowdsensing Systems: Challenges, Solutions, and Opportunities. IEEE Commun. Surv. Tutor. 2019, 21, 2419–2465.

2. Liu, Y.; Li, H.; Guan, X.; Yuan, K.; Zhao, G.; Duan, J. Review of incentive mechanism for mobile crowd sensing. J. Chongqing Univ. Posts Telecommun. (Nat. Sci. Ed.) 2018, 30, 147–158.

3. Liu, Z.D.; Jiang, S.Q.; Zhou, P.F. A participatory urban traffic monitoring system: The power of bus riders. IEEE Trans. Intell. Transp. Syst. 2017, 18, 2851–2864.

4. Dutta, P.; Aoki, P.M.; Kumar, N.; Mainwaring, A.; Myers, C.; Willett, W.; Woodruff, A. Demo Abstract: Common Sense: Participatory urban sensing using a network of handheld air quality monitors. In Proceedings of the International Conference on Embedded Networked Sensor Systems. DBLP, Berkeley, CA, USA, 4–6 November 2009; pp. 349–350.

5. Yang, D.; Li, Z.; Yang, L.; Xu, Q. Reputation-Updating Online Incentive Mechanism for Mobile Crowd Sensing. J. Data Acquis. Proc. 2019, 34, 797–807.

6. Yang, J.; Li, P.-C.; Yan, J.-J. MCS data collection mechanism for participants’ reputation awareness. Chin. J. Eng. 2017, 39, 1922–1934.

7. Dai, W.; Wang, Y.; Jin, Q.; Ma, J. Geo-QTI: A quality aware truthful incentive mechanism for cyber-physical enabled Geographic crowdsensing. Future Gener. Comput. Syst. 2018, 79 Pt 1, 447–459.

8. Peng, D.; Wu, F.; Chen, G. Data Quality Guided Incentive Mechanism Design for Crowdsensing. IEEE Trans. Mob. Comput. 2018, 17, 307–319.

9. Wen, Y.; Shi, J.; Zhang, Q.; Tian, X.; Huang, Z.; Yu, H.; Cheng, Y.; Shen, X. Quality-Driven Auction-Based Incentive Mechanism for Mobile Crowd Sensing. IEEE Trans. Veh. Technol. 2015, 64, 4203–4214.

10. Wang, H.; Guo, S.; Cao, J.; Guo, M. Melody: A Long-Term Dynamic Quality-Aware Incentive Mechanism for Crowdsourcing. In Proceedings of the IEEE International Conference on Distributed Computing Systems, Atlanta, GA, USA, 5–8 June 2017; IEEE: Piscataway, NJ, USA, 2017.

11. Kai, H.; He, H.; Jun, L. Quality-Aware Pricing for Mobile Crowdsensing. IEEE/ACM Trans. Netw. 2018, 26, 1728–1741.

12. Nash, J.S.; Imuta, K.; Nielsen, M. Behavioral Investments in the Short Term Fail to Produce a Sunk Cost Effect. Psychol. Rep. 2018, 122, 1766–1793.

13. Wang, J.; Zhang, B.; Liang, S.; Li, J. Sunk cost effects hinge on the neural recalibration of reference points in mental accounting. Prog. Neurobiol. 2022, 208, 102178.

14. Xu, C.; Si, Y.; Zhu, L.; Zhang, C.; Sharif, K.; Zhang, C. Pay as how you behave: A truthful incentive mechanism for mobile crowdsensing. IEEE Internet Things J. 2019, 6, 10053–10063.

15. Gong, X.; Shroff, N.B. Truthful mobile crowdsensing for strategic users with private data quality. IEEE/ACM Trans. Netw. 2019, 27, 1959–1972.

16. Saha, S.; Habib, M.A.; Adhikary, T.; Razzaque, M.A.; Rahman, M.M.; Altaf, M.; Hassan, M.M. Quality-of-experience-aware incentive mechanism for workers in mobile device cloud. IEEE Access 2021, 9, 95162–95179.

17. Jin, H.; He, B.; Su, L.; Nahrstedt, K.; Wang, X. Data-driven pricing for sensing effort elicitation in mobile crowd sensing systems. IEEE/ACM Trans. Netw. 2019, 27, 2208–2221.

18. Christoph Merkley, J.M.A. Closing a Mental Account: The Realization Effect for Gains and Losses. Exp. Econ. 2021, 24, 303–329.

19. Zhang, Q. The Effect of Psychological Distance on Sunk Cost Effect: The Mediating Role of Expected Regret. Ph.D. Thesis, Shanghai Normal University, Shanghai, China, 2019.

20. Jin, L.; Li, C.; Zheng, X.; Hao, S.; Han, L.; Liang, Q. Cost effects analysis of engineering change decision under different construal levels. J. Ind. Eng. Eng. Manag. 2018, 32, 202–206.

21. Xiang, P.; Xu, F.; Shi, Y.; Zhang, H. Review of research on the effect of sunk costs in consumer decision making. Psychol. Res. 2017, 10, 30–34.

22. Zhu, X.; An, J.; Yang, M.; Xiang, L.; Yang, Q.; Gui, X. A fair incentive mechanism for crowdsourcing in crowd sensing. IEEE Internet Things J. 2017, 3, 1364–1372.

23. Tversky, A.; Kahneman, D. Advances in Prospect Theory: Cumulative representation of uncertainty. J. Risk Uncertain. 1992, 5, 297–323.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).