Abstract

Multimodal sentiment analysis (MSA) captures and analyzes sentiment by fusing information from multiple modalities, which improves the understanding of real-world environments. The key challenges lie in handling noise in the acquired data and achieving effective multimodal fusion. To handle noise, existing methods combine multimodal features to mitigate the sentiment-word recognition errors caused by the performance limitations of automatic speech recognition (ASR) models. However, how to utilize and combine the different modalities more efficiently to address data noise remains an open problem. In multimodal fusion, most existing methods adapt poorly to the feature differences between modalities, which makes it difficult to capture the complex nonlinear interactions that may exist between them. To overcome these issues, this paper proposes a new framework named multimodal-word-refinement and cross-modal-hierarchy (MWRCMH) fusion. Specifically, we utilized a multimodal word correction module to reduce the sentiment-word recognition errors caused by ASR. For multimodal fusion, we designed a cross-modal hierarchical fusion module that employed cross-modal attention mechanisms to fuse features between pairs of modalities, yielding fused bimodal features. The bimodal and unimodal features were then fused through a nonlinear layer to obtain the final multimodal sentiment features. Experimental results on the MOSI-SpeechBrain, MOSI-IBM, and MOSI-iFlytek datasets demonstrated that the proposed approach outperformed the compared baselines, achieving Has0-F1 scores of 76.43%, 80.15%, and 81.93%, respectively.

1. Introduction

In the research field of intelligent human–computer interaction, the ability to recognize, analyze, understand, and express emotions is essential for intelligent machines. Therefore, the utilization of computer technology to automatically recognize, understand, analyze, classify, and respond to emotion holds significant value for establishing a harmonious human–machine interaction environment, improving interaction efficiency, and enhancing user experience [1,2,3]. Previous studies [4,5] have primarily focused on sentiment analysis using textual data and have achieved remarkable accomplishments. However, as compared to unimodal analysis, MSA can effectively leverage the coordinated and complementary information from different modalities to enhance emotional understanding and expression capabilities and provide richer information that is more consistent with human behavior.

In recent years, there has been a growing interest in multimodal data for sentiment analysis. MSA aims to utilize the information interaction between texts, images, speech, etc., enabling machines to automatically utilize comprehensive multimodal emotional information for the identification of users’ sentiment tendencies. Early research often employed multimodal fusion [6] through early fusion, directly combining multiple sources of raw features [7,8], or late fusion, aggregating the decisions of multiple sentiment classifiers [9,10,11]. However, the former approach may result in a large number of redundant input vectors, leading to increased computational complexity, while the latter approach may struggle to capture the correlations between different modalities. Therefore, various methods have been proposed for feature fusion in multimodal sentiment analysis. Existing fusion methods include those based on simple operations [12,13], attention-based methods [14,15,16,17], tensor-based methods [18], translation-based methods [19], GAN-based methods [20], routing-based methods [21], and hierarchical fusion [22,23,24]. Although there is a wide range of fusion methods, attention-based fusion methods have shown superior efficiency and performance [25]. However, weighting and summing the features of each modality in the attention mechanism alone may not be able to effectively adapt to the differences in the features across different modalities. Consequently, certain modal features might be disregarded or underestimated, ultimately impacting the accuracy of the fused feature representation. Additionally, complex nonlinear interactions may exist between different modalities, and the attention mechanism may struggle to model such relationships accurately, thereby impacting the effectiveness of feature fusion. Furthermore, previous methods have rarely considered the simultaneous utilization of the interaction information within a single modality and between modalities.

In addition to fusion mechanisms, handling noise in the data is crucial for MSA. While many excellent MSA models have been proposed, they are rarely deployed in the real world. The reason is that during the process of human–machine interaction in real environments, text acquisition can only be achieved through ASR models, which convert speech into texts. Through an in-depth analysis of ASR output, we have discovered that errors in recognizing emotional words in ASR-generated texts directly impair MSA models.

To address these challenges, we propose an MWRCMH framework that reduces speech recognition errors and incorporates cross-modal hierarchical fusion. Specifically, we utilized a language model to capture syntactic and semantic information, enabling us to determine the most likely positions of sentiment words. Furthermore, we leveraged multimodal sentiment information to dynamically complement the emotional semantics in the representation of these positions. The corrected representation of sentiment words was then combined with the visual and audio features, allowing us to capture the interaction information between pairs of modalities using a cross-modal attention mechanism. The fused bimodal features were then concatenated with the features of each modality, resulting in a vector that encompassed all the feature information. Finally, this vector was passed through nonlinear layers, which mapped it to a higher-dimensional space, and the output served as the final feature representation for training and prediction in subsequent tasks.

The remainder of this paper is structured as follows: Section 2 reviews the related literature. Section 3 presents a detailed description of our proposed method. Section 4 reports and analyzes the experimental results. Finally, Section 5 concludes the paper and discusses future work.

2. Related Work

Sentiment computing, as an emerging interdisciplinary research field, has been widely studied and explored since its introduction in 1995 [26]. Previous research has primarily focused on unimodal data representation and multimodal fusion. In terms of unimodal data representation, Pang et al. [4] were the first to employ machine learning-based methods to address textual sentiment classification, achieving better results than traditional manual annotation, by using movie reviews as the dataset. Yue et al. [5] proposed a hybrid model called Word2vec-BiLSTM-CNN, which leveraged the feature extraction capability of convolutional neural networks (CNN) and the ability of bi-directional long short-term memory (Bi-LSTM) to capture short-term bidirectional dependencies in text. Their results demonstrated that hybrid network models outperformed single-structure neural networks in the context of short texts. Colombo et al. [27] segmented different regions in image and video data based on features such as color, warmth, position, and size, enabling their method to obtain higher semantic levels beyond the objects themselves. They applied this approach to a sentiment analysis of art-related images. Wang et al. [28] utilized neural networks for the facial feature extraction of images. Bonifazi et al. [29] proposed a space–time framework that leveraged the emotional context inherent in a presented situation. They employed this framework to extract the scope of emotional information concerning users’ sentiments on a given subject. However, the use of unimodal analysis in sentiment analysis has had some limitations since humans express emotions through various means, including sound, content, facial expressions, and body language, all of which are collectively employed to convey emotions.

Multimodal data describe objects from different perspectives, providing richer information than unimodal data. Different modalities of information can complement each other in terms of content. In the context of multimodal fusion, previous research can be categorized into three stages: early feature fusion, mid-level model fusion, and late decision fusion. Wollmer et al. [30] and Rozgic et al. [31] integrated data from audio, visual, and text sources to extract emotions and sentiments. Metallinou et al. [32] and Eyben et al. [33] combined audio and text patterns for emotional recognition. These methods relied on early feature fusion, which mapped the modalities to the same embedding space through simple concatenation and therefore lacked interaction between the different modalities. In late decision-fusion methods, internal representations were first learned within each modality, followed by learning the fusion between modalities. Zadeh et al. [18] utilized tensor-fusion networks to calculate the outer product between unimodal representations, yielding tensor representations. Liu et al. [34] introduced a low-rank multimodal-fusion method to reduce the computational complexity of tensor-based approaches. These methods aimed to enhance efficiency by decomposing the weights of high-dimensional fusion tensors and reducing redundant information, yet they struggled to effectively model intermodal or modality-specific dynamics. Mid-level model fusion combines the advantages of both early feature fusion and late decision fusion, facilitating the selection of fusion points and enabling multimodal interaction. Poria et al. [35] further extended the combination of convolutional neural networks (CNNs) and multiple kernel learning (MKL). In contrast to Ghosal et al. [36], Poria et al. utilized a novel fusion method to effectively enhance fusion features. Zhang et al. [37] introduced a quantum-inspired framework for the sentiment analysis of bimodal data (texts and images) to address semantic gaps and model the correlation between the two modalities using density matrices. However, these methods exhibited limited adaptability to feature differences and suffered from significant feature redundancy. Concerning hierarchical fusion, Majumder et al. [22] employed a hierarchical-fusion strategy, initially combining two modalities and, subsequently, integrating all three modalities. However, this approach struggled to adequately capture intramodal dynamics. Georgiou et al. [23] introduced a deep hierarchical-fusion framework, applying it to sentiment-analysis problems involving audio and text modalities. Yan et al. [24] introduced a hierarchical attention-fusion network for geographical localization. Nevertheless, these methods overlooked the potential existence of complex nonlinear interactions between modalities. Moreover, many fusion approaches have seldom considered harnessing intramodal and intermodal interactions simultaneously. Verma et al. [38] emphasized that each modality possesses unique intramodality features, and multimodal sentiment analysis methods should capture both common intermodality information and distinctive intramodality signals.

In addition to considering fusion strategies in the MSA model, it is crucial to address the noise present in modal data. Pham et al. [39] proposed the MCTN model to handle the potential absence of visual and acoustic data. Liang et al. [40] and Mittal et al. [41] also focused on addressing noise introduced by visual and acoustic data, relying on word-level features that were obtained by aligning the audio with the actual text. Xue et al. [42] introduced a multi-level attention-graph network to reduce noise within and between modalities. Cauteruccio et al. [43] introduced a string-comparison metric that could be employed to enhance the processing of heterogeneous audio samples, mitigating modality-related noise. However, these models did not investigate the impact of ASR errors on the MSA model. Notably, Wu et al. [44] utilized a sentiment word position-detection module to determine the most likely positions of sentiment words in text. They dynamically refined sentiment-word embeddings using a multimodal sentiment-word-refinement module and used the improved embeddings as the textual input for the multimodal feature-fusion module. This approach, referred to as SWRM, reduced the influence of ASR errors on the MSA model. The sentiment word position-detection module and multimodal sentiment-word-refinement module have proven highly effective, achieving state-of-the-art performance on real-world datasets. However, the original SWRM simply concatenated the modalities in feature fusion without capturing intramodal and intermodal features, even when genuine correlations existed.

In this work, we employed a cross-modal hierarchical fusion network for feature fusion. This enabled the model to focus on important features, reduce noisy impacts, and achieve superior fusion results. Thus, our approach enhanced the robustness and performance of the model.

3. Methodology

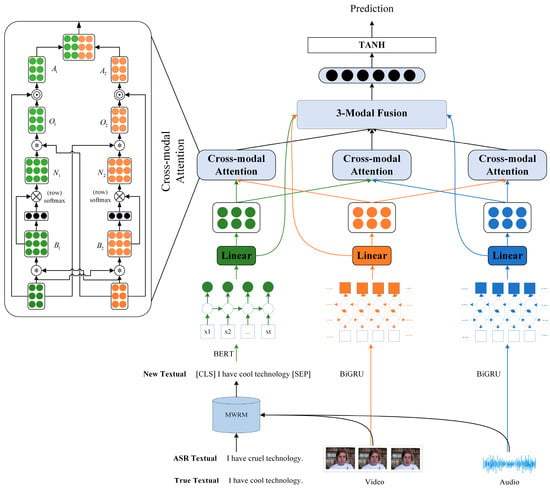

To enhance the adaptability to feature differences across different modalities and effectively model complex nonlinear interactions, we designed a cross-modal, hierarchical-fusion network that considered the presence of sentiment-word-recognition errors in the texts generated by ASR. The model’s architecture is depicted in Figure 1, and it comprises 4 main components: unimodal-feature extraction, a multimodal-word-refinement module, a multimodal-feature-fusion module, and a prediction network. Initially, we employed BERT to extract textual feature information while Bi-GRU was utilized to extract audio and visual feature information. BERT, rooted in the transformer architecture, served as a pre-trained language model capable of capturing the nuanced semantic information of words within sentences through bidirectional contextual modeling [45]. Bi-GRU, a variant of a recurrent neural network (RNN), was particularly well suited for modeling sequential data [46]. Subsequently, the multimodal-word-refinement module dynamically supplemented missing sentiment semantics by leveraging multimodal-sentiment information, resulting in the generation of new word embeddings. These new word embeddings were then input into the multimodal-feature-fusion module, which utilized a cross-modal hierarchical-fusion network to perform feature fusion across different modalities. Finally, a nonlinear layer was employed to predict the final sentiment-regression labels, facilitating accurate sentiment judgment.

Figure 1.

The architecture of our model, where * represents matrix multiplication and × represents the row-wise (line-by-line) softmax function. Green represents the textual modality, orange the visual modality, and blue the audio modality.

3.1. Multimodal Word-Refinement Module (MWRM)

We drew inspiration from the SWRM model of Wu et al. [44]. Firstly, we employed a language model to estimate the potential positions of sentiment words in the ASR transcripts by capturing syntactic and semantic information. For example, given a sentence , let us assume that the word in the sentence was a sentiment word; we replaced the word with a special word (MASK). Then, we used BERT and Bi-GRU to model the word embeddings and the visual, audio, and visual–audio features separately, resulting in . In addition, we also used a Bi-GRU network to fuse acoustic and visual features in order to capture advanced sentiment semantics and obtained . The formulas are presented below:

where the three modalities of unaligned features, word embeddings, visual features, and audio features are represented as . To obtain the corresponding multimodal information, we used a pseudo-alignment method to align the features. We divided the acoustic and visual features into non-overlapping feature groups with lengths of and , respectively, and took the average of the features in each group to obtain the aligned feature representation .
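The pseudo-alignment step described above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the function name `pseudo_align`, the choice of group length as the integer quotient of the frame count and the word count, and the rule that the last group absorbs any leftover frames are all assumptions.

```python
from typing import List

Vector = List[float]


def pseudo_align(frames: List[Vector], num_words: int) -> List[Vector]:
    """Average non-overlapping groups of frames so that one vector remains per word."""
    if num_words <= 0 or len(frames) < num_words:
        raise ValueError("need at least one frame per word")
    # Assumed group length: floor(number of frames / number of words).
    group_len = len(frames) // num_words
    aligned = []
    for w in range(num_words):
        start = w * group_len
        # Assumption: the last group absorbs any leftover frames.
        end = (w + 1) * group_len if w < num_words - 1 else len(frames)
        group = frames[start:end]
        dim = len(group[0])
        aligned.append([sum(f[d] for f in group) / len(group) for d in range(dim)])
    return aligned


# Five hypothetical 2-dimensional audio frames aligned to a 2-word sentence.
audio = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0], [9.0, 10.0]]
aligned = pseudo_align(audio, num_words=2)
```

After this step, the audio and visual sequences have the same length as the word sequence, so features at the same index can be combined position by position.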

Then, we used a multimodal, gated network to filter the representation information at the locations of the sentiment word to generate . To make the model ignore impossible solutions, we used the gate mask . Therefore, is the gate value obtained by connecting the unimodal contextual perception representation , , , and the bimodal representation in the position , using a nonlinear network. We then used the word embedding of the candidate word and the multimodal representation , and , to obtain the attention score through linear fusion. We fed the attention score to the softmax function to obtain the attention weight, which was then applied to the candidate word embeddings to obtain the sentiment embedding , as follows:

where are the parameters of the multimodal, gated network, are the parameters of the multimodal sentiment-word attention network, and ⊙ represents element-wise multiplication.
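The gating and candidate-attention steps can be illustrated with the sketch below. It is an assumption-laden simplification, not the exact network of the paper: the gate is reduced to a single scalar, `context` stands for the concatenated unimodal and bimodal representations at the detected position, and the names `refine_at_position`, `w_att`, `w_gate`, `b_gate`, and `position_mask` are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: Vector) -> Vector:
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def refine_at_position(candidates: List[Vector], context: Vector,
                       w_att: Vector, w_gate: Vector, b_gate: float,
                       position_mask: float) -> Tuple[Vector, Vector]:
    """Return (attention weights, gated sentiment embedding) for one position."""
    # Attention score per candidate: a linear function of [candidate ; context].
    scores = [dot(w_att, cand + context) for cand in candidates]
    weights = softmax(scores)
    dim = len(candidates[0])
    # Weighted sum of the candidate embeddings.
    sentiment = [sum(w * cand[i] for w, cand in zip(weights, candidates))
                 for i in range(dim)]
    # Scalar gate from the multimodal context; the mask zeroes excluded positions.
    gate = position_mask * sigmoid(dot(w_gate, context) + b_gate)
    return weights, [gate * s for s in sentiment]


candidates = [[1.0, 0.0], [0.0, 1.0]]  # two hypothetical candidate embeddings
context = [0.5]                        # hypothetical multimodal context vector
weights, gated = refine_at_position(candidates, context, [1.0, 0.0, 0.0],
                                    [2.0], 0.0, position_mask=1.0)
_, masked = refine_at_position(candidates, context, [1.0, 0.0, 0.0],
                               [2.0], 0.0, position_mask=0.0)
```

With `position_mask=0.0` the gated embedding is all zeros, which is how the sketch mimics ignoring positions that cannot hold a sentiment word.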

As the sentiment word we wanted may not have been included in the candidate word list, we also introduced the representation of (MASK) when correcting the word representation, that is . Finally, we combined the above representations to obtain the corrected word vector , as follows:

where are the trainable parameters of the nonlinear network.

3.2. Unimodal Feature Extraction

We utilized the BERT model to extract features from the improved word embeddings , which are represented as :

For the visual and audio modalities, we employed two separate Bi-GRU networks to encode the visual and audio features. The representation of the first word was used as the visual representation and acoustic representation :

Unimodal features can have different dimensions, i.e., . Therefore, they were mapped to the same dimension, such as , using a fully connected layer, and computed as follows:

where are the trainable parameters of the textual feature-extraction network, are the trainable parameters of the audio feature-extraction network, and are the trainable parameters of the visual feature-extraction network.

3.3. Cross-Modal Attention (CMA)

In general, the three modalities of word-embedding, vision, and audio had some content that was related to sentiment information. If the interaction of the modality information was not considered, the final multimodal features obtained would inevitably contain a large amount of redundant information that would be irrelevant to the detection task. Therefore, we used a cross-modal attention framework, where the attention function was applied to the representations of the two modalities, i.e., visual–textual, textual–audio, and audio–visual. The cross-modal attention calculation for visual–textual was as follows:

The feature matrices for visual and textual were multiplied to obtain the cross-modal information matrix , where represents matrix multiplication.

Through a line-by-line softmax function, the attention distribution was calculated, , where represents the correlation between the feature of the word-embedding modality and the feature of the visual modality: the larger the value, the stronger the interaction between the two features and the more important the fusion information.

The attention distribution was then multiplied by the feature matrix to obtain the final attention representation matrix, .

Finally, a multiplication gate mechanism was used to obtain the mutual-attention-information matrix of the two modalities , where ⊙ represents element-wise matrix multiplication.

By transposing and to the desired dimensions and concatenating them, the fused information representation of the word embedding and visual modalities was obtained: , where ⊕ represents the concatenation operation of vectors.

Using the same method, the fused features of the textual–audio modality and the audio–visual modality could be obtained: .
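The four steps above (cross-modal matrix, row-wise softmax, attention representation, multiplicative gate) followed by concatenation can be sketched in plain Python. This is a minimal illustration under stated assumptions: both modalities are given as matrices with the same number of rows and the same feature dimension, and the helper names (`cross_modal_attention`, `fuse_pair`) are hypothetical rather than taken from the authors' code.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def row_softmax(a: Matrix) -> Matrix:
    out = []
    for row in a:
        m = max(row)
        exps = [math.exp(v - m) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def hadamard(a: Matrix, b: Matrix) -> Matrix:
    return [[x * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def cross_modal_attention(x: Matrix, y: Matrix) -> Matrix:
    """One direction of the attention: modality x attends over modality y."""
    m = matmul(x, transpose(y))  # cross-modal information matrix
    n = row_softmax(m)           # attention distribution, one row per feature of x
    o = matmul(n, y)             # attention representation
    return hadamard(o, x)        # multiplicative gate with the original features


def fuse_pair(x: Matrix, y: Matrix) -> Matrix:
    """Concatenate both attention directions row by row."""
    a_xy = cross_modal_attention(x, y)
    a_yx = cross_modal_attention(y, x)
    return [rx + ry for rx, ry in zip(a_xy, a_yx)]


visual = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical visual features
text = [[1.0, 0.0], [0.0, 1.0]]    # hypothetical textual features
fused_vt = fuse_pair(visual, text)
```

Calling `fuse_pair` on the textual–audio and audio–visual pairs in the same way would give the other two bimodal representations.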

3.4. Trimodal Fusion

After concatenating the aforementioned cross-modal attention-fused features , , and , as well as the unimodal features , , and , we used fully connected layers to fuse features from different sources, enabling the model to fully utilize various feature information. The nonlinear tanh activation function was used to enhance the expressive power of the features. The equation was as follows:

where are the trainable parameters of the trimodal fusion network.
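As a rough sketch of this fusion step, the three bimodal and three unimodal feature vectors are concatenated and passed through a single fully connected layer with a tanh activation. The function names, the toy dimensions, and the constant weights below are assumptions chosen only to keep the example small and deterministic.

```python
import math
from typing import List

Vector = List[float]


def dense_tanh(x: Vector, weights: List[Vector], bias: Vector) -> Vector:
    """Fully connected layer followed by tanh: tanh(W x + b)."""
    return [math.tanh(sum(w * v for w, v in zip(row, x)) + b)
            for row, b in zip(weights, bias)]


def trimodal_fusion(bimodal: List[Vector], unimodal: List[Vector],
                    weights: List[Vector], bias: Vector) -> Vector:
    # Concatenate the bimodal features first, then the unimodal features.
    concat = [v for feat in bimodal + unimodal for v in feat]
    return dense_tanh(concat, weights, bias)


# Hypothetical toy inputs: three 2-dim bimodal and three 1-dim unimodal features.
bimodal = [[1.0, 1.0], [1.0, 1.0], [1.0, 1.0]]
unimodal = [[1.0], [1.0], [1.0]]
weights = [[0.1] * 9, [-0.1] * 9]  # 2 output units, 9 concatenated inputs
bias = [0.0, 0.0]
fused = trimodal_fusion(bimodal, unimodal, weights, bias)
```

Because tanh is bounded, every component of the fused vector lies in the interval (−1, 1) regardless of the scale of the inputs.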

3.5. Regression Analysis

In the regression analysis, we utilized a nonlinear layer to predict the final sentiment regression labels, as shown in the following equation:

where are the trainable parameters of the prediction network.

4. Experiments

4.1. Dataset

We used three real-world datasets constructed from the CMU-MOSI [47] dataset: MOSI-SpeechBrain, MOSI-IBM, and MOSI-iFlytek. The CMU-MOSI dataset is one of the most popular multimodal sentiment analysis datasets and contains 93 monologue clips of YouTube movie-review videos. These clips were divided into 2199 video segments, each annotated with a sentiment score ranging from −3 (strongly negative) to 3 (strongly positive). For the multimodal features, we used Facet to extract the visual features. Facet extracts facial-action units, facial landmarks, head poses, and gaze-tracking data from each frame, resulting in a 34-dimensional representation. The acoustic features were obtained by applying COVAREP [48], which includes 12 Mel-frequency cepstral coefficients (MFCC) and other low-level features.

The text data in the MOSI dataset was sourced from the subtitles of movies and TED Talks as transcriptions and has been expertly corrected and processed for speech. Obtaining text in this way is unrealistic for real-world applications. To evaluate models under real-world conditions, we manually replaced the raw text data in the dataset with text generated by ASR models. We used SpeechBrain’s ASR model and two widely used commercial APIs to generate the text. The SpeechBrain ASR model, published by Ravanelli et al. [49], was built on a transformer encoder–decoder framework and trained on the Librispeech dataset [50]. The commercial APIs were the IBM and iFlytek speech-to-text services, which are widely used by researchers and software developers. We transcribed the videos into text using the three ASR systems and built three new datasets: MOSI-SpeechBrain, MOSI-IBM, and MOSI-iFlytek. The percentages of sentiment-word-replacement errors produced by the three ASR systems on the MOSI dataset are listed in Table 1. For MOSI-IBM, the percentage was 17.6%, meaning that roughly 18 out of every 100 utterances contained this type of error.

Table 1.

The proportion of sentiment-word-replacement errors in three real-world datasets.

4.2. Implementation Details

To ensure fairness, we used the same parameters for the experiments conducted on all three datasets. We used Adam as the optimizer, with a learning rate of 5 × 10⁻⁵, a batch size of 32, and a dropout rate of 0.1. In addition, in Section 3, the values , , and were 768, 128, and 128, respectively. We implemented our entire method in PyTorch, and all experiments were run on an NVIDIA GeForce RTX 3080 GPU.

4.3. Baseline

To validate the effectiveness of the model, we conducted a comparative analysis of established and representative multimodal sentiment-analysis models, including:

TFN (Zadeh et al., 2017) [18], which uses a tensor-fusion network to create a multi-dimensional tensor to learn unimodal, bimodal, and trimodal information in multimodal data;

LMF (Liu et al., 2018) [34], which decomposes the weight tensor using a low-rank multimodal-fusion approach, which reduces the computational complexity of tensor-based methods, and efficiently fuses multimodal interactions with modality-specific low-rank factors;

MulT (Tsai et al., 2019) [15], which proposes a multimodal transformer that utilizes cross-modal attention to enable interactions between multimodal sequences across different time steps;

MISA (Hazarika et al., 2020) [51], which uses multitask learning to partition each modality into different subspaces and learn related sentiment representations that aid the fusion process;

Self-MM (Yu et al., 2021) [52], which learns rich unimodal representations by training on 1 multimodal task and 3 unimodal subtasks; and

SWRM (Wu et al., 2022) [44], which proposes a multimodal word correction model based on sentiment word perception and utilizes multimodal sentiment information to dynamically complete damaged sentiment semantics.

4.4. Results and Analysis

In this section, we evaluated the performance of our model on the MOSI-SpeechBrain, MOSI-IBM, and MOSI-iFlytek datasets using several metrics: binary classification accuracy (Acc2), binary F1-score (F1), mean absolute error (MAE), and correlation (Corr). Specifically, we calculated Acc2 and F1 in two ways: negative/non-negative (Has0-Acc, Has0-F1), which keeps the samples labeled zero, and negative/positive (Non0-Acc, Non0-F1), which excludes them. Since the predicted results were continuous values, we converted them into classification labels by mapping the sentiment scores to the labels. The experimental results for the different models are presented in Table 2.
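The metrics can be computed from continuous predictions as in the sketch below. It follows the convention used in common MSA toolkits, where the Has0 variants keep samples whose true score is zero (negative vs. non-negative) and the Non0 variants drop them (negative vs. positive). The support-weighted F1 and the Pearson form of the correlation are assumptions about the exact variants, and all function names are illustrative.

```python
import math
from typing import Dict, List


def accuracy(truth: List[bool], preds: List[bool]) -> float:
    return sum(t == p for t, p in zip(truth, preds)) / len(truth)


def f1_for_class(truth: List[bool], preds: List[bool], cls: bool) -> float:
    tp = sum(1 for t, p in zip(truth, preds) if t == cls and p == cls)
    fp = sum(1 for t, p in zip(truth, preds) if t != cls and p == cls)
    fn = sum(1 for t, p in zip(truth, preds) if t == cls and p != cls)
    if tp == 0:
        return 0.0
    precision, recall = tp / (tp + fp), tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def weighted_f1(truth: List[bool], preds: List[bool]) -> float:
    # Per-class F1 averaged with weights proportional to class support.
    return sum(f1_for_class(truth, preds, c) * truth.count(c) / len(truth)
               for c in (True, False))


def pearson(x: List[float], y: List[float]) -> float:
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy) if sx > 0 and sy > 0 else 0.0


def msa_metrics(y_true: List[float], y_pred: List[float]) -> Dict[str, float]:
    # Has0: keep zero-labeled samples, split into negative vs. non-negative.
    has0_t = [t >= 0 for t in y_true]
    has0_p = [p >= 0 for p in y_pred]
    # Non0: drop zero-labeled samples, split into negative vs. positive.
    keep = [i for i, t in enumerate(y_true) if t != 0]
    non0_t = [y_true[i] > 0 for i in keep]
    non0_p = [y_pred[i] > 0 for i in keep]
    return {
        "Has0-Acc": accuracy(has0_t, has0_p),
        "Has0-F1": weighted_f1(has0_t, has0_p),
        "Non0-Acc": accuracy(non0_t, non0_p),
        "Non0-F1": weighted_f1(non0_t, non0_p),
        "MAE": sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true),
        "Corr": pearson(y_true, y_pred),
    }


# Hypothetical scores in the [-3, 3] range used by CMU-MOSI.
metrics = msa_metrics([-2.0, 0.0, 1.5, 3.0], [-1.0, 0.4, -0.5, 2.0])
```

In this toy example the zero-labeled sample counts toward the Has0 metrics but is excluded from the Non0 metrics, which is the only difference between the two families.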

Table 2.

Results on the MOSI-SpeechBrain, MOSI-IBM, and MOSI-iFlytek datasets. The best results are in bold. ↑ indicates that the larger the numerical value, the better the model performance; ↓ indicates that the smaller the numerical value, the better the model performance.

Based on the Has0-F1 values in the table, we drew the following conclusions: Compared to the tensor-fusion methods TFN and LMF, our model demonstrated a significant improvement of 7.48% and 6.65% on the MOSI-SpeechBrain dataset, respectively; 8.37% and 7.06% on the MOSI-IBM dataset, respectively; and 9.92% and 9.90% on the MOSI-iFlytek dataset, respectively, highlighting the superiority of our model. This superiority stemmed from the fact that TFN and LMF performed tensor fusion at an overall level without adequately considering the interaction modeling between the modalities, whereas our fusion network took this aspect into account.

Additionally, as compared to the attention-based cross-modal model MulT, our model achieved improvements of 4.73%, 4.61%, and 4.88% on the MOSI-SpeechBrain, MOSI-IBM, and MOSI-iFlytek datasets, respectively. The reason behind this performance gain was that MulT overlooked the feature differences between the different modalities and the potential nonlinear interaction relationships. In contrast, our hierarchical fusion approach incorporated a nonlinear layer for feature fusion, leading to better results. Furthermore, our model outperformed the modality-invariant and modality-specific fusion model MISA by 2.58%, 3.16%, and 2.31% on the MOSI-SpeechBrain, MOSI-IBM, and MOSI-iFlytek datasets, respectively. This improvement could be attributed to our model’s focus on capturing the semantic representations of different modalities, resulting in enhanced performance. Moreover, our model surpassed the self-supervised multitask representation-learning model Self-MM by 2.71%, 2.78%, and 1.67% on the MOSI-SpeechBrain, MOSI-IBM, and MOSI-iFlytek datasets, respectively. These findings validated the superior performance of our model, which benefited from its unique design and approach. Lastly, in comparison with the baseline model SWRM, our model achieved improvements of 1.81%, 1.68%, and 1.64% on the MOSI-SpeechBrain, MOSI-IBM, and MOSI-iFlytek datasets, respectively. This improvement could be attributed to the utilization of our cross-modal hierarchical-fusion network, which enabled better and more effective multimodal fusion, leading to enhanced overall performance.

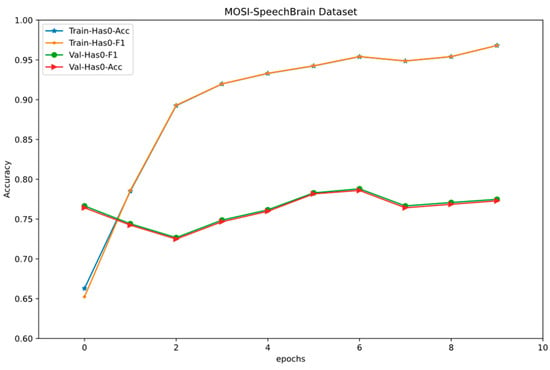

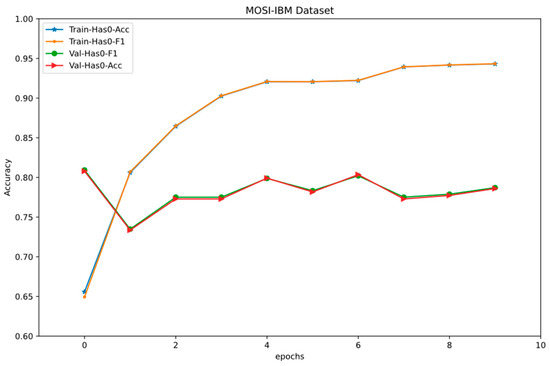

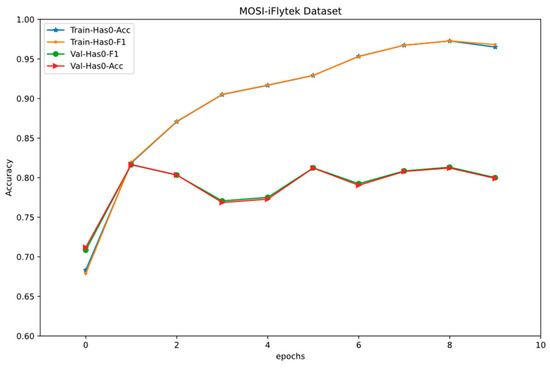

Figure 2, Figure 3, and Figure 4 show the Has0-Acc and Has0-F1 curves of the model on the MOSI-SpeechBrain, MOSI-IBM, and MOSI-iFlytek datasets, respectively. Train-Has0-Acc and Train-Has0-F1 denote the Has0-Acc and Has0-F1 values computed on the training set, and Val-Has0-Acc and Val-Has0-F1 denote the values computed on the validation set. The models were trained for 10 epochs, using 70% of the samples as the training set and the remaining 30% as test data.

Figure 2.

Train-Has0-Acc, Train-Has0-F1, Val-Has0-F1, and Val-Has0-Acc on the MOSI-SpeechBrain dataset.

Figure 3.

Train-Has0-Acc, Train-Has0-F1, Val-Has0-F1, and Val-Has0-Acc on the MOSI-IBM dataset.

Figure 4.

Train-Has0-Acc, Train-Has0-F1, Val-Has0-F1, and Val-Has0-Acc on the MOSI-iFlytek dataset.

4.5. Ablation Study

4.5.1. Modal Ablation

The literature [15,24] has demonstrated the superiority of multimodal analysis over unimodal analysis. We also observed the same trend in our experiments, where the trimodal and bimodal classifiers outperformed the unimodal classifier. Among the different modalities, the textual modality exhibited the highest accuracy in the unimodal classification, reaching 73.32%. While the other modalities contributed to enhancing the performance of the multimodal classifier, their impact was relatively small, as compared to the textual modality.

To validate the significant influence of multimodal information fusion on sentiment-analysis accuracy, we conducted experiments using eight different combinations of modality features: unimodal (T, A, V), bimodal (V + T, T + A, A + V), trimodal (T + V + A), and composite hierarchical fusion (TVA + T + V + A). For the unimodal feature information, we processed the data through a feature-extraction layer and directly utilized the results for sentiment analysis. Similarly, for the bimodal features, we first fused pairwise combinations of the different modalities and then applied the same processing for sentiment analysis. Regarding the trimodal feature information, we fused the bimodal features T + A, T + V, and A + V to obtain the trimodal fusion T + A + V, followed by applying the same processing for sentiment analysis. Finally, we employed the fusion processing method proposed in this paper, merging the trimodal information with the unimodal information to obtain the final trimodal features (TVA + T + V + A) for sentiment analysis. The detailed results of the sentiment analysis are presented in Table 3.

Table 3.

Experimental results of modal ablation on the MOSI-SpeechBrain dataset. ↑ indicates that the larger the numerical value, the better the model performance; ↓ indicates that the smaller the numerical value, the better the model performance.

Considering the evaluation metrics for the regression results, the bimodal sentiment analysis generally outperformed the unimodal sentiment analysis, and the best performance was achieved when utilizing the fused trimodal features. Therefore, the effective fusion of textual, acoustic, and visual features contributed to enhancing the performance of sentiment classification.

4.5.2. Model Ablation

We conducted ablation experiments to assess the contributions of each component in our model. Our architecture comprised several different variants. MWRCMH represented the complete model proposed by us. MWRCMH without fusion excluded the cross-modal hierarchical-fusion module and simply concatenated the feature vectors from the different modalities. MWRCMH without MWRM did not employ the multimodal word-correction module and did not consider the impact of the recognition errors of the sentiment words in the ASR transcripts. The results of the model variants are presented in Table 4. Without the multimodal word-correction module, MWRCMH without MWRM yielded inferior results, as compared to MWRCMH, highlighting the significance of identifying appropriate words for refinement. Nevertheless, the results outperformed those of the models in the baseline experiment, thereby demonstrating the effectiveness and superiority of our fusion network. Moreover, the results of MWRCMH without fusion, which lacks the cross-modal hierarchical-fusion module, further substantiated this conclusion.

Table 4.

Experimental results of the model ablation. ↑ indicates that the larger the numerical value, the better the model performance; ↓ indicates that the smaller the numerical value, the better the model performance.
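To make the difference between the "without fusion" variant and pairwise cross-modal attention concrete, the following is a minimal sketch and not the authors' implementation. It assumes that both modalities share the same feature dimension, omits the learned query, key, and value projections as well as the final nonlinear layer, and uses hypothetical function names and toy values throughout.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def concat_fusion(*features):
    """'Without fusion' baseline: concatenate the feature vectors."""
    return [x for vec in features for x in vec]


def cross_modal_attention(target_seq, source_seq):
    """Scaled dot-product attention from a target to a source modality.

    Each target time step is a query over the source sequence, which acts
    as keys and values. Learned projections are omitted (assumption).
    """
    dim = len(source_seq[0])
    fused = []
    for query in target_seq:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in source_seq
        ]
        weights = softmax(scores)
        fused.append(
            [sum(w * value[j] for w, value in zip(weights, source_seq))
             for j in range(dim)]
        )
    return fused


def mean_pool(seq):
    """Average a sequence of vectors into one utterance-level vector."""
    return [sum(step[j] for step in seq) / len(seq) for j in range(len(seq[0]))]


# Toy sequences (illustrative values only): 2 text steps, 3 acoustic steps.
text = [[1.0, 0.0], [0.0, 1.0]]
audio = [[0.5, 0.5], [1.0, -1.0], [0.0, 2.0]]

baseline = concat_fusion(mean_pool(text), mean_pool(audio))
text_from_audio = cross_modal_attention(text, audio)
bimodal = mean_pool(text_from_audio)
print(baseline)
print(bimodal)
```

In the concatenation baseline the two modalities never interact before prediction, whereas in the attention variant each text step is re-expressed as a weighted mixture of acoustic steps, so the bimodal vector depends on how the two sequences relate.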

5. Conclusions

This paper introduced a cross-modal hierarchical fusion network-based multimodal sentiment-analysis architecture. Through the incorporation of the multimodal word-correction module and the cross-modal hierarchical-fusion module, our architecture effectively mitigated the sentiment word-recognition errors stemming from speech recognition, augmented the adaptability across diverse modal characteristics, and enhanced the nonlinear modeling capabilities. Our proposed architecture demonstrated strong performance across the MOSI-SpeechBrain, MOSI-IBM, and MOSI-iFlytek datasets. However, the architecture disregarded the fine-grained relationships between the textual, audio, and visual information, and it also exhibited certain issues related to feature redundancy.

In forthcoming work, we intend to address the alignment challenges presented by various modalities while concurrently minimizing modal-feature-information redundancy. Furthermore, we will place greater emphasis on rectifying contribution imbalances within multimodal learning.

Author Contributions

Conceptualization, J.H.; methodology, J.H. and F.W.; software, J.H.; validation, J.H.; formal analysis, J.H.; investigation, F.W. and S.S.; resources, J.H. and P.L.; data curation, J.H.; writing—original draft preparation, J.H.; writing—review and editing, F.W. and S.S.; visualization, J.H.; supervision, S.S. and F.W.; project administration, S.S.; funding acquisition, S.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant number U1703261.

Data Availability Statement

Publicly available datasets were analyzed in this study. The data can be found at: https://github.com/huangju1/MWRCMH (accessed on 1 August 2023).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Qin, Z.; Zhao, P.; Zhuang, T.; Deng, F.; Ding, Y.; Chen, D. A survey of identity recognition via data fusion and feature learning. Inf. Fusion 2023, 91, 694–712. [Google Scholar] [CrossRef]

- Tu, G.; Liang, B.; Jiang, D.; Xu, R.J.I.T.o.A.C. Sentiment-Emotion-and Context-guided Knowledge Selection Framework for Emotion Recognition in Conversations. IEEE Trans. Affect. Comput. 2022, 1–14. [Google Scholar] [CrossRef]

- Noroozi, F.; Corneanu, C.A.; Kamińska, D.; Sapiński, T.; Escalera, S.; Anbarjafari, G. Survey on emotional body gesture recognition. IEEE Trans. Affect. Comput. 2018, 12, 505–523. [Google Scholar] [CrossRef]

- Pang, B.; Lee, L.; Vaithyanathan, S. Thumbs up? Sentiment classification using machine learning techniques. arXiv 2002, arXiv:cs/0205070. [Google Scholar]

- Yue, W.; Li, L. Sentiment analysis using Word2vec-CNN-BiLSTM classification. In Proceedings of the 2020 Seventh International Conference on Social Networks Analysis, Management and Security (SNAMS), Paris, France, 14–16 December 2020; pp. 1–5. [Google Scholar]

- Atrey, P.K.; Hossain, M.A.; El Saddik, A.; Kankanhalli, M. Multimodal fusion for multimedia analysis: A survey. Multimed. Syst. 2010, 16, 345–379. [Google Scholar] [CrossRef]

- Mazloom, M.; Rietveld, R.; Rudinac, S.; Worring, M.; Van Dolen, W. Multimodal popularity prediction of brand-related social media posts. In Proceedings of the Proceedings of the 24th ACM International Conference on Multimedia, Amsterdam, The Netherlands, 15–19 October 2016; pp. 197–201. [Google Scholar]

- Pérez-Rosas, V.; Mihalcea, R.; Morency, L.-P. Utterance-level multimodal sentiment analysis. In Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Sofia, Bulgaria, 4–9 August 2013; pp. 973–982. [Google Scholar]

- Poria, S.; Cambria, E.; Gelbukh, A. Deep convolutional neural network textual features and multiple kernel learning for utterance-level multimodal sentiment analysis. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 2539–2544. [Google Scholar]

- Liu, N.; Dellandréa, E.; Chen, L.; Zhu, C.; Zhang, Y.; Bichot, C.-E.; Bres, S.; Tellez, B. Multimodal recognition of visual concepts using histograms of textual concepts and selective weighted late fusion scheme. Comput. Vis. Image Underst. 2013, 117, 493–512. [Google Scholar] [CrossRef]

- Yu, W.; Xu, H.; Meng, F.; Zhu, Y.; Ma, Y.; Wu, J.; Zou, J.; Yang, K. Ch-sims: A chinese multimodal sentiment analysis dataset with fine-grained annotation of modality. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 3718–3727. [Google Scholar]

- Poria, S.; Chaturvedi, I.; Cambria, E.; Hussain, A. Convolutional MKL based multimodal emotion recognition and sentiment analysis. In Proceedings of the 2016 IEEE 16th International Conference on Data Mining (ICDM), Barcelona, Spain, 12–15 December 2016; pp. 439–448. [Google Scholar]

- Nguyen, D.; Nguyen, K.; Sridharan, S.; Dean, D.; Fookes, C. Deep spatio-temporal feature fusion with compact bilinear pooling for multimodal emotion recognition. Comput. Vis. Image Underst. 2018, 174, 33–42. [Google Scholar] [CrossRef]

- Lv, F.; Chen, X.; Huang, Y.; Duan, L.; Lin, G. Progressive modality reinforcement for human multimodal emotion recognition from unaligned multimodal sequences. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 2554–2562. [Google Scholar]

- Tsai, Y.-H.H.; Bai, S.; Liang, P.P.; Kolter, J.Z.; Morency, L.-P.; Salakhutdinov, R. Multimodal transformer for unaligned multimodal language sequences. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 27 July–2 August 2019; p. 6558. [Google Scholar]

- Cheng, J.; Fostiropoulos, I.; Boehm, B.; Soleymani, M. Multimodal phased transformer for sentiment analysis. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Virtual, 7–11 November 2021; pp. 2447–2458. [Google Scholar]

- Rahman, W.; Hasan, M.K.; Lee, S.; Zadeh, A.; Mao, C.; Morency, L.-P.; Hoque, E. Integrating multimodal information in large pretrained transformers. In Proceedings of the Conference Association for Computational Linguistics Meeting, Seattle, WA, USA, 5–10 July 2020; p. 2359. [Google Scholar]

- Zadeh, A.; Chen, M.; Poria, S.; Cambria, E.; Morency, L.-P. Tensor fusion network for multimodal sentiment analysis. arXiv 2017, arXiv:1707.07250. [Google Scholar]

- Wang, Z.; Wan, Z.; Wan, X. Transmodality: An end2end fusion method with transformer for multimodal sentiment analysis. In Proceedings of the Web Conference 2020, Taipei, Taiwan, 20–24 April 2020; pp. 2514–2520. [Google Scholar]

- Peng, Y.; Qi, J. CM-GANs: Cross-modal generative adversarial networks for common representation learning. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2019, 15, 1–24. [Google Scholar] [CrossRef]

- Tsai, Y.-H.H.; Ma, M.Q.; Yang, M.; Salakhutdinov, R.; Morency, L.-P. Multimodal routing: Improving local and global interpretability of multimodal language analysis. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Online, 16–20 November 2020; p. 1823. [Google Scholar]

- Majumder, N.; Hazarika, D.; Gelbukh, A.; Cambria, E.; Poria, S. Multimodal sentiment analysis using hierarchical fusion with context modeling. Knowl.-Based Syst. 2018, 161, 124–133. [Google Scholar] [CrossRef]

- Georgiou, E.; Papaioannou, C.; Potamianos, A. Deep Hierarchical Fusion with Application in Sentiment Analysis. In Proceedings of the INTERSPEECH, Graz, Austria, 15–19 September 2019; pp. 1646–1650. [Google Scholar]

- Yan, L.; Cui, Y.; Chen, Y.; Liu, D. Hierarchical attention fusion for geo-localization. In Proceedings of the ICASSP 2021–2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 2220–2224. [Google Scholar]

- Fu, Z.; Liu, F.; Xu, Q.; Qi, J.; Fu, X.; Zhou, A.; Li, Z. NHFNET: A non-homogeneous fusion network for multimodal sentiment analysis. In Proceedings of the 2022 IEEE International Conference on Multimedia and Expo (ICME), Taipei, Taiwan, 18–22 July 2022; pp. 1–6. [Google Scholar]

- Poria, S.; Cambria, E.; Bajpai, R.; Hussain, A. A review of affective computing: From unimodal analysis to multimodal fusion. Inf. Fusion 2017, 37, 98–125. [Google Scholar] [CrossRef]

- Colombo, C.; Del Bimbo, A.; Pala, P. Semantics in visual information retrieval. IEEE Multimed. 1999, 6, 38–53. [Google Scholar] [CrossRef]

- Wang, K.; Peng, X.; Yang, J.; Lu, S.; Qiao, Y. Suppressing uncertainties for large-scale facial expression recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 6897–6906. [Google Scholar]

- Bonifazi, G.; Cauteruccio, F.; Corradini, E.; Marchetti, M.; Sciarretta, L.; Ursino, D.; Virgili, L. A Space-Time Framework for Sentiment Scope Analysis in Social Media. Big Data Cogn. Comput. 2022, 6, 130. [Google Scholar] [CrossRef]

- Wöllmer, M.; Weninger, F.; Knaup, T.; Schuller, B.; Sun, C.; Sagae, K.; Morency, L.-P. Youtube movie reviews: Sentiment analysis in an audio-visual context. IEEE Intell. Syst. 2013, 28, 46–53. [Google Scholar] [CrossRef]

- Rozgić, V.; Ananthakrishnan, S.; Saleem, S.; Kumar, R.; Prasad, R. Ensemble of SVM Trees for Multimodal Emotion Recognition. In Proceedings of the 2012 Asia Pacific Signal and Information Processing Association Annual Summit and Conference, Hollywood, CA, USA, 3–6 December 2012; pp. 1–4. [Google Scholar]

- Metallinou, A.; Lee, S.; Narayanan, S. Audio-visual emotion recognition using gaussian mixture models for face and voice. In Proceedings of the 2008 Tenth IEEE International Symposium on Multimedia, Berkeley, CA, USA, 15–17 December 2008; pp. 250–257. [Google Scholar]

- EyEyben, F.; Wöllmer, M.; Graves, A.; Schuller, B.; Douglas-Cowie, E.; Cowie, R. On-line emotion recognition in a 3-D activation-valence-time continuum using acoustic and linguistic cues. J. Multimodal User Interfaces 2010, 3, 7–19. [Google Scholar] [CrossRef]

- Liu, Z.; Shen, Y.; Lakshminarasimhan, V.B.; Liang, P.P.; Zadeh, A.; Morency, L.P. Efficient low-rank multimodal fusion with modality-specific factors. arXiv 2018, arXiv:1806.00064. [Google Scholar]

- Poria, S.; Peng, H.; Hussain, A.; Howard, N.; Cambria, E.J.N. Ensemble application of convolutional neural networks and multiple kernel learning for multimodal sentiment analysis. Neurocomputing 2017, 261, 217–230. [Google Scholar] [CrossRef]

- Ghosal, D.; Akhtar, M.S.; Chauhan, D.; Poria, S.; Ekbal, A.; Bhattacharyya, P. Contextual inter-modal attention for multi-modal sentiment analysis. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 3454–3466. [Google Scholar]

- Zhang, Y.; Song, D.; Zhang, P.; Wang, P.; Li, J.; Li, X.; Wang, B. A quantum-inspired multimodal sentiment analysis framework. Theor. Comput. Sci. 2018, 752, 21–40. [Google Scholar] [CrossRef]

- Verma, S.; Wang, C.; Zhu, L.; Liu, W. Deepcu: Integrating both common and unique latent information for multimodal sentiment analysis. In Proceedings of the International Joint Conference on Artificial Intelligence, Macao, China, 10–16 August 2019; pp. 3627–3634. [Google Scholar]

- Pham, H.; Liang, P.P.; Manzini, T.; Morency, L.P.; Póczos, B. Found in Translation: Learning Robust Joint Representations by Cyclic Translations between Modalities. In Proceedings of the 33rd AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 6892–6899. [Google Scholar]

- Liang, P.P.; Liu, Z.; Tsai, Y.H.H.; Zhao, Q.; Salakhutdinov, R.; Morency, L.P. Learning Representations from Imperfect Time Series Data via Tensor Rank Regularization. arXiv 2019, arXiv:1907.01011. [Google Scholar]

- Mittal, T.; Bhattacharya, U.; Chandra, R.; Bera, A.; Manocha, D. M3ER: Multiplicative Multimodal Emotion Recognition using Facial, Textual, and Speech Cues. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 1359–1367. [Google Scholar]

- Xue, X.; Zhang, C.; Niu, Z.; Wu, X. Multi-level attention map network for multimodal sentiment analysis. IEEE Trans. Knowl. Data Eng. 2022, 35, 5105–5118. [Google Scholar] [CrossRef]

- Cauteruccio, F.; Stamile, C.; Terracina, G.; Ursino, D.; Sappey-Mariniery, D. An automated string-based approach to White Matter fiber-bundles clustering. In Proceedings of the 2015 International Joint Conference on Neural Networks (IJCNN), Killarney, Ireland, 12–17 July 2015; pp. 1–8. [Google Scholar]

- Wu, Y.; Zhao, Y.; Yang, H.; Chen, S.; Qin, B.; Cao, X.; Zhao, W. Sentiment Word Aware Multimodal Refinement for Multimodal Sentiment Analysis with ASR Errors. arXiv 2022, arXiv:2203.00257. [Google Scholar]

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805. [Google Scholar]

- Chung, J.; Gulcehre, C.; Cho, K.H.; Bengio, Y.J.E.A. Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv 2014, arXiv:1412.3555. [Google Scholar]

- Zadeh, A.; Zellers, R.; Pincus, E.; Morency, L.-P. Mosi: Multimodal corpus of sentiment intensity and subjectivity analysis in online opinion videos. arXiv 2016, arXiv:1606.06259. [Google Scholar]

- Degottex, G.; Kane, J.; Drugman, T.; Raitio, T.; Scherer, S. COVAREP—A collaborative voice analysis repository for speech technologies. In Proceedings of the 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014; pp. 960–964. [Google Scholar]

- Ravanelli, M.; Parcollet, T.; Plantinga, P.; Rouhe, A.; Bengio, Y. SpeechBrain: A General-Purpose Speech Toolkit. arXiv 2021, arXiv:2106.04624. [Google Scholar]

- Panayotov, V.; Chen, G.; Povey, D.; Khudanpur, S. Librispeech: An asr corpus based on public domain audio books. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), South Brisbane, QLD, Australia, 19–24 April 2015; pp. 5206–5210. [Google Scholar]

- Hazarika, D.; Zimmermann, R.; Poria, S. Misa: Modality-invariant and-specific representations for multimodal sentiment analysis. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 10–16 October 2020; pp. 1122–1131. [Google Scholar]

- Yu, W.; Xu, H.; Yuan, Z.; Wu, J. Learning modality-specific representations with self-supervised multi-task learning for multimodal sentiment analysis. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 2–9 February 2021; Volume 35, pp. 10790–10797. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).