Abstract

Tone mapping compresses the dynamic range of high dynamic range (HDR) image data so that it can be displayed on low dynamic range (LDR) devices with as little distortion as possible. To compress the dynamic range of HDR images while preventing halo artifacts, we propose a tone mapping method based on the least squares method. The method first estimates the illumination using inter-pixel weights obtained by least squares and then obtains the image detail layer with the Retinex model. Next, a global tone mapping function with one parameter compresses the dynamic range; the parameter is obtained by fitting the function to the histogram equalization result. Finally, the detail layer and the compressed illumination layer are fused to obtain the LDR image. The experimental results show that the proposed method efficiently restores real-world scene information while preventing halo artifacts: the tone mapping quality index (TMQI) and the mean local Weber contrast of the tone-mapped images are 8% and 12% higher, respectively, than those of the best competing tone mapping method.

1. Introduction

A real-world scene can span a very wide dynamic range: the dynamic range of visible light can reach , and the dynamic range perceivable by the human eye is . However, conventional display devices can only display a dynamic range of . This lack of dynamic range causes the loss of scene details and color information, so the rich content of high dynamic range (HDR) images cannot be presented. Thus, the dynamic range must be compressed to render HDR images on low dynamic range (LDR) devices; this process is commonly called tone mapping. During tone mapping, the details, contrast, and other information of the image must be retained.

At present, HDR tone mapping methods fall into two main categories: global tone mapping and local tone mapping. Global tone mapping applies a single mapping curve to every pixel and adjusts the input brightness regardless of location; it is easy to compute and maintains a good overall impression of the image, but it loses many local details. Local tone mapping, in contrast, takes the neighborhood of each pixel into account. It can preserve more image detail, although it must be designed carefully to avoid halo artifacts around strong edges. Therefore, more and more researchers are paying attention to local tone mapping methods.

Based on this, this paper proposes a local tone mapping method based on the least squares method. The key innovation is to estimate the illumination with inter-pixel weights and then obtain the detail layer through the Retinex model. Using weights for illumination estimation has two advantages. First, it has good edge-preserving and smoothing properties, which yields more accurate illumination information. Second, it avoids gradient reversal and prevents halo artifacts. Specifically, each pixel is assumed to be a weighted combination of the other pixels in its neighborhood, and the resulting system of equations over all pixels is solved by the least squares method. The obtained weights are used to estimate the illumination, and improved boundary-aware weights are introduced to prevent halo artifacts. The illumination estimate is then used to obtain the detail layer through the Retinex model. Next, the dynamic range is compressed by a global tone mapping function with one parameter. Because the parameter is fitted separately for each image, the method adapts its dynamic range compression to different images. Finally, the detail layer is fused with the adjusted illumination layer to obtain the LDR image. This method can compress the dynamic range and render HDR images effectively.

2. Background

In recent years, many researchers have proposed different methods to address the problems that arise in tone mapping. Common global tone mapping methods include histogram equalization, gamma correction, and logarithmic correction, and many improved global methods have been proposed. Khan et al. presented a histogram-based tone mapping method for HDR images that outperformed several existing state-of-the-art methods in preserving both naturalness and structure [1]. Choi et al. combined the key value of the scene with a visual gamma to improve the contrast of the entire resulting image while simultaneously scaling the dynamic range using the input luminance [2]. Khan et al. used a luminance histogram to construct a look-up table (LUT) for tone mapping [3]. Husseis et al. proposed a modification of the histogram adjustment-based linear-to-equalized quantizer (HALEQ) for HDR images, which preserves more detail than the original method in most parts of the HDR image [4].

Recent studies focus more on local tone mapping. Thai et al. used a powerful adaptive prediction step and then adjusted the pixel distribution of the coarsely reconstructed LDR image with a piecewise linear perceptual quantizer based on the human visual system [5]. Yang et al. proposed an efficient method for image dynamic range adjustment with three adaptive steps [6]. Li et al. presented a clustering-based content- and color-adaptive tone mapping method. Unlike previous methods, which are mostly filtering-based, this method works on image patches and decomposes each patch into three components: patch mean, color variation, and color structure [7]. Thai and Mokraoui proposed a multiresolution approach that separates the approximation and detail components, weights them by entropy, and uses an optimized contrast parameter; the pixel distribution of the coarsely reconstructed LDR image is then adjusted with a piecewise linear perceptual quantizer based on the human visual system [8]. Fahim and Jung proposed a two-step tone mapping solution based on contrast enhancement; their method improves the performance of the camera response model through improved adaptive parameter selection and weight-matrix extraction [9]. Gommelet et al. presented two new tone mapping operators (TMOs) for backward-compatible HDR compression. The first TMO minimizes the distortion of the HDR image under a rate constraint on the standard dynamic range (SDR) layer; the second performs the same minimization with an additional constraint that preserves the perceptual quality of the SDR layer [10].

Researchers have also focused on designing edge-preserving filters for tone mapping. Liang et al. proposed a hybrid ℓ1-ℓ0 layer decomposition model, in which an ℓ1 sparsity term is imposed on the base layer to model its piecewise smoothness and an ℓ0 sparsity term is imposed on the detail layer as a structural prior [11]. Many researchers have also designed methods based on deep learning. Rana et al. presented DeepTMO, an end-to-end, parameter-free deep tone mapping operator; built on a conditional generative adversarial network (cGAN) framework, the model is trained to output realistic-looking tone-mapped images that combine the distinctive properties of the available TMOs [12]. Hu et al. proposed a joint multiscale denoising and tone mapping framework for HDR images, trained end to end so that both operations are optimized together [13].

3. Proposed Method

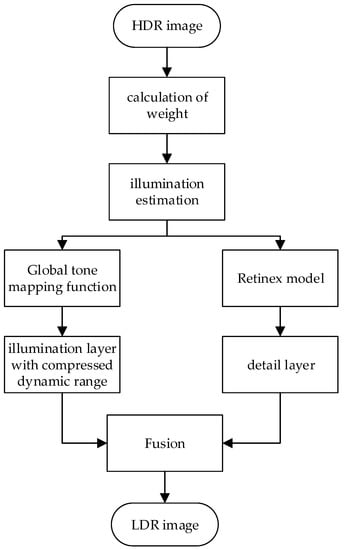

The proposed method consists of two main parts: illumination layer estimation based on inter-pixel weights, and a global tone mapping function with one parameter. Together, they improve the quality of the LDR image produced by tone mapping. The flow chart of the method is shown in Figure 1, and its specific steps are as follows.

Figure 1.

Flow chart of the proposed method.

- The weights between each pixel of the original image and the other pixels in its neighborhood are obtained by the least squares method; boundary-aware weights are introduced to prevent halo artifacts, and the combined weights are used to estimate the illumination of the original image.

- The detail layer of the image is obtained by the Retinex model.

- A global tone mapping function with one parameter is used to process the illumination layer obtained in the first step.

After this processing, the compressed illumination layer and the detail layer are fused to obtain the final LDR image.

3.1. The Illumination Estimation

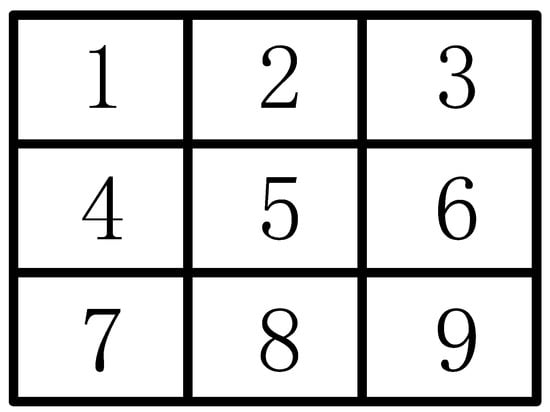

We compute the weights between every pixel in the image and the pixels in its 3 × 3 neighborhood, so each region contains nine pixels. Figure 2 shows a pixel and its 3 × 3 neighborhood:

Figure 2.

Pixel matrix in the 3 × 3 neighborhood.

The luminance of each pixel is assumed to be a weighted combination of the luminance of the other pixels in its 3 × 3 neighborhood, and all pixels share the same set of neighborhood weights. Over all pixels, an objective function of the 9-pixel weights is constructed, as shown in Equation (1).

where is the weight between a pixel and another pixel in its 3 × 3 neighborhood, denotes the luminance of the pixel with horizontal coordinate i and vertical coordinate j in the image pixel matrix, H is the height of the image pixel matrix, and W is its width. According to the least squares criterion, the partial derivative of the objective function with respect to each pixel weight is 0; that is, Equation (2) holds.

Substituting Equation (1) into Equation (2) for all weights yields a linear system of equations in the pixel weights, as shown in Equation (3).

Solving Equation (3) with the Jacobi iteration method gives the optimal value of each pixel weight, as shown in Equation (4):

where , and the superscript t denotes the iteration number. Equation (5) is assumed to hold for all pixels. An error threshold determines when the iteration terminates: once the error falls below 0.001, the resulting image hardly changes, so we set the threshold to 0.001 to balance efficiency and image quality.

The Jacobi iteration then terminates, and the resulting is the least squares estimate of the pixel weights. The obtained weights are used to estimate the illumination; Equation (6) gives the resulting illumination estimate.
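Equations (1) to (6) did not survive extraction, so the following is only a minimal NumPy sketch of the weight fitting under stated assumptions: the eight neighbors (center excluded) share one set of weights across the whole image, image borders are edge-padded, and Equation (6) is taken to be the weighted sum of each pixel's neighbors. One substitution is deliberate: plain Jacobi iteration on these normal equations is not guaranteed to converge when neighboring pixels are strongly correlated (diagonal dominance, the usual sufficient condition, then fails), so the sketch uses Gauss-Seidel sweeps, which converge for any symmetric positive-definite system. The function names are hypothetical.

```python
import numpy as np

# Offsets of the 8 neighbors in a 3x3 window (the center pixel is excluded).
OFFSETS = [(-1, -1), (-1, 0), (-1, 1),
           (0, -1),           (0, 1),
           (1, -1),  (1, 0),  (1, 1)]


def neighbor_stack(lum):
    """Return an (8, H, W) array holding each pixel's 8 neighbors (edge-padded)."""
    h, w = lum.shape
    padded = np.pad(lum, 1, mode="edge")
    return np.stack([padded[1 + di:1 + di + h, 1 + dj:1 + dj + w]
                     for di, dj in OFFSETS])


def fit_neighbor_weights(lum, tol=1e-3, max_iter=10_000):
    """Least squares fit of 8 weights shared by all pixels.

    Minimizes sum_ij (L_ij - sum_k w_k * N_k,ij)^2 by iterating on the
    normal equations A w = b with Gauss-Seidel sweeps.
    """
    X = neighbor_stack(lum).reshape(8, -1)   # row k: the k-th neighbor of every pixel
    y = lum.reshape(-1)
    A = X @ X.T                              # 8x8 normal matrix (symmetric)
    b = X @ y
    w = np.zeros(8)
    for _ in range(max_iter):
        max_change = 0.0
        for k in range(8):
            # Solve row k for w_k using the most recent values of the other weights.
            new_wk = (b[k] - A[k] @ w + A[k, k] * w[k]) / A[k, k]
            max_change = max(max_change, abs(new_wk - w[k]))
            w[k] = new_wk
        if max_change < tol:                 # termination threshold (0.001 in the text)
            break
    return w


def estimate_illumination(lum, w):
    """Assumed Equation (6): weighted sum of each pixel's 8 neighbors."""
    return np.tensordot(w, neighbor_stack(lum), axes=1)
```

A typical call is `illum = estimate_illumination(lum, fit_neighbor_weights(lum))`, where `lum` is a 2-D luminance array. Because only eight unknowns are shared by the whole image, the normal matrix is tiny, and the cost is dominated by building it once.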

3.2. Improved Illumination Estimation

Experiments showed that when the luminance of a pixel differs greatly from that of the other pixels in its neighborhood, that is, when the pixel lies in an edge region, halo artifacts occur. To prevent halos, i.e., to make the estimation edge-aware, this paper introduces boundary-aware weights.

where ,, N is the total number of pixels in the area, is the variance of the pixels in the neighborhood window, and is the variance of all pixels in the neighborhood except the selected pixel. Because the variance can be 0 in regions of constant luminance, is a small constant that avoids division by zero in those cases and ensures that the weights remain valid. It should also be as small as possible while adapting to different images; thus, when the input image is L, it is set to . When lies in an edge region, its boundary-aware weight is usually greater than 1; when it lies in a smooth region, the weight is usually less than 1. Combining these boundary-aware weights with the inter-pixel weights obtained by the least squares method yields an improved illumination estimate that suppresses halo artifacts, as shown in the following equation.

where is the improved illumination estimate. When a pixel lies on an edge and therefore has a large value of , the boundary-aware weight effectively reduces the influence of the brightness of the other pixels, which prevents the halo artifacts caused by large weight differences. When a pixel lies in a smooth region, the improved weights strengthen the smoothing in that region and enhance the image details.
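The boundary-aware weight and Equation (8) are missing from the text. The sketch below assumes the weight follows the edge-aware weighting of the weighted guided image filter (WGIF): the local 3 × 3 variance plus a small constant, divided in turn by the same quantity at every pixel and averaged, with the constant taken as (0.001 × dynamic range)². The blending rule in `improved_illumination` is purely hypothetical: it simply gives the pixel's own luminance more influence where the weight is large, which matches the qualitative behavior described above. All names are illustrative.

```python
import numpy as np


def box_mean3(img):
    """Mean over each pixel's 3x3 window, with edge padding at the borders."""
    h, w = img.shape
    p = np.pad(img, 1, mode="edge")
    return sum(p[i:i + h, j:j + w] for i in range(3) for j in range(3)) / 9.0


def boundary_aware_weights(lum):
    """Edge-aware weight Gamma: typically > 1 at edges and < 1 in flat regions."""
    mean = box_mean3(lum)
    var = np.maximum(box_mean3(lum * lum) - mean * mean, 0.0)  # local 3x3 variance
    # Assumption borrowed from WGIF: eps = (0.001 * dynamic range)^2, floored so
    # that a perfectly constant image does not cause a division by zero.
    eps = max((1e-3 * (lum.max() - lum.min())) ** 2, 1e-12)
    # Gamma(p) = (var_p + eps) * mean over all pixels q of 1 / (var_q + eps)
    return (var + eps) * np.mean(1.0 / (var + eps))


def improved_illumination(lum, illum_ls, gamma):
    """Hypothetical blend: rely on the pixel itself where Gamma is large (edges)."""
    alpha = gamma / (1.0 + gamma)   # in (0, 1); above 0.5 exactly where Gamma > 1
    return alpha * lum + (1.0 - alpha) * illum_ls
```

Here `illum_ls` stands for the least squares illumination estimate of Section 3.1. At a strong edge the blend falls back almost entirely on the original luminance, so bright pixels are not averaged into dark neighbors, which is the mechanism by which halos are suppressed in this sketch.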

3.3. Dynamic Range Compression

To exploit the advantages of histogram equalization while avoiding the over-enhancement it can cause, we designed a global tone mapping function with one parameter to compress the dynamic range. The parameter is calculated by fitting the global tone mapping function to the histogram equalization result in the least squares sense. The global tone mapping function is given by the following equation.

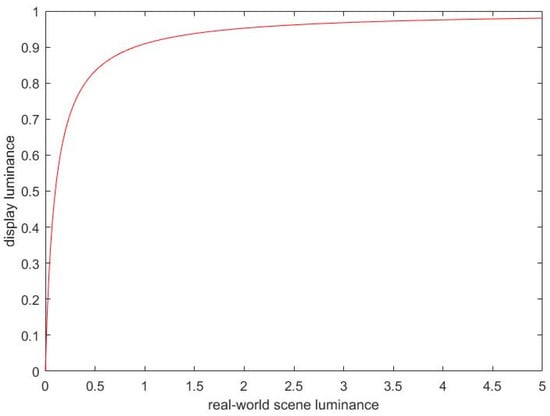

where is the mapped luminance, is the input luminance, and is the parameter; the resulting mapping curve is shown in Figure 3.

Figure 3.

The dynamic range compression curve.

We take the reciprocals of both the global tone mapping function and the histogram equalization result and, based on the least squares criterion, construct the following objective function relating the two:

where is the histogram equalization result. According to the least squares criterion, the derivative of the objective function with respect to is 0; that is, Equation (11) holds, from which Equation (12) is obtained:

By solving Equation (12), the parameter of the global tone mapping function can be obtained:

Using the obtained parameter, a global tone mapping function is derived for each image, which achieves dynamic range compression adapted to that image.
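The tone curve itself is missing from the text. As an illustration only, the sketch below assumes the saturating curve D = L / (L + k), chosen because taking reciprocals, as described above, makes it linear in the parameter: 1/D = 1 + k/L. Fitting 1/H with u = 1/H − 1 and v = 1/L then minimizes Σ(u − k·v)², and setting the derivative to zero gives the closed form k = Σ(u·v) / Σ(v²). Histogram equalization is computed here as the empirical cumulative distribution of the luminance. The curve form and the function names are assumptions, not the paper's equations.

```python
import numpy as np


def histogram_equalization(lum):
    """Empirical CDF value of every pixel, i.e. the equalized luminance in (0, 1]."""
    flat = lum.ravel()
    ranks = np.searchsorted(np.sort(flat), flat, side="right")  # count of values <= x
    return (ranks / flat.size).reshape(lum.shape)


def fit_parameter(lum, he, eps=1e-6):
    """Closed-form least squares k for the assumed curve D = L / (L + k).

    Reciprocals give 1/D = 1 + k/L, so minimizing sum (1/H - 1 - k/L)^2
    yields k = sum(u * v) / sum(v * v) with u = 1/H - 1 and v = 1/L.
    """
    u = 1.0 / np.maximum(he, eps) - 1.0
    v = 1.0 / np.maximum(lum, eps)
    return float(np.sum(u * v) / np.sum(v * v))


def tone_curve(lum, k):
    """Assumed global tone curve D = L / (L + k)."""
    return lum / (lum + k)
```

Usage would be `k = fit_parameter(lum, histogram_equalization(lum))` followed by `tone_curve(lum, k)`. Because k is refitted for each image, brighter or darker scenes receive different curves, which is the adaptivity the section describes; the smooth curve also avoids the abrupt jumps that raw histogram equalization can introduce.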

3.4. LDR Image Generation

3.4.1. Detail Layer Estimation

The maximum of the three RGB channels of each pixel is taken as the luminance component of the image, as shown in Equation (14).

where is the per-pixel maximum of the RGB channels, which is used to normalize the RGB colors. The color component of each channel is then calculated as shown in Equation (15).

Based on the illumination estimate obtained above, the detail layer of the image is obtained as shown in the following equation:

where is the image detail layer.
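Equations (14) to (16) can be sketched as follows. The max-channel luminance and the per-channel normalization follow the text directly; the detail layer assumes the log-domain form of the Retinex model (image = reflectance × illumination, so log reflectance = log image − log illumination), which the text does not state explicitly. The function name `decompose` is hypothetical.

```python
import numpy as np


def decompose(rgb, illum, eps=1e-6):
    """Max-channel luminance, normalized colors, and a Retinex detail layer.

    rgb: (H, W, 3) array; illum: (H, W) illumination estimate.
    """
    v = rgb.max(axis=2)                              # Eq. (14): per-pixel max of R, G, B
    chroma = rgb / np.maximum(v, eps)[..., None]     # Eq. (15): colors normalized by v
    detail = np.log(v + eps) - np.log(illum + eps)   # assumption: log-domain Retinex
    return v, chroma, detail
```

With this normalization the largest channel of every pixel becomes 1, so the color information is stored separately from the luminance and can be reapplied after the luminance has been tone mapped.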

3.4.2. Image Fusion and Color Retention

The detail layer and the illumination layer after dynamic range compression are fused to obtain the result, as shown in the following equation:

where is the fused luminance, and and are the reconstruction coefficients of the fusion process. The two coefficients take different values because the illumination layer and the detail layer contain different information. The illumination layer carries the dynamic range of the image, which generally needs to be compressed, so is generally chosen to be less than 1. However, if it is too small, the overall brightness and contrast become low, which degrades image quality. The detail layer carries the image detail information, which generally needs to be enhanced, so is generally chosen to be greater than 1. However, it should not be too large, because excessive detail enhancement creates halo artifacts and an unnatural overall appearance. Based on these considerations, we set and to 0.8 and 1.2, respectively.
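Equation (17) is missing; reading the description literally, the fusion is a weighted sum of the compressed illumination layer and the detail layer with α = 0.8 and β = 1.2. The sketch below assumes exactly that, plus a final clip to the display range [0, 1], which is an added assumption rather than something the text specifies. The function name is hypothetical.

```python
import numpy as np


def fuse(illum_compressed, detail, alpha=0.8, beta=1.2):
    """Weighted-sum fusion of the compressed illumination and detail layers."""
    fused = alpha * illum_compressed + beta * detail
    return np.clip(fused, 0.0, 1.0)   # assumption: keep the result in [0, 1]
```

Under this reading, lowering `alpha` darkens the image and flattens its contrast, while raising `beta` amplifies detail, which matches the trade-offs the paragraph above describes for the two coefficients.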

After the above processing, the color information needs to be recovered, as shown in the following equation.

where ,, are the three channels after color recovery, and s is the gamma correction factor that determines the color saturation of the recovered image. Most LCD monitors are designed for a gamma value of 2.2, and such a monitor usually reproduces close-to-ideal colors. Therefore, we set s = 1/2.2 ≈ 0.45 in our method.
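The color recovery equation is also missing. A common form in tone mapping, assumed here, raises each normalized color component to the power s and multiplies by the fused luminance: C_out = (C / V)^s · L_out, where C / V is the normalized color from Equation (15). The function name is hypothetical.

```python
import numpy as np


def recover_color(chroma, fused_lum, s=1 / 2.2):
    """Assumed color recovery: C_out = (C / V)^s * L_out for each channel.

    chroma: (H, W, 3) colors normalized by the max channel; fused_lum: (H, W).
    Since s < 1 pulls normalized components toward 1, a smaller s lowers saturation.
    """
    return np.power(chroma, s) * fused_lum[..., None]
```

The channel that was the maximum (normalized value 1) ends up exactly equal to the fused luminance, so the luminance computed by the pipeline is preserved while s controls only how strongly the other channels differ from it.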

4. Experimental Results and Analysis

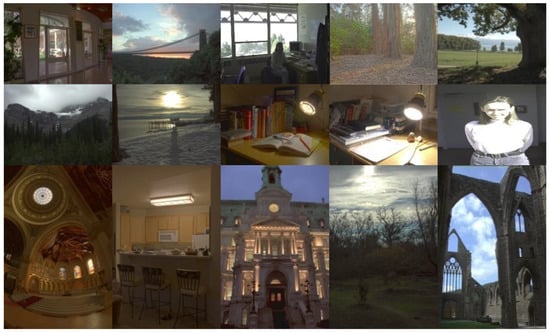

An experimental platform with an Intel Core i5-6300HQ processor, 8 GB of memory, the 64-bit Windows 10 operating system, and MATLAB R2016b was used to verify the feasibility of the proposed method. The LDR images were displayed on a Samsung LCD monitor, model S32AM700PC. HDR images from the TMQI database [14] were selected for comparison and analysis; all images in the database are shown in Figure 4. The dataset contains high dynamic range real-world scenes, such as landscape and architecture photos. A total of 15 HDR images selected from the database were used to evaluate the LDR images tone-mapped by TVI-TMO [3], the method of Li et al. [7], the method of Aziz et al. [1], the method of Gu et al. [15], the method of Shibata et al. [16], and the proposed method. The tone-mapped images were assessed both subjectively and objectively.

Figure 4.

TMQI dataset.

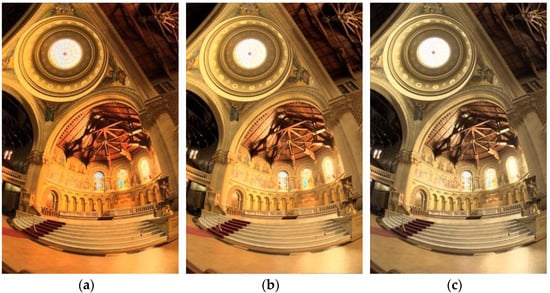

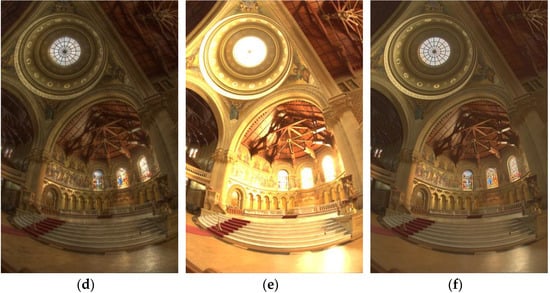

4.1. Subjective Effect Analysis

The three sets of experimental results are shown in Figure 5, Figure 6, and Figure 7, respectively. TVI-TMO retains the background information and enhances the details, but the overall brightness varies widely. The result of Li et al. has clearer detail, but the overall picture does not look realistic. The result of Aziz et al. has good overall brightness, but details are incomplete in extremely bright areas. The result of Gu et al. is clear, but there are halo artifacts at the edges of the image. The result of Shibata et al. has a good overall appearance and clear details, but its brightness is low. The proposed method produces a good sense of tonal hierarchy and moderate brightness, effectively preserves the edges of the original image, and avoids halo artifacts, in line with human visual perception.

Figure 5.

The tone-mapped images of the church. (a) Result of TVI-TMO; (b) result of Li et al.; (c) result of Aziz et al.; (d) result of Gu et al.; (e) result of Shibata et al.; (f) result of the proposed method.

Figure 6.

The tone-mapped images of the landscape. (a) Result of TVI-TMO; (b) result of Li et al.; (c) result of Aziz et al.; (d) result of Gu et al.; (e) result of Shibata et al.; (f) result of the proposed method.

Figure 7.

The tone-mapped images of the architecture. (a) Result of TVI-TMO; (b) result of Li et al.; (c) result of Aziz et al.; (d) result of Gu et al.; (e) result of Shibata et al.; (f) result of the proposed method.

Figure 6 and Figure 7 show that, compared with the proposed method, the method of Gu et al. appears clearer but does not generalize well: the quality of its LDR images varies significantly across scenes. Moreover, it suffers from halo artifacts, an unnatural appearance, color distortion, and exaggerated detail. For example, the edge between the leaves and the sky in Figure 6d shows clear halos, the details of the trunk and the sunroof in Figure 5d and Figure 6d are exaggerated, there are halo artifacts at the edges between the buildings and the sky in Figure 7d, and all three images produced by the method of Gu et al. have low saturation. This shows that the method of Gu et al. makes the output image look unnatural to some extent.

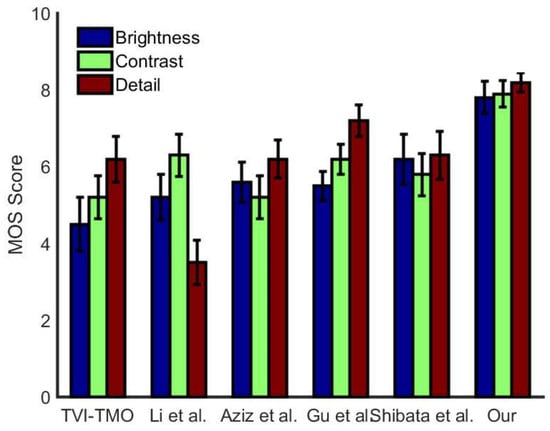

We use the mean opinion score (MOS) [7] to evaluate each compared method subjectively. Each participant scores each image on brightness, contrast, and detail according to its subjective visual effect, and the tone-mapped images were displayed on the monitor one by one. Eight experienced female and nine experienced male volunteers participated, and each volunteer scored the images on a scale of 1 to 10, where 1 represents the worst visual quality and 10 the best. All the images in the TMQI database were scored, and the MOS of each method was computed. The resulting MOS statistics are shown in Figure 8; higher values indicate better image quality after tone mapping.

Figure 8.

Statistical chart of MOS scores.

In Figure 8, the horizontal axis lists the compared methods and the vertical axis shows the MOS. The error bar at the top of each bar represents the standard deviation of the scores given by different participants.
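The MOS and its error bar can be computed as in the small sketch below. The text does not say exactly how the standard deviation is taken, so the sketch assumes that each participant's ratings of one method are first averaged and that the error bar is the sample standard deviation of those per-participant averages. The function name is hypothetical.

```python
import numpy as np


def mos_with_error(scores):
    """MOS and error bar for one method.

    scores: (participants, images) array of ratings in [1, 10].
    Assumption: the error bar is the sample standard deviation (ddof=1)
    of each participant's average rating.
    """
    scores = np.asarray(scores, dtype=float)
    per_person = scores.mean(axis=1)       # each participant's average rating
    return float(per_person.mean()), float(per_person.std(ddof=1))
```

For example, three participants rating two images as [[8, 6], [6, 4], [10, 8]] have averages 7, 5, and 9, giving an MOS of 7 with a standard deviation of 2. A small error bar therefore means that participants agreed about the method, which is the sense in which Section 4.1 calls the proposed method stable.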

Note that although a few LDR images produced by the method of Gu et al. are clearer than ours, the method easily produces an unnatural effect by introducing halo artifacts, color distortion, and exaggerated detail. More importantly, it does not generalize across scenes. Thus, on average, the MOS of the images produced by the method of Gu et al. is slightly lower than that of our method.

According to the scoring results, most participants rated the results of the proposed method highly. The proposed method also has the smallest standard deviation, which indicates that it adapts to variations between scenes, that its tone-mapped images agree with the visual perception of most people, and that its ratings are stable across individuals.

4.2. Objective Evaluation Effect Analysis

We used the tone mapping quality index (TMQI) [14] to evaluate the tone-mapped results objectively and more comprehensively. TMQI consists of three metrics: structural fidelity, naturalness, and an overall quality score. Structural fidelity measures how well the LDR image preserves the structural information of the original HDR image. Naturalness is derived from the luminance statistics of a large number of natural images; the larger the value, the more suitable the image is for human observation. The overall quality score combines the first two metrics. The TMQI scores are shown in Table 1.

Table 1.

Tone mapping TMQI scores.

In addition, the mean local Weber contrast (MLWC) [17], the natural image quality evaluator (NIQE) [18], and the blind/referenceless image spatial quality evaluator (BRISQUE) [19] are used to further evaluate each method. MLWC is a perceived-contrast measure based on Weber's law that accounts for the sensitivity of human vision to the spatial frequency content of an image. It evaluates image detail, and a higher MLWC score indicates richer detail in the LDR image. NIQE extracts a set of local features from an image and evaluates its quality with a multivariate Gaussian model; a lower NIQE score indicates higher image quality. BRISQUE compares the processed image with a default model computed from natural-scene images with similar distortions; a lower score indicates better perceptual quality. The scores for these metrics are shown in Table 2 and Table 3.
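To illustrate the idea behind a local Weber contrast measure, the sketch below averages |L − B| / B over the image, where the background B is the 3 × 3 local mean. This is a simplified stand-in chosen for illustration; it is not necessarily the exact MLWC definition in [17], and the function name and window size are assumptions.

```python
import numpy as np


def mean_local_weber_contrast(lum, eps=1e-6):
    """Simplified mean local Weber contrast: mean of |L - B| / B, B = 3x3 mean."""
    h, w = lum.shape
    p = np.pad(lum, 1, mode="edge")
    background = sum(p[i:i + h, j:j + w] for i in range(3) for j in range(3)) / 9.0
    return float(np.mean(np.abs(lum - background) / (background + eps)))
```

Dividing by the local background is what makes the measure follow Weber's law: the same absolute luminance difference counts for more in a dark region than in a bright one, so an operator that preserves texture in the shadows scores higher.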

Table 2.

Tone mapping MLWC and NIQE scores.

Table 3.

Tone mapping BRISQUE scores.

As Table 1 shows, the TMQI score of the proposed method is higher than those of the other methods, which indicates that it preserves structure better and produces a visual effect closer to the real scene. Table 2 shows that its MLWC score is also higher than those of the other methods, indicating richer detail. Its NIQE and BRISQUE scores are lower than those of several other methods, which indicates better image quality than those methods.

5. Conclusions

In this paper, we proposed a tone mapping method based on the least squares method, with the aim of revealing the local contrast of real-world scenes on a conventional monitor. Based on the classical Retinex model, we estimated the illumination with inter-pixel weights obtained by least squares and introduced boundary-aware weights to prevent halo artifacts; the detail layer was then obtained from this illumination estimate. Because the weights take both luminance intensity and pixel position into account, they prevent halo artifacts while ensuring an accurate estimate of the illumination. A global tone mapping function with one parameter is applied to the illumination estimate to compress the dynamic range. The parameter is obtained by fitting the function to the histogram equalization result, so each image receives its own parameter value, which makes the dynamic range compression more effective across different images. The detail layer and the compressed illumination layer are then fused to obtain the LDR image. The high dynamic range scenes in the TMQI dataset were used for comparative analysis from both subjective and objective perspectives. The experimental results show that details in both dark and bright regions are preserved and that no halo artifacts appear. The LDR images produced by the proposed method achieve a TMQI score of 0.893 and an MLWC score of 1.654, and their detail richness, structural fidelity, and visual effect are better than those of the other methods to varying degrees. This paper focused on illumination estimation and dynamic range compression in tone mapping; in future work, we will apply tone mapping to video processing.

Author Contributions

Conceptualization, L.Z.; methodology, L.Z. and J.W.; software, G.L.; validation, L.Z., G.L. and J.W.; formal analysis, G.L.; investigation, G.L.; resources, J.W.; data curation, L.Z.; writing—original draft preparation, G.L.; writing—review and editing, J.W.; visualization, J.W.; supervision, L.Z.; project administration, L.Z. and J.W.; funding acquisition, L.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Quzhou Science and Technology Plan Project, grant number 2022K108; Heilongjiang Provincial Natural Science Foundation of China, grant number YQ2022F014; and Basic Scientific Research Foundation Project of Provincial Colleges and Universities in Heilongjiang Province, grant number 2022KYYWF-FC05.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors acknowledge Quzhou Science and Technology Plan Project (grant number 2022K108), Heilongjiang Provincial Natural Science Foundation of China (grant number YQ2022F014), and Basic Scientific Research Foundation Project of Provincial Colleges and Universities in Heilongjiang Province (grant number 2022KYYWF-FC05).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Khan, I.R.; Aziz, W.; Shim, S.O. Tone Mapping Using Perceptual-quantizer and Image Histogram. IEEE Access 2020, 12, 12–15.

- Choi, H.H.; Kang, H.S.; Yun, B.J. A Perceptual Tone Mapping HDR Images Using Tone Mapping Operator and Chromatic Adaptation Transform. Imaging Sci. Technol. 2017, 61, 15–20.

- Khan, I.R.; Rahardja, S.; Khan, M.M.; Movania, M.M.; Abed, F.A. Tone Mapping Technique Based on Histogram Using a Sensitivity Model of The Human Visual System. IEEE Trans. Ind. Electron. 2018, 65, 3469–3479.

- Husseis, A.; Mokraoui, A.; Matei, B. Revisited Histogram Equalization as HDR Images Tone Mapping Operators. IEEE Comput. Soc. 2017, 15, 144–149.

- Chien, B.; Mokraoui, A.; Matei, B. Contrast Enhancement and Details Preservation of Tone Mapped High Dynamic Range Images. Image Represent. 2018, 58, 22–26.

- Kai-Fu, Y.; Hui, L.; Hulin, K.; Chao-Yi, L.; Yong-Jie, L. An Adaptive Method for Image Dynamic Range Adjustment. IEEE Trans. Circuits Syst. Video Technol. 2019, 29, 15–18.

- Hui, L.; Xixi, J.; Zhang, L. Clustering Based Content and Color Adaptive Tone Mapping. Comput. Vis. Image Underst. 2018, 168, 15–20.

- Thai, B.C.; Mokraoui, A. HDR Image Tone Mapping Histogram Adjustment with Using an Optimized Contrast Parameter. In Proceedings of the International Symposium on Signal, Image, Video and Communications (ISIVC), Rabat, Morocco, 27–30 November 2018; Volume 12, pp. 15–20.

- Fahim, M.A.N.I.; Jung, H.Y. Fast Single-Image HDR Tone Mapping by Avoiding Base Layer Extraction. Sensors 2020, 20, 4378.

- Gommelet, D.; Roumy, A.; Guillemot, C.; Ropert, M.; Julien, L. Gradient-Based Tone Mapping for Rate-Distortion Optimized Backward-Compatible High Dynamic Range Compression. IEEE Trans. Image Processing 2017, 15, 12–18.

- Liang, Z.; Xu, J.; Zhang, D. A Hybrid l1-l0 layer Decomposition Model for Tone Mapping. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4758–4766.

- Rana, A.; Singh, P.; Valenzise, G.; Dufaux, F.; Komodakis, N.; Smolic, A. Deep Tone Mapping Operator for High Dynamic Range Images. IEEE Trans. Image Processing 2019, 29, 18–25.

- Hu, L.; Chen, H.; Allebach, J.P. Joint Multi-Scale Tone Mapping and Denoising for HDR Image Enhancement. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; Volume 26, pp. 729–738.

- Yeganeh, H.; Wang, Z. Objective Quality Assessment of Tone-mapped Images. IEEE Trans. Image Processing 2012, 22, 657–667.

- Gu, B.; Li, W.; Zhu, M. Local Edge-preserving Multiscale Decomposition for High Dynamic Range Image Tone Mapping. IEEE Trans. Image Processing 2012, 22, 70–79.

- Shibata, T.; Tanaka, M. Gradient-domain Image Reconstruction Framework with Intensity-range and Base-structure Constraints. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27 June 2016; pp. 2745–2753.

- Khosravy, M.; Gupta, N.; Marina, N. Perceptual Adaptation of Image Based on Chevreul–Mach Bands Visual Phenomenon. IEEE Signal Processing Lett. 2017, 24, 594–598.

- Mittal, A.; Soundararajan, R.; Bovik, A.C. Making a “completely blind” image quality analyzer. IEEE Signal Process. Lett. 2012, 20, 209–212.

- Mittal, A.; Moorthy, A.K.; Bovik, A.C. No-reference image quality assessment in the spatial domain. IEEE Trans. Image Process. 2012, 21, 4695–4708.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).