Abstract

In this paper, we investigate the problem of cross-corpus speech emotion recognition (SER), in which the training (source) and testing (target) speech samples belong to different corpora. This leads to a mismatch between the feature distributions of the source and target speech samples, so the performance of most existing SER methods drops sharply. To solve this problem, we propose a simple yet effective transfer subspace learning method called joint distribution implicitly aligned subspace learning (JIASL). The basic idea of JIASL is straightforward: build an emotion-discriminative and corpus-invariant linear regression model under an implicit distribution alignment strategy. Following this idea, we first use the source speech features and emotion labels to endow the regression model with emotion-discriminative ability. Then, a well-designed reconstruction regularization term, which jointly considers the marginal and conditional distribution alignments between the speech samples of both corpora, is adopted to implicitly enable the regression model to predict the emotion labels of target speech samples. To evaluate JIASL, extensive cross-corpus SER experiments are carried out, and the results demonstrate its promising performance in coping with cross-corpus SER tasks.

1. Introduction

Speech emotion recognition (SER) aims to enable computers to automatically understand the emotional states conveyed by speech signals [1,2,3], which allows a more natural interaction between humans and computers. For this reason, SER has attracted wide attention from researchers in speech signal processing, affective computing, pattern recognition, and human-computer interaction [1,2,3,4]. Over the past several decades, many SER methods have been proposed and have achieved promising performance on widely used speech emotion corpora [3,5,6]. However, these methods generally do not consider real-world scenarios [7,8,9,10], in which the training and testing speech samples may be recorded with different microphones, in different environments, by different speakers, or in different languages. In such cases, the training and testing speech samples are likely to have inconsistent feature distributions, which markedly degrades performance when a model trained on the training data is applied to new testing data. Hence, it is worthwhile to investigate a more challenging SER task, in which the SER model is trained on one (or several) dataset(s) and tested on other datasets, i.e., cross-corpus SER.

Different from ordinary SER, in cross-corpus SER the labeled training data (source domain) and the unlabeled testing data (target domain) come from different speech corpora, following the definitions of transfer learning (TL) and domain adaptation (DA) [9,11,12,13,14]. Consequently, a corpus bias [9], caused by the inconsistent feature distributions of the speech samples, generally exists between the source and target domains. Researchers have made great efforts in recent years to deal with this bias in cross-corpus SER. For example, in [10], which is probably the earliest work to formalize cross-corpus SER, Schuller et al. systematically defined the setting of cross-corpus SER tasks and investigated how to solve this problem by eliminating the distribution gap between the source and target speech samples. Additionally, they used a set of normalization schemes, including speaker normalization (SN), corpus normalization (CN), and speaker-corpus normalization (SCN), to investigate cross-corpus SER.

Subsequently, TL- and DA-based methods were gradually applied to cross-corpus SER [9,12,15]. In [12], Zong et al. proposed a domain-adaptive least-squares regression (DaLSR) model guided by a regularization term consisting of first- and second-order moments to learn a corpus-independent regression matrix. Furthermore, Liu et al. [15] presented a simple yet effective transfer subspace learning method called domain-adaptive subspace learning (DoSL), which measures the distribution gap between the source and target speech samples using only the first-order moment (i.e., the mean). More recently, Song [9] investigated a straightforward transfer subspace learning (TSL) model that bridges the feature distribution gap across corpora by means of the maximum mean discrepancy (MMD) [16,17].

In addition to traditional subspace learning-based methods, deep learning-based methods [18,19,20,21,22], e.g., convolutional neural networks (CNNs) and recurrent neural networks (RNNs), have achieved promising performance in cross-corpus SER by taking advantage of their powerful representation capability. Parry et al. [22] investigated the generalization of several deep learning architectures, e.g., CNN, long short-term memory (LSTM), and CNN-LSTM, on different cross-corpus SER tasks. In [18,19], deep neural networks were combined with DA to learn corpus-invariant features of emotional speech. Moreover, [20,21] used domain adversarial learning to reduce the domain shift between training and testing data.

Basically, these methods mainly aim to learn a common emotion feature subspace in which the marginal feature distributions of the source and target domains are as close as possible. In cross-corpus SER, however, such a common subspace is incomplete, because the emotion features of speech samples are susceptible to background noise, speaker identity, and language information, which leads to feature confusion [8,9,10]. To maintain the discriminativeness of emotion features, adapting both the marginal and conditional distributions, i.e., joint distribution adaptation (JDA), which has been successful in image classification [14,23,24], offers a promising way to deal with cross-corpus SER. Zhang et al. [25] proposed joint distribution adaptive regression (JDAR), which integrates the conditional distribution into MMD for fine-grained alignment of the domain shift. Even so, JDAR is weak in dealing with outlier samples, which can leave large domain discrepancies between the training and testing data.

Inspired by the success of the above TL- and DA-based methods, in this paper we propose a novel method called joint distribution implicitly aligned subspace learning (JIASL) for the cross-corpus SER problem. Compared with the aforementioned methods, JIASL has three advantages. (1) It adopts the idea of a recent, widely used approach to distribution gap alignment, i.e., JDA [14,23,25], which jointly considers the marginal feature distribution and the class-aware conditional distribution. (2) More importantly, instead of directly minimizing statistical moments, it reconstructs the target speech features from the source features to implicitly remove the feature distribution mismatch between the original source and target speech feature sets. (3) Meanwhile, it restricts the influence of outlier source features on the reconstruction of the target domain through sparsity constraints in the joint distribution alignment. Owing to these properties, JIASL can learn a corpus-invariant projection matrix that predicts the emotion labels of target speech samples, even though only the source emotion labels are given. To evaluate JIASL, we design cross-corpus SER tasks based on three publicly available speech emotion corpora, i.e., EmoDB (Berlin) [26], eNTERFACE [27], and CASIA [28], and conduct extensive experiments. Experimental results show that, compared with current state-of-the-art transfer subspace learning methods, JIASL achieves more promising performance on cross-corpus SER tasks.

2. The Proposed Method

In this section, we describe the proposed JIASL in detail, provide its optimization algorithm, and illustrate its application to cross-corpus SER.

2.1. Notations

Here, we introduce the notations needed to formulate JIASL. According to the task setting of cross-corpus SER [9,12], the source speech samples are provided together with their emotion labels, whereas the target speech samples are unlabeled. We denote the feature matrix of the source data as and its label matrix as , where is the acoustic feature vector of the ith speech sample. Note that we adopt one-hot labels to represent (i.e., the ith column of ), so is a one-hot vector: its kth entry is set to 1 if the corresponding speech sample belongs to the kth emotion, and the remaining entries are all set to 0. Similarly, the feature matrix of the target speech samples is denoted as , where is the feature vector of the jth speech sample in the target domain.
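As a small illustration of the label convention above, the following NumPy sketch builds a one-hot label matrix with one column per speech sample. Storing samples as columns (so that the label matrix has shape c × n, with c emotions) is an assumption based on the text's description of each label vector as a column of the label matrix; the helper name `one_hot_labels` is hypothetical.

```python
import numpy as np


def one_hot_labels(labels, n_classes):
    """Return an (n_classes, n_samples) matrix whose ith column is the one-hot label of sample i.

    `one_hot_labels` is a hypothetical helper; column-per-sample storage is assumed.
    """
    labels = np.asarray(labels, dtype=int)
    Y = np.zeros((n_classes, labels.size))
    # Put a 1 in row labels[i] of column i; every other entry of that column stays 0.
    Y[labels, np.arange(labels.size)] = 1.0
    return Y


# Example: three source samples labeled with emotions 2, 0 and 1 (c = 3).
Y_s = one_hot_labels([2, 0, 1], n_classes=3)
print(Y_s)
```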

2.2. Formulation of JIASL

The basic idea of JIASL is straightforward: build a subspace learning model that learns an emotion-discriminative and corpus-invariant projection matrix for cross-corpus SER. Following this idea, we design the optimization problem of JIASL as follows:

where is the projection matrix that JIASL aims to learn, are the reconstruction coefficients (detailed below), and and denote the indices of speech samples and emotions, respectively. is a trade-off parameter that controls the balance between the two terms.

Equation (1) shows that the objective function of JIASL has two major terms, which correspond to the two abilities required by the basic idea of JIASL, i.e., emotion discrimination and corpus invariance. For the first ability, following [12,15], is designed as a simple group-sparse linear regression loss, formulated as follows:

where is a trade-off parameter balancing the two terms. The projection matrix regresses the source features onto the label space so that emotion discrimination is preserved in feature learning. Meanwhile, the $\ell_{2,1}$ norm on imposes group sparsity on whole rows, so that only the feature dimensions that contribute to emotion recognition are selected.
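The regression term can be sketched in NumPy as follows. Because the equation is not reproduced here, the form used below, $\|\mathbf{Y}_s - \mathbf{U}^\top\mathbf{X}_s\|_F^2 + \mu\|\mathbf{U}\|_{2,1}$ with a d × c projection matrix whose rows correspond to feature dimensions, is an assumption modeled on the least-squares regression of [12,15]; the symbols and function names are illustrative. The sketch also includes row-wise shrinkage, the proximal operator of the $\ell_{2,1}$ norm that ADM-style solvers typically use for this penalty, which shows concretely how the penalty removes whole feature dimensions.

```python
import numpy as np


def l21_norm(U):
    """Sum of the Euclidean norms of the rows of U (the l2,1 norm)."""
    return float(np.sum(np.linalg.norm(U, axis=1)))


def regression_loss(U, X_s, Y_s, mu):
    """Assumed group-sparse regression loss: ||Y_s - U^T X_s||_F^2 + mu * ||U||_{2,1}.

    X_s: (d, n_s) source features, one sample per column.
    Y_s: (c, n_s) one-hot source labels, one sample per column.
    U:   (d, c) projection matrix; row r belongs to feature dimension r.
    """
    residual = Y_s - U.T @ X_s
    return float(np.sum(residual ** 2)) + mu * l21_norm(U)


def prox_l21(U, t):
    """Row-wise shrinkage: the proximal operator of t * ||U||_{2,1}.

    Rows whose norm does not exceed t become exactly zero, i.e., the
    corresponding feature dimension is discarded.
    """
    norms = np.linalg.norm(U, axis=1, keepdims=True)
    scale = np.maximum(1.0 - t / np.maximum(norms, 1e-12), 0.0)
    return scale * U
```

For instance, applying `prox_l21` with t = 1 to a matrix with rows [3, 4] and [0.1, 0] keeps the first row (scaled to [2.4, 3.2]) and zeroes the second, weak row.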

The second term, , corresponds to the corpus-invariant ability in emotion feature learning. We achieve this goal from two aspects:

First, instead of directly minimizing the discrepancy between statistics of the two corpora, such as the mean, covariance, or MMD, we propose to implicitly alleviate the feature distribution mismatch between the source and target speech corpora. The advantage is that the estimate of the domain shift is not restricted by a particular discrepancy measure but is obtained gradually through parameter optimization.

Specifically, inspired by common subspace learning works [12,29], we reconstruct each target speech sample from a subset of the source samples, which guides the learning of the projection matrix in JIASL from a sample-level view. This is formulated as the following sparse optimization problem:

where is a trade-off parameter, denotes the jth column of , and is the reconstruction coefficient vector corresponding to the target speech sample . The reconstruction strategy aims to narrow the bias between the source and target domains in the common emotion feature subspace. Meanwhile, to avoid interference from outlier source samples in the reconstruction, the $\ell_1$ norm is imposed on the reconstruction coefficients, which makes them sparse so that redundant source samples are excluded. Extending the reconstruction to all target speech samples, we arrive at the total reconstruction optimization problem:

where .
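The sample-level reconstruction can be illustrated with a short NumPy sketch. We assume here that the per-sample problem has the lasso form $\min_{\mathbf{z}_j}\|\mathbf{U}^\top\mathbf{x}^t_j - \mathbf{U}^\top\mathbf{X}_s\mathbf{z}_j\|_2^2 + \tau\|\mathbf{z}_j\|_1$ and that the total problem stacks the coefficient vectors as the columns of a matrix; these symbols are illustrative because the original equations are not shown. The solver is plain ISTA (proximal gradient with soft-thresholding), substituted for brevity; it is not the ADM procedure used for JIASL.

```python
import numpy as np


def soft_threshold(V, t):
    """Entry-wise shrinkage: the proximal operator of t * ||V||_1."""
    return np.sign(V) * np.maximum(np.abs(V) - t, 0.0)


def reconstruct_target(U, X_s, X_t, tau, n_iter=500):
    """Sparse coefficients Z (n_s, n_t) such that U^T X_t is approximately U^T X_s Z.

    Solves min_Z ||U^T X_t - U^T X_s Z||_F^2 + tau * ||Z||_1 with ISTA.
    The objective separates over the columns of Z, so this equals solving one
    lasso problem per target sample.
    """
    A = U.T @ X_s                           # projected source samples, (c, n_s)
    B = U.T @ X_t                           # projected target samples, (c, n_t)
    L = 2.0 * np.linalg.norm(A, 2) ** 2     # Lipschitz constant of the gradient
    Z = np.zeros((X_s.shape[1], X_t.shape[1]))
    if L == 0.0:
        return Z
    for _ in range(n_iter):
        grad = -2.0 * A.T @ (B - A @ Z)     # gradient of the squared reconstruction error
        Z = soft_threshold(Z - grad / L, tau / L)
    return Z
```

With a large `tau`, every coefficient is driven to zero; with a small one, each target sample is explained by only the few source samples that reconstruct it best, which is the sparsity effect described above.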

Second, JIASL also adopts the idea of jointly aligning the marginal and class-aware conditional feature distributions (i.e., JDA) to pursue fine-grained domain alignment; the effectiveness of JDA has been demonstrated in other domain adaptation tasks [23,25]. By incorporating the JDA idea into Equation (4) according to [23,25], the above reconstruction objective is extended to the following formulation, which eventually serves as the for JIASL:

where is the emotion class-aware reconstruction coefficient matrix, , and and denote the numbers of speech samples belonging to the kth emotion class, satisfying and . Similar to , can also be rewritten as , where are the columns of .
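For contrast with the implicit, reconstruction-based alignment, the sketch below computes the explicit quantity that JDA-style methods minimize: the squared distance between the projected source and target means (a linear-kernel MMD) for the marginal distribution, plus the same distance computed per emotion class using target pseudo-labels. It illustrates the JDA idea of [23] under a linear kernel; it is not JIASL's class-aware reconstruction term, and the function name and arguments are hypothetical.

```python
import numpy as np


def joint_mean_discrepancy(U, X_s, y_s, X_t, y_t_pseudo, n_classes):
    """Marginal plus class-conditional squared mean distance after projection by U.

    X_s: (d, n_s) and X_t: (d, n_t), one sample per column.
    y_s: true source labels; y_t_pseudo: predicted (pseudo) target labels.
    """
    y_s, y_t_pseudo = np.asarray(y_s), np.asarray(y_t_pseudo)
    P_s, P_t = U.T @ X_s, U.T @ X_t          # projected features, one column per sample
    # Marginal term: distance between the overall source and target means.
    total = float(np.sum((P_s.mean(axis=1) - P_t.mean(axis=1)) ** 2))
    # Conditional terms: one per emotion class, skipped if either side has no samples.
    for k in range(n_classes):
        src_k, tgt_k = P_s[:, y_s == k], P_t[:, y_t_pseudo == k]
        if src_k.shape[1] > 0 and tgt_k.shape[1] > 0:
            total += float(np.sum((src_k.mean(axis=1) - tgt_k.mean(axis=1)) ** 2))
    return total
```

Shifting every target sample by the same vector raises both the marginal term and each conditional term, whereas a shift confined to one emotion class mainly raises that class's conditional term; this class-level sensitivity is what the conditional alignment adds over marginal-only methods.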

2.3. JIASL for Cross-Corpus SER

After constructing the JIASL model, we apply it to the cross-corpus SER task as follows. Given the speech features of the labeled source and unlabeled target domains, we first optimize Equation (6) to obtain the optimal projection matrix corresponding to . Once is learned, the target emotion labels can be predicted conveniently. Specifically, suppose we have a speech feature vector from the target corpus. Its emotion label is then determined according to the following criterion:

where is the kth entry of the label vector .
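A minimal sketch of the prediction rule, assuming that the label vector of a target sample is obtained by projecting its feature vector with the learned matrix (i.e., U^T x, mirroring the regression onto one-hot labels) and that the predicted emotion is the index of the largest entry. The same helper can produce the one-hot pseudo-label matrix that the class-aware alignment needs; both function names are hypothetical.

```python
import numpy as np


def predict_labels(U, X_t):
    """Predicted emotion index per target column: argmax over k of the kth entry of U^T x."""
    return np.argmax(U.T @ X_t, axis=0)


def pseudo_label_matrix(U, X_t):
    """One-hot (c, n_t) matrix of the predicted labels, e.g., for class-aware alignment."""
    c = U.shape[1]
    return np.eye(c)[:, predict_labels(U, X_t)]
```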

2.4. Optimization of JIASL

Since the objective function in Equation (6) contains several non-smooth regularization terms, such as the $\ell_1$ norm and the $\ell_{2,1}$ norm, it has no closed-form solution. Hence, following [12,31], we adopt the alternating direction method (ADM) [30] to optimize JIASL. Because the target labels are unknown, we first initialize the projection matrix, which is needed to compute the reconstruction term for the emotion class-aware conditional distribution alignment. Then, we repeat the following two major steps until convergence:

(1) Predict the target emotion labels with Equation (7) based on , and then determine and according to the predicted target emotion labels;

(2) Solve the optimization problem in Equation (6), whose detailed solving procedures are summarized in Algorithm 1.

Algorithm 1. Detailed procedures for solving the optimization problem in Equation (6).

Repeat the following steps until convergence:
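The outer alternation described above (initialize the projection matrix, predict target pseudo-labels, re-solve, repeat) can be sketched as a loop. The inner solver here is a deliberately simple, hypothetical stand-in: ridge regression on the source samples plus the pseudo-labeled target samples, i.e., a self-training scheme. It does not solve Equation (6); it only shows how the pseudo-labels and the projection matrix are updated in turn, with a stopping rule based on unchanged pseudo-labels.

```python
import numpy as np


def ridge_solver(X, Y, alpha=1e-2):
    """Hypothetical stand-in inner solver: argmin_U ||Y - U^T X||_F^2 + alpha * ||U||_F^2.

    X: (d, n) features, one sample per column; Y: (c, n) one-hot labels.
    Closed form: U = (X X^T + alpha I)^{-1} X Y^T, with shape (d, c).
    """
    d = X.shape[0]
    return np.linalg.solve(X @ X.T + alpha * np.eye(d), X @ Y.T)


def alternate(X_s, Y_s, X_t, inner_solver=ridge_solver, max_rounds=10):
    """Alternate between pseudo-labeling the target and re-solving for U.

    Stops early once the target pseudo-labels no longer change.
    """
    c = Y_s.shape[0]
    U = inner_solver(X_s, Y_s)                    # initialization from labeled source data
    pseudo = np.argmax(U.T @ X_t, axis=0)         # step (1): predict target labels
    for _ in range(max_rounds):
        Y_t = np.eye(c)[:, pseudo]                # one-hot pseudo-labels, shape (c, n_t)
        # Step (2): re-solve with the current pseudo-labels (stand-in for Equation (6)).
        U = inner_solver(np.hstack([X_s, X_t]), np.hstack([Y_s, Y_t]))
        new_pseudo = np.argmax(U.T @ X_t, axis=0)
        if np.array_equal(new_pseudo, pseudo):
            break
        pseudo = new_pseudo
    return U, pseudo
```

Passing a different `inner_solver` with the same signature is how a real solver for the full objective would slot into this loop.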

3. Experiments

In this section, we conduct extensive experiments to evaluate the proposed JIASL and compare its results with those of state-of-the-art methods on cross-corpus SER tasks.

3.1. Speech Emotion Databases

The cross-corpus SER tasks were designed on three widely used speech emotion corpora, i.e., EmoDB (Berlin) [26], eNTERFACE [27], and CASIA [28].

EmoDB is a German emotional speech database consisting of 535 speech samples covering seven emotions, i.e., happiness (HA), sadness (SA), disgust (DI), anger (AN), boredom (BO), fear (FE), and neutral (NE). Ten German volunteers were asked to express these emotions with prepared texts. Each speech sample is recorded at a sample rate of 16 kHz.

eNTERFACE is an English audio-visual (bimodal) emotion database, of which only the audio is used in our experiments. It contains 1257 samples (excluding the data of the 6th speaker), each labeled as one of six emotions, i.e., happiness (HA), sadness (SA), disgust (DI), fear (FE), anger (AN), and surprise (SU). The speech was recorded from 43 speakers in English at a sample rate of 44 kHz.

CASIA is a Chinese speech emotion corpus that includes 1200 samples of six emotions, i.e., happiness (HA), sadness (SA), neutral (NE), fear (FE), anger (AN), and surprise (SU). The utterances were produced by four Chinese volunteers expressing emotions with specific scripts and were recorded at a sample rate of 16 kHz.

3.2. Experimental Setup

Task Setup and Protocol: In cross-corpus SER, one dataset (or several datasets) serves as the training data and another dataset serves as the target data. Since only unlabeled target data are available in practice, the common protocol uses the training samples and their labels from the source domain together with the unlabeled testing samples from the target domain for domain adaptation. By alternately using any two of the above speech corpora as the source and target, we designed six cross-corpus SER tasks, denoted by B→E, E→B, B→C, C→B, E→C, and C→E, where B, E, and C are the abbreviations of EmoDB, eNTERFACE, and CASIA, respectively; the corpus on the left of the arrow is the source and the one on the right is the target. Note that, since the three corpora have different emotion label sets, in each task we selected only the speech samples whose emotion labels are shared by both corpora. The sample statistics of all six cross-corpus SER tasks are summarized in Table 1.
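The label-matching step can be made concrete with a few lines of Python. The emotion sets are copied from Section 3.1, the task names use "->" in place of the arrow, and the helper name `cross_corpus_tasks` is hypothetical; the per-class sample counts of Table 1 are not reproduced by this sketch.

```python
from itertools import permutations

# Emotion label sets as listed in Section 3.1.
CORPORA = {
    "B": {"HA", "SA", "DI", "AN", "BO", "FE", "NE"},  # EmoDB
    "E": {"HA", "SA", "DI", "FE", "AN", "SU"},        # eNTERFACE
    "C": {"HA", "SA", "NE", "FE", "AN", "SU"},        # CASIA
}


def cross_corpus_tasks(corpora):
    """Map every ordered (source, target) pair to the emotions both corpora share."""
    return {
        f"{src}->{tgt}": sorted(corpora[src] & corpora[tgt])
        for src, tgt in permutations(corpora, 2)
    }


for task, emotions in cross_corpus_tasks(CORPORA).items():
    print(task, emotions)
```

Under these label sets, every task keeps five shared emotions; for example, B->E uses AN, DI, FE, HA, and SA, while any task between B and C replaces DI with NE.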

Table 1.

The sample statistical information in all six cross-corpus SER tasks.

Input Feature: In the experiments, we used the official INTERSPEECH 2009 Emotion Challenge feature set (IS09) [33] and the INTERSPEECH 2010 Paralinguistic Challenge feature set (IS10) [34] to describe the speech signals. The IS09 feature set consists of 384 features, obtained by applying 12 functionals to 32 acoustic low-level descriptors (LLDs), and can be extracted with the openSMILE toolkit [35]. The IS10 feature set consists of 1582 features built from 34 LLDs and 21 functionals, also extracted with openSMILE.

Parameter Setup: For all comparison methods, we searched the trade-off parameters over a preset interval and report the best evaluation metrics (i.e., WAR and UAR), which correspond to the best parameters. For JIASL, we searched , , and over [0.001:0.001:0.009, 0.01:0.01:0.09, 0.1:0.1:0.9, 1:1:9], where START:STEP:END denotes the values from START to END with an increment of STEP.
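The search interval above can be expanded programmatically, as in the following sketch (the helper names are hypothetical). Each of the four segments contributes nine values, giving 36 candidates per parameter; rounding guards against floating-point artifacts such as 3 × 0.1 evaluating to 0.30000000000000004.

```python
from itertools import product


def search_grid():
    """Expand [0.001:0.001:0.009, 0.01:0.01:0.09, 0.1:0.1:0.9, 1:1:9] into a list."""
    grid = []
    for step in (0.001, 0.01, 0.1, 1.0):
        # Each segment runs START:STEP:END with START = STEP and END = 9 * STEP.
        grid.extend(round(k * step, 10) for k in range(1, 10))
    return grid


def parameter_combinations(grid):
    """All joint settings of the three trade-off parameters (hypothetical exhaustive search)."""
    return list(product(grid, repeat=3))
```

If the three parameters are searched jointly, an exhaustive grid has 36^3 = 46,656 combinations.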

Evaluation Metric: Two widely used evaluation metrics, i.e., weighted average recall (WAR) and unweighted average recall (UAR) [10], were adopted to measure cross-corpus SER performance. WAR is the standard accuracy, defined as

$$\mathrm{WAR} = \frac{N_{\mathrm{cor}}}{N},$$

where $N_{\mathrm{cor}}$ and $N$ are the numbers of correctly predicted samples and all testing samples, respectively. UAR is the mean of the class-wise accuracies, defined as

$$\mathrm{UAR} = \frac{1}{c}\sum_{i=1}^{c}\frac{N_{\mathrm{cor}}^{i}}{N^{i}},$$

where $N_{\mathrm{cor}}^{i}$ and $N^{i}$ represent the numbers of correctly predicted samples and total samples of the ith emotion class, respectively, and c is the number of emotions.
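Both metrics can be computed from true and predicted labels with a few lines of NumPy; the function names are illustrative. Note that `uar` averages recall over the classes present in the ground truth, which matches the definition above when every one of the c emotions occurs in the target data.

```python
import numpy as np


def war(y_true, y_pred):
    """Weighted average recall: correctly predicted samples / all testing samples."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    return float(np.mean(y_true == y_pred))


def uar(y_true, y_pred):
    """Unweighted average recall: mean of the per-class recalls (class-wise accuracies)."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    recalls = [np.mean(y_pred[y_true == k] == k) for k in np.unique(y_true)]
    return float(np.mean(recalls))
```

For example, with true labels [0, 0, 0, 1] and predictions [0, 0, 1, 1], WAR is 3/4 = 0.75 while UAR is (2/3 + 1)/2 ≈ 0.833, because UAR gives the minority class the same weight as the majority class.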

3.3. Comparison Methods

We compare JIASL with the following recent, well-performing state-of-the-art methods for cross-corpus SER:

- Baseline method: IS09 or IS10 features with an SVM classifier [5];

- Transfer subspace learning-based methods: Transfer component analysis (TCA) [36]; Geodesic flow kernel (GFK) [37]; Subspace alignment (SA) [38]; Transfer kernel learning (TKL) [13]; Domain-adaptive subspace learning (DoSL) [15]; Joint distribution adaptive regression (JDAR) [25].

3.4. Results and Discussions

Experimental results in terms of WAR are reported in Table 2, from which several interesting observations can be made. (1) With the IS09 feature set, the proposed JIASL achieved the best average WAR over all six cross-corpus SER tasks, reaching , a remarkable increase of over the second-highest average WAR, obtained by GFK [37]. Among the six tasks, JIASL achieved the highest WAR in five, i.e., , , , , and . (2) With the IS10 feature set, JIASL also obtained more competitive performance than the other comparison methods and performed best in five cross-corpus SER tasks, i.e., , , , , and . Both observations demonstrate the superior performance of JIASL over recent state-of-the-art transfer subspace learning methods in coping with cross-corpus SER tasks. In addition, the WAR results based on the IS10 feature set are better than those based on the IS09 feature set, both on average and in most tasks. A likely reason is that the higher-dimensional IS10 feature set contains more emotional information than IS09.

Table 2.

The experimental results in terms of WAR (%) for six designed cross-corpus SER tasks. The best result in each task is highlighted in bold.

Since both the eNTERFACE and EmoDB datasets used in the experiments are class-imbalanced, we also report UAR results to evaluate JIASL and the state-of-the-art methods more comprehensively, as shown in Table 3. Table 3 shows that: (1) with both feature sets, JIASL obtained the highest average UAR, reaching with the IS09 feature set (an improvement of over the second-highest UAR, obtained by DoSL [15]) and with the IS10 feature set (an improvement of over the second-highest UAR, obtained by JDAR [25]); (2) among the six cross-corpus SER tasks, JIASL obtained the highest UAR in five tasks with the IS09 feature set, i.e., , , , , and , and also performed best in five tasks with the IS10 feature set, i.e., , , , , and . These findings indicate that JIASL also achieves the best results in the class-imbalanced case, suggesting that the joint regularization of the marginal and class-aware conditional distributions in JIASL can effectively deal with class-imbalanced datasets.

Table 3.

The experimental results in terms of UAR (%) for six designed cross-corpus SER tasks. The best result in each task is highlighted in bold.

Furthermore, comparing the results in Table 2 and Table 3 yields further findings. For instance, both JIASL and JDAR clearly outperformed the other comparison methods. This supports the view that jointly considering the marginal and class-aware conditional distribution alignments, as JIASL and JDAR do, bridges the distribution gap between two different feature sets more effectively than considering only the marginal one. It is also worth mentioning that JIASL performed better than JDAR, which shows that, within a joint marginal and conditional distribution alignment strategy, our implicit (reconstruction-based) method is more advantageous than the statistical moment-based one.

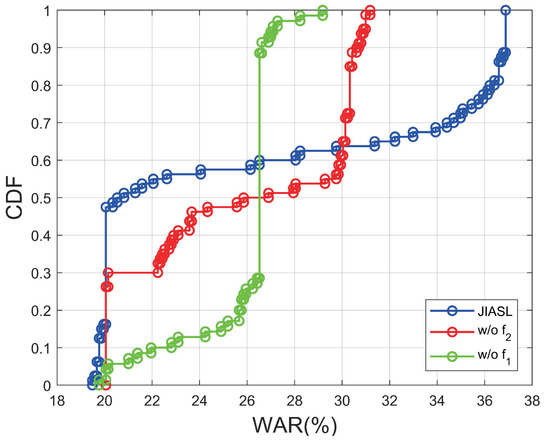

In addition, to evaluate the influence of the proposed optimization terms and on cross-corpus SER, we also calculated the cumulative distribution function (CDF) [39] with respect to WAR on the B → E task, shown in Figure 1, in which each point represents the WAR at a specific iteration step, and w/o and w/o represent JIASL without the terms and , respectively. Figure 1 reveals two main advantages of JIASL. First, JIASL with both optimization terms and achieves the highest WAR compared with the models without or . Second, when the WAR is in the range of 20–26%, the CDF of JIASL is higher than those of JIASL without and . This suggests that JIASL converges faster and reaches a high WAR in more rounds over all iterations.

Figure 1.

The cumulative distribution function (CDF) with respect to WAR for the cross-corpus SER task of B → E, in which w/o and w/o represent the proposed JIASL without the terms and , respectively.
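The empirical CDF used in Figure 1 can be sketched as follows, assuming it is computed over the WAR values recorded at each iteration: F(x) is the fraction of iterations whose WAR does not exceed x. The function name `empirical_cdf` is hypothetical.

```python
import numpy as np


def empirical_cdf(values, thresholds):
    """Fraction of recorded values (e.g., per-iteration WAR) that are <= each threshold."""
    values = np.sort(np.asarray(values, dtype=float))
    # side="right" counts values equal to a threshold as not exceeding it.
    return np.searchsorted(values, thresholds, side="right") / values.size
```

Evaluating this function on the per-iteration WAR values of each model variant over a common set of thresholds yields curves that can be compared directly, as in Figure 1.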

3.5. Parameter Sensitivity Analysis

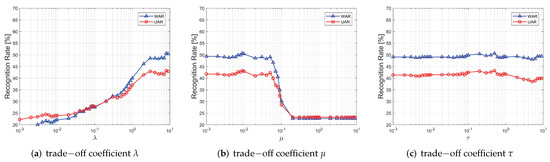

In JIASL, three trade-off coefficients (i.e., , , and ) are adopted to balance the different loss terms. Hence, we conducted additional experiments to analyze the sensitivity of JIASL to these parameters. Figure 2 shows the results of the parameter sensitivity analysis on the cross-corpus SER task of E → B, in which the optimal values of , , and for this task are , , and , respectively, and the parameter search set was [0.001:0.001:0.009, 0.01:0.01:0.09, 0.1:0.1:0.9, 1:1:9]. Figure 2a shows that WAR and UAR vary only gently as varies within [2, 9], indicating that JIASL is insensitive to in this optimal interval. Similarly, Figure 2b reveals that the proposed method is insensitive to in the interval [0.001, 0.4]. Moreover, Figure 2c shows that the recognition rate remains nearly flat over the whole search interval of . These results indicate that JIASL is insensitive to the three trade-off coefficients within these intervals.

Figure 2.

The parameter sensitivity analysis of three trade-off parameters (i.e., , , ) for our proposed JIASL method on the cross-corpus SER task of E → B.

In addition, the three parameters are insensitive in different parameter intervals. For instance, is insensitive for large values, whereas is insensitive for small values, and is insensitive over the entire search range. This reflects the different contributions of the regularization terms to the performance of JIASL.

4. Conclusions

We have proposed a novel transfer subspace learning method called joint distribution implicitly aligned subspace learning (JIASL) for cross-corpus SER. JIASL aims to learn an emotion-discriminative and corpus-invariant projection matrix to predict the emotion labels of target speech samples. To this end, we first build a sparse linear regression model guided by the labeled source speech samples to endow the projection matrix with emotion-discriminative ability. Then, a reconstruction regularization term, which implicitly bridges both the marginal and the emotion class-aware conditional feature distribution gaps between two speech corpora, is designed to enhance the corpus-invariant ability of the projection matrix. Finally, extensive cross-corpus SER experiments on EmoDB, eNTERFACE, and CASIA were conducted to evaluate JIASL, and the results demonstrate its effectiveness and superiority in coping with cross-corpus SER tasks.

Author Contributions

Conceptualization and methodology, C.L., Y.Z. (Yuan Zong), C.T. and H.L.; validation, H.C. and J.Z.; writing—original draft preparation, C.L. and Y.Z. (Yuan Zong); writing—review and editing, S.L. and Y.Z. (Yan Zhao); funding acquisition, C.L. and Y.Z. (Yuan Zong). All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the NSFC under the Grants U2003207 and 61902064, in part by the Jiangsu Frontier Technology Basic Research Project under the Grant BK20192004, in part by the Zhishan Young Scholarship of Southeast University, and in part by the Scientific Research Foundation of Graduate School of Southeast University YBPY1955.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| The features of labeled speech samples in the source domain | |

| The feature vector of ith speech sample in the source domain | |

| The features of unlabeled speech samples in the target domain | |

| The feature vector of jth speech sample in the target domain | |

| The emotion labels of speech samples in the source domain | |

| The emotion label of the ith speech sample | |

| The kth entry of one-hot vector | |

| The projection matrix | |

| The reconstruction coefficient matrix | |

| The Frobenius norm | |

| The norm | |

| The norm | |

| The number of source speech samples | |

| The number of target speech samples | |

| d | The dimension of the speech feature vector |

| c | The number of emotions involved in cross-corpus SER tasks |

References

- Schuller, B.; Batliner, A. Computational Paralinguistics: Emotion, Affect and Personality in Speech and Language Processing; John Wiley & Sons: Hoboken, NJ, USA, 2013.

- Busso, C.; Bulut, M.; Narayanan, S.; Gratch, J.; Marsella, S. Toward effective automatic recognition systems of emotion in speech. In Social Emotions in Nature and Artifact: Emotions in Human and Human-Computer Interaction; Gratch, J., Marsella, S., Eds.; Oxford University Press: New York, NY, USA, 2013; pp. 110–127.

- Schuller, B.W. Speech emotion recognition: Two decades in a nutshell, benchmarks, and ongoing trends. Commun. ACM 2018, 61, 90–99.

- Schuller, B.; Arsic, D.; Rigoll, G.; Wimmer, M.; Radig, B. Audiovisual behavior modeling by combined feature spaces. In Proceedings of the 2007 IEEE International Conference on Acoustics, Speech and Signal Processing-ICASSP’07, Honolulu, HI, USA, 15–20 April 2007; Volume 2, pp. 733–736.

- Schuller, B.; Vlasenko, B.; Eyben, F.; Rigoll, G.; Wendemuth, A. Acoustic emotion recognition: A benchmark comparison of performances. In Proceedings of the 2009 IEEE Workshop on Automatic Speech Recognition & Understanding, Merano, Italy, 13–17 December 2009; pp. 552–557.

- Akçay, M.B.; Oğuz, K. Speech emotion recognition: Emotional models, databases, features, preprocessing methods, supporting modalities, and classifiers. Speech Commun. 2020, 116, 56–76.

- Zhang, S.; Zhang, S.; Huang, T.; Gao, W. Speech emotion recognition using deep convolutional neural network and discriminant temporal pyramid matching. IEEE Trans. Multimed. 2017, 20, 1576–1590.

- Lu, C.; Zong, Y.; Zheng, W.; Li, Y.; Tang, C.; Schuller, B.W. Domain Invariant Feature Learning for Speaker-Independent Speech Emotion Recognition. IEEE/ACM Trans. Audio Speech Lang. Process. 2022, 30, 2217–2230.

- Song, P. Transfer Linear Subspace Learning for Cross-Corpus Speech Emotion Recognition. IEEE Trans. Affect. Comput. 2019, 10, 265–275.

- Schuller, B.; Vlasenko, B.; Eyben, F.; Wöllmer, M.; Stuhlsatz, A.; Wendemuth, A.; Rigoll, G. Cross-corpus acoustic emotion recognition: Variances and strategies. IEEE Trans. Affect. Comput. 2010, 1, 119–131.

- Pan, S.J.; Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2009, 22, 1345–1359.

- Zong, Y.; Zheng, W.; Zhang, T.; Huang, X. Cross-corpus speech emotion recognition based on domain-adaptive least-squares regression. IEEE Signal Process. Lett. 2016, 23, 585–589.

- Long, M.; Wang, J.; Sun, J.; Yu, P.S. Domain invariant transfer kernel learning. IEEE Trans. Knowl. Data Eng. 2014, 27, 1519–1532.

- Long, M.; Cao, Y.; Cao, Z.; Wang, J.; Jordan, M.I. Transferable representation learning with deep adaptation networks. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 3071–3085.

- Liu, N.; Zong, Y.; Zhang, B.; Liu, L.; Chen, J.; Zhao, G.; Zhu, J. Unsupervised cross-corpus speech emotion recognition using domain-adaptive subspace learning. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 5144–5148.

- Gretton, A.; Borgwardt, K.M.; Rasch, M.J.; Schölkopf, B.; Smola, A. A kernel two-sample test. J. Mach. Learn. Res. 2012, 13, 723–773.

- Borgwardt, K.M.; Gretton, A.; Rasch, M.J.; Kriegel, H.P.; Schölkopf, B.; Smola, A.J. Integrating structured biological data by kernel maximum mean discrepancy. Bioinformatics 2006, 22, e49–e57.

- Mao, Q.; Xue, W.; Rao, Q.; Zhang, F.; Zhan, Y. Domain adaptation for speech emotion recognition by sharing priors between related source and target classes. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 2608–2612.

- Deng, J.; Xu, X.; Zhang, Z.; Frühholz, S.; Schuller, B. Universum autoencoder-based domain adaptation for speech emotion recognition. IEEE Signal Process. Lett. 2017, 24, 500–504.

- Abdelwahab, M.; Busso, C. Domain adversarial for acoustic emotion recognition. IEEE/ACM Trans. Audio Speech Lang. Process. 2018, 26, 2423–2435.

- Gideon, J.; McInnis, M.G.; Provost, E.M. Improving cross-corpus speech emotion recognition with adversarial discriminative domain generalization (ADDoG). IEEE Trans. Affect. Comput. 2019, 12, 1055–1068.

- Parry, J.; Palaz, D.; Clarke, G.; Lecomte, P.; Mead, R.; Berger, M.; Hofer, G. Analysis of Deep Learning Architectures for Cross-Corpus Speech Emotion Recognition. In Proceedings of the Interspeech, Graz, Austria, 15–19 September 2019; pp. 1656–1660.

- Long, M.; Wang, J.; Ding, G.; Sun, J.; Yu, P.S. Transfer feature learning with joint distribution adaptation. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, NSW, Australia, 1–8 December 2013; pp. 2200–2207.

- Zhu, Y.; Zhuang, F.; Wang, J.; Ke, G.; Chen, J.; Bian, J.; Xiong, H.; He, Q. Deep subdomain adaptation network for image classification. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 1713–1722.

- Zhang, J.; Jiang, L.; Zong, Y.; Zheng, W.; Zhao, L. Cross-Corpus Speech Emotion Recognition Using Joint Distribution Adaptive Regression. In Proceedings of the ICASSP 2021–2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 3790–3794.

- Burkhardt, F.; Paeschke, A.; Rolfes, M.; Sendlmeier, W.F.; Weiss, B. A database of German emotional speech. In Proceedings of the Interspeech, Lisboa, Portugal, 4–8 September 2005; Volume 5, pp. 1517–1520.

- Martin, O.; Kotsia, I.; Macq, B.; Pitas, I. The eNTERFACE’05 audio-visual emotion database. In Proceedings of the 22nd International Conference on Data Engineering Workshops (ICDEW’06), Atlanta, GA, USA, 3–7 April 2006; p. 8.

- Tao, J.; Liu, F.; Zhang, M.; Jia, H. Design of speech corpus for mandarin text to speech. In Proceedings of the Blizzard Challenge 2008 Workshop, Brisbane, Australia, 1 February 2008; pp. 1–4.

- Kan, M.; Wu, J.; Shan, S.; Chen, X. Domain adaptation for face recognition: Targetize source domain bridged by common subspace. Int. J. Comput. Vis. 2014, 109, 94–109.

- Lin, Z.; Liu, R.; Su, Z. Linearized alternating direction method with adaptive penalty for low-rank representation. Adv. Neural Inf. Process. Syst. 2011, 24, 612–620.

- Zheng, W.; Xin, M.; Wang, X.; Wang, B. A novel speech emotion recognition method via incomplete sparse least square regression. IEEE Signal Process. Lett. 2014, 21, 569–572.

- Liu, J.; Ji, S.; Ye, J. SLEP: Sparse learning with efficient projections. Ariz. State Univ. 2009, 6, 7.

- Schuller, B.; Steidl, S.; Batliner, A. The INTERSPEECH 2009 emotion challenge. In Proceedings of the Interspeech 2009, 10th Annual Conference of the International Speech Communication Association, Brighton, UK, 6–10 September 2009; pp. 312–315.

- Schuller, B.; Steidl, S.; Batliner, A.; Burkhardt, F.; Devillers, L.; Müller, C.; Narayanan, S. The INTERSPEECH 2010 paralinguistic challenge. In Proceedings of the INTERSPEECH 2010, Makuhari, Japan, 26–30 September 2010; pp. 2794–2797.

- Eyben, F.; Wöllmer, M.; Schuller, B. openSMILE: The Munich versatile and fast open-source audio feature extractor. In Proceedings of the 18th ACM International Conference on Multimedia, Firenze, Italy, 25–29 October 2010; pp. 1459–1462.

- Pan, S.J.; Tsang, I.W.; Kwok, J.T.; Yang, Q. Domain adaptation via transfer component analysis. IEEE Trans. Neural Netw. 2010, 22, 199–210.

- Gong, B.; Shi, Y.; Sha, F.; Grauman, K. Geodesic flow kernel for unsupervised domain adaptation. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 2066–2073.

- Fernando, B.; Habrard, A.; Sebban, M.; Tuytelaars, T. Unsupervised visual domain adaptation using subspace alignment. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, NSW, Australia, 1–8 December 2013; pp. 2960–2967.

- Liu, S.; Sinha, R.S.; Hwang, S.H. Clustering-based noise elimination scheme for data pre-processing for deep learning classifier in fingerprint indoor positioning system. Sensors 2021, 21, 4349.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).