Abstract

In this paper we study deep neural network algorithms for solving linear and semilinear parabolic partial integro-differential equations with boundary conditions in high dimension. Our method can be considered as an extension of the deep splitting method for PDEs to equations with non-local terms. To show the viability of our approach, we discuss several case studies from insurance and finance.

1. Introduction

Many problems in insurance and finance lead to terminal or boundary value problems involving parabolic partial integro-differential equations (PIDEs). Examples include option pricing in models with jumps, the valuation of insurance contracts, optimal investment problems and many applications in credit risk. These PIDEs can be inear (such as PIDEs arising in risk-neutral pricing) or semilinear (such as the dynamic programming equation in many stochastic control problems). Practical applications often involve several underlying assets or economic factors, so one has to deal with PIDEs in a high-dimensional space. These PIDEs do typically not admit an analytic solution, making the design of suitable numerical methods an ongoing challenge. Existing numerical methods include deterministic schemes, in particular finite difference and finite element methods, see e.g., [1,2,3,4,5], and random schemes based on Monte Carlo methods, see e.g., [6,7,8]. However, finite difference and finite element methods cannot be used in the case of high-dimensional PIDEs, as they suffer from the curse of dimensionality. Monte Carlo methods, on the other hand, are suitable for problems in higher dimensions. However, these methods only provide a solution for a single fixed time-space point . This is problematic in risk management applications, where one needs to find the solution of a pricing problem for a large set D of future scenarios. The naive solution via nested Monte Carlo is in most cases computationally infeasible. Regression-based Monte Carlo methods (see [7]) sometimes help, but the choice of proper basis functions remains a delicate issue. Moreover, it is not straightforward to apply Monte Carlo techniques to semilinear parabolic equations.

For these reasons, many recent contributions study machine learning techniques for the numerical solution of PDEs. A large strand of this iterature is based on the representation of semilinear parabolic PDEs via backward stochastic differential equations (BSDEs). In the seminal papers [9,10], a BSDE is discretized using a time grid , and the solution at the initial date and its gradient at every time step are approximated by a combined deep neural network. The parameters are trained by minimizing the difference between the network approximation and the known terminal value of the solution. An error estimation of this method is given in [11,12] considers an extension to elliptic semilinear PDEs and applications to insurance mathematics. Other contributions use a backward induction over the time steps . To begin with, [13] estimates the solution and its gradient simultaneously by backward induction via sequential minimization of suitable loss functions; moreover, the paper contains convergence results. The paper of [14] uses a different discretization method, called deep splitting. In this method, one computes the unknown gradient of the solution by automatic differentiation, which reduces the size of the networks. For inear PDEs, the method boils down to the simpler global regression approach of [15], where Feynman-Kac representation and the -minimality of conditional expectations is used to characterize the solution through an infinite-dimensional stochastic minimization problem, which is then solved with machine learning. Ref. [16] combines the ideas of [13,14] to introduce a neural network scheme for fully nonlinear PDEs. Finally, [17] extends the method in [13] and the paper provides a convergence analysis that can be adapted to show convergence of the deep splitting method of [14].

Applications of deep learning methods to partial integro differential equations with non-local terms, on the other hand, are scarce. The paper [18] presents an extension of the method of [13] to PIDEs and generalizes the convergence results. Numerical case studies are, however, not provided. In fact, from a numerical viewpoint, the method of [18] is quite involved since one needs to approximate the solution, the gradient and the non-local term in the PIDE via three separate networks. The work of [19] is based on the deep Galerkin method of [20]. This is an alternative machine learning approach for PDEs, where the network is trained by directly minimizing the deviations of the fitted functions from the desired differential operator and boundary conditions. The very recent contribution [21] is discussed in Section 5.

In this paper, we consider deep neural network (DNN) algorithms for inear and semilinear parabolic PIDEs that generalize the regression approach of [15] and the deep splitting method of [14], respectively. In the semilinear case, we linearize the equation ocally in time using a time grid , , and we perform a backward induction over the grid points using the DNN algorithm for the inear case in each step. In particular, the gradient and the non-local term entering the equation at are computed using the approximate solution at time . The advantage of this approach, as opposed to the method of [18], is that we approximate only the solution by a DNN so that it suffices to train a single network per time step. In the semilinear case, we propose an alternative linearization procedure to [14], and we show in numerical experiments that this procedure performs substantially better than the original deep splitting method of [14]. Moreover, we apply our DNN algorithms also to boundary value problems, while [14,15] consider only pure Cauchy problems.

The focus of this paper is on the application of our methodology to problems in insurance and finance, a formal convergence analysis is given in the companion paper [22]. To assess the performance of our methodology, we carry out two practically relevant case studies involving multi-dimensional PIDEs arising in actuarial and financial mathematics. In the first case study, we study so-called reinsurance counterparty credit risk (RCCR), which is the risk of a loss that occurs if a reinsurance company fails to meet her contractual obligations towards the ceding insurer. We consider a reinsurance contract of stop-loss type in a framework where claims arrive with stochastic intensity. The associated RCCR can be quantified with the credit value adjustment (CVA), which is defined as the expected discounted value of the replacement cost for the contract. The computation of the CVA by Monte Carlo leads to a nested Monte Carlo problem. We compute the value of the reinsurance contract and the CVA with our deep learning method and asses the performance by comparing the solution to the results of an extensive Monte Carlo simulation. The second case study is an optimization problem in finance. We consider a bank who dynamically optimizes her credit portfolio in the presence of transaction costs and risk capital constraints. In the absence of capital constraints, the problem admits an analytic solution and is, therefore, a useful test case. With capital constraints, the model leads to a semilinear boundary value problem with no explicit solution, so we study this case numerically. Our numerical experiments show that in all test cases the performance of the proposed approximation algorithms is quite satisfactory in terms of accuracy and speed.

The paper is organized as follows. Section 2 introduces the general setting and characterizes the types of inear and semilinear PIDEs considered. In Section 3 the method for inear PIDEs is described, and Section 4 presents the related case study on an application for reinsurance counterparty credit risk. Section 5 is devoted to the theory of the deep splitting method for semilinear PIDEs and Section 6 continues with the related case study on optimal credit portfolios with and without capital restrictions. Section 7 finally concludes.

2. Modelling Framework

We fix a probability space , a time horizon T and a right continuous filtration . Consider measurable functions , and , where is a separable measurable space. We assume that supports a d-dimensional Brownian motion W and a Poisson random measure J on . The compensator of J is given by for a sigma-finite measure on E. We consider a d-dimensional process X that is the unique strong solution of the SDE

Define the set and note that X jumps at t whenever . We assume that

This condition ensures that X has a.s. only finitely many jumps on , so that every integral with respect to J is well-defined. The restriction to finite-activity jump processes simplifies the exposition and it is sufficient for most applications in insurance. Conditions on the coefficients , and ensuring that the SDE (1) has a unique strong solution are given, for instance, in [23] or [24].

By , we denote the functions that are k times continuously differentiable on the set , and by , we denote functions that are once continuously differentiable in t and k-times continuously differentiable in x on the set . For every function , we write for the first derivatives of h with respect to , , , for second derivatives and for the first derivative with respect to time.

Define the matrix , by

and consider for the integro-differential operator given by

The operator is the generator of X, and X is a solution of the martingale problem for , see e.g., [25] for details.

Consider functions , and , and et D be an open subset of . In Section 3 we are interested in the following boundary value problem

The special case corresponds to a pure Cauchy problem without boundary conditions; in that case we use the simpler notation to denote the terminal condition.

It follows from the Feynman-Kac formula that under some integrability conditions a classical solution u of (4) has the probabilistic representation

where . Conversely, [26,27] provide conditions ensuring that the function u defined in (6) is a classical solution of the problem (4) (for the case of a pure Cauchy problem).

In Section 3 we propose a deep neural network (DNN) algorithm to approximate the function u defined in (6). In Section 5 we are interested in semilinear problems of the form

where is a nonlinear function such as the Hamiltonian in a typical Hamilton Jacobi Bellman equation. To handle this case, we partition the interval into time points , consider a linearized version of (7) for each subinterval , and apply the DNN algorithm for the inear case recursively.

3. Deep Neural Network Approximation for Linear PIDEs

In this section we consider inear PIDEs. We extend the regression-based algorithm of [15] to PIDEs and we include boundary conditions into the analysis.

3.1. Representation as Solution of a Minimization Problem

Fix some time point and a closed and bounded set . Define the function by the Feynman-Kac representation (6). We want to compute an approximation to the function on the set A. The key idea is to write this function as a solution of a minimization problem in an infinite dimensional space. This representation is used to construct a loss function for our deep neural network method.

Consider some random variable whose distribution is absolutely continuous with respect to the Lebesgue measure such that the corresponding density has support A (in applications the distribution of is often the uniform distribution on A). Denote by the solution of the SDE (1) with initial value . Define the random variable

Assume that and that the function belongs to . Since is a Markov process, it holds that , where is the sigma-field generated by . As is square integrable, we get from the -minimality of conditional expectations that

Since and since the density of is strictly positive on A we conclude that is the unique solution of the minimization problem

The problem (11) can be solved with deep learning methods, as we explain next.

3.2. The Algorithm

The first step in solving (11) with machine learning techniques is to simulate trajectories of up to the stopping time . The simplest method is the Euler-Maruyama scheme. Here, we choose a time discretization , , generate K simulations of the random variable and simulate K paths of up to the stopping time by the following recursive algorithm. We et , and for ,

Note that the integrand in the integral with respect to is evaluated at , so this integral corresponds to the increment of a standard compound Poisson process. Using these simulations we compute for each path

where the integrals on the right can be approximated by Riemann sums.

In the next step, we approximate from (6) by a deep neural network . We determine the network parameters (training of the network) by minimizing the loss function

where denotes the set of admissible network parameters. For this we rely on stochastic gradient-descent methods; algorithmic details are given in the next section. This approach can be considered as a regression-based scheme since one attempts to minimize the squared error between the DNN approximation and the given terminal and boundary values of the PIDE. Note that derivatives of u do not enter the algorithm, so that the method applies even in cases where u is only a continuous viscosity solution of the PIDE (4).

Remark 1

(Convergence result for the inear case). In the companion paper [22] we provide a convergence result for the case of pure Cauchy problems. Under Lipschitz assumptions on the coefficients of and on the functions c and g we show that

where measures the approximation quality of the set of network functions (loosely speaking, describes how well the true solution can be approximated by functions from ). Relation (14) shows that the approximation error of the method can be made small by considering a fine time grid (small ) and a class of network functions with good approximation properties (small ).

4. Case Study for the Linear Case: Reinsurance Counterparty Credit Risk

In this section we test the performance of the proposed DNN algorithm for inear PIDEs. Our case study is concerned with reinsurance counterparty credit risk (RCCR), which is the risk that a reinsurance company R might default and is thus unable to fulfill her contractual obligations towards the ceding insurer I. Using market-consistent valuation this risk is described in terms of the credit value adjustment (CVA), which gives the expected discounted value of the replacement cost for the contract incurred by I at the default time of R. We work in the model of [28], where RCCR is studied using Monte Carlo methods. The framework of [28] includes several sources of dependence between the market value of the reinsurance contract and the default event of the reinsurance company. In this way it is possible to account for practically relevant contagion effects that are often subsumed under the abel “wrong way risk”.

Our case study consists of two parts: first, we compute the value of a reinsurance contract with maturity T, where the underlying claims process L follows a doubly stochastic marked point process. Second, we compute the CVA to measure the RCCR arising from the reinsurance contract. This leads to a nested Monte Carlo problem, as one needs to compute the value of the reinsurance contract for all possible values of for every . The Monte Carlo approach used in [28] therefore has a high computational cost, whereas our DNN algorithm is very well suited for this problem. Details on the numerical implementation and the computational equipment are given in Remark 2.

4.1. Valuation of an Insurance Contract with Doubly Stochastic Poisson Arrivals

Consider a reinsurance contract with maturity on an insurance portfolio with cumulative loss process . To describe the dynamics of L, we introduce a sequence of claim arrival times with nonnegative intensity process and a sequence of claim sizes that are iid strictly positive random variables independent of the counting process with . The cumulative loss process is given by , . We assume that the are Gamma(,) distributed with density . This is a common choice in insurance. The claim-arrival intensity process satisfies the SDE

where is a standard Brownian motion. Define the process X by , . We assume that the reinsurance contract is a stop-loss contract, i.e., the indemnity payment is of the form with

We use a market consistent valuation approach and define the market value u at time of the reinsurance contract by

Here, expectations are taken under a pricing measure and the risk premium charged by the reinsurer is accounted for by a proper choice of that measure, see [28] for details. In [28] it is shown that u is the unique solution of the PIDE with terminal condition and generator

for , . There is no explicit solution for this PIDE, and we approximate on the set with a deep neural network . Table 1 contains the parameters we use. Paths of the processes L and are simulated with the Euler-Maruyama scheme on a equidistant time grid with and .

Table 1.

Parameters used for the valuation of the stop-loss contract. The claim sizes are Gamma distributed with density function .

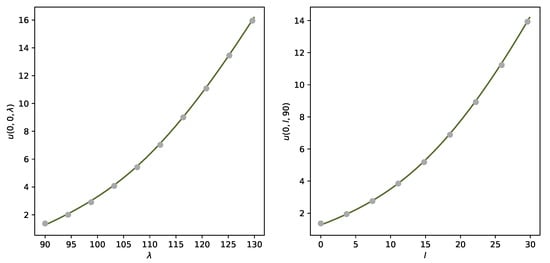

Figure 1 shows the approximate solution obtained by the DNN algorithm; details on the training procedure are given in Remark 2.

Figure 1.

Solution for , (left) and , (right) computed with the DNN-algorithm (green ine) and reference points computed with Monte Carlo (grey dots).

As a reference for the whole set A, we compute for fixed approximate values with Monte Carlo using simulated paths for each point (paths are simulated with the Euler-Maruyama scheme). The relative -error between the DNN approximation and the MC-solution is defined as

Using 1000 simulations of , we obtained a relative error of

The computation of via the training of a DNN took around 90 s, whereas the computation of with Monte Carlo for a fixed point took around 4.5 s. This shows that the DNN approach is faster than the MC approach if one wants to compute for a grid with more than 20 grid points. Details on the numeric implementation and computational equipment are given in Remark 2.

We continue with a more detailed performance analysis for the fixed space point . As a reference we approximate with Monte Carlo using simulations. The computation of a single point takes around 2 min, upper and lower bounds for the reference value are (), where is the standard deviation of the Monte Carlo simulation. In Table 2 we approximately calculate the mean, the standard deviation, the average relative approximation error (with the Monte Carlo approximation as reference) and the average runtime of for different numbers of epochs based on 10 independent realizations (10 independent trainings of ).

Table 2.

Average value, standard deviation, average relative error of and average run time for different number of epochs.

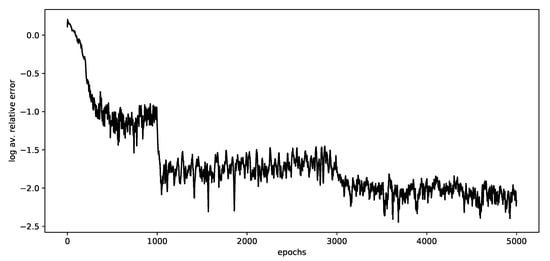

In Figure 2 we illustrate Log-average relative error of relative to the reference value 6.2825, based on 10 independent realizations. We observe that the relative -error decreases with increasing epochs.

Figure 2.

Log-average relative error of relative to the reference value 6.2825, based on 10 independent realizations.

4.2. Computing the CVA for the Reinsurance Contract

The reinsurance company R may default and we denote by the stopping time representing her default time. If , R will not be able to fulfill her obligations which creates reinsurance counterparty credit risk. The default indicator process associated with is given by

We assume that admits a stochastic intensity (in the sequel called the default intensity of R), with the property that is a martingale. We assume that follows the SDE

where is a standard Brownian motion independent of .

We define the credit value adjustment (CVA) for the reinsurance contract as the market consistent value of the future credit loss, that is,

for and u the value of the insurance contract given in (17). The amount can be viewed as a risk reserve that the insurance company has to set aside at time t to cover for losses due to reinsurance counterparty risk.

Our framework accounts for two forms of dependence between the default of R and the market value of the insurance contract. First, there is correlation between default intensity and claim-arrival intensity , modelled by the parameter , which means that many losses (high claim arrival intensity ) lead to a ess favourable economic outlook for the reinsurance company. Second, there is pricing contagion: at the default time the risk-neutral claim arrival intensity jumps upward in (24), which corresponds to an increase of the market value of the reinsurance contract as the claim arrival intensity becomes larger. This models the phenomenon that the default of R will reduce the supply for reinsurance so that the market price of reinsurance goes up. (This is a pure pricing phenomenon; in particular we do not assume that the claim arrival intensity under the real-world measure jumps upward at ).

In [28] it is shown that the value of the CVA is equal to

Moreover, the following PIDE characterization of the CVA is given: It holds that

where is the unique classical solution of the PIDE

for all with terminal condition . The operator is given by

where f is always evaluated at .

We approximate on the set with a deep neural network . We use and ; the other parameters are as in Section 4.1. Paths of the processes L, , are simulated with the Euler-Maruyama scheme on the time grid with and . For each path, we approximate the integral term in (24) with the trapezoidal rule using the endpoints of time grid . The contract value is replaced by its neural network approximation on the set , which we compute as described in Section 4.1 for all .

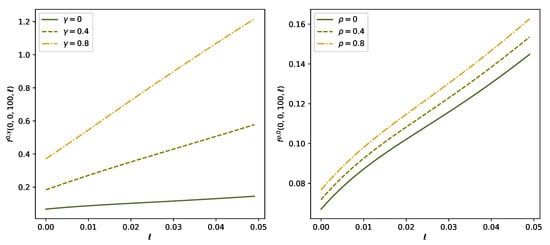

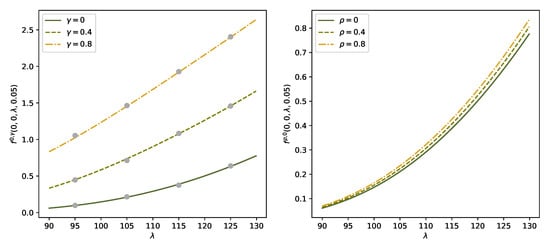

In this case study, we are particularly interested in the impact of the correlation parameter and of the pricing contagion parameter on the CVA. We therefore construct a neural network with additional input variables and . During the training procedure we use different values of to simulate the paths of and different values of to compute the integral term in (24). In the sequel we write to stress the dependence of f on and . Figure 3 shows for varying default-intensity ℓ and Figure 4 for varying in the left panel and varying in the right panel. Moreover, all figures compare the solution for different values of and . We see that increases in both and , but the effect of price contagion (i.e., variation in ) is quite pronounced and dominates the effect of dependence between intensities (i.e., variation in ).

Figure 3.

Solution for , , varying and different levels of and with computed with the DNN-algorithm. We have (left) resp. (right).

Figure 4.

Solution for , , varying and different levels of (left) and varying for different levels of (right) computed with the DNN-algorithm.

This part of the case study highlights the computational advantages of the DNN approach compared with nested Monte Carlo methods. Figure 5 shows for varying claim-arrival intensity together with reference solution points computed with classical Monte Carlo simulations. In this nested Monte Carlo problem, we use 3000 simulations to approximate the inner function u and 2000 path simulations for the outer expectation. The integral term is approximated following the trapezoidal rule on the time grid . Computation of only one point takes more than 5 min (despite using only 3000 resp. 2000 simulations). On the other hand training the network approximations of for all takes around 20 min and training of the network approximation for takes ess than 4 min, i.e., it takes only around 24 min to train the network for the whole space domain B and for all values of . This should be contrasted with a computation time of more than 60 min to compute the CVA for only 12 space points (numerical details on the training procedure are given in Remark 2). Due to the computational infeasibility of nested Monte Carlo solutions, we omit a similar error analysis as in (19).

Figure 5.

Solution for , varying , different levels of resp. and resp. (left resp. right) computed with the DNN-algorithm. The reference points are computed with Monte Carlo (left, grey dots).

In [28] we moreover addressed the hedging of RCCR by dynamic trading in a credit default swap (CDS) on the reinsurance company using a quadratic hedging approach. The computation of the mean-variance minimizing hedging strategies (Theorem 4.3 [28]) involves computing various derivatives of the functions f and g, where g is the present value of future payments of the CDS. The DNN algorithm is particularly useful for this: we can approximate f and g for with neural networks on the whole space domain and compute their derivatives via automatic differentiation. In [28] the derivatives of f and g were computed via classical Monte Carlo, which fairly unstable and time-consuming.

Remark 2

(Details regarding the numerical implementation). Our choice of the network architecture largely follows [15]. Throughout, we use DNNs with 2 hidden ayers and 20 nodes for the hidden ayers. For a payoff of the form (16), it is advantageous to use softplus as activation function for the hidden ayers and the identity for the output ayer. The networks are initialized with random numbers using the Xavier initialization. We use mini-batch optimization with mini-batch size , batch normalization and 5000 epochs for training. We minimize the loss function with the Adam optimizer and decrease learning rate from 0.1 to 0.01 and 0.001 after a 1000 and 3000 epochs. The neural networks used are standard, hence, training induces a cost that is inear in M and e, i.e., , where M is the batch size and e the number of epochs, see also Table 2 in the column runtime. Each of the numerical experiments presented in this paper are performed in Python using Tensorflow computations on a Lenovo Thinkpad notebook with an Intel Core i5 processor (1.7 GHz) and 16 GB memory. Note that one can gain a performance boost by using GPU computing, e.g., with Cuda from Nvidia, but to emphasize the practicality of the algorithm we run our computations with a standard CPU.

Remark 3

(Further examples for the inear case). Further examples for the inear case can be found in our companion papers [22,29]. In [22] we consider an example from [30], who study the pricing of basket options in jump diffusion models. In [29] we compute the ruin probability for an insurance company with three different business ines, which leads to a boundary value problem. In both cases the performance of the deep learning method is assessed by comparing the solution to the results of an extensive Monte Carlo simulation. The DNN algorithm performed well in all cases considered.

5. Deep Learning Approximation for Semilinear PIDEs

Next we consider semilinear PIDEs of the form

Here, is a nonlinear function and the integro-differential operator is given in (3). Our goal is to extend the deep splitting approximation method developed by [14] for semilinear PDEs without boundary conditions to the more general PIDE (28). Moreover, we propose an approach for improving the performance of the method. In order to derive the method with a minimal amount of technicalities, we assume that a classical solution of this PIDE exists. Note however, that the deep splitting approximation applies also in cases where the PIDE (28) admits only a continuous viscosity solution. In that case one has to consider the forward backward stochastic differential equation associated with (28), see see [22] or [17]. The very recent contribution [21] considers an extension of the deep splitting to PIDEs of the form (28) with Neumann boundary conditions, but assumes that f is independent of the gradient of u.

5.1. Basic Method

We divide the interval into subintervals using a time grid and we et . Suppose that is the unique solution of the PIDE (28). Define for a fixed the function

Then also solves the inear PIDE

Applying the Feynman-Kac formula to the inear PIDE (29), we get the following representation for

Here, solves the SDE (1) with initial condition , that is,

and the stopping time is given by . In the deep splitting method proposed in [14] the integral term in (30) is approximated by the endpoint rule

An approximate solution , is then found by backward induction over the grid points. The solution at the maturity is defined using the terminal condition, that is, Given , we then compute , as follows. First, we put

Then, we compute by applying the regression-based DNN algorithm method from Section 3. In this step, it is important to work with DNNs with a smooth activation function so that is well-defined (If is not one has to define as DNN approximation to the terminal condition; see for instance [17]).

Remark 4.

An additional complication may arise if the domain D is unbounded. In that case, we compute the approximate solution on some bounded set . We have to make sure that the set is big enough so that the probability of generating paths with but is sufficiently small. Then, these paths can be ignored without affecting the training procedure. There are various ways to achieve this, see [14] for details.

5.2. Alternative Method

Next, we discuss an alternative linearization procedure based on the midpoint rule

Here, is the midpoint of the interval . It is well known that (34) usually provides a better approximation to an integral than the endpoint rule (32). We therefore propose the following approximation scheme based on the midpoint rule. In a first step, we apply the approximation (33) over the smaller interval ; this yields an approximate solution at . Next, we define

as before, a numerical approximation to is computed via the deep learning algorithm from Section 3. Our numerical experiments in Section 6 show that the approximation based on the midpoint rule performs significantly better than the original deep splitting method from [14].

Remark 5

(Convergence results for the semilinear case). The companion paper [22] provides a convergence result for semilinear Cauchy problems of the form (28) as well. The approximation error is divided into three components: the Euler approximation error from the time discretization (which is of order ), an error due to the linearization of the equation and finally an approximation error in the class of neural networks considered. A precise formulation is more involved than in the inear case and we refer to [22] for details.

6. Case Study for the Semilinear Case: Optimal Credit Portfolios with Risk Capital Constraints

As a case study for the semilinear case, we consider the problem of optimizing the size of the credit portfolio of a financial institution under transaction costs and risk capital constraints. Without capital constraints, the problem admits an analytic solution and is therefore a useful test case. With capital constraints, the model leads to a semilinear boundary value problem for which there is no explicit solution, and we study this case numerically using the deep splitting method. In fact, the analysis of the impact of risk capital constraints on optimal credit portfolios is also interesting in its own right.

We consider a stylized model of a financial institution who invests in a credit portfolio with market value at time t and in cash. We assume that the process S has dynamics

Here, the Brownian motion W models the compensated small credit losses, the compound Poisson process R with intensity and jump size distribution models the large credit losses, and is the expected return earned by the portfolio. We assume that the credit portfolio can be adjusted only gradually over time. In mathematical terms, this means that the financial institution uses an absolutely continuous trading strategy with trading rate , so that for a given strategy , the size of the portfolio satisfies . Moreover, the financial institution incurs a transaction cost, that is proportional to the trading rate . This models the fact that a rapid adjustment of the portfolio is expensive, as the institution needs to carry out costly marketing activities or enter into expensive securitization activities. Given a parameter modelling the size of the transaction cost, the cash position has dynamics

Here, the term gives the revenue from trading the credit portfolio and the term models the reduction of the cash position due to transaction costs. From a mathematical viewpoint, this model is fairly similar to models used in the iterature on optimal portfolio execution such as [31] or [32].

Denote by the institution’s equity. We assume that the institution wants to maximize the expected value of her equity position at some horizon date time T, and that she incurs a liquidation cost of size for some parameter . Note that for a given strategy the process has dynamics

Hence, the pair is Markov and we may use this process as state process. We define the value function of the optimization problem for as

where denotes the set of all adapted trading rates such that .

To prevent early ruin, financial regulators usually impose risk capital constraints. In particular, they may liquidate a financial institution if the equity is too low compared to the size or riskiness of the institution’s credit portfolio. Below, we use the deep splitting method to study how capital constraints affects the value function and the optimal strategy of the financial institution. First, we consider the unconstrained problem for which there is an explicit solution of the HJB equation (PIDE (40) below), which can be used to test the performance of the deep splitting method. Details on the numerical implementation and the computational equipment are given in Remark 6.

6.1. The Case without Constraints

By standard arguments, the HJB equation associated with the problem (37) is

with terminal condition . Maximization gives the candidate optimal trading rate

substitution into (38) yields the semilinear PIDE

To solve the case without constraints, we make the Ansatz , that is, we assume that u is inear in the equity value e. This implies

If we define , (40) reduces to the following first order PDE for v

with terminal condition . To find an explicit solution for v, we follow [31] and make the Ansatz Substitution in the HJB Equation (41) gives the following ODE system for

with terminal condition and . The ODEs can be solved explicitly: one has

For comparison purposes we computed the solution of (40) with the deep splitting algorithm on the set A:=. For this we partition the time horizon into intervals and simulate the auxiliary process with and

where is uniformly distributed on , W is a Brownian motion and J is a jump measure with compensating measure for a Gamma distribution with parameters and . Moreover, we identify the nonlinear term in the PIDE as

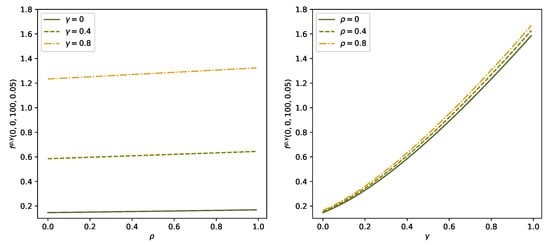

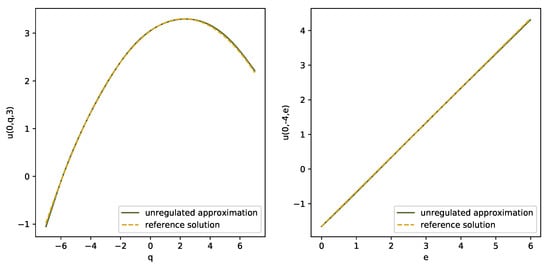

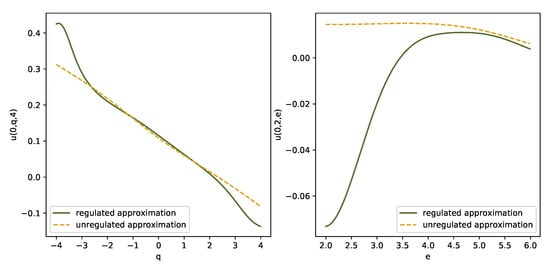

We used the midpoint rule (34) to linearize the PIDE. The network architecture was as in Remark 6. The training for the whole network consisting of 20 subnetworks (a DNN for every grid point , and a DNN for every midpoint ) took around 4400 s. Table 3 contains the parameters used in the experiment. Figure 6 shows the approximate solution obtained by the deep splitting algorithm (solid ine) and the analytic reference solution (dashed ine) for varying in the left panel and varying in the right panel.

Table 3.

Parameters for the credit optimization problem.

Figure 6.

Solution of the unconstrained credit optimization problem for , (left) and , (right) with the DNN-algorithm (green) and reference solution (orange).

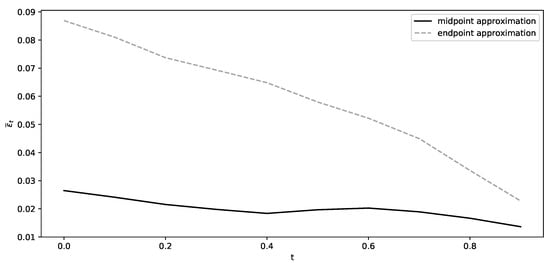

As the solution u can be zero, we report the absolute -error between the DNN approximation and the analytic solution, that is, To compute the absolute error, we used 10,000 simulations of where and . Figure 7 illustrates the mean of 10 different DNN approximations for each time point , using once the midpoint rule (solid ine) and once the original method from [14] (dashed ine). We see that the algorithm based on the midpoint rule performs substantially better than the original deep splitting method.

Figure 7.

Comparison of the two different training methods illustrating the averaged absolute -error using endpoint (grey) and midpoint (black) approximation in 10 training procedures.

6.2. The Optimization Problem with Constraints

Next, we discuss the optimization problem under risk capital constraints. We expect that risk capital constraints will alter the investment decisions of the financial institution since she wants to avoid liquidation of the company. To set up the corresponding optimization problem, we need to specify the set D of acceptable positions of the financial institution and the liquidation value given that her position exits from D. For numerical reasons, it is convenient to model D as a bounded set. Given large constants and and a parameter , we define the set D of acceptable positions as

The constraint implies that before liquidation the solvency ratio of the institution is bounded by , so that can be viewed as a measure of the regulator’s risk tolerance. We assume that the liquidation value of the portfolio on the boundary of D is given by the value of the unconstrained problem, reduced by a penalty , and we set

Here, is a smooth function that penalizes exit from the set of acceptable positions. We assume that (no penalization for high equity values) and that (no penalization at maturity). In our numerical experiments, we take , , and ; the other parameters are identical to the unconstrained case, see Table 3.

The value function for the problem with risk capital constraints is

where . The PIDE associated with this control problem and the form of the optimal strategy are the same as in the unregulated case (see (38) and (39)), but now we have in addition the boundary condition

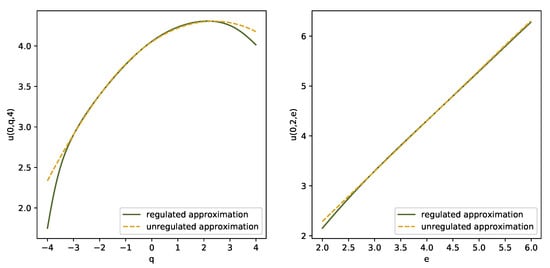

Due to the boundary condition, the Ansatz does not hold and we have to compute via the deep splitting algorithm. In view of its superior performance in the unconstrained case, we worked with the midpoint procedure, and we used the same network architecture as in the unconstrained case. We worked on the set . Figure 8 illustrates the DNN-approximation for (solid ine) and (dashed ine) on the sections (left panel) and (right panel). The right plot shows that with risk capital constraints the value function is concave in e and for significantly lower than (for the point belongs to the lower bound of D).

Figure 8.

DNN-approximations of (green) and (orange) on the section (left) and on (right).

The optimal trading rate is plotted in Figure 9. The plots show that the optimal strategy for the regulated case (solid ine) differs from the optimal strategy in the unregulated case (dashed ine), as the financial institution wants to reduce the size of her risky position in order to avoid costly liquidation, in particular when her position is close to the liquidation boundary. For instance, in the right plot the optimal trading rate for is negative for e close to 2.

Figure 9.

DNN-approximations of the optimal strategy for the regulated case (green) and the unregulated case (orange) on (left) and on (right).

Remark 6

(Details on the numerical implementation). We used a similar network architecture as in [14]. We worked with deep neural networks with 2 hidden ayers consisting of 100 nodes each. All neural networks are initialized with random numbers using the Xavier initialization. We use mini-batch optimization with mini-batch size , batch normalization and 12,000 training epochs. The loss function is minimized with the Adam optimizer and decreasing learning rate from 0.1 to 0.01, 0.001, 0.0001 and 0.00001 after 2000, 4000, 6000 and 8000 epochs. We use sigmoid as activation function for the hidden ayers and theidentity for the output ayer. The neural networks used are standard, and, hence, training induces a cost that is inear in M, e and N, i.e., , where M is the batch size, e the number of epochs and N is the size of the time discretization. All computations are performed in Python using Tensorflow computations on a Lenovo Thinkpad notebook with an Intel Core i5 processor (1.7 GHz) and 16 GB memory.

Remark 7

(Further examples for the semilinear case). For an additional application of our DNN-algorithm to a semilinear PIDE, we refer to the companion paper [22] where the semilinear stochastic inear regulator problem is studied. It is well known that there exists an analytical solution (e.g., see [33]), that can be used to verify the accuracy of the numerical method. However, there are not many (non-trivial) semilinear problems of type (7) with an explicit solution, and the majority of standard numerical methods is quite involved, so that it is not straightforward to come up with further test cases.

7. Conclusions

In this paper we studied an extension of the regression-based deep neural network algorithms of [14,15,17] to PIDE problems from insurance and finance. We illustrated the performance of our methodology, carrying out extensive tests for several multi-dimensional PIDEs arising in typical financial or actuarial pricing and control problems. In all test cases, the performance of the proposed approximation algorithms was satisfying in terms of accuracy and speed. Our results suggest that deep neural network algorithms might become a useful enhancement of the toolbox for solving many valuation and control problems in insurance and finance.

We continue with some ideas for further research. A possible alternative to the methodology proposed in this paper is to extend some of the deep backward dynamic programming schemes discussed in [17] to the PIDE case, similarly as in [18]. However, instead of approximating the increment by a third (large) network, one might use the solution at time as this is ess involved numerically. Moreover, it might be interesting to extend the method to the more general class of fully nonlinear PIDEs.

Author Contributions

Conceptualization and Writing, R.F. and V.K.; Numerical analysis and Coding V.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

We are grateful to Michaela Szoelgyenyi and Christa Cuchiero for useful remarks and suggestions.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| PIDE | Partial integro differential equation |

| PDE | Partial differential equation |

| BSDE | Backward stochastic differential equation |

| FBSDEJ | Forward backward stochastic differential equation with jumps |

| DNN | Deep neural network |

| MC | Monte Carlo |

| RCCR | Reinsurance counterparty credit risk |

| CVA | Credit value adjustment |

References

- Cont, R.; Voltchkova, E. A finite difference scheme for option pricing in jump diffusion and exponential Lévy models. SIAM J. Numer. Anal. 2005, 43, 1596–1626. [Google Scholar] [CrossRef]

- Andersen, L.; Andreasen, J. Jump-diffusion processes: Volatility smile fitting and numerical methods for option pricing. Rev. Deriv. Res. 2000, 4, 231–262. [Google Scholar] [CrossRef]

- Matache, A.M.; Von Petersdorff, T.; Schwab, C. Fast deterministic pricing of options on Lévy driven assets. ESAIM Math. Model. Numer. Anal. 2004, 38, 37–71. [Google Scholar] [CrossRef]

- Kwon, Y.; Lee, Y. A second-order finite difference method for option pricing under jump-diffusion models. SIAM J. Numer. Anal. 2011, 49, 2598–2617. [Google Scholar] [CrossRef]

- Briani, M.; Natalini, R.; Russo, G. Implicit–explicit numerical schemes for jump–diffusion processes. Calcolo 2007, 44, 33–57. [Google Scholar] [CrossRef]

- Giles, M.B. Multilevel Monte Carlo path simulation. Oper. Res. 2008, 56, 607–617. [Google Scholar] [CrossRef]

- Glasserman, P. Monte Carlo Methods in Financial Engineering; Springer: New York, NY, USA, 2003. [Google Scholar]

- Metwally, S.A.; Atiya, A.F. Using Brownian bridge for fast simulation of jump-diffusion processes and barrier options. J. Deriv. 2002, 10, 43–54. [Google Scholar] [CrossRef][Green Version]

- Han, J.; Jentzen, A.; Weinan, E. Solving high-dimensional partial differential equations using deep learning. Proc. Natl. Acad. Sci. USA 2018, 115, 8505–8510. [Google Scholar] [CrossRef]

- Han, J.; Jentzen, A. Deep learning-based numerical methods for high-dimensional parabolic partial differential equations and backward stochastic differential equations. Commun. Math. Stat. 2017, 5, 349–380. [Google Scholar] [CrossRef]

- Han, J.; Long, J. Convergence of the deep BSDE method for coupled FBSDEs. Probab. Uncertain. Quant. Risk 2020, 5, 5. [Google Scholar] [CrossRef]

- Kremsner, S.; Steinicke, A.; Szölgyenyi, M. A deep neural network algorithm for semilinear elliptic PDEs with applications in insurance mathematics. Risks 2020, 8, 136. [Google Scholar] [CrossRef]

- Huré, C.; Pham, H.; Warin, X. Deep backward schemes for high-dimensional nonlinear PDEs. Math. Comput. 2020, 89, 1547–1579. [Google Scholar] [CrossRef]

- Beck, C.; Becker, S.; Cheridito, P.; Jentzen, A.; Neufeld, A. Deep splitting method for parabolic PDEs. SIAM J. Sci. Comput. 2021, 43, A3135–A3154. [Google Scholar] [CrossRef]

- Beck, C.; Becker, S.; Grohs, P.; Jaafari, N.; Jentzen, A. Solving the Kolmogorov PDE by means of deep learning. J. Sci. Comput. 2021, 88, 73. [Google Scholar] [CrossRef]

- Pham, H.; Warin, X.; Germain, M. Neural networks-based backward scheme for fully nonlinear PDEs. SN Partial. Differ. Equ. Appl. 2021, 2, 16. [Google Scholar] [CrossRef]

- Germain, M.; Pham, H.; Warin, X. Approximation error analysis of some deep backward schemes for nonlinear PDEs. SIAM J. Sci. Comput. 2022, 44, A28–A56. [Google Scholar] [CrossRef]

- Castro, J. Deep Learning Schemes For Parabolic Nonlocal Integro-Differential Equations. arXiv 2021, arXiv:2103.15008. [Google Scholar] [CrossRef]

- Al-Aradi, A.; Correia, A.; Naiff, D.d.F.; Jardim, G.; Saporito, Y. Applications of the Deep Galerkin Method to Solving Partial Integro-Differential and Hamilton-Jacobi-Bellman Equations. arXiv 2019, arXiv:1912.01455. [Google Scholar]

- Sirignano, J.; Spiliopoulos, K. DGM: A deep learning algorithm for solving partial differential equations. J. Comput. Phys. 2018, 375, 1339–1364. [Google Scholar] [CrossRef]

- Boussange, V.; Becker, S.; Jentzen, A.; Kuckuck, B.; Pellissier, L. Deep learning approximations for non-local nonlinear PDEs with Neumann boundary conditions. arXiv 2022, arXiv:2205.03672. [Google Scholar]

- Frey, R.; Köck, V. Convergence Analysis of the Deep Splitting Scheme: The Case of Partial Integro-Differential Equations and the associated FBSDEs with Jumps. arXiv 2022, arXiv:2206.01597. [Google Scholar]

- Gihman, I.; Skohorod, A. The Theory of Stochastic Processes; Springer: New York, NY, USA, 1980; Volume III. [Google Scholar]

- Kliemann, W.; Koch, G.; Marchetti, F. On the unnormalized solution of the filtering problem with counting process observations. IEEE Trans. Inf. Theory 1990, 36, 1415–1425. [Google Scholar] [CrossRef]

- Ethier, S.; Kurtz, T.G. Markov Processes: Characterization and Convergence; Wiley: New York, NY, USA, 1986. [Google Scholar]

- Pham, H. Optimal stopping of controlled jump diffusion processes: A viscosity solution approach. J. Math. Syst. Estim. Control 1998, 8, 1–27. [Google Scholar]

- Colaneri, K.; Frey, R. Classical Solutions of the Backward PIDE for Markov Modulated Marked Point Processes and Applications to CAT Bonds. Insur. Math. Econ. 2021, 101 Pt B, 498–507. [Google Scholar] [CrossRef]

- Ceci, C.; Colaneri, K.; Frey, R.; Köck, V. Value adjustments and dynamic hedging of reinsurance counterparty risk. SIAM J. Financ. Math. 2020, 11, 788–814. [Google Scholar] [CrossRef]

- Frey, R.; Köck, V. Deep Neural Network Algorithms for Parabolic PIDEs and Applications in Insurance Mathematics. arXiv 2021, arXiv:2109.11403. [Google Scholar]

- Xu, G.; Zheng, H. Approximate basket options valuation for a jump-diffusion model. Insur. Math. Econ. 2009, 45, 188–194. [Google Scholar] [CrossRef]

- Cardaliaguet, P.; LeHalle, C.A. Mean Field Game of Controls and an Application to Trade Crowding. Math. Financ. Econ. 2018, 12, 335–363. [Google Scholar] [CrossRef]

- Cartea, Á.; Jaimungal, S.; Penalva, J. Algorithmic and High-Frequency Trading; Cambridge University Press: Cambridge, UK, 2015. [Google Scholar]

- Øksendal, B.K.; Sulem, A. Applied Stochastic Control of Jump Diffusions; Springer: Berlin/Heidelberg, Germany, 2007; Volume 498. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).