Abstract

Course recommendation is a critical service in Intelligent Tutoring Systems (ITS) that helps learners discover relevant courses from massive online educational platforms. Despite substantial progress in this field, two key challenges remain unresolved: (1) existing methods fail to leverage the differences in learners’ interests across different courses during knowledge propagation processes, and (2) while sequential relationships have been considered in course recommendations, there is still significant room for improvement in effectively integrating sequential patterns with knowledge-graph-based approaches. To overcome these limitations, we propose PGDB (Preference-aware Graph Diffusion network and Bi-LSTM), an innovative end-to-end framework for course recommendation. Our model consists of four key components: First, a course knowledge graph diffusion module recursively collects multiple knowledge triples related to learners to construct their knowledge background. Second, a preference-aware diffusion attention mechanism analyzes learners’ preferences for courses and relational paths using multi-head attention, effectively distinguishing semantic diversity across different contexts and capturing varying learner interests during knowledge transmission. Third, a temporal sequence modeling module utilizes bidirectional long short-term memory networks to identify learners’ interest evolution patterns, generating learner-dependent representations that efficiently leverage sequential relationships between courses. Finally, a prediction module combines the final representations of learners and courses to output selection probabilities for candidate courses. Extensive experimental results demonstrate that PGDB significantly outperforms state-of-the-art baseline models across multiple evaluation metrics, validating the effectiveness of our approach in addressing data sparsity and sequential modeling challenges in course recommendation systems.

1. Introduction

An Intelligent Tutoring System (ITS) combines artificial intelligence with computer-assisted instruction, integrating artificial intelligence, multimedia, and network technologies into traditional teaching systems to improve teaching efficiency and quality. Such systems continuously generate large amounts of learning behavior data, from which they build intelligent learning services based on learners’ historical interaction data, including cognitive diagnosis [1], knowledge tracing [2], and resource recommendation [3,4], to help learners learn more efficiently. The rapid development of information technology has made online education, and massive open online courses (MOOCs) in particular, increasingly popular. Course platforms such as Coursera, edX, Udacity, iCourse, and XuetangX are expanding rapidly and offer a wide range of courses across multiple disciplines. For example, XuetangX states in its introduction that it provides over 5000 high-quality courses across 13 disciplines. On platforms of this scale, however, learners often find it difficult to locate courses and resources that match their interests and preferences [5].

A course recommendation system is an intelligent system designed to provide personalized course recommendations based on learners’ needs and preferences [6]. It analyzes learners’ learning histories, interests, and goals, together with the characteristics and evaluations of courses, to recommend the most suitable courses, thereby improving learning outcomes and user satisfaction. Course recommendation methods can be categorized as content-based, collaborative filtering, and hybrid approaches. Course recommendation thus serves as a bridge between learners and course resources: as a key component of online course platforms, it filters large volumes of course data by recording and analyzing learners’ historical learning behavior, surfacing courses that align with their interests and preferences [7]. The first course recommendation system appeared in 1960 as part of the PLATO project developed by the University of Illinois [8]. Designed for virtual instruction of college students, it not only provided interactive learning opportunities but also made significant strides in course design and personalized learning.

Compared with recommendation [9] in domains such as e-commerce [10], music [11], movies [12], and news, course learning requires considerable time and effort, so interactions between learners and courses are relatively sparse. Data sparsity and the cold start problem are key factors limiting recommendation effectiveness. Data sparsity refers to the situation where learner–course interaction data are so limited that it is difficult for the system to infer learners’ preferences accurately; in other words, learners’ interests must be extracted from only a few interactions. The cold start problem refers to the difficulty a course recommendation system faces when it is first launched or encounters new learners or new courses: without sufficient historical data, it cannot provide accurate, personalized course recommendations [13]. Because interactions in course recommendation are even sparser than in these other domains, effective recommendation becomes more challenging, and providing learners with personalized learning experiences under data sparsity remains difficult.

More specifically, online course recommendation can be described as the task of recommending candidate courses that align with learners’ interests, based on their historical learning activity data, including course information and feedback data from the learning process. Collaborative filtering-based course recommendation has received widespread attention; it primarily uses the similarity between students [15] or between courses [16] to recommend relevant courses. These methods are straightforward to implement and highly interpretable, but they remain weak at handling data sparsity and the cold start problem. With the advancement of deep learning, integrating recommendation systems with deep learning, as in NCF [17] and NFM [18], has strongly driven the recommendation field forward and inspired a series of methods developed specifically for course recommendation. For example, Hidasi et al. [19] formulated recommendation as a session-based task and solved it with a recurrent neural network, feeding the items of a session in temporal order into a Gated Recurrent Unit (GRU) to gather information from the historical sequence; the final embedding vector was then treated as the user’s interest. Subsequently, recommendation systems such as DIEN [20], MAAMF [21], and DRN [14] introduced attention mechanisms and reinforcement learning. However, despite the significant contributions of deep learning to recommendation effectiveness in fields such as e-commerce, these methods do not adequately model the intrinsic relationships between courses, which limits further performance improvement.
Using knowledge graphs as side information in combination with classic models such as collaborative filtering [22] has been shown to integrate multi-source heterogeneous data effectively [23], deepening the understanding of learners’ preferences and yielding good recommendation performance [24,25]. However, two challenges remain unresolved in course recommendation: (1) existing methods do not exploit the differences in learners’ interests across courses during knowledge propagation; and (2) existing research does not adequately model the sequential relationships among the courses that learners take.

To address these issues, we propose PGDB, a method designed specifically for course recommendation. It first builds an initial course seed set and propagates it through the knowledge graph, recursively collecting multiple knowledge triples related to each learner to construct their knowledge background. Next, a preference-aware diffusion attention mechanism analyzes learners’ preferences for courses and their relational paths, distinguishing the semantic diversity of different contexts to build a more comprehensive picture of learners’ needs. Building on this, the temporal sequence modeling module uses a Bi-LSTM to examine how learners’ interests evolve, generating learner-specific representations that reflect both learners’ historical preferences and the dynamics of their interests over time. Finally, the prediction module combines the final representations of learners and courses to output the probability that a learner selects each candidate course. Experimental results on real-world data show that PGDB outperforms recent baseline models in recommendation effectiveness, validating the efficacy and practicality of the approach.

The key contributions of the paper are summarized as follows:

- (1) This paper presents an end-to-end knowledge-aware hybrid model for course recommendation. The model multiplies head entities with their relational paths, applies a multi-head attention mechanism, normalizes the result with a softmax function, and then multiplies it with the tail entities of the current hop. Finally, it concatenates the representations from each hop, improving representation learning for both learners and courses.

- (2) The model combines the strengths of graph neural networks and bidirectional long short-term memory networks, fusing graph-based propagation with temporal modeling. This allows it to better capture long-term dependencies in graph-structured data, yielding a recommendation model better suited to course data.

- (3) We use the MOOCCube dataset from XuetangX, one of China’s largest online course platforms, to validate the effectiveness of the proposed model. Experimental results demonstrate that our model significantly outperforms current state-of-the-art baselines.

The rest of this article is organized as follows. Section 2 reviews related work. Sections 3 and 4 formally define the research problem and describe the proposed PGDB approach. Section 5 reports extensive experiments and analyzes the results. Section 6 discusses the experimental results from multiple perspectives. Finally, Section 7 concludes the paper and outlines future research directions.

2. Related Work

2.1. Course Recommendations

With the rapid development of large-scale online courses, course recommendations have garnered considerable interest in academia and industry. Generally, course recommendation methods can be categorized into content-based course recommendation, collaborative filtering-based course recommendation, and hybrid approaches. Content-based course recommendation utilizes the characteristics of the courses themselves for recommendations. Apaza et al. [26] proposed a course recommendation system based on historical performance, using Latent Dirichlet Allocation (LDA) to model course content, thereby intelligently recommending online courses to students. Collaborative filtering methods use learners with similar interests or course characteristics as a basis for recommendations. Li et al. [27] proposed a Bayesian Personalized Ranking Network for course recommendation, which integrates item-based collaborative filtering with Bayesian networks to model learners’ preferences based on their historically registered courses. Xu and Zhou [28] designed a model that utilizes deep learning to derive multimodal course features, which are then input into a long short-term memory (LSTM) network to infer learners’ interest preferences using both explicit and implicit feedback information. Tian et al. [29] explored the integration of Multidimensional Item Response Theory (MIRT) into a recommendation model, dynamically updating learners’ abilities using an ability tracking model, and effectively combining learners’ ability diagnosis with collaborative filtering-based course recommendations, enhancing the effectiveness and interpretability of course recommendations.
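To make the collaborative filtering idea concrete, the following is a minimal item-based sketch, assuming binary implicit feedback and cosine similarity between course columns of the learner–course matrix. It is illustrative only, not the model of any work cited above, and the function names are hypothetical.

```python
from math import sqrt

def cosine(a, b):
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = sqrt(sum(x * x for x in a))
    nb = sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def item_based_scores(Y, u):
    """Score each course learner u has not taken by its summed similarity
    to the courses u has taken. Y is a learners x courses 0/1 matrix."""
    n_courses = len(Y[0])
    # Column j lists which learners took course j.
    cols = [[row[j] for row in Y] for j in range(n_courses)]
    taken = [j for j in range(n_courses) if Y[u][j] == 1]
    return {c: sum(cosine(cols[c], cols[j]) for j in taken)
            for c in range(n_courses) if Y[u][c] == 0}

Y = [[1, 1, 0, 0],
     [1, 1, 1, 0],
     [0, 0, 1, 1]]
print(item_based_scores(Y, 0))  # course 2 scores about 1.0, course 3 scores 0.0
```

Course 2 ranks higher for learner 0 because learner 1, who shares learner 0's history, also took it; with very sparse columns most similarities become zero, which is the sparsity problem discussed in the Introduction.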

To meet learners’ growing needs for personalized learning, hybrid course recommendation methods have become a primary research strategy for addressing current challenges in education. Researchers are leveraging external knowledge to further enhance recommendation effectiveness, with a particular focus on graph-structured data. Zhu et al. [30] proposed an offline hybrid course recommendation model that uses multi-source heterogeneous data to describe students, courses, and other entities. The model generates vector representations of students from the relevant data with a random walk-based neural network and finally uses tensor decomposition to learn and predict students’ ratings for courses they have not yet taken. Yang and Cai [31] proposed an end-to-end framework that uses knowledge graphs as an auxiliary information source for collaborative filtering, aiming to enhance the semantic representation of items. This framework combines deep matrix factorization with an improved loss function and is applied to course recommendation. In addition, Wang et al. [32] proposed a hyper-edge-based graph neural network (HGNN) for recommending MOOCs. Zhang et al. [33] introduced an efficient course recommendation model called the Knowledge Grouping Aggregation Network (KGAN), which automatically iterates through the course graph to assess learners’ latent interests. Deng et al. [34] developed an online course recommendation method that combines knowledge graphs and deep learning, modeling course information through a course knowledge graph and representing courses using TransD.

However, these existing methods still require improvement in capturing the sequential relationships between the courses learned by learners, and they have not effectively explored learners’ interest preferences during the knowledge dissemination process. In this paper, we propose a course recommendation method that integrates knowledge graphs and long short-term memory networks while emphasizing the importance of knowledge during the dissemination process. This approach better uncovers the relationships between courses and focuses on the transmission of essential knowledge, thereby improving the accuracy of course recommendations.

2.2. Recommendation Method Based on Knowledge Graph

Integrating knowledge graphs with recommendation systems has produced a substantial body of research [35]. Based on how knowledge graphs are incorporated, knowledge-aware recommendation methods can be divided into three main types: embedding-based, path-based, and graph neural network-based.

2.2.1. Embedding-Based Methods

Embedding-based methods are task-independent, allowing knowledge graphs to be integrated flexibly into various recommendation models. These methods primarily train item embeddings using knowledge graphs as constraints and use matrix factorization to predict click-through rates. For instance, CKE [22] learns item embeddings from the knowledge graph through TransR [36]. DKN [37] treats entity embeddings and word embeddings as distinct input channels and uses TransD [38] to generate knowledge-graph-based news embeddings for recommendation. However, the knowledge graph embedding (KGE) methods employed in these models are better suited to in-graph applications such as link prediction than to recommendation, so the learned entity embeddings perform poorly in item recommendation.
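For reference, TransR [36] scores a triple by projecting the head and tail embeddings into a relation-specific space with a matrix $\mathbf{M}_r$ and measuring $\|\mathbf{M}_r\mathbf{h} + \mathbf{r} - \mathbf{M}_r\mathbf{t}\|_2^2$, where a lower score means a more plausible triple. The sketch below only computes this score with toy, hand-picked vectors; training (margin-based ranking loss, negative sampling) is omitted.

```python
def matvec(M, x):
    """Multiply matrix M (a list of rows) by vector x."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

def transr_score(h, r, t, M_r):
    """Squared L2 distance ||M_r h + r - M_r t||^2; lower means more plausible."""
    h_r = matvec(M_r, h)  # head projected into the relation space
    t_r = matvec(M_r, t)  # tail projected into the relation space
    return sum((a + b - c) ** 2 for a, b, c in zip(h_r, r, t_r))

# Toy example: 3-d entity space projected onto a 2-d relation space.
M_r = [[1.0, 0.0, 0.0],
       [0.0, 1.0, 0.0]]
h, t = [1.0, 0.0, 0.5], [0.0, 1.0, 0.5]
print(transr_score(h, [-1.0, 1.0], t, M_r))  # 0.0: the translation fits exactly
print(transr_score(h, [0.0, 0.0], t, M_r))   # 2.0
```

Because this objective rewards triples that fit the graph, not items that a user will click, the resulting embeddings are not tuned for recommendation, which is the limitation noted above.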

2.2.2. Path-Based Methods

Path-based methods infer the relevance between entities by analyzing sequences of entities and their relationships, which is then used to make recommendations. However, compared to embedding-based methods, path-based strategies exhibit task dependence and require domain knowledge to extract paths, which may lead to missing important information or introducing irrelevant and trivial paths. In the context of course recommendation, constructing meta-paths is significantly simplified. However, as knowledge expands and learning patterns change rapidly, the emergence of new patterns necessitates the reconstruction of meta-paths, which undoubtedly incurs additional overhead. An alternative approach is to utilize path selection algorithms to identify key paths; however, balancing path selection optimization with recommendation objectives remains a significant challenge in practical applications [39,40].
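As an illustration of how a path-based method enumerates meta-path instances, the sketch below walks a toy adjacency list and keeps only the paths whose relations match a given meta-path in order. The graph, its entity names, and the relation names are hypothetical, loosely following the course example used later in this paper.

```python
def follow_meta_path(kg, start, meta_path):
    """Return every entity path from `start` whose relations match `meta_path` in order.

    kg maps an entity to a list of (relation, tail) pairs; paths that would
    revisit an entity are skipped.
    """
    paths = [[start]]
    for rel in meta_path:
        extended = []
        for path in paths:
            for r, tail in kg.get(path[-1], []):
                if r == rel and tail not in path:
                    extended.append(path + [tail])
        paths = extended
    return paths

# Hypothetical toy course graph.
kg = {
    "C++ Programming Basics": [("offers", "Tsinghua University"),
                               ("subject", "Computer Science")],
    "Tsinghua University": [("includes", "C++ Programming Basics"),
                            ("includes", "C++ Programming Advanced")],
    "Computer Science": [("includes", "C++ Programming Basics"),
                         ("includes", "Java Programming")],
}
print(follow_meta_path(kg, "C++ Programming Basics", ["offers", "includes"]))
# [['C++ Programming Basics', 'Tsinghua University', 'C++ Programming Advanced']]
```

The meta-path list is exactly the hand-specified component that must be rebuilt when new learning patterns emerge, which is the overhead described above.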

2.2.3. Graph Neural Network-Based Methods

Combining multi-hop neighborhood information to represent nodes and graph structures with graph neural networks [41] has proven highly effective [42]. RippleNet [43] is an end-to-end framework that seamlessly integrates knowledge graphs into recommendation systems. Simulating ripples propagating on a water surface, it automatically expands users’ latent interests along the links of the knowledge graph, thereby propagating user preferences. Multiple “ripples” activated by historical clicks are combined to form a preference distribution over candidate items, which is then used to predict click probabilities. KGCN [44] iteratively aggregates neighborhood information through graph convolutional networks to generate item representations. Subsequent work [45] combines the user–item interaction graph with the knowledge graph into a Unified Knowledge Graph (UKG) and recursively propagates embeddings from neighboring nodes with an attention mechanism that assigns weights distinguishing the contributions of individual neighbors. Additionally, motivated by the effectiveness of contrastive learning in extracting supervisory signals from data, KGIC applies contrastive learning to Knowledge Graph Recommendation (KGR), proposing a multi-level interactive contrastive learning mechanism that substantially improves recommendation performance [46]. Yang et al. [47] proposed DGRec (Diversified GNN-based Recommender System), which diversifies GNN-based recommendation by directly improving the embedding generation process: it uses hierarchical attention to weight each layer and loss reweighting to focus learning on long-tail categories.

Recently, Wang et al. proposed KFGAN, a knowledge-aware fine-grained attention network for personalized, knowledge-aware recommendation, which encodes relational paths and related entities to capture user preferences and learn latent semantic information from the knowledge graph [48]. Nevertheless, as noted in Section 2.1, most current graph neural network-based methods still leave room for improvement in capturing the sequential relationships between the courses learners take, and they do not effectively explore learners’ interest preferences during knowledge dissemination. The method proposed in this paper, which combines knowledge graphs with long short-term memory networks and emphasizes the transmission of essential knowledge, targets both limitations.

2.3. Time Series Modeling Method

A time series is a sequence of data points indexed in discrete time order. Such data are prevalent across many fields, which makes time series analysis a crucial tool [49], with applications including weather forecasting [50], detecting irregular heartbeats in electrocardiograms, and identifying anomalous software deployments [51]. The field encompasses several key tasks, such as time series forecasting, data imputation, and anomaly detection.

Traditional time series forecasting methods, such as the Autoregressive Integrated Moving Average (ARIMA) model and the Holt-Winters seasonal method, are theoretically sound, but they mainly address univariate forecasting problems, which limits their use on complex time series data. Over the past decades, machine learning and deep learning have advanced significantly, particularly in computer vision (CV) and natural language processing (NLP), and with the growing availability of data and computational power, deep learning-based time series forecasting (TSF) techniques have recently achieved higher predictive accuracy than traditional methods. Three main types of deep neural networks are used for sequence modeling, all of which have been applied to time series forecasting: (i) Temporal Convolutional Networks (TCNs); (ii) Transformer-based models; and (iii) Recurrent Neural Networks (RNNs). A Bi-LSTM combined with a multi-head attention mechanism is a powerful tool for time series modeling: it couples the Bi-LSTM’s ability to capture context in both directions with multi-head attention’s ability to focus on the most relevant positions, significantly improving performance across various tasks. Applications of this combination continue to expand, making it an important direction in current deep learning research on time series modeling [52].

3. Problem Definition

This section provides a brief introduction to the key structural components required for our approach, including the learner–course interaction graph and the course knowledge graph. Following this, we offer a formal statement of the course recommendation problem.

Learner–Course Interaction Graph: In a typical course recommendation scenario, we use $\mathcal{U} = \{u_1, u_2, \ldots, u_M\}$ to represent the set of $M$ learners and $\mathcal{C} = \{c_1, c_2, \ldots, c_N\}$ to represent the set of $N$ courses. The interactions between learners and courses are denoted by the matrix $\mathbf{Y} = \{ y_{uc} \mid u \in \mathcal{U},\ c \in \mathcal{C} \} \in \{0, 1\}^{M \times N}$, where learner–course interactions, based on implicit feedback, are expressed as

$$y_{uc} = \begin{cases} 1, & \text{if learner } u \text{ engaged with course } c,\\ 0, & \text{otherwise.} \end{cases}$$

When a learner interacts with a course (e.g., by clicking or browsing), we set $y_{uc} = 1$ to indicate that the learner has a behavioral preference for that course. Conversely, if no interaction has occurred, $y_{uc} = 0$. Note that $y_{uc} = 0$ does not necessarily imply that the learner dislikes the course: because so many courses are available, the learner may simply not have encountered it yet, so no interaction has taken place.
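A minimal sketch of building the implicit-feedback interaction matrix from a raw interaction log, assuming each log record is a (learner, course, action) tuple; the record format, action names, and identifiers are hypothetical. Repeated actions on the same course collapse to a single 1, and every unobserved pair stays 0, meaning "unknown" rather than "disliked".

```python
def build_interaction_matrix(events, learners, courses):
    """Binary implicit-feedback matrix: Y[u][c] = 1 if any event links learner u to course c."""
    u_idx = {u: i for i, u in enumerate(learners)}
    c_idx = {c: j for j, c in enumerate(courses)}
    Y = [[0] * len(courses) for _ in learners]
    for learner, course, _action in events:
        # Clicking, browsing, etc. all count as the same positive signal.
        Y[u_idx[learner]][c_idx[course]] = 1
    return Y

events = [("u1", "c1", "click"), ("u1", "c1", "browse"), ("u2", "c3", "click")]
print(build_interaction_matrix(events, ["u1", "u2"], ["c1", "c2", "c3"]))
# [[1, 0, 0], [0, 0, 1]]
```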

Course Knowledge Graph: Knowledge graphs store rich factual information in a graph structure, forming a semantic network that interweaves complex relationships among learners, courses, and other entities. These relationships can be formalized as triples, i.e., (head entity, relationship, tail entity). A heterogeneous knowledge graph can thus be represented as $\mathcal{G} = \{ (e_i, r, e_j) \mid e_i, e_j \in \mathcal{E},\ r \in \mathcal{R} \}$, where $(e_i, r, e_j)$ denotes the existence of a relationship $r$ between the head entity $e_i$ and the tail entity $e_j$, and $\mathcal{E}$ and $\mathcal{R}$ represent the sets of entities and relations, respectively. For example, the triple (Data Structures, School, Tsinghua University) indicates that Tsinghua University offers a course on data structures. However, different universities may offer courses with the same name, such as (Data Structures, School, South China University of Technology), which can confuse the entities associated with a course. Therefore, to align each course $c$ unambiguously with an entity $e$ in the knowledge graph, we adopt the alignment set $\mathcal{A} = \{ (c, e) \mid c \in \mathcal{C},\ e \in \mathcal{E} \}$, which accounts for cases where different schools offer courses with the same name and keeps the correspondence accurate and clear.
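One way to realize such an alignment set is sketched below, assuming each course record carries its title and offering school; the course ids and entity ids are hypothetical. Keying entities on (title, school) rather than on the title alone keeps same-name courses from different universities apart.

```python
def build_alignment(courses):
    """Align course ids with KG entities keyed by (title, school).

    courses maps course_id -> (title, school). Returns the alignment set of
    (course_id, entity_id) pairs and the (title, school) -> entity_id lookup.
    """
    entity_of_key = {}
    alignment = set()
    for cid, key in sorted(courses.items()):
        if key not in entity_of_key:
            entity_of_key[key] = f"e{len(entity_of_key)}"  # hypothetical entity ids
        alignment.add((cid, entity_of_key[key]))
    return alignment, entity_of_key

courses = {
    "C001": ("Data Structures", "Tsinghua University"),
    "C002": ("Data Structures", "South China University of Technology"),
}
alignment, _ = build_alignment(courses)
print(sorted(alignment))  # [('C001', 'e0'), ('C002', 'e1')]
```

A title-only key would map both courses to one entity and merge their neighborhoods in the graph.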

Recommendation Task: Given the learner–course interaction matrix $\mathbf{Y}$ and the course knowledge graph $\mathcal{G}$, the goal of the recommendation task is to predict the probability that a learner will next click on a course they have not yet interacted with. Specifically, we aim to learn a prediction function $\hat{y}_{uc} = \mathcal{F}(u, c \mid \Theta, \mathbf{Y}, \mathcal{G})$, where $\hat{y}_{uc}$ denotes the predicted probability that learner $u$ clicks course $c$ and $\Theta$ represents the model parameters of the function $\mathcal{F}$.

4. Method

4.1. Symbol Summary

Table 1 summarizes the basic notation used in this section; bold symbols with subscripts denote the vector representations of the corresponding entries. The remaining symbols are derived from these basic symbols through the operations and propagation steps described below.

Table 1.

A summary of notations.

4.2. Model

Inspired by graph-neural-network-based methods, we treat the courses a learner has interacted with in the past as starting points and then iteratively expand through the connections in the knowledge graph. This process progressively uncovers the learner’s latent interest preferences, aiming to predict the preference distribution over candidate courses and subsequently estimate the final click probability. In this section, we will provide a detailed explanation of the proposed model and introduce the primary contributions of this paper.

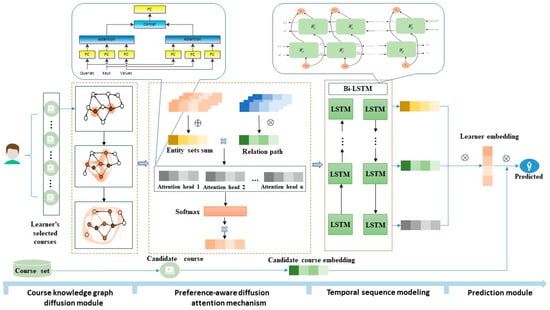

Figure 1 illustrates the workflow of PGDB, which consists of four key components: (1) Course knowledge graph diffusion module. This module propagates the initial course seed set within the knowledge graph, recursively obtaining the set of $h$-hop-related knowledge triples for learner $u$. (2) Preference-aware diffusion attention mechanism. This component uses a novel knowledge-aware multi-head attention mechanism that analyzes learner preferences for courses and their corresponding relational paths. It generates attention weights for the tail entities, capturing the semantic diversity across different contexts. (3) Temporal sequence modeling. This module concatenates the representations of tail entities across different hop distances, generating a collection of tail entities for different temporal states. A bidirectional long short-term memory (Bi-LSTM) network captures the evolution of the learner’s interests over time, producing temporally dependent learner representations. (4) Prediction module. This module uses the final representations of both the learner and the courses to output the probability that the learner will select a candidate course.

Figure 1.

Overview of the PGDB model, which comprises four modules: a course knowledge graph diffusion module, a preference-aware diffusion attention mechanism, a temporal sequence modeling module, and a prediction module.

4.2.1. Course Knowledge Graph Diffusion Module

The primary goal of the course knowledge graph diffusion module is to capture high-order collaborative signals between learners and courses by propagating course entities that the learners have interacted with across the graph. This enables a more precise representation of the learner’s latent preferences. The learner’s preferences are partially reflected in the interaction data with courses, and propagating through the paths in the knowledge graph not only reveals intrinsic connections between courses but also further enhances the feature representation of the learner. This module consists of two main parts: extracting course entities that learners have interacted with from the interaction graph and performing an iterative propagation process of the course entities within the knowledge graph. Below, we will provide a detailed introduction to these two components.

First, the interaction graph presents the interactions between learners and courses in the form of a bipartite graph. Extracting learner representations from the interaction graph can more accurately reflect their interest preferences than traditional methods that use independent latent vectors to represent learner features. Given the interaction matrix between learners and courses, we can extract the courses with which the learner has directly interacted and obtain the learner’s initial seed set through entity alignment.

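Second, we continuously expand the seed set outward, layer by layer, along the paths of the knowledge graph, exploring the connectivity between nodes. This propagation enriches the feature representations of both the learner and the courses. The set of $h$-hop relevant entities for learner $u$ is defined as

$$\mathcal{E}_u^h = \{\, e_j \mid (e_i, r, e_j) \in \mathcal{G},\ e_i \in \mathcal{E}_u^{h-1} \,\}, \quad h = 1, 2, \ldots, H,$$

where $\mathcal{G}$ is the course knowledge graph, $(e_i, r, e_j)$ is a triple with head entity $e_i$ and tail entity $e_j$, $\mathcal{E}_u^0$ is the learner’s initial seed set obtained through entity alignment, $H$ represents the hyperparameter for the maximum number of hops in the propagation, and $h$ denotes the current hop number. Given the entity set $\mathcal{E}_u^{h-1}$, the $h$-th ripple set for learner $u$ can be obtained as follows:

$$\mathcal{S}_u^h = \{\, (e_i, r, e_j) \mid (e_i, r, e_j) \in \mathcal{G},\ e_i \in \mathcal{E}_u^{h-1} \,\}, \quad h = 1, 2, \ldots, H.$$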
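The hop-by-hop construction of the ripple sets can be sketched as follows, assuming the knowledge graph is given as a list of (head, relation, tail) triples and the seed entities have already been obtained by entity alignment. The toy triples are hypothetical, and the sketch omits details such as sampling a fixed number of triples per hop, which RippleNet-style implementations commonly use.

```python
def ripple_sets(triples, seeds, n_hops):
    """Return [S^1, ..., S^H], where S^h holds the triples whose head is in E^{h-1}.

    triples: list of (head, relation, tail); seeds: the seed entity set E^0.
    """
    by_head = {}
    for head, rel, tail in triples:
        by_head.setdefault(head, []).append((head, rel, tail))
    result = []
    frontier = set(seeds)  # E^{h-1}, starting from E^0
    for _ in range(n_hops):
        hop = [tr for e in sorted(frontier) for tr in by_head.get(e, [])]
        result.append(hop)
        frontier = {tail for _, _, tail in hop}  # E^h: tails reached at this hop
    return result

triples = [
    ("C++ Programming Basics", "offers", "Tsinghua University"),
    ("C++ Programming Basics", "subject", "Computer Science"),
    ("Tsinghua University", "includes", "C++ Programming Advanced"),
    ("Computer Science", "includes", "Java Programming"),
]
for h, s in enumerate(ripple_sets(triples, {"C++ Programming Basics"}, 2), start=1):
    print(h, s)
```

Each hop only looks up triples whose head was reached at the previous hop, so the cost per hop is proportional to the size of the frontier rather than to the whole graph; a hop whose frontier has no outgoing triples yields an empty set.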

4.2.2. Preference-Aware Diffusion Attention Mechanism

Entities in the knowledge graph are connected through relationships. Starting from a course, different relational paths lead to different entities, enriching the course’s representation and, in turn, reflecting the learner’s preferences. For instance, taking the course “C++ Programming Basics” as the starting point, a two-hop propagation in the knowledge graph reveals that this course is offered by Tsinghua University, which also offers the course “C++ Programming Advanced”; this path consists of the relationships “offers” and “includes.” Along another path, “C++ Programming Basics” belongs to the Computer Science subject, which also includes the course “Java Programming”; here the two-hop relationships are “subject” and “includes.” Both the head entity and the relational path therefore influence the final recommendation results, and the same course–entity pair may exhibit different degrees of similarity when measured through different relationships. Drawing on existing knowledge-aware preference propagation mechanisms, we propose a preference-aware diffusion attention mechanism that assigns different attention weights to the tail entities, capturing their significance more accurately and better reflecting learner preferences.

Given the tuple set

at the h-th layer, we use

to represent the relational paths propagated to the (h-1)-th layer and

to denote the entity set connected on the relation path

, as denoted below:

where

denotes the embedding of the head entity, while

represents the embedding of the current relationship. Additionally, we construct the attention weight

for the tail entity embedding:

where

denotes the embedding of the current tail entity (a given candidate course entity in the knowledge graph) and

represents the attention of the head entity towards the tail entity in the relational space, which can be expressed as

where

denotes the operation of the multi-head attention mechanism, which captures diverse features and relationships among the input information by simultaneously focusing on different parts. This approach also mitigates the impact of noise during the propagation process, generating a tail entity embedding representation that integrates information from various contexts.

and

represent the trainable weights and biases. In the following, we employ the softmax function to normalize the coefficients across the entire set of triples, which can be formulated as follows:

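$$\tilde{\pi}(e_i, r, e_j) = \frac{\exp\left(\pi(e_i, r, e_j)\right)}{\sum_{(e_i', r', e_j') \in \mathcal{S}_u^h} \exp\left(\pi(e_i', r', e_j')\right)}$$

where $\pi(e_i, r, e_j)$ is the unnormalized attention weight of the triple $(e_i, r, e_j)$ and $\mathcal{S}_u^h$ denotes the ripple set for learner $u$ at the $h$-th layer.

Ultimately, we concatenate the knowledge extracted at all hops to form a sequential ripple set, defined as follows:

$$\mathbf{O}_u = [\mathbf{o}_u^1, \mathbf{o}_u^2, \ldots, \mathbf{o}_u^H]$$

where $\mathbf{o}_u^h$ represents the ripple set representation obtained at hop $h$, which is specifically expressed by the following formula:

$$\mathbf{o}_u^h = \sum_{(e_i, r, e_j) \in \mathcal{S}_u^h} \tilde{\pi}(e_i, r, e_j)\, \mathbf{e}_j$$

where $\mathbf{e}_j$ is the embedding of the tail entity $e_j$.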
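The softmax normalization within a hop, the weighted sum of tail embeddings, and the stacking of hops into a sequence can be sketched as below. The exact multi-head-attention score is not reproduced here: the sketch substitutes a hypothetical raw score, the dot product of the tail embedding with the element-wise product of the head and relation embeddings. Only the normalization and aggregation steps follow this section; all embeddings are toy values.

```python
from math import exp

def softmax(xs):
    m = max(xs)  # shift by the max for numerical stability
    exps = [exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def hop_representation(triples, emb, rel_emb):
    """o^h: softmax-weighted sum of tail embeddings over one hop's triples."""
    dim = len(next(iter(emb.values())))
    if not triples:
        return [0.0] * dim  # an empty hop contributes a zero vector
    raw = []
    for head, rel, tail in triples:
        # Hypothetical stand-in score: tail . (head * relation), not the MHA score.
        hr = [a * b for a, b in zip(emb[head], rel_emb[rel])]
        raw.append(sum(a * b for a, b in zip(emb[tail], hr)))
    weights = softmax(raw)
    out = [0.0] * dim
    for w, (_, _, tail) in zip(weights, triples):
        for k in range(dim):
            out[k] += w * emb[tail][k]
    return out

def sequential_ripple_set(ripple_sets, emb, rel_emb):
    """Stack the per-hop representations into the sequence [o^1, ..., o^H]."""
    return [hop_representation(s, emb, rel_emb) for s in ripple_sets]

emb = {"a": [1.0, 0.0], "b": [1.0, 0.0], "c": [0.0, 1.0]}
rel_emb = {"r": [1.0, 1.0]}
O = sequential_ripple_set([[("a", "r", "b"), ("a", "r", "c")], []], emb, rel_emb)
print(O)  # first hop leans toward b (weight about 0.73), second hop is all zeros
```

Because the weights are normalized within each hop, every $\mathbf{o}_u^h$ is a convex combination of that hop's tail embeddings, so hops with many triples do not dominate the sequence simply by size.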

4.2.3. Temporal Sequence Modeling

As the ripple set expands outward hop by hop, some information may be lost. Each hop of expansion can be viewed as one step in a sequence, which matches the characteristics of Bi-LSTM. We therefore use a Bi-LSTM to model these sequential relationships and to further update and refine the learner’s feature representation. A Bi-LSTM extends the LSTM by processing the sequence in both directions; the computation of each LSTM unit is as follows:

where

and

denote the weight matrices,

represents the bias, and

is the sigmoid activation function.

indicates the hidden state representation at the current time step. Through these gated units, the LSTM retains contextual information across time steps. At the t-th time step, two parallel LSTM layers process the input sequence in opposite directions, and their hidden state vectors are combined as a weighted sum, which can be described as:

where

,

represent the outputs of the two parallel LSTM layers operating in the forward and backward directions, respectively.

,

represent the weight parameters for the two parallel LSTM layers that function in the forward and backward directions, respectively, while

denotes the bias term. We store the output at each time step to obtain the final output representation from the Bi-LSTM, denoted as

. Consequently, the learner’s final embedding representation can be formally expressed as

where

denotes the sigmoid activation function.
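A minimal pure-Python sketch of the bidirectional step, under explicit assumptions: the input sequence is the list of hop-wise ripple-set representations; each direction is a standard LSTM with input, forget, and output gates; weights are randomly initialized rather than trained; and the two directions are mixed with scalar weights `w_fwd`, `w_bwd` plus a bias, a simplification of the weight parameters described above. All names are hypothetical, and this is not the authors’ TensorFlow implementation.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LSTMCell:
    """Minimal LSTM: input (i), forget (f), output (o) gates and candidate (g)."""

    def __init__(self, input_dim, hidden_dim, seed):
        rng = random.Random(seed)

        def mat(rows, cols):
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

        self.hidden_dim = hidden_dim
        self.W = {g: mat(hidden_dim, input_dim) for g in "ifog"}   # input weights
        self.U = {g: mat(hidden_dim, hidden_dim) for g in "ifog"}  # recurrent weights
        self.b = {g: [0.0] * hidden_dim for g in "ifog"}           # biases

    def _pre(self, gate, x, h):
        wx, uh = matvec(self.W[gate], x), matvec(self.U[gate], h)
        return [a + b + c for a, b, c in zip(wx, uh, self.b[gate])]

    def run(self, xs):
        """Run over a sequence and return the hidden state at every step."""
        h = [0.0] * self.hidden_dim
        c = [0.0] * self.hidden_dim
        states = []
        for x in xs:
            i = [sigmoid(z) for z in self._pre("i", x, h)]
            f = [sigmoid(z) for z in self._pre("f", x, h)]
            o = [sigmoid(z) for z in self._pre("o", x, h)]
            g = [math.tanh(z) for z in self._pre("g", x, h)]
            c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
            h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
            states.append(h)
        return states


def bilstm(xs, hidden_dim, w_fwd=0.5, w_bwd=0.5, bias=0.0):
    """Mix forward and backward states as w_fwd*h_fwd + w_bwd*h_bwd + bias."""
    fwd = LSTMCell(len(xs[0]), hidden_dim, seed=1)
    bwd = LSTMCell(len(xs[0]), hidden_dim, seed=2)
    h_fwd = fwd.run(xs)
    h_bwd = bwd.run(xs[::-1])[::-1]  # re-align backward states with time steps
    return [[w_fwd * a + w_bwd * b + bias for a, b in zip(hf, hb)]
            for hf, hb in zip(h_fwd, h_bwd)]
```

Reversing the backward outputs before mixing is what makes step t combine the forward state after reading hops 1..t with the backward state after reading hops t..n.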

To recommend personalized learning paths, it is necessary to consider both the current learning status of students and the cognitive load and complexity of knowledge points during the learning process. Therefore, this article introduces a multi-objective optimization strategy to balance learning effectiveness and cognitive load.

4.2.4. Prediction Module

We use

and

to denote the feature vector representations of the learner and the course, respectively. Ultimately, we obtain the predicted probability of a learner clicking on a course using the inner product:

4.2.5. Loss Function

We design the following loss function to help the model learn learner preference representations more effectively. The loss function of PGDB consists of three components.

First, we use the cross-entropy loss to measure the difference between the predicted probabilities and the actual labels:

where

denotes the cross-entropy loss,

,

represent the positive and negative samples of the learner–course pairs, respectively.
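A minimal sketch of the prediction step and of how the loss terms in this section might be combined. It assumes that the inner-product score is passed through a sigmoid to obtain a probability (as in RippleNet-style models) and that the joint objective is a weighted sum of the cross-entropy, KGE, and L2 terms, using the KGE weight (1 × 10⁻²) and L2 coefficient (1 × 10⁻⁵) reported in Section 5.3. The KGE loss is passed in as a number because its exact form is not reproduced here; all function names are hypothetical.

```python
import math


def click_probability(learner_vec, course_vec):
    """Sigmoid of the learner-course inner product (the sigmoid is an assumption)."""
    score = sum(a * b for a, b in zip(learner_vec, course_vec))
    return 1.0 / (1.0 + math.exp(-score))


def cross_entropy(pos_probs, neg_probs, eps=1e-12):
    """Mean binary cross-entropy over positive (label 1) and negative (label 0) pairs."""
    loss = -sum(math.log(max(p, eps)) for p in pos_probs)
    loss -= sum(math.log(max(1.0 - p, eps)) for p in neg_probs)
    return loss / (len(pos_probs) + len(neg_probs))


def joint_objective(ce_loss, kge_loss, params, kge_weight=1e-2, l2_weight=1e-5):
    """Assumed weighted sum: CE + kge_weight * KGE + l2_weight * ||params||^2."""
    l2 = sum(w * w for w in params)
    return ce_loss + kge_weight * kge_loss + l2_weight * l2
```

Clipping probabilities with `eps` keeps the logarithm finite when a prediction saturates at 0 or 1.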

Second, we employ a fully connected neural network as the Knowledge Graph Embedding (KGE) loss, which can be expressed as follows:

To mitigate overfitting and the impact of noise in the knowledge graph, we add a regularization term to the loss function, formulated as follows:

Finally, the joint learning objective function is given by:

5. Experiments

5.1. Dataset

In this study, we used the MOOCCube dataset for evaluation. MOOCCube [53] is an open data repository designed to serve researchers in large-scale online education, knowledge graphs, and data mining. It contains information on online courses, instructional videos, knowledge concepts, learners’ course selection data, video viewing records, and a concept graph constructed from the hierarchical and sequential relationships among knowledge concepts. We randomly selected a three-month subset of behavioral data, from 1 January 2018 to 1 April 2018, which includes 7156 learners and 219 courses (Table 2), while preserving the original order of course selections. We followed the configurations established in KGAN and KG to validate the applicability of our model to other educational recommendation tasks with similar data organization. We constructed a learner–course interaction matrix containing 32,091 interactions in total. Additionally, we integrated entity pairs and attributes from the MOOCCube dataset to build a triple representation of the course graph, which includes 2029 entities and seven types of relations, including sequential relationships among concepts, schools, teachers, primary topics, secondary topics, foundational courses, and core courses, for a total of 20,893 triples. For each positive instance, a corresponding negative instance was randomly generated, so positive and negative instances are balanced 1:1, and positive instances account for half of the rating file.
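The 1:1 construction of the rating file, together with the random 8:2 train/test split described in Section 5.3, might look like the sketch below. We assume negatives are drawn uniformly from courses the learner has not interacted with; the text only says they were generated randomly. Function names are hypothetical.

```python
import random


def build_rating_file(interactions, all_courses, seed=0):
    """Pair every observed (learner, course) click with one random unclicked course."""
    rng = random.Random(seed)
    clicked = {}
    for learner, course in interactions:
        clicked.setdefault(learner, set()).add(course)
    rows = []
    for learner, course in interactions:
        rows.append((learner, course, 1))  # positive instance
        candidates = [c for c in all_courses if c not in clicked[learner]]
        if candidates:  # skipped only if the learner has taken every course
            rows.append((learner, rng.choice(candidates), 0))  # negative instance
    return rows


def random_split(rows, train_ratio=0.8, seed=0):
    """Shuffle a copy of the rows and cut it at train_ratio (8:2 by default)."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_ratio)
    return shuffled[:cut], shuffled[cut:]
```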

Table 2.

Overview of the MOOCCube dataset.

5.2. Baselines

To assess our proposed method, we compare it with the following baseline models:

- LR [54]: Logistic Regression is widely used for classification and plays a significant role in industrial Click-Through Rate (CTR) prediction. It takes a weighted sum of relevant features as the model input.

- BPR [55]: Bayesian Personalized Ranking (BPR) is a traditional collaborative filtering technique that leverages Bayesian methods to optimize the pairwise ranking loss function in recommendation tasks.

- FM [56]: Factorization Machines (FM) are principled models that can account for interactions between features and conveniently integrate any heuristic features. Our experiments utilized all available information except for the secondary subjects.

- DKN [29]: The Deep Knowledge-Aware Network introduces knowledge graph representations into recommendations to predict click-through rates. The core of DKN is a multi-channel knowledge-aware convolutional neural network that integrates semantic and knowledge representations while maintaining the alignment between words and entities. This study treats course titles as the textual input for DKN.

- RippleNet [43]: This end-to-end framework naturally integrates knowledge graphs into recommender systems. It simulates the propagation of ripples on a water surface to automatically expand users’ potential interests along the links of the knowledge graph, thereby propagating user preferences. Multiple “ripples” activated by historical clicks are superposed to form a preference distribution over candidate items, which is then used to predict click probabilities.

- KGNN-LS [57]: This approach proposes a Knowledge-Aware Graph Neural Network with Label Smoothing Regularization, which computes user-specific item embeddings through a trainable function, transforms the knowledge graph into a weighted graph, and applies graph neural networks for personalized computations.

- CKAN [58]: The Cooperative Knowledge-Aware Attention Network (CKAN) explicitly encodes collaborative signals through a heterogeneous propagation strategy and distinguishes the contributions of different knowledge neighbors with a knowledge-aware attention mechanism.

- KGAN [33]: A course recommendation model based on Knowledge Group Aggregation Networks utilizes a heterogeneous graph iteration that describes the relationships between courses and facts to estimate learners’ learning interests, projecting learner behaviors and course graphs into a unified space.

- KFGAN [48]: Based on a knowledge-aware fine-grained attention network, this model keeps collaborative filtering signals and knowledge graph information consistent and coherent, and draws on graph contrastive learning to further uncover latent semantic information in the knowledge graph.

5.3. Implementation Details

The experiments were conducted on Windows using the PyCharm Community Edition 2022 integrated development environment and Python 3.7, with the TensorFlow, NumPy, and Scikit-learn libraries. The hardware consisted of a 12-core (24-thread) Intel Core i9-10920X CPU running at 3.5 GHz, an NVIDIA GeForce RTX 3090 Ti graphics card, 64 GB of RAM, a 1 TB solid-state drive, and a 4 TB mechanical hard drive. We randomly split the dataset into training and test sets at a ratio of 8:2. Model parameters were initialized with Xavier initialization, and the model was trained for 50 epochs with a batch size of 2048. The learning rate and the L2 regularization coefficient were set to 2 × 10⁻² and 1 × 10⁻⁵, respectively, and the knowledge graph embedding weight was set to 1 × 10⁻². Performance was evaluated on Click-Through Rate (CTR) prediction using the Area Under the Curve (AUC), Accuracy (ACC), Recall, Precision, and F1 metrics.

Here, M is the number of positive samples and n is the number of negative samples, giving M × n pairs, each consisting of one positive and one negative sample. AUC counts the pairs in which the predicted probability of the positive sample is greater than that of the negative sample, divided by M × n. TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives, respectively. To ensure a fair comparison, the baseline results are taken from previously published studies.
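These metrics can be made concrete with a short sketch. It assumes that ties in the pairwise AUC count as half a win (the usual convention; without ties it reduces to the count described above) and that ACC, Precision, Recall, and F1 are computed by thresholding predicted probabilities at 0.5, a threshold the text does not state. Function names are hypothetical.

```python
def pairwise_auc(pos_scores, neg_scores):
    """AUC over all M x n positive-negative pairs; ties get half credit."""
    wins = 0.0
    for p in pos_scores:
        for q in neg_scores:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


def threshold_metrics(labels, scores, threshold=0.5):
    """ACC, Precision, Recall and F1 from the confusion counts TP, TN, FP, FN."""
    tp = tn = fp = fn = 0
    for y, s in zip(labels, scores):
        pred = 1 if s >= threshold else 0
        if pred == 1 and y == 1:
            tp += 1
        elif pred == 1:
            fp += 1
        elif y == 1:
            fn += 1
        else:
            tn += 1
    acc = (tp + tn) / len(labels)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"acc": acc, "precision": precision, "recall": recall, "f1": f1}
```

For example, with positives scored [0.9, 0.8] and negatives scored [0.1, 0.85], three of the four pairs are ranked correctly, so `pairwise_auc` returns 0.75.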

5.4. Performance Comparison

Table 3 reports the CTR prediction performance (AUC) of the baseline models and of PGDB, along with its variants, on the MOOCCube dataset. The results indicate that the proposed PGDB model outperforms all baseline models. Here, PGDB-s is a variant of PGDB that replaces the multi-head attention mechanism with a self-attention mechanism, and PGDB-g is a variant that replaces the Bi-LSTM in PGDB with Gated Recurrent Units (GRU). The following observations can be made from Table 3:

- In prediction tasks, knowledge-aware recommendation models generally outperform classical recommendation models, except DKN. This may be attributed to knowledge-aware models effectively utilizing knowledge graphs as auxiliary information, alleviating the high sparsity in the course dataset.

- The DKN model underperformed in course recommendations compared to classical models such as BPR and FM. This may be due to the knowledge graph embedding (KGE) method employed by DKN, which is better suited for intra-graph applications rather than recommendation tasks, resulting in suboptimal entity embeddings for item recommendations.

- Among classical recommendation models, BPR performed best, possibly because it optimizes a pairwise ranking loss with Bayesian methods, which may help surface high-quality courses that attract many learners.

- Among knowledge-aware recommendation methods, DKN performed worst, suggesting that propagation-based approaches are better suited to this task than embedding-based methods.

- KGAN and RippleNet clearly outperformed CKAN and KGNN-LS in course recommendation. A possible explanation is that incorporating collaborative information may introduce additional noise, given the highly sparse nature of course recommendation data.

- Compared with these state-of-the-art baselines, PGDB outperforms even the strongest recent models, KGAN and KFGAN. This suggests that PGDB is more effective at uncovering the relationships between courses while emphasizing the transmission of important knowledge, thereby improving the accuracy of course recommendations.

- PGDB and both of its variants consistently outperformed all baseline models, demonstrating the competitiveness of PGDB in course recommendation. The advantage of PGDB over PGDB-s highlights the benefit of the multi-head attention mechanism, which can attend to several important information sources simultaneously. Likewise, PGDB-g trails PGDB, suggesting that although GRU is simpler than Bi-LSTM, this simplification costs some performance.

Table 3.

Performance (AUC) of the baseline and PGDB models.

| Model | LR | BPR | FM | DKN | RippleNet | KGNN-LS | CKAN | KGAN | KFGAN | PGDB | PGDB-s | PGDB-g |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| AUC | 0.6283 | 0.7602 | 0.7593 | 0.7281 | 0.8516 | 0.8077 | 0.7809 | 0.8595 | 0.8564 | 0.8707 | 0.8683 | 0.8678 |
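To put the margins in Table 3 in perspective, the relative AUC gain can be computed directly from the reported values. The snippet below only re-uses the numbers in the table; the helper name `relative_gain` is ours.

```python
# AUC values copied from Table 3.
TABLE3_AUC = {
    "LR": 0.6283, "BPR": 0.7602, "FM": 0.7593, "DKN": 0.7281,
    "RippleNet": 0.8516, "KGNN-LS": 0.8077, "CKAN": 0.7809,
    "KGAN": 0.8595, "KFGAN": 0.8564,
    "PGDB": 0.8707, "PGDB-s": 0.8683, "PGDB-g": 0.8678,
}


def relative_gain(model, baseline, table=TABLE3_AUC):
    """Relative AUC improvement of `model` over `baseline`, in percent."""
    return 100.0 * (table[model] - table[baseline]) / table[baseline]


# Strongest baseline = highest AUC among the non-PGDB models.
baselines = [m for m in TABLE3_AUC if not m.startswith("PGDB")]
best = max(baselines, key=TABLE3_AUC.get)
```

With these values, the strongest baseline is KGAN, and PGDB’s AUC is about 1.30% higher than KGAN’s and about 1.67% higher than KFGAN’s.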

Compared with existing state-of-the-art models such as KFGAN [48], PGDB achieves better experimental results. PGDB is an end-to-end knowledge-aware hybrid model for course recommendation. It multiplies the head entity by the relation path, applies a multi-head attention mechanism, normalizes the result with the softmax function, and multiplies it by the current tail entity. Finally, it concatenates the representations from each hop, improving the representation learning of both learners and courses. In addition, the model combines the strengths of graph neural networks and bidirectional long short-term memory networks, integrating graph-based and temporal modeling. This allows it to better capture long-term dependencies in graph-structured data, making it better suited to course recommendation. Table 4 compares PGDB and KFGAN across several evaluation metrics. Except for precision, where PGDB’s results are very close to those of KFGAN, PGDB outperforms KFGAN on all other metrics.

Table 4.

Comparison of the performance of the PGDB model and the KFGAN model across multiple evaluation metrics.

5.5. Hyperparameter Influence

In this section, we will discuss the impact of hyperparameters on model performance.

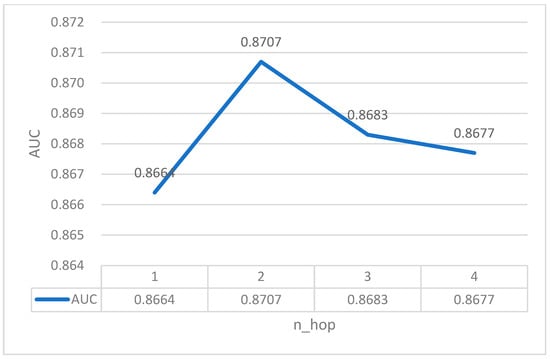

5.5.1. Number of Embedding Layers

Figure 2 shows how the number of propagation layers in PGDB affects the AUC for course recommendation. As the number of layers increases, the AUC first rises and then declines, with the best performance at 2 layers. With more hops, the propagation, like ripples on water, reaches more entities and captures more user preference information, but it also introduces additional noise, which ultimately degrades performance.

Figure 2.

Depth of the course graph layer n_hop.

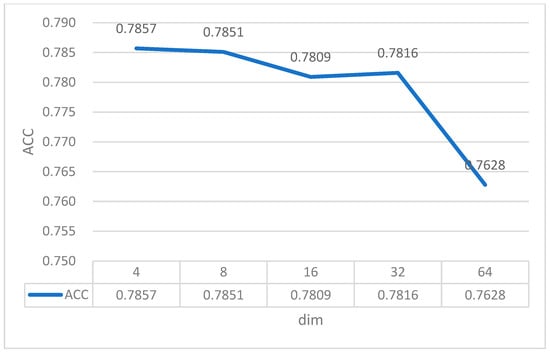

5.5.2. Embedding Dimension Dim

In this section, we examine how the embedding dimension of entities and relations affects model performance. Figure 3 shows that performance declines as the dimension increases: the decrease is gradual at intermediate dimensions, but performance deteriorates sharply as the dimension keeps rising, possibly because excessively large dimensions lead to overfitting. An appropriate embedding dimension is therefore crucial for encoding the features of entities and relations. The MOOC dataset contains some feature redundancy, and the model tends to perform best at relatively low dimensionality. In general, excessively high dimensionality invites overfitting, whereas too low a dimensionality may prevent the model from capturing sufficient patterns. That the best result occurs at four dimensions suggests that PGDB generalizes well with few features, which may be due to effective noise removal or to strong correlations among the dataset’s features, allowing a low-dimensional representation to perform best.

Figure 3.

Embedding dimension dim.

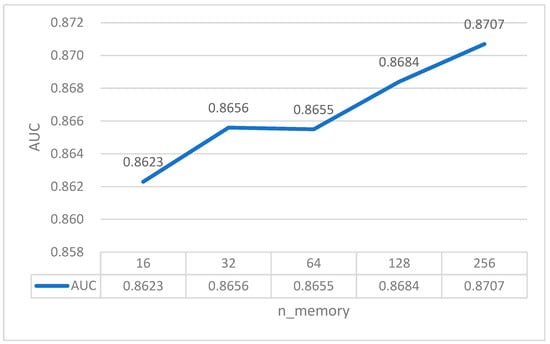

5.5.3. Rejoin Propagation Triplet Sizes

Figure 4 shows that performance keeps improving as the triple set size increases. However, the limits of our experimental hardware prevented us from increasing the triple size further, and training time also grows noticeably as more triples are added. Under the current hardware conditions, a triple size of 256 yields the best performance among the sizes tested, suggesting that this size captures the most relevant connection features of the entities, encoding user characteristics well while limiting the impact of noise.
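Because each hop’s triple set has a fixed size (256 here), hops with more or fewer triples must be sampled to that size. The text does not spell out how; a minimal sketch, assuming the uniform sampling mentioned in the Conclusions and, to our knowledge, the rule used in public RippleNet-style implementations: sample without replacement when the hop has enough triples, and with replacement otherwise. `sample_fixed_size` is a hypothetical helper.

```python
import random


def sample_fixed_size(triples, size, rng):
    """Uniformly sample exactly `size` triples for one hop.

    Without replacement when the hop has at least `size` triples,
    otherwise with replacement so that small hops are padded to `size`.
    """
    if not triples:
        raise ValueError("cannot sample from an empty hop")
    if len(triples) >= size:
        return rng.sample(triples, size)
    return [rng.choice(triples) for _ in range(size)]
```

Padding small hops with repeated triples keeps tensor shapes fixed across learners, at the cost of over-weighting entities with few neighbors, which is the limitation the Conclusions raise about uniform sampling.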

Figure 4.

Rejoin propagation triplet sizes.

In this section, a comparison with baseline models demonstrated the strong competitiveness of PGDB and its variants in click-through rate prediction. We also explored the impact of various hyperparameters on model performance and identified the best hyperparameter combination through extensive experiments. Each hyperparameter significantly influences the model, but we do not discuss them all individually. As a specialized recommendation scenario, course recommendation inherits advanced techniques from the broader recommendation field. However, it faces specific challenges: data are sparser than in typical recommendation scenarios, and courses have sequential relationships. In addition, learner preferences are influenced by learners’ backgrounds, such as their majors, which may lead to heightened interest in a particular series of courses.

6. Discussion

The experimental results show that knowledge-graph-based course recommendation methods have clear advantages over traditional models such as logistic regression [54]. This is because knowledge points are inherently connected and have predecessor and successor relationships, so some knowledge must be acquired in sequence; for example, a programming foundation must be established before learning data structures. Without effectively using this distinctive auxiliary information, it is difficult for course recommendation to achieve good results. Compared with other knowledge-graph-based recommendation systems, PGDB and its variants show certain advantages, particularly the models that incorporate bidirectional long short-term memory networks. We attribute this mainly to two factors: the preference-aware diffusion attention mechanism effectively distinguishes learners’ differing interests during knowledge propagation, and time-series modeling efficiently exploits the sequential relationships between learners and courses. We expect that, as time-series modeling techniques continue to develop, course recommendation methods that incorporate them will achieve even better results.

This study presents a new approach to further improving course recommendation. Course recommendation shares some similarities with news recommendation and other recommendation tasks, since all of them can use knowledge graphs as auxiliary information to improve performance, and users have preferences for specific news articles just as they do for specific courses. However, news articles carry little explicit information about the prior knowledge they require, whereas courses are often related to learners’ majors or potential areas of interest. Compared with news recommendation, the likelihood of preference drift among learners in course recommendation is relatively small. Preference-diffusion-based recommendation also has limitations. For new learners, or on platforms without existing data, course recommendation may struggle to perform well. In addition, the knowledge graph sampling strategy discards some knowledge in order to limit the introduction of noise.

By analyzing the reasons behind the experimental results, the main advantages of the model, its potential issues, and its performance relative to the latest models, this study clarifies the value of course recommendation. It supports the development of intelligent tutoring systems by leveraging the advantages of existing recommendation methods while incorporating the distinctive features of course recommendation. The proposed preference-aware knowledge graph diffusion and time-series modeling for MOOC recommendation offer new ideas and methods for course recommendation, online education, and personalized education.

7. Conclusions

This paper proposes PGDB, an end-to-end course recommendation model that seamlessly integrates bidirectional long short-term memory networks with knowledge graphs. PGDB performs course recommendation through four components: a course knowledge graph diffusion module, a preference-aware diffusion attention mechanism, a temporal sequence modeling module, and a prediction module. This approach alleviates the sparsity issue in course recommendation, better captures the sequential relationships between the courses students take, and more thoroughly explores learners’ varying interests in different courses during knowledge propagation. Experimental results show that PGDB has a clear advantage over the latest baseline models in CTR prediction. Future research could focus on several directions. First, this study employs uniform neighbor sampling, which applies the same sampling strategy to nodes with many neighbors and to those with very few, potentially hindering the extraction of representative features from neighbor nodes; a hierarchical sampling strategy might improve node feature representations. Second, our data processing relied on the learner–course interaction matrix. However, the lack of effective supervision in online learning has led to high dropout rates, so future work could incorporate dropout data to better understand learners’ experiences with course learning.

Author Contributions

C.D.: Writing—original draft, review, and editing, Conceptualization, Funding acquisition; W.Z.: Writing—original draft; Q.C.: Formal analysis, Methodology, Validation, Writing—original draft; Y.P.: review and editing, Project administration; B.H.: Supervision; Q.H.: review and editing, Project administration. All authors have read and agreed to the published version of the manuscript.

Funding

This work was financially supported by the National Natural Science Foundation of China (62177024, 62207027), the Ministry of Education of the People’s Republic of China (20YJC880024), Zhejiang Province educational science and planning research project (2023SCG369), University-Industry Collaborative Education Program (220906424035704), Jinhua Social Sciences Association Project (YB2025112). No additional external funding was received for this study.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Huang, C.Q.; Huang, Q.H.; Huang, X.; Wang, H.; Li, M.; Lin, K.J.; Chang, Y. XKT: Towards explainable knowledge tracing model with cognitive learning theories for questions of multiple knowledge concepts. IEEE Trans. Knowl. Data Eng. 2024, 36, 7308–7325.

- Huang, C.; Wei, H.; Huang, Q.; Jiang, F.; Han, Z.; Huang, X. Learning consistent representations with temporal and causal enhancement for knowledge tracing. Expert Syst. Appl. 2024, 245, 123128.

- Hu, Q.; Han, Z.; Lin, X.; Huang, Q.; Zhang, X. Learning peer recommendation using attention-driven CNN with interaction tripartite graph. Inf. Sci. 2019, 479, 231–249.

- Lin, H.; Chen, P. Factor-level Feature and Attribute Preference Joint Learning Based Session Recommendation. J. Guangdong Univ. Technol. 2024, 41, 91–100.

- Huang, Q.; Zeng, Y. Improving academic performance predictions with dual graph neural networks. Complex Intell. Syst. 2024, 10, 3557–3575.

- Lin, Y.; Feng, S.; Lin, F.; Zeng, W.; Liu, Y.; Wu, P. Adaptive course recommendation in MOOCs. Knowl.-Based Syst. 2021, 224, 107085.

- Huang, Q.; Huang, C.; Huang, J.; Hamido, F. Adaptive resource prefetching with spatial–temporal and topic information for educational cloud storage systems. Knowl.-Based Syst. 2019, 181, 104791.

- Bitzer, D.L. The PLATO project at the university of Illinois. Eng. Educ. 1986, 7, 175–180.

- Duan, M.; Li, K.; Zhang, W.; Qin, J.; Xiao, B. Attacking click-through rate predictors via generating realistic fake samples. ACM Trans. Knowl. Discov. Data 2024, 18, 1–24.

- Hu, X.; Long, Z.; Li, M. A Multi-objective Recommendation Algorithm Based on User Stratification. J. Guangdong Univ. Technol. 2023, 40, 10–18.

- Wu, Y.; Chen, P. A Music Recommendation Model Based on Users’ Long and Short Term Preferences and Music Emotional Attention. J. Guangdong Univ. Technol. 2023, 40, 37–44.

- Liu, H.; Lin, W.; Wen Chen, Y.; Yi, M. A MABM-based Model for Identifying Consumers’ Sentiment Polarity―Taking Movie Reviews as an Example. J. Guangdong Univ. Technol. 2022, 39, 1–9.

- Chen, Z.; Gan, W.; Wu, J.; Hu, K.; Lin, H. Data scarcity in recommendation systems: A survey. ACM Trans. Recomm. Syst. 2024, accepted.

- Zheng, G.; Zhang, F.; Zheng, Z.; Xiang, Y.; Yuan, N.J.; Xie, X.; Li, Z. DRN: A deep reinforcement learning framework for news recommendation. In Proceedings of the 2018 World Wide Web Conference, Lyon, France, 23–27 April 2018; pp. 167–176.

- Herlocker, J.L.; Konstan, J.A.; Borchers, A.; Riedl, J. An algorithmic framework for performing collaborative filtering. SIGIR Forum 2017, 51, 227–234.

- Resnick, P.; Iacovou, N.; Suchak, M.; Bergstrom, P.; Riedl, J. GroupLens: An Open Architecture for Collaborative Filtering of Netnews. In Proceedings of the ACM Conference on Computer Supported Cooperative Work, Chapel Hill, NC, USA, 22–26 October 1994.

- He, X.; Liao, L.; Zhang, H.; Nie, L.; Hu, X.; Chua, T.-S. Neural Collaborative Filtering. In Proceedings of the 26th International Conference on World Wide Web, Perth, WA, Australia, 3–7 April 2017; pp. 173–182.

- He, X.; Chua, T.-S. Neural Factorization Machines for Sparse Predictive Analytics. In Proceedings of the 40th International ACM SIGIR Conference on Research and Development in Information Retrieval, Tokyo, Japan, 7–11 August 2017; pp. 355–364.

- Hidasi, B.; Karatzoglou, A.; Baltrunas, L.; Tikk, D. Session-based recommendations with recurrent neural networks. arXiv 2015, arXiv:1511.06939.

- Zhou, G.; Mou, N.; Fan, Y.; Pi, Q.; Bian, W.; Zhou, C.; Gai, K. Deep interest evolution network for click-through rate prediction. In Proceedings of the 33rd AAAI Conference on Artificial Intelligence and Thirty-First Innovative Applications of Artificial Intelligence Conference and Ninth AAAI Symposium on Educational Advances in Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; p. 729.

- Duan, C.; Sun, J.; Li, K.; Li, Q. A dual-attention autoencoder network for efficient recommendation system. Electronics 2021, 10, 1581.

- Zhang, F.; Yuan, N.J.; Lian, D.; Xie, X.; Ma, W. Collaborative knowledge base embedding for recommender systems. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 353–362.

- Zhao, N.; Long, Z.; Wang, J.; Zhao, Z.D. AGRE: A knowledge graph recommendation algorithm based on multiple paths embeddings RNN encoder. Knowl.-Based Syst. 2022, 259, 110078.

- Zhao, Y.; Ma, W.; Jiang, Y.; Zhan, J. A MOOCs Recommender System Based on User’s Knowledge Background. In Knowledge Science, Engineering and Management; Springer International Publishing: Cham, Switzerland, 2021.

- Gao, C.; Zheng, Y.; Li, N.; Li, Y.; Qin, Y.; Piao, J.; Li, Y. A survey of graph neural networks for recommender systems: Challenges, methods, and directions. ACM Trans. Recomm. Syst. 2023, 1, 3.

- Apaza, R.G.; Cervantes, E.V.V.; Quispe, L.V.C.; Luna, J.E.O. Online courses recommendation based on LDA. In Proceedings of the Symposium on Information Management and Big Data, Lima, Peru, 14–16 August 2014.

- Li, X.; Li, X.; Tang, J.; Zhang, Y.; Chen, H. Improving deep item-based collaborative filtering with bayesian personalized ranking for MOOC course recommendation. In Knowledge Science, Engineering and Management, Proceedings of the 13th International Conference, KSEM 2020, Hangzhou, China, 28–30 August 2020; Proceedings, Part I; Springer: Berlin/Heidelberg, Germany, 2020; pp. 247–258.

- Xu, W.; Zhou, Y. Course video recommendation with multimodal information in online learning platforms: A deep learning framework. Br. J. Educ. Technol. 2020, 51, 1734–1747.

- Tian, X.; Liu, F. Capacity tracing-enhanced course recommendation in MOOCs. IEEE Trans. Learn. Technol. 2021, 14, 313–321.

- Zhu, Y.; Lu, H.; Qiu, P.; Shi, K.; Chambua, J.; Niu, Z. Heterogeneous teaching evaluation network based offline course recommendation with graph learning and tensor factorization. Neurocomputing 2020, 415, 84–95.

- Yang, S.; Cai, X. Bilateral knowledge graph enhanced online course recommendation. Inf. Syst. 2022, 107, 102000.

- Wang, X.; Ma, W.; Guo, L.; Jiang, H.; Liu, F.; Xu, C. HGNN: Hyperedge-based graph neural network for MOOC course recommendation. Inf. Process. Manag. 2022, 59, 18.

- Zhang, H.; Shen, X.; Yi, B.; Wang, W.; Feng, Y. KGAN: Knowledge grouping aggregation network for course recommendation in MOOCs. Expert Syst. Appl. 2023, 211, 9.

- Deng, W.; Zhu, P.; Chen, H.; Yuan, T.; Wu, J. Knowledge-aware sequence modelling with deep learning for online course recommendation. Inf. Process. Manag. 2023, 60, 15.

- Zhu, Y.Q.; Shi, L.; Li, L. Knowledge Mesh Recommendation Method Based on Fuzzy Rules for Course Teaching-Learning. J. Jiangsu Univ. 2022, 43, 201–208.

- Lin, Y.; Liu, Z.; Sun, M.; Liu, Y.; Zhu, X. Learning entity and relation embeddings for knowledge graph completion. In Proceedings of the Twenty-Ninth AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–29 January 2015; pp. 2181–2187.

- Wang, H.; Zhang, F.; Xie, X.; Guo, M. DKN: Deep knowledge-aware network for news recommendation. In Proceedings of the 2018 World Wide Web Conference, Lyon, France, 23–27 April 2018.

- Ji, G.; He, S.; Xu, L.; Liu, K.; Zhao, J. Knowledge graph embedding via dynamic mapping matrix. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing, Beijing, China, 26–31 July 2015.

- Sun, Z.; Yang, J.; Zhang, J.; Bozzon, A.; Huang, L.K.; Xu, C. Recurrent knowledge graph embedding for effective recommendation. In Proceedings of the 12th ACM Conference on Recommender Systems, Vancouver, BC, Canada, 2–7 October 2018; pp. 297–305.

- Wang, X.; Wang, D.; Xu, C.; He, X.; Cao, Y.; Chua, T.S. Explainable reasoning over knowledge graphs for recommendation. In Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence and Thirty-First Innovative Applications of Artificial Intelligence Conference and Ninth AAAI Symposium on Educational Advances in Artificial Intelligence, Honolulu, HI, USA, 27–31 January 2019; p. 653. [Google Scholar]

- Meng, F.R.; Wang, X. EWJ Multi-modal trajectory prediction of vehicles based on GCN-CS-LSTM. J. Jiangsu Univ. (Nat. Sci. Ed.) 2024, 45, 506–512. [Google Scholar]

- Zhang, X.; Xiao, G.; Duan, M.; Chen, Y.; Li, K. PH-CF: A phased hybrid algorithm for accelerating subgraph matching based on CPU-FPGA heterogeneous platform. IEEE Trans. Ind. Inform. 2022, 19, 8362–8373. [Google Scholar] [CrossRef]

- Wang, H.; Zhang, F.; Wang, J.; Zhao, M.; Li, W.; Xie, X. RippleNet: Propagating user preferences on the knowledge graph for recommender systems. In Proceedings of the 27th ACM International Conference on Information and Knowledge Management, Turin, Italy, 22–26 October 2018; pp. 417–426. [Google Scholar]

- Wang, H.; Zhao, M.; Xie, X.; Li, W.; Guo, M. Knowledge graph convolutional networks for recommender systems. In Proceedings of the World Wide Web Conference, San Francisco, CA, USA, 13–17 May 2019; pp. 3307–3313. [Google Scholar]

- Wang, X.; He, X.; Cao, Y.; Liu, M.; Chua, T.S. KGAT: Knowledge graph attention network for recommendation. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 950–958. [Google Scholar]

- Zou, D.; Wei, W.; Wang, Z.; Mao, X.L.; Zhu, F.; Fang, R.; Chen, D. Improving knowledge-aware recommendation with multi-level interactive contrastive learning. In Proceedings of the 31st ACM International Conference on Information & Knowledge Management, Atlanta, GA, USA, 17–21 October 2022; pp. 2817–2826. [Google Scholar]

- Yang, L.; Wang, S.; Tao, Y.; Sun, J.; Liu, X.; Yu, P.S.; Wang, T. DGRec: Graph neural network for recommendation with diversified embedding generation. In Proceedings of the Sixteenth ACM International Conference on Web Search and Data Mining (WSDM ’23), Singapore, 27 February–3 March 2023; Association for Computing Machinery: New York, NY, USA, 2023; pp. 661–669. [Google Scholar]

- Wang, W.; Shen, X.; Yi, B.; Zhang, H.; Liu, J.; Dai, C. Knowledge-aware fine-grained attention networks with refined knowledge graph embedding for personalized recommendation. Expert Syst. Appl. 2024, 249, 12810. [Google Scholar] [CrossRef]

- Duan, M.; Zheng, X.; Pi, H.; Ding, Y.; Tang, Z. A Completing Missing Pedestrian Trajectories Method Driven by Prior-Posterior Knowledge and Interactive Information. IEEE Trans. Intell. Transp. Syst. 2025, 26, 7969–7979. [Google Scholar] [CrossRef]

- Schneider, S.H.; Dickinson, R.E. Climate modeling. Rev. Geophys. 1974, 12, 447–493. [Google Scholar] [CrossRef]

- Xu, H.; Chen, W.; Zhao, N.; Li, Z.; Bu, J.; Li, Z.; Qiao, H. Unsupervised anomaly detection via variational auto-encoder for seasonal kpis in web applications. In Proceedings of the 2018 World Wide Web Conference, Lyon, France, 23–27 April 2018; pp. 187–196. [Google Scholar]

- Huang, Q.; Chen, J. Enhancing academic performance prediction with temporal graph networks for massive open online courses. J. Big Data 2024, 11, 52. [Google Scholar] [CrossRef]

- Yu, J.; Luo, G.; Xiao, T.; Zhong, Q.; Wang, Y.; Feng, W.; Tang, J. MOOCCube: A large-scale data repository for NLP applications in MOOCs. In Proceedings of the Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020. [Google Scholar]

- Seber, G.A.; Lee, A.J. Linear Regression Analysis; John Wiley & Sons: Hoboken, NJ, USA, 2012. [Google Scholar]

- Rendle, S.; Freudenthaler, C.; Gantner, Z.; Schmidt-Thieme, L. BPR: Bayesian personalized ranking from implicit feedback. In Proceedings of the Twenty-Fifth Conference on Uncertainty in Artificial Intelligence, Montreal, QC, Canada, 18–21 June 2009; pp. 452–461. [Google Scholar]

- Rendle, S. Factorization machines with libFM. ACM Trans. Intell. Syst. Technol. 2012, 3, 57. [Google Scholar] [CrossRef]

- Wang, H.; Zhang, F.; Zhang, M.; Leskovec, J.; Zhao, M.; Li, W.; Wang, Z. Knowledge-aware Graph Neural Networks with Label Smoothness Regularization for Recommender Systems. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 968–977. [Google Scholar]

- Wang, Z.; Lin, G.; Tan, H.; Chen, Q.; Liu, X. CKAN: Collaborative knowledge-aware attentive network for recommender systems. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual Event, 25–30 July 2020; pp. 219–228. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).