Abstract

A widely used repository of violent death records is the U.S. Centers for Disease Control and Prevention's National Violent Death Reporting System (NVDRS). The NVDRS includes narrative data, which researchers frequently use to go beyond its structured variables. Prior work has shown that NVDRS narratives vary in length depending on decedent and incident characteristics, including race/ethnicity. Whether these length differences reflect differences in narrative information potential is unclear. We use the 2003–2021 NVDRS to investigate narrative length and complexity measures among 300,323 suicides varying in decedent and incident characteristics. To do so, we operationalized narrative complexity using three manifest measures: word count, sentence count, and dependency tree depth. We then employed regression methods to predict word counts and narrative complexity scores from decedent and incident characteristics. Both were consistently lower for Black non-Hispanic decedents than for white non-Hispanic decedents. Although narrative complexity is just one aspect of narrative information potential, these findings suggest that the information in NVDRS narratives is more limited for some racial/ethnic minorities. Future studies, possibly leveraging large language models, are needed to develop robust measures to aid in determining whether narratives in the NVDRS have achieved their stated goal of fully describing the circumstances of suicide.

1. Introduction

Violent death is a major public health concern in the United States, where suicide ranks 10th among the leading causes of death [], and homicide is the third leading cause of death among young adults []. In the hopes of identifying approaches that might reduce these risks, the Centers for Disease Control and Prevention created the National Violent Death Reporting System (NVDRS) in 2003 [,]. Its purpose is to serve as a national surveillance system integrating data collected via state-based surveillance systems. These systems draw on death certificates, coroner/medical examiner and law enforcement reports, as well as other information sources to generate case records. The fundamental goal is to identify both causes and potentially remediable factors that could be leveraged to reduce these deaths. The NVDRS is also unique within the national system of health surveillance data, both in how it is generated and in the structure of its content. Across the United States and its Territories, state public health workers (PHWs) summarize characteristics of each violent death by completing closed-ended questions about the victim’s history and the circumstances of the incident []. PHWs also generate two brief text summaries for the purpose of providing contextual information on the “who, what, when, where, and why” of the incident []. To the extent that these narratives provide an inadequate understanding of a death, their original purpose might not be achieved []. Furthermore, if the amount of information about a death systematically differs by characteristics of decedents, such as race or gender, it could undermine the equity of intervention solutions generated from narrative data.

Prior work using the NVDRS has found that decedent features and some characteristics of the incidents are predictive of total word counts in both the coroner/medical examiner and law enforcement narratives [,,,]. For example, narratives of Asian and Black racial and ethnic minority suicide decedents contain, on average, fewer words than those of white decedents, whereas narratives of American Indian/Alaska Native decedents contain more []. In this context, word count has been interpreted as a proxy for differences in information potential [], an interpretation consistent with existing evidence that more complex stories, when retold, result in longer narratives than less complex stories []. At the same time, studies in other contexts have shown that the relationship between word count and narrative quality, a similar but not identical construct to information potential, is not necessarily linear [,]. Furthermore, none of the NVDRS studies have specifically examined the assumption that deaths with more complex circumstances have narratives of greater length or complexity.

In this study, we explore three aspects of narrative information potential in the NVDRS. First, we investigate whether we can enhance the word count measure and its presumed indexing of information potential with additional measures. To achieve this, we use narrative word counts along with two additional metrics: sentence counts and maximum dependency tree depth. In Natural Language Processing (NLP), dependency tree depth is a widely used method for quantifying linguistic complexity [,] and has been applied in various social science contexts to capture variations in text and speech [,,]. This measure provides a direct assessment of syntactic structures. Both word and sentence counts have the advantage of being easily obtainable as well as being an indirect measure of syntactic complexity []. Our goal in combining the three measures into a single index is to simultaneously account for both information potential and syntactic complexity in assessing narrative complexity [,]. Second, we aim to determine whether word count and our new measure of narrative complexity can serve as proxies for narrative information potential. To do so, we utilize two existing indicators of informational need in the NVDRS: (1) the number of crises recorded that occurred close to the time of death, and (2) the count of pharmaceutical and illicit substances recorded in the record. Both indicators intuitively suggest that a comprehensive description of the death circumstances will require more detailed information as the counts increase []. Finally, we evaluate whether word count and narrative complexity exhibit similar demographic and incident differences as documented elsewhere [,,,], even after adjusting for our two indicators of the potential complexity of death circumstances.

2. Materials & Methods

2.1. Data Source

We use data from the 2003–2021 National Violent Death Reporting System (NVDRS), a national registry of violent death incidents, which is managed under the auspices of the Centers for Disease Control. Information about each violent death is coded by extensively trained state public health workers (PHWs). PHWs produce two narratives. One is derived from coroner/medical examiner (CME) reports and the other from law enforcement (LE) reports. Initially, only a few states participated in the NVDRS, but as of 2018, all 50 states, the District of Columbia, and some U.S. territories provided reports to the NVDRS. As of 2021, the NVDRS contained descriptions of more than half a million violent deaths (N = 571,428). From these, we excluded 270,171 deaths, including those from U.S. Territories (n = 4603), individuals under 12 years of age at the time of death (n = 10,926), those who did not die in incidents classified as suicide alone (n = 215,173), and/or where circumstances were unknown (n = 39,469) as many of these latter cases lacked informative text data. We then cleaned the narratives to correct spelling errors and address formatting issues. Finally, we excluded 934 deaths where there was neither a CME nor an LE narrative of 20 words or more, as very brief narratives in the NVDRS provide limited information explicitly about decedents. This resulted in a final sample of 300,323 suicide deaths, of which 289,640 included coroner/medical examiner narratives, and 238,563 law enforcement narratives.
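The sample flow described above can be checked with simple arithmetic. The sketch below uses only the counts reported in this section; the variable names are illustrative. That the four listed exclusion counts sum exactly to the stated total suggests they are reported as mutually exclusive groups, although the text does not say so explicitly.

```python
# Sample-flow check using only the counts reported in Section 2.1.
total_deaths = 571_428

# Exclusion reasons and counts as reported in the text (keys are illustrative).
initial_exclusions = {
    "us_territories": 4_603,
    "under_12_years": 10_926,
    "not_suicide_alone": 215_173,
    "circumstances_unknown": 39_469,
}
# Deaths with neither a CME nor an LE narrative of 20 words or more.
short_narratives = 934

excluded = sum(initial_exclusions.values())
final_sample = total_deaths - excluded - short_narratives

print(excluded)      # 270171
print(final_sample)  # 300323
```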

2.2. Study Measures

Demographic and incident-related indicators. The NVDRS records decedents’ race/ethnicity, which we recoded into 6 categories (American Indian/Alaska Native non-Hispanic; Asian/Pacific Islander non-Hispanic; Black or African American non-Hispanic; Hispanic; Other or unknown race non-Hispanic; and White non-Hispanic). We then drew on several additional measures included in the NVDRS that possibly confound associations between race/ethnicity and narrative information potential [,]. These included: age recoded into five categories (12–17, 18–24, 25–44, 45–64, and 65+ years), sex (female, male), sexual orientation and/or gender minority status (lesbian, gay, bisexual, transgender, sexual minority; heterosexual; or unknown), military veteran (yes, no, or unknown), unhoused status (yes, no, or unknown), marital status (married/in relationship, divorced/separated, single/never married, widowed, unknown), and educational attainment recoded into three categories (high school or less, college or more, unknown).

We also included several previously coded incident characteristics as possible confounders []. These were type of weapon used for the suicide recoded into 5 categories (firearm; hanging, strangulation, suffocation; poisoning; other; unknown), location of death (home, hospice or long-term care (LTC) facility, hospital, other, or unknown), whether a toxicology report was available (yes, no), and if an autopsy was performed (yes, no, or unknown). Possible regional variation was accounted for by including census geographic divisions: East North Central (Illinois, Indiana, Michigan, Ohio, Wisconsin), East South Central (Alabama, Kentucky, Mississippi, Tennessee), Middle Atlantic (New Jersey, New York, Pennsylvania), Mountain (Arizona, Colorado, Idaho, Montana, Nevada, New Mexico, Utah, Wyoming), New England (Connecticut, Maine, Massachusetts, New Hampshire, Rhode Island, Vermont), Pacific (Alaska, California, Hawaii, Oregon, Washington), South Atlantic (Delaware, Florida, Georgia, Maryland, North Carolina, South Carolina, Virginia, West Virginia, Washington DC), West North Central (Iowa, Kansas, Minnesota, Missouri, Nebraska, North Dakota, South Dakota), and West South Central (Arkansas, Louisiana, Oklahoma, Texas). Temporal variation was captured by year of death, grouped into early (2003–2005), mid (2006–2016), and late (2017–2021) cohorts, reflecting the staggered entry of states into the NVDRS database.

Finally, two pre-coded variables were selected, based on face validity, to serve as indicators of informational need for a longer or more complex narrative. PHWs coded whether, proximal to the death, decedents experienced any of 9 possible crises (e.g., relationship problems, addiction) and recorded how many crises occurred. This was recoded into three categories: none, one, or more than one. A second variable recorded how many of 132 different licit and illicit substances were mentioned in the death record. This was recoded into three categories: no substances, one substance, and more than one substance.
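The three-level recoding applied to both indicators can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `recode_count` and the example values are hypothetical, and the same cut points (zero, one, more than one) are assumed for crises and substances as described above.

```python
def recode_count(n: int) -> str:
    """Collapse a non-negative count into the three categories described above."""
    if n < 0:
        raise ValueError("count must be non-negative")
    if n == 0:
        return "none"
    if n == 1:
        return "one"
    return "more than one"


# Hypothetical crisis counts for five death records.
crisis_counts = [0, 1, 3, 2, 0]
print([recode_count(n) for n in crisis_counts])
# ['none', 'one', 'more than one', 'more than one', 'none']
```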

Narrative count measures. For each narrative, both the total number of words and the number of sentences contained within the narrative text were determined. For word count, the text was split into individual words using white spaces as demarcation. This preserved words that contained hyphens and apostrophes. For sentence count, the text was split into individual sentences using sentence-ending punctuation marks (e.g., “.”, “!”, “?”).
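A minimal sketch of these two counts, assuming plain whitespace tokenization and a simple punctuation-based sentence splitter. The function names and the example narrative are hypothetical, and the exact splitting rules used in the study may differ (for instance, in how abbreviations such as "Dr." are handled, which this sketch would count as sentence boundaries).

```python
import re


def word_count(text: str) -> int:
    # str.split() with no argument splits on runs of whitespace, so
    # "self-inflicted" and "victim's" each count as a single word.
    return len(text.split())


def sentence_count(text: str) -> int:
    # Split at whitespace that follows ".", "!" or "?"; drop empty pieces.
    pieces = re.split(r"(?<=[.!?])\s+", text.strip())
    return sum(1 for piece in pieces if piece)


# Hypothetical narrative text for illustration only.
example = (
    "The victim's brother found him at home with a self-inflicted wound. "
    "He called 911! Was a note left?"
)
print(word_count(example))      # 18
print(sentence_count(example))  # 3
```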

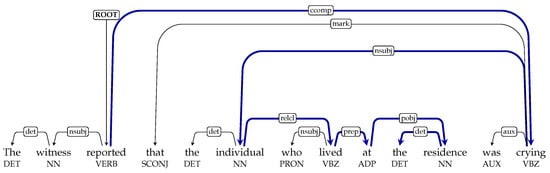

Estimates of dependency tree depth. To capture syntactic complexity, dependency parsing was applied to each narrative [,,]. A dependency parse represents a sentence’s grammatical structure as a tree in which each word is a node, and edges connect words to their heads. For example, in the phrase “quickly run,” “run” functions as the head, while “quickly” is attached as its dependent. The depth of a word is defined as the number of edges in the path from that word to the root of the tree, and the maximum depth of a sentence corresponds to the greatest such value among its words. As shown in Figure 1, the dependency tree of the sentence represents the sentence structure and the relationships between words. We use the tree’s depth (the longest path from the root to a leaf) to quantify the sentence’s syntactic complexity. Extending this to a narrative N consisting of multiple sentences, the narrative’s maximum depth is defined as

$$\mathrm{MaxDepth}(N) = \max_{s \in N} \; \max_{w \in s} \; \mathrm{depth}(w),$$

where s ranges over the sentences of N and depth(w) is the depth of word w in the dependency tree of s.

Figure 1.

Example of calculating the tree depth of “The witness reported that the individual who lived at the residence was crying”. For this sentence, the max depth is 6. Tracing the arrows, starting from the root word “reported”, each arrow indicates one additional level of depth. The black arrows indicate general dependency relations, while the blue arrows trace the longest dependency path used to compute the maximum depth of 6.

This measure of narrative-wise maximum dependency depth captures the greatest level of syntactic embedding within a narrative, reflecting its most complex grammatical construction. In our implementation, we parsed sentences in the narratives using the dependency parser in the spaCy library [].
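The depth computation can be sketched without a parser by representing each sentence as a list of head indices. The head indices below are hand-annotated for the Figure 1 sentence and are an assumption chosen to be consistent with the maximum depth of 6 stated in the caption; they are not output from the study's pipeline. The root is encoded as pointing to itself, which is the convention spaCy uses for `token.head`. With spaCy, such a list could plausibly be built per sentence as `[tok.head.i - sent.start for tok in sent]`, though the specific model and settings used in the study are not stated here.

```python
from typing import List


def token_depths(heads: List[int]) -> List[int]:
    """Depth of each token: number of edges from the token up to the root.

    heads[i] is the index of token i's head; the root points to itself.
    """
    depths = []
    for i in range(len(heads)):
        depth, node = 0, i
        while heads[node] != node:
            node = heads[node]
            depth += 1
            if depth > len(heads):
                raise ValueError("cycle detected in head indices")
        depths.append(depth)
    return depths


def sentence_max_depth(heads: List[int]) -> int:
    return max(token_depths(heads), default=0)


def narrative_max_depth(sentences: List[List[int]]) -> int:
    # Narrative-level measure: the maximum over sentence-level maximum depths.
    return max((sentence_max_depth(h) for h in sentences), default=0)


# Figure 1 sentence with hand-annotated (assumed) head indices.
tokens = ["The", "witness", "reported", "that", "the", "individual", "who",
          "lived", "at", "the", "residence", "was", "crying"]
heads = [1, 2, 2, 12, 5, 12, 7, 5, 7, 10, 8, 12, 2]

print(sentence_max_depth(heads))              # 6
print(narrative_max_depth([heads, [1, 1]]))   # 6 (second sentence has depth 1)
```

In this annotation the deepest token is the second "the", reached via reported → crying → individual → lived → at → residence → the.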

2.3. Data Analysis

We first addressed missing data among structured variables. Fortunately, this was rare with only a few decedents missing their age (n = 20); single imputation was used to resolve these cases via Stata 19 []. Next, we used R (4.1.1) [] to estimate pairwise Pearson correlations among measures of word count, sentence count, and dependency tree depth within both the CME and LE narratives. We then conducted a principal axis factor (PAF) analysis on the CME and LE measures independently after standardizing the measures to z-scores. As anticipated, this revealed that a single latent factor was needed to represent the covariance structure of the data for both narrative types. Narrative complexity scores were then calculated from PAF factor scores. In a fourth analytic step, we evaluated evidence for treating the four measures (word count, sentence count, dependency tree depth, narrative complexity scores) as proxies for information potential in both the CME and LE narratives, respectively, by calculating Spearman correlations between these measures and the recoded counts for crises and substances in the death record. Fifth, we estimated regression models to predict word count and narrative complexity scores for both narratives independently. For both models, we adjusted for number of crises and number of substances so that results offer insight into patterns of information potential beyond what is simply explained by these two variables, as other factors (e.g., number of involved persons or locations) may also increase word count or narrative complexity scores. In conducting these analyses, we carefully evaluated potential violations of model assumptions and made adjustments as necessary. 
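The standardization and correlation steps can be illustrated in a few lines of standard-library Python. This is a sketch only: the study used R for these analyses, the function names and toy values are hypothetical, and the principal axis factoring step itself is not shown because it would require a dedicated statistics package.

```python
import math
from typing import List, Sequence


def z_scores(values: Sequence[float]) -> List[float]:
    """Standardize values to mean 0 and sample standard deviation 1."""
    n = len(values)
    mean = sum(values) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in values) / (n - 1))
    return [(v - mean) / sd for v in values]


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation as the averaged product of z-scores."""
    zx, zy = z_scores(x), z_scores(y)
    return sum(a * b for a, b in zip(zx, zy)) / (len(x) - 1)


# Toy values for five hypothetical narratives (not study data).
word_counts = [50, 120, 200, 90, 310]
sentence_counts = [4, 9, 14, 7, 22]
print(round(pearson_r(word_counts, sentence_counts), 3))
```

Because the correlation is computed from z-scores, it is unchanged by the units of either measure, which is one reason the measures were standardized before factoring.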
For word count, we used negative binomial regression methods consistent with its count structure to evaluate racial/ethnic differences in both CME and LE narrative word counts after controlling for potential confounding from decedent characteristics, incident characteristics, number of crises, and number of substances. For narrative complexity scores, we used linear regression, methods consistent with the scaling of these scores, to predict racial/ethnic differences in narrative complexity scores, while controlling for the same set of potential confounders. After checking for possible violations in model assumptions, we first trimmed complexity scores above the 99th percentile to the 99th percentile to limit outlier influence while preserving the distribution []. To ensure linear regression assumptions were met, the complexity scores were further transformed using the Box–Cox procedure []. For both models, robust standard errors were used to address potential heteroskedasticity and ensure the validity of significance estimates and confidence intervals.
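The trimming and Box–Cox steps can be sketched as below. Several details are assumptions rather than the authors' procedure: the percentile is computed by linear interpolation, the Box–Cox parameter `lam` is supplied by hand (in practice it is usually estimated by maximum likelihood, as `scipy.stats.boxcox` does), and because Box–Cox requires strictly positive input, factor scores are shifted by a constant first. The text does not say how negative scores were handled.

```python
import math
from typing import List, Sequence


def trim_upper(values: Sequence[float], pct: float = 99.0) -> List[float]:
    """Cap values above the pct-th percentile at that percentile."""
    ordered = sorted(values)
    pos = (len(ordered) - 1) * pct / 100.0
    lo, hi = math.floor(pos), math.ceil(pos)
    cap = ordered[lo] + (ordered[hi] - ordered[lo]) * (pos - lo)
    return [min(v, cap) for v in values]


def box_cox(values: Sequence[float], lam: float) -> List[float]:
    """Box-Cox transform of strictly positive values for a given lambda."""
    if any(v <= 0 for v in values):
        raise ValueError("Box-Cox requires strictly positive values")
    if lam == 0:
        return [math.log(v) for v in values]
    return [(v ** lam - 1.0) / lam for v in values]


# Hypothetical factor scores; the shift to positive values is an assumption.
scores = [-1.2, -0.4, 0.0, 0.3, 0.9, 6.5]
trimmed = trim_upper(scores, pct=99.0)
shift = 1.0 - min(trimmed)
transformed = box_cox([v + shift for v in trimmed], lam=0.5)
```

Capping rather than deleting extreme scores keeps every record in the model while limiting the leverage of a few very long narratives.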

We report prevalences of structured variables, means and ranges of narrative scores, Pearson or Spearman correlations as described above, odds ratios and their 95% confidence intervals from the negative binomial models, and regression estimates and their standard errors from the linear regression models. Statistical significance was evaluated at p < 0.05. This study was deemed exempt by the UCLA Office of Human Research Protection.

3. Results

3.1. Characteristics of the Sample

As shown in Table 1, suicide decedents in the NVDRS were predominantly White, non-Hispanic and male. Approximately half had attained a high school education or less. A sizeable minority were military veterans; few decedents were unhoused at the time of their death. With respect to incident characteristics, about half of suicides in the NVDRS during these years involved firearms, and slightly more than half occurred in the decedent’s residence. The majority of death records were accompanied by toxicology reports, with about half indicating that an autopsy report was available.

Table 1.

Characteristics of suicide decedents and death incidents in the National Violent Death Reporting System (NVDRS), 2003–2021.

Coroner/medical examiner narratives were more commonly present in death records than law enforcement narratives. Reflecting the nationwide reporting structure of the NVDRS, deaths occurred throughout the 9 census divisions, with nearly one-third of the deaths concentrated in the South Atlantic division.

3.2. Developing Measures of Narrative Complexity

On average, both CME and LE narratives were relatively brief, containing approximately 10 sentences and about 137.5 words per narrative (see Table 2). Maximum depth of the text in the narratives showed a wide range but averaged around 7 to 9 levels across both narrative types. All three individual measures (word count, sentence count, max depth) were significantly intercorrelated (all p’s < 0.001), most strongly word count with sentence count. For CME narratives, r = 0.930 for word count and sentence count, r = 0.443 for word count and max depth, and r = 0.328 for sentence count and max depth. For LE narratives, the corresponding values were r = 0.939, r = 0.447, and r = 0.342.

Table 2.

Summary statistics of total word count, sentence count, max depth, and complexity score of coroner/medical examiner and law enforcement narratives in suicides in the NVDRS.

3.3. Associations with Indicators of Informational Need

Approximately 30% of suicide deaths in both the CME and LE narratives involved one or more recent crises at the time of death (see Table 3). Nearly half also mentioned one or more licit or illicit substances. Both indicators were positively correlated with our measures of narrative complexity, consistent with the hypothesis that deaths involving more crises or types of substances would result in a need for more information to be recorded in the narratives.
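A Spearman correlation between a three-level indicator and a narrative measure can be sketched as follows. Because the recoded indicators take only three values, ties are common, so this sketch assigns average ranks to tied values and then computes a Pearson correlation on the ranks. The function names, the 0/1/2 coding, and the toy data are hypothetical, not study values.

```python
import math
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Ranks starting at 1, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman correlation: Pearson correlation of the average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / math.sqrt(var_x * var_y)


# Toy data: crises coded 0 = none, 1 = one, 2 = more than one.
crises = [0, 0, 1, 1, 2, 2]
words = [60, 85, 110, 95, 240, 180]
print(round(spearman_rho(crises, words), 3))
```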

Table 3.

Bivariate associations between measures of narrative complexity and informational need in decedents’ death records.

3.4. Predictors of Narrative Length

Compared to white non-Hispanic decedents, Black non-Hispanic decedents had lower odds of a longer narrative in both narrative types, as did Asian/Pacific Islander non-Hispanic and Hispanic decedents in the LE narrative, after adjusting for the effects of other decedent and incident characteristics, including the two measures of informational need (see Table 4). In contrast, Hispanic decedents in the CME narrative had greater odds of a longer narrative than white non-Hispanic decedents.

Table 4.

Partial results of negative binomial regression models predicting total word counts of coroner/medical examiner and law enforcement narratives in suicides in the NVDRS.

As might be anticipated, the two indicators of informational need, number of crises and substances, evidenced a relatively robust association with word count, with both demonstrating higher odds of longer narratives. Similarly, being female or younger, having more education, being coded for sexual orientation/gender identity status, being married/partnered, and having a known military veteran and/or housing status were all associated with higher odds of a longer narrative for both narrative types. Deaths due to firearm use and those that occurred in the home also had greater odds of longer narratives, as did incidents with unknown autopsy status, and those occurring in a later NVDRS cohort or in the Pacific census division. In the LE narrative, deaths with toxicology reports had lower odds of longer narratives.

3.5. Predictors of Narrative Complexity

Consistent with the findings for word counts, for both narrative types, being Black non-Hispanic, and for the LE narrative only being Asian/Pacific Islander non-Hispanic or Hispanic, was associated with a lower narrative complexity score as compared to being white non-Hispanic, after adjusting for confounding due to decedent and incident characteristics as well as the two measures of informational need (see Table 5). Furthermore, being Hispanic, as opposed to white non-Hispanic, was associated with higher narrative complexity scores in the CME narrative, after adjusting for the other variables.

Table 5.

Partial results of linear regression models predicting narrative complexity score in coroner/medical examiner and law enforcement narratives in suicides in the NVDRS.

As observed for word counts, higher narrative complexity scores were associated with reports of more crises and substances in the death record. They were also positively associated with being female, younger, more educated, and having a known military veteran and/or housing status. Being coded as heterosexual vs. unknown was negatively associated with CME narrative complexity scores but positively associated with LE narrative complexity scores. In contrast, being classified as a sexual orientation/gender minority vs. unknown status was positively linked to both CME and LE narrative complexity scores. Higher narrative complexity scores were also positively related to deaths resulting from firearms versus most other types, dying at home, having an unknown autopsy status, being in a later NVDRS cohort, and dying in the Pacific census division. Having a toxicology report was positively associated with CME narrative complexity but negatively linked to LE narrative complexity.

4. Discussion

Measuring the extent to which unstructured text data conveys information is an ongoing methodological challenge in general [], and especially in the surveillance of lethal violence [,,,,,]. Ideally, NVDRS narratives supply an informative answer to questions of “who, what, when, where, and why” that might surround deaths due to suicide []. To that end, word count offers one indication of narratives’ information potential []. Whether other measures, such as syntactic complexity, might add to this was the motivating factor for the current study. Hence, we chose to focus on three indices (total word count, total sentence count, and maximum narrative depth) and combined these successfully into a single narrative complexity score. All are thought to have their strengths in capturing the information potential arising from the array of topics included in the narratives, as well as the narratives’ varying levels of syntactic structure and semantic nuance [].

We began by investigating whether any of these measures are good indices of information potential. We found that they are. Without evaluating the actual content contained within the narratives, we demonstrated that deaths where decedents were experiencing multiple crises or were taking multiple licit and illicit pharmaceuticals at the time of death were typically described with longer and more complex narratives. That is, complicated stories required more informational content to convey them [].

We then returned to our other motivating question for this work: is the information potential of the NVDRS narratives shaped by decedents’ race/ethnicity? If so, this comes at a time when suicide risks are increasing for Black and Hispanic individuals [], creating an even greater need for insights drawn from the narratives to prevent these deaths. Previous research on the NVDRS narratives focusing on word count alone has found that narratives of Asian American and Black decedents are shorter than those of white non-Hispanic decedents after adjusting for multiple possible confounders (e.g., sex, age, marital status, educational attainment, military veteran and housing status, location of the death, presence of toxicology and autopsy reports, and year of death), but those of American Indian/Alaska Native decedents are longer []. To this list of potential confounders, we added more, including sexual orientation/gender identity, weapon used for suicide, and geographic region. We also included our two indicators of informational need (the numbers of crises and substances). Nevertheless, we still observed lower information potential in both narrative word count and narrative complexity scores for Black non-Hispanic decedents when compared to white non-Hispanic decedents. In addition, similar effects were observed for Asian American decedents in the LE narratives, though not in the CME narratives. For Hispanic decedents compared to white non-Hispanic decedents, we found evidence of greater CME narrative word count and narrative complexity scores, but the opposite for LE narratives. Additionally, we did not observe evidence of disadvantage for American Indian/Alaska Native decedents.

The persistent disadvantage for Asian American and Black decedents in narrative information potential seen here may be benignly linked to uncontrolled confounding in both previous studies and the current one. Some of these potential confounders include features that do not exist within the restricted NVDRS dataset, such as differences generated by the ways in which deaths are initially recorded. PHWs differ across states in terms of training and propensity to seek information from news and social media, which is allowed. State public health departments also vary in their funding. We note that among our strongest findings is the importance of geographic region in shaping the information potential in the narratives. This hints that state-based factors may be influential and yet underrecognized.

But to the extent that confounding does not shape these results, it raises a broader issue of data equity: differential levels of information across population subgroups may reinforce or exacerbate knowledge disparities in an increasingly data-driven society. While data-driven insights inform prevention and intervention programs [], limitations such as poor data quality, missing information, and inconsistencies can result in biased insights and flawed support, including inequitable resource allocation and ineffective public health interventions [].

Researchers increasingly use the NVDRS narratives to supplement information not captured by its closed-ended questions [,,,]. To that end, emerging advances in Natural Language Processing (NLP) and large language models (LLMs) can be used to efficiently mine these data. But if the information is not there, in whole or in part, the work risks yielding muddled information for specific risk groups or risk factors. Our findings highlight the need for quality assessment strategies that are robust in determining narrative quality gaps.

Limitations and Future Directions

The findings reported here should be considered in light of three key limitations. First, there are multiple ways to measure semantic and syntactic complexity beyond the three measures we report here. We experimented with additional approaches, such as using human entity (NER) counts [,,] and average depth [,], but both were highly correlated with the three measures we finally selected and did not substantially alter study findings. We also observed that NER counts lacked robustness, overcounting actors (typically the “victim”) who might be mentioned multiple times. NER counts also failed to resolve coreference issues (e.g., linking a victim to descriptors like husband, which referred to the victim and not another actor in the narrative) []. Average depth was also strongly correlated with the chosen max depth metric, indicating little new information would be gained by its inclusion. Furthermore, it underestimated complexity in longer narratives by penalizing sentence-rich texts where descriptive phrases or compound structures lowered the mean score. This is a particular problem in narratives, such as the NVDRS, that are dense with health and healthcare technical jargon.

Second, our measure of narrative complexity may fall short of identifying narratives that meet the threshold of purpose set by the NVDRS surveillance system []. Differences in narrative complexity scores may not always reflect differences in overall information potential. For example, straightforward incidents involving a single victim and a clear, short sequence of events may have minimal syntactic or structural complexity, yet the narrative might still be judged as excellent in explaining the reasons for the suicide. This highlights the need for context-sensitive measures of the narratives that can distinguish between brevity due to simplicity of exposition and brevity due to missing information.

Third, both word count and narrative complexity scores indicate that narratives of racial/ethnic minority deaths are more likely to be shorter and semantically and syntactically less complex after adjusting for other decedent and incident characteristics. However, the current study did not investigate a causal link and, as noted, these differences may be due to confounding factors that have yet to be identified. Further study with careful control of confounding, including aspects of decedents and incidents we may not have considered here, is needed to determine whether this robust effect is due to true differences in suicide events between racial/ethnic minorities and white non-Hispanic decedents, differential barriers to information compilation, or bias in case documentation. The methods by which NVDRS death records are generated involve the input of many entities, including law enforcement, coroner/medical examiners, and public health departments. Finally, the NVDRS cohort is quite large, which renders statistical significance testing vulnerable to detecting very small departures from chance.

Future work may also consider evaluating the potential contributions of additional NLP methods to creating a reliable narrative information potential measure, such as measures of semantic diversity [,], readability indices [,], topic coverage [,], or question-answering assessment [,,]. In the current study, we employed both word counts and a measure of narrative complexity, demonstrating robust associations with decedent and incident characteristics similar to previous work using word counts alone [,,]. But neither approach used here directly addressed the issue of narrative quality, or whether the description of a suicide resolved questions about why it occurred in the way that it did. In line with the NVDRS goal of capturing the “who, what, when, where, and why” of these deaths, there is a continuing need for metrics that can evaluate whether these key elements are adequately represented in the narratives.

5. Conclusions

Suicide in the United States is a significant public health concern, which has prompted the investment in building the NVDRS surveillance system. The hope is that it will lead to the development of effective public health interventions that reduce suicide’s substantial mortality burden. To that end, the NVDRS narratives, if they achieve their purpose of characterizing critical components of death incidents, offer an exciting potential for the field. However, measuring whether they have the capability to do so is a challenge, as it is in other health-related domains, where mining of clinical data to improve clinical decision-making is an important goal. This study represents a critical step toward producing a metric of narrative complexity. We observed a potential for information disadvantage among racial/ethnic minority deaths compared to those of white, non-Hispanic decedents, echoing findings from studies of word counts [,]. Future efforts in developing metrics to assess narrative quality may profitably explore alternative metrics, methodologies, and dimensions of narrative quality. Such efforts can help build a more robust and informative toolkit for evaluating narrative quality and, more broadly, for advancing the measurement of data quality in the NVDRS.

Author Contributions

Conceptualization, C.C., A.A.-K., V.M.M., K.-W.C. and S.D.C.; Data curation, S.D.C.; Formal analysis, C.C., A.A.-K. and K.-W.C.; Funding acquisition, V.M.M. and S.D.C.; Investigation, C.C.; Methodology, C.C., A.A.-K. and S.D.C.; Project administration, V.M.M. and S.D.C.; Resources, V.M.M. and S.D.C.; Software, C.C.; Supervision, V.M.M. and S.D.C.; Validation, A.A.-K., V.M.M. and S.D.C.; Visualization, C.C.; Writing—original draft, C.C., A.A.-K. and S.D.C.; Writing—review & editing, C.C., A.A.-K., V.M.M., K.-W.C. and S.D.C. All authors have read and agreed to the published version of the manuscript.

Funding

Partial support for this work was provided by the NIH National Institute of Mental Health (MH115334) and a National Library of Medicine Training Grant (T15LM011271).

Institutional Review Board Statement

The study involved research on decedents of suicide; the restricted dataset does not contain protected health information. Under the Common Rule (45 CFR 46.102), this is not human subject research. The study was ruled exempt from human subjects review by the UCLA IRB.

Informed Consent Statement

Informed consent was waived because the study is not human subject research.

Data Availability Statement

The National Violent Death Reporting System (NVDRS) is administered by the Centers for Disease Control and Prevention (CDC) and by participating NVDRS jurisdictions. A restricted dataset is available, on approval, from the CDC (nvdrs-rad@cdc.gov). The findings and conclusions of this study are those of the authors alone and do not necessarily represent the official position of the CDC, participating NVDRS jurisdictions, or the National Institutes of Health.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Centers for Disease Control and Prevention. National Center for Health Statistics. National Vital Statistics System, Provisional Mortality on CDC WONDER Online Database. 2025. Available online: http://wonder.cdc.gov/mcd-icd10-provisional.html (accessed on 29 May 2025).

- Strassle, P.D.; Kendrick, P.; Baumann, M.M.; Kelly, Y.O.; Li, Z.; Schmidt, C.; Sylte, D.O.; Compton, K.; Bertolacci, G.J.; La Motte-Kerr, W.; et al. Homicide Rates Across County, Race, Ethnicity, Age, and Sex in the US: A Global Burden of Disease Study. JAMA Netw. Open 2025, 8, e2462069.

- Centers for Disease Control and Prevention. About the National Violent Death Reporting System (NVDRS). 2025. Available online: https://www.cdc.gov/nvdrs/about/index.html (accessed on 29 September 2025).

- Nguyen, B.L. Surveillance for Violent Deaths — National Violent Death Reporting System, 48 States, the District of Columbia, and Puerto Rico, 2021. MMWR. Surveill. Summ. 2024, 73, 1–44.

- Centers for Disease Control and Prevention, National Center for Injury Prevention and Control. National Violent Death Reporting System Web Coding Manual Version 6.1. 2025. Available online: https://www.cdc.gov/nvdrs/media/pdfs/2025/03/NVDRS-Coding-Manual-Version-6.1_508.pdf (accessed on 28 April 2025).

- Centers for Disease Control and Prevention. National Violent Death Reporting System Coding Manual Version 2. 2003. Available online: https://www.reginfo.gov/public/do/DownloadDocument?objectID=963701 (accessed on 29 September 2025).

- Mezuk, B.; Kalesnikava, V.A.; Kim, J.; Ko, T.M.; Collins, C. Not Discussed: Inequalities in Narrative Text Data for Suicide Deaths in the National Violent Death Reporting System. PLoS ONE 2021, 16, e0254417.

- Arseniev-Koehler, A.; Foster, J.G.; Mays, V.M.; Chang, K.W.; Cochran, S.D. Aggression, Escalation, and Other Latent Themes in Legal Intervention Deaths of Non-Hispanic Black and White Men: Results from the 2003–2017 National Violent Death Reporting System. Am. J. Public Health 2021, 111, S107–S115.

- Arseniev-Koehler, A.; Mays, V.M.; Foster, J.G.; Chang, K.W.; Cochran, S.D. Gendered Patterns in Manifest and Latent Mental Health Indicators Among Suicide Decedents: 2003–2020 National Violent Death Reporting System (NVDRS). Am. J. Public Health 2023, 114, S268–S277.

- Parker, S.T. Supervised Natural Language Processing Classification of Violent Death Narratives: Development and Assessment of a Compact Large Language Model. JMIR AI 2025, 4, e68212.

- Kawar, K.; Saiegh-Haddad, E.; Armon-Lotem, S. Text Complexity and Variety Factors in Narrative Retelling and Narrative Comprehension Among Arabic-speaking Preschool Children. First Lang. 2023, 43, 355–379.

- Shi, Y.; Lei, L. Lexical Richness and Text Length: An Entropy-based Perspective. J. Quant. Linguist. 2022, 29, 62–79.

- Kobrin, J.L.; Deng, H.; Shaw, E.J. Does Quantity Equal Quality? The Relationship between Length of Response and Scores on the SAT Essay. J. Appl. Test. Technol. 2014, 8, 1–15.

- Wang, Y.; Buschmeier, H. Revisiting the Phenomenon of Syntactic Complexity Convergence on German Dialogue Data. arXiv 2024, arXiv:2408.12177.

- Jing, Y.; Liu, H. Mean Hierarchical Distance Augmenting Mean Dependency Distance. In Proceedings of the Third International Conference on Dependency Linguistics (Depling 2015), Uppsala, Sweden, 24–26 August 2015; Nivre, J., Hajičová, E., Eds.; Uppsala University: Uppsala, Sweden, 2015; pp. 161–170. Available online: https://aclanthology.org/W15-2119/ (accessed on 29 September 2025).

- Gao, N.; He, Q. A Dependency Distance Approach to the Syntactic Complexity Variation in the Connected Speech of Alzheimer’s Disease. Humanit. Soc. Sci. Commun. 2024, 11, 1–12.

- Liu, H. Dependency Distance as a Metric of Language Comprehension Difficulty. J. Cogn. Sci. 2008, 9, 159–191.

- Oya, M. Syntactic Dependency Distance as Sentence Complexity Measure. In Proceedings of the 16th International Conference of Pan-Pacific Association of Applied Linguistics, Hong Kong, China, 8–10 August 2011; Volume 1. Available online: https://www.paaljapan.org/conference2011/ProcNewest2011/pdf/poster/P-13.pdf (accessed on 29 September 2025).

- McNamara, D.S.; Crossley, S.A.; McCarthy, P.M. Linguistic Features of Writing Quality. Writ. Commun. 2010, 27, 57–86.

- Norris, J.M.; Ortega, L. Towards an Organic Approach to Investigating CAF in Instructed SLA: The Case of Complexity. Appl. Linguist. 2009, 30, 555–578.

- Ali, B.; Rockett, I.; Miller, T. Variable Circumstances of Suicide Among Racial/Ethnic Groups by Sex and Age: A National Violent-Death Reporting System Analysis. Arch. Suicide Res. 2021, 25, 94–106.

- Nivre, J. Dependency Parsing. Lang. Linguist. Compass 2010, 4, 138–152.

- Liu, H.; Xu, C.; Liang, J. Dependency Distance: A New Perspective on Syntactic Patterns in Natural Languages. Phys. Life Rev. 2017, 21, 171–193.

- Ouyang, J.; Jiang, J.; Liu, H. Dependency Distance Measures in Assessing L2 Writing Proficiency. Assess. Writ. 2022, 51, 100603.

- Explosion. spaCy: Industrial-Strength Natural Language Processing in Python. 2025. Available online: https://spacy.io/ (accessed on 29 September 2025).

- StataCorp. Stata Statistical Software: Release 19.0; StataCorp LLC: College Station, TX, USA, 2025.

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2021.

- Osborne, J. Improving Your Data Transformations: Applying the Box-Cox Transformation. Pract. Assess. Res. Eval. 2010, 15, 12.

- Khurana, D.; Koli, A.; Khatter, K.; Singh, S. Natural Language Processing: State of the Art, Current Trends and Challenges. Multimed. Tools Appl. 2023, 82, 3713–3744.

- Burke, H.B.; Hoang, A.; Becher, D.; Fontelo, P.; Liu, F.; Stephens, M.; Pangaro, L.N.; Sessums, L.L.; O’Malley, P.; Baxi, N.S.; et al. QNOTE: An Instrument for Measuring the Quality of EHR Clinical Notes. J. Am. Med. Inform. Assoc. 2014, 21, 910–916.

- Stetson, P.D.; Morrison, F.P.; Bakken, S.; Johnson, S.B.; eNote Research Team. Preliminary Development of the Physician Documentation Quality Instrument. J. Am. Med. Inform. Assoc. 2008, 15, 534–541.

- Stetson, P.D.; Bakken, S.; Wrenn, J.O.; Siegler, E.L. Assessing Electronic Note Quality Using the Physician Documentation Quality Instrument (PDQI-9). Appl. Clin. Inform. 2012, 3, 164–174.

- Feldman, J.; Goodman, A.; Hochman, K.; Chakravartty, E.; Austrian, J.; Iturrate, E.; Bosworth, B.; Saxena, A.; Moussa, M.; Chenouda, D.; et al. Novel Note Templates to Enhance Signal and Reduce Noise in Medical Documentation: Prospective Improvement Study. JMIR Form. Res. 2023, 7, e41223.

- Roman, J.K.; Cook, P. Studying Firearm Fatalities Using the NVDRS. In Improving Data Infrastructure to Reduce Firearms Violence; NORC at the University of Chicago: Chicago, IL, USA, 2021; Chapter 4; pp. 81–100. Available online: https://www.norc.org/content/dam/norc-org/pdfs/Improving%20Data%20Infrastructure%20to%20Reduce%20Firearms%20Violence_Chapter%204.pdf (accessed on 29 September 2025).

- Bommersbach, T.J.; Rosenheck, R.A.; Rhee, T.G. Racial and Ethnic Differences in Suicidal Behavior and Mental Health Service Use Among US Adults, 2009–2020. Psychol. Med. 2023, 53, 5592–5602.

- Mercy, J.A.; Barker, L.; Frazier, L. The Secrets of the National Violent Death Reporting System. Inj. Prev. 2006, 12, ii1–ii2.

- Wang, Y.; Boyd, A.E.; Rountree, L.; Ren, Y.; Nyhan, K.; Nagar, R.; Higginbottom, J.; Ranney, M.L.; Parikh, H.; Mukherjee, B. Towards Enhancing Data Equity in Public Health Data Science. arXiv 2025, arXiv:2508.20301.

- Arseniev-Koehler, A.; Cochran, S.D.; Mays, V.M.; Chang, K.W.; Foster, J.G. Integrating Topic Modeling and Word Embedding to Characterize Violent Deaths. Proc. Natl. Acad. Sci. USA 2022, 119, e2108801119.

- Phillips, J.A.; Davidson, T.R.; Baffoe-Bonnie, M.S. Identifying Latent Themes in Suicide Among Black and White Adolescents and Young Adults Using the National Violent Death Reporting System, 2013–2019. Soc. Sci. Med. 2023, 334, 116144.

- Dang, L.N.; Kahsay, E.T.; James, L.N.; Johns, L.J.; Rios, I.E.; Mezuk, B. Research Utility and Limitations of Textual Data in the National Violent Death Reporting System: A Scoping Review and Recommendations. Inj. Epidemiol. 2023, 10, 23.

- Zhang, B. Getting to Know Named Entity Recognition: Better Information Retrieval. Med. Ref. Serv. Q. 2024, 43, 196–202.

- Uppunda, A.; Cochran, S.D.; Foster, J.G.; Arseniev-Koehler, A.; Mays, V.M.; Chang, K.W. Adapting Coreference Resolution for Processing Violent Death Narratives. arXiv 2021, arXiv:2104.14703.

- Han, S.; Kim, B.; Chang, B. Measuring and Improving Semantic Diversity of Dialogue Generation. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2022, Abu Dhabi, United Arab Emirates, 7–11 December 2022; Goldberg, Y., Kozareva, Z., Zhang, Y., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2022; pp. 934–950.

- Johns, B.T.; Jones, M.N. Content Matters: Measures of Contextual Diversity Must Consider Semantic Content. J. Mem. Lang. 2022, 123, 104313.

- Daraz, L.; Morrow, A.S.; Ponce, O.J.; Farah, W.; Katabi, A.; Majzoub, A.; Seisa, M.O.; Benkhadra, R.; Alsawas, M.; Larry, P.; et al. Readability of Online Health Information: A Meta-Narrative Systematic Review. Am. J. Med. Qual. 2018, 33, 487–492.

- Crossley, S.A. Developing Linguistic Constructs of Text Readability Using Natural Language Processing. Sci. Stud. Read. 2025, 29, 138–160.

- Scarpino, I.; Zucco, C.; Vallelunga, R.; Luzza, F.; Cannataro, M. Investigating Topic Modeling Techniques to Extract Meaningful Insights in Italian Long COVID Narration. BioTech 2022, 11, 41.

- Schlachter, J.; Ruvinsky, A.; Reynoso, L.A.; Muthiah, S.; Ramakrishnan, N. Leveraging Topic Models to Develop Metrics for Evaluating the Quality of Narrative Threads Extracted from News Stories. Procedia Manuf. 2015, 3, 4028–4035.

- Deutsch, D.; Bedrax-Weiss, T.; Roth, D. Towards Question-Answering as an Automatic Metric for Evaluating the Content Quality of a Summary. Trans. Assoc. Comput. Linguist. 2021, 9, 774–789.

- Narayan, S.; Cohen, S.B.; Lapata, M. Ranking Sentences for Extractive Summarization with Reinforcement Learning. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long Papers), New Orleans, LA, USA, 1–6 June 2018; Walker, M., Ji, H., Stent, A., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2018; pp. 1747–1759.

- Wang, A.; Cho, K.; Lewis, M. Asking and Answering Questions to Evaluate the Factual Consistency of Summaries. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; Jurafsky, D., Chai, J., Schluter, N., Tetreault, J., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 5008–5020.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).