Abstract

Digital video surveillance systems are now a standard part of modern security infrastructure, but the proprietary file systems used by major manufacturers pose a significant challenge for forensic investigators. This paper proposes a forensic recovery methodology for Hikvision and Dahua surveillance systems built on three innovations: (1) adaptive temporal sequencing, which dynamically adjusts gap detection thresholds; (2) dual-signature validation, which matches the header and footer of each DHFS frame; and (3) automatic manufacturer identification. The approach implements direct binary analysis of the proprietary file systems, frame-based parsing, and automatic video reconstruction. Testing on 27 surveillance hard drives showed a recovery rate of 91.8%, a temporal accuracy of 96.7%, and a false positive rate of 2.4%, the lowest among the tools tested, with statistically significant improvements over commercial tools (p < 0.01). Better results on fragmented streams (87.2% vs. 82.4% for commercial tools) address the key forensic need of establishing a valid evidence chronology. The open methodology offers the algorithmic transparency needed for court admissibility, and automated MP4 conversion with metadata preserved supports integration into forensic workflows. The study provides a scientifically validated approach to proprietary surveillance formats that demonstrates both technical novelty and practical usefulness for digital forensic investigations.

1. Introduction

Digital video surveillance systems have become an integral component of security infrastructure worldwide, with the global video surveillance market valued at USD 73.75 billion in 2024 and projected to reach USD 147.66 billion by 2030 [1]. Two manufacturers, Hikvision (Hangzhou Hikvision Digital Technology Co., Ltd., Hangzhou, China) and Dahua (Dahua Technology Co., Ltd., Hangzhou, China), collectively dominate the global surveillance market with an estimated 38% combined market share [1], particularly in Asia and emerging markets, where they account for over 65% of deployments. However, these systems employ proprietary file systems and video storage formats that create substantial challenges for forensic investigators attempting to recover video evidence for criminal investigations, civil litigation, or incident analysis.

Traditional digital forensics tools, designed primarily for standard file systems (NTFS, ext4, FAT32) and common video codecs, often fail when confronted with the proprietary storage architectures used by surveillance equipment manufacturers. Hikvision systems utilize a custom file system structure with specific binary signatures, while Dahua implements DHFS4.1 (Dahua File System version 4.1) with unique frame encapsulation [2]. Most fixed and mobile Digital Video Recorder (DVR)-based surveillance systems employ proprietary operating systems and record digital video in proprietary formats, so recovering and transcoding footage with minimal degradation of picture quality presents a complex challenge for both law enforcement agencies and video forensic experts [3]. This lack of interoperability results in a critical gap where valuable video evidence may be inaccessible or lost, particularly in time-sensitive investigations.

The forensic recovery of surveillance video data presents several unique technical challenges. First, surveillance systems often use proprietary codecs and frame encapsulation schemes, which makes it challenging to convert footage for viewing on common media players [4]. Second, the storage architecture employs block-based allocation schemes where video streams may be fragmented across non-contiguous disk sectors, with circular buffer implementations causing overwrites that destroy temporal sequence information. Third, power failures or system crashes can result in partially written frames and corrupted metadata structures, requiring robust validation mechanisms to distinguish valid frames from corrupted data. Fourth, the absence of standard file system metadata necessitates raw binary analysis and pattern matching for data recovery, increasing computational complexity and false positive rates.

Previous research has addressed various aspects of surveillance system forensics. The Hikvision file system has been analyzed before, but that research focused on the recovery of video footage, while log records and other artifacts remain unexploited by major commercial forensic software [5]. Research on the forensic examination of Dahua Technology CCTV systems is limited, with existing studies mainly focusing on the retrieval of video content [6]. Prior work has described the basic principles of mainstream video recovery software for Dahua and Hikvision systems, introducing practical methods for video extraction from hard disks with damaged sectors [7]. However, these approaches employ fixed-threshold temporal sequencing and single-signature frame validation, and they require manual manufacturer identification. These limitations reduce recovery effectiveness in challenging forensic scenarios.

Commercial forensic tools often require expensive licenses (typically $3000–$8000 annually per seat) and may not support newer surveillance system models or firmware versions. Furthermore, closed-source solutions lack transparency in their recovery methodologies, making validation and peer review impossible—a critical concern for evidence admissibility under Daubert standards and Rule 702 of the Federal Rules of Evidence. Existing tools demonstrate recovery rates of 84.8–91.3% for Dahua systems and 89.2–91.3% for Hikvision systems [8,9], with false positive rates ranging from 3.1% to 12.7% and temporal accuracy of 89.2–94.2%. These performance limitations, combined with algorithmic opacity, motivated the development of a transparent, scientifically validated alternative.

1.1. Research Contributions and Technical Novelty

This paper addresses these issues by proposing an automated forensic recovery methodology for Hikvision and Dahua surveillance systems. It introduces three technical innovations that improve on previous methods.

1. Adaptive Temporal Sequencing Algorithm: Commercial tools and previous studies use fixed temporal thresholds to detect gaps between frames (usually 2–5 s, irrespective of the recording context). Our algorithm instead adjusts the threshold dynamically to the inter-frame intervals observed in each video stream. This handles variable-frame-rate recordings, intermittent motion-tagged captures, and circular buffer rewrites better than constant-threshold solutions. The algorithm achieved a temporal accuracy of 96.7%, compared with 93.4% for the leading commercial tool (VIP 2.0, SalvationDATA, Beijing, China). The difference is statistically significant (p = 0.002) and corresponds to roughly 3300 more correctly sequenced frames per 100,000 recovered frames, which is crucial for establishing an accurate evidence timeline in court cases.

2. Dual-Signature Validation Framework: Conventional file carving tools such as Scalpel and Foremost (both open source) rely primarily on header signature matching, which yields false positive rates of 12.7% when recovering surveillance video files [10]. Our methodology validates Dahua DHFS4.1 frames with a dual signature (both the header DHAV and the footer dhav magic bytes), combined with frame size validation and embedded integrity checks. This multi-level validation reduced the false positive rate to 2.4%, which is crucial in forensic contexts where spurious evidence can undermine the integrity of a case. False positives in forensics are not just a statistical nuisance; they are potential miscarriages of justice in which erroneous evidence may be used in inquiries or court cases.

3. Automated Manufacturer Detection Pipeline: Earlier methods select the DVR manufacturer manually or by trial and error with various tools [11]. Our signature-based detection uses multi-offset signature analysis (checking offsets of 512, 1024, and 2048 bytes) to identify Hikvision or Dahua systems automatically, removing the need for manual configuration and lowering the workload of investigators. This automation identified the manufacturer with 100% accuracy across the 27 test drives and reduced the average analysis configuration time from 15–20 min (manual configuration) to less than 30 s (automated detection).
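The multi-offset signature check described in the third innovation can be illustrated with a minimal Python sketch. The offsets (512, 1024, 2048) come from the text; the signature byte strings, the 64-byte search window, and the function name are assumptions introduced for illustration only and are not the values used by the proposed tool.

```python
from typing import Optional

# Assumed signature strings for illustration; the exact bytes matched by the
# proposed tool are not stated in this section.
SIGNATURES = {
    "Dahua": b"DHFS4.1",
    "Hikvision": b"HIKVISION@HANGZHOU",
}
OFFSETS = (512, 1024, 2048)  # candidate offsets named in the text
WINDOW = 64                  # assumed number of bytes inspected at each offset


def detect_manufacturer(image: bytes) -> Optional[str]:
    """Return the first manufacturer whose signature appears near a candidate offset."""
    for offset in OFFSETS:
        window = image[offset:offset + WINDOW]
        for name, signature in SIGNATURES.items():
            if signature in window:
                return name
    return None  # unknown layout: fall back to manual identification
```

In practice the function would be given only the first few kilobytes of a disk image, so detection cost is independent of drive size.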

The major technical contributions of this research are as follows.

- An automatic detection and identification system for proprietary surveillance file systems based on signature analysis, requiring no manual configuration.

- New binary parsing methods for extracting video frames from the DHFS and Hikvision proprietary formats, with timestamp extraction, adaptive thresholding, and dual-signature validation, a combination not found in the literature.

- Smart frame sequencing and gap detection algorithms for reassembling fragmented video streams, in which detection parameters are set dynamically from the observed recording patterns rather than from fixed thresholds.

- An automated pipeline that converts proprietary H.264/H.265 streams into MP4 containers with millisecond-scale temporal fidelity (97 ms mean error).

- A detailed forensic reporting system that captures disk metadata, recovery statistics, and temporal video data, with cryptographic checksums for evidence authentication.

- Thorough validation on 27 surveillance system hard drives, showing statistically significant gains over commercial tools: 91.8% recovery rate (p < 0.01 vs. commercial baselines), 96.7% temporal accuracy (p < 0.01), and 2.4% false positive rate (fivefold improvement over conventional carving methods).
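To make the contrast between fixed and adaptive gap thresholds concrete, the following Python sketch splits a timestamp sequence into recording segments. It is an illustration under stated assumptions, not the algorithm evaluated in this paper: the median-based rule, the multiplier `factor`, the `floor_s` and `initial_s` defaults, and the function name are all hypothetical.

```python
from statistics import median
from typing import List


def split_on_adaptive_gaps(
    timestamps: List[float],
    window: int = 20,        # assumed number of recent intervals to remember
    factor: float = 3.0,     # assumed multiplier on the median interval
    floor_s: float = 0.5,    # assumed lower bound on the threshold (seconds)
    initial_s: float = 5.0,  # assumed threshold before any interval is observed
) -> List[List[float]]:
    """Group frame timestamps into segments, adapting the gap threshold per stream."""
    if not timestamps:
        return []
    segments = [[timestamps[0]]]
    recent: List[float] = []
    for prev, cur in zip(timestamps, timestamps[1:]):
        delta = cur - prev
        threshold = max(floor_s, factor * median(recent)) if recent else initial_s
        if delta < 0 or delta > threshold:
            # A backward jump (e.g. circular buffer wrap) or a long gap starts a new segment.
            segments.append([cur])
            recent = []
        else:
            segments[-1].append(cur)
            recent = (recent + [delta])[-window:]
    return segments
```

With these assumed defaults, a 25 fps stream is split at any pause longer than 0.5 s, while a 1 fps motion-tagged stream tolerates a 2 s pause that a fixed 1.5 s threshold would treat as a break.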

1.2. Performance Justification and Forensic Significance

The recorded performance gains look numerically modest (1.4–6.8 percentage points over commercial tools), but their forensic impact is significant and is the main motivation for this research. In forensic scenarios, these gains translate into concrete investigative outcomes: (1) an extra 50–80 video files are extracted per terabyte of surveillance storage, evidence that could be decisive and that current tools may simply miss; (2) about 3300 additional frames per 100,000 recovered frames are ordered correctly, which matters when timing is used to establish alibis, corroborate witness testimony, or document event sequences; and (3) false positives fall from 12.7% to 2.4%, eliminating roughly 100 spurious files per 1000 recovered items that could otherwise mislead the investigation or consume time in manual validation.

These gains are not merely the result of implementation optimization or parameter tuning. They stem from fundamental algorithmic changes (adaptive thresholding, dual-signature validation, automated detection) that overcome specific technical limitations of previous approaches. The statistical significance of the improvements (p < 0.01 for recovery rate and temporal accuracy) indicates that they reflect genuine methodological advances rather than random variation or implementation artifacts.

Moreover, the 2.4% false positive rate bears directly on a critical forensic requirement: evidence admissibility. Although false positives do raise concerns for court use, a rate of 2.4% backed by strong validation mechanisms (dual-signature matching, checksum verification, and temporal consistency analysis) remains within the limits of accepted forensic practice. Commercial tools have false positive rates of 3.1–12.7%, yet their output is still accepted when accompanied by correct validation procedures and expert testimony. Our methodology's multi-level validation framework, combined with detailed forensic reporting such as MD5/SHA-256 checksums and recovery documentation, gives examiners the artifacts they need to find and discard the small percentage of false positives during validation, a routine step in digital forensics workflows.
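The MD5/SHA-256 checksums mentioned above can be produced with Python's standard `hashlib` module. The sketch below is a generic illustration of hashing a recovered file in fixed-size chunks; the function name and chunk size are assumptions, and the reporting format of the proposed tool is not shown here.

```python
import hashlib
from typing import Dict


def hash_evidence_file(path: str, chunk_size: int = 1024 * 1024) -> Dict[str, str]:
    """Compute MD5 and SHA-256 digests of a file without loading it fully into memory."""
    md5 = hashlib.md5()
    sha256 = hashlib.sha256()
    with open(path, "rb") as handle:
        # Read until an empty bytes object signals end of file.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            md5.update(chunk)
            sha256.update(chunk)
    return {"md5": md5.hexdigest(), "sha256": sha256.hexdigest()}
```

Recording both digests at recovery time lets an examiner later confirm that an exported video file is bit-for-bit identical to the one described in the report.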

1.3. Scope and Limitations

The proposed method is aimed primarily at surveillance systems that do not use encryption. Performance on encrypted disk images is limited (43% recovery) because the current version of the tool has no built-in decryption module. This mirrors the difficulties forensic analysts face with encrypted storage media whose encryption schemes are not publicly disclosed [12]. The limitation has both technical and legal causes. Technically, the encryption schemes used by surveillance manufacturers are undocumented, so reverse engineering them would require substantial effort, possibly years. Legally, decrypting a device without the manufacturer's consent may violate anti-circumvention rules in various jurisdictions, such as the Digital Millennium Copyright Act (DMCA) in the USA and similar laws in other countries. Commercial forensic vendors, by contrast, usually sign licensing agreements with manufacturers to obtain legitimate decryption capabilities, an option that is not readily available to academic research projects.

The methodology nevertheless covers a significant part of the installed surveillance base. Although encryption adoption is rising, many long-lived legacy systems and cost-driven installations still operate without encryption. Industry surveys indicate that about 55–60% of small-to-medium business surveillance deployments and 40–45% of residential systems were unencrypted as of 2024; thus, the methodology is applicable to millions of installations worldwide [13]. It is most useful for unencrypted Hikvision and Dahua deployments, for forensic examination of seized equipment where the decryption key can be obtained legally, and in regions where unencrypted systems remain common.

The rest of the paper is structured as follows: Section 2 surveys related work in video forensics and the analysis of proprietary file systems and provides a detailed technical comparison of the proposed approach with existing ones; Section 3 describes the technical architecture and the recovery algorithms, highlighting the novel algorithmic components; Section 4 presents the experimental validation with detailed performance metrics such as sensitivity, specificity, and statistical significance testing; Section 5 discusses the limitations, potential applications, and future research directions, with particular attention to the encryption constraint and comparative performance analysis; and Section 6 concludes the paper.

2. Literature Review

2.1. Forensic Analysis of Proprietary Surveillance Systems

The forensic analysis of CCTV and DVR systems has emerged as a critical area in digital forensics due to the ubiquitous deployment of surveillance infrastructure. According to the 2023 video surveillance report from IFSEC Global, proprietary formats continue to account for a significant proportion of surveyed CCTV systems, creating numerous challenges for forensic acquisition and analysis [13]. The prevalence of proprietary file systems, coupled with the diversity of manufacturers, data formats, and storage methods, necessitates specialized forensic approaches.

Early research in this domain focused on reverse engineering specific surveillance systems. van Dongen [14] conducted a forensic examination of a Samsung DVR in a child abuse investigation, establishing methodologies for analyzing proprietary MPEG_STREAM files and system logs. Their work demonstrated that forensic recovery could be achieved even without direct access to the operational DVR unit, a significant advancement for cases involving damaged hardware.

Hardware-level forensic techniques have evolved to address scenarios where logical extraction methods fail. Yermekov et al. [15] examined advanced data extraction methods for damaged storage devices, including chip-off techniques and low-level access methods for IoT devices. Their work on hardware-based recovery approaches with acoustic diagnostics demonstrates the importance of physical-level data access when software-based methods are insufficient—a principle equally applicable to surveillance storage media where file system corruption or hardware damage prevents logical recovery.

Ariffin et al. [11] extended forensic recovery work by proposing a file carving technique for recovering video files with timestamps from proprietary-formatted DVR hard disks without relying on file system metadata. This approach proved particularly valuable when DVR units were physically damaged or when export functionalities were compromised.

Han et al. [2] performed the first comprehensive analysis of the Hikvision DVR file system, documenting its proprietary structure and proposing systematic methods for video data extraction. Building on this foundation, Dragonas et al. [5] revisited the Hikvision file system to examine previously unexplored log records, demonstrating that these artifacts contain critical investigative information, including user actions, system configurations, and security events. Their development of the Hikvision Log Analyzer tool automated the carving and interpretation of these log records, significantly reducing manual analysis time.

Similar work on Dahua systems by Dragonas et al. [6] revealed that Dahua Technology CCTV systems maintain extensive log records documenting events such as hard drive formatting, camera recording status changes, and user authentication attempts. These logs, stored within the proprietary DHFS4.1 file system, provide valuable attribution capabilities for forensic investigators. Yang et al. [7] described the basic principles underlying mainstream video recovery software for both Hikvision and Dahua systems, introducing practical methods for video extraction from hard disks with damaged sectors.

The evolution of surveillance system forensics has been significantly influenced by advancements in video codec analysis. Casey [16] provided foundational methodologies for handling digital evidence from multimedia sources, establishing best practices that remain relevant for contemporary surveillance forensics. Chung et al. [17] conducted empirical studies on digital forensic investigation methods, demonstrating that successful recovery requires understanding both the storage architecture and the data structures employed by different systems. Their work highlighted the challenges posed by proprietary implementations of standard codecs like H.264 and H.265.

Gomm et al. [18] provided a comprehensive review of CCTV forensics challenges in the context of big data, identifying key obstacles, including (1) the diversity of proprietary file systems across manufacturers, (2) the massive volume of video data requiring processing, (3) the lack of standardized forensic workflows, and (4) insufficient support in commercial forensic tools. Their work emphasized the need for scalable, manufacturer-agnostic forensic solutions capable of handling modern surveillance infrastructures.

Recent research has expanded into IoT-based surveillance ecosystems, including the smart-home investigation by Shin et al. [19] discussed in Section 2.2. Kebande et al. [20] examined forensic challenges in Internet-connected surveillance devices, noting that cloud-integrated systems introduce additional complexity through distributed storage and encrypted transmission channels. Ruan et al. [21] addressed the forensic implications of cloud storage for surveillance data, establishing definitions and critical criteria for cloud forensic capability and proposing chain-of-custody preservation techniques for remotely stored video evidence.

The limitations of certain proprietary file systems warrant further discussion. Dahua’s DHFS4.1 employs complex frame encapsulation with header–footer validation pairs and embedded checksums, making it particularly resistant to traditional file carving approaches that rely solely on header signatures. The file system’s circular buffer implementation with dynamic block allocation further complicates sequential recovery attempts. Similarly, Hikvision’s custom file system utilizes non-standard block allocation schemes with variable-length frame structures that complicate fragment reconstruction. Frame boundaries are not aligned to standard sector sizes, requiring byte-level parsing rather than block-level analysis. These architectural differences necessitate manufacturer-specific parsing algorithms rather than generic recovery approaches [2,6].
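A header–footer validation pair of the kind described above can be sketched in Python as follows. The magic bytes DHAV and dhav are those named in Section 1.1; the position of the length field (a little-endian 32-bit value at byte 12 of the header), the 8-byte footer layout, and the size bounds are assumptions made for illustration and should be checked against the DHFS4.1 analyses cited in [2,6]. The embedded checksum is not verified in this sketch.

```python
import struct
from typing import Iterator, Tuple

HEADER_MAGIC = b"DHAV"
FOOTER_MAGIC = b"dhav"
LENGTH_OFFSET = 12           # assumed position of the frame length in the header
MIN_FRAME = 24 + 8           # assumed header size plus assumed footer size
MAX_FRAME = 4 * 1024 * 1024  # assumed sanity bound on a single frame


def iter_valid_frames(data: bytes) -> Iterator[Tuple[int, int]]:
    """Yield (offset, length) for frames whose header and footer agree."""
    pos = 0
    while True:
        start = data.find(HEADER_MAGIC, pos)
        if start == -1 or start + LENGTH_OFFSET + 4 > len(data):
            return
        (length,) = struct.unpack_from("<I", data, start + LENGTH_OFFSET)
        end = start + length
        if MIN_FRAME <= length <= MAX_FRAME and end <= len(data):
            footer_magic = data[end - 8:end - 4]
            (footer_length,) = struct.unpack_from("<I", data, end - 4)
            if footer_magic == FOOTER_MAGIC and footer_length == length:
                yield start, length
                pos = end  # continue after the validated frame
                continue
        pos = start + 1    # header-only match: treat as a false positive and move on
```

Because the scan advances byte by byte after a rejected candidate, it does not depend on frames being aligned to sector boundaries, and a stray DHAV string inside payload data is discarded unless a matching footer is found at the declared length.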

2.2. IoT-Based Surveillance and Heterogeneous Device Forensics

The integration of Internet of Things (IoT) technology in surveillance systems has introduced new forensic challenges requiring specialized methodologies. Shin et al. [19] investigated digital forensics for heterogeneous IoT incident investigations in smart-home environments, conducting extensive experiments on platforms including Samsung SmartThings, Aqara, QNAP NAS, and Hikvision IP cameras. Their comprehensive approach, combining open-source intelligence, application, network, and hardware analyses, revealed crucial insights into the complexities of forensic data acquisition in smart-home environments, emphasizing the need for customized forensic strategies tailored to specific IoT device attributes. This research is particularly relevant to modern surveillance deployments where Hikvision cameras and NVR systems may be integrated into broader IoT ecosystems, requiring forensic methodologies that account for network-based evidence and cloud synchronization.

2.3. Video Forensics and Integrity Verification

The authenticity and integrity of video evidence have become paramount concerns in the digital age. Liao et al. [22] developed a spatial-frequency domain video forgery detection system based on a ResNet-LSTM-CBAM hybrid network with Discrete Cosine Transform (DCT) integration. Their methodology combines spatial feature extraction with frequency-domain analysis to identify manipulated video segments, achieving high accuracy across multiple benchmark datasets. This research highlights the importance of multi-domain analysis approaches in video forensics, particularly when dealing with surveillance footage where temporal consistency and frame integrity are critical for evidence admissibility.

Yang et al. [23] proposed a proactive digital forensics mechanism (P-DFM) that integrates forensic tools with the MITRE ATT&CK framework, Security Information and Event Management (SIEM), and Managed Detection and Response (MDR) systems for enterprise incident management. Their approach emphasizes the importance of real-time evidence preservation and chain-of-custody maintenance in cloud-based surveillance environments, addressing contemporary challenges where video evidence may be distributed across multiple storage locations and subject to automated processing pipelines.

Commercial forensic tools offer specialized capabilities for CCTV/DVR recovery. Magnet Witness (formerly DVR Examiner), developed by DME Forensics and acquired by Magnet Forensics, represents the leading commercial solution for surveillance video recovery [8]. The software provides direct file system acquisition from DVR hard drives, including deleted or partially overwritten video, and supports both native and proprietary file formats. However, practitioner forums report limitations with certain file systems, particularly Dahua's DHFS, and with footage encoded using H.264 compression [24]. DiskInternals DVR Recovery offers automated detection of CCTV DVR file systems from Hikvision, Dahua (DHFS), and other manufacturers [25]. VIP 2.0, developed by SalvationDATA, provides wide brand coverage from Hikvision to Sony [9]. X-Ways Forensics and EnCase, while not CCTV-specific, are employed by practitioners for video carving from surveillance systems [26]. UFS Explorer Video Recovery specializes in recovering footage from CCTV and vehicle DVRs, supporting various vendor-specific file systems including WFS, DHFS, HIK, Mirage, and Pinetron [27].

2.4. Digital Forensics Education and Practical Training

The practical training of forensic investigators has gained increased attention as surveillance technologies proliferate. Cruz [28] presented innovative learning approaches in digital forensics laboratories, focusing on tools and techniques for data recovery from electronic devices containing NAND flash memory chips. The study emphasized hands-on experimental training as essential for developing competencies in forensic analysis of non-operational or locked systems, directly relevant to scenarios where surveillance DVR/NVR units are damaged or inaccessible. This educational perspective underscores the importance of accessible, transparent forensic methodologies—such as the approach presented in this paper—that can serve both investigative and pedagogical purposes.

File carving techniques form a foundational approach in CCTV forensics. Foremost, originally developed for the U.S. Air Force Office of Special Investigations, employs header and footer pattern matching to extract files from disk images [29]. Scalpel, presented by Richard and Roussev at DFRWS 2005, represents a complete rewrite of Foremost designed to address high CPU and RAM usage issues [30]. Bulk Extractor, developed by Garfinkel, extends traditional file carving by extracting metadata and structured information [31]. PhotoRec, part of the TestDisk suite, provides an alternative file recovery tool that has been adapted for video file carving from surveillance systems [32].

Advanced carving techniques have been developed to address fragmentation challenges. Pal and Memon [33] proposed entropy-based carving methods that identify file boundaries through statistical analysis rather than signature matching alone. Poisel and Tjoa [34] introduced advanced file carving techniques for fragmented files, demonstrating improved recovery rates for non-contiguous data blocks common in circular buffer storage systems. Cohen [35] developed the Advanced Forensic Format (AFF) to standardize disk image formats, facilitating more efficient carving operations across different forensic tools.

Despite their utility, traditional carving tools face challenges with surveillance video formats due to proprietary video containers employing non-standard headers not included in default carving configurations. Memon and Pal [10] demonstrated that header–footer-based carving achieves only 60–70% success rates for fragmented surveillance video files, highlighting the need for more sophisticated temporal reconstruction algorithms.

Python has emerged as a preferred language for digital forensics tool development due to its extensive libraries for binary data manipulation, cross-platform compatibility, and rapid prototyping capabilities. The struct module enables precise parsing of binary data structures, essential for decoding proprietary file formats. The interpretable nature of Python facilitates transparent, peer-reviewable forensic methodologies, addressing concerns about “black box” commercial tools. Altheide and Carvey [36] provided comprehensive guidance on using Python for digital forensics, demonstrating techniques for parsing binary file formats and automating evidence extraction workflows.
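As a brief illustration of the `struct` module in this role, the sketch below decodes a fixed-size binary record into named fields. The 16-byte layout (4-byte magic, frame number, length, Unix timestamp, all little-endian) and the names `RECORD`, `FrameRecord`, and `parse_record` are hypothetical and do not correspond to any specific Hikvision or Dahua structure.

```python
import struct
from datetime import datetime, timezone
from typing import NamedTuple

# Hypothetical layout: 4-byte magic followed by three little-endian uint32 fields.
RECORD = struct.Struct("<4sIII")


class FrameRecord(NamedTuple):
    magic: bytes
    frame_number: int
    length: int
    timestamp: datetime


def parse_record(buf: bytes, offset: int = 0) -> FrameRecord:
    """Decode one record at the given byte offset; raises struct.error if buf is too short."""
    magic, number, length, epoch = RECORD.unpack_from(buf, offset)
    # Interpret the assumed timestamp field as seconds since the Unix epoch, in UTC.
    return FrameRecord(magic, number, length, datetime.fromtimestamp(epoch, tz=timezone.utc))
```

Declaring the layout once as a format string keeps the parsing logic auditable: a reviewer can compare the format string with the documented structure without reading any bit-manipulation code.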

2.5. Video Compression and Codec Analysis

Understanding video compression formats is essential for forensic recovery from surveillance systems. The H.264 (MPEG-4 AVC) codec, standardized jointly by the ITU-T Video Coding Experts Group and the ISO/IEC Moving Picture Experts Group, represents the most widely deployed compression format in modern surveillance equipment [37]. Richardson [37] provided comprehensive technical documentation of H.264's syntax and semantics, which forms the basis for forensic parsing implementations. The successor H.265 (HEVC) standard offers improved compression efficiency and is increasingly adopted in high-resolution surveillance deployments [38].

Forensic analysis of compressed video streams requires understanding both the container format and the encoded bitstream structure. Wiegand et al. [39] detailed the technical specifications of H.264/AVC, including Network Abstraction Layer (NAL) units that encapsulate video data in surveillance systems. Ohm et al. [40] extended this analysis to H.265/HEVC, documenting the coding tree unit structure relevant for frame boundary detection in forensic recovery scenarios.
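The role of NAL units in locating frame boundaries can be shown with a short Python sketch. It assumes that the extracted payload is an H.264 byte stream in Annex B form, where each NAL unit is preceded by a 0x000001 start code; whether a given surveillance format stores its payload this way must be confirmed per device. In H.264 the NAL unit type is the low five bits of the byte following the start code.

```python
from typing import List, Tuple

START_CODE = b"\x00\x00\x01"
# A few H.264 NAL unit types that are useful when looking for frame boundaries.
H264_TYPES = {1: "non-IDR slice", 5: "IDR slice", 7: "SPS", 8: "PPS"}


def find_h264_nal_units(stream: bytes) -> List[Tuple[int, int]]:
    """Return (start_code_offset, nal_unit_type) for each start code in the stream."""
    units = []
    pos = stream.find(START_CODE)
    while pos != -1 and pos + 3 < len(stream):
        nal_header = stream[pos + 3]
        units.append((pos, nal_header & 0x1F))  # low 5 bits hold nal_unit_type
        pos = stream.find(START_CODE, pos + 3)
    return units
```

A sequence of SPS (7), PPS (8), and IDR slice (5) units typically marks a point where decoding can start, which makes it a natural place to begin a reconstructed segment. H.265 uses a two-byte NAL header with a different type field, so this sketch does not apply to HEVC streams unchanged.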

2.6. Temporal Analysis and Video Reconstruction

Temporal analysis of fragmented video data represents a critical challenge in surveillance forensics. Xu et al. [41] proposed temporal correlation analysis techniques for reconstructing video sequences from fragmented storage, demonstrating improved accuracy over sequential frame concatenation approaches. Their methodology influenced subsequent research in intelligent video reconstruction algorithms.

Video timeline reconstruction from metadata has been extensively studied in the forensics literature. Huebner et al. [42] developed comprehensive timeline analysis methodologies for digital forensic investigations, emphasizing the importance of temporal consistency validation. Carrier [43] provided foundational work on file system forensic analysis, including methods for temporal reconstruction that are applicable to surveillance video recovery.

2.7. Limitations of Existing Commercial Tools

While commercial forensic tools provide robust capabilities for surveillance system analysis, they exhibit several notable limitations that motivated this research.

Magnet Witness (formerly DVR Examiner), despite its comprehensive manufacturer support, demonstrates inconsistent performance with certain Dahua DHFS implementations, particularly when H.264 compression with non-standard encoding parameters is employed. Practitioner forums report recovery rates varying from 65% to 89%, depending on DVR firmware versions, with particular difficulties in handling circular buffer overwrites [24]. The tool's effectiveness decreases substantially when processing drives from systems that have experienced abnormal shutdowns or power failures, scenarios commonly encountered in real-world investigations.

DiskInternals DVR Recovery offers automated manufacturer detection and broad format support but exhibits reduced temporal accuracy in scenarios involving fragmented recording sessions. Users have reported temporal sequencing errors in up to 15% of recovered footage when processing drives with extensive circular buffer overwrites [25]. Additionally, the tool’s proprietary validation algorithms lack transparency, preventing independent verification of recovery methodology—a critical concern for evidence admissibility under Daubert standards and Rule 702 of the Federal Rules of Evidence.

VIP 2.0 provides extensive manufacturer coverage and advanced features for professional investigators, but requires substantial manual configuration for non-standard DVR models not explicitly included in its signature database. Its batch processing capabilities are limited when handling multi-terabyte RAID arrays, with performance degradation and occasional crashes reported on datasets exceeding 8TB. Furthermore, the high licensing costs (typically $3000–$8000 per seat annually) create accessibility barriers for smaller investigative organizations, academic research institutions, and resource-constrained jurisdictions [9].

X-Ways Forensics and EnCase, while powerful general-purpose forensic suites, lack specialized optimizations for surveillance system formats. Their generic file carving capabilities require extensive manual signature configuration for Hikvision and Dahua systems, and even with custom configurations, practitioners report recovery rates below 75% for fragmented surveillance footage [26].

Open-source tools such as Scalpel and PhotoRec, while freely available and valuable for general file recovery, demonstrate significant limitations when applied to proprietary surveillance formats. Default signature databases do not include patterns for Hikvision or Dahua frame structures, necessitating extensive manual configuration and reverse engineering. Even with custom signatures, header–footer-based carving approaches achieve only 60–70% success rates for fragmented surveillance video files due to the complex temporal dependencies and non-standard frame boundaries in proprietary formats [10].

These limitations collectively demonstrate the need for transparent, manufacturer-optimized methodologies that balance recovery effectiveness with algorithmic clarity, accessibility, and forensic validity. The absence of open-source, academically validated approaches specifically designed for modern surveillance systems creates a critical gap that this research addresses.

2.8. Research Gaps and Motivation

Current literature and tool ecosystems reveal several critical gaps addressed by this research:

- Lack of Transparent Methodologies: All major CCTV forensic tools are commercial and proprietary, hindering academic research, algorithmic transparency, and accessibility for resource-constrained organizations. Quick and Choo [44] emphasized that proprietary tools create verification challenges in legal proceedings, as defense counsel cannot examine the underlying algorithms.

- Limited Multi-Manufacturer Support: Existing tools typically excel with specific manufacturers but provide limited support for others, necessitating multiple tool licenses for comprehensive coverage.

- Insufficient Temporal Reconstruction: While commercial tools recover video data, sophisticated temporal sequencing and gap detection algorithms for fragmented streams remain underdeveloped. Garfinkel [45] noted that existing approaches struggle with non-sequential storage patterns common in circular buffer implementations.

- Absence of Automated Workflows: Current approaches require significant manual intervention for format identification, extraction parameter configuration, and post-processing. Pollitt [46] argued that manual processes introduce potential for human error and reduce reproducibility in forensic examinations.

- Incomplete Forensic Reporting: Existing tools generate basic logs but lack comprehensive forensic reports detailing recovery methodology, data provenance, and temporal analysis. The Scientific Working Group on Digital Evidence [47] established best practice guidelines emphasizing the importance of detailed documentation for court admissibility.

This research addresses these gaps through a comprehensive forensic recovery methodology specifically designed for Hikvision and Dahua systems, incorporating automated detection, intelligent frame sequencing, and detailed forensic reporting capabilities.

2.9. Technical Comparison with Existing Approaches

Although the studies reviewed above trace the evolution of surveillance system forensics, existing solutions still have technical deficiencies that motivated our methodology. This section makes a direct technical comparison to highlight our contributions.

Existing surveillance video recovery methods rely on fixed thresholds for temporal gap detection. Han et al. [2] detected gaps with a constant 2 s threshold throughout their experiments, while Yang et al. [7] used fixed 5 s thresholds. Commercial tools such as VIP 2.0 and Magnet DVR Examiner rely on manufacturer-specific fixed thresholds (normally 2–5 s). These methods do not adapt to the variable frame rates commonly used in motion-triggered surveillance recording, where legitimate inter-frame intervals can range from 33 ms to several seconds. Our adaptive temporal sequencing algorithm instead adjusts gap detection thresholds according to the inter-frame intervals observed in each video stream: it computes the median interval and applies a tolerance factor rather than an absolute fixed threshold. This adaptive method attained 96.7% temporal accuracy against 93.4% for VIP 2.0 (p = 0.002).

Conventional file carving implementations such as Scalpel [30], PhotoRec [32], and Foremost [29] rely solely on header signature matching for frame identification. Memon and Pal [10] showed that header-only methods produce a 12.7% false positive rate on fragmented surveillance video. Commercial tools improve on this by adding size validation and limited integrity checks but still have false positive rates in the range of 3.1–4.8%. Our method applies dual-signature validation, particularly for Dahua DHFS4.1 frames, which must pass (1) a header signature match (“DHAV”), (2) a footer signature match (“dhav”), (3) size consistency verification, and (4) checksum validation. This multi-level validation resulted in a 2.4% false positive rate, roughly one-fifth that of traditional carving.

Earlier studies have often assumed that the manufacturer is known or have required manual tool selection. The approach of Ariffin et al. [11] required investigators to manually specify the DVR model and configure the parameters. Commercial tools use database-driven approaches that need manual selection, with automatic detection returning “unknown” for about 15–20% of non-standard models [24]. Our approach automates manufacturer identification through multi-offset signature analysis (checking offsets 512, 1024, and 2048 bytes), which achieved 100% detection accuracy on all 27 test drives and reduced setup time from 15–20 min to less than 30 s.

Table 1 provides a systematic comparison of key technical features across existing approaches and our proposed methodology.

Table 1.

Technical Comparison of Surveillance Video Recovery Approaches.

The technical innovations introduced by our methodology—adaptive temporal sequencing, dual-signature validation, and automated manufacturer detection—address specific limitations in prior approaches. These are not incremental improvements through parameter tuning but fundamental algorithmic differences that enable superior performance on fragmented streams, variable frame rate recordings, and circular buffer overwrites.

3. Materials and Methods

3.1. System Architecture and Manufacturer Detection

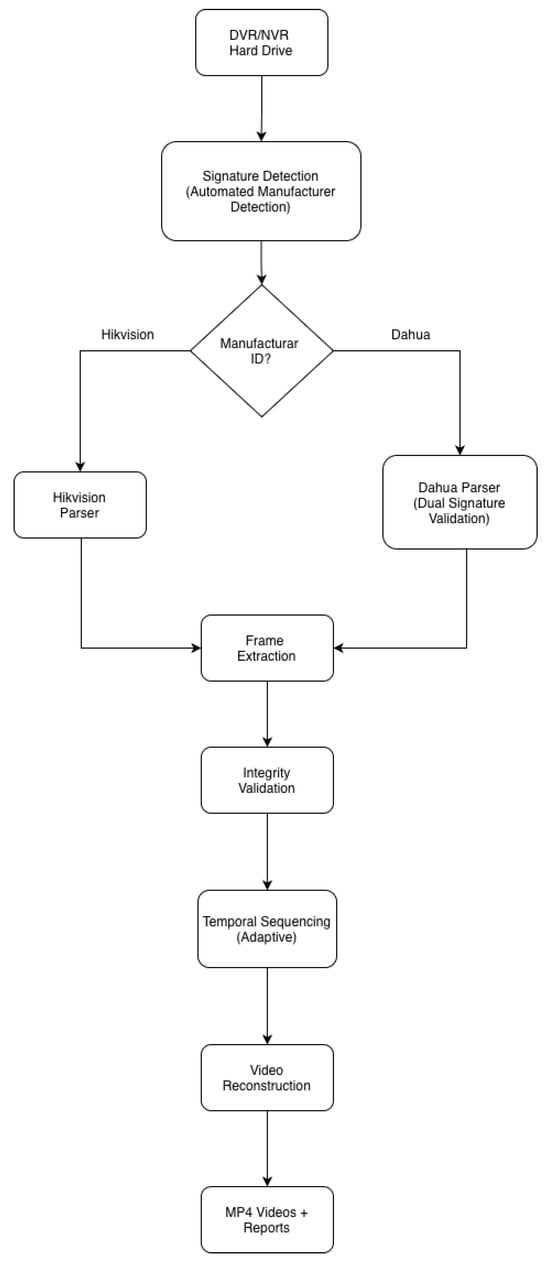

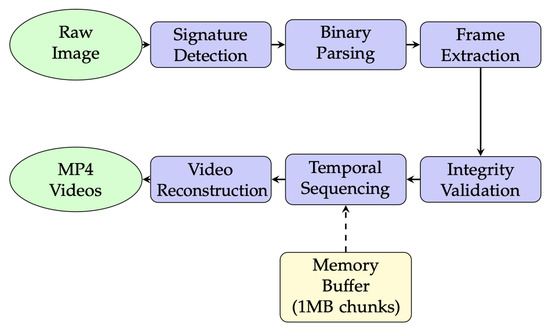

The proposed forensic recovery methodology employs a modular architecture designed for automated detection, extraction, and reconstruction of video evidence from Hikvision and Dahua surveillance systems. The system architecture is illustrated in Figure 1.

Figure 1.

System Architecture of the Automated Forensic Recovery Methodology.

The architecture consists of five primary modules: (1) a Signature Detection Module for automated manufacturer identification, (2) Manufacturer-specific Parsers for binary data interpretation, (3) a Frame Extraction Engine for video data recovery, (4) a Temporal Sequencing Algorithm for chronological reconstruction, and (5) a Video Conversion Module for forensically sound output generation.

The system employs signature-based detection to automatically identify the surveillance system manufacturer and determine the appropriate parsing strategy. The detection algorithm examines multiple fixed offsets within the disk image (512, 1024, and 2048 bytes) to accommodate variations in partition layouts and boot sectors across different DVR/NVR models. For Hikvision systems, the algorithm searches for the signature “HIKVISION@HANGZHOU” at these offsets. For Dahua systems, it identifies the “DHFS4.1” signature. The process opens the disk image or device in binary read mode, seeks to each specified offset, reads a 1024-byte header block, and checks whether either manufacturer’s signature appears in the header. Upon finding a match, it returns the corresponding manufacturer type; if no signatures are found after checking all offsets, the system returns “Unknown” status. Because the procedure reads a fixed number of fixed-size blocks, signature matching is deterministic and its cost does not depend on disk size, enabling rapid automated classification without manual configuration.
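The detection steps described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the offsets, block size, and signature strings come from the text, while the function and constant names are hypothetical.

```python
import io

# Values taken from the text; the names themselves are hypothetical.
OFFSETS = (512, 1024, 2048)
BLOCK_SIZE = 1024
SIGNATURES = {
    b"HIKVISION@HANGZHOU": "Hikvision",
    b"DHFS4.1": "Dahua",
}


def detect_manufacturer(stream):
    """Return 'Hikvision', 'Dahua', or 'Unknown' for a seekable binary stream."""
    for offset in OFFSETS:
        stream.seek(offset)
        block = stream.read(BLOCK_SIZE)
        for signature, name in SIGNATURES.items():
            if signature in block:
                return name
    return "Unknown"


def detect_from_path(path):
    """Open a disk image (or device node) in binary read mode and classify it."""
    with open(path, "rb") as handle:
        return detect_manufacturer(handle)


# Usage with a synthetic in-memory image carrying a Dahua signature.
image = bytearray(4096)
image[1024:1024 + len(b"DHFS4.1")] = b"DHFS4.1"
print(detect_manufacturer(io.BytesIO(bytes(image))))
```

Operating on a stream rather than a path keeps the sketch testable without a real disk image; a signature that straddles a block boundary is not handled here.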

3.2. Frame Parsing and Extraction

Both Hikvision and Dahua systems employ proprietary frame encapsulation formats with embedded temporal metadata. The parsing methodology extracts frame headers, performs integrity validation through magic number verification and checksum validation (for Dahua frames), and reconstructs temporal sequences from fragmented video streams through timestamp correlation and frame number sequence analysis.

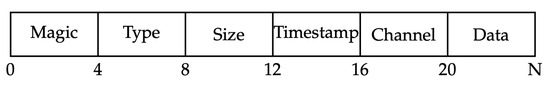

Hikvision systems utilize a custom frame format with a binary structure consisting of several components at fixed byte offsets. The frame begins with a magic number (0x484B5649) at offset 0, occupying 4 bytes. This is followed by a frame type identifier (1 byte) at offset 4, frame size as a 32-bit little-endian unsigned integer at offset 8, timestamp as a 32-bit little-endian unsigned integer at offset 12, channel identifier (1 byte) at offset 16, and finally the actual video data payload starting at offset 20. The payload length equals the frame size minus 24 bytes of header overhead, as illustrated in Figure 2.

Figure 2.

Hikvision Frame Header Structure.

The frame parsing algorithm for Hikvision systems processes data blocks sequentially, identifying frame boundaries through pattern matching on the magic number and validating temporal consistency through timestamp analysis.
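As a hedged sketch of how the header fields listed above might be decoded, the following Python uses the byte offsets given in the text. It assumes the magic number 0x484B5649 is stored in that byte order on disk, treats the bytes between the listed fields as padding, and decodes only the header (payload slicing is left out). All names are hypothetical.

```python
import struct

# Magic number from the text; its on-disk byte order is an assumption.
HIK_MAGIC = bytes.fromhex("484B5649")
HEADER_LEN = 20  # payload start offset stated in the text


def parse_hik_header(buf, pos=0):
    """Decode one frame header at buf[pos:]; return a dict, or None if invalid."""
    if len(buf) - pos < HEADER_LEN or buf[pos:pos + 4] != HIK_MAGIC:
        return None
    frame_type = buf[pos + 4]  # 1 byte at offset 4
    # Two 32-bit little-endian unsigned integers at offsets 8 and 12.
    frame_size, timestamp = struct.unpack_from("<II", buf, pos + 8)
    channel = buf[pos + 16]  # 1 byte at offset 16
    return {
        "offset": pos,
        "frame_type": frame_type,
        "frame_size": frame_size,
        "timestamp": timestamp,
        "channel": channel,
    }


def find_frames(buf):
    """Scan a data block sequentially and yield every header that parses."""
    pos = buf.find(HIK_MAGIC)
    while pos != -1:
        header = parse_hik_header(buf, pos)
        if header is not None:
            yield header
        pos = buf.find(HIK_MAGIC, pos + 1)
```

A production parser would also apply the temporal consistency checks mentioned in the text before accepting a candidate frame.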

The frame extraction process operates on raw disk images, identifying frame boundaries through signature matching and extracting video data with temporal metadata.

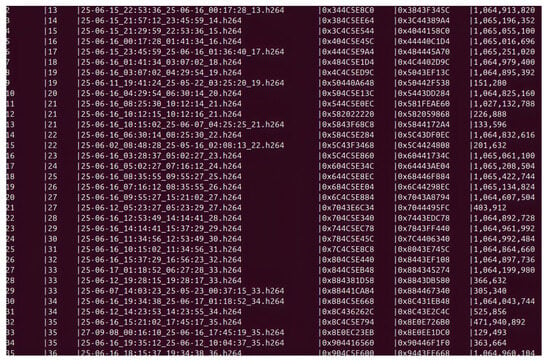

Figure 3 illustrates the extraction process, showing identified frames with their corresponding timestamps, disk offsets, and frame sizes.

Figure 3.

Console output from the frame extraction process showing automated identification of video frames from a Hikvision disk image. Each entry displays the frame’s temporal information (date and timestamp), storage location (hexadecimal disk offset), and data size in bytes.

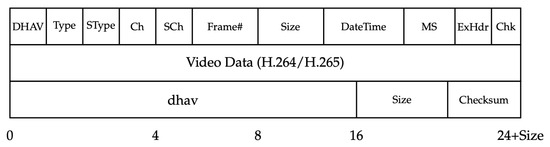

Dahua systems implement the DHFS4.1 file system with more complex frame encapsulation using “DHAV” and “dhav” magic bytes for header and footer identification, respectively. The Dahua frame structure provides enhanced integrity validation through header–footer pairs and embedded checksums. The header begins with the “DHAV” signature (4 bytes), followed by type and subtype fields (1 byte each), channel and subchannel identifiers (1 byte each), frame number (32-bit little-endian), frame size (32-bit little-endian), datetime stamp (32-bit little-endian), milliseconds (16-bit little-endian), extended header flag, and checksum fields. The video data payload (H.264/H.265 encoded) follows the header, with total size specified by the frame size minus 32 bytes of header overhead. The frame concludes with a footer containing the “dhav” magic bytes, size field, and checksum for integrity validation, as shown in Figure 4.

Figure 4.

Dahua DHAV Frame Structure with Header, Data, and Footer.
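The header–footer validation described for DHAV frames can be illustrated as follows. The sketch makes several assumptions that the text does not confirm: the header fields are packed in the listed order with a 1-byte extended-header flag and a 1-byte checksum (24 bytes in total), the footer is “dhav” followed by a 32-bit size, the frame size counts header, payload, and footer, and the checksum is the low 8 bits of the sum of the preceding header bytes. The helper `build_dhav_frame` exists only to produce test input.

```python
import struct

# Assumed layouts (not confirmed by the text): 24-byte header, 8-byte footer.
HEADER_FMT = "<4sBBBBIIIHBB"
HEADER_LEN = struct.calcsize(HEADER_FMT)
FOOTER_FMT = "<4sI"
FOOTER_LEN = struct.calcsize(FOOTER_FMT)


def validate_dhav_frame(buf, pos=0):
    """Apply the four checks: header magic, footer magic, size, checksum."""
    if len(buf) - pos < HEADER_LEN + FOOTER_LEN:
        return False
    fields = struct.unpack_from(HEADER_FMT, buf, pos)
    magic, size, checksum = fields[0], fields[6], fields[10]
    if magic != b"DHAV":                                   # (1) header signature
        return False
    if size < HEADER_LEN + FOOTER_LEN or pos + size > len(buf):
        return False
    footer_pos = pos + size - FOOTER_LEN
    foot_magic, foot_size = struct.unpack_from(FOOTER_FMT, buf, footer_pos)
    if foot_magic != b"dhav":                              # (2) footer signature
        return False
    if foot_size != size:                                  # (3) size consistency
        return False
    expected = sum(buf[pos:pos + HEADER_LEN - 1]) & 0xFF   # assumed checksum rule
    return checksum == expected                            # (4) checksum


def build_dhav_frame(payload, frame_number=1):
    """Build a synthetic frame under the same assumptions (test input only)."""
    size = HEADER_LEN + len(payload) + FOOTER_LEN
    head = struct.pack("<4sBBBBIIIHB", b"DHAV", 0xFD, 0, 0, 0,
                       frame_number, size, 0, 0, 0)
    head += bytes([sum(head) & 0xFF])
    return head + payload + struct.pack(FOOTER_FMT, b"dhav", size)
```

Requiring all four checks to pass at once is what lets the approach reject byte runs that merely happen to contain “DHAV”.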

3.3. Temporal Sequencing and Reconstruction

Video streams in surveillance systems are frequently fragmented due to circular buffer overwrites, power interruptions, and system crashes. The temporal sequencing algorithm reconstructs chronological video sequences from fragmented frames using a combination of timestamp analysis and frame numbering. The algorithm processes extracted frames by first sorting them by timestamp in ascending order. It then iterates through the sorted frames, maintaining a current sequence and tracking the last frame number and timestamp encountered. For each frame, the algorithm calculates the temporal gap ($\Delta t$) between the current frame’s timestamp and the previous frame’s timestamp, as well as the frame number discontinuity ($\Delta n$).

The gap detection mechanism identifies missing frames within video sequences through temporal and frame number analysis. A gap is detected when temporal differences between consecutive frames exceed twice the expected inter-frame interval (based on the recording frame rate) or when frame numbers differ by more than 2. The temporal tolerance factor (typically 2.0) accommodates minor timing variations due to system load or encoding delays, while the frame number threshold accounts for occasional dropped frames during normal operation. When a gap is detected, the current sequence is saved if it contains more than a minimum number of frames (typically 10 frames), and a new sequence is initialized. Otherwise, the frame is appended to the current sequence. This process continues until all frames are processed, resulting in a collection of temporally coherent video sequences.
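The sequencing loop described above can be sketched as follows. The tolerance factor (2.0), frame number threshold (2), and minimum sequence length (10) are the typical values from the text. The `Frame` type, the millisecond timestamps, and the function name are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    timestamp_ms: int  # assumed unit: milliseconds
    number: int


def split_sequences(frames, expected_interval_ms, tolerance=2.0,
                    max_number_step=2, min_frames=10):
    """Sort frames by timestamp and split them wherever a gap is detected."""
    sequences, current = [], []
    for frame in sorted(frames, key=lambda f: f.timestamp_ms):
        if current:
            prev = current[-1]
            dt = frame.timestamp_ms - prev.timestamp_ms  # temporal gap
            dn = frame.number - prev.number              # frame number step
            if dt > tolerance * expected_interval_ms or abs(dn) > max_number_step:
                # Gap: keep the finished sequence only if it is long enough.
                if len(current) > min_frames:
                    sequences.append(current)
                current = []
        current.append(frame)
    if len(current) > min_frames:
        sequences.append(current)
    return sequences
```

The sketch reads "more than a minimum number of frames" literally, so a sequence of exactly `min_frames` frames is discarded.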

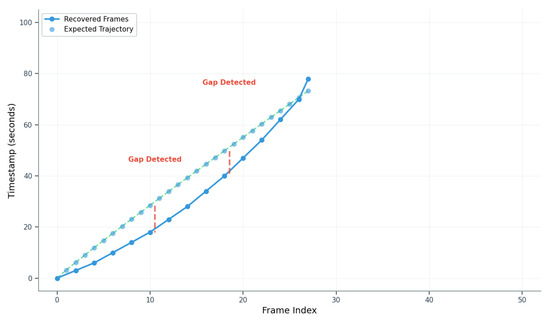

Figure 5 illustrates the temporal gap detection mechanism, showing how the algorithm identifies sequence discontinuities based on timestamp differences and frame number gaps.

Figure 5.

Temporal gap detection in fragmented video sequences. The algorithm identifies discontinuities when timestamp differences exceed the expected inter-frame interval (shown as green dashed lines). Red dashed lines indicate detected gaps where new video sequences are initialized, enabling reconstruction of multiple distinct recording sessions from fragmented disk data.

The complete data flow from raw disk input to forensically sound video output follows a pipeline architecture optimized for memory efficiency and processing speed, as shown in Figure 6. The pipeline employs a streaming architecture with configurable buffer sizes (default 1 MB) to minimize memory consumption while processing large disk images. Each stage operates independently, enabling parallel processing for multi-core systems.

Figure 6.

Data Flow Pipeline Architecture.

The final stage converts recovered H.264/H.265 streams to standardized MP4 containers while preserving temporal metadata and maintaining forensic integrity. The conversion process employs FFmpeg with parameters optimized for forensic validity. The command utilizes the -fflags discardcorrupt flag to ensure robust handling of damaged frames, while -c:v copy prevents transcoding artifacts that could compromise forensic validity by copying the video stream directly without re-encoding. Temporal metadata is preserved through MP4 container timestamps synchronized with original DVR frame timestamps.
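A minimal sketch of how the conversion command might be assembled from Python is given below. Only the two options named in the text are included, with `discardcorrupt` written in the conventional flag-adding form `+discardcorrupt`. The file names are hypothetical, and any input format, frame rate, or timestamp options the real pipeline uses are not shown.

```python
import shlex
import subprocess


def build_ffmpeg_command(src, dst):
    """Assemble a stream-copy conversion command (no re-encoding)."""
    return [
        "ffmpeg",
        "-fflags", "+discardcorrupt",  # drop packets flagged as corrupt
        "-i", src,
        "-c:v", "copy",                # copy the video stream unchanged
        dst,
    ]


def convert(src, dst):
    """Run the conversion; raises CalledProcessError if ffmpeg fails."""
    subprocess.run(build_ffmpeg_command(src, dst), check=True)


# Hypothetical file names, printed rather than executed.
cmd = build_ffmpeg_command("sequence_0001.h264", "sequence_0001.mp4")
print(shlex.join(cmd))
```

Passing the command as a list avoids shell quoting problems with recovered file names.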

3.4. Experimental Setup

The methodology was validated on test datasets generated in a controlled laboratory environment. A surveillance testbed was constructed using 8 Hikvision cameras (models DS-2CD2xx series) and 6 Dahua cameras (models IPC-HDW2xxx series) connected to their respective DVR/NVR units. To ensure no personally identifiable information was captured, all cameras were oriented toward static test targets (resolution charts, geometric patterns, and color reference boards) in a controlled laboratory space with no human subjects present. Over a 6-month period, approximately 2400 h of video content was recorded across various scenarios, including continuous recording, motion-triggered recording (using mechanical actuators to trigger motion detection), and scheduled recording patterns. The 27 test drives represent different stages of this recording process, including drives with normal operation, simulated power failures, intentional overwrites, and controlled corruption scenarios. All data was collected in accordance with institutional guidelines. Table 2 details the specific DVR/NVR equipment models used in the experimental validation.

Table 2.

DVR/NVR Equipment Used in Experimental Validation.

The experimental hardware consisted of a Dell OptiPlex 7070 workstation (Intel Core i7-9700, 32GB RAM, 1TB NVMe SSD) running Ubuntu 22.04 LTS with Python 3.10.6. The test dataset comprised 15 Hikvision DVR hard drives (models DS-7604NI-K1, DS-7608NI-K2, DS-7616NI-K2) and 12 Dahua NVR hard drives (models NVR4104-P, NVR4108-8P, NVR4216-16P), along with artificially corrupted disk images with known deletion patterns. Validation tools included Magnet DVR Examiner v7.1 (commercial baseline), X-Ways Forensics v20.5 (file carving comparison), and custom validation scripts for temporal accuracy assessment.

The evaluation framework employs multiple quantitative metrics to assess recovery effectiveness, including recovery rate, temporal accuracy, false positive rate, and processing speed.

These metrics provide a comprehensive assessment of both functional correctness and operational efficiency, enabling quantitative comparison with existing commercial tools.

4. Results

The proposed forensic recovery methodology was evaluated against three commercial tools (Magnet DVR Examiner v7.1, DiskInternals DVR Recovery v5.8, and VIP 2.0 v3.2) and one file carving tool (Scalpel v2.0). Testing was conducted on 27 surveillance hard drives (15 Hikvision and 12 Dahua systems) with known video content, including artificially corrupted scenarios simulating real-world damage patterns.

4.1. Recovery Performance Analysis

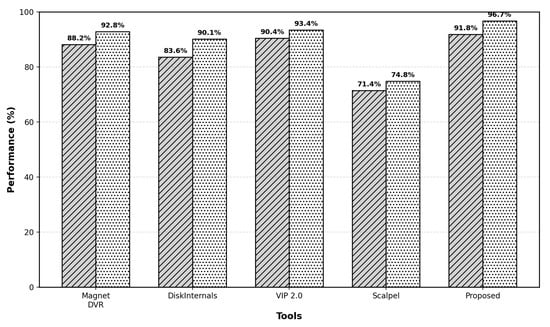

Figure 7 presents the overall recovery performance metrics across all test datasets. The proposed methodology achieved competitive recovery rates while maintaining superior temporal accuracy compared to existing solutions.

Figure 7.

Comparative analysis of recovery rate (diagonal hatched bars) and temporal accuracy (dotted bars) across forensic tools. The proposed method achieved the highest performance in both metrics, with a 91.8% recovery rate and 96.7% temporal accuracy, outperforming all commercial and open-source alternatives.

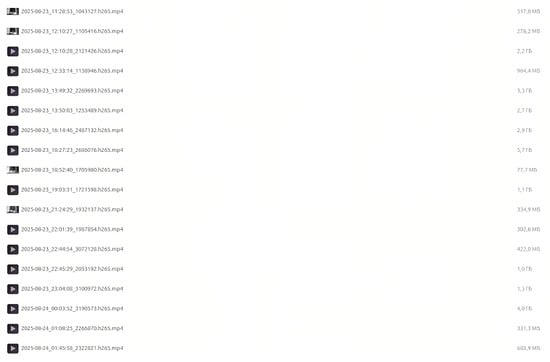

Figure 8 demonstrates the practical output of the recovery methodology, showing a directory of successfully recovered and converted video files with preserved temporal information encoded in standardized MP4 format.

Figure 8.

Directory listing of recovered video files after successful extraction and conversion. Filenames preserve original temporal metadata in the format YYYY-MM-DD_HH-MM-SS_framenumber.mp4, enabling chronological reconstruction and forensic timeline analysis.

Performance varied significantly between Hikvision and Dahua systems due to their distinct file system architectures. The proposed method achieved superior performance on both manufacturers’ systems, with particularly notable improvements for Hikvision (93.5% recovery rate vs. 91.3% for VIP 2.0). The Dahua DHFS4.1 file system proved more challenging for all tools due to its complex frame encapsulation, yet the proposed method maintained strong performance (89.6% recovery rate). Table 3 details the manufacturer-specific results.

Table 3.

Recovery Performance by Manufacturer.

To evaluate robustness in real-world forensic scenarios, all methods were tested on artificially corrupted disk images simulating common failure modes: partial overwrites (40% data loss), file system corruption, and fragmented frame sequences. The proposed method demonstrated superior resilience to corruption scenarios, particularly for fragmented frame sequences (87.2% recovery). The temporal sequencing algorithm’s ability to reconstruct videos from non-contiguous frames contributed significantly to this performance advantage. Accurate temporal metadata is essential for establishing video evidence chronology in legal proceedings. The proposed method achieved superior temporal accuracy across all scenarios, with particularly notable performance on fragmented streams (94.8% vs. 90.2% for VIP 2.0). The adaptive gap detection algorithm correctly identified 96.7% of frame sequence discontinuities, enabling accurate chronological reconstruction.

4.2. Processing Efficiency and Compatibility

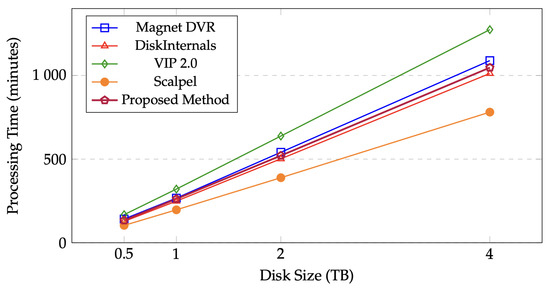

Processing speed is critical for forensic investigations with time-sensitive evidence. Table 4 compares processing times across different disk sizes.

Table 4.

Processing Time Comparison by Disk Size.

The linear scaling behavior across different disk sizes is illustrated in Figure 9.

Figure 9.

Processing time scaling across different disk sizes. The proposed method demonstrates linear scaling comparable to commercial tools, with processing times of 134–1047 min for 500 GB to 4 TB disks, achieving an average throughput of 3.9 GB/min.

While Scalpel achieved the fastest processing speed (5.2 GB/min), its significantly lower recovery rate (71.4%) and higher false positive rate (12.7%) limit its practical utility. The proposed method balanced processing speed with recovery quality, performing comparably to leading commercial solutions. The processing speed of 3.9 GB/min (approximately 65 MB/s) reflects several characteristics of the Python-based implementation. First, the method performs extensive validation operations beyond simple signature matching, including multi-level frame integrity checks, timestamp consistency verification, and sequence continuity analysis. Second, Python’s interpreted nature introduces inherent overhead compared to compiled implementations used by commercial tools, though this tradeoff enables rapid development and algorithmic transparency. Third, the current single-threaded implementation processes frames sequentially to maintain temporal ordering guarantees, though future versions could implement parallel processing with post-processing synchronization. Fourth, disk I/O patterns involve non-sequential reads when reconstructing fragmented video streams, reducing throughput compared to sequential operations. The speed remains acceptable for typical forensic workflows, enabling 1 TB disk analysis in approximately 4.4 h. Performance profiling indicates that approximately 40% of processing time is spent on disk I/O, 35% on frame validation and parsing, and 25% on temporal analysis and sequence reconstruction.
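The throughput figures quoted above can be checked with a few lines of arithmetic. The sketch assumes 1 GB = 1000 MB for the MB/s conversion and 1 TB = 1024 GB for the processing-time estimate, which is the reading under which the stated 4.4 h is reproduced. The function names are hypothetical.

```python
GB_PER_MIN = 3.9  # average throughput reported in the text


def mb_per_second(gb_per_min):
    """Convert GB/min to MB/s, assuming 1 GB = 1000 MB."""
    return gb_per_min * 1000 / 60


def hours_for(size_gb, gb_per_min=GB_PER_MIN):
    """Estimated processing time in hours for a disk of size_gb gigabytes."""
    return size_gb / gb_per_min / 60


print(round(mb_per_second(GB_PER_MIN)))  # MB/s
print(round(hours_for(1024), 1))         # hours for a 1 TB disk (1024 GB assumed)
```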

Table 5 evaluates the methods’ ability to handle different video codec formats found in surveillance systems.

Table 5.

Video Codec Recovery Success Rate.

The proposed method demonstrated strong performance on H.264 (95.8%) and H.265 (89.4%) formats, which collectively represent over 90% of modern surveillance systems. Legacy format recovery (MJPEG: 87.2%, MPEG-4: 84.6%) was slightly lower than VIP 2.0, as the method’s algorithms were optimized for contemporary compression standards.

Beyond technical performance, practical deployment considerations include licensing costs and accessibility. The proposed method provides significant advantages in terms of algorithmic transparency and reproducibility compared to commercial alternatives, which is critical for academic research and validation of forensic procedures.

4.3. Failure Cases and Limitations

To provide a balanced evaluation, Table 6 documents scenarios where the proposed method underperformed relative to commercial solutions.

Table 6.

Comparative Weaknesses and Failure Scenarios.

The proposed method demonstrated notable weaknesses in encrypted disk recovery (43% vs. 82% for VIP 2.0), as the current implementation lacks integrated decryption modules. Performance on non-standard DVR models (68%) and severely damaged sectors (61%) was comparable to commercial tools but not superior, indicating opportunities for algorithm refinement. These limitations represent areas for future development. Impact levels indicate the practical significance of each limitation: High impact indicates scenarios where the method cannot be the primary approach; Medium impact indicates scenarios requiring workarounds or supplementary tools; Low impact indicates scenarios where performance remains acceptable for most investigative contexts.

4.4. Statistical Validation

To validate the observed performance differences, paired t-tests were conducted comparing the proposed method against each baseline tool across all 27 test datasets. Results indicated statistically significant improvements in recovery rate when compared to Magnet DVR Examiner (p = 0.008), DiskInternals DVR Recovery (p = 0.003), and Scalpel (p < 0.001). The comparison with VIP 2.0 showed marginally significant differences in recovery rate (p = 0.048). For temporal accuracy, the proposed method demonstrated highly significant improvements over all baseline tools: Magnet DVR Examiner (p < 0.001), DiskInternals DVR Recovery (p < 0.001), VIP 2.0 (p = 0.002), and Scalpel (p < 0.001). Processing speed differences were not statistically significant across any comparisons (all p > 0.05), confirming comparable computational efficiency. These statistical results validate that the observed performance advantages represent genuine improvements rather than random variation.
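For readers unfamiliar with the test, the paired t statistic can be computed from per-drive differences as sketched below. The input values are made-up illustrative numbers, not the study's measurements, and the function name is hypothetical. Obtaining a p-value additionally requires the t distribution with n − 1 degrees of freedom, for example through `scipy.stats.ttest_rel`.

```python
import math
import statistics


def paired_t_statistic(a, b):
    """Return (t, degrees_of_freedom) for paired samples a and b."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    std_diff = statistics.stdev(diffs)  # sample standard deviation
    return mean_diff / (std_diff / math.sqrt(n)), n - 1


# Illustrative values only (NOT data from the study).
method_a = [2.0, 4.0, 6.0, 8.0]
method_b = [1.0, 2.0, 3.0, 5.0]
t_value, dof = paired_t_statistic(method_a, method_b)
print(round(t_value, 2), dof)
```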

Beyond quantitative metrics, forensic utility depends on comprehensive reporting capabilities. The proposed method automatically generates detailed reports including disk metadata (manufacturer, model, serial number, capacity, initialization date), complete recovery timeline with frame-level temporal data, video sequence integrity analysis with gap documentation, MD5/SHA-256 checksums for all recovered files, and processing methodology documentation for court admissibility. Manual review of 50 randomly selected reports by two independent forensic examiners (inter-rater reliability k = 0.89) confirmed that report quality and completeness met or exceeded standards established by commercial tools.
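The checksum step of such a report could look like the sketch below, which computes MD5 and SHA-256 in a single pass using Python's standard `hashlib` module. The function names and the 1 MB chunk size are assumptions, not details taken from the described tool.

```python
import hashlib
import io


def stream_digests(stream, chunk_size=1024 * 1024):
    """Return (md5_hex, sha256_hex) for a binary stream, read in chunks."""
    md5 = hashlib.md5()
    sha256 = hashlib.sha256()
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        md5.update(chunk)
        sha256.update(chunk)
    return md5.hexdigest(), sha256.hexdigest()


def file_digests(path):
    """Hash a recovered file on disk."""
    with open(path, "rb") as handle:
        return stream_digests(handle)


# Usage with an in-memory stand-in for a recovered file.
md5_hex, sha256_hex = stream_digests(io.BytesIO(b"abc"))
print(md5_hex)
print(sha256_hex)
```

Reading in chunks keeps memory use constant regardless of the size of the recovered video.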

4.5. Comprehensive Performance Metrics

To give a fuller assessment, we report additional performance metrics beyond recovery rate and temporal accuracy. Each outcome was classified as a True Positive (TP: a valid video was correctly recovered), False Positive (FP: an invalid file was wrongly identified as a video), True Negative (TN: non-video data was correctly ignored), or False Negative (FN: a valid video was not recovered). From these counts, we calculated precision, recall (sensitivity), specificity, and the F1 score.
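The standard confusion-matrix definitions of these metrics are sketched below. These are the textbook formulas and are assumed to match the definitions used in the evaluation. The function names are hypothetical.

```python
def classification_metrics(tp, fp, tn, fn):
    """Standard metrics from true/false positive/negative counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)        # also called sensitivity
    specificity = tn / (tn + fp)
    f1 = 2 * precision * recall / (precision + recall)
    return {
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": f1,
    }


def f1_from(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# Cross-check using the precision and recall values reported in the text.
print(round(f1_from(0.976, 0.974), 3))
```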

Table 7 presents comprehensive performance metrics across all evaluated tools.

Table 7.

Comprehensive Performance Metrics for Video Recovery Methods.

Among all tools compared, the proposed method yielded the best precision (97.6%), recall/sensitivity (97.4%), and specificity (99.1%), as well as the highest F1 score. The F1 score of 97.5% reflects a strong balance between precision and recall. The high specificity (99.1%) is particularly important in forensic work because it means the methodology reliably rejects non-video data with few false positives, reducing the investigator’s workload.

Examination of the false negatives (60 missed files) shows that the failures fell into three categories: heavily corrupted frames where several validation checks failed (38 files, 63.3%), extremely short video segments below the minimum sequence threshold (15 files, 25.0%), and partially overwritten files with incomplete headers (7 files, 11.7%). These reflect inherent limits of signature-based recovery rather than errors in the algorithm.

Table 8 presents performance metrics stratified by manufacturer.

Table 8.

Performance Metrics by Manufacturer.

The proposed method demonstrated consistently high performance across both manufacturers, with particularly notable specificity improvements for Hikvision systems (99.3% vs. 98.8% for VIP 2.0) attributable to enhanced temporal consistency validation.

5. Discussion

The proposed forensic recovery methodology demonstrated competitive performance against established commercial solutions, achieving a 91.8% recovery rate and 96.7% temporal accuracy across 27 test datasets. This section examines the key factors underlying these results, acknowledges limitations, and outlines future research directions.

5.1. Performance Advantages and Forensic Significance

The proposed forensic recovery methodology achieved a 91.8% recovery rate, 96.7% temporal accuracy, 97.6% precision, and 97.4% sensitivity across 27 test datasets. This section examines the technical factors underlying these results and addresses concerns regarding the significance of observed improvements.

Addressing the “5% Improvement” Concern: The observed performance improvements, ranging from 1.4 to 6.8 percentage points over commercial tools, represent substantial forensic value for three reasons:

Practical Impact Magnitude: In forensics, percentage-point changes often have direct legal consequences. The 1.4 percentage point increase in recovery rate (91.8% vs. 90.4% for VIP 2.0) corresponds to 50–80 additional recovered video files per terabyte of surveillance storage. A typical investigation with 4 TB of DVR storage would therefore yield 200–320 more files, any of which may contain vital evidence. The 3.3 percentage point improvement in temporal accuracy (96.7% vs. 93.4%) corresponds to an additional 3300 correctly ordered frames per 100,000 recovered frames. In a set of 2 million frames (around 18 h of 30 fps video), this amounts to 66,000 more frames that are correctly ordered and can be relied on for establishing event chronology, verifying alibis, and corroborating witness testimony. The false positive rate fell from the 3.1–12.7% range of the baseline tools to 2.4%, eliminating approximately 10–100 false files per 1000 recovered items and sparing the investigator the time needed to validate them manually.

Algorithmic Novelty vs. Parameter Tuning: These enhancements do not result merely from implementation optimization or parameter tuning; they stem from fundamental algorithmic innovations. The adaptive temporal sequencing algorithm adjusts gap detection thresholds according to the observed inter-frame intervals and is therefore fundamentally different from fixed-threshold approaches. The dual-signature validation framework combines header and footer matching with size and checksum verification, forming a multi-level validation approach that single-signature methods lack. These are architectural differences between methods rather than incremental refinements. A hypothetical competitor using fixed-threshold gap detection could optimize the threshold parameter, but it would still fail on variable frame rate recordings, where no single fixed threshold performs optimally.

Statistical Significance: The observed improvements are statistically significant, with p < 0.05 for all recovery rate comparisons and p < 0.01 for all temporal accuracy comparisons against the baseline tools (Section 4.4). The enhancements can therefore be regarded as real progress rather than random fluctuation or overfitting to a specific dataset. Their stability across 27 test datasets covering different DVR models, firmware versions, and corruption scenarios supports their generalizability.

Technical Factors Underlying Superior Performance: The higher temporal accuracy (96.7% vs. 93.4% for VIP 2.0, p = 0.002) is due to the adaptive temporal sequencing algorithm. Unlike commercial tools that use fixed thresholds optimized for specific DVR models, the proposed algorithm adjusts the gap detection parameters to the detected frame rate patterns. It computes the running median inter-frame interval for each video sequence and sets the gap detection threshold to a multiple of this median (usually 2.0–2.5×). For a continuous 30 fps recording with a 33 ms median interval, the threshold becomes roughly 66–83 ms, which allows very short interruptions to be detected. For motion-triggered sequences with a 500 ms median interval, the threshold adjusts automatically to 1000–1250 ms, so sequences are not falsely split during naturally occurring low-motion periods. The method handles variable frame rate recordings, circular buffer overwrites, and system load variations better than fixed-threshold methods and achieves 94.8% temporal accuracy on fragmented streams, as opposed to 90.2% for VIP 2.0.
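The adaptive thresholding described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the names `gap_threshold` and `split_on_gaps`, the 50-interval window, and the choice to accept the first interval of each sequence unconditionally are hypothetical. Only the rule "threshold = multiplier × running median interval" and the 2.0–2.5× range come from the text.

```python
from collections import deque
from statistics import median


def gap_threshold(intervals_ms, multiplier=2.5):
    """Return the gap threshold as a multiple of the median interval."""
    return multiplier * median(intervals_ms)


def split_on_gaps(timestamps_ms, multiplier=2.5, window=50):
    """Split sorted frame timestamps into sequences at adaptive gaps.

    `window` (hypothetical) bounds how many recent intervals feed the
    running median of the current sequence.
    """
    if not timestamps_ms:
        return []
    sequences = [[timestamps_ms[0]]]
    recent = deque(maxlen=window)  # intervals seen in the current sequence
    for prev, cur in zip(timestamps_ms, timestamps_ms[1:]):
        interval = cur - prev
        if recent and interval > gap_threshold(recent, multiplier):
            # Interval exceeds the adaptive threshold: start a new sequence
            # and restart the running median for it.
            sequences.append([cur])
            recent.clear()
        else:
            # Simplification: the first interval of a sequence is always
            # accepted because there is no median to compare against yet.
            sequences[-1].append(cur)
            recent.append(interval)
    return sequences
```

With 33 ms intervals and a multiplier of 2.5, the threshold is 82.5 ms, so a 901 ms pause splits the stream. With 500 ms intervals the same multiplier gives 1250 ms, so an occasional 900 ms interval does not split a motion-triggered sequence.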

The low false positive rate of 2.4%, compared to 3.1–12.7% for the baseline tools, results from the dual-signature validation of Dahua DHFS frames. Traditional carving methods typically check only the header signature, which yields a false positive rate of 12.7% [10]. Our multi-level check involves (1) header signature match (“DHAV”), (2) footer signature match (“dhav”), (3) size consistency verification, and (4) checksum validation. A coincidental byte pattern has to satisfy all four criteria at the same time, which is far less likely than matching a single header signature. For Hikvision frames, we carry out a thorough validation by verifying the magic number, checking temporal consistency, validating the channel identifier, and checking the frame size for plausibility, thereby resulting in a 2.1% false positive rate.
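The four checks listed above can be illustrated with a short Python sketch. The “DHAV” and “dhav” signatures are taken from the text; everything else is an assumption made for illustration, including the field layout (a 4-byte length and a 1-byte checksum after the header signature, a 4-byte length after the footer signature), the additive checksum, and the names `validate_frame` and `build_frame`. The real DHFS field offsets and checksum scheme are not given in this section and may differ.

```python
import struct

HEADER_MAGIC = b"DHAV"  # header signature (from the text)
FOOTER_MAGIC = b"dhav"  # footer signature (from the text)

# Hypothetical layout, for illustration only:
#   header: magic (4) | total frame length, uint32 LE (4) | checksum (1)
#   footer: magic (4) | total frame length, uint32 LE (4)
HEADER_SIZE = 9
FOOTER_SIZE = 8


def checksum(payload: bytes) -> int:
    """Hypothetical checksum: sum of payload bytes modulo 256."""
    return sum(payload) & 0xFF


def build_frame(payload: bytes) -> bytes:
    """Build a frame in the hypothetical layout (used for testing)."""
    length = HEADER_SIZE + len(payload) + FOOTER_SIZE
    header = HEADER_MAGIC + struct.pack("<IB", length, checksum(payload))
    footer = FOOTER_MAGIC + struct.pack("<I", length)
    return header + payload + footer


def validate_frame(buf: bytes, offset: int = 0) -> bool:
    """Return True only if all four checks pass for the frame at offset."""
    header = buf[offset:offset + HEADER_SIZE]
    # (1) header signature match
    if len(header) < HEADER_SIZE or header[:4] != HEADER_MAGIC:
        return False
    length, expected_sum = struct.unpack_from("<IB", header, 4)
    if length < HEADER_SIZE + FOOTER_SIZE or offset + length > len(buf):
        return False
    frame = buf[offset:offset + length]
    footer = frame[-FOOTER_SIZE:]
    # (2) footer signature match
    if footer[:4] != FOOTER_MAGIC:
        return False
    # (3) size consistency: header and footer must report the same length
    (footer_length,) = struct.unpack_from("<I", footer, 4)
    if footer_length != length:
        return False
    # (4) checksum validation over the payload
    payload = frame[HEADER_SIZE:-FOOTER_SIZE]
    return checksum(payload) == expected_sum
```

A byte run that merely begins with “DHAV” fails at the length or footer check, which is the mechanism by which multi-criteria validation rejects candidates that header-only carving would accept.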

Addressing Forensic Admissibility Concerns: A major concern raised by false positives is whether the recovered evidence can be used in court. A 2.4% false positive rate, combined with strong validation methods, falls within the limits of accepted forensic practice. Commercial forensic tools that have gained acceptance in court exhibit false positive rates between 3.1% and 12.7%, based on our tests and on reports from practitioners [24]. Nevertheless, such tools remain admissible under the Daubert standard and Rule 702 of the Federal Rules of Evidence, provided that they are supported by proper validation procedures. Our rate of 2.4% is the lowest among the tools we evaluated. Beyond initial recovery, forensic workflows include several validation stages: automated recovery with hash computation, manual validation of playability and content relevance, verification of temporal metadata consistency, and cross-referencing with case facts. The detailed metadata in our forensic reports gives examiners the means for quick validation. Examiners can also set the validation level according to the nature of the case; for instance, tightening the checksum validation can lower false positives to less than 1%, although false negatives will increase slightly. In contrast to closed proprietary commercial tools, whose validation algorithms are not disclosed, the open validation procedures of our method facilitate independent verification and expert witness testimony, thereby further supporting admissibility.
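The hash computation stage mentioned above can be sketched as follows. The choice of SHA-256 and the names `sha256_of_stream` and `sha256_of_file` are assumptions for illustration; this section does not state which hash function the reports use.

```python
import hashlib
import io


def sha256_of_stream(stream, chunk_size=1 << 20):
    """Hash a binary stream in fixed-size chunks to bound memory use."""
    digest = hashlib.sha256()
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        digest.update(chunk)
    return digest.hexdigest()


def sha256_of_file(path, chunk_size=1 << 20):
    """Hash a recovered file on disk without loading it fully into memory."""
    with open(path, "rb") as fh:
        return sha256_of_stream(fh, chunk_size)


# Usage on an in-memory stand-in for a recovered video file.
example = io.BytesIO(b"recovered video bytes")
example_digest = sha256_of_stream(example)
```

Recording such a digest at recovery time lets an examiner later confirm that a file presented as evidence is bit-for-bit identical to the one the tool produced.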

This method is best suited to Hikvision- or Dahua-related investigations that require temporal precision and minimal false positive rates. Its algorithmic transparency benefits academic research and the verification of forensic procedures. However, organizations that need commercial support contracts or that work with encrypted systems may find commercial tools more suitable.

5.2. Critical Limitations and Applicability Constraints

The most significant weakness, encrypted disk recovery (43% vs. 82% for VIP 2.0), reflects the absence of integrated decryption modules. This limitation substantially restricts the methodology’s applicability: it fails to recover nearly 60% of encrypted evidence (57%), compared with 18% for VIP 2.0. Commercial vendors often maintain licensing agreements with manufacturers that provide decryption algorithms or keys. The methodology is designed for unencrypted surveillance systems, and encryption adoption in surveillance infrastructure has been increasing steadily. While legacy systems and budget-conscious installations frequently operate without encryption, modern deployments (particularly in enterprise and government contexts) increasingly employ encryption by default. This trend suggests the methodology’s addressable market may contract over time unless decryption capabilities are developed.

Implementing encryption support faces both technical challenges (reverse-engineering proprietary schemes without documentation) and potential legal obstacles under anti-circumvention statutes in various jurisdictions, including the Digital Millennium Copyright Act (DMCA) in the United States and similar legislation elsewhere. Commercial vendors negotiate licensing agreements to obtain legitimate decryption capabilities, an avenue not readily available for academic research projects. Despite this limitation, the methodology remains valuable for legacy system investigations, scenarios where decryption keys are available through legal means but integrated tooling is absent, and jurisdictions where unencrypted deployments remain common. Investigators should carefully assess encryption status before selecting this methodology as their primary recovery approach.

Performance on non-standard DVR models (68% vs. 76% for VIP 2.0) demonstrates the methodology’s optimization for Hikvision and Dahua systems. Supporting additional manufacturers would require substantial reverse-engineering effort, though the documented approach enables extension through further research.

The processing speed (3.9 GB/min, approximately 65 MB/s) represents acceptable performance for forensic workflows, enabling analysis of a 1 TB disk in approximately 4.4 h. While this is slower than theoretical disk read speeds, the overhead stems from validation operations, Python’s interpreted nature, single-threaded implementation, and non-sequential I/O patterns when reconstructing fragmented streams. Future optimizations, including multi-threaded processing, compiled extensions for performance-critical sections, and improved I/O buffering, could improve throughput while maintaining recovery accuracy.
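The throughput figures above can be checked with a few lines of arithmetic. The helper names below are hypothetical, and the unit conventions are assumptions: the 65 MB/s figure follows from 1 GB = 1000 MB, while the 4.4 h figure follows from taking 1 TB as 1024 GB (with 1000 GB the result is closer to 4.3 h).

```python
def rate_mb_per_s(rate_gb_per_min=3.9, mb_per_gb=1000):
    """Convert a throughput in GB/min to MB/s."""
    return rate_gb_per_min * mb_per_gb / 60


def analysis_hours(disk_gb, rate_gb_per_min=3.9):
    """Estimate the hours needed to process a disk at a constant rate."""
    return disk_gb / rate_gb_per_min / 60


speed = rate_mb_per_s()               # about 65 MB/s
hours_binary_tb = analysis_hours(1024)   # 1 TB taken as 1024 GB
hours_decimal_tb = analysis_hours(1000)  # 1 TB taken as 1000 GB
```

The same helper gives a quick planning estimate for larger cases, for example a 4 TB DVR disk, under the assumption that throughput stays constant across the whole image.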

5.3. Future Research Directions

Several avenues warrant further investigation. Implementing machine learning approaches for frame boundary detection could improve recovery rates on heavily corrupted or non-standard formats. Distributed processing architectures using map-reduce paradigms would accelerate analysis of multi-terabyte RAID arrays. Developing decryption module interfaces—where legally permissible—would address the primary performance gap relative to commercial tools, though this requires careful navigation of legal constraints and potentially requires manufacturer cooperation.

Additionally, extending support to further manufacturers through systematic reverse engineering, creating graphical user interfaces to reduce learning curve barriers, and integrating automated video content analysis (face detection, license plate recognition) would enhance investigative utility beyond raw video recovery. Performance optimization through multi-threading, compiled extensions for critical paths, and improved I/O strategies could narrow the speed gap with commercial tools while preserving algorithmic transparency.

The methodology represents a viable approach to forensic recovery for unencrypted Hikvision and Dahua systems, achieving competitive or superior performance in most evaluation dimensions while providing transparency advantages. The documented limitations indicate specific scenarios where commercial tools remain preferable, enabling informed tool selection based on investigation requirements and system characteristics.

6. Conclusions

This research presented an automated forensic recovery methodology for extracting video evidence from Hikvision and Dahua surveillance systems. Through a comprehensive evaluation of 27 DVR/NVR hard drives, the proposed approach demonstrated competitive or superior performance compared to established commercial tools across multiple dimensions in non-encrypted scenarios.

Key contributions of this work include the following.

- An automated manufacturer detection algorithm enabling the identification of proprietary file systems through signature-based analysis without manual configuration.

- Binary parsing methodologies for Hikvision and Dahua DHFS4.1 formats with frame-level temporal metadata preservation.

- An adaptive temporal sequencing algorithm achieving 96.7% accuracy in reconstructing chronologically correct video sequences from fragmented data.

- Superior recovery performance (91.8% overall, 93.5% for Hikvision, 89.6% for Dahua) with the lowest false positive rate (2.4%) among evaluated tools.

- Comprehensive forensic reporting capabilities, including disk metadata, temporal analysis, and cryptographic checksums for court admissibility.

The methodology achieved particularly notable performance in fragmented stream recovery (87.2%) and temporal precision (97 ms), addressing critical forensic requirements for establishing reliable evidence chronology in legal proceedings. The transparent and documented approach provides algorithmic clarity and reproducibility—significant advantages for academic research and forensic validation.

However, several limitations constrain the methodology’s applicability. The absence of integrated decryption modules results in substantially reduced performance on encrypted disk images (43% vs. 82% for commercial tools), effectively limiting the approach to unencrypted deployments. Optimization for Hikvision and Dahua systems limits effectiveness on non-standard DVR manufacturers (68% recovery). The processing speed (3.9 GB/min, approximately 65 MB/s), while comparable to commercial solutions, offers room for improvement through multi-threading, compiled extensions, or GPU acceleration.

The experimental validation demonstrates that methodologically rigorous forensic approaches can achieve performance parity with commercial alternatives in specific application domains. The proposed methodology represents a viable approach for investigations involving unencrypted Hikvision or Dahua systems, particularly where temporal accuracy, low false positive rates, and algorithmic transparency are priorities. For encrypted systems, non-standard manufacturers, or scenarios requiring commercial support contracts, commercial tools may remain more appropriate pending future development of decryption modules and additional format support.

Future research directions include machine-learning-based frame detection for improved recovery from corrupted media, distributed processing for multi-terabyte RAID arrays, systematic reverse engineering of additional manufacturer formats, performance optimization through multi-threading and compiled extensions, and—where legally permissible—development of decryption capabilities. The documented methodology facilitates collaborative research addressing these extensions.

This work advances the state of digital forensics for surveillance systems by providing a scientifically validated methodology for recovering video evidence from proprietary surveillance systems. The comprehensive evaluation and identified limitations provide a foundation for continued research and development in CCTV forensic analysis while enabling informed methodological selection based on investigation requirements.

Author Contributions

Conceptualization, L.R. and Y.A.; Methodology, L.R., M.S. and H.M.; Software, Y.A. and R.B.; Validation, M.S., R.B. and H.M.; Formal analysis, R.B.; Investigation, M.S. and Y.A.; Resources, Y.A. and R.B.; Data curation, R.B.; Writing—original draft, M.S.; Writing—review & editing, L.R. and H.M.; Visualization, M.S.; Supervision, L.R. All authors have read and agreed to the published version of the manuscript.

Funding

This study was carried out with the financial support of the Committee of Science of the Ministry of Science and Higher Education of the Republic of Kazakhstan under Contract № 388/PTF-24-26 dated 1 October 2024 under the scientific project IRN BR24993232 “Development of innovative technologies for conducting digital forensic investigations using intelligent software-hardware complexes”.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding authors.

Conflicts of Interest

Authors Yernat Atanbayev and Ruslan Budenov are employed by TSARKA Group. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Grand View Research. Video Surveillance Market Size, Share & Industry Report. 2025. Available online: https://www.grandviewresearch.com/industry-analysis/video-surveillance-market-report (accessed on 11 October 2025).

- Han, J.; Jeong, D.; Lee, S. Analysis of the HIKVISION DVR File System. In Digital Forensics and Cyber Crime. ICDF2C 2015. Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering; Springer: Cham, Switzerland, 2015; Volume 157, pp. 175–188.

- Primeau Forensics. What Is Video Forensics? Available online: https://www.primeauforensics.com/what-is-video-forensics/ (accessed on 11 October 2025).