Differentiating Between Human-Written and AI-Generated Texts Using Automatically Extracted Linguistic Features

Abstract

1. Introduction

1.1. Automatic Elicitation of Linguistic Features

- •

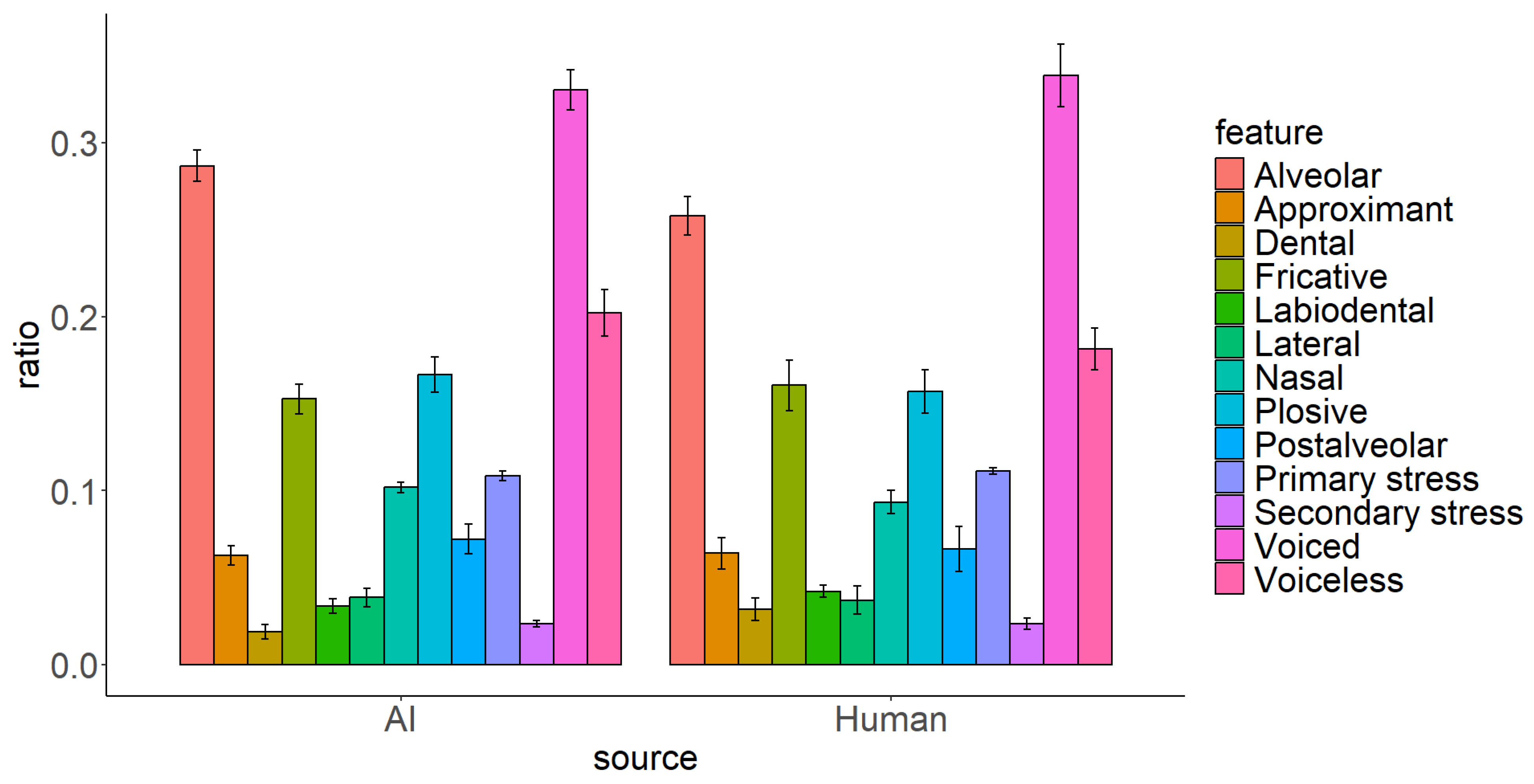

- Phonology: Measures include the number and ratios of syllables, vowels, words with primary and secondary stress, consonants per place and manner of articulation, and voiced and voiceless consonants.

- •

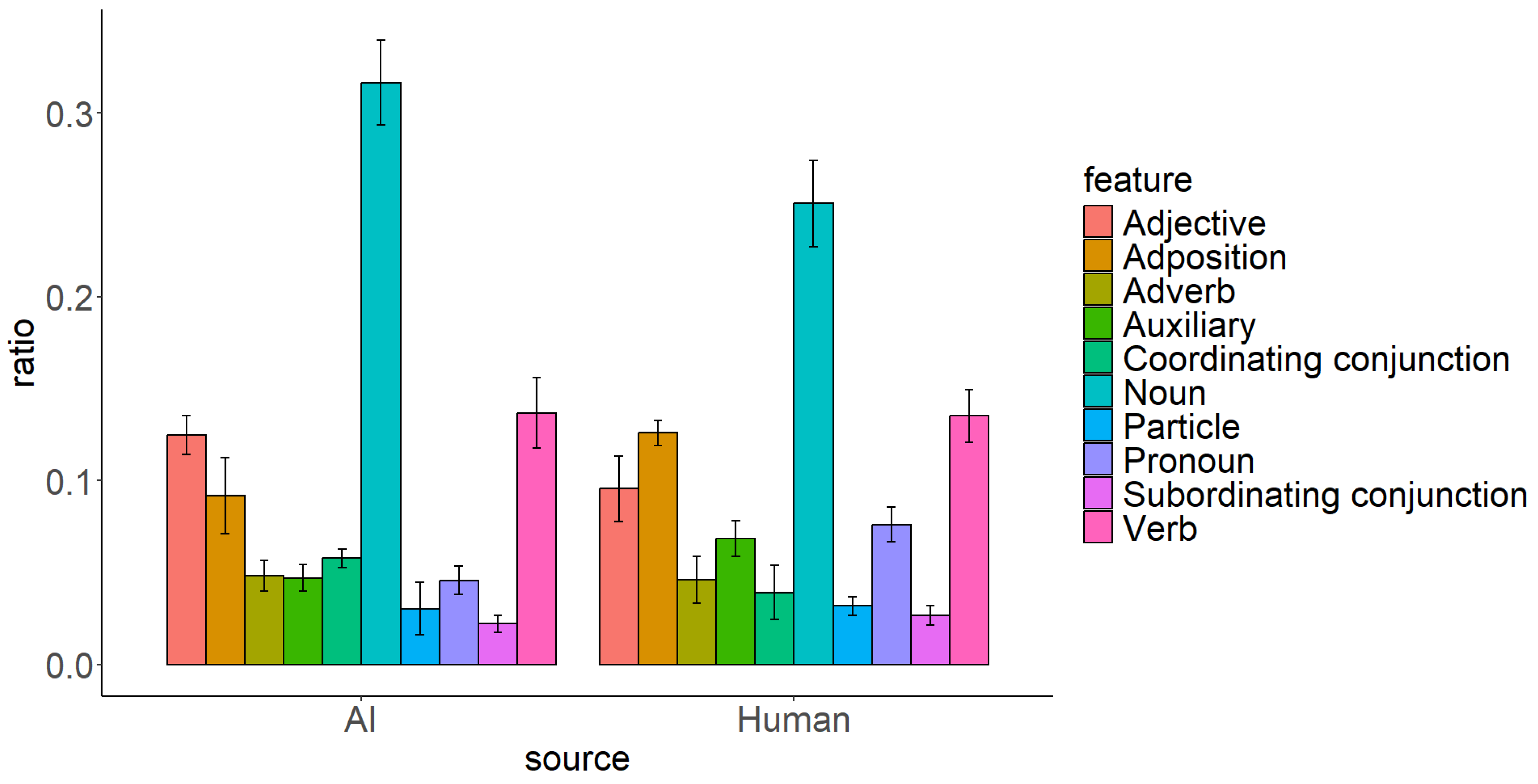

- Morphology: Includes the counts and ratios of parts of speech (e.g., verbs, nouns, adjectives, adverbs, conjunctions, etc.) relative to the total number of words.

- •

- Syntax: Calculates the counts and ratios of syntactic constituents (e.g., modifiers, case markers, direct objects, nominal subjects, predicates, etc.).

- •

- Lexicon: Provides metrics such as the total number of words, hapax legomena (words that occur once), Type Token Ratio (TTR), and others.

- •

- Semantics: Estimates counts and ratios of semantic entities within the text (e.g., persons, dates, locations, etc.).

- •

- Readability Measures: Assessments of text readability and grammatical structure.

1.2. This Study

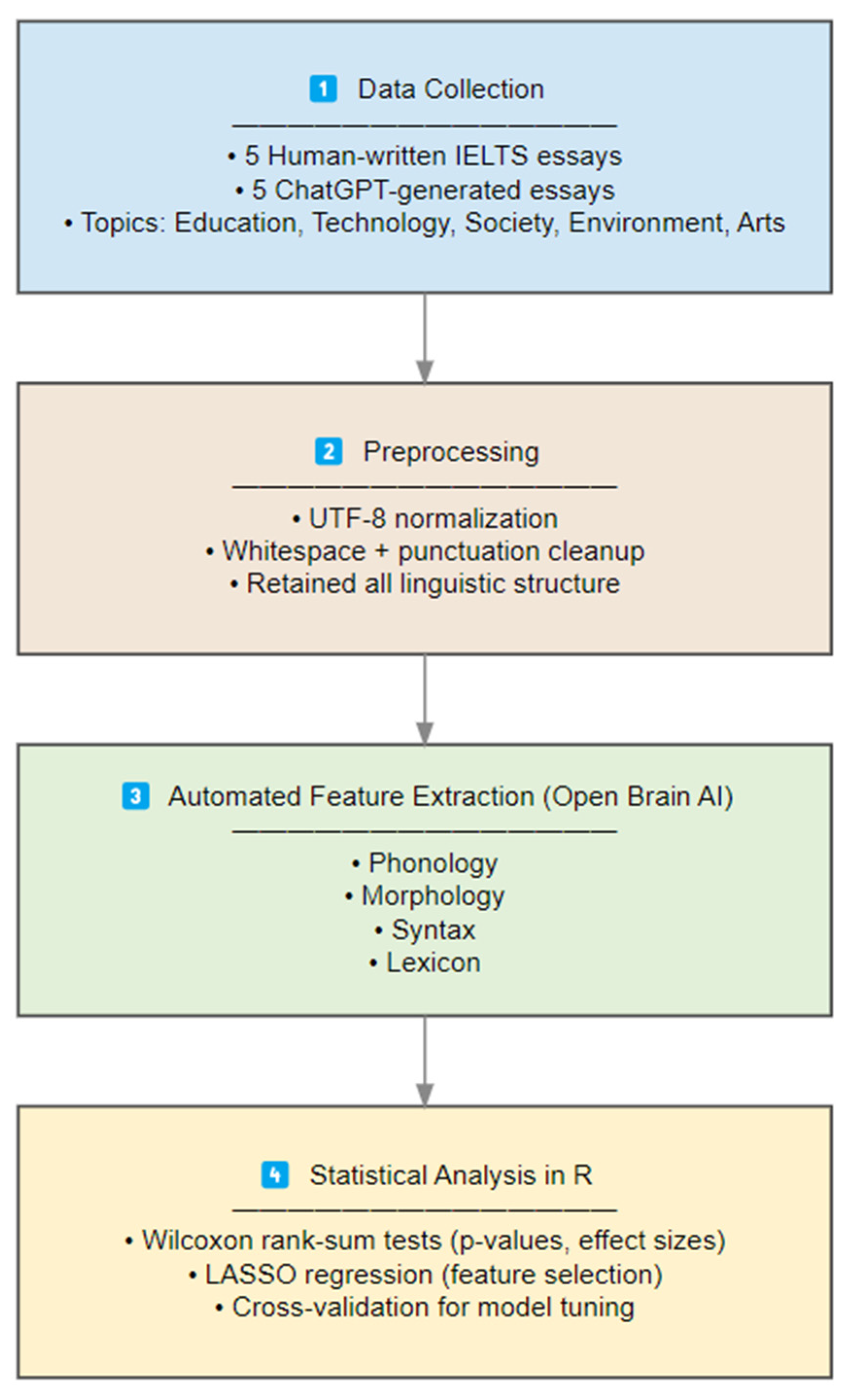

2. Methodology

2.1. Procedure

2.2. Statistical Analysis

3. Results

4. Discussion

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Kasneci, E.; Seßler, K.; Küchemann, S.; Bannert, M.; Dementieva, D.; Fischer, F.; Kasneci, G. ChatGPT for Good? On Opportunities and Challenges of Large Language Models for Education. Learn. Individ. Differ. 2023, 103, 102274. [Google Scholar] [CrossRef]

- Zhou, H.; Gu, B.; Zou, X.; Li, Y.; Chen, S.S.; Zhou, P.; Liu, F. A Survey of Large Language Models in Medicine: Progress, Application, and Challenge. arXiv 2023, arXiv:2311.05112. [Google Scholar]

- Adeshola, I.; Adepoju, A.P. The Opportunities and Challenges of ChatGPT in Education. Interact. Learn. Environ. 2023, 32, 1–14. [Google Scholar] [CrossRef]

- Javaid, M.; Haleem, A.; Singh, R.P. ChatGPT for Healthcare Services: An Emerging Stage for an Innovative Perspective. BenchCouncil Trans. Benchmarks Stand. Eval. 2023, 3, 100105. [Google Scholar] [CrossRef]

- Kohnke, L.; Moorhouse, B.L.; Zou, D. ChatGPT for Language Teaching and Learning. RELC J. 2023, 54, 537–550. [Google Scholar] [CrossRef]

- Koc, E.; Hatipoglu, S.; Kivrak, O.; Celik, C.; Koc, K. Houston, We Have a Problem!: The Use of ChatGPT in Responding to Customer Complaints. Technol. Soc. 2023, 74, 102333. [Google Scholar] [CrossRef]

- Ouyang, L.; Wu, J.; Jiang, X.; Almeida, D.; Wainwright, C.; Mishkin, P.; Zhang, C.; Agarwal, S.; Slama, K.; Ray, A.; et al. Training Language Models to Follow Instructions with Human Feedback. Adv. Neural Inf. Process. Syst. 2022, 35, 27730–27744. [Google Scholar]

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901. [Google Scholar]

- Chukwuere, J.E. Today’s Academic Research: The Role of ChatGPT Writing. J. Inf. Syst. Inform. 2024, 6, 30–46. [Google Scholar] [CrossRef]

- Alexander, K.; Savvidou, C.; Alexander, C. Who Wrote This Essay? Detecting AI-Generated Writing in Second Language Education in Higher Education. Teach. Engl. Technol. 2023, 23, 25–43. [Google Scholar] [CrossRef]

- Herbold, S.; Hautli-Janisz, A.; Heuer, U.; Kikteva, Z.; Trautsch, A. A Large-Scale Comparison of Human-Written versus ChatGPT-Generated Essays. Sci. Rep. 2023, 13, 18617. [Google Scholar]

- Cai, Z.G.; Duan, X.; Haslett, D.A.; Wang, S.; Pickering, M.J. Do Large Language Models Resemble Humans in Language Use? arXiv 2023, arXiv:2303.08014. [Google Scholar]

- Liao, W.; Liu, Z.; Dai, H.; Xu, S.; Wu, Z.; Zhang, Y.; Li, X. Differentiating ChatGPT-Generated and Human-Written Medical Texts: Quantitative Study. JMIR Med. Educ. 2023, 9, e48904. [Google Scholar] [CrossRef]

- Mitchell, E.; Lee, Y.; Khazatsky, A.; Manning, C.D.; Finn, C. DetectGPT: Zero-Shot Machine-Generated Text Detection Using Probability Curvature. In Proceedings of the International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; pp. 24950–24962. [Google Scholar]

- Bao, G.; Zhao, Y.; Teng, Z.; Yang, L.; Zhang, Y. Fast-DetectGPT: Efficient Zero-Shot Detection of Machine-Generated Text via Conditional Probability Curvature. arXiv 2023, arXiv:2310.05130. [Google Scholar]

- Biber, D.; Conrad, S.; Cortes, V. Lexical Bundles in University Teaching and Textbooks. Appl. Linguist. 2004, 25, 371–405. [Google Scholar] [CrossRef]

- Biber, D.; Barbieri, F. Lexical Bundles in University Spoken and Written Registers. Engl. Specif. Purp. 2007, 26, 263–286. [Google Scholar] [CrossRef]

- Emara, I.F. A Linguistic Comparison between ChatGPT-Generated and Nonnative Student-Generated Short Story Adaptations: A Stylometric Approach. Smart Learn Environ. 2025, 12, 36. [Google Scholar] [CrossRef]

- Jaashan, H.M.; Bin-Hady, W.R.A. Stylometric Analysis of AI-Generated Texts: A Comparative Study of ChatGPT and DeepSeek. Cogent Arts Humanit. 2025, 12, 2553162. [Google Scholar] [CrossRef]

- Wang, S.; Cristianini, N.; Hood, B.M. Stylometric Comparison between ChatGPT and Human Essays. In Proceedings of the 18th International AAAI Conference on Web and Social Media, Buffalo, NY, USA, 3–6 June 2024. [Google Scholar]

- Muñoz-Ortiz, A.; Gómez-Rodríguez, C.; Vilares, D. Contrasting Linguistic Patterns in Human and LLM-Generated News Text. Artif. Intell. Rev. 2024, 57, 265. [Google Scholar] [CrossRef]

- Sankar Sadasivan, V.; Kumar, A.; Balasubramanian, S.; Wang, W.; Feizi, S. Can AI-Generated Text Be Reliably Detected? arXiv 2023, arXiv:2303.11156. [Google Scholar]

- Georgiou, G.P.; Kaskampa, A. Differences in Voice Quality Measures among Monolingual and Bilingual Speakers. Ampersand 2024, 12, 100175. [Google Scholar] [CrossRef]

- Georgiou, G.P.; Panteli, C.; Theodorou, E. Speech Rate of Typical Children and Children with Developmental Language Disorder in a Narrative Context. Commun. Disord. Q. 2025, 46, 212–221. [Google Scholar]

- Themistocleous, C. Open Brain AI: An AI Research Platform. In Proceedings of the Huminfra Conference, Gothenburg, Sweden, 10–11 January 2024; Volume 200, pp. 1–9. [Google Scholar]

- Themistocleous, C. Open Brain AI. Automatic Language Assessment. In Proceedings of the Fifth Workshop on Resources and Processing of Linguistic, Para-Linguistic and Extra-Linguistic Data from People with Various Forms of Cognitive/Psychiatric/Developmental Impairments @ LREC-COLING 2024, Torino, Italy, 25 May 2024; pp. 45–53. [Google Scholar]

- Georgiou, G.P.; Theodorou, E. Detection of Developmental Language Disorder in Cypriot Greek Children Using a Neural Network Algorithm. J. Technol. Behav. Sci. 2024. [Google Scholar] [CrossRef]

- Stamatatos, E. A Survey of Modern Authorship Attribution Methods. J. Am. Soc. Inf. Sci. Technol. 2009, 60, 538–556. [Google Scholar] [CrossRef]

- Kestemont, M. Function Words in Authorship Attribution: From Black Magic to Theory? In Proceedings of the 3rd Workshop on Computational Linguistics for Literature, Gothenburg, Sweden, 26–27 April 2014; pp. 59–66. [Google Scholar]

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2024. [Google Scholar]

- Suvarna, A.; Khandelwal, H.; Peng, N. PhonologyBench: Evaluating Phonological Skills of Large Language Models. arXiv 2024, arXiv:2404.02456. [Google Scholar] [CrossRef]

- Biber, D.; Conrad, S. Register, Genre, and Style; Cambridge University Press: Cambridge, UK, 2009. [Google Scholar]

- Holtzman, A.; Buys, J.; Du, L.; Forbes, M.; Choi, Y. The Curious Case of Neural Text Degeneration. In Proceedings of the International Conference on Learning Representations (ICLR 2020), Addis Ababa, Ethiopia, 26–30 April 2020. [Google Scholar]

- Meister, C.; Pimentel, T.; Wiher, G.; Cotterell, R. Locally Typical Sampling. Trans. Assoc. Comput. Linguist. 2023, 11, 102–121. [Google Scholar] [CrossRef]

- Gehrmann, S.; Strobelt, H.; Rush, A.M. GLTR: Statistical Detection and Visualization of Generated Text. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics: System Demonstrations, Florence, Italy, 28 July–2 August 2019; pp. 111–116. [Google Scholar]

- Sasse, K.; Barham, S.; Kayi, E.S.; Staley, E.W. To Burst or Not to Burst: Generating and Quantifying Improbable Text. arXiv 2024, arXiv:2401.15476. [Google Scholar] [CrossRef]

- Walwema, J. The WHO Health Alert: Communicating a Global Pandemic with WhatsApp. J. Bus. Tech. Commun. 2021, 35, 35–40. [Google Scholar] [CrossRef]

| Measure | Human | AI |

|---|---|---|

| Estimated Reading Time (s) | 22.462 | 26.764 |

| Flesch Reading Ease | 55.352 | 28.444 |

| Flesch–Kincaid Grade Level | 10.366 | 13.795 |

| Gunning Fog Index | 12.537 | 16.391 |

| Coleman–Liau Index | 11.651 | 17.306 |

| Automated Readability Index | 11.498 | 15.463 |

| Smog Index | 12.482 | 15.690 |

| Linsear Write Formula | 2.645 | 2.715 |

| Passive Sentences Percent | 33.593 | 11.055 |

| Dale Chall Readability Score | 9.498 | 12.176 |

| Difficult words | 95.800 | 146.00 |

| Level | Feature | p-Value | 95% CI | W | Hedges’ g |

|---|---|---|---|---|---|

| phonology | approximant | 1.0000 | −1.48–1.16 | 12 | −0.16 |

| fricative | 0.4034 | −1.95–0.75 | 8 | −0.60 | |

| lateral | 1.0000 | −1.12–1.53 | 13 | 0.20 | |

| nasal | 0.0216 | 0.01–2.99 | 24 | 1.50 | |

| plosive | 0.4034 | −0.60–2.13 | 17 | 0.77 | |

| alveolar | 0.0122 | 0.80–4.37 | 25 | 2.58 | |

| dental | 0.0216 | −3.83–−0.51 | 1 | −2.17 | |

| labiodental | 0.0216 | −3.54–−0.34 | 1 | −1.94 | |

| postalveolar | 0.2963 | −0.86–1.81 | 18 | 0.47 | |

| voiced | 0.4034 | −1.83–0.84 | 8 | −0.50 | |

| voiceless | 0.0367 | 0.00–2.98 | 23 | 1.49 | |

| primary stress | 0.2963 | −2.40–0.40 | 7 | −1.00 | |

| secondary stress | 0.8345 | −1.35–1.20 | 11 | −0.03 | |

| morphology | adjective | 0.0367 | 0.25–3.38 | 23 | 1.81 |

| adposition | 0.0212 | −3.62–−0.39 | 1 | −2.00 | |

| adverb | 0.6752 | −1.15–1.49 | 15 | 0.17 | |

| auxiliary | 0.0122 | −3.97–−0.59 | 0 | −2.28 | |

| coordinating conjunction | 0.0356 | 0.03–3.02 | 23 | 1.52 | |

| noun | 0.0122 | 0.78–4.33 | 25 | 2.56 | |

| particle | 0.7526 | −1.45–1.18 | 10.5 | −0.14 | |

| pronoun | 0.0119 | −5.20–−1.22 | 0 | −3.21 | |

| subordinating conjunction | 0.2059 | −2.21–0.54 | 6 | −0.84 | |

| verb | 0.8345 | −1.23–1.40 | 14 | 0.09 | |

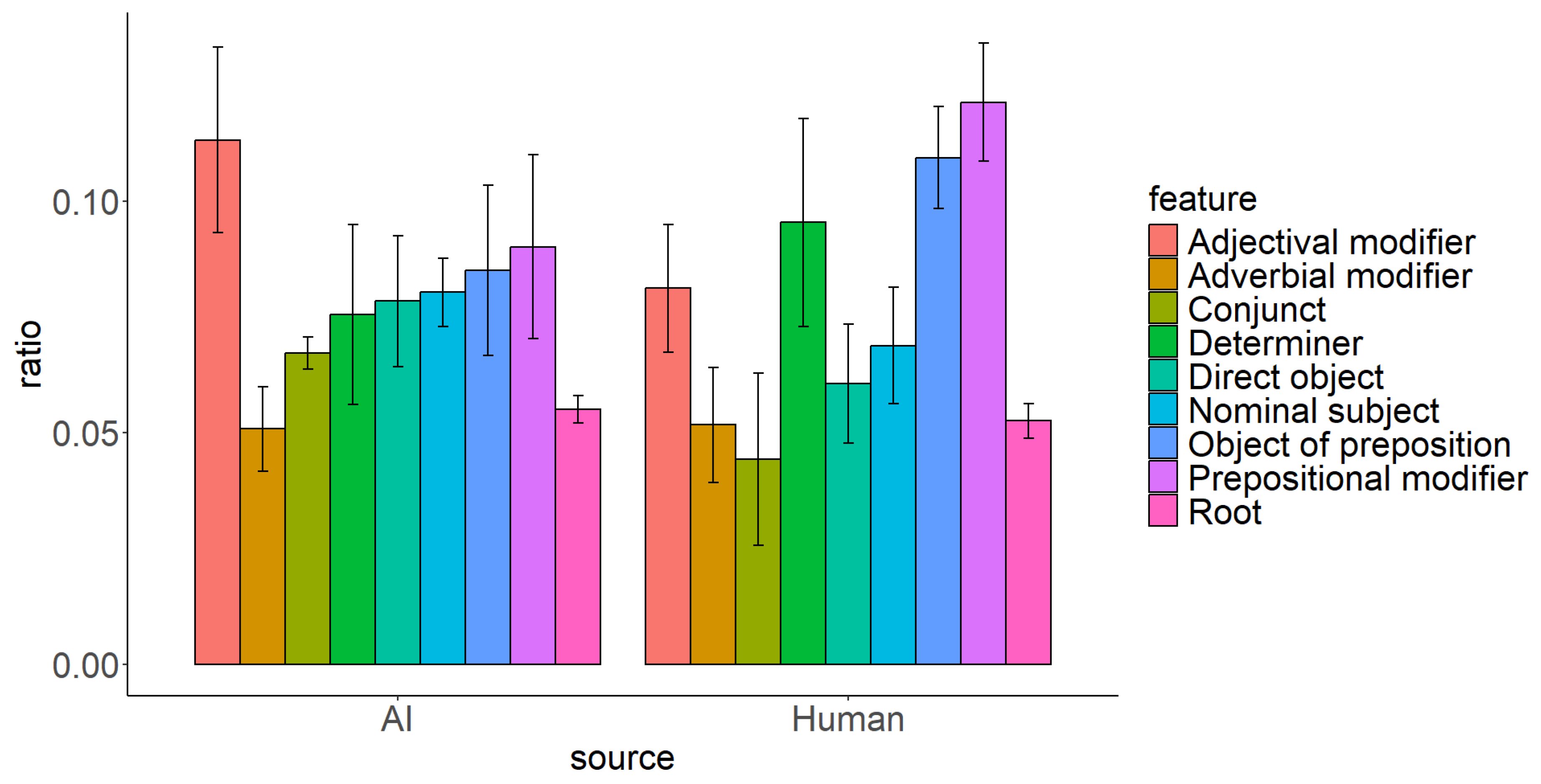

| syntax | adjectival modifier | 0.0278 | 0.15–3.22 | 23.5 | 1.68 |

| adverbial modifier | 1.0000 | −1.39–1.25 | 12 | −0.07 | |

| conjunct | 0.0160 | 0.05–3.05 | 24.5 | 1.55 | |

| determiner | 0.1732 | −2.23–0.52 | 5.5 | −0.85 | |

| direct object | 0.0662 | −0.24–2.62 | 21.5 | 1.19 | |

| nominal subject | 0.2101 | −0.39–2.41 | 19 | 1.01 | |

| object of preposition | 0.0465 | −2.93–0.03 | 2.5 | −1.45 | |

| prepositional modifier | 0.0367 | −3.22–0.16 | 2 | −1.69 | |

| root | 0.1732 | –0.69–2.02 | 19.5 | 0.66 | |

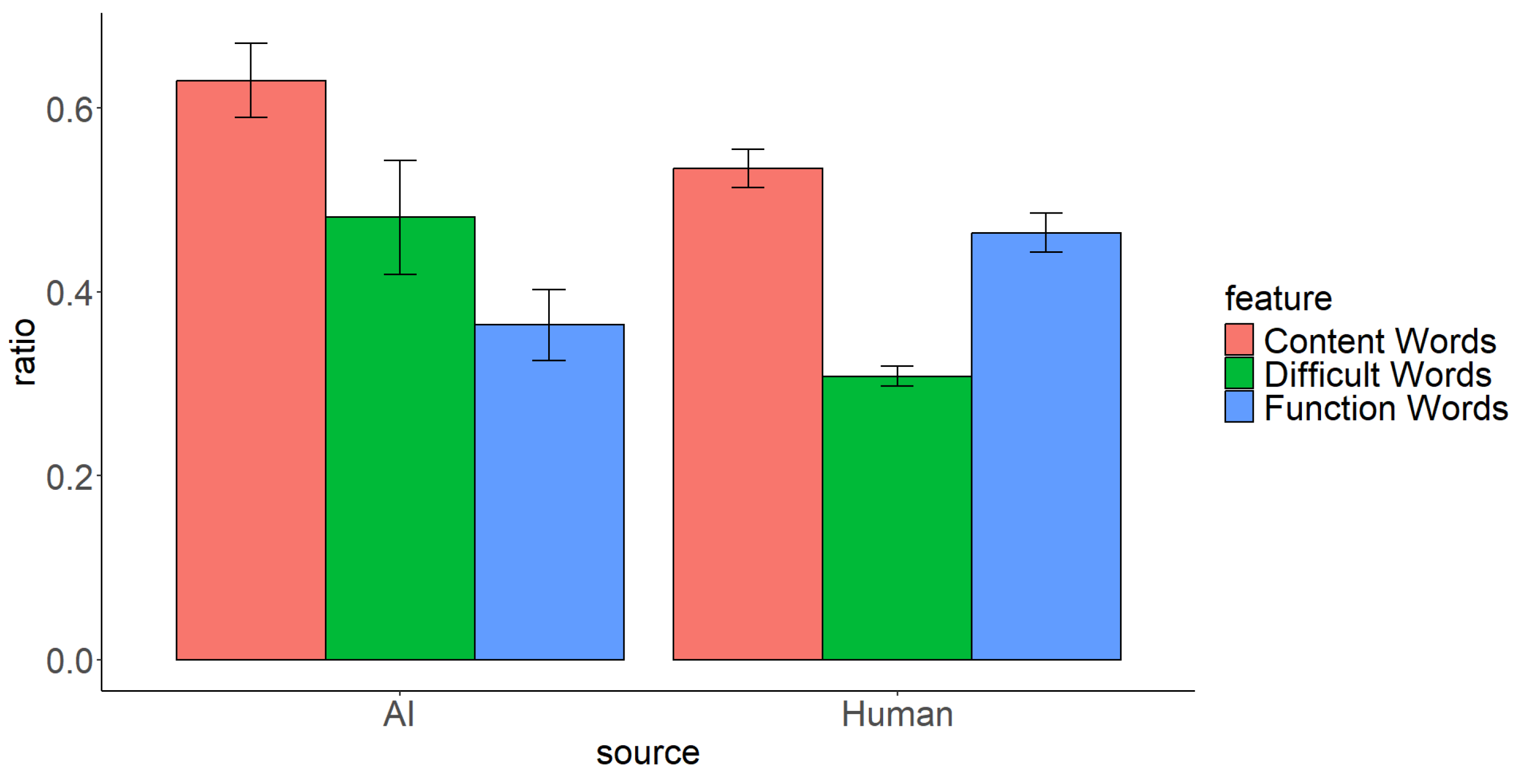

| lexicon | difficult word | 0.0122 | 1.02–4.79 | 15 | 2.90 |

| content word | 0.0122 | 0.88–4.51 | 25 | 2.69 | |

| function word | 0.0122 | −4.78–−1.01 | 0 | −2.90 |

| Level | Feature | p-Value |

|---|---|---|

| phonology | primary stress | −269.2275 |

| alveolar | 122.52697 | |

| voiceless | 53.26128 | |

| dental | −20.5736 | |

| approximant | 0 | |

| fricative | 0 | |

| lateral | 0 | |

| nasal | 0 | |

| plosive | 0 | |

| labiodental | 0 | |

| postalveolar | 0 | |

| voiced | 0 | |

| secondary stress | 0 | |

| morphology | pronoun | −99.27591 |

| auxiliary | −21.29675 | |

| noun | 21.17488 | |

| adjective | 0 | |

| adposition | 0 | |

| adverb | 0 | |

| coordinating conjunction | 0 | |

| particle | 0 | |

| subordinating conjunction | 0 | |

| verb | 0 | |

| syntax | prepositional modifier | −26.1565252 |

| adjectival modifier | 24.0081669 | |

| conjunct | 21.642647 | |

| nominal subject | 0.5338395 | |

| adverbial modifier | 0 | |

| determiner | 0 | |

| direct object | 0 | |

| object of preposition | 0 | |

| root | 0 | |

| lexicon | function words | −8.95668793 |

| difficult words | 0.01683913 | |

| content words | 0 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Georgiou, G.P. Differentiating Between Human-Written and AI-Generated Texts Using Automatically Extracted Linguistic Features. Information 2025, 16, 979. https://doi.org/10.3390/info16110979

Georgiou GP. Differentiating Between Human-Written and AI-Generated Texts Using Automatically Extracted Linguistic Features. Information. 2025; 16(11):979. https://doi.org/10.3390/info16110979

Chicago/Turabian StyleGeorgiou, Georgios P. 2025. "Differentiating Between Human-Written and AI-Generated Texts Using Automatically Extracted Linguistic Features" Information 16, no. 11: 979. https://doi.org/10.3390/info16110979

APA StyleGeorgiou, G. P. (2025). Differentiating Between Human-Written and AI-Generated Texts Using Automatically Extracted Linguistic Features. Information, 16(11), 979. https://doi.org/10.3390/info16110979