Toward Robust Human Pose Estimation Under Real-World Image Degradations and Restoration Scenarios

Abstract

1. Introduction

1. We create a new dataset containing filtered versions of the original images in the MPII dataset. This fills an important gap because, to the best of our knowledge, no existing dataset addresses these degradations.

2. We propose a framework for detecting and classifying unclear images that employs RotNet as one of its central classifiers and achieves better results than the state of the art on these specific tasks.

3. We present an image restoration process that reverses the degradations and brings images back toward their original quality before they are fed into the HPE model, improving pose detection accuracy.

Together, these contributions improve the effectiveness and reliability of HPE systems in unconstrained real-world conditions.

2. Related Work

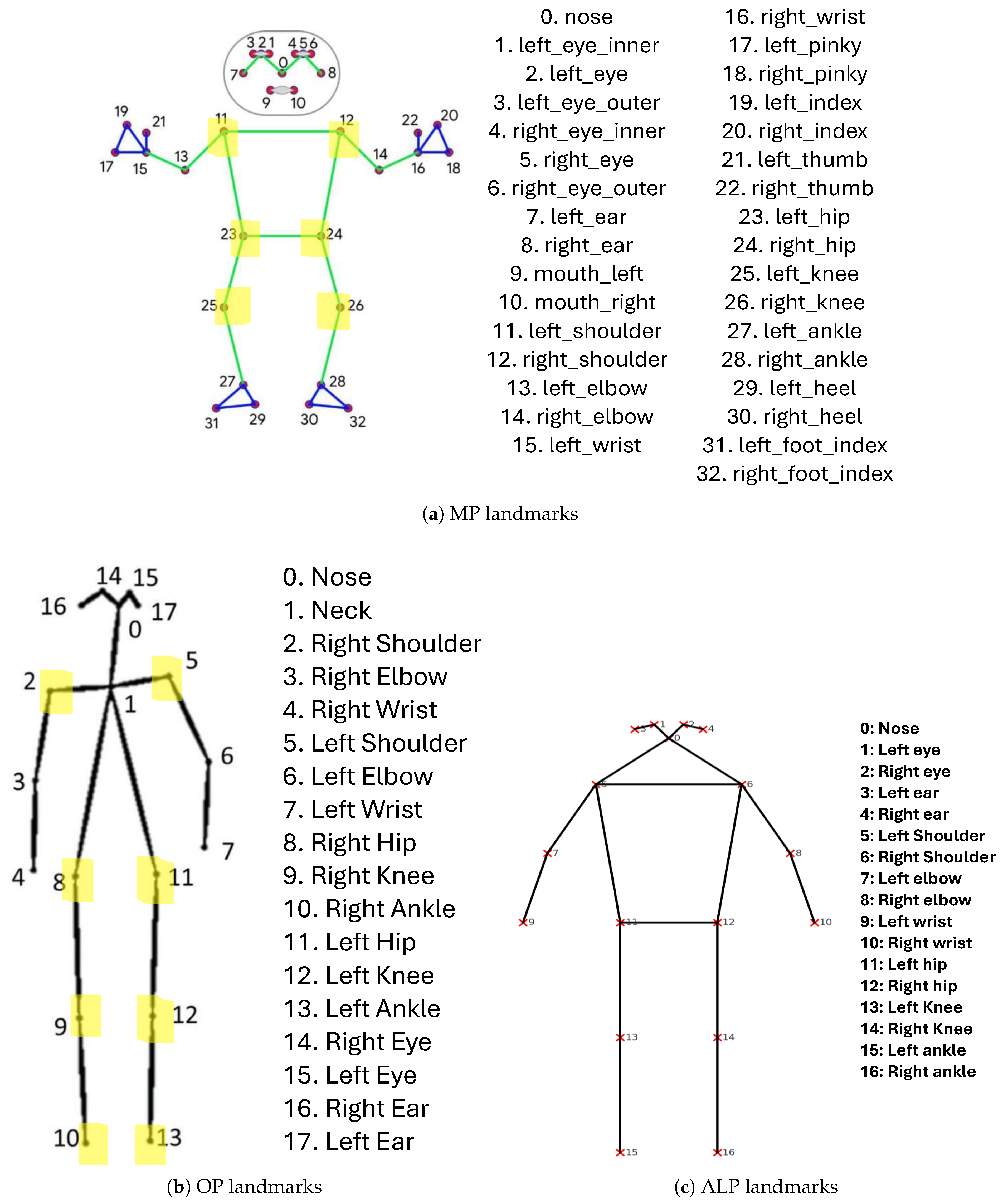

2.1. Human Pose Estimation Methods

2.2. Image Quality Factors in Computer Vision

2.3. Image Quality Assessment and Enhancement

2.4. Gap Analysis

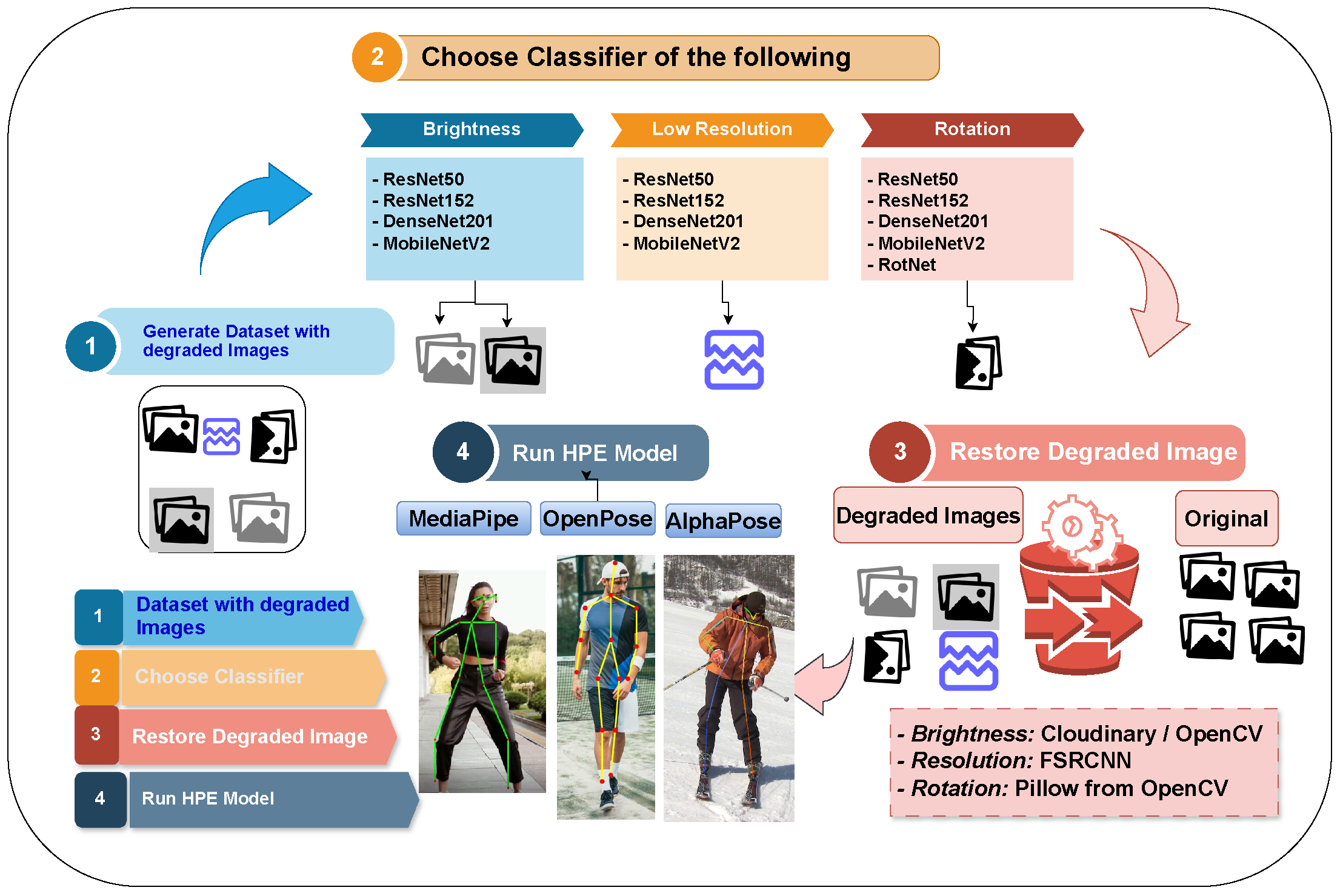

3. Methodology

3.1. Overview

3.2. Dataset Preparation

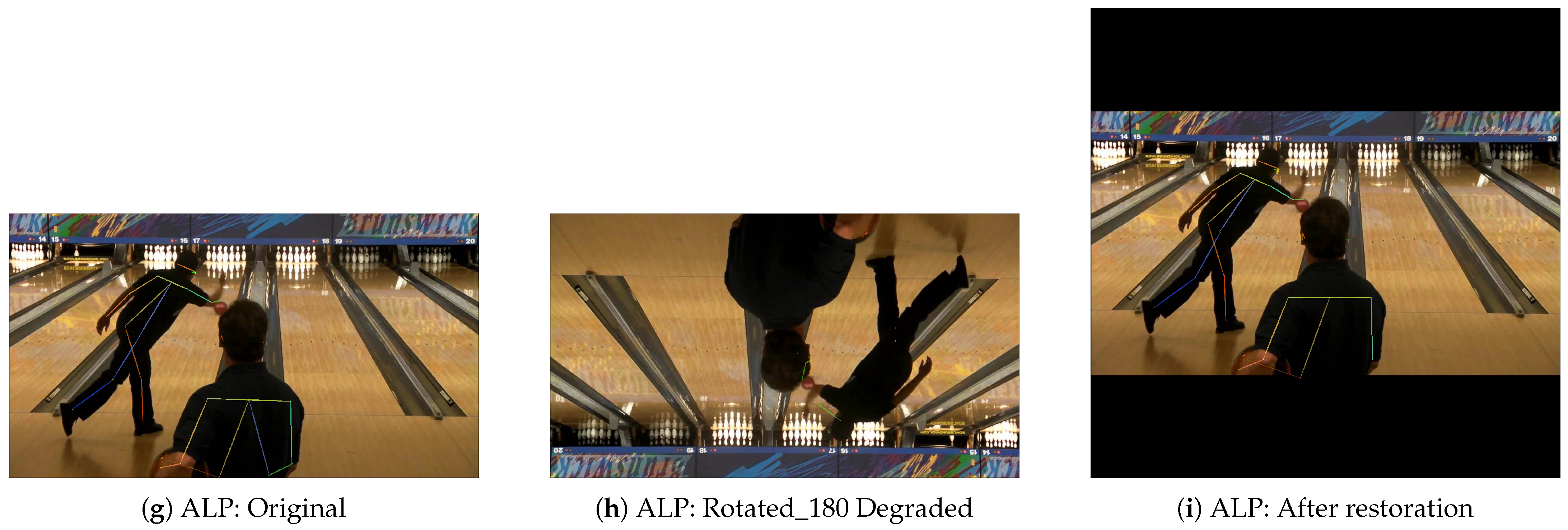

1. Resolution Reduction Procedure: This algorithm generates low-resolution images by applying one of four reduction percentages (66.7%, 80%, 87.5%, or 90%) to the image resolution. It uses the Image class from the PIL library. The target size is first calculated from the chosen reduction percentage, and the image is then downsized using nearest-neighbor interpolation. To return the resized image to its original size, bilinear interpolation is used, which smooths transitions and preserves more detail. As a result, each low-resolution image keeps the dimensions of the original, so the only change is the intended loss of detail. The algorithm processes all images in a given input directory sequentially, applying these steps to each image and writing the results to an output directory, which enables efficient batch processing and storage.

2. Brightness Adjustment Algorithm: This algorithm changes the illumination level of an image in one of two ways: increasing or decreasing its brightness. It uses the convertScaleAbs function of the OpenCV library to apply these changes. Adjustment values of 80, 90, and 100 were used to increase brightness, while −100, −110, and −120 were used to decrease it, with the resulting pixel values constrained to the range 0 to 255. The first step was to convert each image to the HSV color space, in which hue, saturation, and value are separate components. The brightness was then adjusted by changing the value channel according to the specified brightness level, and the image was converted back to its original BGR format. The conversion to HSV was important because it allowed brightness to be adjusted without affecting hue or saturation. Finally, the technique generated a balanced dataset with varying brightness levels, which can be used for subsequent analysis or model training.

- 3.

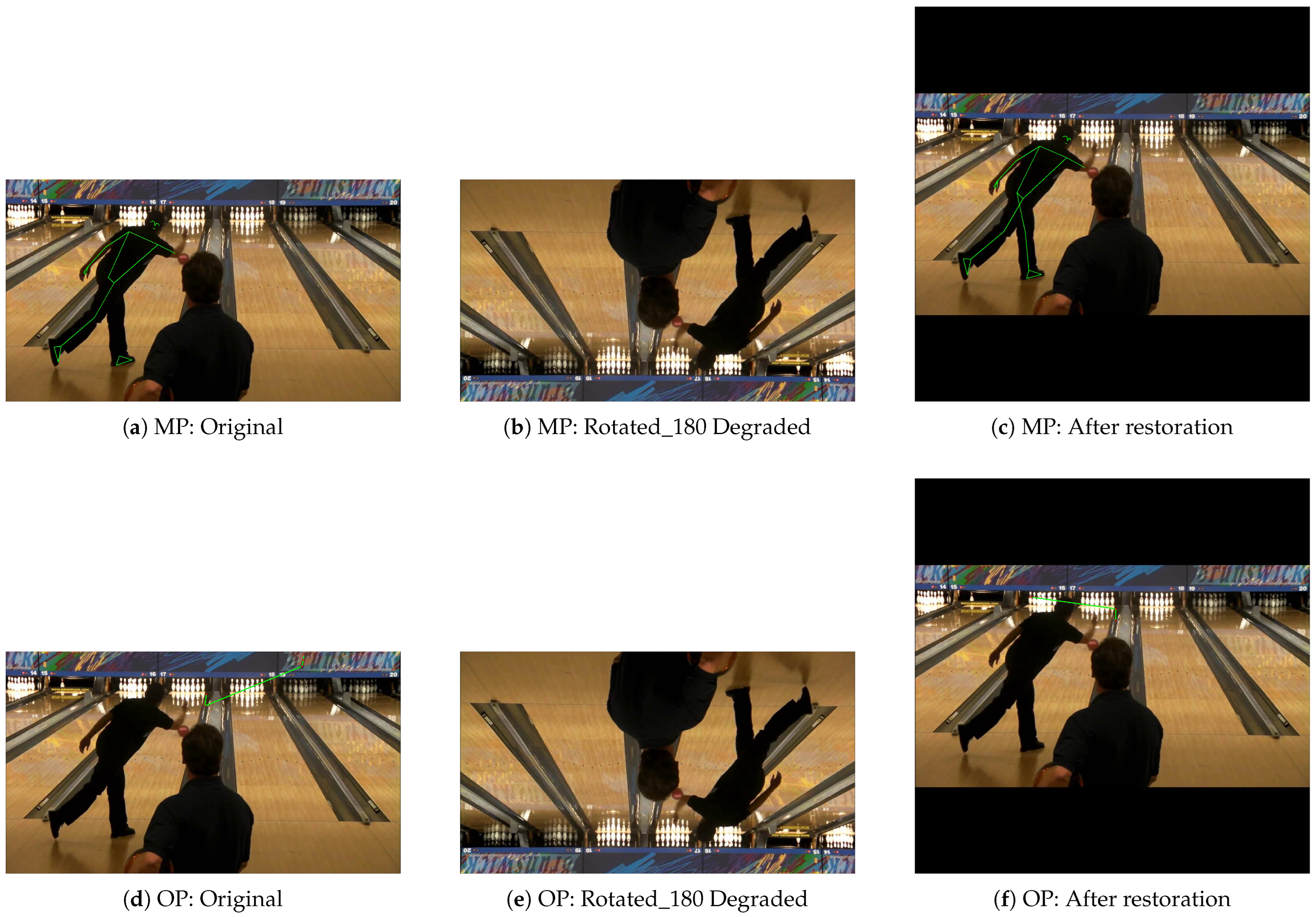

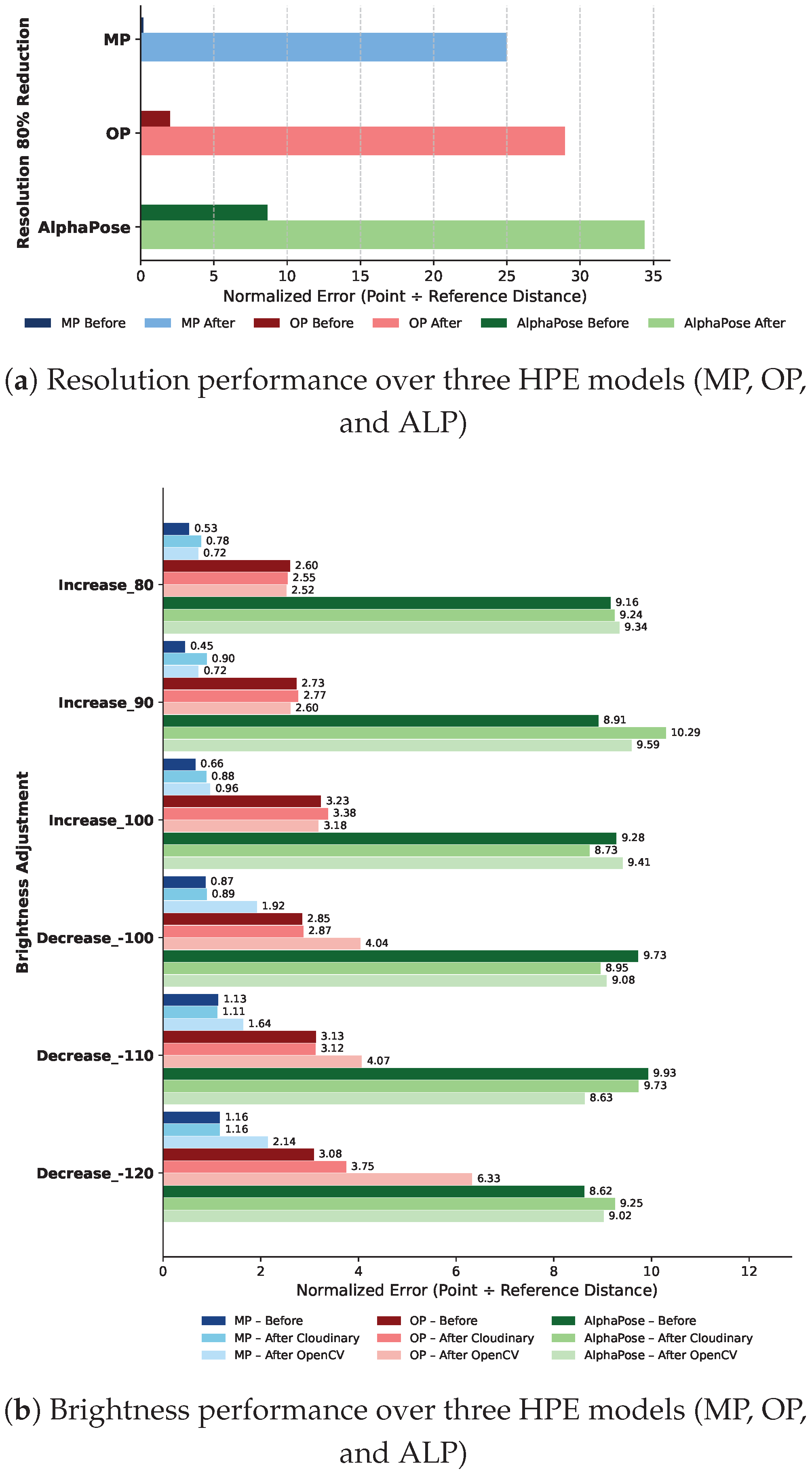

- Rotation Algorithm: This approach proceeded by applying image rotations with an affine transformation matrix, which was obtained based on the image’s center and a selected rotation angle from 0°, 90°, 180°, and 270°. To reduce image cropping during rotation, the method recalculated the image dimensions using trigonometric calculations that account for the change in orientation. The affine transformation matrix was then updated to ensure that the original image’s center is aligned with the center of the resized output, maintaining all visual content while avoiding distortion or data loss. Following image rotation, the method uses a geometric transformation to determine the predicted locations of human pose landmarks in the original image. A 2D rotation matrix for a given angle was defined using Equation (4):Using Equation (5), the original landmark coordinates (X, Y) are transformed into new coordinates (, ) after rotation.where there are rotations about the image’s center of rotation, these coordinates are translated so as to take into consideration this point of rotation.This approach provides a collection of expected landmark positions, which correspond to the predicted locations of the landmarks after rotating the original image by a specific angle. These produced coordinates provide a baseline for evaluating the accuracies of the HPE models’ landmark predictions for rotated images. The comparison illustrates how closely the predicted landmarks correspond to the expected positions, assessing the HPE model’s resilience to rotated inputs.
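As an illustration of the resolution reduction procedure described above, the following is a minimal sketch using Pillow. It assumes that a "reduction percentage" shrinks each side of the image by that percentage; the function names and the file-extension filter are hypothetical and not taken from the original implementation.

```python
from pathlib import Path

from PIL import Image

# Reduction percentages listed in the text.
REDUCTIONS = (66.7, 80.0, 87.5, 90.0)


def target_size(size, reduction_pct):
    """Return the (width, height) left after shrinking each side by reduction_pct percent."""
    keep = 1.0 - reduction_pct / 100.0
    width, height = size
    # Never let a dimension collapse to zero.
    return max(1, round(width * keep)), max(1, round(height * keep))


def degrade_resolution(image, reduction_pct):
    """Downsample with nearest-neighbor, then upsample to the original size with bilinear."""
    small = image.resize(target_size(image.size, reduction_pct), Image.NEAREST)
    return small.resize(image.size, Image.BILINEAR)


def degrade_directory(input_dir, output_dir, reduction_pct):
    """Apply the degradation to every image file in input_dir, one after another."""
    out = Path(output_dir)
    out.mkdir(parents=True, exist_ok=True)
    for path in sorted(Path(input_dir).iterdir()):
        # The extension filter is an assumption of this sketch.
        if path.suffix.lower() in {".jpg", ".jpeg", ".png"}:
            with Image.open(path) as img:
                degrade_resolution(img, reduction_pct).save(out / path.name)
```

The output always has the same pixel dimensions as the input, so the degraded image can be passed to a pose estimator without any change to the expected input size.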
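The brightness adjustment described above can be illustrated with a NumPy-only sketch. It is not the authors' OpenCV code: instead of converting to HSV explicitly, it relies on the fact that the HSV value channel is the per-pixel maximum over the color channels, so scaling all channels by the same factor changes V while leaving hue and saturation unchanged. Treating the listed values as additive offsets to V and clipping to 0 to 255 are assumptions. Note that OpenCV's convertScaleAbs takes the absolute value of its result before saturating, so negative offsets behave differently there than the plain clipping used here.

```python
import numpy as np

# Adjustment values from the text; treating them as additive offsets is an assumption.
BRIGHTEN = (80, 90, 100)
DARKEN = (-100, -110, -120)


def adjust_brightness(image, offset):
    """Shift the HSV value channel of an 8-bit color image by `offset`.

    V is the per-pixel maximum over the color channels, so scaling every
    channel by new_V / old_V changes V while preserving hue and saturation.
    The channel order (BGR or RGB) does not matter for this computation.
    """
    pixels = image.astype(np.float64)
    value = pixels.max(axis=2)
    new_value = np.clip(value + offset, 0.0, 255.0)
    # Avoid dividing by zero on pure black pixels.
    safe_value = np.where(value > 0, value, 1.0)
    scaled = pixels * (new_value / safe_value)[..., np.newaxis]
    # Black pixels have no hue, so they become gray at the new value.
    black = (value == 0)[..., np.newaxis]
    scaled = np.where(black, new_value[..., np.newaxis], scaled)
    return np.clip(np.rint(scaled), 0, 255).astype(np.uint8)
```

Calling `adjust_brightness(image, offset)` once for each value in `BRIGHTEN` and `DARKEN`, plus the unmodified image, gives the seven brightness classes listed in the dataset table.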
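The landmark transformation used as the rotation baseline can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it uses the standard 2D rotation matrix, takes the image center as (width / 2, height / 2), and enlarges the canvas so that no content is cropped. With the y axis pointing down, as in image coordinates, a positive angle in this sketch turns points clockwise on screen; whether this matches the authors' sign convention is not stated in the text. The function names are hypothetical.

```python
import numpy as np


def rotated_canvas_size(width, height, angle_deg):
    """Size of the smallest canvas that holds the whole rotated image."""
    theta = np.deg2rad(angle_deg)
    cos_t, sin_t = abs(np.cos(theta)), abs(np.sin(theta))
    new_w = int(round(width * cos_t + height * sin_t))
    new_h = int(round(width * sin_t + height * cos_t))
    return new_w, new_h


def rotate_landmarks(points, width, height, angle_deg):
    """Expected landmark positions after rotating the image about its center."""
    theta = np.deg2rad(angle_deg)
    rotation = np.array([[np.cos(theta), -np.sin(theta)],
                         [np.sin(theta), np.cos(theta)]])
    old_center = np.array([width / 2.0, height / 2.0])
    new_w, new_h = rotated_canvas_size(width, height, angle_deg)
    new_center = np.array([new_w / 2.0, new_h / 2.0])
    # Translate to the old center, rotate, then translate to the new center.
    centered = np.asarray(points, dtype=float) - old_center
    return centered @ rotation.T + new_center
```

The returned array can be compared directly with the landmarks an HPE model predicts on the rotated image, for example by taking per-landmark distances.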

3.3. Performance Evaluation Framework

1. If a landmark was present in the original image but absent in the filtered image, NaN was recorded.

2. If a landmark was absent in the original image but present in the filtered image, Null was recorded.

3. When both values were available, the absolute difference was computed.

- 1.

- 2.

- Euclidean distance between the shoulder joints divided by the reference distance
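The comparison rules and the shoulder-distance ratio listed above can be sketched in a few lines of Python. The sketch assumes that a missing landmark is represented by `None`, that "Null" maps to Python's `None`, and that the reference distance is supplied by the caller, since the text does not define it. The case where a landmark is missing in both images is not covered by the text, and its handling here is an assumption. Function names are hypothetical.

```python
import math


def landmark_difference(original, filtered):
    """Compare one landmark coordinate between the original and filtered image."""
    if original is not None and filtered is None:
        return math.nan   # present in the original, lost after filtering
    if original is None and filtered is not None:
        return None       # "Null": detected only in the filtered image
    if original is None and filtered is None:
        return None       # not covered by the text; assumption of this sketch
    return abs(original - filtered)


def shoulder_ratio(left_shoulder, right_shoulder, reference_distance):
    """Euclidean distance between the shoulder joints divided by a reference distance."""
    return math.dist(left_shoulder, right_shoulder) / reference_distance
```

Keeping NaN and Null as distinct outcomes makes it possible to count separately the landmarks a degradation removes and the landmarks it spuriously introduces.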

3.4. Quality Assessment Models

3.5. Image Restoration Approaches

3.5.1. Reverse Brightness

3.5.2. Reverse Low Resolution

3.5.3. Reverse Rotation

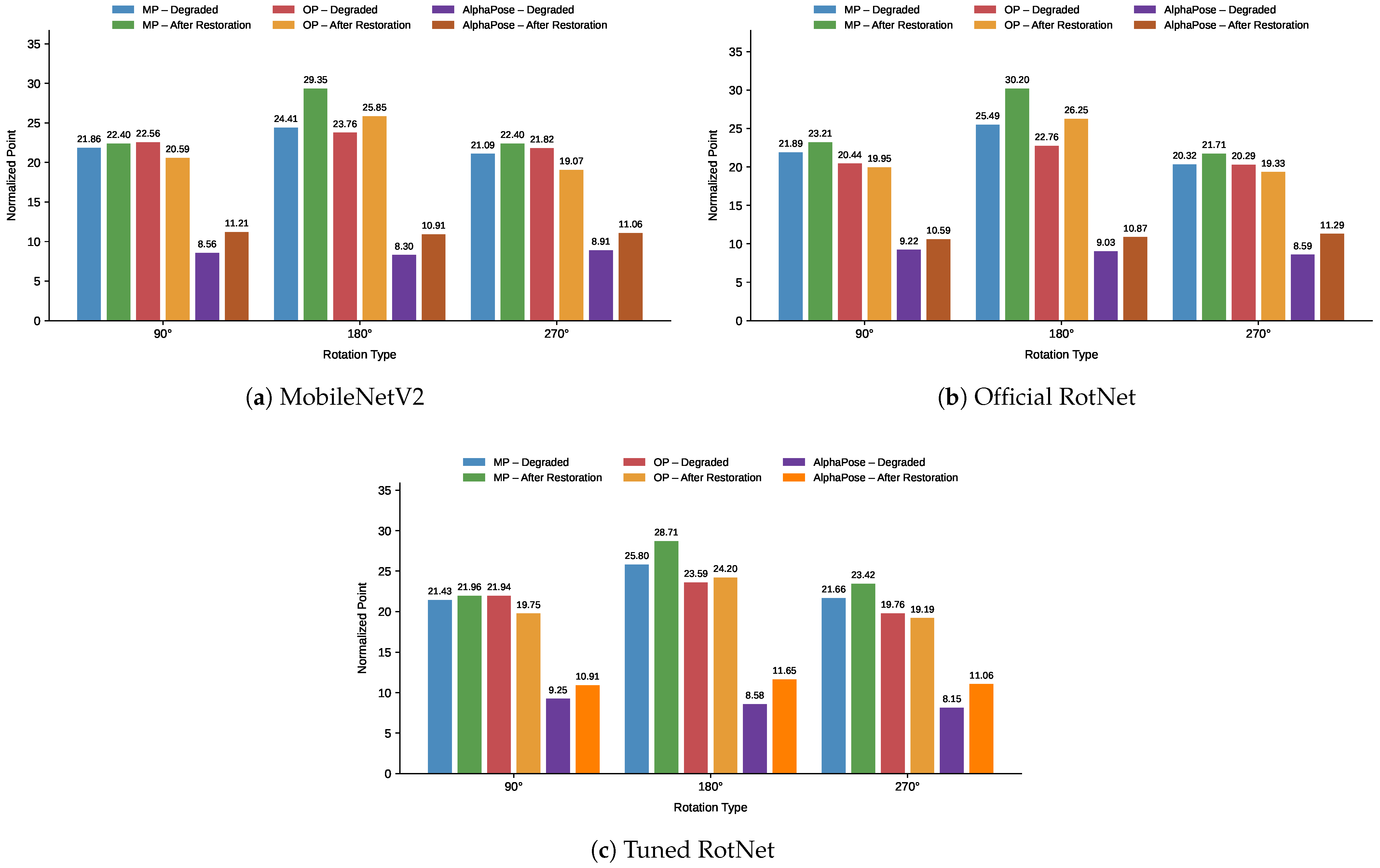

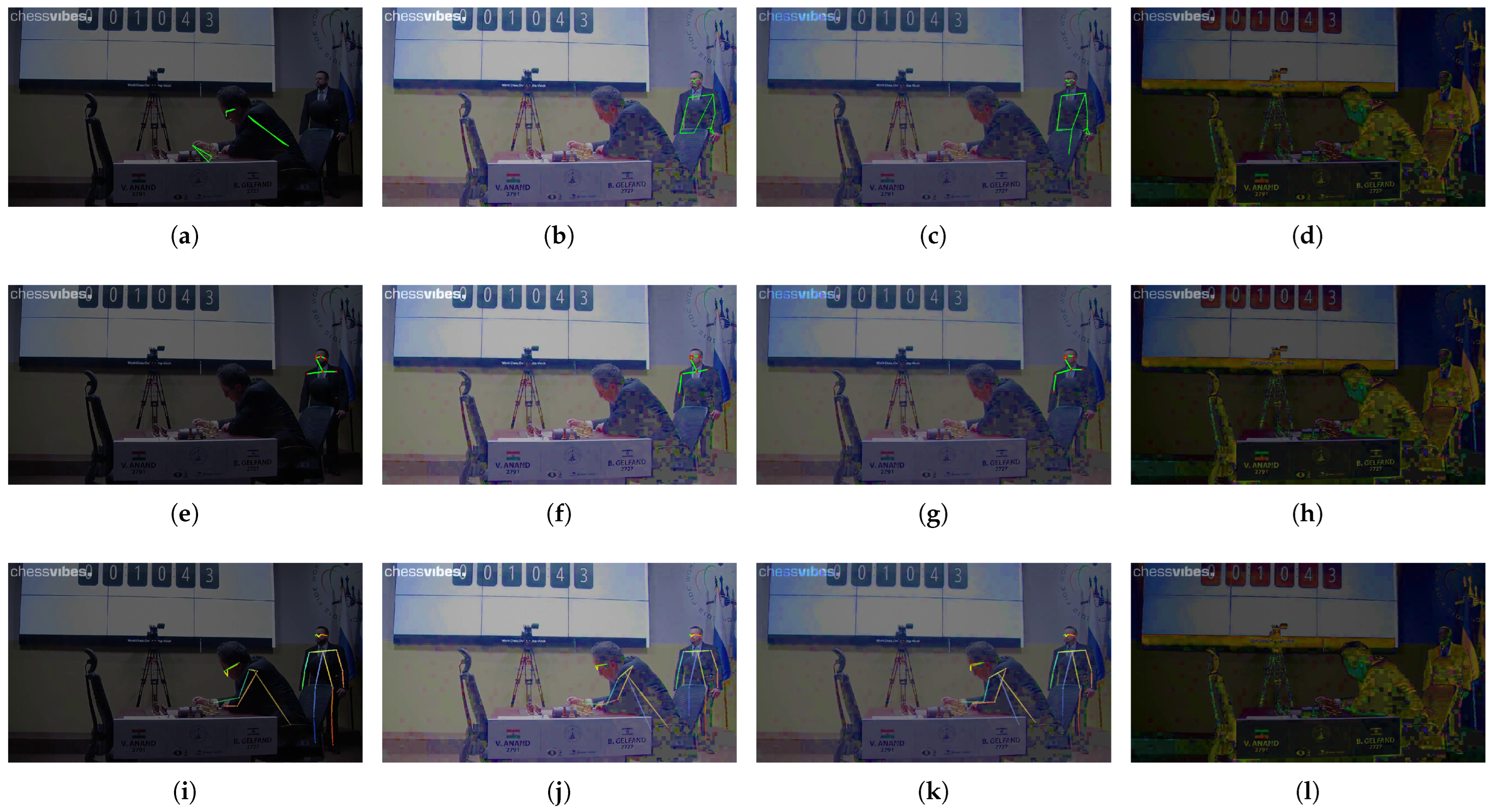

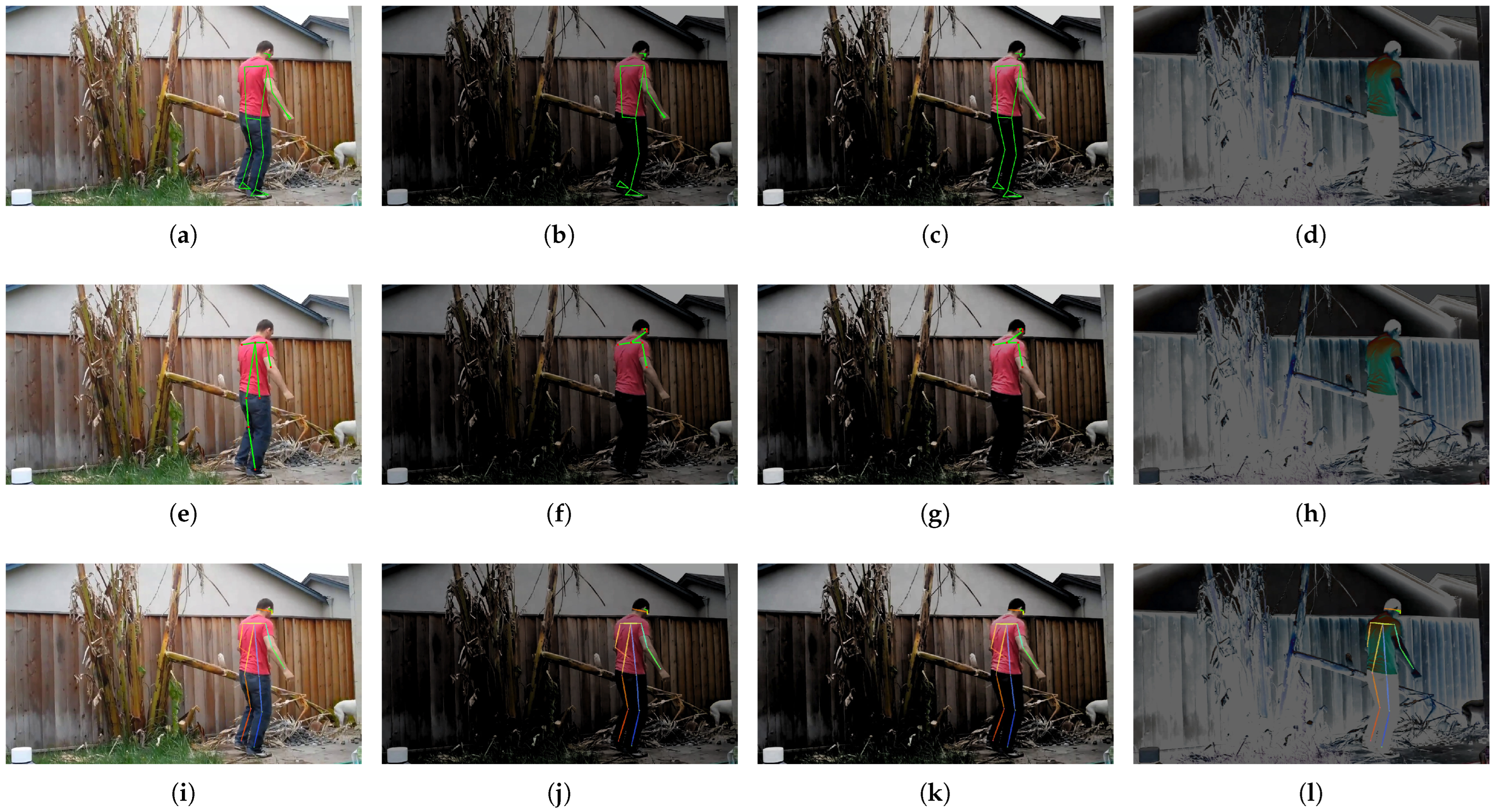

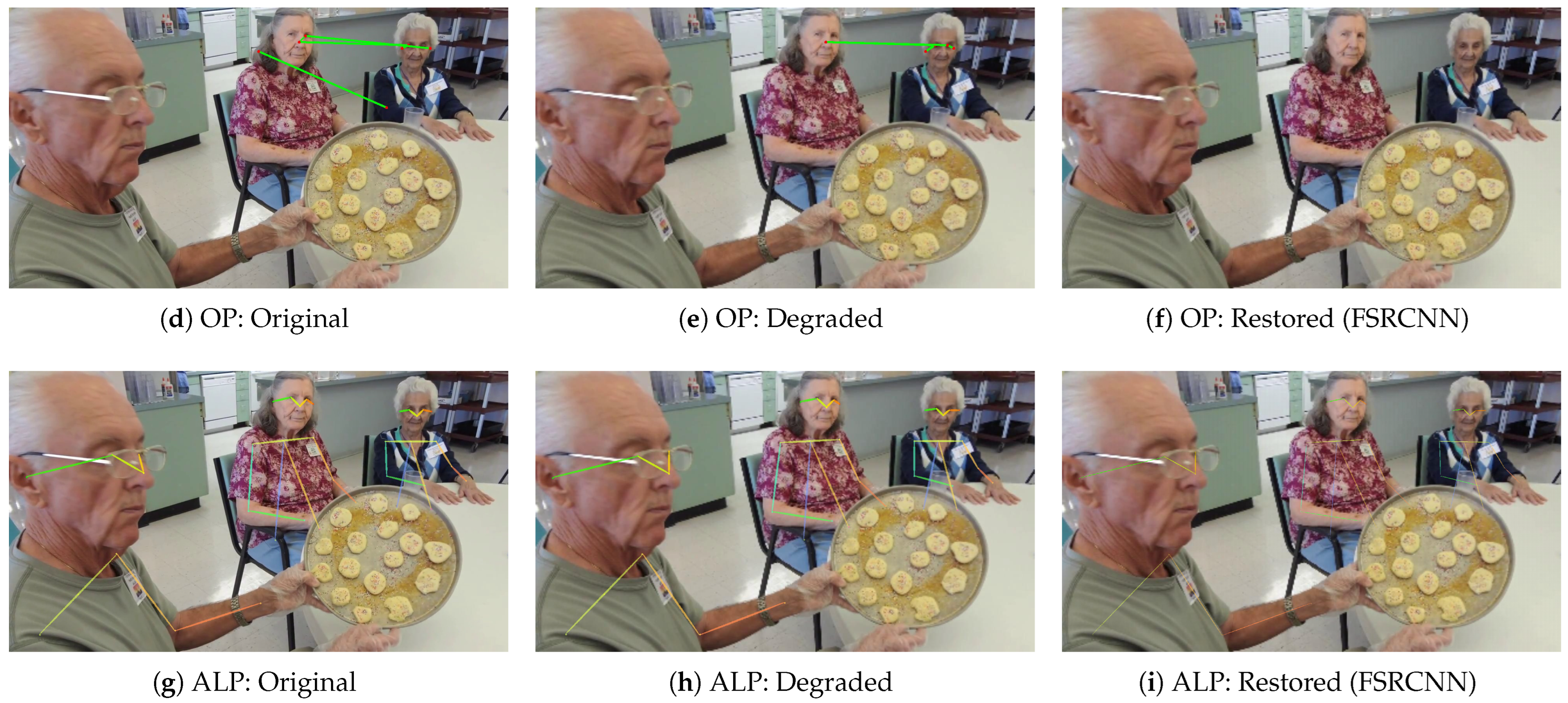

4. Experimental Results and Discussion

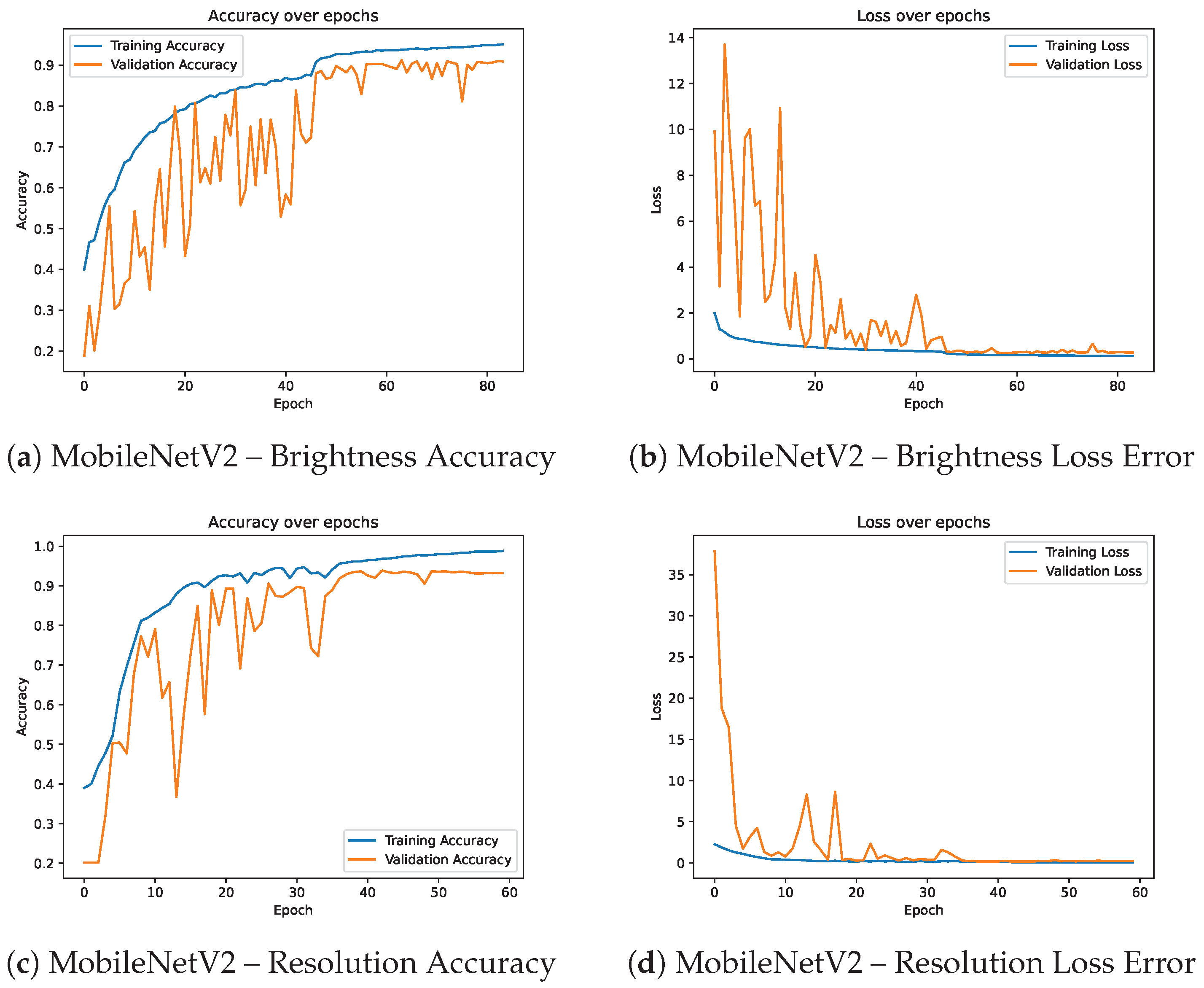

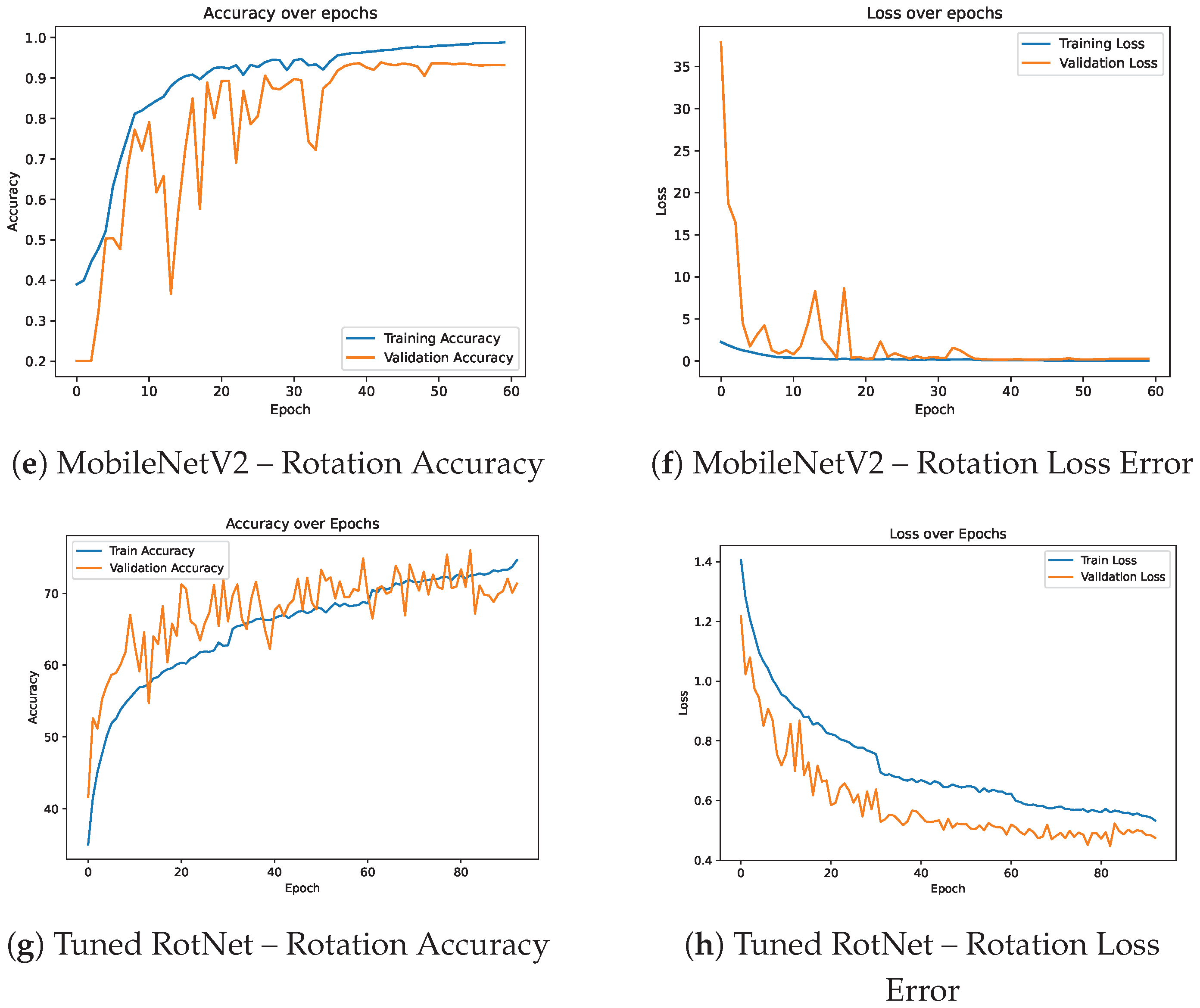

4.1. Classifiers Results

4.2. Quality Assessment Models Performance

5. Limitation

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Guo, W.; Pan, Z.; Xi, Z.; Tuerxun, A.; Feng, J.; Zhou, J. Sports analysis and VR viewing system based on player tracking and pose estimation with multimodal and multiview sensors. arXiv 2024, arXiv:2405.01112.

2. Zhou, L.; Meng, X.; Liu, Z.; Wu, M.; Gao, Z.; Wang, P. Human pose-based estimation, tracking and action recognition with deep learning: A survey. arXiv 2023, arXiv:2310.13039.

3. Chen, H.; Feng, R.; Wu, S.; Xu, H.; Zhou, F.; Liu, Z. 2D human pose estimation: A survey. Multimed. Syst. 2023, 29, 3115–3138.

4. Stenum, J.; Cherry-Allen, K.M.; Pyles, C.O.; Reetzke, R.D.; Vignos, M.F.; Roemmich, R.T. Applications of pose estimation in human health and performance across the lifespan. Sensors 2021, 21, 7315.

5. Andriluka, M.; Pishchulin, L.; Gehler, P.; Schiele, B. 2D human pose estimation: New benchmark and state of the art analysis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 3686–3693.

6. Sapp, B.; Taskar, B. Modec: Multimodal decomposable models for human pose estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 3674–3681.

7. Tan, F.; Zhai, M.; Zhai, C. Foreign object detection in urban rail transit based on deep differentiation segmentation neural network. Heliyon 2024, 10, e37072.

8. Tang, Y.; Yi, J.; Tan, F. Facial micro-expression recognition method based on CNN and transformer mixed model. Int. J. Biom. 2024, 16, 463–477.

9. Lugaresi, C.; Tang, J.; Nash, H.; McClanahan, C.; Uboweja, E.; Hays, M.; Zhang, F.; Chang, C.L.; Yong, M.G.; Lee, J.; et al. MediaPipe: A framework for building perception pipelines. arXiv 2019, arXiv:1906.08172.

10. Singh, A.K.; Kumbhare, V.A.; Arthi, K. Real-time human pose detection and recognition using MediaPipe. In Advances in Intelligent Systems and Computing, Proceedings of the International Conference on Soft Computing and Signal Processing, Hyderabad, India, 18–19 June 2021; Springer: Singapore, 2021; pp. 145–154.

11. Kulkarni, S.; Deshmukh, S.; Fernandes, F.; Patil, A.; Jabade, V. Poseanalyser: A survey on human pose estimation. SN Comput. Sci. 2023, 4, 136.

12. Cao, Z.; Simon, T.; Wei, S.E.; Sheikh, Y. Realtime multi-person 2D pose estimation using part affinity fields. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7291–7299.

13. Kitamura, T.; Teshima, H.; Thomas, D.; Kawasaki, H. Refining OpenPose with a new sports dataset for robust 2D pose estimation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 672–681.

14. Roggio, F.; Trovato, B.; Sortino, M.; Musumeci, G. A comprehensive analysis of the machine learning pose estimation models used in human movement and posture analyses: A narrative review. Heliyon 2024, 10, e39977.

15. Dedhia, U.; Bhoir, P.; Ranka, P.; Kanani, P. Pose estimation and virtual gym assistant using MediaPipe and machine learning. In Proceedings of the 2023 International Conference on Network, Multimedia and Information Technology (NMITCON), Bengaluru, India, 1–2 September 2023; pp. 1–7.

16. Fang, H.S.; Li, J.; Tang, H.; Xu, C.; Zhu, H.; Xiu, Y.; Li, Y.L.; Lu, C. Alphapose: Whole-body regional multi-person pose estimation and tracking in real-time. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 7157–7173.

17. Zhang, Z.; Wan, L.; Xu, W.; Wang, S. Estimating a 2D pose from a tiny person image with super-resolution reconstruction. Comput. Electr. Eng. 2021, 93, 107192.

18. Johnson, S.; Everingham, M. Clustered Pose and Nonlinear Appearance Models for Human Pose Estimation. In Proceedings of the British Machine Vision Conference (BMVC), Aberystwyth, UK, 31 August–3 September 2010; British Machine Vision Association: Durham, UK, 2010; p. 5.

19. Sun, X.; Li, F.; Bai, H.; Ni, R.; Zhao, Y. SRPose: Low-resolution human pose estimation with super-resolution. In Smart Innovation, Systems and Technologies, Proceedings of the International Conference on Intelligent Information Hiding and Multimedia Signal Processing, Kitakyushu, Japan, 16–18 December 2022; Springer: Singapore, 2022; pp. 343–353.

20. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Computer Vision—Proceedings of the ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part V 13; Springer: Singapore, 2014; pp. 740–755.

21. Tran, T.Q.; Nguyen, G.V.; Kim, D. Simple multi-resolution representation learning for human pose estimation. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 511–518.

22. Yun, K.; Park, J.; Cho, J. Robust human pose estimation for rotation via self-supervised learning. IEEE Access 2020, 8, 32502–32517.

23. Gidaris, S.; Singh, P.; Komodakis, N. Unsupervised representation learning by predicting image rotations. arXiv 2018, arXiv:1803.07728.

24. Kim, J.W.; Choi, J.Y.; Ha, E.J.; Choi, J.H. Human pose estimation using MediaPipe pose and optimization method based on a humanoid model. Appl. Sci. 2023, 13, 2700.

25. Simoes, W.; Reis, L.; Araujo, C.; Maia Jr, J. Accuracy assessment of 2D pose estimation with MediaPipe for physiotherapy exercises. Procedia Comput. Sci. 2024, 251, 446–453.

26. Wang, K.; Wang, T.; Qu, J.; Jiang, H.; Li, Q.; Chang, L. An end-to-end cascaded image deraining and object detection neural network. IEEE Robot. Autom. Lett. 2022, 7, 9541–9548.

27. Wang, M.; Liao, L.; Huang, D.; Fan, Z.; Zhuang, J.; Zhang, W. Frequency and content dual stream network for image dehazing. Image Vis. Comput. 2023, 139, 104820.

28. Kandel, I.; Castelli, M.; Manzoni, L. Brightness as an augmentation technique for image classification. Emerg. Sci. J. 2022, 6, 881–892.

29. Li, K.; Chen, H.; Huang, F.; Ling, S.; You, Z. Sharpness and brightness quality assessment of face images for recognition. Sci. Program. 2021, 2021, 4606828.

30. Bengtsson Bernander, K.; Sintorn, I.M.; Strand, R.; Nyström, I. Classification of rotation-invariant biomedical images using equivariant neural networks. Sci. Rep. 2024, 14, 14995.

31. Dong, W.; Zhang, J.; Zhou, Y.; Gao, L.; Zhang, X. Blind detection of circular image rotation angle based on ensemble transfer regression and fused HOG. Front. Neurorobot. 2022, 16, 1037381.

32. Szczuko, P. ANN for human pose estimation in low resolution depth images. In Proceedings of the 2017 Signal Processing: Algorithms, Architectures, Arrangements, and Applications (SPA), Poznan, Poland, 20–22 September 2017; pp. 354–359.

33. Szczuko, P. CNN architectures for human pose estimation from a very low resolution depth image. In Proceedings of the 2018 11th International Conference on Human System Interaction (HSI), Gdansk, Poland, 4–6 July 2018; pp. 118–127.

34. Szczuko, P. Very Low Resolution Depth Images of 200,000 Poses–Open Repository. 2018. Available online: https://github.com/szczuko/poses (accessed on 25 October 2025).

35. Srivastav, V.; Gangi, A.; Padoy, N. Unsupervised domain adaptation for clinician pose estimation and instance segmentation in the operating room. Med. Image Anal. 2022, 80, 102525.

36. Srivastav, V.; Issenhuth, T.; Kadkhodamohammadi, A.; de Mathelin, M.; Gangi, A.; Padoy, N. MVOR: A multi-view RGB-D operating room dataset for 2D and 3D human pose estimation. arXiv 2018, arXiv:1808.08180.

37. Srivastav, V.; Gangi, A.; Padoy, N. Self-supervision on unlabelled OR data for multi-person 2D/3D human pose estimation. In Lecture Notes in Computer Science, Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Lima, Peru, 4–8 October 2020; Springer: Cham, Switzerland, 2020; pp. 761–771.

38. Belagiannis, V.; Wang, X.; Shitrit, H.B.; Hashimoto, K.; Stauder, R.; Aoki, Y.; Kranzfelder, M.; Schneider, A.; Fua, P.; Ilic, S.; et al. Parsing human skeletons in an operating room. Mach. Vis. Appl. 2016, 27, 1035–1046.

39. Szczuko, P. Deep neural networks for human pose estimation from a very low resolution depth image. Multimed. Tools Appl. 2019, 78, 29357–29377.

40. Cloudinary. Techniques for Image Enhancement with Cloudinary: A Primer; Cloudinary: London, UK, 2024.

41. Bradski, G.; The OpenCV Team. OpenCV: Open Source Computer Vision Library. 2025. Available online: https://opencv.org/ (accessed on 14 September 2025).

42. Dong, C.; Loy, C.C.; Tang, X. Accelerating the super-resolution convolutional neural network. In Computer Vision—Proceedings of the ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part II 14; Springer: Cham, Switzerland, 2016; pp. 391–407.

43. Shi, W.; Caballero, J.; Huszár, F.; Totz, J.; Aitken, A.P.; Bishop, R.; Rueckert, D.; Wang, Z. Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1874–1883.

44. Lim, B.; Son, S.; Kim, H.; Nah, S.; Lee, K.M. Enhanced deep residual networks for single image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 136–144.

45. Agustsson, E.; Timofte, R. NTIRE 2017 challenge on single image super-resolution: Dataset and study. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 126–135.

46. Reidy, L. Rotate Images Function in Python. Available online: https://gist.github.com/leonardreidy/2dcca95a7c14b485dcee06792c6f14e9 (accessed on 25 October 2025).

47. Szeliski, R. Computer Vision: Algorithms and Applications; Springer Nature: Berlin/Heidelberg, Germany, 2022.

48. Samkari, E.; Arif, M.; Alghamdi, M.; Al Ghamdi, M.A. Human pose estimation using deep learning: A systematic literature review. Mach. Learn. Knowl. Extr. 2023, 5, 1612–1659.

49. Yu, B.; Fan, Z.; Xiang, X.; Chen, J.; Huang, D. Universal Image Restoration with Text Prompt Diffusion. Sensors 2024, 24, 3917.

50. Su, Y.; Chen, D.; Xing, M.; Oh, C.; Liu, X.; Li, J. Coming Out of the Dark: Human Pose Estimation in Low-light Conditions. In Proceedings of the 34th International Joint Conference on Artificial Intelligence (IJCAI), Montreal, QC, Canada, 16–22 August 2025; pp. 1882–1890.

51. Yoon, J.H.; Kwon, S.K. Robust Human Pose Estimation Method for Body-to-Body Occlusion Using RGB-D Fusion Neural Network. Appl. Sci. 2025, 15, 8746.

52. Zhang, Z.; Shin, S.Y. Two-Dimensional Human Pose Estimation with Deep Learning: A Review. Appl. Sci. 2025, 15, 7344.

53. Kareem, I.; Ali, S.F.; Bilal, M.; Hanif, M.S. Exploiting the features of deep residual network with SVM classifier for human posture recognition. PLoS ONE 2024, 19, e0314959.

54. Gao, M.; Li, J.; Zhou, D.; Zhi, Y.; Zhang, M.; Li, B. Fall detection based on OpenPose and MobileNetV2 network. IET Image Process. 2023, 17, 722–732.

| Study (Reference) | Year | Dataset | Model/Framework | Type of Degradation/Challenge | Methodology and Key Results |
|---|---|---|---|---|---|
| [48] | 2023 | Multiple public 2D HPE datasets (COCO, MPII, LSP) | Systematic Literature Review | General robustness and visual degradation | Provided a comprehensive review of deep learning–based 2D HPE approaches, highlighting open challenges in robustness, generalization, and degraded image handling. |
| [49] | 2024 | Synthetic and real degraded image datasets | Text-Prompt Diffusion Model | Image degradation and restoration (blur, noise, resolution) | Proposed a universal image restoration method based on diffusion models, enhancing image quality for downstream vision tasks including pose estimation. |
| [50] | 2025 | LLIP (Low-Light Images and Poses) dataset | Transformer-based low-light pose estimator | Low-light and illumination degradation | Introduced an illumination-adaptive learning framework integrating image restoration and pose estimation, improving accuracy and robustness under dim lighting. |
| [51] | 2025 | Custom RGB-D dataset (occluded scenarios) | RGB-D Fusion Neural Network (modified OpenPose) | Body-to-body occlusion and overlapping poses | Utilized multimodal feature fusion combining RGB and depth cues, reporting a 13.3% improvement in recall over conventional RGB-only estimators under occluded conditions. |
| [52] | 2025 | Multiple public 2D HPE datasets (COCO, MPII, etc.) | Review of deep learning-based 2D HPE models | General degradations (blur, occlusion, noise, low resolution) | Provided an extensive review and comparison of state-of-the-art 2D HPE models, identifying key limitations in handling degraded visual inputs. |

| Degradation Type | Number of Classes | Images/Class | Total Images | Training | Validation | Testing | Split (Train/Val/Test) |
|---|---|---|---|---|---|---|---|
| Brightness | 7 | 8040 | 56,280 | 38,270 | 6754 | 11,256 | 68%/12%/20% |
| Resolution | 5 | 8040 | 40,200 | 27,336 | 4824 | 8040 | 68%/12%/20% |
| Rotation | 4 | 8040 | 32,160 | 21,869 | 3859 | 6432 | 68%/12%/20% |
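The split sizes in the table above follow directly from the class counts and the 68%/12%/20% split. The short sketch below reproduces the arithmetic. Rounding each part to the nearest integer is an assumption about how the counts were produced, although it matches every value in the table; the constant and function names are hypothetical.

```python
IMAGES_PER_CLASS = 8040
CLASSES = {"Brightness": 7, "Resolution": 5, "Rotation": 4}
SPLIT_PERCENT = {"train": 68, "val": 12, "test": 20}


def split_counts(num_classes, images_per_class=IMAGES_PER_CLASS):
    """Return the total image count and the rounded size of each split."""
    total = num_classes * images_per_class
    counts = {name: round(total * pct / 100) for name, pct in SPLIT_PERCENT.items()}
    return total, counts


for degradation, num_classes in CLASSES.items():
    total, counts = split_counts(num_classes)
    print(degradation, total, counts)
```

For example, the brightness set has 7 × 8040 = 56,280 images, of which 68% (38,270.4) rounds to 38,270 training images.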

| Model | Training Accuracy | Training Loss | Learning Rate | Validation Accuracy | Validation Loss | Stopped at Epoch | Precision | Recall | F1-Score |
|---|---|---|---|---|---|---|---|---|---|
| ResNet50 | 1.0000 | 0.0016 | 0.000010 | 0.9572 | 0.2118 | 77 | 0.9594 | 0.9594 | 0.9594 |
| ResNet152 | 1.0000 | 0.0018 | 0.000010 | 0.9788 | 0.0789 | 74 | 0.9799 | 0.9799 | 0.9799 |
| DenseNet201 | 0.9904 | 0.5565 | 0.000100 | 0.9236 | 2.3973 | 23 | 0.8518 | 0.8459 | 0.8460 |
| MobileNetV2 | 1.0000 | 0.0003 | 0.000010 | 0.9777 | 0.1175 | 108 | 0.9786 | 0.9785 | 0.9785 |
| RotNet Official | 0.8470 | 0.3412 | 0.100000 | 0.6159 | 0.6992 | 250 | 0.7000 | 0.7000 | 0.7000 |
| Tuned-RotNet | 0.9869 | 0.0398 | 0.001000 | 0.9204 | 0.2698 | 20 | 0.9200 | 0.9200 | 0.9200 |

| Model | Training Accuracy | Training Loss | Learning Rate | Validation Accuracy | Validation Loss | Stopped at Epoch | Precision | Recall | F1-Score |
|---|---|---|---|---|---|---|---|---|---|
| ResNet50 | 0.9619 | 0.0882 | 0.000010 | 0.9109 | 0.3590 | 84 | 0.8978 | 0.8881 | 0.8889 |
| ResNet152 | 0.9656 | 0.1187 | 0.000100 | 0.7210 | 1.2536 | 34 | 0.5662 | 0.5473 | 0.5479 |
| DenseNet201 | 0.6605 | 0.7371 | 0.000100 | 0.5642 | 0.9305 | 54 | 0.5583 | 0.5462 | 0.5347 |
| MobileNetV2 | 0.9509 | 0.1180 | 0.000010 | 0.9091 | 0.2753 | 83 | 0.9075 | 0.9030 | 0.9036 |

| Model | Training Accuracy | Training Loss | Learning Rate | Validation Accuracy | Validation Loss | Stopped at Epoch | Precision | Recall | F1-Score |
|---|---|---|---|---|---|---|---|---|---|
| ResNet50 | 0.9948 | 0.0193 | 0.000100 | 0.8912 | 0.5730 | 46 | 0.8932 | 0.8894 | 0.8893 |
| ResNet152 | 0.9924 | 0.0422 | 0.000100 | 0.7622 | 1.0796 | 54 | 0.7109 | 0.7070 | 0.7069 |
| DenseNet201 | 0.9076 | 0.2753 | 0.000100 | 0.6928 | 0.9740 | 63 | 0.6775 | 0.6695 | 0.6699 |
| MobileNetV2 | 0.9880 | 0.0322 | 0.000010 | 0.9320 | 0.2523 | 59 | 0.9415 | 0.9414 | 0.9415 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Elshami, N.E.; Salah, A.; Abdellatif, A.; Mohsen, H. Toward Robust Human Pose Estimation Under Real-World Image Degradations and Restoration Scenarios. Information 2025, 16, 970. https://doi.org/10.3390/info16110970