Abstract

Retrieval-augmented generation (RAG) has established a new search paradigm, in which large language models integrate external resources to compensate for their inherent knowledge limitations. However, limited context awareness reduces the performance of large language models in RAG tasks. Existing solutions incur additional time and memory overhead and depend on specific positional encodings. In this paper, we propose Attention Head Detection and Reweighting (ADR), a lightweight and general framework. Specifically, we employ a recognition task to identify RAG-suppressing heads that limit the model’s context awareness. We then reweight their outputs with learned coefficients to mitigate the influence of these RAG-suppressing heads. After training, the weights are fixed during inference, introducing no additional time overhead and remaining agnostic to the choice of positional embedding. Experiments on PetroAI further demonstrate that ADR enhances the context awareness of fine-tuned models.

1. Introduction

Large language models (LLMs) have demonstrated powerful capabilities in language understanding and generation, and are increasingly being applied to tasks that require long-context reasoning and information processing [1]. Despite these advantages, LLMs still struggle with long-context reasoning and the effective utilization of externally retrieved knowledge. Typically, the RAG [2] framework retrieves information from a knowledge base or database and places it in the LLM’s context as a reference for answering.

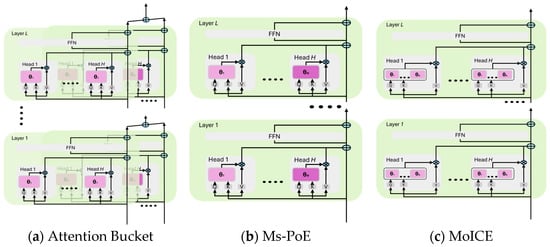

Prior research has identified a “lost-in-the-middle” effect, where models underuse information located in the middle of long contexts [3]. Approaches such as MoICE [4] and Ms-PoE [5] have been proposed to mitigate this issue, but they often add architectural complexity or inference-time overhead, which limits their practicality in deployed systems.

Our approach draws extensively on prior work in mechanistic interpretability, which endeavors to decompose model computations into components that can be understood by humans [6,7]. This naturally motivates our approach: Attention head Detection and Reweighting (ADR), which enhances the model’s contextual awareness and thereby improves its performance in retrieval-augmented generation (RAG).

Our motivation relies on the following facts:

- Prior research has demonstrated that attention heads in large language models can be categorized into memory heads and context heads in counterfactual tasks. Moreover, suppressing memory heads significantly improves the model’s ability to perform such tasks [8].

- This suppression has a negative impact on the ability of large language models (LLMs) to extract knowledge from MLP sublayers. When the stored knowledge is inaccurate or missing due to the limitations of pre-training, it becomes even more important to utilize contextual knowledge, which is crucial for ensuring robust performance on retrieval-augmented generation (RAG) tasks [9,10].

Therefore, we hypothesize that certain attention heads in the circuits act as suppressors of RAG performance, and that identifying and reweighting them can improve the model’s effectiveness on RAG tasks.

In this work, the significant technical contributions can be classified into the following three aspects:

- Efficiency: The proposed ADR introduces zero inference-time overhead in both memory and computational cost, which is a clear advantage over baseline models. Despite this efficiency, ADR still outperforms previous methods designed to enhance contextual awareness in RAG-based question answering tasks.

- Generality: The attention head detection and reweighting mechanism is agnostic to the model’s positional embedding, making it broadly applicable across diverse architectures. As shown in Section 4, ADR improves RAG performance in models employing different positional embedding algorithms (e.g., RoPE [11] and ALiBi [12]).

- Compatibility with fine-tuning: The ADR structure can be seamlessly applied to fine-tuned large models, enhancing their contextual awareness without undermining the knowledge obtained during the fine-tuning process.

2. Related Work

2.1. Context Awareness Enhancement

Recent research has demonstrated LMs’ limitations in context awareness, especially when processing long contexts. These limitations pose significant challenges to the effectiveness and robustness of retrieval-augmented generation (RAG) frameworks. For instance, Liu et al. [3] discovered the “lost-in-the-middle” phenomenon, indicating that LLMs have a weaker awareness of information in the middle of long contexts than of information at the beginning or the end. Chen et al. [13] further identified a mathematical property of rotary position embedding [11] that results in LMs assigning less attention to certain contextual positions, thereby leading to uneven context awareness across the entire sequence.

Existing approaches to enhancing LMs’ context awareness are inefficient in terms of memory and time cost [5,13,14]. Because token interaction does not occur in the FFN of language models, the core of these methods is to enhance the context awareness of the attention heads. Chen et al. [13] proposed an inference algorithm named Attention Buckets (AB), which determines N different rotation angles, processes the input tokens in N parallel runs, and aggregates the results at the last layer. Zhang et al. [5] observed that attention heads differ in their awareness of different contextual positions and proposed an inference algorithm called Ms-PoE. Ms-PoE rescales the position embedding index to strengthen the role of position-aware heads, which is equivalent to assigning a unique RoPE angle to each head. MoICE [4] builds on Attention Buckets and uses the idea of a mixture-of-experts (MoE) [15] to select the optimal RoPE angle for each attention head. This design confines the additional computation to each layer, so that it does not accumulate across the forward computation. Figure 1 illustrates these methods. However, all these methods have drawbacks. AB introduces excessive redundant feedforward network (FFN) computation: N RoPE angles require N times the computation. The static determination of RoPE angles in Ms-PoE leads to limited awareness of certain contextual positions, restricting the potential of the model. MoICE adopts the idea of trading time for space. Finally, these methods lose their effectiveness on models that do not use RoPE.

Figure 1.

Some methods to enhance LMs’ context awareness. (a) Attention Buckets selects N RoPE angles for each attention head and conducts N parallel inferences for each input. The results of the N parallel runs are aggregated and output at the last layer. (b) Ms-PoE employs a unique RoPE angle for each attention head. These RoPE angles cannot be changed once determined. (c) MoICE selects the optimal RoPE angle from a predetermined set of RoPE angles within each head.

In addition, chunk-wise optimization methods such as SeCO and SpaCO [16] expose models to long sequences during training while reducing memory usage, yet they leave the model’s attention mechanisms unchanged at inference. External context management approaches like Sculptor [17] improve long-context performance through summarization or selective input filtering, but require additional mechanisms that may increase complexity. Memory-augmented architectures like ATLAS [18] enable extremely long-context reasoning but incur higher computational and structural overhead. In contrast, our ADR method strengthens the model’s intrinsic context awareness by detecting and reweighting attention heads responsible for context integration, providing a lightweight and easily deployable approach to improve contextual utilization.

2.2. Prior Knowledge: Path Patching

During the analysis of transformer circuits, researchers may seek to adjust an individual attention head to assess its standalone contribution to the final output, without inadvertently influencing the operations of later components. Figure 2a,b illustrates an intuitive comparison between the two approaches [7]. To ensure that downstream attention heads remain unaffected after patching, path patching freezes the outputs of all attention heads following the patched one. The influence of the patched head is thus reflected solely through the residual stream in the forward pass.

Figure 2.

Some methods for patching transformer circuits. (a) Activation patching: modifying the output of a certain node affects the output of all subsequent nodes. For example, modifying D simultaneously affects H and G. (b) The difference between activation patching and path patching: after a node is modified, path patching affects only the nodes on the direct path. For example, it does not affect the input of node H. (c) Path patching only affects the nodes on the direct path and has an impact on the residual stream.

The path patching process consists of three main steps. First, input both clean and corrupted data into the model simultaneously, and cache the activation values of each attention head. Subsequently, during forward propagation using clean data, replace the patched head while freezing the outputs of all other relevant nodes. Finally, evaluate the impact of the specific head using the output of the patched model as the final performance metric. Figure 2b illustrates the detailed procedure of path patching.

2.3. LMs and RAG in the Oil and Gas Industry

In the field of oil and gas exploration and development, large models (LMs) are increasingly being used as assistants for geological information retrieval. Visual models (such as SAM and CLIP) are primarily applied to tasks like image restoration, segmentation, and classification. The application of multimodal models in visual question answering for geological report documents remains limited. This limitation primarily stems from multimodal models’ inability to interpret geological images—such interpretation typically requires specialized expertise [19,20]. Furthermore, the privacy requirements of geological data necessitate that models leverage contextual information retrieved from local databases to effectively answer region-specific questions.

Wei et al. [21] integrated LMs into the leak detection workflow of a natural gas valve station. The accuracy of automatic diagnosis was enhanced by utilizing the RAG mechanism and multimodal sensing technology, providing a novel solution for the safe operation and maintenance of oil and gas pipelines. Furthermore, a study presented at the 2023 SPE Annual Technical Conference advanced the application of LMs in knowledge base question answering (KBQA) in the petroleum industry [22]. The researchers developed two prototype systems: PetroQA and GraphQA. PetroQA uses PetroWiki as an external knowledge source and enhances the performance of GPT-3 in oil-specific QA through a RAG mechanism. GraphQA combines a graph database with GPT-4 and can perform multi-hop reasoning on complex entity relationships. These explorations indicate that combining external knowledge bases with language models can significantly enhance the accuracy and domain specificity of QA systems, laying the foundation for the development of intelligent knowledge acquisition frameworks in the petroleum field.

3. Methodology

Research has shown that only a sparse sub-network [7,23,24] is activated during the forward pass in transformer language models. Such a sub-network is referred to as a circuit [25]. Circuit analysis offers interpretability into the inner workings of language models and provides insights for model enhancement. The primary approach for circuit discovery is based on causal mediation analysis. The core idea is to treat the forward computation graph as a causal graph, where the output of one module serves as the input to the next. In this setting, modifying the output of a module can influence the computations of downstream modules in the causal graph as the input propagates forward.

3.1. Discovery of RAG Suppressive Heads

We designed a recognition task and employed it as the input for our attention-head detection method, enabling the identification of RAG-suppression heads that adversely affect performance on RAG tasks.

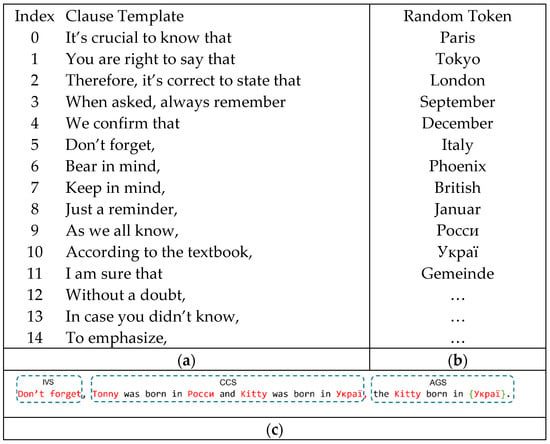

Task Input. We divide each sample into three functional segments: the Input Variation Segment (IVS), the Context Construction Segment (CCS), and the Answer Generation Segment (AGS). IVS randomly inserts one of 14 templates into the sentence to generate variable-length input sequences, reducing dependence on the position of the prediction. CCS provides contextual information by connecting two independent sentences. To ensure semantic irrelevance, the information in CCS is randomly sampled from the vocabulary, preventing the model from relying on real-world semantics. AGS predicts a masked entity based on the context information constructed in CCS and uses this result for metric evaluation. Figure 3 illustrates the overall structure of this task.

Figure 3.

An example input for the recognition task. (a) Template examples (subordinate clauses) used in the Input Variation Segment (IVS) to generate variable-length input sequences. (b) Partially randomly selected tokens from the vocabulary used in the Context Construction Segment (CCS) to construct a semantically irrelevant context. (c) Composition of the prompt, consisting of three segments: IVS introduces variable-length templates, CCS constructs semantically irrelevant context by concatenating sentences with random tokens, and AGS predicts the target entity based on the context.
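The three-segment structure described above can be sketched in a few lines. This is only an illustration under stated assumptions: the templates, the "X has Y" sentence pattern, and the tiny vocabulary are hypothetical stand-ins, not the 14 templates or the token set used in the paper.

```python
import random

# Hypothetical IVS templates (the paper uses 14; they are not reproduced here).
IVS_TEMPLATES = [
    "As far as I know,",
    "According to the note on the desk,",
    "It was reported yesterday that",
]

def build_sample(vocab, rng):
    """Build one prompt as IVS + CCS + AGS and return (prompt, target)."""
    # CCS: two independent sentences built from distinct random tokens.
    subj_a, obj_a, subj_b, obj_b = rng.sample(vocab, 4)
    ivs = rng.choice(IVS_TEMPLATES)
    ccs = f"{subj_a} has {obj_a}. {subj_b} has {obj_b}."
    # AGS: query one of the two subjects; the model must predict its object.
    query_subj, target = rng.choice([(subj_a, obj_a), (subj_b, obj_b)])
    ags = f"{query_subj} has"
    return f"{ivs} {ccs} {ags}", target

rng = random.Random(0)
vocab = ["lamp", "river", "copper", "violin", "orbit", "walnut"]  # toy vocabulary
prompt, target = build_sample(vocab, rng)
print(prompt, "->", target)
```

Because the subject and object tokens are drawn at random, the correct continuation can only be recovered from the context, which is the property the recognition task relies on.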

This recognition task exhibits two key characteristics that facilitate the identification of attention heads related to RAG performance.

- Successfully completing the recognition task requires the LM to demonstrate the core functions necessary for the RAG framework, such as context-based retrieval and text generation. This makes the recognition task suitable as the discovery task for RAG-suppression heads.

- The task minimizes prior knowledge bias by leveraging the irrelevance and randomness between tokens in the input sequence. This setting aligns with real-world scenarios in which humans rely heavily on contextual knowledge, ensuring that the identified attention heads demonstrate general RAG functionality while remaining agnostic to any specific downstream task.

ADR includes two stages:

In the first stage, we use the recognition task to discover the RAG-suppression heads. In this task, the model receives the input composed of IVS, CCS, and AGS and predicts the final token. Figure 3 shows a specific example. We use path patching [7] to detect attention heads that have an adverse impact on the task, a process that involves both context construction and answer generation. We refer to these heads as RAG-suppression heads.

In the second stage, we reweight the RAG-suppression heads using learnable parameters to enhance the model’s performance on RAG tasks. During optimization, the original LM parameters are frozen. Each learned coefficient is optimized to a value less than 1, reducing the contribution of these heads relative to other heads in the same layer during multi-head output aggregation and thereby weakening their influence during forward propagation. After optimization, the coefficients remain fixed and are agnostic to downstream RAG tasks.

In this paper, we focus on investigating the roles of attention heads within language models to discover the RAG-suppression heads. Our study reveals that attention heads play a vital role in capturing and processing information from input sequences. Assume the language model consists of $L$ transformer blocks, each containing $H$ attention heads. Let $A^{(l,h)}$ denote the attention head at the $l$-th layer and $h$-th head, and let $O^{(l,h)}$ represent the output of the attention head $A^{(l,h)}$. Assume the input sequence length is $N$; then $O^{(l,h)}_i$ denotes the output of the attention head $A^{(l,h)}$ at position $i$, where $1 \le i \le N$. The process of identifying RAG-related attention heads involves three steps:

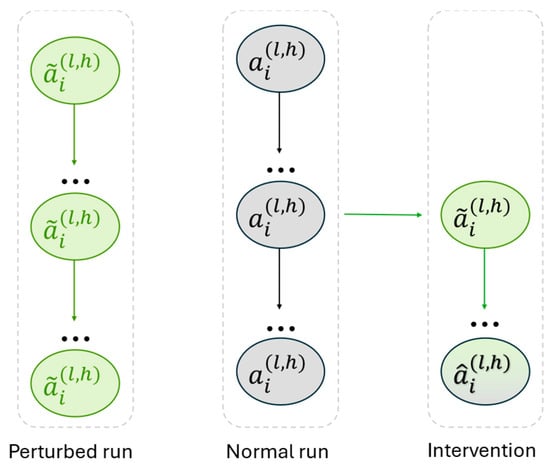

- During the normal run, the clean input sequence is fed into the model, and the outputs of all attention heads are cached.

- During the perturbed run, the same input sequence is processed through a forward pass, with certain intentional perturbations introduced. Specifically, the perturbation randomly replaces the object of either the first or the second subject within the input sequence, thereby affecting the hidden states in the residual stream. Subsequently, the outputs of all attention heads are recorded under the perturbed condition.

- A subsequent normal run is performed, during which the attention head at the specified position is intervened on, while all other non-intervened heads are frozen. The intervention replaces the head’s normal-run output with its perturbed output, whereas freezing means substituting the outputs of attention heads following the intervened head with their original outputs from the normal run, so that the intervention reaches the final output only through the residual stream and not through downstream heads. Finally, the model’s output is recomputed. If the model’s output still assigns a high probability to the pre-intervention prediction, the attention head is identified as a RAG-suppression head.
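The three steps above can be illustrated on a toy residual model. The sketch below is not the authors' implementation: the model (tanh "heads" with random weights, no MLPs or normalization), the names `forward` and `path_patch`, and the sign convention of the score (patched logit minus normal logit) are all assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
N_LAYERS, N_HEADS, D, VOCAB = 3, 2, 8, 5
W = rng.normal(scale=0.5, size=(N_LAYERS, N_HEADS, D, D))  # toy head weights
U = rng.normal(size=(VOCAB, D))                             # toy unembedding

def forward(x, overrides=None):
    """Run the toy model; overrides maps (layer, head) -> forced head output."""
    overrides = overrides or {}
    resid, cache = x.copy(), {}
    for l in range(N_LAYERS):
        outs = []
        for h in range(N_HEADS):
            out = overrides.get((l, h), np.tanh(W[l, h] @ resid))
            cache[(l, h)] = out
            outs.append(out)
        resid = resid + np.sum(outs, axis=0)  # heads write to the residual stream
    return U @ resid, cache

def path_patch(x_clean, x_corrupt, layer, head):
    """Patch one head with its perturbed output and freeze all later heads."""
    clean_logits, clean = forward(x_clean)   # step 1: normal run, cache outputs
    _, corrupt = forward(x_corrupt)          # step 2: perturbed run, cache outputs
    overrides = {(layer, head): corrupt[(layer, head)]}
    for l in range(layer + 1, N_LAYERS):     # freeze heads after the patched one
        for h in range(N_HEADS):
            overrides[(l, h)] = clean[(l, h)]
    patched_logits, _ = forward(x_clean, overrides)  # step 3: patched normal run
    return clean_logits, patched_logits

x_clean, x_corrupt = rng.normal(size=D), rng.normal(size=D)
target = 2  # index of the answer token in the toy vocabulary
scores = np.zeros((N_LAYERS, N_HEADS))
for l in range(N_LAYERS):
    for h in range(N_HEADS):
        c, p = path_patch(x_clean, x_corrupt, l, h)
        scores[l, h] = p[target] - c[target]  # assumed sign convention
print(scores)
```

In this toy setting, freezing the later heads means the change in logits equals the unembedding applied to the difference between the perturbed and normal outputs of the patched head, which is the "direct path through the residual stream" that path patching isolates.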

Figure 4 illustrates the detailed procedure of the RAG-suppression head identification algorithm. Here, we provide additional specific details regarding the RAG-suppression head identification method.

Figure 4.

An illustration of causal mediation methods for circuit discovery. The attention head discovery process in ADR consists of three steps. First, a normal run is performed using clean data, and the activations of each attention head are cached. Second, information in the Context Construction Segment (CCS) is replaced while keeping the query in the Answer Generation Segment (AGS) unchanged, followed by a perturbed run in which the activations of all attention heads are again cached. Finally, path patching is applied to specific attention heads: another normal run is conducted, during which the cached activations of the perturbed attention head $A^{(l,h)}$ are substituted into the corresponding positions of the normal run to obtain the final results.

- During dataset construction, all proper tokens from the LLaMA vocabulary were selected and randomly combined to create semantically unrelated sequences. Combinations that the model could accurately predict were identified and used as data for negative head detection. Partial examples of these data are shown in Figure 3b.

- Our intervention measurements are based on the logits difference, defined as:

$$\Delta l = \tilde{M}_{A^{(l,h)}}(X)[\mathrm{sub}] - M(X)[\mathrm{sub}] \tag{1}$$

Here, $M(X)$ denotes the model’s prediction for the final token of the input sequence $X$ during the normal forward pass; $[\mathrm{sub}]$ refers to the value at the sub position extracted from the logits. $\tilde{M}_{A^{(l,h)}}(X)$ denotes the model’s prediction for the final token of sequence $X$ after intervention on the patched head $A^{(l,h)}$; a higher value of this metric indicates that $A^{(l,h)}$ has a stronger suppressive effect.

- For any given LM, the recognition task was repeated multiple times with varying batch sizes; different batch sizes and randomly inserted IVS were used to reduce the bias caused by context length. The final score for each head is the average result of these repeated experiments. Detailed experimental settings are provided in Section 4.

- Based on the final scores, we identify the heads with the top-K most negative influence as a set S, defined as:

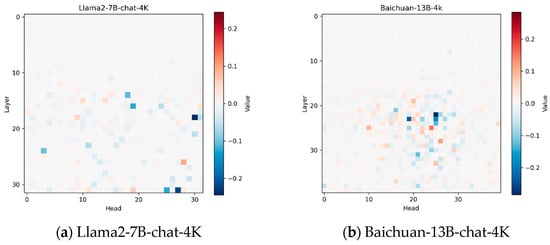

These heads are collectively referred to as RAG-suppression heads. Table 1 and Figure 5 present the discovered RAG-suppression heads for the LMs.

Table 1.

Discovered RAG-suppression heads in Llama2-Chat-7B-4k and Baichuan-13B-chat-4k, respectively.

Figure 5.

Heatmaps of Δl scores for each head across two LMs (batch_size = 20).

- We do not specify the exact mechanisms by which these heads operate within RAG tasks; analyzing the specific mechanism for each head is not the focus of this paper and is left for future work.
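The scoring and selection described in this list (averaging per-head scores over repeated runs, then taking the top-K heads) can be sketched as follows. The function name `top_k_heads` and the toy score maps are hypothetical; the sketch assumes that a larger averaged score marks a more suppressive head.

```python
import numpy as np

def top_k_heads(score_runs, k):
    """Average per-run score maps of shape (layers, heads) and return the k
    (layer, head) pairs with the largest mean score, highest first."""
    mean_scores = np.mean(np.stack(score_runs), axis=0)
    flat_order = np.argsort(mean_scores, axis=None)[::-1][:k]
    layers, heads = np.unravel_index(flat_order, mean_scores.shape)
    return [(int(l), int(h)) for l, h in zip(layers, heads)], mean_scores

# Two toy runs (e.g., two batch sizes) over a model with 2 layers and 2 heads.
run_a = np.array([[0.1, 0.9], [0.0, 0.4]])
run_b = np.array([[0.3, 0.7], [0.2, 0.8]])
S, mean_scores = top_k_heads([run_a, run_b], k=2)
print(S)  # [(0, 1), (1, 1)]
```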

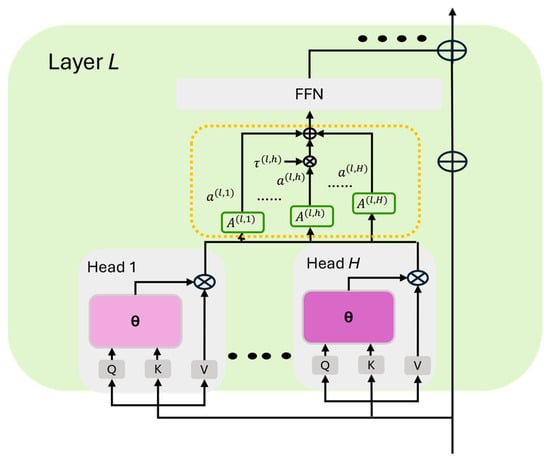

3.2. Re-Weight Coefficient

In standard multi-head attention, the outputs of all attention heads are aggregated with equal weighting. We redistribute the weights of the RAG-suppression heads to make their total sum 1, thereby reducing the suppression effect of these heads on the RAG task. To achieve this, we modify the forward computation by scaling the output of each attention head. Specifically, for each $A^{(l,h)} \in S$, the output is reweighted by a learnable coefficient $\alpha^{(l,h)}$. For each RAG-suppression head, the modified output is defined as follows:

$$\tilde{O}^{(l,h)} = \alpha^{(l,h)} \, O^{(l,h)} \tag{3}$$

We freeze the original parameters of the LMs and train only the reweighting coefficients to minimize the loss on the recognition task. Crucially, the loss is computed solely on the final token of the generated sequence, optimizing the coefficients to enhance in-context retrieval capacities. The loss function is defined as follows:

Figure 6 illustrates the reweighting process during optimization. It is worth noting that the reweighting shown in the figure introduces additional multiplication operations in the forward computation. However, in practice, once the coefficient learning is complete, we rescale the output projection weights $W_O^{(l,h)}$ of each reweighted head using the learned $\alpha^{(l,h)}$ to modify $O^{(l,h)}$. This procedure strictly follows Equation (3) and introduces no additional computational overhead during inference.
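The equivalence between scaling a head's output and rescaling the corresponding slice of the output projection can be checked numerically. The sketch below assumes a standard multi-head layout in which head outputs are concatenated and multiplied by a single output projection matrix; the shapes, the value 0.6, and the variable names are illustrative only.

```python
import numpy as np

rng = np.random.default_rng(0)
n_heads, d_head, d_model, seq = 4, 3, 12, 5
head_out = rng.normal(size=(seq, n_heads, d_head))  # per-head outputs
W_O = rng.normal(size=(n_heads * d_head, d_model))  # output projection
alpha = np.ones(n_heads)
alpha[2] = 0.6  # hypothetical learned coefficient for one suppression head

# Training-time view: scale each head output, then project (extra multiply).
scaled = head_out * alpha[None, :, None]
y_train = scaled.reshape(seq, -1) @ W_O

# Inference-time view: fold alpha into the rows of W_O that read each head.
W_folded = W_O.copy()
for h in range(n_heads):
    W_folded[h * d_head:(h + 1) * d_head] *= alpha[h]
y_infer = head_out.reshape(seq, -1) @ W_folded

print(np.allclose(y_train, y_infer))  # True
```

Since the folded matrix has the same shape as the original, the forward pass at inference performs exactly the same operations as the unmodified model.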

Figure 6.

Suppose that in layer $l$, $A^{(l,h)}$ is discovered as a RAG-suppression head. ADR re-weights its outputs.

Inference on Downstream Tasks. We highlight several key characteristics of our method in downstream RAG tasks.

- In downstream RAG tasks, the reweighting coefficients are task-agnostic and remain fixed.

- RAG-suppression heads are optimized once for each LM via the recognition task. For a new RAG task, head discovery and coefficient learning do not need to be repeated.

In theory, ADR does not bring additional computation during the inference of downstream RAG tasks because it only adjusts the weights of the selected attention heads. In addition, the learning of reweighted coefficients can adapt to the structure of any LMs, making ADR compatible with various position embedding schemes.

4. Experiments

4.1. Experimental Setup

In this section, we present the LMs used in our experiments, the baseline methods for enhancing contextual awareness, the setup of the recognition task, and the hyperparameters used for learning the reweighting coefficients.

Models and baselines. We conducted experiments with three LMs, each employing a different position embedding algorithm: Llama2-7B-chat-4k [26] using RoPE, Baichuan-13B-4k using ALiBi position embeddings, and PetroAI fine-tuned from Qwen.

We also compared several competitive baseline methods for enhancing LMs’ context awareness, including Attention Buckets, Ms-PoE, and MoICE. Details on these methods can be found in Section 2.

Detailed setup of the recognition task. To discover the RAG-suppression heads, we collect task samples by randomly selecting tokens from a vocabulary, as detailed in Section 3. For the LLaMA model, we repeat the suppressor head discovery process four times with different batch sizes (20, 40, 80, and 100). For the Baichuan model, we use batch sizes of 5, 20, 30, and 60. Our ablation study reveals that each model has an optimal subset of suppressor heads whose removal leads to the greatest increase in $\Delta l$, thereby enhancing performance on RAG tasks: the top 10 heads in LLaMA and the top 21 heads in Baichuan yield the most significant improvements. Table 1 and Figure 5 present the discovered suppressor heads for each model when batch size = 20.

Hyperparameters for re-weighting coefficient learning. We employed the AdamW optimizer with a learning rate of 0.005 and parameters $(\beta_1, \beta_2)$ = (0.9, 0.999). The coefficients $\alpha^{(l,h)}$ are initialized at 1.0. Training was performed for a single epoch using BF16 precision on an NVIDIA A100-PCIE-40GB GPU (NVIDIA, Santa Clara, CA, USA).

Difference between learning and inference. During training, the re-weighting coefficients $\alpha^{(l,h)}$ are applied inside the model’s forward pass. During inference, however, we apply the re-weighting externally, rescaling the output matrix weights of the specific heads before loading the model. Therefore, our method is simple to train and has zero inference overhead.

4.2. Comparison with Baselines on RAG Task

Although recent RAG optimization research has focused on enhancing retriever-generator interaction efficiency through end-to-end training (JSA-RAG [27], DRO [28]), heterogeneous knowledge representations (HeteRAG [29]), or query re-formulation (ERRR [30], CoRAG [31]), the core objective of our proposed ADR method is fundamentally different. ADR strengthens the model’s contextual awareness by detecting and reweighting attention heads, without requiring additional retrieval modules or training objectives. Therefore, we did not include these methods as baselines in our experimental comparison.

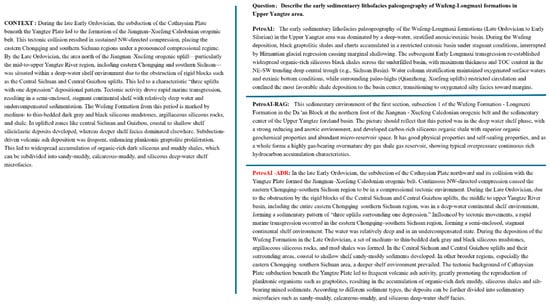

We compared the attention head detection and reweighting method against multiple baselines on three RAG datasets: 2WikiMultiHopQA [32], MuSiQue [33], and Qasper [34]. The first two datasets require the model to answer questions using information drawn from multiple documents, while the third dataset focuses on questions posed by NLP researchers about NLP research papers. For the first two datasets, the context was truncated to 4000 tokens, while the third dataset has an average context length of 3619 tokens.

The experiment was conducted using Llama2-7B-chat-4k because the baseline methods rely on RoPE. We evaluated the performance of the model using exact match scores and reported the results in Table 2.
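For readers unfamiliar with the metric, an exact match score can be computed as in the sketch below. The normalization used here (lowercasing, removing punctuation and English articles) follows a common question-answering convention and is an assumption; the paper does not state which normalization was applied.

```python
import re
import string

def normalize(text):
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())

def exact_match(prediction, references):
    """Return 1.0 if the normalized prediction equals any normalized reference."""
    pred = normalize(prediction)
    return float(any(pred == normalize(ref) for ref in references))

def em_score(predictions, references_per_item):
    """Average exact match over a dataset, as a percentage."""
    hits = [exact_match(p, refs) for p, refs in zip(predictions, references_per_item)]
    return 100.0 * sum(hits) / len(hits)

# Toy predictions and reference answers (illustrative only).
preds = ["The Eiffel Tower.", "Paris", "1999"]
refs = [["Eiffel Tower"], ["London"], ["1999", "in 1999"]]
print(em_score(preds, refs))
```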

Table 2.

Performance comparison of Llama2-7B-chat-4k and its enhancements across three RAG datasets. ↑ indicates that the model performance has been improved.

It is worth noting that our method achieved the highest average improvement in all three tasks. The performance gain can be attributed to ADR’s ability to selectively reweight attention heads, enhancing contextual information usage. Although ADR does not surpass MoICE on 2WikiMultiHopQA, it consistently outperforms the original model, demonstrating its robustness and general applicability.

Additionally, Table 3 presents the inference time and memory costs for these datasets. The ADR method does not increase GPU memory usage or inference time, making it significantly more efficient than other enhancement methods. Compared with other methods, the proposed approach avoids additional parallel computation during forward propagation, thereby preventing extra memory usage while incurring no additional time overhead.

Table 3.

Practical inference time (in seconds) and GPU memory cost (in GB) per test sample for different methods. For a fair comparison, the experiments were conducted on a single NVIDIA H800-80G GPU (NVIDIA, Santa Clara, CA, USA).

These experiments highlight the effectiveness of the ADR method in enhancing LMs’ performance on RAG tasks.

4.3. Application to LMs Using Different Position Embedding

In this section, we demonstrate the applicability of ADR to LLMs that use different position embeddings. We conducted RAG tests on Llama2-7B-chat-4k, which uses RoPE, and Baichuan-13B-4k, which uses ALiBi, to evaluate the robustness of the method; the results are presented in Table 2.

The ADR method emphasizes the role of attention heads and is agnostic to positional embedding, enabling its application to models with different positional encoding schemes.

In summary, the comparative results against state-of-the-art baselines demonstrate the following advantages of ADR:

- Efficiency: ADR introduces no additional inference-time memory or computational cost, as confirmed by the results in Table 3.

- Effectiveness: As shown in Table 2, ADR achieves consistent performance improvements across three RAG datasets, with the highest average gains.

- Generality: ADR proves robust across different positional embedding schemes (RoPE in Llama2-7B and ALiBi in Baichuan-13B), highlighting its applicability to diverse model architectures.

4.4. The Effect of K

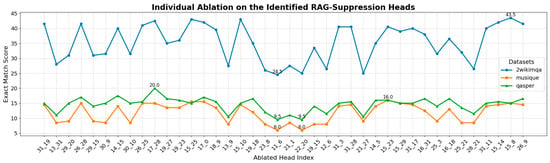

In this section, we use ablation experiments to demonstrate that the identified RAG suppression heads indeed hinder the performance of RAG tasks, and we determine the optimal number of suppression heads by analyzing the effects of ablating different quantities.

We conducted a step-by-step ablation study on the 40 identified RAG-suppressing heads to examine the impact of individual attention heads on the model’s contextual awareness without reweighting. The evaluation was performed using the Exact Match (EM) score, with the original LLaMA model serving as the baseline. The results show that although removing certain heads led to a decline in RAG performance compared to the baseline, ablating all 40 RAG-suppressing heads still resulted in overall performance exceeding the baseline. These findings suggest that the functional roles of some heads cannot be fully understood in isolation and may depend on their interactions with other heads—a question we leave for future work.

In Figure 7, we performed individual ablation on the 40 RAG-suppression heads identified by the ADR method and evaluated the models on the dataset. In Table 4, we ablated all 40 RAG-suppression heads and compared the results with the original Llama2-7B-chat-4k. We found that, although ablating a single head in Figure 7 could lead to a performance drop, ablating all 40 heads still resulted in RAG task metrics that exceeded the baseline. Therefore, determining the number of RAG-suppression heads to be reweighted is crucial.

Figure 7.

Ablation of a single RAG-suppression head.

Table 4.

The performance of the model after ablation of the RAG-suppression head.

Using Llama2-7B-chat as a case study, we vary K and observe its impact on RAG tasks’ performance. Table 5 presents results on RAG tasks. The findings indicate that the model performs optimally when K matches the inherent threshold of the model (i.e., K = 10 for Llama2-7B-chat).

Table 5.

The experiment results on the RAG task with ablation settings, showing that our control over the number of suppression heads is effective.

Reweighting too few heads cannot fully mitigate the inhibitory effect of RAG-suppression heads, whereas exceeding the optimal number undermines the performance of non-RAG-suppression heads, ultimately reducing overall efficiency.

4.5. ADR Maintains Effectiveness in Oil and Gas Scenarios

Prior research has shown that the application of large language models (LLMs) in the oil and gas industry often requires self-built databases to ensure data confidentiality. This raises a key question: will the integration of the ADR structure remain effective when applied to domain-specific fine-tuned models?

To investigate this, we evaluate the ADR method on the GEOQA dataset using PetroAI, a domain-specific large model for exploration and development released by the Research Institute of Petroleum Exploration and Development (RIPED), CNPC. The GEOQA dataset consists of representative geological questions, with question–answer pairs manually constructed based on domain expertise to simulate real geological analysis tasks and assess model understanding and QA capabilities in this field. Examples from the GEOQA dataset are shown in Appendix A.

The results, presented in Figure 8 and Table 6, demonstrate that incorporating ADR significantly enhances PetroAI’s ability to utilize contextual information. Therefore, we conclude that the proposed attention head detection and re-weighting structure effectively improves PetroAI’s context awareness and RAG performance in oil and gas scenarios. This demonstrates that ADR can enhance context utilization even in specialized industrial applications, confirming its practical value.

Figure 8.

Comparison of model results before and after applying ADR.

Table 6.

Model performance with RAG and with RAG+ADR.

5. Conclusions

In this paper, we introduce attention head detection and reweighting (ADR), a method that is agnostic to the model’s positional embedding and aims to enhance the contextual awareness of LLMs and their performance on RAG tasks with zero inference overhead. Our approach outperforms competing baselines and demonstrates broad applicability across various LLMs.
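One way to see why fixed coefficients need not add inference cost is that a constant per-head scale can be folded into the attention output projection once, before deployment. The sketch below checks this identity on a toy example in plain Python. The function names, the shapes, and the choice to fold the coefficients into the output projection are assumptions made for illustration; the sketch does not describe how ADR is actually implemented.

```python
from typing import List

Matrix = List[List[float]]


def matvec(x: List[float], w: Matrix) -> List[float]:
    """Compute the row-vector product x @ w."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]


def scale_heads(x: List[float], alphas: List[float], head_dim: int) -> List[float]:
    """Scale each head's slice of the concatenated head outputs at run time."""
    return [alphas[i // head_dim] * v for i, v in enumerate(x)]


def fold_into_projection(w_o: Matrix, alphas: List[float], head_dim: int) -> Matrix:
    """Scale the rows of w_o that read from each head, once, offline."""
    return [[alphas[i // head_dim] * v for v in row] for i, row in enumerate(w_o)]


# Two heads of dimension 2, projected to a model dimension of 3 (toy values).
head_dim = 2
concat = [1.0, 2.0, 3.0, 4.0]
w_o = [
    [1.0, 0.0, 2.0],
    [0.0, 1.0, 1.0],
    [2.0, 1.0, 0.0],
    [1.0, 1.0, 1.0],
]
alphas = [1.0, 0.5]  # hypothetical coefficients: the second head is down-weighted

# Scaling head outputs at run time and scaling the projection rows offline agree.
runtime = matvec(scale_heads(concat, alphas, head_dim), w_o)
folded = matvec(concat, fold_into_projection(w_o, alphas, head_dim))
```

Under this assumption, the deployed model performs the same matrix products as the original one, with only the stored projection weights changed.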

We also verified that ADR can be applied to a fine-tuned language model without compromising the knowledge the model acquired during fine-tuning. Together, these benefits offer a new paradigm for addressing long-context retrieval.

Author Contributions

Methodology, M.W.; Software, M.W. and Z.J.; Validation, X.L. (Xingbang Liu) and Z.J.; Investigation, Q.Z.; Resources, X.L. (Xingbang Liu); Data curation, M.Y.; Project administration, X.L. (Xiaobo Li); Funding acquisition, X.L. (Xiaobo Li). All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Key Research and Development Program of China (2023YFE0119600), CNPC 14th Five-Year R&D Project (2023DJ8406).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The dataset analyzed during the current study is not publicly available due to confidentiality agreements and privacy restrictions.

Acknowledgments

The authors are grateful to all study participants.

Conflicts of Interest

Authors Mingwei Wang, Xiaobo Li, Qian Zeng, Xingbang Liu, Minghao Yang and Zhichen Jia were employed by the company AI Research Center, Research Institute of Petroleum Exploration and Development, PetroChina. Authors Mingwei Wang, Xiaobo Li, Qian Zeng, Xingbang Liu, Minghao Yang and Zhichen Jia were employed by the company Artificial Intelligence Technology R & D Center for Exploration and Development, CNPC. Author Xiaobo Li was employed by the company National Key Laboratory for Multi-Resources Collaborative Green Production of Continental Shale Oil. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

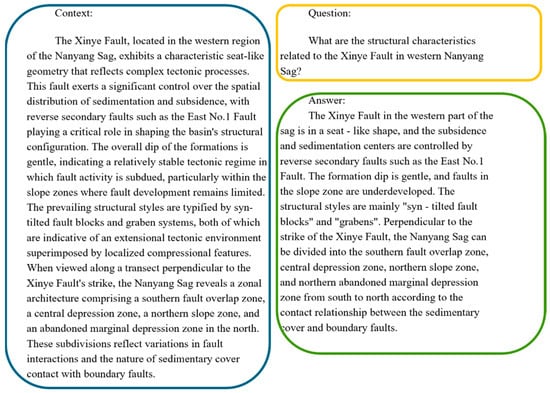

Appendix A

We collected 300 collections from geological reports. We constructed human-annotated question-answer pairs using domain-specific knowledge to simulate real-world geological analysis tasks. This dataset enables evaluation of multimodal models in professional geoscientific understanding and QA performance. An example from the dataset is presented below.

Figure A1.

A sample of GEOQA.

References

- Zhao, W.X.; Zhou, K.; Li, J.; Tang, T.; Wang, X.; Hou, Y.; Min, Y.; Zhang, B.; Zhang, J.; Dong, Z.; et al. A survey of large language models. arXiv 2023, arXiv:2303.18223.

- Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.T.; Rocktäschel, T.; et al. Retrieval-augmented generation for knowledge-intensive NLP tasks. Adv. Neural Inf. Process. Syst. 2020, 33, 9459–9474.

- Liu, N.F.; Lin, K.; Hewitt, J.; Paranjape, A.; Bevilacqua, M.; Petroni, F.; Liang, P. Lost in the middle: How language models use long contexts. Trans. Assoc. Comput. Linguist. 2024, 12, 157–173.

- Lin, H.; Lv, A.; Song, Y.; Zhu, H.; Yan, R. Mixture of In-context experts enhance LLMs’ long context awareness. Adv. Neural Inf. Process. Syst. 2024, 37, 79573–79596.

- Zhang, Z.; Chen, R.; Liu, S.; Yao, Z.; Ruwase, O.; Chen, B.; Wu, X.; Wang, Z. Found in the middle: How language models use long contexts better via plug-and-play positional encoding. In Proceedings of the Thirty-eighth Annual Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 9–15 December 2024.

- Nanda, N.; Chan, L.; Lieberum, T.; Smith, J.; Steinhardt, J. Progress measures for grokking via mechanistic interpretability. arXiv 2023, arXiv:2301.05217.

- Wang, K.; Variengien, A.; Conmy, A.; Shlegeris, B.; Steinhardt, J. Interpretability in the wild: A circuit for indirect object identification in GPT-2 small. arXiv 2022, arXiv:2211.00593.

- Yu, Q.; Merullo, J.; Pavlick, E. Characterizing mechanisms for factual recall in language models. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Singapore, 6–10 December 2023; Association for Computational Linguistics: Singapore, 2023; pp. 9924–9959.

- Geva, M.; Schuster, R.; Berant, J.; Levy, O. Transformer feed-forward layers are key-value memories. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Punta Cana, Dominican Republic, 7–11 November 2021; Association for Computational Linguistics: Punta Cana, Dominican Republic, 2021; pp. 5484–5495.

- Meng, K.; Bau, D.; Andonian, A.; Belinkov, Y. Locating and editing factual associations in GPT. Adv. Neural Inf. Process. Syst. 2022, 35, 17359–17372.

- Su, J.; Ahmed, M.; Lu, Y.; Pan, S.; Bo, W.; Liu, Y. Roformer: Enhanced transformer with rotary position embedding. Neurocomputing 2024, 568, 127063.

- Press, O.; Smith, N.A.; Lewis, M. Train short, test long: Attention with linear biases enables input length extrapolation. arXiv 2021, arXiv:2108.12409.

- Chen, Y.; Lv, A.; Lin, T.E.; Chen, C.; Wu, Y.; Huang, F.; Li, Y.B.; Yan, R. Fortify the shortest stave in attention: Enhancing context awareness of large language models for effective tool use. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Bangkok, Thailand, 11–16 August 2024; Association for Computational Linguistics: Bangkok, Thailand, 2024; pp. 11160–11174.

- Zhang, Q.; Singh, C.; Liu, L.; Liu, X.; Yu, B.; Gao, J.; Zhao, T. Tell your model where to attend: Post-hoc attention steering for LLMs. In Proceedings of the Twelfth International Conference on Learning Representations, Vienna, Austria, 7–11 May 2024.

- Shazeer, N.; Mirhoseini, A.; Maziarz, K.; Davis, A.; Le, Q.; Hinton, G.; Dean, J. Outrageously large neural networks: The sparsely-gated mixture-of-experts layer. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

- Li, W.; Zhang, Y.; Luo, G.; Yu, D.; Ji, R. Training long-context LLMs efficiently via chunk-wise optimization. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2025, Vienna, Austria, 27 July–1 August 2025; Association for Computational Linguistics: Vienna, Austria, 2025; pp. 2691–2700.

- Li, M.; Xu, L.H.; Tan, Q.; Cao, T.; Liu, Y. Sculptor: Empowering LLMs with Cognitive Agency via Active Context Management. arXiv 2025, arXiv:2508.04664.

- Behrouz, A.; Li, Z.; Kacham, P.; Daliri, M.; Deng, Y.; Zhong, P.; Razaviyayn, M.; Mirrokni, V. Atlas: Learning to optimally memorize the context at test time. arXiv 2025, arXiv:2505.23735.

- Yang, M.H.; Li, X.B.; Zeng, Q.; Li, X. The technical practice of large language models in the upstream business of oil and gas. China CIO News 2024, 61–65.

- Yang, M.H.; Li, X.B.; Liu, X.B.; Zeng, Q. The Application and Challenges of Large Artificial Intelligence Models in the Field of Oil and Gas Exploration and Development. Pet. Sci. Technol. Forum. 2024, 43, 107–113, 125.

- Wei, Q.; Sun, H.; Xu, Y.; Pang, Z.; Gao, F. Exploring the application of large language models based AI agents in leakage detection of natural gas valve chambers. Energies 2024, 17, 5633.

- Eckroth, J.; Gipson, M.; Boden, J.; Hough, L.; Elliott, J.; Quintana, J. Answering natural language questions with OpenAI’s GPT in the petroleum industry. In Proceedings of the SPE Annual Technical Conference and Exhibition, San Antonio, TX, USA, 16–18 October 2023; SPE: Richardson, TX, USA, 2023; p. D031S032R005.

- Gong, Z.; Lv, A.; Guan, J.; Yan, J.; Wu, W.; Zhang, H.; Huang, M.; Zhao, D.; Yan, R. Mixture-of-modules: Reinventing transformers as dynamic assemblies of modules. In Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing, Miami, FL, USA, 12–16 November 2024; pp. 20924–20938.

- Merullo, J.; Eickhoff, C.; Pavlick, E. Circuit component reuse across tasks in transformer language models. In Proceedings of the Twelfth International Conference on Learning Representations, Vienna, Austria, 7–11 May 2024.

- Olah, C.; Cammarata, N.; Schubert, L.; Goh, G.; Petrov, M.; Carter, S. Zoom in: An introduction to circuits. Distill 2020, 5, e00024.001.

- Touvron, H.; Martin, L.; Stone, K.; Albert, P.; Almahairi, A.; Babaei, Y.; Bashlykov, N.; Batra, S.; Bhargava, P.; Bhosale, S.; et al. Llama 2: Open foundation and fine-tuned chat models. arXiv 2023, arXiv:2307.09288.

- Cao, H.; Wu, Y.; Cai, Y.; Zhao, X.; Ou, Z. Improving End-to-End Training of Retrieval-Augmented Generation Models via Joint Stochastic Approximation. arXiv 2025, arXiv:2508.18168.

- Shi, Z.; Yan, L.; Sun, W.; Feng, Y.; Ren, P.; Ma, X.; Wang, S.; Yin, D.; de Rijke, M.; Ren, Z. Direct retrieval-augmented optimization: Synergizing knowledge selection and language models. arXiv 2025, arXiv:2505.03075.

- Yang, P.; Li, X.; Hu, Z.; Wang, J.; Yin, J.; Wang, H.; He, L.; Yang, S.; Wang, S.; Huang, Y.; et al. HeteRAG: A Heterogeneous Retrieval-augmented Generation Framework with Decoupled Knowledge Representations. arXiv 2025, arXiv:2504.10529.

- Cong, Y.; Akash, P.S.; Wang, C.; Chang, K.C.C. Query optimization for parametric knowledge refinement in retrieval-augmented large language models. arXiv 2024, arXiv:2411.07820.

- Wang, L.; Chen, H.; Yang, N.; Huang, X.; Dou, Z.; Wei, F. Chain-of-Retrieval Augmented Generation. arXiv 2025, arXiv:2501.14342.

- Ho, X.; Nguyen, A.K.D.; Sugawara, S.; Aizawa, A. Constructing a multi-hop QA dataset for comprehensive evaluation of reasoning steps. In Proceedings of the 28th International Conference on Computational Linguistics, Barcelona, Spain, 8–13 December 2020; International Committee on Computational Linguistics: Barcelona, Spain, 2020; pp. 6609–6625.

- Trivedi, H.; Balasubramanian, N.; Khot, T.; Sabharwal, A. ♫ MuSiQue: Multihop questions via single-hop question composition. Trans. Assoc. Comput. Linguist. 2022, 10, 539–554.

- Dasigi, P.; Lo, K.; Beltagy, I.; Cohan, A.; Smith, N.A.; Gardner, M. A dataset of information-seeking questions and answers anchored in research papers. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Online, 6–11 June 2021; pp. 4599–4610.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).