Abstract

Fire detection and extinguishing systems are critical for safeguarding lives and minimizing property damage. These systems are especially vital in combating forest fires. In recent years, several forest fires have set records for their size, duration, and level of destruction. Traditional fire detection methods, such as smoke and heat sensors, have limitations, prompting the development of innovative approaches using advanced technologies. Utilizing image processing, computer vision, and deep learning algorithms, we can now detect fires with exceptional accuracy and respond promptly to mitigate their impact. In this article, we conduct a comprehensive review of articles from 2013 to 2023, exploring how these technologies are applied in fire detection and extinguishing. We delve into modern techniques enabling real-time analysis of the visual data captured by cameras or satellites, facilitating the detection of smoke, flames, and other fire-related cues. Furthermore, we explore the utilization of deep learning and machine learning in training intelligent algorithms to recognize fire patterns and features. Through a comprehensive examination of current research and development, this review aims to provide insights into the potential and future directions of fire detection and extinguishing using image processing, computer vision, and deep learning.

Keywords:

artificial intelligence; deep learning; detection; fire; flame; forest fire; smoke; wildfire

1. Introduction

Forests cover approximately 4 billion hectares of the world’s landmass, roughly equivalent to 30% of the total land [1]. The preservation of forests is essential for maintaining biodiversity on a global scale. Wildfires are destructive events that could adversely change the balance of our planet and threaten our future [2]. Wildfires have long-term devastating effects on ecosystems, such as disruption of vegetation dynamics, greenhouse gas emissions, loss of wildlife habitat, and destruction of land cover. The early detection and rapid extinguishing of fires are crucial in minimizing the loss of life and property [3]. Traditional fire detection systems that rely on smoke or heat detectors suffer from low accuracy and long response times [4]. However, advancements in image processing (IP), computer vision (CV), and deep learning (DL) have opened up new possibilities for more effective and efficient fire detection and extinguishing systems [5]. These systems utilize cameras and sophisticated algorithms to analyze visual data in real time, enabling early fire detection and efficient fire suppression strategies.

In most of the literature, researchers have mainly posed their problem under the paradigm of fire detection [6,7,8]. However, some researchers have also explored different aspects of the phenomenon of combustion, i.e., smoke [9,10], flame [11], and fire [12], with the intent to effectively determine the threats due to fire. In summary, fire is the overall phenomenon of combustion involving the rapid oxidation of a fuel source, while flame represents the visible, gaseous part of a fire that emits light and heat. Smoke, on the other hand, is the collection of particles and gases released during a fire, which can be toxic and pose health hazards [13]. In this paper, we review automatic fire, flame, and smoke detection using deep learning and image processing over the last eleven years, i.e., from 2013 to 2023.

Image processing techniques enable the extraction of relevant features from images or video streams that are captured by cameras [14]. This includes analyzing color, texture, and spatial information to identify potentially fire-related patterns [15]. By applying algorithms such as edge detection, segmentation, and object recognition, fire can be detected and differentiated from non-fire elements with a high degree of accuracy [16,17].
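As a rough illustration of color-based feature extraction, the sketch below flags candidate fire pixels with a simple RGB rule and reports the fraction of the image they cover. The rule (red channel bright, with R ≥ G ≥ B), the threshold value, and the function names are illustrative assumptions, not a method taken from the reviewed papers.

```python
def is_fire_colored(pixel, red_threshold=180):
    """Assumed heuristic: fire-like pixels are bright in red, with R >= G >= B."""
    r, g, b = pixel
    return r >= red_threshold and r >= g >= b


def fire_mask(image, red_threshold=180):
    """Return a binary mask (rows of 0/1) for an image given as rows of (R, G, B) tuples."""
    return [[1 if is_fire_colored(p, red_threshold) else 0 for p in row] for row in image]


def fire_fraction(mask):
    """Fraction of pixels flagged as fire-colored."""
    total = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / total if total else 0.0


# Tiny 2x3 synthetic image: flame tones mixed with sky, foliage, and shadow pixels.
image = [
    [(255, 140, 0), (250, 200, 40), (90, 140, 220)],
    [(30, 120, 40), (230, 90, 20), (20, 20, 20)],
]
mask = fire_mask(image)
print(mask)
print(fire_fraction(mask))
```

A rule like this is cheap but easily fooled by fire-colored objects such as sunsets or vehicle lights, which is one reason the reviewed work combines color with texture, motion, or learned features.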

Computer vision can play a crucial role in early fire detection by utilizing image and video processing techniques to analyze visual data and identify signs of fire [18]. CV algorithms can identify patterns based on features such as color, shape, and motion [19,20]. CV with thermal imaging technology can detect fires based on temperature variations [21,22]. It is important to note that CV combined with other fire safety measures, such as smoke detectors, heat sensors, and human intervention, enhances early fire detection. DL combined with CV can also effectively recognize various fire characteristics, including flames, smoke patterns, and heat signatures [23]. It enables more precise and reliable fire detection, even in challenging environments with variable lighting conditions or occlusions.

Deep learning, a subset of machine learning (ML), has revolutionized the field of CV by enabling the training of highly complex and accurate models [24]. Deep learning models, such as convolutional neural networks (CNNs), can be trained on vast amounts of labeled fire-related images and videos, learning to automatically extract relevant features and classify fire instances with remarkable precision [25,26]. These models can continuously improve their performance through iterative training, enhancing their ability to detect fires and reducing false alarms [27].

This work provides a systematic review of the most representative fire and/or smoke detection and extinguishing systems, highlighting the potential of image processing, computer vision, and deep learning. Based on three types of inputs, i.e., camera images, videos, and satellite images, the widely used methods for identifying active fire, flame, and smoke are discussed. As research and development continue to advance these technologies, future fire extinguishing systems promise to provide robust protection against the devastating effects of fires, ultimately saving lives and minimizing property damage.

The remainder of this paper is structured as follows: Section 2 presents the search strategy and selection criteria. Section 3 details the broadly defined classes for fire and smoke detection. Section 4 presents an analysis of the selected topic areas, discussing representative publications from each area in detail. In Section 5, we discuss the factors critical for forest fire, followed by the recommendations for future research in Section 6. Lastly, Section 7 concludes this study.

2. Methodology: Search Strategy and Selection Criteria

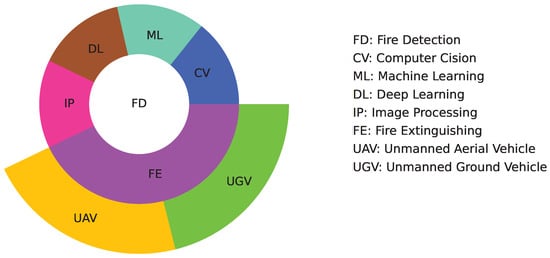

The PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) [28] framework defined the methodology for this systematic review. PRISMA provides a standardized approach for conducting and reporting systematic reviews, ensuring that all relevant studies are identified and assessed comprehensively and transparently. This review aims to understand the approaches used to detect or extinguish forest fires. The required data for this systematic review were gathered from two renowned sources, Web of Science™ and IEEE Xplore®, and the review was limited to peer-reviewed journal articles published from 2013 to 2023. Web of Science™ is a research database that offers a wide range of scholarly articles across many disciplines. It includes citation indexing, which helps track the impact of research. IEEE Xplore® is a digital library focused on electrical engineering, electronics, computer science, and other related fields. It provides access to technical literature such as journal articles, conference proceedings, and technical standards. We used the EndNote 20.6 reference manager, a software tool by Clarivate, to organize and manage the references collected during the review process. EndNote helped us to classify the references, filter relevant studies, and screen for duplicates, supporting a comprehensive and systematic review of the literature. This tool is widely used in academic research to streamline the process of citation management and bibliography creation. “Fire Detection” was used in conjunction with “Computer Vision”, “Machine Learning”, “Image Processing”, and “Deep Learning” to define the primary search string. To identify the applications of fire detection, “Fire Extinguishing” combined with “UAV” and “UGV” was used to define the secondary search string. The selected areas of research, along with their distribution, are depicted in Figure 1.

Figure 1. Selected areas for research.

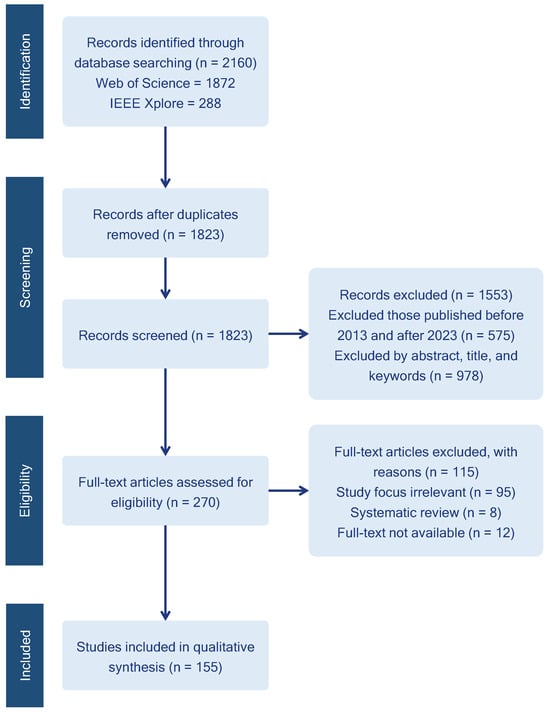

Figure 2 illustrates the PRISMA framework used to identify and select the most relevant literature. The search with the primary keywords retrieved 1872 records in Web of Science™ and 288 records in IEEE Xplore®. Data from both sources were merged and, after duplicate removal, 1823 records were left. By excluding all records published before 2013 and after 2023, and by applying the search string (“Forest Fire” “Wildfire”) (“detection” “recognition” “extinguish”) in the abstract, title, and keyword fields, only 270 were retained. A further screening retained only the publications most relevant to our scope and excluded those for which the full text was not accessible, leaving a total of 155 journal papers from the most relevant journals for detailed review.

Figure 2. PRISMA framework.
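The record counts in the selection process can be tallied stage by stage. The short sketch below only re-derives figures from the numbers stated in the text (for example, how many records each stage removed); the stage labels and the `prisma_flow` helper are illustrative names, not part of the PRISMA specification.

```python
def prisma_flow(stages):
    """Given ordered (label, remaining) pairs, return (label, remaining, removed) per stage."""
    flow = []
    previous = None
    for label, remaining in stages:
        removed = 0 if previous is None else previous - remaining
        flow.append((label, remaining, removed))
        previous = remaining
    return flow


# Counts taken from the text: 1872 (Web of Science) + 288 (IEEE Xplore) records identified.
identified = 1872 + 288
stages = [
    ("identified", identified),
    ("after duplicate removal", 1823),
    ("after year and keyword filter", 270),
    ("included in review", 155),
]

for label, remaining, removed in prisma_flow(stages):
    print(f"{label}: {remaining} remaining, {removed} removed")
```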

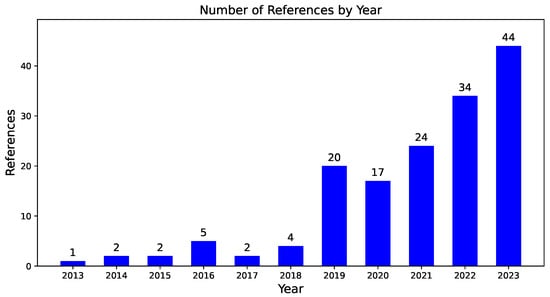

Figure 3 illustrates the number of journal publications per year from 2013 to 2023. The increasing trend after 2018 indicates growing interest in this area of study. The five journals that published the most papers on this topic are Remote Sensing (21), Forests (14), Sensors (13), Fire Technology (9), and IEEE Access (9). Together, these journals account for almost 43% of all publications.

Figure 3. Distribution of the number of publications over the period of 2013 to 2023.

3. Research Topics

While conducting our literature search, we tried to cover all aspects contributing to the overall topic. Though these can be considered distinct research topics, from the perspective of deep learning, they complement one another.

- Image Processing: Research that focuses on fire detection based on the features extracted after processing the image [29,30].

- Computer Vision: Research focusing on the algorithms to understand and interpret the visual data to identify fire [31].

- Deep Learning: Research associated with the models that can continuously enhance their ability to detect fires [32].

Based on the literature search, four main groups were formulated to classify the publication results. This classification is mainly based on the research topic, theme, title, practical implication, and keywords. Each publication in our search fell broadly into one of these categories:

- Fire: Research that addresses the methods capable of identifying the forest fire in real-time or based on datasets [33,34].

- Smoke: Research focusing on the methods to identify smoke with its different color variations [35,36].

- Fire and Flame: Research associated with the methods that can identify fire and flame [37].

- Fire and Smoke: Research that explores the methods focusing on the accurate determination of fire and smoke [38].

Another category has been introduced that overlaps with the categories defined above but is oriented toward applications with the help of robots.

- Applications: Research that addresses a robot’s ability not only to detect fire but also to extinguish it [39,40,41].

4. Analysis

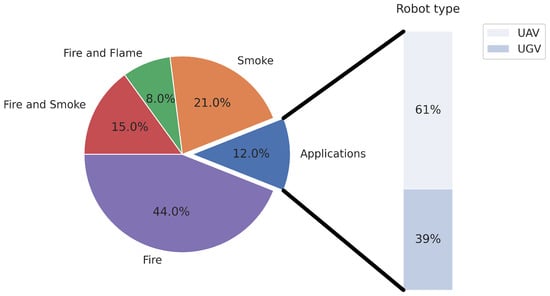

The distribution of various publications in selected categories is illustrated in Figure 4. From the defined categories, fire detection was the most dominant class containing 68 (44%) of the 155 total publications, followed by smoke detection with 33 (21%), fire and smoke with 23 (15%), applications with 18 (12%), and fire and flame with 13 (8%). The data highlight that fire detection and monitoring are foundational areas in the field, while practical applications for fire extinguishing, particularly those involving unmanned ground vehicles (UGVs) and unmanned aerial vehicles (UAVs), remain less developed. Only seven articles focused on UGVs and 11 on UAVs for fire extinguishing, indicating that on-field utilization in this area is still in its early stages.

Figure 4. Publications in selected categories.
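The category shares quoted above follow directly from the publication counts. The snippet below is a simple check of that arithmetic, using only the counts stated in the text; the dictionary layout is an illustrative choice.

```python
# Publication counts per category, as stated in the text.
categories = {
    "Fire": 68,
    "Smoke": 33,
    "Fire and Smoke": 23,
    "Applications": 18,
    "Fire and Flame": 13,
}

total = sum(categories.values())

# Percentage share of each category, rounded to the nearest whole percent.
shares = {name: round(100 * count / total) for name, count in categories.items()}

print(total)
print(shares)
```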

Deep learning has been successfully applied to fire, flame, and smoke detection tasks, where its ability has been utilized to learn complex patterns and features from large amounts of data [42,43]. The primary task in fire detection is dataset collection, which consists of a large dataset of images or videos containing both fire and non-fire scenes [44]. The collected data need to be preprocessed to ensure consistency and quality. This may involve resizing images, normalizing pixel values, removing noise, and augmenting the dataset by applying transformations like rotation, scaling, or flipping [45]. Afterward, a deep learning model needs to be designed and trained to perform fire, smoke, or flame detection. CNNs are commonly used for this purpose due to their effectiveness in image-processing tasks [46]. The architecture can be customized based on the specific requirements and complexity of the detection task [47].
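The preprocessing and augmentation steps mentioned above can be sketched on a toy grayscale image stored as nested lists. This is a minimal illustration using only the Python standard library; real pipelines typically rely on array or image libraries, and the helper names here are assumptions made for the example.

```python
def normalize(image, max_value=255):
    """Scale pixel values from [0, max_value] to [0, 1]."""
    return [[px / max_value for px in row] for row in image]


def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def rotate_90_clockwise(image):
    """Rotate the image by 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def augment(image):
    """Return the original image together with a flipped and a rotated variant."""
    return [image, flip_horizontal(image), rotate_90_clockwise(image)]


image = [
    [0, 255],
    [51, 102],
]
print(normalize(image))
for variant in augment(image):
    print(variant)
```

Each augmented variant keeps the same label as its source image, so a dataset of fire and non-fire scenes grows without additional annotation effort.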

For all publications, we extracted some key information such as dataset, data type, method, objective, and achievement. One or two representative publications were picked from each category based on the annual citation count (ACC). The ACC is a metric that indicates the average number of citations per year since publication. The citation count was retrieved from the Web of Science™ as of July 2024. To qualify as a representative publication, each publication’s ACC should have a positive standard deviation, Std (ACC).
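A minimal sketch of the ACC computation is given below. The citation data are invented for illustration, the number of years is taken as the difference between a reference year and the publication year, and the selection threshold (mean plus one population standard deviation of the ACC values) is only one possible reading of the Std (ACC) criterion; all three are assumptions rather than the exact procedure used in this review.

```python
from statistics import mean, pstdev


def annual_citation_count(citations, pub_year, ref_year=2024):
    """Average citations per year since publication (at least one year is assumed)."""
    years = max(ref_year - pub_year, 1)
    return citations / years


def select_representatives(papers, ref_year=2024):
    """Return paper ids whose ACC exceeds mean + one standard deviation (assumed threshold)."""
    acc = {pid: annual_citation_count(c, y, ref_year) for pid, (c, y) in papers.items()}
    threshold = mean(acc.values()) + pstdev(acc.values())
    return [pid for pid, value in acc.items() if value > threshold]


# Hypothetical (citations, publication year) pairs, not data from the reviewed papers.
papers = {"A": (90, 2021), "B": (20, 2019), "C": (12, 2018), "D": (8, 2020)}
representatives = select_representatives(papers)
print(representatives)
```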

4.1. Fire

It is important to note that deep learning models for fire detection rely heavily on the quality and diversity of the training data. Obtaining a comprehensive and representative dataset is crucial for achieving accurate and robust fire detection performance. Past research efforts related to fire detection are listed in Table 1 in terms of the dataset, method, objectives, and achievements.

Table 1. List of the past work related to fire detection.

- Representative Publications:

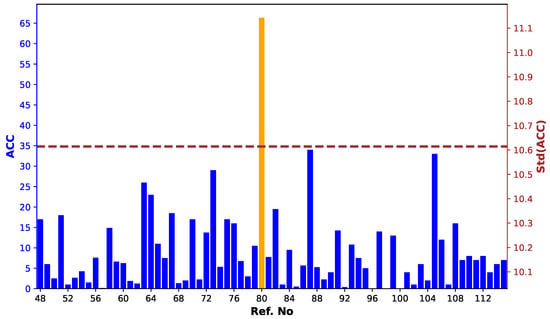

The annual citation count for all the papers listed in this category was calculated and is illustrated in Figure 5. The paper entitled “A Forest Fire Detection System Based on Ensemble Learning”, published in 2021, was selected from this category as a representative publication due to its highest ACC score [80]. In this work, the authors developed a forest fire detection system based on ensemble learning. First, two individual learners, YOLOv5 and EfficientDet, were integrated to accomplish fire detection. Secondly, another individual learner, EfficientNet, was introduced for learning global information to avoid false positives. The dataset used contains 2976 forest fire images and 7605 non-fire images. Sufficient training sets enabled EfficientNet to show good discriminability between fire objects and fire-like objects, with 99.6% accuracy on 476 fire images and 99.7% accuracy on 676 fire-like images.

Figure 5. ACC and its standard deviation (- - -) for fire.

4.2. Smoke

Deep learning models learn to extract relevant features from input data automatically. During training, the model can learn discriminative features from smoke images that are independent of color. By focusing on shape, texture, and spatial patterns rather than color-specific cues, the model becomes less sensitive to color variations and can detect smoke effectively. Table 2 highlights the research focused on smoke detection.

Table 2. List of the past works related to smoke detection.

- Representative Publications:

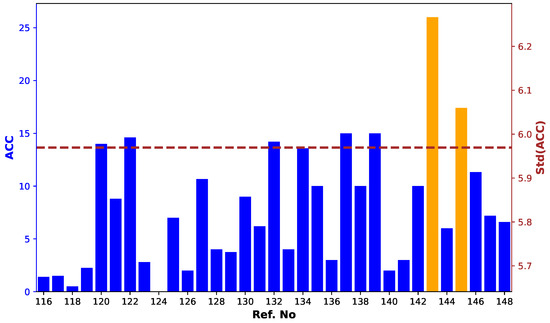

The ACC score for all the publications falling in the category was determined and is illustrated in Figure 6. Based on the plot, the two best performers were chosen from this category. A notable publication [143], titled ‘Learning Discriminative Feature Representation with Pixel-Level Supervision for Forest Smoke Recognition’, focuses on forest smoke recognition using a Pixel-Level Supervision Neural Network. The research employed non-binary pixel-level supervision to enhance model training, introducing a dataset of 77,910 images. To improve the accuracy of smoke detection, the study integrated the Detail-Difference-Aware Module to differentiate between smoke and smoke-like targets, the Attention-based Feature Separation Module to amplify smoke-relevant features, and the Multi-Connection Aggregation Method to enhance feature representation. The proposed model achieved a detection rate of 96.95%.

Figure 6. ACC and its standard deviation (- - -) for smoke.

The second representative publication, titled ‘SmokeNet: Satellite Smoke Scene Detection Using Convolutional Neural Network with Spatial and Channel-Wise Attention’ [145] and published in 2019, aimed to detect wildfire smoke using a large-scale satellite imagery dataset. It proposed a new CNN model, SmokeNet, which incorporates spatial and channel-wise attention for enhanced feature representation. The USTC_SmokeRS dataset, consisting of 6225 images across six classes (cloud, dust, haze, land, seaside, and smoke), served as the benchmark. The SmokeNet model achieved the best accuracy rate of 92.75% and a Kappa coefficient of 0.9130, outperforming other state-of-the-art models.
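For readers less familiar with the Kappa coefficient, the sketch below computes Cohen's kappa from a confusion matrix and then shows how accuracy and kappa relate. The confusion matrix is invented for illustration. The last lines assume perfectly balanced classes and predictions across six classes (chance agreement of 1/6), which is an idealization and not a statement about the actual class distribution of USTC_SmokeRS; under that assumption, an accuracy of 92.75% corresponds to a kappa of about 0.913.

```python
def cohen_kappa(confusion):
    """Cohen's kappa from a square confusion matrix (rows: true class, columns: predicted)."""
    total = sum(sum(row) for row in confusion)
    observed = sum(confusion[i][i] for i in range(len(confusion))) / total
    row_totals = [sum(row) for row in confusion]
    col_totals = [sum(col) for col in zip(*confusion)]
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    return (observed - expected) / (1 - expected)


def kappa_from_accuracy(accuracy, chance_agreement):
    """Kappa when observed agreement and chance agreement are already known."""
    return (accuracy - chance_agreement) / (1 - chance_agreement)


# Hypothetical two-class confusion matrix (smoke vs. non-smoke).
example = [[45, 5], [10, 40]]
print(cohen_kappa(example))

# Idealized six-class case with balanced classes and predictions (assumption).
print(round(kappa_from_accuracy(0.9275, 1 / 6), 4))
```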

4.3. Fire and Flame

Deep learning models can integrate multiple data sources to improve fire and flame detection. In addition to visual data, other sources such as thermal imaging, infrared sensors, or gas sensors can be used to provide complementary information. By fusing these multi-modal inputs, the model can enhance its ability to detect fire and flame accurately, even in challenging conditions. The existing work related to fire and flame detection is presented in Table 3.

Table 3. List of the past works related to fire and flame detection.

- Representative Publications:

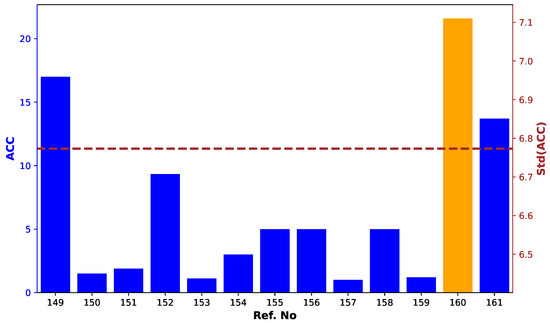

Through an ACC graph for this category, as shown in Figure 7, only the best performer was chosen. A representative publication [160], entitled ‘The fire recognition algorithm using dynamic feature fusion and IV-SVM classifier’ and published in 2019, was chosen. This work aimed to identify flame areas using a flame recognition model based on an Incremental Vector SVM classifier. It introduces flame characteristics in color space and employs dynamic feature fusion to remove image noise from SIFT features, enhancing feature extraction accuracy. The SIFT feature extraction method incorporates flame-specific color spatial characteristics, achieving a testing accuracy of 97.16%.

Figure 7. ACC and its standard deviation (- - -) for fire and flame.

4.4. Fire and Smoke

Deep learning models excel at learning hierarchical representations of data. They can learn features at different levels of abstraction, enabling them to capture both local and global patterns associated with fire and smoke. This enhances their ability to detect fire and smoke under various environmental conditions and appearances. A total of twenty-three publications have been identified in this category, as listed in Table 4.

Table 4. List of the past works related to fire and smoke detection.

- Representative Publications:

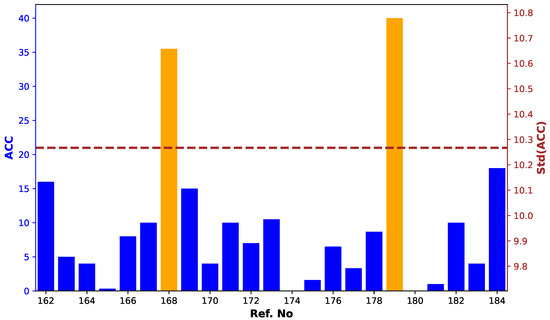

Based on the ACC graph shown in Figure 8, the top performer in terms of ACC in this category was the paper titled ‘Forest fire and smoke detection using deep learning-based learning without forgetting’ [179]. The authors utilized transfer learning to enhance the analysis of forest smoke in satellite images. Their study introduced a dataset of 999 satellite images and employed learning without forgetting (LwF) to train the network on a new task while preserving its pre-existing capabilities. Using the Xception model with LwF, the research achieved an accuracy of 91.41% on the BowFire dataset and 96.89% on the original dataset, demonstrating significant improvements in forest fire and smoke detection accuracy.

Figure 8. ACC and its standard deviation (- - -) for fire and smoke.

Based on the plot, Ref. [168] was the second-best performer, with an ACC of almost 35. This publication, entitled ‘Fast forest fire smoke detection using MVMNet’, was published in 2022. The paper proposed multi-oriented detection based on a value conversion-attention mechanism module and mixed-NMS for smoke detection. The authors built a forest fire multi-oriented detection dataset, which includes 15,909 images. The mAP and mAP50 achieved were 78.92% and 88.05%, respectively.
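To give a sense of what non-maximum suppression (NMS) does in a detection pipeline, the sketch below implements the standard greedy variant on axis-aligned boxes. It is not the mixed-NMS proposed in [168], whose details are not reproduced here; the box format `(x1, y1, x2, y2)`, the threshold, and the sample boxes are assumptions for illustration.

```python
def iou(a, b):
    """Intersection over union of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the highest-scoring box, drop boxes that overlap a kept box too much."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


# Two overlapping candidate smoke boxes and one separate box (hypothetical values).
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))
```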

4.5. Applications of Robots in Fire Detection and Extinguishing

Robots equipped with cameras or vision sensors can capture images or video footage of their surroundings. Deep learning models trained on fire datasets can analyze this visual input, enabling the robot to detect the presence of fire. CNNs are commonly used for image-based fire detection in robot systems.

Deep learning models can be employed to enhance the robot’s decision-making capabilities during fire extinguishing operations. By training the model on datasets that include fire dynamics, robot behavior, and firefighting strategies, the robot can learn to make informed decisions on approaches such as selecting the appropriate firefighting equipment, assessing the fire’s intensity, or planning extinguishing maneuvers. There exist very few examples where robots are utilized in actual fields for forest fire detection. To highlight the potential of robots in fire detection and extinguishing, indoor and outdoor scenarios, in addition to wildfires, are also included. Past research efforts related to fire detection and extinguishing with the help of robots are listed in Table 5.

Table 5. List of the past works related to the utilization of robots in fire detection and extinguishing.

- Representative Publications:

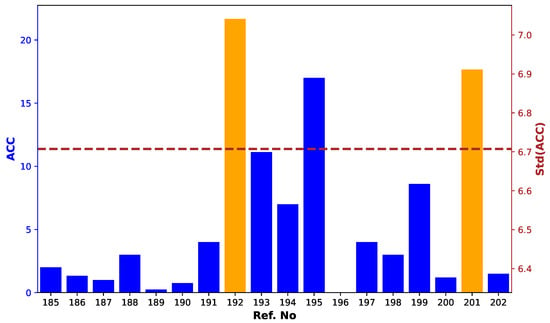

The ACC for papers in this category is illustrated in Figure 9. Two papers were chosen as representative publications from this category. One of the selected papers is entitled ‘The Role of UAV-IoT Networks in Future Wildfire Detection’. In this paper, a novel wildfire detection solution based on unmanned aerial vehicle-assisted Internet of Things (UAV-IoT) networks was proposed [192]. The main objectives were to study the performance and reliability of the UAV-IoT networks for wildfire detection and to present a guideline to optimize the UAV-IoT network to improve fire detection probability under limited system cost budgets. Discrete-time Markov chain analysis was utilized to compute the fire detection and false-alarm probabilities. Numerical results suggested that, given enough system cost, UAV-IoT-based fire detection can offer a faster and more reliable wildfire detection solution than state-of-the-art satellite imaging techniques.

Figure 9. ACC and its standard deviation (- - -) for applications of robots in fire detection and extinguishing.
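To illustrate how a discrete-time Markov chain can yield a detection probability, the sketch below uses a toy three-state chain: fire not yet sensed, fire sensed but not yet collected by a UAV, and fire reported (absorbing). This is not the model from [192]; the states, the per-slot probabilities `p_sense` and `p_collect`, and the function names are all assumptions chosen to keep the example small.

```python
def step(distribution, transition):
    """Advance a state distribution by one time slot using a row-stochastic matrix."""
    n = len(distribution)
    return [sum(distribution[i] * transition[i][j] for i in range(n)) for j in range(n)]


def detection_probability(p_sense, p_collect, slots):
    """Probability that a fire has been reported within the given number of time slots."""
    transition = [
        [1 - p_sense, p_sense, 0.0],      # not sensed -> stays, or a ground sensor triggers
        [0.0, 1 - p_collect, p_collect],  # sensed -> waits, or a UAV collects the alert
        [0.0, 0.0, 1.0],                  # reported (absorbing state)
    ]
    distribution = [1.0, 0.0, 0.0]
    for _ in range(slots):
        distribution = step(distribution, transition)
    return distribution[2]


for slots in (2, 3, 10):
    print(slots, detection_probability(0.5, 0.5, slots))
```

In a model of this kind, raising either per-slot probability (for example, by adding sensors or UAVs) increases the chance of detection within a fixed time budget, which is the kind of cost versus reliability trade-off the cited study examines.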

The second paper that was chosen is titled ‘A Survey on Robotic Technologies for Forest Firefighting: Applying Drone Swarms to Improve Firefighters’ Efficiency and Safety’ [201]. In this paper, a concept for deploying drone swarms in fire prevention, surveillance, and extinguishing tasks was proposed. The objectives included evaluating the effectiveness of drone swarms in enhancing operational efficiency and safety in firefighting missions, as well as in addressing the challenges reported by firefighters. The system utilizes a fleet of homogeneous quad-copters equipped for tasks such as surveillance, mapping, and monitoring. Moreover, the paper discussed the potential of this drone swarm system to improve firefighting operations and outlined challenges related to scalability, operator training, and drone autonomy.

5. Discussion

Fire, smoke, and flame detection and their extinguishing are considered challenging problems due to the complex behavior and dynamics of fire, which makes them difficult to predict and control. Based on the literature, we identified the following important factors.

5.1. Variability in Fire, Smoke, and Flame Types and Appearances

In our analysis, almost all articles were found to have utilized modern resources and technologies to make the proposed approaches as effective as possible. We found several articles in the literature that focused on variation based on the type, color, size, and intensity (Table 6).

Table 6. Methods of handling variations in fire, flame, and smoke.

Our analysis of forest fire detection and extinguishing systems underscores the significant advancements made in this field, particularly in leveraging modern resources and technologies such as deep neural networks (DNNs). These technologies have proven essential in addressing the variability in fire, smoke, and flame types, appearances, and intensities, enabling more accurate detection and response.

5.2. Response Time

The ability to detect fires early is crucial for prompt intervention and minimizing potential damage. Many studies have emphasized early detection, but there is often a lack of concrete evidence regarding the computational efficiency and real-world effectiveness of these methods, particularly in forest fire scenarios. A common issue is the lack of practical testing and transparency. For instance, the authors of [62] tested a Gaussian mixture model (GMM) to detect smoke signatures on plumes, achieving a processing rate of 18–20 fps, but they did not test it in real forest fire scenarios, limiting practical evidence. Similarly, the authors of [78] conducted tests with a controlled small fire but did not provide time metrics for real-time applicability. The authors in [164] utilized a dataset collected over 274 days from nine real surveillance cameras and mentioned “early detection” without specific metrics, making it difficult to assess practical effectiveness. In [78], the authors claimed to detect 78% of wildfires earlier than the VIIRS active fire product, but they did not include explicit time measurements, hindering a thorough evaluation of the method’s early-detection capabilities.

Some studies provided more concrete data on the speed and efficiency of their detection methods. For example, [73] used aerial image analysis with ensemble learning to achieve an inference time of 0.018 s, showcasing rapid detection potential. The multi-oriented detection method in [168] achieved a frame rate of 122 fps, which is lower than the 156 fps reported for YOLOv5, with the two methods also differing in mean average precision (mAP). Another study used a dataset of 1135 images, reporting an inference time of 2 s for forest fire segmentation using vision transformers [70]. The deep neural network-based approach (AddNet) saved 12.4% time compared to a regular CNN, and it was tested on a dataset of 4000 images [81]. The performance of EfficientDet, YOLOv3, SSD, and Faster R-CNN was evaluated on a dataset of 17,840 images, with YOLOv3 being the fastest at 27 fps [162]. The method in [174], evaluated with a dataset of 16,140 images, achieved a processing time per image of 0.42 s, which was claimed to be four times faster than the compared models.
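Because the studies above report speed either as frames per second or as seconds per image, it helps to put the figures on a common scale. The sketch below converts between the two; the numbers are those quoted in the text, while the helper names are illustrative. The results are not directly comparable across studies, since hardware, image resolution, and task differ.

```python
def fps_from_latency(seconds_per_image):
    """Convert a per-image processing time (seconds) into frames per second."""
    return 1.0 / seconds_per_image


def latency_ms_from_fps(fps):
    """Convert frames per second into per-image processing time in milliseconds."""
    return 1000.0 / fps


# Per-image times quoted in the text, keyed by citation number.
latencies_s = {"[73]": 0.018, "[70]": 2.0, "[174]": 0.42}
for ref, seconds in latencies_s.items():
    print(ref, round(fps_from_latency(seconds), 2), "fps")

# Frame rates quoted in the text, keyed by citation number.
frame_rates = {"[168]": 122, "[162] YOLOv3": 27}
for ref, fps in frame_rates.items():
    print(ref, round(latency_ms_from_fps(fps), 1), "ms per image")
```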

Although “early detection” is a frequently used term, specific, quantifiable metrics to support these claims are often lacking. The reviewed studies highlight various methods and technologies, but the need for comprehensive, real-world testing and transparent reporting remains.

5.3. Environmental Context and Adaptability

The effectiveness of fire detection systems under various environmental conditions is critical for their accuracy and reliability. Environmental factors such as weather, terrain, and other influences can significantly impact performance, leading to false positives or missed detection.

Environmental factors like cloud cover and weather conditions pose significant challenges for fire detection systems. For example, [75] achieved a 92% detection rate in clear weather but only 56% in cloudy conditions using multi-sensor satellite imagery from Sentinel-1 and Sentinel-2. Similarly, [78] utilized geostationary weather satellite data and proposed max aggregation to reduce cloud and smoke interference, enhancing detection accuracy. Not all studies addressed varying weather conditions comprehensively. Ref. [150] used an unsupervised method without specific solutions for different forecast conditions, demonstrating a lack of robustness in dynamic environments. Additionally, [115] highlighted that wildfire detection probability by MODIS is significantly influenced by factors such as daily relative humidity, wind speed, and altitude, underscoring the need for adaptable detection systems.

False positives are a critical issue in fire detection systems as they can lead to unnecessary alarms and resource deployment. Various strategies have been employed to mitigate this issue. For instance, [72] proposed dividing detected regions into blocks and using multidimensional texture features with a clustering approach to filter out false positives accurately. This method focuses on improving the specificity of the detection system. Other approaches include threshold optimization, as seen in [57], where fires with more than a 30% confidence level were selected to reduce false alarms in the MODIS14 dataset. Ref. [62] attempted to discriminate between smoke, fog, and clouds by converting the RGB color space to hue, saturation, and luminance, though the study lacked a thorough evaluation and comparison of results.
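Two of the strategies mentioned above, confidence thresholding and color-space conversion, are simple enough to sketch with the Python standard library. The detection records and their field names are hypothetical, and the conversion uses `colorsys.rgb_to_hls`, which returns hue, lightness, and saturation in that order; neither snippet reproduces the actual pipelines of [57] or [62].

```python
import colorsys


def filter_by_confidence(detections, min_confidence=30):
    """Keep only detections whose confidence (in percent) exceeds the threshold."""
    return [d for d in detections if d["confidence"] > min_confidence]


def rgb_to_hsl(rgb):
    """Convert an 8-bit (R, G, B) tuple to (hue in degrees, saturation, lightness)."""
    r, g, b = (channel / 255 for channel in rgb)
    h, l, s = colorsys.rgb_to_hls(r, g, b)
    return h * 360, s, l


# Hypothetical active-fire detections with a confidence value in percent.
detections = [
    {"id": 1, "confidence": 25},
    {"id": 2, "confidence": 31},
    {"id": 3, "confidence": 80},
]
print([d["id"] for d in filter_by_confidence(detections)])

# Gray tones typical of smoke, fog, or cloud have zero saturation; a pure red has full saturation.
print(rgb_to_hsl((128, 128, 128)))
print(rgb_to_hsl((255, 0, 0)))
```

Saturation and lightness separate colorful flames from gray regions well, but smoke, fog, and cloud all sit near zero saturation, which is consistent with the difficulty of telling them apart by color alone.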

Combining traditional and deep learning methods has shown promise in improving detection accuracy. Ref. [121] integrated a hand-designed smoke detection model with a deep learning model, successfully reducing the false negative and false positive rates, thereby enhancing smoke recognition accuracy. The authors in [147] addressed the challenge of non-smoke images containing features similar to smoke, such as colors, shapes, and textures, by proposing multiscale convolutional layers for scale-invariant smoke recognition.

Detection in fog or dust conditions presents additional challenges. The authors in [151] compared their approach with other methods, including SVM, Bayes classifier, fuzzy c-means, and Back Propagation Neural Network, and they demonstrated the lowest false alarm rate in wildfire smoke detection under heavy fog. Further advancements include the use of quasi-dynamic features and dual tree-complex wavelet transform with elastic net processing, as proposed by [177], to handle disturbances like fog and haze. Similarly, [148] developed a deep convolutional neural network to address variations in image datasets, such as clouds, fog, and sandstorms, achieving an average accuracy of 97%. However, they noted a performance degradation when testing on wildfire smoke compared to nearby smoke, indicating the need for more specialized training datasets.

5.4. Extinguishing Efficiency

Most of the development of firefighting robots has mainly focused on indoor and smooth outdoor environments, limiting their use in rugged terrains like forests. These robots are designed to assist in firefighting, but their effectiveness in actual forest environments is largely untested. Most existing firefighting UGVs are suited for smooth surfaces and controlled conditions, such as urban areas, and are equipped with fire suppression systems and sensors. However, they are not optimized for the unpredictable conditions of forests.

Some pioneering efforts are being made to develop technologies specifically for forest environments. For instance, a UAV platform with a 600-L payload capacity and equipped with thermographic cameras and navigation systems has been proposed, but it has not been fully tested in real-world conditions [198]. Another study explored the use of fire extinguishing balls deployed from unmanned aircraft systems, though practical effectiveness remained uncertain due to limited integration evidence [199,200]. Research has also focused on robotized surveillance with conventional, multi-spectral, and thermal cameras, primarily for situational awareness and early detection [201]. However, there is a gap in integrating autonomous systems for direct fire suppression, with most efforts centered on surveillance rather than active firefighting.

While there are promising developments, forest firefighting robots are still in the early stages of research and development. Most current technologies are designed for controlled environments and have not been extensively tested in forest conditions. Therefore, their efficiency and practical effectiveness cannot be validated due to a lack of evidence and comprehensive testing.

5.5. Compliance and Standards

The use of UAVs for forest fire detection and extinguishing offers advantages like rapid deployment, real-time data acquisition, and access to hard-to-reach areas. However, integrating UAVs into these applications presents challenges, particularly regarding compliance with regional regulations and safety standards. For instance, in Canada, UAV operators must obtain a pilot license, maintain a line of sight with the UAV, and avoid flying near forest fires [130]. These regulations, while essential for safety, can limit the effectiveness and operational scope of UAV-based systems. Our review found a lack of focus on developing UAV hardware that complies with these regulatory frameworks, highlighting the need for compliant technologies that can operate safely and legally across different regions.

6. Recommendations for Future Research

A review of the current literature on forest fire detection and extinguishing systems revealed several key areas where further research and development are needed. Addressing these gaps will not only enhance the effectiveness of these systems but also ensure their safe and compliant integration into existing fire management frameworks. Below are three primary gaps that were identified, along with corresponding recommendations for future research.

6.1. Recommendation 1: Integration of Real-Time Data Processing and Decision-Making Algorithms

Gap: Current research often focuses on the capabilities of UAV systems for data collection, but places little emphasis on the integration of real-time data processing and decision-making algorithms [82,130]. This integration is crucial for enabling UAVs to respond promptly and accurately to detected fires, especially in rapidly changing environments.

Recommendation: Future research should concentrate on developing and integrating advanced algorithms capable of real-time data processing [174] and decision making [202]. This includes machine learning and AI techniques that can analyze sensor data on-the-fly, identify potential fire hazards, and make autonomous decisions regarding navigation and intervention. Researchers should explore how these algorithms can be implemented efficiently on UAV platforms, considering constraints like computational power and energy consumption [110,169].
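One lightweight way to turn per-frame detections into an on-board decision can be sketched as a sliding-window vote. The Python snippet below is only an illustration under stated assumptions: it presumes that some detector already produces a fire score between 0 and 1 for each frame, and the class name, window size, threshold, and example scores are all hypothetical rather than drawn from the reviewed literature.

```python
from collections import deque


class TemporalVoteFilter:
    """Raise an alert only when enough recent frames look like fire."""

    def __init__(self, window=5, min_hits=3, threshold=0.5):
        if not 0 < min_hits <= window:
            raise ValueError("min_hits must be between 1 and window")
        self.threshold = threshold
        self.min_hits = min_hits
        # A bounded deque drops the oldest frame result automatically.
        self.recent = deque(maxlen=window)

    def update(self, score):
        """Add one per-frame fire score; return True if an alert should be raised."""
        self.recent.append(score >= self.threshold)
        return sum(self.recent) >= self.min_hits


# Hypothetical per-frame scores from an on-board detector (illustrative only).
frame_scores = [0.1, 0.7, 0.2, 0.8, 0.9, 0.95, 0.3, 0.2, 0.1, 0.1]
vote = TemporalVoteFilter(window=5, min_hits=3, threshold=0.5)
alerts = [vote.update(s) for s in frame_scores]
print(alerts)
```

A filter of this kind stores only a handful of booleans and does a few additions per frame, which is the sort of trade-off that matters when computational power and energy are limited; the cost is a short delay before the first alert.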

6.2. Recommendation 2: Effectiveness and Autonomy in Real-World Conditions

Gap: Although numerous UAV systems have been proposed for forest fire detection and extinguishing, many have not been extensively tested or validated in real-world conditions [65,73,198]. This lack of field testing raises concerns about the practical effectiveness, functionality, and autonomy of these systems in the diverse and challenging environments typical of forest fires.

Recommendation: There is a need for comprehensive field trials and simulations that replicate the conditions of actual forest fires. Future research should focus on developing and testing UAV systems in varied and dynamic environments to assess their performance in detecting and responding to fires. This includes testing the systems’ navigation capabilities, sensor accuracy, and overall operational reliability.

6.3. Recommendation 3: Human–Robot Interactions and Collaboration

Gap: While UAVs offer advanced surveillance and early detection capabilities, there is limited research on how these systems can effectively interact and collaborate with human firefighters. Our analysis found no article that discusses HRI in the context of forest fires. Ensuring seamless HRI is crucial for optimizing the use of UAVs in firefighting, including coordinating actions with ground teams and ensuring the safety and efficiency of operations.

Recommendation: Future research should explore the development of systems and protocols that facilitate effective HRI in the context of forest firefighting. This includes designing intuitive interfaces and communication systems that allow human operators to easily control and monitor UAVs. Additionally, research should focus on developing collaborative frameworks where UAVs and human firefighters can work together, leveraging each other’s strengths. For example, UAVs can provide real-time aerial data to ground teams, enhancing situational awareness and guiding decision-making processes [58]. Studies should also address the psychological and ergonomic aspects of HRI, ensuring that the introduction of UAVs does not overwhelm or distract human operators but rather complements their efforts.

7. Conclusions

Automatic fire detection in forests is a critical aspect of modern wildfire management and prevention. In this paper, following the PRISMA framework, we surveyed a total of 155 journal papers published between 2013 and 2023 that concentrated on fire detection using image processing, computer vision, deep learning, and machine learning. The reviewed literature was classified into four main categories: fire, smoke, fire and flame, and fire and smoke. We also categorized the literature based on its field application: fire detection, fire extinguishing, or a combination of both. We observed an exponential increase in the number of publications from 2018 onward; however, very limited research has been conducted on the use of robots for the detection and extinguishing of fire in hazardous environments. We predict that, with the increasing number of forest fire incidents and the growing popularity of robots, the trend toward autonomous systems for fire detection and extinguishing will continue to grow. We hope that this work can serve as a guidebook for researchers who are looking for recent developments in forest fire detection using deep learning and image processing to perform further research in this domain.

Author Contributions

B.Ö.: conceptualization, methodology, formal analysis, and writing–original draft preparation; M.S.A.: methodology, investigation, visualization, and writing–review and editing; M.U.K.: conceptualization, methodology, investigation, visualization, writing–review and editing, and supervision. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this study:

| Abbreviation | Definition |
| --- | --- |
| AAFLM | Attention-Based Adaptive Fusion Residual Module |
| AAPF | Auto-Organization, Adaptive Frame Periods |
| ADE-Net | Attention-based Dual-Encoding Network |
| AERNet | An Effective Real-time Fire Detection Network |
| AFM | Attention Fusion Module |
| AFSM | Attention-Based Feature Separation Module |
| AGE | Attention-Guided Enhancement |
| AMP | Automatic Mixed Precision |
| ANN | Artificial Neural Network |
| ASFF | Adaptively Spatial Feature Fusion |
| AUROC | Area Under the Receiver Operating Characteristic Curve |
| BNN | Bayesian Neural Network |
| BiFPN | Bidirectional Feature Pyramid Network |
| BPNN | Back Propagation Neural Network |
| CA | Coordinate Attention |
| CARAFE | Content-Aware Reassembly of Features |
| CBAM | Convolutional Block Attention Module |
| CCDC | Continuous Change Detection and Classification |
| CEP | Complex Event Processing |
| CIoU | Complete Intersection over Union |
| CoLBP | Co-Occurrence of Local Binary Pattern |
| DARA | Dual Fusion Attention Residual Feature Attention |
| DBN | Deep Belief Network |
| DCNN | Deep Convolutional Neural Network |
| DDAM | Detail-Difference-Aware Module |
| DETR | Detection Transformer |
| DPPM | Dense Pyramid Pooling Module |
| DTMC | Discrete-Time Markov Chain |
| ECA | Efficient Channel Attention |
| ELM | Extreme Learning Machine |
| ESRGAN | Enhanced Super-Resolution Generative Adversarial Network |
| FCN | Fully Convolutional Network |
| FCOS | Fully Convolutional One-Stage |
| FFDI | Forest Fire Detection Index |
| FFDSM | Forest Fire Detection and Segmentation Model |
| FILDA | Firelight Detection Algorithm |
| FL | Federated Learning |
| FLAME | Fire Luminosity Airborne-based Machine Learning Evaluation |
| FSCN | Fully Symmetric Convolutional–Deconvolutional Neural Network |
| GCF | Global Context Fusion |
| GIS | Geographic Information System |
| GLCM | Gray Level Co-Occurrence Matrix |
| GMM | Gaussian Mixture Model |
| GRU | Gated Recurrent Unit |
| GSConv | Ghost Shuffle Convolution |
| HRI | Human–Robot Interaction |
| HDLBP | Hamming Distance Based Local Binary Pattern |
| ISSA | Improved Sparrow Search Algorithm |
| KNN | K-Nearest Neighbor |
| K-SVD | K-Singular Value Decomposition |
| LBP | Local Binary Pattern |
| LMINet | Label-Relevance Multi-Direction Interaction Network |
| LSTM | Long Short-Term Memory Networks |
| LwF | Learning without Forgetting |
| MAE-Net | Multi-Attention Fusion |
| MCCL | Multi-scale Context Contrasted Local Feature Module |
| MCAM | Multi-Connection Aggregation Method |
| MQTT | Message Queuing Telemetry Transport |
| MSD | Multi-Scale Detection |
| MTL | Multi-Task Learning |
| MWIR | Middle Wavelength Infrared |
| NBR | Normalized Burn Ratio |
| NDVI | Normalized Difference Vegetation Index |
| PANet | Path Aggregation Network |
| PConv | Partial Convolution |
| POD | Probability of Detection |
| POFD | Probability of False Detection |
| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |
| PSNet | Pixel-level Supervision Neural Network |
| PSO | Particle Swarm Optimization |
| R-CNN | Region-Based Convolutional Neural Network |
| RECAB | Residual Efficient Channel Attention Block |
| RFB | Receptive Field Block |
| ROI | Region of Interest |
| RNN | Recurrent Neural Network |
| RS | Remote Sensing |
| SE-GhostNet | Squeeze and Excitation–GhostNet |
| SHAP | Shapley Additive Explanations |
| SIFT | Scale Invariant Feature Transform |
| SIoU | SCYLLA–Intersection Over Union |
| SPPF | Spatial Pyramid Pooling Fast |
| SPPF+ | Spatial Pyramid Pooling Fast+ |
| SVM | Support Vector Machine |
| TECNN | Transformer-Enhanced Convolutional Neural Network |
| TWSVM | Twin Support Vector Machine |
| USGS | United States Geological Survey |
| ViT | Vision Transformer |
| VHR | Very High Resolution |
| VIIRS | Visible Infrared Imaging Radiometer Suite |
| VSU | Video Surveillance Unit |
| WIoU | Wise–IoU |
| YOLO | You Only Look Once |

References

- Brunner, I.; Godbold, D.L. Tree roots in a changing world. J. For. Res. 2007, 12, 78–82.

- Ball, G.; Regier, P.; González-Pinzón, R.; Reale, J.; Van Horn, D. Wildfires increasingly impact western US fluvial networks. Nat. Commun. 2021, 12, 2484.

- Barmpoutis, P.; Papaioannou, P.; Dimitropoulos, K.; Grammalidis, N. A review on early forest fire detection systems using optical remote sensing. Sensors 2020, 20, 6442.

- Truong, C.T.; Nguyen, T.H.; Vu, V.Q.; Do, V.H.; Nguyen, D.T. Enhancing fire detection technology: A UV-based system utilizing Fourier spectrum analysis for reliable and accurate fire detection. Appl. Sci. 2023, 13, 7845.

- Geetha, S.; Abhishek, C.; Akshayanat, C. Machine vision based fire detection techniques: A survey. Fire Technol. 2021, 57, 591–623.

- Alkhatib, A.A. A review on forest fire detection techniques. Int. J. Distrib. Sens. Netw. 2014, 10, 597368.

- Yuan, C.; Liu, Z.; Zhang, Y. Fire detection using infrared images for UAV-based forest fire surveillance. In Proceedings of the 2017 International Conference on Unmanned Aircraft Systems (ICUAS), Miami, FL, USA, 13–16 June 2017; pp. 567–572.

- Yang, X.; Hua, Z.; Zhang, L.; Fan, X.; Zhang, F.; Ye, Q.; Fu, L. Preferred vector machine for forest fire detection. Pattern Recognit. 2023, 143, 109722.

- Yuan, C.; Liu, Z.; Zhang, Y. Learning-based smoke detection for unmanned aerial vehicles applied to forest fire surveillance. J. Intell. Robot. Syst. 2019, 93, 337–349.

- Li, X.; Song, W.; Lian, L.; Wei, X. Forest fire smoke detection using back-propagation neural network based on MODIS data. Remote Sens. 2015, 7, 4473–4498.

- Mahmoud, M.A.; Ren, H. Forest Fire Detection Using a Rule-Based Image Processing Algorithm and Temporal Variation. Math. Probl. Eng. 2018, 2018, 7612487.

- Khan, A.; Hassan, B.; Khan, S.; Ahmed, R.; Abuassba, A. DeepFire: A novel dataset and deep transfer learning benchmark for forest fire detection. Mob. Inf. Syst. 2022, 2022, 5358359.

- Rangwala, A.S.; Raghavan, V. Mechanism of Fires: Chemistry and Physical Aspects; Springer Nature: Berlin/Heidelberg, Germany, 2022.

- Wu, D.; Zhang, C.; Ji, L.; Ran, R.; Wu, H.; Xu, Y. Forest fire recognition based on feature extraction from multi-view images. Trait. Signal 2021, 38, 775–783.

- Qiu, X.; Xi, T.; Sun, D.; Zhang, E.; Li, C.; Peng, Y.; Wei, J.; Wang, G. Fire detection algorithm combined with image processing and flame emission spectroscopy. Fire Technol. 2018, 54, 1249–1263.

- Dzigal, D.; Akagic, A.; Buza, E.; Brdjanin, A.; Dardagan, N. Forest fire detection based on color spaces combination. In Proceedings of the 2019 11th International Conference on Electrical and Electronics Engineering (ELECO), Bursa, Turkey, 28–30 November 2019; pp. 595–599.

- Khalil, A.; Rahman, S.U.; Alam, F.; Ahmad, I.; Khalil, I. Fire detection using multi color space and background modeling. Fire Technol. 2021, 57, 1221–1239.

- Gaur, A.; Singh, A.; Kumar, A.; Kumar, A.; Kapoor, K. Video flame and smoke based fire detection algorithms: A literature review. Fire Technol. 2020, 56, 1943–1980.

- Wu, H.; Wu, D.; Zhao, J. An intelligent fire detection approach through cameras based on computer vision methods. Process Saf. Environ. Prot. 2019, 127, 245–256.

- Khondaker, A.; Khandaker, A.; Uddin, J. Computer Vision-based Early Fire Detection Using Enhanced Chromatic Segmentation and Optical Flow Analysis Technique. Int. Arab J. Inf. Technol. 2020, 17, 947–953.

- He, Y. Smart detection of indoor occupant thermal state via infrared thermography, computer vision, and machine learning. Build. Environ. 2023, 228, 109811.

- Mazur-Milecka, M.; Głowacka, N.; Kaczmarek, M.; Bujnowski, A.; Kaszyński, M.; Rumiński, J. Smart city and fire detection using thermal imaging. In Proceedings of the 2021 14th International Conference on Human System Interaction (HSI), Gdańsk, Poland, 8–10 July 2021; pp. 1–7.

- Bouguettaya, A.; Zarzour, H.; Taberkit, A.M.; Kechida, A. A review on early wildfire detection from unmanned aerial vehicles using deep learning-based computer vision algorithms. Signal Process. 2022, 190, 108309.

- Dong, S.; Wang, P.; Abbas, K. A survey on deep learning and its applications. Comput. Sci. Rev. 2021, 40, 100379.

- Saponara, S.; Elhanashi, A.; Gagliardi, A. Real-time video fire/smoke detection based on CNN in antifire surveillance systems. J. Real-Time Image Process. 2021, 18, 889–900.

- Florath, J.; Keller, S. Supervised Machine Learning Approaches on Multispectral Remote Sensing Data for a Combined Detection of Fire and Burned Area. Remote Sens. 2022, 14, 657.

- Mohammed, R. A real-time forest fire and smoke detection system using deep learning. Int. J. Nonlinear Anal. Appl. 2022, 13, 2053–2063.

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. Ann. Intern. Med. 2009, 151, 264–269.

- Mahmoud, M.A.I.; Ren, H. Forest fire detection and identification using image processing and SVM. J. Inf. Process. Syst. 2019, 15, 159–168.

- Yuan, C.; Ghamry, K.A.; Liu, Z.; Zhang, Y. Unmanned aerial vehicle based forest fire monitoring and detection using image processing technique. In Proceedings of the 2016 IEEE Chinese Guidance, Navigation and Control Conference (CGNCC), Miami, FL, USA, 13–16 June 2016; pp. 1870–1875.

- Rahman, E.U.; Khan, M.A.; Algarni, F.; Zhang, Y.; Irfan Uddin, M.; Ullah, I.; Ahmad, H.I. Computer Vision-Based Wildfire Smoke Detection Using UAVs. Math. Probl. Eng. 2021, 2021, 9977939.

- Almasoud, A.S. Intelligent Deep Learning Enabled Wild Forest Fire Detection System. Comput. Syst. Sci. Eng. 2023, 44.

- Chen, X.; Hopkins, B.; Wang, H.; O’Neill, L.; Afghah, F.; Razi, A.; Fulé, P.; Coen, J.; Rowell, E.; Watts, A. Wildland fire detection and monitoring using a drone-collected RGB/IR image dataset. IEEE Access 2022, 10, 121301–121317.

- Dewangan, A.; Pande, Y.; Braun, H.W.; Vernon, F.; Perez, I.; Altintas, I.; Cottrell, G.W.; Nguyen, M.H. FIgLib & SmokeyNet: Dataset and deep learning model for real-time wildland fire smoke detection. Remote Sens. 2022, 14, 1007.

- Zhou, Z.; Shi, Y.; Gao, Z.; Li, S. Wildfire smoke detection based on local extremal region segmentation and surveillance. Fire Saf. J. 2016, 85, 50–58.

- Zhang, Q.X.; Lin, G.H.; Zhang, Y.M.; Xu, G.; Wang, J.J. Wildland forest fire smoke detection based on Faster R-CNN using synthetic smoke images. Procedia Eng. 2018, 211, 441–446.

- Sudhakar, S.; Vijayakumar, V.; Kumar, C.S.; Priya, V.; Ravi, L.; Subramaniyaswamy, V. Unmanned Aerial Vehicle (UAV) based Forest Fire Detection and monitoring for reducing false alarms in forest-fires. Comput. Commun. 2020, 149, 1–16.

- Hossain, F.A.; Zhang, Y.; Yuan, C.; Su, C.Y. Wildfire flame and smoke detection using static image features and artificial neural network. In Proceedings of the 2019 1st International Conference on Industrial Artificial Intelligence (IAI), Shenyang, China, 23–27 July 2019.

- Ghamry, K.A.; Kamel, M.A.; Zhang, Y. Cooperative forest monitoring and fire detection using a team of UAVs-UGVs. In Proceedings of the 2016 International Conference on Unmanned Aircraft Systems (ICUAS), Arlington, VA, USA, 7–10 June 2016; pp. 1206–1211.

- Akhloufi, M.A.; Couturier, A.; Castro, N.A. Unmanned aerial vehicles for wildland fires: Sensing, perception, cooperation and assistance. Drones 2021, 5, 15.

- Battistoni, P.; Cantone, A.A.; Martino, G.; Passamano, V.; Romano, M.; Sebillo, M.; Vitiello, G. A cyber-physical system for wildfire detection and firefighting. Future Internet 2023, 15, 237.

- Jiao, Z.; Zhang, Y.; Xin, J.; Mu, L.; Yi, Y.; Liu, H.; Liu, D. A deep learning based forest fire detection approach using UAV and YOLOv3. In Proceedings of the 2019 1st International Conference on Industrial Artificial Intelligence (IAI), Shenyang, China, 23–27 July 2019.

- Ghali, R.; Akhloufi, M.A. Deep learning approaches for wildland fires using satellite remote sensing data: Detection, mapping, and prediction. Fire 2023, 6, 192.

- Artés, T.; Oom, D.; De Rigo, D.; Durrant, T.H.; Maianti, P.; Libertà, G.; San-Miguel-Ayanz, J. A global wildfire dataset for the analysis of fire regimes and fire behaviour. Sci. Data 2019, 6, 296.

- Sayad, Y.O.; Mousannif, H.; Al Moatassime, H. Predictive modeling of wildfires: A new dataset and machine learning approach. Fire Saf. J. 2019, 104, 130–146.

- Zhang, G.; Wang, M.; Liu, K. Deep neural networks for global wildfire susceptibility modelling. Ecol. Indic. 2021, 127, 107735.

- Zheng, S.; Zou, X.; Gao, P.; Zhang, Q.; Hu, F.; Zhou, Y.; Wu, Z.; Wang, W.; Chen, S. A forest fire recognition method based on modified deep CNN model. Forests 2024, 15, 111.

- Zhang, L.; Wang, M.; Fu, Y.; Ding, Y. A Forest Fire Recognition Method Using UAV Images Based on Transfer Learning. Forests 2022, 13, 975.

- Qian, J.; Lin, H. A Forest Fire Identification System Based on Weighted Fusion Algorithm. Forests 2022, 13, 1301.

- Anh, N. Efficient Forest Fire Detection using Rule-Based Multi-color Space and Correlation Coefficient for Application in Unmanned Aerial Vehicles. KSII Trans. Internet Inf. Syst. 2022, 16, 381–404.

- Zhang, J.; Zhu, H.; Wang, P.; Ling, X. ATT Squeeze U-Net: A lightweight Network for Forest Fire Detection and Recognition. IEEE Access 2021, 9, 10858–10870.

- Qi, R.; Liu, Z. Extraction and Classification of Image Features for Fire Recognition Based on Convolutional Neural Network. Trait. Signal 2021, 38, 895–902.

- Chanthiya, P.; Kalaivani, V. Forest fire detection on LANDSAT images using support vector machine. Concurr. Comput. Pract. Exp. 2021, 33, e6280.

- Sousa, M.; Moutinho, A.; Almeida, M. Thermal Infrared Sensing for Near Real-Time Data-Driven Fire Detection and Monitoring Systems. Sensors 2020, 20, 6803.

- Chung, M.; Han, Y.; Kim, Y. A Framework for Unsupervised Wildfire Damage Assessment Using VHR Satellite Images with PlanetScope Data. Remote Sens. 2020, 12, 3835.

- Wang, Y.; Dang, L.; Ren, J. Forest fire image recognition based on convolutional neural network. J. Algorithm. Comput. Technol. 2019, 13, 1748302619887689.

- Park, W.; Park, S.; Jung, H.; Won, J. An Extraction of Solar-contaminated Energy Part from MODIS Middle Infrared Channel Measurement to Detect Forest Fires. Korean J. Remote Sens. 2019, 35, 39–55.

- Yuan, C.; Liu, Z.; Zhang, Y. Aerial Images-Based Forest Fire Detection for Firefighting Using Optical Remote Sensing Techniques and Unmanned Aerial Vehicles. J. Intell. Robot. Syst. 2017, 88, 635–654.

- Prema, C.; Vinsley, S.; Suresh, S. Multi Feature Analysis of Smoke in YUV Color Space for Early Forest Fire Detection. Fire Technol. 2016, 52, 1319–1342.

- Polivka, T.; Wang, J.; Ellison, L.; Hyer, E.; Ichoku, C. Improving Nocturnal Fire Detection With the VIIRS Day-Night Band. IEEE Trans. Geosci. Remote Sens. 2016, 54, 5503–5519.

- Lin, L. A Spatio-Temporal Model for Forest Fire Detection Using HJ-IRS Satellite Data. Remote Sens. 2016, 8, 403.

- Yoon, S.; Min, J. An Intelligent Automatic Early Detection System of Forest Fire Smoke Signatures using Gaussian Mixture Model. J. Inf. Process. Syst. 2013, 9, 621–632.

- Xue, Z.; Lin, H.; Wang, F. A Small Target Forest Fire Detection Model Based on YOLOv5 Improvement. Forests 2022, 13, 1332.

- Seydi, S.T.; Saeidi, V.; Kalantar, B.; Ueda, N.; Halin, A.A. Fire-Net: A Deep Learning Framework for Active Forest Fire Detection. J. Sens. 2022, 2022, 8044390.

- Lu, K.; Xu, R.; Li, J.; Lv, Y.; Lin, H.; Li, Y. A Vision-Based Detection and Spatial Localization Scheme for Forest Fire Inspection from UAV. Forests 2022, 13, 383.

- Lu, K.; Huang, J.; Li, J.; Zhou, J.; Chen, X.; Liu, Y. MTL-FFDET: A Multi-Task Learning-Based Model for Forest Fire Detection. Forests 2022, 13, 1448.

- Guan, Z.; Miao, X.; Mu, Y.; Sun, Q.; Ye, Q.; Gao, D. Forest Fire Segmentation from Aerial Imagery Data Using an Improved Instance Segmentation Model. Remote Sens. 2022, 14, 3159.

- Li, W.; Lin, Q.; Wang, K.; Cai, K. Machine vision-based network monitoring system for solar-blind ultraviolet signal. Comput. Commun. 2021, 171, 157–162.

- Kim, B.; Lee, J. A Bayesian Network-Based Information Fusion Combined with DNNs for Robust Video Fire Detection. Appl. Sci. 2021, 11, 7624.

- Ghali, R.; Akhloufi, M.; Jmal, M.; Mseddi, W.; Attia, R. Wildfire Segmentation Using Deep Vision Transformers. Remote Sens. 2021, 13, 3527.

- Toptas, B.; Hanbay, D. A new artificial bee colony algorithm-based color space for fire/flame detection. Soft Comput. 2020, 24, 10481–10492.

- Barmpoutis, P.; Stathaki, T.; Dimitropoulos, K.; Grammalidis, N. Early fire detection based on aerial 360-degree sensors, deep convolution neural networks and exploitation of fire dynamic textures. Remote Sens. 2020, 12, 3177.

- Ghali, R.; Akhloufi, M.; Mseddi, W. Deep Learning and Transformer Approaches for UAV-Based Wildfire Detection and Segmentation. Sensors 2022, 22, 1977.

- Zhang, Q.; Ge, L.; Zhang, R.; Metternicht, G.; Liu, C.; Du, Z. Towards a Deep-Learning-Based Framework of Sentinel-2 Imagery for Automated Active Fire Detection. Remote Sens. 2021, 13, 4790.

- Rashkovetsky, D.; Mauracher, F.; Langer, M.; Schmitt, M. Wildfire Detection From Multisensor Satellite Imagery Using Deep Semantic Segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 7001–7016.

- Pereira, G.; Fusioka, A.; Nassu, B.; Minetto, R. Active fire detection in Landsat-8 imagery: A large-scale dataset and a deep-learning study. ISPRS J. Photogramm. Remote Sens. 2021, 178, 171–186.

- Benzekri, W.; Moussati, A.; Moussaoui, O.; Berrajaa, M. Early Forest Fire Detection System using Wireless Sensor Network and Deep Learning. Int. J. Adv. Comput. Sci. Appl. 2020, 11, 496–503.

- Zhao, Y.; Ban, Y. GOES-R Time Series for Early Detection of Wildfires with Deep GRU-Network. Remote Sens. 2022, 14, 4347.

- Hong, Z. Active Fire Detection Using a Novel Convolutional Neural Network Based on Himawari-8 Satellite Images. Front. Environ. Sci. 2022, 10, 794028.

- Xu, R.; Lin, H.; Lu, K.; Cao, L.; Liu, Y. A Forest Fire Detection System Based on Ensemble Learning. Forests 2021, 12, 217.

- Pan, H.; Badawi, D.; Zhang, X.; Cetin, A. Additive neural network for forest fire detection. Signal Image Video Process. 2020, 14, 675–682.

- Zhao, Y.; Ma, J.; Li, X.; Zhang, J. Saliency Detection and Deep Learning-Based Wildfire Identification in UAV Imagery. Sensors 2018, 18, 712.

- Zhang, A.; Zhang, A. Real-Time Wildfire Detection and Alerting with a Novel Machine Learning Approach: A New Systematic Approach of Using Convolutional Neural Network (CNN) to Achieve Higher Accuracy in Automation. Int. J. Adv. Comput. Sci. Appl. 2022, 13, 1–6.

- Wahyono; Harjoko, A.; Dharmawan, A.; Adhinata, F.D.; Kosala, G.; Jo, K.H. Real-time forest fire detection framework based on artificial intelligence using color probability model and motion feature analysis. Fire 2022, 5, 23.

- Phan, T.; Quach, N.; Nguyen, T.; Nguyen, T.; Jo, J.; Nguyen, Q. Real-time wildfire detection with semantic explanations. Expert Syst. Appl. 2022, 201, 117007.

- Yang, X. Pixel-level automatic annotation for forest fire image. Eng. Appl. Artif. Intell. 2021, 104, 104353.

- Shamsoshoara, A.; Afghah, F.; Razi, A.; Zheng, L.; Fule, P.; Blasch, E. Aerial imagery pile burn detection using deep learning: The FLAME dataset. Comput. Netw. 2021, 193, 108001.

- Liu, Z.; Zhang, K.; Wang, C.; Huang, S. Research on the identification method for the forest fire based on deep learning. Optik 2020, 223, 165491.

- Khurana, M.; Saxena, V. A Unified Approach to Change Detection Using an Adaptive Ensemble of Extreme Learning Machines. IEEE Geosci. Remote Sens. Lett. 2020, 17, 794–798.

- Huang, X.; Du, L. Fire Detection and Recognition Optimization Based on Virtual Reality Video Image. IEEE Access 2020, 8, 77951–77961.

- Govil, K.; Welch, M.L.; Ball, J.T.; Pennypacker, C.R. Preliminary results from a wildfire detection system using deep learning on remote camera images. Remote Sens. 2020, 12, 166.

- Ouni, S.; Ayoub, Z.; Kamoun, F. Auto-organization approach with adaptive frame periods for IEEE 802.15.4/ZigBee forest fire detection system. Wirel. Netw. 2019, 25, 4059–4076.

- Jang, E.; Kang, Y.; Im, J.; Lee, D.; Yoon, J.; Kim, S. Detection and Monitoring of Forest Fires Using Himawari-8 Geostationary Satellite Data in South Korea. Remote Sens. 2019, 11, 271.

- Mao, W.; Wang, W.; Dou, Z.; Li, Y. Fire Recognition Based On Multi-Channel Convolutional Neural Network. Fire Technol. 2018, 54, 531–554.

- Zheng, S.; Gao, P.; Zhou, Y.; Wu, Z.; Wan, L.; Hu, F.; Wang, W.; Zou, X.; Chen, S. An accurate forest fire recognition method based on improved BPNN and IoT. Remote Sens. 2023, 15, 2365.

- Liu, T.; Chen, W.; Lin, X.; Mu, Y.; Huang, J.; Gao, D.; Xu, J. Defogging Learning Based on an Improved DeepLabV3+ Model for Accurate Foggy Forest Fire Segmentation. Forests 2023, 14, 1859.

- Reis, H.C.; Turk, V. Detection of forest fire using deep convolutional neural networks with transfer learning approach. Appl. Soft Comput. 2023, 143, 110362.

- Pang, Y.; Wu, Y.; Yuan, Y. FuF-Det: An Early Forest Fire Detection Method under Fog. Remote Sens. 2023, 15, 5435.

- Lin, J.; Lin, H.; Wang, F. A semi-supervised method for real-time forest fire detection algorithm based on adaptively spatial feature fusion. Forests 2023, 14, 361.

- Akyol, K. Robust stacking-based ensemble learning model for forest fire detection. Int. J. Environ. Sci. Technol. 2023, 20, 13245–13258.

- Niu, K.; Wang, C.; Xu, J.; Yang, C.; Zhou, X.; Yang, X. An Improved YOLOv5s-Seg Detection and Segmentation Model for the Accurate Identification of Forest Fires Based on UAV Infrared Image. Remote Sens. 2023, 15, 4694. [Google Scholar] [CrossRef]

- Sarikaya Basturk, N. Forest fire detection in aerial vehicle videos using a deep ensemble neural network model. Aircr. Eng. Aerosp. Technol. 2023, 95, 1257–1267. [Google Scholar] [CrossRef]

- Rahman, A.; Sakif, S.; Sikder, N.; Masud, M.; Aljuaid, H.; Bairagi, A.K. Unmanned aerial vehicle assisted forest fire detection using deep convolutional neural network. Intell. Autom. Soft Comput 2023, 35, 3259–3277. [Google Scholar] [CrossRef]

- Ghali, R.; Akhloufi, M.A. CT-Fire: A CNN-Transformer for wildfire classification on ground and aerial images. Int. J. Remote Sens. 2023, 44, 7390–7415. [Google Scholar] [CrossRef]

- Abdusalomov, A.B.; Islam, B.M.S.; Nasimov, R.; Mukhiddinov, M.; Whangbo, T.K. An improved forest fire detection method based on the detectron2 model and a deep learning approach. Sensors 2023, 23, 1512. [Google Scholar] [CrossRef]

- Supriya, Y.; Gadekallu, T.R. Particle swarm-based federated learning approach for early detection of forest fires. Sustainability 2023, 15, 964.

- Khennou, F.; Akhloufi, M.A. Improving wildland fire spread prediction using deep U-Nets. Sci. Remote Sens. 2023, 8, 100101.

- Peruzzi, G.; Pozzebon, A.; Van Der Meer, M. Fight fire with fire: Detecting forest fires with embedded machine learning models dealing with audio and images on low power IoT devices. Sensors 2023, 23, 783.

- Barmpoutis, P.; Kastridis, A.; Stathaki, T.; Yuan, J.; Shi, M.; Grammalidis, N. Suburban Forest Fire Risk Assessment and Forest Surveillance Using 360-Degree Cameras and a Multiscale Deformable Transformer. Remote Sens. 2023, 15, 1995.

- Almeida, J.S.; Jagatheesaperumal, S.K.; Nogueira, F.G.; de Albuquerque, V.H.C. EdgeFireSmoke++: A novel lightweight algorithm for real-time forest fire detection and visualization using internet of things-human machine interface. Expert Syst. Appl. 2023, 221, 119747.

- Zheng, H.; Dembele, S.; Wu, Y.; Liu, Y.; Chen, H.; Zhang, Q. A lightweight algorithm capable of accurately identifying forest fires from UAV remote sensing imagery. Front. For. Glob. Chang. 2023, 6, 1134942.

- Shahid, M.; Chen, S.F.; Hsu, Y.L.; Chen, Y.Y.; Chen, Y.L.; Hua, K.L. Forest fire segmentation via temporal transformer from aerial images. Forests 2023, 14, 563.

- Ahmad, K.; Khan, M.S.; Ahmed, F.; Driss, M.; Boulila, W.; Alazeb, A.; Alsulami, M.; Alshehri, M.S.; Ghadi, Y.Y.; Ahmad, J. FireXnet: An explainable AI-based tailored deep learning model for wildfire detection on resource-constrained devices. Fire Ecol. 2023, 19, 54.

- Wang, X.; Pan, Z.; Gao, H.; He, N.; Gao, T. An efficient model for real-time wildfire detection in complex scenarios based on multi-head attention mechanism. J. Real-Time Image Process. 2023, 20, 66.

- Ying, L.X.; Shen, Z.H.; Yang, M.Z.; Piao, S.L. Wildfire Detection Probability of MODIS Fire Products under the Constraint of Environmental Factors: A Study Based on Confirmed Ground Wildfire Records. Remote Sens. 2019, 11, 31.

- Liu, T. Video Smoke Detection Method Based on Change-Cumulative Image and Fusion Deep Network. Sensors 2019, 19, 5060.

- Bugaric, M.; Jakovcevic, T.; Stipanicev, D. Adaptive estimation of visual smoke detection parameters based on spatial data and fire risk index. Comput. Vis. Image Underst. 2014, 118, 184–196.

- Xie, J.; Yu, F.; Wang, H.; Zheng, H. Class Activation Map-Based Data Augmentation for Satellite Smoke Scene Detection. IEEE Geosci. Remote Sens. Lett. 2022, 19, 6510905.

- Zhu, G.; Chen, Z.; Liu, C.; Rong, X.; He, W. 3D video semantic segmentation for wildfire smoke. Mach. Vis. Appl. 2020, 31, 50.

- Li, X.; Chen, Z.; Wu, Q.; Liu, C. 3D Parallel Fully Convolutional Networks for Real-Time Video Wildfire Smoke Detection. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 89–103.

- Peng, Y.; Wang, Y. Real-time forest smoke detection using hand-designed features and deep learning. Comput. Electron. Agric. 2019, 167, 105029.

- Lin, G.; Zhang, Y.; Xu, G.; Zhang, Q. Smoke detection on video sequences using 3D convolutional neural networks. Fire Technol. 2019, 55, 1827–1847.

- Gao, Y.; Cheng, P. Forest Fire Smoke Detection Based on Visual Smoke Root and Diffusion Model. Fire Technol. 2019, 55, 1801–1826.

- Jakovcevic, T.; Bugaric, M.; Stipanicev, D. A Stereo Approach to Wildfire Smoke Detection: The Improvement of the Existing Methods by Adding a New Dimension. Comput. Inform. 2018, 37, 476–508.

- Jia, Y.; Yuan, J.; Wang, J.; Fang, J.; Zhang, Y.; Zhang, Q. A Saliency-Based Method for Early Smoke Detection in Video Sequences. Fire Technol. 2016, 52, 1271–1292.

- Chen, S.; Li, W.; Cao, Y.; Lu, X. Combining the Convolution and Transformer for Classification of Smoke-Like Scenes in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4512519.

- Guede-Fernandez, F.; Martins, L.; Almeida, R.; Gamboa, H.; Vieira, P. A Deep Learning Based Object Identification System for Forest Fire Detection. Fire 2021, 4, 75.

- Yazdi, A.; Qin, H.; Jordan, C.; Yang, L.; Yan, F. Nemo: An Open-Source Transformer-Supercharged Benchmark for Fine-Grained Wildfire Smoke Detection. Remote Sens. 2022, 14, 3979.

- Shi, J.; Wang, W.; Gao, Y.; Yu, N. Optimal Placement and Intelligent Smoke Detection Algorithm for Wildfire-Monitoring Cameras. IEEE Access 2020, 8, 72326–72339.

- Hossain, F.A.; Zhang, Y.M.; Tonima, M.A. Forest fire flame and smoke detection from UAV-captured images using fire-specific color features and multi-color space local binary pattern. J. Unmanned Veh. Syst. 2020, 8, 285–309.

- Li, T.; Zhao, E.; Zhang, J.; Hu, C. Detection of Wildfire Smoke Images Based on a Densely Dilated Convolutional Network. Electronics 2019, 8, 1131.

- Cao, Y.; Yang, F.; Tang, Q.; Lu, X. An Attention Enhanced Bidirectional LSTM for Early Forest Fire Smoke Recognition. IEEE Access 2019, 7, 154732–154742.

- Prema, C.; Suresh, S.; Krishnan, M.; Leema, N. A Novel Efficient Video Smoke Detection Algorithm Using Co-occurrence of Local Binary Pattern Variants. Fire Technol. 2022, 58, 3139–3165.

- Toulouse, T.; Rossi, L.; Campana, A.; Celik, T.; Akhloufi, M.A. Computer vision for wildfire research: An evolving image dataset for processing and analysis. Fire Saf. J. 2017, 92, 188–194.

- Kim, S.Y.; Muminov, A. Forest fire smoke detection based on deep learning approaches and unmanned aerial vehicle images. Sensors 2023, 23, 5702.

- Yang, H.; Wang, J.; Wang, J. Efficient Detection of Forest Fire Smoke in UAV Aerial Imagery Based on an Improved Yolov5 Model and Transfer Learning. Remote Sens. 2023, 15, 5527.

- Huang, J.; Zhou, J.; Yang, H.; Liu, Y.; Liu, H. A small-target forest fire smoke detection model based on deformable transformer for end-to-end object detection. Forests 2023, 14, 162.

- Saydirasulovich, S.N.; Mukhiddinov, M.; Djuraev, O.; Abdusalomov, A.; Cho, Y.I. An improved wildfire smoke detection based on YOLOv8 and UAV images. Sensors 2023, 23, 8374.

- Chen, G.; Cheng, R.; Lin, X.; Jiao, W.; Bai, D.; Lin, H. LMDFS: A lightweight model for detecting forest fire smoke in UAV images based on YOLOv7. Remote Sens. 2023, 15, 3790.

- Qiao, Y.; Jiang, W.; Wang, F.; Su, G.; Li, X.; Jiang, J. FireFormer: An efficient Transformer to identify forest fire from surveillance cameras. Int. J. Wildland Fire 2023, 32, 1364–1380.

- Fernandes, A.M.; Utkin, A.B.; Chaves, P. Automatic early detection of wildfire smoke with visible-light cameras and EfficientDet. J. Fire Sci. 2023, 41, 122–135.

- Tao, H. A label-relevance multi-direction interaction network with enhanced deformable convolution for forest smoke recognition. Expert Syst. Appl. 2024, 236, 121383.

- Tao, H.; Duan, Q.; Lu, M.; Hu, Z. Learning discriminative feature representation with pixel-level supervision for forest smoke recognition. Pattern Recognit. 2023, 143, 109761.

- James, G.L.; Ansaf, R.B.; Al Samahi, S.S.; Parker, R.D.; Cutler, J.M.; Gachette, R.V.; Ansaf, B.I. An Efficient Wildfire Detection System for AI-Embedded Applications Using Satellite Imagery. Fire 2023, 6, 169.

- Ba, R.; Chen, C.; Yuan, J.; Song, W.; Lo, S. SmokeNet: Satellite smoke scene detection using convolutional neural network with spatial and channel-wise attention. Remote Sens. 2019, 11, 1702.

- Larsen, A.; Hanigan, I.; Reich, B.J.; Qin, Y.; Cope, M.; Morgan, G.; Rappold, A.G. A deep learning approach to identify smoke plumes in satellite imagery in near-real time for health risk communication. J. Expo. Sci. Environ. Epidemiol. 2021, 31, 170–176.

- Yuan, F.; Zhang, L.; Wan, B.; Xia, X.; Shi, J. Convolutional neural networks based on multi-scale additive merging layers for visual smoke recognition. Mach. Vis. Appl. 2019, 30, 345–358.

- Pundir, A.S.; Raman, B. Dual deep learning model for image based smoke detection. Fire Technol. 2019, 55, 2419–2442.

- Wu, H.; Zhang, A.; Han, Y.; Nan, J.; Li, K. Fast stochastic configuration network based on an improved sparrow search algorithm for fire flame recognition. Knowl.-Based Syst. 2022, 245, 108626.

- Buza, E.; Akagic, A. Unsupervised method for wildfire flame segmentation and detection. IEEE Access 2022, 10, 55213–55225.

- Zhao, Y.; Tang, G.; Xu, M. Hierarchical detection of wildfire flame video from pixel level to semantic level. Expert Syst. Appl. 2015, 42, 4097–4104.

- Prema, C.; Vinsley, S.; Suresh, S. Efficient Flame Detection Based on Static and Dynamic Texture Analysis in Forest Fire Detection. Fire Technol. 2018, 54, 255–288.

- Zhang, H.; Zhang, N.; Xiao, N. Fire detection and identification method based on visual attention mechanism. Optik 2015, 126, 5011–5018.

- Liu, H.; Hu, H.; Zhou, F.; Yuan, H. Forest flame detection in unmanned aerial vehicle imagery based on YOLOv5. Fire 2023, 6, 279.

- Wang, L.; Zhang, H.; Zhang, Y.; Hu, K.; An, K. A deep learning-based experiment on forest wildfire detection in machine vision course. IEEE Access 2023, 11, 32671–32681.

- Kong, S.; Deng, J.; Yang, L.; Liu, Y. An attention-based dual-encoding network for fire flame detection using optical remote sensing. Eng. Appl. Artif. Intell. 2024, 127, 107238.

- Kaliyev, D.; Shvets, O.; Györök, G. Computer Vision-based Fire Detection using Enhanced Chromatic Segmentation and Optical Flow Model. Acta Polytech. Hung. 2023, 20, 27–45.

- Chen, B.; Bai, D.; Lin, H.; Jiao, W. FlameTransNet: Advancing forest flame segmentation with fusion and augmentation techniques. Forests 2023, 14, 1887.

- Morandini, F.; Toulouse, T.; Silvani, X.; Pieri, A.; Rossi, L. Image-based diagnostic system for the measurement of flame properties and radiation. Fire Technol. 2019, 55, 2443–2463.

- Chen, Y.; Xu, W.; Zuo, J.; Yang, K. The fire recognition algorithm using dynamic feature fusion and IV-SVM classifier. Clust. Comput. 2019, 22, 7665–7675.

- Dimitropoulos, K.; Barmpoutis, P.; Grammalidis, N. Spatio-temporal flame modeling and dynamic texture analysis for automatic video-based fire detection. IEEE Trans. Circuits Syst. Video Technol. 2014, 25, 339–351.

- Zheng, X.; Chen, F.; Lou, L.; Cheng, P.; Huang, Y. Real-time detection of full-scale forest fire smoke based on deep convolution neural network. Remote Sens. 2022, 14, 536.

- Martins, L.; Guede-Fernandez, F.; Almeida, R.; Gamboa, H.; Vieira, P. Real-Time Integration of Segmentation Techniques for Reduction of False Positive Rates in Fire Plume Detection Systems during Forest Fires. Remote Sens. 2022, 14, 2701.

- Fernandes, A.; Utkin, A.; Chaves, P. Automatic Early Detection of Wildfire Smoke With Visible Light Cameras Using Deep Learning and Visual Explanation. IEEE Access 2022, 10, 12814–12828.

- Jiang, Y.; Wei, R.; Chen, J.; Wang, G. Deep Learning of Qinling Forest Fire Anomaly Detection Based on Genetic Algorithm Optimization. Univ. Politeh. Buchar. Sci. Bull. Ser.-Electr. Eng. Comput. Sci. 2021, 83, 75–84.

- Perrolas, G.; Niknejad, M.; Ribeiro, R.; Bernardino, A. Scalable Fire and Smoke Segmentation from Aerial Images Using Convolutional Neural Networks and Quad-Tree Search. Sensors 2022, 22, 1701.

- Li, J. Adaptive linear feature-reuse network for rapid forest fire smoke detection model. Ecol. Inform. 2022, 68, 101584.

- Hu, Y.; Zhan, J.; Zhou, G.; Chen, A.; Cai, W.; Guo, K.; Hu, Y.; Li, L. Fast forest fire smoke detection using MVMNet. Knowl.-Based Syst. 2022, 241, 108219.

- Almeida, J.; Huang, C.; Nogueira, F.; Bhatia, S.; Albuquerque, V. EdgeFireSmoke: A Novel Lightweight CNN Model for Real-Time Video Fire-Smoke Detection. IEEE Trans. Ind. Inform. 2022, 18, 7889–7898.

- Zhao, E.; Liu, Y.; Zhang, J.; Tian, Y. Forest Fire Smoke Recognition Based on Anchor Box Adaptive Generation Method. Electronics 2021, 10, 566.