Abstract

Neural encoder-decoder models for language generation can be trained to predict words directly from linguistic or non-linguistic inputs. When generating with these so-called end-to-end models, however, the NLG system needs an additional decoding procedure that determines the output sequence, given the infinite search space over potential sequences that could be generated with the given vocabulary. This survey paper provides an overview of the different ways of implementing decoding on top of neural network-based generation models. Research into decoding has become a real trend in the area of neural language generation, and numerous recent papers have shown that the choice of decoding method has a considerable impact on the quality and various linguistic properties of the generation output of a neural NLG system. This survey aims to contribute to a more systematic understanding of decoding methods across different areas of neural NLG. We group the reviewed methods with respect to the broad type of objective that they optimize in the generation of the sequence—likelihood, diversity, and task-specific linguistic constraints or goals—and discuss their respective strengths and weaknesses.

1. Introduction

The rise of deep learning techniques in NLP has significantly changed the way natural language generation (NLG) systems are designed, developed, and trained with data. Traditional, rule- or corpus-based NLG systems typically modeled decisions at different levels of linguistic processing in an explicit and symbolic fashion [1,2].

In contrast, recent neural network architectures for generation can be trained to predict words directly from linguistic inputs or non-linguistic data, such as database records or images. For this reason, neural generators are commonly referred to as “end-to-end systems” [3,4,5,6,7].

It is less commonly noticed, however, that the neural end-to-end approach to generation is restricted to modeling word probability distributions, whereas the step of determining the output sequence is not handled in the model itself. Thus, neural NLG systems generally need an additional decoding procedure that operates in symbolic space and defines how words are strung together to form sentences and texts. This survey focuses on the decoding stage in the neural language generation process. It provides an overview of the vast range of decoding methods that have been developed and used in recent research on neural language generation and discusses their respective strengths and weaknesses.

The development of a neural architecture for generation involves many steps and aspects, starting from the definition of the task, the collection and preparation of data, the design of the model and its training, and the evaluation. Recent surveys in NLG cover these aspects very well but do not address the topic of decoding in particular, e.g., Gatt and Krahmer [2]’s very extensive survey on different NLG tasks, architectures, and evaluation methods. Similarly, in many recent papers on NLG systems or tasks, the decoding method does not play a central role. Often, it is reported as a technical detail of the experimental set-up. At the same time, research into decoding has become a real trend in the area of neural language generation. Numerous papers have been published in recent years showing that the choice of decoding method has a considerable impact on the quality and various linguistic properties of the generation output. This survey aims to make this trend more visible and to contribute to a more systematic understanding of decoding and its importance for neural NLG.

1.1. Motivation and Overview

In a neural NLG system, the decoding method defines the way the system handles its search space over potential output utterances when generating a sequence. Generally, in neural language generation, this search space is effectively unbounded: the number of candidate sequences grows exponentially with the length of the output sequence. Therefore, the decoding procedure is an important part of the neural NLG pipeline where non-trivial design decisions are made by the developer of the NLG system. The first goal of this survey is to introduce the notion of decoding and show its importance for different neural (and non-neural) NLG frameworks (Section 2).

The most well-known and de-facto standard decoding procedure in NLG is beam search, a general and traditional search algorithm which dates back to Lowerre [8]’s work on speech recognition. Since the advent of neural NLG, however, researchers have noticed shortcomings of beam search and its many variants that are used more or less systematically in practice. The second goal of this survey is to provide an in-depth overview of definitions and analyses of beam search in neural NLG (Section 3).

While beam search is designed to maximize the likelihood of the generated sequence, many recently developed decoding methods prioritize other generation objectives. Most notably, a considerable body of work has investigated decoding methods that increase the so-called “diversity” of generation output. Section 4 introduces different notions of diversity used in the decoding literature and reviews the corresponding methods.

While likelihood-oriented and diversity-oriented decoding is rather task-independent, other lines of work have investigated decoding methods that explicitly introduce task-specific objectives and linguistic constraints into the generation process. Modeling constraints that control the behavior of an NLG system for particular tasks or situations is a notorious problem in neural NLG, given the complex black-box design of neural network architectures. Decoding seems to offer an attractive solution (or work-around) to this problem as it operates on the symbolic search space representing generation candidates. Section 5 will summarize works that view decoding as a means of controlling and constraining the linguistic properties of neural NLG output.

Finally, the decoding methods reviewed in this survey are not only interesting algorithms in their own right but are also closely connected to general themes and questions that revolve around neural NLG. As the above overview has already shown, decoding methods differ substantially with respect to their objectives and underlying assumptions about the generation process. Section 6 discusses challenges and open questions that are brought up by decoding but concern neural NLG in general.

In short, the goals of this survey can be summarized as follows:

- give an overview of decoding across different neural NLG frameworks (Section 2),

- review different variants of beam search-based decoding and summarize the debate about strengths and weaknesses of beam search (Section 3),

- discuss different notions of diversity in the decoding literature and summarize work on diversity-oriented decoding methods (Section 4),

- summarize work on task-specific decoding (Section 5), and

- discuss challenges in neural NLG brought up by work on decoding (Section 6).

1.2. Scope and Methodology

This survey focuses on decoding methods for neural NLG, but how do we define NLG in the first place? A very popular definition of NLG is the one by Reiter and Dale [9], which states that NLG “is concerned with the construction of computer systems that can produce understandable texts in English or other human languages from some underlying non-linguistic representation of information”. In recent years, however, the research questions and modeling approaches in NLG have overlapped more and more with questions addressed in areas such as text-to-text generation (e.g., summarization) [10,11], machine translation [10], dialog modeling [12], or, notably, language modeling [11]. Here, the input is not necessarily language-external data but linguistic input, e.g., text. Gatt and Krahmer [2]’s survey focuses mostly on “core” NLG where the input to the system is non-linguistic. In our discussion of decoding, we will see that this distinction is very difficult to maintain as methods for neural data-to-text generation are often directly inspired by and compared to methods from other areas subsumed under or related to NLG, particularly in machine translation and language modeling. Hence, in most of this article, we will adopt a rather loose definition of NLG and report on decoding methods used to generate text in neural encoder-decoder frameworks.

This survey aims at a comprehensive overview of different approaches to decoding and their analysis in the recent literature. Therefore, it includes a diverse set of papers published at major international NLP, ML, and AI venues since the development of neural NLG in 2015, i.e., papers that introduce particular decoding methods, that present analyses of decoding, or that report relevant experiments on decoding as part of a particular NLG system. This survey also includes papers published before the advent of neural NLG, introducing foundational work on decoding methods that are still widely used in neural NLG. Furthermore, this survey considers decoding methods from a practical perspective. We have compiled a list of papers on well-known NLG systems spanning the different NLG tasks just discussed and report their decoding method, even if it is not central in that paper. Tables 2 and 3 show this list, which contains systems that either implement a decoding method relevant for this survey or constitute a popular approach in their sub-area according to their number of citations and publication venue. Table 2 summarizes the text-to-text generation systems which process linguistic inputs, whereas Table 3 lists data-to-text systems that take non-linguistic data as input. This takes up the distinction between different types of NLG tasks discussed above and allows for a comparison between these overlapping areas.

2. Decoding across NLG Frameworks and Tasks

This survey is devoted to decoding methods that are defined as inference procedures external to the neural NLG model and that can be used broadly and independently across different NLG tasks and architectures. Hence, most of this survey will abstract away from the inner workings of neural NLG architectures and models. At the same time, we will also see that many decoding methods are designed to address particular shortcomings of neural generation systems and challenges that arise in the neural encoder-decoder generation framework. Therefore, before going into the details of decoding methods in the remainder of this article, this section will briefly introduce some basic NLG frameworks and discuss why and where they require a decoding procedure. We will start with pre-neural statistical NLG systems in Section 2.1, move on to autoregressive neural generation in Section 2.2 and non-autoregressive models in Section 2.3. Section 1.2 gives an overview of different NLG tasks considered in this survey.

2.1. Pre-Neural NLG

First of all, template- or rule-based approaches constitute an important type of NLG system that is often relevant in practical applications. These systems offer “hand-built” solutions for specific generation domains or specific parts of a generation pipeline [13,14,15,16] and can be designed at varying levels of linguistic complexity [17]. Generally, they explicitly restrict the system’s search space to a finite set of utterances, use rules to fill in pre-specified utterance templates, and do not require a decoding method.

Other NLG frameworks have integrated grammar-based components into hybrid architectures that leveraged a statistical component to rank or score decisions specified by the grammar. Early approaches in corpus-based NLG followed a generate-and-rank approach where a (more or less sophisticated) grammar was used to produce an exhaustive set of generation candidates which was subsequently ranked globally by a language model or some other type of scoring or reranking model [18,19,20,21]. These systems deal with a larger search space than fully template- or rule-based systems, but still search the entire, finite hypothesis space for the globally optimal output candidate.

Subsequent work on stochastic language generation aimed at methods which avoid an exhaustive ranking or traversal of the candidate space. Another way to integrate grammar-based generation with statistical decision making was introduced in the probabilistic CFG-based generator by Belz [22]. This system was built for the task of weather forecast generation and features expansion rules with weights or probabilities learned from a corpus. Belz [22] experiments with three decoding strategies for searching the space of possible expansions: greedy search, Viterbi search, and greedy roulette-wheel generation. The latter two correspond to two main types of decoding methods discussed in this survey, i.e., Viterbi search as a search-based decoding method and roulette-wheel generation as a sampling-based method that favors diversity. Belz [22]’s experiments showed that greedy search outperformed the other decoding methods.
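The difference between greedy and roulette-wheel selection over weighted expansion rules can be sketched as follows. This is only an illustration and not Belz [22]’s implementation: the rule table `RULES`, its phrases, and its weights are made up, and Viterbi search is omitted for brevity.

```python
import random
from typing import Dict, List, Tuple

# Hypothetical weighted expansion rules for a single nonterminal.
# Phrases and weights are invented for illustration only.
RULES: Dict[str, List[Tuple[str, float]]] = {
    "WIND": [
        ("wind backing south", 0.5),
        ("wind veering west", 0.3),
        ("variable wind", 0.2),
    ],
}


def greedy_choice(symbol: str) -> str:
    """Always pick the single most probable expansion of the symbol."""
    return max(RULES[symbol], key=lambda rule: rule[1])[0]


def roulette_wheel_choice(symbol: str, rng: random.Random) -> str:
    """Sample an expansion with probability proportional to its weight."""
    expansions, weights = zip(*RULES[symbol])
    return rng.choices(expansions, weights=weights, k=1)[0]


if __name__ == "__main__":
    rng = random.Random(0)
    print(greedy_choice("WIND"))
    print([roulette_wheel_choice("WIND", rng) for _ in range(5)])
```

Greedy selection is deterministic, whereas repeated roulette-wheel draws can return different expansions, which is why the latter is associated with more diverse output.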

Subsequent and concurrent work on statistical NLG has aimed at implementing generation models that do not require a grammar as a backbone and can be learned in an end-to-end fashion and trained directly on input-output pairs. Angeli et al. [23] present a simple, domain-independent method for training a generator on different data-to-text generation corpora that align sentences to database records. Their system decomposes the generation process into a sequence of local content selection and realization decisions, which are handled by discriminative classifiers. Thus, in contrast to recent, neural end-to-end systems (see Section 2.2), their model does not directly predict words from a given input, but implements an intermediate level of processing that models the structure of the output sequence. Angeli et al. [23] discuss the possibility of using different decoding methods, i.e., greedy search, sampling, and beam search, but state that greedy search outperformed beam search in their setting.

In a similar vein, Konstas and Lapata [24] present an approach to concept-to-text generation which they call unsupervised as it does not assume explicit alignments between input representations (database records) and output text. Their framework is based on a basic probabilistic CFG that captures the syntactic relations between database records, fields, and words. Importantly, their system represents the search space as a set of trees encoded in a hyper-graph. A core component of their system is a relatively advanced decoding method, as a naive traversal of the hyper-graph would be infeasible. For decoding, they adopt cube-pruning [25], a variant of beam search for syntax-based machine translation which allows them to interleave search with language model scoring. Mairesse and Young [26] developed the BAGEL system as a fully stochastic approach for generation in a dialog system setting. Their approach does not rely on a hand-coded grammar, but frames the generation task as a search over Factored Language Models. These can be thought of as dynamic Bayesian networks and constitute, according to Reference [27], a principled way of modeling word prediction in a large search space. Thus, in BAGEL, the language generator’s task is to predict, order, and realize a sequence of so-called semantic stacks (similar to slots). A core component of BAGEL is a decoding procedure that divides the search problem into three sequential sub-tasks, i.e., the ordering of mandatory stacks, the prediction of the full sequence of stacks, and the realization of the stacks [26].

Next to the aforementioned approaches for end-to-end data-to-text generation, another important line of work in pre-neural statistical NLG has investigated models for realizing and linearizing a given hierarchical meaning representation or syntactic structure, e.g., as part of the surface realization challenges [28,29]. Here, the most successful systems adopted statistical linearization techniques. For instance, the systems by Bohnet et al. [30] and Bohnet et al. [31] were trained to map trees to output sequences using a series of classification and realization models. These linearization decisions are implemented as decoding procedures via beam search. More recent work on linearization has typically adopted AMR-based meaning representations and used different translation or transduction models to map these to output sentences, using decoding mechanisms from phrase-based MT systems [32,33].

Thus, implementations of decoding algorithms in NLG have often been based on general search algorithms or algorithms developed in the area of MT. In research on MT, different decoding mechanisms have been explored and described in great detail for a range of alignment-based or phrase-based systems [25,34,35]. For instance, the well-known beam search decoder implemented in the Pharaoh system [35] operates on a phrase table that aligns words or phrases in an input sentence with different translation candidates in the output sentence. The decoding problem is to find a high-scoring combination of translation hypotheses and, at the same time, reduce the search space which grows exponentially with the length of the input sentence. Other work on decoding in MT has investigated methods for exact inference or optimal decoding aimed at finding the best possible translation in the huge space of candidates [36,37].

2.2. Neural Autoregressive NLG

The NLG systems described in Section 2.1 explored a variety of computational approaches for modeling language generation with statistical methods while, importantly, assuming some form of underlying structure or linguistic representation of the sequence to be generated. Recent work in the area has focused to a large extent on the neural generation paradigm where the sequence generation task is framed as a conditional language modeling problem. Neural generation architectures are frequently called “encoder-decoder architectures” as they first encode the input x into some hidden, continuous representation and then decode this representation to some linguistic output in a word-by-word manner. It is important to note the difference between the term “decoder” which refers to a part of the neural model that maps the encoded input to word probabilities and the “decoding procedure” which is an algorithm external to the model applied during inference. This survey focuses on the latter type of decoding.

Neural text generation systems generally assume that the output text is a flat sequence of words (or symbols, more generally) drawn from a fixed vocabulary V. The probability of a sequence over this vocabulary can be factorized into conditional word probabilities. More specifically, the probability of a word (to be generated at the position j in the sequence) is conditioned on some input x and the preceding words of the sequence:

$$P(y \mid x) = \prod_{j=1}^{T} P(y_j \mid y_{<j}, x), \qquad y = (y_1, \dots, y_T) \tag{1}$$

Such a generation model assigns a probability to all potential sequences over the given vocabulary V, i.e., it scores every sequence $y \in V^{*}$, which is the main idea underlying traditional and neural language models. The word probabilities are typically conditioned on an input x that is given in training data, e.g., some database record, a meaning representation, or an image. In the case of so-called open text generation, the input can be empty and the formula in Equation (1) is identical to a language modeling process. In addition, in autoregressive sequence generation, each word is conditioned on the words generated at the previous time steps.
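The factorization into conditional word probabilities can be illustrated with a minimal sketch. The function `toy_model` and its probabilities are hypothetical stand-ins for a trained generator; only the chain-rule scoring in `sequence_log_prob` is the point of the example.

```python
import math
from typing import Callable, Dict, List, Sequence

# A model maps (input x, prefix of generated words) to a next-word distribution.
NextWordDist = Callable[[str, Sequence[str]], Dict[str, float]]


def toy_model(x: str, prefix: Sequence[str]) -> Dict[str, float]:
    """Hypothetical hand-written next-word distribution (made-up numbers)."""
    if not prefix:
        return {"the": 0.6, "a": 0.4}
    if prefix[-1] in ("the", "a"):
        return {"cat": 0.5, "dog": 0.3, "</s>": 0.2}
    return {"</s>": 1.0}


def sequence_log_prob(model: NextWordDist, x: str, y: List[str]) -> float:
    """Sum log P(y_j | y_<j, x) over all positions j of the sequence y."""
    total = 0.0
    for j, word in enumerate(y):
        p = model(x, y[:j]).get(word, 0.0)
        if p == 0.0:
            return float("-inf")  # the model assigns no mass to this sequence
        total += math.log(p)
    return total


if __name__ == "__main__":
    # An empty input x corresponds to open text generation.
    print(math.exp(sequence_log_prob(toy_model, "", ["the", "cat", "</s>"])))
```

Working in log space avoids numerical underflow when many small probabilities are multiplied, which is why decoders typically sum log-probabilities rather than multiply probabilities.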

There are several neural network architectures that can be used to implement sequence generation systems as defined in Equation (1). A common example is recurrent neural networks (RNNs) that are able to consume or encode input sequences of arbitrary length and transform them into output sequences of arbitrary length [38,39]. The main idea of RNNs is to learn to represent the hidden states of the sequence, i.e., h, which represents a sort of memory that encodes preceding words in the sequence:

In a simple recurrent architecture, the processing of a sequence is implemented, at least, with the following hidden layers:
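As an illustration, a simple (Elman-style) recurrent decoder is commonly written as shown below. This is a sketch under assumptions: the weight matrices $W_{hh}$, $W_{hx}$, $W_{yh}$, the biases $b_h$, $b_y$, and the embedding function $e(\cdot)$ are notational choices made here and are not necessarily those of [38,39]; the initial state $h_0$ is assumed to be derived from the encoded input x.

```latex
h_j = \tanh\left(W_{hh}\, h_{j-1} + W_{hx}\, e(y_{j-1}) + b_h\right)
\qquad
P(y_j \mid y_{<j}, x) = \mathrm{softmax}\left(W_{yh}\, h_j + b_y\right)
```

Under this formulation, the hidden state $h_j$ summarizes the preceding words, so that each conditional word probability depends on the history only through $h_j$.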

An important limitation of RNNs is that they process both the input and the output in a strict left-to-right fashion, which makes it difficult to pass information between the encoder and the decoder in a flexible way. Therefore, the transformer architecture by Vaswani et al. [40] has now replaced RNNs in many neural generation settings. The central elements of the transformer are self-attention heads. The layers of the transformer are built out of many such attention heads which operate in parallel. The self-attention in the encoder is not directional, as the attention at a particular position can attend to all other positions in the input sequence. The decoder of the transformer is most often implemented in an autoregressive fashion, masking out all positions following the current position. The decoder can attend to all positions up to the current one via self-attention, and includes encoder-decoder attention layers that allow the decoder to attend to all positions in the input sequence. Thus, most, but not all, neural generation systems are autoregressive; see Section 2.3 for a brief summary of non-autoregressive approaches to generation.

While neural autoregressive NLG models generally model the probability of a sequence as a product of conditional word probabilities, there are different ways in which these models can be optimized during training. A standard approach is to train in a supervised manner and maximize the likelihood of a word by minimizing the cross-entropy loss between predicted tokens and tokens given in the training examples. This training regime entails that the training signal is given to the model only on the word level. A popular alternative to word-level supervised training is the use of methods from reinforcement learning (RL), which make it possible to give sequence-level rewards to the model [41,42,43,44,45]. A well-known example is Ranzato et al. [41]’s sequence-level training used to optimize RNNs, a variant of the REINFORCE policy gradient optimization algorithm [46]. In this approach, the prediction of the next word corresponds to an action which updates the state of the RL environment (here, the hidden state of an RNN). Importantly, the model receives the reward at the end of the sequence, such that the reward represents a sequence-level goal or quality criterion. A common reward function is the BLEU metric [47], which is also frequently used for automatic evaluation of generated sequences. It is important to note, however, that training a neural sequence generation model from scratch using only RL-based sequence-level rewards is not deemed feasible in practice. In Ranzato et al. [41] and other RL-based training regimes, the generation model is pre-trained using cross-entropy loss and used as the initial policy, which is then fine-tuned with sequence-level training. Section 5.3 discusses further connections between decoding and RL-based sequence-level training.
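The two training signals contrasted above can be written down compactly. The notation is an assumption made here for illustration: $y^{*}$ denotes the reference sequence of length $T$, $\theta$ the model parameters, and $r(\cdot)$ a sequence-level reward such as BLEU. Word-level training minimizes the cross-entropy loss on the left, whereas a REINFORCE-style method follows the gradient of the expected reward on the right:

```latex
\mathcal{L}_{\mathrm{CE}}(\theta) = -\sum_{j=1}^{T} \log P_\theta\left(y^{*}_j \mid y^{*}_{<j}, x\right)
\qquad
\nabla_\theta J(\theta) = \mathbb{E}_{y \sim P_\theta(\cdot \mid x)}\left[\, r(y)\, \nabla_\theta \log P_\theta(y \mid x) \,\right]
```

The cross-entropy loss decomposes into one term per word, while the reward $r(y)$ is only available once a complete sequence has been sampled.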

Regardless of the choice of architecture (e.g., recurrent or transformer models) and training regime (word-level or sequence-level), existing neural generation models do not provide a built-in mechanism that defines the reconstruction of the sequence from the given word probabilities. This stands in contrast to other statistical generators sketched in Section 2.1 where the sequence generation process is typically restricted by a grammar, template or tree structure. For this reason, the decoding procedure (external to the model) has a central role in the neural generation process as it needs to determine how the output sequence is assembled and retrieved from the exponentially large space of candidate sequences. Given the factorization of the sequence generation problem from Equation (1), the decoding step needs to compose an output utterance in an incremental word-by-word fashion.

2.3. Neural Non-Autoregressive Generation

Shortly after the introduction of the transformer architecture by Vaswani et al. [40], researchers started exploring the idea of parallelizing not only the encoder, but also the decoder of the neural generation architecture, leading to so-called non-autoregressive models. One of the first successful implementations of parallel decoding (here understood as the decoder part of the model) was the WaveNet architecture for text-to-speech synthesis by Oord et al. [48]. Gu et al. [49] proposed a model for non-autoregressive machine translation, with the aim of fully leveraging the performance advantage of the Transformer architecture during decoding and avoiding slow, potentially error-prone decoding mechanisms, such as beam search. The main idea of non-autoregressive modeling is that, at inference time, the model does not have to take into account dependencies between different positions in the output, as in this naive baseline with target length $T$ and length model $P_L$:

$$P(y \mid x) = P_L(T \mid x) \prod_{j=1}^{T} P(y_j \mid x)$$

This simple non-autoregressive model predicts the target length of the sentence from its input and conditions the word probabilities only on the input, not on preceding output words. This, unsurprisingly, has not been found to work in practice as this model exhibits full conditional independence. Generally, attempts at implementing non-autoregressive models have, to date, met with mixed success. Most studies show that non-autoregressive models typically generate output of lower quality than autoregressive models. However, they are much faster and, in some domains such as speech synthesis or machine translation, good quality can be reached by using techniques such as knowledge distillation [49], probability density distillation [48], or iterative refinement [50].
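The effect of full conditional independence can be illustrated with a small sketch of the naive baseline. Everything here is hypothetical: `predict_length` and `position_distributions` stand in for learned components, and the probabilities are invented so that each position slightly prefers a word from a different valid output.

```python
from typing import Dict, List


def predict_length(x: str) -> int:
    """Hypothetical length predictor: one output position per input word."""
    return len(x.split())


def position_distributions(x: str, length: int) -> List[Dict[str, float]]:
    """Hypothetical per-position word distributions that depend only on x.

    The made-up numbers mix two plausible outputs, "thank you" and
    "many thanks", without modeling which words belong together.
    """
    table = [
        {"thank": 0.55, "many": 0.45},
        {"thanks": 0.55, "you": 0.45},
    ]
    return table[:length]


def decode_parallel(x: str) -> List[str]:
    """Pick the most probable word at every position independently."""
    length = predict_length(x)
    return [max(dist, key=dist.get) for dist in position_distributions(x, length)]


if __name__ == "__main__":
    print(decode_parallel("vielen dank"))
```

Because each position is decided without looking at the others, the result can combine words from different candidate outputs into a sequence that neither candidate contains.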

Besides speeding up conventional procedures for decoding in autoregressive generation, some work on non-autoregressive or partially autoregressive models aims at going beyond the assumption that output needs to be produced in a fixed left-to-right generation order. Gu et al. [51] present a transformer that treats generation order as a latent variable in sequence generation. They train their transformer to predict the next word and, based on the next word, the next position in a given partial sequence. Since the learning of a model that optimizes the likelihood marginalized over generation orders is intractable, they approximate the latent generation orders using beam search to explore the space of all permutations of the target sequence. In a similar vein, Stern et al. [52] develop the Insertion Transformer, which is trained to predict insertions of words into a partial sequence. By adopting different loss functions, their model can accommodate different generation orders, including orders that can be parallelized (e.g., balanced binary trees). Both Gu et al. [51]’s and Stern et al. [52]’s experiments show that insertion-based decoding models reach state-of-the-art performance in tasks such as MT, code generation, or image captioning.

Generally, the design of non-autoregressive models typically involves a built-in mechanism that defines the assembly of the sequence, in contrast to the autoregressive generation models discussed in Section 2.2. For instance, the Insertion Transformer by Stern et al. [52] explicitly learns operations that manage the construction of the sequence generation, whereas these operations would be handled by the decoding method in autoregressive generation. Hence, this survey focuses on decoding methods for autoregressive generation.

2.4. Summary

The brief summary of some pre-neural statistical NLG systems in Section 2.1 has shown that decoding mechanisms have always played a certain role in statistical generation systems: except for the early generate-and-rank architectures where classifiers were used to score full sentences, subsequent systems have generally decomposed the generation process into smaller decisions that can be learned from a corpus, e.g., the selection of the next slot from a meaning representation or database record, the prediction of the next word, or the ordering of two words in a tree. This decomposition entails that most statistical generation frameworks deal with a large number of potential output sequences. Handling this search space over generation outputs has been investigated and tackled in some pre-neural systems, especially in early end-to-end systems for data-to-text generation. Here, a couple of elaborate decoding methods have been proposed, e.g., in Konstas and Lapata [24]’s or Mairesse and Young [26]’s work. However, there has been little effort in pre-neural NLG on generalizing these methods across different frameworks, apart from research on decoding in MT which has explicitly studied the effect of different decoding procedures. The recent neural encoder-decoder framework, introduced briefly in Section 2.2 and Section 2.3, has led to NLG models that do not impose any hard constraints or structures controlling how word-level predictions should be combined in a sequence. Handling the search space in neural generation, therefore, becomes a real challenge: exhaustive search is intractable and simple search (e.g., greedy decoding) does not seem to work well in practice.

In short, the main points discussed in Section 2 can be summarized as:

- research on decoding in non-neural frameworks has been based on structured search spaces (e.g., hypergraphs, factored language models),

- autoregressive (left-to-right) neural NLG generally requires a decoding method defining the assembly of the sequence, and

- non-autoregressive generation methods are faster and define operations for assembling the sequences as part of the model, but often perform worse than autoregressive approaches.

3. Decoding as Search for the Optimal Sequence

The most widely used, and debated, decoding algorithm in various sequence-to-sequence frameworks to date is beam search, a basic breadth-first search algorithm. Many of the more advanced or specialized decoding methods discussed below build upon beam search or aim to address its limitations. In the following, we will generally introduce decoding as a search problem (Section 3.1) and discuss a basic example of beam search (Section 3.2). Section 3.3 surveys different variants and parameters of beam search used in the recent NLG and MT literature. Section 3.4 summarizes the recent debate about strengths and weaknesses of beam search, and Section 3.5 concludes with an overview of how beam search is used in practice. Table 1 summarizes these various aspects and papers related to beam search discussed in Section 3.

Table 1. Overview of papers on beam search reviewed in Section 3.

3.1. Decoding as Search

In neural NLG, decoding is most commonly viewed as a search problem, where the task is to find the most likely utterance y for a given input x:

$$\hat{y} = \operatorname*{arg\,max}_{y \in V^{*}} P(y \mid x)$$

A principled approach to solving this equation would be exact search over the entire search space. This is typically infeasible given the large vocabulary that neural generators are trained on and the long sequences they are tasked to generate (i.e., sentences or even entire texts). More formally, Chen et al. [53] prove that finding the best string of polynomial length in an RNN is NP-complete.
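To make the size of this search space concrete, the following sketch counts the candidate sequences that exhaustive search would have to score. The vocabulary size of 50,000 and the maximum length of 20 are illustrative assumptions, not figures from the surveyed papers.

```python
def num_candidate_sequences(vocab_size: int, max_len: int) -> int:
    """Count all sequences of length 1..max_len over the given vocabulary."""
    return sum(vocab_size ** t for t in range(1, max_len + 1))


if __name__ == "__main__":
    # Assumed, illustrative values for vocabulary size and maximum length.
    n = num_candidate_sequences(vocab_size=50_000, max_len=20)
    print(f"{n:.3e}")
```

With these assumed values the count is on the order of $10^{93}$, which is why decoding methods resort to approximate search.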

When viewed from a search perspective, the objective of decoding is to generate an output text that is as close as possible to the optimal output that could be found with exhaustive search. The simplest way to approximate the likelihood objective is to generate the most likely word at each time step, until an end symbol has been generated or the maximal number of time steps has been reached. This greedy search represents a rather naive approach as it optimizes the probability of the sequence in an entirely local way. Consequently, it has been shown to produce repetitive or invariable sentences [54]. A more widely used way of approximating exact search in decoding is beam search. The discovery of this algorithm is attributed to Lowerre [8]. The main ideas, variants, and shortcomings of this algorithm will be discussed in the following.
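Greedy search as described above can be sketched in a few lines. The next-word table in `toy_model` is hypothetical and deliberately constructed so that the locally best first word leads to a sequence that is not the globally most likely one.

```python
from typing import Callable, Dict, List, Sequence

NextWordDist = Callable[[Sequence[str]], Dict[str, float]]


def toy_model(prefix: Sequence[str]) -> Dict[str, float]:
    """Hypothetical next-word distributions (made-up numbers)."""
    table = {
        (): {"the": 0.5, "a": 0.4, "</s>": 0.1},
        ("the",): {"dog": 0.4, "cat": 0.35, "</s>": 0.25},
        ("a",): {"cat": 0.9, "</s>": 0.1},
    }
    return table.get(tuple(prefix), {"</s>": 1.0})


def greedy_search(model: NextWordDist, end_symbol: str = "</s>",
                  max_steps: int = 20) -> List[str]:
    """Append the most probable next word until the end symbol or max_steps."""
    y: List[str] = []
    for _ in range(max_steps):
        dist = model(y)
        word = max(dist, key=dist.get)  # purely local decision
        y.append(word)
        if word == end_symbol:
            break
    return y


if __name__ == "__main__":
    print(greedy_search(toy_model))
```

In this toy setting, greedy search commits to "the" (0.5) and ends with a sequence of probability 0.5 × 0.4 × 1.0 = 0.2, although "a cat" followed by the end symbol has probability 0.4 × 0.9 × 1.0 = 0.36.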

3.2. Beam Search: Basic Example

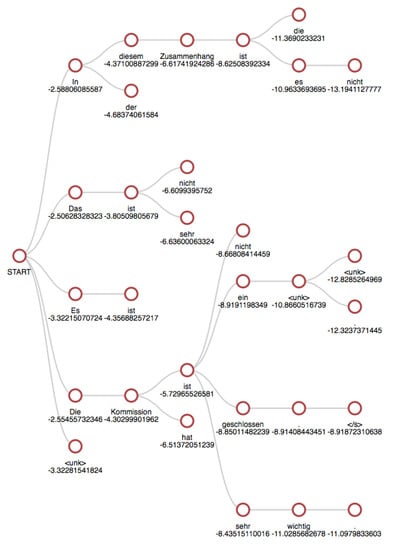

Beam search is a pruned version of breadth-first search that keeps a fixed number of candidates on the beam at each step of the search procedure. It can be implemented as a graph-based algorithm that builds up a tree of output sequences by incrementally adding words to the high-scoring candidates on the beam, as shown in Figure 1. The key parameter in beam search is the beam width k, which determines the number of candidates that will be kept at each time step or level of the tree. Each (partial) output sequence or hypothesis is associated with a score, e.g., the probability assigned to it by the underlying neural language model. All hypotheses that have a score lower than that of the k-th best candidate are pruned. Beam search with k = 1 is identical to greedy search, which generates a single hypothesis with the most probable word given the previous words at each time step. Beam search with an infinite beam amounts to full breadth-first search. For a formal definition of the algorithm, see the pseudocode in Algorithm 1, taken from Graves [39].

Figure 1.

Visualization of beam search, example taken from OpenNMT.

The graph-based visualization in Figure 1 illustrates an example search with k = 5: first, the root of the tree, the start symbol, is expanded with the five most likely words that can follow the start symbol. In the second step, one of these paths ((start)-(unk)) is abandoned, while another path splits into 2 hypotheses ((start)-(in)-(diesem), (start)-(in)-(der)). The hypothesis that is most likely at time step 2 ((start)-(das)-(ist)) is abandoned at time step 4. The reason for this is that one of the candidates from time step 3 ((start)-(die)-(Kommission)-(ist)) leads to 4 high-scoring candidates at time step 4. One of these is the final output candidate ((start)-(die)-(Kommission)-(ist)-(geschlossen)-(.)-(<end>)) as it contains the end symbol and achieves the best score.
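The expand-and-prune loop walked through above can be sketched as follows. This is an illustrative configuration and not a transcription of Algorithm 1: `toy_model` is a hypothetical lookup table, finished hypotheses stay on the beam without being expanded, search stops once every hypothesis on the beam has ended, and no length normalization is applied.

```python
import math
from typing import Dict, List, Sequence, Tuple

Hypothesis = Tuple[float, List[str]]  # (log-probability, words)


def toy_model(prefix: Sequence[str]) -> Dict[str, float]:
    """Hypothetical next-word distribution (illustrative assumption)."""
    table = {
        (): {"a": 0.6, "b": 0.4},
        ("a",): {"x": 0.5, "y": 0.5},
        ("b",): {"z": 0.9, "<end>": 0.1},
    }
    return table.get(tuple(prefix), {"<end>": 1.0})


def beam_search(model, k: int, max_steps: int = 10,
                end: str = "<end>") -> List[Hypothesis]:
    beam: List[Hypothesis] = [(0.0, [])]
    for _ in range(max_steps):
        candidates: List[Hypothesis] = []
        for score, words in beam:
            if words and words[-1] == end:
                candidates.append((score, words))  # finished: keep as is
                continue
            for word, p in model(words).items():
                candidates.append((score + math.log(p), words + [word]))
        # Prune: keep only the k best-scoring candidates.
        beam = sorted(candidates, key=lambda h: h[0], reverse=True)[:k]
        if all(words[-1] == end for _, words in beam):
            break
    return beam


print(beam_search(toy_model, k=1)[0][1])  # same behaviour as greedy search
print(beam_search(toy_model, k=2)[0][1])
```

In this toy setting, a beam of width 1 commits to the locally best first word, whereas a beam of width 2 keeps the second-best first word alive long enough to find a sequence with higher overall probability.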

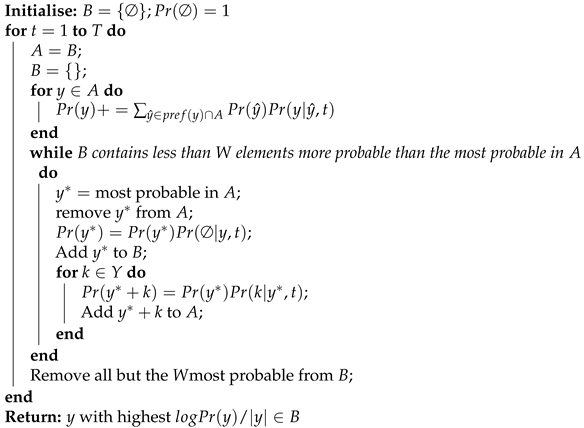

Algorithm 1: Beam search as defined by Graves [39].

3.3. Variants of Beam Search

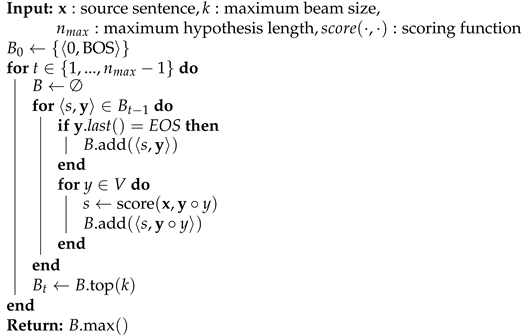

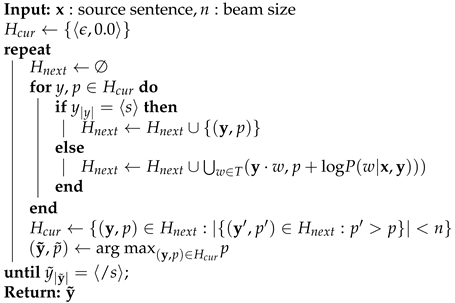

It is important to note that beam search is a heuristic that can be defined and parametrized in different ways, beyond the width parameter k. Nevertheless, beam search is rarely explicitly defined in research papers. Algorithms 1–3 show three definitions of beam search from the recent literature. Interestingly, they are referred to as “standard” beam search in the respective papers but still show slightly different configurations for search: Algorithms 1 and 2 both run for a fixed number of time steps, whereas Algorithm 3 terminates once the top-most candidate on the beam ends with the special end-of-sequence symbol. Algorithms 2 and 3 do not expand hypotheses that end in an end-of-sequence symbol, whereas Algorithm 1 does not account for this case. Algorithm 1 includes a length normalization in the final selection step (last line), Algorithm 2 uses a generic scoring function that may or may not include normalization, and Algorithm 3 does not consider length normalization at all.

Algorithm 2: Beam search as defined by Meister et al. [57].

Algorithm 3: Beam search as defined by Stahlberg and Byrne [56].

Different usages of beam search have been discussed for a long time in the MT community, where it was the standard method in non-neural syntax- and phrase-based models [37]. In these classical phrase-based MT systems, however, candidates were all completed in the same number of steps, whereas sequence-to-sequence models generate hypotheses of different length. Thus, beam search in neural generation requires a good stopping criterion for search or some way of normalizing scores between candidates in order to avoid a bias towards short generation outputs [39,58].

A generic solution for dealing with candidates of different length is shown in the algorithm by Graves [39] in Algorithm 1: the search terminates after a predefined number of time steps and scores for candidates are simply divided by their length. In practice, however, many neural generation systems have adopted more elaborate normalization and stopping criteria. The MT toolkit OpenNMT [55] provides a widely used implementation of beam search and includes three metrics for normalizing the coverage, length and end of sentence of candidate translations. The length penalty $lp$ is defined as follows:

$$lp(Y) = \frac{(5 + |Y|)^{\alpha}}{(5 + 1)^{\alpha}} \tag{5}$$

with $|Y|$ as the current target length, and $\alpha$ as a hyperparameter that needs to be set. The length penalty is used in combination with an end of sentence penalty $ep$:

$$ep(X, Y) = \gamma \frac{|X|}{|Y|} \tag{6}$$

with $\gamma$ as a further hyperparameter, and $|X|$ as the length of the source sentence. As this penalty depends on the length of the source sentence, it is not generally available in neural language generation, i.e., this penalty does not generalize to generation tasks beyond translation. In OpenNMT, the search stops once the top candidate obtained in the current step is completed (i.e., with an end symbol).
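To make the effect of such penalties concrete, the sketch below assumes that the length penalty takes the form lp(Y) = (5 + |Y|)^α / (5 + 1)^α and that the end of sentence penalty is γ·|X|/|Y|. It further assumes that the log-probability of a hypothesis is divided by lp(Y), which is one common way of applying this penalty. The function names and the example scores are hypothetical.

```python
def length_penalty(target_len: int, alpha: float) -> float:
    """Assumed form: lp(Y) = (5 + |Y|)^alpha / (5 + 1)^alpha."""
    return ((5 + target_len) ** alpha) / ((5 + 1) ** alpha)


def eos_penalty(source_len: int, target_len: int, gamma: float) -> float:
    """Assumed form: ep(X, Y) = gamma * |X| / |Y|."""
    return gamma * source_len / target_len


def normalized_score(log_prob: float, target_len: int, alpha: float) -> float:
    """Divide the log-probability by the length penalty (an assumption)."""
    return log_prob / length_penalty(target_len, alpha)


# Two made-up hypotheses: a short one and a longer, slightly less probable one.
short = {"log_prob": -4.0, "length": 3}
long_ = {"log_prob": -5.0, "length": 8}

for alpha in (0.0, 1.0):
    s = normalized_score(short["log_prob"], short["length"], alpha)
    l = normalized_score(long_["log_prob"], long_["length"], alpha)
    print(f"alpha={alpha}: short={s:.3f}, long={l:.3f}")
```

With α = 0 the penalty equals 1 and the shorter hypothesis wins on raw log-probability; with α = 1 the longer hypothesis receives the better normalized score.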

Another common neural MT framework [62] uses a shrinking beam where beam size is reduced each time a completed hypothesis is found, and search terminates when the beam size has reached 0.

Next to these implementations of beam search in standard MT frameworks, others have proposed to extend the beam search scoring function with a length reward, in order to avoid the inherent bias towards short hypotheses. He et al. [60] define a simple “word reward” as

$$s'(e) = s(e) + \gamma m \tag{7}$$

with s as the scoring function, e as the hypothesis, m as the hypothesis length, and $\gamma$ as the scaling factor for the reward. He et al. [60] evaluate this reward along with other features of a neural MT system and show that it is beneficial in their framework. Murray and Chiang [61] present a way of tuning the simple word reward introduced by He et al. [60] and compare it to other length normalization procedures. They find that a simple word reward works well and that a tuned word reward generally performs best in their MT experiment. Huang et al. [58] introduce a related variant of beam search that is guaranteed to finish in an optimal number of steps, given a particular beam size, and combine this with a “bounded length reward” that rewards each word until an estimated optimal output length has been reached. They show that their decoding method outperforms OpenNMT’s implementation of beam search and the shrinking beam in Bahdanau et al. [62].
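The two kinds of length reward can be contrasted in a small sketch. It assumes the additive form s'(e) = s(e) + γ·m for the simple word reward; the bounded variant is only one reading of the description above (the reward stops growing once a predicted length is reached) and is not taken from the implementation of Huang et al. [58]. All names and numbers are hypothetical.

```python
def word_reward(score: float, length: int, gamma: float) -> float:
    """Simple word reward: add gamma for every word in the hypothesis."""
    return score + gamma * length


def bounded_word_reward(score: float, length: int, gamma: float,
                        predicted_length: int) -> float:
    """Reward each word only up to an estimated output length (assumed form)."""
    return score + gamma * min(length, predicted_length)


# Made-up log-probability scores for a short and a long hypothesis.
short_score, short_len = -4.0, 3
long_score, long_len = -5.0, 8
gamma = 0.3

print(word_reward(short_score, short_len, gamma),
      word_reward(long_score, long_len, gamma))
print(bounded_word_reward(short_score, short_len, gamma, predicted_length=4),
      bounded_word_reward(long_score, long_len, gamma, predicted_length=4))
```

In this example the unbounded reward lets the longer hypothesis overtake the shorter one, while the bounded reward stops favouring additional words beyond the predicted length of 4, so the shorter hypothesis stays ahead.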

In sequence-to-sequence generation beyond MT, not a lot of work has been done on defining general stopping and normalization criteria. Zarrieß and Schlangen [70] present a study on decoding in referring expression generation (REG), a relatively constrained NLG sub-task where the length of the generated output is deemed central. They find that a variant of beam search that only keeps hypotheses of the same length, i.e., discards complete hypotheses that are not top candidates in the current time step, provides a better stopping criterion for REG than other criteria that have been explored in the MT literature.

Freitag and Al-Onaizan [63] extend the idea of a dynamic beam width in Bahdanau et al. [62]’s shrinking beam and implement pruning of candidates from the beam that are far away from the best candidate. They investigate different pruning schemes, i.e., relative or absolute probability thresholds for pruning and a pruning scheme that fixes the number of candidates that are expansions of the same hypothesis. They find that pruning speeds up decoding and does not decrease translation quality.
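The three pruning schemes mentioned above can be illustrated with a short sketch. The thresholds are applied to hypothesis probabilities relative to the best candidate; the data structure, the function name, and the exact comparison rules are assumptions made for this example and are not taken from Freitag and Al-Onaizan [63].

```python
from collections import Counter
from typing import List, NamedTuple, Optional


class Candidate(NamedTuple):
    prob: float   # probability of the (partial) hypothesis
    parent: int   # index of the hypothesis that was expanded
    word: str     # word added in this step


def prune(cands: List[Candidate],
          rel_threshold: Optional[float] = None,
          abs_threshold: Optional[float] = None,
          max_per_parent: Optional[int] = None) -> List[Candidate]:
    if not cands:
        return []
    ranked = sorted(cands, key=lambda c: c.prob, reverse=True)
    best = ranked[0].prob
    kept: List[Candidate] = []
    per_parent: Counter = Counter()
    for c in ranked:
        # Relative threshold: drop candidates far below the best, as a ratio.
        if rel_threshold is not None and c.prob < rel_threshold * best:
            continue
        # Absolute threshold: drop candidates far below the best, as a gap.
        if abs_threshold is not None and best - c.prob > abs_threshold:
            continue
        # Cap on the number of expansions of the same hypothesis.
        if max_per_parent is not None and per_parent[c.parent] >= max_per_parent:
            continue
        per_parent[c.parent] += 1
        kept.append(c)
    return kept


cands = [Candidate(0.40, 0, "die"), Candidate(0.30, 0, "das"),
         Candidate(0.20, 0, "der"), Candidate(0.05, 1, "in"),
         Candidate(0.01, 1, "unk")]
print([c.word for c in prune(cands, rel_threshold=0.1)])
print([c.word for c in prune(cands, abs_threshold=0.25)])
print([c.word for c in prune(cands, max_per_parent=2)])
```

The first two schemes shrink the beam dynamically depending on how peaked the scores are, whereas the third one limits how many slots a single parent hypothesis can occupy.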

While the above papers extend beam search with specific parameters, others have aimed at more efficient formalizations of beam search. Rush et al. [37] present a variant of beam search for syntax- and phrase-based MT that is faster and comes with a guaranteed bound on the possible decoding error. Meister et al. [57] develop a generic reformulation of beam search as agenda-based best-first search. Their implementation is faster than standard implementations and is shown to return the top hypothesis the first time it encounters a complete hypothesis.

The different variants and parameters of beam search discussed in this section are summarized in Table 1.

3.4. Beam Search: Curse or Blessing?

The discussion of different beam search versions is directly connected to its limitations, which have been widely noted in the recent literature. One of these limitations has become known as “the beam search curse” and will be discussed below, together with other issues. At the same time, some recent work has argued that beam search should be seen as a blessing as it implicitly compensates for deficiencies of neural generation models. The current section will give a summary of this debate.

In 2017, Koehn and Knowles [65] mentioned beam search as one of the six most important challenges in neural MT. One year later, Yang et al. [64] referred to this challenge as the “beam search curse”: both Yang et al. [64] and Koehn and Knowles [65] showed that increasing the width of the beam does not increase translation quality: the quality of translations as measured by the BLEU score drops with higher values of k. Theoretically, this should not happen as a wider beam takes into account a larger set of candidates and, therefore, should eventually decode the optimal generation output more often than a decoder that searches a smaller space of candidate outputs.

This highly undesired and unexpected negative correlation between quality and beam width has been discussed in relation to the length bias of sequence-to-sequence models [58,61,69]. It has long been noticed that neural sequence transduction models are biased towards shorter sequences [39] and that this bias results from the fact that neural MT and other generation models build probability distributions over candidates of different lengths. Murray and Chiang [61] show that correcting the length bias with a simple word reward helps eliminate the drop in quality for wider beams, though they do not obtain better BLEU scores from wider beams with their method. Interestingly, Stern et al. [52] also note that their non-autoregressive insertion transformer obtains better performance (up to 4 points in BLEU) when using an EOS penalty, i.e., a scalar that is subtracted from the log probability of the end token.

Newman et al. [59] take up the issue of stopping or generating the special EOS symbol in sequence-to-sequence models. They compare two settings: models that are trained on sequences ending in EOS (+EOS) and models trained on sequences without EOS (-EOS). They find that the -EOS models achieve better length generalization on synthetic datasets, i.e., these models are able to generate longer sequences than observed in the training set. They observe that the +EOS models unnecessarily stratify their hidden state representations by linear position in the sequence, which leads to better performance of the -EOS models. Thus, similar to the study by Stahlberg and Byrne [56], Newman et al. [59] do not attribute sub-optimal decisions in stopping to the decoding procedure, but to model design and model failure.

To date, the length bias has mostly been discussed in the MT literature, but a few studies report mixed results on the effect of beam search and beam size. Work on visual storytelling found that a larger beam size deteriorates quality of the generated stories [75]. Vinyals et al. [67] find the opposite for the decoding of their well-known image captioning model and observe a positive effect of a large beam size as opposed to a beam size of 1 (i.e., greedy search). Interestingly, in a later replication of their study, Vinyals et al. [76] carry out further experiments with varying beam width and show that reducing the beam size greatly improves performance compared to the larger width. Karpathy and Fei-Fei [68] find that a larger beam size improves the quality of generated image descriptions but also leads to fewer novel descriptions being generated, whereas a smaller beam size deteriorates quality but repeats fewer captions from the training set. The most comprehensive study of performance degradation caused by larger beam widths is presented by Cohen and Beck [66], who investigated this effect in MT, summarization and captioning. They find a negative effect of width on generation quality in all these tasks and explain it with so-called “discrepancies”, i.e., low-probability tokens that are added too early to the beam and compensated later by high-probability tokens.

Another shortcoming of beam search observed in previous work is that the beam tends to contain many candidates that share the same (most likely) prefix [63,72,73]. The bias towards hypotheses with the same prefix is also nicely illustrated in our beam search example in Figure 1: at time step 3, the beam contains 5 hypotheses that expand 3 preceding hypotheses. At time step 4, however, the diversity of the beam is substantially reduced: 4 of the 5 candidates are expansions of a single, very probable candidate from the preceding time step. This means that a relatively high value for beam size would be needed to ensure that more diverse hypotheses that could potentially lead to more probable output are not excluded too early. This, unfortunately, contradicts other studies that report a rather detrimental effect of a large beam size. A range of works has, therefore, looked at modifying the objective of beam search such that more diverse candidates are considered during decoding. These methods will be discussed in Section 4.

Holtzman et al. [71] observe even more dramatic weaknesses of likelihood-based decoding, which they describe as the phenomenon of neural text degeneration: they argue that the likelihood objective used for decoding open text generation with large language models (such as GPT-2) systematically leads to degenerate text that is “generic, awkward and repetitive”. They find that repeated phrases create a positive feedback loop when decoding from the language model: the probability of generating a phrase such as “I don’t know” increases with every repetition of the phrase. In practice, this feedback loop leads to text that contains sequences of the same, likely sentence, as they qualitatively show in their paper. Therefore, Holtzman et al. [71] argue that generation models should not aim at maximizing the likelihood of the output sequence, but produce text that is not the most probable text. They introduce nucleus sampling, which will be discussed in Section 4.

While the studies discussed up to this point emphasize the limitations of beam search, others suggest that beam search is a blessing rather than a curse, as it implicitly corrects certain built-in biases and defects of neural models. Stahlberg and Byrne [56] compare beam search to exact inference in neural MT. Interestingly, they find that the underlying MT model assigns the global best score to the empty translation in more than half of the cases, which usually goes unnoticed because exact inference is not used, for the practical reasons already discussed. Beam search fails to find these globally optimal translations due to pruning in combination with other parameters, such as length normalization. Stahlberg and Byrne [56] interpret this as evidence for the failure of neural MT models to capture adequacy. In their view, the fact that the BLEU scores drop when decoding with a wider beam should not be blamed on beam search but on deficiencies of the model.

Meister et al. [74] follow up on Stahlberg and Byrne [56] and hypothesize that beam search incorporates a hidden inductive bias that is actually desirable in the context of text generation. They propose a more generalized way of modifying the objective of beam search and formulate regularized decoding, which adds a strategically chosen regularization term to the likelihood objective in Equation (4). They argue that beam search is implicitly biased towards a more general principle from cognitive science: the uniform information density (UID) hypothesis put forward by Levy and Jaeger [77]. This hypothesis states that speakers prefer utterances that distribute information uniformly across the signal. Meister et al. [74] demonstrate the connection between the UID and beam search qualitatively and test a range of regularized decoding objectives that make this explicit. Unfortunately, they do not directly relate their observations to Holtzman et al. [71]’s observations on neural degeneration. While Meister et al. [74] argue in favor of decoding objectives that minimize the surprisal (maximize probability), Holtzman et al. [71] state that “natural language rarely remains in a high probability zone for multiple consecutive time steps, instead veering into lower-probability but more informative tokens”, which seems to contradict the UID hypothesis. Thus, the debate about the merits and limitations of beam search and likelihood as a decoding objective for text generation has not reached a conclusive state in the current literature. Section 6 comes back to this general issue.

3.5. Beam Search in Practice

Table 2 and Table 3 list a range of recent neural NLG systems for different text and data-to-text generation tasks along with their decoding strategy. These tables further corroborate some of the observations and findings summarized in this section: on the one hand, beam search is widely used and seems to be the preferred decoding strategy in most NLG tasks, ranging from translation and summarization to dialog, data-to-text generation and surface realization. On the other hand, the fact that beam search comes with a set of variants and heuristics beyond the beam width is not generally acknowledged and potentially less well known, especially in work that does not deal with MT. Here, many papers do not report on the stopping criterion or normalization procedures, but, even in MT, the exact search parameters are not always mentioned.

Table 2.

Neural text generation systems and their decoding settings.

Table 3.

Neural data-to-text or image-to-text systems and their decoding settings.

The central parameter, beam width k, sometimes differs widely for systems that model the same task, e.g., the dialog generation system by Ghazvininejad et al. [78] uses a width of 200, whereas the system by Shuster et al. [79] uses a width of 2 (and additional trigram blocking). Some sub-areas seem to have developed common decoding conventions, e.g., in MT, where advanced beam search with length and coverage penalty is common, or image captioning, where simple beam search versions with moderate variations of the beam width are predominant. In other areas, the decoding strategies vary widely, e.g., in dialog or open-ended text generation where special tricks, such as trigram blocking, are sometimes used and sometimes not. Moreover, in these areas, beam search is often combined with other decoding strategies, such as sampling, which will be discussed below.

In short, the main points discussed in Section 3 can be summarized as:

- beam search is widely used for decoding in different areas of NLG, but many different variants do exist, and they are not generally distinguished in papers,

- many variants and parameters of beam search have been developed and analyzed exclusively for MT,

- papers on NLG systems often do not report on parameters, such as length normalization or stopping criteria, used in the experiments,

- the different variants of beam search address a number of biases found in decoding neural NLG models, e.g., the length bias, performance degradation with larger beam widths, or repetitiveness of generation output,

- there is an ongoing debate on whether some of these biases are inherent in neural generation models or whether they are weaknesses of beam search, and

- the main research gap: studies on beam search, its variants, and potentially further variants for core NLG tasks beyond MT.

4. Decoding Diverse Sets of Sequences

The previous section described decoding from the perspective of search for the optimal or a highly probable generation output. We have seen, however, that maximizing the likelihood objective during decoding has negative effects on certain linguistic properties of the output: generation outputs tend to be short and lack what is often called “linguistic diversity” [96,116,118]. Research on achieving and analyzing diversity has become an important trend in the recent literature on neural NLG, and it is often investigated in the context of decoding. In the following, we will first discuss various definitions and evaluation methods for assessing diversity of generation output (Section 4.1). We then provide an overview of methods that aim at achieving different types of diversity, which can be broadly categorized into methods that diversify beam search (Section 4.2) and sampling-based methods (Section 4.3). Despite important conceptual and technical differences between these methods, they generally adopt a view on decoding that is directly complementary to the view of decoding as search: rather than deriving a single, highly probable generation output, the goal is to produce varied sets of outputs. Indeed, the discussion in Section 4.4 will show that there is an often observed trade-off between quality (which is optimized by search-based decoding) and diversity. Table 4 shows an overview of the papers reviewed in this section.

Table 4.

Overview of papers on diversity-oriented decoding reviewed in Section 4.

4.1. Definition and Evaluation of Diversity

Diversity or variation has always been a central concern in research on NLG (cf. Reference [2]). It was one of the major challenges for traditional rule-based systems [22,129], and it remains a vexing problem, even in state-of-the-art neural NLG systems [96,130,131,132]. Generally, the need for diverse output in NLG can arise for very different reasons and in very different tasks, e.g., controlling register and style in documents [133], generating entertaining responses in chit-chat dialogs [96], generating responses with certain personality traits [27], or accounting for variation in referring expressions [118,134,135] or image captioning [122,136,137,138,139]. Given the widespread interest in diversity, it is not surprising that the literature contains many different definitions of, and assumptions about, what diversity in NLG is. Moreover, the issue of diversity is closely linked to evaluation of NLG systems, which is generally considered one of the big challenges in the field [140,141,142,143,144]. Importantly, different notions of diversity adopted in work on NLG are not to be confused with “diversity” investigated in linguistics, where the term often refers to typological diversity across different languages as, e.g., in Nichols [145].

One common thread in the generation literature on diversity is to go beyond evaluating systems only in terms of the quality of the top, single-best generation output. Instead, evaluation should also take into account the quality and the diversity of the n-best list, i.e., a set of generation candidates for a single input. This amounts to the notion of local diversity (in contrast to global diversity discussed below), meaning that a generation system should be able to produce different words and sentences for the same input. Another common thread is that generation outputs should be diverse when looking globally at the outputs produced by the system for a dataset or set of inputs. Thus, global diversity means that the generation system should produce different outputs for different inputs.

An early investigation into local diversity is carried out by Gimpel et al. [146], who argue that MT systems should aim at producing a diverse set of candidates on the n-best list, in order to help users inspect and interact with the system in the case of imperfect translations. They conduct a post-editing study where human participants are asked to correct the output of an MT system and find that editors benefit from diverse n-best lists when the quality of the top translation is low (they do not benefit, however, when the top translation is of high quality). Similar definitions of local diversity have been taken up in neural generation, as for instance, in Vijayakumar et al. [147] and Li et al. [54] (see Section 4.2 for further discussion).

Local diversity can be assessed straightforwardly by means of automatic evaluation metrics. Ippolito et al. [116] present a systematic comparison of different decoding methods in open-ended dialog generation and image captioning and assess them in terms of local diversity. They use perplexity over the top 10 generation candidates for an input and the Dist-k measure by Li et al. [54], which is the total number of distinct k-grams divided by the total number of tokens produced in all the candidates for an input. Additionally, they include the Ent-k measure introduced by Zhang et al. [148] that takes into account the entropy of the distribution of n-grams in the top candidates.
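The two n-gram-based measures can be sketched as follows. Dist-k follows the description above (distinct k-grams divided by the total number of tokens in all candidates); for Ent-k, the sketch assumes the plain entropy of the relative k-gram frequencies, which may differ in detail from the definition in Zhang et al. [148]. Function names and the example candidates are made up.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple


def ngrams(tokens: Sequence[str], k: int) -> List[Tuple[str, ...]]:
    return [tuple(tokens[i:i + k]) for i in range(len(tokens) - k + 1)]


def dist_k(candidates: List[List[str]], k: int) -> float:
    """Distinct k-grams divided by the total number of generated tokens."""
    distinct = {g for cand in candidates for g in ngrams(cand, k)}
    total_tokens = sum(len(cand) for cand in candidates)
    return len(distinct) / total_tokens if total_tokens else 0.0


def ent_k(candidates: List[List[str]], k: int) -> float:
    """Entropy of the k-gram frequency distribution (assumed definition)."""
    counts = Counter(g for cand in candidates for g in ngrams(cand, k))
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return -sum((n / total) * math.log(n / total) for n in counts.values())


# Two candidate responses for the same (hypothetical) input.
cands = [["i", "do", "not", "know"], ["i", "do", "not", "care"]]
print(dist_k(cands, 1), dist_k(cands, 2), round(ent_k(cands, 1), 4))
```

Both measures are computed over the candidate set of a single input, so they would typically be averaged over all inputs of a test set.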

A complementary view on diversity is proposed by van Miltenburg et al. [132], who analyze the global diversity of image captioning systems which they define as the ability of the generation system to use many different words from the vocabulary it is trained on. The main challenge here is that this vocabulary will usually have a Zipfian distribution. A system that generates globally diverse output will, therefore, need to have the ability to generate rare words from the long tail of the distribution. van Miltenburg et al. [132] test a range of metrics for quantitatively measuring global diversity: average sentence length, number of types in the output vocabulary, type-token ratio, and the percentage of novel descriptions. Their general finding is that most image captioning systems from the year 2018 or earlier achieved a low global diversity.
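The global diversity metrics listed above are simple corpus statistics. The sketch below computes them for a list of generated descriptions; whitespace tokenization, exact string matching for novelty, and all names are simplifying assumptions made for this example.

```python
from typing import Dict, List


def global_diversity(outputs: List[str], training_set: List[str]) -> Dict[str, float]:
    """Corpus-level statistics over all outputs of a system."""
    tokens = [tok for sent in outputs for tok in sent.split()]
    types = set(tokens)
    seen = set(training_set)
    novel = sum(1 for sent in outputs if sent not in seen)
    return {
        "avg_sentence_length": len(tokens) / len(outputs),
        "num_types": len(types),
        "type_token_ratio": len(types) / len(tokens),
        "percent_novel": 100.0 * novel / len(outputs),
    }


# Made-up system outputs for three different images.
outputs = ["a man rides a horse", "a man rides a bike", "a dog runs"]
training = ["a dog runs", "a cat sleeps"]
stats = global_diversity(outputs, training)
print(stats)
```

A system that only reuses frequent words will show a small number of types and a low type-token ratio, regardless of how fluent the individual descriptions are.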

The distinction between local and global diversity is not always clear-cut or, at least, not always made explicit in the reported evaluation. Another way to measure diversity that seems to have been proposed independently in different papers is a variant of BLEU, which is typically used to score the overlap between human references and generated sentences. In the context of diversity, BLEU can also be used to score the overlap between a set of model outputs, either for a single input or an entire test set [130,149,150,151], where a lower self-BLEU or mBLEU would signal higher diversity.

Generally, diversity is often discussed in open-ended or creative text generation tasks (see discussion in Section 4.4). Here, diversity is sometimes defined more loosely. For instance, Zhang et al. [148] aim at building a system that generates informative and diverse responses in chit-chat dialog, where the goal is to avoid “safe and bland” responses that “average out” the sentences observed in the training set. A related view can be found in the study by Hashimoto et al. [152]. They view diversity as related to the model’s ability to generalize beyond the training set, i.e., generate novel sentences. They argue that human evaluation, which is often seen as a gold standard evaluation, is not a good way of capturing diversity as humans are not able to assess what the model has been exposed to during training and whether it simply repeats sentences from the training data. Hashimoto et al. [152] propose HUSE, a score that combines automatic and human evaluation and can be decomposed into HUSE-D for diversity and HUSE-Q for quality.

4.2. Diversifying Beam Search

As discussed in Section 3.4, a common problem with beam search is that the number of candidates explored by beam search is small, and these candidates are often similar to each other, i.e., are expansions of the same candidate from the previous step of beam search. Hence, beam search is generally not a good choice when local diversity is a target for decoding. In the literature, a whole range of heuristics and modifications of what is often called “standard” beam search have been proposed that all share the idea of diversifying beam search. Typically, these diverse beam search versions incorporate an additional method that scores similarities of candidates or groups beam histories to make sure that future steps of beam search expand different, diverse histories.

A simple but well-known method for diverse beam search has been proposed by Li et al. [54]. They aim at generating diverse n-best lists using beam search. They introduce a penalty that downranks candidates which have a sibling on the beam with a higher score, where a sibling is a candidate that is obtained by expanding the same hypothesis from the previous step of the search:

$$\hat{S}(Y_{t-1}^{k}, y_{t}^{k,k'} \mid x) = S(Y_{t-1}^{k}, y_{t}^{k,k'} \mid x) - \gamma k' \tag{8}$$

In Equation (8), $y_{t}^{k,k'}$ is a word that is added to a hypothesis $Y_{t-1}^{k}$, $k'$ denotes the ranking of $y_{t}^{k,k'}$ among other candidates that expand the same hypothesis, and $\gamma$ scales the penalty. The goal of this penalty is to exclude bottom-ranked candidates among siblings and to include hypotheses that might have a slightly lower probability but increase the diversity of the candidates on the beam.
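A single expansion step with such a sibling penalty can be sketched as follows. The sketch assumes that the penalty is subtracted from the log-probability score as the product of a weight `gamma` and the rank of the word among its siblings (starting at 1); the lookup table `NEXT_WORD` and all names are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Hypothesis = Tuple[float, List[str]]  # (score, words)

# Hypothetical next-word probabilities for the two hypotheses on the beam.
NEXT_WORD: Dict[Tuple[str, ...], Dict[str, float]] = {
    ("a",): {"x": 0.5, "y": 0.4, "w": 0.1},
    ("b",): {"z": 0.5, "v": 0.5},
}


def expand_with_sibling_penalty(beam: List[Hypothesis], k: int,
                                gamma: float) -> List[Hypothesis]:
    candidates: List[Hypothesis] = []
    for score, words in beam:
        # Rank the expansions of this hypothesis among their siblings.
        ranked = sorted(NEXT_WORD[tuple(words)].items(),
                        key=lambda kv: kv[1], reverse=True)
        for rank, (word, p) in enumerate(ranked, start=1):
            penalized = score + math.log(p) - gamma * rank
            candidates.append((penalized, words + [word]))
    return sorted(candidates, key=lambda c: c[0], reverse=True)[:k]


beam = [(math.log(0.6), ["a"]), (math.log(0.4), ["b"])]
print([w for _, w in expand_with_sibling_penalty(beam, k=2, gamma=0.0)])
print([w for _, w in expand_with_sibling_penalty(beam, k=2, gamma=0.5)])
```

Without the penalty, both slots on the beam are taken by expansions of the same hypothesis; with the penalty, the second-ranked sibling is pushed below the top expansion of the other hypothesis.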

A similar heuristic is proposed for MT by Freitag and Al-Onaizan [63]: they set a threshold for the maximum number of sibling candidates that can enter the beam. This approach is independently proposed and dubbed top-g capping in Ippolito et al. [116] (where g is the threshold for candidates that can enter the beam and have the same history). A slightly more involved method to encourage diverse candidates during beam search is proposed in Vijayakumar et al. [147] for image captioning: they partition the candidates on the beam into groups. When expanding a candidate in a certain group, the scores (i.e., log probabilities) of each word are augmented with a dissimilarity term. The dissimilarity measure that is found to perform best empirically is Hamming diversity, which penalizes the selection of a token proportionally to the number of times it was selected in previous groups.
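The Hamming diversity term can be illustrated for a single time step. In this sketch, each token's log probability is reduced by a weight times the number of previous groups that already selected this token at the current step; the weight name `strength`, the function name, and the numbers are assumptions and not taken from Vijayakumar et al. [147].

```python
from collections import Counter
from typing import Dict, List


def hamming_augmented_scores(log_probs: Dict[str, float],
                             chosen_by_previous_groups: List[str],
                             strength: float) -> Dict[str, float]:
    """Penalize tokens in proportion to their use in earlier groups."""
    counts = Counter(chosen_by_previous_groups)
    return {tok: lp - strength * counts[tok] for tok, lp in log_probs.items()}


# Made-up log probabilities for the next token of a caption.
log_probs = {"a": -0.5, "the": -0.7, "one": -2.0}
# Two earlier groups both selected "a" at this time step.
scores = hamming_augmented_scores(log_probs, ["a", "a"], strength=0.5)
print(max(log_probs, key=log_probs.get), max(scores, key=scores.get))
```

The first group is decoded without any penalty, and each later group sees the choices of all groups before it, which pushes the groups towards different continuations.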

Kulikov et al. [73] implement iterative beam search for neural conversation modeling: they run beam search multiple times (with a fixed beam width k) and, for each iteration, score each hypothesis for its dissimilarity to hypotheses found in previous iterations. Tam [155] goes one step further and introduces clustered beam search. Here, similarity between candidates is determined by K-means clustering of hypothesis embeddings. These clusters are then used as groups, as in References [54,63], i.e., only the top candidates from each cluster are selected for the next step of the search. This method is designed for generation in chatbots, where standard neural generators often produce very short and generic responses. To exclude these, Tam [155] introduces a further language model threshold during decoding, filtering responses which have a language model score above a certain threshold. A similar idea seems to have been introduced independently for sentence simplification by Kriz et al. [154], but they cluster candidates post decoding and select the candidates nearest to the cluster centroids. Ippolito et al. [116] also experiment with post-decoding clustering (PDC) but select candidates with the highest language model score from each cluster.

Work on generating longer texts, such as image paragraphs, faces the problem that the output texts tend to contain repetitions [120]. Melas-Kyriazi et al. [122] present a model that uses self-critical sequence training to generate more diverse image paragraphs, but they need to combine this with a simple repetition penalty during decoding. Hotate et al. [156] implement diverse local beam search for grammatical error correction.

4.3. Sampling

An alternative way of increasing the diversity of language generation output is to frame decoding not as a search but as a sampling problem. When decoding by sampling, the generator randomly selects, at each time step, a candidate or set of candidates from the distribution predicted by the underlying NLG model. While sampling typically produces diverse text, the obvious caveat is that, eventually, very low probability outputs are selected that might substantially decrease the overall quality and coherence of the text. Thus, while beam search naturally trades off diversity in favor of quality, the opposite is true for sampling.

Therefore, existing sampling procedures for neural generators do not apply pure sampling but use additional heuristics to shape or truncate the model distributions. A traditional method is temperature sampling [157], which shapes the probability distribution with a temperature $t$ that can be seen as a parameter of the softmax calculation [71]:

$$P(x = V_l \mid x_{1:i-1}) = \frac{\exp(u_l / t)}{\sum_{l'} \exp(u_{l'} / t)}$$

where $u_{1:|V|}$ are the logits of the language model for the elements of the vocabulary $V$. Temperature sampling is often used with low temperatures, i.e., $t < 1$, as this skews the distribution to the high probability events. A detailed evaluation of the effect of temperature on quality and diversity is reported by Caccia et al. [103]: they find that neural language models trained with a standard MLE objective outperform GANs in terms of the quality-diversity trade-off, and that temperature can be used to systematically balance this trade-off.
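As a minimal sketch of this idea, the logits can be divided by the temperature before the softmax is applied. The function names, the list-based representation of the logits, and the `rng` parameter are assumptions made for illustration and are not taken from any of the cited systems.

```python
import math
import random


def softmax_with_temperature(logits, t=1.0):
    """Turn logits into probabilities after dividing each logit by t."""
    if t <= 0:
        raise ValueError("temperature must be positive")
    scaled = [u / t for u in logits]
    m = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    z = sum(exps)
    return [e / z for e in exps]


def sample_with_temperature(logits, t=1.0, rng=random):
    """Draw one vocabulary index from the temperature-shaped distribution."""
    probs = softmax_with_temperature(logits, t)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]
```

With t < 1 the most probable item receives more mass than under the plain softmax, and with t > 1 the distribution becomes flatter.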

Furthermore, nucleus [71] and top-k sampling [99] are well-known decoding methods aimed at increasing diversity. Both strategies are very similar in that they sample from truncated language model distributions: In each decoding step, a set of most probable next tokens is determined, from which one item is then randomly selected. They differ, however, in how the distribution is truncated. Top-k sampling always samples from a fixed number of k items. The sum of the probabilities of the top k items, $p' = \sum_{x \in V^{(k)}} P(x \mid x_{1:i-1})$, where $V^{(k)}$ denotes the set of the k most probable items, is then used as a rescaling factor to calculate the probability of a word in the top-k distribution:

$$P'(x \mid x_{1:i-1}) = \begin{cases} P(x \mid x_{1:i-1}) / p' & \text{if } x \in V^{(k)} \\ 0 & \text{otherwise} \end{cases}$$

Depending on the shape of the distribution at a given time step, $p'$ can vary widely, as noticed by Holtzman et al. [71]. Thus, if the distribution is very peaked, $p'$ might be close to 1; if it is flat, $p'$ might be a small value. For this reason, it might be difficult to select a value for k that performs consistently for different distribution shapes throughout a generation process.
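The truncation and rescaling in top-k sampling can be sketched as follows. The helper names and the representation of the distribution as a plain list of probabilities are assumptions for illustration only. The function also returns the retained mass, so that its dependence on the shape of the distribution can be inspected.

```python
import random


def top_k_distribution(probs, k):
    """Keep the k most probable items and rescale by their total mass."""
    if not 1 <= k <= len(probs):
        raise ValueError("k must be between 1 and the vocabulary size")
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    top = order[:k]
    mass = sum(probs[i] for i in top)  # rescaling factor of the top-k items
    return {i: probs[i] / mass for i in top}, mass


def top_k_sample(probs, k, rng=random):
    """Draw one vocabulary index from the truncated, rescaled distribution."""
    dist, _ = top_k_distribution(probs, k)
    items = list(dist)
    return rng.choices(items, weights=[dist[i] for i in items], k=1)[0]
```

For k = 2, a peaked distribution such as [0.9, 0.05, 0.03, 0.02] retains a mass of 0.95, whereas a uniform distribution over four items retains only 0.5.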

This shortcoming of top-k sampling is addressed by the nucleus sampling method of Holtzman et al. [71]: here, the decoder samples from the top-p portion of the cumulative probability mass, i.e., from the smallest set of most probable tokens whose cumulative probability reaches p. The parameter p thus determines the vocabulary size of the candidate pool.

As the probability distribution changes, the candidate pool expands or shrinks dynamically. This way, nucleus sampling can effectively leverage the high probability mass and suppress the unreliable tail.
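A minimal sketch of nucleus truncation is given below, assuming that the candidate pool is the smallest set of most probable items whose cumulative probability reaches p. The function names and the list-based distribution are illustrative assumptions.

```python
import random


def nucleus(probs, p):
    """Return the rescaled distribution over the smallest top-p candidate pool."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must lie in (0, 1]")
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    chosen, mass = [], 0.0
    for i in order:
        chosen.append(i)
        mass += probs[i]
        if mass >= p:  # stop as soon as the pool covers the requested mass
            break
    return {i: probs[i] / mass for i in chosen}


def nucleus_sample(probs, p, rng=random):
    """Draw one vocabulary index from the nucleus of the distribution."""
    dist = nucleus(probs, p)
    items = list(dist)
    return rng.choices(items, weights=[dist[i] for i in items], k=1)[0]
```

With p = 0.8, the pool contains a single item for the peaked distribution [0.9, 0.05, 0.03, 0.02] but all four items for a uniform distribution, which illustrates how the pool size adapts to the shape of the distribution.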

In practice, top-k and nucleus sampling are often found in combination with temperature sampling. Moreover, sampling can be integrated with beam search and replace the typical likelihood scoring. Caccia et al. [103] call this procedure stochastic beam search: the width of the beam defines the number of words that are sampled for each hypothesis at each time step. Massarelli et al. [158] propose a similar method, called delayed beam search, where the first L tokens of a sentence are generated via sampling, and the rest of the sentence is continued via beam search.

4.4. Analyses of Trade-Offs in Diversity-Oriented Decoding

Table 2 and Table 3 include neural NLG systems that implement decoding strategies targeted at diversity. Generally, the sample of systems shown in these tables suggests that diversity-oriented decoding is used in practice, but is most widespread in “open” generation tasks, such as story generation or dialog, and in tasks where output longer than a single sentence needs to be generated. In these NLG domains, even the first papers that implemented neural systems mentioned the need to integrate sampling or diversification to prevent the output from being unnaturally repetitive [92,99]. In the case of story generation or paragraph generation, sampling is further combined with additional constraints aimed at avoiding repetitions in long texts, such as trigram blocking in Melas-Kyriazi et al. [122].
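The text above does not spell out how trigram blocking is implemented in Reference [122]. The following sketch therefore assumes a common hard variant in which any token that would complete an already generated n-gram is excluded from the candidates; the function name and arguments are hypothetical.

```python
def blocked_tokens(prefix, vocabulary, n=3):
    """Return the tokens that would repeat an n-gram already present in prefix."""
    if n < 2:
        raise ValueError("n must be at least 2")
    if len(prefix) < n - 1:
        return set()
    # All n-grams generated so far.
    seen = {tuple(prefix[i:i + n]) for i in range(len(prefix) - n + 1)}
    # The last n-1 tokens form the context of the next n-gram.
    context = tuple(prefix[-(n - 1):])
    return {tok for tok in vocabulary if context + (tok,) in seen}
```

During decoding, the probability of the returned tokens would be set to zero (or their scores penalized) before the next token is selected.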

Among the many papers that describe decoding in MT systems in Table 2, there is not a single paper that uses diversity-oriented decoding, and the same holds for data-to-text generation. In summarization, the system of Radford et al. [11] uses top-k sampling, but their work does not primarily aim at improving the state of the art in summarization. In image captioning and referring expression generation, two studies explicitly aim at understanding the impact of diversity-oriented decoding in these tasks [116,118], whereas other systems do not seem to generally adopt such methods. For AMR-to-text generation, Mager et al. [128] compare search-based decoding and sampling and find that the latter clearly decreases the performance of the system.

One of the most exhaustive studies on diverse decoding is presented by Ippolito et al. [116]: they compare 10 different decoding methods, both search- and sampling-based, for the tasks of image captioning and dialog response generation. They propose a detailed evaluation using automatic measures for computing local diversity (in terms of entropy and distinct n-grams, see Section 4.1) and correlating them with human judgements of adequacy, fluency and interestingness. They observe that there generally seems to be a trade-off between quality and diversity, i.e., decoding methods that increase diversity typically do so at the expense of quality, and vice versa. Using a sum-of-ranks score over different evaluation metrics, they establish that clustered beam search and standard beam search with a relatively large beam width perform best for dialog generation. In image captioning, the sum-of-ranks score favors random sampling with top-k sampling and PDC. The same trade-off is observed and analyzed by Panagiaris et al. [118] for referring expression generation.
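As an illustration of the distinct n-gram measure of local diversity, the following sketch computes the ratio of unique to total n-grams over a set of tokenized outputs generated for one input. This is a commonly used formulation and an assumption here; the exact definition used in Reference [116] may differ in detail.

```python
def distinct_n(outputs, n):
    """Ratio of unique n-grams to all n-grams in a list of token lists."""
    ngrams = [
        tuple(tokens[i:i + n])
        for tokens in outputs
        for i in range(len(tokens) - n + 1)
    ]
    if not ngrams:
        return 0.0
    return len(set(ngrams)) / len(ngrams)
```

A value close to 1 indicates that the candidates share few n-grams, whereas a low value indicates that the candidates largely repeat each other.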

Another trade-off of sampling-based diversity-oriented decoding is discussed by Massarelli et al. [158]: they investigate open text generation, where a large language model is tasked to continue a given textual prompt. They evaluate the verifiability of these freely generated texts against Wikipedia, with the help of an automatic fact-checking system. They show that sampling-based decoding decreases the repetitiveness of texts at the expense of verifiability, whereas beam search leads to more repetitive text that does, however, contain more facts that can be supported in automatic fact checking.

Finally, we observe that most of the decoding methods discussed in this section are designed to increase the local diversity of generation output. van Miltenburg et al. [132] present a study that evaluates the global diversity of image captioning systems using available generated image descriptions. They do not take into account possible effects of the systems’ decoding methods. Schüz et al. [159] compare the global diversity of beam and greedy search, nucleus decoding, and further task-specific, pragmatically-motivated decoding methods in the more specific setting of discriminative image captioning; see Section 5.3.

4.5. Summary

This section has shown that diversity is an important issue that arises in many tasks concerned with the generation of longer or creative text, and that has been tackled in a range of recent papers. At the same time, existing methods that push the diversity of the generation output of neural systems in one way or another, i.e., by diversifying search or by sampling, seem to generally suffer from a quality-diversity trade-off. We will resume the discussion of this observation in Section 6.

In short, the main points discussed in Section 4 can be summarized as:

- different notions of diversity have been investigated in connection with decoding methods in neural NLG,

- diversity-oriented decoding methods are either based on beam search or sampling, or a combination thereof,

- analyses of diversity-oriented decoding methods show trade-offs between diversity, on the one hand, and quality or verifiability, on the other hand,

- diversity-oriented decoding is most often used in open generation tasks, such as story generation, and

- the main research gaps are studies that investigate and consolidate different notions of diversity, and methods that achieve a better trade-off between quality and diversity.

5. Decoding with Linguistic Constraints and Conversational Goals

In the previous sections, we discussed rather domain-general decoding procedures that apply, at least theoretically, to most neural NLG systems and NLG tasks. This follows a general trend in research on neural NLG where, in recent years, systems have become more and more domain-independent and developers often refrain from building domain-specific knowledge into the architecture. In many practically-oriented or theoretically-motivated NLG systems, however, external knowledge about the task at hand, particular hard constraints on the system output, or simply linguistic knowledge on the involved phenomena are given at training and/or testing time. In neural NLG systems, it has become difficult to leverage such external constraints and to control them in terms of their linguistic behavior [161,162], as most of the processing is carried out on continuous representations in latent space. Thus, since decoding operates in the symbolic search space, it constitutes a natural place in the neural architecture to incorporate domain- or task-specific knowledge or reasoning and control mechanisms that target particular linguistic aspects of the generation output. This section discusses such approaches to decoding, which can be divided into methods that introduce lexical constraints (Section 5.1), constraints on the level of structure and form (Section 5.2), or pragmatic reasoning (Section 5.3). An overview of the different methods is shown in Table 5.

Table 5.

Overview of papers on decoding with linguistic constraints (Section 5).

5.1. Lexical Constraints

The need to incorporate lexical constraints in an NLG architecture can arise in different tasks and for different reasons. In some cases, they might be integrated as simple filters or criteria in standard beam search decoding. For instance, Kiddon et al. [104] present a neural checklist model for long text generation, where a list of agenda items is given in the input. They decode the model using beam search and select the most probable candidate which mentions most items from a given agenda. A similar “trick” is used in the data-to-text generation system by Puzikov and Gurevych [106], where they make sure that the candidate that mentions most attributes from the input representation is selected from the beam.
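Such a filter on the final beam can be sketched as a simple reranking step. The sketch assumes that each candidate is a pair of a token list and a log-probability and that agenda items are single tokens; both are simplifications for illustration and do not reproduce the cited systems.

```python
def select_by_coverage(beam, agenda):
    """Pick the candidate that mentions most agenda items.

    Ties in coverage are broken in favor of the higher log-probability.
    """
    def coverage(tokens):
        present = set(tokens)
        return sum(1 for item in agenda if item in present)

    return max(beam, key=lambda cand: (coverage(cand[0]), cand[1]))
```

Because the selection only considers candidates that survived the search, a candidate covering all agenda items can only be returned if it is on the final beam.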

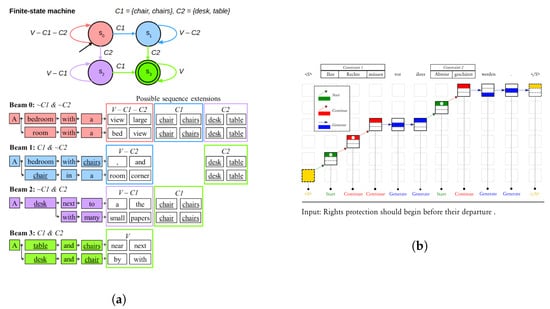

It is, however, not a convincing solution to generally incorporate lexical constraints at the end of search, as a very large beam width could be required to produce the desired candidates. This is the case when, for instance, lexical constraints are complex and span several words or when the corresponding words are assigned low probabilities by the underlying model. Anderson et al. [161] make such a case for image captioning and use lexical constraints during decoding to extend the model’s coverage to a wider set of object classes. They argue that a severe limitation of image captioning systems is that they are difficult to extend and adapt to novel types of concepts and scenes that are not covered in the training data, whereas simple image taggers are easier to scale to new concepts. They develop constrained beam search, illustrated in Figure 2a, which guides the search to include members from given sets of words (external image labels, in their case) during decoding. The main idea of the algorithm is that the set of constraints is represented as a finite-state machine, where each state maintains its own beam of generation candidates. Interestingly, they observe that their constraint-based approach outperforms a competing system that uses similar knowledge to extend the training data of the system.

Figure 2.

Versions of the beam search that incorporate lexical constraints. (a) Constrained beam search [161]. (b) Grid beam search [163].

A similar use case for MT is addressed in Hokamp and Liu [163], where lexical constraints are provided by users who post-edit the output of a translation system. The grid beam search of Hokamp and Liu [163] is illustrated in Figure 2b and, in comparison to the constrained beam search in Anderson et al. [161], also targets phrasal lexical constraints that span multiple words and multi-token constraints where spans might be disconnected. This beam search variant distinguishes three operations for expanding a candidate on the beam: open a new hypothesis (add a word from the model’s distribution), start a new constraint, and continue a constraint. The algorithm allocates separate beams, each of which groups the hypotheses that meet i constraints from the set. At the end, the algorithm returns the highest scoring candidate from the sub-beam with hypotheses that meet all constraints. Their experiments show that grid beam search is useful for interactive post-editing and for modeling terminology in domain adaptation.

Post and Vilar [164] note that both constrained beam search and grid beam search have a high complexity that grows linearly (for grid beam search) or exponentially (for constrained beam search) with the number of constraints. They present a faster variant of grid beam search that has a global, fixed beam width and dynamically re-allocates portions of the beam to the groups of candidates that meet different numbers of constraints and are available at a given time step. Their algorithm prevents the model from generating the EOS symbol unless all constraints have been met in a given candidate. In their analysis, Post and Vilar [164] take up the discussion in the MT literature on the observation by Koehn and Knowles [65] that larger beam sizes result in lower BLEU scores (see Section 3.4). Post and Vilar [164] observe an effect they call “aversion to references”. They show that, by increasing the beam width and including partial references (i.e., constraints) during decoding, the model scores decrease, but the BLEU scores increase, which is complementary to the model scores increasing and BLEU scores decreasing in the experiment of Koehn and Knowles [65].
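Two ingredients shared by these constrained variants, grouping hypotheses by the number of constraints they meet and blocking EOS while constraints are unmet, can be sketched as follows. The sketch does not reproduce the beam allocation scheme of Reference [164]. The representation of constraints as token tuples, of hypotheses as (tokens, score) pairs, and the EOS symbol `</s>` are assumptions for illustration.

```python
import math


def count_met(tokens, constraints):
    """Number of constraints (token tuples) occurring contiguously in tokens."""
    def occurs(phrase):
        m = len(phrase)
        return any(
            tuple(tokens[i:i + m]) == tuple(phrase)
            for i in range(len(tokens) - m + 1)
        )

    return sum(1 for c in constraints if occurs(c))


def group_by_constraints_met(hypotheses, constraints):
    """Group (tokens, score) hypotheses by how many constraints they meet."""
    groups = {i: [] for i in range(len(constraints) + 1)}
    for tokens, score in hypotheses:
        groups[count_met(tokens, constraints)].append((tokens, score))
    return groups


def mask_eos(log_probs, tokens, constraints, eos="</s>"):
    """Copy log_probs and disallow EOS while some constraint is unmet."""
    masked = dict(log_probs)
    if count_met(tokens, constraints) < len(constraints):
        masked[eos] = -math.inf
    return masked
```

In a full decoder, the masked scores would be used when expanding each hypothesis, and the groups would determine how many slots of the beam each set of hypotheses receives.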

While the above approaches incorporate lexical constraints in a symbolic way, Baheti et al. [97] propose a distributional approach to extend the decoding procedure for a generator of conversational responses in open chit-chat dialog. They use an external topic model and an external embedding to extend the objective of standard beam search with two additional terms, scoring the topical and the semantic similarity of the source and response sentence. Furthermore, they combine this objective with the diverse-decoding method by Li et al. [54] and find that this combination produces rich-in-content responses, according to human evaluation.
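An objective of this kind can be sketched as the model log-probability plus two weighted similarity terms. The use of cosine similarity, the weights `alpha` and `beta`, and the vector inputs are assumptions made for illustration; the exact formulation in Reference [97] may differ.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 for zero vectors)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def extended_score(log_prob, src_topic, resp_topic, src_emb, resp_emb,
                   alpha=1.0, beta=1.0):
    """Log-probability plus weighted topical and semantic similarity terms."""
    topic_term = cosine(src_topic, resp_topic)   # topical similarity
    semantic_term = cosine(src_emb, resp_emb)    # semantic similarity
    return log_prob + alpha * topic_term + beta * semantic_term
```

Candidates on the beam would then be ranked by this score instead of by the log-probability alone, so that a response that stays on the topic of the source can outrank a more probable but generic one.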

5.2. Structure and Form