Abstract

Real-life signals captured by measurement systems (for example, in modern maritime transport, which is characterized by challenging and varying operating conditions) are often affected by various types of noise and other external factors during data collection and transmission. Filtering algorithms are therefore required to reduce the noise level in the measured signals and thus enable more efficient extraction of useful information. This paper proposes a locally adaptive filtering algorithm based on the radial basis function (RBF) kernel smoother with variable width. The kernel width is calculated using the asymmetrical combined-window relative intersection of confidence intervals (RICI) algorithm, whose parameters are adjusted by a particle swarm optimization (PSO)-based procedure. The filtering performance of the proposed RBF-RICI algorithm is analyzed on several simulated, synthetic noisy signals, showing its efficiency in noise suppression and filtering error reduction. Moreover, compared to the competing filtering algorithms, the proposed algorithm provides better or competitive filtering performance in most of the considered test cases. Finally, the proposed algorithm is applied to noisy measured maritime data, indicating that it is a possible solution for practical data filtering in maritime transport and other sectors.

1. Introduction

In today’s world, advances in digital technologies allow different measurement systems to continuously acquire vast amounts of data in all fields of human activity. These data are then used as input to various algorithms and analysis procedures. However, the real-life signals obtained in this way are often corrupted by various types of noise, caused by numerous environmental factors as well as by the data acquisition and transmission processes themselves. Therefore, before such data are further exploited and analyzed, they need to be processed by filtering algorithms that reduce the noise and thus enable more efficient reconstruction of the original information content [1,2,3,4,5,6,7].

The modern maritime transport sector is an example of a complex system consisting of different measurement subsystems and the communication systems used to transmit the collected information. Moreover, the future development of autonomous shipping increases the amount of data acquired during ship operation, placing additional demands on data quality. Maritime applications involve operation under difficult and rapidly changing environmental conditions, which, together with the limited availability of reliable communication channels, lead to high noise levels in the acquired data. Therefore, both the application of existing filtering algorithms and the development of application-specific ones for reconstructing useful information from noise-corrupted signals are important fields of scientific research within maritime and transport engineering [8]. This research has focused on different application areas, including underwater signal processing [9,10,11,12], radar signal processing [13,14], underwater image processing [15,16,17], optical signal processing [18], and other applications [19,20,21].

Parametric filtering algorithms require knowledge of the underlying signal and noise characteristics. In many practical applications, such information is not available, and nonparametric filtering algorithms are used instead [22]. The main task in these algorithms is finding the optimal filter width, which controls the level of signal smoothing. The nonparametric methods may be broadly classified into plug-in methods and quality-of-fit methods (such as cross-validation) [22]. Plug-in methods are computationally demanding because they rely on complex formulae for calculating the optimal filter width from the estimation bias and variance. On the other hand, the data-driven cross-validation method does not require bias estimation. The optimal filter width minimizes the estimation mean squared error (MSE), resulting in an optimal bias-variance trade-off [22].
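To illustrate the quality-of-fit approach, the following minimal Python sketch selects a smoothing scale by leave-one-out cross-validation: each observation is predicted from all other observations, and the candidate with the smallest prediction error is kept. It assumes a Gaussian-weighted average as the smoother; the function names and the candidate scales are hypothetical and are not taken from [22].

```python
import math
import random


def gaussian_weights(n, center, scale):
    """Gaussian weights of all n samples relative to the sample at index `center`."""
    return [math.exp(-((k - center) ** 2) / (2.0 * scale ** 2)) for k in range(n)]


def loo_cv_score(x, scale):
    """Leave-one-out cross-validation error of a Gaussian-weighted average.

    Each observation is predicted from all *other* observations, so the score
    approximates the prediction error without access to the noise-free signal.
    """
    n = len(x)
    total = 0.0
    for i in range(n):
        w = gaussian_weights(n, i, scale)
        w[i] = 0.0  # leave the i-th observation out of its own prediction
        prediction = sum(wk * xk for wk, xk in zip(w, x)) / sum(w)
        total += (x[i] - prediction) ** 2
    return total / n


def select_scale_cv(x, candidates):
    """Return the candidate smoothing scale with the smallest LOO-CV score."""
    return min(candidates, key=lambda c: loo_cv_score(x, c))


if __name__ == "__main__":
    rng = random.Random(0)
    n = 200
    s = [math.sin(2 * math.pi * k / n) for k in range(n)]
    x = [sk + rng.gauss(0.0, 0.3) for sk in s]
    print(select_scale_cv(x, [0.5, 1.0, 2.0, 4.0, 8.0, 16.0]))
```

Unlike a plug-in rule, this choice uses only the noisy data, but it selects a single global width for the whole signal.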

An easy-to-implement, locally adaptive filtering algorithm is based on the intersection of confidence intervals (ICI) rule [23] combined with the local polynomial approximation (LPA) [24,25]. The LPA-ICI algorithm requires only the estimation of the signal and noise variance. The ICI rule for optimal filter width selection was upgraded in [26] into the relative intersection of confidence intervals (RICI) rule, which keeps the good properties of the ICI rule while improving the filtering performance in terms of MSE and reducing the method’s sensitivity to suboptimal parameter selection.

As may be expected, the filtering performance of the LPA-ICI and LPA-RICI algorithms depends on the selected parameter values. Different data-driven techniques have been analyzed for selecting the ICI rule’s parameter [24]. However, the RICI algorithm requires the simultaneous adjustment of two parameters, which has not been investigated in depth in the literature. Therefore, a grid search in the parameter space is generally used when applying the RICI algorithm. Because this procedure is time-consuming, a faster solution is needed. The study in [27] proposed a simulation-based method for selecting appropriate values of the RICI rule’s parameters with respect to the obtained MSE. However, the empirical formula provided there defines only regions of near-optimal parameter values and was obtained by analyzing a limited number of signal classes. Moreover, the formula for calculating one parameter’s value requires the other parameter’s value as an input, which leaves open the problem of selecting that value properly. Therefore, a more general approach that yields the optimal parameter values is required.

In this paper, we propose an adaptive filtering algorithm based on the radial basis function (RBF) kernel smoother with variable width. The kernel width is calculated using the asymmetrical combined-window RICI algorithm. This data-driven, locally adaptive algorithm requires only the estimation of the noise variance; it requires neither bias estimation nor any other information about the underlying signal and noise. To reduce the time required by the grid search in the parameter space, the RICI algorithm is upgraded with a particle swarm optimization (PSO)-based procedure. We analyze the filtering performance of the proposed RBF-RICI algorithm by applying it to several synthetic, simulated noisy signals, and we discuss its efficiency in noise suppression and filtering error reduction. Moreover, we compare the proposed algorithm to competing filtering algorithms and show that it provides better or competitive filtering performance in most of the considered test cases. Additionally, we perform a comparative analysis of several evolutionary metaheuristic optimization algorithms applied to selecting the RBF-RICI algorithm’s parameters, including the genetic algorithm (GA) and three PSO-based algorithms. Finally, the proposed RBF-RICI algorithm is applied to noisy, real-life measured maritime data, showing its potential for practical data filtering in the maritime and other similar sectors.

2. Materials and Methods

We consider the noisy observations $x(k)$ of the signal $s(k)$:

$$x(k) = s(k) + \eta(k), \tag{1}$$

where $s(k)$ is the original signal, and $\eta(k)$ is the additive white Gaussian noise with variance $\sigma_\eta^2$.

The noisy signal has to be filtered in order to obtain the estimate of the original signal with the minimum estimation error. In this work, we propose an adaptive filtering algorithm combining the RBF kernel smoother with the asymmetrical combined-window RICI procedure whose parameters are adjusted utilizing the PSO algorithm.

2.1. The RBF-Kernel-Based Adaptive Filtering

The kernel smoother estimates the signal sample value as the weighted average of the neighboring observed sample values. The weights assigned to the specific samples are determined by the kernel type. Nonrectangular kernels assign higher weights to the samples closer to the considered one and lower weights to the samples farther away from it [28].

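The RBF or Gaussian kernel is defined as:

$$K_h(k_0,k) = \begin{cases} \exp\left(-\dfrac{(k - k_0)^2}{2\sigma^2}\right), & |k - k_0| \le h, \\ 0, & \text{otherwise}, \end{cases} \tag{2}$$

where $k_0$ is the sample of interest, $k$ is the neighboring signal sample, $h$ is the kernel width, and $\sigma$ is the RBF kernel scale, which corresponds to the standard deviation of the Gaussian probability density function.

The Nadaraya–Watson kernel-weighted estimate [29] of the signal value at the considered sample $k_0$ is given by:

$$\hat{s}_h(k_0) = \frac{\sum_{k} K_h(k_0,k)\, x(k)}{\sum_{k} K_h(k_0,k)}. \tag{3}$$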

The kernel width $h$ controls the estimation accuracy and the smoothness of the estimated signal. Kernels with larger widths include more samples in the estimation procedure, which decreases the estimation variance but increases the estimation bias. Conversely, smaller kernel widths include fewer samples, which increases the estimation variance and decreases the estimation bias [24].
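A minimal sketch of the Nadaraya–Watson kernel smoother with a constant width follows. It assumes that the Gaussian kernel with scale `sigma` is truncated to the window |k - k0| <= h, so that h sets how many neighboring samples enter each estimate; all function names are hypothetical.

```python
import math


def rbf_weight(k0, k, h, sigma):
    """Gaussian (RBF) weight of sample k for the sample of interest k0.

    Assumption: the Gaussian is truncated to the window |k - k0| <= h.
    """
    if abs(k - k0) > h:
        return 0.0
    return math.exp(-((k - k0) ** 2) / (2.0 * sigma ** 2))


def nadaraya_watson(x, k0, h, sigma):
    """Kernel-weighted average of the observations x around sample k0."""
    lo, hi = max(0, k0 - h), min(len(x) - 1, k0 + h)
    num = den = 0.0
    for k in range(lo, hi + 1):
        w = rbf_weight(k0, k, h, sigma)
        num += w * x[k]
        den += w
    return num / den


def smooth_constant_width(x, h, sigma):
    """Apply the estimator with one fixed width h to every sample."""
    return [nadaraya_watson(x, k0, h, sigma) for k0 in range(len(x))]
```

With a single h for the whole signal, this is a fixed-width smoother; the adaptive algorithm described in this section instead selects the width separately for each sample.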

Therefore, the selection of the proper kernel width determines the efficiency of the applied filtering algorithm. As opposed to the constant kernel width used for the entire duration of the signal, the varying kernel width enables the adaptation to the local signal features. The kernel width providing the optimal trade-off between estimation bias and variance is here calculated using the adaptive RICI algorithm.

The absolute value of the error obtained by the kernel-smoother-based estimation procedure is defined as:

$$e(k) = \left| s(k) - \hat{s}_{h(k)}(k) \right|, \tag{4}$$

where $\hat{s}_{h(k)}(k)$ represents the value of the $k$th signal sample estimated from the samples in its vicinity by applying the kernel with varying width $h(k)$.

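The mean squared estimation error, $\mathrm{MSE}(k,h)$, may be expressed in terms of the estimation bias $b(k,h)$ and the estimation variance $\sigma_{\hat{s}}^2(k,h)$ as [30,31]:

$$\mathrm{MSE}(k,h) = b^2(k,h) + \sigma_{\hat{s}}^2(k,h). \tag{5}$$

The crucial task of the adaptive filtering procedure is to select the kernel width $h^*(k)$ that minimizes $\mathrm{MSE}(k,h)$, thus providing the optimal bias-variance trade-off [30,31]:

$$h^*(k) = \arg\min_{h}\, \mathrm{MSE}(k,h). \tag{6}$$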
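The MSE decomposition and the resulting width trade-off can be checked numerically. The sketch below draws many noise realizations, estimates the squared bias, the variance, and the MSE of a local estimate at one sample for several widths, and then picks the MSE-minimizing width. As assumptions, it uses a plain moving average as a stand-in estimator (the decomposition holds for any estimator), and it requires the noise-free signal. That signal is not available in practice, which is why data-driven width selection rules are needed.

```python
import math
import random


def moving_average_at(x, k0, h):
    """Stand-in estimator: plain average of x over the window [k0 - h, k0 + h]."""
    lo, hi = max(0, k0 - h), min(len(x) - 1, k0 + h)
    window = x[lo:hi + 1]
    return sum(window) / len(window)


def bias_variance_mse(s, k0, h, noise_std, trials, rng):
    """Monte Carlo estimates of bias^2, variance and MSE of the estimate at k0."""
    estimates = []
    for _ in range(trials):
        x = [sk + rng.gauss(0.0, noise_std) for sk in s]  # fresh noise realization
        estimates.append(moving_average_at(x, k0, h))
    mean_est = sum(estimates) / trials
    bias2 = (mean_est - s[k0]) ** 2
    var = sum((e - mean_est) ** 2 for e in estimates) / trials
    mse = sum((e - s[k0]) ** 2 for e in estimates) / trials
    return bias2, var, mse


def mse_optimal_width(s, k0, widths, noise_std, trials=500, seed=0):
    """Width minimizing the Monte Carlo MSE at k0 (needs the true signal s)."""
    rng = random.Random(seed)
    table = {h: bias_variance_mse(s, k0, h, noise_std, trials, rng) for h in widths}
    return min(widths, key=lambda h: table[h][2]), table
```

For a sample at a signal peak, the variance falls and the squared bias grows as the width increases, and the returned width balances the two.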

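Note that, with confidence $p$, the original signal values lie within the confidence intervals [24,31]:

$$s(k) \in D(k,h) = \left[ L(k,h),\, U(k,h) \right], \tag{7}$$

where $L(k,h)$ and $U(k,h)$ are the lower and the upper confidence limits, respectively. The confidence limits are defined using the ICI threshold parameter $\Gamma$, which represents the critical value of the confidence interval, and the standard deviation $\sigma_{\hat{s}}(k,h)$ of the estimate $\hat{s}_h(k)$:

$$L(k,h) = \hat{s}_h(k) - \Gamma\, \sigma_{\hat{s}}(k,h), \tag{8}$$

$$U(k,h) = \hat{s}_h(k) + \Gamma\, \sigma_{\hat{s}}(k,h). \tag{9}$$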
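The following sketch computes the confidence limits for a sequence of growing widths at one sample. It assumes a truncated Gaussian kernel and white noise with a known standard deviation `noise_std`. Under these assumptions, the standard deviation of a linear kernel estimate is noise_std * sqrt(sum(w^2)) / sum(w). The function names are hypothetical.

```python
import math


def kernel_estimate_and_std(x, k0, h, sigma, noise_std):
    """Kernel estimate of s(k0) and its standard deviation under white noise.

    For a linear smoother s_hat = sum(w * x) / sum(w) and i.i.d. noise with
    standard deviation noise_std, std(s_hat) = noise_std * sqrt(sum(w^2)) / sum(w).
    Assumption: truncated Gaussian weights on |k - k0| <= h.
    """
    idx = range(max(0, k0 - h), min(len(x) - 1, k0 + h) + 1)
    w = [math.exp(-((k - k0) ** 2) / (2.0 * sigma ** 2)) for k in idx]
    sw = sum(w)
    estimate = sum(wk * x[k] for wk, k in zip(w, idx)) / sw
    std = noise_std * math.sqrt(sum(wk * wk for wk in w)) / sw
    return estimate, std


def confidence_intervals(x, k0, widths, sigma, noise_std, gamma):
    """Limits L = s_hat - gamma * std and U = s_hat + gamma * std for each width."""
    limits = []
    for h in widths:
        est, std = kernel_estimate_and_std(x, k0, h, sigma, noise_std)
        limits.append((est - gamma * std, est + gamma * std))
    return limits
```

As the width grows, the intervals narrow because the estimate averages more samples. Their centers, however, may drift away from the true value as the bias grows.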

The initial stage of the RICI algorithm is identical to the ICI rule [23], which provides a set of $N$ growing kernel widths $H = \{h_1 < h_2 < \cdots < h_N\}$ and the corresponding confidence intervals $D(k,h_n)$, $n = 1, \ldots, N$, for each signal sample $k$ [24,25,31].

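The ICI rule tracks the intersection of the confidence intervals $D(k,h_j)$ and provides the largest kernel width $h^+(k)$ for which the intersection still exists [24,25,31]:

$$h^+(k) = \max \left\{ h_n : \bigcap_{j=1}^{n} D(k,h_j) \neq \emptyset \right\}. \tag{10}$$

This condition is met if the inequality $\overline{L}(k,h_n) \le \underline{U}(k,h_n)$ is satisfied, where $\overline{L}(k,h_n) = \max_{j \le n} L(k,h_j)$ is the largest lower and $\underline{U}(k,h_n) = \min_{j \le n} U(k,h_j)$ is the smallest upper confidence limit [24,31].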
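The ICI test reduces to keeping a running maximum of the lower limits and a running minimum of the upper limits. Below is a minimal sketch, assuming the (L, U) pairs are ordered by increasing kernel width, for example as produced for one sample and one side of the window. The function name is hypothetical.

```python
def ici_index(intervals):
    """ICI rule: index n of the largest width whose confidence interval still
    intersects all previous ones, i.e. max L(h_j) <= min U(h_j) for j <= n.

    `intervals` is a list of (L, U) pairs ordered by increasing kernel width.
    """
    lower_max, upper_min = float("-inf"), float("inf")
    best = 0
    for n, (lower, upper) in enumerate(intervals):
        lower_max = max(lower_max, lower)   # largest lower limit so far
        upper_min = min(upper_min, upper)   # smallest upper limit so far
        if lower_max > upper_min:           # intersection became empty
            break                           # it cannot become non-empty again
        best = n
    return best


if __name__ == "__main__":
    cis = [(0.0, 10.0), (2.0, 8.0), (3.0, 7.0), (9.0, 12.0)]
    print(ici_index(cis))  # 2: the fourth interval no longer overlaps the others
```

Stopping at the first empty intersection is safe, because adding further intervals can only shrink the intersection.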

In this work, we applied the asymmetrical combined-window RICI approach, in which the procedure described above is applied independently to the left and the right side of the considered sample $k$. This results in two sets of confidence intervals, $D_{\mathrm{l}}(k,h_n)$ and $D_{\mathrm{r}}(k,h_n)$, for each signal sample $k$ [27].

The algorithm tracks the intersection of the currently calculated $n$th confidence interval with the intersection of all previous confidence intervals on each side of the considered $k$th sample independently. This results in $h^+_{\mathrm{l}}(k)$ and $h^+_{\mathrm{r}}(k)$ as the largest kernel widths satisfying (10) for the left and the right side, respectively [27]. Finally, the candidate for the optimal width of the asymmetrical kernel, $h^+(k)$, is obtained by combining $h^+_{\mathrm{l}}(k)$ and $h^+_{\mathrm{r}}(k)$.

The performance of the ICI algorithm is highly sensitive to the value of the threshold parameter $\Gamma$: too small values result in signal undersmoothing, while too large values cause signal oversmoothing [24,30].

To make the ICI stage more robust to suboptimal values of $\Gamma$, the second stage of the algorithm applies the RICI rule. This rule is an additional criterion for adaptive kernel width selection, applied to the kernel width candidates obtained by the ICI rule. For the same values of the threshold parameter $\Gamma$, the RICI rule improves the estimation accuracy of the ICI rule [26,27,32,33].

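The RICI criterion tracks the relative amount of confidence interval overlap. It calculates the ratio of the width of the intersection of the confidence intervals, $\underline{U}(k,h_n) - \overline{L}(k,h_n)$, to the width of the current confidence interval, $U(k,h_n) - L(k,h_n)$ [26,27]:

$$R(k,h_n) = \frac{\underline{U}(k,h_n) - \overline{L}(k,h_n)}{U(k,h_n) - L(k,h_n)}. \tag{12}$$

The calculated RICI ratio is compared to the preset RICI threshold value $R_c$ [27]:

$$R(k,h_n) \ge R_c. \tag{13}$$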

As in the ICI stage, the RICI rule is applied independently to both sides of the considered sample. This results in $h^+_{\mathrm{l,RICI}}(k)$ and $h^+_{\mathrm{r,RICI}}(k)$, the largest kernel widths satisfying (10) and (13) for the left and the right side, respectively [27]. Finally, the width of the asymmetrical kernel obtained by the RICI algorithm is calculated by combining $h^+_{\mathrm{l,RICI}}(k)$ and $h^+_{\mathrm{r,RICI}}(k)$.
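A sketch of the two-stage rule for one side of a sample, and of its independent application to both sides, follows. It assumes that the search stops at the first width violating either the ICI condition or the RICI condition. How the left and right widths are finally merged into one asymmetrical kernel is not specified here, so the sketch simply returns the pair. The function names and example intervals are hypothetical.

```python
def rici_index(intervals, r_c):
    """RICI rule on (L, U) pairs ordered by increasing width.

    Keeps the ICI condition and additionally requires the ratio of the width of
    the running intersection to the width of the current interval to be >= r_c.
    Assumption: the search stops at the first width that violates either condition.
    """
    lower_max, upper_min = float("-inf"), float("inf")
    best = 0
    for n, (lower, upper) in enumerate(intervals):
        lower_max = max(lower_max, lower)
        upper_min = min(upper_min, upper)
        if lower_max > upper_min:                      # ICI condition fails
            break
        ratio = (upper_min - lower_max) / (upper - lower)
        if ratio < r_c:                                # RICI condition fails
            break
        best = n
    return best


def asymmetric_widths(left_intervals, right_intervals, widths, r_c):
    """Apply the rule independently to the left and right sides of a sample."""
    return (widths[rici_index(left_intervals, r_c)],
            widths[rici_index(right_intervals, r_c)])


if __name__ == "__main__":
    widths = [1, 2, 4, 8]
    left = [(0.0, 10.0), (2.0, 8.0), (3.0, 7.0), (6.5, 12.0)]
    right = [(0.0, 10.0), (1.0, 9.0), (2.0, 8.0), (3.0, 7.0)]
    print(asymmetric_widths(left, right, widths, r_c=0.5))  # (4, 8)
```

On the left side, the fourth interval still intersects the others, so ICI alone would accept width 8. The overlap ratio, however, drops to about 0.09, so RICI with R_c = 0.5 stops at width 4.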

2.2. The Evolutionary Metaheuristic Optimization Algorithms

In real-world engineering applications, optimization problems are often nonlinear, NP-hard (nondeterministic polynomial-time hard), and nondifferentiable. Traditional techniques require a mathematical formulation of the considered problem, which is often not possible. Evolutionary metaheuristic optimization algorithms, however, have proven to be powerful tools for nonlinear optimization problems, as they overcome the limitations of traditional techniques for nondifferentiable, noncontinuous, and nonconvex objective functions. In this paper, we use and compare several evolutionary metaheuristic optimization algorithms based on the PSO and the GA. The generalized pseudocodes for Algorithms A1–A4 are provided in Appendix A.

2.2.1. Traditional PSO Algorithm

The PSO is a population-based iterative algorithm in which all particles are gathered in one population, called the swarm [34,35,36]. Each particle represents a potential solution of the optimization problem in the solution space. In each iteration, the particles adjust their flying trajectories according to their personal and global experience. Let $s$ denote the number of particles, or the population size, and $d$ the dimension of the solution space. The $d$-dimensional solution space is defined by the problem variables that need to be optimized [34,35]. Particles move in the solution space by updating the velocity vector of the $i$th particle ($i = 1, \ldots, s$), $\mathbf{v}_i = (v_{i1}, \ldots, v_{id})$, and its position vector, $\mathbf{x}_i = (x_{i1}, \ldots, x_{id})$, according to the following equations:

$$\mathbf{v}_i^{t+1} = w\,\mathbf{v}_i^{t} + c_1 r_1 \left(\mathbf{p}_i^{t} - \mathbf{x}_i^{t}\right) + c_2 r_2 \left(\mathbf{g}^{t} - \mathbf{x}_i^{t}\right), \tag{15}$$

$$\mathbf{x}_i^{t+1} = \mathbf{x}_i^{t} + \mathbf{v}_i^{t+1}, \tag{16}$$

where $r_1$ and $r_2$ are random numbers sampled from the range from 0 to 1, $w$ is the inertia weight, and $c_1$ and $c_2$ denote the acceleration coefficients that control the particle movement toward the personal best $\mathbf{p}_i$ and the global best $\mathbf{g}$, respectively [34,35]. Apart from limiting particle positions for constrained problems, each velocity component can also be limited to the range $[-v_{\max}, v_{\max}]$ to reduce the likelihood of particles moving too fast in smaller solution spaces.

A suitable selection of the inertia weight and the acceleration coefficients provides a balance between global exploration and local exploitation. Later research reported that PSO performance can be improved if the inertia weight decreases linearly over the iterations instead of remaining constant. The idea is that the PSO search should start with a high inertia weight for strong global exploration and, as the weight decreases linearly, emphasize finer local exploration in later iterations [34,35]. The inertia weight $w$ used in our simulations is given as:

$$w = w_{\max} - \left(w_{\max} - w_{\min}\right) \frac{t}{t_{\max}}, \tag{17}$$

where $w_{\max}$ and $w_{\min}$ are the initial and the final inertia weight, and $t$ and $t_{\max}$ stand for the current and the maximum PSO iteration, respectively [34,35].
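The velocity and position updates with a linearly decreasing inertia weight can be sketched as a global-best PSO that minimizes a generic cost function `f`. In the RBF-RICI setting, `f` would map a candidate parameter pair to the resulting filtering MSE. The default values (w from 0.9 to 0.4, c1 = c2 = 2), the velocity clamp expressed as a fraction of each search range, and the clipping of positions to the bounds are assumptions for illustration only.

```python
import random


def pso_minimize(f, bounds, n_particles=20, n_iter=100, c1=2.0, c2=2.0,
                 w_max=0.9, w_min=0.4, v_frac=0.2, seed=0):
    """Global-best PSO with a linearly decreasing inertia weight.

    Assumptions: positions are clipped to `bounds`, and each velocity component
    is clamped to +/- v_frac * (upper - lower) of its dimension.
    """
    rng = random.Random(seed)
    d = len(bounds)
    v_max = [v_frac * (hi - lo) for lo, hi in bounds]
    x = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    v = [[0.0] * d for _ in range(n_particles)]
    p = [xi[:] for xi in x]                  # personal best positions
    p_cost = [f(xi) for xi in x]
    best = min(range(n_particles), key=lambda i: p_cost[i])
    g, g_cost = p[best][:], p_cost[best]     # global best position and cost
    for t in range(1, n_iter + 1):
        w = w_max - (w_max - w_min) * t / n_iter   # reaches w_min at the last iteration
        for i in range(n_particles):
            for j in range(d):
                r1, r2 = rng.random(), rng.random()
                v[i][j] = (w * v[i][j]
                           + c1 * r1 * (p[i][j] - x[i][j])
                           + c2 * r2 * (g[j] - x[i][j]))
                v[i][j] = max(-v_max[j], min(v_max[j], v[i][j]))
                lo, hi = bounds[j]
                x[i][j] = max(lo, min(hi, x[i][j] + v[i][j]))
            cost = f(x[i])
            if cost < p_cost[i]:
                p[i], p_cost[i] = x[i][:], cost
                if cost < g_cost:
                    g, g_cost = x[i][:], cost
    return g, g_cost
```

Compared with an exhaustive grid search, the number of cost evaluations here is fixed by n_particles times n_iter, independently of the parameter resolution.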

2.2.2. Enhanced Partial Search Particle Swarm Optimization (EPS-PSO) Algorithm

In some cases, the standard PSO might reach a stagnant state, in which the global best position cannot be improved over iterations. To prevent the PSO from converging to a local optimum, a supplementary search direction is obtained by introducing an additional population that takes over the global search task. This PSO variant is termed the Enhanced Partial Search Particle Swarm Optimization (EPS-PSO) [35].

At the start of the EPS-PSO algorithm, the entire population is divided equally into two subswarms, namely the traditional subswarm and the cosearch subswarm. The main difference between them is that the cosearch subswarm is periodically reinitialized every $T_r$ iterations, where $T_r$ is called the reinitialization period [35]. The particle positions are reinitialized uniformly in the search space. There is, however, one exception: if the current global best position of the cosearch subswarm, denoted as $\mathbf{g}_{\mathrm{co}}$, outperforms the global best position of the traditional subswarm, denoted as $\mathbf{g}_{\mathrm{tr}}$, the reinitialization of the cosearch subswarm is called off. Otherwise, the two subswarms work independently. The only cooperation between them occurs when the cosearch subswarm finds better solutions than the traditional subswarm; in that case, the velocity vectors of the traditional subswarm are updated based on $\mathbf{g}_{\mathrm{co}}$ instead of $\mathbf{g}_{\mathrm{tr}}$ [35].
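The two EPS-PSO rules that differ from the standard PSO can be sketched as standalone helpers for a minimization problem. The first is the conditional reinitialization of the cosearch subswarm; the second is the choice of the global best that guides the traditional subswarm. Only these rules come from the description above. The helper names are hypothetical, and skipping the reinitialization at iteration 0 is an assumption.

```python
import random


def split_population(positions):
    """Divide the population equally into a traditional and a cosearch subswarm."""
    half = len(positions) // 2
    return positions[:half], positions[half:]


def maybe_reinitialize_cosearch(cosearch, t, period, g_co_cost, g_tr_cost, bounds, rng):
    """Uniformly reinitialize the cosearch subswarm every `period` iterations,
    unless its global best currently outperforms the traditional subswarm's."""
    if t == 0 or t % period != 0 or g_co_cost < g_tr_cost:
        return cosearch, False
    fresh = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in cosearch]
    return fresh, True


def guide_for_traditional(g_tr, g_tr_cost, g_co, g_co_cost):
    """Global best used in the traditional subswarm's velocity update: the
    cosearch best replaces it only when it is better (minimization)."""
    if g_co_cost < g_tr_cost:
        return g_co, g_co_cost
    return g_tr, g_tr_cost
```

Inside a PSO loop, the traditional subswarm would call `guide_for_traditional` before its velocity update. The cosearch subswarm would call `maybe_reinitialize_cosearch` once per iteration.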

2.2.3. Multiswarm Particle Swarm Optimization (MSPSO)

The Multiswarm Particle Swarm Optimization (MSPSO) algorithm is another PSO variant that introduces additional swarms [37]. Therefore, the total population depends not only on the number of particles but also on the number of swarms, denoted as $N_{\mathrm{sw}}$. The number of swarms determines the degree of communication between the swarms. Studies have shown that the algorithm’s performance can be improved by involving more swarms in the process [35,37]. However, introducing more swarms also increases the number of evaluations. In our optimizations, we divided the same population size used in the previous PSO variants into multiple swarms.

2.2.4. Genetic Algorithm (GA)

The GA is a stochastic algorithm designed to mimic some procedures of natural evolution [36]. In the GA, new particles, also called offspring, are formed from two particles of the current generation, called parents, using the crossover operator, while mutants are formed by randomly altering particles with the mutation operator. A new generation is formed by keeping the best-performing particles among the parents and the newly formed particles. The crossover and mutation operators are genetic operators that, together with this selection, follow the Darwinian principle of survival of the best-performing particles in the population. In the GA used in this paper, the numbers of offspring and mutants, $n_c$ and $n_m$, respectively, are controlled with the parameters $p_c$ and $p_m$ [36].
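One GA generation can be sketched as follows for a real-coded minimization problem. The exact operators are not specified above, so this sketch makes several assumptions: arithmetic crossover, Gaussian mutation, and truncation selection. It also assumes that the numbers of offspring and mutants are n_c = 2 * round(p_c * n_pop / 2) and n_m = round(p_m * n_pop). These are illustrative choices and are not necessarily those of [36].

```python
import random


def ga_generation(pop, f, p_c, p_m, bounds, rng, mut_scale=0.1):
    """One generation of a simple real-coded GA (minimization), as a sketch.

    Assumptions: n_c = 2 * round(p_c * n_pop / 2) offspring from arithmetic
    crossover of random parent pairs, n_m = round(p_m * n_pop) Gaussian
    mutants, and truncation selection of the n_pop best particles.
    """
    n_pop = len(pop)
    n_c = 2 * round(p_c * n_pop / 2)   # offspring are produced in pairs
    n_m = round(p_m * n_pop)

    def clip(z):
        return [max(lo, min(hi, zi)) for zi, (lo, hi) in zip(z, bounds)]

    offspring = []
    for _ in range(n_c // 2):
        a, b = rng.sample(pop, 2)      # two different members of the population
        alpha = rng.random()
        offspring.append(clip([alpha * ai + (1 - alpha) * bi for ai, bi in zip(a, b)]))
        offspring.append(clip([(1 - alpha) * ai + alpha * bi for ai, bi in zip(a, b)]))

    mutants = []
    for _ in range(n_m):
        parent = rng.choice(pop)
        mutants.append(clip([zi + rng.gauss(0.0, mut_scale * (hi - lo))
                             for zi, (lo, hi) in zip(parent, bounds)]))

    merged = pop + offspring + mutants
    merged.sort(key=f)                 # keep the best-performing particles
    return merged[:n_pop]
```

Because the parents compete with the new particles, the best cost in the population can never get worse from one generation to the next.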

3. Results and Discussion

To investigate the efficiency of the proposed RBF-RICI adaptive filtering algorithm, we applied it to several synthetic signals: Blocks, Bumps, Doppler, HeaviSine, Piece-Regular, and Sing. These signals were chosen because they are good models of some typical real-world data found in various engineering and signal processing applications. For example, the piecewise constant Blocks signal is used as a model of the acoustic impedance of a layered medium in geophysics, or of one-dimensional profiles along certain images in image processing applications [38]. Moreover, the Bumps signal represents spectra arising in nuclear magnetic resonance (NMR), absorption, and infrared spectroscopy [38], whereas the Piece-Regular signal resembles wave arrivals in a seismogram. Each considered signal is corrupted by additive white Gaussian noise and studied for three cases corresponding to signal-to-noise ratio (SNR) values of 5, 7, and 10 dB.
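Noisy test signals at a prescribed SNR can be generated by scaling the noise variance to the signal power. The sketch below assumes that the SNR is defined as 10 * log10 of the ratio of the mean signal power to the noise variance; the function name is hypothetical.

```python
import math
import random


def add_awgn(s, snr_db, rng):
    """Add white Gaussian noise so that 10*log10(P_signal / noise_var) = snr_db.

    Assumption: the SNR is defined with the mean signal power P_signal = mean(s^2).
    Returns the noisy signal and the noise standard deviation used.
    """
    p_signal = sum(v * v for v in s) / len(s)
    noise_std = math.sqrt(p_signal / 10 ** (snr_db / 10))
    return [v + rng.gauss(0.0, noise_std) for v in s], noise_std
```

The returned noise standard deviation is also the quantity that the confidence limits of the ICI and RICI rules require. In practice, it would have to be estimated from the noisy data.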

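The RBF-RICI algorithm is also compared to the zero-order LPA-RICI, the original LPA-ICI, and the Savitzky–Golay [39] filtering algorithms. To facilitate the quantitative analysis of the tested algorithms, the following filtering quality indicators were calculated for signals of length $N_s$, where $s(k)$, $x(k)$, and $\hat{s}(k)$ denote the original, the noisy, and the filtered signal, respectively:

Mean squared error (MSE):

$$\mathrm{MSE} = \frac{1}{N_s} \sum_{k=1}^{N_s} \left( s(k) - \hat{s}(k) \right)^2, \tag{18}$$

Mean absolute error (MAE):

$$\mathrm{MAE} = \frac{1}{N_s} \sum_{k=1}^{N_s} \left| s(k) - \hat{s}(k) \right|, \tag{19}$$

Maximum absolute error (MAXE):

$$\mathrm{MAXE} = \max_{k} \left| s(k) - \hat{s}(k) \right|, \tag{20}$$

Peak signal-to-noise ratio (PSNR):

$$\mathrm{PSNR} = 10 \log_{10} \frac{\max_{k} s^2(k)}{\mathrm{MSE}}, \tag{21}$$

Improvement in the signal-to-noise ratio (ISNR):

$$\mathrm{ISNR} = 10 \log_{10} \frac{\sum_{k=1}^{N_s} \left( x(k) - s(k) \right)^2}{\sum_{k=1}^{N_s} \left( \hat{s}(k) - s(k) \right)^2}. \tag{22}$$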
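The five indicators translate directly into code. This sketch assumes that the PSNR peak value is the maximum of s^2(k) and that the estimate is not error-free, since otherwise the logarithms diverge. The function name is hypothetical.

```python
import math


def filtering_metrics(s, x, s_hat):
    """MSE, MAE, MAXE, PSNR and ISNR of an estimate s_hat of the clean signal s,
    given the noisy observations x (PSNR uses the peak value of s^2)."""
    n = len(s)
    err = [a - b for a, b in zip(s, s_hat)]
    mse = sum(e * e for e in err) / n
    mae = sum(abs(e) for e in err) / n
    maxe = max(abs(e) for e in err)
    psnr = 10 * math.log10(max(v * v for v in s) / mse)
    noise_energy = sum((xi - si) ** 2 for xi, si in zip(x, s))
    isnr = 10 * math.log10(noise_energy / sum(e * e for e in err))
    return {"MSE": mse, "MAE": mae, "MAXE": maxe, "PSNR": psnr, "ISNR": isnr}
```

For example, with s = [1, 2, 3, 4], x = [1, 2, 3, 6], and s_hat = [1, 2, 3, 5], only the last sample is in error. The result is MSE = MAE = 0.25, MAXE = 1, PSNR = 10 * log10(64) dB, and ISNR = 10 * log10(4) dB.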

The RBF-RICI algorithm, as well as each of the other tested algorithms, is applied with the optimal parameters for each considered signal, i.e., the parameters that minimize the estimation MSE. The optimal parameter values of the LPA-ICI algorithm are found in the range of . The Savitzky–Golay filtering algorithm is applied with a second-order polynomial and the optimal window width h. The optimal parameters of the RBF-RICI and LPA-RICI algorithms are found by running an exhaustive grid search in the parameter space defined by parameters’ value ranges of and . The search is conducted with the fine parameter value resolution of , resulting in a total of 50,000 iterations and algorithm evaluations. To speed up this time-consuming and computationally demanding process, the PSO algorithm is proposed for the optimization of the RBF-RICI parameters. Different PSO algorithm realizations, as well as the genetic algorithm, are tested, and their performances are compared. The obtained results are presented and discussed in the rest of this section.

3.1. Simulation Results

3.1.1. Blocks Signal

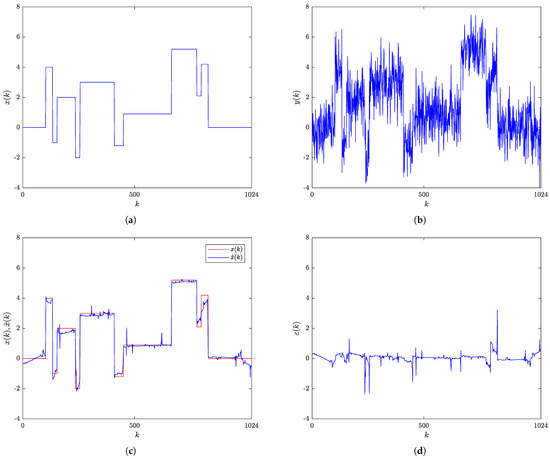

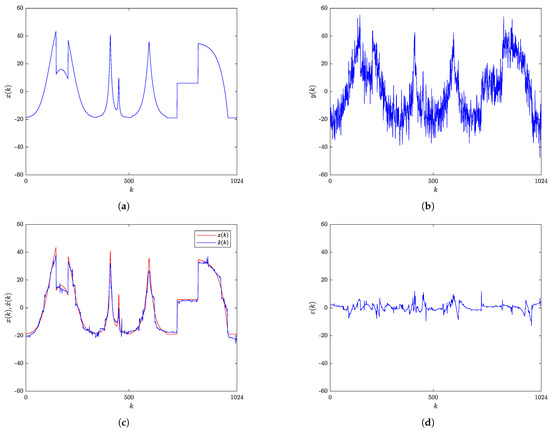

The original Blocks signal is shown in Figure 1a, while Figure 1b shows its noisy version with an SNR of 7 dB. The results obtained by applying the RBF-RICI filtering algorithm, in terms of the comparison of the original and the filtered signal, are presented in Figure 1c, while Figure 1d shows the filtering error. As can be seen, the RBF-RICI algorithm reduces the noise and reconstructs the main features of the original Blocks signal well, with slightly degraded performance near abrupt changes in the signal value.

Figure 1.

Blocks signal: (a) Original signal. (b) Noisy signal (SNR = 7 dB). (c) Original and RBF-RICI filtered signal (). (d) Filtering error.

The filtering results obtained by the RBF-RICI, the LPA-RICI, the LPA-ICI, and the Savitzky–Golay filtering algorithm applied to the noisy Blocks signal at SNRs of 5, 7, and 10 dB are given in Table 1, Table 2 and Table 3, respectively. The quantitative comparison is provided by calculating the filtering quality indicators MSE, MAE, MAXE, PSNR, and ISNR for the tested algorithms with the optimal parameters, i.e., the parameters that minimize the filtering MSE. The best filtering quality indicators in each table, i.e., the lowest values of MSE, MAE, and MAXE and the highest values of PSNR and ISNR, are marked in bold. The results presented in Table 1, Table 2 and Table 3 suggest that the RBF-RICI algorithm applied to the noisy Blocks signal provides satisfactory filtering performance, reducing the filtering errors and improving the SNR of the signal. As the SNR decreases, the filtering quality of the RBF-RICI algorithm is somewhat reduced but remains competitive.

Table 1.

Blocks signal (SNR = 5 dB)—Filtering results. The best filtering quality indicators are marked in bold.

Table 2.

Blocks signal (SNR = 7 dB)—Filtering results.

Table 3.

Blocks signal (SNR = 10 dB)—Filtering results.

The filtering quality improvement of the RBF-RICI algorithm over the other tested algorithms for the Blocks signal was calculated, and the percentage values are given in Table 4, Table 5 and Table 6 for SNRs of 5, 7, and 10 dB, respectively. Positive percentage values indicate an improvement in filtering quality by the RBF-RICI algorithm over the other algorithms, i.e., lower values of the filtering quality indicators MSE, MAE, and MAXE and higher values of the PSNR and ISNR, while negative percentage values indicate the opposite. The results provided in Table 4, Table 5 and Table 6 suggest that, in most cases, the RBF-RICI algorithm applied to the noisy Blocks signal provides improved or competitive filtering quality compared to the other tested algorithms.
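The exact formula behind the percentage values is not given here. The sketch below shows one plausible convention that is consistent with the sign rule just described: the improvement is measured relative to the competing algorithm's value. This formula is an assumption and may differ from the one used to produce the tables; the function name is hypothetical.

```python
LOWER_IS_BETTER = {"MSE", "MAE", "MAXE"}
HIGHER_IS_BETTER = {"PSNR", "ISNR"}


def improvement_percent(metric, proposed, other):
    """Relative improvement (%) of the proposed method over another method.

    Assumption: positive values mean the proposed method is better, i.e. a lower
    MSE/MAE/MAXE or a higher PSNR/ISNR; |other| is used as the reference value.
    """
    if metric in LOWER_IS_BETTER:
        return 100.0 * (other - proposed) / abs(other)
    if metric in HIGHER_IS_BETTER:
        return 100.0 * (proposed - other) / abs(other)
    raise ValueError(f"unknown metric: {metric}")
```

Using the absolute value of the reference keeps the sign rule valid even when a competing method has a negative ISNR.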

Table 4.

Blocks signal (SNR = 5 dB)—Filtering quality improvement of the RBF-RICI-based filtering over other tested algorithms.

Table 5.

Blocks signal (SNR = 7 dB)—Filtering quality improvement of the RBF-RICI-based filtering over other tested algorithms.

Table 6.

Blocks signal (SNR = 10 dB)—Filtering quality improvement of the RBF-RICI-based filtering over other tested algorithms.

The runtime of each tested filtering algorithm was also measured. The runtimes were obtained on a computer with an Intel Xeon CPU E5-2620 v4 @ 2.10 GHz and 128 GB of RAM, and the results were averaged over 1000 algorithm runs. The runtimes of the filtering algorithms applied to the noisy Blocks signal at SNRs of 5, 7, and 10 dB are shown in Table 7. The presented results suggest that the proposed RBF-RICI algorithm is competitive with the LPA-RICI and LPA-ICI algorithms in terms of execution speed, with the runtime depending on the selected parameter values. However, the Savitzky–Golay filtering algorithm is significantly faster than the other tested algorithms.

Table 7.

Blocks signal—Filtering algorithms’ runtimes.

3.1.2. Bumps Signal

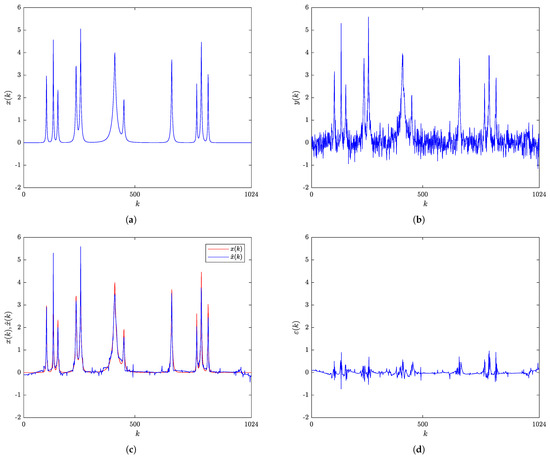

Figure 2a shows the original Bumps signal, whose noise-corrupted version with the SNR of 7 dB is shown in Figure 2b. The comparison of the original and the RBF-RICI filtered Bumps signal is given in Figure 2c, with the filtering error shown in Figure 2d. As shown in Figure 2c, the RBF-RICI algorithm provides very good noise reduction performance.

Figure 2.

Bumps signal: (a) Original signal. (b) Noisy signal (SNR = 7 dB). (c) Original and RBF-RICI filtered signal (). (d) Filtering error.

Table 8, Table 9 and Table 10 provide the filtering quality indicators obtained by the optimized RBF-RICI, LPA-RICI, LPA-ICI, and Savitzky–Golay filtering algorithms applied to the noisy Bumps signal for SNRs of 5, 7, and 10 dB, respectively. The results indicate that the RBF-RICI algorithm provides good filtering performance in terms of noise suppression and filtering error reduction. As expected, the filtering performance slightly declines with the decreasing SNR. Table 11, Table 12 and Table 13 give the relative comparison of the filtering quality obtained by the RBF-RICI algorithm and the other tested algorithms applied to the Bumps signal at SNRs of 5, 7, and 10 dB, respectively. The presented comparison indicates that the proposed RBF-RICI algorithm provides better performance than all other tested algorithms for each considered filtering quality indicator and SNR value.

Table 8.

Bumps signal (SNR = 5 dB)—Filtering results.

Table 9.

Bumps signal (SNR = 7 dB)—Filtering results.

Table 10.

Bumps signal (SNR = 10 dB)—Filtering results.

Table 11.

Bumps signal (SNR = 5 dB)—Filtering quality improvement of the RBF-RICI-based filtering over other tested algorithms.

Table 12.

Bumps signal (SNR = 7 dB)—Filtering quality improvement of the RBF-RICI-based filtering over other tested algorithms.

Table 13.

Bumps signal (SNR = 10 dB)—Filtering quality improvement of the RBF-RICI-based filtering over other tested algorithms.

The filtering algorithms’ runtimes for the Bumps signal filtered at SNR levels of 5, 7, and 10 dB are given in Table 14. The RBF-RICI algorithm is significantly slower than the Savitzky–Golay filtering algorithm and somewhat slower than the LPA-RICI and LPA-ICI algorithms.

Table 14.

Bumps signal—Filtering algorithms’ runtimes.

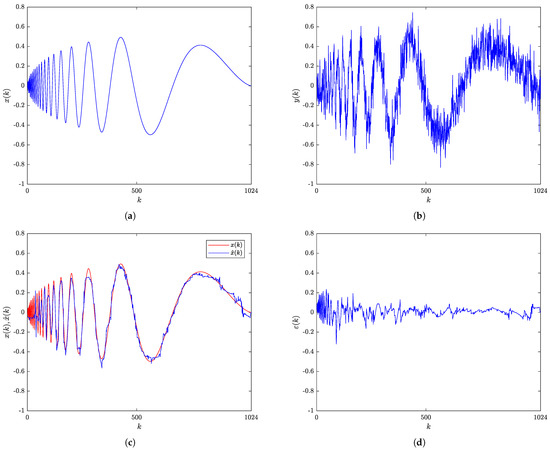

3.1.3. Doppler Signal

The original and noisy (SNR = 7 dB) Doppler signals are shown in Figure 3a,b, respectively. Figure 3c shows the original and the RBF-RICI filtered Doppler signal, while the obtained filtering error is shown in Figure 3d. As shown in Figure 3c, the RBF-RICI algorithm provides somewhat poorer filtering performance in the initial part of the Doppler signal, where higher-frequency oscillations are present. Its performance improves significantly in the rest of the signal, which has lower-frequency content (and higher amplitudes).

Figure 3.

Doppler signal: (a) Original signal. (b) Noisy signal (SNR = 7 dB). (c) Original and RBF-RICI filtered signal (). (d) Filtering error.

The results obtained by applying the RBF-RICI algorithm to the noisy Doppler signal are given in Table 15, Table 16 and Table 17 for SNRs of 5, 7, and 10 dB, respectively. According to the values of the filtering quality indicators, the RBF-RICI technique provides good filtering performance, reducing the filtering error and increasing the SNR of the signal. The percentage values describing the relative filtering quality improvement of the RBF-RICI algorithm over the LPA-RICI, the LPA-ICI, and the Savitzky–Golay filtering algorithm applied to the Doppler signal at SNRs of 5, 7, and 10 dB are given in Table 18, Table 19 and Table 20, respectively. The RBF-RICI algorithm outperforms all other tested algorithms for all filtering quality indicators and SNR values, except for the MAXE at the SNR of 7 dB.

Table 15.

Doppler signal (SNR = 5 dB)—Filtering results.

Table 16.

Doppler signal (SNR = 7 dB)—Filtering results.

Table 17.

Doppler signal (SNR = 10 dB)—Filtering results.

Table 18.

Doppler signal (SNR = 5 dB)—Filtering quality improvement of the RBF-RICI-based filtering over other tested algorithms.

Table 19.

Doppler signal (SNR = 7 dB)—Filtering quality improvement of the RBF-RICI-based filtering over other tested algorithms.

Table 20.

Doppler signal (SNR = 10 dB)—Filtering quality improvement of the RBF-RICI-based filtering over other tested algorithms.

The runtimes of the filtering algorithms applied to the Doppler signal at SNRs of 5, 7, and 10 dB are given in Table 21. The runtimes of the RBF-RICI algorithm are competitive with those of the LPA-RICI and LPA-ICI algorithms, depending on the SNR and the parameter values. All three algorithms are again outperformed by the Savitzky–Golay filtering algorithm in terms of execution speed.

Table 21.

Doppler signal—Filtering algorithms’ runtimes.

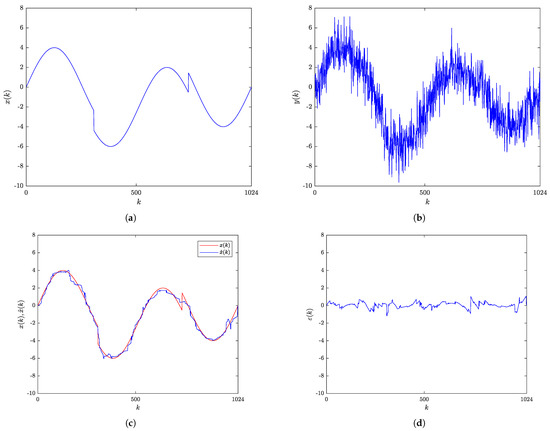

3.1.4. HeaviSine Signal

Figure 4a shows the original noise-free HeaviSine signal, while Figure 4b shows its noise-corrupted version at the 7 dB SNR. The comparison between the original and the RBF-RICI filtered signal is given in Figure 4c, while their difference is illustrated in Figure 4d as the filtering error. The proposed filtering algorithm provides noise reduction and estimates the original signal efficiently, with the low values of filtering error for the whole signal duration. The signal value jump at is successfully reconstructed, in contrast to the sudden change in the signal value at where the algorithm did not adapt fast enough.

Figure 4.

HeaviSine signal: (a) Original signal. (b) Noisy signal (SNR = 7 dB). (c) Original and RBF-RICI filtered signal (). (d) Filtering error.

Table 22, Table 23 and Table 24 provide the filtering results for the RBF-RICI, the LPA-RICI, the LPA-ICI, and the Savitzky–Golay filtering algorithm in the case of the HeaviSine signal filtered at SNRs of 5, 7, and 10 dB, respectively. The obtained filtering quality indicators suggest that the RBF-RICI algorithm efficiently removes the noise, keeping the filtering error low. The filtering quality is somewhat reduced for lower SNR values but remains satisfactory. Table 25, Table 26 and Table 27 compare the filtering quality indicators obtained by the algorithms applied to the HeaviSine signal at SNRs of 5, 7, and 10 dB, respectively. The RBF-RICI algorithm shows better filtering performance than the LPA-RICI and LPA-ICI algorithms for each SNR case, but it is outperformed by the Savitzky–Golay filtering algorithm.

Table 22.

HeaviSine signal (SNR = 5 dB)—Filtering results.

Table 23.

HeaviSine signal (SNR = 7 dB)—Filtering results.

Table 24.

HeaviSine signal (SNR = 10 dB)—Filtering results.

Table 25.

HeaviSine signal (SNR = 5 dB)—Filtering quality improvement of the RBF-RICI-based filtering over other tested algorithms.

Table 26.

HeaviSine signal (SNR = 7 dB)—Filtering quality improvement of the RBF-RICI-based filtering over other tested algorithms.

Table 27.

HeaviSine signal (SNR = 10 dB)—Filtering quality improvement of the RBF-RICI-based filtering over other tested algorithms.

The runtimes of the filtering algorithms for the noisy HeaviSine signal at SNR levels of 5, 7, and 10 dB are shown in Table 28. The RBF-RICI algorithm is competitive with the LPA-RICI and LPA-ICI algorithms in terms of execution speed and is faster than both at the SNR of 10 dB. The Savitzky–Golay filtering algorithm is the fastest.

Table 28.

HeaviSine signal—Filtering algorithms’ runtimes.

3.1.5. Piece-Regular Signal

Figure 5a,b show the original and the noisy (SNR = 7 dB) Piece-Regular signal, respectively. The original and the RBF-RICI filtered signals are shown in Figure 5c, while Figure 5d shows the obtained estimation error. The results presented in Figure 5c,d suggest that the proposed adaptive algorithm provides excellent filtering accuracy, as the original signal and the filtered signal match closely. The algorithm adapts well to all sudden changes in signal slope and value.

Figure 5.

Piece-Regular signal: (a) Original signal. (b) Noisy signal (SNR = 7 dB). (c) Original and RBF-RICI filtered signal (). (d) Filtering error.

The results presented in Table 29, Table 30 and Table 31 suggest that the proposed RBF-RICI algorithm applied to the noisy Piece-Regular signal provides excellent filtering performance. The performance deteriorates somewhat with decreasing SNR. As shown in Table 32, Table 33 and Table 34, the RBF-RICI algorithm outperforms all other tested algorithms applied to the Piece-Regular signal for each considered SNR.

Table 29.

Piece-Regular signal (SNR = 5 dB)—Filtering results.

Table 30.

Piece-Regular signal (SNR = 7 dB)—Filtering results.

Table 31.

Piece-Regular signal (SNR = 10 dB)—Filtering results.

Table 32.

Piece-Regular signal (SNR = 5 dB)—Filtering quality improvement of the RBF-RICI-based filtering over other tested algorithms.

Table 33.

Piece-Regular signal (SNR = 7 dB)—Filtering quality improvement of the RBF-RICI-based filtering over other tested algorithms.

Table 34.

Piece-Regular signal (SNR = 10 dB)—Filtering quality improvement of the RBF-RICI-based filtering over other tested algorithms.

Table 35 gives the runtimes of the filtering algorithms applied to the noisy Piece-Regular signal at SNRs of 5, 7, and 10 dB. In this case, the RBF-RICI algorithm is slower than the other tested algorithms.

Table 35.

Piece-Regular signal—Filtering algorithms’ runtimes.

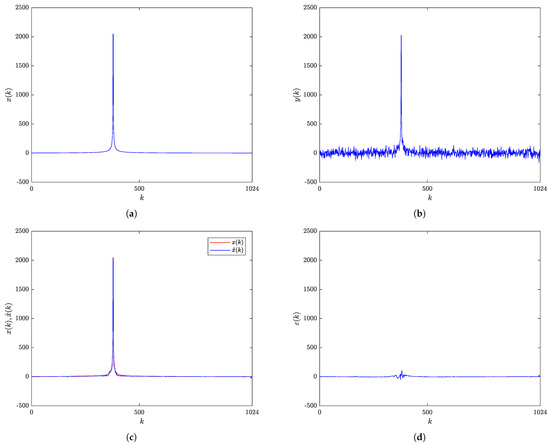

3.1.6. Sing Signal

The original Sing signal and the signal with added white Gaussian noise (SNR = 7 dB) are shown in Figure 6a,b, respectively. The original and the filtered signal are compared in Figure 6c, and the filtering error is shown in Figure 6d. The Sing signal has zero values for most of its duration and a single sudden peak of high amplitude, which makes it challenging for the filtering algorithm to adapt in a short period of time. Visual inspection of the presented results suggests that the optimized RBF-RICI algorithm provides excellent filtering performance, with efficient noise suppression and an almost perfect match between the original and the filtered signal over the entire signal duration.

Figure 6.

Sing signal: (a) Original signal. (b) Noisy signal (SNR = 7 dB). (c) Original and RBF-RICI filtered signal (). (d) Filtering error.

Table 36, Table 37 and Table 38 provide the results obtained by the RBF-RICI, the LPA-RICI, the LPA-ICI, and the Savitzky–Golay filtering algorithm when applied to the filtering of the noisy Sing signal at SNRs of 5, 7, and 10 dB, respectively. The analysis of the calculated filtering quality indicators suggests that the RBF-RICI algorithm efficiently removes the noise, decreasing the filtering error and increasing the SNR of the signal. The performance is slightly reduced at the lower SNR values. The comparison of the filtering quality indicators obtained by the tested algorithms applied to the Sing signal, given in Table 39, Table 40 and Table 41, suggests that the RBF-RICI algorithm shows better filtering performance than the other tested algorithms for each filtering quality indicator and each considered SNR case.

Table 36.

Sing signal (SNR = 5 dB)—Filtering results.

Table 37.

Sing signal (SNR = 7 dB)—Filtering results.

Table 38.

Sing signal (SNR = 10 dB)—Filtering results.

Table 39.

Sing signal (SNR = 5 dB)—Filtering quality improvement of the RBF-RICI-based filtering over other tested algorithms.

Table 40.

Sing signal (SNR = 7 dB)—Filtering quality improvement of the RBF-RICI-based filtering over other tested algorithms.

Table 41.

Sing signal (SNR = 10 dB)—Filtering quality improvement of the RBF-RICI-based filtering over other tested algorithms.

Table 42 provides the runtimes of the filtering algorithms for the noisy Sing signal at SNR levels of 5, 7, and 10 dB. The results show that the RBF-RICI algorithm is slower than the other tested algorithms.

Table 42.

Sing signal—Filtering algorithms’ runtimes.

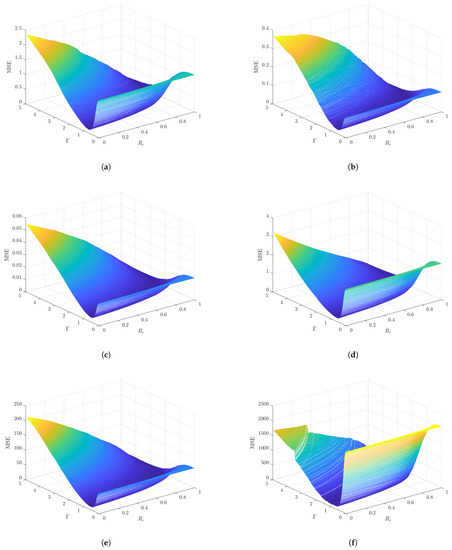

3.1.7. Parameters Sensitivity

In addition to the analysis provided above, Figure 7 shows the filtering quality measure MSE as a function of the RBF-RICI algorithm’s parameters and , for the algorithm applied to each considered noisy signal at the SNR of 7 dB. The MSE values are calculated during the grid search in the parameter space, within the range and . As can be seen, the estimation MSE, which determines the filtering performance, depends heavily on the proper selection of the algorithm’s parameters. Because an exhaustive grid search requires one MSE evaluation per grid point, a PSO-based parameter optimization procedure is proposed to reduce the total number of evaluations needed to find the parameter values that minimize the MSE.
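The exhaustive search behind Figure 7 can be sketched as follows. `objective` is a hypothetical callable that, in the paper’s setting, would run the RBF-RICI filter with a given parameter pair and return the MSE against the noise-free signal; any function of two arguments works here. The grids `values_a` and `values_b` are placeholders, since the actual search ranges are not given in this section.

```python
import itertools


def grid_search(objective, values_a, values_b):
    """Evaluate objective at every (a, b) grid point; return the best pair and the full surface."""
    surface = {}
    for a, b in itertools.product(values_a, values_b):
        # One (potentially expensive) filter run per grid point
        surface[(a, b)] = objective(a, b)
    best = min(surface, key=surface.get)
    return best, surface
```

For instance, `grid_search(lambda a, b: (a - 2) ** 2 + (b - 0.5) ** 2, [1, 2, 3], [0.25, 0.5, 0.75])` returns `(2, 0.5)` as the best pair after 9 evaluations. In general the cost is `len(values_a) * len(values_b)` evaluations, which grows quickly with grid resolution and motivates a swarm-based search.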

Figure 7.

The filtering quality indicator MSE as a function of the RBF-RICI algorithm’s parameters and for noisy signals at 7 dB SNR: (a) Blocks. (b) Bumps. (c) Doppler. (d) HeaviSine. (e) Piece-Regular. (f) Sing.

3.1.8. Effects of the Signal Length

The effects of the signal length on the RBF-RICI algorithm’s filtering performance are demonstrated on each considered test signal at the SNR of 7 dB, and the obtained results are shown in Table 43. The RBF-RICI parameters are set to the values found optimal in the previous analysis for each signal of length 1024 samples. As can be seen in Table 43, the filtering performance generally improves with increasing signal length, i.e., the filtering errors decrease and the SNR increases. The only exceptions are the MAXE, which does not always follow this declining trend, and the Sing signal, whose narrow, high-amplitude peak is not adequately captured at lower sampling rates. As expected, the algorithm runtime increases for longer signals.

Table 43.

Filtering results obtained by applying the RBF-RICI algorithm to signals of different lengths.

3.2. Parameters Optimization

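In this section, we formalize a single-objective optimization problem in which the MSE of the RBF-RICI filtered signal is minimized with respect to the algorithm’s two parameters.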

The optimization has been performed using the described evolutionary optimization methods with the key parameters given in Table 44.

Table 44.

Parameters of the considered optimization algorithms.

The number of particles in the swarm, s, the maximum number of iterations, , and the corresponding parameters have been kept equal for all considered optimization algorithms, so that every algorithm performs the same number of MSE evaluations and the algorithms can be compared fairly. In the EPS-PSO and MSPSO algorithms, which use multiple swarms, the individual swarm sizes have been scaled accordingly so that the total number of particles remains s.

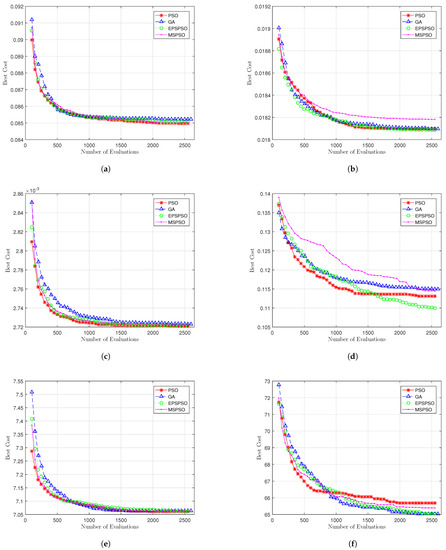

The computational results, including the average (Mean), the best (Best), the worst (Worst), the standard deviation (Std. Dev.), and the median (Median), obtained by the PSO-based and GA-based optimization algorithms applied to the parameters optimization problem for each considered noisy signal at the SNR of 7 dB, are given in Table 45. The convergence results for 50 independent runs are shown in Figure 8.
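The statistics in Table 45 summarize the best objective value reached in each independent run. A minimal sketch of that summary, assuming a minimization problem (so Best is the smallest value and Worst the largest) and the sample standard deviation, since the convention used for Table 45 is not stated in this section; the function name is hypothetical.

```python
import statistics


def summarize_runs(best_values):
    """Summary statistics of the best objective value (e.g., MSE) from independent runs."""
    return {
        "Mean": statistics.mean(best_values),
        "Best": min(best_values),    # minimization: lower is better
        "Worst": max(best_values),
        # Sample standard deviation (n - 1 denominator), an assumed convention
        "Std. Dev.": statistics.stdev(best_values),
        "Median": statistics.median(best_values),
    }
```

With 50 independent runs, `best_values` would hold 50 numbers, one final MSE per run.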

Table 45.

The computational results obtained by the PSO-based and GA-based optimization algorithms after 50 iterations, averaged over 50 independent runs.

Figure 8.

Convergence comparison between the considered optimization algorithms for signals: (a) Blocks. (b) Bumps. (c) Doppler. (d) HeaviSine. (e) Piece-Regular. (f) Sing.

All considered optimization algorithms have performed well for the Blocks signal. However, the PSO-based algorithms have a slight advantage over the GA with respect to the Worst, Std. Dev., and convergence, as shown in Figure 8a. All optimization algorithms have found the global optimum multiple times in 50 independent runs.

High optimization performance is also obtained for the Bumps signal. The difference from the previous case is that the GA performs as well as the PSO-based algorithms. The exception is the MSPSO algorithm, which provides the poorest performance with respect to the Mean, Worst, and Std. Dev., and Figure 8b clearly shows its poorer convergence. On the other hand, the EPS-PSO shows excellent performance, as it has converged to the global optimum in each independent run. As in the previous case, all optimization algorithms have found the global optimum at least once in 50 independent runs.

Excellent optimization performance is obtained for the Doppler signal. All considered optimization algorithms have found the global optimum numerous times in 50 independent runs, where the PSO and the EPS-PSO have converged to the global optimum in each optimization run.

The results obtained by the considered optimization algorithms differ the most for the HeaviSine signal example. Although all optimization algorithms have found the global optimum at least once in 50 independent runs, the obtained numerical results and graphical representation of the convergence suggest that the GA and the MSPSO have performed worse than the PSO and the EPS-PSO algorithms, with the EPS-PSO highlighted again as the best performing algorithm with respect to the obtained results statistics and convergence (Figure 8d).

High optimization performance is also obtained for the Piece-Regular signal, similar to the Doppler signal example. The only difference is that the MSPSO replaces the EPS-PSO as the best performing algorithm along with the PSO; both have found the global optimum in each independent run.

For the final signal example, the Sing signal, the optimization results highlight the MSPSO and, for the first time, the GA as the best performing algorithms with respect to the statistics and convergence, shown in Figure 8f. All considered optimization algorithms obtain equal Best and Worst solutions in 50 independent runs.

To sum up, all tested optimization algorithms perform well for our optimization problem, successfully finding the global optimum for all signal examples. The larger single swarm of the PSO algorithm speeds up convergence during the initial evaluations. However, the EPS-PSO algorithm, with its greater ability to escape from local optima, stands out as the best performing optimization algorithm for our signal examples.

3.3. Experimental Results for Real-Life Signals

To test the proposed RBF-RICI filtering algorithm in real-life conditions, we applied it to noisy measured maritime signals as an example of a practical application. The measurements were obtained from a buoy located in the Atlantic Ocean, approximately 210 nautical miles west-southwest of Slyne Head off the west coast of Ireland. The data are provided by Met Éireann, Ireland’s National Meteorological Service, as an open-access dataset publicly available at https://data.gov.ie/dataset/hourly-data-for-buoy-m6 (accessed on 17 April 2021). The dataset contains hourly measurements from 2006 to the present.

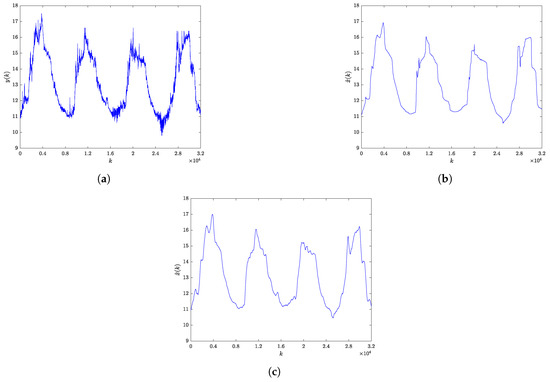

Figure 9a shows the noisy measurements of the sea temperature (°C), while Figure 9b,c show the sea temperature signal obtained after applying the RBF-RICI filtering algorithm and the Savitzky–Golay filtering algorithm, respectively. The RBF-RICI algorithm’s parameters are set to and . All real-life maritime signals considered in this analysis are filtered using the Savitzky–Golay filter with a second-order polynomial and the window width set to of the signal length. This window width was chosen because widths of that order of magnitude proved optimal in the analysis of several synthetic signals.
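For reference, a second-order Savitzky–Golay smoother can be written without external libraries using the closed-form convolution coefficients c_i = (3(3m² + 3m - 1) - 15i²) / ((2m - 1)(2m + 1)(2m + 3)), i = -m, …, m, for a window of 2m + 1 samples. This is a sketch, not the implementation used in the paper: the edge handling (mirroring the signal without repeating the edge sample) is an assumption, and because the window fraction is not given here, the window length is left as a parameter.

```python
def savgol_quadratic_coeffs(half_width):
    """Smoothing coefficients of a quadratic Savitzky-Golay filter of length 2m + 1."""
    m = half_width
    denom = (2 * m - 1) * (2 * m + 1) * (2 * m + 3)
    return [(3 * (3 * m * m + 3 * m - 1) - 15 * i * i) / denom for i in range(-m, m + 1)]


def savgol_quadratic(signal, window):
    """Smooth a list with an odd-length quadratic Savitzky-Golay window (mirrored edges)."""
    if window % 2 == 0 or window < 3:
        raise ValueError("window must be an odd integer >= 3")
    m = window // 2
    if len(signal) <= m:
        raise ValueError("signal must be longer than half the window")
    coeffs = savgol_quadratic_coeffs(m)
    signal = list(signal)
    # Mirror around the first and last samples, excluding the edge samples themselves
    padded = signal[m:0:-1] + signal + signal[-2:-m - 2:-1]
    # Output k is the least-squares quadratic fit evaluated at the window centre
    return [sum(c * padded[k + j] for j, c in enumerate(coeffs)) for k in range(len(signal))]
```

The coefficients sum to one and reproduce any quadratic exactly away from the edges. Where SciPy is available, `scipy.signal.savgol_filter(x, window_length, 2, mode="mirror")` should give the same result up to rounding for an odd `window_length`.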

Figure 9.

Sea temperature: (a) Noisy measured signal. (b) RBF-RICI filtered signal. (c) Savitzky–Golay filtered signal.

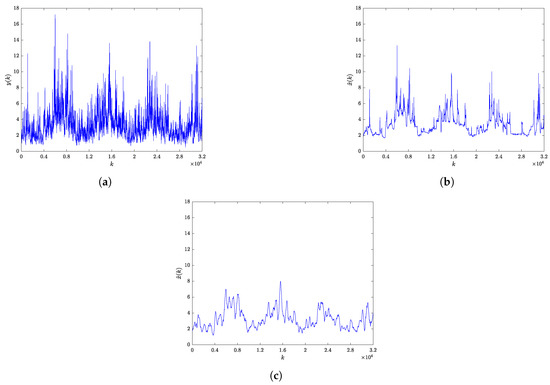

The measurements of the significant wave height (m) are shown in Figure 10a, while Figure 10b,c show the same data after applying the RBF-RICI and the Savitzky–Golay filtering algorithms, respectively. In this case, the RBF-RICI parameters are set to and .

Figure 10.

Significant wave height: (a) Noisy measured signal. (b) RBF-RICI filtered signal. (c) Savitzky–Golay filtered signal.

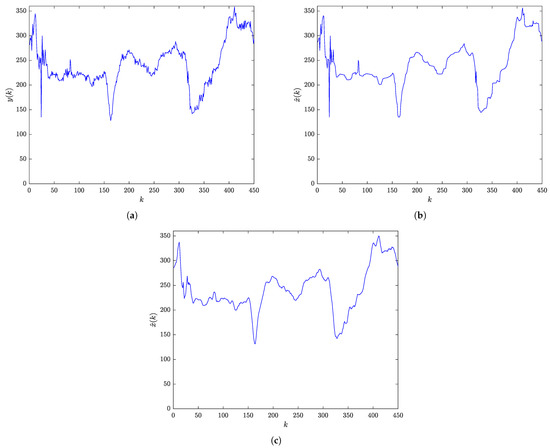

Figure 11a shows the noisy measurements of the wave direction (°), whereas Figure 11b shows the wave direction signal obtained after applying the RBF-RICI filtering algorithm, whose parameters are tuned to the values and . Figure 11c shows the signal obtained after application of the Savitzky–Golay filter.

Figure 11.

Wave direction: (a) Noisy measured signal. (b) RBF-RICI filtered signal. (c) Savitzky–Golay filtered signal.

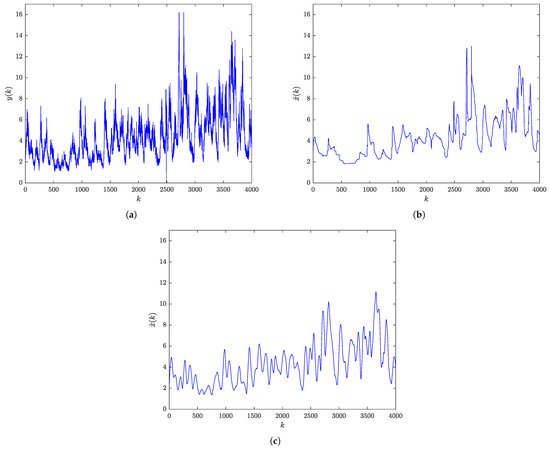

The measurements of the individual maximum wave height (m) are shown in Figure 12a, whereas Figure 12b shows the same data after application of the RBF-RICI filtering algorithm, whose parameters are set to the values and . The Savitzky–Golay filtered signal is shown in Figure 12c.

Figure 12.

Individual maximum wave height: (a) Noisy measured signal. (b) RBF-RICI filtered signal. (c) Savitzky–Golay filtered signal.

As shown in Figure 9, Figure 10, Figure 11 and Figure 12, the RBF-RICI filtering algorithm reduces the noise level in the real-life measurements and successfully reconstructs the main morphological features of the underlying signals, performing competitively with the conventionally applied Savitzky–Golay filtering algorithm. The RBF-RICI filtering therefore makes the useful information and trends in the measurement data easier to observe, and the filtered signals may be used for further analysis and processing. Moreover, the RBF-RICI algorithm’s parameters and may be set to the values found optimal for simulated signals of similar morphology. These parameters can also be further adjusted to achieve different levels of smoothness in the filtered signal. The parameters obtained by this data-driven approach may then be successfully used to filter other signals of the same type and with similar characteristics.
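To illustrate how a width parameter controls the smoothness of a kernel smoother, the sketch below implements a fixed-width Gaussian (RBF) kernel smoother in the Nadaraya–Watson form. It is a simplification, not the RBF-RICI algorithm: the proposed method varies the kernel width from sample to sample using the RICI rule, whereas here a single hypothetical `width` (in samples) is used everywhere, and the kernel is truncated at four widths for speed.

```python
import math


def rbf_kernel_smoother(signal, width):
    """Fixed-width Gaussian (RBF) kernel smoother in Nadaraya-Watson form."""
    if width <= 0:
        raise ValueError("width must be positive")
    n = len(signal)
    radius = math.ceil(4 * width)  # Gaussian weights beyond four widths are negligible
    smoothed = []
    for k in range(n):
        num = 0.0
        den = 0.0
        for j in range(max(0, k - radius), min(n, k + radius + 1)):
            w = math.exp(-0.5 * ((j - k) / width) ** 2)
            num += w * signal[j]
            den += w
        # Normalizing by the weight sum also handles the truncated windows at the edges
        smoothed.append(num / den)
    return smoothed
```

A larger `width` averages over more samples and yields a smoother output, but it also blurs sharp transitions; a locally adaptive width is intended to balance these two effects.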

4. Conclusions

In this paper, we proposed an adaptive RBF-RICI filtering algorithm whose parameters are adjusted using a PSO-based procedure. The RBF-RICI algorithm’s filtering performance was analyzed on several synthetic noisy signals, showing that the algorithm is efficient in noise suppression and filtering error reduction. Moreover, compared with similar filtering algorithms, the proposed algorithm shows better or competitive filtering performance in most considered test cases. Finally, we applied the proposed algorithm to noisy measured maritime data, demonstrating its potential for successful use in real-world practical applications. Other possible applications in the maritime sector include signals obtained by various ship measurement and detection sensors and systems, meteorological sea data, navigational data, etc. Moreover, the application of the proposed PSO-enhanced RBF-RICI filtering algorithm is not limited to the maritime transport sector but may be generalized to other fields dealing with nonstationary data.

Author Contributions

Conceptualization, N.L., I.J., and J.L.; methodology, N.L. and J.L.; software, N.L. and J.L.; validation, N.L.; formal analysis, N.L.; investigation, N.L.; resources, N.L., I.J. and J.L.; data curation, N.L.; writing—original draft preparation, N.L.; writing—review and editing, N.L., I.J., J.L., and N.W.; visualization, N.L.; supervision, I.J., J.L., and N.W.; project administration, I.J. and J.L.; funding acquisition, I.J. and J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Croatian Science Foundation under the project IP-2018-01-3739, EU Horizon 2020 project “National Competence Centres in the Framework of EuroHPC (EUROCC)”, IRI2 project “ABsistemDCiCloud” (KK.01.2.1.02.0179), the University of Rijeka under the projects uniri-tehnic-18-17 and uniri-tehnic-18-15, and the University of Rijeka, Faculty of Maritime Studies under the project “Development of advanced digital signal processing algorithms with application in the maritime sector”. The APC was funded by the University of Rijeka, Faculty of Maritime Studies.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Publicly available datasets were analyzed in this study. This data can be found here: https://data.gov.ie/dataset/hourly-data-for-buoy-m6 (accessed on 18 April 2021).

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

| Algorithm A1 PSO algorithm-general |
|---|
| Require: Ensure: |
| 1: Initialization: |
| 2: for to s do |
| 3: Initialize position , velocity and particle’s personal best ; |
| 4: Perform the function evaluation ; |
| 5: |
| 6: if then |
| 7: ; |
| 8: end if |
| 9: end for |
| 10: Main loop: |
| 11: for to do |
| 12: (17); |
| 13: for to s do |
| 14: (15), (16); |
| 15: Perform the function evaluation ; |
| 16: if then |
| 17: ; |
| 18: end if |
| 19: if then |
| 20: ; |
| 21: end if |
| 22: end for |
| 23: end for |
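Algorithm A1 can be sketched in Python as a global-best PSO. The main loop of the pseudocode applies Equation (17) once per iteration and Equations (15) and (16) once per particle; since their exact forms are not reproduced in this appendix, the sketch assumes the common variant with a linearly decreasing inertia weight, acceleration coefficients `c1` and `c2`, velocity clamping, and clipping of positions to the search bounds. All default values are placeholders rather than the settings of Table 44, and the function name is hypothetical. In the paper’s setting, `objective` would return the MSE of the filtered signal for a candidate pair of RBF-RICI parameters.

```python
import random


def pso_minimize(objective, bounds, s=20, max_iter=50, c1=2.0, c2=2.0,
                 w_max=0.9, w_min=0.4, seed=None):
    """Global-best PSO (assumed variant with a linearly decreasing inertia weight)."""
    rng = random.Random(seed)
    dim = len(bounds)
    # Velocity clamp at 20% of each search range (assumption)
    v_max = [0.2 * (hi - lo) for lo, hi in bounds]

    # Initialization: random positions, zero velocities, personal bests = start points
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(s)]
    vel = [[0.0] * dim for _ in range(s)]
    pbest = [p[:] for p in pos]
    pbest_val = [objective(p) for p in pos]
    g = min(range(s), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]

    # Main loop
    for it in range(max_iter):
        # Inertia weight decreases linearly from w_max to w_min
        w = w_max - (w_max - w_min) * it / max(max_iter - 1, 1)
        for i in range(s):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                v = (w * vel[i][d]
                     + c1 * r1 * (pbest[i][d] - pos[i][d])
                     + c2 * r2 * (gbest[d] - pos[i][d]))
                vel[i][d] = max(-v_max[d], min(v_max[d], v))
                lo, hi = bounds[d]
                pos[i][d] = max(lo, min(hi, pos[i][d] + vel[i][d]))
            val = objective(pos[i])
            # Update the personal best and, if it improves, the global best
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val
```

Checking the global best only when a personal best improves is equivalent to the two separate tests in lines 16–21 of the pseudocode, because the global best value is never larger than any personal best value. The total budget is s evaluations for initialization plus s per iteration, which is why s and the iteration count were kept equal across the compared optimizers.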

| Algorithm A2 EPS-PSO algorithm-general |
|---|
| Require: Ensure: |
| 1: Initialization: |
| 2: for each particle in both swarms do |
| 3: Initialize position , velocity and particle’s personal best ; |
| 4: Perform the function evaluation ; |
| 5: |
| 6: if then |
| 7: ; |
| 8: end if |
| 9: end for |
| 10: Main loop: |
| 11: for to do |
| 12: (17); |
| 13: for each particle in both swarms do |
| 14: (15), (16); |
| 15: Perform the function evaluation ; |
| 16: if the criterion of the reinitialization period for the cosearch swarm is met then |
| 17: for each particle in the cosearch swarm do |
| 18: Reinitialize position , velocity and particle’s personal best ; |
| 19: Perform the evaluation ; |
| 20: if then |
| 21: ; |
| 22: end if |
| 23: end for |
| 24: end if |
| 25: end for |
| 26: end for |

| Algorithm A3 MSPSO algorithm-general |
|---|
| Require: Ensure: |
| 1: Initialization: |
| 2: for to do |
| 3: for to s do |
| 4: Initialize position , velocity and particle’s personal best |
| 5: Perform the function evaluation |
| 6: ; |
| 7: if then |
| 8: ; |
| 9: end if |
| 10: end for |
| 11: end for |
| 12: Main loop: |
| 13: for to do |
| 14: (17); |
| 15: for to do |
| 16: for to s do |
| 17: (15), (16); |
| 18: Perform the function evaluation |
| 19: if then |
| 20: ; |
| 21: end if |
| 22: if then |
| 23: ; |
| 24: end if |
| 25: end for |
| 26: end for |
| 27: |
| 28: end for |

| Algorithm A4 GA algorithm-general |
|---|
| Require: Ensure: |
| 1: Initialization: |
| 2: for to s do |
| 3: Initialize position , velocity and particle’s personal best |
| 4: Perform the function evaluation |
| 5: end for |
| 6: Sort population in descending order; |
| 7: ; |
| 8: Main loop: |
| 9: for to do |
| 10: for to do |
| 11: Compute crossovers and form new subpopulation; |
| 12: end for |
| 13: for to do |
| 14: Compute mutation and form new subpopulation; |
| 15: end for |
| 16: Create merged population; |
| 17: Sort population in descending order; |
| 18: Truncate population to s best performing particles; |
| 19: ; |
| 20: end for |

References

- Broomhead, D.; King, G.P. Extracting qualitative dynamics from experimental data. Phys. D Nonlinear Phenom. 1986, 20, 217–236.

- Smyth, A.; Wu, M. Multi-rate Kalman filtering for the data fusion of displacement and acceleration response measurements in dynamic system monitoring. Mech. Syst. Signal Process. 2007, 21, 706–723.

- Knowles, I.; Renka, R.J. Methods for numerical differentiation of noisy data. Electron. J. Diff. Eqns. 2014, 21, 235–246.

- Layden, D.; Cappellaro, P. Spatial noise filtering through error correction for quantum sensing. Npj Quantum Inf. 2018, 4, 1–6.

- García-Gil, D.; Luengo, J.; García, S.; Herrera, F. Enabling Smart Data: Noise filtering in Big Data classification. Inf. Sci. 2019, 479, 135–152.

- Li, H.; Gedikli, E.D.; Lubbad, R. Exploring time-delay-based numerical differentiation using principal component analysis. Phys. A Stat. Mech. Its Appl. 2020, 556, 124839.

- Zhao, Z.; Wang, S.; Wong, D.; Sun, C.; Yan, R.; Chen, X. Robust enhanced trend filtering with unknown noise. Signal Process. 2021, 180, 107889.

- Ehlers, F.; Fox, W.; Maiwald, D.; Ulmke, M.; Wood, G. Advances in Signal Processing for Maritime Applications. EURASIP J. Adv. Signal Process. 2010.

- Singer, A.C.; Nelson, J.K.; Kozat, S.S. Signal processing for underwater acoustic communications. IEEE Commun. Mag. 2009, 47, 90–96.

- Sazontov, A.; Malekhanov, A. Matched field signal processing in underwater sound channels. Acoust. Phys. 2015, 61, 213–230.

- Yuan, F.; Ke, X.; Cheng, E. Joint Representation and Recognition for Ship-Radiated Noise Based on Multimodal Deep Learning. J. Mar. Sci. Eng. 2019, 7, 380.

- Tu, Q.; Yuan, F.; Yang, W.; Cheng, E. An Approach for Diver Passive Detection Based on the Established Model of Breathing Sound Emission. J. Mar. Sci. Eng. 2020, 8, 44.

- Vicen-Bueno, R.; Carrasco-Álvarez, R.; Rosa-Zurera, M.; Nieto-Borge, J.C.; Jarabo-Amores, M.P. Artificial Neural Network-Based Clutter Reduction Systems for Ship Size Estimation in Maritime Radars. EURASIP J. Adv. Signal Process. 2010, 2010, 380473.

- Ristic, B.; Rosenberg, L.; Kim, D.Y.; Guan, R. Bernoulli filter for tracking maritime targets using point measurements with amplitude. Signal Process. 2021, 181, 107919.

- Schettini, R.; Corchs, S. Underwater Image Processing: State of the Art of Restoration and Image Enhancement Methods. EURASIP J. Adv. Signal Process. 2010, 2010, 746052.

- Lu, H.; Li, Y.; Zhang, Y.; Chen, M.; Serikawa, S.; Kim, H. Underwater Optical Image Processing: A Comprehensive Review. Mob. Netw. Appl. 2017, 22, 1204–1211.

- Huang, Y.; Li, W.; Yuan, F. Speckle Noise Reduction in Sonar Image Based on Adaptive Redundant Dictionary. J. Mar. Sci. Eng. 2020, 8, 761.

- Ricci, R.; Francucci, M.; De Dominicis, L.; Ferri de Collibus, M.; Fornetti, G.; Guarneri, M.; Nuvoli, M.; Paglia, E.; Bartolini, L. Techniques for Effective Optical Noise Rejection in Amplitude-Modulated Laser Optical Radars for Underwater Three-Dimensional Imaging. EURASIP J. Adv. Signal Process. 2010, 2010, 958360.

- Kim, K.S.; Lee, J.B.; Roh, M.I.; Han, K.M.; Lee, G.H. Prediction of Ocean Weather Based on Denoising AutoEncoder and Convolutional LSTM. J. Mar. Sci. Eng. 2020, 8, 805.

- Yuan, J.; Guo, J.; Niu, Y.; Zhu, C.; Li, Z.; Liu, X. Denoising Effect of Jason-1 Altimeter Waveforms with Singular Spectrum Analysis: A Case Study of Modelling Mean Sea Surface Height over South China Sea. J. Mar. Sci. Eng. 2020, 8, 426.

- Wei, J.; Xie, T.; Shi, M.; He, Q.; Wang, T.; Amirat, Y. Imbalance Fault Classification Based on VMD Denoising and S-LDA for Variable-Speed Marine Current Turbine. J. Mar. Sci. Eng. 2021, 9, 248.

- Katkovnik, V.; Egiazarian, K.; Astola, J. Local Approximation Techniques in Signal and Image Processing; SPIE—The International Society for Optical Engineering: Bellingham, WA, USA, 2006.

- Goldenshluger, A.; Nemirovski, A. On spatially adaptive estimation of nonparametric regression. Math. Methods Stat. 1997, 6, 135–170.

- Katkovnik, V. A new method for varying adaptive bandwidth selection. IEEE Trans. Signal Process. 1999, 47, 2567–2571.

- Katkovnik, V.; Shmulevich, I. Kernel density estimation with adaptive varying window size. Pattern Recognit. Lett. 2002, 23, 1641–1648.

- Lerga, J.; Vrankic, M.; Sucic, V. A Signal Denoising Method Based on the Improved ICI Rule. IEEE Signal Process. Lett. 2008, 15, 601–604.

- Sucic, V.; Lerga, J.; Vrankic, M. Adaptive filter support selection for signal denoising based on the improved ICI rule. Digit. Signal Process. 2013, 23, 65–74.

- Katkovnik, V. Multiresolution local polynomial regression: A new approach to pointwise spatial adaptation. Digit. Signal Process. 2005, 15, 73–116.

- Cai, Z. Weighted Nadaraya–Watson Regression Estimation. Stat. Probab. Lett. 2001, 51, 307–318.

- Katkovnik, V.; Egiazarian, K.; Astola, J. Adaptive window size image de-noising based on intersection of confidence intervals (ICI) rule. J. Math. Imaging Vis. 2002, 16, 223–235.

- Katkovnik, V.; Egiazarian, K.; Astola, J. Adaptive Varying Scale Methods in Image Processing; TTY Monistamo: Tampere, Finland, 2003; Volume 19.

- Lerga, J.; Sucic, V.; Sersic, D. Performance analysis of the LPA-RICI denoising method. In Proceedings of the 2009 6th International Symposium on Image and Signal Processing and Analysis, Salzburg, Austria, 16–18 September 2009; pp. 28–33.

- Lopac, N.; Lerga, J.; Cuoco, E. Gravitational-Wave Burst Signals Denoising Based on the Adaptive Modification of the Intersection of Confidence Intervals Rule. Sensors 2020, 20, 6920.

- Evangeline, S.I.; Rathika, P. Particle Swarm optimization Algorithm for Optimal Power Flow Incorporating Wind Farms. In Proceedings of the 2019 IEEE International Conference on Intelligent Techniques in Control, Optimization and Signal Processing (INCOS), Tamilnadu, India, 11–13 April 2019; pp. 1–4.

- Fan, S.K.S.; Jen, C.H. An Enhanced Partial Search to Particle Swarm Optimization for Unconstrained Optimization. Mathematics 2019, 7, 357.

- Garg, H. A hybrid PSO-GA algorithm for constrained optimization problems. Appl. Math. Comput. 2016, 274, 292–305.

- Shen, Y.; Li, Y.; Kang, H.; Zhang, Y.; Sun, X.; Chen, Q.; Peng, J.; Wang, H. Research on Swarm Size of Multi-swarm Particle Swarm Optimization Algorithm. In Proceedings of the 2018 IEEE 4th International Conference on Computer and Communications (ICCC), Chengdu, China, 7–10 December 2018; pp. 2243–2247.

- Donoho, D.L.; Johnstone, I.M. Adapting to Unknown Smoothness via Wavelet Shrinkage. J. Am. Stat. Assoc. 1995, 90, 1200–1224.

- Schafer, R.W. What Is a Savitzky-Golay Filter? [Lecture Notes]. IEEE Signal Process. Mag. 2011, 28, 111–117.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).