Comparative Analysis of Recurrent vs. Temporal Convolutional Autoencoders for Detecting Container Impacts During Quay Crane Handling

Abstract

1. Introduction

- This paper is believed to be one of the first to embed autoencoders in edge sensors for the dynamic condition monitoring of containers. This approach utilises time-series analytics for anomaly detection, showcasing a novel application in the logistics sector.

- To further test the embedded approach by implementing an autoencoder-based anomaly detector within the selected sensor device. The detector processes data locally, reducing the dependency on remote cloud services and minimising the latency and network strain typically associated with large-scale data transmission.

- To validate the proposed system in a live operational environment by testing its robustness and reliability under real-world conditions at a container terminal. This includes evaluating the system’s ability to accurately detect anomalies in real time and assessing its impact on the overall efficiency of container monitoring.

- To demonstrate the potential of edge computing in logistical operations by showcasing how edge computing can be leveraged to solve complex monitoring tasks that benefit from immediate data processing, such as the dynamic condition monitoring of containers. This includes providing systematic early warnings to repair personnel, crane operators, and terminal managers and facilitating proactive maintenance and operational decisions.

- To contribute to the academic and industrial understanding of autoencoder applications in logistics by presenting findings that could pave the way for further research and development in the integration of advanced machine learning techniques, like autoencoders, in logistics and supply chain management.

2. Materials and Methods

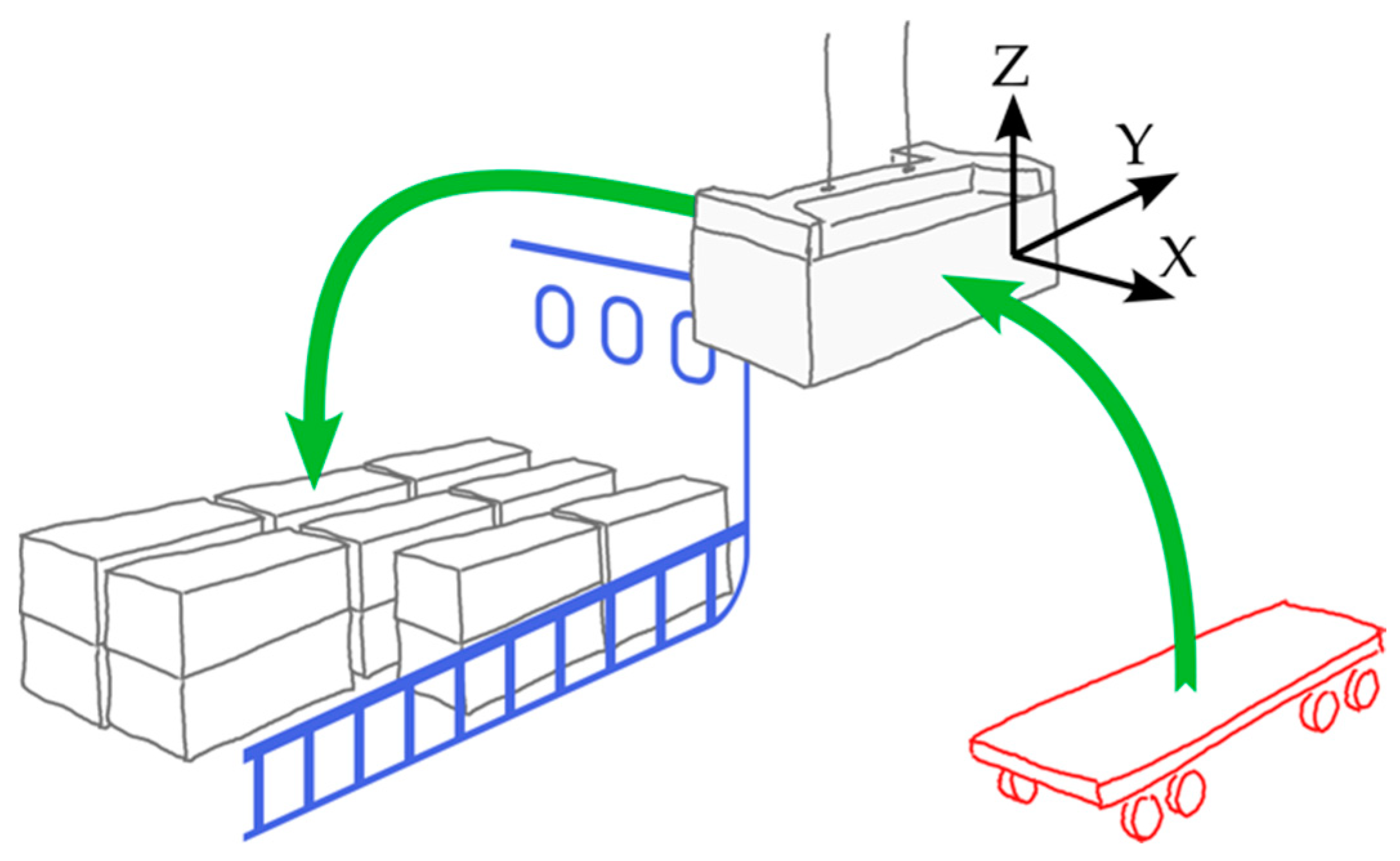

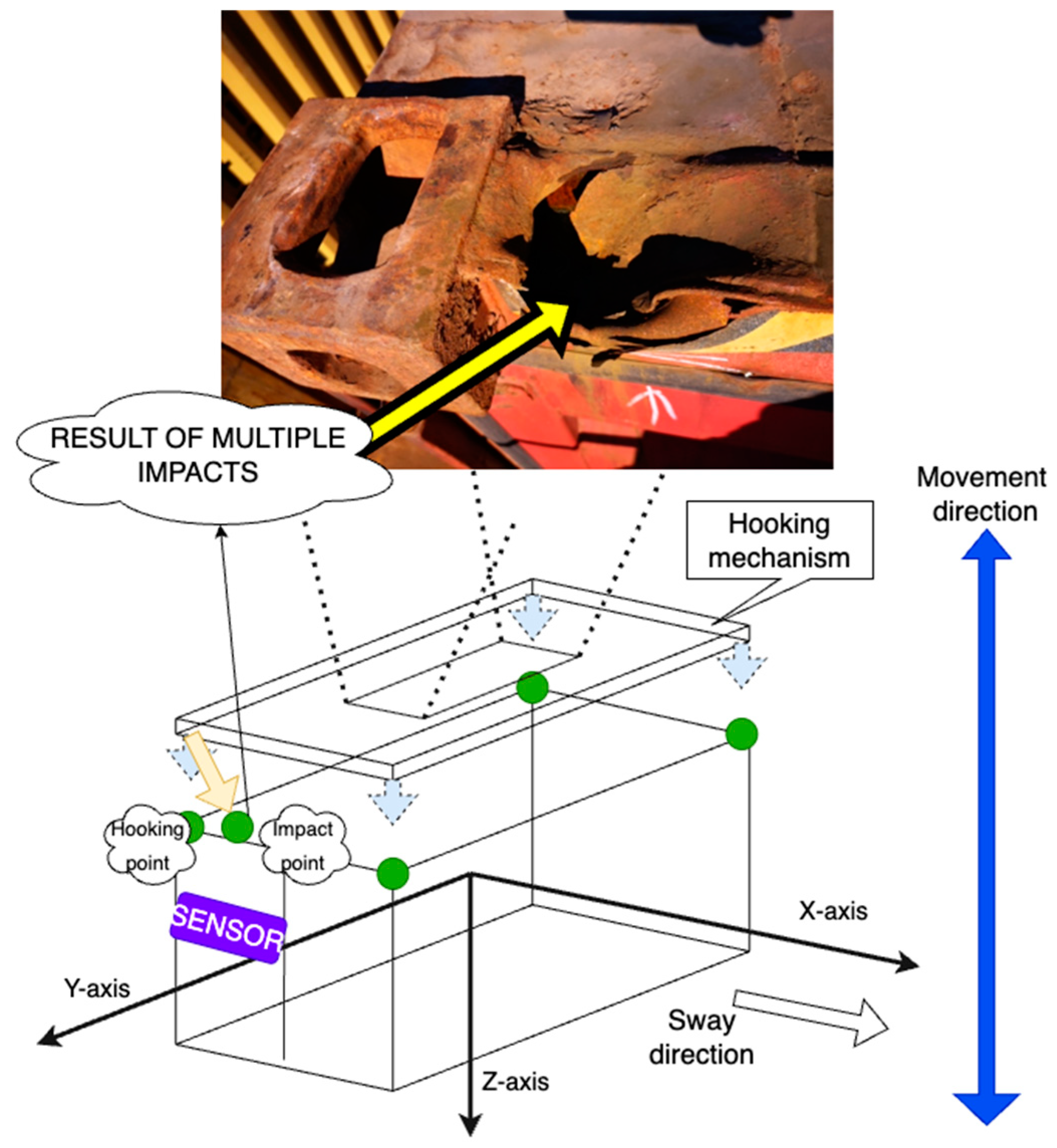

2.1. Description of the Experimental Setup

- Data transmission unit using Bluetooth 5.1.

- Raspberry Pi 4 (four ARM A72 1.5 GHz cores, 8 GB of RAM) with a 128 GB SD UHS-I memory card.

- SINDT-232 digital accelerometer with a high-stability 200 Hz MPU6050 3-axis acceleration sensor (WitMotion Shenzhen Co., Ltd., Shenzhen, China), with 0.05-degree accuracy and an acceleration range of ±16 g.

- Internal 33,000 mAh battery.

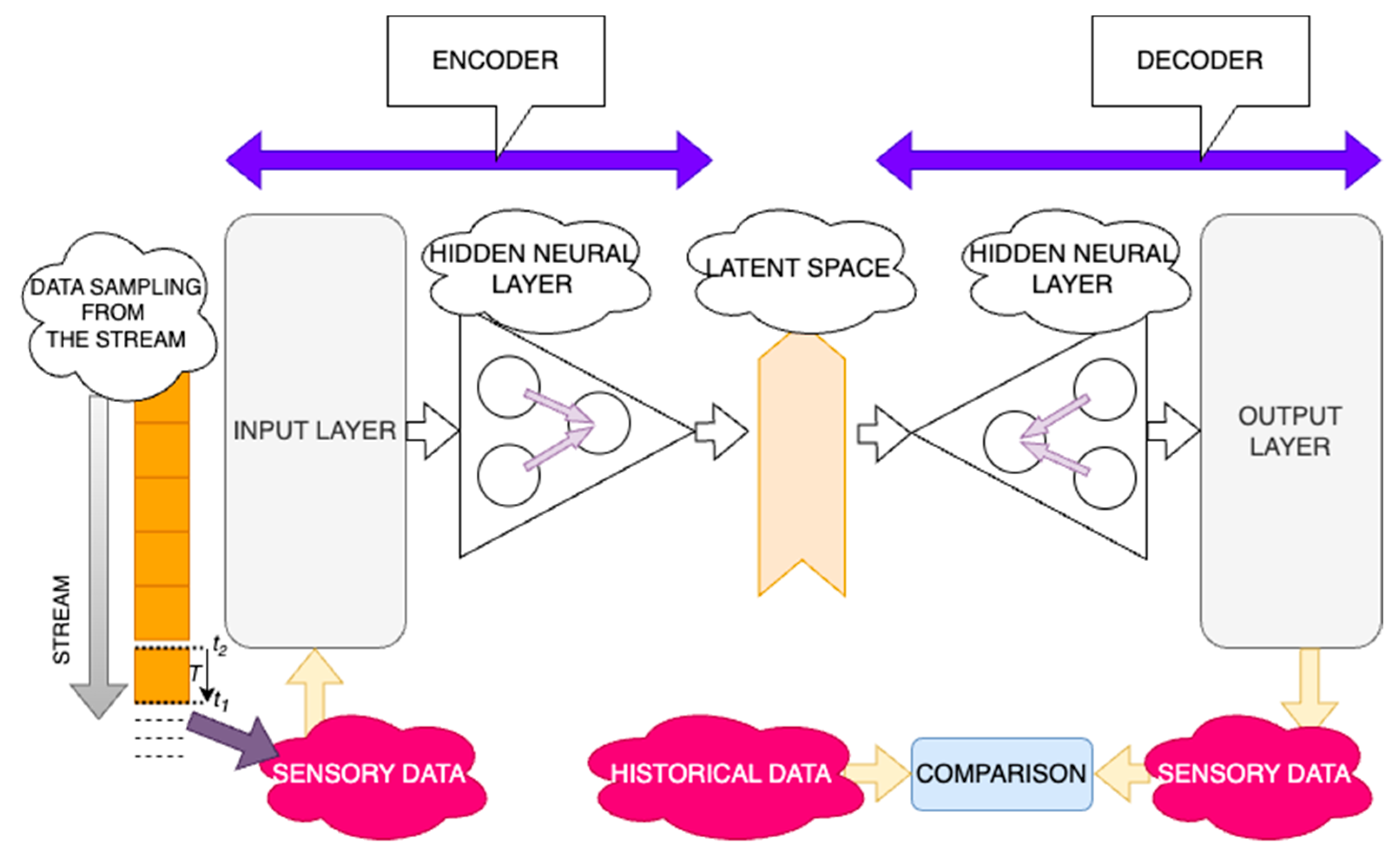

2.2. Structure of an Autoencoder

- Encoder: This part of the network compresses the input into a smaller, encoded representation. The encoder function is typically denoted as $z = f_\theta(x)$.

- Decoder: This part attempts to reconstruct the input from the encoded representation. The decoder function is typically denoted as $\hat{x} = g_\phi(z)$.

- Recurrent Autoencoders (RAEs) utilise recurrent neural network (RNN) architectures, like Long Short-Term Memory (LSTM) or Gated Recurrent Units (GRUs), in both the encoder and decoder parts. These models are highly effective for time-series data due to their ability to maintain state or memory across time steps, capturing the dynamic temporal behaviour of the data.

- Encoder: Processes the input time-series data and compresses it into a latent space representation. It typically consists of several layers of LSTM or GRU cells that process each time step sequentially, updating their internal state based on both the current input and the previous hidden state: $h_t = \mathrm{LSTM}(x_t, h_{t-1})$.

- Here, $x_t$ is the input at time $t$, $h_{t-1}$ is the hidden state from the previous time step, and $h_t$ is the output of the LSTM representing the encoded state.

- Decoder: Starts from the latent representation and attempts to reconstruct the original time-series data over time. The decoder can also be a sequence of LSTM or GRU cells, which takes the latent representation and reconstructs the sequence, potentially in reverse order or from a condensed state (7).

- Here, $\hat{x}_t$ represents the reconstructed output at time $t$, and $h'_{t-1}$ is the hidden state from the previous time step in the decoder.

- Temporal Convolutional Autoencoders (TCAEs) utilise convolutional neural network (CNN) architectures specifically designed for sequence data, referred to as 1D CNNs. These models are beneficial for handling large datasets where capturing long-term dependencies explicitly with RNNs might be computationally expensive.

- Encoder: Applies convolutional filters to the input sequence to extract features over multiple time steps simultaneously. This is performed using 1D convolutions that slide over the time dimension, capturing patterns and anomalies in the data (8).

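- Here, $Z = \mathrm{ReLU}(W \ast X + b)$, where $X$ is the input sequence, $Z$ is the encoded output, $\ast$ denotes the convolution operation, $W$ and $b$ are the weights and biases of the convolutional layers, respectively, and ReLU is the activation function.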

- Decoder: Used to map the latent space back to the time-series data’s original dimensionality. This part of the network learns to reconstruct the time sequence from the compressed encoded features (9).

- $x_t$ is the input at time $t$.

- $\hat{x}_t$ is the reconstruction output at time step $t$.

- $T$ is the total number of time steps in the time-series.

- $\lVert \cdot \rVert^2$ denotes the squared Euclidean distance, ensuring that all errors are positive and that larger errors are penalised more heavily.

- $\lambda$ is the regularisation strength.

- $|w|$ is the absolute value of each weight in the network.

- $w^2$ is the square of each weight in the network.

- $N$ is the number of training samples.

- $\theta$ and $\phi$ are the encoder and decoder parameters, respectively.
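
The definitions listed above fit a standard regularised reconstruction objective. The equations themselves are not reproduced in this text, so the following is only a sketch under assumed notation: $x_t$ and $\hat{x}_t$ for the input and its reconstruction, $T$ time steps, $N$ training samples, regularisation strength $\lambda$, network weights $w_i$, and an encoder $f_\theta$ and decoder $g_\phi$. It is not necessarily the exact form used in this work.

```latex
% Reconstruction error for one window of T time steps
\mathcal{L}_{\mathrm{rec}}(x, \hat{x}) = \frac{1}{T} \sum_{t=1}^{T} \lVert x_t - \hat{x}_t \rVert^{2}

% L1 (absolute value) and L2 (squared) weight penalties with strength \lambda
\Omega_{1} = \lambda \sum_{i} \lvert w_i \rvert, \qquad \Omega_{2} = \lambda \sum_{i} w_i^{2}

% Training objective over N samples; \Omega stands for whichever penalty is applied
\min_{\theta, \phi} \; \frac{1}{N} \sum_{n=1}^{N} \mathcal{L}_{\mathrm{rec}}\bigl(x^{(n)}, g_\phi(f_\theta(x^{(n)}))\bigr) + \Omega
```

Squaring the per-step difference keeps every term non-negative and weights large deviations, such as impact spikes, more heavily than small ones.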

3. Results and Discussions

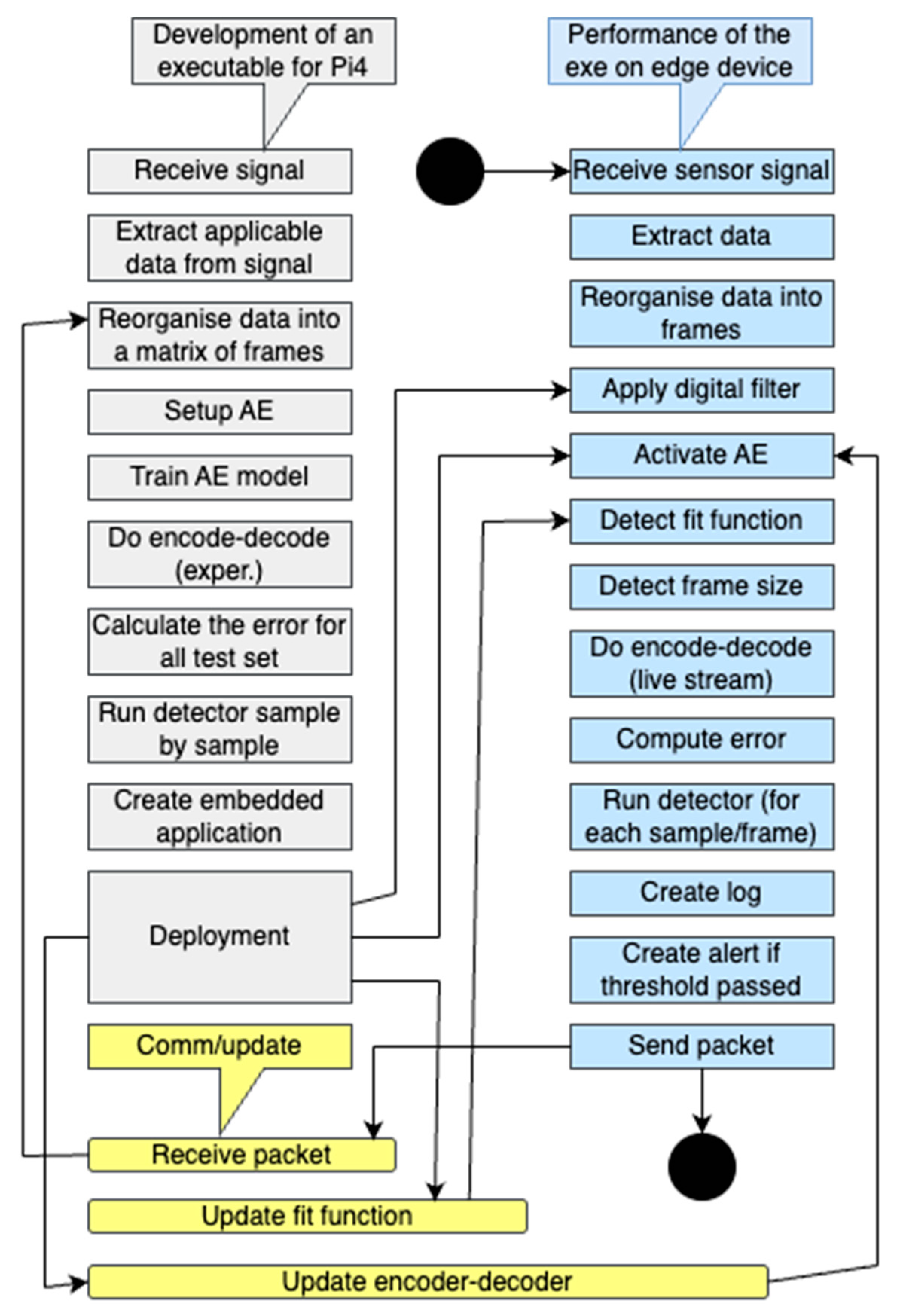

3.1. Data Preparation and Analytical Steps

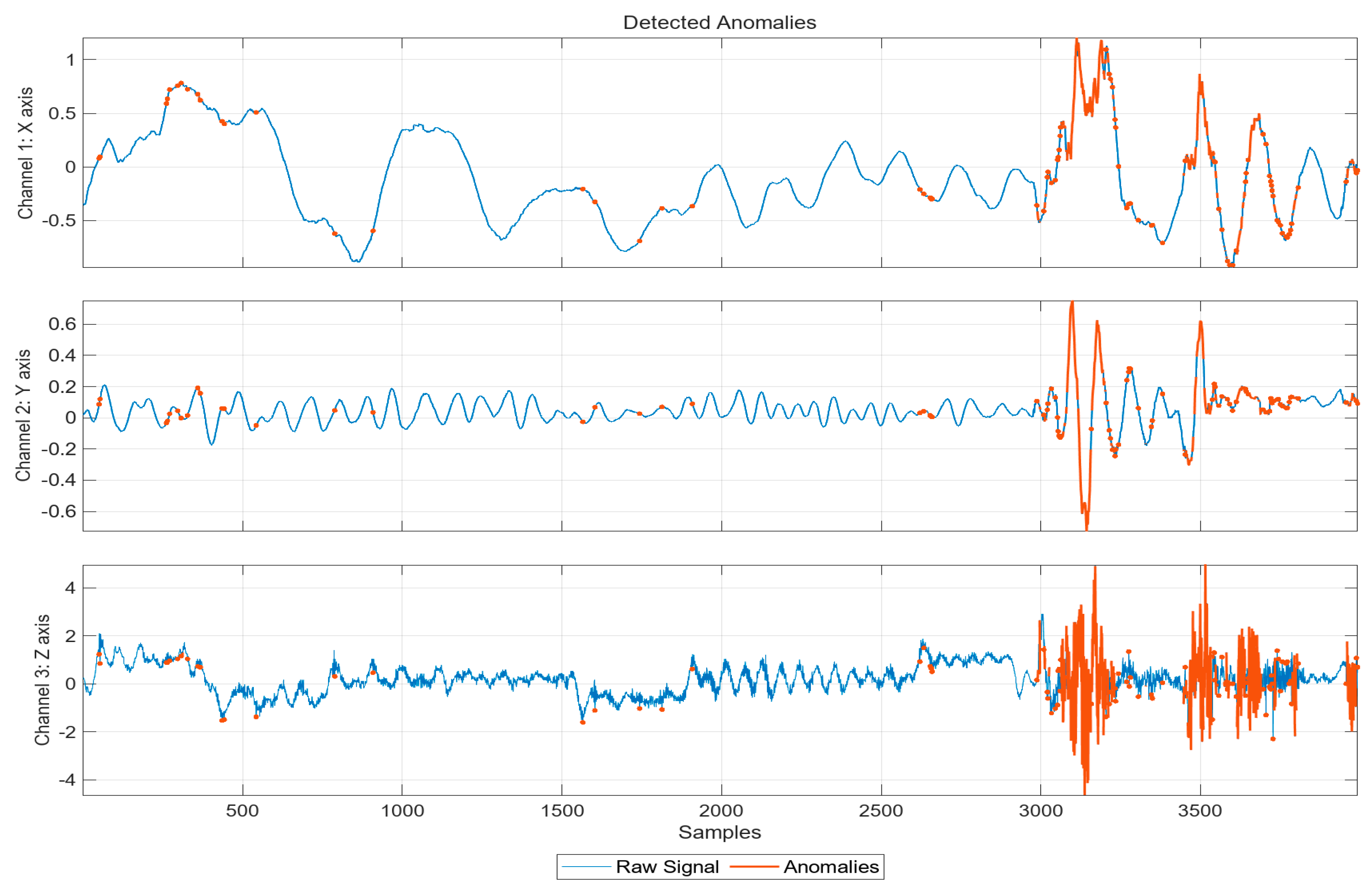

- Data Preparation. Container acceleration data is gathered using a single sensor. This data includes noise components, often seen as groups of anomalous outliers that compromise functional stability. To address this, the data is split into a training set and a validation set, both containing only extracted normal data, and a test set that includes both normal and anomalous data.

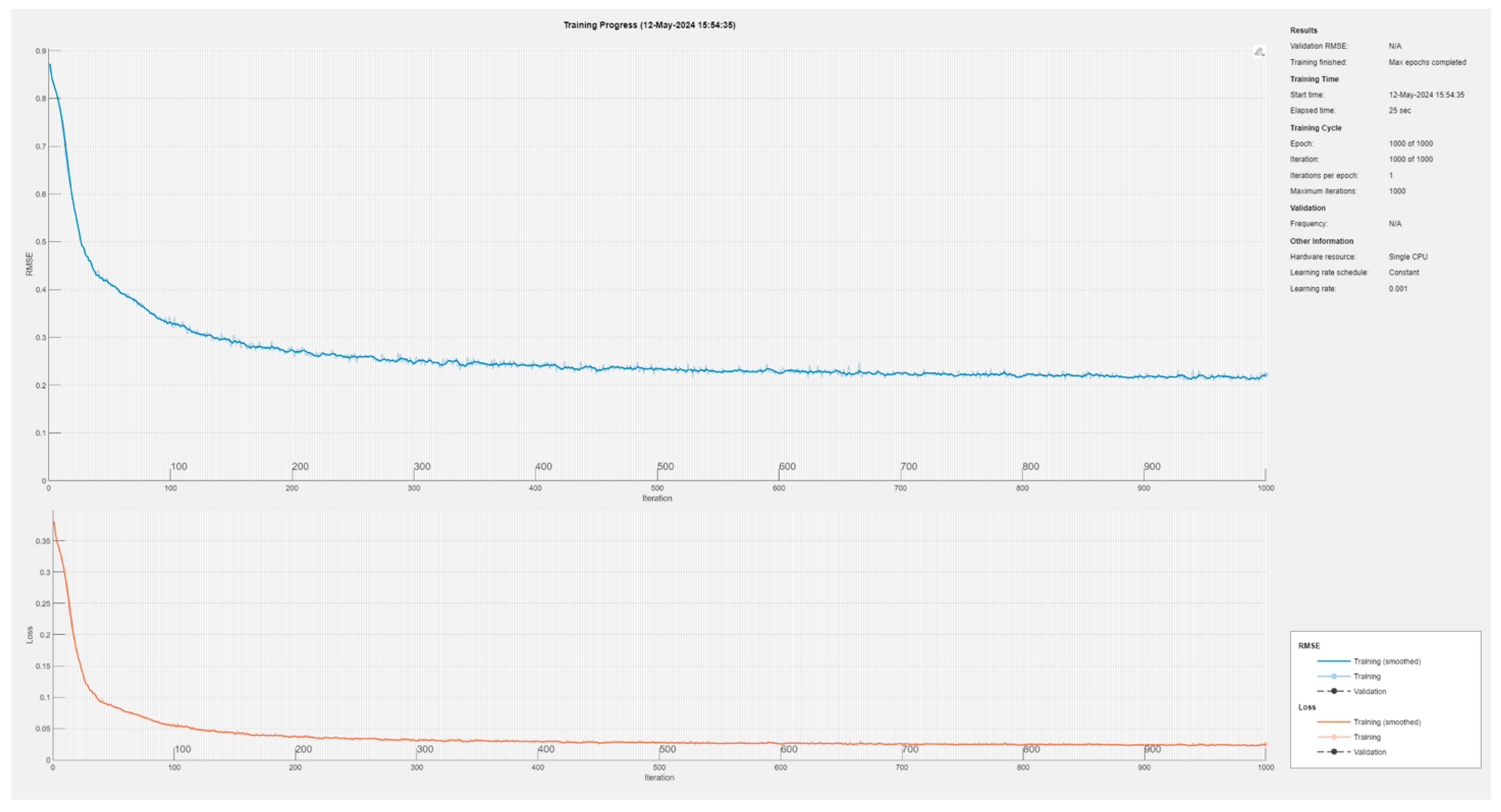

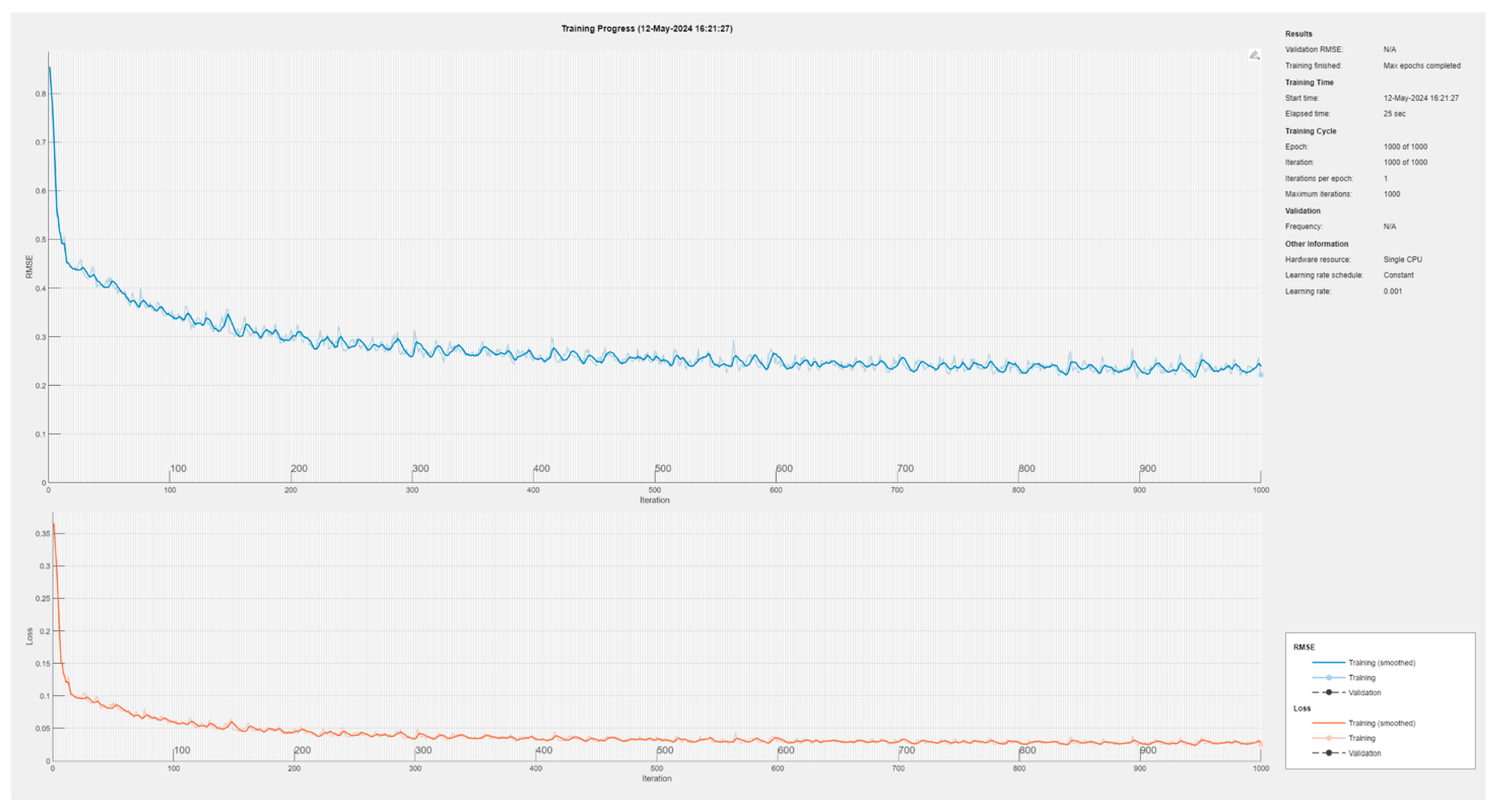

- Model Initialisation. The initial setup involves configuring the model’s hyperparameters, such as the weight coefficient, learning rate, number of iterations, and structure of the AE (autoencoder) module. At this stage of the experiment, the learning parameters were set to random values and optimised during the research process.

- Model Offline Training. The training process begins by feeding only the normal data into the AE. The encoder and decoder networks are then trained, generating reconstructed data according to the predefined equations. RMSE loss values are calculated during training, and the learning parameters of the network are updated iteratively, refining the model’s accuracy.

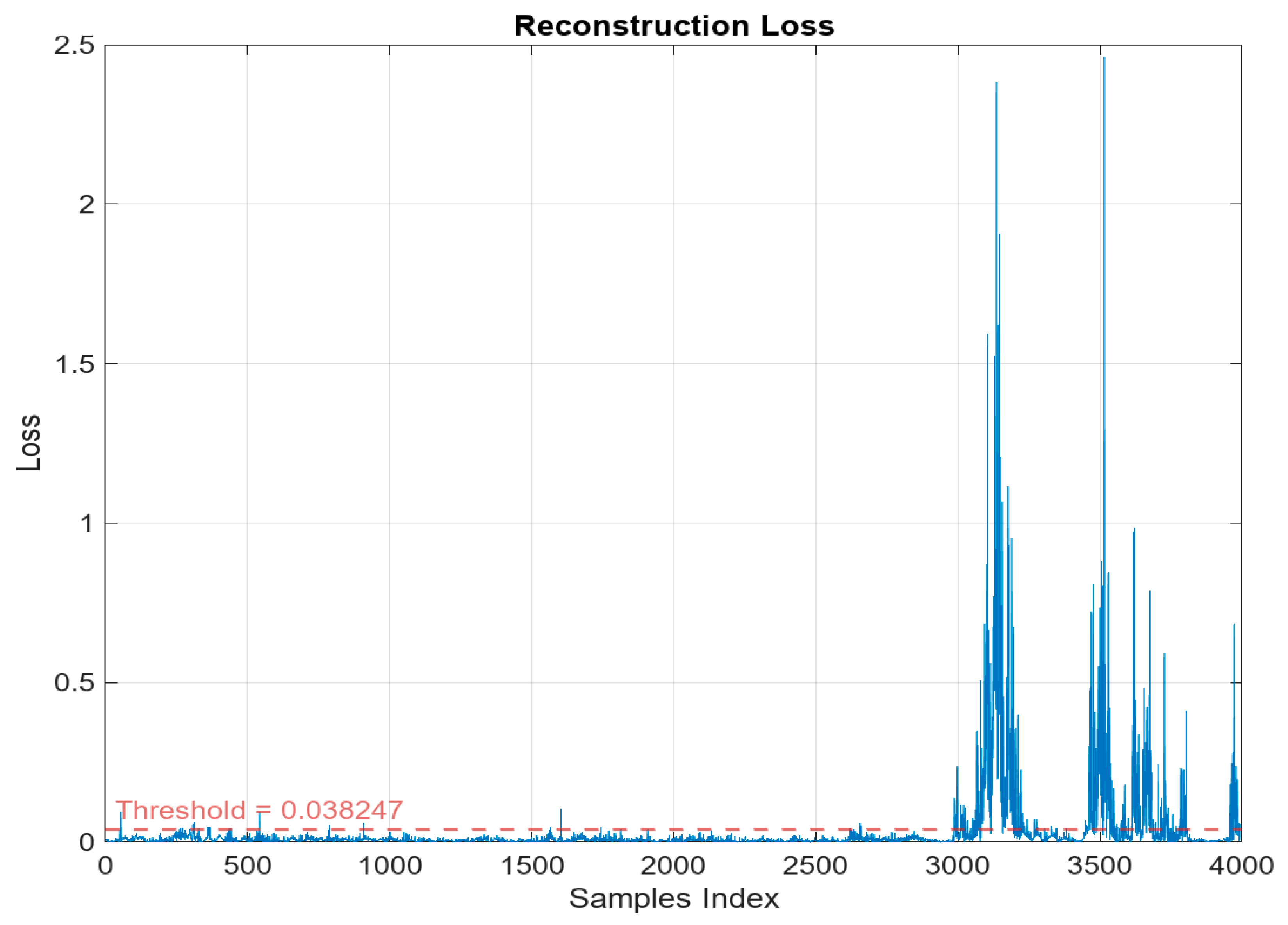

- Model Online Validation. In this phase, only the normal data from the validation set is processed through the trained network. After reconstructing this data, the RMSE loss value of each sample is computed. Given the potential presence of a few unmatched noise samples and to ensure the model’s robustness, the loss value below which 95% of the normal data falls (the 95th percentile) is selected as the threshold for detecting true anomalies (anomalous regions).

- Model Online Testing. The testing phase involves feeding both normal and anomalous data from the test set into the trained AE. The total RMSE loss values are then computed for each sample to categorise them as normal or anomalous based on the established threshold.

- Outlier detection and removal based on statistical thresholds.

- Linear interpolation to handle missing sensor data.

- Low-pass filtering to reduce high-frequency noise.
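
The validation threshold and two of the pre-processing steps listed above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the implementation used in this work: the moving average stands in for an unspecified low-pass filter, the percentile uses linear interpolation between order statistics, and all function names and error values are hypothetical.

```python
import math


def interpolate_missing(samples):
    """Fill None gaps by linear interpolation between the nearest known neighbours."""
    filled = list(samples)
    known = [i for i, v in enumerate(filled) if v is not None]
    if not known:
        raise ValueError("no valid samples to interpolate from")
    for i, v in enumerate(filled):
        if v is not None:
            continue
        left = max((k for k in known if k < i), default=None)
        right = min((k for k in known if k > i), default=None)
        if left is None:        # leading gap: hold the first known value
            filled[i] = samples[right]
        elif right is None:     # trailing gap: hold the last known value
            filled[i] = samples[left]
        else:
            frac = (i - left) / (right - left)
            filled[i] = samples[left] + frac * (samples[right] - samples[left])
    return filled


def moving_average(samples, width=5):
    """Assumed low-pass filter: centred moving average (window shrinks at the edges)."""
    half = width // 2
    out = []
    for i in range(len(samples)):
        window = samples[max(0, i - half): i + half + 1]
        out.append(sum(window) / len(window))
    return out


def rmse(original, reconstructed):
    """Root-mean-square reconstruction error for one window."""
    squared = sum((a - b) ** 2 for a, b in zip(original, reconstructed))
    return math.sqrt(squared / len(original))


def percentile(values, q):
    """q-th percentile (0-100), interpolating linearly between order statistics."""
    ordered = sorted(values)
    pos = (len(ordered) - 1) * q / 100.0
    lo, hi = math.floor(pos), math.ceil(pos)
    return ordered[lo] + (ordered[hi] - ordered[lo]) * (pos - lo)


def classify(errors, threshold):
    """Label each sample by comparing its reconstruction error with the threshold."""
    return ["anomalous" if e > threshold else "normal" for e in errors]


# Hypothetical per-sample RMSE values from normal validation data.
validation_errors = [0.10, 0.12, 0.11, 0.09, 0.13, 0.10, 0.11, 0.12, 0.10, 0.30]
threshold = percentile(validation_errors, 95)

# Hypothetical test-set errors: one typical sample and one impact-like sample.
labels = classify([0.11, 0.45], threshold)
```

Taking the 95th percentile rather than the maximum keeps a few noisy validation samples, such as the 0.30 value here, from inflating the threshold.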

3.2. Computational Results Assessment

4. Conclusions

- Local Minima and Non-Convexity. The loss function of deep autoencoders is typically non-convex because of the non-linear, layered structure of neural networks. As a result, the optimisation algorithm might converge to a local minimum rather than the global minimum, depending on the weight initialisation and the hyperparameter values.

- Overfitting. Autoencoders can overfit the training data, especially if:

- The network architecture is too complex relative to the amount of input data.

- The selected model’s capacity is too high, allowing the network to learn both pertinent features and noise in the training data.

- Sensitivity to Hyperparameters. The performance of the autoencoder is influenced by the selection of hyperparameters, including the number of layers, the number of neurons in each layer, the types of layers (e.g., convolutional, recurrent), the learning rate, and the optimisation algorithm employed.

- Balancing Encoder and Decoder Strength. A balance is needed between the encoder’s and decoder’s capacities. An overly powerful encoder or decoder can skew the learning process, leading to poor generalisation.

- The Dimensionality of Latent Space. The choice of the dimensionality of the latent space is crucial. Too large a latent space might lead the model to learn an identity function, where it can simply copy the input to the output without proper data compression. Conversely, a latent space that is too small may not capture all the necessary features of the data.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Rashid, N.; Hossain, M.A.F.; Ali, M.; Islam Sukanya, M.; Mahmud, T.; Fattah, S.A. AutoCovNet: Unsupervised Feature Learning Using Autoencoder and Feature Merging for Detection of COVID-19 from Chest X-Ray Images. Biocybern. Biomed. Eng. 2021, 41, 1685–1701.

2. Du, X.; Yu, J.; Chu, Z.; Jin, L.; Chen, J. Graph Autoencoder-Based Unsupervised Outlier Detection. Inf. Sci. 2022, 608, 532–550.

3. Ali, S.; Li, Y. Learning Multilevel Auto-Encoders for Ddos Attack Detection in Smart Grid Network. IEEE Access 2019, 7, 108647–108659.

4. Hong, S.; Kang, M.; Kim, J.; Baek, J. Investigation of Denoising Autoencoder-Based Deep Learning Model in Noise-Riding Experimental Data for Reliable State-of-Charge Estimation. J. Energy Storage 2023, 72, 108421.

5. Saetta, E.; Tognaccini, R.; Iaccarino, G. Uncertainty Quantification in Autoencoders Predictions: Applications in Aerodynamics. J. Comput. Phys. 2024, 506, 112951.

6. Jakovlev, S.; Voznak, M. Auto-Encoder-Enabled Anomaly Detection in Acceleration Data: Use Case Study in Container Handling Operations. Machines 2022, 10, 734.

7. Cha, S.-H.; Noh, C.-K. A Case Study of Automation Management System of Damaged Container in the Port Gate. J. Navig. Port. Res. 2017, 41, 119–126.

8. Wang, Z.; Gao, J.; Zeng, Q.; Sun, Y. Multitype Damage Detection of Container Using CNN Based on Transfer Learning. Math. Probl. Eng. 2021, 2021, 115022.

9. Park, H.; Lee, G.H.; Han, J.; Choi, J.K. Multiclass Autoencoder-Based Active Learning for Sensor-Based Human Activity Recognition. Future Gener. Comput. Syst. 2024, 151, 71–84.

10. Yang, Z.; Xu, B.; Luo, W.; Chen, F. Autoencoder-Based Representation Learning and Its Application in Intelligent Fault Diagnosis: A Review; Elsevier Ltd.: Amsterdam, The Netherlands, 2021; ISBN 8675523256054.

11. Santhi, T.M.; Srinivasan, K. A Duo Autoencoder-SVM Based Approach for Secure Performance Monitoring of Industrial Conveyor Belt System. Comput. Chem. Eng. 2023, 177, 108359.

12. Zheng, S.; Zhao, J. A New Unsupervised Data Mining Method Based on the Stacked Autoencoder for Chemical Process Fault Diagnosis. Comput. Chem. Eng. 2020, 135, 106755.

13. Lv, L.; Bardou, D.; Liu, Y.; Hu, P. Deep Autoencoder-like Non-Negative Matrix Factorization with Graph Regularized for Link Prediction in Dynamic Networks. Appl. Soft Comput. J. 2023, 148, 110832.

14. Sarıkaya, A.; Kılıç, B.G.; Demirci, M. RAIDS: Robust Autoencoder-Based Intrusion Detection System Model Against Adversarial Attacks. Comput. Secur. 2023, 135, 103483.

15. Wang, J.; Xie, X.; Shi, J.; He, W.; Chen, Q.; Chen, L.; Gu, W.; Zhou, T. Denoising Autoencoder, A Deep Learning Algorithm, Aids the Identification of A Novel Molecular Signature of Lung Adenocarcinoma. Genom. Proteom. Bioinform. 2020, 18, 468–480.

16. Dumais, F.; Legarreta, J.H.; Lemaire, C.; Poulin, P.; Rheault, F.; Petit, L.; Barakovic, M.; Magon, S.; Descoteaux, M.; Jodoin, P.-M. FIESTA: Autoencoders for Accurate Fiber Segmentation in Tractography. Neuroimage 2023, 279, 120288.

17. Takhanov, R.; Abylkairov, Y.S.; Tezekbayev, M. Autoencoders for a Manifold Learning Problem with a Jacobian Rank Constraint. Pattern Recognit. 2023, 143, 109777.

18. Tsakiridis, N.L.; Samarinas, N.; Kokkas, S.; Kalopesa, E.; Tziolas, N.V.; Zalidis, G.C. In Situ Grape Ripeness Estimation via Hyperspectral Imaging and Deep Autoencoders. Comput. Electron. Agric. 2023, 212, 108098.

19. Yang, H.; Ding, W.; Yin, C. AAE-Dpeak-SC: A Novel Unsupervised Clustering Method for Space Target ISAR Images Based on Adversarial Autoencoder and Density Peak-Spectral Clustering. Adv. Space Res. 2022, 70, 1472–1495.

20. Cai, L.; Li, J.; Xu, X.; Jin, H.; Meng, J.; Wang, B.; Wu, C.; Yang, S. Automatically Constructing a Health Indicator for Lithium-Ion Battery State-of-Health Estimation via Adversarial and Compound Staked Autoencoder. J. Energy Storage 2024, 84, 110711.

21. Liu, Z.; Teka, H.; You, R. Conditional Autoencoder Pricing Model for Energy Commodities. Resour. Policy 2023, 86, 104060.

22. Wang, W.; Wang, H.; Chen, L.; Hao, K. A Novel Soft Sensor Method Based on Stacked Fusion Autoencoder with Feature Enhancement for Industrial Application. Measurement 2023, 221, 113491.

23. Xiao, D.; Qin, C.; Yu, H.; Huang, Y.; Liu, C.; Zhang, J. Unsupervised Machine Fault Diagnosis for Noisy Domain Adaptation Using Marginal Denoising Autoencoder Based on Acoustic Signals. Measurement 2021, 176, 109186.

24. Yang, H.; Qiu, R.C.; Shi, X.; He, X. Unsupervised Feature Learning for Online Voltage Stability Evaluation and Monitoring Based on Variational Autoencoder. Electr. Power Syst. Res. 2020, 182, 106253.

25. Yan, S.; Shao, H.; Xiao, Y.; Liu, B.; Wan, J. Hybrid Robust Convolutional Autoencoder for Unsupervised Anomaly Detection of Machine Tools under Noises. Robot. Comput. Integr. Manuf. 2023, 79, 102441.

26. Buzuti, L.F.; Thomaz, C.E. Fréchet AutoEncoder Distance: A New Approach for Evaluation of Generative Adversarial Networks. Comput. Vision. Image Underst. 2023, 235, 103768.

27. Mulyanto, M.; Leu, J.S.; Faisal, M.; Yunanto, W. Weight Embedding Autoencoder as Feature Representation Learning in an Intrusion Detection Systems. Comput. Electr. Eng. 2023, 111, 108949.

28. Danti, P.; Innocenti, A. A Methodology to Determine the Optimal Train-Set Size for Autoencoders Applied to Energy Systems. Adv. Eng. Inform. 2023, 58, 102139.

29. Zhu, J.; Deng, F.; Zhao, J.; Chen, J. Adaptive Aggregation-Distillation Autoencoder for Unsupervised Anomaly Detection. Pattern Recognit. 2022, 131, 108897.

30. Yan, H.; Liu, Z.; Chen, J.; Feng, Y.; Wang, J. Memory-Augmented Skip-Connected Autoencoder for Unsupervised Anomaly Detection of Rocket Engines with Multi-Source Fusion. ISA Trans. 2022, 133, 53–65.

31. Zhang, K.; Han, S.; Wu, J.; Cheng, G.; Wang, Y.; Wu, S.; Liu, J. Early Lameness Detection in Dairy Cattle Based on Wearable Gait Analysis Using Semi-Supervised LSTM-Autoencoder. Comput. Electron. Agric. 2023, 213, 108252.

32. Fernández-Rodríguez, J.D.; Palomo, E.J.; Benito-Picazo, J.; Domínguez, E.; López-Rubio, E.; Ortega-Zamorano, F. A Convolutional Autoencoder and a Neural Gas Model Based on Bregman Divergences for Hierarchical Color Quantization. Neurocomputing 2023, 544, 126288.

33. Davila Delgado, J.M.; Oyedele, L. Deep Learning with Small Datasets: Using Autoencoders to Address Limited Datasets in Construction Management. Appl. Soft Comput. 2021, 112, 107836.

34. Yong, B.X.; Brintrup, A. Coalitional Bayesian Autoencoders: Towards Explainable Unsupervised Deep Learning with Applications to Condition Monitoring under Covariate Shift. Appl. Soft Comput. 2022, 123, 108912.

35. Novoa-Paradela, D.; Fontenla-Romero, O.; Guijarro-Berdiñas, B. Fast Deep Autoencoder for Federated Learning. Pattern Recognit. 2023, 143, 109805.

36. Niu, Y.; Su, Y.; Li, S.; Wan, S.; Cao, X. Deep Adversarial Autoencoder Recommendation Algorithm Based on Group Influence. Inf. Fusion. 2023, 100, 101903.

37. Thill, M.; Konen, W.; Wang, H.; Bäck, T. Temporal Convolutional Autoencoder for Unsupervised Anomaly Detection in Time Series. Appl. Soft Comput. 2021, 112, 107751.

38. Sun, D.; Li, D.; Ding, Z.; Zhang, X.; Tang, J. Dual-Decoder Graph Autoencoder for Unsupervised Graph Representation Learning. Knowl. Based Syst. 2021, 234, 107564.

39. Sun, H.; Zhang, L.; Wang, L.; Huang, H. Stochastic Gate-Based Autoencoder for Unsupervised Hyperspectral Band Selection. Pattern Recognit. 2022, 132, 108969.

40. Wang, X.; Peng, D.; Hu, P.; Sang, Y. Adversarial Correlated Autoencoder for Unsupervised Multi-View Representation Learning. Knowl. Based Syst. 2019, 168, 109–120.

41. Yang, K.; Kim, S.; Harley, J.B. Unsupervised Long-Term Damage Detection in an Uncontrolled Environment through Optimal Autoencoder. Mech. Syst. Signal Process 2023, 199, 110473.

42. Li, P.; Pei, Y.; Li, J. A Comprehensive Survey on Design and Application of Autoencoder in Deep Learning. Appl. Soft Comput. 2023, 138, 110176.

43. Daneshfar, F.; Soleymanbaigi, S.; Nafisi, A.; Yamini, P. Elastic Deep Autoencoder for Text Embedding Clustering by an Improved Graph Regularization. Expert Syst. Appl. 2024, 238, 121780.

44. Zhai, J.; Bi, J.; Yuan, H.; Wang, M.; Zhang, J.; Wang, Y.; Zhou, M. Cost-Minimized Microservice Migration With Autoencoder-Assisted Evolution in Hybrid Cloud and Edge Computing Systems. IEEE Internet Things J. 2024, 11, 40951–40967.

45. Yu, W.; Liu, Y.; Dillon, T.; Rahayu, W. Edge Computing-Assisted IoT Framework With an Autoencoder for Fault Detection in Manufacturing Predictive Maintenance. IEEE Trans. Ind. Inform. 2023, 19, 5701–5710.

46. Goyal, V.; Yadav, A.; Kumar, S.; Mukherjee, R. Lightweight LAE for Anomaly Detection with Sound-Based Architecture in Smart Poultry Farm. IEEE Internet Things J. 2024, 11, 8199–8209.

47. Somma, M.; Flatscher, A.; Stojanovic, B. Edge-Based Anomaly Detection: Enhancing Performance and Sustainability of Cyber-Attack Detection in Smart Water Distribution Systems. In Proceedings of the 2024 32nd Telecommunications Forum, Belgrade, Serbia, 26–27 November 2024; TELFOR 2024—Proceedings of Papers. Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA, 2024.

48. Arroyo, S.; Ho, S.-S. Poster: A Hybrid-Cloud Autoencoder Ensemble Method for BotNets Detection on Edge Devices. In Proceedings of the 2024 IEEE 8th International Conference on Fog and Edge Computing (ICFEC), Pennsylvania, PA, USA, 6–9 May 2024; IEEE: New York, NY, USA; pp. 104–105.

49. Malviya, V.; Mukherjee, I.; Tallur, S. Edge-Compatible Convolutional Autoencoder Implemented on FPGA for Anomaly Detection in Vibration Condition-Based Monitoring. IEEE Sens. Lett. 2022, 6, 7001104.

50. Yi, M.S.; Lee, B.K.; Park, J.S. Data-Driven Analysis of Causes and Risk Assessment of Marine Container Losses: Development of a Predictive Model Using Machine Learning and Statistical Approaches. J. Mar. Sci. Eng. 2025, 13, 420.

51. Jakovlev, S.; Eglynas, T.; Voznak, M.; Jusis, M.; Partila, P.; Tovarek, J.; Jankunas, V. Detecting Shipping Container Impacts with Vertical Cell Guides inside Container Ships during Handling Operations. Sensors 2022, 22, 2752.

52. Aloul, F.; Zualkernan, I.; Abdalgawad, N.; Hussain, L.; Sakhnini, D. Network Intrusion Detection on the IoT Edge Using Adversarial Autoencoders. In Proceedings of the 2021 International Conference on Information Technology, Amman, Jordan, 14–15 July 2021; ICIT 2021—Proceedings. Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA; pp. 120–125.

| ENCODER: | DECODER: |
|---|---|
| Input: $x_t$ (where $t$ indexes time steps in the input time-series). | Input: $h_t$ (where $t$ indexes time steps in the input time-series). |
| Encoding Function: $f_\theta$. | Decoding Function: $g_\phi$. |
| Output: Latent representation $h_t$. | Output: Reconstructed output $\hat{x}_t$. |
| We get the expression: $h_t = f_\theta(x_t, h_{t-1}) = \mathrm{LSTM}(x_t, h_{t-1})$. Here, $\theta$ includes the weights and biases of the LSTM layers in the encoder. | We get the expression: $\hat{x}_t = g_\phi(h_t, h'_{t-1}) = \mathrm{LSTM}(h_t, h'_{t-1})$. Here, $\phi$ includes the weights and biases of the LSTM layers in the decoder. |
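
The recurrent update in the encoder column, where each new state depends on the current input and the previous hidden state, can be illustrated with a much simpler cell. The sketch below uses a plain tanh recurrent cell rather than an LSTM (no gates or cell state), and all weights, sizes, and function names are hypothetical values chosen for illustration only.

```python
import math


def rnn_step(x_t, h_prev, w_x, w_h, b):
    """One recurrent update: new hidden state from the current input and previous state."""
    return [
        math.tanh(
            sum(wx * x for wx, x in zip(w_x[j], x_t))
            + sum(wh * h for wh, h in zip(w_h[j], h_prev))
            + b[j]
        )
        for j in range(len(b))
    ]


def encode(sequence, w_x, w_h, b):
    """Run the cell over the whole sequence and return the final hidden state."""
    h = [0.0] * len(b)
    for x_t in sequence:
        h = rnn_step(x_t, h, w_x, w_h, b)
    return h


# Hypothetical weights: 3 input axes (x, y, z acceleration), 2 hidden units.
W_X = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]]
W_H = [[0.1, 0.0], [0.0, 0.1]]
B = [0.0, 0.0]

# Three made-up accelerometer readings in g.
window = [[0.0, 0.0, 1.0], [0.2, -0.1, 0.9], [1.5, -0.7, 0.3]]
latent = encode(window, W_X, W_H, B)
```

Because the state is carried from step to step, feeding the same readings in a different order yields a different final state, which is what lets recurrent encoders capture temporal structure.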

| ENCODER: | DECODER: |
|---|---|
| Input: $X$ (the entire time-series input). | Input: $Z$. |
| Encoding Function: $f_\theta$. | Decoding Function: $g_\phi$. |
| Output: Latent representation $Z$. | Output: Reconstructed output $\hat{X}$. |
| We get the expression: $Z = \mathrm{ReLU}(W \ast X + b)$. $W$ and $b$ represent the weights and biases of the convolutional layers, respectively, and ($\ast$) denotes the convolution operation. | We get the expression: $\hat{X} = \mathrm{ConvTranspose}(Z; W', b')$. $W'$ and $b'$ represent the weights and biases of the transposed convolutional layers, respectively. |
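
The convolutional encoder step in the table above, a 1D convolution followed by ReLU, can be shown on a single-channel signal. This is a minimal sketch with a hypothetical two-tap kernel and made-up signal values. As in most deep learning libraries, the "convolution" is computed as a sliding dot product without flipping the kernel.

```python
def conv1d_relu(sequence, kernel, bias=0.0, stride=1):
    """Slide the kernel along the time axis, add the bias, and apply ReLU."""
    k = len(kernel)
    out = []
    for start in range(0, len(sequence) - k + 1, stride):
        acc = bias + sum(w * sequence[start + i] for i, w in enumerate(kernel))
        out.append(max(0.0, acc))  # ReLU keeps only positive responses
    return out


# Made-up acceleration trace with one sharp spike followed by a rebound.
signal = [0.0, 0.0, 0.0, 5.0, -4.0, 0.0, 0.0, 0.0]

# Hypothetical kernel that responds to a sudden drop between adjacent samples.
features = conv1d_relu(signal, [1.0, -1.0])

# A stride of 2 halves the temporal resolution, which is one way an encoder compresses.
compressed = conv1d_relu(signal, [1.0, -1.0], stride=2)
```

Each output depends only on a short local window, so all positions can be computed independently. This is the property that lets convolutional encoders process many time steps simultaneously.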

| Parameter | RAE | TCAE-CN |
|---|---|---|
| Input shape | Flattened multivariate time window | Multivariate time sequence |
| Encoder | 3 Dense layers (128 → 64 → 32) | 2 Conv1D layers (32, 16 filters) |
| Decoder | 3 Dense layers (64 → 128 → output) | Conv1D Transpose + Dense reconstruction |
| Latent dimension | 32 | |
| Activation function | ReLU (hidden), Linear (output) | ReLU (hidden), Linear (output) |
| Loss function | Mean Squared Error (MSE) | MSE |
| Optimiser | Adam | Adam |
| Epochs | 100 | 100 |
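
For an edge deployment, the dense stack in the RAE column translates directly into a parameter count and memory footprint. The sketch below assumes a hypothetical input window of 50 samples by 3 axes, since the window length is not stated in the table. Only the layer widths 128, 64, and 32 come from the table.

```python
def dense_params(sizes):
    """Trainable parameters of stacked fully connected layers: weights plus biases."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))


# Assumption: 50 time steps x 3 acceleration axes, flattened to one vector.
WINDOW_LEN, N_AXES = 50, 3
input_dim = WINDOW_LEN * N_AXES

# Encoder 128 -> 64 -> 32, decoder 64 -> 128 -> output (same size as the input).
layer_sizes = [input_dim, 128, 64, 32, 64, 128, input_dim]

total_params = dense_params(layer_sizes)
size_kib = total_params * 4 / 1024  # float32 weights, 4 bytes each
```

Under these assumptions the model has 59,446 parameters, about 232 KiB in float32, which is small relative to the 8 GB of RAM on the Raspberry Pi 4.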

| Paper | AE Type | F1-Score | Edge Device | Notes |
|---|---|---|---|---|
| This research | RAE-TCAE | RAE 0.894 | Raspberry Pi 4 | Low-cost, high recall |
| Adversarial AE for IoT Intrusion [52] | Adv-AE + KNN | 0.999 | Raspberry Pi 3B | High performance |
| Lightweight LAE in Poultry Farm [46] | LSTM-LAE | 0.963 | Raspberry Pi 4 | Low-cost, high recall |
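
The F1-scores compared above combine precision and recall into a single figure. As a reminder of how such a score is obtained from per-sample labels, here is a minimal sketch using made-up labels. It does not reproduce any result from the table.

```python
def precision_recall_f1(true_labels, predicted_labels, positive="anomalous"):
    """Precision, recall, and F1 for the positive (anomalous) class."""
    pairs = list(zip(true_labels, predicted_labels))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Made-up ground truth and detector output for eight windows.
truth = ["anomalous", "anomalous", "anomalous", "normal",
         "normal", "normal", "normal", "anomalous"]
predicted = ["anomalous", "anomalous", "normal", "normal",
             "anomalous", "normal", "normal", "anomalous"]

precision, recall, f1 = precision_recall_f1(truth, predicted)
```

A detector tuned for high recall accepts more false alarms (lower precision) in exchange for missing fewer impacts, and F1 penalises an imbalance in either direction.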

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jakovlev, S.; Eglynas, T.; Pocevicius, E.; Voznak, M.; Gricius, G.; Jankunas, V.; Jusis, M. Comparative Analysis of Recurrent vs. Temporal Convolutional Autoencoders for Detecting Container Impacts During Quay Crane Handling. J. Mar. Sci. Eng. 2025, 13, 1231. https://doi.org/10.3390/jmse13071231
