Abstract

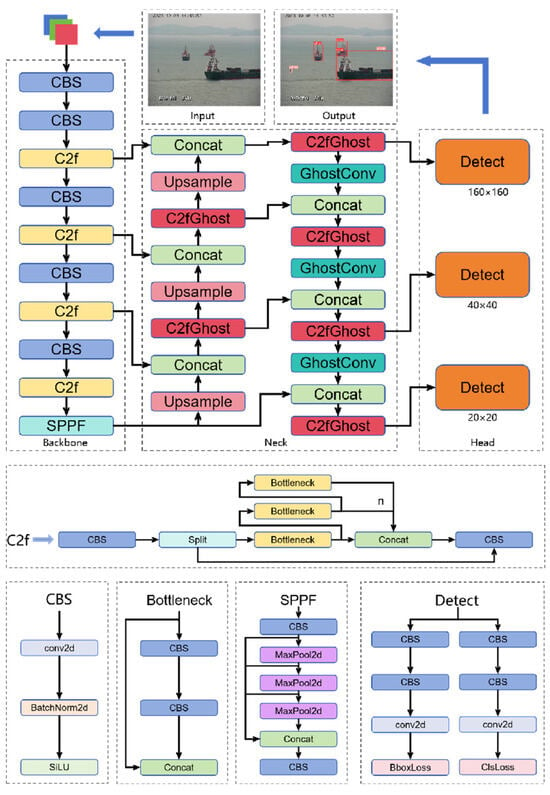

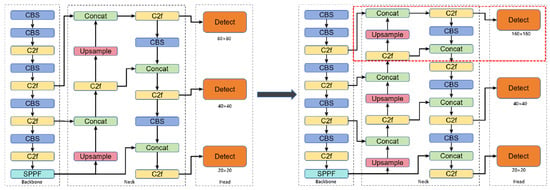

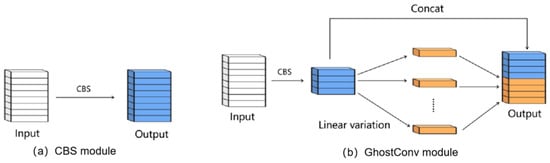

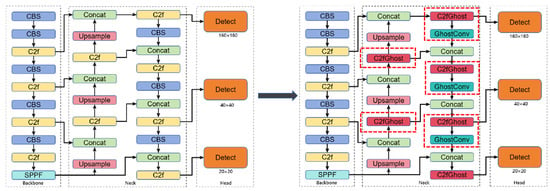

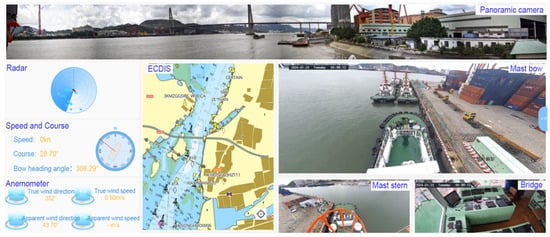

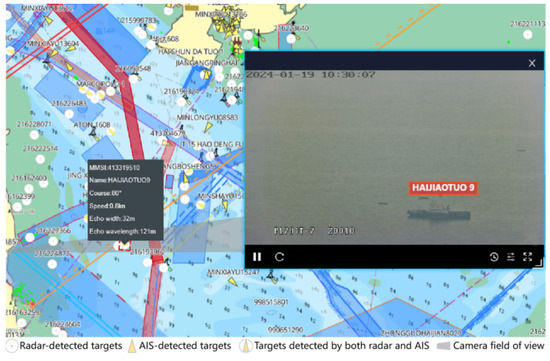

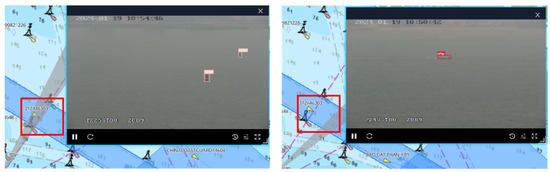

In response to challenges such as the narrow visibility of ship navigators, the limited field of view of a single camera, and complex maritime environments, this study proposes a panoramic visual perception-assisted navigation technology. A region-of-interest search method based on SSIM and an elliptical weighted fusion method are introduced, culminating in the ship panoramic visual stitching algorithm SSIM-EW. Additionally, the YOLOv8s model is improved by increasing the size of the detection head, introducing GhostNet, and replacing the regression loss function with the WIoU loss function, yielding YOLOv8-SGW, a perception model for sea target detection. The experimental results demonstrate that the SSIM-EW algorithm achieves the highest PSNR among the compared methods, 25.736 dB, effectively reducing stitching traces and significantly improving the quality of panoramic images. Compared to the baseline model, YOLOv8-SGW improves precision (P), recall (R), and mAP50 by 1.5%, 4.3%, and 2.3%, respectively; its mAP50 is significantly higher than that of other target detection models, and its ability to detect small targets at sea is significantly improved. Deploying these algorithms in port tugboat operations widens the navigators' field of view and allows the identification of targets missed by AIS and radar, thus ensuring operational safety and advancing vessel intelligence.

1. Introduction

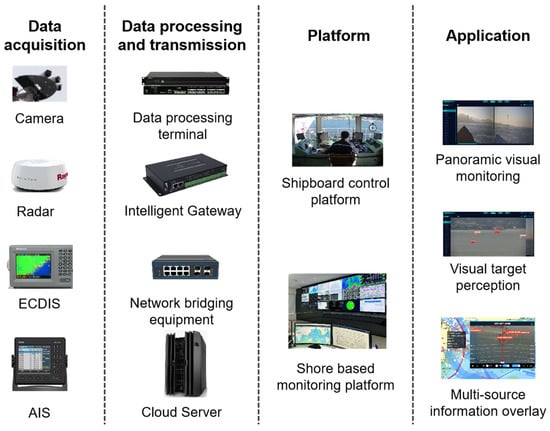

The development and demands of the marine transportation industry have driven innovation in ship intelligence technology, and the intelligentization of ships has become a new trend in the shipping industry [1,2]. Ship navigation environment perception refers to using radar, LiDAR, Automatic Identification Systems (AIS), depth sounders, cameras, and other sensors to collect data on the ship's navigation environment, and to building intelligent algorithms for perception enhancement, data fusion, target classification, and decision recommendations. These algorithms automatically process and analyze perception data, identify potential dangers and abnormal situations (such as channel obstacles and passing ships), and determine emergency response measures (such as adjusting route and speed) to improve navigation safety.

In the field of ship perception, many scholars have explored how computer vision and image processing can enhance maritime environment perception, and some have used target detection to perceive maritime targets visually and automatically [3]. Qu et al. [4] proposed an anti-occlusion vessel tracking method that predicts vessel positions to obtain synchronized AIS and visual data for fusion; it overcomes vessel occlusion and improves the safety and efficiency of ship traffic. Chen et al. [5] proposed a small-ship detection method using a Convolutional Neural Network (CNN) and an improved Generative Adversarial Network (GAN) to help autonomous ships navigate safely. Faggioni et al. [6] proposed a computationally light method for multi-object tracking based on LiDAR point clouds, which performed well on both virtual and real data. Zhu et al. [7] proposed YOLOv7-CSAW, a target detection algorithm for maritime scenes; compared with YOLOv7, it improves the accuracy and robustness of small-target detection in complex scenes and reduces missed detections. Maritime targets mainly include ships, buoys, and reefs. Owing to insufficient datasets, current research on the visual perception of maritime targets focuses mainly on ships [8], whereas small targets such as buoys are mainly perceived with LiDAR [9,10]. Few visual methods have been developed to perceive other targets, and missed detections still occur, so further research is needed before practical application.

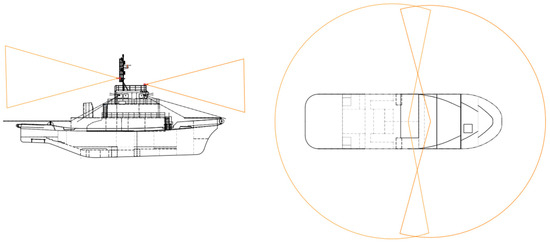

A single camera has a small field of view and captures limited information about the environment. Multiple cameras capture more information, but from separate perspectives, so the crew must switch frequently between views and cannot see the complete environment at once. Stitching images from different perspectives into a single panoramic image for display solves these problems. Many scholars have studied panoramic vision technology and have obtained useful results in land transportation [11]. Zhang et al. [12] proposed a distortion correction (DC) and perspective transformation (PT) method based on a LookUp Table (LUT) to speed up image stitching, generating panoramic images to assist parking. Kinzig et al. [13] introduced a real-time image stitching method that widens the horizontal field of view for object detection in autonomous driving. Whereas land transportation systems such as automotive intelligent driving perception can provide all key visual information about the driving environment [14] and assist with collision avoidance, pedestrian and vehicle detection, and parking [15], panoramic perception systems for ships are still in their infancy and are not widely applied in maritime settings [16].

To enhance the perception of ship navigation environments, this study builds on panoramic image stitching and object detection algorithms to propose a real-time environmental perception technology for assisting ship navigation, and constructs a visual assistance perception system for tugboats. The goal is to ensure navigation safety and promote the intelligent development of ships.

2. Construction of Ship Panoramic Vision Mosaic Algorithm

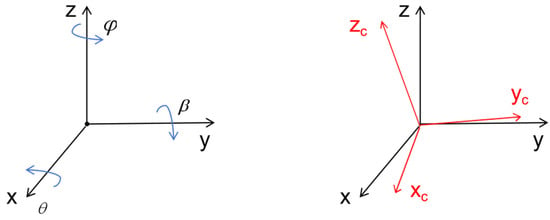

2.1. Panoramic Image Stitching Method

Panoramic image stitching aligns and fuses multiple images by extracting, matching, and registering image feature points [17]. Existing techniques typically extract features from the entire image, producing many invalid feature points that waste computing resources and degrade the accuracy of subsequent registration [18]. For image fusion, the main approaches are average weighting and fade-in/fade-out methods. Although these weaken the traces produced by stitching, their effect is limited and leaves room for improvement [19].

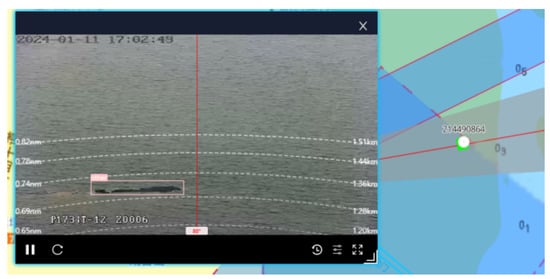

This paper presents an image stitching approach, the SSIM, SURF, and Elliptical Weighted Algorithm Stitcher (SSIM-EW). On top of image stitching based on Speeded-Up Robust Features (SURF) [20], it adds a region-of-interest search based on the Structural Similarity Index Measure (SSIM) [21] and an elliptical weighted fusion method to improve stitching performance and reduce gaps and artifacts that may arise during stitching. The specific steps are illustrated in Figure 1.

Figure 1.

Flowchart of panoramic image stitching.

2.1.1. The Region-of-Interest Search Method Based on SSIM

By comparing the SSIM values of different candidate image regions, the algorithm takes the region with the highest SSIM value as the region of interest for stitching. This reduces computational complexity, minimizes interference from invalid feature points, speeds up feature point extraction, and improves registration accuracy. The specific steps are as follows:

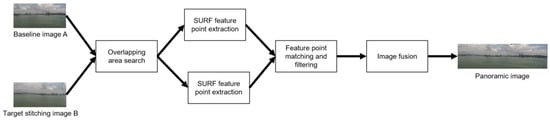

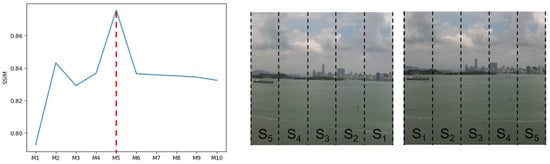

The image is divided into N equal areas along the stitching direction, as shown in Figure 2. N is a hyperparameter: a larger N slows the search but locates the overlapping area more precisely. In this research, N = 10.

Figure 2.

Schematic diagram of image area division.

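After division, the regions are cumulatively combined along the division direction, so that each image forms N sub-images M of increasing size:

$$M_i = S_1 \cup S_2 \cup \cdots \cup S_i, \quad i = 1, 2, \ldots, N$$

The SSIM values of the corresponding sub-images of the two images are compared, where SSIM is calculated as follows:

$$\mathrm{SSIM}(x, y) = \frac{(2\mu_x \mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)}$$

where $\mu_x$ and $\mu_y$ are the means of images x and y, $\sigma_x$ and $\sigma_y$ are their standard deviations, $\sigma_x^2$ and $\sigma_y^2$ are their variances, $\sigma_{xy}$ is the covariance between images x and y, and $C_1$ and $C_2$ are constants that stabilize the division.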

The result of the region-of-interest search is shown in Figure 3, where the sub-image M5 has the highest SSIM value. Therefore, the region of interest is the combination of regions S1–S5.

Figure 3.

Results of overlapping area search.
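The search can be sketched in Python with NumPy. This is a minimal sketch under stated assumptions, not the authors' code: grayscale images of equal size, one global SSIM window over each sub-image (rather than a sliding-window SSIM), the conventional constants $C_1 = (0.01L)^2$ and $C_2 = (0.03L)^2$ for dynamic range $L$, and sub-images $M_i$ built from the $i$ strips nearest the seam (the right edge of the reference image and the left edge of the image to be stitched). The function names are hypothetical.

```python
import numpy as np


def global_ssim(x, y, data_range=255.0):
    """SSIM computed over a single window covering the whole sub-image."""
    x = x.astype(np.float64)
    y = y.astype(np.float64)
    c1 = (0.01 * data_range) ** 2  # conventional stabilizing constants (assumption)
    c2 = (0.03 * data_range) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    return ((2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    )


def search_overlap(left, right, n=10):
    """Return the index i (1..n) of the sub-image M_i with the highest SSIM, plus all scores."""
    if left.shape != right.shape:
        raise ValueError("this sketch assumes equally sized images")
    strip = left.shape[1] // n  # width of one region S_k
    scores = []
    for i in range(1, n + 1):
        width = i * strip
        m_left = left[:, left.shape[1] - width:]  # i strips at the right edge of the reference image
        m_right = right[:, :width]                # i strips at the left edge of the image to be stitched
        scores.append(global_ssim(m_left, m_right))
    return int(np.argmax(scores)) + 1, scores


# Synthetic check: two 50-px-wide crops of one random image that overlap by 20 px.
rng = np.random.default_rng(0)
base = rng.integers(0, 256, size=(30, 80))
left, right = base[:, :50], base[:, 30:80]
best_i, scores = search_overlap(left, right, n=10)
print(best_i)  # 4, i.e. four 5-px strips = the 20-px overlap
```

Feature extraction would then run only inside the selected $M_i$, which is where the savings in computation and invalid feature points come from.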

2.1.2. Feature Point Extraction and Matching

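The SURF algorithm processes images with box filters to construct the scale space and detects candidate key points as local extrema of the determinant of the Hessian matrix. A 64-dimensional descriptor is then generated for each key point from Haar wavelet responses computed in a 4 × 4 grid of sub-windows around it. The Hessian matrix at pixel point $\mathbf{x} = (x, y)$ and scale $\sigma$ is

$$H(\mathbf{x}, \sigma) = \begin{bmatrix} L_{xx}(\mathbf{x}, \sigma) & L_{xy}(\mathbf{x}, \sigma) \\ L_{xy}(\mathbf{x}, \sigma) & L_{yy}(\mathbf{x}, \sigma) \end{bmatrix}$$

where $\mathbf{x}$ represents the pixel point and $L_{xx}$, $L_{xy}$, and $L_{yy}$ are the second-order derivatives of the Gaussian-smoothed image (approximated by box filters in SURF).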
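The Hessian-determinant response behind SURF key point detection can be illustrated with a simplified sketch. Assumptions: instead of SURF's box-filter approximation on an integral image (which evaluates $D_{xx}D_{yy} - (0.9D_{xy})^2$), it smooths with a true Gaussian and takes second derivatives by finite differences with `np.gradient`; there is no scale space and no non-maximum suppression. A blob-like structure should produce its strongest positive response at its center.

```python
import numpy as np


def gaussian_blur(img, sigma):
    """Separable Gaussian smoothing with edge padding; output keeps the input's shape."""
    radius = int(3 * sigma)
    taps = np.arange(-radius, radius + 1)
    kernel = np.exp(-taps ** 2 / (2 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")


def hessian_determinant(img, sigma=2.0):
    """det(H) = Lxx * Lyy - Lxy**2 on the Gaussian-smoothed image."""
    smooth = gaussian_blur(img.astype(np.float64), sigma)
    l_y, l_x = np.gradient(smooth)  # axis 0 is y (rows), axis 1 is x (columns)
    l_xy, l_xx = np.gradient(l_x)
    l_yy, _ = np.gradient(l_y)
    return l_xx * l_yy - l_xy ** 2


# A bright Gaussian blob centred at (row 20, column 20).
yy, xx = np.mgrid[0:41, 0:41]
blob = 255.0 * np.exp(-((xx - 20) ** 2 + (yy - 20) ** 2) / (2 * 3.0 ** 2))
response = hessian_determinant(blob, sigma=2.0)
peak = tuple(int(v) for v in np.unravel_index(np.argmax(response), response.shape))
print(peak)
```

Box filters make each second derivative cost a constant number of integral-image lookups regardless of scale, which is the main reason SURF is faster than Gaussian-based detectors such as SIFT.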

Feature point matching is divided into coarse and fine matching. In coarse matching, the Euclidean distances between the two groups of feature points are calculated, and candidate matches are filtered by a ratio test: a feature point pair is retained only if

$$d_1 < \text{Ratio} \times d_2$$

where $d_1$ and $d_2$ are the Euclidean distances from a feature point to its nearest and second-nearest neighbors in the other image. Ratio (0 < Ratio < 1) thus measures how distinct the nearest match is from the second-nearest one; a smaller Ratio retains fewer matching pairs but with higher accuracy. In this research, Ratio = 0.35.
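A minimal NumPy sketch of the coarse-matching ratio test, assuming descriptors are stored as rows of two arrays (64 columns for SURF) and brute-force distances are affordable; the function name is hypothetical.

```python
import numpy as np


def ratio_test(desc_a, desc_b, ratio=0.35):
    """Coarse matching: keep (i, j) if d1 < ratio * d2 for descriptor i of image A."""
    # Pairwise Euclidean distances, shape (len(desc_a), len(desc_b)).
    dist = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=2)
    order = np.argsort(dist, axis=1)
    rows = np.arange(len(desc_a))
    d1 = dist[rows, order[:, 0]]  # nearest-neighbour distance
    d2 = dist[rows, order[:, 1]]  # second-nearest-neighbour distance
    keep = d1 < ratio * d2
    return [(int(i), int(order[i, 0])) for i in rows[keep]]


rng = np.random.default_rng(1)
desc_b = rng.random((5, 64))  # 64-D descriptors, as produced by SURF
desc_a = np.vstack([
    desc_b[2] + 1e-3 * rng.standard_normal(64),  # distinctive match to b[2]
    desc_b[4] + 1e-3 * rng.standard_normal(64),  # distinctive match to b[4]
    (desc_b[0] + desc_b[1]) / 2,                 # ambiguous: equidistant from b[0] and b[1]
])
matches = ratio_test(desc_a, desc_b)
print(matches)  # [(0, 2), (1, 4)]
```

The ambiguous descriptor is rejected because its two nearest distances are equal (ratio 1), which illustrates why a small Ratio such as 0.35 trades match count for reliability.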

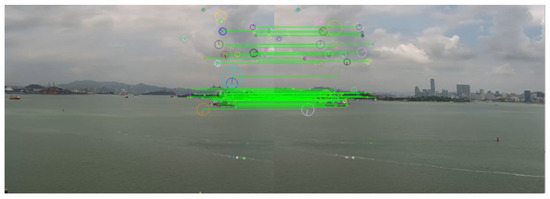

In fine matching, the RANSAC algorithm [22] repeatedly samples random subsets of the pairs retained by coarse matching, iteratively estimates the best homography matrix, and uses it to eliminate outlier matches. The final matching result is shown in Figure 4.

Figure 4.

Result of feature point matching.
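The fine-matching step can be sketched as RANSAC around a normalized direct linear transform (DLT). This is a self-contained illustration under assumptions (4-point minimal samples, a 3-pixel reprojection threshold, a fixed 500 iterations, Hartley normalization), not the authors' implementation; in practice OpenCV's `cv2.findHomography(src, dst, cv2.RANSAC, ransacReprojThreshold)` performs the same job. All function names below are hypothetical.

```python
import numpy as np


def _normalize(pts):
    """Hartley normalization: zero mean, average distance sqrt(2) from the origin."""
    mean = pts.mean(axis=0)
    scale = np.sqrt(2) / np.mean(np.linalg.norm(pts - mean, axis=1))
    t = np.array([[scale, 0.0, -scale * mean[0]],
                  [0.0, scale, -scale * mean[1]],
                  [0.0, 0.0, 1.0]])
    return (pts - mean) * scale, t


def dlt_homography(src, dst):
    """Estimate H with dst ~ H @ src from >= 4 point pairs (N x 2 arrays)."""
    src_n, t_src = _normalize(src)
    dst_n, t_dst = _normalize(dst)
    rows = []
    for (x, y), (u, v) in zip(src_n, dst_n):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.asarray(rows))
    h = np.linalg.inv(t_dst) @ vt[-1].reshape(3, 3) @ t_src  # undo normalization
    return h / h[2, 2]


def project(h, pts):
    """Apply a homography to N x 2 pixel coordinates."""
    p = np.hstack([pts, np.ones((len(pts), 1))]) @ h.T
    return p[:, :2] / p[:, 2:3]


def ransac_homography(src, dst, iters=500, thresh=3.0, seed=0):
    """Fit H to random 4-pair samples, keep the largest inlier set, then refit on it."""
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    for _ in range(iters):
        idx = rng.choice(len(src), size=4, replace=False)
        h = dlt_homography(src[idx], dst[idx])
        inliers = np.linalg.norm(project(h, src) - dst, axis=1) < thresh
        if inliers.sum() > best.sum():
            best = inliers
    return dlt_homography(src[best], dst[best]), best


rng = np.random.default_rng(42)
h_true = np.array([[1.02, 0.01, 35.0], [-0.01, 0.99, 4.0], [2e-5, 1e-5, 1.0]])
src = rng.uniform([0, 0], [640, 480], size=(40, 2))
dst = project(h_true, src)
dst[:10] += rng.uniform(40, 80, size=(10, 2))  # corrupt 10 matches to act as outliers
h_est, mask = ransac_homography(src, dst)
print(mask.sum())  # 30 inliers: the 10 corrupted pairs are rejected
```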

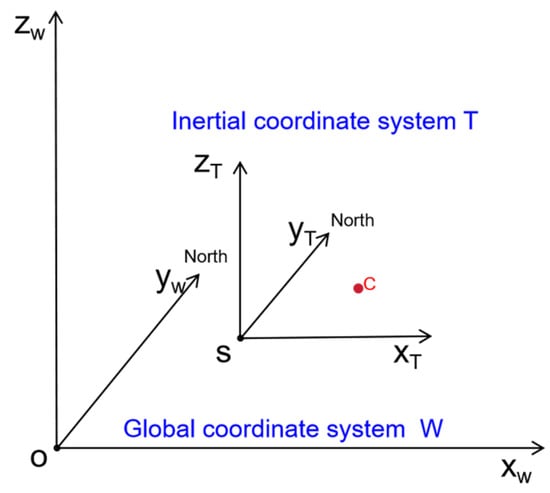

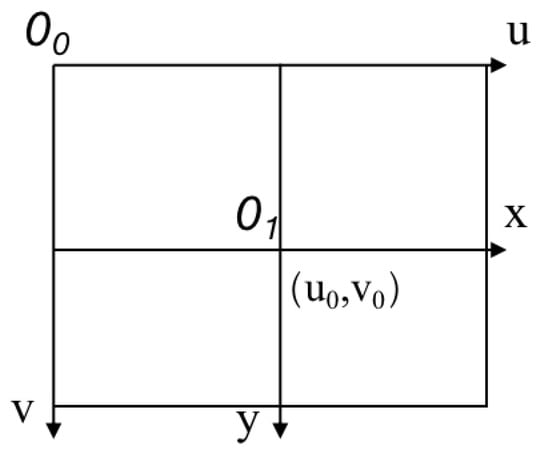

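The homography transformation [23] is expressed as follows:

$$\begin{bmatrix} x' \\ y' \\ 1 \end{bmatrix} \sim H \begin{bmatrix} x \\ y \\ 1 \end{bmatrix}, \quad H = \begin{bmatrix} h_{11} & h_{12} & h_{13} \\ h_{21} & h_{22} & h_{23} \\ h_{31} & h_{32} & h_{33} \end{bmatrix}$$

where $H$ is the homography transformation matrix, $(x, y)$ and $(x', y')$ are the pixel coordinates before and after the transformation, respectively, and $\sim$ denotes equality up to a scale factor. Using the best homography matrix, every pixel of the image to be registered can be mapped into the pixel coordinate system of the reference image.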
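To make the mapping concrete, here is a minimal backward-warping sketch, assuming a grayscale image, nearest-neighbour sampling, and a homography `h` that maps the image to be registered into the reference canvas. Each canvas pixel is sent through $H^{-1}$ and sampled from the source image, which avoids the holes that forward mapping would leave. The function name is hypothetical.

```python
import numpy as np


def warp_nearest(img, h, out_shape):
    """Backward-warp a grayscale image into a canvas of out_shape (rows, cols)."""
    out_h, out_w = out_shape
    v, u = np.mgrid[0:out_h, 0:out_w]                            # canvas rows (y) and columns (x)
    canvas = np.stack([u.ravel(), v.ravel(), np.ones(u.size)])  # 3 x P homogeneous points
    src = np.linalg.inv(h) @ canvas                              # source location of each canvas pixel
    xs = np.rint(src[0] / src[2]).astype(int)
    ys = np.rint(src[1] / src[2]).astype(int)
    valid = (xs >= 0) & (xs < img.shape[1]) & (ys >= 0) & (ys < img.shape[0])
    out = np.zeros(out_h * out_w, dtype=img.dtype)
    out[valid] = img[ys[valid], xs[valid]]
    return out.reshape(out_shape), valid.reshape(out_shape)


# Shift a 3 x 4 image two columns to the right on a 3 x 6 canvas.
img = np.arange(1, 13).reshape(3, 4)
h_shift = np.array([[1.0, 0.0, 2.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
warped, covered = warp_nearest(img, h_shift, (3, 6))
print(warped)  # columns 0-1 stay 0 (not covered); columns 2-5 hold img
```

The returned `covered` mask marks where the warped image contributes, which is what determines the overlapping region used in fusion.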

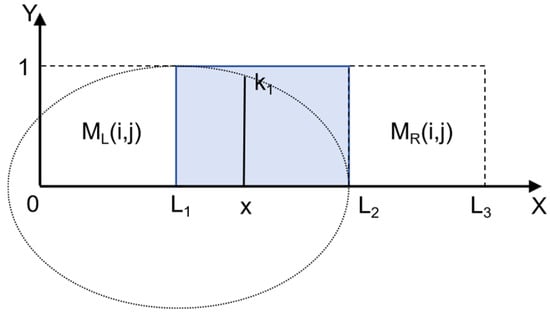

2.1.3. Image Fusion Based on Elliptic Function Weighting

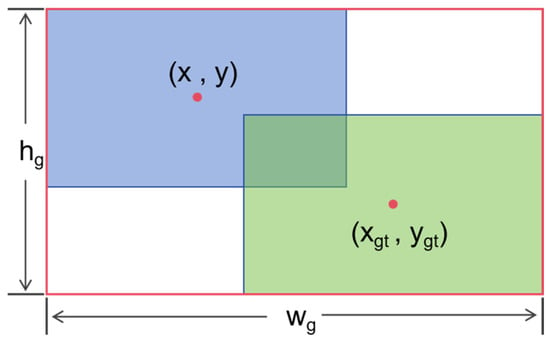

The elliptical weighted fusion method addresses noticeable stitching artifacts and unnatural transitions. As shown in Figure 5, $I_1$ is the reference image, whose abscissa ranges from 0 to $x_r$; $I_2$ is the image to be stitched, whose abscissa ranges from $x_l$ to $W$; $x$ is the abscissa of any pixel of the panoramic image; and $x_l$ and $x_r$ are the left and right boundaries of the overlapping region, respectively. An ellipse is constructed with center $(x_l, 0)$, semi-major axis $x_r - x_l$, and semi-minor axis 1. The part of the ellipse curve above the overlapping area is taken as the weight curve of the reference image pixels, so that the reference weight falls from 1 at $x_l$ to 0 at $x_r$.

Figure 5.

Weighted fusion based on elliptic function.

Let $w_1$ and $w_2$ denote the weights of the left and right images, respectively; they are calculated as follows:

$$w_1(x) = \sqrt{1 - \left(\frac{x - x_l}{x_r - x_l}\right)^2}, \quad x_l \le x \le x_r$$

and

$$w_2(x) = 1 - w_1(x)$$

Let $I(x, y)$ represent a pixel of the fused panoramic image; then

$$I(x, y) = \begin{cases} I_1(x, y), & x < x_l \\ w_1(x)\, I_1(x, y) + w_2(x)\, I_2(x, y), & x_l \le x \le x_r \\ I_2(x, y), & x > x_r \end{cases}$$
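A minimal NumPy sketch of the elliptical weighting, assuming both images have already been warped onto a common panorama canvas and that $w_2 = 1 - w_1$; the function names are hypothetical.

```python
import numpy as np


def elliptical_weights(width, x_l, x_r):
    """Per-column weights: w1 for the reference image, w2 = 1 - w1 for the other image."""
    x = np.arange(width, dtype=np.float64)
    w1 = np.where(x < x_l, 1.0, 0.0)  # reference image only, left of the overlap
    overlap = (x >= x_l) & (x <= x_r)
    # Upper half of the ellipse centred at (x_l, 0), semi-major axis x_r - x_l, semi-minor axis 1.
    w1[overlap] = np.sqrt(1.0 - ((x[overlap] - x_l) / (x_r - x_l)) ** 2)
    return w1, 1.0 - w1


def elliptical_fuse(ref_canvas, other_canvas, x_l, x_r):
    """Blend two images already warped onto the same panorama canvas."""
    w1, w2 = elliptical_weights(ref_canvas.shape[1], x_l, x_r)
    shape = (1, -1) + (1,) * (ref_canvas.ndim - 2)  # broadcast over rows (and channels)
    return ref_canvas * w1.reshape(shape) + other_canvas * w2.reshape(shape)


w1, w2 = elliptical_weights(10, x_l=3, x_r=7)
print(np.round(w1, 3))
fused = elliptical_fuse(np.full((2, 10, 3), 100.0), np.full((2, 10, 3), 200.0), 3, 7)
```

Here `w1` stays at 1 for columns 0 to 3, falls to about 0.968, 0.866, and 0.661, and reaches 0 at $x_r = 7$. Compared with the linear ramp of fade-in/fade-out fusion, which gives each image weight 0.5 at the overlap midpoint, the elliptical curve keeps the reference image dominant longer (0.866 at the midpoint here) and hands over steeply near $x_r$.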

2.2. Validation of Ship Panoramic Image Stitching Algorithm

2.2.1. Test Dataset

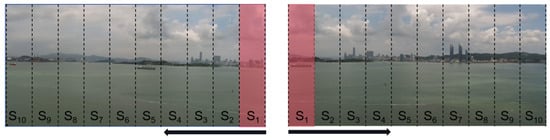

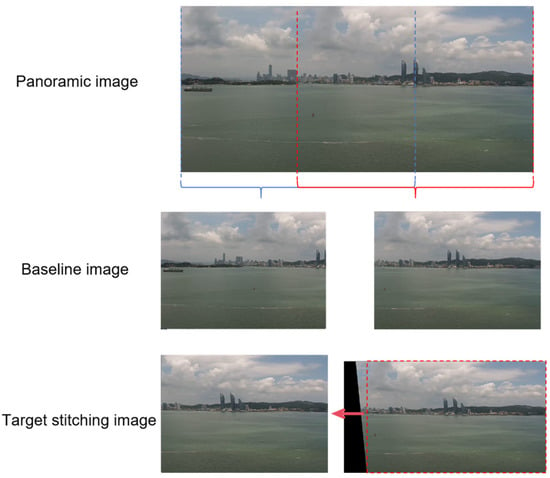

The test data are derived from a real image of a Xiamen sea scene taken by a shore-based camera. Because panoramic ground truth for real scenes is lacking, two images with partially overlapping areas are cropped from one complete image, and the stitched and fused result is compared with the original image for verification. The test dataset is built as follows. Left and right images are cropped from one image. The left image serves as the reference image, while the right image undergoes a slight stretching transformation to simulate differences between cameras, and part of the largest rectangular sub-image inside the stretched result is selected as the image to be stitched, as shown in Figure 6. The experimental images range in size from 640 × 470 to 680 × 540 pixels.

Figure 6.

Making test datasets.
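The dataset construction can be sketched as follows. This is a simplified, hypothetical version: it assumes a purely horizontal nearest-neighbour stretch (so the stretched image stays rectangular and the "largest rectangular sub-image" step reduces to a crop back to the original width), and the stretch factor 1.05 is an illustrative assumption, not a value from the paper.

```python
import numpy as np


def make_test_pair(image, left_width, right_start, stretch=1.05):
    """Crop overlapping left/right views from one image and stretch the right view."""
    left = image[:, :left_width]    # reference image
    right = image[:, right_start:]  # overlaps left in columns right_start..left_width-1
    w = right.shape[1]
    new_w = int(round(w * stretch))
    # Nearest-neighbour horizontal stretch to mimic a second camera (simplified assumption).
    cols = np.minimum((np.arange(new_w) / stretch).astype(int), w - 1)
    stretched = right[:, cols]
    # Keep a rectangle of the original width from the stretched result.
    return left, stretched[:, :w]


scene = np.random.default_rng(3).integers(0, 256, size=(480, 1000, 3), dtype=np.uint8)
ref, to_stitch = make_test_pair(scene, left_width=660, right_start=340)
print(ref.shape, to_stitch.shape)  # (480, 660, 3) (480, 660, 3)
```

Because both crops come from one scene, the uncropped `scene` serves as the ground truth against which the stitched panorama can be scored.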

2.2.2. Verification Results

Comparison of Theoretical Data

To verify the effectiveness of the proposed SSIM-EW algorithm, it is compared with cross-combinations of two registration methods (SIFT-based and SURF-based) and two fusion methods (average weighted fusion, AWF, and fade-in/fade-out fusion, DFF). Stitching quality is evaluated with the Peak Signal-to-Noise Ratio (PSNR) [24]; a higher PSNR indicates higher stitching quality. The results are shown in Table 1.
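PSNR, the metric used in this comparison, can be computed with a few lines of NumPy. This sketch assumes 8-bit images (peak value 255) and that the stitched panorama and the original image have been brought to the same size; the function name is hypothetical. Casting to float before subtracting avoids the wrap-around of unsigned 8-bit arithmetic.

```python
import numpy as np


def psnr(reference, test, max_value=255.0):
    """Peak Signal-to-Noise Ratio in dB; higher means the test image is closer to the reference."""
    mse = np.mean((reference.astype(np.float64) - test.astype(np.float64)) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)


original = np.full((4, 4), 120, dtype=np.uint8)
stitched = original.copy()
stitched[0, 0] = 136  # one pixel off by 16 grey levels
print(round(psnr(original, stitched), 2))  # 36.09
```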

Table 1.

Validation results of stitching algorithm comparison.

In the table, “Time” is the complete runtime of the stitching algorithm, and “AP_time” is the stitching time in actual application. In practice, low-visibility weather such as rain and fog seriously degrades the accuracy of feature-based registration. Once the camera parameters are fixed, the precomputed homography matrix H and fusion weight matrix can be used directly for panoramic stitching without rerunning the entire algorithm, which reduces the running time and the adverse effect of bad weather on stitching.

Table 1 shows that, compared with the other algorithms, SSIM-EW achieves the best PSNR and the shortest Time. Although it is not the fastest in AP_time, it still meets the real-time requirements of practical applications. Therefore, SSIM-EW offers the best overall performance.

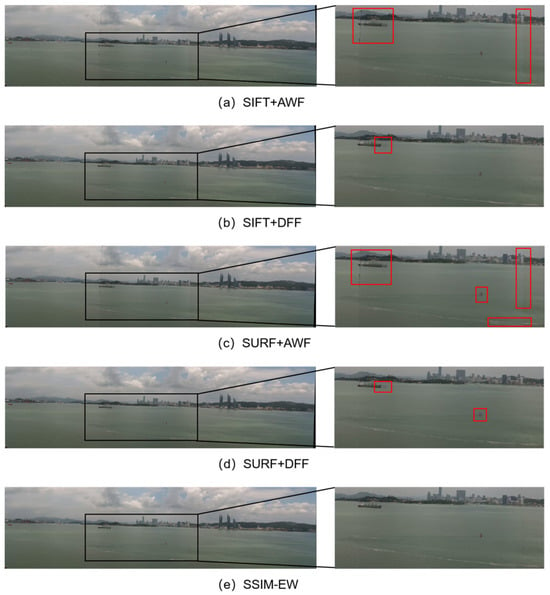

Comparison of Fused Images

The stitching results of the different algorithms are shown in Figure 7. The locally enlarged images show that SIFT + AWF and SURF + AWF still produce noticeable seams and severe ghosting. SIFT + DFF and SURF + DFF produce no obvious seams, but slight ghosting remains. The proposed SSIM-EW produces neither apparent seams nor ghosting, giving a significantly better stitching result than the other algorithms.

Figure 7.

Comparison of the results of different stitching algorithms. Red frames mark obvious stitching flaws.

5. Conclusions

This paper proposes a ship environment auxiliary perception technology based on panoramic vision that overcomes limitations of traditional ship perception technology and promotes the development of ship intelligence. The main contributions are as follows:

(1) To address unstable ship panoramic image registration and unnatural stitching, the traditional image stitching method is improved with an SSIM-based region-of-interest search and elliptical weighted fusion. Panoramic images stitched by the improved SSIM-EW algorithm score significantly higher on the quality evaluation index, with natural transitions and no stitching traces such as ghosting or misalignment. The experimental results show that SSIM-EW stitches significantly better than the other algorithms compared.

(2) To address the poor perception accuracy of small targets at sea, this study builds on the YOLOv8 model by increasing the size of the detection head, introducing GhostNet, and replacing the original regression loss with WIoU, yielding the YOLOv8-SGW model. The mAP50 of YOLOv8-SGW increases by 2.3%, its detection accuracy is significantly higher than that of YOLOv5s, YOLOv7-tiny, and YOLOv8, and its ability to detect small targets is greatly improved.

(3) The above technology is applied to a tugboat, successfully realizing panoramic vision-assisted tugboat operation and expanding the perceptual field of view. Moreover, the visual perception model can detect targets that AIS and radar miss, which enhances ship perception and has practical value for safe navigation and the development of ship intelligence.

Author Contributions

Methodology, C.W., X.C. and S.Z. (Shunzhi Zhu); Software, Z.L.; Validation, X.C., Y.L., R.Z. and R.W.; Data curation, R.Z. and S.Z. (Shunzhi Zhu); Writing—original draft, X.C.; Writing—review & editing, C.W., Y.L. and S.Z. (Shunzhi Zhu); Visualization, R.W.; Supervision, L.G.; Project administration, Z.L., S.Z. (Shengchao Zhang) and J.Z.; Funding acquisition, L.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research was financially supported by the Green and Intelligent Ship in the Fujian region (No. CBG4N21-4-4), the Xiamen Ocean and Fishery Development Special Fund Project (No. 21CZBO14HJ08), the Next-Generation Integrated Intelligent Terminal for Fishing Boats project (No. FJHYF-ZH-2023-10), and Research on Key Technologies for Topological Reconstruction and Graphical Expression of Next-Generation Electronic Nautical Charts (No. 2021H0026).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The code and datasets are available free of charge from the corresponding author upon request.

Conflicts of Interest

Liangqing Guan and Zhiqiang Luo were employed by the Fujian Fuchuan Marine Engineering Technology Research Institute Co., Ltd. Shengchao Zhang and Jianfeng Zhang were employed by the Xiamen Port Shipping Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Sèbe, M.; Scemama, P.; Choquet, A.; Jung, J.L.; Chircop, A.; Razouk, P.M.A.; Michel, S.; Stiger-Pouvreau, V.; Recuero-Virto, L. Maritime transportation: Let’s slow down a bit. Sci. Total Environ. 2022, 811, 152262.

- Li, H. Research on Digital, Networked and Intelligent Manufacturing of Modern Ship. J. Phys. Conf. Ser. 2020, 1634, 012052.

- Yang, D.; Solihin, M.I.; Zhao, Y.; Yao, B.; Chen, C.; Cai, B.; Machmudah, A. A review of intelligent ship marine object detection based on RGB camera. IET Image Proc. 2024, 18, 281–297.

- Qu, J.; Liu, R.W.; Guo, Y.; Lu, Y.; Su, J.; Li, P. Improving maritime traffic surveillance in inland waterways using the robust fusion of AIS and visual data. Ocean Eng. 2023, 275, 114198.

- Chen, Z.; Chen, D.; Zhang, Y.; Cheng, X.; Zhang, M.; Wu, C. Deep learning for autonomous ship-oriented small ship detection. Saf. Sci. 2020, 130, 104812.

- Faggioni, N.; Ponzini, F.; Martelli, M. Multi-obstacle detection and tracking algorithms for the marine environment based on unsupervised learning. Ocean Eng. 2022, 266, 113034.

- Zhu, Q.; Ma, K.; Wang, Z.; Shi, P.B. YOLOv7-CSAW for maritime target detection. Front. Neurorob. 2023, 17, 1210470.

- Cheng, S.; Zhu, Y.; Wu, S. Deep learning based efficient ship detection from drone-captured images for maritime surveillance. Ocean Eng. 2023, 285, 115440.

- Adolphi, C.; Parry, D.D.; Li, Y.; Sosonkina, M.; Saglam, A.; Papelis, Y.E. LiDAR Buoy Detection for Autonomous Marine Vessel Using Pointnet Classification. In Proceedings of the Modeling, Simulation and Visualization Student Capstone Conference, Suffolk, VA, USA, 20 April 2023.

- Hagen, I.B.; Brekke, E. In Kayak Tracking using a Direct Lidar Model. In Proceedings of the Global Oceans 2020: Singapore-US Gulf Coast, Biloxi, MS, USA, 5–30 October 2020; pp. 1–7.

- Abbadi, N.K.E.L.; Al Hassani, S.A.; Abdulkhaleq, A.H. A Review Over Panoramic Image Stitching Techniques. J. Phys. Conf. Ser. 2021, 1999, 012115.

- Zhang, J.; Liu, T.; Yin, X.; Wang, X.; Zhang, K.; Xu, J.; Wang, D. An improved parking space recognition algorithm based on panoramic vision. Multimed. Tools Appl. 2021, 80, 18181–18209.

- Kinzig, C.; Cortés, I.; Fernández, C.; Lauer, M. Real-time seamless image stitching in autonomous driving. In Proceedings of the 2022 25th International Conference on Information Fusion (FUSION), Linköping, Sweden, 4–7 July 2022; pp. 1–8.

- Zhu, H.; Yuen, K.V.; Mihaylova, L.; Leung, H. Overview of Environment Perception for Intelligent Vehicles. IEEE Trans. Intell. Transp. Syst. 2017, 18, 2584–2601.

- Taha, A.E.; AbuAli, N. Route Planning Considerations for Autonomous Vehicles. IEEE Commun. Mag. 2018, 56, 78–84.

- Martelli, M.; Virdis, A.; Gotta, A.; Cassarà, P.; Summa, M.D. An Outlook on the Future Marine Traffic Management System for Autonomous Ships. IEEE Access 2021, 9, 157316–157328.

- Wang, Z.; Yang, Z. Review on image-stitching techniques. Multimed. Syst. 2020, 26, 413–430.

- Wei, X.; Yan, W.; Zheng, Q.; Gu, M.; Su, K.; Yue, G.; Liu, Y. Image Redundancy Filtering for Panorama Stitching. IEEE Access 2020, 8, 209113–209126.

- Chang, C.-H.; Sato, Y.; Chuang, Y.-Y. Shape-preserving half-projective warps for image stitching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 3254–3261.

- Wang, G.; Liu, L.; Zhang, Y. Research on Scalable Real-Time Image Mosaic Technology Based on Improved SURF. J. Phys. Conf. Ser. 2018, 1069, 012162.

- Bakurov, I.; Buzzelli, M.; Schettini, R.; Castelli, M.; Vanneschi, L. Structural similarity index (SSIM) revisited: A data-driven approach. Expert Syst. Appl. 2022, 189, 116087.

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

- Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Hore, A.; Ziou, D. Image quality metrics: PSNR vs. SSIM. In Proceedings of the 2010 20th International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010; pp. 2366–2369.

- Terven, J.; Córdova-Esparza, D.-M.; Romero-González, J.-A. A Comprehensive Review of YOLO Architectures in Computer Vision: From YOLOv1 to YOLOv8 and YOLO-NAS. Mach. Learn. Knowl. Extr. 2023, 5, 1680–1716.

- Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. Ghostnet: More features from cheap operations. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1580–1589.

- Zheng, Z.; Wang, P.; Ren, D.; Liu, W.; Ye, R.; Hu, Q.; Zuo, W. Enhancing Geometric Factors in Model Learning and Inference for Object Detection and Instance Segmentation. IEEE Trans. Cybern. 2022, 52, 8574–8586.

- Tong, Z.; Chen, Y.; Xu, Z.; Yu, R. Wise-IoU: Bounding Box Regression Loss with Dynamic Focusing Mechanism. arXiv 2023, arXiv:2301.10051.

- Bucak, S.S.; Jin, R.; Jain, A.K. Multiple Kernel Learning for Visual Object Recognition: A Review. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1354–1369.

- Amir, S.; Siddiqui, A.A.; Ahmed, N.; Chowdhry, B.S. Implementation of line tracking algorithm using Raspberry pi in marine environment. In Proceedings of the 2014 IEEE International Conference on Industrial Engineering and Engineering Management, Selangor, Malaysia, 9–12 December 2014; pp. 1337–1341.

- Jaszewski, M.; Parameswaran, S.; Hallenborg, E.; Bagnall, B. Evaluation of maritime object detection methods for full motion video applications using the pascal voc challenge framework. In Proceedings of the Video Surveillance and Transportation Imaging Applications, San Francisco, CA, USA, 8–12 February 2015; pp. 298–304.

- Bäumker, M.; Heimes, F. New calibration and computing method for direct georeferencing of image and scanner data using the position and angular data of an hybrid inertial navigation system. In Proceedings of the OEEPE Workshop, Integrated Sensor Orientation, Hanover, Germany, 17–18 September 2001; pp. 1–17.

- Goudossis, A.; Katsikas, S.K. Towards a secure automatic identification system (AIS). J. Mar. Sci. Technol. 2019, 24, 410–423.

- Karataş, G.B.; Karagoz, P.; Ayran, O. Trajectory pattern extraction and anomaly detection for maritime vessels. Internet Things 2021, 16, 100436.

- Lazarowska, A. Review of Collision Avoidance and Path Planning Methods for Ships Utilizing Radar Remote Sensing. Remote Sens. 2021, 13, 3265.

- Cheng, Y.; Xu, H.; Liu, Y. Robust small object detection on the water surface through fusion of camera and millimeter wave radar. In Proceedings of the IEEE International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 15263–15272.

- Clunie, T.; DeFilippo, M.; Sacarny, M.; Robinette, P. Development of a perception system for an autonomous surface vehicle using monocular camera, lidar, and marine radar. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 14112–14119.

- Paoletti, S.; Rumes, B.; Pierantonio, N.; Panigada, S.; Jan, R.; Folegot, T.; Schilling, A.; Riviere, N.; Carrier, V.; Dumoulin, A.J.R.I. SEADETECT: Developing an automated detection system to reduce whale-vessel collision risk. Res. Ideas Outcomes 2023, 9, e113968.

- Wu, Y.; Chu, X.; Deng, L.; Lei, J.; He, W.; Królczyk, G.; Li, Z. A new multi-sensor fusion approach for integrated ship motion perception in inland waterways. Measurement 2022, 200, 111630.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).