YOLO-RSA: A Multiscale Ship Detection Algorithm Based on Optical Remote Sensing Image

Abstract

1. Introduction

- To address the large scale variation among ships in remote sensing images, we introduce a 4-layer multi-scale feature pyramid. The pyramid fuses multi-scale ship features through multi-level connections, with the goal of making features at each scale more salient and improving detection accuracy.

- To extract features of small ships effectively against complex backgrounds, we introduce an attention mechanism that focuses on small ships and improves the extraction success rate for small and medium-sized ships.

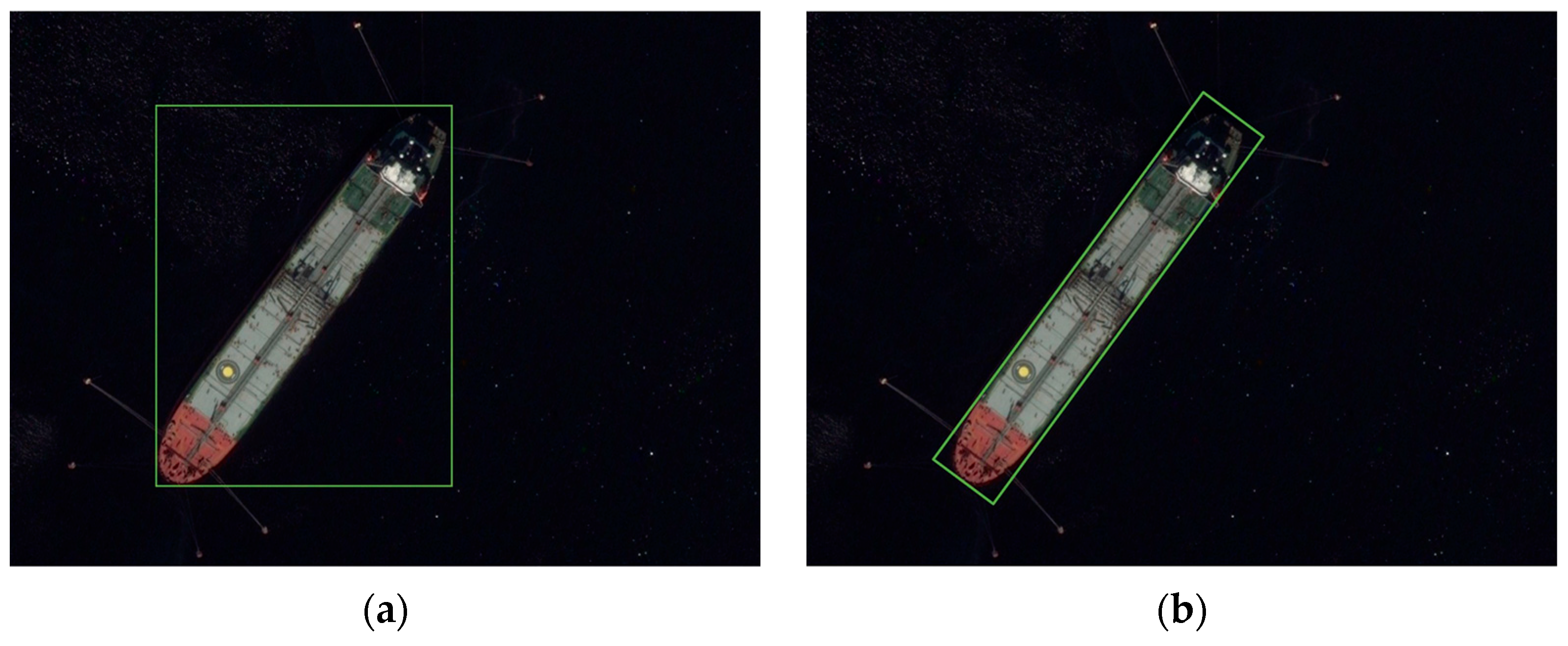

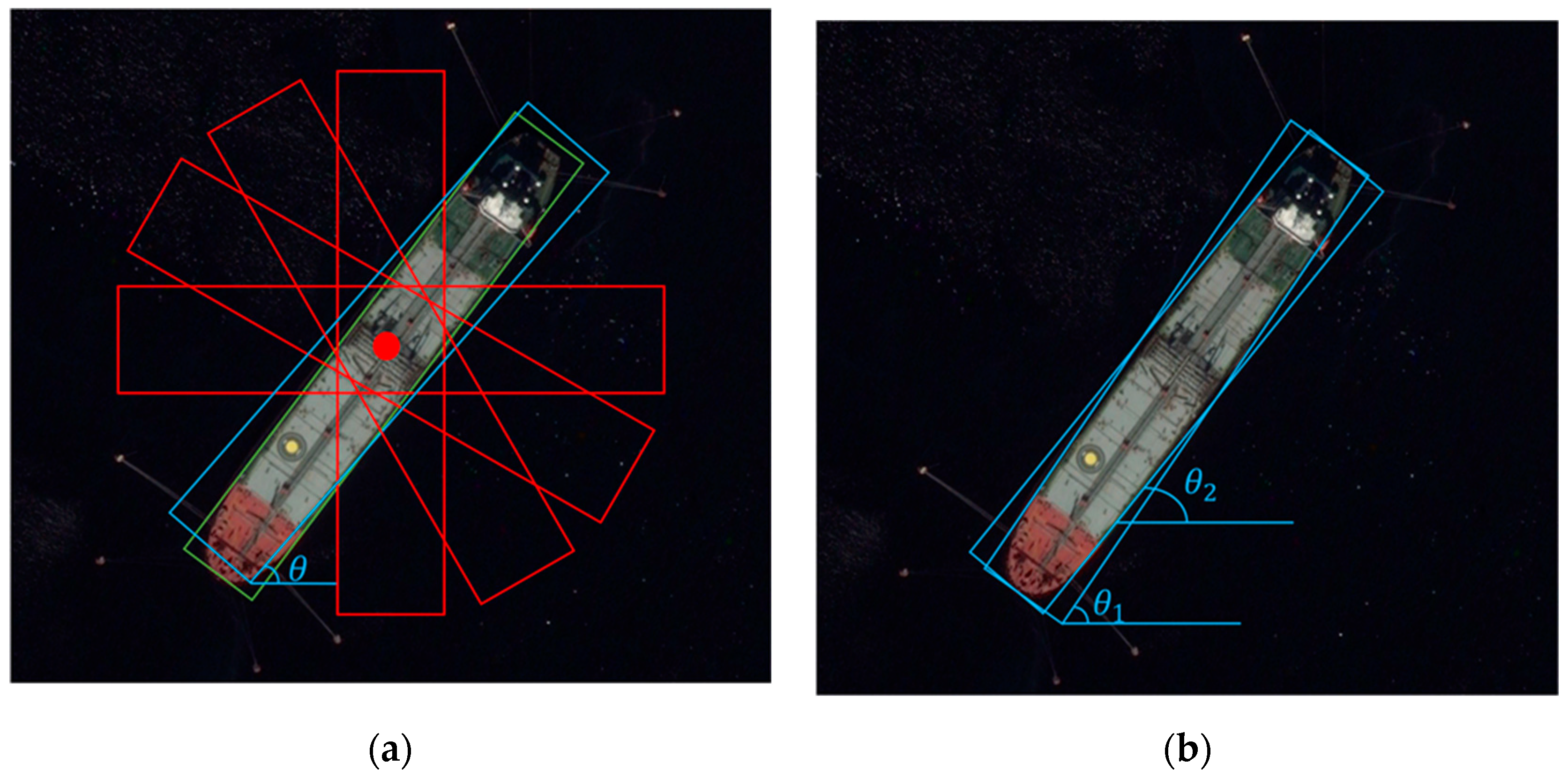

- To improve detection performance in crowded scenes, we introduce a rotated decoupled detection head in the detection stage, which extracts ships with rotated bounding boxes instead of horizontal ones. We also change the angle prediction from regressing the angle directly to predicting its sine and cosine values.

- On the HRSC2016 and DOTA datasets, we compare YOLO-RSA with three existing detection models (R2CNN, RRPN, and YOLOv7) and conduct ablation and generalizability experiments to evaluate its performance.
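
The change from direct angle regression to sine and cosine prediction can be illustrated with a small sketch. It is not the paper's implementation: the function names `encode_angle`, `decode_angle`, and `angular_error` are hypothetical, and the angle range of (-π, π] is an assumption, since the convention used by YOLO-RSA is not stated in this list. One commonly cited motivation for this encoding is that two nearly identical orientations on either side of the range boundary have very different raw angle values but nearly identical (sin, cos) pairs.

```python
import math


def encode_angle(theta):
    """Encode an angle in radians as a (sin, cos) regression target."""
    return math.sin(theta), math.cos(theta)


def decode_angle(sin_pred, cos_pred):
    """Recover an angle in (-pi, pi] from predicted sine and cosine values.

    Assumption: the two predictions come from separate regression outputs
    and may not lie exactly on the unit circle; atan2 only uses their ratio
    and signs.
    """
    return math.atan2(sin_pred, cos_pred)


def angular_error(a, b):
    """Smallest absolute difference between two angles, in radians."""
    diff = (a - b + math.pi) % (2 * math.pi) - math.pi
    return abs(diff)


# Two orientations that are almost the same but sit on opposite sides of
# the range boundary.
theta_a = math.pi - 0.01
theta_b = -math.pi + 0.01

raw_gap = abs(theta_a - theta_b)            # large: close to 2*pi
true_gap = angular_error(theta_a, theta_b)  # small: about 0.02 rad

sin_a, cos_a = encode_angle(theta_a)
sin_b, cos_b = encode_angle(theta_b)
encoded_gap = math.hypot(sin_a - sin_b, cos_a - cos_b)  # also small
```

Because `math.atan2` depends only on the ratio and signs of its arguments, a pair of unnormalised outputs still decodes to a valid angle.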

2. Proposed Methods

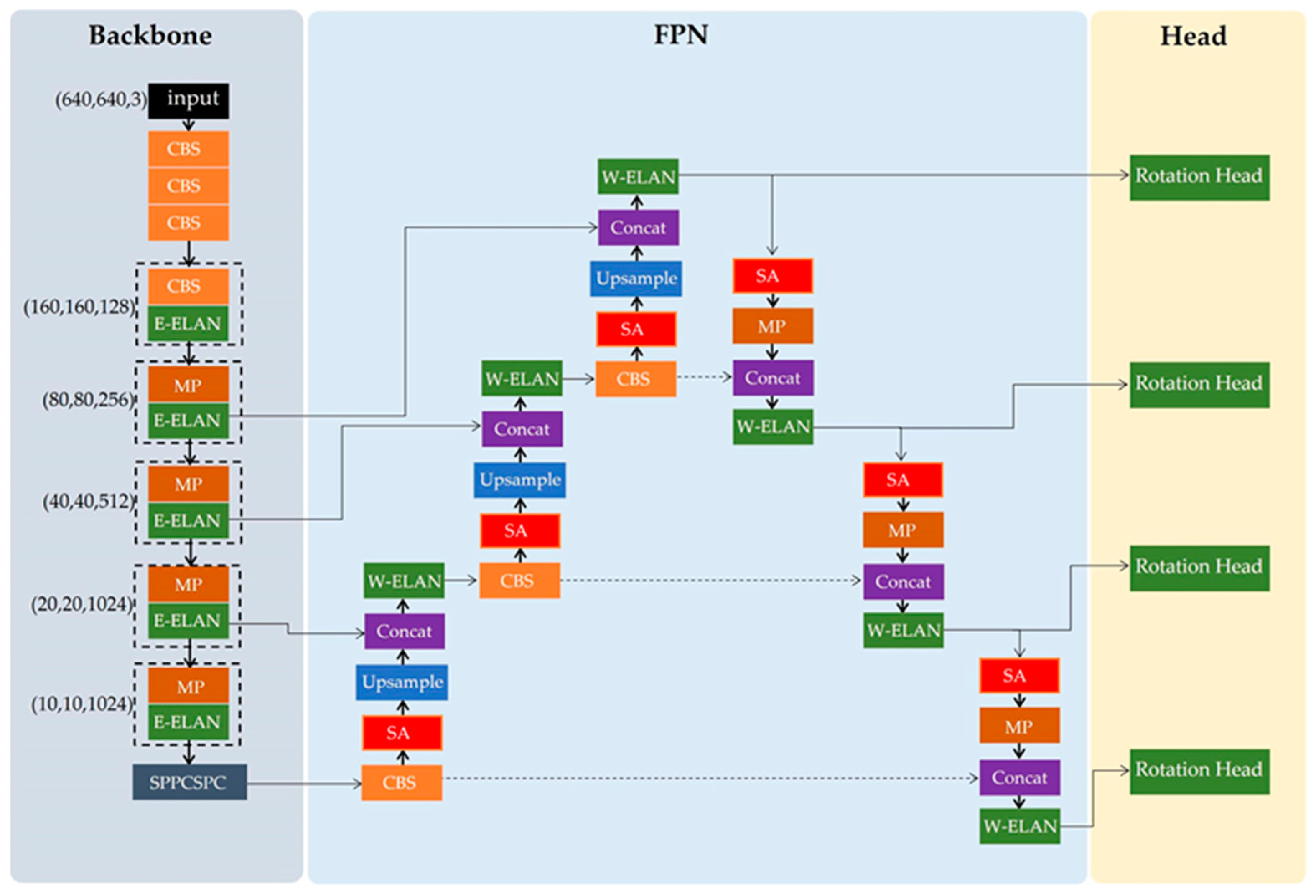

2.1. Cross-Level Feature Extraction Network

2.2. Model Structure

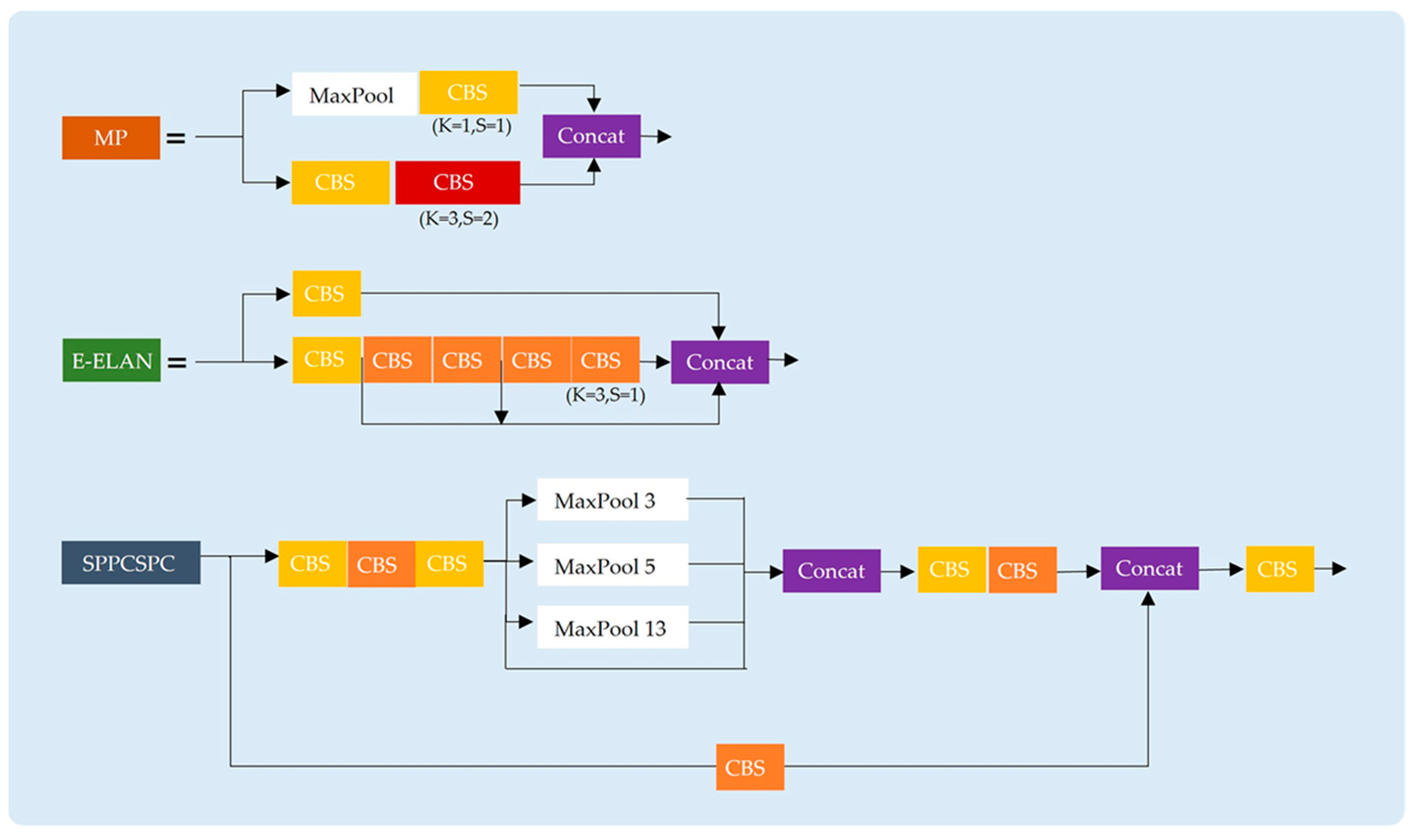

2.2.1. Backbone Network

2.2.2. 4-Layer Multi-Scale Feature Pyramid

2.2.3. Small Ship Attention Mechanism

2.2.4. Rotated Detection Head

- FPN Features: Feature layers extracted by the feature extraction network and used by the subsequent RPN layer and for proposal extraction.

- RPN: This network generates candidate boxes. It performs two tasks: classification, which determines whether each predefined anchor contains a target, and bounding box regression, which refines the anchors into more accurate proposals.

- RoI Pooling: Region of Interest Pooling collects the proposals generated by the RPN and extracts the corresponding regions from the FPN Features to produce proposal feature maps.

- Fully Connected Layer: Performs classification and regression. It uses the proposal feature maps to compute the specific category and, at the same time, applies another round of bounding box regression to obtain the final precise position of the detection box.

- Three prediction branches: These output three parameters: the confidence score p, the horizontal box regression parameter u, and the rotated box regression parameter v.
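
The bounding box regression step mentioned in the RPN and fully connected layer items can be sketched with the offset parameterization popularised by Faster R-CNN. Whether this detection head uses exactly that form is not stated here, so the sketch is an assumption; the function name `decode_box` and the `(cx, cy, w, h)` box layout are hypothetical choices for illustration.

```python
import math


def decode_box(anchor, deltas):
    """Refine an anchor box with predicted regression offsets.

    anchor: (cx, cy, w, h) centre coordinates, width, and height.
    deltas: (dx, dy, dw, dh) predicted offsets.

    Assumption: the centre shift is scaled by the anchor size and the
    size change is predicted in log space, as in Faster R-CNN.
    """
    cx, cy, w, h = anchor
    dx, dy, dw, dh = deltas
    return (
        cx + dx * w,       # shift the centre horizontally
        cy + dy * h,       # shift the centre vertically
        w * math.exp(dw),  # rescale the width
        h * math.exp(dh),  # rescale the height
    )


# Zero offsets leave the anchor unchanged; non-zero offsets move and
# resize it.
anchor = (50.0, 50.0, 20.0, 10.0)
unchanged = decode_box(anchor, (0.0, 0.0, 0.0, 0.0))
shifted = decode_box(anchor, (0.5, -1.0, 0.0, 0.0))
```

Predicting size changes in log space keeps the decoded width and height positive for any real-valued output.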

2.2.5. The Sine–Cosine Angle Prediction Branch

2.2.6. Loss Function

3. Experiments and Results

3.1. Experimental Platform

3.2. Experimental Parameters

3.3. Datasets

- (1) HRSC2016

- (2) DOTA

3.4. Evaluation Indicators

3.5. Results

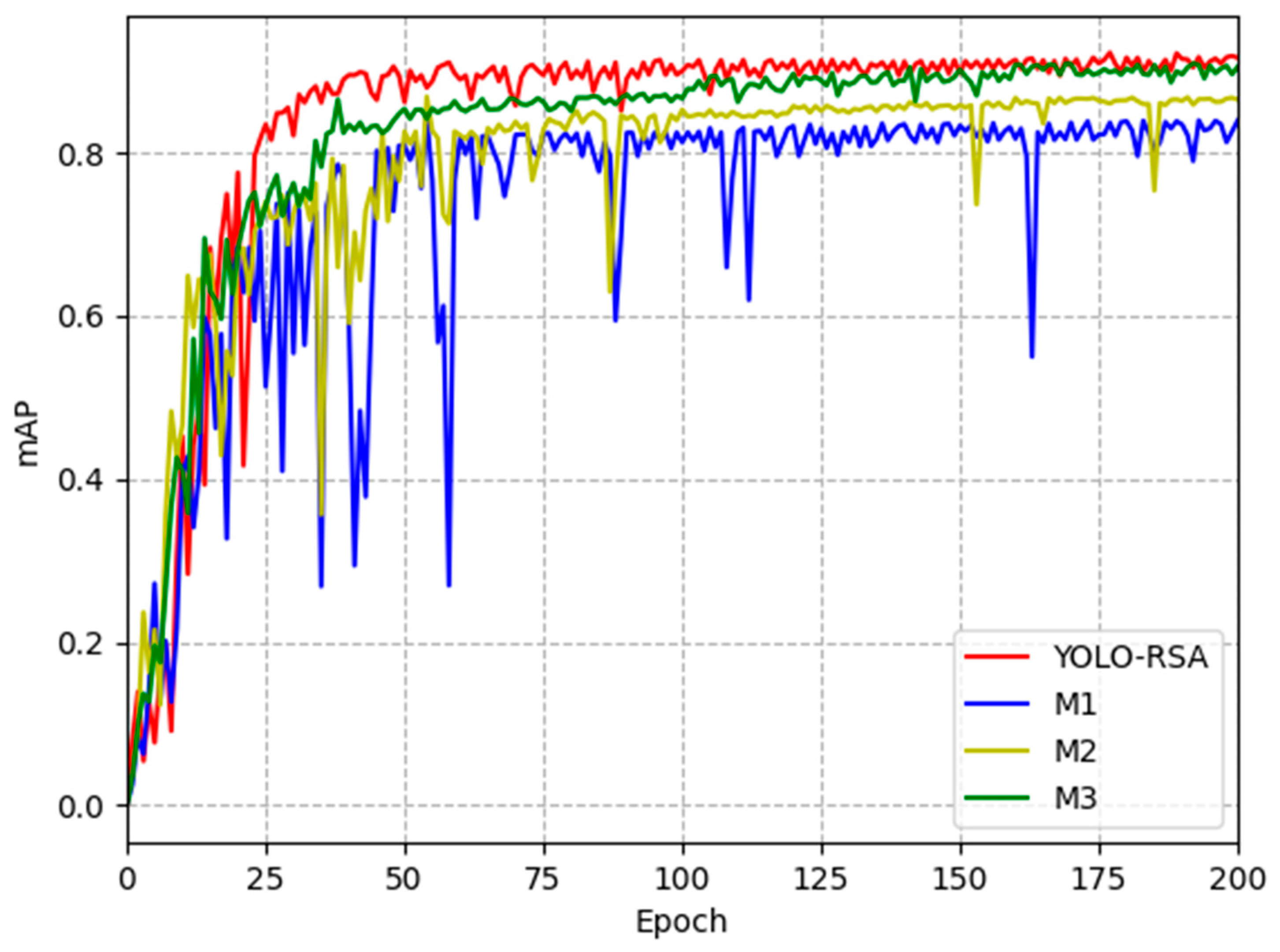

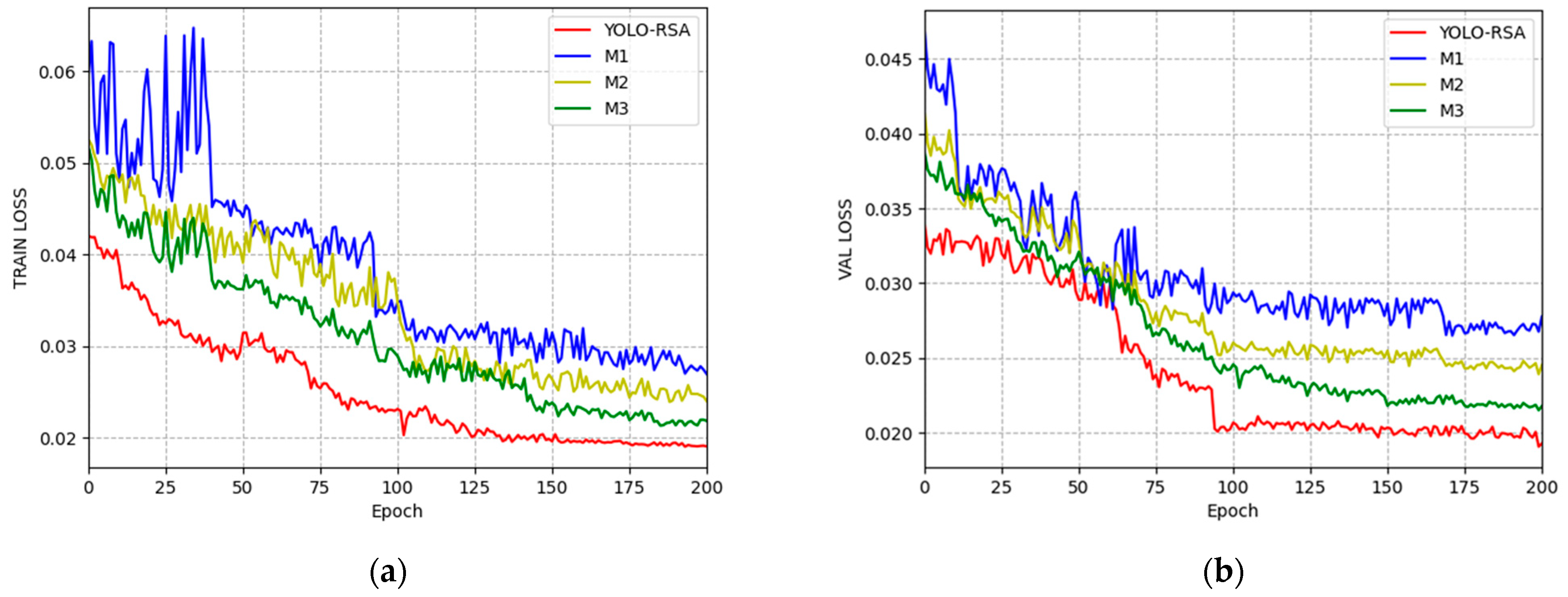

3.5.1. Ablation Experiment

3.5.2. Comparison Experiment with Other Models

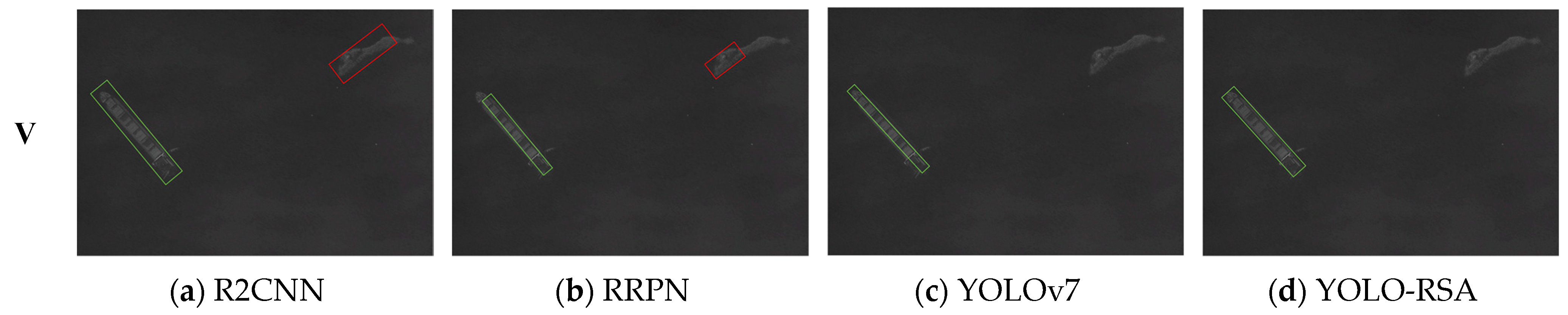

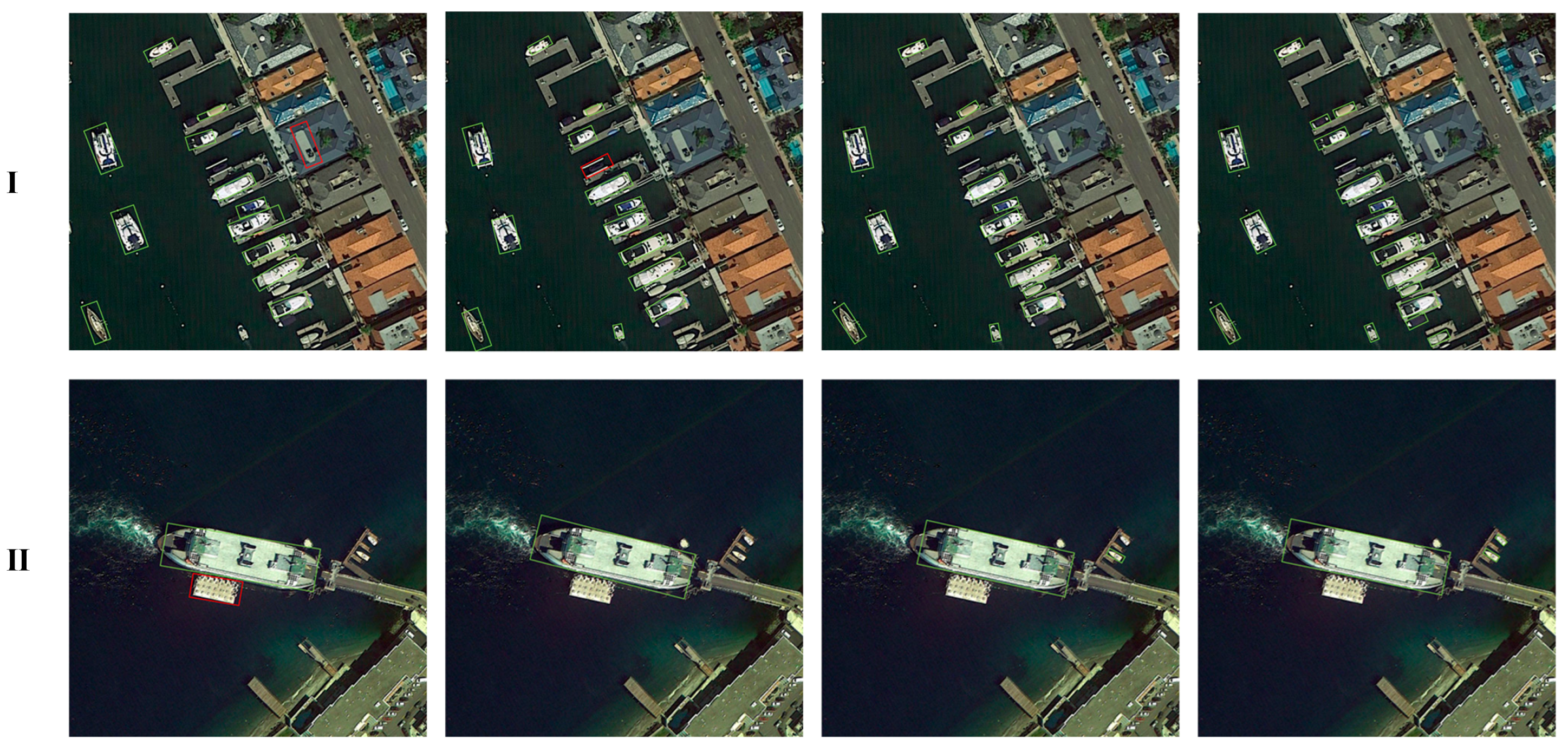

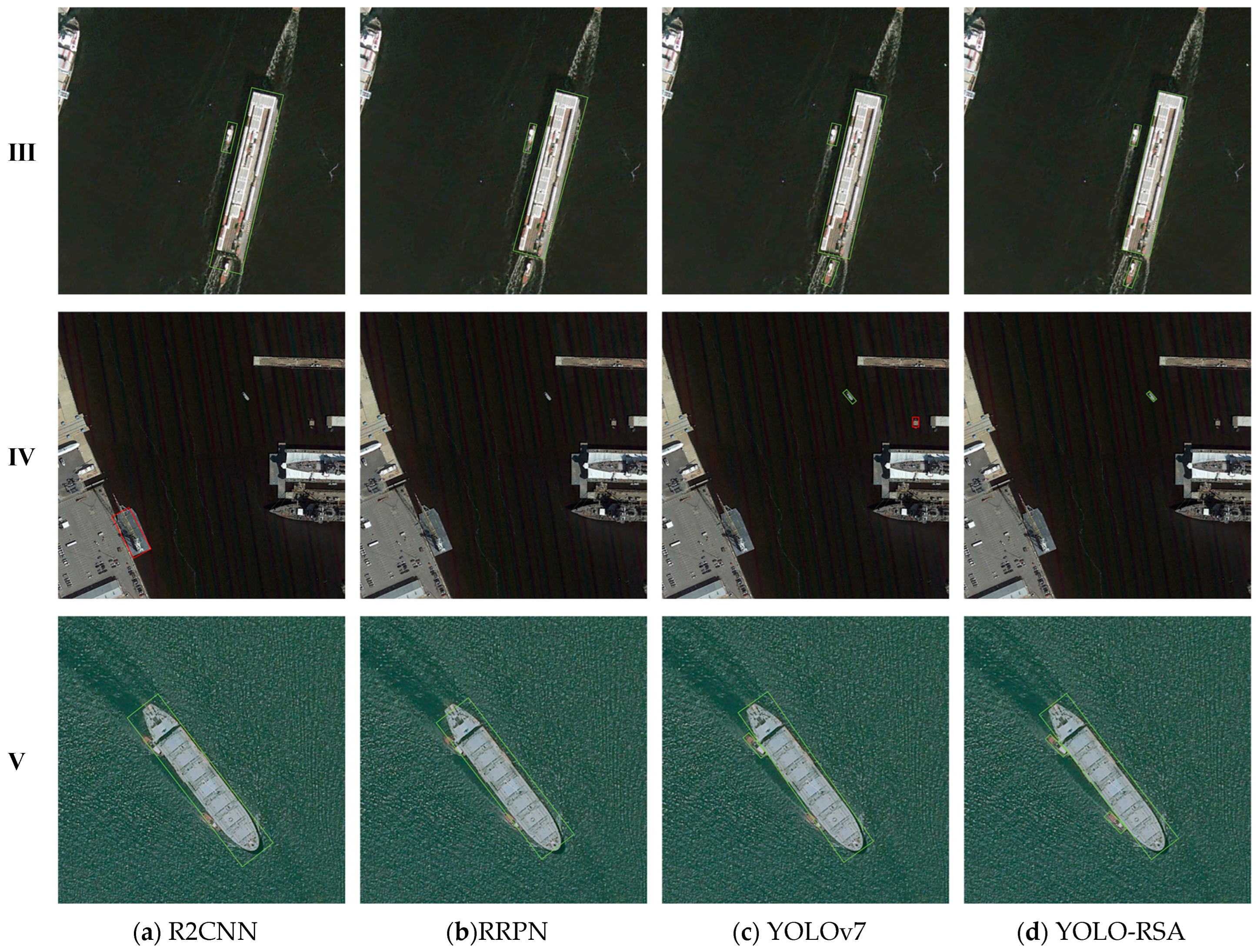

3.5.3. Generalizability Experiments

- (1) Effectiveness of Ship Detection in Different Scenarios

- (2) Detection Effectiveness of Small Ship Targets

3.6. Discussion of Experimental Results

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Datasets | HRSC2016 | DOTA |
|---|---|---|
| Image number | 1061 | 1014 |
| Ship number | 2976 | 73,824 |
| Size (pixels) | 300 × 300~1500 × 900 | 800 × 800~1500 × 1500 |
| Resolution (m) | 0.4~2 | - |

| No. | Improvement Strategy | Dataset | P | R | mAP |
|---|---|---|---|---|---|
| 1 | M1 | HRSC2016 | 0.837 | 0.846 | 0.839 |
| 2 | M1 + 4-FP (M2) | HRSC2016 | 0.874 | 0.853 | 0.865 |
| 3 | M1 + 4-FP + SA (M3) | HRSC2016 | 0.911 | 0.897 | 0.905 |
| 4 | M1 + 4-FP + SA + SC (YOLO-RSA) | HRSC2016 | 0.923 | 0.911 | 0.917 |
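
The contribution of each ablation step can be read off the mAP column as a simple difference between consecutive rows. The sketch below only does that arithmetic on the values in the table; the names `ablation_map` and `step_gains` are hypothetical.

```python
# mAP values on HRSC2016, copied from the ablation table above.
ablation_map = [
    ("M1", 0.839),
    ("M2", 0.865),
    ("M3", 0.905),
    ("YOLO-RSA", 0.917),
]


def step_gains(rows):
    """Return the mAP gain of each configuration over the previous one."""
    gains = []
    for (_, previous), (name, current) in zip(rows, rows[1:]):
        # Round to three decimals to match the precision of the table.
        gains.append((name, round(current - previous, 3)))
    return gains


gains = step_gains(ablation_map)
total_gain = round(ablation_map[-1][1] - ablation_map[0][1], 3)

for name, gain in gains:
    print(f"{name}: +{gain:.3f} mAP over the previous row")
print(f"Total gain over M1: +{total_gain:.3f} mAP")
```

On these numbers the largest single step is from M2 to M3, and the three steps together account for the full difference between M1 and YOLO-RSA.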

| Model | Dataset | P | R | mAP |
|---|---|---|---|---|
| R2CNN | HRSC2016 | 0.863 | 0.849 | 0.853 |
| R2CNN | DOTA | 0.658 | 0.437 | 0.483 |
| RRPN | HRSC2016 | 0.882 | 0.839 | 0.864 |
| RRPN | DOTA | 0.588 | 0.639 | 0.572 |
| YOLOv7 | HRSC2016 | 0.912 | 0.864 | 0.887 |
| YOLOv7 | DOTA | 0.896 | 0.724 | 0.735 |
| YOLO-RSA | HRSC2016 | 0.923 | 0.911 | 0.917 |
| YOLO-RSA | DOTA | 0.907 | 0.796 | 0.830 |
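
To make the comparison easier to read, the mAP margin of YOLO-RSA over each baseline can be computed per dataset. This is plain arithmetic on the table values; the names `results_map` and `gain_over` are hypothetical.

```python
# mAP values copied from the comparison table above.
results_map = {
    "R2CNN": {"HRSC2016": 0.853, "DOTA": 0.483},
    "RRPN": {"HRSC2016": 0.864, "DOTA": 0.572},
    "YOLOv7": {"HRSC2016": 0.887, "DOTA": 0.735},
    "YOLO-RSA": {"HRSC2016": 0.917, "DOTA": 0.830},
}


def gain_over(baseline, dataset, ours="YOLO-RSA"):
    """Return the mAP margin of `ours` over `baseline` on one dataset."""
    margin = results_map[ours][dataset] - results_map[baseline][dataset]
    # Round to three decimals to match the precision of the table.
    return round(margin, 3)


for baseline in ("R2CNN", "RRPN", "YOLOv7"):
    for dataset in ("HRSC2016", "DOTA"):
        margin = gain_over(baseline, dataset)
        print(f"YOLO-RSA vs {baseline} on {dataset}: +{margin:.3f} mAP")
```

For every baseline in the table, the margin on DOTA is larger than the margin on HRSC2016.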

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Fang, Z.; Wang, X.; Zhang, L.; Jiang, B. YOLO-RSA: A Multiscale Ship Detection Algorithm Based on Optical Remote Sensing Image. J. Mar. Sci. Eng. 2024, 12, 603. https://doi.org/10.3390/jmse12040603
