Abstract

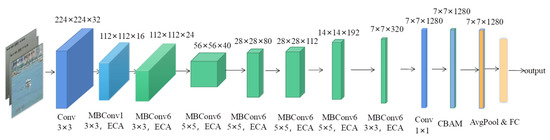

Motivated by the requirements of safe ship navigation in fog, with a particular emphasis on avoiding ship collisions and improving navigational abilities, we constructed a fog navigation dataset and developed a new method for enhancing foggy images and perceived visibility. The method uses a discriminant deep learning architecture based on the EfficientNet neural network, in which we replaced the squeeze-and-excitation (SE) module with an efficient channel attention (ECA) module, incorporated a convolutional block attention module (CBAM), and adopted the focal loss function. The accuracy of our model exceeded 95%, which meets the needs of an intelligent ship navigation environment in foggy conditions. As part of our research, we also determined the best enhancement algorithm for each fog class according to the classification results.

1. Introduction

Sea fog is a dangerous weather phenomenon. Under foggy weather conditions, visibility is low and it is difficult to identify ships, obstacles, objects, and navigation marks. Difficulties in ship positioning and navigation, which affect the safety of ship avoidance and rendezvous, can result in water traffic accidents [1]. According to statistics, the accident incidence rate in dense fog is more than 70% [2]. In foggy conditions, the influences of automatic identification system (AIS) information lag, weak radar detection performance, and wind and wave flow worsen ship maneuverability and greatly increase the difficulty of positioning and navigation, imperiling the safety of ships sailing in busy shipping routes.

Many scholars have introduced artificial intelligence technology into the field of shipping using deep learning techniques to guide the transformation and upgrading of the shipping industry and improve its ability to perceive danger [3,4]. Varelas [5] described Danaos Corporation’s innovative toolkit called Operations Research In Ship Management (ORISMA), which optimizes ship routing by considering financial data, hydrodynamic models, weather conditions, and marketing forecasts. ORISMA maximizes revenue by optimizing fleetwide performance instead of single-vessel performance and accounts for financial benefits after voyage completion. ShipHullGAN [6] is a deep learning model that uses convolutional generative adversarial networks (GANs) to generate and represent ship hulls in a versatile way. This model addresses the limitations of current parametric ship design, which only allows for specific ship types to be modeled.

The current emphasis in intelligent ship development is navigational safety [7], making improvements in ship navigation in fog increasingly urgent. To improve ships’ perception ability in fog, foggy conditions must first be detected reliably so that each condition can be treated with the method best suited to it. Many scholars have studied the relevant perception and classification algorithms, including a sea visibility detection method based on image saturation, but advection fog, lighting, and other factors introduce large errors [8]. Kim et al. [9] used satellite observation data and aerosol lidar detection along with cloud removal and fog edge detection to determine the amount of fog, but the method was only applicable to satellite data. Traditional image-processing methods are susceptible to weather and hardware constraints as well as atmospheric transmission rates. Cornejo-Bueno et al. [10] applied a neural network trained with the ELM algorithm to predict low-visibility events from atmospheric predictive variables, achieving highly accurate predictions within a half-hour time horizon. Their study provided a full characterization of fog events in the study area, which is affected by orographic fog that causes traffic problems year-round. Palvanov [11] proposed VisNet, an approach for estimating visibility distances from camera imagery in various foggy conditions. It uses three streams of deep integrated convolutional neural networks connected in parallel and is trained on a large dataset of three million outdoor images with exact visibility values. The model outperformed previous methods in classification on three different datasets.

Visibility detection using neural networks [12] is applicable to the classification and detection of fog on highways and at airports, but it has limited detection ability and high hardware requirements. Marine and road environments are quite different, making this method impractical for use with ships. Engin [13] proposed Cycle-Dehaze, an end-to-end single-image defogging network that improves the CycleGAN formulation and the visual quality of the images. Shao [14] generalized image defogging to real hazy images by establishing a domain adaptation paradigm for defogging. Qin [15] proposed an end-to-end feature fusion attention network (FFA-Net) that directly restores fog-free images and improved the highest PSNR on the SOTS indoor test dataset from 30.23 dB to 36.39 dB. Park [16] proposed a method that fuses heterogeneous generative adversarial networks (CycleGAN and cGAN) and works on both synthetic and realistic hazy images. Wu [17] constructed AECR-Net, a compact defogging network that combines an auto-encoder-like framework balancing performance and memory storage with a contrastive regularization module; the experimental results were significantly better than those of previous methods. Ullah [18] proposed the lightweight convolutional neural network LD-Net, which uses a transformed atmospheric scattering model to jointly estimate the transmission map and atmospheric light and reconstruct haze-free images.

In this paper, we examined the requirements of ship navigation safety in fog and the problem that ships are prone to collisions when visibility is poor. We then studied the machine classification and enhancement of foggy images, built a ship navigation fog environment classification dataset, and applied image enhancement technology to improve visual perception in fog, laying a foundation for unmanned ships and remote operation.

3. Analysis of Images for Perception Enhancement

By weakening the influence of fog factors and highlighting the environmental characteristics of the ship, we sought to enhance the perception when fog was present and improve the ability to recognize ships. We mainly employed image enhancement and physical model-based methods to achieve these tasks.

3.1. Enhanced Ship Navigation Fog Environment Perception Based on Image Enhancement

Image-enhancement methods improve visibility by increasing contrast, but at the cost of losing some environmental information. These methods are mainly divided into two categories: global enhancement and local enhancement.

- (1)

- Global enhancement relies mainly on histogram equalization, Retinex theory, and high-contrast retention. Histogram equalization equalizes the histogram of the original image to make the gray-level distribution uniform and improve the contrast [26]. Retinex theory builds on trichromatic theory and color constancy, balancing dynamic range compression, edge enhancement, and color constancy [27]. High-contrast retention preserves contrast at the boundaries between colors and between light and shade, with other areas appearing medium gray. The modified image is superimposed on the original image one or more times to produce an enhanced image.

- (2)

- Local enhancement includes adaptive histogram equalization (AHE) and contrast-limited adaptive histogram equalization (CLAHE). The AHE algorithm calculates a local histogram and redistributes the brightness to change the contrast and thereby enhance the image [28]. The CLAHE algorithm builds on AHE and solves its problem of excessive noise amplification by limiting contrast, with an interpolation method accelerating the histogram equalization [29].
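The global histogram equalization described in item (1) can be sketched as follows. This is a minimal NumPy illustration, not the implementation used in our experiments; the function name `equalize_histogram` and the synthetic low-contrast image are assumptions made for the example.

```python
import numpy as np

def equalize_histogram(gray: np.ndarray) -> np.ndarray:
    """Global histogram equalization for an 8-bit grayscale image."""
    hist = np.bincount(gray.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]  # CDF value at the darkest occupied gray level
    total = gray.size
    if total == cdf_min:
        # Constant image: there is no contrast to redistribute.
        return gray.copy()
    # Map each gray level through the normalized cumulative distribution.
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255.0)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[gray]

# Synthetic low-contrast "foggy" image (hypothetical data): levels 150..180 only.
rng = np.random.default_rng(0)
foggy = rng.integers(150, 181, size=(64, 64), dtype=np.uint8)
enhanced = equalize_histogram(foggy)
```

After equalization the occupied gray levels are stretched over the full 0 to 255 range. AHE applies the same mapping per local tile, and CLAHE additionally clips each tile histogram before computing the mapping; OpenCV provides an implementation through `cv2.createCLAHE`.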

3.2. Enhanced Perception of Ship Navigation Fog Environment Based on the Physical Model

The physical model-based approach estimates atmospheric light and transmission from the atmospheric scattering model. According to the mapping relationship of the atmospheric scattering model, a single-image defogging algorithm based on the transmission map and the dark channel prior was established to realize image enhancement [30]. Based on this theory, the fog concentration could be estimated from the dark channel image, the atmospheric light could be determined more accurately, and the transmission could be calculated by inverting the atmospheric scattering model, enabling the identification of ships and other objects in the fog.
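As a rough illustration of this pipeline, the sketch below follows the widely used dark channel prior formulation, in which a hazy image I is modeled as I(x) = J(x)t(x) + A(1 − t(x)), with scene radiance J, transmission t, and atmospheric light A. It is not the implementation used in our experiments. The patch size, the haze-retention factor `omega`, the lower bound `t_min`, and the use of the mean of the haziest pixels for A are assumptions chosen for the example.

```python
import numpy as np

def min_filter(channel, patch):
    """Sliding-window minimum over a patch x patch neighborhood (edge-padded)."""
    pad = patch // 2
    padded = np.pad(channel, pad, mode="edge")
    h, w = channel.shape
    out = np.full((h, w), np.inf)
    for dy in range(patch):
        for dx in range(patch):
            out = np.minimum(out, padded[dy:dy + h, dx:dx + w])
    return out

def dark_channel(image, patch=15):
    """Minimum over the color channels, then over a local patch."""
    return min_filter(image.min(axis=2), patch)

def estimate_atmospheric_light(image, dark, top_fraction=0.001):
    """Average color of the pixels with the largest dark-channel values (simplification)."""
    n = max(1, int(dark.size * top_fraction))
    idx = np.argsort(dark.ravel())[-n:]
    return image.reshape(-1, 3)[idx].mean(axis=0)

def dehaze(image, patch=15, omega=0.95, t_min=0.1):
    """Invert I = J*t + A*(1 - t) for an RGB image with values in [0, 1]."""
    dark = dark_channel(image, patch)
    A = np.maximum(estimate_atmospheric_light(image, dark), 1e-6)
    transmission = 1.0 - omega * dark_channel(image / A, patch)
    transmission = np.clip(transmission, t_min, 1.0)
    recovered = (image - A) / transmission[..., None] + A
    return np.clip(recovered, 0.0, 1.0), transmission

# Synthetic hazy image (hypothetical data): uniform transmission 0.5, light 0.9.
rng = np.random.default_rng(0)
scene = rng.random((32, 32, 3))
hazy = scene * 0.5 + 0.9 * (1.0 - 0.5)
recovered, transmission = dehaze(hazy, patch=7)
```

The lower bound `t_min` keeps the division stable in dense fog, where the estimated transmission approaches zero.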

5. Comparative Analysis of the Results of the Fog Environment Visibility Experiment

5.1. Classification Experiment of Ship Navigation Fog Environment

To verify the effectiveness of our model, we analyzed our dataset and conducted ablation experiments along with performance measurements of the classification results.

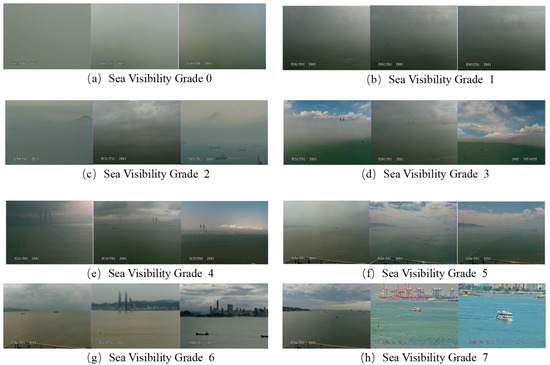

5.1.1. Description of the Fog Environment Visibility Dataset

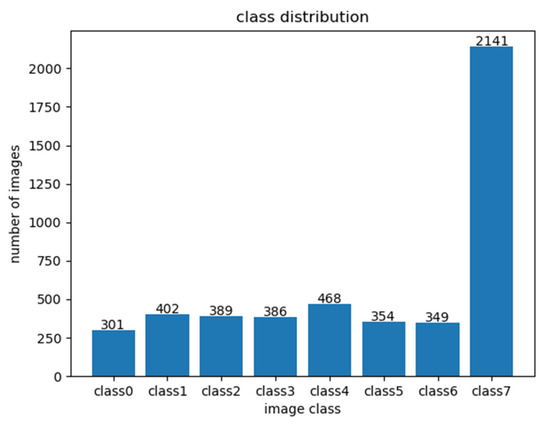

The dataset contained 4790 images divided into eight categories. Figure 6 shows the statistical results of the dataset. Its main feature was an uneven distribution of samples, with the first label having the fewest samples (301 pictures) and the eighth label having the most (2141 pictures). The resolution of the dataset images was constant at 704 × 576 pixels.

Figure 6.

Dataset label distribution.

5.1.2. Ablation Experiment of Ship Navigation Fog Environment Classification Model

According to the classification requirements of our environment, accuracy was the main evaluation index, combined with the number of multiply-accumulate operations (MACS), the number of model parameters, and the model size. The accuracy is calculated as follows:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + FP + FN + TN} \tag{8}$$

In Equation (8), TP, FP, FN, and TN represent the number of positive samples correctly identified (true positives), the number of negative samples incorrectly identified as positive (false positives), the number of positive samples incorrectly identified as negative (false negatives), and the number of negative samples correctly identified (true negatives), respectively.
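For a multi-class problem such as ours, these four counts can be obtained per class in a one-vs-rest fashion from a confusion matrix. The sketch below illustrates this with a small hypothetical three-class matrix; the helper names and the numbers are assumptions for the example and are not taken from our results.

```python
def binary_counts(confusion, cls):
    """One-vs-rest TP, FP, FN, TN for class `cls` (rows: true label, columns: prediction)."""
    total = sum(sum(row) for row in confusion)
    tp = confusion[cls][cls]
    fn = sum(confusion[cls]) - tp                 # true `cls`, predicted as another class
    fp = sum(row[cls] for row in confusion) - tp  # another class, predicted as `cls`
    tn = total - tp - fn - fp
    return tp, fp, fn, tn

def accuracy(tp, fp, fn, tn):
    """Accuracy as the share of correctly identified samples."""
    return (tp + tn) / (tp + fp + fn + tn)

# Hypothetical 3-class confusion matrix.
confusion = [
    [8, 2, 0],
    [1, 7, 2],
    [0, 1, 9],
]
tp, fp, fn, tn = binary_counts(confusion, 0)
class0_accuracy = accuracy(tp, fp, fn, tn)
```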

An ablation experiment measures the impact of certain model components (such as layers, features, or parameters) on model performance by removing or replacing them. To verify the effectiveness of our model, we performed the following four ablation experiments:

- (1)

- Basic EfficientNet using the basic EfficientNet network for training;

- (2)

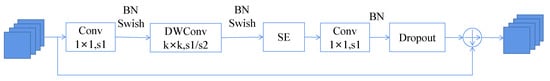

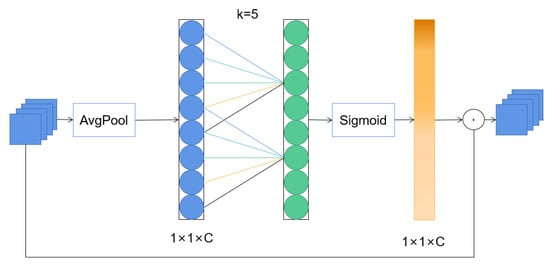

- EfficientNet + ECA using the ECA attention module based on Equation (1) to replace the SE module in the MBConv structure;

- (3)

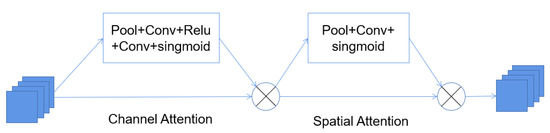

- EfficientNet + ECA + CBAM with the CBAM attention module added after the last convolutional layer based on Equation (2); and

- (4)

- Based on (3), the focal loss function was used to form the final improved EfficientNet model.
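To illustrate the loss used in experiment (4), the sketch below computes the multi-class focal loss in its commonly cited form, FL(p_t) = −α_t (1 − p_t)^γ log(p_t), where p_t is the predicted probability of the true class. It is a NumPy illustration rather than our training code; the value γ = 2.0, the optional per-class weights `alpha`, and the example probabilities are assumptions.

```python
import numpy as np

def focal_loss(probs, labels, gamma=2.0, alpha=None):
    """Mean focal loss for predicted class probabilities and integer labels."""
    probs = np.asarray(probs, dtype=float)
    labels = np.asarray(labels)
    # Probability assigned to the true class of each sample.
    p_t = np.clip(probs[np.arange(len(labels)), labels], 1e-12, 1.0)
    # Down-weight well-classified samples (p_t close to 1).
    weights = (1.0 - p_t) ** gamma
    if alpha is not None:
        weights = weights * np.asarray(alpha, dtype=float)[labels]
    return float(np.mean(-weights * np.log(p_t)))

# Hypothetical predictions: one easy sample (p_t = 0.9) and one hard sample (p_t = 0.3).
probs = [[0.9, 0.05, 0.05],
         [0.2, 0.5, 0.3]]
labels = [0, 2]
cross_entropy = focal_loss(probs, labels, gamma=0.0)  # gamma = 0 reduces to cross-entropy
focal = focal_loss(probs, labels, gamma=2.0)
```

With γ > 0 the easy sample contributes almost nothing to the loss, so training concentrates on hard and under-represented samples, which is relevant for a dataset with an uneven label distribution.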

The results of the ablation experiments are shown in Table 3.

Table 3.

Ablation experiments of ship navigation fog environment classification model.

Based on the experiments summarized in the table, we drew the following conclusions.

- When comparing Models (1) and (2), replacing the SE module with the ECA attention module improved the accuracy by 0.21% and reduced the MACS, the number of parameters, and the model size, showing that the ECA module was lighter and offered a stronger attention learning ability than the SE module.

- When comparing Models (3) and (2), the results showed that adding the CBAM attention module increased the accuracy, with only a slight increase in MACS and an increase of 0.07% in the number of parameters and the model size, indicating that adding CBAM extracted better network features with greater accuracy.

- When comparing Models (4) and (3), the results showed that the focal loss function accelerated network learning. Under the same input conditions, the accuracy of Model (4) improved by 0.06%.

In summary, the modifications to EfficientNet were effective. The accuracy of the improved model reached 95.05% on the validation set, with 0.401 G MACS, 4.20 M model parameters, and a model size of 16.31 MB. Relative to the basic EfficientNet model, the accuracy of the improved perception model increased by 0.42%, and the lightweight model preserved high accuracy.

5.1.3. Classification Performance Analysis of Ship Navigation Fog Environment

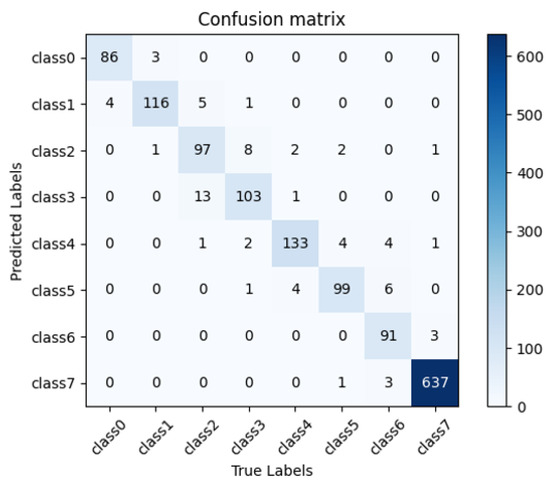

The visibility grade classification results are shown in Table 1. After classifying the validation set, we compiled a confusion matrix over all images to show the classification results. The confusion matrix is shown in Figure 7.

Figure 7 shows that the overall accuracy of ship navigation fog environment classification reached 95.05%, with good performance in all grades. The data were mainly concentrated on the diagonal and its adjacent cells, with only a small number of misclassified samples. According to these results, we considered our model’s performance stable and reliable.

Figure 7.

Model confusion matrix plot.
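The concentration of samples on the diagonal and its adjacent cells can be quantified directly from a confusion matrix. The sketch below is an illustration under assumed data; the four-grade matrix is hypothetical and does not reproduce the values in Figure 7.

```python
import numpy as np

def diagonal_summary(confusion):
    """Return (exact accuracy, share of samples at most one grade off)."""
    confusion = np.asarray(confusion, dtype=float)
    total = confusion.sum()
    exact = np.trace(confusion) / total
    # Cells directly above and below the main diagonal: errors of one grade.
    adjacent = (np.trace(confusion, offset=1) + np.trace(confusion, offset=-1)) / total
    return exact, exact + adjacent

# Hypothetical 4-grade confusion matrix (rows: true grade, columns: predicted grade).
confusion = [
    [5, 1, 0, 0],
    [1, 4, 1, 0],
    [0, 1, 5, 1],
    [1, 0, 0, 4],
]
exact, within_one = diagonal_summary(confusion)
```

In this toy example, 18 of 24 samples lie on the diagonal and 23 of 24 lie on the diagonal or next to it.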

5.2. Visibility Enhancement Experiment of Ship Fog Environment

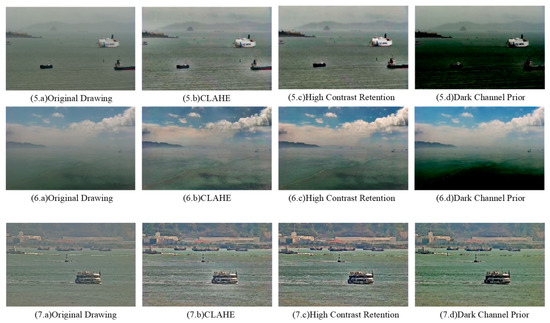

5.2.1. Comparison of Visibility Enhancement Experiment in Fog Environment

To verify the effectiveness of our enhancement algorithm, we used high-contrast retention, CLAHE, and a dark channel algorithm for a visibility enhancement experiment. The specific process was as follows:

- Samples in dataset classes 0–6 were processed with each enhancement algorithm;

- The classification model presented in this paper was used to classify the images processed in the previous step;

- The classification result of each processed image was compared with the level of the original image to determine whether the visibility level had improved, in which case the sample was counted as effectively enhanced;

- The ratio of the number of effectively enhanced samples at each level to the total number of samples at that level was calculated to obtain the effective enhancement rate.
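The last two steps of this process can be sketched as follows. The sketch assumes that a higher class index corresponds to better visibility, so that a sample counts as effectively enhanced when its predicted class after processing is higher than its original class. The function name and the sample data are hypothetical.

```python
from collections import Counter

def effective_enhancement_rate(original_levels, enhanced_levels):
    """Per-level share of samples whose predicted level rose after enhancement."""
    totals = Counter(original_levels)
    improved = Counter(
        orig for orig, enh in zip(original_levels, enhanced_levels)
        if enh > orig  # assumption: a higher level means better visibility
    )
    return {level: improved[level] / totals[level] for level in sorted(totals)}

# Hypothetical levels before enhancement and predicted levels after enhancement.
original = [0, 0, 0, 1, 1, 2, 2, 2, 2]
enhanced = [1, 0, 2, 1, 3, 3, 2, 4, 1]
rates = effective_enhancement_rate(original, enhanced)
```

Running the same computation once per enhancement algorithm yields one rate per level and algorithm, which is the form of comparison summarized in Table 4.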

We determined the experimental results of the enhancement model for different visibility levels by comparing the application of different image resolutions and different models. According to the experimental results in Table 4, all three algorithms enhanced visibility. The high-contrast retention algorithm had the best results in class 6, the CLAHE algorithm performed best in classes 0 and 3, and the dark channel prior algorithm performed best in classes 1, 2, 4, and 5.
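In practice, this result allows the enhancement algorithm to be chosen from the predicted fog class. A minimal lookup sketch is shown below; the dictionary and function names are hypothetical, and returning `None` for classes outside 0–6 reflects only that those classes were not part of the enhancement experiment.

```python
# Best-performing algorithm per fog class, as reported for Table 4.
BEST_ALGORITHM = {
    0: "CLAHE",
    1: "dark channel prior",
    2: "dark channel prior",
    3: "CLAHE",
    4: "dark channel prior",
    5: "dark channel prior",
    6: "high-contrast retention",
}

def select_enhancement(fog_class):
    """Return the name of the algorithm to apply, or None if the class was not tested."""
    return BEST_ALGORITHM.get(fog_class)

chosen = select_enhancement(3)
```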

Table 4.

Comparison of visibility enhancement experiments.

5.2.2. Results of Visibility Enhancement in Fog Environment

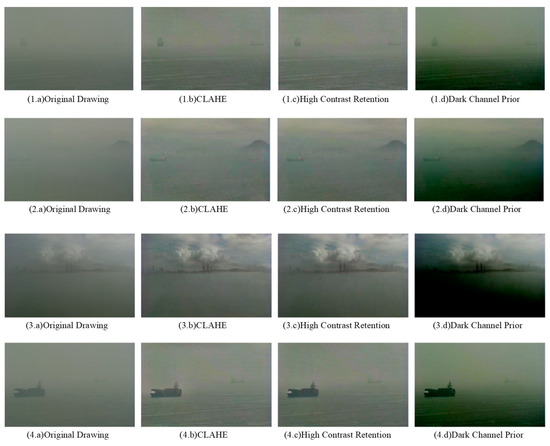

The EfficientNet neural network alone does not directly improve visibility perception. In this manuscript, we utilized the EfficientNet neural network to classify visibility levels and applied existing algorithms such as high-contrast retention, CLAHE, and dark channel enhancement to enhance visibility for different visibility levels. Compared with the original image, a subjective review of the processed images showed that all three algorithms enhanced the visibility and environmental perception, as shown in Figure 8.

Figure 8.

Visibility enhancement results.

6. Conclusions

Given the requirements of safely navigating ships in foggy conditions, we constructed a ship navigation fog environment classification model using deep learning and determined the optimal enhancement algorithm for each type of fog according to the classification results to produce better perception results for navigation. This laid a foundation for intelligent unmanned and remotely operated ships. Our detailed conclusions were as follows.

- (1)

- We constructed a fog environment classification image dataset using visibility grade classification rules and perceived visibility.

- (2)

- Using this dataset, we designed a perceived visibility model structure using a discriminant deep learning architecture and the EfficientNet neural network while adding the CBAM, focal loss, and other improvements. Our experiments showed that our model’s accuracy exceeded 95%, which meets the needs of intelligent ship navigation in foggy conditions.

- (3)

- Using our model and the dataset, we were able to determine the best image-enhancement algorithm based on the type of fog detected. The dark channel prior algorithm worked best with fog classes 1, 2, 4, and 5. The CLAHE algorithm worked best with fog classes 0 and 3. The high-contrast retention algorithm worked best with fog class 6.

Author Contributions

C.W., B.F., Y.L. and S.Z. proposed the idea and derived the algorithm; J.X., L.M. and J.Z. were responsible for the code testing and algorithm results; B.F., Y.L. and R.W. wrote the algorithm and conducted the experiments; J.C., Z.L. and S.S. performed the dataset collection and identification; C.W., B.F., Y.L. and S.Z. performed the theoretical calculations; C.W., B.F., Y.L. and S.Z. wrote the manuscript. All authors analyzed the data, discussed the results, and commented on the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Xiamen Ocean and Fishery Development Special Fund Project, grant number 21CZBO14HJ08, and the Ship Scientific Research Project: Key technology and demonstration of type 2030 green and intelligent ship in Fujian region, grant number CBG4N21-4-4.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

We thank LetPub (www.letpub.com, accessed on 19 May 2023) for its linguistic assistance during the preparation of this manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ye, Y.; Chen, Y.; Zhang, P.; Cheng, P.; Zhong, H.; Hou, H. Traffic Accident Analysis of Inland Waterway Vessels Based on Data-driven Bayesian Network. Saf. Environ. Eng. 2022, 29, 47–57.

- Zhen, G. Discussion on the safe navigation methods of ships in waters with poor visibility. China Water Transp. 2022, 4, 18–20.

- Khan, S.; Goucher-Lambert, K.; Kostas, K.; Kaklis, P. ShipHullGAN: A generic parametric modeller for ship hull design using deep convolutional generative model. Comput. Methods Appl. Mech. Eng. 2023, 411, 116051.

- Wright, R.G. Intelligent autonomous ship navigation using multi-sensor modalities. Trans. Nav. Int. J. Mar. Navig. Saf. Sea Transp. 2019, 13, 3.

- Varelas, T.; Archontaki, S.; Dimotikalis, J.; Turan, O.; Lazakis, I.; Varelas, O. Optimizing ship routing to maximize fleet revenue at Danaos. Interfaces 2013, 43, 37–47.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

- Zhang, D.; Zhao, Y.; Cui, Y.; Wan, C. A Visualization Analysis and Development Trend of Intelligent Ship Studies. J. Transp. Inf. Saf. 2021, 39, 7–16+34.

- Pedersen, M.; Bruslund Haurum, J.; Gade, R.; Moeslund, T.B. Detection of marine animals in a new underwater dataset with varying visibility. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 15–20 June 2019.

- Kim, D.; Park, M.S.; Park, Y.J.; Kim, W. Geostationary Ocean Color Imager (GOCI) marine fog detection in combination with Himawari-8 based on the decision tree. Remote Sens. 2020, 12, 149.

- Cornejo-Bueno, S.; Casillas-Pérez, D.; Cornejo-Bueno, L.; Chidean, M.I.; Caamaño, A.J.; Cerro-Prada, E.; Casanova-Mateo, C.; Salcedo-Sanz, S. Statistical analysis and machine learning prediction of fog-caused low-visibility events at A-8 motor-road in Spain. Atmosphere 2021, 12, 679.

- Palvanov, A.; Young, I.C. Visnet: Deep convolutional neural networks for forecasting atmospheric visibility. Sensors 2019, 19, 1343.

- Huang, L.; Zhang, Z.; Xiao, P.; Sun, J.; Zhou, X. Classification and application of highway visibility based on deep learning. Trans. Atmos. Sci. 2022, 45, 203–211.

- Deniz, E.; Anıl, G.; Hazım Kemal, E. Cycle-Dehaze: Enhanced CycleGAN for Single Image Dehazing. arXiv 2018, arXiv:1805.05308.

- Yuanjie, S.; Lerenhan, L.; Wenqi, R.; Changxin, G.; Nong, S. Domain Adaptation for Image Dehazing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 2808–2817.

- Qin, X.; Wang, Z.; Bai, Y.; Xie, X.; Jia, H. FFA-Net: Feature Fusion Attention Network for Single Image Dehazing. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11908–11915.

- Park, J.; Han, D.K.; Ko, H. Fusion of Heterogeneous Adversarial Networks for Single Image Dehazing. IEEE Trans. Image Process. 2020, 29, 4721–4732.

- Wu, H.; Qu, Y.; Lin, S.; Zhou, J.; Qiao, R.; Zhang, Z.; Xie, Y.; Ma, L. Contrastive Learning for Compact Single Image Dehazing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 10551–10560.

- Ullah, H.; Muhammad, K.; Irfan, M.; Anwar, S.; Sajjad, M.; Imran, A.S.; de Albuquerque, V.H.C. Light-DehazeNet: A Novel Lightweight CNN Architecture for Single Image Dehazing. IEEE Trans. Image Process. 2021, 30, 8968–8982.

- Koschmieder, H. Theorie der horizontalen sichtweite. Beitr. Phys. Freien Atmos. 1924, 33–53, 171–181.

- Middleton, W. Vision through the Atmosphere; University of Toronto Press: Toronto, ON, Canada, 1952.

- Redman, B.J.; van der Laan, J.D.; Wright, J.B.; Segal, J.W.; Westlake, K.R.; Sanchez, A.L. Active and Passive Long-Wave Infrared Resolution Degradation in Realistic Fog Conditions; No. SAND2019-5291C; Sandia National Lab (SNL-NM): Albuquerque, NM, USA, 2019.

- Lu, T.; Yang, J.; Deng, M. A Visibility Estimation Method Based on Digital Total-sky Images. J. Appl. Meteorol. Sci. 2018, 29, 63–71.

- Kaiming, H.; Jian, S.; Xiaoou, T. Single Image Haze Removal Using Dark Channel Prior. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Miami Beach, FL, USA, 20–26 June 2009; pp. 1956–1963.

- Ortega, L.; Otero, L.D.; Otero, C. Application of machine learning algorithms for visibility classification. In Proceedings of the 2019 IEEE International Systems Conference (SysCon), Orlando, FL, USA, 8–11 April 2019; IEEE: Piscataway, NJ, USA, 2019.

- Huang, L. Wenyuan Bridge. Navigation Meteorology and Oceanography. Hubei; Wuhan University of Technology Press: Wuhan, China, 2014.

- Li, Z.; Che, W.; Qian, M.; Xu, X. An Improved Image Defogging Algorithm Based on Histogram Equalization. Henan Sci. 2021, 39, 1–6.

- Zhu, Y.; Lin, J.; Qu, F.; Zheng, Y. Improved Adaptive Retinex Image Enhancement Algorithm. In Proceedings of the ICETIS 2022, 7th International Conference on Electronic Technology and Information Science, Harbin, China, 21–23 January 2022; pp. 1–4.

- Wen, H.; Li, J. An adaptive threshold image enhancement algorithm based on histogram equalization. China Integr. Circuit 2022, 31, 38–42+71.

- Fang, D.; Fu, Q.; Wu, A. Foggy image enhancement based on adaptive dynamic range CLAHE [J/OL]. Laser Optoelectron. Prog. 2022, 9, 1–14.

- Yin, J.; He, J.; Luo, R.; Yu, W. A Defogging Algorithm Combining Sky Region Segmentation and Dark Channel Prior. Comput. Technol. Dev. 2022, 32, 216–220.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019.

- Nayak, D.R.; Padhy, N.; Mallick, P.K.; Zymbler, M.; Kumar, S. Brain tumor classification using dense efficient-net. Axioms 2022, 11, 34.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Goswami, V.; Sharma, B.; Patra, S.S.; Chowdhury, S.; Barik, R.K.; Dhaou, I.B. IoT-Fog Computing Sustainable System for Smart Cities: A Queueing-based Approach. In Proceedings of the 2023 1st International Conference on Advanced Innovations in Smart Cities (ICAISC), Jeddah, Saudi Arabia, 23–25 January 2023.

- Li, X.; Wang, W.; Wu, L.; Chen, S.; Hu, X.; Li, J.; Tang, J.; Yang, J. Generalized focal loss: Learning qualified and distributed bounding boxes for dense object detection. Adv. Neural Inf. Process. Syst. 2020, 33, 21002–21012.

- Thombre, S.; Zhao, Z.; Ramm-Schmidt, H.; Garcia, J.M.V.; Malkamaki, T.; Nikolskiy, S.; Hammarberg, T.; Nuortie, H.; Bhuiyan, M.Z.H.; Sarkka, S.; et al. Sensors and AI techniques for situational awareness in autonomous ships: A review. IEEE Trans. Intell. Transp. Syst. 2020, 23, 64–83.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).