Abstract

Climate change intensifies agricultural risks, requiring an integrated analysis of climatic, hydrological, and crop data to support resilient farming. Despite advances in remote sensing, in-field sensors, and artificial intelligence, fragmented data silos hinder spatiotemporal risk assessments by requiring labor-intensive data handling. We present agriclima, a federated, cloud-native, FAIR-by-design platform that unifies heterogeneous agricultural and environmental datasets under consistent identity, policy, and metadata governance. Its scalable open-source architecture, compliance with INSPIRE and RNDT standards, and privacy-preserving access enable researchers and decision-makers to perform comprehensive analyses with minimal coding, accelerating data-driven agricultural risk management. Developed and tested in a research project by a consortium of stakeholders in agricultural risk management, the platform was evaluated via: (1) FAIR assessment of 26 datasets using F-UJI, (2) system performance monitoring on Kubernetes, and (3) a demonstrative spatiotemporal aggregation use case. It achieved 80% average FAIR compliance, with perfect accessibility (7.00/7.00), while findability and reusability remain key areas for improvement. Performance showed stable operation (CPU 17.24%, memory 49.89%) with capacity headroom. The demonstrative use case validated that researchers can conduct spatiotemporal analyses with minimal coding effort through the abstracted data access components. Beyond technical evaluation, we share lessons learned to guide future platform development and metadata standardization, highlighting the platform’s effectiveness as a foundation for data-driven agricultural decision-making.

1. Introduction

1.1. Climate Change Challenges in Agriculture

The agricultural sector is confronted with unprecedented challenges arising from climate change. With temperatures on the rise and extreme weather events becoming more frequent, crop yields and food security are increasingly at risk []. These changes manifest through various mechanisms: altered precipitation patterns affect water availability for irrigation [], temperature extremes impact crop phenology and yields [], and increased frequency of extreme events such as droughts, floods, and frosts cause direct crop damage and economic losses [].

To address these threats, researchers and practitioners are focusing on the complex relationships between climate, hydrology, and crop production [] by relying on new information technologies and data processing techniques []. Understanding and mitigating these risks require integrated analysis that combines multiple data sources across spatial and temporal scales, from field-level observations to regional climate projections.

1.2. Data Sources for Agricultural Risk Analysis

Recent advances in remote sensing and field-based sensors are continuously expanding the availability of high-resolution agricultural and environmental data. Satellite imagery from missions such as Sentinel-2 and Landsat provides continuous monitoring of land cover, soil moisture, and vegetation health over large areas at regular intervals []. These optical sensors are complemented by synthetic aperture radar (SAR) systems that can penetrate clouds, enabling year-round monitoring in persistently cloudy regions.

In-field sensors deployed in agricultural environments provide environmental data on temperature, rainfall, soil conditions, and other parameters with high temporal granularity []. These Internet of Things (IoT) devices can capture measurements at minute or hourly intervals, providing detailed temporal dynamics that complement the spatial coverage of satellite systems. Furthermore, Unmanned Aerial Vehicles (UAVs) and Unmanned Ground Vehicles (UGVs) deliver new capabilities for gathering additional data, such as high-resolution multispectral images of crops, thermal analysis of soil and vegetation, and three-dimensional canopy structure information [,].

Together, these data streams enable unprecedented spatial and temporal coverage, allowing for detailed characterization of agricultural ecosystems and their responses to changing climatic conditions []. However, realizing this potential requires not only data acquisition but also effective integration and management of heterogeneous data sources.

1.3. Analytical Technologies and Their Requirements

In parallel with improved data acquisition, artificial intelligence (AI) and high-performance computing (HPC) have created powerful tools for analyzing and integrating diverse datasets. Deep learning models excel at identifying complex, nonlinear patterns across large volumes of heterogeneous data [].

For specific agricultural applications, different AI architectures offer complementary capabilities. Convolutional Neural Networks (CNNs) are widely used for detecting crop diseases from drone and satellite images by learning spatial patterns in multispectral imagery []. Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) networks are particularly effective for time-series forecasting of crop yields and resource needs, as they can capture temporal dependencies in sequential data [,].

Unsupervised learning techniques, such as clustering algorithms, can discover hidden relationships within large datasets without requiring labeled training data []. For example, as shown in [], clustering analysis of multiyear satellite image time series using unsupervised deep learning methods can effectively identify distinct land cover zones, revealing patterns and regions with similar characteristics. Furthermore, distributed computing infrastructures, including federated learning approaches, enhance the capacity to model complex interactions while enabling collaborative model training across multiple institutions or farms without sharing sensitive raw data, which is a critical feature for privacy-preserving precision agriculture [].

However, these advanced analytical capabilities depend fundamentally on access to well-organized, standardized, and interoperable datasets. AI models require large volumes of consistently formatted training data, while spatiotemporal analysis demands precise alignment of data from different sources. The effectiveness of these technologies is therefore constrained by the quality and accessibility of the underlying data infrastructure.
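The alignment requirement can be made concrete with a minimal sketch. It assumes hourly in-field readings that must be matched to sparse satellite acquisition dates. The function names, the sample values, and the choice of a same-day mean are illustrative assumptions, not part of the platform described here.

```python
from collections import defaultdict
from datetime import date, datetime
from statistics import mean


def daily_means(readings):
    """Aggregate (timestamp, value) sensor readings into one mean per calendar day."""
    by_day = defaultdict(list)
    for timestamp, value in readings:
        by_day[timestamp.date()].append(value)
    return {day: mean(values) for day, values in by_day.items()}


def align_to_acquisitions(daily, acquisitions):
    """Pair each satellite acquisition with the sensor mean of the same day.

    Acquisitions without a matching sensor day are kept with None so that
    gaps stay visible instead of being silently dropped.
    """
    return [(day, index_value, daily.get(day)) for day, index_value in acquisitions]


# Illustrative values only: hourly soil temperature (deg C) and a vegetation index.
sensor_readings = [
    (datetime(2025, 5, 1, 6), 10.0),
    (datetime(2025, 5, 1, 12), 16.0),
    (datetime(2025, 5, 1, 18), 13.0),
    (datetime(2025, 5, 2, 12), 18.0),
]
satellite_acquisitions = [(date(2025, 5, 1), 0.62), (date(2025, 5, 6), 0.70)]

aligned = align_to_acquisitions(daily_means(sensor_readings), satellite_acquisitions)
for day, index_value, sensor_mean in aligned:
    print(day, index_value, sensor_mean)
```

Keeping unmatched acquisitions with an explicit `None` is one possible design choice; an analysis could instead interpolate or widen the matching window, depending on the variable.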

1.4. The Data Fragmentation Problem

Despite the proliferation of data sources and analytical tools, researchers and practitioners are significantly constrained by the fragmentation of relevant data. Critical information on weather, water, agricultural practices, and crop performance is scattered across numerous heterogeneous databases, creating isolated “data silos” that hinder comprehensive analysis and collaborative action.

This fragmentation manifests in several ways. First, different data providers use incompatible formats (e.g., NetCDF, GeoTIFF, CSV) and metadata standards, requiring time-consuming conversion before joint analysis. Second, access mechanisms vary widely, from file downloads to APIs with different authentication protocols, creating integration challenges. Third, data governance is inconsistent, with varying license terms, access restrictions, and privacy requirements that complicate sharing and reuse. Fourth, there is often limited documentation of data quality, provenance, and processing methods, reducing confidence in analytical results.

The consequences of this fragmentation are substantial. Researchers must devote significant time to data discovery, cleaning, and normalization [] before any meaningful analysis can begin. This redundant effort across research groups inflates costs and slows progress. Moreover, the absence of standardized data infrastructure prevents researchers from fully leveraging advanced analytical technologies to comprehensively assess climate impacts and develop effective adaptation strategies. Agricultural stakeholders, including farmers, insurers, and policymakers, similarly struggle to access integrated information needed for risk-informed decision-making.

1.5. Emerging Paradigms for Data Integration

In response to these challenges, emerging paradigms such as dataspaces [] and blockchain-based data sharing [] are gaining traction as potential solutions. These approaches target issues of data sovereignty, restricted data access, and automated licensing and monetization.

The dataspace concept enables data providers to retain control over their data while supporting controlled sharing through standardized interfaces and agreements. Blockchain technologies further contribute by ensuring transparent and immutable records of data transactions and access permissions. However, both paradigms remain in early stages of maturity and currently lack the robustness required for large-scale, integrated spatiotemporal risk analysis in agriculture [].

What is needed is not just a conceptual framework but an operational system that demonstrates how heterogeneous agricultural and environmental datasets can be effectively integrated, governed, and accessed for real-world analytical applications while respecting data provider policies and user privacy requirements.

1.6. Research Objectives and Contributions

In this paper, we present agriclima, a platform designed to overcome the fragmentation of agricultural and climate data by providing a unified infrastructure for data integration, governance, and access. The platform was developed over a 10-month collaborative research project involving a consortium representing diverse stakeholder perspectives in agricultural risk management.

The primary objective of this research is to demonstrate and evaluate a working implementation of a federated data platform specifically designed for agricultural spatiotemporal risk analysis. Rather than proposing a purely conceptual framework, we present an operational system that addresses real integration challenges with heterogeneous datasets from multiple providers, while also sharing lessons learned to guide future development of similar platforms.

The main contributions of agriclima are threefold:

- Scalable Open-Source Architecture: We provide a modular, cloud-native platform architecture for robust data integration that supports both batch and real-time data access. The architecture is built entirely on mature open-source components (JupyterHub, GeoNetwork, Keycloak, Apache Airflow, PostgreSQL/PostGIS, MinIO, GeoServer), ensuring reproducibility and broad potential for adoption. The modular design allows individual components to be replaced or extended without disrupting the overall system. Relying on mature, widely adopted components is particularly important given the limited long-term funding certainty characteristic of research infrastructure.

- FAIR-Compliant Data Management: We implement practical mechanisms to support FAIR (Findable, Accessible, Interoperable, Reusable) principles specifically for spatiotemporal agricultural data. This includes adoption of domain-relevant metadata standards (INSPIRE and RNDT for geospatial data), structured metadata entry through guided onboarding sessions with data providers, a searchable catalog for dataset discovery, and standardized access protocols. Importantly, our implementation demonstrates how FAIR principles can be operationalized in a multi-stakeholder environment with both public and private datasets.

- Privacy-Preserving Abstracted Data Access: We provide custom components (AgriclimaClient and Policy Engine) that enable secure, simplified data access for analysts while preserving governance requirements. These components abstract the complexity of policy verification, credential management, and data fetching, allowing researchers to focus on analysis rather than infrastructure. The architecture explicitly supports both shared public datasets and private workspace repositories, enabling confidential data to remain under provider control while still being accessible for authorized analytical workflows.
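To illustrate the idea of policy-checked, abstracted access described in the last contribution, the following is a minimal sketch. It is not the actual AgriclimaClient or Policy Engine interface, which the text does not specify. The class names, the role-based rule, and the dataset identifiers are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Policy:
    """Hypothetical access rule: which roles may read a dataset."""
    dataset_id: str
    allowed_roles: frozenset


@dataclass
class PolicyEngine:
    """Toy policy engine; denies access unless a policy explicitly allows it."""
    policies: dict = field(default_factory=dict)

    def register(self, policy):
        self.policies[policy.dataset_id] = policy

    def is_allowed(self, dataset_id, roles):
        policy = self.policies.get(dataset_id)
        return policy is not None and bool(policy.allowed_roles & set(roles))


class DataClient:
    """Hypothetical client: checks the policy first, then fetches the data."""

    def __init__(self, engine, storage, roles):
        self._engine = engine
        self._storage = storage  # stands in for object storage or a database
        self._roles = roles

    def load(self, dataset_id):
        if not self._engine.is_allowed(dataset_id, self._roles):
            raise PermissionError(f"access to {dataset_id!r} denied by policy")
        return self._storage[dataset_id]


# Illustrative setup: one public and one private dataset.
engine = PolicyEngine()
engine.register(Policy("public/rainfall", frozenset({"researcher", "guest"})))
engine.register(Policy("private/insurance-claims", frozenset({"insurer"})))
storage = {"public/rainfall": [1.2, 0.0, 5.4], "private/insurance-claims": [101, 102]}

client = DataClient(engine, storage, roles=["researcher"])
print(client.load("public/rainfall"))
try:
    client.load("private/insurance-claims")
except PermissionError as error:
    print(error)
```

In this sketch the analyst calls a single `load` method, while policy verification happens behind it. A deny-by-default rule is assumed here as a conservative choice.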

The boundaries of this study are defined by the platform’s design, implementation, and initial operational evaluation. The evaluation comprises a FAIR maturity assessment, system performance monitoring under normal operational load, and a demonstration of the platform workflow through a representative use case. It was conducted with four concurrent users, representing 25% of the 16-person project team, during the initial deployment phase. This work does not attempt to provide: (1) a comprehensive comparative evaluation against other platforms; (2) high-volume stress testing or scalability limits assessment; (3) extensive user studies or stakeholder feedback collection; or (4) detailed scientific results from the eleven application demonstrators built on the platform. The results from these demonstrators, which address specific agricultural risk analysis questions using the platform, will be reported in separate domain-focused publications upon completion of the overall project. Our focus here is on validating that the platform can successfully enable these and other analyses, specifically assessing system performance (resource utilization, operational stability), FAIR compliance of integrated datasets, and usability for spatiotemporal analysis workflows through a representative demonstrator.

1.7. Paper Organization

The remainder of this paper is organized as follows. Section 2 reviews related work on agricultural data platforms, FAIR data principles, and federated data systems. Section 3 describes our methodology, including the system architecture design, platform implementation, dataset integration approach, and evaluation methods. Section 4 presents results from our three-part evaluation: FAIR compliance assessment, system performance metrics, and demonstrative use case. Section 5 discusses the implications of our findings, including achievements, limitations, and lessons learned. Section 6 concludes with a summary and directions for future work.

2. Related Work

This section reviews existing approaches to agricultural data integration that are relevant to our study, the FAIR data principles and their application, and federated systems for privacy-preserving data analysis.

2.1. Agricultural Data Platforms and Integration Efforts

Several initiatives have aimed to reduce agricultural data fragmentation through platform development, each differing in scope and approach from agriclima. Many of these platforms have limited interoperability and struggle with integrated spatiotemporal analyses due to inconsistent formats, lack of standardized metadata, and siloed governance. They often catalog public datasets alongside restricted or commercial data, with integration methods varying from simple federation to partial adherence to FAIR principles. For instance, the CGIAR Platform for Big Data in Agriculture [] is a global initiative that aims to leverage big data technologies for agricultural research, offering solutions for interoperability, data sharing, and analytical automation across CGIAR partners. Although the platform emphasizes data sharing and FAIR compliance, actual operational integration across public and private-sector datasets is limited. Another example is agINFRA [], an EU-supported research data hub designed to facilitate the sharing and discovery of agricultural, food, and environmental datasets via open standards and metadata registries. Because it focuses on the catalog, it offers limited direct integration of heterogeneous datasets for seamless spatiotemporal analysis. AgYields [] is New Zealand’s national pasture and crop yield database, which aggregates historical and contemporary records from multiple sources across the country. It enables meta-analysis and modeling of crop productivity and resilience at regional and national scales. However, this platform focuses on harmonized yield and pasture data, and its integration of external data sources or support for dynamic, multi-thematic analyses (e.g., combining climate and insurance risk data) is limited.

These platforms exemplify important advances in agricultural data management but show persistent limitations in data fragmentation, metadata standardization, governance across public/private boundaries, and holistic spatiotemporal integration.

The limitations identified in these platforms, including incomplete FAIR compliance, limited semantic interoperability, inadequate governance for mixed public-private data, and difficulties with real-time spatiotemporal integration, create barriers to comprehensive agricultural risk analysis. These barriers are particularly problematic for climate change adaptation research, where timely integration of heterogeneous data streams (climate projections, hydrological models, crop performance data, insurance records) across multiple spatial and temporal scales is essential.

A key distinguishing feature of agriclima is its explicit design to address these specific limitations for spatiotemporal risk analysis. The platform addresses fragmentation through unified data access APIs, implements FAIR principles via structured metadata and catalog services, and provides privacy-preserving governance via a federated architecture and fine-grained access control. This explicit focus on operationalizing solutions to identified limitations, rather than proposing additional conceptual frameworks, represents the platform’s core contribution to agricultural data infrastructure. Additionally, our open-source implementation with explicit cloud-native deployment configurations addresses reproducibility concerns that limit the adoption of proprietary or poorly documented platforms.

2.2. FAIR Principles and Agricultural Data

The FAIR principles (Findable, Accessible, Interoperable, Reusable) have been widely endorsed as a framework for research data management []. However, translating these principles into operational practice, particularly for spatiotemporal agricultural data, presents significant challenges.

Findability requires not just metadata creation but adoption of appropriate standards, persistent identifiers, and searchable registries. For geospatial agricultural data, relevant standards include ISO 19115 for geospatial metadata and INSPIRE directives in the European context []. However, many agricultural datasets lack adequate metadata or use inconsistent standards, hindering discovery [].

Accessibility involves providing clear protocols for data retrieval while respecting access restrictions. Agricultural data presents particular challenges because it often includes commercially valuable information (e.g., weather forecasts, crop models) alongside public data (e.g., satellite imagery), requiring fine-grained access control. The principle that “metadata should remain accessible even when data is restricted” is particularly important but often violated in practice.

Interoperability requires adoption of community-accepted vocabularies, schemas, and formats. In agriculture, relevant vocabularies include Agronomy Ontology (AgrO), Crop Ontology (CO), and others from the AgroPortal collection []. However, adoption remains limited, and many datasets use ad hoc formats and terminologies. For geospatial data specifically, OGC standards (WMS, WFS, WCS) provide important interoperability mechanisms but require implementation effort.

Reusability depends on rich provenance information, clear licensing, and comprehensive documentation. Agricultural datasets often lack adequate descriptions of data collection methods, processing steps, quality control procedures, and known limitations, reducing confidence in reuse for new applications.
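As a small illustration of the OGC interoperability mechanisms mentioned above, the sketch below assembles a WMS 1.3.0 GetMap request using the standard query parameters. The endpoint URL, layer name, and bounding box are placeholders chosen for illustration and do not refer to an actual service of the platform.

```python
from urllib.parse import parse_qs, urlencode, urlsplit


def build_getmap_url(base_url, layer, bbox, width=512, height=512):
    """Build a WMS 1.3.0 GetMap request URL.

    bbox is (min_lat, min_lon, max_lat, max_lon): WMS 1.3.0 uses
    latitude-first axis order for EPSG:4326.
    """
    params = {
        "SERVICE": "WMS",
        "VERSION": "1.3.0",
        "REQUEST": "GetMap",
        "LAYERS": layer,
        "STYLES": "",  # empty value requests the default style
        "CRS": "EPSG:4326",
        "BBOX": ",".join(str(v) for v in bbox),
        "WIDTH": width,
        "HEIGHT": height,
        "FORMAT": "image/png",
    }
    return f"{base_url}?{urlencode(params)}"


# Placeholder endpoint and layer, for illustration only.
url = build_getmap_url(
    "https://example.org/geoserver/wms",
    "demo:soil_moisture",
    (45.6, 10.4, 46.6, 12.0),
)
print(url)

# Decode the query string again to inspect what a server would receive.
query = parse_qs(urlsplit(url).query, keep_blank_values=True)
print(query["BBOX"])
```

Because the request is fully described by standard parameters, any WMS-compliant client or server can exchange it without custom integration code.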

Recent work by Petrosyan et al. [] applied the F-UJI automated assessment tool to evaluate FAIR compliance of agricultural datasets, finding highly variable results with many datasets scoring below 50%. This demonstrates the gap between principle endorsement and practical implementation. Our work contributes by demonstrating how specific mechanisms (structured metadata entry, standard adoption, policy enforcement, provenance tracking) can operationalize FAIR principles in a multi-stakeholder agricultural data environment.

2.3. Federated Learning and Distributed Data Analysis

An important trend in agricultural AI is the use of federated learning (FL), which enables model training without centralizing raw data, as only model updates (i.e., gradients or parameters) are shared with a central server []. Research confirms FL’s effectiveness for tasks like crop disease detection using distributed, diverse datasets []. These studies demonstrate that FL achieves performance comparable to centralized models while enhancing robustness and preserving data privacy [].
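The exchange of model parameters rather than raw data can be sketched with federated averaging, one common aggregation scheme used here purely as an illustration. The one-parameter linear model, the learning rate, and the toy client datasets are assumptions and do not come from the cited studies.

```python
def local_update(weight, data, lr=0.1, epochs=20):
    """Run gradient descent for y = w * x on one client's private data."""
    for _ in range(epochs):
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= lr * grad
    return weight


def federated_average(client_weights, client_sizes):
    """Combine client weights, weighting each by its number of samples."""
    total = sum(client_sizes)
    return sum(w * n for w, n in zip(client_weights, client_sizes)) / total


# Illustrative private datasets (x, y) that follow y = 3x.
# Only the trained weight leaves each client, never these pairs.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(0.5, 1.5), (1.5, 4.5), (3.0, 9.0)],
]

global_weight = 0.0
for _ in range(5):  # communication rounds between server and clients
    updates = [local_update(global_weight, data) for data in clients]
    global_weight = federated_average(updates, [len(d) for d in clients])

print(round(global_weight, 3))
```

The server in this sketch only ever sees one number per client per round, which is the property that makes the approach attractive for sensitive farm-level data.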

While federated learning is a powerful paradigm for privacy-preserving machine learning, it addresses a different aspect of the data integration challenge than our proposal. Federated learning specifically concerns model training across distributed datasets, whereas agriclima focuses on the broader infrastructure for data management, access, and integrated analysis. These approaches are complementary: federated learning could be implemented within the agriclima analytical environment as one analysis modality, while benefiting from its data governance, metadata, and access mechanisms.

2.4. Dataspaces and Data Sovereignty

The European Union is actively promoting the dataspace concept as a framework for trusted, sovereign data sharing across sectors []. The goal is to break down data silos and create a single data market, with agriculture identified as a key focus area. Brunori et al. [] discuss how dataspaces could enable agricultural data exchange while respecting data provider sovereignty and implementing fair value distribution. Key dataspace principles include: data providers retain control and can set access conditions; standardized technical interfaces enable interoperability; governance frameworks establish rules for participation; and mechanisms exist for negotiating data access and compensation.

However, as Bacco et al. [] note in their systematic survey, the dataspace concept remains largely at the architectural and policy level, with limited operational implementations demonstrating how these principles translate into working systems at scale. Technical challenges include authentication and authorization across organizational boundaries, metadata harmonization, data quality assurance, and the development of sustainable business models. agriclima aligns with dataspace principles by employing a federated architecture (allowing data to remain at provider sites), using policy-based access control, and supporting both public and private datasets. Our primary focus, however, is demonstrating a working implementation for agricultural spatiotemporal risk analysis, not realizing the complete dataspace vision. Nevertheless, operational deployment offers practical insights into challenges such as metadata quality, governance complexity, and performance requirements, which can contribute to broader dataspace development efforts.

2.5. Semantic Interoperability and Ontologies

True interoperability in agricultural research and applications requires more than technical compatibility; it depends on semantic interoperability, which ensures a shared understanding of data across systems. This goal is achieved by enriching sensor data, such as soil moisture readings, with standardized vocabularies, ontologies, and semantic annotations [], enabling diverse platforms to interpret and exchange information consistently []. Resources like the AgroPortal repository support this process by providing ontologies for agronomy and biodiversity, including general ontologies such as AGROVOC and specialized ones for crops, traits, environments, and management practices []. By defining standardized terms and relationships, these ontologies enable the semantic integration of diverse data sources.

However, ontology adoption in operational agricultural systems remains limited. Challenges include the effort required to map existing datasets to ontological terms, the need to update ontologies as domains evolve, and the limited tool support for ontology-based data integration. Many platforms therefore focus on syntactic interoperability (format standards) without full semantic integration.

Our current implementation of agriclima focuses primarily on syntactic and structural interoperability through adoption of geospatial standards (ISO 19115, INSPIRE, RNDT) and common file formats (NetCDF, GeoTIFF). We recognize semantic interoperability through ontologies as an important future enhancement, as discussed in our conclusions. The modular architecture allows ontology-based components to be integrated without fundamental redesign.

2.6. Positioning of This Work

Within this landscape, agriclima makes specific contributions that address identified gaps:

- Operational implementation: Rather than proposing architectural concepts, we present a working platform deployed on cloud infrastructure and evaluated with real datasets and users. This demonstrates practical feasibility and reveals implementation challenges.

- Multi-stakeholder data governance: Our platform explicitly addresses the coexistence of public and private datasets with different access policies, commercial and research users, and varying data sensitivity levels. This reflects realistic requirements for agricultural risk analysis.

- Spatiotemporal focus: The architecture specifically supports spatiotemporal data types and analysis workflows common in agricultural and environmental domains, including geospatial metadata standards, temporal alignment mechanisms, and appropriate storage systems for raster and vector data.

- FAIR operationalization: We demonstrate specific mechanisms to implement FAIR principles in practice, including metadata onboarding processes, catalog implementation, access abstraction layers, and assessment using standardized metrics.

- Open-source implementation: Complete reliance on open-source components with explicit deployment configurations enhances reproducibility and reduces barriers to adoption compared to proprietary platforms.

The evaluation’s scope, however, has certain limits: our focus remains on platform capabilities rather than comparative performance assessment; scalability has not been tested under high concurrent load; and the adoption of semantic ontologies is planned for future work. These limitations are discussed further in Section 5.

3. Methods

This section describes our methodology for developing and evaluating the agriclima platform. We first present the overall research approach, then detail the platform architecture design, implementation, dataset integration procedures, and finally the evaluation methodology.

3.1. Research Approach and Context

3.1.1. Project Organization

The agriclima platform was developed within a collaborative research project funded by Bando Spoke 4 WP 4.3 Agritech (CUP C93C22002790001) under Spoke 4 of the Centro Nazionale per le Tecnologie dell’Agricoltura, Italy (see the project website: https://agriclima.openiotlab.eu/, accessed on 4 November 2025). Conducted over ten months, from January to October 2025, the project brought together a consortium of 10 partner organizations representing diverse expertise and perspectives. The consortium included research institutions such as universities and research centers contributing scientific knowledge in agronomy, hydrology, climate modeling, and remote sensing; technology providers offering meteorological data, phenology models, insurance data, and technical infrastructure; government agencies responsible for agricultural policy, water resource management, and spatial data infrastructure; and end-user organizations including agricultural consortia and associations representing farmers and land managers. The data integrated within the project covered the entire national territory, with additional regional datasets focused on the Trento and Veneto regions for validation and testing within the demonstrators.

The diverse consortium composition ensured that the platform design addressed realistic requirements from multiple stakeholder perspectives, including data providers with commercial interests, researchers needing integrated data access, and practitioners seeking decision-support capabilities.

3.1.2. Development Methodology

The project followed a structured three-phase approach over ten months. During the first phase, covering months one and two, requirements gathering and architecture design were conducted based on inputs from consortium partners. Key requirements for data types, access patterns, governance needs, and analytical workflows were identified, informing the architectural design presented in Section 3.2. The second phase, spanning months three to seven, focused on implementation and dataset integration. Core platform components were developed using selected open-source technologies, while partner datasets were progressively integrated through guided onboarding sessions, as described in Section 3.2.4. In the final phase, from months eight to ten, domain experts created application demonstrators using the platform, enabling user feedback and validation.

This timeline reflects the initial development and deployment phase. The platform is set to remain operational for at least 1 year beyond the project period, with ongoing plans for enhancements and expansion.

3.1.3. Research Questions

Our evaluation of the proposed platform addresses three primary research questions:

- RQ1—FAIR Compliance: To what extent does the implemented platform achieve FAIR (Findable, Accessible, Interoperable, Reusable) compliance for integrated datasets, and what are the specific strengths and weaknesses across the four FAIR dimensions?

- RQ2—Operational Stability: Does the platform demonstrate stable operation under normal use conditions, and what are the resource utilization characteristics that inform future scaling decisions?

- RQ3—Usability for Analysis: Can researchers effectively conduct spatiotemporal analyses using the platform with minimal infrastructure overhead, and what specific mechanisms enable this capability?

These questions focus on platform capabilities rather than comparative performance or comprehensive user studies, reflecting the scope appropriate for an initial deployment evaluation.

3.2. Platform Architecture Design

Spatiotemporal risk analysis in agriculture is inherently complex and involves multiple stakeholders. Commercial weather data vary in terms of cost and added value, while farm management and productivity records directly influence economic risk. In addition, in situ and remote sensing observations offer complementary spatial and temporal resolutions. Addressing this diversity and integrating these heterogeneous data sources requires a carefully designed platform that ensures accessibility, interoperability, and usability.

In response to these challenges, the agriclima architecture was designed with three primary objectives:

- Data integration: Unify heterogeneous datasets from multiple providers with different formats, access methods, and policies

- Advanced analytics: Enable researchers to conduct sophisticated spatiotemporal analyses without managing infrastructure complexity

- Practical application: Support development of demonstrators and decision-support tools by domain experts

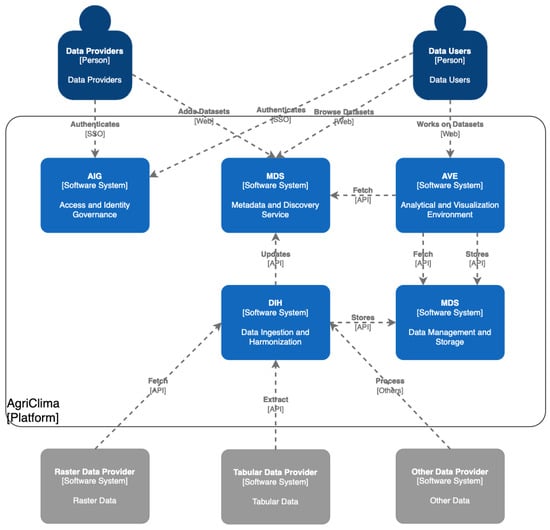

These objectives are realized through five core components shown in Figure 1.

Figure 1. High-level architecture of the agriclima platform, illustrating the interactions among the five core components and the distinction between shared and private data resources.

3.2.1. Modular Architecture Rationale

To achieve the objectives previously described, we propose a modular architecture, in which each component encapsulates distinct functional responsibilities. This design is motivated by several principles highlighted in the software engineering literature []:

- Maintainability: Components can be updated independently without propagating changes throughout the system

- Scalability: Individual components can be scaled (vertically or horizontally) based on their specific resource demands

- Replaceability: Alternative implementations of components can be substituted (e.g., different catalog software) without disrupting the overall system

- Testability: Components can be tested in isolation, simplifying quality assurance

This modularity is particularly important for research infrastructure that must evolve over time as requirements change and new technologies emerge.

3.2.2. Component Descriptions

The five core components have the following responsibilities:

- Metadata and Discovery Service (MDS): Serves as the platform’s metadata repository, ensuring datasets are discoverable, well-documented, and interoperable. It supports standardized metadata schemas (ISO 19115, INSPIRE, RNDT), facilitates dataset reusability, and provides mechanisms for transparent dataset registration and discovery. The searchable catalog allows users to browse available datasets by various criteria (spatial coverage, temporal range, topic, provider, license).

- Analytical and Visualization Environment (AVE): Provides computational resources for advanced data analysis, geospatial modeling, and visualization. It enables collaborative research through shared notebook environments, development of analytical tools using Python/R/Julia, and application of machine learning and artificial intelligence methods. The environment integrates directly with the platform’s data access mechanisms, abstracting infrastructure complexity from researchers.

- Data Ingestion and Harmonization (DIH): Automates the extraction and integration of heterogeneous datasets from various sources. It ensures consistent, up-to-date data pipelines through scheduled workflows and facilitates metadata consistency while preserving original data formats. The component monitors source data for updates and manages the caching layer that improves access performance.

- Access and Identity Governance (AIG): Manages user authentication, authorization, and policy enforcement. It guarantees that access to datasets is aligned with provider-defined policies and complies with privacy and regulatory standards, thereby supporting secure and responsible data sharing. The component implements Single Sign-On (SSO) across all platform services and integrates with external identity providers when needed.

- Data Management and Storage (DMS): Comprises two complementary repositories. The Shared Data Repository (SDR) hosts datasets accessible to authorized users according to their access level, promoting collaboration and reuse. The Private Workspace Repository (PWR) allows users to store and analyze sensitive or user-contributed datasets securely, maintaining data confidentiality while supporting personalized research workflows. This separation is critical for the multi-stakeholder environment where not all data can be fully public.

3.2.3. Component Interactions Supporting FAIR Principles

The architectural design explicitly supports FAIR principles through specific component interactions:

- Findability: The DIH component ingests and normalizes data while the MDS registers comprehensive metadata generated through guided onboarding sessions with data providers. This metadata is published in a searchable catalog with full-text search and faceted filtering, ensuring datasets are easily discoverable by both humans (through web interface) and machines (through catalog APIs).

- Accessibility: The MDS ensures that data and metadata are retrievable using standard, open communication protocols (HTTP, OGC WMS/WFS/WCS). The AIG establishes clear authentication and authorization procedures through OAuth2/OIDC protocols, enabling users to access only the resources to which they are entitled. Importantly, metadata remains openly accessible even when data itself is restricted, fostering discovery while respecting access policies.

- Interoperability: Heterogeneous data sources (remote sensing imagery, model outputs, tabular IoT data) are made accessible through standardized interfaces. For geospatial data, this includes OGC-compliant services (GeoServer). Common file formats (NetCDF for gridded data, GeoTIFF for raster imagery, GeoPackage for vector data) are used where appropriate. Metadata follows established standards (ISO 19115 for geospatial, Dublin Core for general resources).

- Reusability: In both SDR and PWR, datasets are stored with provenance information tracked through the MDS. Metadata includes explicit licensing information (Creative Commons, Open Data licenses, or commercial terms) and detailed documentation about data collection methods, processing steps, and known limitations. This rich context enables other researchers to understand, validate, and reuse data for new applications, ensuring long-term value.
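As an illustration of machine access to the catalog, the following minimal sketch builds a CSW 2.0.2 `GetRecords` request in key-value encoding for a free-text search. The host name and endpoint path are hypothetical placeholders, and the query is only one of several ways a catalog could be searched; it is not taken from the platform's code.

```python
from urllib.parse import urlencode, urlsplit, parse_qs

# Hypothetical catalog endpoint; the real host and path depend on the deployment.
CSW_ENDPOINT = "https://catalog.example.org/geonetwork/srv/eng/csw"


def build_csw_search_url(keyword: str, max_records: int = 10) -> str:
    """Return a CSW 2.0.2 GetRecords URL (KVP encoding) for a free-text search."""
    params = {
        "service": "CSW",
        "version": "2.0.2",
        "request": "GetRecords",
        "typeNames": "csw:Record",
        "resultType": "results",
        "elementSetName": "summary",
        "constraintLanguage": "CQL_TEXT",
        "constraint_language_version": "1.1.0",
        # AnyText is the generic full-text queryable of csw:Record.
        "constraint": f"AnyText LIKE '%{keyword}%'",
        "maxRecords": str(max_records),
    }
    return f"{CSW_ENDPOINT}?{urlencode(params)}"


url = build_csw_search_url("temperature")
query = parse_qs(urlsplit(url).query)  # decode again to inspect the parameters
print(query["constraint"][0])
```

The returned URL can be fetched with any HTTP client; the response is an XML document listing matching metadata records.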

3.2.4. Key Architectural Features

Three features characterize the platform’s approach:

- FAIR Alignment: Provides the overarching framework, ensuring that datasets, whether public or private, can be shared under clear policies while maintaining transparency, scalability, and reproducibility. Within this framework, particular emphasis is placed on practical realization of interoperability through standardized data formats, open communication protocols, and adoption of community-accepted standards for the specific geospatial agricultural domain.

- Access and Identity Governance: The AIG component manages authentication, authorization, and monitoring, ensuring regulatory compliance and controlled use of both sensitive and openly available information. This builds trust among stakeholders and encourages responsible data sharing, which is essential in an environment where some data has commercial value.

- Support for Innovation: The platform supports innovation by streamlining data preparation (researchers don’t manage authentication, format conversion, or policy checking manually) and by providing contextual metadata that enhances analytical workflows. This enables development of AI-based applications, new risk metrics, and predictive models to inform agricultural decision-making, with researchers focusing on methods rather than infrastructure.

Each core component was implemented using mature, stable, and widely adopted open-source technologies to ensure reproducibility, extensibility, and community support. The implementation choices are detailed below.

3.2.5. Technology Selection

- Analytical and Visualization Environment (AVE): Implemented using JupyterHub [], which provides multi-user access to Jupyter notebooks. Jupyter notebooks are interactive computational documents combining code (Python, R, Julia), narrative text, equations (LaTeX), and visualizations in a single file, making them well-suited for reproducible research and data analysis. JupyterHub handles user authentication, spawns individual notebook servers for each user, and manages computational resources. The AVE integrates with the platform’s data access mechanisms through the custom AgriclimaClient Python library. This client provides a high-level interface to access datasets, abstracting policy verification and data fetching. The client interacts with the MDS to obtain dataset location and access information, validates access rules through the Policy Engine, retrieves necessary credentials from the AIG, and fetches data in the requested format.

- Metadata and Discovery Service (MDS): Implemented using GeoNetwork Open Source [], a catalog application for spatially referenced metadata. GeoNetwork provides: an interactive web interface for metadata browsing and editing; support for metadata standards including ISO 19115/19139 (geospatial), ISO 19119 (services), INSPIRE, and Dublin Core; full-text and spatial search capabilities; a CSW (Catalogue Service for the Web) endpoint for programmatic access; and an integrated map viewer for spatial data preview. The platform includes 26 datasets provided by project partners (described in Section 3.3). New datasets can be integrated with modest effort through the defined onboarding process involving metadata template completion and data source configuration.

- Access and Identity Governance (AIG): Implemented using Keycloak [] and HashiCorp Vault []. Keycloak provides Identity and Access Management including: user authentication and authorization; integration with external identity providers (LDAP, Active Directory, SAML, OIDC); role-based and fine-grained permissions; and Single Sign-On across platform services. Vault provides secrets management with identity-based security, including: secure storage of credentials for accessing data sources; dynamic secret generation; and audit logging. A custom component called the AgriclimaPolicy Engine orchestrates interactions between Keycloak and Vault. It provides a REST API called by the AgriclimaClient to check access permissions and retrieve appropriate credentials for specific datasets based on user identity and roles.

- Data Ingestion and Harmonization (DIH): Implemented using Apache Airflow [], a platform for programmatically authoring, scheduling, and monitoring workflows. Airflow workflows are defined as Directed Acyclic Graphs (DAGs) in Python, specifying: data source connections and extraction logic; metadata consistency checks; caching and synchronization schedules; and monitoring and alerting rules. Important implementation note: The DIH preserves original data formats provided by sources. It does not perform format transformation or harmonization. This design decision reflects the fact that most contributed datasets already use standard formats appropriate for their data type (NetCDF for gridded climate/weather data, GeoTIFF for raster imagery, GeoPackage for vector features, CSV/Parquet for tabular data). The DIH ensures these datasets remain current through scheduled updates, handles both batch transfers and API-based access as appropriate per source, and validates metadata consistency. Airflow’s scheduling capabilities enable automatic monitoring of dataset updates, with alerts when sources become unavailable or data quality checks fail.

- Data Management and Storage (DMS): Implemented using PostgreSQL [] with PostGIS extension, MinIO [], and GeoServer []:

  - PostgreSQL/PostGIS: Relational database with spatial capabilities, primarily used for structured tabular data (farm records, insurance data, sensor observations), vector geospatial features (field boundaries, sensor locations), and metadata storage for the MDS.

  - MinIO: S3-compatible object storage for unstructured data including raster images (satellite imagery, climate model outputs), NetCDF files (multidimensional climate and weather data), and user-generated outputs.

  - GeoServer: OGC-compliant geospatial data server providing standardized access through Web Map Service (WMS) for map visualization, Web Feature Service (WFS) for vector data access, and Web Coverage Service (WCS) for raster data access. GeoServer internally accesses data from PostgreSQL/PostGIS and MinIO, presenting them through standard protocols.

The separation between Shared Data Repository (SDR) and Private Workspace Repository (PWR) is enforced through integration with AIG. Keycloak manages users and groups, which are mapped to source-specific access policies: GeoServer layer permissions for spatial data, MinIO bucket policies for object storage, and PostgreSQL row-level security for tabular data. Important storage note: Due to high data volumes and provider infrastructure constraints, datasets available externally (at data providers’ premises through their APIs or file servers) are not fully replicated in the SDR. Instead, the DIH implements a caching layer that stages frequently accessed data and maintains consistency with sources through scheduled updates. In contrast, private data uploaded by individual users is persistently stored in the PWR with appropriate backup procedures.
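The caching behavior described above can be pictured with a small refresh check. This is a minimal sketch under stated assumptions: the class, field names, dataset identifiers, and refresh intervals are hypothetical, and the text does not specify the actual cache policy used by the DIH.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class CachedEntry:
    dataset_id: str           # hypothetical identifier of a staged dataset
    fetched_at: datetime      # when a copy was last staged in the cache
    refresh_every: timedelta  # assumed per-source refresh interval


def needs_refresh(entry: CachedEntry, now: datetime) -> bool:
    """A staged copy is considered stale once its refresh interval has elapsed."""
    return now - entry.fetched_at >= entry.refresh_every


def stale_entries(cache: list[CachedEntry], now: datetime) -> list[str]:
    """Return the identifiers a scheduled job would re-fetch from the source."""
    return [entry.dataset_id for entry in cache if needs_refresh(entry, now)]


cache = [
    CachedEntry("forecast-demo", datetime(2024, 1, 2, 5, 0), timedelta(hours=6)),
    CachedEntry("reanalysis-demo", datetime(2024, 1, 1, 0, 0), timedelta(days=7)),
]
print(stale_entries(cache, now=datetime(2024, 1, 2, 12, 0)))  # ['forecast-demo']
```

A scheduled workflow could run such a check per source and re-stage only the stale entries, which keeps the cache consistent without replicating every dataset.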

3.2.6. Platform Workflow

Data flow through the system follows this pattern:

- Data Provider Integration: Partners contribute datasets in standard formats (NetCDF, GeoTIFF, CSV, etc.) either through file transfer to platform storage or by providing API access to data hosted at their facilities. Providers participate in guided metadata onboarding sessions where they complete structured metadata templates covering required elements (title, description, creator, temporal and spatial coverage, access conditions, license, etc.).

- Metadata Registration: The DIH validates metadata completeness and consistency, then registers datasets in the MDS (GeoNetwork). The MDS organizes metadata within the searchable catalog and assigns persistent identifiers. Metadata follows ISO 19115 standard for geospatial data, with particular emphasis on elements required by INSPIRE and RNDT (Repertorio Nazionale dei Dati Territoriali) directives.

- Data Access Configuration: The DIH configures appropriate data access mechanisms depending on source characteristics: batch transfer and caching for static historical datasets; API-based access for dynamic or very large datasets; and streaming access for real-time sensor data. Access rules based on dataset licensing and sensitivity are configured in Keycloak and mapped to technical enforcement points (GeoServer permissions, MinIO policies, database access controls).

- User Discovery and Access: Researchers browse the catalog through the MDS web interface or programmatic API to identify relevant datasets. Detailed metadata helps assess dataset suitability (spatial/temporal coverage, variables, quality, license). From the AVE (JupyterHub), researchers use the AgriclimaClient library to request data. The client transparently handles: metadata lookup via MDS; permission verification via Policy Engine and AIG; credential retrieval; and data fetching from appropriate storage (GeoServer, MinIO, PostgreSQL) or source API.

- Analysis and Output: Researchers conduct analyses in Jupyter notebooks with data in memory, benefiting from a rich Python/R ecosystem for geospatial analysis (GDAL, rasterio, geopandas), statistical analysis (numpy, scipy, pandas), machine learning (scikit-learn, tensorflow, pytorch), and visualization (matplotlib, plotly, folium). Private datasets can be uploaded to PWR and integrated with platform datasets. Analysis outputs are saved to PWR for personal use or optionally contributed back to SDR for sharing.
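The access sequence in the user discovery step (metadata lookup, permission verification, credential retrieval, data fetching) can be sketched with in-memory stand-ins. Everything below is hypothetical: the function and class names, dataset identifiers, roles, locations, and tokens are invented for illustration and do not reflect the actual AgriclimaClient or Policy Engine interfaces.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DatasetRecord:
    dataset_id: str
    location: str       # where the data lives (bucket, layer, or source API)
    required_role: str  # role a user needs in order to read the dataset


class AccessDenied(Exception):
    """Raised when the user's roles do not satisfy the dataset policy."""


# In-memory stand-ins for the catalog and the secret store; contents are invented.
CATALOG = {
    "public-weather": DatasetRecord("public-weather", "s3://shared/weather.nc", "member"),
    "insurance": DatasetRecord("insurance", "postgres://shared/insurance", "insurance-reader"),
}
SECRETS = {
    "s3://shared/weather.nc": "token-abc",
    "postgres://shared/insurance": "token-xyz",
}


def request_dataset(dataset_id: str, user_roles: set[str]) -> tuple[str, str]:
    record = CATALOG[dataset_id]                 # 1. metadata lookup
    if record.required_role not in user_roles:   # 2. permission verification
        raise AccessDenied(f"{dataset_id} requires role {record.required_role!r}")
    credential = SECRETS[record.location]        # 3. credential retrieval
    return record.location, credential           # 4. used by the caller to fetch data


print(request_dataset("public-weather", {"member"}))
```

Keeping the permission check ahead of credential retrieval means a user without the required role never receives a credential for the restricted source.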

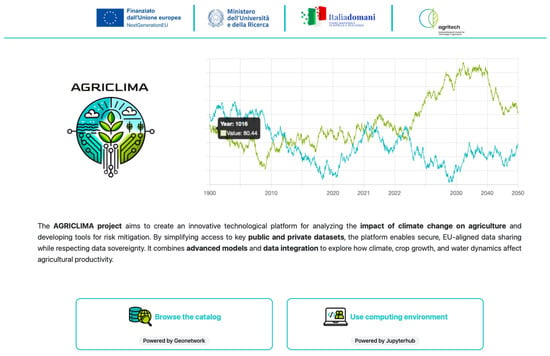

Figure 2 shows the user interface after login, providing direct access to both the MDS (left panel) and AVE (right panel), with SSO ensuring seamless navigation between components.

Figure 2.

Landing page of the agriclima platform after user login, providing direct access to the Metadata and Discovery Service (GeoNetwork catalog) and the Analytical and Visualization Environment (JupyterHub notebooks).

3.2.7. Deployment Configuration

The platform is deployed on Microsoft Azure cloud infrastructure, although its architecture is cloud-agnostic and could be deployed on other providers such as AWS or Google Cloud, or on on-premises Kubernetes clusters. Deployment leverages Kubernetes for container orchestration, providing automatic restart, health checking, and service discovery for all components. Configuration management is handled using Helm charts and Kustomize, enabling reproducible and version-controlled deployment configurations. Additionally, Horizontal Pod Autoscaling is implemented for the JupyterHub component, allowing the system to dynamically adapt to varying user loads.

The specific Azure deployment consists of:

- Kubernetes cluster with autoscaling between 3 and 6 nodes (Standard B2ms VMs: 2 vCPUs, 8 GiB RAM each), providing 6–12 total vCPUs and 24–48 GiB total RAM

- Azure Managed PostgreSQL database (Standard B2s: 2 vCores, 4 GiB RAM, 32 GiB storage) for structured data and metadata

- Persistent storage: 399 GiB on Azure Managed Disk (for containers and smaller datasets) and 100 GiB on Azure Blob (for user workspaces)

Autoscaling is configured to trigger on CPU utilization thresholds in the AVE pods, allowing the system to dynamically allocate resources as concurrent users increase.
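For readers unfamiliar with Horizontal Pod Autoscaling, the scaling rule documented by Kubernetes is: desired replicas = ceil(current replicas × current metric / target metric), clamped to the configured bounds. The sketch below applies that rule to CPU utilization. The target utilization and replica bounds are illustrative assumptions, since the deployed values are not reported here, and the default tolerance band that Kubernetes applies around the target is omitted for brevity.

```python
import math


def desired_replicas(current_replicas: int, current_cpu_pct: float,
                     target_cpu_pct: float, min_replicas: int,
                     max_replicas: int) -> int:
    """Kubernetes HPA rule: scale proportionally to metric/target, then clamp."""
    raw = math.ceil(current_replicas * current_cpu_pct / target_cpu_pct)
    return max(min_replicas, min(max_replicas, raw))


# Assumed settings for illustration: 60% CPU target, 1 to 6 replicas.
print(desired_replicas(2, 90.0, 60.0, 1, 6))   # scale up
print(desired_replicas(2, 20.0, 60.0, 1, 6))   # scale down
print(desired_replicas(4, 150.0, 60.0, 1, 6))  # capped at the maximum
```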

3.3. Dataset Integration

The platform’s analytical capability depends on the integration of comprehensive datasets. Accordingly, 26 datasets from research products and services from project partners have been incorporated. These assets differ in format, size, structure, coverage, time frame, and access policies, providing realistic heterogeneity for assessing the architecture’s data management capabilities. Furthermore, the datasets follow two access policies: Public datasets, available to all authenticated project members without additional restrictions; and Private or commercial datasets, which require specific authorization or a commercial agreement with the provider, demonstrating the platform’s capability to manage restricted-access data. The 26 datasets span seven thematic areas relevant to agricultural spatiotemporal risk analysis:

- Weather and Climate (11 datasets): Historical meteorological reanalysis, weather forecasts, climate scenarios to 2050, and extreme event indices. Includes both public datasets at 10 × 10 km resolution and commercial high-resolution (1 × 1 km) versions.

- Phenology (2 datasets): Crop development models including a neural network model for vine phenology (PhenoCNN) and phenological stage models for extensive crops (wheat, corn, tomato) based on Growing Degree Days.

- Hydrology (3 datasets): Snow water equivalent maps, hydrological cycle component modeling (surface/deep water content, river flows, evapotranspiration), covering Po and Crati basins.

- Agricultural Land Use (4 datasets): Homogeneous land use area segmentation, automatic crop type classification from Sentinel-2, LUCAS land cover surveys, and digital terrain models (DTM).

- Crop Morphological Development (2 datasets): Vegetation indices (MSAVI2, NDVI) derived from Sentinel-2 optical satellite data at 10 × 10 m resolution with 5-day temporal granularity.

- Farm Registries and Insurance (2 datasets): Anonymized farm management records and insurance position data for the Trentino region, with 15 years of historical records. Access is controlled to preserve farmer confidentiality.

- Historical Events and Damages (2 datasets): Reported adverse events (types and intensity) and surveyed field damage data for major crops (apples, vines), collected from on-site inspections following extreme weather events.

Table 1 provides a complete summary of integrated datasets including resolution, temporal coverage, granularity, access policy, size, and format.

Table 1.

Summary of the datasets currently included in the platform.

Data Handling Mechanisms: The platform’s abstraction layer handles both batch and real-time data access through the DIH component. For static historical datasets, batch transfer with caching provides efficient access. For dynamic sources (weather forecasts, real-time sensor streams), API-based access or streaming protocols maintain currency. The AgriclimaClient library presents a uniform interface to researchers regardless of underlying access mechanism, simplifying analytical workflows.

3.4. Evaluation Methodology

Our evaluation addresses the three research questions (RQ1–RQ3) through complementary methods.

3.4.1. FAIR Compliance Assessment (RQ1)

To quantitatively assess alignment with FAIR principles, we adopted the FsF v0.8m metrics proposed by Devaraju and Huber [], which have been validated in prior agricultural data evaluations []. This domain-agnostic metric set comprises 17 distinct metrics (Table 2) that assign numerical scores and maturity levels (initial, moderate, advanced) to each FAIR dimension (i.e., Findability, Accessibility, Interoperability, and Reusability).

Table 2.

Summary and score of the metrics proposed by F-UJI for the FAIR principles.

We used the F-UJI open-source tool [], which programmatically analyzes dataset metadata against these metrics. For each of the 26 integrated datasets, we submitted its URL to the F-UJI tool via its web interface, recorded numerical scores for each FAIR dimension, and analyzed the score distributions to identify common gaps. This automated assessment provides an objective, reproducible evaluation based on established metrics rather than subjective judgment.
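The analysis of score distributions amounts to computing per-dimension summary statistics over the recorded scores. A minimal sketch of that step follows; the dataset names and score values are invented for illustration and are not the values recorded in this study.

```python
from statistics import mean

# Invented scores for three hypothetical datasets (NOT results from this study).
scores = {
    "dataset-a": {"F": 4, "A": 7, "I": 6, "R": 4},
    "dataset-b": {"F": 3, "A": 7, "I": 2, "R": 3},
    "dataset-c": {"F": 5, "A": 7, "I": 6, "R": 5},
}


def summarize(per_dataset: dict[str, dict[str, float]]) -> dict[str, dict[str, float]]:
    """Return min, mean, and max of each FAIR dimension across datasets."""
    summary = {}
    for dim in ("F", "A", "I", "R"):
        values = [record[dim] for record in per_dataset.values()]
        summary[dim] = {"min": min(values), "mean": mean(values), "max": max(values)}
    return summary


summary = summarize(scores)
for dim, stats in summary.items():
    print(dim, stats)
```

A large gap between the minimum and maximum of a dimension points to uneven metadata quality across datasets rather than a platform-wide limitation.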

3.4.2. System Performance Monitoring (RQ2)

To assess operational stability and resource utilization, we monitored the deployed system using Prometheus and Grafana, focusing on four groups of metrics: (1) cluster-level CPU and memory use across all Kubernetes nodes; (2) node-level resource consumption, pod distribution, and availability; (3) pod-level application usage and restart counts for components such as JupyterHub, GeoNetwork, Keycloak, and Airflow; and (4) storage utilization on Azure Managed Disk and Blob, including database growth. Metrics were collected at 1-min intervals over multiple 24-h periods during normal use, defined as four concurrent project researchers performing demonstrator development and analysis, representing typical initial deployment loads.

It is important to note that this monitoring evaluates stability and characterizes resource usage under normal conditions. It does not constitute comprehensive performance testing or scalability assessment. Load testing with many concurrent users, stress testing to identify breaking points, and evaluation of geographic distribution are explicitly out of scope for this initial evaluation.

3.4.3. Demonstrative Use Case (RQ3)

To validate that the platform enables effective spatiotemporal analysis with reduced infrastructure burden, we developed a representative demonstrative use case. This case was selected because it requires integrating multiple data types (gridded meteorological data and vector spatial indicators); involves both platform-provided datasets (from SDR) and user-contributed data (in PWR); and demonstrates the full workflow from data discovery through analysis to visualization.

The use case performs weighted spatiotemporal aggregation of meteorological data, calculating the area-weighted average daily maximum temperature for defined agricultural regions over time. This represents a common pattern in agricultural risk analysis: combining fine-resolution environmental data with agricultural spatial units to compute area-averaged statistics. The implementation’s simplicity underscores the value of the data access abstraction.

The implementation specifically demonstrates:

- Dataset discovery via the MDS catalog.

- Data access using the AgriclimaClient library (metadata lookup, permission verification, data fetching).

- Integration of private spatial indicators uploaded to PWR.

- Geospatial operations (grid-to-vector joining) using standard Python libraries.

- Temporal aggregation and weighted calculation.

- Interactive visualization with region selection.

We document the code complexity (lines of code, number of API calls) and qualitatively assess whether the workflow lets researchers focus on analytical logic rather than infrastructure management. The algorithm (Algorithm 1) and code excerpts are provided to support reproducibility. This demonstrator validates the platform workflow and usability for one defined analysis type. It does not include advanced AI capabilities; those are covered by the broader project’s eleven application-focused demonstrators addressing frost risk, drought management, extreme events, and productivity estimation, which will be detailed in separate domain-specific publications.

Algorithm 1.

Weighted Meteo Data Aggregation.
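The aggregation pattern described in this section can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the project's implementation: the input layout (tuples of time, grid cell, and daily maximum temperature, plus a mapping of cell and region to weight), the function name, and the sample values are hypothetical, and the per-cell summary statistics are left out.

```python
from collections import defaultdict


def weighted_regional_mean(cells, weights):
    """Weighted mean of a gridded variable per (time, region).

    cells:   iterable of (time, cell_id, value) tuples
    weights: dict mapping (cell_id, region) to a non-negative weight
    """
    # Index the weights by cell, keeping only cells with non-zero weight.
    regions_by_cell = defaultdict(list)
    for (cell_id, region), weight in weights.items():
        if weight > 0:
            regions_by_cell[cell_id].append((region, weight))

    numerators = defaultdict(float)    # sum of weight * value
    denominators = defaultdict(float)  # sum of weights
    for time, cell_id, value in cells:
        for region, weight in regions_by_cell.get(cell_id, []):
            numerators[(time, region)] += weight * value
            denominators[(time, region)] += weight

    return {key: numerators[key] / denominators[key] for key in numerators}


# Invented sample: three grid cells on one day, one region indicator.
cells = [("2024-07-01", "c1", 30.0), ("2024-07-01", "c2", 34.0), ("2024-07-01", "c3", 40.0)]
weights = {("c1", "type-a"): 1.0, ("c2", "type-a"): 3.0, ("c3", "type-a"): 0.0}
result = weighted_regional_mean(cells, weights)
print(result)  # {('2024-07-01', 'type-a'): 33.0}
```

Cell `c3` has zero weight and is therefore excluded, so the result is (1 × 30 + 3 × 34) / 4 = 33.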

3.4.4. Limitations of Evaluation Approach

The following limitations of our evaluation methodology should be noted:

- User base size: Evaluation involved 4 concurrent users from a 16-person team, not a large diverse user population. User experience assessment is qualitative (researcher feedback) rather than systematic usability testing.

- Performance scope: System monitoring characterized resource usage under normal load, not performance limits or scalability boundaries. Load testing and stress testing remain future work.

- Comparative analysis: No systematic comparison with other agricultural data platforms was conducted.

- Temporal scope: Evaluation occurred during the initial 10-month deployment. Long-term sustainability, maintenance burden, and evolution patterns are not assessed.

- Generalizability: Results are specific to the Italian agricultural context, the particular consortium composition, and the selected demonstrator applications. Generalization to other contexts requires additional validation.

These limitations are discussed further in Section 5, along with their implications for interpreting results.

4. Evaluation

In this section, we present the evaluation of the agriclima platform, focusing on validating its three main contributions. First, we assess the quality of the data integration through a FAIR compliance analysis of the integrated datasets, which validates our FAIR-compliant design. Then, we evaluate system performance based on key operational metrics to characterize the stability of the proposed architecture under normal use. Finally, we present a simple use case demonstrator that illustrates the platform’s workflow and highlights the simplified access, privacy preservation, and analytical focus enabled by the custom components.

4.1. FAIR Evaluation

The platform was designed in accordance with the FAIR principles to promote data findability, accessibility, interoperability, and reusability. A quantitative evaluation was performed using the assessment framework proposed by the authors in [], which has also been applied in previous studies of agricultural data resources []. This framework includes multiple sets of indicators, some developed for domain-specific contexts. For this work, we adopted the most recent and broadly applicable version, FsF v0.8m, a domain-agnostic set comprising 17 metrics summarized in Table 2. Each metric assigns a numerical score and a corresponding maturity level (initial, moderate, or advanced) to each FAIR dimension. The evaluation was conducted through an open-source software tool [] that programmatically analyzes dataset metadata and generates detailed reports. Figure 3 illustrates the output for dataset SD3b, while Table 3 presents a comparative overview of the evaluation results across all analyzed datasets.

Figure 3.

Summary of the FAIR evaluation for dataset SCD3b using the online tool F-UJI [].

Table 3.

FAIR evaluation summary for each dataset.

As summarized in Table 4, these results show strong performance in Accessibility (A score), with a mean score of 7.00. This perfect score indicates that all datasets are highly accessible, highlighting the effectiveness of the MDS component. In contrast, the Findability (F score) and Reusability (R score) means are lower, at 3.69 and 3.88, respectively, marking these dimensions as the main areas for improvement. Interoperability (I score) has a moderate mean of 5.38. The spread between the minimum and maximum scores for Interoperability (min = 2, max = 6) and Reusability (min = 3, max = 5) reveals notable variation in metadata completeness and quality across the datasets. For Interoperability, using standardized vocabularies and linking to other data sources would make the data more machine-actionable and easier to integrate; this is planned as future work in the project. For Reusability, the next improvement step is to ensure clear usage licenses and rich data provenance.

Table 4.

FAIR evaluation statistics summary for all datasets.

4.2. System Performance and Operational Metrics

The platform’s performance and health are evaluated through a multi-tiered approach covering cluster-, node-, and pod-level metrics, together with storage metrics. Monitoring these metrics is crucial for ensuring the platform’s reliability, optimizing resource utilization, and estimating the capacity available for growth.

4.2.1. Cluster-Level Metrics

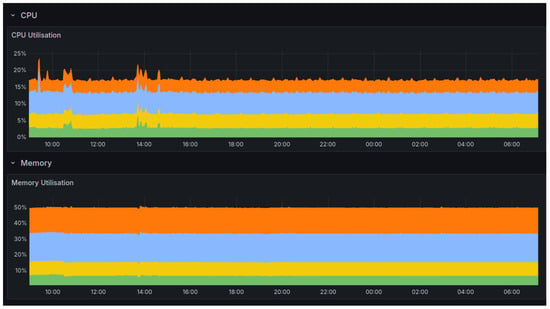

Cluster-level monitoring provides a holistic, macroscopic view of the entire system’s health and resource utilization. This is essential for strategic capacity planning and for determining if the overall cluster is over- or under-provisioned. Key metrics at this level include the aggregate CPU and memory utilization across all nodes. For instance, the agriclima cluster’s 24-h average CPU usage is approximately 1.38 vCPUs (17.24%), while its memory usage averages 15.96 GiB (49.89%). Figure 4 shows a graph of the cluster’s CPU and memory utilization over a 24-h period.

Figure 4.

Cluster resource utilization (CPU and memory) over a 24-h period. Each color represents one of the four nodes.
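The reported percentages can be cross-checked against the node specification given in Section 3.2.7. The sketch below assumes, as an interpretation not stated explicitly in the text, that the percentages are relative to the total capacity of the four active nodes (2 vCPUs and 8 GiB RAM each); small differences from the reported figures are expected because the absolute values are rounded.

```python
NODES = 4              # nodes active during monitoring
VCPUS_PER_NODE = 2     # Standard B2ms
RAM_GIB_PER_NODE = 8   # Standard B2ms

total_vcpus = NODES * VCPUS_PER_NODE      # total CPU capacity in vCPUs
total_ram_gib = NODES * RAM_GIB_PER_NODE  # total memory capacity in GiB


def utilization_pct(used: float, capacity: float) -> float:
    """Share of capacity in use, as a percentage."""
    return 100.0 * used / capacity


cpu_pct = utilization_pct(1.38, total_vcpus)     # reported: 17.24%
mem_pct = utilization_pct(15.96, total_ram_gib)  # reported: 49.89%
print(f"CPU {cpu_pct:.2f}%  memory {mem_pct:.2f}%")
```

Under this assumption, both derived values agree with the reported averages to within a few hundredths of a percentage point.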

4.2.2. Node-Level Metrics

Node-level monitoring focuses on the individual virtual machines that constitute the cluster, providing a deeper understanding of resource distribution and potential bottlenecks. This is crucial for ensuring a balanced workload and for identifying any resource-constrained nodes before they impact performance. All four nodes in the agriclima cluster are currently “Ready,” indicating they are healthy and able to run pods. The distribution of pods across the nodes is also tracked to show how the workload is spread. For example, the node ‘Aks-agentpool-33955547-vmss00000r’ is running 28 pods, while ‘Aks-agentpool-33955547-vmss000011’ is running 13 pods. Key metrics include pod resource usage (CPU, memory, and network I/O) and latency. Figure 5 shows the metrics for the node with the highest resource consumption over a 24-h period. Additionally, Table 5 summarizes each node’s load and restart time.

Figure 5.

24-h resource utilization for the high-workload node. The dashboard visualizes performance across four specific metric rows: CPU, Memory, Disk, and Network.

Table 5.

Nodes Metric Summary.

4.2.3. Pod-Level Metrics

Pod-level monitoring offers the most granular perspective, focusing on the performance and behavior of the application itself, as pods are the fundamental unit for running application code. Metrics at this level include detailed resource usage (CPU, memory, and network I/O) for each pod, which is crucial for identifying issues such as memory leaks or inefficient code. Furthermore, monitoring pod status, restart counts, and latency provides a strong indicator of application stability and availability. Figure 6 shows the metrics for the pod running the MDS component, over a 24-h period.

Figure 6.

This dashboard shows resource utilization (CPU, Memory, Network Bandwidth, and Disk I/O) over 12 h for the pod running the MDS component.

4.2.4. Storage Metrics

Storage is monitored at three levels. First, disk I/O is included in the node-level metrics to track disk-intensive workloads. Second, persistent volume (PV) and persistent volume claim (PVC) capacities are tracked, with a total of 399 GiB on Azure Managed Disk and 100 GiB on Azure Blob. Finally, the Azure Managed PostgreSQL database is monitored separately. It has an average CPU utilization of 7.66% and an average memory utilization of 40.23%. The storage size is 32 GiB, with an average utilization of 13% (3.8 GiB used).

Overall, the system performance and operational metrics show that the cluster is over-provisioned for the current load, with average CPU utilization at 17.24% and memory at 49.89%, leaving significant capacity for growth. Although the cluster is healthy, the pod distribution across nodes is uneven and could be rebalanced should bottlenecks emerge. The pod-level metrics show stable resource usage for the MDS component. Finally, storage is well provisioned for the data-intensive workload, with a low-utilization PostgreSQL database and ample disk space on both Azure Managed Disk and Azure Blob storage. This economical disk usage highlights the effectiveness of the cache storage orchestrated by the DIH: the datasets currently account for almost 700 GB, whereas less than 400 GB of storage is used.

4.3. Demonstrator: Weighted Spatiotemporal Aggregation of Meteorological Data

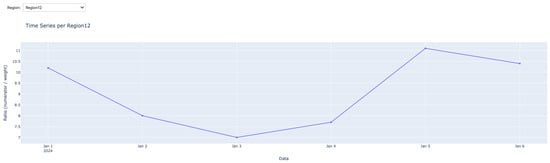

As a final evaluation of the platform, we present a demonstrator that combines an internal dataset with private data to perform a simple analysis. This analysis, described by Algorithm 1, performs a weighted spatiotemporal aggregation of meteorological data, specifically the Daily Maximum Temperature. The workflow consists of three main steps:

- Data preparation: We fetch raw NetCDF data asynchronously from the dataset md2 using the AgriclimaClient for a specified geographic region and time period. This fine-resolution grid data is then converted into a tabular format and joined with static, geo-referenced Land-Cover Indicator Layers (e.g., ‘type-a’, ‘type-b’). These private indicators, uploaded to the AVE, provide spatial weights and define the agrarian regions of interest for the analysis.
- Weighted calculation: For each grid cell, we calculate weighted numerators and detailed summary statistics (minimum, quartiles, maximum), including only cells with non-zero indicator weights.
- Aggregation and visualization: Data is grouped by time and region to sum the numerators and weights.

The resulting time series is visualized as a weighted average, computed using Equation (1):

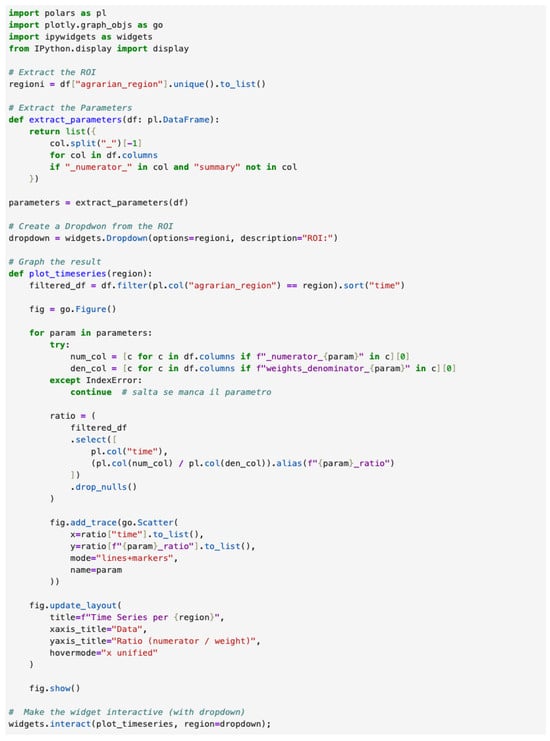
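As a minimal sketch of the weighted calculation and aggregation steps, assuming the gridded data has already been flattened into one record per grid cell and time step, the weighted average can be expressed in plain Python as follows. The field names (`time`, `region`, `tmax`, `weight`) and the sample values are illustrative assumptions and do not reflect the schema or the code used in the demonstrator.

```python
from collections import defaultdict


def weighted_regional_mean(cells):
    """Return {(time, region): weighted mean of tmax} over non-zero-weight cells."""
    numerators = defaultdict(float)  # running sum of weight * value
    weights = defaultdict(float)     # running sum of weights
    for cell in cells:
        w = cell["weight"]
        if w == 0:
            # Cells without an indicator weight do not contribute.
            continue
        key = (cell["time"], cell["region"])
        numerators[key] += w * cell["tmax"]
        weights[key] += w
    # Weighted average per group: sum(w * x) / sum(w).
    return {key: numerators[key] / weights[key] for key in weights}


# Illustrative records (hypothetical values).
cells = [
    {"time": "2023-07-01", "region": "R1", "tmax": 30.0, "weight": 1.0},
    {"time": "2023-07-01", "region": "R1", "tmax": 34.0, "weight": 3.0},
    {"time": "2023-07-01", "region": "R1", "tmax": 99.0, "weight": 0.0},
    {"time": "2023-07-01", "region": "R2", "tmax": 28.0, "weight": 2.0},
]
result = weighted_regional_mean(cells)
```

In this example the zero-weight cell in region R1 is ignored, so the R1 value is (1 × 30 + 3 × 34) / 4 = 33. A table-oriented library would express the same logic as a group-by over time and region followed by a division of the two sums.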

The platform demonstrates its full potential in this analysis. The MDS enables efficient dataset browsing, allowing users to identify suitable datasets quickly. Metadata provides detailed information on licensing and formats, facilitating informed selection. Seamless integration between the MDS and AVE ensures smooth access from the dataset description to the underlying data. DIH components guarantee that data is current and retrieved efficiently. The AgriclimaClient allows users to access specific data with ease, while the PWR supports the inclusion of private datasets, preserving confidentiality. Using the AVE, the analysis can be implemented with minimal code. Figure 7 shows the part that handles the visualization of the analysis, which is one of the largest parts of the code. Thus, the proposed platform allows researchers to focus on the methodology rather than data preparation. Figure 8 presents a graphical result, including a dropdown list, created with minimal effort.

Figure 7.

Python code extract from the demonstrator implementation.

Figure 8.

Resulting graphical output of the demonstrator analysis.

5. Discussion

This section interprets our findings, discusses their implications, critically examines limitations, and positions the work within the broader context of agricultural data infrastructure development.

5.1. Interpretation of FAIR Compliance Results

The FAIR evaluation revealed a pattern of strengths and weaknesses that has important implications for platform evolution and agricultural data management more broadly. However, before interpreting these results, it is crucial to establish that FAIR compliance scores are context-specific and should not be interpreted as absolute quality metrics or compared across platforms, tools, or research communities. The reported scores specifically reflect our platform’s technical implementation of FAIR-enabling infrastructure, the metadata practices of our consortium partners during initial deployment, and evaluation using the FsF v0.8m metric framework. Different assessment frameworks, platform architectures, or organizational contexts would yield different scores for the same underlying datasets. Our analysis, therefore, focuses on leveraging these dimensional scores to identify strengths and improvement opportunities within our specific implementation, ensuring targeted progress.

5.1.1. Accessibility Success

The perfect Accessibility score (7.00/7.00) across all datasets demonstrates that the platform successfully implements the technical infrastructure for controlled data access. This success reflects several design decisions: the use of standards-based protocols like HTTP/HTTPS and OGC services (WMS, WFS, WCS) to ensure compatibility with standard clients; a unified authentication approach by integrating Keycloak with all components for a consistent user experience; and metadata availability, meaning even restricted datasets have openly accessible metadata, supporting discovery while still respecting access control. This validates our architectural emphasis on the Access and Identity Governance component. However, perfect technical accessibility does not guarantee usability; user experience and documentation quality also matter, aspects not captured by FAIR metrics.

5.1.2. Findability and Reusability Gaps

Lower scores for Findability (mean 3.69/7.00) and Reusability (mean 3.88/7.00) highlight significant opportunities for improvement. The Findability gaps stem from several issues: some datasets lack comprehensive descriptive metadata, such as creator information, keywords, or abstracts; external search engine indexing is minimal, limiting discovery; and not all datasets employ Digital Object Identifiers (DOIs) or other persistent identifiers beyond their internal catalog IDs.

The Reusability shortcomings primarily involve incomplete documentation. Detailed provenance information, including processing chains, quality control procedures, and known limitations, is often missing. Additionally, while access conditions are specified, explicit machine-readable license identifiers (like SPDX codes or Creative Commons URIs) are sometimes absent, and usage documentation (e.g., examples of previous use, validation studies, and recommended applications) is limited.

These deficiencies are largely organizational and social rather than technical. Improving findability and reusability will require enhanced metadata onboarding processes with stricter completeness requirements, and implementing incentives for data providers to invest in robust documentation through credit, recognition, or citation. It will also be beneficial to develop templates and examples that reduce the burden of provenance documentation and to possibly integrate with external identifier services like DataCite for DOIs.

However, relying solely on organizational improvements is impractical, as institutions and users often cannot fully align their priorities and routines with strict platform requirements. Thus, recognizing that metadata will inevitably remain imperfect, the platform could be extended to incorporate several features to help users interpret and work with incomplete documentation. Visual metadata quality indicators (completeness scores, warning badges) can signal documentation levels, enabling informed decisions. User-contributed annotations would allow researchers to share notes on data quality, processing requirements, and usage recommendations, supplementing formal metadata. Finally, contextual recommendations could suggest related datasets, while guided quality assessment workflows could offer interactive tools to evaluate dataset fitness for specific analytical needs. These features should enable productive data use despite documentation gaps, complementing, yet not replacing, comprehensive metadata.
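To illustrate how a completeness score and warning badge of this kind could be derived, the following is a minimal sketch and not part of the current platform. The list of checked fields, the badge names, and the thresholds (0.8 and 0.5) are assumptions chosen for the example; they mirror the gaps discussed above (creator, keywords, abstract, identifier, license, provenance).

```python
# Fields checked for presence; this list is an assumption for illustration.
CHECKED_FIELDS = (
    "title", "abstract", "creator", "keywords",
    "license", "identifier", "provenance",
)


def completeness(metadata, fields=CHECKED_FIELDS):
    """Return (score in [0, 1], list of missing or empty fields)."""
    missing = [name for name in fields if not metadata.get(name)]
    score = (len(fields) - len(missing)) / len(fields)
    return score, missing


def badge(score):
    """Map a completeness score to a coarse label (thresholds are hypothetical)."""
    if score >= 0.8:
        return "complete"
    if score >= 0.5:
        return "partial"
    return "sparse"


# Hypothetical catalog record with several empty or absent fields.
record = {
    "title": "Daily maximum temperature grid",
    "abstract": "Gridded daily maximum temperature.",
    "creator": "",
    "keywords": ["temperature", "climate"],
    "license": "CC-BY-4.0",
}
score, missing = completeness(record)
label = badge(score)
```

Here four of the seven checked fields are filled in, so the record is labeled `partial` and the missing fields (`creator`, `identifier`, `provenance`) can be shown to the user next to the badge.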

5.1.3. Interoperability Variance

The wide range in Interoperability scores (2–6, mean 5.38) correlates strongly with dataset characteristics. Public datasets from institutional providers (universities, government agencies) tend to score higher, benefiting from established metadata practices and adherence to standards. Commercial datasets often scored lower, reflecting different organizational priorities and lack of exposure to research data management practices.

This suggests that interoperability improvement requires targeted support for commercial data providers, potentially including training sessions on metadata standards, templates specific to commercial data contexts, and a clear value proposition for metadata investment (broader reach, reduced support burden, quality reputation).

5.1.4. Comparison with Prior Agricultural Data Assessments

Our results can be contextualized by comparing them with Petrosyan et al. [], who applied F-UJI to evaluate 46 agricultural datasets from various repositories. They found a mean FAIR compliance of 52%, with particularly low scores for findability and reusability. Our overall compliance of 80% is substantially higher, though different dataset sources and institutional contexts complicate direct comparison.

The improvement in the FAIR score likely reflects: (1) integrated platform design rather than heterogeneous repository federation; (2) guided metadata onboarding during dataset integration; (3) consistent application of geospatial standards (ISO 19115, INSPIRE); and (4) centralized quality control. These results suggest that platform-mediated data sharing, despite coordination overhead, can achieve better FAIR outcomes than purely decentralized approaches.

5.2. System Performance and Scalability Considerations

5.2.1. Current Resource Utilization

Low resource utilization (CPU 17.24%, memory 49.89%) indicates the system is substantially over-provisioned for the current load of four concurrent users. This over-provisioning offers several positive aspects: it provides significant capacity headroom for future user base growth without immediate infrastructure changes, establishes a buffer against usage spikes or computationally intensive analyses, and ensures a responsive user experience with minimal latency. However, it raises potential concerns: there is an associated cost inefficiency if usage does not grow to utilize the provisioned capacity. Furthermore, there is no empirical evidence yet of performance under heavier load, meaning architectural bottlenecks might exist but are not yet apparent. The autoscaling configuration should partially mitigate these concerns by reducing the node count during low usage periods, though a necessary baseline capacity remains allocated, representing a fixed cost.

5.2.2. Scalability Outlook and Unknowns

Current system performance is stable, but several key scalability questions remain unanswered, requiring future systematic load testing and performance benchmarking. Specifically, we need to determine performance degradation with concurrent user scaling (e.g., 50–100 users) and identify potential database or network bottlenecks. We must also evaluate data volume scaling to ensure storage and query performance remain acceptable as datasets grow (e.g., daily weather accumulation). Furthermore, the limits of analysis complexity scaling need testing to confirm the platform can support heavy workloads like deep learning or large raster processing. Finally, we need to verify metadata scaling, so that catalog search remains efficient with hundreds or thousands of datasets. While the architecture (Kubernetes, MinIO, PostGIS) suggests good scalability, dedicated validation is essential.
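A concurrent-user test of the kind outlined above could start from a harness like the following sketch. It is not the evaluation procedure used in this work: `simulated_request` is a hypothetical placeholder that only sleeps, and it would need to be replaced by real calls to the platform. The user count and request count are likewise illustrative.

```python
import asyncio
import math
import statistics
import time


async def simulated_request(delay_s=0.01):
    """Hypothetical stand-in for a real platform call; it only sleeps."""
    await asyncio.sleep(delay_s)


async def run_load(concurrent_users, requests_per_user, request=simulated_request):
    """Run user sessions concurrently and return per-request latencies in seconds."""
    latencies = []

    async def user_session():
        for _ in range(requests_per_user):
            start = time.perf_counter()
            await request()
            latencies.append(time.perf_counter() - start)

    await asyncio.gather(*(user_session() for _ in range(concurrent_users)))
    return latencies


def summarize(latencies):
    """Return the request count, median, and nearest-rank 95th percentile latency."""
    ordered = sorted(latencies)
    p95_index = math.ceil(0.95 * len(ordered)) - 1
    return {
        "count": len(ordered),
        "median_s": statistics.median(ordered),
        "p95_s": ordered[p95_index],
    }


latencies = asyncio.run(run_load(concurrent_users=50, requests_per_user=3))
report = summarize(latencies)
```

Repeating the run at increasing values of `concurrent_users` and comparing the median and 95th percentile latencies would indicate where response times begin to degrade.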

5.2.3. Storage Efficiency

The efficient disk usage (actual storage ~400 GB vs. raw dataset volume ~700 GB) validates the DIH caching strategy. For large external datasets, maintaining references and caching active subsets rather than full replication is more sustainable. However, this introduces dependencies on data provider infrastructure availability and performance. If multiple providers experience simultaneous outages, many platform datasets become inaccessible even though the platform itself remains operational. Trade-offs between storage costs and data availability/performance require ongoing monitoring and policy decisions based on usage patterns and critical dataset identification.
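The idea of caching active subsets instead of replicating full datasets can be sketched with a size-bounded, least-recently-used cache. This is an illustrative model only and does not describe the DIH implementation: the class `SubsetCache`, the `fetch` callable standing in for a remote provider, and the byte sizes are all assumptions.

```python
from collections import OrderedDict


class SubsetCache:
    """Size-bounded LRU cache keyed by a dataset subset identifier."""

    def __init__(self, capacity_bytes, fetch):
        self.capacity_bytes = capacity_bytes
        self.fetch = fetch            # callable: key -> bytes (remote provider stand-in)
        self._items = OrderedDict()   # least recently used entries come first
        self.used_bytes = 0

    def get(self, key):
        if key in self._items:
            # Cache hit: mark as most recently used; no provider call is made.
            self._items.move_to_end(key)
            return self._items[key]
        # Cache miss: retrieve from the provider and store locally.
        data = self.fetch(key)
        self._items[key] = data
        self.used_bytes += len(data)
        # Evict least recently used entries until within capacity.
        # The newest entry is always kept, even if it alone exceeds capacity.
        while self.used_bytes > self.capacity_bytes and len(self._items) > 1:
            _, evicted = self._items.popitem(last=False)
            self.used_bytes -= len(evicted)
        return data


provider_calls = []


def fake_provider(key):
    """Hypothetical provider returning 40 bytes per subset."""
    provider_calls.append(key)
    return b"x" * 40


cache = SubsetCache(capacity_bytes=100, fetch=fake_provider)
for key in ["a", "b", "a", "c"]:
    cache.get(key)
```

In this run the second request for `a` is served locally, and inserting `c` evicts `b`, the least recently used subset. Entries that remain cached can still be served during a provider outage, whereas evicted or never-requested subsets cannot, which is the availability trade-off noted above.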

5.3. Platform Workflow and Usability Insights

The demonstrative use case validates that the AgriclimaClient abstraction layer can successfully reduce infrastructure burden. Researchers can now focus entirely on analytical logic—spatial joins, temporal aggregation, and weighting calculations—instead of managing authentication flows, constructing API requests, handling credentials, or converting data formats. This benefit is quantifiable: the weighted aggregation analysis required only about 150 lines of Python code, with a mere 20 lines dedicated to data access via the AgriclimaClient. Without this abstraction, researchers would likely need 50–100 additional lines to manage authentication, pagination, format conversion, and error handling, all of which constitute boilerplate code shown to burden scientific programming [,]. Furthermore, the abstracted approach is more maintainable: central updates to the AgriclimaClient can accommodate changes in underlying data sources or access mechanisms without requiring modifications to the analysis code.

Informal developer feedback confirmed both successes and challenges. Some users initially encountered minor difficulties with login and first access to the platform, as is common with new systems. However, researchers with coding experience adapted rapidly, typically achieving productivity within hours of introduction. This quick adoption is attributed to the familiar Jupyter notebook environment and the use of standard geospatial libraries such as geopandas and rasterio, which significantly reduce learning requirements.

5.4. Limitations and Threats to Validity

As noted in Section 3.4, some limitations constrain the generalizability and completeness of our evaluation:

- Evaluation Scope Limitations: The evaluation’s transferability is limited by a small user base (4 concurrent users) reflecting only early-stage usage, a 10-month temporal scope missing long-term sustainability insights, and geographic/domain specificity to Italian agriculture and the consortium. Furthermore, performance monitoring only covered normal operational usage, not scalability limits or stress response, and there was no systematic comparative analysis against alternative platforms.