Robust Guidance and Selective Spraying Based on Deep Learning for an Advanced Four-Wheeled Farming Robot

Abstract

1. Introduction

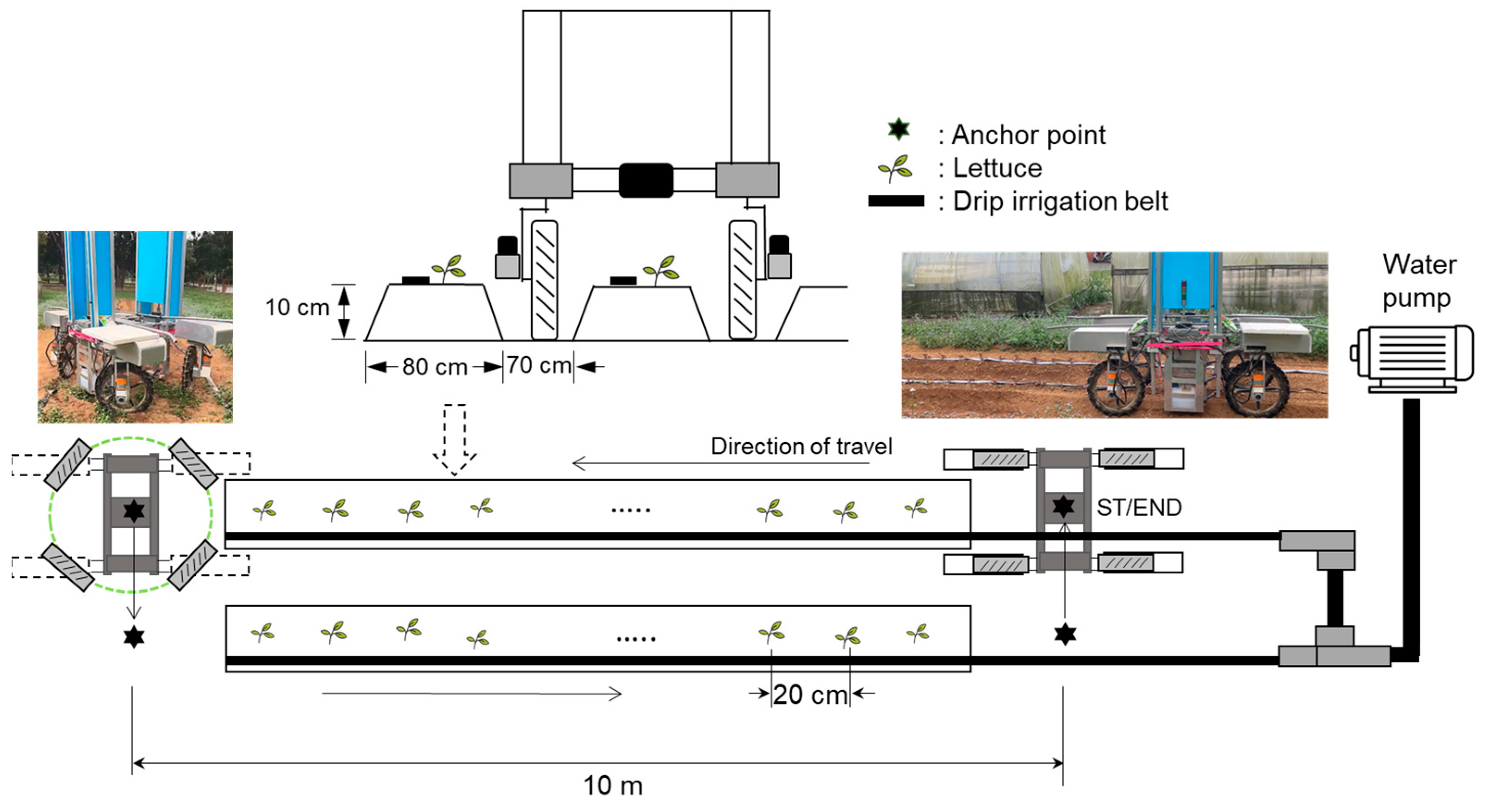

2. Autonomous Navigation and Selective Spraying Scheme

2.1. Motion Model

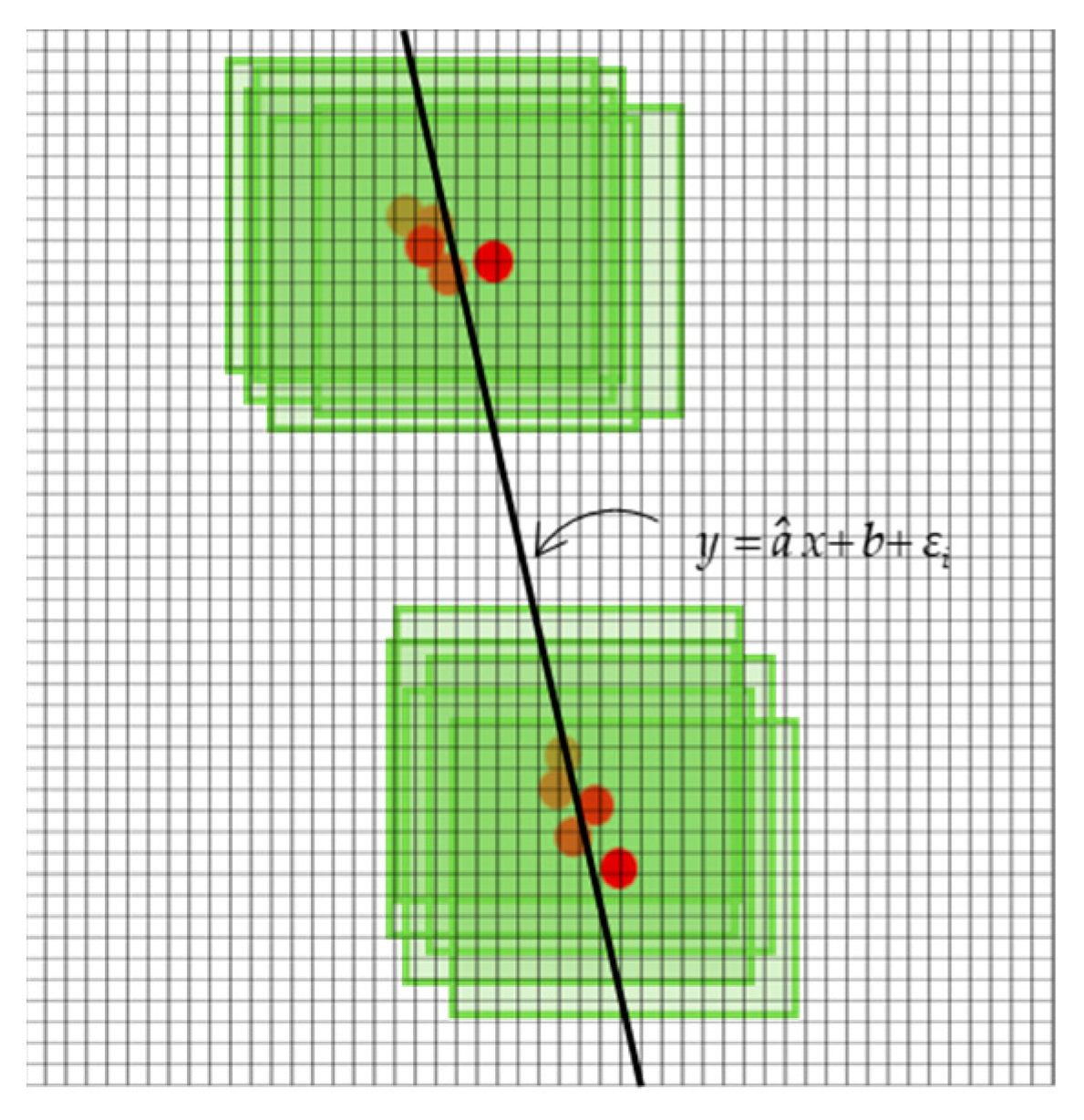

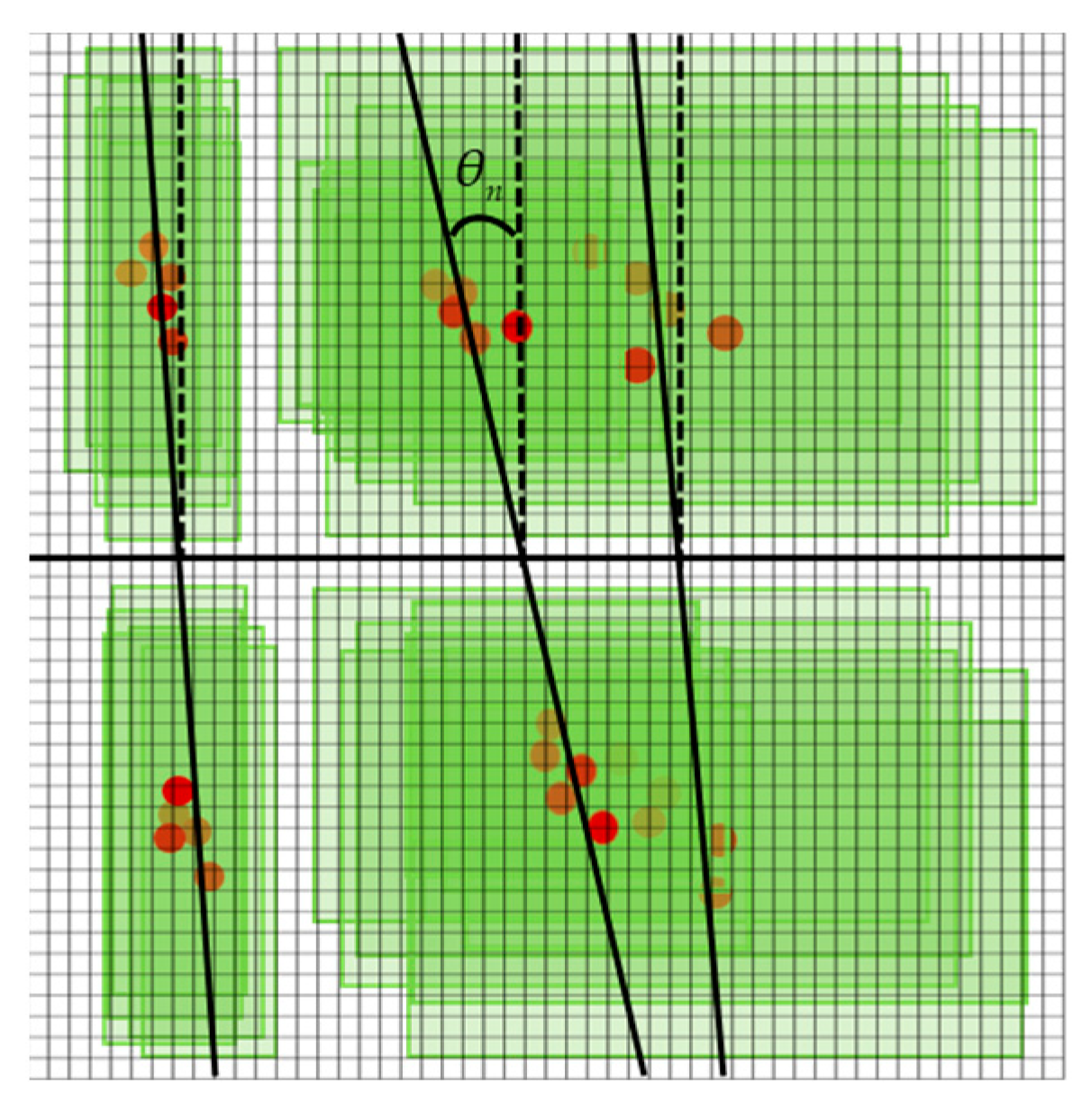

2.2. Guidance Line Generation

2.3. Guidance and Control

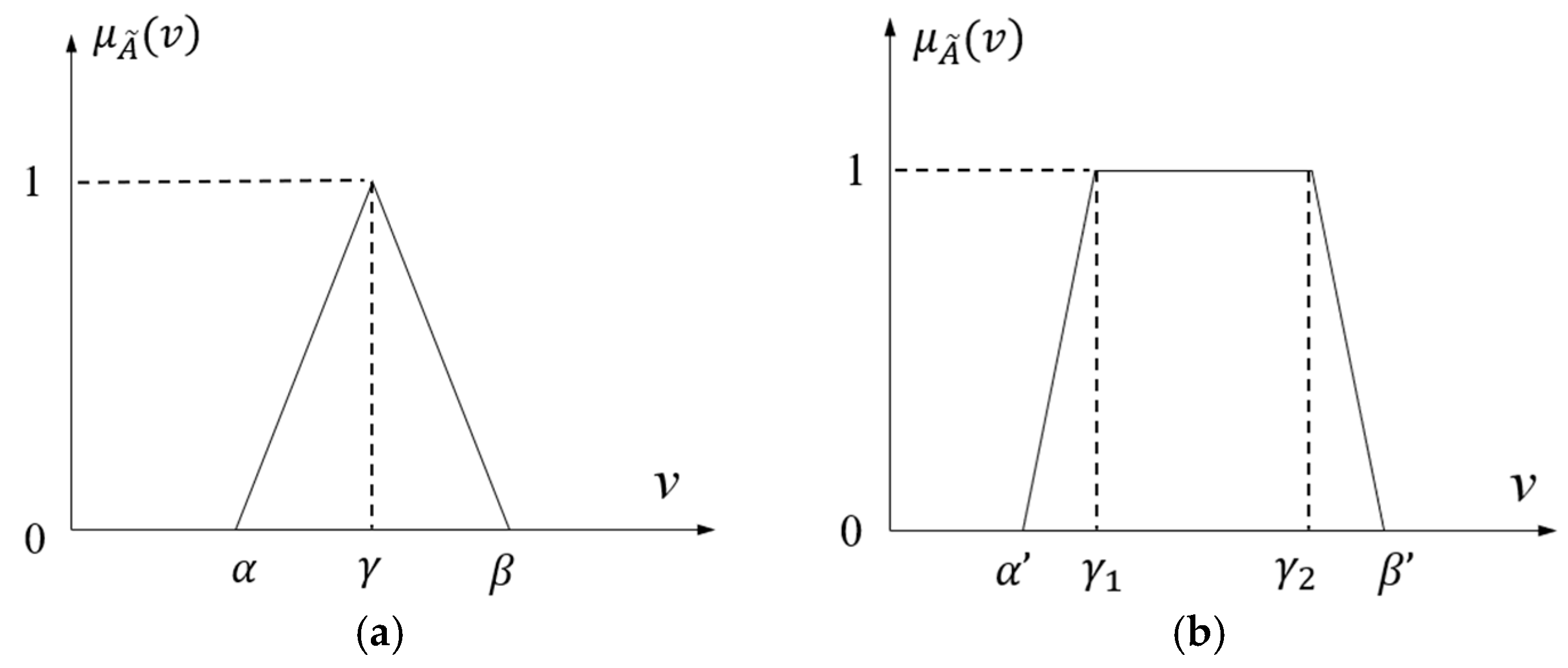

2.3.1. Heading Control Using FLC

- Rule 1: IF (θ is LO) AND (θ̇ is N) THEN (δ is L);

- Rule 2: IF (θ is LO) AND (θ̇ is Z) THEN (δ is M);

- Rule 3: IF (θ is LO) AND (θ̇ is P) THEN (δ is R);

- Rule 4: IF (θ is M) AND (θ̇ is N) THEN (δ is L);

- Rule 5: IF (θ is M) AND (θ̇ is Z) THEN (δ is M);

- Rule 6: IF (θ is M) AND (θ̇ is P) THEN (δ is R);

- Rule 7: IF (θ is RO) AND (θ̇ is N) THEN (δ is L);

- Rule 8: IF (θ is RO) AND (θ̇ is Z) THEN (δ is M);

- Rule 9: IF (θ is RO) AND (θ̇ is P) THEN (δ is R).
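The nine rules above can be exercised with a small fuzzy inference sketch. The membership breakpoints are taken from the membership-function table given later in this document. Everything else is an assumption made for illustration and is not confirmed by the text: Mamdani min/max inference, centroid defuzzification over a sampled steering range, angles in degrees, and the helper names `tri`, `trap`, and `steering_angle`.

```python
def tri(x, a, b, c):
    """Triangular membership with feet at a and c and peak at b."""
    if x <= a or x >= c:
        return 0.0
    return (x - a) / (b - a) if x <= b else (c - x) / (c - b)


def trap(x, a, b, c, d):
    """Trapezoidal ("ladder") membership; a == b or c == d gives a shoulder."""
    if x < a or x > d:
        return 0.0
    if x < b:
        return (x - a) / (b - a)
    if x <= c:
        return 1.0
    return (d - x) / (d - c)


# Breakpoints copied from the membership-function table (units assumed: degrees).
HEADING = {
    "LO": lambda x: trap(x, -100, -100, -20, 0),
    "M": lambda x: tri(x, -10, 0, 10),
    "RO": lambda x: trap(x, 0, 20, 100, 100),
}
RATE = {
    "N": lambda x: trap(x, -100, -100, -25, 0),
    "Z": lambda x: tri(x, -20, 0, 20),
    "P": lambda x: trap(x, 0, 25, 100, 100),
}
STEER = {
    "L": lambda x: trap(x, -17, -17, -7, 0),
    "M": lambda x: tri(x, -5, 0, 5),
    "R": lambda x: trap(x, 0, 7, 17, 17),
}

# Rule base exactly as listed in Rules 1 to 9.
RULES = {
    ("LO", "N"): "L", ("LO", "Z"): "M", ("LO", "P"): "R",
    ("M", "N"): "L", ("M", "Z"): "M", ("M", "P"): "R",
    ("RO", "N"): "L", ("RO", "Z"): "M", ("RO", "P"): "R",
}


def steering_angle(theta, theta_dot, steps=341):
    """Assumed Mamdani inference with centroid defuzzification."""
    strength = {"L": 0.0, "M": 0.0, "R": 0.0}
    for (h, r), out in RULES.items():
        w = min(HEADING[h](theta), RATE[r](theta_dot))  # AND as minimum
        strength[out] = max(strength[out], w)           # aggregate as maximum
    num = den = 0.0
    for i in range(steps):
        delta = -17.0 + 34.0 * i / (steps - 1)          # sample the output range
        mu = max(min(strength[k], STEER[k](delta)) for k in STEER)
        num += mu * delta
        den += mu
    return num / den if den > 0 else 0.0
```

For example, `steering_angle(0, 0)` fires only Rule 5 and returns a value near zero, while `steering_angle(0, 50)` fires Rule 6 and returns a positive steering angle.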

2.3.2. Speed Control Using a PID Controller

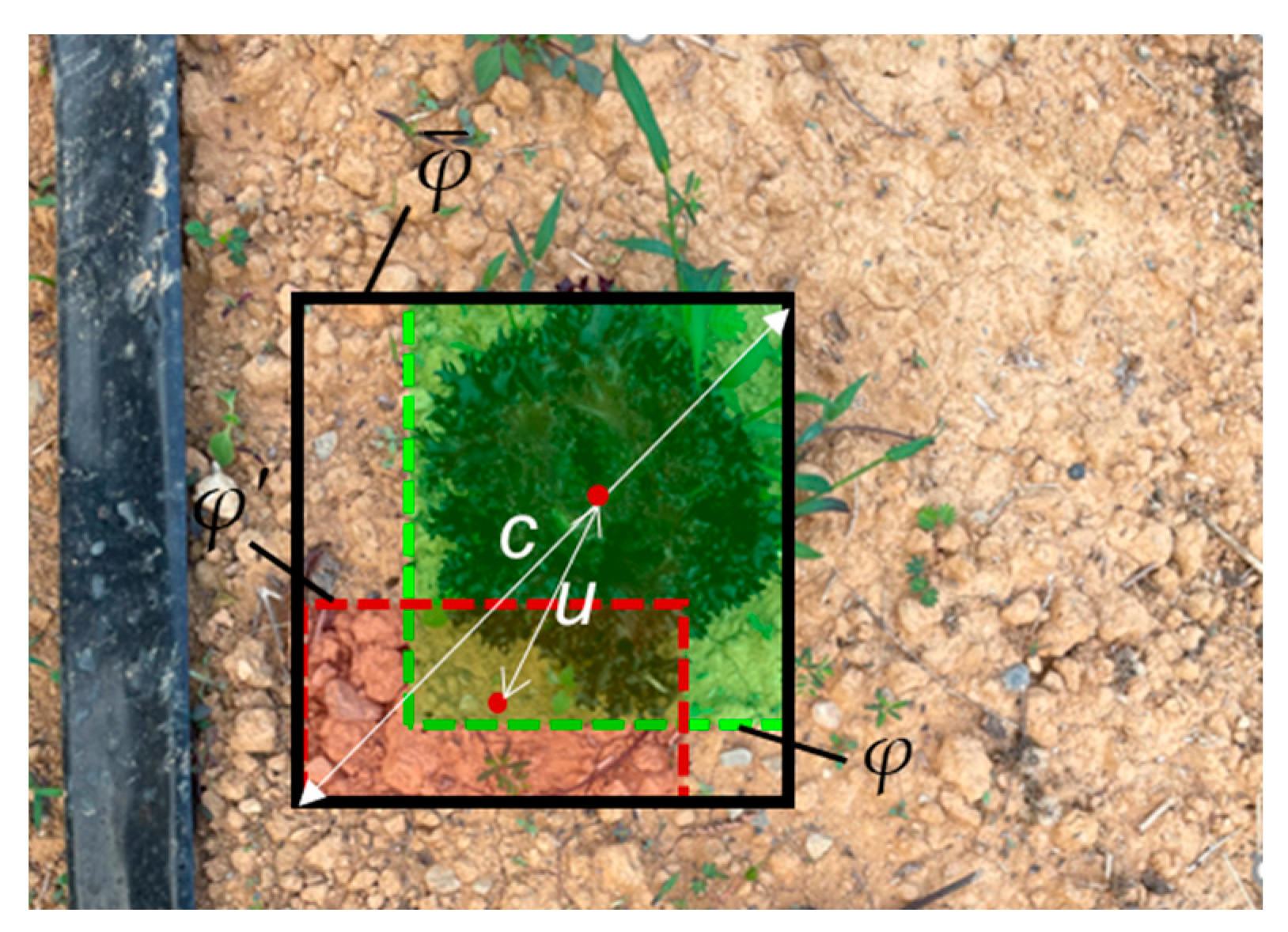

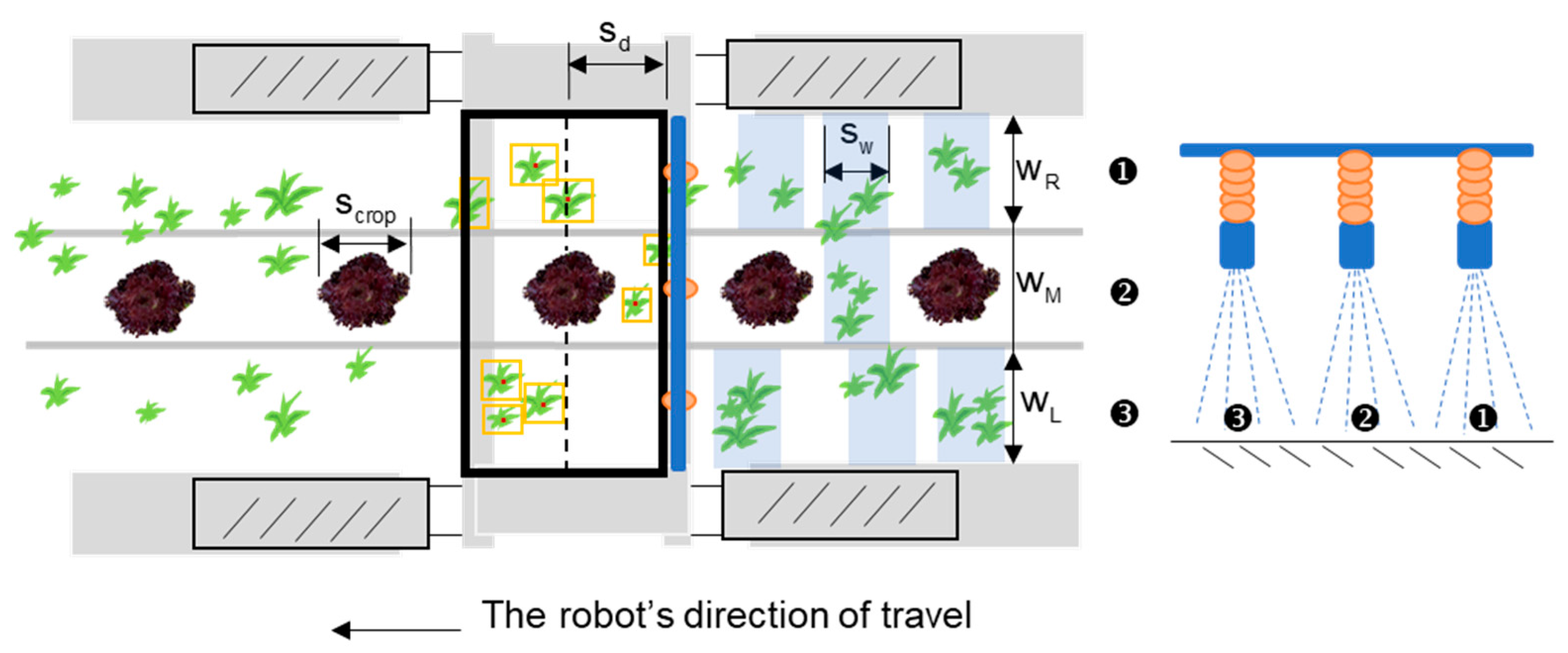

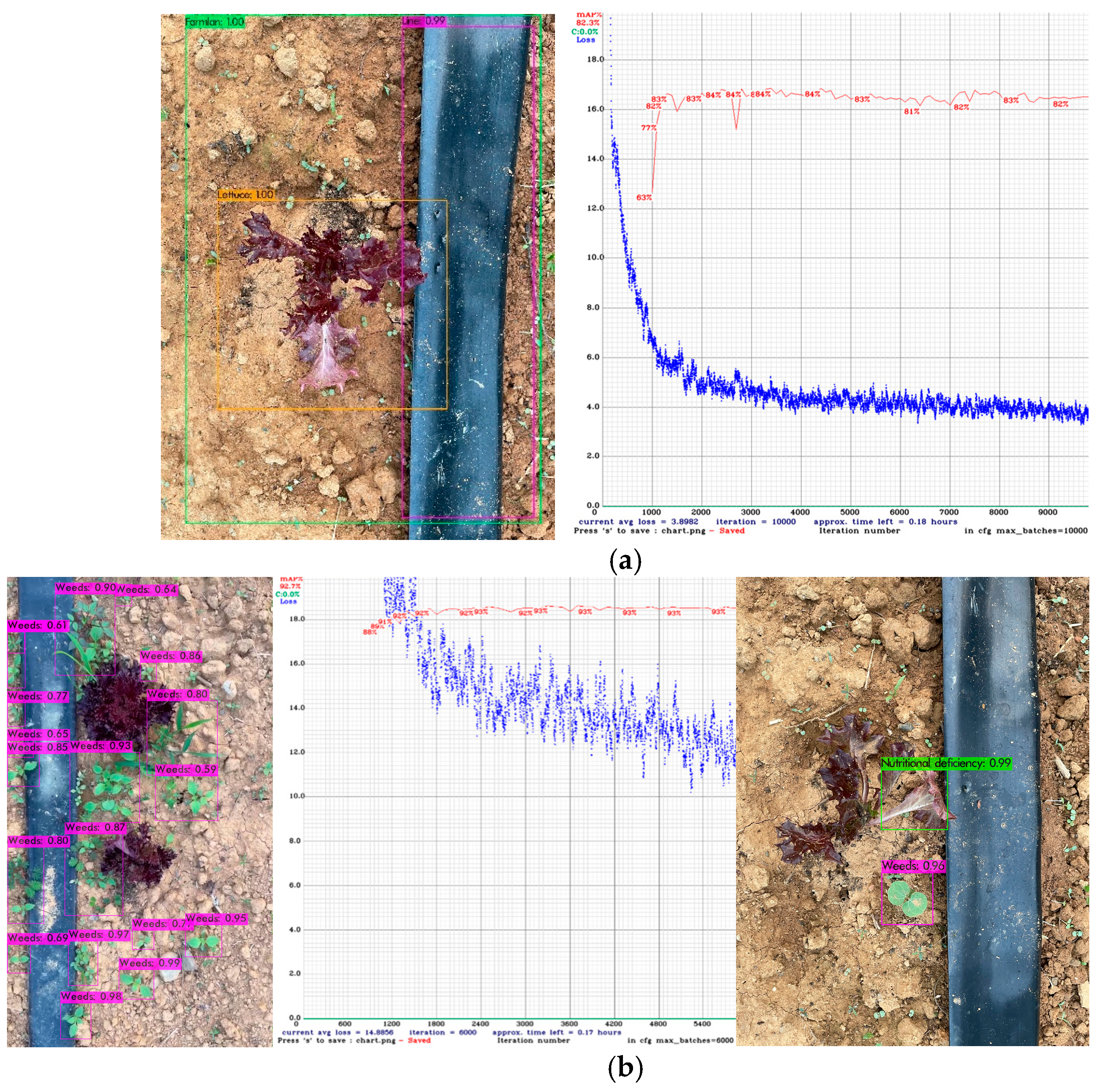

2.4. Selective Spraying

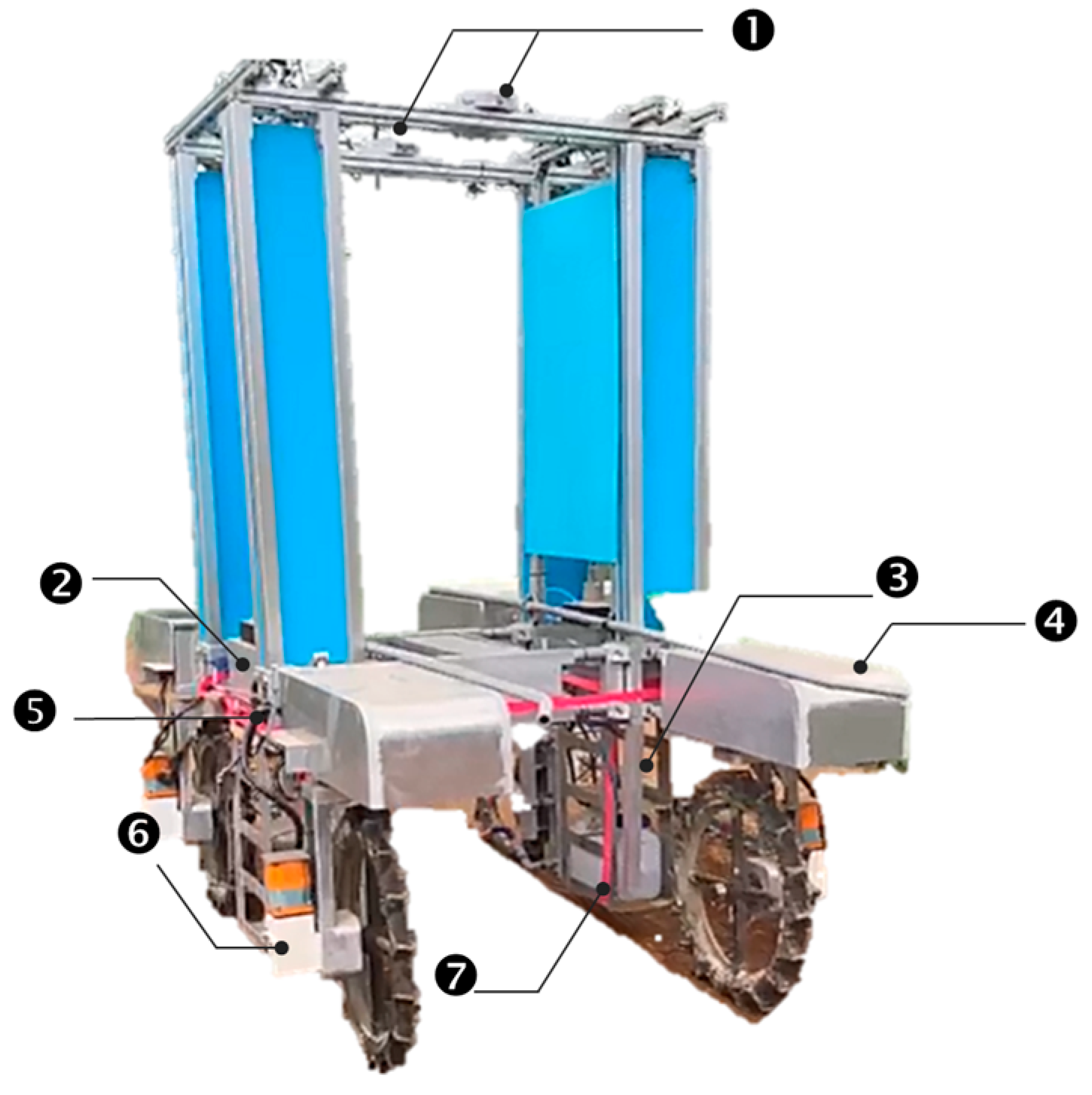

3. Description of the Farming Robot

3.1. Mechatronics System

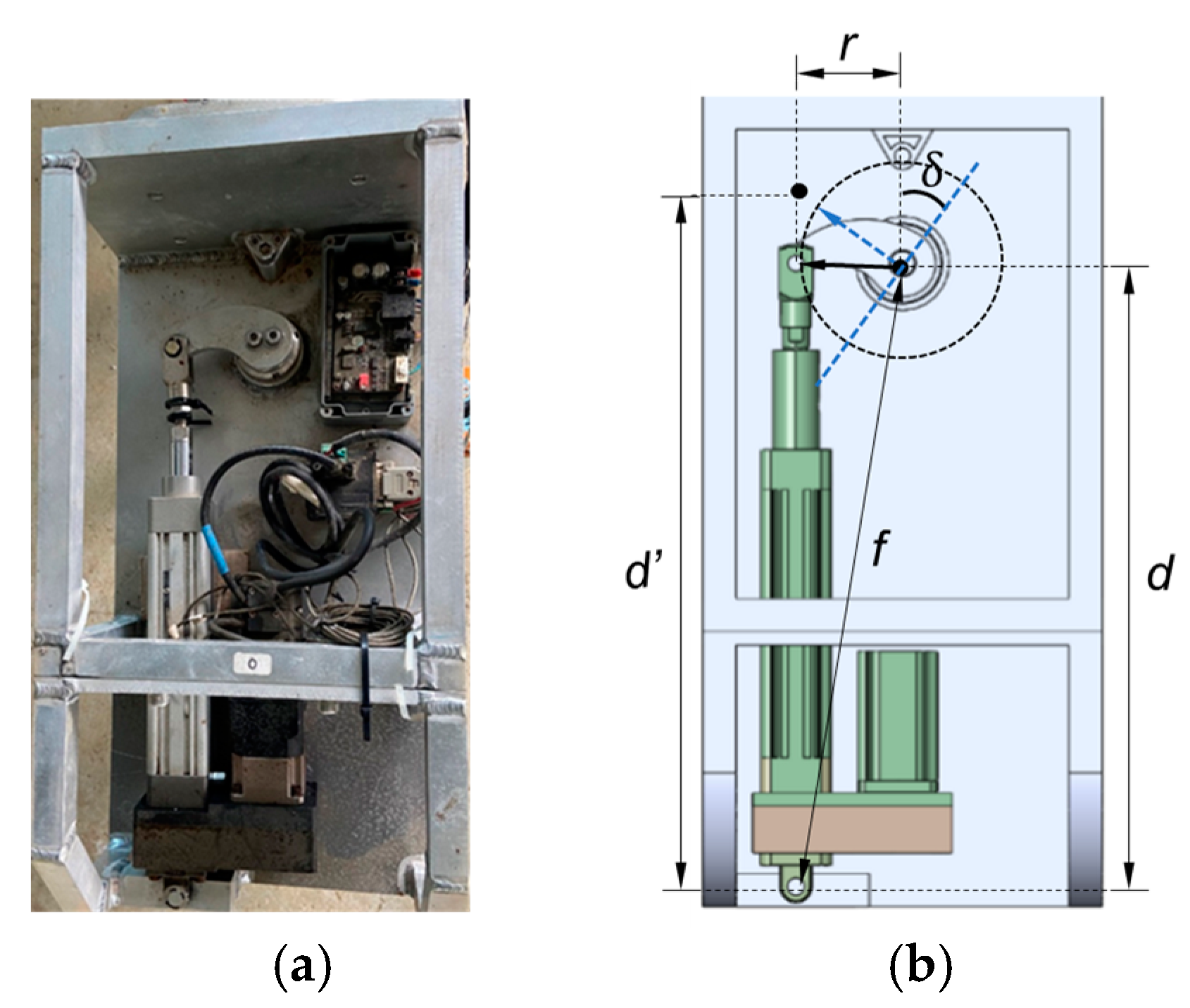

3.2. Steering Mechanism

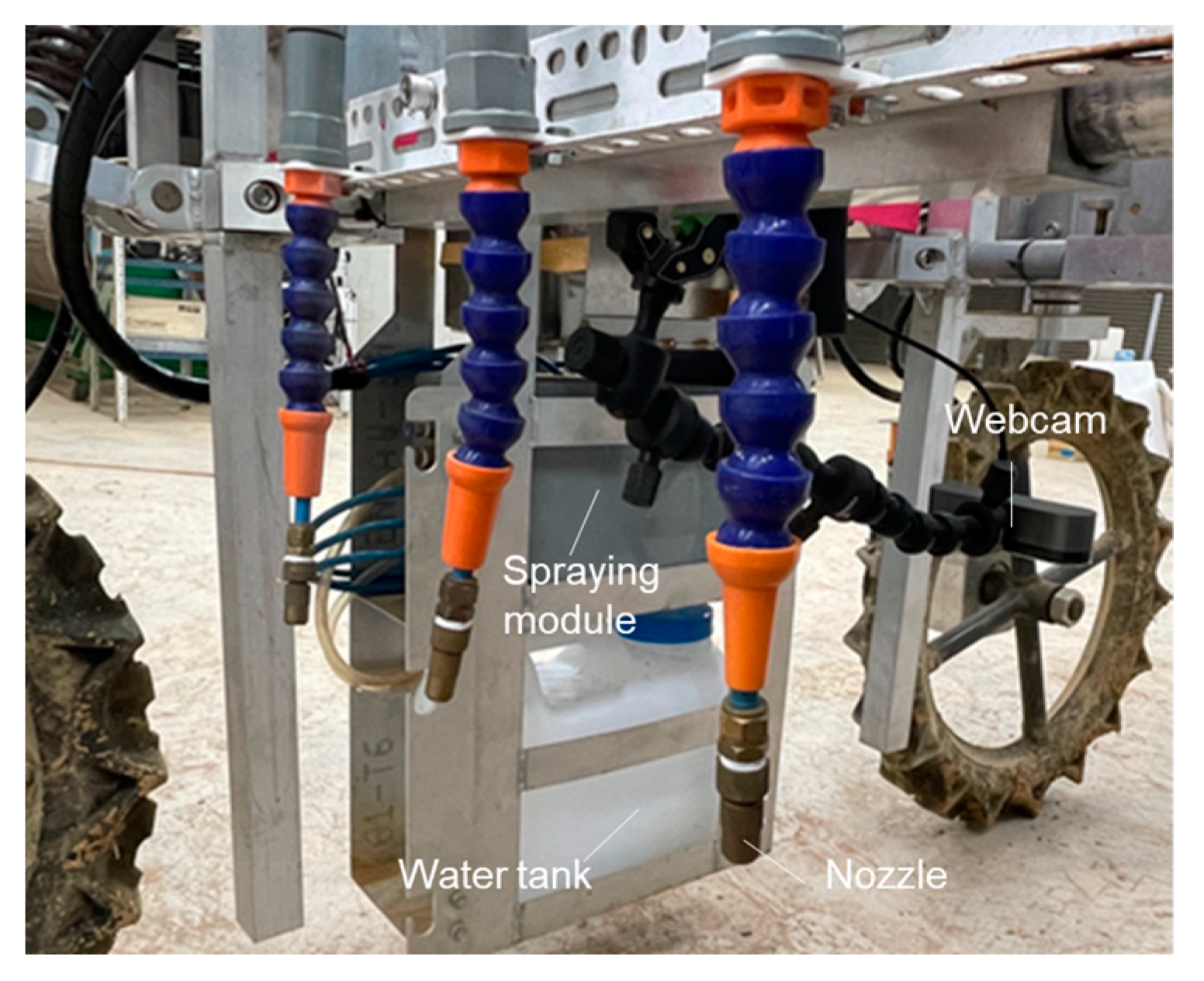

3.3. Spraying Module

4. Experiments and Results

4.1. Environmental Conditions and Parameters

4.2. Preliminary Test

4.3. Experimental Results

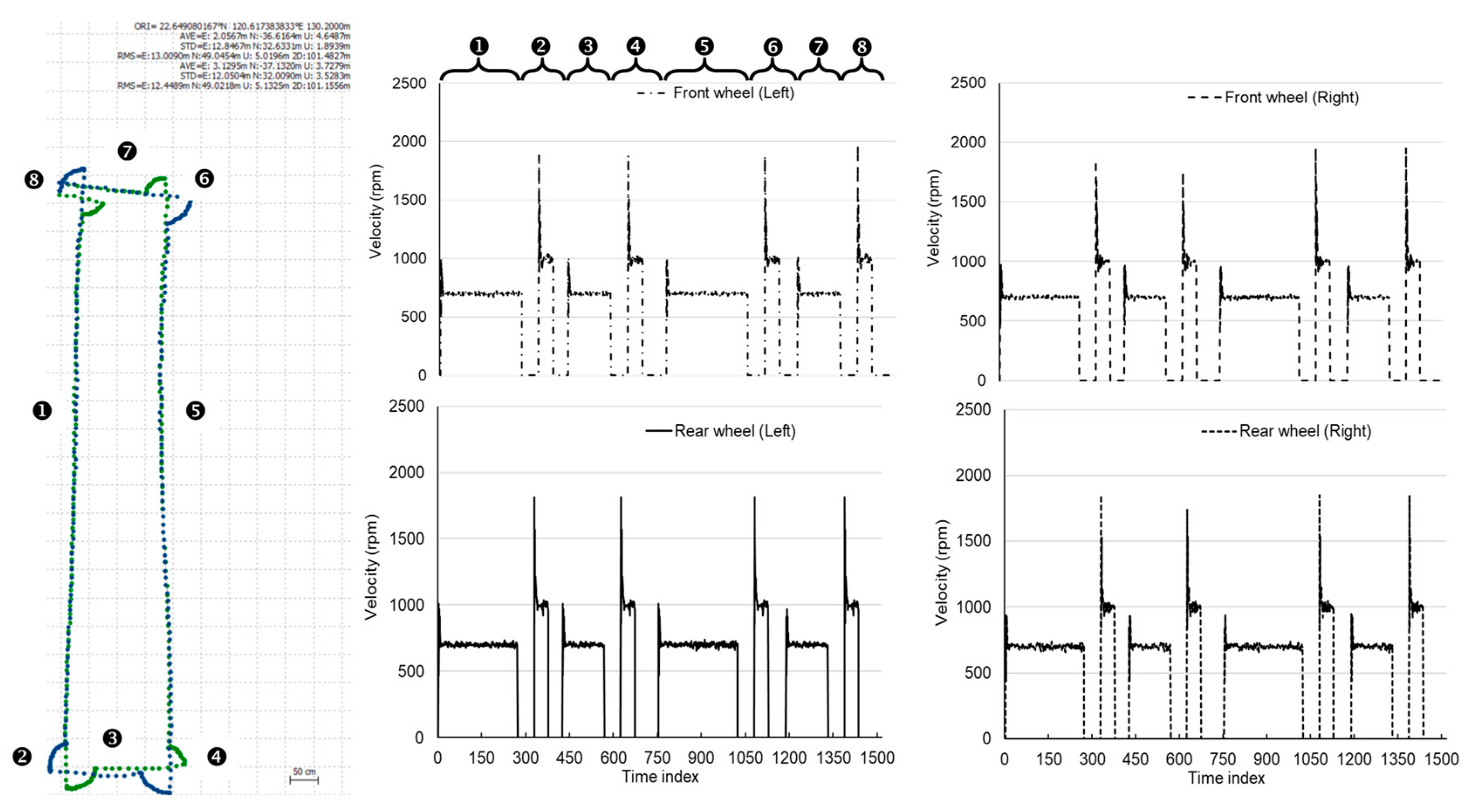

4.3.1. Scenario 1

4.3.2. Scenario 2

4.4. Discussion

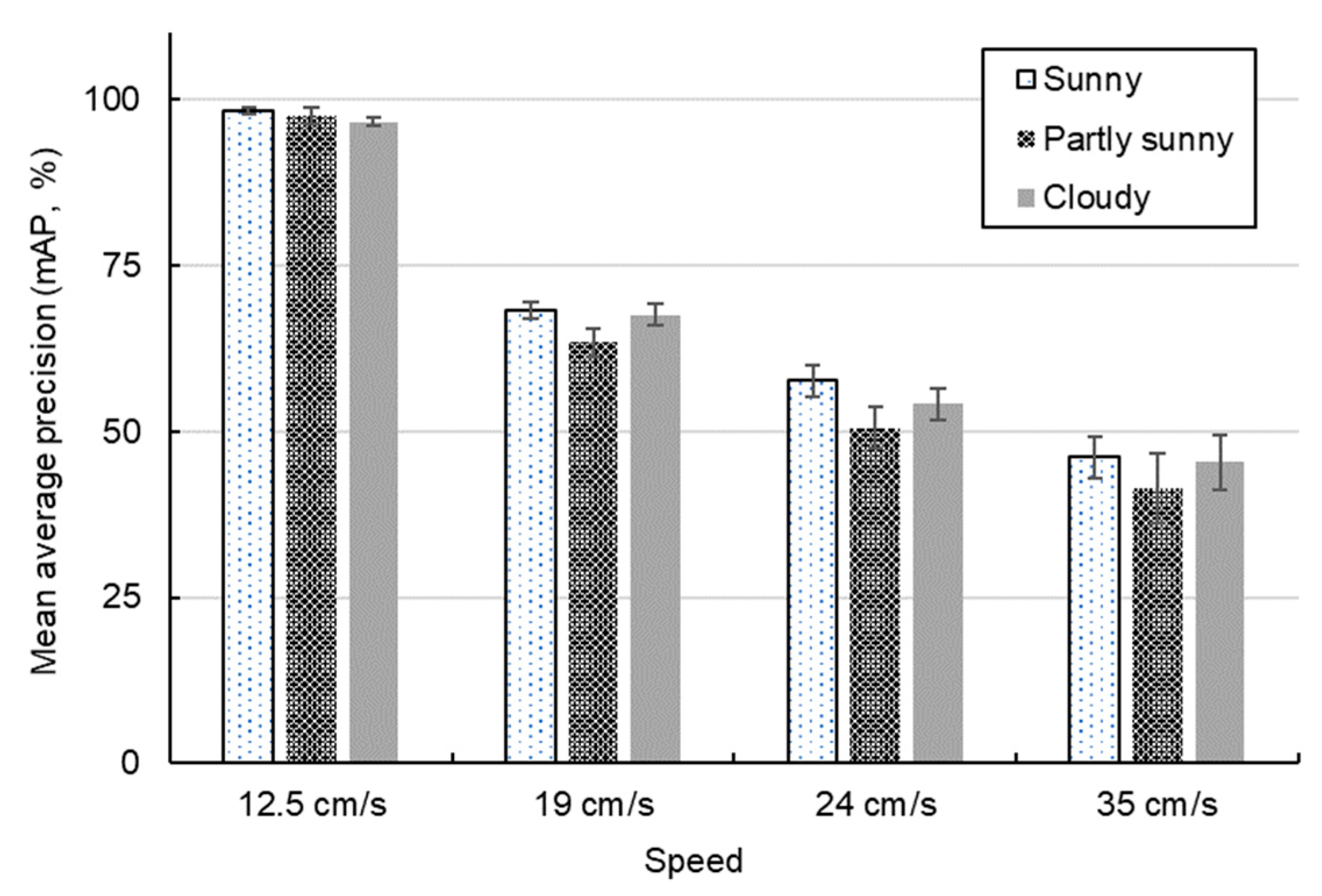

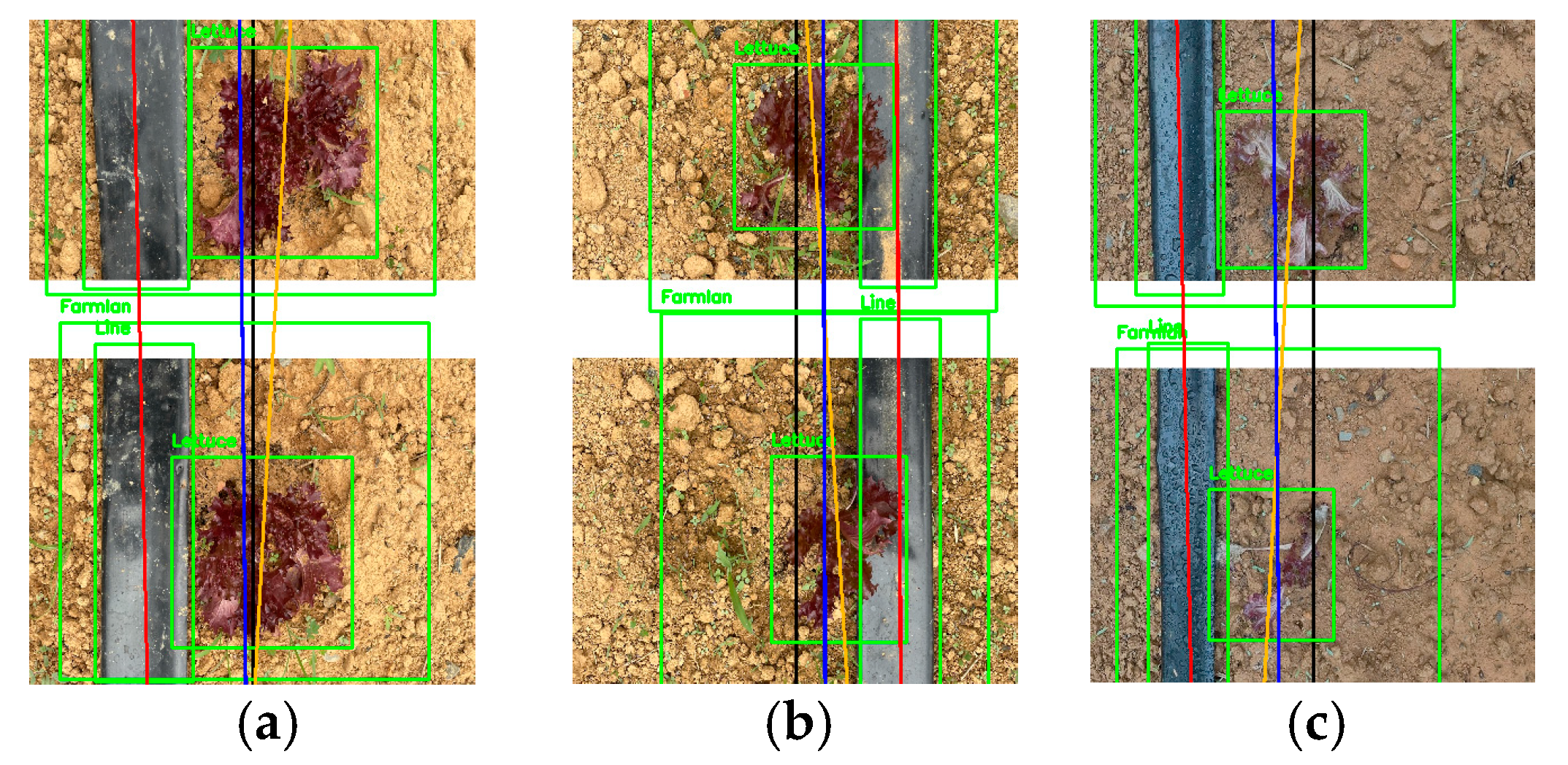

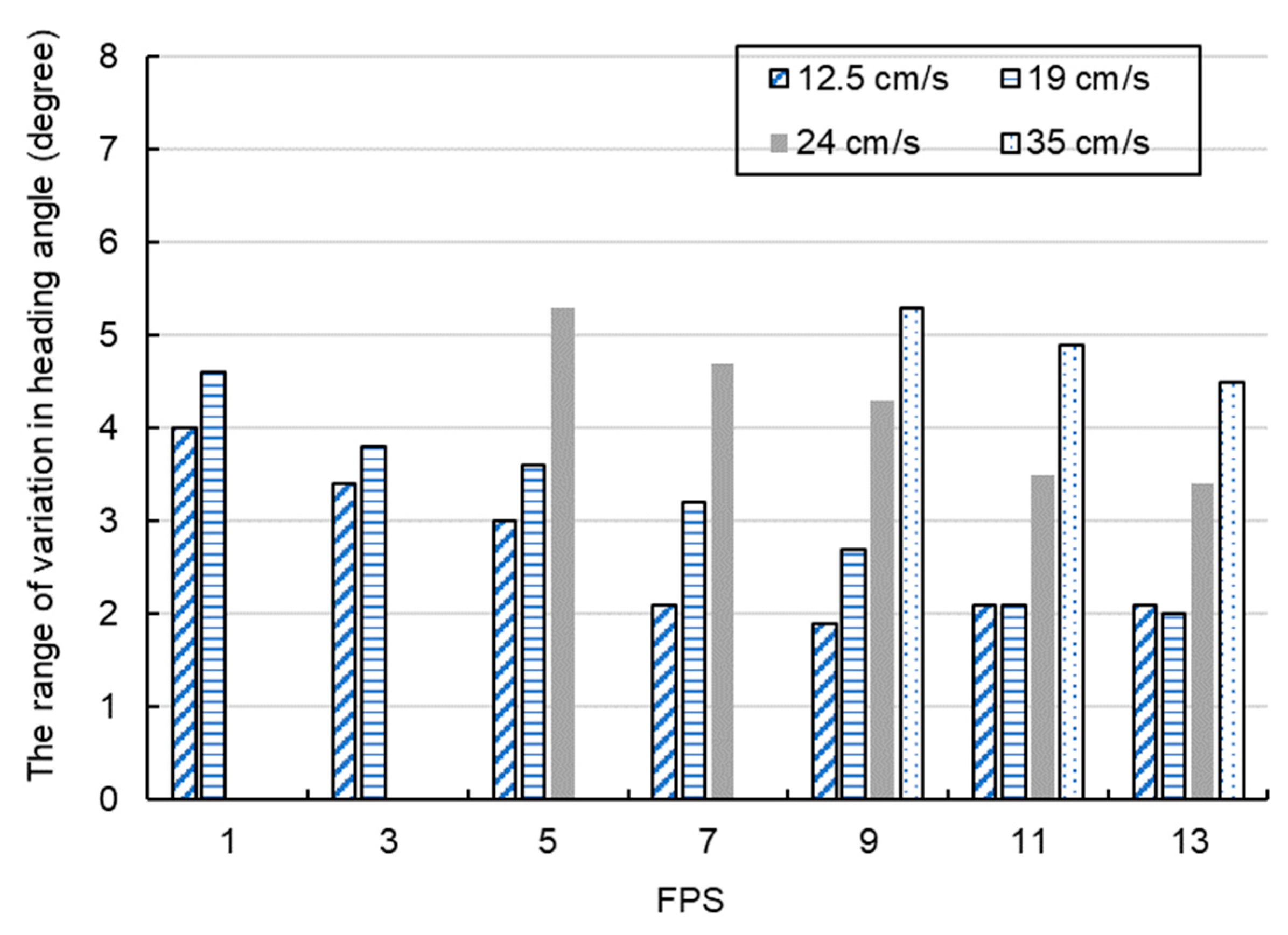

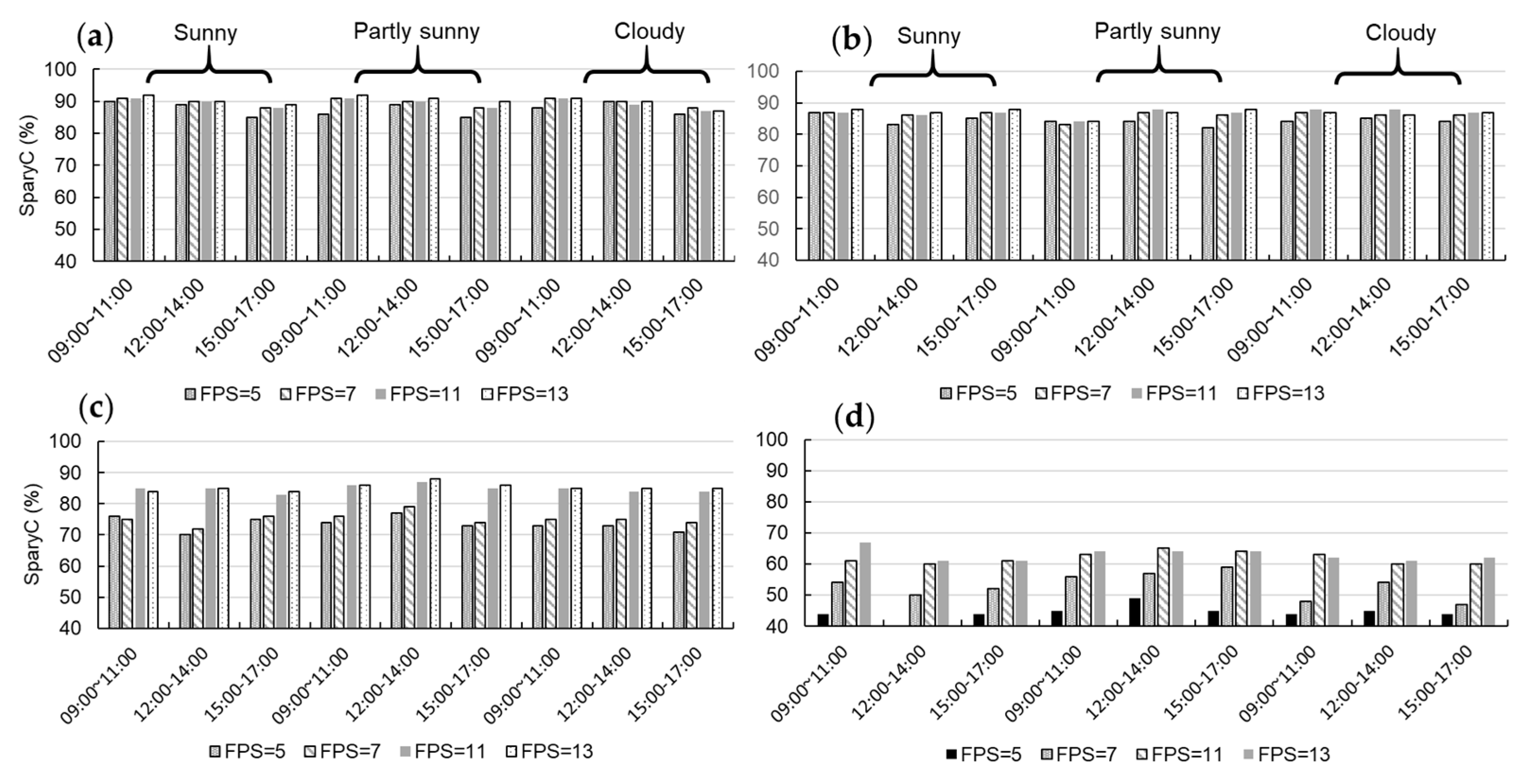

- The fit of the guidance line depends on the speed of the robot, the detection performance of DetModel #1, and the FPS value. As shown in Table 4, the accuracy of DetModel #1 in detecting ridges, crops, and drip irrigation belts ranged from 92 to 99%, with an average accuracy (AP) of about 95%. The accuracy of identifying drip irrigation belts was the highest, reaching up to 99%, followed by crops at 98% and field ridges at 97%. Because the training samples were images captured in a real environment from different angles and under various weather conditions, such realistic datasets can enhance the object recognition ability of the model [47,48]. The accuracy of navigation line extraction depends on how well the trained model detects targets. When objects of the same type are detected multiple times in an image, a line can be fitted through them using the least squares method. Soil images under different climate conditions were collected, and even overexposed images were used as training samples. The experimental results show that this training improved the generalization performance of the model, especially under different climate conditions. In practical operation, the navigation line can be extracted as long as the center points of at least two objects are detected.

  When the robot traveled at a speed of 12.5 cm/s with the FPS set to 7, the mAP reached 98%. As the robot speed increased, the mAP gradually decreased. Comparing the mAP with the heading angle variation in Figures 19 and 21 shows that a lower mAP led to a wider range of heading angle variation and more dubious heading angles. Increasing the FPS can help reduce false detections caused by momentary strong ambient light and maintain a certain mAP even as the robot speed increases. However, it also increases the computing load of the system, which risks overheating the hardware. Under conditions that cover the various climatic requirements while maintaining the average object detection performance, the average detection time of DetModel #1 was 143 ms per image.
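The least squares step described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes that each detection contributes the pixel center (u, v) of its bounding box, that the horizontal coordinate u is regressed on the vertical coordinate v because the guidance line runs roughly top to bottom in a forward-looking image, and that the heading angle is measured from the image's vertical axis. The function names are hypothetical.

```python
import math


def fit_guidance_line(points):
    """Fit u = a * v + b through (u, v) pixel centers by ordinary least squares."""
    n = len(points)
    if n < 2:
        raise ValueError("need at least two detected center points")
    mean_u = sum(u for u, _ in points) / n
    mean_v = sum(v for _, v in points) / n
    s_vv = sum((v - mean_v) ** 2 for _, v in points)
    if s_vv == 0:
        raise ValueError("center points share one image row; slope is undefined")
    s_uv = sum((u - mean_u) * (v - mean_v) for u, v in points)
    a = s_uv / s_vv
    b = mean_u - a * mean_v
    return a, b


def heading_angle_deg(a):
    """Angle between the fitted line and the image's vertical axis, in degrees."""
    return math.degrees(math.atan(a))
```

With three crop centers stacked at the same horizontal position, such as `[(320, 100), (320, 300), (320, 400)]`, the fitted slope is zero and the heading angle is zero degrees; two points are the minimum that defines a line, which matches the condition stated in the text.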

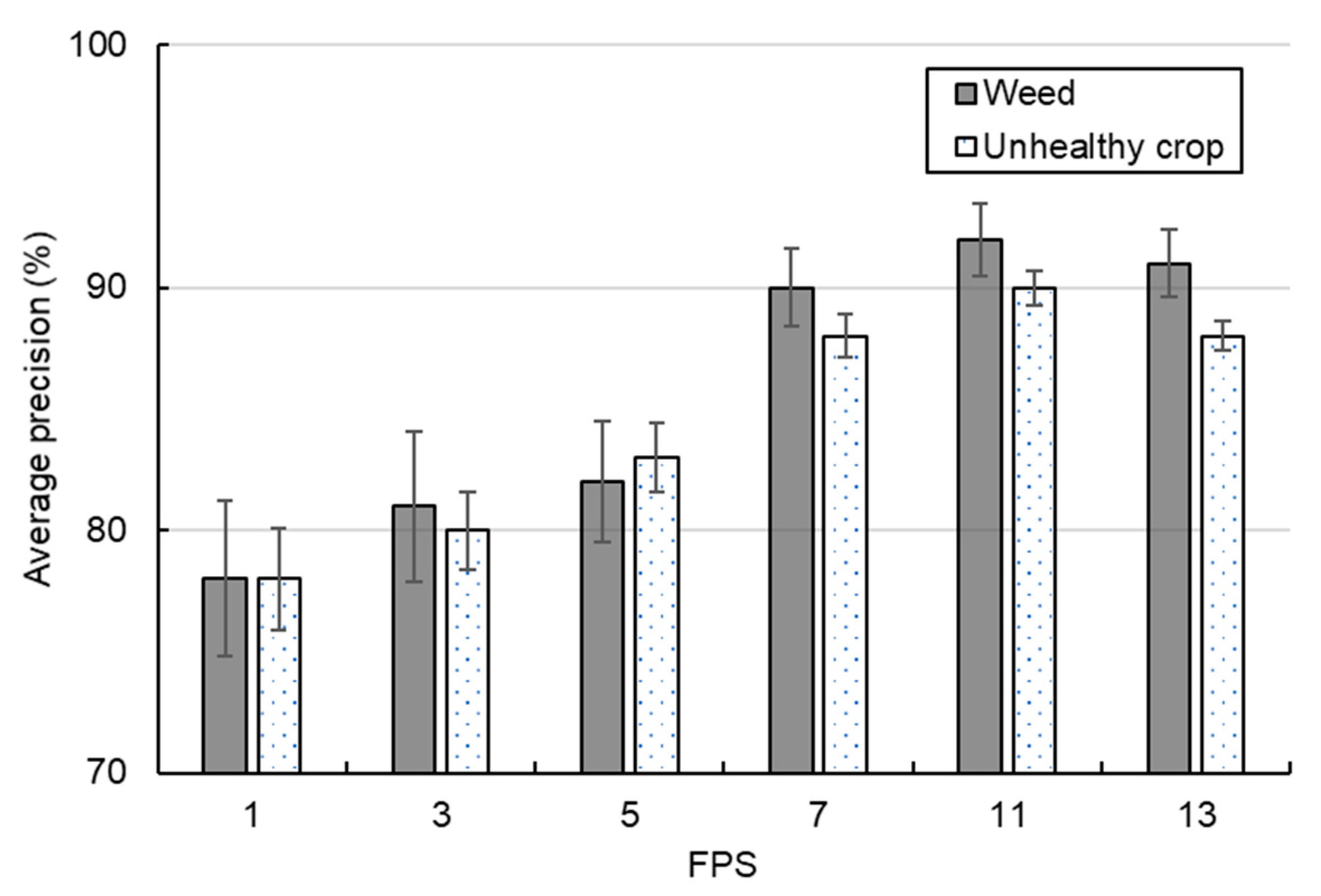

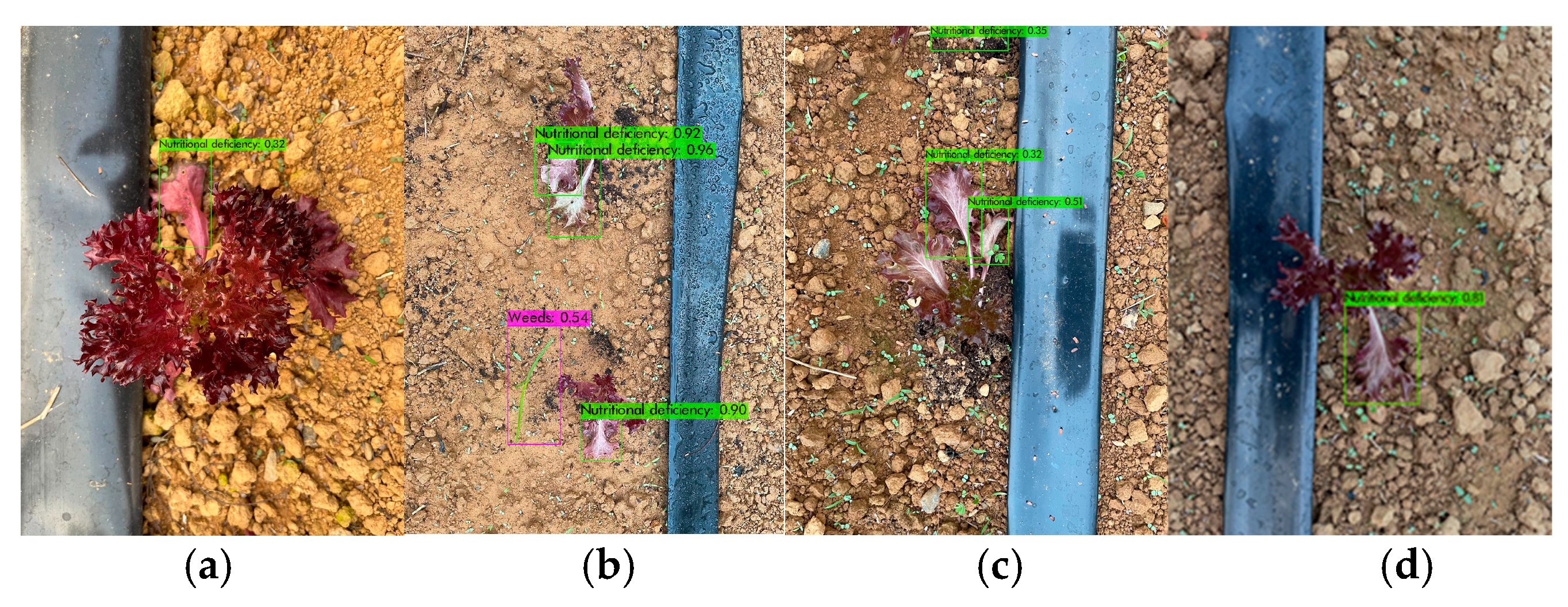

- Under different weather conditions, DetModel #2 was used to identify unhealthy crops and weeds, achieving average PRs of about 84% and 93%, respectively. In the afternoon on a cloudy day, the PR dropped slightly to 84~89%, demonstrating that the deep learning model exhibited better adaptability to images with weaker light intensities. This adaptability improves upon the limitations of traditional machine learning techniques [49]. However, the limited dynamic illumination range of RGB cameras can easily lead to image color distortion when the illumination falls outside this range [50], making object recognition difficult, especially with overexposed or insufficiently bright images.

  Rapid movement can result in specific frames failing to capture the target. Objects often have features significantly different from those encountered during training, making them difficult to detect [25]. Changes in ambient lighting alter the tones and shadows in the image frame and affect an object's color, and pixel edge blur or shadows can also significantly impact detection accuracy [51,52]. Adding more feature information to the training of the deep learning model can improve its detection performance [53]. In this study, more field images, including soil types in low-light environments and even overexposed images, were used as samples. The experimental results show that the trained deep learning model had better generalization performance.

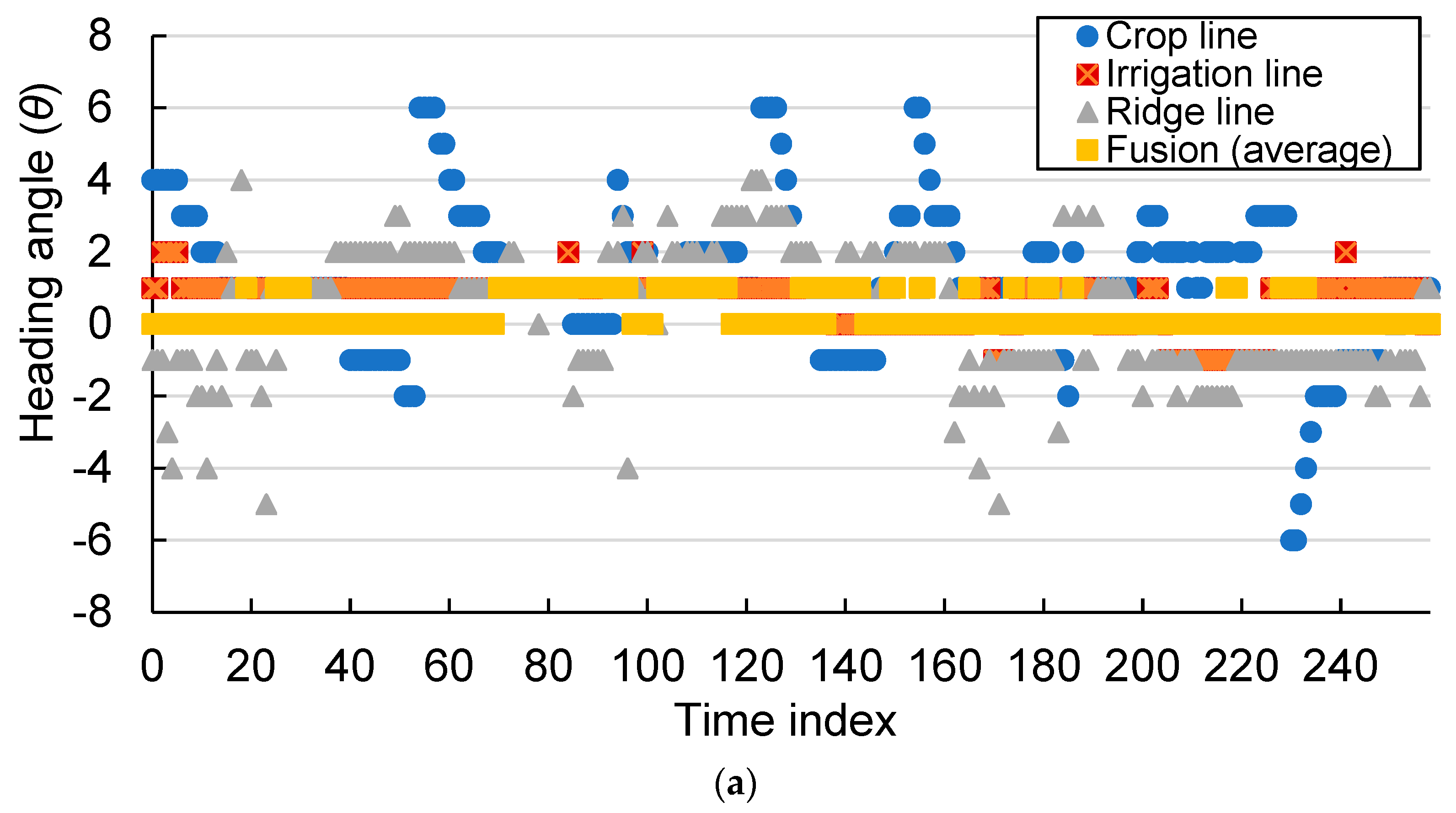

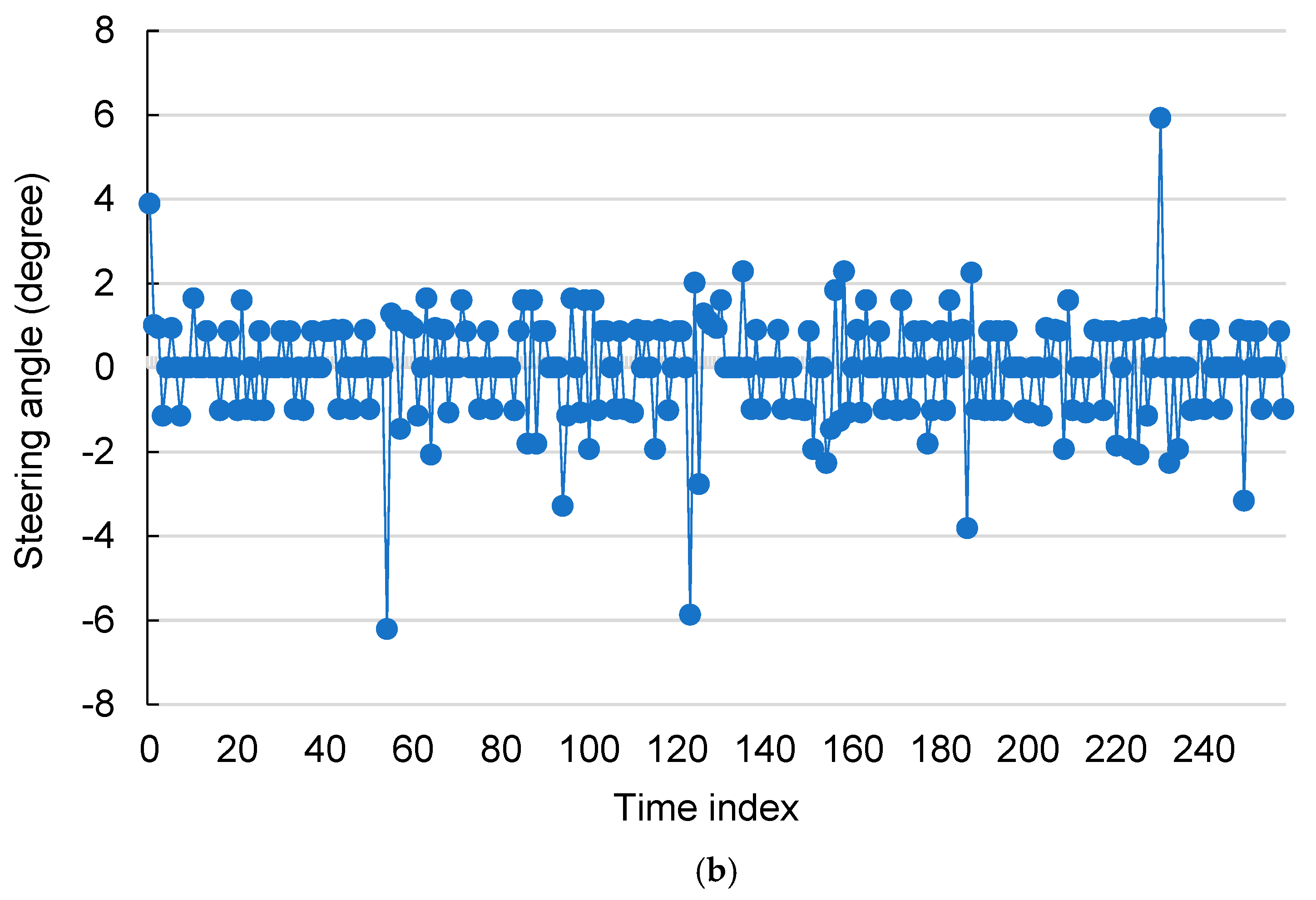

- Limited by the soil hardness and site flatness, in this study, the value of the PID controller was set to 0.5, enabling the four DC motors to reach the required speed quickly. Although there is a brief, small oscillation when the motor starts, its impact on the traveling speed of the robot is minimal. The FLC, in turn, can smoothly adjust the heading angle and improves on the two-wheel speed difference control method [54]. The experimental results show that when the robot moved along a guidance line at a speed of 12.5 cm/s, the variation in the heading angle stayed within one degree. Even when no drip irrigation belt was present on the field, the average variation range of the heading angle remained within ±3.5 degrees (Figure 18a). If the crops in the image were not planted on the vertical centerline of the field ridge, the estimated heading angle was also affected as the robot moved. For instance, in Figure 18a, at time indices of about 55, 130, 125, and 232, the heading angle estimated from the crop line changed instantly by more than four degrees. Fortunately, this estimate is averaged with the heading angles obtained from the other regression lines, which prevents ridge damage due to overcorrection of the wheels and avoids misjudgment of the heading angle.

  When the robot rotated, a higher motor speed output value (1000 rpm) was used, ensuring that greater torque was instantly available and allowing the four drives to power the motors. The advantage of the 4 WD/4 WS system is that it enables the robot to achieve a turning radius of zero. Compared with common car drive systems, this steering mode reduces the space required for turning and improves land use efficiency. Moreover, relying on RTK-GNSS receivers for centimeter-level autonomous guidance of a farming robot is a common practice [55]. This centimeter-level positioning error is acceptable when a fixed ambiguity solution is obtained [56]. However, autonomous guidance on narrow farming areas carries risks. If the positioning signal is interrupted, or the positioning solution is a floating solution, the robot may damage the field during movement.
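The averaging of heading angles across regression lines mentioned above can be illustrated with a short sketch. The text only states that the estimates are averaged; equal weighting, the dictionary layout, and the function name `fused_heading` are assumptions made here for illustration, and the numeric values in the usage note are made up.

```python
def fused_heading(estimates):
    """Mean of the available heading estimates in degrees.

    `estimates` maps a line type (for example "ridge", "crop", "drip_belt")
    to its heading angle, or to None when that line was not detected.
    """
    valid = [h for h in estimates.values() if h is not None]
    if not valid:
        raise ValueError("no guidance line was detected in this frame")
    return sum(valid) / len(valid)
```

If the crop line jumps to 4.5 degrees while the ridge and drip belt lines report 0.5 and 1.0 degrees, `fused_heading({"ridge": 0.5, "crop": 4.5, "drip_belt": 1.0})` gives 2.0 degrees, so a single outlier is damped before it reaches the steering controller. When the drip belt is absent, passing `None` for it averages the remaining two lines.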

- During the spraying test, setting the delay time of the sprayer according to the speed of the robot avoided ineffective spraying. In this study, when the robot speed was 12.5 cm/s, with a spraying time of about 0.5 s and a delay time of 2.5 s, the EWC and IWC reached 83% and 8%, respectively, and the pesticide reduction reached 53%. Similar results were presented in [57], with a 34.5% reduction in pesticide usage compared with traditional uniform spraying methods. As the speed of the robot increased, the EWC decreased and the IWC increased. At a speed of 35 cm/s, the average precision was too low, which indirectly resulted in a reduction in the number of pesticide applications. In addition, the unhealthy crops were about 7–12 cm in diameter. Even with an appropriate delay time set, some unhealthy crops or weeds could not be sprayed correctly.
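One simple way to relate the sprayer delay to the robot speed is to divide a fixed travel distance by the speed. The sketch below is an assumption for illustration only: the text does not state how the delay was computed, the 31.25 cm offset is merely back-calculated from the reported pair of 12.5 cm/s and 2.5 s, and detection latency is ignored. The function name `spray_delay_s` is hypothetical.

```python
def spray_delay_s(offset_cm, speed_cm_s):
    """Time for the robot to travel `offset_cm` at a constant `speed_cm_s`."""
    if speed_cm_s <= 0:
        raise ValueError("speed must be positive")
    return offset_cm / speed_cm_s


# Assumed offset between the detection point and the nozzle, back-calculated
# from the reported operating point (12.5 cm/s with a 2.5 s delay).
ASSUMED_OFFSET_CM = 12.5 * 2.5  # 31.25 cm
```

Under this assumption, `spray_delay_s(ASSUMED_OFFSET_CM, 12.5)` reproduces the 2.5 s delay reported for 12.5 cm/s, and doubling the speed to 25 cm/s would halve the delay to 1.25 s. A real system would also need to subtract the image processing time from the delay.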

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Spykman, O.; Gabriel, A.; Ptacek, M.; Gandorfer, M. Farmers’ perspectives on field crop robots—Evidence from Bavaria, Germany. Comput. Electron. Agric. 2021, 186, 106176.

- Wu, J.; Jin, Z.; Liu, A.; Yu, L.; Yang, F. A survey of learning-based control of robotic visual servoing systems. J. Franklin Inst. 2022, 359, 556–577.

- Kato, Y.; Morioka, K. Autonomous robot navigation system without grid maps based on double deep Q-Network and RTK-GNSS localization in outdoor environments. In Proceedings of the 2019 IEEE/SICE International Symposium on System Integration (SII), Paris, France, 14–16 January 2019; pp. 346–351.

- Galati, R.; Mantriota, G.; Reina, G. RoboNav: An affordable yet highly accurate navigation system for autonomous agricultural robots. Robotics 2022, 11, 99.

- Chien, J.C.; Chang, C.L.; Yu, C.C. Automated guided robot with backstepping sliding mode control and its path planning in strip farming. Int. J. iRobotics 2022, 5, 16–23.

- Zhang, L.; Zhang, R.; Li, L.; Ding, C.; Zhang, D.; Chen, L. Research on virtual Ackerman steering model based navigation system for tracked vehicles. Comput. Electron. Agric. 2022, 192, 106615.

- Tian, H.; Wang, T.; Liu, Y.; Qiao, X.; Li, Y. Computer vision technology in agricultural automation—A review. Inf. Process. Agric. 2020, 7, 1–19.

- Leemans, V.; Destain, M.F. Application of the Hough transform for seed row localisation using machine vision. Biosyst. Eng. 2006, 94, 325–336.

- Choi, K.H.; Han, S.K.; Han, S.H.; Park, K.-H.; Kim, K.-S.; Kim, S. Morphology-based guidance line extraction for an autonomous weeding robot in paddy fields. Comput. Electron. Agric. 2015, 113, 266–274.

- Zhou, X.; Zhang, X.; Zhao, R.; Chen, Y.; Liu, X. Navigation line extraction method for broad-leaved plants in the multi-period environments of the high-ridge cultivation mode. Agriculture 2023, 13, 1496.

- Suriyakoon, S.; Ruangpayoongsak, N. Leading point-based interrow robot guidance in corn fields. In Proceedings of the 2017 2nd International Conference on Control and Robotics Engineering (ICCRE), Bangkok, Thailand, 1–3 April 2017; pp. 8–12.

- Bonadiesa, S.; Gadsden, S.A. An overview of autonomous crop row navigation strategies for unmanned ground vehicles. Eng. Agric. Environ. Food 2019, 12, 24–31.

- Chen, J.; Qiang, H.; Wu, J.; Xu, G.; Wang, Z. Navigation path extraction for greenhouse cucumber-picking robots using the prediction-point Hough transform. Comput. Electron. Agric. 2021, 180, 105911.

- Ma, Z.; Tao, Z.; Du, X.; Yu, Y.; Wu, C. Automatic detection of crop root rows in paddy fields based on straight-line clustering algorithm and supervised learning method. Biosyst. Eng. 2021, 211, 63–76.

- Shi, J.; Bai, Y.; Diao, Z.; Zhou, J.; Yao, X.; Zhang, B. Row detection-based navigation and guidance for agricultural robots and autonomous vehicles in row-crop fields: Methods and applications. Agronomy 2023, 13, 1780.

- Zhang, S.; Wang, Y.; Zhu, Z.; Li, Z.; Du, Y.; Mao, E. Tractor path tracking control based on binocular vision. Inf. Process. Agric. 2018, 5, 422–432.

- Mavridou, E.; Vrochidou, E.; Papakostas, G.A.; Pachidis, T.; Kaburlasos, V.G. Machine vision systems in precision agriculture for crop farming. J. Imaging 2019, 5, 89.

- Gu, Y.; Li, Z.; Zhang, Z.; Li, J.; Chen, L. Path tracking control of field information-collecting robot based on improved convolutional neural network algorithm. Sensors 2020, 20, 797.

- Pajares, G.; García-Santillán, I.; Campos, Y.; Montalvo, M.; Guerrero, J.M.; Emmi, L.; Romeo, J.; Guijarro, M.; González-de-Santos, P. Machine-vision systems selection for agricultural vehicles: A guide. J. Imaging 2016, 2, 34.

- de Silva, R.; Cielniak, G.; Gao, J. Towards agricultural autonomy: Crop row detection under varying field conditions using deep learning. arXiv 2021, arXiv:2109.08247.

- Hu, Y.; Huang, H. Extraction method for centerlines of crop row based on improved lightweight Yolov4. In Proceedings of the 2021 6th International Symposium on Computer and Information Processing Technology (ISCIPT), Changsha, China, 11–13 June 2021; pp. 127–132.

- Ruan, Z.; Chang, P.; Cui, S.; Luo, J.; Gao, R.; Su, Z. A precise crop row detection algorithm in complex farmland for unmanned agricultural machines. Biosyst. Eng. 2023, 232, 1–12.

- Ruigrok, T.; van Henten, E.; Booij, J.; van Boheemen, K.; Kootstra, G. Application-specific evaluation of a weed-detection algorithm for plant-specific spraying. Sensors 2020, 20, 7262.

- Hu, D.; Ma, C.; Tian, Z.; Shen, G.; Li, L. Rice Weed detection method on YOLOv4 convolutional neural network. In Proceedings of the 2021 International Conference on Artificial Intelligence, Big Data and Algorithms (CAIBDA), Xi’an, China, 28–30 May 2021; pp. 41–45.

- Chang, C.L.; Xie, B.X.; Chung, S.C. Mechanical control with a deep learning method for precise weeding on a farm. Agriculture 2021, 11, 1049.

- Wang, Q.; Cheng, M.; Huang, S.; Cai, Z.; Zhang, J.; Yuan, H. A deep learning approach incorporating YOLO v5 and attention mechanisms for field real-time detection of the invasive weed Solanum rostratum Dunal seedlings. Comput. Electron. Agric. 2022, 199, 107194.

- Chen, J.; Wang, H.; Zhang, H.; Luo, T.; Wei, D.; Long, T.; Wang, Z. Weed detection in sesame fields using a YOLO model with an enhanced attention mechanism and feature fusion. Comput. Electron. Agric. 2022, 202, 107412.

- Ruigrok, T.; van Henten, E.J.; Kootstra, G. Improved generalization of a plant-detection model for precision weed control. Comput. Electron. Agric. 2023, 204, 107554.

- Razfar, N.; True, J.; Bassiouny, R.; Venkatesh, V.; Kashef, R. Weed detection in soybean crops using custom lightweight deep learning models. J. Agric. Food Res. 2022, 8, 100308.

- Qiu, Q.; Fan, Z.; Meng, Z.; Zhang, Q.; Cong, Y.; Li, B.; Wang, N.; Zhao, C. Extended Ackerman steering principle for the co-ordinated movement control of a four wheel drive agricultural mobile robot. Comput. Electron. Agric. 2018, 152, 40–50.

- Bak, T.; Jakobsen, H. Agricultural robotic platform with four wheel steering for weed detection. Biosyst. Eng. 2004, 87, 125–136.

- Tu, X.; Gai, J.; Tang, L. Robust navigation control of a 4WD/4WS agricultural robotic vehicle. Comput. Electron. Agric. 2019, 164, 104892.

- Wang, D.; Qi, F. Trajectory planning for a four-wheel-steering vehicle. In Proceedings of the 2001 ICRA. IEEE International Conference on Robotics and Automation, Seoul, Republic of Korea, 21–26 May 2001; Volume 4, pp. 3320–3325.

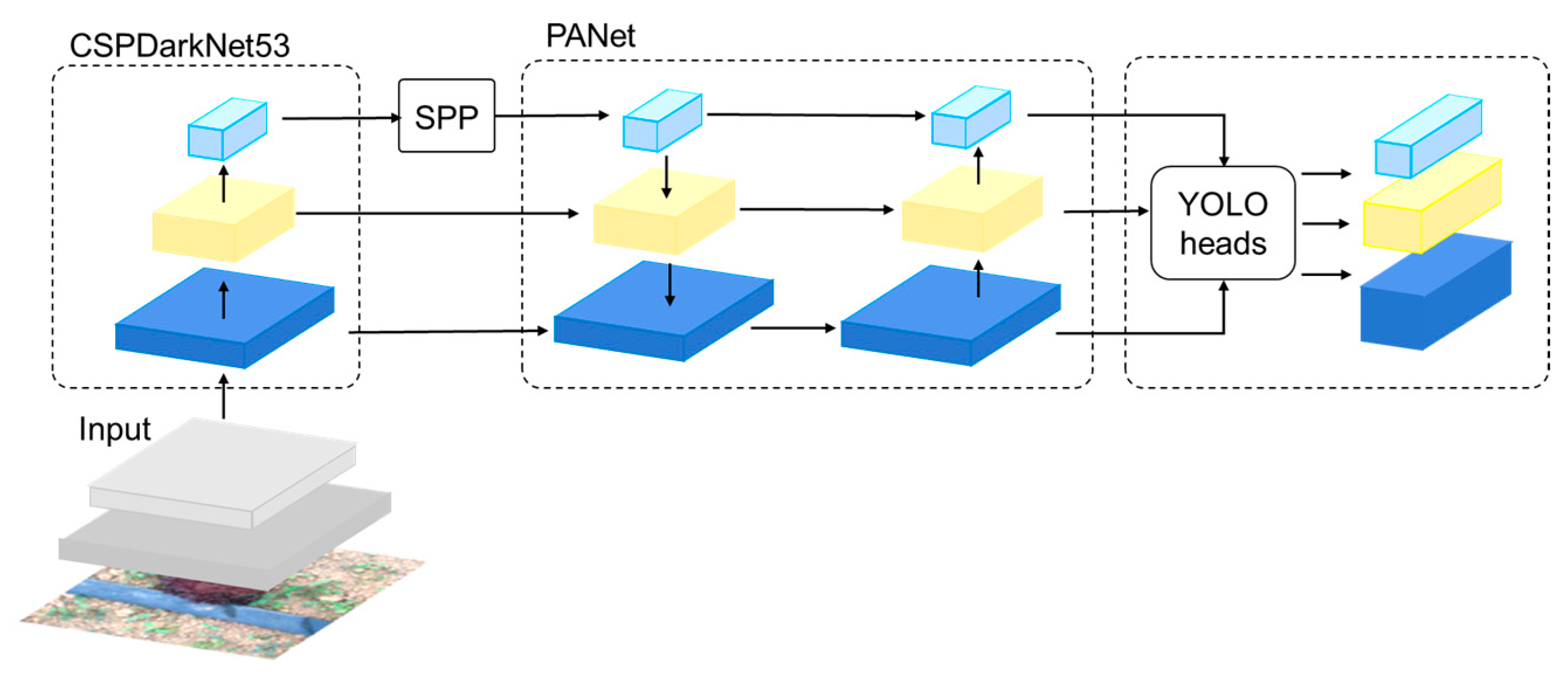

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A new backbone that can enhance learning capability of CNN. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020; pp. 1571–1580.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Zhang, Z.; He, T.; Zhang, H.; Zhang, Z.; Xie, J.; Li, M. Bag of freebies for training object detection neural networks. arXiv 2019, arXiv:1902.04103.

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU loss: Faster and better learning for bounding box regression. AAAI Tech. Track Vis. 2020, 34, 12993–13000.

- Roy, A.M.; Bhaduri, J. Real-time growth stage detection model for high degree of occultation using DenseNet-fused YOLOv4. Comput. Electron. Agric. 2022, 193, 106694.

- Chang, C.L.; Chen, H.W. Straight-line generation approach using deep learning for mobile robot guidance in lettuce fields. In Proceedings of the 2023 9th International Conference on Applied System Innovation (ICASI), Chiba, Japan, 21–25 April 2023.

- Lee, C.C. Fuzzy logic in control system: Fuzzy logic controller. IEEE Trans. Syst. Man Cybern. Syst. 1990, 20, 404–418.

- Yu, C.C.; Tsen, Y.W.; Chang, C.L. Modeled Carrier. TW Patent No. I706715, 11 October 2020.

- Bennett, P. The NMEA FAQ (Fragen und Antworten zu NMEA), Ver. 6.1; September 1997. Available online: https://www.geocities.ws/lrfernandes/gps_project/Appendix_E_NMEA_FAQ.pdf (accessed on 30 January 2023).

- Shih, P.T.-Y. TWD97 and WGS84, datum or map projection? J. Cadastr. Surv. 2020, 39, 1–12.

- Lee, J.; Hwang, K.I. YOLO with adaptive frame control for real-time object detection applications. Multimed. Tools Appl. 2022, 81, 36375–36396.

- Hasan, R.I.; Yusuf, S.M.; Alzubaidi, L. Review of the state of the art of deep learning for plant diseases: A broad analysis and discussion. Plants 2020, 9, 1302.

- Arsenovic, M.; Karanovic, M.; Sladojevic, S.; Anderla, A.; Stefanovic, D. Solving current limitations of deep learning based approaches for plant disease detection. Symmetry 2019, 11, 939.

- Zhang, Y.; Chen, H.; He, Y.; Ye, M.; Cai, X.; Zhang, D. Road segmentation for all-day outdoor robot navigation. Neurocomputing 2018, 314, 316–325.

- Liu, J.; Wang, X. Plant diseases and pests detection based on deep learning: A review. Plant Methods 2021, 17, 22–35.

- Jiao, L.; Zhang, R.; Liu, F.; Yang, S.; Hou, B.; Li, L.; Tang, X. New generation deep learning for video object detection: A survey. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 3195–3215.

- Li, D.; Wang, R.; Xie, C.; Liu, L.; Zhang, J.; Li, R.; Wang, F.; Zhou, M.; Liu, W. A recognition method for rice plant diseases and pests video detection based on deep convolutional neural network. Sensors 2020, 20, 578.

- Altalak, M.; Ammad uddin, M.; Alajmi, A.; Rizg, A. Smart agriculture applications using deep learning technologies: A survey. Appl. Sci. 2022, 12, 5919.

- Chang, C.L.; Chen, H.W.; Chen, Y.H.; Yu, C.C. Drip-tape-following approach based on machine vision for a two-wheeled robot trailer in strip farming. Agriculture 2022, 12, 428.

- del Rey, J.C.; Vega, J.A.; Pérez-Ruiz, M.; Emmi, L. Comparison of positional accuracy between RTK and RTX GNSS based on the autonomous agricultural vehicles under field conditions. Appl. Eng. Agric. 2014, 30, 361–366.

- Han, J.H.; Park, C.H.; Park, Y.J.; Kwon, J.H. Preliminary results of the development of a single-frequency GNSS RTK-based autonomous driving system for a speed sprayer. J. Sens. 2019, 2019, 4687819.

- Gonzalez-de-Soto, M.; Emmi, L.; Perez-Ruiz, M.; Aguera, J.; Gonzalez-de-Santos, P. Autonomous systems for precise spraying—Evaluation of a robotised patch sprayer. Biosyst. Eng. 2016, 146, 165–182.

| Variable | Role | Linguistic label | Membership function | Crisp interval |
|---|---|---|---|---|
| Heading angle (θ) | Input | LO | Ladder | [−100, −100, −20, 0] |
| Heading angle (θ) | Input | M | Triangular | [−10, 0, 10] |
| Heading angle (θ) | Input | RO | Ladder | [0, 20, 100, 100] |
| Rate of change of the heading angle (θ̇) | Input | N | Ladder | [−100, −100, −25, 0] |
| Rate of change of the heading angle (θ̇) | Input | Z | Triangular | [−20, 0, 20] |
| Rate of change of the heading angle (θ̇) | Input | P | Ladder | [0, 25, 100, 100] |
| Steering angle (δ) | Output | L | Ladder | [−17, −17, −7, 0] |
| Steering angle (δ) | Output | M | Triangular | [−5, 0, 5] |
| Steering angle (δ) | Output | R | Ladder | [0, 7, 17, 17] |


| Description | Value or Other Details |
|---|---|
| Mechanism body | |
| Length | 2.4 m |
| Width | 1.06 m |
| Height | 2 m |
| Wheel diameter | 0.65 m |
| Maximum weight | 440 kg |
| Drive components | |
| Drive method | 4 WS/4 WD |
| Maximum speed | 1 km/h |
| Motors (input voltage, gear ratio, torque) | DC 24 V, 1/360, 0.8 N·m |
| Linear electric actuator (input voltage, gear ratio, torque) | DC 24 V, 1/5, 0.64 N·m |
| Battery (voltage, capacity) | DC 24 V, 30 Ah |
| Sprayer | |
| Electromagnetic valve (voltage, pressure) | DC 12 V, 0~10 kg/cm² |
| Pump (input voltage, power, pressure, volumetric flow rate) | DC 24 V, 70 W, 1.0 MPa, 4.5 L/min |
| Copper nozzle (diameter) | 1 mm/0.1 mm |
| Water container | 20 L |
| Electronics | |
| GNSS receiver (voltage, accuracy, band) | 3.3/5 V, 0.01 m–2.5 m, L1/L2C |
| Antennas (input voltage, signal type, noise figure, type, connector) | 3.3 V–18 V, GPS/GLONASS/Galileo/BeiDou, 2.5 dB, active, TNC |
| Camera (maximum resolution, megapixels, focus type, FoV, connection type) | 4K/30 FPS, 13, autofocus, 90 degrees, USB |

| Name of Model | Type of Object | Evaluation Metric (%) | Sunny, 9:00~11:00 a.m. | Sunny, 12:00~2:00 p.m. | Sunny, 3:00~5:00 p.m. | Partly Sunny, 9:00~11:00 a.m. | Partly Sunny, 12:00~2:00 p.m. | Partly Sunny, 3:00~5:00 p.m. | Cloudy, 9:00~11:00 a.m. | Cloudy, 12:00~2:00 p.m. | Cloudy, 3:00~5:00 p.m. |
|---|---|---|---|---|---|---|---|---|---|---|---|
| DetModel #1 | Drip irrigation belt | PR | 98.1 ± 0.1 | 94.3 ± 0.7 | 98.7 ± 0.9 | 97.3 ± 0.9 | 94.3 ± 0.9 | 97.2 ± 0.9 | 97.2 ± 0.3 | 95.1 ± 0.4 | 96.4 ± 0.7 |
| DetModel #1 | Drip irrigation belt | Recall | 96.2 ± 0.5 | 94.3 ± 0.8 | 97.4 ± 0.9 | 95.1 ± 0.8 | 94.1 ± 1.1 | 96.6 ± 0.7 | 96.1 ± 0.5 | 93.7 ± 0.6 | 94.7 ± 0.6 |
| DetModel #1 | Drip irrigation belt | F1 score | 97.1 ± 0.1 | 94.3 ± 0.7 | 98.0 ± 0.9 | 96.2 ± 0.8 | 94.2 ± 0.9 | 96.9 ± 0.8 | 96.6 ± 0.4 | 94.4 ± 0.5 | 95.5 ± 0.6 |
| DetModel #1 | Crop | PR | 97.8 ± 0.2 | 93.2 ± 0.5 | 97.6 ± 0.1 | 97.8 ± 0.9 | 94.3 ± 1.1 | 97.1 ± 1.0 | 97.8 ± 0.7 | 95.0 ± 0.8 | 97.4 ± 0.3 |
| DetModel #1 | Crop | Recall | 96.1 ± 0.1 | 93.1 ± 0.7 | 96.9 ± 0.4 | 96.8 ± 0.6 | 94.6 ± 0.9 | 96.7 ± 0.8 | 96.1 ± 0.6 | 94.1 ± 0.6 | 96.3 ± 0.3 |
| DetModel #1 | Crop | F1 score | 96.9 ± 0.1 | 93.1 ± 0.6 | 97.2 ± 0.3 | 97.3 ± 0.7 | 94.4 ± 1.0 | 96.9 ± 0.9 | 96.9 ± 0.6 | 94.5 ± 0.7 | 96.8 ± 0.3 |
| DetModel #1 | Ridge | PR | 95.3 ± 0.2 | 93.4 ± 0.8 | 97.1 ± 0.9 | 95.2 ± 1.1 | 94.3 ± 1.2 | 96.9 ± 1.1 | 94.8 ± 0.3 | 94.2 ± 0.9 | 93.2 ± 1.2 |
| DetModel #1 | Ridge | Recall | 96.1 ± 0.4 | 95.1 ± 0.7 | 96.1 ± 0.6 | 94.7 ± 0.7 | 94.4 ± 0.1 | 96.8 ± 0.8 | 94.1 ± 0.2 | 94.2 ± 0.7 | 93.3 ± 0.2 |
| DetModel #1 | Ridge | F1 score | 95.7 ± 0.3 | 94.2 ± 0.7 | 96.6 ± 0.7 | 94.9 ± 0.9 | 94.3 ± 0.2 | 97.2 ± 0.9 | 94.4 ± 0.2 | 94.2 ± 0.7 | 93.2 ± 0.3 |
| DetModel #2 | Unhealthy crop | PR | 90.3 ± 2.0 | 88.3 ± 2.2 | 91.2 ± 1.8 | 90.1 ± 0.5 | 87.8 ± 1.2 | 90.8 ± 1.2 | 90.2 ± 1.1 | 88.4 ± 1.8 | 85.1 ± 1.4 |
| DetModel #2 | Unhealthy crop | Recall | 81.3 ± 0.1 | 82.4 ± 0.1 | 82.4 ± 0.1 | 83.1 ± 0.4 | 83.2 ± 0.9 | 82.2 ± 0.9 | 81.1 ± 1.0 | 81.3 ± 1.2 | 80.3 ± 1.1 |
| DetModel #2 | Unhealthy crop | F1 score | 85.6 ± 0.2 | 85.2 ± 0.2 | 86.4 ± 0.2 | 86.5 ± 0.4 | 85.4 ± 0.9 | 86.3 ± 0.9 | 85.4 ± 1.1 | 84.7 ± 1.3 | 82.6 ± 1.2 |
| DetModel #2 | Weed | PR | 92.1 ± 1.1 | 88.3 ± 1.5 | 90.1 ± 1.8 | 89.7 ± 1.4 | 90.8 ± 1.2 | 90.2 ± 1.9 | 90.2 ± 1.1 | 88.7 ± 1.2 | 86.6 ± 1.5 |
| DetModel #2 | Weed | Recall | 84.3 ± 0.1 | 81.2 ± 1.1 | 82.4 ± 1.6 | 83.4 ± 1.3 | 84.1 ± 1.1 | 82.4 ± 1.6 | 83.3 ± 0.9 | 82.4 ± 1.0 | 80.3 ± 1.1 |
| DetModel #2 | Weed | F1 score | 88.0 ± 0.2 | 84.6 ± 1.1 | 86.1 ± 1.6 | 86.4 ± 1.3 | 87.3 ± 1.1 | 86.1 ± 1.7 | 86.6 ± 0.9 | 85.4 ± 1.1 | 83.3 ± 1.1 |
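The F1 scores in the table above are consistent with the standard harmonic mean of precision (PR) and recall. The short check below assumes that standard definition, which this excerpt does not state explicitly; the function name `f1_score` is illustrative.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both in the same units, e.g. %)."""
    if precision + recall == 0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


# Mean PR and recall for the drip irrigation belt, sunny, 9:00~11:00 a.m.
drip_belt_f1 = round(f1_score(98.1, 96.2), 1)
```

Here `drip_belt_f1` evaluates to 97.1, matching the tabulated F1 score for that condition. The same calculation for the unhealthy crop class in that time slot (PR 90.3, recall 81.3) gives 85.6, which also matches.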

| Speed | EWC (%) | IWC (%) | Pesticide Reduction (%) |
|---|---|---|---|
| 12.5 cm/s | 82.9 ± 1.9 | 8.4 ± 0.9 | 53.2 ± 2.9 |
| 19 cm/s | 73.0 ± 2.8 | 15.2 ± 1.9 | 36.1 ± 3.6 |
| 24 cm/s | 73.3 ± 5.3 | 19.4 ± 4.2 | 30.7 ± 3.6 |
| 35 cm/s | 23.7 ± 5.3 | 27.3 ± 5.2 | 63.4 ± 5.8 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chang, C.-L.; Chen, H.-W.; Ke, J.-Y. Robust Guidance and Selective Spraying Based on Deep Learning for an Advanced Four-Wheeled Farming Robot. Agriculture 2024, 14, 57. https://doi.org/10.3390/agriculture14010057
