1. Introduction

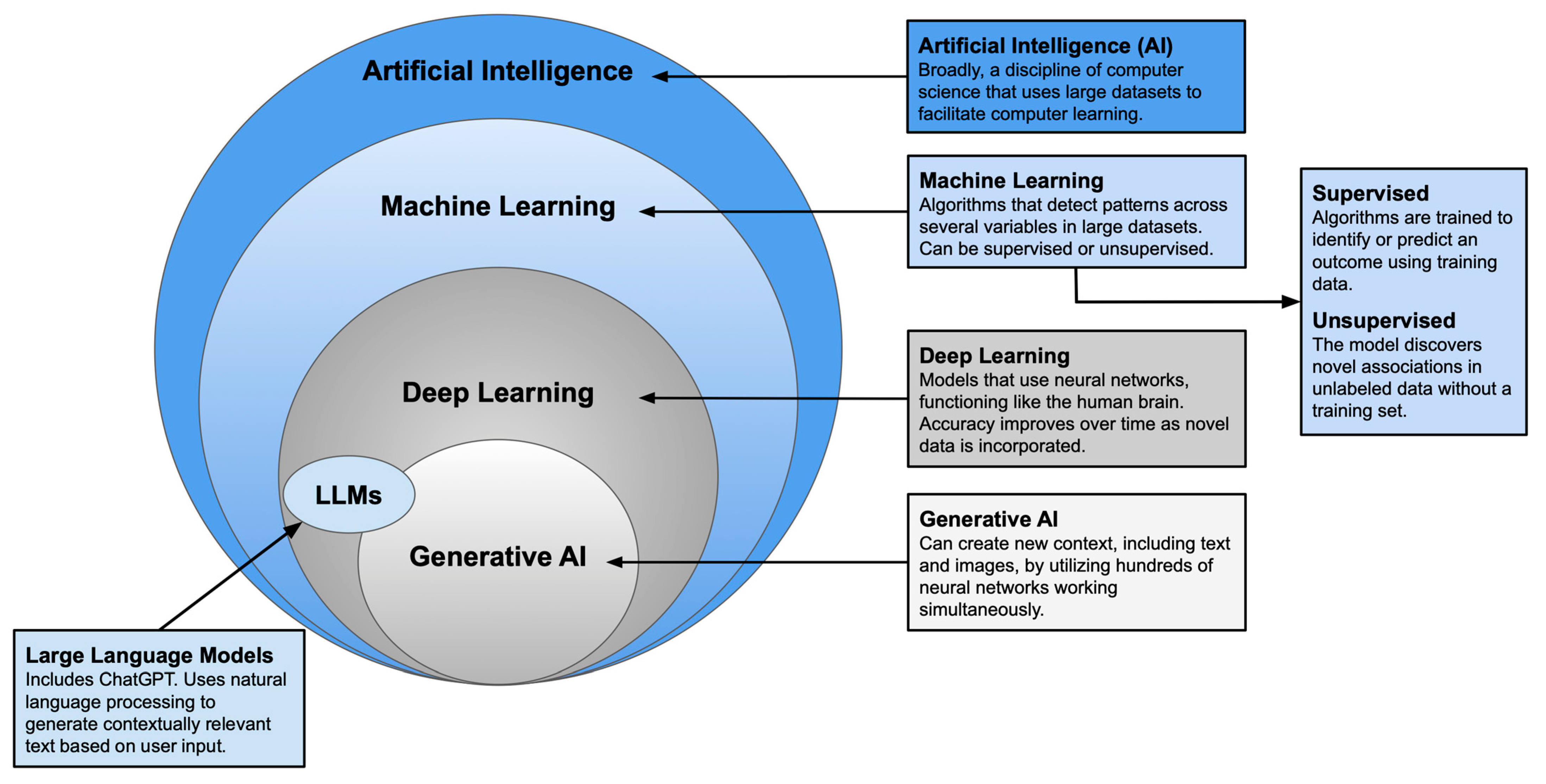

Plastic surgery has long been defined by innovation and adaptability. As established techniques give way to newer approaches, plastic surgeons remain at the forefront of medical advancement. Artificial intelligence (AI), an umbrella term encompassing various modes of computer learning, represents one of the most transformative developments in recent years. With tools such as machine learning, neural networks, and large language models, surgeons can now augment clinical judgment with data-driven insights to guide decision-making [1,2] (see Figure 1). AI has shown particular promise in complex procedures with specific esthetic goals [3,4], leading to its growing application in breast reconstruction.

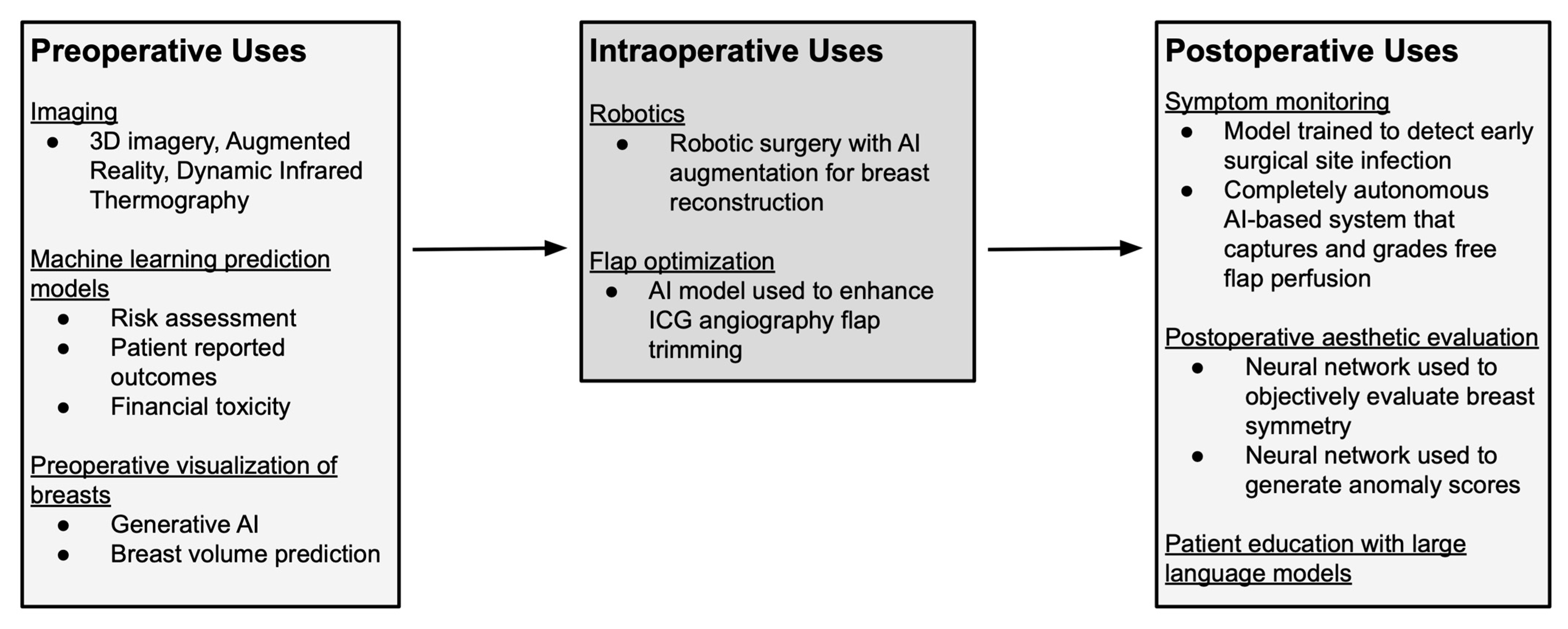

Breast reconstruction is an important aspect of breast cancer care, with effects on both physical recovery and psychological well-being following mastectomy [5]. Over the past decade, AI has been incorporated into multiple stages of reconstructive care, including preoperative image assessment, risk assessment, and patient-reported outcome prediction [3,4] (see Table 1 and Figure 2). More recently, the emergence of conversational AI chatbots has prompted investigations into the accuracy and quality of AI-generated medical guidance [6]. As technological capabilities continue to expand, it is essential to examine how plastic and reconstructive surgery can evolve in parallel with these innovations.

This narrative review provides an overview of the current applications of AI in breast reconstruction, emphasizing its role in improving surgical precision, optimizing patient-centered outcomes, and identifying opportunities for future development.

Generative AI, and Large Language Models [1,2].

2. AI in Preoperative Surgical Planning

2.1. Imaging

Preoperative computed tomography angiography (CTA) is the gold standard for evaluating vascular anatomy in autologous breast reconstruction. Preoperative identification of perforators in deep inferior epigastric artery perforator (DIEP) flap reconstruction enhances surgical efficiency [7]. Several technological advances now facilitate CTA interpretation and perforator mapping, with the aim of further reducing operative time and improving outcomes [3,4].

The process of mapping vascular anatomy during presurgical planning is time-consuming, requiring slice-by-slice reconstruction and manual identification of each perforating vessel by technicians and radiologists. Mavioso et al. explored whether algorithms could be used to enhance this process [8]. They compared the quality and time required for perforator identification between a traditional imaging team and an image-processing algorithm, verified against vessels found intraoperatively. The algorithm’s performance was comparable to the traditional method; it adequately identified and characterized the perforators in significantly less time, showing some benefit over operator-dependent analysis [8].

In addition to the time required to interpret the imaging, another limitation of CTA is the inability to visualize perforators in three dimensions. To address this gap, Cevik and Rosen described a novel use of generative software to render 3D images of the deep inferior epigastric artery (DIEA) and the perforators [9]. This approach offers insight into the artery’s path within a three-dimensional framework. Seth et al. expanded on this approach and used augmented reality (AR) to visualize the intra- and extramuscular perforator courses of the DIEA to enhance surgical precision [10]. AR overlays digital information (such as imaging) onto the user’s view of the real world, allowing for increased interactivity and immersion that is not possible with traditional imaging alone. Berger et al. evaluated an AR tool that superimposes magnetic resonance angiography (MRA) scans onto the patient in real time [11]. First, MRA images were obtained and uploaded to the augmented reality software. Then, the surgeon used the AR goggles to align the processed image data with the patient’s body. They found that the MRA AR projection closely correlated with the actual vessel location on Doppler ultrasound. Three-dimensional imagery and AR have the potential to enhance the surgeon’s understanding of individual anatomy, but the technology is in its early stages and will require additional modifications before it can be adopted on a larger scale.

Dynamic infrared thermography (DIRT) is a noninvasive technology that uses heat mapping to quickly identify perforators for reconstructive planning [12]. After establishing a baseline by cooling the abdomen, thermal imaging is used to localize hotspots correlating with areas of optimal perfusion. After two and a half minutes, these areas are marked as probable perforators. Meier et al. integrated DIRT technology with AR software to improve vessel identification in patients undergoing DIEP flap reconstruction [12]. In their study, the AR device was equipped with a thermal camera, projecting a color-coded heat map onto the patient’s abdomen in real time. They found that most DIRT-identified perforators were also identified on Doppler and CTA, and DIRT performed similarly to CTA when correlating vessels intraoperatively [12]. Although the technology is still being perfected, DIRT has the potential to optimize presurgical planning by reducing imaging time and eliminating the need for radiation and contrast exposure [12].
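The cooling-and-rewarming logic behind DIRT can be illustrated with a minimal sketch. It assumes two thermal frames (one just after cooling, one after rewarming) stored as nested lists of temperatures in degrees Celsius. The function name `find_hotspots`, the 1.5 °C threshold, and the sample values are hypothetical choices for illustration and are not taken from Meier et al.

```python
def find_hotspots(cooled, rewarmed, min_rise_c=1.5):
    """Return (row, col) pixels whose temperature rose by at least min_rise_c."""
    hotspots = []
    for r, (row_cold, row_warm) in enumerate(zip(cooled, rewarmed)):
        for c, (t_cold, t_warm) in enumerate(zip(row_cold, row_warm)):
            # Skin over a perforator rewarms faster than the surrounding skin.
            if t_warm - t_cold >= min_rise_c:
                hotspots.append((r, c))
    return hotspots


# Hypothetical 3x3 thermal frames (degrees Celsius).
cooled = [
    [28.0, 28.1, 28.0],
    [28.2, 28.0, 28.1],
    [28.0, 28.1, 28.0],
]
rewarmed = [
    [28.4, 28.5, 28.3],
    [28.6, 30.2, 28.5],
    [28.3, 28.6, 30.0],
]

print(find_hotspots(cooled, rewarmed))  # [(1, 1), (2, 2)]
```

A clinical system would work on calibrated camera frames and would likely cluster neighboring pixels into single perforator candidates; the fixed threshold here is only a stand-in for that step.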

2.2. Preoperative Risk Assessment

Machine learning (ML), a subset of AI, is particularly well-suited for pattern recognition. By analyzing large datasets, ML models can independently predict outcomes with high accuracy [1]. Traditionally, risk calculators have relied on multivariate statistical models, but these tools are limited in their ability to account for the complex interplay between patient demographics, clinical and oncologic factors, and surgeon-specific choices [13]. In contrast, ML models can leverage nonlinear relationships among numerous variables to more accurately estimate complication risk. Importantly, as additional data are incorporated, these models continuously adapt and improve their predictive performance [1]. Recent studies have applied ML to predict a wide range of postoperative complications in both autologous and implant-based breast reconstruction.

In implant-based breast reconstruction, Bavaro et al. developed a model to estimate the risk of capsular contracture in patients undergoing immediate implant reconstruction followed by postoperative radiotherapy [14]. Chen et al. similarly created a model to predict capsular contracture in patients undergoing two-stage breast reconstruction [15]. ML has also been used to predict mastectomy skin flap necrosis (MSFN), with Hassan et al. identifying key risk factors and developing a model that achieved a mean predictive accuracy of 89% [16]. In addition to these studies, Chen et al. developed a machine learning model to estimate the risk of complications following tissue expander (TE) placement [13]. The model not only identified the key risk factors for TE loss, infection, and seroma, but also ranked them according to their relative contribution to each outcome. Building on these findings, the authors created a clinical prediction app using the highest-performing models, providing surgeons with a user-friendly tool to input patient-specific data and generate individualized risk scores in the preoperative setting [13].

ML models have also been applied to predict postoperative complications in the autologous breast reconstruction population. O’Neil et al. developed a machine learning model that predicted DIEP flap failure with 95% accuracy [17]. While their multivariate analysis was unable to determine predictors of flap failure, the ML model successfully recognized clinical patterns associated with increased risk, highlighting the advantages of ML over conventional statistical methods [17]. Beyond flap failure, readmission remains a significant challenge following DIEP flap reconstruction. Using the National Surgical Quality Improvement Program (NSQIP) database, Ozmen et al. created a model that predicted readmission with 89% accuracy [18]. Similarly, Gabay et al. used NSQIP data to assess short-term reoperation and readmission rates in both autologous and alloplastic breast reconstruction. Their analysis demonstrated that ML algorithms outperformed traditional logistic regression, further underscoring the utility of ML for risk prediction [19].

As ML models evolve and enter clinical practice, they hold significant potential to refine surgical risk counseling by providing personalized, data-driven outcome predictions. With continued refinement, the clinical factors included in these models (such as preoperative BMI, age, smoking status, tumor pathology, radiation details, incision length, mastectomy weight, lymph node harvest, and reconstruction type) are becoming increasingly specific. ML algorithms can synthesize these variables to identify patterns and generate individualized risk profiles. However, most current models remain preliminary and lack validation in larger cohorts. Recently, Meyer et al. demonstrated that their ML model predicting nipple-areolar complex necrosis retained its accuracy in a larger dataset [20], supporting the validity and generalizability of this approach. Looking forward, user-friendly ML calculators will likely be integrated into preoperative counseling, enhancing discussions on risk optimization and expected outcomes.

2.3. Patient-Reported Outcome Prediction

In addition to risk estimation, ML models can also be used to preoperatively predict patient-reported outcomes (PROs). In breast surgery, PROs are most commonly assessed with the BREAST-Q [21,22]. Using the BREAST-Q, Pfob et al. developed and validated machine learning algorithms to predict individual satisfaction with breasts at 2-year follow-up, with the goal of better informing reconstructive decision-making after cancer-related mastectomy [23]. The models were developed and validated using separate patient cohorts, and the best-performing model achieved an area under the receiver operating characteristic curve (AUC) of 0.87. Notably, Pfob and colleagues were able to show that the drivers of short- and long-term satisfaction with breasts were distinct, enhancing our understanding of how PROs change over time [23]. The same authors conducted a similar analysis in 2023 focused on breast satisfaction at 1-year follow-up and found that 30% of women in the cohort experienced a clinically meaningful reduction in breast satisfaction [24]. They postulated that if these women had been identified using the predictive ML model, a different reconstruction option would have been recommended, and the likelihood of achieving optimal breast satisfaction would have increased substantially [24].
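Because several of the studies in this section report performance as an AUC, a short worked example may help with interpretation. The sketch below computes the AUC as the probability that a randomly chosen patient with the outcome receives a higher predicted score than a randomly chosen patient without it. The scores and labels are invented for illustration and do not come from any cited study.

```python
def auc(scores, labels):
    """AUC as the chance that a random positive case outranks a random negative case."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # positive case ranked above negative case
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(pos) * len(neg))


# Hypothetical predicted probabilities and observed outcomes (1 = outcome occurred).
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2, 0.1]
labels = [1, 1, 0, 1, 0, 1, 0, 0]

print(auc(scores, labels))  # 0.8125
```

An AUC of 0.5 corresponds to chance-level ranking and 1.0 to perfect ranking, so the value says how well a model orders patients by risk rather than how often it is correct.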

In addition to breast satisfaction, the BREAST-Q also assesses physical, sexual, and psychosocial well-being, providing broader insight into quality of life. A large multi-institutional study of eleven sites across North America expanded on this approach by developing and externally validating ML models to predict clinically meaningful changes in physical, sexual, and psychosocial well-being at 2 years [25]. Using data from over 1400 patients, the algorithms demonstrated acceptable predictive accuracy (AUCs 0.64–0.82 across domains), with baseline PROs again exerting the greatest influence on model performance. These findings highlight the potential of ML to forecast long-term quality-of-life outcomes and to support shared decision-making in postmastectomy breast reconstruction.

More recently, Chen et al. advanced this work by demonstrating that their ML models could predict outcomes across five distinct BREAST-Q domains [26]. The models were trained on a larger patient cohort that incorporated multiple pre-existing comorbidities. With these enhancements, the algorithms outperformed earlier models focused on breast satisfaction, physical well-being, and sexual well-being by a significant margin. These findings underscore that incorporating larger and more diverse patient datasets into ML models improves predictive accuracy and allows the algorithms to evolve over time, better capturing patient experiences.

2.4. Financial Toxicity

Beyond the physical appearance of breasts after reconstruction, the financial burden of cancer treatment is an important outcome to consider. Financial toxicity refers to the material, psychological, and social burden caused by treatment-related out-of-pocket healthcare costs [27]. Patients undergoing cancer therapy experience disproportionately high rates of financial toxicity, yet until recently, it was unclear which factors most strongly contributed to this burden. To address this gap, Sidey-Gibbons et al. applied ML models to study financial toxicity [28]. They found that patients receiving autologous breast reconstruction and chemotherapy faced the greatest financial strain and were more likely to adopt maladaptive coping strategies, such as skipping appointments, rationing medications, or cutting back on nonmedical spending. With this ML approach, surgeons can now identify patients at greatest risk for financial toxicity and proactively connect them with supportive resources.

3. Intraoperative AI Applications

3.1. Robotics

Robotic-assisted surgery (RAS) has revolutionized several surgical specialties, helping to minimize patient morbidity, optimize precision, and enhance intraoperative visualization [29]. Within plastic surgery, there has been a steady increase in RAS use, including in breast reconstruction, over the past decade. The main advantages of robotic systems are high-definition 3D visualization, tremor reduction, and motion scaling that enables precise micromovements. When combined with AI, these systems can further support real-time tissue identification, recognition of anatomical landmarks, and personalized surgical guidance informed by preoperative planning data [30]. The use of robotics with AI/AR augmentation in breast reconstruction remains largely conceptual; however, implementation of computer vision offers potential to enhance surgical techniques, anatomical visualization, and intraoperative navigation.

3.2. Flap Optimization

Indocyanine green fluorescence angiography (ICGFA), a tool that uses fluorescence to visualize tissue perfusion intraoperatively, has helped reduce perfusion-related complications after DIEP flap reconstruction [31]. Despite its utility, ICGFA is inherently subjective, making it difficult to determine the exact amount of flap debridement required to optimize surgical outcomes. Ongoing efforts have shown early success in developing high-fidelity AI models using ICGFA to assist in flap trimming decisions intraoperatively. Singaravelu et al. developed an ensemble subspace k-nearest neighbor model that was able to predict the optimal excision area with 99.3% accuracy [32]. This study offers a proof of concept that AI can assist with intraoperative decision-making.
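For readers unfamiliar with the term, an ensemble subspace k-nearest neighbor classifier trains several k-nearest neighbor learners, each on a random subset of the input features, and combines their votes. The sketch below illustrates that general technique in plain Python. It is not the implementation used by Singaravelu et al.; the three perfusion features, the "keep"/"excise" labels, and all numeric values are hypothetical.

```python
import random
from collections import Counter


def knn_predict(train_x, train_y, query, features, k=3):
    """Majority label among the k nearest training points, using only `features`."""
    def dist(a, b):
        return sum((a[f] - b[f]) ** 2 for f in features)

    nearest = sorted(range(len(train_x)), key=lambda i: dist(train_x[i], query))[:k]
    return Counter(train_y[i] for i in nearest).most_common(1)[0][0]


def subspace_knn_predict(train_x, train_y, query,
                         n_learners=5, subspace_size=2, k=3, seed=0):
    """Random-subspace ensemble: each learner sees a random subset of features."""
    rng = random.Random(seed)
    n_features = len(train_x[0])
    votes = []
    for _ in range(n_learners):
        features = rng.sample(range(n_features), subspace_size)
        votes.append(knn_predict(train_x, train_y, query, features, k))
    return Counter(votes).most_common(1)[0][0]


# Hypothetical normalized perfusion features per flap region.
train_x = [
    [0.90, 0.80, 0.85], [0.80, 0.90, 0.90], [0.85, 0.75, 0.80],  # well perfused
    [0.20, 0.10, 0.15], [0.10, 0.20, 0.10], [0.15, 0.25, 0.20],  # poorly perfused
]
train_y = ["keep", "keep", "keep", "excise", "excise", "excise"]

print(subspace_knn_predict(train_x, train_y, [0.82, 0.78, 0.88]))  # keep
print(subspace_knn_predict(train_x, train_y, [0.12, 0.18, 0.12]))  # excise
```

Giving each learner a different feature subset makes the ensemble less sensitive to any single noisy measurement, which is the usual motivation for the subspace approach.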

4. AI Use in Postoperative Care

4.1. Symptom Monitoring

In the postoperative period, prompt evaluation of potential complications is critical for optimal treatment and improved outcomes. Remote symptom monitoring has decreased the need for in-person appointments, but consequently, physicians are receiving an increasing number of postoperative wound images submitted through online portals. Reviewing these images generates significant clinician workload, indicating a need to automate this process. In an effort to reduce clinician burden, Muaddi et al. developed a model trained to detect surgical incisions and early surgical site infections (SSIs) [33]. The model achieved modest predictive accuracy for identifying SSIs, suggesting this tool is best suited as a triaging modality that supports a surgeon’s clinical judgment.

Similarly, frequent free flap monitoring in the early postoperative period is essential for timely detection of complications, but repeated physical exams are time-intensive and place added strain on already understaffed healthcare teams. Kim et al. created an automated AI-based flap monitoring system, using images taken with a smartphone, to reduce the need for human intervention [34]. First, they built a deep learning model capable of detecting abnormalities in flap perfusion. Then, they integrated the model into a clinically applicable system. Smartphones were installed in places where the flap could be observed and were programmed to take pictures at regular intervals. If the system detected an abnormality, medical staff were alerted directly to investigate. This system is the first to be fully automated, functioning independently without the need for additional verification of its findings. When tested in the clinical setting, the system performed reliably and smoothly. As these systems become more advanced, they may achieve more precise detection of changes in color and temperature and could potentially lead to improved flap salvage rates.

4.2. LLM Utilization for Patient Education

When preparing for the postoperative period, patients often need clear, reliable guidance on recovery timelines, red-flag symptoms, and drain care. In settings where immediate physician input is unavailable, investigators have examined whether large language models (LLMs) can deliver reliable medical information. LLMs, delivered as text chatbots or avatar-based videobots, can translate complex information into plain language and capture questions between visits. In 2023, Liu et al. compared ChatGPT responses to medical questions with standard Google search results, assessing for readability and reliability; five experienced breast surgeons judged ChatGPT superior in accuracy and quality, yet 40% were reluctant to endorse either platform for patient education [35]. In a separate analysis addressing breast implant–associated anaplastic large cell lymphoma and breast implant illness, the same group again found ChatGPT’s answers more comprehensible than Google’s but noted instances of inaccessible citations [36].

In 2024, Kenig et al. found that patients are comfortable with AI involvement in their care when used alongside a clinician, with trust dropping when the surgeon was absent [37]. In a study by Kim et al., patients found breast reconstruction counseling videobots to be more engaging but no more effective than chatbots for usability/accuracy, and patients still viewed both as supplements rather than replacements for surgeon counseling [6]. Collectively, these findings suggest that LLMs may serve as adjuncts to patient education, but their use should be accompanied by clinician oversight and transparent source attribution.

Early iterations of LLMs, including ChatGPT, were criticized for “citation hallucination”, i.e., the generation of fabricated or unverifiable references. This behavior reflects the probabilistic, next-token nature of LLMs rather than retrieval from curated bibliographic databases; when a specific source is not represented in the training data or retrieval context, the model may synthesize a plausible-seeming citation to satisfy the prompt [38]. Although citation handling has improved, the risk of spurious or inaccessible references persists. Nevertheless, LLMs can provide accurate, readable explanations and adjust the complexity of information to match patient health literacy. Accordingly, LLM outputs should be used as adjuncts to education, valuable for helping patients formulate questions, provided that all medical guidance derived from LLMs is verified by a qualified clinician.

5. AI in Esthetic Evaluation

5.1. Preoperative

Generative AI refers to models that create new content, such as images, from patterns learned during training. Crisalix is a generative AI platform that renders detailed images of the patient with a specified modification; for breast reconstruction, Crisalix transforms an image of preoperative breasts into the most likely representation of the postoperative outcome following autologous or alloplastic reconstruction [39]. Instead of viewing “representative” postoperative images, patients can see a rendition of their own breasts after surgery. When put into practice, these visual tools may help surgeons and patients set realistic expectations for postoperative outcomes.

5.2. Intraoperative

A major challenge in unilateral implant-based breast reconstruction is achieving symmetry with the contralateral breast in both shape and volume. The reconstructed breast can appear fuller and more rounded following implant placement. Consequently, symmetry can be difficult to achieve in patients with small to medium-sized, ptotic breasts or limited upper pole fullness. In these cases, a contralateral balancing procedure, commonly breast augmentation, may be required to create a long-lasting, symmetric, and esthetically pleasing result. The aim is to match the contralateral breast as closely as possible, so choosing the correct implant size is important. Chen et al. developed two automated whole breast segmentation algorithms to calculate breast volume, which could be used for breast prosthesis selection [40]. Additionally, they tested an MR-based ML model that could reliably measure breast density, a known modifiable risk factor for breast cancer. They found that the proposed segmentation algorithms were highly concordant with the manual segmentation performed by a radiologist, and importantly, they exhibited small improvements in reliability. By determining the exact breast volume preoperatively, segmentation algorithms have the potential to optimize implant selection and minimize the need for intraoperative sizers, leading to greater intraoperative efficiency and patient satisfaction [40]. Breast augmentation for the contralateral breast is not without its own risks. Complications such as capsular contracture and anaplastic large cell lymphoma (ALCL) are possible, and patients should be counseled preoperatively [41].
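The volume calculation that follows whole breast segmentation, as discussed earlier in this subsection, is simple once a segmentation mask exists: count the voxels labeled as breast and multiply by the physical volume of one voxel. The sketch below shows that arithmetic under stated assumptions. The mask, the voxel spacing, and the function name `mask_volume_ml` are hypothetical and do not describe the algorithms of Chen et al.

```python
def mask_volume_ml(mask, voxel_size_mm):
    """Volume of a binary 3D mask (nested lists of 0/1) in millilitres."""
    dx, dy, dz = voxel_size_mm
    voxel_count = sum(v for image_slice in mask for row in image_slice for v in row)
    # One voxel occupies dx * dy * dz cubic millimetres; 1 mL = 1000 mm^3.
    return voxel_count * dx * dy * dz / 1000.0


# Hypothetical mask: 2 slices of 2 x 3 voxels, with 6 voxels labeled as breast tissue.
mask = [
    [[1, 1, 0],
     [1, 0, 0]],
    [[1, 1, 0],
     [1, 0, 0]],
]

# Assumed voxel spacing of 10 x 10 x 5 mm, i.e. 0.5 mL per voxel.
print(mask_volume_ml(mask, (10.0, 10.0, 5.0)))  # 3.0
```

In practice the voxel spacing would be read from the image metadata, and the accuracy of the result depends almost entirely on the quality of the segmentation rather than on this final step.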

5.3. Postoperative

By convention, when plastic surgeons assess postoperative reconstructive results, they use key anatomic features, including the sternal notch, nipple–areolar complex, and the inferior and lateral boundaries of the breast, as landmarks to compare breasts against each other. Although normal anatomical values exist, relying on breast features alone does not capture the subjective nature of the “esthetically ideal” breast, making accurate evaluation difficult. Neural networks (NNs) are a subtype of AI loosely modeled on the human brain; as more data are fed into the network, some connections are weakened while others are strengthened until the network can accurately predict an outcome. To help improve the quality of breast symmetry assessment, Kenig et al. designed and tested an NN capable of locating key breast features [42]. The NN was trained using a dataset of preoperative and postoperative breast images from patients undergoing cosmetic surgery and was then tested on patients undergoing breast reconstruction following oncologic surgery. Their NN demonstrated a 97.4% success rate in detecting key breast features, an impressive level of accuracy given the considerable variability in breast morphology following reconstruction and radiation therapy [42].
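Once landmarks such as the sternal notch and nipples have been located, a symmetry measure can be derived from simple geometry. The sketch below computes one possible index, the relative difference between the left and right sternal notch-to-nipple distances. This index, the function name, and the coordinates are assumptions chosen for illustration; they are not the metric reported by Kenig et al.

```python
import math


def notch_to_nipple_asymmetry(sternal_notch, left_nipple, right_nipple):
    """Relative difference between left and right notch-to-nipple distances.

    Returns 0.0 for perfect symmetry; larger values indicate more asymmetry.
    """
    left = math.dist(sternal_notch, left_nipple)
    right = math.dist(sternal_notch, right_nipple)
    return abs(left - right) / max(left, right)


# Hypothetical landmark coordinates in centimetres (x, y), notch at the origin.
notch = (0.0, 0.0)
left_nipple = (10.0, -19.0)
right_nipple = (-10.0, -21.0)

print(round(notch_to_nipple_asymmetry(notch, left_nipple, right_nipple), 3))
```

A single distance ratio ignores contour, volume, and projection, so an index like this would be only one input to any fuller esthetic assessment.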

To evaluate differences in esthetic outcomes in patients who received postoperative radiation therapy, Kim et al. used an NN to compute anomaly scores, a measure of “loss” of features between normal training images and reconstructed images [43]. They found that autologous reconstruction showed lower anomaly scores than tissue expanders, and time elapsed since radiation treatment was correlated with significantly higher anomaly scores. With these data, surgeons can help patients better understand how their breasts have changed over time and, if applicable, counsel them on the benefits of revision surgery for achieving the desired esthetic result.
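One common way to define an anomaly score is as a reconstruction error: a model trained only on normal images tries to reproduce a new image, and the pixel-wise difference between input and output is averaged. The sketch below assumes that definition for illustration. The network used by Kim et al. and its exact scoring may differ, and the tiny grayscale arrays are invented.

```python
def anomaly_score(image, reconstruction):
    """Mean squared pixel difference between an image and its model reconstruction."""
    diffs = [
        (a - b) ** 2
        for row_a, row_b in zip(image, reconstruction)
        for a, b in zip(row_a, row_b)
    ]
    return sum(diffs) / len(diffs)


# Hypothetical 2 x 2 grayscale image and the output of a model trained on normal breasts.
image = [[0.5, 0.5],
         [0.5, 0.5]]
reconstruction = [[0.5, 0.5],
                  [0.5, 1.0]]

print(anomaly_score(image, reconstruction))  # 0.0625
```

A score near zero means the model could reproduce the image from what it learned about normal anatomy, while a higher score means the image contains features the model has not seen.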

When communicating asymmetry following breast reconstruction, care must be taken to support the evaluation with medically accurate data while also validating the emotional experience of breast cancer recovery. Kenig et al. reviewed symmetry evaluations conducted by LLMs such as ChatGPT version 4o and Gemini 1.5 to determine whether they can deliver information reliably and empathetically [44]. While ChatGPT was able to rate symmetry on a 0–10 scale, it did not modulate the delivery of its assessments, even if the score was low. LLMs are unable to detect social cues; thus, they are unlikely to consider the gravity of a low score and its potential impact on the patient’s well-being and self-worth. AI and NNs excel at reducing the inherent subjectivity of human esthetic evaluations, but the results of these studies make it clear that delivering sensitive information should remain in the hands of the physician.

6. Limitations of AI

While the use of AI in plastic surgery is rapidly expanding, important limitations remain. In this review, we have outlined several ways that AI can improve efficiency at all stages of breast reconstruction. However, the implementation of certain AI modalities, particularly AR and LLMs, on a large scale can be costly. The expense incurred by the healthcare system would have to be weighed against the quality of care provided with AI; further work exploring the cost-effectiveness of AI is needed. Additionally, if an AI tool causes an adverse outcome, where does the liability fall? Universal guidelines must be established to ensure patient safety is prioritized and the boundaries of AI are well defined.

Furthermore, surgeons should be cautious in relying on AI to interpret subjective breast reconstruction outcomes. A “successful” reconstruction is multifactorial and inherently subjective, encompassing not only esthetic parameters such as symmetry, contour, and nipple position but also patient-reported satisfaction, psychosocial well-being, and restoration of body image. These dimensions are difficult to quantify objectively, and current algorithms often rely on surrogate visual metrics or limited datasets that fail to capture the nuances of patient perception. As such, AI-derived assessments should be viewed as adjunctive tools rather than definitive evaluators of reconstructive success, and their outputs must always be interpreted in the context of clinical judgment and patient experience.

Although ML models, NNs, and LLMs can adapt as they are exposed to new data, their performance is constrained by the datasets on which they were originally trained. As clinical guidelines and surgical techniques evolve, AI systems may fail to reflect these changes until updated datasets are incorporated. Similarly, the accuracy and applicability of AI tools are limited by the diversity of the training population. Certain patient experiences are inevitably underrepresented in early model development, and without careful selection of representative samples, prediction accuracy may decline when models are validated in broader or distinct patient cohorts.

Additionally, heterogeneity in breast reconstruction techniques, including variation in incision patterns, implant pocket selection, use of acellular dermal matrix, and intraoperative decision-making, introduces substantial variability in postoperative outcomes. Differences in surgeon experience, institutional protocols, and reconstructive philosophy further compound this heterogeneity, making it challenging to train AI models that generalize effectively across institutions. As a result, algorithms developed in one setting may perform poorly when applied elsewhere, underscoring the need for large, multicenter datasets and standardized surgical documentation to enhance model robustness and external validity.

Bias is another concern. AI systems risk perpetuating or even amplifying racial and socioeconomic disparities in healthcare. For instance, if a training dataset predominantly consists of Caucasian patients from higher socioeconomic backgrounds, the model will optimize its performance for that population, while predictions for underrepresented or marginalized groups may be inaccurate or unreliable [45]. To enable safe and equitable implementation, large-scale AI adoption will require the development of robust, diverse databases that capture the full spectrum of patient demographics and clinical experiences.
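One practical check for the bias described above is to report model performance separately for each patient subgroup rather than as a single pooled figure. The sketch below shows the idea with invented outcomes, predictions, and group labels; the function name `accuracy_by_group` and the groups "A" and "B" are hypothetical.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Fraction of correct predictions within each subgroup."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


# Hypothetical observed outcomes, model predictions, and subgroup labels.
y_true = [1, 0, 1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 1, 0, 0, 1, 1]
groups = ["A", "A", "A", "A", "A", "A", "B", "B", "B", "B"]

per_group = accuracy_by_group(y_true, y_pred, groups)
overall = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

print(overall)    # 0.7
print(per_group)  # group A is about 0.83, group B is 0.5
```

Here the pooled accuracy of 0.7 hides the fact that the model performs no better than chance for the smaller group, which is the pattern a stratified report is meant to expose.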

7. Future Directions

The integration of artificial intelligence into breast reconstruction is still in its early stages, with several priorities for advancement. Large-scale, multi-institutional validation of current models is essential to confirm accuracy across diverse populations and reconstructive approaches. Algorithms predicting outcomes such as flap failure, tissue expander loss, and capsular contracture have shown promise, but broader datasets are needed to ensure generalizability. Future models should also expand in scope. Incorporating variables such as perforator anatomy, mastectomy weight, incision type, radiation exposure, and use of acellular dermal matrix could refine complication prediction and operative planning. Embedding these systems into electronic health records or mobile applications will facilitate adoption by surgeons and empower patients during decision-making. As these tools mature, ethical considerations will remain critical. Safeguards around data privacy, algorithmic bias, and the delivery of sensitive esthetic assessments are necessary to maintain trust and equity in care. Ultimately, the greatest potential lies in integrated AI ecosystems that combine predictive modeling, esthetic evaluation, and patient education into a single pathway. Such platforms could align preoperative counseling, surgical execution, and postoperative monitoring, enhancing both outcomes and the overall patient experience after breast cancer treatment.

8. Conclusions

Plastic surgery has always been driven by innovation, and AI now stands at the forefront of this evolution. Surgeons are beginning to incorporate AI across multiple aspects of breast reconstruction care. Machine learning models have shown promise in risk estimation, enabling prediction of postoperative complications and patient-reported outcomes based on clinical, surgical, and patient-specific variables. These tools are increasingly accurate and are expected to become accessible as phone-based applications for use in the clinic. Neural networks can provide objective, quantifiable assessments of breast esthetics, addressing what has traditionally been a subjective outcome. Large language models further support patient care by guiding discussions during consultations and reinforcing in-clinic education with accessible medical information.

Looking ahead, AI will enable plastic surgeons to refine breast reconstruction surgical planning, enhance patient outcomes, and expand the scope of reconstructive care. Widespread adoption, however, will depend on reducing costs, improving usability, and establishing rigorous safety and ethical guidelines. While AI holds immense potential to transform reconstructive surgery, it will remain a powerful adjunct—never a replacement—for the experience, judgment, and compassion of the human surgeon.

Author Contributions

Conceptualization, F.G. and B.M.P.; investigation, F.G. and B.M.P.; writing—original draft preparation, B.M.P.; writing—review and editing, B.M.P., A.S., R.J.A.J., J.A.N. and F.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in part through National Institutes of Health/National Cancer Institute Cancer Center Support Grant P30 CA008748, which supports Memorial Sloan-Kettering Cancer Center’s research infrastructure.

Institutional Review Board Statement

No institutional approval was needed for this study.

Informed Consent Statement

Not applicable.

Conflicts of Interest

There are no financial disclosures, commercial associations, or any other conditions posing a conflict of interest to report for any of the authors.

References

- Jarvis, T.; Thornburg, D.; Rebecca, A.M.; Teven, C.M. Artificial Intelligence in Plastic Surgery: Current Applications, Future Directions, and Ethical Implications. Plast. Reconstr. Surg. Glob. Open 2020, 8, e3200.

- Marcaccini, G.; Susini, P.; Xie, Y.; Cuomo, R.; Pozzi, M.; Grimaldi, L.; Rozen, W.M.; Seth, I. Enhancing patient education in breast surgery: Artificial intelligence-powered guidance for mastopexy, augmentation, reduction, and reconstruction. Transl. Breast Cancer Res. 2025, 6, 12.

- Cevik, J.; Seth, I.; Rozen, W.M. Transforming breast reconstruction: The pioneering role of artificial intelligence in preoperative planning. Gland Surg. 2023, 12, 1271–1275.

- Cevik, J.; Seth, I.; Hunter-Smith, D.J.; Rozen, W.M. A History of Innovation: Tracing the Evolution of Imaging Modalities for the Preoperative Planning of Microsurgical Breast Reconstruction. J. Clin. Med. 2023, 12, 5246.

- Roy, N.; Downes, M.H.; Ibelli, T.; Amakiri, U.O.; Li, T.; Tebha, S.S.; Balija, T.M.; Schnur, J.B.; Montgomery, G.H.; Henderson, P.W. The psychological impacts of post-mastectomy breast reconstruction: A systematic review. Ann. Breast Surg. 2024, 8, 19.

- Kim, T.S.; Yu, C.T.; Hinson, C.; Fung, E.B.; Allam, O.; Nazerali, R.S.M.; Ayyala, H.S. ChatGPT Virtual Assistant for Breast Reconstruction: Assessing Preferences for a Traditional Chatbot versus a Human AI VideoBot. Plast. Reconstr. Surg. Glob. Open 2024, 12, e6202.

- Alonso-Burgos, A.; García-Tutor, E.; Bastarrika, G.; Cano, D.; Martínez-Cuesta, A.; Pina, L.J. Preoperative planning of deep inferior epigastric artery perforator flap reconstruction with multislice-CT angiography: Imaging findings and initial experience. J. Plast. Reconstr. Aesthet. Surg. 2006, 59, 585–593.

- Mavioso, C.; Araújo, R.J.; Oliveira, H.P.; Anacleto, J.C.; Vasconcelos, M.A.; Pinto, D.; Gouveia, P.F.; Alves, C.; Cardoso, F.; Cardoso, J.S.; et al. Automatic detection of perforators for microsurgical reconstruction. Breast 2020, 50, 19–24.

- Cevik, J.; Rozen, W. A Novel optimization technique of Computed Tomography Angiographic 3D-reconstructions for pre-operative planning of DIEP flaps. JPRAS Open 2022, 35, 38–41.

- Seth, I.; Lindhardt, J.; Jakobsen, A.; Thomsen, J.B.; Kiil, B.J.; Rozen, W.M.M.; Kenney, P.S. Improving Visualization of Intramuscular Perforator Course: Augmented Reality Headsets for DIEP Flap Breast Reconstruction. Plast. Reconstr. Surg. Glob. Open 2023, 11, e5282.

- Berger, M.F.; Winter, R.; Tuca, A.C.; Michelitsch, B.; Schenkenfelder, B.; Hartmann, R.; Giretzlehner, M.; Reishofer, G.; Kamolz, L.-P.; Lumenta, D.B. Workflow assessment of an augmented reality application for planning of perforator flaps in plastic reconstructive surgery: Game or game changer? Digit. Health 2023, 9, 20552076231173554.

- Meier, E.L.; Ulrich, D.J.O.; Hummelink, S. Projected augmented reality in DIEP flap breast reconstruction: Projecting perforators on the skin using dynamic infrared thermography. J. Plast. Reconstr. Aesthet. Surg. 2024, 94, 83–90.

- Chen, J.; Gabay, A.; Kim, M.; Amakiri, U.B.; Boe, L.A.; Stern, C.; Mehrara, B.J.; Gibbons, C.; Nelson, J.A. AI Risk Prediction Tools for Alloplastic Breast Reconstruction. Plast. Reconstr. Surg. 2025, 156, 639–650.

- Bavaro, D.A.; Fanizzi, A.; Iacovelli, S.; Bove, S.; Comes, M.C.; Cristofaro, C.; Cutrignelli, D.; De Santis, V.; Nardone, A.; Lagattolla, F.; et al. A Machine Learning Approach for Predicting Capsular Contracture after Postmastectomy Radiotherapy in Breast Cancer Patients. Healthcare 2023, 11, 1042.

- Chen, Y.; Wang, M.L.; Black, G.G.; Qin, N.; Zhou, G.; Bernstein, J.L.; Chinta, M.; Otterburn, D.M. Machine-Learning Prediction of Capsular Contraction after Two-Stage Breast Reconstruction. JPRAS Open 2023, 38, 1–13.

- Hassan, A.M.; Biaggi, A.P.; Asaad, M.; Andejani, D.F.; Liu, J.; Offodile, A.C., 2nd; Selber, J.C.; Butler, C.E. Development and Assessment of Machine Learning Models for Individualized Risk Assessment of Mastectomy Skin Flap Necrosis. Ann. Surg. 2023, 278, e123–e130.

- O’Neill, A.C.; Yang, D.; Roy, M.; Sebastiampillai, S.; Hofer, S.O.P.; Xu, W. Development and Evaluation of a Machine Learning Prediction Model for Flap Failure in Microvascular Breast Reconstruction. Ann. Surg. Oncol. 2020, 27, 3466–3475.

- Ozmen, B.B.; Phuyal, D.; Berber, I.; Schwarz, G.S. Artificial intelligence prediction model for readmission after DIEP flap breast reconstruction based on NSQIP data. J. Plast. Reconstr. Aesthet. Surg. 2025, 106, 1–8.

- Gabay, A.J.; Chen, J.; Stern, C.S.; Gibbons, C.; Mehrara, B.; Nelson, J.A. Predicting Breast Reconstruction Readmission, Reoperation, and Prolonged Length of Stay: A Machine Learning Approach. J. Surg. Oncol. 2025, 132, 345–353.

- Meyer, A.M.; Kim, H.B.; Eom, J.S.; Sinik, L.; Braun, S.; Han, H.H.; Butterworth, J.A. Predicting complications in breast reconstruction: External validation of a machine learning model. J. Plast. Reconstr. Aesthet. Surg. 2025, 107, 176–181.

- Pusic, A.L.; Klassen, A.F.; Scott, A.M.; Klok, J.A.; Cordeiro, P.G.; Cano, S.J. Development of a new patient-reported outcome measure for breast surgery: The BREAST-Q. Plast. Reconstr. Surg. 2009, 124, 345–353.

- Cano, S.J.; Klassen, A.F.; Scott, A.M.; Cordeiro, P.G.; Pusic, A.L. The BREAST-Q: Further validation in independent clinical samples. Plast. Reconstr. Surg. 2012, 129, 293–302.

- Pfob, A.; Mehrara, B.J.; Nelson, J.A.; Wilkins, E.G.; Pusic, A.L.; Sidey-Gibbons, C. Machine learning to predict individual patient-reported outcomes at 2-year follow-up for women undergoing cancer-related mastectomy and breast reconstruction (INSPiRED-001). Breast 2021, 60, 111–122.

- Pfob, A.; Mehrara, B.J.; Nelson, J.A.; Wilkins, E.G.; Pusic, A.L.; Sidey-Gibbons, C. Towards Patient-centered Decision-making in Breast Cancer Surgery: Machine Learning to Predict Individual Patient-reported Outcomes at 1-year Follow-up. Ann. Surg. 2023, 277, e144–e152.

- Xu, C.; Pfob, A.; Mehrara, B.J.; Yin, P.; Nelson, J.A.; Pusic, A.L.; Sidey-Gibbons, C. Enhanced Surgical Decision-Making Tools in Breast Cancer: Predicting 2-Year Postoperative Physical, Sexual, and Psychosocial Well-Being following Mastectomy and Breast Reconstruction (INSPiRED 004). Ann. Surg. Oncol. 2023, 30, 7046–7059.

- Chen, J.; Gabay, A.; Boe, L.A.; Shammas, R.L.; Stern, C.; Pusic, A.; Mehrara, B.J.; Gibbons, C.; Nelson, J.A. Machine Learning Accurately Predicts Patient-Reported Outcomes 1 Year After Breast Reconstruction. Ann. Surg. 2025, online ahead of print.

- Zafar, S.Y.; Peppercorn, J.M.; Schrag, D.; Taylor, D.H.; Goetzinger, A.M.; Zhong, X.; Abernethy, A.P. The Financial Toxicity of Cancer Treatment: A Pilot Study Assessing Out-of-Pocket Expenses and the Insured Cancer Patient’s Experience. Oncologist 2013, 18, 381–390.

- Sidey-Gibbons, C.; Pfob, A.; Asaad, M.; Boukovalas, S.; Lin, Y.-L.; Selber, J.C.; Butler, C.E.; Offodile, A.C. Development of Machine Learning Algorithms for the Prediction of Financial Toxicity in Localized Breast Cancer Following Surgical Treatment. JCO Clin. Cancer Inform. 2021, 5, 338–347.

- Kawashima, K.; Ghali, S.; Nikkhah, D.; Esmaeili, A. Recent Advancements in Robotic-assisted Plastic Surgery Procedures: A Systematic Review. Plast. Reconstr. Surg. Glob. Open 2025, 13, e6476.

- Knudsen, J.E.; Ghaffar, U.; Ma, R.; Hung, A.J. Clinical applications of artificial intelligence in robotic surgery. J. Robot. Surg. 2024, 18, 102.

- Wang, Z.; Jiao, L.; Chen, S.; Li, Z.; Xiao, Y.; Du, F.; Huang, J.; Long, X. Flap perfusion assessment with indocyanine green angiography in deep inferior epigastric perforator flap breast reconstruction: A systematic review and meta-analysis. Microsurgery 2023, 43, 627–638.

- Singaravelu, A.; Dalli, J.; Potter, S.; Cahill, R.A. Artificial intelligence for optimum tissue excision with indocyanine green fluorescence angiography for flap reconstructions: Proof of concept. JPRAS Open 2024, 41, 389–393.

- Muaddi, H.; Choudhary, A.; Lee, F.; Anderson, S.S.; Habermann, E.; Etzioni, D.; McLaughlin, S.; Kendrick, M.; Salehinejad, H.; Thiels, C.D. Imaging-based Surgical Site Infection Detection Using Artificial Intelligence. Ann. Surg. 2025, 282, 419–428.

- Kim, J.; Lee, S.M.; Kim, D.E.; Kim, S.; Chung, M.J.; Kim, Z.; Kim, T.; Lee, K.-T. Development of an Automated Free Flap Monitoring System Based on Artificial Intelligence. JAMA Netw. Open 2024, 7, e2424299.

- Liu, H.Y.; Alessandri Bonetti, M.; Jeong, T.; Pandya, S.; Nguyen, V.T.; Egro, F.M. Dr. ChatGPT will see you now: How do Google and ChatGPT compare in answering patient questions on breast reconstruction? J. Plast. Reconstr. Aesthet. Surg. 2023, 85, 488–497.

- Liu, H.Y.; Alessandri Bonetti, M.; De Lorenzi, F.; Gimbel, M.L.; Nguyen, V.T.; Egro, F.M. Consulting the Digital Doctor: Google Versus ChatGPT as Sources of Information on Breast Implant-Associated Anaplastic Large Cell Lymphoma and Breast Implant Illness. Aesthetic Plast. Surg. 2024, 48, 590–607.

- Kenig, N.; Muntaner Vives, A.; Monton Echeverria, J. Is My Doctor Human? Acceptance of AI among Patients with Breast Cancer. Plast. Reconstr. Surg. Glob. Open 2024, 12, e6257.

- Huang, L.; Yu, W.; Ma, W.; Zhong, W.; Feng, Z.; Wang, H.; Chen, Q.; Peng, W.; Feng, X.; Qin, B.; et al. A Survey on Hallucination in Large Language Models: Principles, Taxonomy, Challenges, and Open Questions. ACM Trans. Inf. Syst. 2025, 43, 42.

- Landau, M.; Kassir, M.; Goldust, M. Transforming Aesthetic Medicine with Generative Artificial Intelligence. J. Cosmet. Dermatol. 2025, 24, e70015.

- Chen, M.; Xing, J.; Guo, L. MRI-based Deep Learning Models for Preoperative Breast Volume and Density Assessment Assisting Breast Reconstruction. Aesthetic Plast. Surg. 2024, 48, 4994–5006.

- Zhang, H.; Jia, L.; Guo, R.; Xiong, J.; Jiang, H. A bibliometric and visualized study on global trends of breast augmentation complications, 2011–2021. Gland Surg. 2023, 12, 354–365.

- Kenig, N.; Monton Echeverria, J.; De la Ossa, L. Identification of Key Breast Features Using a Neural Network: Applications of Machine Learning in the Clinical Setting of Plastic Surgery. Plast. Reconstr. Surg. 2024, 153, 273e–280e.

- Kim, D.Y.; Lee, S.J.; Kim, E.K.; Kang, E.; Heo, C.Y.; Jeong, J.H.; Myung, Y.; Kim, I.A.; Jang, B.-S. Feasibility of anomaly score detected with deep learning in irradiated breast cancer patients with reconstruction. npj Digit. Med. 2022, 5, 125.

- Kenig, N.; Monton Echeverria, J.; Muntaner Vives, A. Evaluating Surgical Results in Breast Cancer with Artificial Intelligence. Aesthetic Plast. Surg. 2025.

- Obermeyer, Z.; Powers, B.; Vogeli, C.; Mullainathan, S. Dissecting racial bias in an algorithm used to manage the health of populations. Science 2019, 366, 447–453.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).