Abstract

Background: Hyperkinetic movement disorders involve excessive, involuntary movements such as ataxia, chorea, dystonia, myoclonus, tics, and tremor. Recent advances in artificial intelligence (AI) allow investigators to integrate multimodal instrumented movement measurements and imaging techniques and to analyze these data together at scale. In this systematic review, we aim to characterize AI’s performance in diagnosing and quantitatively phenotyping these disorders. Methods: We searched PubMed and Embase using a semi-automated article-screening pipeline. Results: Fifty-five studies met the inclusion criteria (n = 11,946 subjects). Thirty-five studies used machine learning, sixteen used deep learning, and four used both. Thirty-eight studies reported disease diagnosis and twenty-three reported quantitative phenotyping, with six of these reporting both. Kinematic data (e.g., from accelerometers and inertial measurement units) were the most commonly used data type. Diagnostic accuracy was reported in 36 of 38 diagnosis studies and ranged from 56% to 100% against clinical diagnosis for differentiating patients from healthy controls. Correlation coefficients were reported in 10 of 23 quantitative phenotyping studies and ranged from 0.54 to 0.99 against clinical ratings. Five studies had an overall judgment of “low risk of bias” and three had external validation. Conclusion: There is a need to adopt AI-based research guidelines to minimize reporting heterogeneity and bolster clinical interpretability.

1. Introduction

Hyperkinetic movement disorders are neurological conditions characterized by excessive, involuntary movements, and include ataxia, chorea, dystonia, myoclonus, tics, and tremor. Historically, diagnosis of such disorders has relied on the appreciation of an abnormal movement’s phenomenology, or the science and art of classifying abnormal movements based on the clinician’s physical exam [1]. Diagnosis therefore relies on subjective assessment and may vary based on the clinical neurology training of the clinician (e.g., diagnostic assessment may differ between a primary care physician and a movement disorders specialist). The question remains whether objective, quantitative assessments could aid in diagnosing and phenotyping these conditions. In recent years, research has attempted to instrument movement measurement with body-worn sensors (e.g., accelerometers, gyroscopes), video and audio analysis, or electrophysiology (e.g., electromyography, EMG; electroencephalography, EEG) [2]. Other data modalities, such as neuroimaging (e.g., anatomical or functional MRI), can also be interrogated to identify specific disease signatures [3]. These methods lead to large amounts of data that are challenging to interpret with traditional computational methods [2].

Artificial intelligence (AI)-based methods offer the ability to process large datasets with variable data types (e.g., clinical assessments, genetics, imaging, electrophysiology), making them increasingly utilized in research focusing on the diagnosis and quantitative phenotyping of hyperkinetic movement disorders. AI is the broader field of computer science focused on creating systems that can perform tasks requiring human-like intelligence, such as reasoning and problem-solving. Within AI, Machine Learning (ML) enables computers to learn from data and improve over time without explicit programming. A subset of ML, Deep Learning (DL) utilizes deep neural networks with multiple layers to model complex patterns, making it particularly effective for tasks like image and speech recognition [4]. Despite the growing number of research articles describing the promises of AI tools for hyperkinetic movement disorders, clinical implementation lags behind. Several barriers to clinical translation exist, but at the most elemental level, the major barriers are the quality, interpretability, and clinical applicability of research results.

This review aims to (1) characterize the existing AI-based research in hyperkinetic movement disorders for disease diagnosis and quantitative phenotyping and (2) evaluate its quality.

2. Methods

This systematic review was conducted per the Preferred Reporting Items for Systematic Reviews and Meta-analyses (PRISMA) guidelines [5]. This study was conducted in accordance with the principles outlined in the Declaration of Helsinki, as revised in 2013.

2.1. Search Methods

We searched PubMed and Embase for peer-reviewed studies published up to December 2023 using a combination of free text and MeSH (Medical Subject Headings) for the following AI keywords: Artificial Intelligence, Machine Learning, Deep Learning, Supervised Learning, Unsupervised Learning, Reinforcement Learning, Neural Network, Natural Language Processing, Computer Vision, Data Mining, Predictive Analytics, Big Data, Feature Engineering, Model Training Algorithm; and movement disorders keywords: Tremor, Movement Disorder, Parkinson, Dystonia, Huntington, Essential Tremor, Ataxia, Myoclonus, Progressive Supranuclear Palsy, PSP, MSA, Tics (Supplementary Table S1). No restrictions were applied to gender, age, ethnicity, disease duration, or disease severity. Hypokinetic movement disorders, namely Parkinson’s disease (PD) and related conditions, were included in the search terms as they can manifest with tremor.

2.2. Inclusion and Exclusion Criteria

Articles were included if they utilized AI algorithms in hyperkinetic movement disorders, namely ataxia, chorea, dystonia, tics, and tremor, specifically for disease detection and/or quantitative phenotyping. Studies in which PD was the primary group of interest were excluded, so that only studies focusing on hyperkinetic movement disorders that used PD as a comparison cohort were retained. Articles on non-human subjects or without an English-language translation were excluded. Reviews and conference papers were also excluded.

2.3. Selection of Studies

An article-screening program was written in Python and refined with assistance from a free, online generative language model (ChatGPT-4). The program executed the following functions: (a) title labeling for AI and movement disorders keywords; (b) duplicate removal; and (c) inclusion suggestion for studies whose titles contained at least one of the AI keywords and at least one of the movement disorders keywords. As an example, a hypothetical study titled “Diagnostic Markers of Essential Tremor identified with Machine Learning Algorithms” would have the movement disorder keyword “Essential tremor” and the AI keyword “Machine learning” identified and, since both were present, would be suggested for inclusion. Two authors (JAV, SY) manually reviewed the abstracts of the studies suggested for inclusion. A random 20% of the studies recommended for exclusion were reviewed by one author (JAV) to check the accuracy of the title screening process. No excluded articles were reclassified as included after this manual review. The code is freely available on the author’s GitHub (https://github.com/VizcarraJA/sysrevs_screener). The reference lists of selected articles were additionally screened for pertinent studies not captured by the original search strategy.
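The three screening steps described above can be illustrated with a minimal sketch. This is not the authors' program (which is available at the GitHub link above); the keyword lists are abbreviated, and the function names `find_keywords`, `normalize_title`, and `screen_titles` are hypothetical names chosen for illustration only.

```python
import re

# Abbreviated, illustrative keyword lists (assumption); the full search terms
# are listed in Supplementary Table S1.
AI_KEYWORDS = ["artificial intelligence", "machine learning", "deep learning", "neural network"]
MD_KEYWORDS = ["essential tremor", "tremor", "dystonia", "ataxia", "huntington", "myoclonus", "tics"]


def find_keywords(title, keywords):
    """Return the keywords found in the title as whole words or phrases (case-insensitive)."""
    lowered = title.lower()
    return [kw for kw in keywords if re.search(r"\b" + re.escape(kw) + r"\b", lowered)]


def normalize_title(title):
    """Collapse case, punctuation, and whitespace so near-identical titles compare equal."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def screen_titles(titles):
    """Label each title, drop duplicates, and suggest inclusion when both keyword groups match."""
    seen = set()
    results = []
    for title in titles:
        key = normalize_title(title)
        if key in seen:
            continue  # (b) duplicate removal
        seen.add(key)
        ai_hits = find_keywords(title, AI_KEYWORDS)  # (a) title labeling
        md_hits = find_keywords(title, MD_KEYWORDS)
        results.append({
            "title": title,
            "ai_keywords": ai_hits,
            "md_keywords": md_hits,
            # (c) suggest inclusion only if both keyword groups are present
            "suggest_inclusion": bool(ai_hits) and bool(md_hits),
        })
    return results
```

Matching on whole words keeps a keyword such as "tics" from firing on "statistics", but, as with any title-only screen, a relevant study whose title lacks the keywords would still be missed.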

2.4. PICO Questions and Data Analysis

The following PICO (Population, Intervention, Comparison, Outcome) questions were studied:

- In studies of hyperkinetic movement disorders, what is the performance of artificial intelligence models compared to traditional non-artificial intelligence methods for disease diagnosis?

- In studies of hyperkinetic movement disorders, what is the performance of artificial intelligence models compared to traditional non-artificial intelligence methods for quantitative phenotyping?

2.5. Data Extraction, Quality Assessment, and Risk of Bias

The following data were extracted from eligible studies using a standardized form: title, authors, publication year, DOI, review question, population studied, number of cases, number of controls, AI method, reference test, outcomes, type of internal validation, and type of external validation. For PICO question #1, disease diagnosis (i.e., classification task), we extracted accuracy; if accuracy was unavailable, we extracted the area under the receiver operating characteristic curve (AUROC). For PICO question #2, quantitative phenotyping, we extracted the coefficient of correlation (r) or coefficient of determination (r²) for correlation or regression tasks, and accuracy or AUROC for disease severity classification. When these were unavailable, we extracted free-text outcomes. For each AI model, we extracted the best-performing metric for outcome reporting.
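For readers less familiar with the extracted metrics, the following sketch shows how accuracy, AUROC, and the Pearson correlation coefficient are conventionally computed. It is an illustration only, not code from any reviewed study; the function names are hypothetical, and labels are assumed to be coded 1 for cases and 0 for controls.

```python
import math


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the reference labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def auroc(y_true, scores):
    """Probability that a randomly chosen case scores higher than a randomly
    chosen control, counting ties as one half (rank-based definition)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def pearson_r(x, y):
    """Pearson correlation between model output x and clinical rating y."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (sd_x * sd_y)
```

For a simple linear regression with an intercept, the coefficient of determination equals the square of `pearson_r`; for other regression models the two are not interchangeable, which is one reason pooled comparisons across studies need care.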

A quality assessment was performed by evaluating the presence of internal and external validation methods. Internal validation included cross-validation or split sample testing. External validation refers to using an independent dataset not used during the model training or testing phases to attempt to replicate the model’s performance. Risk of bias was assessed as per QUADAS-2 (Quality Assessment of Diagnostic Accuracy Studies 2) guidelines [6]. QUADAS-2 complements the data extraction process by evaluating four key domains: patient selection, index test, reference standard, and flow and timing of patients through the study. Each domain was assessed for risk of bias and review applicability concerns. The risk of bias was rated as low, high, or unclear based on specific criteria, while the review applicability was rated similarly based on how well the study aligned with the review question. Based on QUADAS-2 guidance, we tailored the index test interpretation to the unique challenges of AI diagnostic studies. Key concerns include data leakage, feature selection, adequacy of validation processes and data partitioning, and threshold identification.
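The two internal validation schemes named above differ only in how sample indices are partitioned. The sketch below illustrates this under simple assumptions (random shuffling with a fixed seed, no stratification); `split_sample` and `k_fold` are hypothetical helper names, not functions from any reviewed study.

```python
import random


def split_sample(n_samples, test_fraction=0.2, seed=0):
    """Split-sample testing: one random partition into training and test indices."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_test = max(1, round(n_samples * test_fraction))
    return indices[n_test:], indices[:n_test]


def k_fold(n_samples, k=5, seed=0):
    """Cross-validation: yield (train, test) index lists so that every sample
    is used for testing exactly once across the k folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test
```

Both schemes reuse a single dataset, so neither counts as external validation, which requires data collected independently of the data used to build the model.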

3. Results

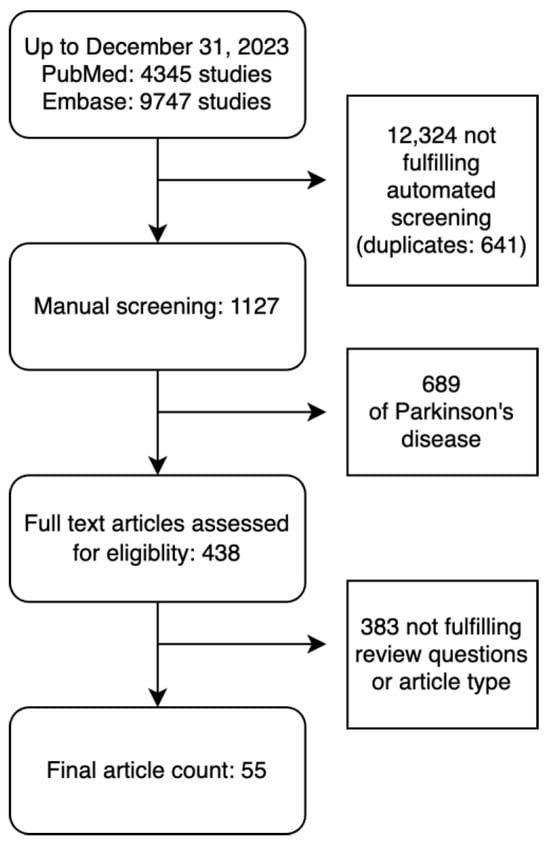

The search strategy identified 14,092 studies published from 1990 to 2023. The automated title screener screened out a total of 12,324 studies, with no errors detected upon manual review. A manual screening of the remaining 1127 articles found 689 on PD and an additional 383 abstracts, review articles, or studies not addressing the review questions. Full-text assessment for eligibility resulted in 55 articles that met the review criteria (Figure 1) and underwent data extraction as well as quality and risk of bias appraisal. There were 13 articles on ataxia [7,8,9,10,11,12,13,14,15,16,17,18,19], 11 articles on chorea [20,21,22,23,24,25,26,27,28,29,30], five articles on dystonia [31,32,33,34,35], one article on tics [36], and 25 articles on tremor [37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61]. No articles on myoclonus were included. Thirty-five studies used machine learning (ML), sixteen used deep learning (DL), and four used both. The total number of subjects included was 11,946. By hyperkinetic movement disorder group, ataxia had 2492 cases and 644 controls; chorea had 5256 cases and 271 controls; dystonia had 659 cases and 380 controls; tics had 11 cases with no controls; and tremor had 1540 cases and 693 controls. Data analyzed included kinematics datasets (n = 17; e.g., accelerometers, inertial measurement units), imaging-based datasets (n = 15; e.g., MRI, fMRI, DAT scan), voice or video datasets (n = 12; e.g., voice analysis, video analysis, eye tracking), and electrophysiology datasets (n = 10; e.g., EEG, EMG), while the remainder were clinical examination and genetic datasets.

Figure 1. Flowchart of included studies.

3.1. Disease Diagnosis and Quantitative Phenotyping Outcomes

Thirty-eight studies reported disease diagnosis outcomes (Table 1) and twenty-three reported quantitative phenotyping outcomes (Table 2). Six studies reported both types of outcomes and appear in both tables.

Table 1. Studies using AI for disease diagnosis.

Table 2. Studies using AI for quantitative phenotyping.

3.1.1. Disease Diagnosis

Across all hyperkinetic movement disorders, accuracy was reported in 36 studies. The most used ML and DL algorithms were support vector machine (SVM, n = 13) and convolutional neural networks (CNN, n = 8), respectively. In ataxia (n = 8), data from inertial measurement units (IMU) were the most common dataset (n = 3), with 86–88% accuracy compared to clinical diagnosis for discriminating from healthy controls. In chorea (n = 8), data from MRI brain images were the most common dataset (n = 3), with accuracy ranging from 62% to 85% compared to clinical diagnosis for discriminating from healthy controls. In dystonia (n = 3), the three studies used different datasets, with accuracy for video analysis, MRI brain images, and voice recordings of 70%, 99%, and 65%, respectively, compared to clinical diagnosis for discriminating from healthy controls. In tic disorder (n = 1), the single study used a video analysis dataset, with an AUROC of 0.74 compared to clinical diagnosis. In tremor (n = 18), data from MRI brain images were the most common dataset (n = 4); compared to clinical diagnosis, accuracy ranged from 58% to 98% for discriminating from healthy controls (n = 3) and was 100% for discriminating from orthostatic tremor (n = 1).

3.1.2. Quantitative Phenotyping

Across all hyperkinetic movement disorders, the correlation coefficient was reported in 10 studies. The most used ML and DL algorithms were SVM (n = 6) and CNN (n = 4), respectively. In ataxia (n = 7), the correlation coefficient ranged from 0.56 to 0.82 in four studies comparing IMU (n = 2), MRI brain images (n = 1), and video analysis (n = 1) datasets to clinical ratings; the three remaining studies did not report a correlation coefficient. In chorea (n = 5), the correlation coefficient ranged from 0.66 to 0.77 in two studies comparing accelerometer and MRI/fMRI datasets to clinical ratings; the three remaining studies did not report a correlation coefficient. In dystonia (n = 2), the correlation coefficient ranged from 0.54 to 0.93 in two studies comparing video analysis to clinical ratings. In tremor (n = 9), the correlation coefficient ranged from 0.71 to 0.99 in two studies comparing IMU and MRI/fMRI datasets to clinical ratings; the seven remaining studies did not report a correlation coefficient.

3.2. Quality Appraisal and Risk of Bias

All studies utilized internal validation methods (cross-validation, n = 47; split sample, n = 8). Only three studies documented external validation methods [8,29,49]. Five studies were judged as having “low risk of bias” [19,29,39,49,52], while the remaining studies had at least one category at risk of bias (Table 3). The index test was the most common category at risk of bias, meaning that the AI test results may have been interpreted with knowledge of the non-AI reference standard or that a threshold for disease diagnosis was not pre-specified. All studies demonstrated low concern regarding review applicability, indicating that their populations, index tests, and reference standards were highly relevant to the review PICO questions.

Table 3. QUADAS-2 Summary Findings.

4. Discussion

In this study, we summarized the existing research literature applying AI methods to understand hyperkinetic movement disorders. We found 55 articles with 11,946 subjects that evaluated disease diagnosis and quantitative phenotyping. We implemented a semi-automated article screening pipeline that enhanced the reproducibility of results and utilized quality appraisal and risk of bias methods to evaluate selected studies. The most common ML and DL algorithms were SVM and CNN, respectively, and kinematics was the most frequent type of data analyzed with AI. The diagnostic accuracy was reported in 36 studies and ranged from 56 to 100% compared to clinical diagnoses, to differentiate patients with these conditions from healthy controls. The correlation coefficient was reported in 10 studies and ranged from 0.54 to 0.99 compared to clinical ratings for quantitative phenotyping. Importantly, all studies reported internal validation methods, but only three studies were validated with external data sets [8,29,49]. Only five studies were judged to have a low risk of bias [19,29,39,49,52], which is largely explained by the unclear methodology reporting of the index test interpretation. These quality concerns negatively impact the confidence in AI-based clinical research studies and the potential to integrate their findings into clinical practice.

We identified limitations with index test reporting that raise bias concerns. A major issue is data leakage, where information from the test set improperly influences model training, often through normalization or feature engineering applied to the entire dataset rather than only to the training set. Inadequate validation processes, like insufficient cross-validation, resulted in overfitting, where models perform well on familiar data but poorly on unseen cases. Additionally, many studies provided insufficient detail on how training and testing sets were divided, which is needed to judge whether classes were fairly represented in each set. Furthermore, the determination of decision thresholds was often unclear. Selecting thresholds based on the training set can exacerbate overfitting, while thresholds derived from the testing set may misrepresent the model’s true diagnostic capabilities. Using QUADAS-2, similar limitations have been reported in AI-based imaging diagnostic studies across medical specialties [62]. Concerns about AI-based research quality have also been raised in PD [63]: in a review of 244 neuroimaging studies using AI methods for diagnosis, prognosis, or intervention, only 20% passed a set of minimal quality criteria. Data spillover, inadequate sample size, and insufficient biological plausibility were the primary factors for quality loss [63]. Transparency in these practices is therefore needed to enhance the explainability of AI methods in diagnostic contexts [64], a critical requirement for their clinical implementation in movement disorders.
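The normalization form of data leakage can be made concrete with a small sketch. The feature values below are invented for illustration, and `fit_scaler` and `apply_scaler` are hypothetical helper names; the point is only that z-score parameters estimated on the whole dataset carry information about the test samples.

```python
import statistics


def fit_scaler(values):
    """Estimate z-score parameters (mean, standard deviation) from the given values."""
    mean = statistics.mean(values)
    sd = statistics.pstdev(values)
    if sd == 0:
        sd = 1.0  # avoid division by zero for constant features
    return mean, sd


def apply_scaler(values, scaler):
    """Standardize values with previously estimated parameters."""
    mean, sd = scaler
    return [(v - mean) / sd for v in values]


# Invented feature values (assumption): three training samples, one unusual test sample.
train_values = [1.0, 2.0, 3.0]
test_values = [10.0]

# Leaky: parameters estimated on training and test data together.
leaky_scaler = fit_scaler(train_values + test_values)

# Leak-free: parameters estimated on the training data only, then reused for the test data.
clean_scaler = fit_scaler(train_values)

leaky_test = apply_scaler(test_values, leaky_scaler)
clean_test = apply_scaler(test_values, clean_scaler)
```

With the leaky scaler the unusual test sample pulls the mean and standard deviation toward itself and so looks less extreme than it would to a model deployed on truly unseen data. The same reasoning applies to feature selection and other preprocessing steps fitted before the data are partitioned.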

Another source of bias is the lack of external validation. AI diagnostic accuracy can be inflated by up to 30% due to overfitting the training and validation sets in a manner that would otherwise remain unnoticed without an external comparison [65]. Accuracy inflation can occur due to a bias towards the majority class (i.e., accuracy paradox), demographic imbalances associated with the disease of interest (i.e., second-order effects of the accuracy paradox), and the digital fingerprinting phenomenon (i.e., different samples from the same individual both in training and testing sets) [65]. Conversely, it is also possible that accuracy is deflated due to the imperfect nature of the comparison gold standard. Unlike in other specialties, such as oncology or cardiology, expert clinical examination by a trained movement disorders specialist remains the reference test (i.e., “gold standard”) in most hyperkinetic movement disorders. Only a few studies used genetic diagnosis as the reference test, which elevates the diagnostic certainty of the reference test. This is also applicable for studies quantifying disease severity, as severity ratings are anchored in clinical scales administered by clinicians.
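Two of the inflation mechanisms described above can be sketched briefly. The first function shows the accuracy paradox: a "model" that always predicts the majority class scores high accuracy on imbalanced data while detecting none of the minority class. The second shows one common safeguard against digital fingerprinting, assigning whole subjects rather than individual recordings to the test set. The class proportions, subject identifiers, and function names are illustrative assumptions.

```python
import random


def majority_baseline_metrics(labels):
    """Return (accuracy, balanced accuracy) for a model that always predicts
    the most common label in `labels`."""
    majority = max(set(labels), key=labels.count)
    predictions = [majority] * len(labels)
    accuracy = sum(p == t for p, t in zip(predictions, labels)) / len(labels)
    recalls = []
    for cls in sorted(set(labels)):
        preds_for_cls = [p for p, t in zip(predictions, labels) if t == cls]
        recalls.append(sum(p == cls for p in preds_for_cls) / len(preds_for_cls))
    balanced_accuracy = sum(recalls) / len(recalls)  # mean per-class recall
    return accuracy, balanced_accuracy


def subject_wise_split(subject_ids, test_fraction=0.25, seed=0):
    """Split recording indices so that no subject contributes recordings to
    both the training and the test set."""
    subjects = sorted(set(subject_ids))
    random.Random(seed).shuffle(subjects)
    n_test = max(1, round(len(subjects) * test_fraction))
    test_subjects = set(subjects[:n_test])
    train_idx = [i for i, s in enumerate(subject_ids) if s not in test_subjects]
    test_idx = [i for i, s in enumerate(subject_ids) if s in test_subjects]
    return train_idx, test_idx
```

For example, with 90 patients and 10 controls the majority baseline reaches 90% accuracy but only 50% balanced accuracy, which is why reporting class proportions alongside accuracy helps readers judge a result.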

There is a need to adopt AI-based research reporting guidelines that evaluate all aspects of an AI pipeline: data collection, data preprocessing, feature selection, model training, and model testing. Several such guidelines have become available in recent years [66]. Although these guidelines emphasize medical imaging research and are not specific to movement disorders, some newer guidelines are being developed for diagnostic accuracy and prediction model studies. For example, STARD-AI and TRIPOD-AI are extensions of existing guidelines that may provide guidance relevant to movement disorders studies [66]. Ultimately, domain-specific guidelines will be needed to address AI-based kinematic and electrophysiology data analysis for diagnostic accuracy and quantitative phenotyping in movement disorders. By adopting these measures, researchers can reduce bias and enhance the fairness of AI models, championing responsible AI [67].

This study has several limitations, including restricting the extraction of performance metrics to accuracy, correlation coefficient, determination coefficient, and AUROC. Other metrics, such as precision, recall, and F1 score for classification tasks, as well as mean absolute error and mean squared error for regression tasks, could provide additional information about the performance of AI algorithms. These metrics certainly speak to the clinical utility of research findings but were not considered, as evaluating method performance was not the focus of this review. We decided to prioritize the homogeneity of data extraction and focus on the most reported metrics available, which might best reflect the quality of methods, study design, and implementation. Another limitation in interpreting and comparing results across studies is the variability in data types (e.g., single vs. multi-channel, time-series vs. spatial-series), which dictate how a given AI method may be specifically implemented (e.g., a time-series CNN to evaluate IMU data vs. a 2D or 3D CNN to evaluate MRI data). These methodological differences can significantly affect model performance, making direct comparisons across studies challenging. Hence, we chose to aggregate data by dataset and not AI method. Another limitation of this review is the use of an automated article screening pipeline. This, in principle, increases reproducibility, as it reduces human error in title screening, which was needed due to the large number of articles found with broad search terms, but it may have overlooked relevant studies with missing keywords in the title. We aimed to reduce this caveat by randomly screening a sample of these articles, finding no inadequately excluded articles by the automated title screener. However, only 20% of titles were evaluated in our random screening, so it is possible that articles that were not manually reviewed were incorrectly excluded. 
Finally, QUADAS-2 is not tailored to evaluate bias in AI-based accuracy studies, which may have inflated the perceived risk of bias. To our knowledge, no specific guidelines yet exist for this task; QUADAS-AI is under development [68]. Such guidelines should emphasize reporting of the critical steps of AI model development and testing, including data collection and preprocessing, model selection and computational considerations, model training procedure, model evaluation, and model generalizability and interpretability.

5. Conclusions

This systematic review demonstrates a growing enthusiasm for research using AI algorithms to investigate hyperkinetic movement disorders, most commonly for diagnosis and prognosis, tracking disease progression, and predicting outcomes. Datasets spanning ataxia, chorea, dystonia, tics, and tremor were analyzed, and AI methods were compared against ground-truth assessments to characterize diagnostic and quantitative phenotyping performance. In 36 studies, diagnostic accuracy ranged from 56% to 100%, while 10 studies reported correlation coefficients from 0.54 to 0.99 for quantitative phenotyping. Performance metric reporting was heterogeneous: accuracy was often reported, while the correlation coefficient was seldom reported. Most studies had at least one feature at high risk of bias, possibly due to inadequate reporting or intrinsic research design flaws. Our results highlight the need to adopt rigorous AI-based research guidelines to minimize reporting heterogeneity and bolster the clinical interpretability of results from these studies and, ultimately, their clinical translation.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/jcm13237009/s1, Table S1. Search Terms for Embase and PubMed.

Author Contributions

J.A.V. contributed to the conception of this review. J.A.V. and S.Y. contributed to the literature search, data collection, and data analysis. J.A.V., S.Y., K.X., C.A.E., M.S. and L.H.H. contributed to data interpretation. J.A.V. contributed to the writing of this review. S.Y., K.X., C.A.E., M.S. and L.H.H. contributed to the critical revision of the manuscript for important intellectual content. All authors have read and agreed to the published version of the manuscript, had full access to all the data in the review, and ensured that questions related to the accuracy or integrity of any part of the work were appropriately investigated and resolved.

Funding

This work was supported by the National Institutes of Health (1T32NS091006-10) and the Institute for Translational Medicine and Therapeutics of the Perelman School of Medicine at the University of Pennsylvania.

Acknowledgments

The authors thank Brian Litt for his valuable comments.

Conflicts of Interest

J.A.V.: No financial disclosures. S.Y.: No financial disclosures. K.X.: No financial disclosures. C.A.E.: received grant support from the National Institute of Neurological Disorders and Stroke of the National Institutes of Health (K23NS121520) and consulting fees from Epiminder. M.S.: received consulting fees from Medtronic, Boston Scientific, and Aspen Neuroscience, and clinical trial funding from Abbvie, Supernus, Bial, Scion, and UCB. L.H.H.: received consulting fees from Medtronic.

References

- Duker, A.P.; Espay, A.J. The Phenomenology of Movement Disorders. In Movement Disorders Curricula; Springer: Vienna, Austria, 2017; pp. 29–41. [Google Scholar]

- Chandrabhatla, A.S.; Pomeraniec, I.J.; Ksendzovsky, A. Co-Evolution of Machine Learning and Digital Technologies to Improve Monitoring of Parkinson’s Disease Motor Symptoms. NPJ Digit. Med. 2022, 5, 32. [Google Scholar] [CrossRef] [PubMed]

- Calhoun, V. Data-Driven Approaches for Identifying Links between Brain Structure and Function in Health and Disease. Dialogues Clin. Neurosci. 2018, 20, 87–99. [Google Scholar] [CrossRef]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep Learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef] [PubMed]

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G.; PRISMA Group. Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement. Ann. Intern. Med. 2009, 151, 264–269. [Google Scholar] [CrossRef]

- Whiting, P.F. QUADAS-2: A Revised Tool for the Quality Assessment of Diagnostic Accuracy Studies. Ann. Intern. Med. 2011, 155, 529. [Google Scholar] [CrossRef] [PubMed]

- Dominguez-Vega, Z.T.; Dubber, D.; Elting, J.W.J.; Sival, D.A.; Maurits, N.M. Instrumented Classification of Patients with Early Onset Ataxia or Developmental Coordination Disorder and Healthy Control Children Combining Information from Three Upper Limb SARA Tests. Eur. J. Paediatr. Neurol. 2021, 34, 74–83. [Google Scholar] [CrossRef]

- Ngo, T.; Pathirana, P.N.; Horne, M.K.; Power, L.; Szmulewicz, D.J.; Milne, S.C.; Corben, L.A.; Roberts, M.; Delatycki, M.B. Balance Deficits Due to Cerebellar Ataxia: A Machine Learning and Cloud-Based Approach. IEEE Trans. Biomed. Eng. 2021, 68, 1507–1517. [Google Scholar] [CrossRef]

- Ngo, T.; Nguyen, D.C.; Pathirana, P.N.; Corben, L.A.; Delatycki, M.B.; Horne, M.; Szmulewicz, D.J.; Roberts, M. Federated Deep Learning for the Diagnosis of Cerebellar Ataxia: Privacy Preservation and Auto-Crafted Feature Extractor. IEEE Trans. Neural Syst. Rehabil. Eng. 2022, 30, 803–811. [Google Scholar] [CrossRef]

- Schultz, B.G.; Joukhadar, Z.; Nattala, U.; del Mar Quiroga, M.; Noffs, G.; Rojas, S.; Reece, H.; Van Der Walt, A.; Vogel, A.P. Disease Delineation for Multiple Sclerosis, Friedreich Ataxia, and Healthy Controls Using Supervised Machine Learning on Speech Acoustics. IEEE Trans. Neural Syst. Rehabil. Eng. 2023, 31, 4278–4285. [Google Scholar] [CrossRef]

- Kashyap, B.; Horne, M.; Pathirana, P.N.; Power, L.; Szmulewicz, D. Automated Topographic Prominence Based Quantitative Assessment of Speech Timing in Cerebellar Ataxia. Biomed. Signal Process. Control 2020, 57, 101759. [Google Scholar] [CrossRef]

- Nunes, A.S.; Kozhemiako, N.; Stephen, C.D.; Schmahmann, J.D.; Khan, S.; Gupta, A.S. Automatic Classification and Severity Estimation of Ataxia from Finger Tapping Videos. Front. Neurol. 2022, 12, 795258. [Google Scholar] [CrossRef] [PubMed]

- Rojas, F.; García, R.V.; González, J.; Velázquez, L.; Becerra, R.; Valenzuela, O.; San Román, B. Identification of Saccadic Components in Spinocerebellar Ataxia Applying an Independent Component Analysis Algorithm. Neurocomputing 2013, 121, 53–63. [Google Scholar] [CrossRef]

- Hernandez-Castillo, C.R.; Galvez, V.; Morgado-Valle, C.; Fernandez-Ruiz, J. Whole-Brain Connectivity Analysis and Classification of Spinocerebellar Ataxia Type 7 by Functional MRI. Cerebellum Ataxias 2014, 1, 2. [Google Scholar] [CrossRef] [PubMed][Green Version]

- Hu, J.; Chen, X.; Li, M.; Xu, H.-L.; Huang, Z.; Chen, N.; Tu, Y.; Chen, Q.; Gan, S.; Cao, D. Pattern of Cerebellar Grey Matter Loss Associated with Ataxia Severity in Spinocerebellar Ataxias Type 3: A Multi-Voxel Pattern Analysis. Brain Imaging Behav. 2022, 16, 379–388. [Google Scholar] [CrossRef] [PubMed]

- Krishna, R.; Pathirana, P.N.; Horne, M.; Power, L.; Szmulewicz, D.J. Quantitative Assessment of Cerebellar Ataxia, through Automated Limb Functional Tests. J. Neuroeng. Rehabil. 2019, 16, 31. [Google Scholar] [CrossRef]

- Ru, D.; Li, J.; Xie, O.; Peng, L.; Jiang, H.; Qiu, R. Explainable Artificial Intelligence Based on Feature Optimization for Age at Onset Prediction of Spinocerebellar Ataxia Type 3. Front. Neuroinform. 2022, 16, 978630. [Google Scholar] [CrossRef]

- Ru, D.; Li, J.; Peng, L.; Jiang, H.; Qiu, R. Visual Prediction of the Progression of Spinocerebellar Ataxia Type 3 Based on Machine Learning. Curr. Bioinform. 2023, 18, 830–841. [Google Scholar] [CrossRef]

- Kadirvelu, B.; Gavriel, C.; Nageshwaran, S.; Chan, J.P.K.; Nethisinghe, S.; Athanasopoulos, S.; Ricotti, V.; Voit, T.; Giunti, P.; Festenstein, R.; et al. A Wearable Motion Capture Suit and Machine Learning Predict Disease Progression in Friedreich’s Ataxia. Nat. Med. 2023, 29, 86–94. [Google Scholar] [CrossRef]

- Klöppel, S.; Chu, C.; Tan, G.C.; Draganski, B.; Johnson, H.; Paulsen, J.S.; Kienzle, W.; Tabrizi, S.J.; Ashburner, J.; Frackowiak, R.S.J. Automatic Detection of Preclinical Neurodegeneration. Neurology 2009, 72, 426–431. [Google Scholar] [CrossRef]

- Lavrador, R.; Júlio, F.; Januário, C.; Castelo-Branco, M.; Caetano, G. Classification of Huntington’s Disease Stage with Features Derived from Structural and Diffusion-Weighted Imaging. J. Pers. Med. 2022, 12, 704. [Google Scholar] [CrossRef]

- Rizk-Jackson, A.; Stoffers, D.; Sheldon, S.; Kuperman, J.; Dale, A.; Goldstein, J.; Corey-Bloom, J.; Poldrack, R.A.; Aron, A.R. Evaluating Imaging Biomarkers for Neurodegeneration in Pre-Symptomatic Huntington’s Disease Using Machine Learning Techniques. Neuroimage 2011, 56, 788–796. [Google Scholar] [CrossRef] [PubMed]

- De Tommaso, M.; De Carlo, F.; Difruscolo, O.; Massafra, R.; Sciruicchio, V.; Bellotti, R. Detection of Subclinical Brain Electrical Activity Changes in Huntington’s Disease Using Artificial Neural Networks. Clin. Neurophysiol. 2003, 114, 1237–1245. [Google Scholar] [CrossRef] [PubMed]

- Odish, O.F.F.; Johnsen, K.; van Someren, P.; Roos, R.A.C.; van Dijk, J.G. EEG May Serve as a Biomarker in Huntington’s Disease Using Machine Learning Automatic Classification. Sci. Rep. 2018, 8, 16090. [Google Scholar] [CrossRef] [PubMed]

- Bennasar, M.; Hicks, Y.A.; Clinch, S.P.; Jones, P.; Holt, C.; Rosser, A.; Busse, M. Automated Assessment of Movement Impairment in Huntington’s Disease. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 2062–2069. [Google Scholar] [CrossRef] [PubMed]

- Mannini, A.; Trojaniello, D.; Cereatti, A.; Sabatini, A. A Machine Learning Framework for Gait Classification Using Inertial Sensors: Application to Elderly, Post-Stroke and Huntington’s Disease Patients. Sensors 2016, 16, 134. [Google Scholar] [CrossRef]

- Miranda, Â.; Lavrador, R.; Júlio, F.; Januário, C.; Castelo-Branco, M.; Caetano, G. Classification of Huntington’s Disease Stage with Support Vector Machines: A Study on Oculomotor Performance. Behav. Res. Methods 2016, 48, 1667–1677.

- Ko, J.; Furby, H.; Ma, X.; Long, J.D.; Lu, X.-Y.; Slowiejko, D.; Gandhy, R. Clustering and Prediction of Disease Progression Trajectories in Huntington’s Disease: An Analysis of Enroll-HD Data Using a Machine Learning Approach. Front. Neurol. 2023, 13, 1034269.

- Mohan, A.; Sun, Z.; Ghosh, S.; Li, Y.; Sathe, S.; Hu, J.; Sampaio, C. A Machine-Learning Derived Huntington’s Disease Progression Model: Insights for Clinical Trial Design. Mov. Disord. 2022, 37, 553–562.

- Zhang, S.; Poon, S.K.; Vuong, K.; Sneddon, A.; Loy, C.T. A Deep Learning-Based Approach for Gait Analysis in Huntington Disease. Stud. Health Technol. Inform. 2019, 264, 477–481.

- Loram, I.; Siddique, A.; Sanchez, M.B.; Harding, P.; Silverdale, M.; Kobylecki, C.; Cunningham, R. Objective Analysis of Neck Muscle Boundaries for Cervical Dystonia Using Ultrasound Imaging and Deep Learning. IEEE J. Biomed. Health Inform. 2020, 24, 1016–1027.

- Valeriani, D.; Simonyan, K. A Microstructural Neural Network Biomarker for Dystonia Diagnosis Identified by a DystoniaNet Deep Learning Platform. Proc. Natl. Acad. Sci. USA 2020, 117, 26398–26405.

- Yao, Y.; Powell, M.; White, J.; Feng, J.; Fu, Q.; Zhang, P.; Schmidt, D.C. A Multi-Stage Transfer Learning Strategy for Diagnosing a Class of Rare Laryngeal Movement Disorders. Comput. Biol. Med. 2023, 166, 107534.

- Vu, J.P.; Cisneros, E.; Lee, H.Y.; Le, L.; Chen, Q.; Guo, X.A.; Rouzbehani, R.; Jankovic, J.; Factor, S.; Goetz, C.G.; et al. Head Tremor in Cervical Dystonia: Quantifying Severity with Computer Vision. J. Neurol. Sci. 2022, 434, 120154.

- Yousef, A.M.; Deliyski, D.D.; Zayernouri, M.; Zacharias, S.R.C.; Naghibolhosseini, M. Deep Learning-Based Analysis of Glottal Attack and Offset Times in Adductor Laryngeal Dystonia. J. Voice 2023, in press.

- Conelea, C.; Liang, H.; DuBois, M.; Raab, B.; Kellman, M.; Wellen, B.; Jacob, S.; Wang, S.; Sun, J.; Lim, K. Automated Quantification of Eye Tics Using Computer Vision and Deep Learning Techniques. Mov. Disord. 2024, 39, 183–191.

- Benito-León, J.; Louis, E.D.; Mato-Abad, V.; Sánchez-Ferro, A.; Romero, J.P.; Matarazzo, M.; Serrano, J.I. A Data Mining Approach for Classification of Orthostatic and Essential Tremor Based on MRI-derived Brain Volume and Cortical Thickness. Ann. Clin. Transl. Neurol. 2019, 6, 2531–2543.

- Zhang, X.; Chen, H.; Tao, L.; Zhang, X.; Wang, H.; He, W.; Li, Q.; Xiao, P.; Xu, B.; Gui, H.; et al. Combined Multivariate Pattern Analysis with Frequency-Dependent Intrinsic Brain Activity to Identify Essential Tremor. Neurosci. Lett. 2022, 776, 136566.

- Zheng, X.; Vieira, A.; Marcos, S.L.; Aladro, Y.; Ordieres-Meré, J. Activity-Aware Essential Tremor Evaluation Using Deep Learning Method Based on Acceleration Data. Park. Relat. Disord. 2019, 58, 17–22.

- Serrano, J.I.; Romero, J.P.; Castillo, M.D.D.; Rocon, E.; Louis, E.D.; Benito-León, J. A Data Mining Approach Using Cortical Thickness for Diagnosis and Characterization of Essential Tremor. Sci. Rep. 2017, 7, 2190.

- Li, Q.; Tao, L.; Xiao, P.; Gui, H.; Xu, B.; Zhang, X.; Zhang, X.; Chen, H.; Wang, H.; He, W.; et al. Combined Brain Network Topological Metrics with Machine Learning Algorithms to Identify Essential Tremor. Front. Neurosci. 2022, 16, 1035153.

- Ferreira, G.A.S.; Teixeira, J.L.S.; Rosso, A.L.Z.; de Sá, A.M.F.L.M. On the Classification of Tremor Signals into Dyskinesia, Parkinsonian Tremor, and Essential Tremor by Using Machine Learning Techniques. Biomed. Signal Process. Control 2022, 73, 103430.

- Ma, C.; Li, D.; Pan, L.; Li, X.; Yin, C.; Li, A.; Zhang, Z.; Zong, R. Quantitative Assessment of Essential Tremor Based on Machine Learning Methods Using Wearable Device. Biomed. Signal Process. Control 2022, 71, 103244.

- Pascual-Valdunciel, A.; Lopo-Martinez, V.; Sendra-Arranz, R.; Gonzalez-Sanchez, M.; Perez-Sanchez, J.R.; Grandas, F.; Torricelli, D.; Moreno, J.C.; Barroso, F.O.; Pons, J.L.; et al. Prediction of Pathological Tremor Signals Using Long Short-Term Memory Neural Networks. IEEE J. Biomed. Health Inform. 2022, 26, 5930–5941.

- Piepjohn, P.; Bald, C.; Kuhlenbäumer, G.; Becktepe, J.S.; Deuschl, G.; Schmidt, G. Real-Time Classification of Movement Patterns of Tremor Patients. Biomed. Eng./Biomed. Tech. 2022, 67, 119–130.

- Hossen, A. Discrimination between Parkinsonian Tremor and Essential Tremor Using Artificial Neural Network with Hybrid Features. Technol. Health Care 2022, 30, 691–702.

- Palmes, P.; Ang, W.T.; Widjaja, F.; Tan, L.C.; Au, W.L. Pattern Mining of Multichannel SEMG for Tremor Classification. IEEE Trans. Biomed. Eng. 2010, 57, 2795–2805.

- Samaee, S.; Kobravi, H.R. Predicting the Occurrence of Wrist Tremor Based on Electromyography Using a Hidden Markov Model and Entropy Based Learning Algorithm. Biomed. Signal Process. Control 2020, 57, 101739.

- Balachandar, A.; Algarni, M.; Oliveira, L.; Marsili, L.; Merola, A.; Sturchio, A.; Espay, A.J.; Hutchison, W.D.; Balasubramaniam, A.; Rudzicz, F.; et al. Are Smartphones and Machine Learning Enough to Diagnose Tremor? J. Neurol. 2022, 269, 6104–6115.

- Suppa, A.; Asci, F.; Saggio, G.; Di Leo, P.; Zarezadeh, Z.; Ferrazzano, G.; Ruoppolo, G.; Berardelli, A.; Costantini, G. Voice Analysis with Machine Learning: One Step Closer to an Objective Diagnosis of Essential Tremor. Mov. Disord. 2021, 36, 1401–1410.

- Wang, X.; Garg, S.; Tran, S.N.; Bai, Q.; Alty, J. Hand Tremor Detection in Videos with Cluttered Background Using Neural Network Based Approaches. Health Inf. Sci. Syst. 2021, 9, 30.

- Wang, Y.; Yang, J.; Cai, M.; Liu, X.; Lu, K.; Lou, Y.; Li, Z. Application of Optimized Convolutional Neural Networks for Early Aided Diagnosis of Essential Tremor: Automatic Handwriting Recognition and Feature Analysis. Med. Eng. Phys. 2023, 113, 103962.

- Lopez-de-Ipina, K.; Solé-Casals, J.; Faúndez-Zanuy, M.; Calvo, P.M.; Sesa, E.; Roure, J.; Martinez-de-Lizarduy, U.; Beitia, B.; Fernández, E.; Iradi, J.; et al. Automatic Analysis of Archimedes’ Spiral for Characterization of Genetic Essential Tremor Based on Shannon’s Entropy and Fractal Dimension. Entropy 2018, 20, 531.

- Hamilton, D.; List, A.; Butler, T.; Hogg, S.; Cawley, M. Discrimination between Parkinsonian Syndrome and Essential Tremor Using Artificial Neural Network Classification of Quantified DaTSCAN Data. Nucl. Med. Commun. 2006, 27, 939–944.

- Bianco, M.G.; Quattrone, A.; Sarica, A.; Aracri, F.; Calomino, C.; Caligiuri, M.E.; Novellino, F.; Nisticò, R.; Buonocore, J.; Crasà, M.; et al. Cortical Involvement in Essential Tremor with and without Rest Tremor: A Machine Learning Study. J. Neurol. 2023, 270, 4004–4012.

- Gao, Y.; Ding, L.; Liu, J.; Wang, X.; Meng, Q. Exploring the Diagnostic Markers of Essential Tremor: A Study Based on Machine Learning Algorithms. Open Life Sci. 2023, 18, 20220622.

- Houston, B.; Thompson, M.; Ko, A.; Chizeck, H. A Machine-Learning Approach to Volitional Control of a Closed-Loop Deep Brain Stimulation System. J. Neural Eng. 2019, 16, 016004.

- Pascual-Valdunciel, A.; Lopo-Martínez, V.; Beltrán-Carrero, A.J.; Sendra-Arranz, R.; González-Sánchez, M.; Pérez-Sánchez, J.R.; Grandas, F.; Farina, D.; Pons, J.L.; Oliveira Barroso, F.; et al. Classification of Kinematic and Electromyographic Signals Associated with Pathological Tremor Using Machine and Deep Learning. Entropy 2023, 25, 114.

- Prasad, S.; Pandey, U.; Saini, J.; Ingalhalikar, M.; Pal, P.K. Atrophy of Cerebellar Peduncles in Essential Tremor: A Machine Learning–Based Volumetric Analysis. Eur. Radiol. 2019, 29, 7037–7046.

- Saccà, V.; Novellino, F.; Salsone, M.; Abou Jaoude, M.; Quattrone, A.; Chiriaco, C.; Madrigal, J.L.M.; Quattrone, A. Challenging Functional Connectivity Data: Machine Learning Application on Essential Tremor Recognition. Neurol. Sci. 2023, 44, 199–207.

- Purrer, V.; Pohl, E.; Lueckel, J.M.; Borger, V.; Sauer, M.; Radbruch, A.; Wüllner, U.; Schmeel, F.C. Artificial-Intelligence-Based MRI Brain Volumetry in Patients with Essential Tremor and Tremor-Dominant Parkinson’s Disease. Brain Commun. 2023, 5, fcad271.

- Jayakumar, S.; Sounderajah, V.; Normahani, P.; Harling, L.; Markar, S.R.; Ashrafian, H.; Darzi, A. Quality Assessment Standards in Artificial Intelligence Diagnostic Accuracy Systematic Reviews: A Meta-Research Study. NPJ Digit. Med. 2022, 5, 11.

- Dzialas, V.; Doering, E.; Eich, H.; Strafella, A.P.; Vaillancourt, D.E.; Simonyan, K.; van Eimeren, T. Houston, We Have AI Problem! Quality Issues with Neuroimaging-Based Artificial Intelligence in Parkinson’s Disease: A Systematic Review. Mov. Disord. 2024, early view.

- Ali, S.; Abuhmed, T.; El-Sappagh, S.; Muhammad, K.; Alonso-Moral, J.M.; Confalonieri, R.; Guidotti, R.; Del Ser, J.; Díaz-Rodríguez, N.; Herrera, F. Explainable Artificial Intelligence (XAI): What We Know and What Is Left to Attain Trustworthy Artificial Intelligence. Inf. Fusion 2023, 99, 101805.

- Ge, W.; Lueck, C.; Suominen, H.; Apthorp, D. Has Machine Learning Over-Promised in Healthcare? Artif. Intell. Med. 2023, 139, 102524.

- Klontzas, M.E.; Gatti, A.A.; Tejani, A.S.; Kahn, C.E. AI Reporting Guidelines: How to Select the Best One for Your Research. Radiol. Artif. Intell. 2023, 5, e230055.

- Stahl, B.C. Embedding Responsibility in Intelligent Systems: From AI Ethics to Responsible AI Ecosystems. Sci. Rep. 2023, 13, 7586.

- Guni, A.; Sounderajah, V.; Whiting, P.; Bossuyt, P.; Darzi, A.; Ashrafian, H. Revised Tool for the Quality Assessment of Diagnostic Accuracy Studies Using AI (QUADAS-AI): Protocol for a Qualitative Study. JMIR Res. Protoc. 2024, 13, e58202.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).