Application of Artificial Intelligence to the Diagnosis and Therapy of Nasopharyngeal Carcinoma

Abstract

1. Introduction

2. AI and Its Technologies

3. Screening of Studies

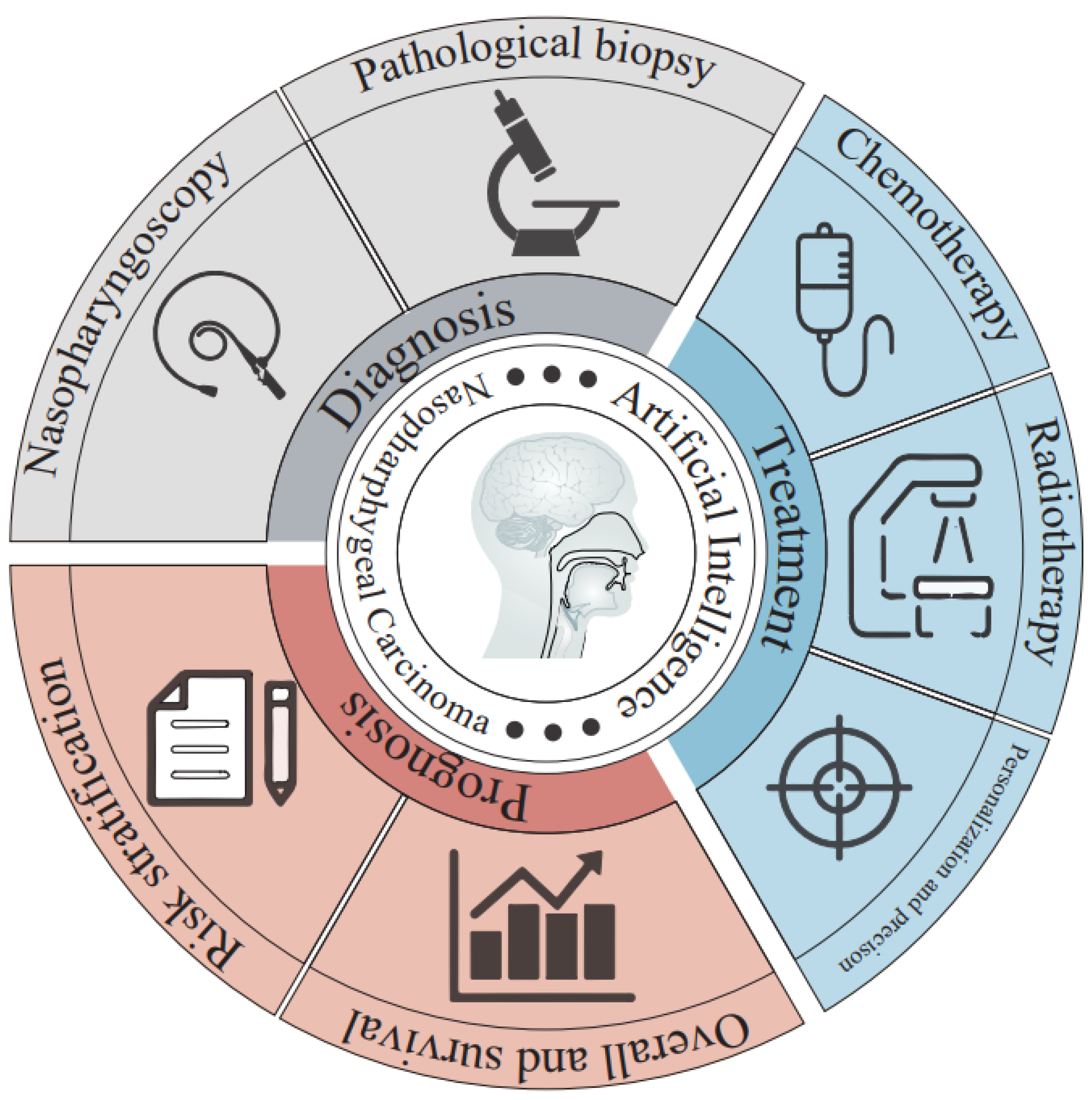

4. Applications of AI to NPC

4.1. AI and NPC Diagnosis

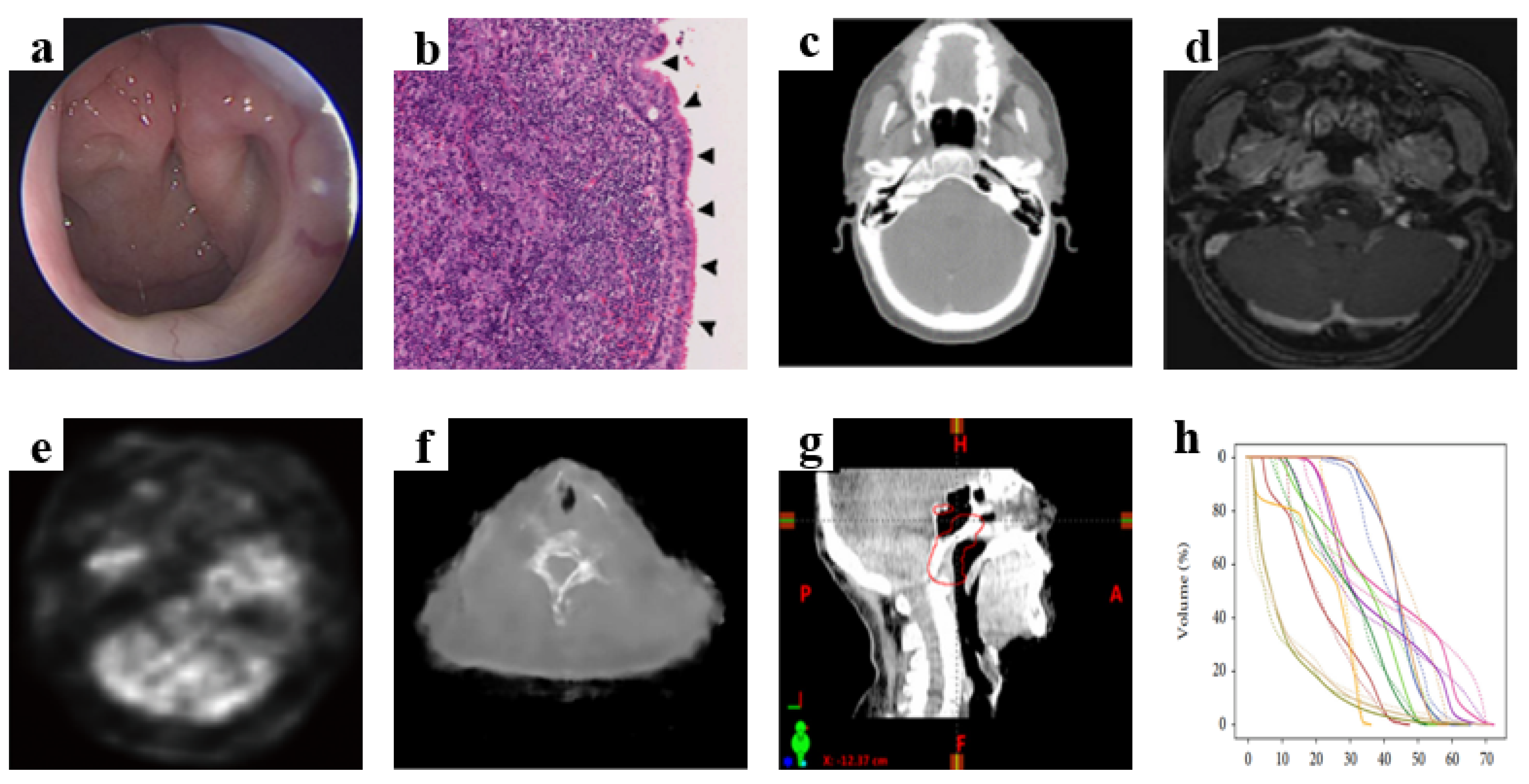

4.1.1. AI Application in Nasopharyngoscopy

4.1.2. AI Application in Pathological Biopsy

4.2. AI and NPC Therapy

4.2.1. AI Application in NPC Chemotherapy

4.2.2. AI Application in NPC Radiotherapy

4.2.3. AI Application in the Personalized and Precise Treatment of NPC

4.3. AI and NPC Prognosis Prediction

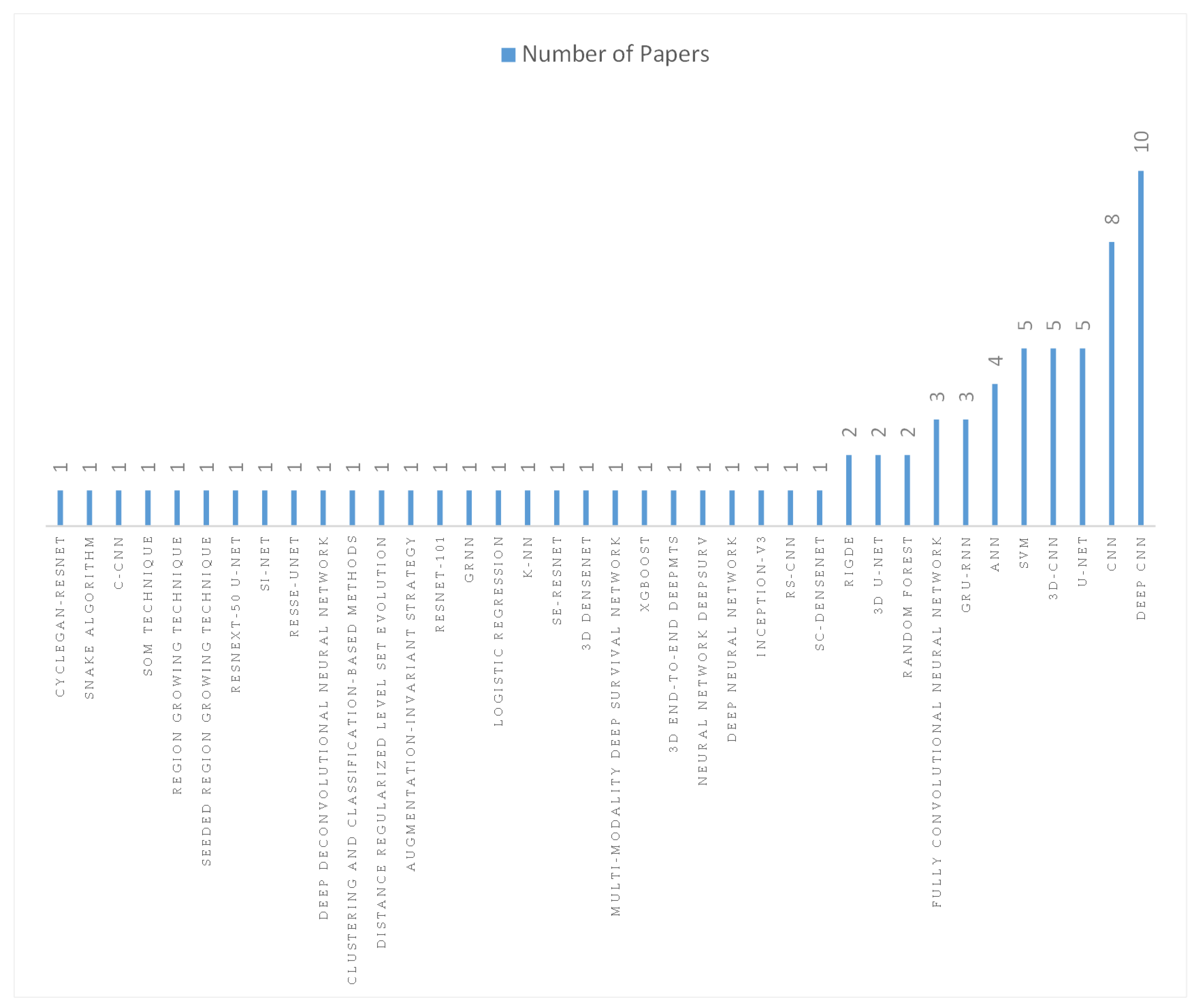

4.4. Current State-of-the-Art AI Algorithms for NPC Diagnosis and Treatment

4.5. Common Training and Testing Methodologies

5. Current Challenges

6. Conclusions and Prospect

Funding

Data Availability Statement

Conflicts of Interest

References

- Chen, Y.P.; Chan, A.T.C.; Le, Q.T.; Blanchard, P.; Sun, Y.; Ma, J. Nasopharyngeal carcinoma. Lancet 2019, 394, 64–80.

- Bossi, P.; Chan, A.T.; Licitra, L.; Trama, A.; Orlandi, E.; Hui, E.P.; Halámková, J.; Mattheis, S.; Baujat, B.; Hardillo, J.; et al. Nasopharyngeal carcinoma: ESMO-EURACAN Clinical Practice Guidelines for diagnosis, treatment and follow-up. Ann. Oncol. 2021, 32, 452–465.

- Tang, L.L.; Chen, Y.P.; Chen, C.B.; Chen, M.Y.; Chen, N.Y.; Chen, X.Z.; Du, X.J.; Fang, W.F.; Feng, M.; Gao, J.; et al. The Chinese Society of Clinical Oncology (CSCO) clinical guidelines for the diagnosis and treatment of nasopharyngeal carcinoma. Cancer Commun. 2021, 41, 1195–1227.

- Liang, H.; Xiang, Y.Q.; Lv, X.; Xie, C.Q.; Cao, S.M.; Wang, L.; Qian, C.N.; Yang, J.; Ye, Y.F.; Gan, F.; et al. Survival impact of waiting time for radical radiotherapy in nasopharyngeal carcinoma: A large institution-based cohort study from an endemic area. Eur. J. Cancer 2017, 73, 48–60.

- Lee, N.; Harris, J.; Garden, A.S.; Straube, W.; Glisson, B.; Xia, P.; Bosch, W.; Morrison, W.H.; Quivey, J.; Thorstad, W.; et al. Intensity-modulated radiation therapy with or without chemotherapy for nasopharyngeal carcinoma: Radiation therapy oncology group phase II trial 0225. J. Clin. Oncol. 2009, 27, 3684–3690.

- Sun, X.; Su, S.; Chen, C.; Han, F.; Zhao, C.; Xiao, W.; Deng, X.; Huang, S.; Lin, C.; Lu, T. Long-term outcomes of intensity-modulated radiotherapy for 868 patients with nasopharyngeal carcinoma: An analysis of survival and treatment toxicities. Radiother. Oncol. 2014, 110, 398–403.

- Yi, J.L.; Gao, L.; Huang, X.D.; Li, S.Y.; Luo, J.W.; Cai, W.M.; Xiao, J.P.; Xu, G.Z. Nasopharyngeal carcinoma treated by radical radiotherapy alone: Ten-year experience of a single institution. Int. J. Radiat. Oncol. Biol. Phys. 2006, 65, 161–168.

- Shalev-Shwartz, S.; Ben-David, S. Understanding Machine Learning: From Theory to Algorithms; Cambridge University Press: Cambridge, UK, 2014.

- Jordan, M.I.; Mitchell, T.M. Machine learning: Trends, perspectives, and prospects. Science 2015, 349, 255–260.

- Hamet, P.; Tremblay, J. Artificial intelligence in medicine. Metabolism 2017, 69s, S36–S40.

- Chen, Z.H.; Lin, L.; Wu, C.F.; Li, C.F.; Xu, R.H.; Sun, Y. Artificial intelligence for assisting cancer diagnosis and treatment in the era of precision medicine. Cancer Commun. 2021, 41, 1100–1115.

- Yang, C.; Jiang, Z.; Cheng, T.; Zhou, R.; Wang, G.; Jing, D.; Bo, L.; Huang, P.; Wang, J.; Zhang, D.; et al. Radiomics for Predicting Response of Neoadjuvant Chemotherapy in Nasopharyngeal Carcinoma: A Systematic Review and Meta-Analysis. Front. Oncol. 2022, 12, 893103.

- Li, S.; Deng, Y.Q.; Zhu, Z.L.; Hua, H.L.; Tao, Z.Z. A Comprehensive Review on Radiomics and Deep Learning for Nasopharyngeal Carcinoma Imaging. Diagnostics 2021, 11, 1523.

- Ng, W.T.; But, B.; Choi, H.C.W.; de Bree, R.; Lee, A.W.M.; Lee, V.H.F.; López, F.; Mäkitie, A.A.; Rodrigo, J.P.; Saba, N.F.; et al. Application of Artificial Intelligence for Nasopharyngeal Carcinoma Management—A Systematic Review. Cancer Manag. Res. 2022, 14, 339–366.

- Brody, H. Medical imaging. Nature 2013, 502, S81.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Ambinder, E.P. A history of the shift toward full computerization of medicine. J. Oncol. Pract. 2005, 1, 54–56.

- Shen, D.; Wu, G.; Suk, H.I. Deep Learning in Medical Image Analysis. Annu. Rev. Biomed. Eng. 2017, 19, 221–248.

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; van der Laak, J.A.W.M.; van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88.

- Chua, M.L.K.; Wee, J.T.S.; Hui, E.P.; Chan, A.T.C. Nasopharyngeal carcinoma. Lancet 2016, 387, 1012–1024.

- Vokes, E.E.; Liebowitz, D.N.; Weichselbaum, R.R. Nasopharyngeal carcinoma. Lancet 1997, 350, 1087–1091.

- Wei, W.I.; Sham, J.S. Nasopharyngeal carcinoma. Lancet 2005, 365, 2041–2054.

- Wong, L.M.; King, A.D.; Ai, Q.Y.H.; Lam, W.K.J.; Poon, D.M.C.; Ma, B.B.Y.; Chan, K.C.A.; Mo, F.K.F. Convolutional neural network for discriminating nasopharyngeal carcinoma and benign hyperplasia on MRI. Eur. Radiol. 2021, 31, 3856–3863.

- Ke, L.; Deng, Y.; Xia, W.; Qiang, M.; Chen, X.; Liu, K.; Jing, B.; He, C.; Xie, C.; Guo, X.; et al. Development of a self-constrained 3D DenseNet model in automatic detection and segmentation of nasopharyngeal carcinoma using magnetic resonance images. Oral Oncol. 2020, 110, 104862.

- Mohammed, M.A.; Abd Ghani, M.K.; Arunkumar, N.; Raed, H.; Mohamad, A.; Mohd, B. A real time computer aided object detection of Nasopharyngeal carcinoma using genetic algorithm and artificial neural network based on Haar feature fear. Future Gener. Comput. Syst. 2018, 89, 539–547.

- Mohammed, M.A.; Abd Ghani, M.K.; Arunkumar, N.; Hamed, R.I.; Mostafa, S.A.; Abdullah, M.K.; Burhanuddin, M.A. Decision support system for Nasopharyngeal carcinoma discrimination from endoscopic images using artificial neural network. J. Supercomput. 2020, 76, 1086–1104.

- Abd Ghani, M.K.; Mohammed, M.A.; Arunkumar, N.; Mostafa, S.; Ibrahim, D.A.; Abdullah, M.K.; Jaber, M.M.; Abdulhay, E.; Ramirez-Gonzalez, G.; Burhanuddin, M.A. Decision-level fusion scheme for Nasopharyngeal carcinoma identification using machine learning techniques. Neural Comput. Appl. 2020, 32, 625–638.

- Li, C.; Jing, B.; Ke, L.; Li, B.; Xia, W.; He, C.; Qian, C.; Zhao, C.; Mai, H.; Chen, M.; et al. Development and validation of an endoscopic images-based deep learning model for detection with nasopharyngeal malignancies. Cancer Commun. 2018, 38, 59.

- Xu, J.; Wang, J.; Bian, X.; Zhu, J.Q.; Tie, C.W.; Liu, X.; Zhou, Z.; Ni, X.G.; Qian, D. Deep Learning for Nasopharyngeal Carcinoma Identification Using Both White Light and Narrow-Band Imaging Endoscopy. Laryngoscope 2022, 132, 999–1007.

- Shu, C.; Yan, H.; Zheng, W.; Lin, K.; James, A.; Selvarajan, S.; Lim, C.M.; Huang, Z. Deep Learning-Guided Fiberoptic Raman Spectroscopy Enables Real-Time In Vivo Diagnosis and Assessment of Nasopharyngeal Carcinoma and Post-treatment Efficacy during Endoscopy. Anal. Chem. 2021, 93, 10898–10906.

- Chuang, W.Y.; Chang, S.H.; Yu, W.H.; Yang, C.K.; Yeh, C.J.; Ueng, S.H.; Liu, Y.J.; Chen, T.D.; Chen, K.H.; Hsieh, Y.Y.; et al. Successful Identification of Nasopharyngeal Carcinoma in Nasopharyngeal Biopsies Using Deep Learning. Cancers 2020, 12, 507.

- Diao, S.; Hou, J.; Yu, H.; Zhao, X.; Sun, Y.; Lambo, R.L.; Xie, Y.; Liu, L.; Qin, W.; Luo, W. Computer-Aided Pathologic Diagnosis of Nasopharyngeal Carcinoma Based on Deep Learning. Am. J. Pathol. 2020, 190, 1691–1700.

- Zhao, L.; Gong, J.; Xi, Y.; Xu, M.; Li, C.; Kang, X.; Yin, Y.; Qin, W.; Yin, H.; Shi, M. MRI-based radiomics nomogram may predict the response to induction chemotherapy and survival in locally advanced nasopharyngeal carcinoma. Eur. Radiol. 2020, 30, 537–546.

- Yang, Y.; Wang, M.; Qiu, K.; Wang, Y.; Ma, X. Computed tomography-based deep-learning prediction of induction chemotherapy treatment response in locally advanced nasopharyngeal carcinoma. Strahlenther. Onkol. 2022, 198, 183–193.

- Peng, H.; Dong, D.; Fang, M.J.; Li, L.; Tang, L.L.; Chen, L.; Li, W.F.; Mao, Y.P.; Fan, W.; Liu, L.Z.; et al. Prognostic Value of Deep Learning PET/CT-Based Radiomics: Potential Role for Future Individual Induction Chemotherapy in Advanced Nasopharyngeal Carcinoma. Clin. Cancer Res. 2019, 25, 4271–4279.

- Chen, X.; Yang, B.; Li, J.; Zhu, J.; Ma, X.; Chen, D.; Hu, Z.; Men, K.; Dai, J. A deep-learning method for generating synthetic kV-CT and improving tumor segmentation for helical tomotherapy of nasopharyngeal carcinoma. Phys. Med. Biol. 2021, 66, 224001.

- Li, Y.; Zhu, J.; Liu, Z.; Teng, J.; Xie, Q.; Zhang, L.; Liu, X.; Shi, J.; Chen, L. A preliminary study of using a deep convolution neural network to generate synthesized CT images based on CBCT for adaptive radiotherapy of nasopharyngeal carcinoma. Phys. Med. Biol. 2019, 64, 145010.

- Wang, Y.; Liu, C.; Zhang, X.; Deng, W. Synthetic CT Generation Based on T2 Weighted MRI of Nasopharyngeal Carcinoma (NPC) Using a Deep Convolutional Neural Network (DCNN). Front. Oncol. 2019, 9, 1333.

- Chen, S.; Peng, Y.; Qin, A.; Liu, Y.; Zhao, C.; Deng, X.; Deraniyagala, R.; Stevens, C.; Ding, X. MR-based synthetic CT image for intensity-modulated proton treatment planning of nasopharyngeal carcinoma patients. Acta Oncol. 2022, 61, 1417–1424.

- Fitton, I.; Cornelissen, S.A.; Duppen, J.C.; Steenbakkers, R.J.; Peeters, S.T.; Hoebers, F.J.; Kaanders, J.H.; Nowak, P.J.; Rasch, C.R.; van Herk, M. Semi-automatic delineation using weighted CT-MRI registered images for radiotherapy of nasopharyngeal cancer. Med. Phys. 2011, 38, 4662–4666.

- Ma, Z.; Zhou, S.; Wu, X.; Zhang, H.; Yan, W.; Sun, S.; Zhou, J. Nasopharyngeal carcinoma segmentation based on enhanced convolutional neural networks using multi-modal metric learning. Phys. Med. Biol. 2019, 64, 025005.

- Chen, H.; Qi, Y.; Yin, Y.; Li, T.; Liu, X.; Li, X.; Gong, G.; Wang, L. MMFNet: A multi-modality MRI fusion network for segmentation of nasopharyngeal carcinoma. Neurocomputing 2020, 394, 27–40.

- Zhao, L.; Lu, Z.; Jiang, J.; Zhou, Y.; Wu, Y.; Feng, Q. Automatic Nasopharyngeal Carcinoma Segmentation Using Fully Convolutional Networks with Auxiliary Paths on Dual-Modality PET-CT Images. J. Digit. Imaging 2019, 32, 462–470.

- Chanapai, W.; Ritthipravat, P. Adaptive thresholding based on SOM technique for semi-automatic NPC image segmentation. In Proceedings of the 2009 International Conference on Machine Learning and Applications, IEEE, Miami, FL, USA, 13–15 December 2009; pp. 504–508.

- Tatanun, C.; Ritthipravat, P.; Bhongmakapat, T.; Tuntiyatorn, L. Automatic segmentation of nasopharyngeal carcinoma from CT images: Region growing based technique. In Proceedings of the 2010 2nd International Conference on Signal Processing Systems, IEEE, Dalian, China, 5–7 July 2010; Volume 2, pp. 537–541.

- Chanapai, W.; Bhongmakapat, T.; Tuntiyatorn, L.; Ritthipravat, P. Nasopharyngeal carcinoma segmentation using a region growing technique. Int. J. Comput. Assist. Radiol. Surg. 2012, 7, 413–422.

- Bai, X.; Hu, Y.; Gong, G.; Yin, Y.; Xia, Y. A deep learning approach to segmentation of nasopharyngeal carcinoma using computed tomography. Biomed. Signal Process. Control 2021, 64, 102246.

- Daoud, B.; Morooka, K.; Kurazume, R.; Leila, F.; Mnejja, W.; Daoud, J. 3D segmentation of nasopharyngeal carcinoma from CT images using cascade deep learning. Comput. Med. Imaging Graph. 2019, 77, 101644.

- Li, S.; Xiao, J.; He, L.; Peng, X.; Yuan, X. The Tumor Target Segmentation of Nasopharyngeal Cancer in CT Images Based on Deep Learning Methods. Technol. Cancer Res. Treat. 2019, 18, 1533033819884561.

- Xue, X.; Qin, N.; Hao, X.; Shi, J.; Wu, A.; An, H.; Zhang, H.; Wu, A.; Yang, Y. Sequential and Iterative Auto-Segmentation of High-Risk Clinical Target Volume for Radiotherapy of Nasopharyngeal Carcinoma in Planning CT Images. Front. Oncol. 2020, 10, 1134.

- Jin, Z.; Li, X.; Shen, L.; Lang, J.; Li, J.; Wu, J.; Xu, P.; Duan, J. Automatic Primary Gross Tumor Volume Segmentation for Nasopharyngeal Carcinoma using ResSE-UNet. In Proceedings of the 2020 IEEE 33rd International Symposium on Computer-Based Medical Systems (CBMS), Rochester, MN, USA, 28–30 July 2020; pp. 585–590.

- Wang, X.; Yang, G.; Zhang, Y.; Zhu, L.; Xue, X.; Zhang, B.; Cai, C.; Jin, H.; Zheng, J.; Wu, J.; et al. Automated delineation of nasopharynx gross tumor volume for nasopharyngeal carcinoma by plain CT combining contrast-enhanced CT using deep learning. J. Radiat. Res. Appl. Sci. 2020, 13, 568–577.

- Men, K.; Chen, X.; Zhang, Y.; Zhang, T.; Dai, J.; Yi, J.; Li, Y. Deep Deconvolutional Neural Network for Target Segmentation of Nasopharyngeal Cancer in Planning Computed Tomography Images. Front. Oncol. 2017, 7, 315.

- Huang, W.; Chan, K.L.; Zhou, J. Region-based nasopharyngeal carcinoma lesion segmentation from MRI using clustering- and classification-based methods with learning. J. Digit. Imaging 2013, 26, 472–482.

- Kai-Wei, H.; Zhe-Yi, Z.; Qian, G.; Juan, Z.; Liu, C.; Ran, Y. Nasopharyngeal carcinoma segmentation via HMRF-EM with maximum entropy. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 2968–2972.

- Li, Q.; Xu, Y.; Chen, Z.; Liu, D.; Feng, S.T.; Law, M.; Ye, Y.; Huang, B. Tumor Segmentation in Contrast-Enhanced Magnetic Resonance Imaging for Nasopharyngeal Carcinoma: Deep Learning with Convolutional Neural Network. Biomed. Res. Int. 2018, 2018, 9128527.

- Lin, L.; Dou, Q.; Jin, Y.M.; Zhou, G.Q.; Tang, Y.Q.; Chen, W.L.; Su, B.A.; Liu, F.; Tao, C.J.; Jiang, N.; et al. Deep Learning for Automated Contouring of Primary Tumor Volumes by MRI for Nasopharyngeal Carcinoma. Radiology 2019, 291, 677–686.

- Guo, F.; Shi, C.; Li, X.; Wu, X.; Zhou, J.; Lv, J. Image segmentation of nasopharyngeal carcinoma using 3D CNN with long-range skip connection and multi-scale feature pyramid. Soft Comput. 2020, 24, 12671–12680.

- Ye, Y.; Cai, Z.; Huang, B.; He, Y.; Zeng, P.; Zou, G.; Deng, W.; Chen, H.; Huang, B. Fully-Automated Segmentation of Nasopharyngeal Carcinoma on Dual-Sequence MRI Using Convolutional Neural Networks. Front. Oncol. 2020, 10, 166.

- Luo, X.; Liao, W.; He, Y.; Tang, F.; Wu, M.; Shen, Y.; Huang, H.; Song, T.; Li, K.; Zhang, S.; et al. Deep learning-based accurate delineation of primary gross tumor volume of nasopharyngeal carcinoma on heterogeneous magnetic resonance imaging: A large-scale and multi-center study. Radiother. Oncol. 2023, 180, 109480.

- Li, Y.; Peng, H.; Dan, T.; Hu, Y.; Tao, G.; Cai, H. Coarse-to-fine Nasopharyngeal Carcinoma Segmentation in MRI via Multi-stage Rendering. In Proceedings of the 2020 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Seoul, Republic of Korea, 16–19 December 2020; pp. 623–628.

- Wong, L.M.; Ai, Q.Y.H.; Mo, F.K.F.; Poon, D.M.C.; King, A.D. Convolutional neural network in nasopharyngeal carcinoma: How good is automatic delineation for primary tumor on a non-contrast-enhanced fat-suppressed T2-weighted MRI? Jpn. J. Radiol. 2021, 39, 571–579.

- Liang, S.; Tang, F.; Huang, X.; Yang, K.; Zhong, T.; Hu, R.; Liu, S.; Yuan, X.; Zhang, Y. Deep-learning-based detection and segmentation of organs at risk in nasopharyngeal carcinoma computed tomographic images for radiotherapy planning. Eur. Radiol. 2019, 29, 1961–1967.

- Zhong, T.; Huang, X.; Tang, F.; Liang, S.; Deng, X.; Zhang, Y. Boosting-based Cascaded Convolutional Neural Networks for the Segmentation of CT Organs-at-risk in Nasopharyngeal Carcinoma. Med. Phys. 2019, 46, 5602–5611.

- Peng, Y.; Liu, Y.; Shen, G.; Chen, Z.; Chen, M.; Miao, J.; Zhao, C.; Deng, J.; Qi, Z.; Deng, X. Improved accuracy of auto-segmentation of organs at risk in radiotherapy planning for nasopharyngeal carcinoma based on fully convolutional neural network deep learning. Oral Oncol. 2023, 136, 106261.

- Zhao, W.; Zhang, D.; Mao, X. Application of Artificial Intelligence in Radiotherapy of Nasopharyngeal Carcinoma with Magnetic Resonance Imaging. J. Healthc. Eng. 2022, 2022, 4132989.

- Zhuang, Y.; Xie, Y.; Wang, L.; Huang, S.; Chen, L.X.; Wang, Y. DVH Prediction for VMAT in NPC with GRU-RNN: An Improved Method by Considering Biological Effects. Biomed. Res. Int. 2021, 2021, 2043830.

- Cao, W.; Zhuang, Y.; Chen, L.; Liu, X. Application of dose-volume histogram prediction in biologically related models for nasopharyngeal carcinomas treatment planning. Radiat. Oncol. 2020, 15, 216.

- Zhuang, Y.; Han, J.; Chen, L.; Liu, X. Dose-volume histogram prediction in volumetric modulated arc therapy for nasopharyngeal carcinomas based on uniform-intensity radiation with equal angle intervals. Phys. Med. Biol. 2019, 64, 23NT03.

- Yue, M.; Xue, X.; Wang, Z.; Lambo, R.L.; Zhao, W.; Xie, Y.; Cai, J.; Qin, W. Dose prediction via distance-guided deep learning: Initial development for nasopharyngeal carcinoma radiotherapy. Radiother. Oncol. 2022, 170, 198–204.

- Sun, Z.; Xia, X.; Fan, J.; Zhao, J.; Zhang, K.; Wang, J.; Hu, W. A hybrid optimization strategy for deliverable intensity-modulated radiotherapy plan generation using deep learning-based dose prediction. Med. Phys. 2022, 49, 1344–1356.

- Jiao, S.X.; Chen, L.X.; Zhu, J.H.; Wang, M.L.; Liu, X.W. Prediction of dose-volume histograms in nasopharyngeal cancer IMRT using geometric and dosimetric information. Phys. Med. Biol. 2019, 64, 23NT04.

- Chen, X.; Men, K.; Zhu, J.; Yang, B.; Li, M.; Liu, Z.; Yan, X.; Yi, J.; Dai, J. DVHnet: A deep learning-based prediction of patient-specific dose volume histograms for radiotherapy planning. Med. Phys. 2021, 48, 2705–2713.

- Zhang, B.; Lian, Z.; Zhong, L.; Zhang, X.; Dong, Y.; Chen, Q.; Zhang, L.; Mo, X.; Huang, W.; Yang, W.; et al. Machine-learning based MRI radiomics models for early detection of radiation-induced brain injury in nasopharyngeal carcinoma. BMC Cancer 2020, 20, 502.

- Bin, X.; Zhu, C.; Tang, Y.; Li, R.; Ding, Q.; Xia, W.; Tang, Y.; Tang, X.; Yao, D.; Tang, A. Nomogram Based on Clinical and Radiomics Data for Predicting Radiation-induced Temporal Lobe Injury in Patients with Non-metastatic Stage T4 Nasopharyngeal Carcinoma. Clin. Oncol. (R Coll. Radiol.) 2022, 34, e482–e492.

- Ren, W.; Liang, B.; Sun, C.; Wu, R.; Men, K.; Xu, Y.; Han, F.; Yi, J.; Qu, Y.; Dai, J. Dosiomics-based prediction of radiation-induced hypothyroidism in nasopharyngeal carcinoma patients. Phys. Med. 2021, 89, 219–225.

- Chao, M.; El Naqa, I.; Bakst, R.L.; Lo, Y.C.; Penagaricano, J.A. Cluster model incorporating heterogeneous dose distribution of partial parotid irradiation for radiotherapy induced xerostomia prediction with machine learning methods. Acta Oncol. 2022, 61, 842–848.

- Zhong, L.; Dong, D.; Fang, X.; Zhang, F.; Zhang, N.; Zhang, L.; Fang, M.; Jiang, W.; Liang, S.; Li, C.; et al. A deep learning-based radiomic nomogram for prognosis and treatment decision in advanced nasopharyngeal carcinoma: A multicentre study. EBioMedicine 2021, 70, 103522.

- Zhao, X.; Liang, Y.J.; Zhang, X.; Wen, D.X.; Fan, W.; Tang, L.Q.; Dong, D.; Tian, J.; Mai, H.Q. Deep learning signatures reveal multiscale intratumor heterogeneity associated with biological functions and survival in recurrent nasopharyngeal carcinoma. Eur. J. Nucl. Med. Mol. Imaging 2022, 49, 2972–2982.

- Jiang, R.; You, R.; Pei, X.Q.; Zou, X.; Zhang, M.X.; Wang, T.M.; Sun, R.; Luo, D.H.; Huang, P.Y.; Chen, Q.Y.; et al. Development of a ten-signature classifier using a support vector machine integrated approach to subdivide the M1 stage into M1a and M1b stages of nasopharyngeal carcinoma with synchronous metastases to better predict patients’ survival. Oncotarget 2016, 7, 3645–3657.

- Amin, M.B.; Greene, F.L.; Edge, S.B.; Compton, C.C.; Gershenwald, J.E.; Brookland, R.K.; Meyer, L.; Gress, D.M.; Byrd, D.R.; Winchester, D.P. The Eighth Edition AJCC Cancer Staging Manual: Continuing to build a bridge from a population-based to a more “personalized” approach to cancer staging. CA Cancer J. Clin. 2017, 67, 93–99.

- Cui, C.; Wang, S.; Zhou, J.; Dong, A.; Xie, F.; Li, H.; Liu, L. Machine Learning Analysis of Image Data Based on Detailed MR Image Reports for Nasopharyngeal Carcinoma Prognosis. Biomed. Res. Int. 2020, 2020, 8068913.

- Zhong, L.Z.; Fang, X.L.; Dong, D.; Peng, H.; Fang, M.J.; Huang, C.L.; He, B.X.; Lin, L.; Ma, J.; Tang, L.L.; et al. A deep learning MR-based radiomic nomogram may predict survival for nasopharyngeal carcinoma patients with stage T3N1M0. Radiother. Oncol. 2020, 151, 1–9.

- Zhuo, E.H.; Zhang, W.J.; Li, H.J.; Zhang, G.Y.; Jing, B.Z.; Zhou, J.; Cui, C.Y.; Chen, M.Y.; Sun, Y.; Liu, L.Z.; et al. Radiomics on multi-modalities MR sequences can subtype patients with non-metastatic nasopharyngeal carcinoma (NPC) into distinct survival subgroups. Eur. Radiol. 2019, 29, 5590–5599.

- Du, R.; Lee, V.H.; Yuan, H.; Lam, K.O.; Pang, H.H.; Chen, Y.; Lam, E.Y.; Khong, P.L.; Lee, A.W.; Kwong, D.L.; et al. Radiomics Model to Predict Early Progression of Nonmetastatic Nasopharyngeal Carcinoma after Intensity Modulation Radiation Therapy: A Multicenter Study. Radiol. Artif. Intell. 2019, 1, e180075.

- Li, S.; Wang, K.; Hou, Z.; Yang, J.; Ren, W.; Gao, S.; Meng, F.; Wu, P.; Liu, B.; Liu, J.; et al. Use of Radiomics Combined with Machine Learning Method in the Recurrence Patterns After Intensity-Modulated Radiotherapy for Nasopharyngeal Carcinoma: A Preliminary Study. Front. Oncol. 2018, 8, 648.

- Gonzalez, G.; Ash, S.Y.; Vegas-Sanchez-Ferrero, G.; Onieva Onieva, J.; Rahaghi, F.N.; Ross, J.C.; Diaz, A.; San Jose Estepar, R.; Washko, G.R.; for the COPDGene and ECLIPSE Investigators. Disease Staging and Prognosis in Smokers Using Deep Learning in Chest Computed Tomography. Am. J. Respir. Crit. Care Med. 2018, 197, 193–203.

- Du, R.; Cao, P.; Han, L.; Ai, Q.; King, A.D.; Vardhanabhuti, V. Deep convolution neural network model for automatic risk assessment of patients with non-metastatic Nasopharyngeal carcinoma. arXiv 2019, arXiv:1907.11861.

- Qiang, M.; Li, C.; Sun, Y.; Sun, Y.; Ke, L.; Xie, C.; Zhang, T.; Zou, Y.; Qiu, W.; Gao, M.; et al. A Prognostic Predictive System Based on Deep Learning for Locoregionally Advanced Nasopharyngeal Carcinoma. J. Natl. Cancer Inst. 2021, 113, 606–615.

- Zhang, L.; Wu, X.; Liu, J.; Zhang, B.; Mo, X.; Chen, Q.; Fang, J.; Wang, F.; Li, M.; Chen, Z.; et al. MRI-Based Deep-Learning Model for Distant Metastasis-Free Survival in Locoregionally Advanced Nasopharyngeal Carcinoma. J. Magn. Reson. Imaging 2021, 53, 167–178.

- Jing, B.; Deng, Y.; Zhang, T.; Hou, D.; Li, B.; Qiang, M.; Liu, K.; Ke, L.; Li, T.; Sun, Y.; et al. Deep learning for risk prediction in patients with nasopharyngeal carcinoma using multi-parametric MRIs. Comput. Methods Programs Biomed. 2020, 197, 105684.

- Chen, X.; Li, Y.; Li, X.; Cao, X.; Xiang, Y.; Xia, W.; Li, J.; Gao, M.; Sun, Y.; Liu, K.; et al. An interpretable machine learning prognostic system for locoregionally advanced nasopharyngeal carcinoma based on tumor burden features. Oral Oncol. 2021, 118, 105335.

- Meng, M.; Gu, B.; Bi, L.; Song, S.; Feng, D.D.; Kim, J. DeepMTS: Deep Multi-task Learning for Survival Prediction in Patients with Advanced Nasopharyngeal Carcinoma Using Pretreatment PET/CT. IEEE J. Biomed. Health Inf. 2022, 26, 4497–4507.

- Gu, B.; Meng, M.; Bi, L.; Kim, J.; Feng, D.D.; Song, S. Prediction of 5-year progression-free survival in advanced nasopharyngeal carcinoma with pretreatment PET/CT using multi-modality deep learning-based radiomics. Front. Oncol. 2022, 12, 899351.

- Zhang, F.; Zhong, L.Z.; Zhao, X.; Dong, D.; Yao, J.J.; Wang, S.Y.; Liu, Y.; Zhu, D.; Wang, Y.; Wang, G.J.; et al. A deep-learning-based prognostic nomogram integrating microscopic digital pathology and macroscopic magnetic resonance images in nasopharyngeal carcinoma: A multi-cohort study. Ther. Adv. Med. Oncol. 2020, 12, 1758835920971416.

- Liu, K.; Xia, W.; Qiang, M.; Chen, X.; Liu, J.; Guo, X.; Lv, X. Deep learning pathological microscopic features in endemic nasopharyngeal cancer: Prognostic value and protentional role for individual induction chemotherapy. Cancer Med. 2020, 9, 1298–1306.

- Chen, Y.; Wang, Z.; Li, H.; Li, Y. Integrative Analysis Identified a 6-miRNA Prognostic Signature in Nasopharyngeal Carcinoma. Front. Cell Dev. Biol. 2021, 9, 661105.

- Zhao, S.; Dong, X.; Ni, X.; Li, L.; Lu, X.; Zhang, K.; Gao, Y. Exploration of a Novel Prognostic Risk Signature and Its Effect on the Immune Response in Nasopharyngeal Carcinoma. Front. Oncol. 2021, 11, 709931.

- Zhang, Q.; Wu, G.; Yang, Q.; Dai, G.; Li, T.; Chen, P.; Li, J.; Huang, W. Survival rate prediction of nasopharyngeal carcinoma patients based on MRI and gene expression using a deep neural network. Cancer Sci. 2022, 144, 1596–1605.

- Antoniadi, A.M.; Du, Y.; Guendouz, Y.; Wei, L.; Mazo, C.; Becker, B.A.; Mooney, C. Current Challenges and Future Opportunities for XAI in Machine Learning-Based Clinical Decision Support Systems: A Systematic Review. Appl. Sci. 2021, 11, 5088.

- Pawlik, M.; Hutter, T.; Kocher, D.; Mann, W.; Augsten, N. A Link is not Enough—Reproducibility of Data. Datenbank. Spektrum. 2019, 19, 107–115.

- Hamamoto, R.; Suvarna, K.; Yamada, M.; Kobayashi, K.; Shinkai, N.; Miyake, M.; Takahashi, M.; Jinnai, S.; Shimoyama, R.; Sakai, A.; et al. Application of Artificial Intelligence Technology in Oncology: Towards the Establishment of Precision Medicine. Cancers 2020, 12, 3532.

- van de Wiel, M.A.; Neerincx, M.; Buffart, T.E.; Sie, D.; Verheul, H.M. ShrinkBayes: A versatile R-package for analysis of count-based sequencing data in complex study designs. BMC Bioinform. 2014, 15, 116.

- Keyang, C.; Ning, W.; Wenxi, S.; Yongzhao, Z. Research Advances in the Interpretability of Deep Learning. J. Comput. Res. Dev. 2020, 57, 1208–1217.

- Lovis, C. Unlocking the Power of Artificial Intelligence and Big Data in Medicine. J. Med. Internet Res. 2019, 21, e16607.

- Li, J.; Tian, Y.; Zhu, Y.; Zhou, T.; Li, J.; Ding, K.; Li, J. A multicenter random forest model for effective prognosis prediction in collaborative clinical research network. Artif. Intell. Med. 2020, 103, 101814.

| Exclusion | Inclusion |
|---|---|
| Papers that were not written in English. | Journal articles published in the English language. |
| Full text of the document is not accessible on the internet. | Full-text papers that are accessible. |
| Studies whose modeling was not based on deep learning or machine learning. | Machine learning algorithms were used for modeling. |
| The samples, the image data used, the modeling method, or the evaluation method are not described. | Deep learning algorithms were used for modeling. |
| Conference papers, literature reviews, and editorial materials that are not original research. | The samples, the image data used, the modeling method, and the evaluation method are described in detail. |

| Author, Year | Purpose | Algorithms | Dataset | Best Algorithm | Best Algorithm Performance |
|---|---|---|---|---|---|
| Wong et al., 2021 [23] | NPC early detection | CNN | 412 individuals | CNN | AUC: 0.960 |
| Ke et al., 2020 [24] | NPC detection and segmentation | SC-DenseNet | 4100 individuals | SC-DenseNet | Accuracy: 0.978 |
| Mohammed et al., 2018 [25] | NPC detection | ANN | 381 endoscopic images | ANN | Accuracy: 0.962 |
| Mohammed et al., 2020 [26] | NPC detection | ANN, region growing method | 249 endoscopic images | ANN | Precision: 0.957 |
| Abd Ghani et al., 2020 [27] | NPC detection | ANN, SVM, KNN | 381 endoscopic images | ANN | Accuracy: 0.941 |
| Li et al., 2018 [28] | NPC detection | Fully convolutional network | 27,536 biopsy-proven images of 7951 patients | Fully convolutional network | Overall accuracy: 0.887 |
| Xu et al., 2022 [29] | NPC diagnosis | DCNN | 4783 nasopharyngoscopy images of 671 patients | DCNN | AUC: 0.986 |
| Shu et al., 2021 [30] | NPC diagnosis and post-treatment follow-up | RS-CNN | 15,354 FP/HW in vivo Raman spectra of 418 subjects | RS-CNN | Overall accuracy: 0.821 |
| Chuang et al., 2020 [31] | NPC identification | CNN | 726 nasopharyngeal biopsies | CNN | AUC: 0.985 |
| Diao et al., 2020 [32] | NPC identification | Inception-v3 | 1970 whole slide images of 731 cases | Inception-v3 | AUC: 0.930 |

| Author, Year | Purpose | Algorithms | Dataset | Best Algorithm | Best Algorithm Performance |

|---|---|---|---|---|---|

| Zhao et al., 2020 [33] | IC treatment response and survival prediction | SVM | Multi-MR images of 123 patients | SVM | C-index: 0.863 |

| Yang et al., 2022 [34] | IC treatment response prediction | CNN, Xception, VGG16, VGG19, InceptionV3, InceptionResNetV2 | Medical records of 297 patients | CNN | AUC: 0.811 |

| Peng et al., 2019 [35] | IC treatment response prediction | DCNN | PET-CT images of 707 patients | DCNN | C-index: 0.722 |

| Chen et al., 2021 [36] | Synthetic CT generation and tumor segmentation | CycleGAN-Resnet, CycleGAN-Unet | Planning kV-CT and MV-CT images of 270 patients | CycleGAN-Resnet | Improvement: CNR 184.0%, image uniformity 34.7%, SNR 199.0%; DSC: 0.790 |

| Li et al., 2019 [37] | Synthesized CT generation and dose calculations | DCNN | 70 CBCT/CT paired images | DCNN | MAE: improved from (60, 120) to (6, 27) HU; PTVnx70 1%/1 mm gamma pass rates: 98.6% ± 2.9% |

| Wang et al., 2019 [38] | Synthetic CT generation | DCNN | CT/MRI images of 33 patients | DCNN | MAE: soft tissue 97 ± 13 HU; bone 357 ± 44 HU |

| Chen et al., 2022 [39] | Synthetic CT generation | DCNN | CT/MRI images of 206 patients | DCNN | 3 mm/3% gamma passing rates: above 97.32% |

| Fitton et al., 2011 [40] | NPC delineation | “Snake” algorithm | CT-MR images of 5 patients | “Snake” algorithm | Reducing the average delineation time by 6 min per case |

| Ma et al., 2019 [41] | NPC segmentation | C-CNN, M-CNN, S-CNN | CT-MR images of 90 patients | C-CNN | PPV: CT image 0.714 ± 0.089; MR image 0.797 ± 0.109 |

| Chen et al., 2020 [42] | NPC segmentation | 3D-CNN, U-net, 3D U-net | MRI images of 149 patients | 3D-CNN | DSC: 0.724 |

| Zhao et al., 2019 [43] | NPC segmentation | Fully convolutional neural networks | PET-CT images of 30 patients | Fully convolutional neural networks | Mean dice score: 0.875 |

| Chanapai et al., 2009 [44] | NPC segmentation | SOM technique | CT images of 131 patients | SOM technique | CR: 0.620; PM: 0.730 |

| Tatanun et al., 2010 [45] | NPC segmentation | Region growing technique | 97 CT Images of 12 cases | Region growing technique | Accuracy: 0.951 |

| Chanapai et al., 2012 [46] | NPC region segmentation | Seeded region growing technique | 578 CT images of 31 patients | Seeded region growing technique | CR: 0.690 PM: 0.825 |

| Bai et al., 2021 [47] | GTV segmentation | ResNeXt-50 U-net | CT images of 60 patients | ResNeXt-50 U-net | DSC: 0.618 |

| Daoud et al., 2019 [48] | NPC segmentation | CNN, U-net | CT images of 70 patients | CNN | DSC: 0.910 |

| Li et al., 2019 [49] | NPC Segmentation | U-net | CT images of 502 patients | U-net | DSC: 0.740 |

| Xue et al., 2020 [50] | CTVp1 segmentation | SI-net | 150 NPC patients | SI-net | DSC: 0.840 |

| Jin et al., 2020 [51] | GTV Segmentation | ResSE-UNet | 1757 annotated CT slices of 90 patients | ResSE-UNet | DSC: 0.840 |

| Wang et al., 2020 [52] | GTV delineation | 3D U-net, 3D CNN, 2D DDNN | CT images and corresponding manually delineated target of 205 patients | 3D U-net | DSC: 0.827 |

| Men et al., 2017 [53] | Target segmentation | DDNN, VGG-16 | 230 patients | DDNN | DSC: GTVnx 0.809 CTV 0.826 |

| Huang et al., 2013 [54] | NPC Segmentation | Clustering and Classification-Based Methods with Learning, SVM | 253 MRI slices | Clustering and Classification-Based Methods with Learning | PPV: 0.9345 Sensitivity: 0.9776 |

| Huang et al., 2015 [55] | NPC segmentation | Distance regularized level set evolution | MR images of 26 patients | Distance regularized level set evolution | CR: 0.913 PM: 91.840 |

| Li et al., 2018 [56] | NPC segmentation | CNN | MR images of 29 patients | CNN | DSC: 0.890 |

| Lin et al., 2019 [57] | NPC segmentation | 3D CNN | MR images of 1021 patients | 3D CNN | DSC: 0.790 |

| Guo et al., 2020 [58] | NPC segmentation | 3D-CNN, 3D U-net, V-net, DDnet, DeepLab-like, CNN-based | MRI images of 120 patients | 3D-CNN | DSC: 0.737 |

| Ye et al., 2020 [59] | NPC segmentation | Dense connectivity embedding U-net | MRI images of 44 patients | Dense connectivity embedding U-net | DSC: 0.870 |

| Luo et al., 2023 [60] | GTV delineation | augmentation-invariant Strategy, nnU-net | MRI images of 1057 patients | augmentation-invariant Strategy | DSC: 0.88 |

| Li et al., 2020 [61] | NPC segmentation | ResNet-101, U-net, Attention U-net, BASNet, DANet, Unet++, RefineNet | 2000 MRI slices of 596 patients | ResNet-101 | DSC: 0.703 |

| Wong et al., 2021 [62] | NPC delineation | U-net, CNN | non-contrast-enhanced MRI of 195 patients | U-net | DSC: 0.710 |

| Liang et al., 2019 [63] | OARs detection and segmentation | CNN | CT images of 180 patients | CNN | DSC: 0.689–0.934 |

| Zhong et al., 2019 [64] | OARs segmentation | Boosting-based cascaded CNN, FCN, U-net | CT images of 140 patients | Boosting-based cascaded CNN | DSC: Parotids 0.923 Thyroids 0.923 Optic nerves 0.893 |

| Peng et al., 2023 [65] | OARs segmentation | fully convolutional neural network, U-net | CT images of 310 patients | fully convolutional neural network | DSC: 0.8375 |

| Zhao et al., 2022 [66] | OARs segmentation | U-net | CT images of 147 patients | U-net | DSC: 0.86 |

| Zhuang et al., 2021 [67] | DVH prediction | GRU-RNN | 80 VMAT plans | GRU-RNN | coefficient r: EUD 0.976 Maximum dose 0.968 |

| Cao et al., 2020 [68] | DVH prediction | GRU-RNN | 100 VMAT plans | GRU-RNN | PTV70 CPs: 70.71 ± 0.83 EPs: 70.77 ± 0.28 |

| Zhuang et al., 2019 [69] | DVH prediction | GRU-RNN | 124 VMAT plans | GRU-RNN | PTV70 CPs: 70.90 ± 0.54 EPs: 71.40 ± 0.51 |

| Yue et al., 2022 [70] | Dose prediction | 3D U-net | Radiotherapy datasets of 161 patients | 3D U-net | GTVnx 3 mm/3% gamma pass rate: 95.445% |

| Sun et al., 2022 [71] | Dose prediction | U-net | 117 NPC patients | U-net | PTV70.4 D95(Gy): 70.4 ± 0.0 |

| Jiao et al., 2019 [72] | DVH prediction | GRNN | 106 nine-field IMRT plans | GRNN | Brainstem R2: 0.98 ± 0.02 Spinal cord R2: 0.98 ± 0.02 |

| Chen et al., 2021 [73] | OARs DVHs prediction | CNN | 180 cases | CNN | D2%(Gy): Brain stem PRV 0.06 ± 4.31 Spinal cord PRV −0.69 ± 1.77 |

| Zhang et al., 2020 [74] | RTLI early detection | RF | MR images of 242 patients | RF | AUC: 0.83 |

| Bin et al., 2022 [75] | RTLI prediction | SVM, RF | 98 stage T4/N0–3/M0 patients | SVM | C-index: 0.82 |

| Ren et al., 2021 [76] | Radiation-induced hypothyroidism prediction | LR, SVM, RF, KNN | 145 patients | LR | AUC: 0.70 |

| Chao et al., 2022 [77] | Radiotherapy-induced xerostomia prediction | SVM, KNN, RF | 155 HNC patients | KNN | Mean accuracy: 0.68–0.7 |

| Zhong et al., 2021 [78] | Treatment decision | SE-ResNet | MRI images of 638 stage T3N1M0 patients | SE-ResNet | HR: 0.17 and 6.24 |

| Zhao et al., 2022 [79] | Risk stratification and survival prediction | light-weighted DCNN | PET-CT images and OS of 420 patients | light-weighted DCNN | C-index: 0.732 |

| Jiang et al., 2016 [80] | Synchronous metastases NPC patients’ prognostic classifier | SVM | Hematological markers and clinical characteristics of 347 patients | SVM | HR: 3.45 |

| Cui et al., 2020 [82] | NPC classification system | Ridge, Lasso | MR images of 792 patients | Ridge | AUC: OS 0.796; LRFS 0.721 |
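
Most segmentation studies in the table above report the Dice similarity coefficient (DSC), which is twice the overlap between the predicted and reference masks divided by the sum of their sizes. As a minimal sketch of how such a score is computed (not the code of any cited study), the function below works on flattened binary masks; the name `dice_coefficient`, the empty-mask convention, and the toy masks are assumptions made for illustration.

```python
def dice_coefficient(pred, truth):
    """Dice similarity coefficient between two binary masks.

    pred, truth: equal-length sequences of 0/1 values (flattened masks).
    Returns 2 * |pred AND truth| / (|pred| + |truth|).
    Assumed convention: two empty masks count as perfect agreement (1.0).
    """
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of voxels")
    intersection = sum(1 for p, t in zip(pred, truth) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in truth if t)
    if total == 0:
        return 1.0
    return 2.0 * intersection / total


# Hypothetical six-voxel masks: three voxels each, two of them shared.
predicted = [0, 1, 1, 1, 0, 0]
reference = [0, 0, 1, 1, 1, 0]
print(dice_coefficient(predicted, reference))  # 2 * 2 / (3 + 3) = 2/3
```

A DSC of 1 indicates identical masks and 0 indicates no overlap, so the values in the table can be compared on this common scale even though the underlying datasets differ.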

| Author, Year | Purpose | Algorithms | Dataset | Best Algorithm | Best Algorithm Performance |

|---|---|---|---|---|---|

| Zhong et al., 2020 [83] | Survival prediction | DCNN | MRI images of 638 stage T3N1M0 patients | DCNN | C-index: 0.788 |

| Zhuo et al., 2019 [84] | Survival stratification | SVM | 658 non-metastatic patients | SVM | C-index: 0.814 |

| Du et al., 2019 [85] | Early progression prediction | SVM | MRI images of 277 nonmetastatic patients | SVM | AUC: 0.80 |

| Li et al., 2018 [86] | Recurrence prediction | ANN, KNN, SVM | 306 patients | ANN | Accuracy: 0.812 |

| Qiang et al., 2019 [87] | Disease-free survival prediction | 3D DenseNet | MRI images of 1636 nonmetastatic patients | 3D DenseNet | HR: 0.62 |

| Du et al., 2019 [88] | Risk assessment | DCNN | MRI images of 596 nonmetastatic patients | DCNN | AUC: 0.828 |

| Qiang et al., 2021 [89] | Risk stratification | 3D CNN | MR images and clinical data of 3444 patients | 3D CNN | C-index: 0.776 |

| Zhang et al., 2021 [90] | DMFS prediction and treatment decision | DCNN | 233 patients | DCNN | AUC: 0.796 |

| Jing et al., 2020 [91] | Disease progression prediction | MDSN, BoostCI, LASSO-COX | Multi-parametric MRI images of 1417 patients | MDSN | C-index: 0.651 |

| Chen et al., 2021 [92] | Distant metastasis prediction | XGBoost | MRI images of 1643 patients | XGBoost | C-index: 0.760 |

| Meng et al., 2022 [93] | Joint survival prediction and tumor segmentation | 3D end-to-end DeepMTS, LASSO-COX, DeepSurv, 2D CNN-based survival, 3D deep survival network | PET-CT images and clinical data of 193 patients | 3D end-to-end DeepMTS | C-index: 0.722 DSC: 0.760 |

| Gu et al., 2022 [94] | 5-year PFS prediction | 3D CNN | PET/CT images of 257 patients | 3D CNN | AUC: 0.823 |

| Zhang et al., 2020 [95] | Prognosis prediction | DCNN | MRI images and biopsy specimens whole-slide images of 220 patients | DCNN | C-index: 0.834 |

| Liu et al., 2020 [96] | Prognosis prediction | Neural network DeepSurv | H&E–stained slides of 1229 patients | Neural network DeepSurv | C-index: 0.723 |

| Chen et al., 2021 [97] | Prognosis prediction | Ridge regression, elastic net | miRNA expression profiles and clinical data of 612 patients | Ridge regression, elastic net | 5-year OS AUC: 0.70 |

| Zhao et al., 2021 [98] | Prognosis prediction | RF | RNA-Seq data of 60 tumor biopsies | RF | AUC: OS 0.893; PFS 0.86 |

| Zhang et al., 2022 [99] | Prognosis prediction | DNN | MRI images and gene expression profiles of 151 patients | DNN | AUC: 0.88 |
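
Many prognosis studies in the table above report the concordance index (C-index), which measures how often a model assigns the higher risk to the patient who experiences the event earlier. The sketch below follows the usual pairwise definition for right-censored data (Harrell's C); it is an illustration under stated assumptions rather than the implementation used in any cited study, and the name `concordance_index` and the toy cohort are hypothetical.

```python
def concordance_index(times, events, risks):
    """Pairwise concordance index for right-censored survival data.

    times:  follow-up time per patient.
    events: 1 if the event (e.g. death, progression) was observed, 0 if censored.
    risks:  predicted risk score per patient, higher meaning worse prognosis.
    A pair (i, j) is comparable when patient i had an observed event
    strictly before patient j's follow-up time. Tied risks count as 0.5.
    """
    concordant = 0.0
    comparable = 0
    n = len(times)
    for i in range(n):
        if not events[i]:
            continue  # a censored patient cannot anchor a comparable pair
        for j in range(n):
            if times[i] < times[j]:
                comparable += 1
                if risks[i] > risks[j]:
                    concordant += 1.0
                elif risks[i] == risks[j]:
                    concordant += 0.5
    if comparable == 0:
        raise ValueError("no comparable pairs")
    return concordant / comparable


# Hypothetical cohort of four patients; the second one is censored.
times = [5, 10, 15, 20]
events = [1, 0, 1, 1]
risks = [0.9, 0.4, 0.1, 0.2]
print(concordance_index(times, events, risks))  # 3 of 4 comparable pairs agree
```

A value of 0.5 corresponds to random ranking and 1.0 to perfect ranking, which gives context for the reported range of roughly 0.65 to 0.83.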

| Author, Year | Publicly Available | Balanced Classes | Validation Strategy | Data Handling Strategy |

|---|---|---|---|---|

| Wong et al., 2021 [23] | No | No | Cross validation | - |

| Ke et al., 2020 [24] | No | No | Validation set | Excluding |

| Mohammed et al., 2018 [25] | No | No | - | - |

| Mohammed et al., 2020 [26] | No | No | Cross validation | - |

| Abd Ghani et al., 2020 [27] | No | No | - | - |

| Li et al., 2018 [28] | No | No | Validation set | Excluding |

| Xu et al., 2022 [29] | No | No | Cross validation | - |

| Shu et al., 2021 [30] | No | No | Validation set | - |

| Chuang et al., 2020 [31] | No | No | Validation set | - |

| Diao et al., 2020 [32] | No | No | Validation set | - |

| Zhao et al., 2020 [33] | No | No | Validation set | Excluding |

| Yang et al., 2022 [34] | No | No | Validation set | Excluding |

| Peng et al., 2019 [35] | No | No | Validation set | Excluding |

| Chen et al., 2021 [36] | No | No | Validation set | Excluding |

| Li et al., 2019 [37] | No | No | Validation set | Excluding |

| Wang et al., 2019 [38] | No | No | Validation set | Excluding |

| Chen et al., 2022 [39] | No | No | - | Excluding |

| Fitton et al., 2011 [40] | No | No | - | - |

| Ma et al., 2019 [41] | No | No | - | Excluding |

| Chen et al., 2020 [42] | No | No | Cross validation | Excluding |

| Zhao et al., 2019 [43] | No | Yes | Cross validation | Excluding |

| Chanapai et al., 2009 [44] | No | No | Validation set | Excluding |

| Tatanun et al., 2010 [45] | No | No | - | Excluding |

| Chanapai et al., 2012 [46] | No | No | Validation set | Excluding |

| Bai et al., 2021 [47] | No | No | Cross validation | Excluding |

| Daoud et al., 2019 [48] | No | No | Cross validation | Excluding |

| Li et al., 2019 [49] | No | No | Validation set | Excluding |

| Xue et al., 2020 [50] | No | No | Validation set | Excluding |

| Jin et al., 2020 [51] | No | No | Validation set | Excluding |

| Wang et al., 2020 [52] | No | No | Cross validation | Excluding |

| Men et al., 2017 [53] | No | No | Validation set | Excluding |

| Huang et al., 2013 [54] | No | No | Validation set | Excluding |

| Huang et al., 2015 [55] | No | No | - | Excluding |

| Li et al., 2018 [56] | No | No | Cross validation | Excluding |

| Lin et al., 2019 [57] | No | No | Validation set | Excluding |

| Guo et al., 2020 [58] | No | No | Cross validation | Excluding |

| Ye et al., 2020 [59] | No | No | Cross validation | Excluding |

| Luo et al., 2023 [60] | No | No | Validation set | Excluding |

| Li et al., 2020 [61] | No | No | Validation set | Excluding |

| Wong et al., 2021 [62] | No | No | Cross validation | Excluding |

| Liang et al., 2019 [63] | No | No | - | Excluding |

| Zhong et al., 2019 [64] | No | No | Validation set | Excluding |

| Peng et al., 2023 [65] | No | No | Cross validation | Excluding |

| Zhao et al., 2022 [66] | No | No | Cross validation | Excluding |

| Zhuang et al., 2021 [67] | No | No | Validation set | Excluding |

| Cao et al., 2020 [68] | No | No | Validation set | Excluding |

| Zhuang et al., 2019 [69] | No | No | Validation set | Excluding |

| Yue et al., 2022 [70] | No | No | Validation set | Excluding |

| Sun et al., 2022 [71] | No | No | Validation set | Excluding |

| Jiao et al., 2019 [72] | No | No | Validation set | Excluding |

| Chen et al., 2021 [73] | No | No | Validation set | Excluding |

| Zhang et al., 2020 [74] | No | No | Validation set | Excluding |

| Bin et al., 2022 [75] | No | No | Cross validation | Excluding |

| Ren et al., 2021 [76] | No | No | Cross validation | Excluding |

| Chao et al., 2022 [77] | No | No | Cross validation | Excluding |

| Zhong et al., 2021 [78] | No | No | Validation set | Excluding |

| Zhao et al., 2022 [79] | No | No | Validation set | Excluding |

| Jiang et al., 2016 [80] | No | No | Validation set | Excluding |

| Cui et al., 2020 [82] | No | No | Cross validation | Excluding |

| Zhong et al., 2020 [83] | No | No | Cross validation | Excluding |

| Zhuo et al., 2019 [84] | No | No | Validation set | Excluding |

| Du et al., 2019 [85] | No | No | Validation set | Excluding |

| Li et al., 2018 [86] | No | No | Cross validation | Excluding |

| Qiang et al., 2019 [87] | No | No | Validation set | Excluding |

| Du et al., 2019 [88] | No | No | Validation set | Excluding |

| Qiang et al., 2021 [89] | No | No | Validation set | Excluding |

| Zhang et al., 2021 [90] | No | No | Validation set | Excluding |

| Jing et al., 2020 [91] | No | No | Validation set | Excluding |

| Chen et al., 2021 [92] | No | No | Validation set | Excluding |

| Meng et al., 2022 [93] | No | No | Cross validation | Excluding |

| Gu et al., 2022 [94] | No | No | Validation set | Excluding |

| Zhang et al., 2020 [95] | No | No | Validation set | Excluding |

| Liu et al., 2020 [96] | No | No | Validation set | Excluding |

| Chen et al., 2021 [97] | No | No | Validation set | Excluding |

| Zhao et al., 2021 [98] | No | No | Validation set | Excluding |

| Zhang et al., 2022 [99] | No | No | - | Excluding |
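
The two validation strategies listed in the table above differ in how the data are partitioned: a validation set holds out one fixed subset, whereas cross validation rotates the held-out subset so that every sample is tested once. The following sketch illustrates both at the index level using only the standard library; the function names `hold_out_split` and `k_fold_indices`, the default fraction, and the seeds are assumptions for illustration and do not reproduce any cited study's protocol.

```python
import random


def hold_out_split(n_samples, test_fraction=0.2, seed=0):
    """Single split: returns (train_indices, validation_indices)."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_val = max(1, round(n_samples * test_fraction))
    return indices[n_val:], indices[:n_val]


def k_fold_indices(n_samples, k, seed=0):
    """k-fold cross validation: yields (train_indices, test_indices) k times.

    Each sample index appears in exactly one test fold.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # near-equal fold sizes
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test


# Hypothetical cohort of 10 patients, indexed 0..9.
train, val = hold_out_split(10, test_fraction=0.2, seed=1)
print(len(train), len(val))
for fold_number, (train, test) in enumerate(k_fold_indices(10, 5, seed=1)):
    print(fold_number, sorted(test))
```

When a dataset contains several images per patient, the indices should refer to patients rather than images, so that images from one patient do not appear in both the training and test partitions.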

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yang, X.; Wu, J.; Chen, X. Application of Artificial Intelligence to the Diagnosis and Therapy of Nasopharyngeal Carcinoma. J. Clin. Med. 2023, 12, 3077. https://doi.org/10.3390/jcm12093077