Abstract

A theoretical and computational study on the estimation of the parameters of a single Fitzhugh–Nagumo model is presented. The difference of this work from a conventional system identification is that the measured data only consist of discrete and noisy neural spiking (spike times) data, which contain no amplitude information. The goal can be achieved by applying a maximum likelihood estimation approach where the likelihood function is derived from point process statistics. The firing rate of the neuron was assumed as a nonlinear map (logistic sigmoid) relating it to the membrane potential variable. The stimulus data were generated by a phased cosine Fourier series having fixed amplitude and frequency but a randomly shot phase (shot at each repeated trial). Various values of amplitude, stimulus component size, and sample size were applied to examine the effect of stimulus to the identification process. Results are presented in tabular and graphical forms, which also include statistical analysis (mean and standard deviation of the estimates). We also tested our model using realistic data from a previous research (H1 neurons of blowflies) and found that the estimates have a tendency to converge.

1. Introduction

Application of computational tools in neuroscience is an emerging field of research in the last 50 years. The Hodgkin–Huxley model [1] is a striking development in the field of theoretical and computational neuroscience. Here, the membrane potential and its bursting properties are modeled as a fourth-order nonlinear system. In addition to the membrane potential, it describes the behaviors of sodium and potassium ion channels. Its nonlinear properties make some researchers search for possibilities that yield simpler nonlinear differential equations. One such attempt is the second-order Morris–Lecar [2], which lumps the ion channel activation dynamics into a single recovery variable. It is still a conductance based model. Further simplifications involve complete elimination of physical parameters such as ion conductances. Two major examples are the second-order Fitzhugh–Nagumo [3,4] and the third-order Hindmarsh–Rose [5] models. These can model the pulses and bursts occurring in the membrane potential without the need of physical parameters like ion conductances. In addition, as in the case of Morris–Lecar models, the behaviors of ion channels are lumped into generic variables.

In the case that only the input output (stimulus/response) relationships are important, general neural network models can be a good choice. Some examples from literature are the static feed-forward models [6,7] and nonlinear recurrent dynamical neural network models [8,9]. The dynamical neural network models can be structured such that one may receive a membrane potential information (bursts can be explicitly recovered) or just the instantaneous firing rate as the output [10]. In addition, sometimes only the statistical properties of the stimulus/response pair is import and thus statistical black-box models are taken into account [11,12].

Regardless of the chosen model, stimulus/response data are required to obtain an accurate relationship. Depending on the experiment, these data may be continuous or discrete in nature. In the case of an in-vitro environment such as a patch clamp experiment, one may record a full time dependent profile of membrane potential. That allows computational biologists to perform an identification (parameter estimation) based on traditional minimum mean square estimation (MMSE) techniques. However, in an in-vivo experiment, it is very difficult to collect continuous data revealing exact (or in an acceptable range at least) membrane potential information. If a membrane potential micro electrode contacts a living neuron membrane, the resistive and capacitive properties of the electrode may alter the operation of the neuron. This is not desired as one will not model a realistically functioning neuron at the end of the identification process.

In [7,8], it is suggested that one can record the successive action potential timings if the electrodes are suitably placed in surroundings of the membrane. With that, one is able to form a neural spike train which has the discrete timings of the spikes (or of the action potential bursts). Of course, a spike train cannot have dedicated amplitude information. However, this does not mean that one is hopeless concerning model identification. In [13], it is suggested that neural spike timings largely obey Inhomogeneous Poisson Point Processes (IPPPs). Being aware of the fact that an IPPP can be approximated by a local Bernoulli process [14], it would be convenient to derive suitable likelihood functions and apply statistical parameter identification techniques on that.

In addition, previous research suggests that the transmitted neural information is not directly coded by the membrane potential level but rather vested in the firing rate [15], interspiking intervals (ISI) [16] or individual timings of the spikes [17]. Thus, training neuron models from discrete and stochastic spiking data is expected to be a beneficial approach to understand the computational features of our nervous system.

Concerning the application of statistical techniques based on point process likelihoods to neural modeling, there are a few research works in the related literature. The authors of [6,7] applied maximum a-posteriori estimation (MAP) technique to identification of the weights of a static feed-forward model of the auditory cortex of marmoset monkeys. The authors of [8,9] presented a computational study aiming at the estimation of the network parameters and time constants of a dynamical recurrent neural network model using point process maximum likelihood technique. The authors of [18] applied likelihood techniques to generate models for point process information coding. The authors of [19] trained a state space model from point process neural spiking data.

In a few research studies, Fitzhugh–Nagumo models are involved in stochastic neural spiking related studies. For example, the authors of [20] dealt with the interspike interval statistics when the original Fitzhugh–Nagumo model is modified to include noisy inputs. The number of small amplitude oscillations has a random nature and tend to have an asymptotically geometric distribution. Bashkirtseva et al. [21] studied the effect of stochastic dynamics represented by a standard Wiener process on the limit cycle behavior. In [22], the authors performed research on the hypoelliptic stochastic properties of Fitzhugh–Nagumo neurons. They studied the effect of those properties on the neural spiking behavior of Fitzhugh–Nagumo models. Finally, Zhang et al. [23] investigated the stochastic resonance occurring in the Fitzhugh–Nagumo models when trichotomous noise is present in the model. They found that, when the stimulus power is not sufficient to generate firing responses, trichotomous noise itself may trigger the firing.

In this research, we treated a conventional single Fitzhugh–Nagumo equation [3,4] as a computational model to form a theoretical stimulus/response relationship. We were interested in the algorithmic details of the modeling. Thus, we modified the original equation to provide firing rate output instead of the membrane potential. Based on the findings in [8,9,10], we mapped the firing rate and membrane potential of the neuron by a gained logistic sigmoid function. Sigmoid functions have a significance in neuron models as they are a feasible way of mapping the ion channel activation dynamics and membrane potential [1,2].

Although the output of our model is the neural firing rate, the responses from in vivo neurons are stochastic neural spike timings. To obtain representative data, we simulated the Fitzhugh–Nagumo neurons with a set of true reference parameters and then generated the spikes from the output firing rate by simulating an Inhomogeneous Poisson process on it.

The parameter estimation procedure was based on maximum likelihood method. Similar to that of Eden [14], the likelihood was derived from the local Bernoulli approximation of the inhomogeneous Poisson process. That depends on the individual spike timings rather than the mean firing rate (which is the case in Poisson distribution’s probability mass function).

The stimulus was modeled as a Fourier series in phased cosine form. This choice was made to investigate the performance of the estimation when the same stimulus as that in [8,9] was applied. In the computational framework of this research, the stimulus was applied for a duration 30 ms. This may be observed as a relatively short duration and it is chosen to speed up the computation. In some studies (e.g., [24,25,26]), one can infer that such short duration stimuli may be possible for fast spiking neurons.

In addition, fast spiking responses obtained from a single long random stimulus can be partitioned to segments of short duration such as 30 ms. Thus, the approach in this research can also be utilized in modeling studies that involves longer duration stimuli.

In addition to the computational features of this study, we also investigated the performance of our developments when the training data are taken from a realistic experiment. To achieve this goal, we used the data generated by de Ruyter and Bialek [27]. The data from this research have a 20 min recording of neural spiking responses obtained from H1 neurons of blowfly vision system against white noise random stimulus. The response was divided into segments of 500 ms and the developed algorithms were applied. Each 500 ms segment can be thought as an independent stimulus and its associated response.

2. Materials and Methods

2.1. FitzHugh-Nagumo Model

Fitzhugh–Nagumo (FN) model is a second-order polynomial nonlinear differential equation bearing two states representing the membrane potential and a recovery variable , which lumps all ion channel related processes into one state. Mathematically, it can be represented as shown below [28]:

The above model has four parameters determining its properties. In the original text associated with the FN models, the coefficient of the is ; however, in this work, we suppose that the coefficient of that cubic term is not constant and we assign a parameter d to it. In Equation (1), I represents the stimulus exciting the neuron. It can be thought of as an electric current.

In the introduction, we state that we need a relationship between the membrane potential representative variable V and the firing rate of our neuron. In addition, we also state that we can construct such a map by developing a nonlinear sigmoidal map as shown below:

where r is the firing rate of the neuron in ms and F is the maximum firing rate parameter. Thus, one has five parameters to estimate and they can be vectorally expressed as:

Thus, we can call as the estimates of . In the application, we needed the true values of so that we coul generate the spikes that represent the collected data from a realistic experiment. These are available in Table 1.

2.2. Stimulus

The signal for stimulation was modeled using a phased cosine Fourier series as:

where represents the amplitude, stands for the frequency of the nth Fourier component in , and stands for the phase of the component in radians. The amplitude along with the base frequency (in Hz) were kept constant, whereas the phase was selected randomly from a uniform distribution in radians. The amplitude parameter was unchanged for all mode n and it was set as .

2.3. Neural Spiking and Point Processes

We state in the introduction that the neural spiking is a point process that largely obeys an Inhomogeneous Poisson Process (IPP). A basic Poisson process is characterized by an event rate and has an exponential probability mass function defined by:

where k is the number of events that occur in the interval . In the simplest case, is constant in that interval. In neural operation, the process is much more complex and assuming a constant event rate is insufficient; thus, we refer to a time varying event rate, which is actually equivalent to the firing rate of the neuron (refer to Equation (2)). This yields an inhomogeneous Poisson point process with the event rate replaced by the mean firing rate defined by:

Now, the term k represents the spike count in the interval , which is statistically related to the firing rate ; now represents the mean spike count for the firing rate , which varies with time; and stands for the cumulative total number of spikes up to time , thus making the spike count for the time interval .

Now, let us take a spike train in the time interval . Here, , thus t and become 0 and T. The spike train can be defined using a series of time stamps for K spikes. As a result, the likelihood density function related to any spike train is gained using an inhomogeneous Poisson process [14,30] in the following way:

The function reveals the likelihood of a given spike train to occur with the rate function , which obviously is relying mainly upon network parameters and the stimulus applied.

2.4. Maximum Likelihood Methods and Parameter Estimation

The parameters requiring assessment appear as a vector:

to cover all the parameters in Equation (3). The maximum probability here relies on the function proposed in Equation (7) and includes each spike timing as well. Estimation theory asserts that determining maximum probability is asymptotically effective and goes as far as the Cramér–Rao bound within the scope of large data. Therefore, for us to expand the probability function in Equation (7) to further cover settings with numerous spike trains initiated by numerous stimuli, a series of M stimuli should be assumed. Take the mth stimulus () to initiate a spike train containing spikes in the time window , and the spike timings are given by . By Equation (7). According to Equation (7), the probability function for the spike train can be determined as:

in which represents the firing rate due to the mth stimulus. Let us denote that the rate function entirely relies on the parameters related to neuron parameters and the stimulus. On the left-hand side of Equation (9), its reliance on the neuron parameters can be noted.

Supposing the stimulus and its elicited responses in each mth trial are independent, one can derive a joint likelihood function as:

To improve its convexity, we can make use of natural logarithm and derive a log likelihood function as shown below:

Finally, the maximum likelihood estimates of the parameter vector is obtained by:

2.5. Spike Generation for Data Collection

Since this study was of computational type and targeted the development of an algorithm to be applied in a realistic experiment, we needed a solid approach to generate a dataset to represent the output of a realistic experiment. In the current research, the data were a set of neural spike trains that bear the individual spike timings with no amplitude information. In addition, we also know that the neural spiking process largely obeys inhomogeneous Poisson statistics, thus we could achieve that goal by implementing a stable Poisson process simulation. In other words, we simulated an inhomogeneous Poisson process with as its event rate. There are several algorithms to simulate an inhomogeneous Poisson process. The local Bernoulli approximation [14], thinning [31], and time scale transformation [32] can be shown as examples.

If the time bin is sufficiently small (e.g., ) such that only one spike is fitted, one can use local Bernoulli approximation to generate the neural spiking data very easily. The is also a reasonable choice when the neuron models are integrated by discrete solvers such as the Euler or Runge–Kutta method. One can see a summary of the related algorithm below [8]:

- Given the firing rate of a neuron as .

- Find the probability of firing at time by evaluating where is the integration interval. It should be a small real number such as 1 ms.

- Draw a random number that is uniformly distributed in the interval . Here, U stands for a uniform distribution.

- If , fire a spike at , else do nothing.

- Collect spikes as where will be the total number of spikes collected from one simulation.

3. Application

In this section, we introduce a simulation-based approach to evaluate the parameters of a firing rate-based single Fitzhugh–Nagumo neuron model. The process in brief appears as follows:

- A single run of simulation lasted for ms.

- The stimulus amplitude and base frequency were assigned prior to each trial m. The phase angles was assigned randomly, as defined in Section 2.2.

- Using the approach presented in Section 2.5, the spike train of the mth trial was generated from the firing rate . The number of spikes was at the mth trial.

- The simulation was repeated times to collect several statistically independent spike trains, i.e., .

3.1. Optimization Algorithm

To perform a maximum likelihood estimation (i.e., the problem defined in Equation (12)), we needed an optimizer. Most optimizers target a local minimum and thus require multiple initial guesses to increase the probability of finding a global optimum to the problem. However, this is a time consuming task and in a problem similar to that of this research duration is a crucial parameter. This was even more critical when we are using our algorithms in a physiological experiment. Some optimization algorithms such as genetic, pattern search, or simulated annealing do not require the online computation of gradients but they are computationally extensive and will most likely require a longer duration. Thus, in this research, we preferred a gradient based algorithm and utilized MATLAB’s fmincon routine. It is based on interior-point algorithms (a modified Newton’s method) and allows lower and upper bounds to be set on the result. As all parameters of an FN model are positive, a zero lower bound will prevent unnecessary parameter sweeps.

3.2. Simulation Scenarios

In this section, we introduce the results related to parameter estimation using table for the variation of mean estimated values of parameters . The scenario information for the present problem appear in Table 2. To show impact of various stimulus components , amplitude level , and number of trials , the problem was re-run for a set of different values of those parameters.

Table 2.

Data for the simulation scenario.

The initial conditions of the states representing the membrane potential V and recovery activity W in Equation (1) were assumed as and . This is a reasonable choice as we did not have any information about them.

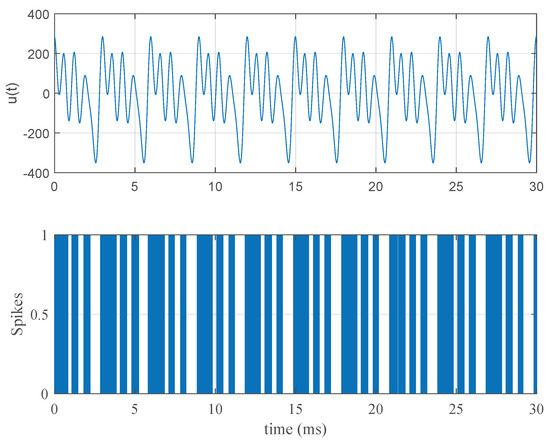

A typical stimulus response relationship can be seen in Figure 1. Here, the stimulus parameters are , kHz, and . The nominal parameters in Table 1 were used in this simulation.

Figure 1.

A typical stimulus and response pattern. In the first pane, a Fourier series stimulus with parameters , Hz, and is displayed. In the second pane, the neural spiking pattern of the Fitzhugh–Nagumo model in Equation (1) with the nominal parameters in Table 1 obtained after Poisson simulation can be seen.

3.3. Estimation of Parameters Using a Realistic Data

As stated in the end of the Introduction, we were likely interested in the results of the estimation when the stimulus/response data (spike trains collected) were collected from realistic neurons. Although performing an experiment may not be possible, one can use data from repositories or other sites on the web. We used the data collected in an experiment performed by de Ruyter and Bialek [27]. Here, the stimulus was of white noise type and the response was measured from H1 neurons of blowfly vision system. The data are available as a MATLAB workspace file on the website http://www.gatsby.ucl.ac.uk/~dayan/book/exercises/c1/data/c1p8.mat. In this dataset, a single stimulus of 20 min duration stimulates the H1 neurons of the flies. We divided these 1200 s long data into 2400 segments, each of which is 500 ms long. Thus, our algorithm was applied as if there were 2400 independent stimuli of 500 ms duration. Since we had a random stimulus here, we could assume that segments were triggered by independent stimuli. The algorithm was provided by subsets of data having 25, 50, 100, 200, 300, 400, 500, 600, 700, 800, 900, 1000, 1100, 1200, 1300, 1400, 1500, 1600, 1700, 1800, 1900, 2000, 2100, 2200, 2300, and 2400 samples (in other words, the value of ).

4. Results

In this section, the results of our example problem are presented. The maximum likelihood estimates of the parameters () in Equation (3) were obtained by maximizing Equation (10) using MATLAB’s fmincon routine.

The relevant results can be categorized under two headings:

- The variations of mean estimated values of against varying sample size , amplitude level , stimulus component size , and base frequency are presented in Section 4.1.

- The variations of standard deviations of the estimated parameters against varying sample size , amplitude level , stimulus component size , and base frequency are presented in Section 4.2.

4.1. Mean Estimated Values

One can see the variation of the mean estimated values of each parameter in Equation (3) against the number of samples , amplitude , component size , and base frequency of the stimulus in Table 3, Table 4, Table 5 and Table 6, respectively.

Table 3.

Estimated value vs. ( = 5, = 100, and Hz).

Table 4.

Estimated value vs. ( = 100, = 100, and Hz).

Table 5.

Estimated value vs. ( = 100, = 5, and Hz).

Table 6.

Estimated value vs. ( = 100, = 5, and = 100). Frequencies are in KHz.

4.2. Standard Deviations

One can see the variation of the standard deviations of the estimates of each parameter in Equation (3) against the number of samples , amplitude , component size , and base frequency of the stimulus in Table 7, Table 8, Table 9 and Table 10, respectively.

Table 7.

Standard deviations vs. ( = 5, = 100, and Hz).

Table 8.

Standard deviations vs. ( = 100, = 100, and Hz).

Table 9.

Standard deviations vs. ( = 100, = 5, and Hz).

Table 10.

Standard deviations vs. ( = 100, = 5, and = 100). The frequencies are in KHz.

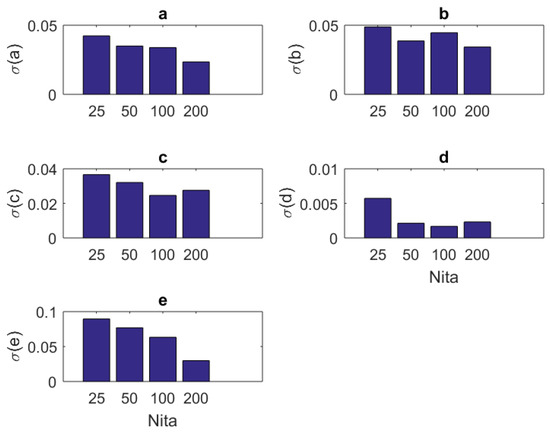

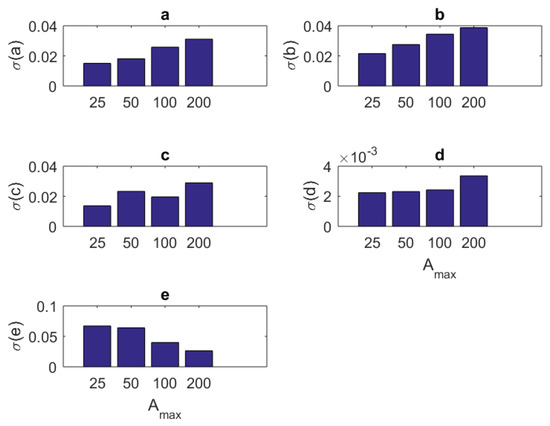

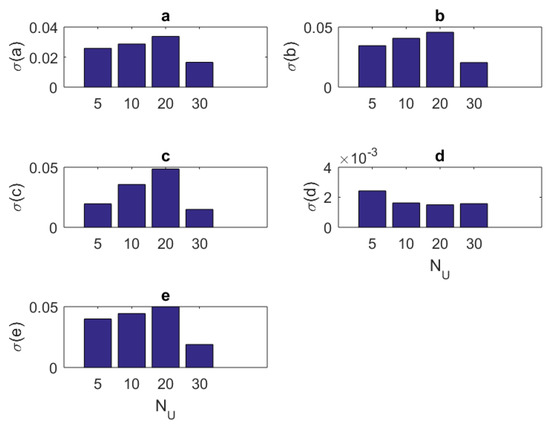

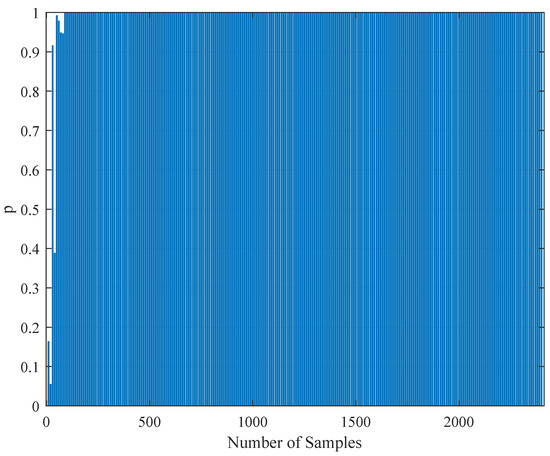

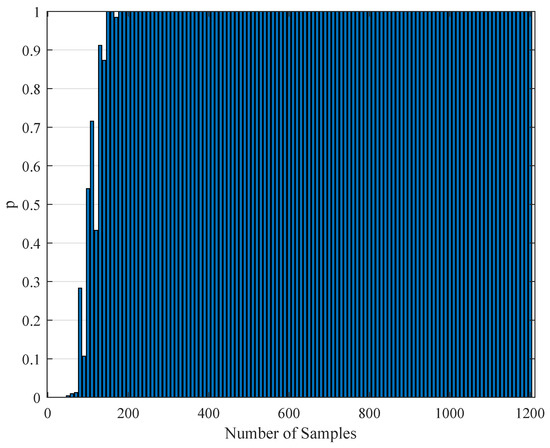

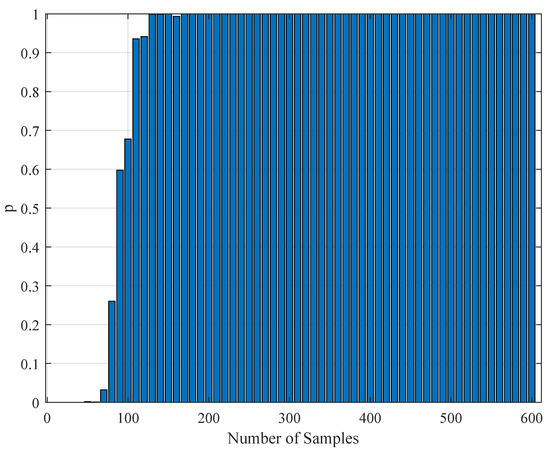

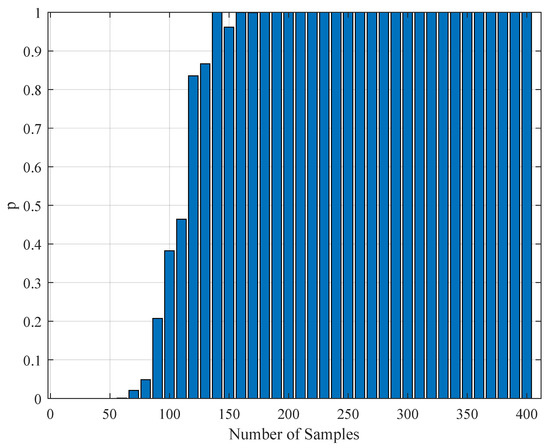

In addition to the tabular results, the variation of the standard deviations are also presented in graphical forms in Figure 2, Figure 3, Figure 4 and Figure 5.

Figure 2.

The variation of individual standard deviations (or relative errors) of the estimates against varying sample (iteration) size . Other stimulus parameters are , , and Hz. For most parameters, these relative errors show an improving behavior with the increasing sample size. However, some parameters such as b do not present any improvement or degradation in relative errors. However, in general, the relative error levels remain small.

Figure 3.

The variation of individual standard deviations (or relative errors) of the estimates against varying stimulus amplitude parameter . Other stimulus parameters are , , and Hz. Except for parameter F, one cannot see an improvement with raising the stimulus amplitude. However, in general, the relative error levels remain small.

Figure 4.

The variation of individual standard deviations (or relative errors) of the estimates against varying stimulus component size . Other stimulus parameters are , , and Hz. Stimuli with small or large component size can be preferred. In general, relative error levels also stay smaller in this case.

Figure 5.

The variation of individual standard deviations (or relative errors) of the estimates against varying base frequency . Other stimulus parameters are , , and . The frequencies are in KHz. Although overall relative error levels are smaller, one can prefer a mid frequency range, e.g. KHz

4.3. Results of Estimation from Realistic Data

As mentioned in Section 3.3, we also utilized realistic data obtained from H1 neurons of blowflies [27]. A little more detailed discussion is available in Section 3.3. The variation of estimated values of neuron parameters against the sample sizes are available in Table 11. In Table 12, the relative error with respect to the case with previous sample size setting is shown. The relative error was computed with the following scheme:

where k refers to each of the cases in Table 11 and they are identified by the sample size parameter . Here, k did not start from because we did not have any data concerning the cases . Thus, in Table 12, the k value starts from . Thus, in its first column, the relative error of the case with was computed against the case with . Similarly, the relative error of the case with was computed against the case with , and so on. When we examine Table 12, we can observe that the relative errors of parameters reduce as the sample size increases (as k progresses). Although there seems a fluctuation of the relative error, the magnitude of this fluctuation tends to decrease. This is noted especially after the case with .

Table 11.

The variation of estimated parameters against increasing sample size in the estimation using realistic stimulus/response data obtained from H1 neurons of blowfly neurons.

Table 12.

The relative error levels against the sample size parameter . The errors were computed by evaluating the difference between the parameter values of the current case k and the previous case in Table 11. With increasing sample sizes, the estimates tend to have smaller fluctuations.

4.4. Statistical Testing of the Parameter Estimation with Realistic Data

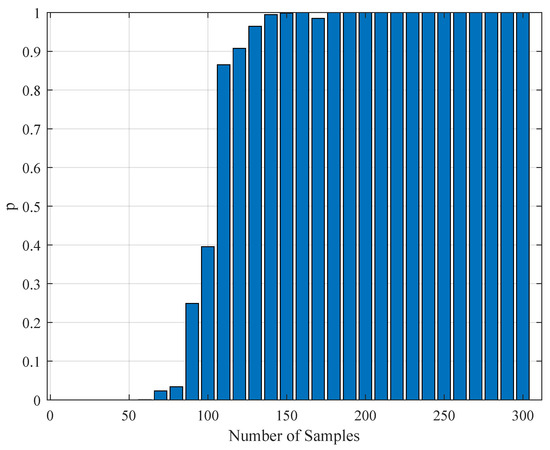

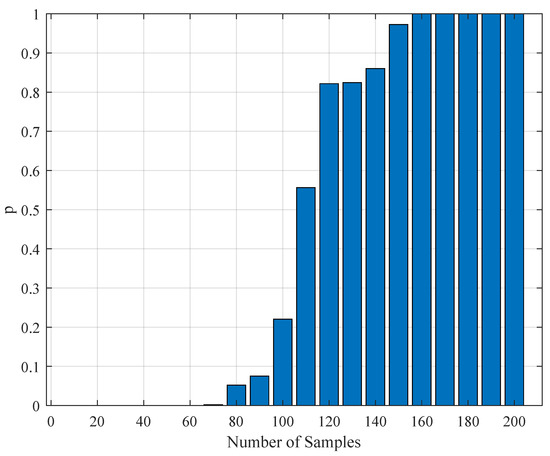

To test the validity of the results of Section 4.3, one needs to perform a statistical comparison test. To achieve this goal, we performed a Kolmogorov–Smirnov test on the interspike intervals of the spike trains obtained from the H1 neuron measurement data and the simulated spike trains with one of the parameter sets in Table 11. As one set of measurement is not statistically adequate, we used superimposed spike sequences. As they were obtained from independent stimuli, their statistical nature was not disturbed. As we did in the estimation experiment, we superimposed the spike sequences in the response segments of both realistic measurements and the simulated output from our model. After obtaining that, we performed a Kolmogorov–Smirnov test for the two samples (one is from realistic response and one is from the simulated response from our model). We applied different segment lengths and plotted the variation of the p-values. The tool used in the application was MATLAB’s kstest2(x1,x2) routine (here, x1 and x2 are two samples from similar or dissimilar distributions). We used the parametric estimations from the last column in Table 11. One can see the relevant results in Figure 6, Figure 7, Figure 8, Figure 9, Figure 10 and Figure 11. From those outcomes, one can note that the p-value starts crossing the line after obtaining about 80 samples of measurement. This may be normal in the view of statistics, as these hypothesis testing algorithms require large numbers of samples to yield strong results.

Figure 6.

The variation of the Kolmogorov–Smirnov test p value with the number of samples obtained from both measurements (simulation and realistic measurement). Here, the segment size is 500 ms.

Figure 7.

The variation of the Kolmogorov–Smirnov test p value with the number of samples obtained from both measurements (simulation and realistic measurement). Here, the segment size is 1 s.

Figure 8.

The variation of the Kolmogorov–Smirnov test p value with the number of samples obtained from both measurements (simulation and realistic measurement). Here, the segment size is 2 s.

Figure 9.

The variation of the Kolmogorov–Smirnov test p value with the number of samples obtained from both measurements (simulation and realistic measurement). Here, the segment size is 3 s.

Figure 10.

The variation of the Kolmogorov–Smirnov test p value with the number of samples obtained from both measurements (simulation and realistic measurement). Here, the segment size is 4 s.

Figure 11.

The variation of the Kolmogorov–Smirnov test p value with the number of samples obtained from both measurements (simulation and realistic measurement). Here, the segment size is 6 s.

5. Conclusions

In this paper, we present a theoretical and computational study aiming at the identification of the parameters of a single Fitzhugh–Nagumo model from stochastic discrete neural spiking data. To pursue this goal, we needed to modify the classical Fitzhugh–Nagumo model so that the output generates a firing rate instead of a membrane potential. We transformed the membrane potential information into that of a time dependent firing rate through a nonlinear map in sigmoidal form. The spiking data that are representative of an experimental application were obtained by simulating the Fitzhugh–Nagumo model and an Inhomogeneous Poisson process together. To assess the performance of the work, we repeated the simulations under different sample sizes (the number of repeated trials), stimulus component sizes, and stimulus base frequencies and amplitudes. The variation of mean estimated values and standard deviations are presented as results. The following concluding remarks can be made:

- The estimation algorithm showed a stable behavior for all examined conditions, as shown in Table 2.

- In general, the standard deviations of estimates present a decreasing behavior increasing sample size (Figure 2). For parameters b and c, there is a slightly oscillating behavior in the standard deviation values (Figure 2b,c). The standard deviations when are slightly larger than those of the case . The situation may be treated inferior to the results of others studies (e.g., [8]). However, one should bear in mind that the model in [8] is a type of generic recurrent neural network and those are known to have universal approximation capabilities [33]. Thus, one should expect that the standard deviations of network weight estimates will have a better correlation to stimulus parameters when a generic model with a universal approximation capability is utilized for model fitting. In addition, the absolute standard deviations of the estimates in this research seem smaller. Thus, the overall results can be considered successful.

- For most of the parameters , the variation of standard deviations against the amplitude parameter has a worsening behavior (Figure 3). The only exception is associated with the maximum firing rate parameter F. It has an improved standard deviation when the amplitude level increases. Concerning the mean estimated values, changing the amplitude from to does not make a sensible variation. Thus, keeping seems a good choice.

- Standard deviations of the estimates showed a little improvement when one has a large number of stimulus components (Figure 4). However, based on the mean estimated values, keeping it smaller together with the amplitude parameter seems a viable choice.

- Concerning the stimulus base frequency , it seems better to keep it in the lower side of the range ( KHz) applied in this research (i.e., KHz).

- For assessing the performance of our model when more realistic data and longer stimuli exist, we performed an estimation attempt using the data from a previous research [27]. We divided a 20 min recording into 2400 segments, the lengths of which equal 500 ms each. The stimulus was randomly generated and thus each segment was treated as an independent experiment. It appears that the estimates of the parameters have a tendency to converge to a final value, with the increasing sample size . This can be understood from the relative errors in Table 12. The errors become smaller and fluctuations diminish as the sample size advances. As a result, our model can be used in modeling studies where the computational features of the neural signal processing is important.

- The statistical Kolmogorov–Smirnov testing reveals that our modified Fitzhugh–Nagumo computational model can successfully describe the statistics stimulus/response relationship.

In general, the obtained results are promising. However, a slight improvement may be obtained if an optimal stimulus profile is generated prior to the identification process. The theory of optimal design of experiments [34] may be beneficial in this respect. An application to the continuous time recurrent neural network models is available in [9]. It appears to improve the mean square errors of network weight estimates (thus also the variance). This may be a part of future related studies under the same topic.

Author Contributions

L.A. wrote the MATLAB codes, performed the simulations, collected the necessary data, and prepared the tabular and graphical results. R.O.D. performed the validation of theoretical framework which this research is based on, polished the text, and brought the manuscript into its final form.

Funding

This research received no external funding.

Acknowledgments

The authors would like to thank to the Editor in Chief, associate editors, and reviewers for their valuable contribution to the improvement of this work.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hodgkin, A.L.; Huxley, A.F. A quantitative description of membrane current and its application to conduction and excitation in nerve. J. Physiol. 1952, 117, 500. [Google Scholar] [CrossRef] [PubMed]

- Morris, C.; Lecar, H. Voltage oscillations in the barnacle giant muscle fiber. Biophys. J. 1981, 35, 193–213. [Google Scholar] [CrossRef]

- FitzHugh, R. Impulses and physiological states in theoretical models of nerve membrane. Biophys. J. 1961, 1, 445–466. [Google Scholar] [CrossRef]

- Nagumo, J.; Arimoto, S.; Yoshizawa, S. An active pulse transmission line simulating nerve axon. Proc. IRE 1962, 50, 2061–2070. [Google Scholar] [CrossRef]

- Hindmarsh, J.L.; Rose, R. A model of neuronal bursting using three coupled first order differential equations. Proc. R. Soc. Lond. B 1984, 221, 87–102. [Google Scholar]

- DiMattina, C.; Zhang, K. Active data collection for efficient estimation and comparison of nonlinear neural models. Neural Comput. 2011, 23, 2242–2288. [Google Scholar] [CrossRef]

- DiMattina, C.; Zhang, K. Adaptive stimulus optimization for sensory systems neuroscience. Front. Neural Circuit 2013, 7, 101. [Google Scholar] [CrossRef]

- Doruk, R.O.; Zhang, K. Fitting of dynamic recurrent neural network models to sensory stimulus-response data. J. Biol. Phys. 2018, 44, 449–469. [Google Scholar] [CrossRef]

- Doruk, O.R.; Zhang, K. Adaptive stimulus design for dynamic recurrent neural network models. Front. Neural Circuits 2019, 12, 119. [Google Scholar] [CrossRef]

- Miller, K.D.; Fumarola, F. Mathematical equivalence of two common forms of firing rate models of neural networks. Neural Comput. 2012, 24, 25–31. [Google Scholar] [CrossRef]

- Barlow, H.B. Sensory Communication, 1961, 1, 217–234. Possible principles underlying the transformation of sensory messages. Sens. Commun. 1961, 1, 217–234. [Google Scholar]

- Fairhall, A.L.; Lewen, G.D.; Bialek, W.; van Steveninck, R.R.D.R. Efficiency and ambiguity in an adaptive neural code. Nature 2001, 412, 787. [Google Scholar] [CrossRef] [PubMed]

- Shadlen, M.N.; Newsome, W.T. Noise, neural codes and cortical organization. Curr. Opin. Neurol 1994, 4, 569–579. [Google Scholar] [CrossRef]

- Czanner, G.; Eden, U.T.; Wirth, S.; Yanike, M.; Suzuki, W.A.; Brown, E.N. Analysis of between-trial and within-trial neural spiking dynamics. J. Neurophysiol. 2008, 99, 2672–2693. [Google Scholar] [CrossRef] [PubMed]

- Adrian, E.D.; Zotterman, Y. The impulses produced by sensory nerve-endings: Part II. The response of a Single End-Organ. J. Physiol. 1926, 61, 151–171. [Google Scholar] [CrossRef]

- Singh, C.; Levy, W.B. A consensus layer V pyramidal neuron can sustain interpulse-interval coding. PLoS ONE 2017, 12, e0180839. [Google Scholar] [CrossRef]

- Smith, E.C.; Lewicki, M.S. Efficient auditory coding. Nature 2006, 439, 978. [Google Scholar] [CrossRef]

- Paninski, L. Maximum likelihood estimation of cascade point-process neural encoding models. Netw.-Comput. Neural Syst. 2004, 15, 243–262. [Google Scholar] [CrossRef]

- Smith, A.C.; Brown, E.N. Estimating a state-space model from point process observations. Neural Comput. 2003, 15, 965–991. [Google Scholar] [CrossRef]

- Berglund, N.; Landon, D. Mixed-mode oscillations and interspike interval statistics in the stochastic FitzHugh–Nagumo model. Nonlinearity 2012, 25, 2303. [Google Scholar] [CrossRef]

- Bashkirtseva, I.; Ryashko, L.; Slepukhina, E. Noise-induced oscillating bistability and transition to chaos in Fitzhugh–Nagumo model. Fluct. Noise Lett. 2014, 13, 1450004. [Google Scholar] [CrossRef]

- Leon, J.R.; Samson, A. Hypoelliptic stochastic FitzHugh–Nagumo neuronal model: Mixing, up-crossing and estimation of the spike rate. Ann. Appl. Probab. 2018, 28, 2243–2274. [Google Scholar] [CrossRef]

- Zhang, H.; Yang, T.; Xu, Y.; Xu, W. Parameter dependence of stochastic resonance in the FitzHugh-Nagumo neuron model driven by trichotomous noise. Eur. Phys. J. B 2015, 88, 125. [Google Scholar] [CrossRef]

- Arabzadeh, E.; Panzeri, S.; Diamond, M.E. Deciphering the spike train of a sensory neuron: Counts and temporal patterns in the rat whisker pathway. J. Neurosci. 2006, 26, 9216–9226. [Google Scholar] [CrossRef]

- Walsh, D.A.; Brown, J.T.; Randall, A.D. In vitro characterization of cell-level neurophysiological diversity in the rostral nucleus reuniens of adult mice. J. Physiol. 2017, 595, 3549–3572. [Google Scholar] [CrossRef]

- Sakai, M.; Chimoto, S.; Qin, L.; Sato, Y. Neural mechanisms of interstimulus interval-dependent responses in the primary auditory cortex of awake cats. BMC Neurosci. 2009, 10, 10. [Google Scholar] [CrossRef]

- De Ruyter, R.; Bialek, W. Timing and Counting Precision in the Blowfly Visual System. In Models of Neural Networks IV; Springer: Berlin, Germany, 2002; pp. 313–371. [Google Scholar]

- Doruk, R.Ö.; Ihnish, H. Bifurcation control of Fitzhugh-Nagumo models. Süleyman Demirel Üniversitesi Fen Bilimleri Enstitüsü Dergisi 2018, 22, 375–391. [Google Scholar] [CrossRef]

- Izhikevich, E.M.; FitzHugh, R. Fitzhugh-nagumo model. Scholarpedia 2006, 1, 1349. [Google Scholar] [CrossRef]

- Brown, E.N.; Barbieri, R.; Ventura, V.; Kass, R.E.; Frank, L.M. The time-rescaling theorem and its application to neural spike train data analysis. Neural Comput. 2002, 14, 325–346. [Google Scholar] [CrossRef]

- Lewis, P.A.; Shedler, G.S. Simulation of nonhomogeneous Poisson processes by thinning. Nav. Res. Logist. Q. 1979, 26, 403–413. [Google Scholar] [CrossRef]

- Klein, R.W.; Roberts, S.D. A time-varying Poisson arrival process generator. Simulation 1984, 43, 193–195. [Google Scholar] [CrossRef]

- Schäfer, A.M.; Zimmermann, H.G. Recurrent neural networks are universal approximators. In International Conference on Artificial Neural Networks; Springer: Berlin/Heidelberg, Germany, 2006; pp. 632–640. [Google Scholar]

- Pukelsheim, F. Optimal design of experiments. In SIAM Classics in Applied Mathematics; SIAM: Philadelphia, PA, USA, 1993; Volume 50. [Google Scholar]

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).