Abstract

Background: With the development of artificial intelligence, memristors, which combine storage and computation in a single device, have become an ideal choice for optimizing new neural network architectures and improving computing efficiency and energy efficiency. In this context, spiking neural networks have shown the ability to resist Gaussian noise, spike interference, and AC electric field interference by adjusting synaptic plasticity, and the anti-interference ability of spiking neural networks has become an important direction of bionic electromagnetic protection research. Methods: This research constructs two types of spiking neural network models with leaky integrate-and-fire (LIF) neurons as nodes, VGG-SNN and FCNN-SNN, and combines them with a pruning algorithm to simulate network connection damage during training. Using a millimeter wave radar human motion dataset and the MNIST dataset, the anti-interference performance of the spiking neural networks is compared in depth with that of traditional artificial neural networks under the same probability of edge loss. Results: On the millimeter wave radar human motion dataset, the accuracy of the spiking neural network decreased by 5.83% at a sparsity of 30%, while that of the artificial neural network decreased by 18.71%. On the MNIST dataset, the accuracy of the spiking neural network decreased by 3.91% at a sparsity of 30%, while that of the artificial neural network decreased by 10.13%. Conclusions: Under the same network connection damage, spiking neural networks show a distinct anti-interference advantage, and their performance in information processing and pattern recognition is comparatively more stable. Further analysis reveals that network structure, encoding method, and learning algorithm significantly affect the anti-interference performance of both types of network.

1. Introduction

With the rapid development of artificial intelligence technology, especially the wide application of large models such as ChatGPT-4 and DeepSeek, computing efficiency and energy consumption have become hot research topics. In this context, the memristor, a new type of electronic component with revolutionary potential, can store data and perform computation at the same time, and can even emulate the neural networks of the human brain. It offers innovative solutions to the separation of storage and computation and to the high energy consumption of traditional computing architectures: because a memristor computes directly in the storage unit, it greatly reduces the latency and energy cost of data transfer. Owing to their unique physical properties and similarity to biological neural networks, memristors are regarded as an ideal choice for building new neural network architectures [1]. With the rapid development of integrated circuits, artificial intelligence, the Internet of Things, and 5G communication technology, electronic systems are iterating rapidly toward high integration, miniaturization, low power consumption, and intelligence [2]. We therefore aim to minimize the number of memristors required to deploy a network through pruning, so as to improve overall computational efficiency and energy efficiency. At the same time, electromagnetic interference of various kinds has increasingly serious negative effects on electronic systems and threatens the stability and security of networks [3]. In practical applications, electromagnetic interference can cause memristor nodes to fail or connections to be lost, degrading the performance and stability of the network. Therefore, when a network is disturbed or damaged, its anti-interference capability becomes a key factor for normal operation.
Specifically, the anti-interference capability of a network is its ability to maintain a certain degree of structural integrity and its original functions after its nodes or connections are subjected to random or deliberate attacks. In this work, a pruning algorithm is therefore used to simulate damage to network connections, so as to explore the robustness of the network under electromagnetic interference. Adding pruning not only helps us understand in depth how the network performs under interference but also offers an effective way to improve the reliability and efficiency of electronic systems. Improving the anti-interference ability of networks thus has theoretical value and also lays a solid foundation for the reliability and stability of electronic systems in practical applications.

In recent years, the Spiking Neural Network (SNN), a novel neural network model, has attracted widespread attention. Because it transmits information with discrete spikes, an SNN is closer to the information processing mode of the biological brain and also consumes less energy. These characteristics give SNNs important application value in electromagnetic protection and promote the development and innovation of related technologies. Unlike traditional artificial neural networks, the SNN is currently the most biologically interpretable kind of Artificial Neural Network (ANN): its nodes are spiking neuron models and its edges are synaptic plasticity models [4,5]. Moreover, spiking neural networks use biologically plausible neuron models based on spike dynamics, whereas traditional artificial neural networks use only neurons based on static rates [6]. As a result, traditional ANNs tend to show poor resistance to network impairments, which can easily lead to significant performance degradation or system failure. In contrast, SNNs show stronger anti-interference performance under network impairments owing to their bio-inspired structure and dynamic adaptive mechanisms. Guo et al. [7] constructed spiking neural networks with small-world and random topologies and verified through electromagnetic interference simulation experiments that the spiking neural network with small-world characteristics has excellent anti-interference function. This also makes the SNN an ideal candidate for research on and development of a new generation of robust neuromorphic chips.

At present, there are relatively few studies on how network connection impairments affect the anti-interference performance of SNNs; most existing research focuses on the impact of network topology. For example, Guo et al. [8] investigated the effect of topology on the damage resistance of SNNs, and their simulation results showed that SNNs with stronger biological plausibility have better interference resistance. However, the anti-interference performance of SNNs in complex and changeable real network environments, such as under node damage or connection loss, has not yet been simulated comprehensively. Moreover, comparative analyses between spiking neural networks and traditional artificial neural networks are still insufficient. This paper therefore focuses on the anti-interference performance of SNNs and ANNs under the same degree of network connection damage. The anti-interference function of SNNs and traditional ANNs is studied under different degrees of connection damage, the two are compared, and the anti-interference mechanism of the network is further discussed.

The contributions of this paper are as follows:

- (1) Verify the anti-interference performance of SNN and ANN. Two SNN models, VGG-SNN and FCNN-SNN, are constructed and compared with traditional ANNs (VGG and FCNN). These models allow the responses of SNN and ANN to interference to be evaluated effectively and fairly, and the anti-interference performance of both is verified experimentally. The experimental results show that the SNN models have better anti-interference performance than the traditional ANNs.
- (2) Analyze why SNN has anti-interference performance. By analyzing the essential differences between SNN and ANN, the reasons for their different anti-interference performance are considered. The main difference between SNN and ANN is the encoding method.

2. Related Work

2.1. Artificial Neural Network

An artificial neural network is a network structure composed of multiple nodes and output points that is designed to handle practical problems [9]. Although both the human brain and artificial neural networks are very powerful at processing information, there are still many differences between them. With the continuous advancement of artificial neural network technology, its applications have gradually expanded to many fields, and AlphaGo's victory over Go champion Lee Sedol in 2016 drew widespread attention to artificial neural networks. Artificial neural networks have now spawned hundreds of models, among which the MultiLayer Perceptron (MLP), Convolutional Neural Network (CNN), and Recurrent Neural Network (RNN) are considered the most commonly used [10]. A basic ANN consists of three components: an input layer, hidden layers, and an output layer. When every neuron is connected to all neurons of the previous layer, this architecture is usually called a Fully Connected Neural Network (FCNN). The FCNN is a classic artificial neural network and is widely used to solve complex modeling problems [11]. In contrast, a CNN adopts local connections, where each neuron is connected only to a portion of the neurons in the previous layer rather than to all of them [12]. Classic CNN models include LeNet-5 [13], AlexNet [14], VGGNet [15], GoogLeNet [16], and ResNet [17]; the later models all build on LeNet. The VGG network has proven effective in many image-processing tasks because of its simple and moderately deep structure. Since this study addresses image classification, a VGG model, which is deeper and more complex than the FCNN, is chosen. Within the VGG series, VGG11 has a relatively shallow structure, which makes training and inference more efficient. The small number of layers in VGG11 also reduces computational complexity, memory consumption, and the risk of overfitting.
This is particularly advantageous when dealing with relatively limited datasets. Therefore, to suit the two datasets used in this research, VGG11 and FCNN are chosen as the experimental models representing ANNs.

2.2. Spiking Neural Network

Spiking neuron models use spike trains as the transmission form of data, and a network formed by hierarchically connecting such neurons is called a spiking neural network. In 1952, Hodgkin and Huxley studied membrane potential recordings from the giant squid axon and proposed a detailed conductance-based neuron model (the H-H model) [18] that successfully reproduced electrophysiological measurements. However, the high computational complexity of the H-H model limits its use in practical tasks, so subsequent low-order neuron models usually simplify it to reduce computational cost. Common first-order neuron models include the Integrate-and-Fire (IF) model [19] and the Leaky Integrate-and-Fire (LIF) model [20]. The IF model provides a good compromise between biological plausibility and computational cost. However, it mainly captures the temporal dynamics of neurons and gives less consideration to spatial structure and spatial information processing. The LIF model summarizes the information transmission process of a neuron into three modes, charging, discharging, and resetting, and with appropriate parameter settings and network structure design it can enhance the spatial perception ability of neurons [21]. Therefore, this paper adopts the LIF model as the basic spiking neuron of the SNNs. Moreover, to compare the anti-interference performance of SNN and ANN fairly, this research constructs two SNN models with network structures similar to VGG and FCNN, named VGG-SNN and FCNN-SNN, respectively.

2.3. Comparison of Advantages and Disadvantages Between ANN and SNN

After years of development, ANN technology is widely used for many tasks, and its tools and frameworks are mature. ANNs are trained with the backpropagation algorithm, which is straightforward and suitable for large-scale datasets, and they particularly excel at processing static data, as in image and text recognition. However, ANNs are inferior to SNNs at processing time-series data and dynamic signals, and large-scale ANNs consume considerable resources and energy, which differs markedly from the operating principles of biological nervous systems. SNNs simulate the spike-firing mechanism of the biological nervous system, which gives them higher biological interpretability and significant energy-saving advantages: their event-driven computation reduces energy consumption compared with traditional ANNs. SNNs are particularly suitable for processing time-series data and dynamic signals, can effectively capture temporal features, and show stronger anti-interference ability in the face of noise and interference [22]. However, training algorithms for SNNs are relatively complex, and existing methods such as backpropagation are difficult to apply directly because the spike-generation function is non-differentiable. In addition, SNN development tools and frameworks are not yet mature, and applications remain few. The network structure and parameter settings of SNNs are also complex and require more specialized knowledge. In practical applications, algorithms often face various kinds of noise and interference, and studying their anti-interference performance can help improve their stability and reliability in complex environments. Because ANNs and SNNs have different strengths and weaknesses, each plays a distinct role in different scenarios; studying their anti-interference performance makes it possible to select suitable network models for different application scenarios and thereby improve the overall robustness of the system.

2.4. Pruning Algorithm

Pruning algorithms remove redundant parts of a neural network, such as connections, neurons, and filters, to reduce its size. This is widely regarded as an effective optimization strategy [23], as it lowers the network's resource requirements, including memory, computation, and energy consumption [24]. In recent years, several groundbreaking studies have explored neural network pruning algorithms, which are widely used for both ANNs and SNNs. For ANNs, Xue et al. [25] proposed AFPruner, an automatic filter pruning algorithm based on the average similarity of feature maps and a reverse search genetic algorithm (RSGA). It automatically searches for the optimal combination of pruning ratios for all convolutional layers, evaluates filter similarity through the average similarity of feature maps, and then prunes the similar filters. Wang et al. [26] proposed a network channel pruning scheme based on sparse learning and genetic algorithms to achieve a better balance between pruning ratio and accuracy. Bellec et al. [27] proposed Deep Rewiring (Deep R), a pruning algorithm for ANNs and Long Short-Term Memory (LSTM) spiking neural networks that trains a network under a fixed target number of connections without a separate retraining phase. Liu et al. [28] later applied Deep R to a prototype chip of the second-generation SpiNNaker system, where it achieved a classification accuracy of 96.6% on an extremely sparse network, verifying the effectiveness of Deep R on memory-limited hardware. Ebid et al. [29] proposed a correlation-based pruning algorithm that systematically reduces network size by identifying and deleting redundant neurons based on the correlations of their activations. Its core idea is to selectively prune neurons while compensating for their contributions to the network, so as to maintain the fidelity of the model across datasets.
In the field of SNNs, several studies have tried to mimic the way the human brain learns in order to improve SNN pruning. Deng et al. [30] combined STBP and ADMM to compress SNN models through both connection pruning and weight quantization, greatly reducing memory space and the number of basic operations. Kappel et al. [31] proposed synaptic sampling, which optimizes network structure and weights by modeling the dendritic spine motility of spiking neurons as Bayesian learning. Chen et al. [32] proposed the Gradient Rewiring pruning algorithm (Grad R), which redefines the gradient with respect to a new synaptic parameter and explores the network structure more effectively by fully exploiting the competition between connection pruning and regrowth. Chen et al. also applied the Deep R pruning algorithm to SNNs. However, there is a lack of systematic studies that directly compare the accuracy loss of SNNs and ANNs after pruning. To fill this research gap, this paper directly compares pruned SNNs and ANNs on the same tasks and datasets. Deep R can be applied effectively to both SNNs and ANNs, which demonstrates its universality and flexibility; the Deep R pruning algorithm is therefore built into all four network models used in this paper.

2.5. Research on Anti-Interference of Spiking Neural Network

In recent years, several papers have studied the anti-interference performance of spiking neural networks. Guo et al. [33] studied how small-world model parameters influence the anti-interference function of neural networks; their results showed that spiking neural networks with higher clustering coefficients and shorter average path lengths have better anti-interference function. Subsequently, Guo et al. [34] constructed two complex spiking neural network (CSNN) models and studied how topology influences their damage resistance. The results showed that the robustness of the topology is consistent with the anti-damage function of the CSNNs, indicating that the anti-damage ability of both types of CSNN is affected by the topology. In further research, Guo et al. [8] constructed five types of spiking neural network models and compared their anti-interference capabilities. Under Gaussian white noise stimulation, the complex SNN outperformed the small-world SNN, the small-world SNN outperformed the scale-free SNN, and all of these outperformed the random SNN and the conventional SNN, indicating that SNNs with greater biological plausibility have better anti-interference ability. However, the above research mainly focuses on the impact of network topology on anti-interference performance, and relatively little research has examined the anti-interference performance of SNNs under network connection damage. This research therefore focuses on the anti-interference performance of SNNs under network connection damage.

3. Methods

The objective of this research is to investigate the anti-interference performance of SNNs and ANNs under the same degree of network connection damage. To this end, two spiking neural network models, VGG-SNN and FCNN-SNN, were designed. Their network structures are similar to those of VGG and FCNN, respectively, and both use the LIF model as the basic neuron. In addition, the Deep R pruning algorithm was applied during the training of the ANNs and SNNs. The standard deviation of the weights in each weight matrix was computed, and all active weights whose absolute values were less than or equal to a set value derived from this standard deviation were pruned to simulate network connection damage. This method makes it possible to evaluate the anti-interference performance of ANNs and SNNs under network connection damage.
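The following Python sketch illustrates a standard-deviation threshold of the kind described above. The scaling factor `k` that turns the standard deviation into a threshold is a hypothetical parameter, because the text does not state the exact set value; the function name is likewise illustrative.

```python
import numpy as np


def std_threshold_mask(weights: np.ndarray, k: float = 1.0) -> np.ndarray:
    """Return a 0/1 mask that drops weights with |w| <= k * std(weights).

    `k` is a hypothetical scaling factor: the text only says the threshold
    is derived from the standard deviation of the weight matrix.
    """
    threshold = k * weights.std()
    return (np.abs(weights) > threshold).astype(weights.dtype)


rng = np.random.default_rng(0)
w = rng.normal(size=(64, 128))
mask = std_threshold_mask(w, k=0.5)
w_damaged = w * mask              # pruned connections now carry zero weight
damage_ratio = 1.0 - mask.mean()  # fraction of connections removed
print(f"fraction of pruned connections: {damage_ratio:.2%}")
```

Larger values of `k` remove more connections, so sweeping `k` is one way to emulate increasing degrees of connection damage.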

3.1. Spike Neuron Model

Simple spiking neuron models have provided many insights into neural coding, memory, network dynamics, and deep learning. The LIF neuron model offers a good balance between biological plausibility and practicality and is the most commonly used neuron model for simulating large-scale spiking neural networks. Therefore, the LIF model was chosen as the basic neuron model. As a compromise between the complex dynamics of biological neurons and a simple mathematical form, the LIF model can be described by the following differential equation [35,36]:

$$ \tau \frac{dV(t)}{dt} = -V(t) + I(t) \tag{1} $$

where $\tau$ is the membrane time constant, $V(t)$ is the membrane potential of the postsynaptic neuron at time $t$, and $I(t)$ is the integrated current from the presynaptic neurons at time $t$. For computer simulation, the differential equation is discretized and solved recursively, which gives a simple iterative representation of an LIF-SNN layer for inference and training:

$$ U^{t,l} = H^{t-1,l} + X^{t,l} \tag{2} $$

$$ S^{t,l} = \Theta\left(U^{t,l} - V_{th}\right) \tag{3} $$

$$ H^{t,l} = V_{reset}\, S^{t,l} + \lambda\, U^{t,l} \odot \left(1 - S^{t,l}\right) \tag{4} $$

where $t$ and $l$ denote the time step and the layer, and $U^{t,l}$ is the membrane potential produced by coupling the spatial input $X^{t,l}$ with the temporal input $H^{t-1,l}$ carried over from the previous time step. $V_{th}$ is the threshold that determines whether an entry of the output spike tensor $S^{t,l}$ is 1 (a spike) or stays 0. $\Theta(\cdot)$ is the Heaviside step function, with $\Theta(x) = 1$ when $x \ge 0$ and $\Theta(x) = 0$ otherwise. $V_{reset}$ is the reset potential applied after an output spike, $\lambda < 1$ is the decay factor, and $\odot$ denotes element-wise multiplication.
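As an illustration of the iterative LIF layer described above (coupling, Heaviside spike generation, then reset or decay), here is a minimal NumPy sketch. It assumes a single layer driven by a precomputed input sequence and uses illustrative values for the threshold, reset potential, and decay factor; it is not the SpikingJelly implementation used in the experiments.

```python
import numpy as np


def lif_layer(x_seq, v_th=1.0, v_reset=0.0, decay=0.5):
    """Iterate the discretized LIF update over T time steps.

    x_seq: array of shape (T, ...) with the input X at every time step.
    Returns a binary spike array with the same shape as x_seq.
    Threshold, reset potential and decay values are illustrative assumptions.
    """
    h = np.full(x_seq.shape[1:], v_reset, dtype=float)  # potential carried over in time
    spikes = np.zeros(x_seq.shape, dtype=float)
    for t in range(x_seq.shape[0]):
        u = h + x_seq[t]                         # couple temporal and spatial input
        s = (u - v_th >= 0).astype(float)        # Heaviside step: spike if u >= v_th
        h = v_reset * s + decay * u * (1.0 - s)  # reset after a spike, else decay
        spikes[t] = s
    return spikes


# A constant sub-threshold input makes the neuron integrate and fire periodically.
print(lif_layer(np.full((6, 1), 0.6))[:, 0])
```

With a constant input of 0.6, the potential needs three steps to cross the threshold of 1.0, after which it is reset, so the neuron fires on every third step.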

3.2. Structure of Spiking Neural Network Model

In this research, the SpikingJelly framework is used to construct two types of SNN models, which are designed and evaluated on different datasets. The first, VGG-SNN, has a network structure similar to the VGG model and is designed to process the human action dataset used in this paper, named the Radar action dataset. The second, FCNN-SNN, has a network structure similar to FCNN and is used to process the MNIST dataset. Building SNN models whose structures mirror those of the ANNs allows the performance difference between SNNs and traditional ANNs to be compared more directly. The two proposed SNN models are therefore intended to help evaluate the responses of SNNs and ANNs to interference effectively and fairly, and to verify their anti-interference performance experimentally.

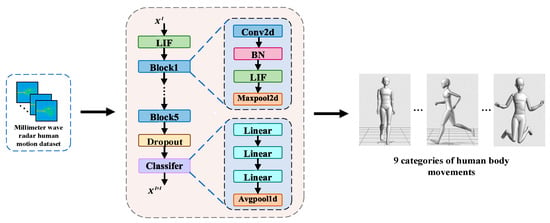

3.2.1. VGG-SNN

The structure of the VGG-SNN model is shown in Figure 1. It consists of neuron layers that generate spikes and synaptic layers, including convolutional, pooling, and fully connected layers. The synaptic layers define the pattern of synaptic connections between spiking neurons and are used for spatially correlated feature extraction or classification. The model structure is similar to VGG11: it extracts spatial features through the convolutional and pooling layers and also strengthens the processing of dynamic changes through the temporal encoding mechanism of spiking neurons.

Figure 1.

Network structure diagram of VGG-SNN model.

In the input layer, the input feature map is defined as $X \in \mathbb{R}^{C \times H \times W}$, where $C$ is the number of input channels and $H$ and $W$ are the height and width of the feature map. First, a 2D convolution is applied to the input feature map:

$$ Y_k(i,j) = \sum_{c=1}^{C} \sum_{m=0}^{K_h-1} \sum_{n=0}^{K_w-1} X_c(i+m,\, j+n)\, W_{k,c}(m,n) \tag{5} $$

where $Y_k(i,j)$ is the value of the $k$-th output feature map at position $(i,j)$, $W_{k,c}(m,n)$ is the weight at position $(m,n)$ in channel $c$ of convolution kernel $k$, $K_h$ and $K_w$ are the sizes of the convolution kernel, and $i$ and $j$ are the position indices of the output feature map. Next, batch normalization (BN) is applied to the output feature map:

$$ \hat{Y}_k = \gamma\, \frac{Y_k - \mu}{\sqrt{\sigma^2 + \epsilon}} + \beta \tag{6} $$

where $\hat{Y}_k$ is the normalized output of the BN operation, $\mu$ and $\sigma^2$ are the mean and variance of the input feature map, $\gamma$ and $\beta$ are learnable scaling and offset parameters, and $\epsilon$ is a very small constant that prevents the denominator from being zero.
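To make the convolution and batch-normalization steps described above concrete, here is a small NumPy sketch for a single sample. Assumptions: stride 1, no padding, no bias, and BN statistics computed per output channel over the spatial positions of one sample (a real BN layer also averages over the mini-batch and tracks running statistics).

```python
import numpy as np


def conv2d_valid(x, w):
    """Direct 2D convolution (cross-correlation), stride 1, no padding, no bias.

    x: (C, H, W) input feature map; w: (K, C, Kh, Kw) kernels.
    Returns Y of shape (K, H - Kh + 1, W - Kw + 1).
    """
    C, H, W = x.shape
    K, _, Kh, Kw = w.shape
    out = np.zeros((K, H - Kh + 1, W - Kw + 1))
    for k in range(K):
        for i in range(H - Kh + 1):
            for j in range(W - Kw + 1):
                # Sum over channels c and kernel offsets (m, n).
                out[k, i, j] = np.sum(x[:, i:i + Kh, j:j + Kw] * w[k])
    return out


def batch_norm(y, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize each output channel, then scale by gamma and shift by beta."""
    mu = y.mean(axis=(1, 2), keepdims=True)
    var = y.var(axis=(1, 2), keepdims=True)
    return gamma * (y - mu) / np.sqrt(var + eps) + beta


y = conv2d_valid(np.ones((2, 4, 4)), np.ones((3, 2, 3, 3)))
y_hat = batch_norm(y)
```

Explicit loops keep the correspondence with the summation indices visible; a framework layer such as a 2D convolution in PyTorch computes the same quantity far more efficiently.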

Next, the feature map $\hat{Y}$ obtained from the above operations is fed into the LIF model for further encoding, where the probability of each neuron firing a spike is proportional to the intensity of the corresponding pixel in $\hat{Y}$. The input current is generated by multiplying the input features by the synaptic weights, and this current accumulates in the membrane potential of the subsequent neurons, as described by Formula (1). Whenever the membrane potential of a postsynaptic neuron exceeds its firing threshold, the neuron emits an output spike and its membrane potential is reset; otherwise, the membrane potential decays exponentially over time. Each layer of neurons (excluding the output layer) performs this process in turn on the weighted spikes received from the previous layer. The time-weighted sum of the spike sequences passed from the previous layer is computed as follows:

$$ P_i^{l-1}(t) = \sum_{t' \le t} \kappa^{\,t-t'}\, s_i^{l-1}(t') \tag{7} $$

$$ I_j^{l}(t) = \sum_{i=1}^{N^{l-1}} w_{ij}^{l}\, P_i^{l-1}(t) \tag{8} $$

where $P_i^{l-1}(t)$ is the time-weighted sum of the spike sequence of the $i$-th presynaptic neuron in layer $l-1$, $t$ and $t'$ denote the current time and an earlier time ($t' \le t$), $s_i^{l-1}(t')$ is the spike emitted by the $i$-th neuron of layer $l-1$ at time $t'$, and $\kappa$ is the time decay factor, which progressively reduces the influence of past time points. $I_j^{l}(t)$ is the integrated current that accumulates in the membrane potential of the $j$-th postsynaptic neuron in layer $l$ at time $t$, $N^{l-1}$ is the number of presynaptic neurons in layer $l-1$, and $w_{ij}^{l}$ is the synaptic weight from the $i$-th neuron to the $j$-th neuron in layer $l$. Following Formula (1), the membrane potential is then updated as:

$$ V_j^{l}(t) = V_j^{l}(t-1) + \frac{1}{\tau}\left(I_j^{l}(t) - V_j^{l}(t-1)\right) \tag{9} $$

where $I_j^{l}(t)$ is the integrated current input to the postsynaptic neuron at time $t$, $V_j^{l}(t-1)$ is its membrane potential at the previous time step, $V_j^{l}(t)$ is its membrane potential at the current time step, and $\tau$ is the time constant of Formula (1).
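The decayed spike accumulation, the weighted sum into postsynaptic currents, and the leaky membrane update described above can be sketched in NumPy as follows. The sketch relies on the identity that a decayed sum over all past spikes can be updated incrementally, trace(t) = decay × trace(t − 1) + s(t); the decay value and time constant are illustrative assumptions.

```python
import numpy as np


def integrated_current(spikes, weights, time_decay=0.8):
    """Decayed spike accumulation followed by a weighted sum.

    spikes:  (T, N_pre) binary spikes of the presynaptic layer.
    weights: (N_pre, N_post) synaptic weights w_ij.
    Returns the currents I of shape (T, N_post).
    """
    trace = np.zeros(spikes.shape[1])
    currents = np.zeros((spikes.shape[0], weights.shape[1]))
    for t in range(spikes.shape[0]):
        # sum_{t' <= t} decay^(t - t') * s(t'), computed recursively
        trace = time_decay * trace + spikes[t]
        currents[t] = trace @ weights
    return currents


def membrane_update(v_prev, current, tau=2.0):
    """Euler step of the leaky integrator: V(t) = V(t-1) + (I(t) - V(t-1)) / tau."""
    return v_prev + (current - v_prev) / tau
```

The recursive form needs only one stored value per presynaptic neuron instead of the full spike history, which is why it is the usual way to implement such decayed sums.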

In the final classification layer, the firing threshold of the neurons is set very high, so that the output neurons fire only rarely. The weighted input signals of each output neuron accumulate continuously in its membrane potential, which decays over time to simulate the membrane potential dynamics of biological neurons. At the last time step, the accumulated membrane potential is evaluated to obtain a stable and representative output distribution: whenever the membrane potential reaches the preset high threshold, the neuron produces a spike. Finally, the cumulative spike count of each neuron over the $T$ time steps is divided by $T$ to obtain its firing rate. This firing-rate distribution is the final network output used for the classification decision, as shown in Formula (10):

$$ r_j = \frac{n_j}{T} \tag{10} $$

where $n_j$ is the cumulative spike count of output neuron $j$ over the $T$ time steps. The network is then trained on these outputs. Since each potential connection of the neural network can be active or dormant at any time during training, the Deep R pruning algorithm is applied as an optimizer during the training of both the SNN and the ANN to simulate network connection damage.
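A short sketch of the rate readout in Formula (10), assuming the output spikes are stored as a (T, number of classes) array; the class with the highest firing rate is taken as the prediction.

```python
import numpy as np


def firing_rate(spikes):
    """Spike count of each output neuron divided by the number of time steps T.

    spikes: (T, num_classes) binary array of output spikes.
    """
    return spikes.sum(axis=0) / spikes.shape[0]


out_spikes = np.array([[0, 1, 0],
                       [0, 1, 1],
                       [1, 1, 0],
                       [0, 0, 0]])
rates = firing_rate(out_spikes)          # [0.25, 0.75, 0.25]
predicted_class = int(np.argmax(rates))  # class with the highest firing rate
```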

Specifically, the pruning process decides whether to retain each synaptic connection by evaluating its importance. In the implementation, the network is first trained to obtain initial connection weights, and the Deep R pruning algorithm is then used to evaluate these weights and determine which connections can be cut. A key feature of Deep R is that the number of active connections never exceeds a fixed limit at any point during training.

First, the connection matrix is initialized. To control the sparsity of the network, a connection probability $p$ is set, giving the probability that each connection is active. When the connection matrix is initialized, a random number is drawn from a uniform distribution for each connection; if it is less than $p$, the connection is activated (value 1), otherwise it is set to zero (value 0). Every connection therefore has the same chance of being set to zero, which ensures randomness and diversity in the network, and only a portion of the connections in the matrix are active, which produces sparsity. By adjusting $p$, the pruning percentage can be set freely. This setting can be tuned to the dataset, application-specific accuracy requirements, and power and latency budgets, giving a more flexible and efficient pruning strategy. The sparsity is calculated as follows:

$$ \text{Sparsity} = 1 - \frac{1}{M N} \sum_{i=1}^{M} \sum_{j=1}^{N} c_{ij} \tag{11} $$

where $c_{ij}$ is an element of the $M \times N$ connection matrix, with 1 indicating an active connection and 0 a dormant one. Dormant connections no longer participate in weight updates, which reduces network connectivity.
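The random initialization of the connection matrix and the sparsity measure can be sketched as follows, assuming sparsity is measured as the fraction of dormant entries, so that a connection probability `p` gives an expected sparsity of 1 − p. The matrix size and seed are arbitrary choices for illustration.

```python
import numpy as np


def init_connection_matrix(shape, p, rng):
    """Activate each connection (1) with probability p, else leave it dormant (0)."""
    return (rng.uniform(size=shape) < p).astype(np.int8)


def sparsity(conn):
    """Fraction of dormant (zero) entries in the connection matrix."""
    return 1.0 - conn.sum() / conn.size


rng = np.random.default_rng(1)
conn = init_connection_matrix((200, 200), p=0.7, rng=rng)
print(f"sparsity = {sparsity(conn):.3f}")  # close to 1 - p = 0.3
```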

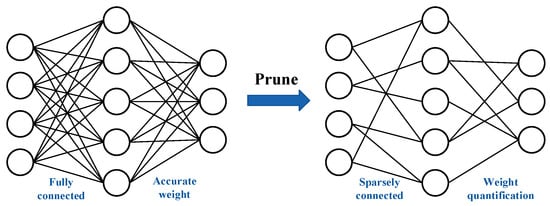

Next, the parameters are initialized. The Deep R pruning algorithm assigns each connection $k$ a connection parameter $\theta_k$ and a constant sign $s_k \in \{-1, 1\}$. When $\theta_k < 0$, the synaptic connection is dormant: it is removed from the network, is no longer considered for updates, and its weight is $w_k = 0$. When $\theta_k \ge 0$, the synaptic connection is active and its weight is $w_k = s_k \theta_k$. In this representation, each connection parameter encodes both whether the connection is active and its weight, which allows unnecessary connections to be eliminated and simplifies the network structure. The pruning process is shown in Figure 2.
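In code, this parameterization reduces to a masking operation. The following NumPy sketch assumes the connection parameters and their fixed signs are stored as flat arrays; the function name is illustrative.

```python
import numpy as np


def effective_weights(theta, sign):
    """Map connection parameters to weights.

    Active connections (theta >= 0) get w = sign * theta; dormant ones get w = 0.
    """
    active = theta >= 0
    weights = np.where(active, sign * theta, 0.0)
    return weights, active


theta = np.array([0.5, -0.2, 0.0, 1.5])
sign = np.array([1, -1, -1, -1])
weights, active = effective_weights(theta, sign)
```

A parameter of exactly zero counts as active but contributes zero weight, which is how newly reactivated connections start out.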

Figure 2.

Network pruning process.

Then, the parameters are updated by gradient descent. Only connections that have not been cut, i.e., active connections with $\theta_k \ge 0$, have their parameters updated. The error function $E$ used in this algorithm is the cross-entropy error. The update rule includes a regularization term $\eta\alpha$ and a noise term $\sqrt{2\eta T}\,\nu_k$, where $\nu_k$ is noise sampled from a Gaussian distribution with zero mean and unit variance. The noise makes the parameter update stochastic, combining the characteristics of gradient descent and a random walk. The gradient update is given in Formula (12):

$$ \theta_k \leftarrow \theta_k - \eta \frac{\partial E(\theta)}{\partial \theta_k} - \eta\alpha + \sqrt{2\eta T}\,\nu_k \tag{12} $$

where $T$ is the temperature parameter, which adjusts the flatness of the posterior distribution: for $T = 1$ the posterior distribution is recovered, for $T > 1$ it is flattened, and for $T < 1$ it is sharpened. $\eta$ is the learning rate, which controls the magnitude of each update, and $\alpha$ is the regularization coefficient, which controls the strength of the regularization term.
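A NumPy sketch of a single parameter update in Formula (12), applied only to active connections. The gradient is assumed to be supplied by the caller (for example, from the cross-entropy loss), and all hyperparameter values in the usage are illustrative.

```python
import numpy as np


def deep_r_update(theta, grad, eta, alpha, temperature, rng):
    """One noisy update of the connection parameters, active connections only.

    theta_k <- theta_k - eta * dE/dtheta_k - eta * alpha + sqrt(2 * eta * T) * nu_k,
    with nu_k ~ N(0, 1). Dormant parameters (theta_k < 0) are left unchanged.
    """
    active = theta >= 0
    noise = rng.standard_normal(theta.shape)
    step = -eta * grad - eta * alpha + np.sqrt(2.0 * eta * temperature) * noise
    return np.where(active, theta + step, theta)


rng = np.random.default_rng(0)
theta = np.array([0.5, -0.1])
new_theta = deep_r_update(theta, grad=np.array([1.0, 1.0]), eta=0.1,
                          alpha=0.01, temperature=0.0, rng=rng)
```

With `temperature=0.0` the noise term vanishes and the rule reduces to plain gradient descent with a constant drift toward zero, which is what eventually pushes unimportant parameters below zero and makes them dormant.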

Finally, the rewiring strategy is applied. Based on the current parameters and the connectivity constraint, the algorithm decides whether to activate new connections or deactivate existing ones by randomly selecting dormant connections and activating them according to a set policy. The rules are as follows: if a connection parameter satisfies $\theta_k < 0$, the connection becomes dormant and no longer participates in updates. To keep the number of active connections constant during training, new connections are selected uniformly at random from the dormant connections and activated. In this way, the algorithm samples network parameters from the following posterior distribution:

$$ p^*(\theta) = \frac{1}{\mathcal{Z}}\, p_{\mathcal{S}}(\theta)\, p_{\mathcal{N}}(X, Y \mid \theta) \tag{13} $$

where $\mathcal{Z}$ is a normalization constant, $X$ and $Y$ are the input and output data, respectively, $p_{\mathcal{S}}(\theta)$ is the prior over the parameters (corresponding to the regularization term), and $p_{\mathcal{N}}(X, Y \mid \theta)$ is the likelihood of the data under the network. The pseudo-code of the Deep R pruning algorithm, which summarizes the above steps, is shown below (Algorithm 1) [24].

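| Algorithm 1: Deep R Algorithm |
| --- |
| Input: learning rate $\eta$, regularization coefficient $\alpha$, temperature $T$, number of active connections $K$ |
| Output: connection parameters $\theta$ |
| 1: for $i$ in [1, $N_{iter}$] do |
| 2: for all active connections $k$ ($\theta_k \ge 0$) do |
| 3: $\theta_k \leftarrow \theta_k - \eta \frac{\partial E(\theta)}{\partial \theta_k} - \eta\alpha + \sqrt{2\eta T}\,\nu_k$; |
| 4: if $\theta_k < 0$ then set connection $k$ dormant; |
| 5: end |
| 6: while number of active connections lower than $K$ do |
| 7: select a dormant connection $k'$ with uniform probability and activate it; |
| 8: $\theta_{k'} \leftarrow 0$ |
| 9: end |
| 10: end |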
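Putting the pieces together, the following NumPy sketch runs one iteration of Algorithm 1 on a flat vector of connection parameters. The gradient callback `grad_fn` and the default hyperparameters are hypothetical. Drawing all missing connections at once without replacement is equivalent to activating uniformly chosen dormant connections one at a time until K are active.

```python
import numpy as np


def deep_r_step(theta, grad_fn, K, eta=0.05, alpha=1e-4, temperature=1e-5, rng=None):
    """One iteration of Deep R on a flat vector of connection parameters.

    grad_fn(theta) -> dE/dtheta is a hypothetical callback supplied by the caller.
    A connection is active when theta_k >= 0 and dormant otherwise.
    """
    rng = np.random.default_rng() if rng is None else rng
    theta = theta.copy()
    active = theta >= 0
    grad = grad_fn(theta)
    noise = rng.standard_normal(theta.shape)
    # Noisy gradient step with a constant regularization drift, active connections only.
    theta[active] += (-eta * grad[active] - eta * alpha
                      + np.sqrt(2.0 * eta * temperature) * noise[active])
    # Parameters that crossed below zero are now dormant (theta_k < 0).
    n_missing = K - int(np.count_nonzero(theta >= 0))
    if n_missing > 0:
        # Reactivate uniformly chosen dormant connections until K are active.
        dormant = np.flatnonzero(theta < 0)
        chosen = rng.choice(dormant, size=n_missing, replace=False)
        theta[chosen] = 0.0
    return theta
```

Because dormant connections are only refilled up to K and never added beyond it, the number of active connections stays at most K throughout training, matching the fixed connection budget that Deep R maintains.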

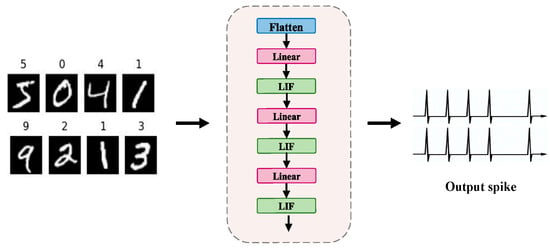

3.2.2. FCNN-SNN

The constructed FCNN-SNN model is a fully connected neural network defined by the number of input neurons n, the number of output neurons m, and an m × n weight matrix. The number of input neurons n can be adjusted according to preprocessing, but by default it is 784, corresponding to the 28 × 28 grayscale pixels of each training sample. In this paper, the output layer consists of 500 neurons and is used to classify the 10 categories of the MNIST dataset, which contains 60,000 training images and 10,000 test images. The fully connected structure of the FCNN-SNN model is shown in Figure 3.

Figure 3.

Network structure diagram of FCNN-SNN model.

First, the multidimensional input data are flattened into a one-dimensional vector for processing by subsequent layers. The flattened data are then passed through the linear layer, as shown in the following formula:

$$\mathbf{y}_i^{(l)} = \mathbf{W}^{(l)} \mathbf{y}_i^{(l-1)}, \quad i = 1, \dots, N$$

where $N$ represents the number of samples, $\mathbf{W}^{(l)}$ is the weight matrix of the $l$-th layer, and $\mathbf{y}_i^{(l)}$ represents the output of the $l$-th layer for the $i$-th sample, with $\mathbf{y}_i^{(0)}$ being the flattened input. After the fully connected layer, the output is passed to the LIF layer, which converts the feature map into a Poisson-distributed spike sequence. The LIF dynamics follow Formulas (7)–(10) in Section 3.2.1, and the pruning operation applied during training is also described in Section 3.2.1.
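The sketch below walks one flattened sample through a bias-free linear layer and a layer of LIF neurons over a few time steps. It assumes a constant input current at every step, a hard reset, and the discrete update $V \leftarrow V + (X - (V - V_{\text{reset}}))/\tau$ with $\tau = 2$ and a threshold of 1; these are illustrative assumptions and may differ in detail from Formulas (7)–(10). The function names and the toy weights are hypothetical.

```python
def flatten(image):
    """Flatten a 2-D image (list of rows) into a 1-D input vector."""
    return [pixel for row in image for pixel in row]


def linear(x, W):
    """Fully connected layer without bias: y = W x, with W given as m rows of length n."""
    return [sum(w * xj for w, xj in zip(row, x)) for row in W]


def lif_layer(currents, T_steps=4, tau=2.0, v_threshold=1.0, v_reset=0.0):
    """Drive LIF neurons with a constant input current for T_steps.

    Returns one 0/1 spike vector per time step.
    """
    v = [v_reset] * len(currents)
    spikes_over_time = []
    for _ in range(T_steps):
        spikes = []
        for i, x in enumerate(currents):
            v[i] += (x - (v[i] - v_reset)) / tau  # leaky integration (charge)
            if v[i] >= v_threshold:               # fire
                spikes.append(1)
                v[i] = v_reset                    # hard reset after a spike
            else:
                spikes.append(0)
        spikes_over_time.append(spikes)
    return spikes_over_time


image = [[1.0, 0.0], [0.0, 1.0]]                 # toy 2 x 2 "image", so n = 4
W = [[0.0, 0.0, 0.0, 0.0],
     [0.75, 0.0, 0.0, 0.75],
     [1.5, 0.0, 0.0, 1.5]]                        # m = 3 output neurons
spikes = lif_layer(linear(flatten(image), W))
rates = [sum(col) / len(spikes) for col in zip(*spikes)]
print(spikes, rates)
```

A stronger input current yields a higher firing rate, which is how the continuous output of the linear layer is carried by spikes.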

4. Results

In this section, the anti-interference performance of the two constructed SNN models and two traditional ANN models is evaluated on the Radar action dataset and the MNIST [14] dataset.

4.1. Datasets

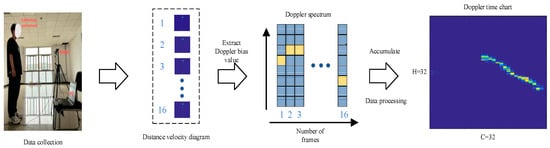

4.1.1. Radar Action Dataset

The Radar action dataset was constructed by our team. During collection, particular attention was paid to the diversity of the participants, including gender, height, and body shape. Motion samples were collected from three participants, with 330 samples for each type of human motion, for a total of 2970 samples. The designed motions should be representative, cover different categories of motion, and be broadly applicable. To improve the practicality and adaptability of the motion design, the naturalness and fluency of the motions were also considered. In total, 9 types of human motion were designed: forward walking, backward walking, left walking, right walking, jumping, forward running, backward running, left running, and right running.

Next, the radar signal processing is explained. The acquired radar signal is processed with a two-dimensional FFT (an FFT applied twice, first along the range dimension and then along the velocity dimension) to obtain one frame of a "range-velocity map". This two-dimensional image reflects both the range and the velocity characteristics of the target. To remove interference from static targets, the frame difference method is used for preprocessing: several frames are accumulated to compute a background frame, which is then subtracted from the original image to obtain a de-noised range-velocity map. This preprocessing step effectively improves signal clarity and lays the foundation for subsequent analysis. The data collection and processing pipeline is shown in Figure 4, and the background frame is calculated as follows:

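$$B(i, j) = \frac{1}{M} \sum_{k=1}^{M} F_k(i, j), \quad i = 1, \dots, N_r, \quad j = 1, \dots, N_v$$

where $B$ is the background frame, $F_k$ is the $k$-th accumulated range-velocity frame, $M$ represents the cumulative number of background frames in the range-velocity map, and $N_r$ and $N_v$ are the total numbers of pixels along the range axis and the velocity axis of the range-velocity map, respectively.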
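The two FFT stages and the frame-difference step can be sketched together in pure Python. The sketch assumes a textbook FMCW layout in which one frame holds complex beat-signal samples arranged as chirps (slow time) × samples per chirp (fast time), uses a naive DFT in place of an FFT to stay dependency-free, and omits windowing and FFT-shifting of the Doppler axis. It takes the background frame to be the pixel-wise mean of the accumulated frames; clipping negative differences to zero is an extra assumption. All function names are hypothetical.

```python
import cmath
import math


def dft(x):
    """Naive discrete Fourier transform (stands in for an FFT in this sketch)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def range_doppler_map(frame):
    """frame[c][s]: complex sample s (fast time) of chirp c (slow time).

    Returns rd[range_bin][doppler_bin] magnitudes.
    """
    range_profiles = [dft(chirp) for chirp in frame]   # 1st FFT: fast time -> range
    n_chirps, n_range = len(range_profiles), len(range_profiles[0])
    rd = []
    for r in range(n_range):
        slow_time = [range_profiles[c][r] for c in range(n_chirps)]
        rd.append([abs(v) for v in dft(slow_time)])     # 2nd FFT: slow time -> Doppler
    return rd


def background_frame(frames):
    """Pixel-wise mean of M range-velocity frames (assumed background estimate)."""
    M = len(frames)
    n_r, n_v = len(frames[0]), len(frames[0][0])
    return [[sum(f[i][j] for f in frames) / M for j in range(n_v)] for i in range(n_r)]


def subtract_background(frame, background, clip_negative=True):
    """Frame difference: remove the static background from one frame."""
    result = []
    for row_f, row_b in zip(frame, background):
        diff = [f - b for f, b in zip(row_f, row_b)]
        result.append([max(d, 0.0) for d in diff] if clip_negative else diff)
    return result


# 1) Synthetic target at range bin 2 (of 8 samples) and Doppler bin 1 (of 4 chirps).
N_S, N_C = 8, 4
frame = [[cmath.exp(2j * math.pi * (2 * s / N_S + c / N_C)) for s in range(N_S)]
         for c in range(N_C)]
rd = range_doppler_map(frame)
peak = max(((r, d) for r in range(N_S) for d in range(N_C)),
           key=lambda bin_idx: rd[bin_idx[0]][bin_idx[1]])
print("peak (range bin, Doppler bin):", peak)

# 2) A static reflector at pixel (0, 0) appears in every frame; a mover appears at (1, 1).
history = [[[5.0, 0.0], [0.0, 0.0]] for _ in range(3)]
bg = background_frame(history)
clean = subtract_background([[5.0, 0.0], [0.0, 3.0]], bg)
print(clean)
```

In practice an FFT routine and a window function would replace the naive DFT, and the Doppler axis is usually shifted so that zero velocity sits at the center of the map.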

Figure 4.

Collection and processing of the Radar action dataset. Yellow in the figure indicates higher Doppler frequencies, and blue indicates lower Doppler frequencies.

When a person performs different actions in front of the millimeter wave radar, the frequency of the radar echo signal changes. Based on this Doppler frequency shift, this research uses a Doppler-time map as the model input. Using the Doppler shift caused by human motion, the Doppler spectrum describing the velocity content of each frame is extracted from the range-velocity map and accumulated over time. Specifically, multiple frames of radar data are processed to obtain per-frame Doppler spectra, which are stacked in time order to build a Doppler-time map. This two-dimensional image reflects the dynamic characteristics of the target and is one of the most commonly used inputs for human motion recognition.
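One plausible way to build the Doppler-time map, assuming each frame has already been converted to a range-velocity magnitude map indexed as `[range_bin][doppler_bin]`, is to sum each frame over the range axis and stack the resulting spectra as columns. Summation over all range bins is an assumption; keeping only the range bins that contain the target is a common alternative. The helper names are hypothetical.

```python
def doppler_spectrum(rd_map):
    """Collapse one range-velocity magnitude map rd_map[range][doppler] over the range axis."""
    n_doppler = len(rd_map[0])
    return [sum(row[d] for row in rd_map) for d in range(n_doppler)]


def doppler_time_map(rd_frames):
    """Stack per-frame Doppler spectra as columns: result[doppler_bin][frame_index]."""
    spectra = [doppler_spectrum(f) for f in rd_frames]
    return [list(column) for column in zip(*spectra)]


frames = [[[1.0, 2.0], [3.0, 4.0]],   # frame 0: 2 range bins x 2 Doppler bins
          [[0.0, 1.0], [0.0, 1.0]]]   # frame 1
print(doppler_time_map(frames))
```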

The performance of deep learning algorithms depends heavily on the quality of the sample data. To improve sample usability, the obtained Doppler-time maps are normalized. First, all samples are resized to the same resolution of 32 × 32. Next, the pixel values are normalized so that the grayscale values lie in the [0, 1] range. Finally, the data are cleaned to remove invalid and mislabeled samples, which yields the human motion dataset used in this paper. These normalization steps reduce irrelevant differences between images, which improves model generalization and overall algorithm performance.
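The resizing and normalization steps can be sketched as follows. Nearest-neighbour interpolation and per-image min-max scaling are assumptions, since the text does not state which interpolation or normalization was used; the function names and the toy 64 × 16 input are hypothetical.

```python
def resize_nearest(img, out_h=32, out_w=32):
    """Nearest-neighbour resize of a 2-D list to out_h x out_w."""
    in_h, in_w = len(img), len(img[0])
    return [[img[i * in_h // out_h][j * in_w // out_w] for j in range(out_w)]
            for i in range(out_h)]


def minmax_normalize(img):
    """Map pixel values linearly to [0, 1] using the image's own min and max."""
    flat = [p for row in img for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [[0.0] * len(row) for row in img]  # constant image: avoid division by zero
    return [[(p - lo) / (hi - lo) for p in row] for row in img]


raw = [[float(i + j) for j in range(16)] for i in range(64)]  # 64 Doppler bins x 16 frames
sample = minmax_normalize(resize_nearest(raw))
print(len(sample), len(sample[0]), min(map(min, sample)), max(map(max, sample)))
```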

4.1.2. MNIST Dataset

The MNIST dataset contains 70,000 handwritten digit images: 60,000 training images and 10,000 test images. The training set consists of digits handwritten by 250 different people, half of them high school students and half staff members, and the test set follows the same proportions. MNIST ensures that the writers of the training set and the test set are disjoint. Each image is 28 × 28 pixels and is stored as a 784-element one-dimensional vector.

4.2. Experimental Setup

In the experiments, the SpikingJelly 0.0.4 [37] and PyTorch 2.1 frameworks were used to design and evaluate the SNNs. Different sparsity settings are used to simulate different degrees of network connection damage, reflecting real-world situations in which some neurons or connections fail because of environmental interference or network attacks. Varying the sparsity makes it possible to observe the robustness and recovery ability of the network under different degrees of damage. When network damage is not simulated, both the SNN and the ANN use Adam as the optimizer, which adaptively adjusts the learning rate of each parameter based on estimates of the first and second moments of the gradient.
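Assuming sparsity is defined as the fraction of connections that are inactive, a sparsity level $s$ corresponds under Deep R to keeping $K = \mathrm{round}((1 - s)N)$ of the $N$ connections active. The sketch below computes that budget for the five sparsity levels and, as a simpler static alternative that is not the method used in this paper, builds a random 0/1 damage mask with the same sparsity. Both function names are hypothetical.

```python
import random


def active_budget(n_weights, sparsity):
    """Number of connections K kept active for a given sparsity (fraction removed)."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    return round((1.0 - sparsity) * n_weights)


def random_damage_mask(n_weights, sparsity, seed=0):
    """Static alternative: a 0/1 mask that disables a random subset of connections."""
    K = active_budget(n_weights, sparsity)
    rng = random.Random(seed)
    keep = set(rng.sample(range(n_weights), K))
    return [1 if k in keep else 0 for k in range(n_weights)]


n = 784 * 500  # weights of a 784-input, 500-output fully connected layer
for s in (0.3, 0.4, 0.5, 0.6, 0.7):
    print(f"sparsity {s:.0%}: K = {active_budget(n, s)} active connections")
mask = random_damage_mask(1000, 0.3)
print(sum(mask), "of 1000 connections kept")
```

Unlike the static mask, Deep R keeps the budget $K$ fixed but lets training decide which connections survive, so the pruned topology adapts to the task.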

To verify the anti-interference performance of SNNs in different settings, the VGG-SNN model was applied to the Radar action dataset constructed in our laboratory. Because this dataset contains rich temporal information, complex dynamic features, and various environmental noises, it is suitable for evaluating anti-interference performance under dynamic changes and noise. The VGG-SNN model adopts a deep convolutional structure that can effectively extract complex temporal features from time series data. Therefore, the VGG-SNN and VGG models are well suited to the Radar action dataset and allow their anti-interference performance and adaptability in practical applications to be evaluated. In addition, a shallow FCNN-SNN model is used for the MNIST dataset. MNIST is a relatively simple, well-structured dataset with low computational cost, so processing it with the FCNN-SNN model reduces computational complexity and allows training to finish faster with high accuracy.

Table 1 summarizes the hyper-parameter settings of the SNN models on the different datasets and serves as a reference for the subsequent experiments. Reporting these settings supports the reliability and reproducibility of the experimental results.

Table 1.

Default values of hyper-parameters for SNN models on different datasets.

4.3. Experimental Results and Analysis

In many practical applications, especially those involving network connection damage, data often suffer from class imbalance. Using accuracy alone as the evaluation metric may bias the assessment toward the majority classes: even if the model predicts the minority classes poorly, the overall accuracy may remain high. Precision and recall therefore reflect model performance across classes more comprehensively, especially for recognizing minority classes. Since the core of studying anti-interference performance is understanding how a model behaves under noise and interference, precision, recall, and F1-score allow model performance under uncertain conditions to be evaluated more thoroughly.
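The metrics can be computed per class and then macro-averaged, as in the sketch below. Macro averaging is an assumption, since the text does not state how per-class values were aggregated, and the helper name is hypothetical. The toy labels illustrate the imbalance problem described above: with four samples of class 0 and two of class 1, accuracy is 5/6 while the recall of the minority class is only 0.5.

```python
def classification_metrics(y_true, y_pred, n_classes):
    """Accuracy, per-class (precision, recall, F1) and their macro averages."""
    per_class = []
    for c in range(n_classes):
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        # F1 is the harmonic mean of precision and recall.
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        per_class.append((precision, recall, f1))
    macro = tuple(sum(m[i] for m in per_class) / n_classes for i in range(3))
    accuracy = sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)
    return accuracy, per_class, macro


# Imbalanced toy labels: four samples of class 0, two of class 1.
y_true = [0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 0, 0, 1]
acc, per_class, (macro_p, macro_r, macro_f1) = classification_metrics(y_true, y_pred, 2)
print(f"accuracy={acc:.3f}, minority recall={per_class[1][1]:.2f}, macro F1={macro_f1:.3f}")
```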

Therefore, to compare the anti-interference performance of the SNN and ANN fairly, five indicators were considered: sparsity, accuracy, precision, recall, and F1-score. Five sparsity values, 30%, 40%, 50%, 60%, and 70%, were set to simulate varying degrees of network connection damage and served as control groups for the ablation experiments. Comparing the performance of the SNN and ANN models on the two datasets under the same damage conditions allows a comprehensive evaluation of the SNN's anti-interference capability. In Table 2, Table 3, Table 4 and Table 5, the percentage decrease in accuracy is reported as the average value with its float (top) and minimum value (bottom), while the other metrics are reported as the average value with its float (top) and maximum value (bottom).
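The text does not say whether the percentage decrease in accuracy is relative to the undamaged baseline or an absolute difference in percentage points, so the hypothetical helper below supports both readings; `summarize` returns the mean, minimum, and maximum over repeated runs. The accuracy values in the usage lines are placeholders, not results from Tables 2 to 5.

```python
def accuracy_drop(acc_baseline, acc_damaged, relative=True):
    """Percentage decrease in accuracy after simulated connection damage.

    relative=True:  (baseline - damaged) / baseline * 100
    relative=False: baseline - damaged, in percentage points (accuracies in [0, 1])
    """
    if relative:
        return (acc_baseline - acc_damaged) / acc_baseline * 100.0
    return (acc_baseline - acc_damaged) * 100.0


def summarize(values):
    """Mean, minimum and maximum over repeated runs."""
    return sum(values) / len(values), min(values), max(values)


# Placeholder accuracies for three repeated runs (not the paper's data).
baseline = 0.95
damaged_runs = [0.90, 0.89, 0.91]
drops = [accuracy_drop(baseline, a) for a in damaged_runs]
print(summarize(drops))
```

The two readings can differ noticeably when the baseline accuracy is well below 100%, so stating which one is used makes the reported decreases easier to compare.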

Table 2.

Experimental results of VGG-SNN on Radar action dataset.

Table 3.

Experimental results of VGG on Radar action dataset.

Table 4.

Experimental results of FCNN-SNN on MNIST dataset.

Table 5.

Experimental results of FCNN on MNIST dataset.

4.3.1. Comparison of Experimental Results Between VGG-SNN and VGG

To show the performance differences more intuitively, Table 2 and Table 3 only report the results at sparsities of 30% and 70%. They show the accuracy changes of VGG-SNN and VGG, respectively, under the same damage conditions on the Radar action dataset. At 30% sparsity, the accuracy of VGG-SNN decreases by 5.83%, whereas that of VGG decreases by 18.71%. At 70% sparsity, the accuracy of VGG-SNN decreases by 15.95%, whereas that of VGG decreases by 27.24%. Thus, particularly under lower damage conditions, the accuracy decrease of VGG-SNN is much smaller than that of VGG, which means that VGG-SNN maintains its performance more effectively.

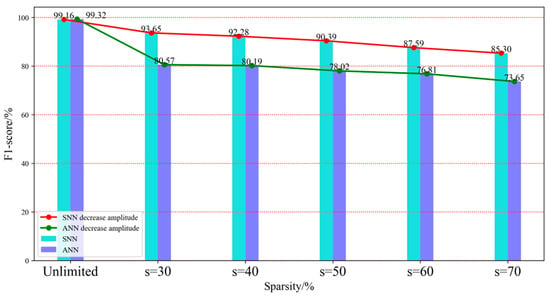

In addition, Figure 5 compares the F1-scores of VGG-SNN and VGG at the five sparsity levels. As the harmonic mean of precision and recall, the F1-score accounts for both the correctness and the completeness of the model's predictions. It therefore indicates whether, and to what extent, the SNN retains its predictive ability when the network is damaged. Figure 5 shows that on the Radar action dataset VGG-SNN achieves a higher F1-score than VGG, indicating that its combined precision and recall are better. In other words, VGG-SNN not only identifies positive samples more accurately but also reduces the false alarm rate, improving overall performance.

Figure 5.

F1-score histogram of VGG-SNN and VGG on Radar action dataset.

The Radar action dataset contains rich temporal data that reflect dynamic changes in human movements and may involve various environmental factors and noise interference. The above experimental results demonstrate the clear advantage of SNNs in processing dynamic information under network connection damage. Especially under high noise and damage conditions, SNNs exhibit stronger robustness and adaptability and can better cope with complex practical application scenarios.

4.3.2. Comparison of Experimental Results Between FCNN-SNN and FCNN

As in Section 4.3.1, Table 4 and Table 5 only report the results at sparsities of 30% and 70%, for FCNN-SNN and FCNN, respectively. At 30% sparsity, the accuracy of FCNN-SNN decreases by 3.91%, whereas that of FCNN decreases by 10.13%. At 70% sparsity, the accuracy of FCNN-SNN decreases by 8.23%, whereas that of FCNN decreases by 28.17%. The MNIST dataset consists of static images of handwritten digits, so the task focuses on image recognition and feature extraction. In this relatively simple task, the smaller decrease exhibited by FCNN-SNN demonstrates both its ability to maintain recognition performance and its robustness in processing static images.

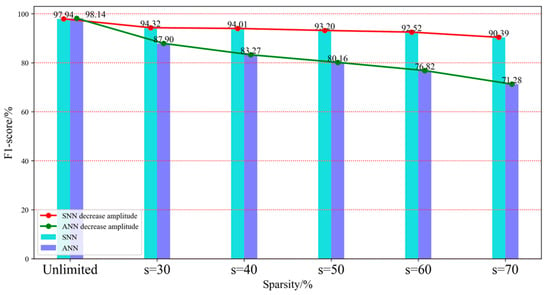

Figure 6 compares the F1-scores of FCNN-SNN and FCNN at the five sparsity levels. On the MNIST dataset, FCNN-SNN follows the same trend as on the Radar action dataset and shows better anti-interference ability than FCNN. FCNN-SNN is more robust in the handwritten digit recognition task and maintains high classification accuracy despite network connection damage.

Figure 6.

F1-score histogram of FCNN-SNN and FCNN on MNIST dataset.

These findings indicate that when network connections are damaged, SNNs can still capture critical features and process input information accurately even though some connections have failed. In contrast, ANN performance decreases markedly under the same conditions, reflecting the vulnerability of ANNs to connection damage, which may lead to the loss of key information.

In summary, comparing the anti-interference performance of SNNs and ANNs on the Radar action dataset and the MNIST dataset shows that SNNs exhibit higher anti-interference performance and adaptability under network connection damage. On both millimeter wave radar data and handwritten digit images, the accuracy decrease of the SNN is considerably smaller than that of the ANN, so the SNN maintains its performance more effectively. As sparsity increases, that is, as connection damage becomes more severe, the accuracy decrease of the SNN also grows, but the SNN still outperforms the ANN. This further supports the potential of SNNs in dynamic and uncertain environments, especially in applications that require high reliability, such as handwritten digit recognition and real-time monitoring systems.

4.3.3. Resource Analysis of SNN and ANN

This section analyzes the resource usage of SNNs and ANNs, given the resource constraints of practical applications. The goal is to characterize their resource usage and thereby provide a basis for deploying deep learning models in resource-constrained environments. The memory and training time values in Table 6 are averages. Table 6 shows that after the Deep R pruning algorithm is added, the number of parameters, memory usage, and training time of both the SNN and the ANN are substantially reduced. The pruning algorithm thus plays an important role in optimizing model structure and resource utilization, allowing both models to run with fewer computing resources and less memory while largely maintaining their performance. This optimization is especially important for real-world deployments, where models must operate efficiently under resource constraints.
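As a rough illustration of why pruning reduces parameter count and memory, the sketch below estimates the storage of a single fully connected layer with 784 inputs and 500 outputs (the FCNN-SNN sizes given in Section 3.2.2), once in dense float32 form and once in a sparse form that stores one value and one 32-bit index per surviving weight. The byte sizes and the storage format are assumptions; actual memory depends on how the framework stores the pruned model. The function names are hypothetical.

```python
def dense_linear_params(n_in, n_out, bias=True):
    """Parameter count of a fully connected layer."""
    return n_in * n_out + (n_out if bias else 0)


def dense_bytes(n_params, bytes_per_value=4):
    """Memory for dense float32 storage."""
    return n_params * bytes_per_value


def sparse_bytes(n_weights, sparsity, bytes_per_value=4, bytes_per_index=4):
    """Memory if each surviving weight is stored with one flat 32-bit index (COO-like)."""
    nnz = round((1.0 - sparsity) * n_weights)
    return nnz * (bytes_per_value + bytes_per_index)


n_weights = 784 * 500
print("dense layer:", dense_linear_params(784, 500), "params,",
      dense_bytes(dense_linear_params(784, 500)), "bytes")
for s in (0.3, 0.7):
    print(f"sparsity {s:.0%}: {sparse_bytes(n_weights, s)} bytes vs "
          f"{dense_bytes(n_weights)} bytes dense (weights only)")
```

Under these assumptions, 70% sparsity needs 940,800 bytes against 1,568,000 bytes for the dense weights, while at 30% sparsity the index overhead would make this format larger than dense storage, so the memory savings reported for a pruned model depend on its storage format as well as on its sparsity.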

Table 6.

Comparison of resource indicators between SNN and ANN.

The training time of the SNN is usually longer than that of the ANN, mainly because the SNN processes information through complex temporal dynamics, which increases computational complexity. This difference means that, when choosing a model, training time must be weighed carefully against the desired accuracy. Notably, the accuracy of the SNN decreases relatively little after pruning, indicating that it can maintain high performance with a highly sparse network structure. This gives SNNs an advantage in applications requiring high accuracy, such as time series analysis and biological signal processing. In contrast, although the ANN trains faster, its accuracy may decline more after pruning. The choice between an SNN and an ANN should therefore depend on the specific application, balancing accuracy against training efficiency.

From the perspective of resources, SNNs and ANNs each have advantages and disadvantages. SNNs perform well in terms of parameter count, memory usage, and power consumption, which makes them suitable for resource-constrained environments. ANNs have advantages in computational efficiency and training time, which makes them suitable for large-scale data and complex tasks. This study considers integrating SNN characteristics into ANNs to be a promising direction, as it could help compensate for the weaknesses of ANNs in processing time series data and dynamic signals. For example, the event-driven mechanism and temporal coding of SNNs could enhance the sensitivity of ANNs to timing information and make them more robust in complex environments. Conversely, integrating ANN characteristics into SNNs, such as efficient backpropagation-based training, could substantially improve SNN training efficiency. Such complementary integration could reduce the training time of SNNs and improve their performance on large datasets.

In summary, the SNN achieves a good balance among parameter count, memory usage, training time, and accuracy, which demonstrates the effectiveness of the pruning technique. With the pruning algorithm, the SNN substantially reduces its resource requirements while maintaining high accuracy, which makes it particularly suitable for resource-constrained environments. Pruning also facilitates the use of SNNs in fast-iteration and efficient deployment scenarios, such as real-time data processing and intelligent monitoring systems. Future research can therefore explore the application of SNNs in edge computing and their integration with edge intelligent devices. Combining SNNs with the distributed architecture of edge computing could enable more efficient data processing and decision-making, supporting the development of smart cities, the Internet of Things, and industrial automation. Through such studies, SNNs are expected to play a greater role in edge computing and to provide strong support for the realization of intelligence and automation.

5. Discussion

This section analyzes why SNNs and ANNs differ in anti-interference performance. The main reason is that they encode information differently. An SNN encodes information in spikes, conveyed by spike frequency and timing. In this encoding mode, information is transmitted and expressed through the firing times of neurons, which allows it to be transmitted more flexibly [34]. Because information is encoded in the frequency and timing of spikes, the SNN is more robust to minor connection damage or input noise: even if some connections are lost, the remaining spikes can still transmit information. In contrast, an ANN usually encodes information numerically, relying on continuous values passed through node-wise activation functions. When a connection is lost, the activation values in the ANN can change substantially, disrupting information transmission and seriously degrading overall performance. In high-noise environments or with unstable connections, ANNs are therefore less robust and often struggle to maintain stable outputs.
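The rate-coding idea can be illustrated with a simple Bernoulli spike encoder: at each time step a neuron fires with probability equal to its normalized input intensity, and the firing rate over the time window recovers that intensity. This is a minimal sketch with hypothetical helper names, similar in spirit to the Poisson encoders found in SNN frameworks; it illustrates the encoding only and does not by itself demonstrate robustness to connection loss.

```python
import random


def rate_encode(intensities, T_steps, seed=0):
    """Bernoulli rate coding: neuron i spikes at each step with probability intensities[i]."""
    rng = random.Random(seed)
    return [[1 if rng.random() < p else 0 for p in intensities] for _ in range(T_steps)]


def decode_rate(spike_trains):
    """Firing rate of each neuron over the time window."""
    T_steps = len(spike_trains)
    return [sum(column) / T_steps for column in zip(*spike_trains)]


pixels = [0.0, 0.25, 1.0]                      # normalized input intensities
spikes = rate_encode(pixels, T_steps=2000)
rates = decode_rate(spikes)
print([round(r, 3) for r in rates])
```

Because the value is spread over many spikes, losing a few of them shifts the estimated rate only slightly, which is the intuition behind the argument above.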

To test this view, this study transfers the weights of the SNN to the ANN. The aim is to better understand how differences in information encoding between SNNs and ANNs affect their robustness, and whether the characteristics of SNNs can effectively enhance ANN performance. Table 7 shows the detailed experimental results:

Table 7.

Performance comparison of ANNs with and without transferred SNN weights.

According to the above results, when the SNN weights were transferred to the ANN without pruning, the accuracy of the ANN improved slightly. Likewise, when pruning was applied and the SNN weights were transferred to the pruned ANN, its accuracy was slightly higher than the original value. However, the accuracy differences caused by weight transfer are within the range of statistical deviation and far smaller than the difference between the pruned ANN and the pruned SNN. These results indicate that the performance advantage of the pruned SNN is not explained by its learned weights alone and may be related to other factors. Our preliminary hypothesis is therefore that the higher accuracy of the pruned SNN stems from its spike-based information coding. The sparsity of spike coding not only makes SNN information processing efficient but may also enable the network to capture and retain critical information more accurately while reducing redundant computation. This finding provides an important clue for further research into the advantages of SNNs. Future studies should examine the operating mechanism of SNNs in more depth, in particular how spike coding affects their performance and how this advantage can be better applied to practical tasks.

6. Conclusions

In this paper, two spiking neural network models, VGG-SNN and FCNN-SNN, are first constructed and systematically compared with the classical VGG and FCNN models. During model construction, the Deep R pruning algorithm is introduced to simulate network connection damage while dynamically adjusting the network structure. Pruning removes redundant parameters, enabling a more effective evaluation of how different networks perform after connection damage. Next, the anti-interference performance of the two SNNs is compared with that of the two traditional ANNs under the same level of network connection damage. The experimental results show that the SNNs are more resistant to perturbation under the same degree of connection damage. This suggests that spike coding and spike-based information processing allow SNNs to retain important information more effectively during information transfer and feature extraction, thus improving model robustness. Finally, the reasons why SNNs outperform traditional ANNs are analyzed and examined experimentally. The advantage may stem from the flexible spike coding of SNNs, which allows SNN models to maintain high recognition accuracy in complex environments.

In conclusion, this research not only validates the potential of SNNs in image recognition tasks but also reveals their advantage in anti-interference performance. This property gives SNNs a distinctive advantage in edge computing environments, facilitating fast, intelligent data processing and real-time feedback. Future work will further explore the application range of SNNs, especially their integration into edge computing. SNNs could enable smart devices to handle complex tasks without relying on cloud computing, particularly in the Internet of Things and autonomous driving. The application of SNNs in future intelligent systems is therefore expected to extend beyond image recognition to a variety of real-time decision-making and control tasks, making smart devices more efficient and autonomous.

Author Contributions

Conceptualization, Y.Z.; methodology, H.P.; software, H.P.; validation, H.P. and M.M.; formal analysis, Y.Z., J.M. and X.Z.; investigation, G.M. and M.M.; resources, G.M. and M.M.; data curation, H.P. and X.Z.; writing—original draft preparation, H.P. and M.M.; writing—review and editing, Y.Z. and M.M.; supervision, Y.Z. and J.M. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Natural Science Foundation of Hebei Province (Grant No. F2022208002) and the Key Project of Science and Technology Research in Higher Education Institutions of Hebei Province (Grant No. ZD2021048).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The MNIST dataset used in this study is publicly available. It can be downloaded with tools such as PyTorch or manually from the following website: http://yann.lecun.com/exdb/mnist/ (MNIST accessed on 17 February 2025). The Radar action dataset presented in this study is available from the corresponding author upon request, owing to privacy concerns and the lack of a dedicated open data repository.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Shi, T.; Gao, L.; Tian, Y.; Tang, S. Memristor-based feature learning for pattern classification. Nat. Commun. 2025, 16, 913.

- Liu, S.; Ma, G.; Man, M.; Zhang, M.; Zhang, Y. Progress in bionic research of electromagnetic protection. High Volt. Technol. 2022, 5, 1750–1762.

- Guo, L.; Guo, M.; Wu, Y.; Xu, G. Anti-Disturbance of Scale-Free Spiking Neural Network against Impulse Noise. Brain Sci. 2023, 13, 837.

- Niu, L.; Wei, Y.; Liu, B.; Long, Y.; Xue, H. Research Progress of spiking neural network in image classification: A review. Appl. Intell. 2023, 53, 19466–19490.

- Yamazaki, K.; Vo-Ho, V.-K.; Bulsara, D.; Le, N. Spiking Neural Networks and Their Applications: A Review. Brain Sci. 2022, 12, 863.

- Tavanaei, A.; Ghodrati, M.; Kheradpisheh, S.R.; Masquelier, T.; Maida, A. Deep learning in spiking neural networks. Neural Netw. 2019, 111, 47–63.

- Guo, L.; Wang, Y.; Shi, H. Comparative analysis of anti disturbance function of spiking neural networks with different topological structures. Comput. Appl. Softw. 2021, 38, 46–50.

- Guo, L.; Liu, D.; Wu, Y.; Xu, G. Comparison of spiking neural networks with different topologies based on anti-disturbance ability under external noise. Neurocomputing 2023, 529, 113–127.

- Zhang, C.; Guo, Y.; Li, M. Overview of the Development and Application of Artificial Neural Network Models. Comput. Eng. Appl. 2021, 57, 57–69. Available online: https://kns.cnki.net/kcms/detail/11.2127.TP.20210402.1348.004.html (accessed on 17 February 2025).

- Ding, S.; Li, H.; Su, C.; Yu, J.; Jin, F. Evolutionary artificial neural networks: A review. Artif. Intell. Rev. 2013, 39, 251–260.

- Tian, W.; Zhang, L.; Qiu, R.; Lu, J.; Liu, Y.; Wang, W. Electrical performance of conductive cementitious composites under different curing regimes: Enhanced conduction by carbon fibers towards self-sensing function. Constr. Build. Mater. 2024, 421, 135771.

- Bahrami, S.; Alamdari, S.; Farajmashaei, M.; Behbahani, M.; Jamshidi, S.; Bahrami, B. Application of artificial neural network to multiphase flow metering: A review. Flow Meas. Instrum. 2024, 97, 102601.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2015, arXiv:1409.1556.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Hodgkin, A.L.; Huxley, A.F. A quantitative description of membrane current and its application to conduction and excitation in nerve. J. Physiol. 1952, 117, 500–544.

- Brunel, N.; van Rossum, M.C.W. Lapicque’s 1907 paper: From frogs to integrate-and-fire. Biol. Cybern. 2007, 97, 337–339.

- Segee, B. Methods in Neuronal Modeling: From Ions to Networks, 2nd Edition. Comput. Sci. Eng. 1999, 1, 81.

- Zhu, H.; Zeng, X.; Zou, Y.; Zhou, J. Sensitivity of Spiking Neural Networks Due to Input Perturbation. Brain Sci. 2024, 14, 1149.

- Deng, L.; Wu, Y.; Hu, X.; Liang, L.; Ding, Y.; Li, G.; Zhao, G.; Li, P.; Xie, Y. Rethinking the performance comparison between SNNs and ANNs. Neural Netw. 2020, 121, 294–307.

- Wang, H.; Qin, C.; Bai, Y.; Zhang, Y.; Fu, Y. Recent advances on neural network pruning at initialization. In Proceedings of the International Joint Conference on Artificial Intelligence (IJCAI), Vienna, Austria, 23–29 July 2022; pp. 5638–5645.

- Deng, L.; Li, G.; Han, S.; Shi, L.; Xie, Y. Model compression and hardware acceleration for neural networks: A comprehensive survey. Proc. IEEE 2020, 108, 485–532.

- Xue, Y.; Yao, W.; Peng, S.; Yao, S. Automatic filter pruning algorithm for image classification. Appl. Intell. 2024, 54, 216–230.

- Wang, Y.; Li, F.; Shi, G.; Xie, X.; Wang, F. Network pruning using sparse learning and genetic algorithm. Neurocomputing 2020, 404, 247–256.

- Bellec, G.; Kappel, D.; Maass, W.; Legenstein, R. Deep rewiring: Training very sparse deep networks. In Proceedings of the International Conference on Learning Representations (ICLR), Vancouver, BC, Canada, 30 April–3 May 2018; Available online: https://openreview.net/forum?id=BJ_wN01C- (accessed on 17 February 2025).

- Liu, C.; Bellec, G.; Vogginger, B.; Kappel, D.; Partzsch, J.; Neumärker, F.; Höppner, S.; Maass, W.; Furber, S.; Legenstein, R.; et al. Memory-Efficient Deep Learning on a SpiNNaker 2 Prototype. Front. Neurosci. 2018, 12, 840.

- Ebid, S.; El-Tantawy, S.; Shawky, D.; Abdel-Malek, H. Correlation-based pruning algorithm with weight compensation for feedforward neural networks. Neural Comput. Appl. 2025, 1–7.

- Deng, L.; Wu, Y.; Hu, Y.; Liang, L.; Li, G.; Hu, X.; Ding, Y.; Li, P.; Xie, Y. Comprehensive SNN Compression Using ADMM Optimization and Activity Regularization. IEEE Trans. Neural Netw. Learn. Syst. 2023, 34, 2791–2805.

- Kappel, D.; Habenschuss, S.; Legenstein, R.; Maass, W. Network plasticity as Bayesian inference. PLoS Comput. Biol. 2015, 11, e1004485.

- Chen, Y.; Yu, Z.; Fang, W.; Huang, T.; Tian, Y. Pruning of deep spiking neural networks through gradient rewiring. In Proceedings of the Thirtieth International Joint Conference on Artificial Intelligence (IJCAI), Montreal, QC, Canada, 19–27 August 2021; Volume 8, pp. 1713–1721.

- Guo, L.; Feng, H.; Shi, H. Research on the anti-interference function of small world spiking neural networks with different reconnection probabilities. Comput. Eng. Sci. 2020, 42, 1325–1330.

- Guo, L.; Man, R.; Wu, Y.; Yu, H.; Xu, G. Anti-injury function of complex spiking neural networks under targeted attack. Neurocomputing 2021, 462, 260–271.

- Gerstner, W.; Kistler, W.M. Spiking Neuron Models: Single Neurons, Populations, Plasticity; Cambridge University Press: Cambridge, UK, 2002.

- Wu, Y.; Deng, L.; Li, G.; Zhu, J.; Shi, L. Direct training for spiking neural networks: Faster, larger, better. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 1311–1318.

- Fang, W.; Chen, Y.; Ding, J.; Masquelier, T.; Chen, D.; Huang, L.; Zhou, H.; Li, G.; Tian, Y. SpikingJelly: An open-source machine learning infrastructure platform for spike-based intelligence. Sci. Adv. 2023, 9, eadi1480.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).