Abstract

Diabetic retinopathy (DR) is a vision-threatening complication of long-standing diabetes mellitus, which damages the retinal blood vessels. DR is one of the principal causes of blindness and accounts for more than 158 million cases worldwide. Because early detection and classification can reduce visual impairment, it is important to develop an automated DR diagnosis method. Although deep learning models provide automatic feature extraction and classification, training such models from scratch requires a large annotated dataset. The availability of annotated training data is a core issue for applying deep learning to medical image classification. Researchers widely adopt transfer learning-based models to overcome the shortage of annotated data and to reduce computational overhead. In the proposed study, features are extracted from fundus images using the pre-trained VGGNet network combined with transfer learning to improve classification performance. To deal with data insufficiency and class imbalance, we applied different data augmentation operations to each DR grade. The experimental results on a benchmark dataset indicate that the proposed framework outperforms advanced methods in terms of accuracy. Combining our technique with handcrafted features could further improve classification accuracy.

1. Introduction

Diabetic retinopathy is directly associated with the prevalence of diabetes, which has reached epidemic proportions worldwide []. About 463 million people currently have diabetes, and approximately one-third of them have some form of diabetic retinopathy []. The International Diabetes Federation (IDF) reported that the number of people with diabetes is expected to reach about 552 million by 2035 and 642 million by 2040 [,]. More than 158.2 million people currently suffer from DR, and this number is estimated to increase to about 191 million by 2030 [].

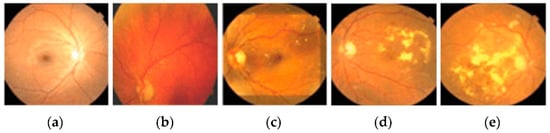

DR is an ocular complication of diabetes and remains one of the main causes of blindness globally [,,]. It develops as a result of long-standing diabetes mellitus, and the risk is higher in patients with uncontrolled blood sugar. DR generally progresses gradually and may cause no symptoms, or only mild vision loss, in its early stages. If it is not diagnosed and treated in a timely manner, it can eventually lead to blindness []. Based on its progression, DR is generally categorized into three categories: normal, non-proliferative DR (NPDR), and proliferative DR (PDR) []. In NPDR, no new retinal blood vessels grow, but the walls of the existing blood vessels weaken. NPDR is further divided into mild, moderate, and severe stages. In PDR, new blood vessels grow over the retina and obstruct its blood supply. Figure 1 shows samples of NPDR, PDR, and the subclasses discussed above [].

Figure 1.

Fundus images showing the different grades of retinopathy: (a) normal, (b) mild, (c) moderate, (d) severe, and (e) proliferative.

Detecting and grading DR at early stages is laborious and time-consuming and requires domain experts []. Moreover, manual screening of DR patients leads to extensive inconsistency among clinicians. Approximately 79% of DR patients live in underdeveloped or developing nations, which lack ophthalmologists and the basic infrastructure for DR detection []. With the rapid spread of DR worldwide, manual screening cannot keep pace with the demand for diagnosis [].

Owing to developments in computer vision, researchers have proposed numerous automatic techniques for the diagnosis of DR. Improving computer-aided diagnosis (CAD) systems involves various challenges, such as identifying lesions in a retinal image, segmenting the optic disc, and segmenting blood vessels [,]. Although machine learning-based systems have shown strong performance in DR detection, their efficacy depends heavily on handcrafted features, which are very difficult to generalize []. To overcome such limitations, deep learning (DL) methods perform automatic feature extraction and classification directly from fundus images. The main aim of this study is to present an efficient method for classifying early-stage DR to assist ophthalmologists. The key contributions of this study are as follows:

- A VGGNet model based on transfer learning is proposed for detecting and classifying diabetic retinopathy.

- Implementation of various preprocessing techniques, such as interpolation-based image resizing, weighted Gaussian blur, and CLAHE, to improve the quality and visibility of retinal images.

- Data augmentation operations performed on each DR grade individually to overcome annotated data insufficiency and to obtain a balanced dataset.

- A comprehensive, accurate, and robust DR classification system is developed.

- Evaluation of the proposed model is performed on a large dataset, EyePACS, with 35,126 retinal fundus images.

- Various performance measures, such as accuracy, sensitivity, specificity, and the AUC, are used to verify the model's diagnostic ability.

2. Methodology

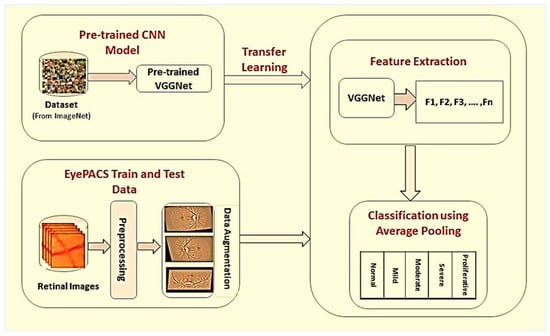

The proposed DR classification framework consists of four main steps: image preprocessing, data augmentation, feature extraction, and classification. A graphical illustration of the proposed work is presented in Figure 2.

Figure 2.

Proposed framework for the detection and classification of diabetic retinopathy. In the first phase, retinal images are preprocessed, and data augmentation operations are performed individually on each grade of DR to improve classification accuracy. Finally, a transfer learning-based model is used for automatic feature extraction and classification of DR into different stages.

2.1. The Kaggle EyePACS Dataset

Retinal fundus images were obtained from the public EyePACS dataset via Kaggle.com (accessed on 24 March 2021). These images were labeled by ophthalmologists and divided into five categories: normal, mild, moderate, severe, and proliferative DR, as shown in Figure 1. The details of this dataset are given in Table 1.

Table 1.

Kaggle EyePACS dataset details.

2.2. Image Preprocessing

The EyePACS dataset is heterogeneous: it combines images from several smaller datasets captured with different cameras and settings, at different sizes, and with large variations in brightness and illumination. We therefore applied several preprocessing steps to standardize these images. First, we resized the fundus images to a uniform size using bicubic interpolation over a 4 × 4-pixel neighborhood. We preferred this interpolation over simple resizing because it preserves image quality while keeping the aspect ratio fixed.
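As a rough illustration of bicubic interpolation over a 4 × 4 neighborhood, the Python sketch below computes one output pixel with the Keys cubic convolution kernel. The kernel parameter a = -0.5 is an assumption (libraries choose different values), and the helper names are hypothetical; the study does not specify its resizing implementation. A full resize would evaluate this for every output pixel after mapping it back to source coordinates.

```python
def cubic_weight(x: float, a: float = -0.5) -> float:
    """Keys cubic convolution kernel (a = -0.5 is an assumed, common choice)."""
    x = abs(x)
    if x <= 1.0:
        return (a + 2) * x**3 - (a + 3) * x**2 + 1
    if x < 2.0:
        return a * x**3 - 5 * a * x**2 + 8 * a * x - 4 * a
    return 0.0


def bicubic_sample(patch, tx: float, ty: float, a: float = -0.5) -> float:
    """Interpolate one output pixel from a 4 x 4 neighborhood.

    patch[r][c] holds the source pixels at row/column offsets -1, 0, 1, 2
    around the base pixel patch[1][1]; tx and ty in [0, 1) are the
    fractional distances of the sample point from that base pixel.
    """
    offsets = (-1, 0, 1, 2)
    wx = [cubic_weight(tx - k, a) for k in offsets]  # horizontal weights
    wy = [cubic_weight(ty - k, a) for k in offsets]  # vertical weights
    # The kernel is separable, so each 2D weight is a product of 1D weights.
    return sum(wy[r] * wx[c] * patch[r][c] for r in range(4) for c in range(4))
```

Because the four weights always sum to 1, a flat region stays flat, and with a = -0.5 a linear intensity ramp is reproduced exactly, which is why this interpolation avoids the blockiness of nearest-neighbor resizing.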

The retinal images are generally yellowish with a dark background. The background contains no fundus detail and can therefore be removed to reduce noise. Applying histogram equalization to images that include the black background spreads the darkness into the image details []. To avoid this, we removed the black background during preprocessing by thresholding: background pixels were set to zero, and all bright (retinal) regions were kept non-zero. After thresholding, the green channel was extracted, because it preserves more retinal detail than the red or blue channels. Contrast limited adaptive histogram equalization (CLAHE) was then applied to improve the quality of the retinal image and to enhance small regions.
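A minimal sketch of the background removal and green-channel extraction described above, assuming images stored as nested lists of 8-bit (R, G, B) tuples. The threshold of 10 and the rule that a pixel is background when it is dark in all channels are hypothetical choices for illustration, not values reported in the study.

```python
def remove_background_and_take_green(rgb, threshold=10):
    """Zero the dark background and keep the green channel elsewhere.

    rgb is a list of rows of (R, G, B) tuples with 8-bit values. The
    threshold is a hypothetical value chosen for illustration.
    """
    green = []
    for row in rgb:
        out_row = []
        for r, g, b in row:
            # A pixel counts as background when every channel is dark.
            is_background = max(r, g, b) <= threshold
            out_row.append(0 if is_background else g)
        green.append(out_row)
    return green


image = [[(0, 0, 0), (200, 120, 40)], [(5, 3, 8), (90, 60, 30)]]
print(remove_background_and_take_green(image))  # [[0, 120], [0, 60]]
```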

Then, weighted Gaussian blur was applied to the images to reduce noise and enhance image structure []. The two-dimensional Gaussian function of (x, y) with standard deviation σ is given in Equation (1):

$$G(x, y) = \frac{1}{2\pi\sigma^{2}} \exp\left(-\frac{x^{2} + y^{2}}{2\sigma^{2}}\right) \qquad (1)$$
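The sketch below samples Equation (1) into a normalized kernel and applies one possible reading of "weighted Gaussian blur": the blend 4 × image - 4 × blurred image + 128 popularized in Graham's Kaggle competition solution, which subtracts the local average intensity. The weights (4, -4, 128), σ = 1, and the kernel radius are assumptions; the study does not list them. Images are grayscale nested lists for simplicity.

```python
import math


def gaussian_kernel(sigma, radius):
    """Sample Equation (1) on a (2r+1) x (2r+1) grid and normalize it to sum to 1."""
    k = [[math.exp(-(x * x + y * y) / (2 * sigma**2)) / (2 * math.pi * sigma**2)
          for x in range(-radius, radius + 1)]
         for y in range(-radius, radius + 1)]
    total = sum(sum(row) for row in k)
    return [[v / total for v in row] for row in k]


def blur(img, kernel):
    """Convolve a grayscale image (list of rows) with replicated borders."""
    h, w, r = len(img), len(img[0]), len(kernel) // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for dy in range(-r, r + 1):
                for dx in range(-r, r + 1):
                    yy = min(max(i + dy, 0), h - 1)  # clamp row index
                    xx = min(max(j + dx, 0), w - 1)  # clamp column index
                    acc += kernel[dy + r][dx + r] * img[yy][xx]
            out[i][j] = acc
    return out


def weighted_gaussian_blur(img, sigma=1.0, radius=3, alpha=4.0, beta=128.0):
    """Assumed Graham-style blend: alpha*img - alpha*blur(img) + beta, clipped to [0, 255]."""
    blurred = blur(img, gaussian_kernel(sigma, radius))
    return [[min(max(alpha * p - alpha * q + beta, 0.0), 255.0)
             for p, q in zip(row, brow)]
            for row, brow in zip(img, blurred)]
```

Uniform regions map to mid-gray (128), while edges such as vessel boundaries are pushed toward black or white, which is the sense in which this blend "increases image structure".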

2.3. Data Augmentation

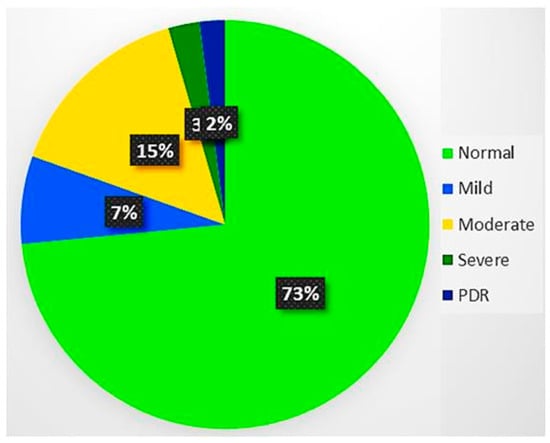

The size of the training dataset is one of the key factors in the performance of DL models, so a large dataset is needed to train deep learning architectures without poor generalization and overfitting. Although the Kaggle EyePACS dataset is reasonably large, it is very small compared with the ImageNet [] dataset. The class distribution was also highly imbalanced, as shown in Figure 3, with most images belonging to grade 0. The grades appear in a ratio of 36:3:7:1:1 (normal:mild:moderate:severe:proliferative), which may lead to incorrect classification. We performed data augmentation operations to enlarge the retinal dataset at various scales and to eliminate the noise in fundus images.

Figure 3.

Dataset distribution over DR severity. About 73% of the images were in the normal category, whereas only 2% were in the proliferative DR category, giving an imbalance of 36:1 between normal and proliferative DR.
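The imbalance figures quoted above can be checked with a few lines of Python. The per-grade counts below are the publicly reported class counts of the Kaggle EyePACS training set (they sum to 35,126); treating them as the exact distribution behind Figure 3 is an assumption.

```python
# Publicly reported per-grade image counts of the Kaggle EyePACS training set
# (grades 0-4); assumed here to match the distribution in Figure 3.
COUNTS = {0: 25810, 1: 2443, 2: 5292, 3: 873, 4: 708}


def class_ratio(counts):
    """Express each grade's count relative to the smallest grade, rounded."""
    smallest = min(counts.values())
    return tuple(round(counts[g] / smallest) for g in sorted(counts))


def class_shares(counts):
    """Percentage of the dataset that each grade represents."""
    total = sum(counts.values())
    return {g: 100 * n / total for g, n in counts.items()}


print(class_ratio(COUNTS))             # (36, 3, 7, 1, 1)
print(round(class_shares(COUNTS)[0]))  # 73
```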

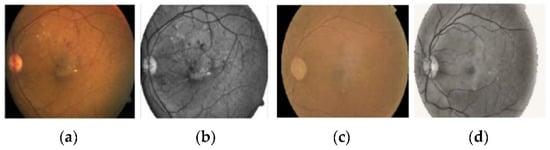

Because the dataset is highly imbalanced, we applied different data augmentation operations to each grade. Examples of some augmentation operations applied to preprocessed images are shown in Figure 6. These operations include cropping, flipping, translating, shearing, rotating, zooming, Gaussian scale-space theory (GST) augmentation [], and Krizhevsky augmentation []. To produce visually appealing images, the CLAHE clip limit was set to 2 with an 8 × 8 tile grid, as shown in Figure 4.

Figure 4.

The preprocessed retinal images after applying contrast limited adaptive histogram equalization to adjust contrast in images. (a,c) Original retinal fundus images, (b,d) preprocessed images.
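To make the clip-limit setting concrete, the following sketch shows the per-tile step of CLAHE in pure Python: build the tile histogram, clip it, redistribute the excess evenly, and map intensities through the cumulative distribution. Interpreting the clip limit of 2 relative to the mean bin height is an assumption about normalization, and full CLAHE additionally splits the image into the 8 × 8 tile grid and blends neighboring tiles' lookup tables bilinearly, which this single-pass sketch omits.

```python
def clipped_equalization_lut(tile, clip_limit=2.0, bins=256):
    """Per-tile step of CLAHE: clip the histogram, redistribute, build a lookup table.

    tile is a flat list of 8-bit intensities.
    """
    n = len(tile)
    hist = [0] * bins
    for v in tile:
        hist[v] += 1
    # Clip limit expressed relative to the average bin height (assumed normalization).
    limit = max(int(clip_limit * n / bins), 1)
    excess = 0
    for i in range(bins):
        if hist[i] > limit:
            excess += hist[i] - limit
            hist[i] = limit
    # Spread the clipped excess as evenly as possible over all bins.
    bonus, remainder = divmod(excess, bins)
    for i in range(bins):
        hist[i] += bonus + (1 if i < remainder else 0)
    # Cumulative distribution -> output intensity for each input intensity.
    lut, cumulative = [], 0
    for count in hist:
        cumulative += count
        lut.append(round((bins - 1) * cumulative / n))
    return lut
```

For a 64-pixel tile split evenly between intensities 0 and 200, unclipped equalization maps intensity 0 to 128, whereas the clipped version maps it to 8; this is how the clip limit restrains contrast amplification in nearly uniform regions.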

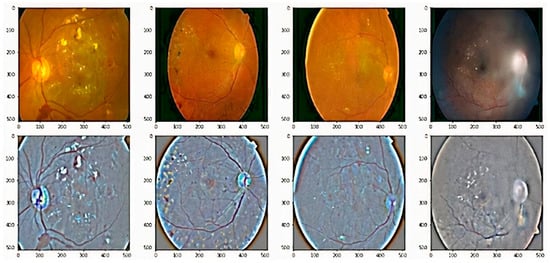

To improve image quality and enhance image structure, we applied weighted Gaussian blur to the retinal fundus images. Figure 5 shows the images before and after this step.

Figure 5.

Examples of applying weighted Gaussian blur to retinal images to reduce noise and enhance image structure. The first row shows the original fundus images, and the second row shows the corresponding preprocessed images.

Cropping: Images were randomly cropped from the corners and the center to 60–75% of the original image.

Flipping: Images were flipped on both the X and Y axes.

Translating: Images were shifted between 0 and 30 pixels.

Shearing: Images were sheared between 0 and 180 degrees randomly.

Rotating: Images were rotated randomly in the range of 0 to 360 degrees.

Zooming: Images were zoomed by a random factor in the range 0.7 to 1.3.

GST Augmentation: GST-based augmentation was applied to the two-dimensional images.

Krizhevsky Augmentation: The Krizhevsky color augmentation technique was applied to augment the dataset.
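The parameter ranges listed above can be expressed as a random sampler. This is a hypothetical sketch: the AugmentParams, sample_params, and crop_box names are illustrative, and reading "60–75% of the original image" as a fraction of each side length is an assumption. Applying the geometric transforms themselves would typically be delegated to an image library.

```python
import random
from dataclasses import dataclass


@dataclass
class AugmentParams:
    crop_fraction: float  # fraction of each side kept (assumed reading of "60-75%")
    crop_anchor: str      # "center" or one of the four corners
    flip_x: bool
    flip_y: bool
    shift_px: tuple       # (dx, dy), each 0-30 pixels
    shear_deg: float      # 0-180 degrees, as stated in the text
    rotate_deg: float     # 0-360 degrees
    zoom: float           # 0.7-1.3


ANCHORS = ("center", "top_left", "top_right", "bottom_left", "bottom_right")


def sample_params(rng: random.Random) -> AugmentParams:
    """Draw one random set of parameters within the ranges listed above."""
    return AugmentParams(
        crop_fraction=rng.uniform(0.60, 0.75),
        crop_anchor=rng.choice(ANCHORS),
        flip_x=rng.random() < 0.5,
        flip_y=rng.random() < 0.5,
        shift_px=(rng.randint(0, 30), rng.randint(0, 30)),
        shear_deg=rng.uniform(0.0, 180.0),
        rotate_deg=rng.uniform(0.0, 360.0),
        zoom=rng.uniform(0.7, 1.3),
    )


def crop_box(width, height, fraction, anchor):
    """Return (left, top, right, bottom) of the crop window for a given anchor."""
    cw, ch = int(width * fraction), int(height * fraction)
    left = {"center": (width - cw) // 2, "top_left": 0, "bottom_left": 0}.get(anchor, width - cw)
    top = {"center": (height - ch) // 2, "top_left": 0, "top_right": 0}.get(anchor, height - ch)
    return left, top, left + cw, top + ch


print(crop_box(200, 200, 0.75, "center"))  # (25, 25, 175, 175)
```

Seeding the random.Random instance makes each augmented copy reproducible, which helps when the same per-grade augmentation plan has to be regenerated.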

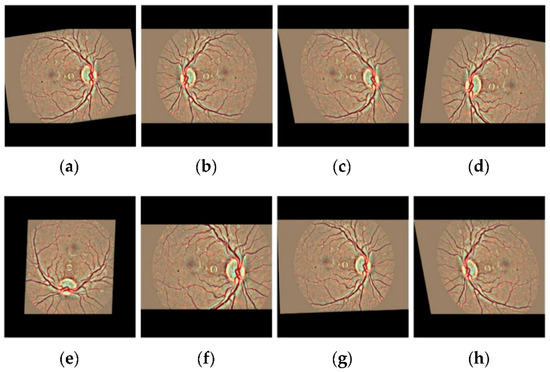

Figure 6 shows images after applying various data augmentation operations.

Figure 6.

Examples of augmentation operations performed on preprocessed images to augment the retinal dataset: (a) original image, (b) cropping, (c) shearing, (d) flipping, (e) rotating, (f) zooming, (g) translating, and (h) all augmentations combined.

Table 2 gives the details of the augmentation operations applied to the training dataset. Different augmentation operations were performed on each grade of fundus images. The augmented dataset was 3.6 times larger than the original dataset and was balanced, with a 1:1 ratio across all DR grades.

Table 2.

The dataset statistics by using data augmentation operations.
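One simple way to plan per-grade augmentation toward a 1:1 balance is to compute how many versions of each image a grade needs to reach a common target count. The sketch below uses the publicly reported Kaggle training counts and the largest grade as the target, both illustrative assumptions; the statistics in Table 2 reflect the authors' actual choices.

```python
def copies_needed(counts, target):
    """Versions (original + augmented) needed per image so each grade reaches `target`.

    Uses integer ceiling division; any surplus beyond `target` would be
    dropped to keep the grades at 1:1.
    """
    return {grade: -(-target // n) for grade, n in counts.items()}


counts = {0: 25810, 1: 2443, 2: 5292, 3: 873, 4: 708}
print(copies_needed(counts, target=max(counts.values())))
# {0: 1, 1: 11, 2: 5, 3: 30, 4: 37}
```

The rarest grades need dozens of variants per image, which is why different, and more varied, augmentation operations are applied to them than to the majority grade.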

2.4. Proposed Architecture

Although deep learning algorithms perform well on many classification problems, the main obstacle in medical image classification is the lack of labeled data. Transfer learning is widely adopted to overcome annotated data insufficiency by reusing deep convolutional neural networks that were already trained on another, related task. It can therefore reduce the training overhead and allow training with a smaller dataset. This raises the question of how transfer learning can be applied to overcome the limited availability of annotated data in deep learning-based medical image classification. In this study, we chose a pre-trained VGG16 network to take advantage of its fixed-size 3 × 3 convolution filters for detection.

The architecture of the proposed model is shown in Table 3. It consists of 16 layers with an input size of 512 × 512. All convolutional layers used a 3 × 3 kernel with bias and a stride of 1, except the first layer, which used a stride of 2. All max-pooling layers used a 2 × 2 kernel with a stride of 2, and the extracted features were flattened before being passed to the fully connected layers. The rectified linear unit (ReLU) activation function was used in all layers, and dropout of 0.5 was applied before the first two fully connected layers to avoid overfitting. After the convolutional layers, two fully connected layers with 1024 neurons each were added. Finally, a softmax output layer was used for the early-stage detection and classification of diabetic retinopathy.

Table 3.

The proposed architecture for early-stage detection and classification of diabetic retinopathy, comprising 16 layers.
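The spatial sizes implied by this description can be traced with the usual output-size formula floor((n + 2p - k) / s) + 1. The sketch assumes the standard VGG16 channel layout (13 convolutional layers in five blocks), padding of 1 for every 3 × 3 convolution, and a five-unit softmax output for the five DR grades; under those assumptions the 13 convolutional layers, the two 1024-unit dense layers, and the output layer account for the 16 layers.

```python
def conv_out(n, k=3, s=1, p=1):
    """Output side length of a square convolution or pooling window."""
    return (n + 2 * p - k) // s + 1


# Standard VGG16 channel layout: five blocks of 3x3 convolutions.
VGG16_BLOCKS = [[64, 64], [128, 128], [256, 256, 256], [512, 512, 512], [512, 512, 512]]


def trace_shapes(size=512, blocks=VGG16_BLOCKS):
    """List (layer, side, channels) for each convolution and max-pooling layer."""
    shapes, first = [], True
    for block in blocks:
        for channels in block:
            # Stride 2 for the first convolution only, stride 1 elsewhere; padding 1 (assumed).
            size = conv_out(size, k=3, s=2 if first else 1, p=1)
            first = False
            shapes.append(("conv", size, channels))
        size = conv_out(size, k=2, s=2, p=0)  # 2x2 max pooling, stride 2
        shapes.append(("pool", size, block[-1]))
    return shapes


shapes = trace_shapes()
_, side, channels = shapes[-1]
flattened = side * side * channels
# Weights + biases of the dense head: 1024 -> 1024 -> 5 (five-class output assumed).
dense_params = (flattened * 1024 + 1024) + (1024 * 1024 + 1024) + (1024 * 5 + 5)
print(shapes[-1], flattened)  # ('pool', 8, 512) 32768
```

The stride-2 first layer halves the 512 × 512 input before any pooling, so the final feature map is 8 × 8 × 512 rather than the 16 × 16 × 512 that five poolings alone would give; the first dense layer still holds most of the head's parameters.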

2.5. Training

The transfer learning-based VGGNet was trained by fine-tuning its hyperparameters. The model was optimized with the Adam optimizer at a learning rate of 0.0001. The network weights were initialized randomly, and the network was trained with a batch size of 32 for 300 epochs. The momentum was set to 0.9, and categorical cross-entropy was used as the objective function. Data augmentation operations were performed separately on each grade to address class imbalance. The details of these hyperparameters are given in Table 4.

Table 4.

Hyperparameters used to train the proposed model.
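For reference, the sketch below spells out the objective and optimizer named above: softmax with categorical cross-entropy over the five grades, and the Adam update using the stated learning rate of 0.0001, with 0.9 taken as the first-moment (momentum) coefficient. Mapping "momentum" to Adam's first-moment coefficient is an interpretation, and the second-moment coefficient 0.999 and epsilon 1e-8 are the common Adam defaults, assumed here because the text does not give them.

```python
import math


def softmax(logits):
    """Numerically stable softmax over one image's class scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def categorical_cross_entropy(probs_batch, labels, eps=1e-12):
    """Mean of -log p(true grade) over the batch; labels are grade indices 0-4."""
    return -sum(math.log(max(p[y], eps)) for p, y in zip(probs_batch, labels)) / len(labels)


class Adam:
    """Plain-Python Adam for a flat list of parameters."""

    def __init__(self, lr=1e-4, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.b1, self.b2, self.eps = lr, beta1, beta2, eps
        self.m, self.v, self.t = None, None, 0

    def step(self, params, grads):
        if self.m is None:
            self.m = [0.0] * len(params)
            self.v = [0.0] * len(params)
        self.t += 1
        updated = []
        for i, (p, g) in enumerate(zip(params, grads)):
            self.m[i] = self.b1 * self.m[i] + (1 - self.b1) * g        # first moment
            self.v[i] = self.b2 * self.v[i] + (1 - self.b2) * g * g    # second moment
            m_hat = self.m[i] / (1 - self.b1 ** self.t)                # bias correction
            v_hat = self.v[i] / (1 - self.b2 ** self.t)
            updated.append(p - self.lr * m_hat / (math.sqrt(v_hat) + self.eps))
        return updated
```

On the first step the bias-corrected update is about lr times the sign of each gradient, so a learning rate of 0.0001 moves every pre-trained weight only slightly, which suits fine-tuning.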

3. Results and Discussion

This section presents a detailed evaluation of the proposed transfer learning-based scheme for diabetic retinopathy classification. After data augmentation, the Kaggle EyePACS dataset was split into training (80%) and testing (20%) sets.
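The text does not state whether the 80/20 split was stratified by grade. The sketch below assumes a per-grade (stratified) split with a fixed seed, so every DR grade keeps the same 80/20 proportion; stratified_split is a hypothetical helper, not part of the authors' code.

```python
import random
from collections import defaultdict


def stratified_split(labels, train_fraction=0.8, seed=0):
    """Return sorted (train_idx, test_idx), splitting each DR grade independently."""
    by_grade = defaultdict(list)
    for idx, grade in enumerate(labels):
        by_grade[grade].append(idx)
    rng = random.Random(seed)
    train, test = [], []
    for grade in sorted(by_grade):
        idxs = by_grade[grade]
        rng.shuffle(idxs)
        cut = round(len(idxs) * train_fraction)
        train.extend(idxs[:cut])
        test.extend(idxs[cut:])
    return sorted(train), sorted(test)
```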

3.1. The System Configurations

The experiments were run on a system with the following specifications:

OS: Linux Mint 18.1;

CPU: Intel(R) Core(TM) i5-3470 CPU (3rd generation) @ 3.20 GHz;

CPU RAM: 16 GB;

GPU: Quadro K620;

GPU memory: 12 GB.

3.2. The Performance Metrics

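To evaluate the overall performance of the presented scheme, we derived the classification results of the proposed DR model from a confusion matrix [] and then computed the following performance metrics, where TP, TN, FP, and FN denote true positives, true negatives, false positives, and false negatives, respectively.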

Precision: The ratio of the number of TP to the total number of samples predicted as belonging to the positive class (i.e., the sum of TP and FP). Precision is also called the positive predictive value (PPV) and is computed as in Equation (2):

$$\text{Precision} = \frac{TP}{TP + FP} \qquad (2)$$

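F1 Score: The harmonic mean of precision and recall, where recall (sensitivity) is the ratio of TP to all positive samples, as in Equation (3):

$$F1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}, \qquad \text{Recall} = \frac{TP}{TP + FN} \qquad (3)$$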

Accuracy: The ratio of correctly classified samples (TP and TN) to all samples (TP, TN, FP, and FN), as in Equation (4):

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \qquad (4)$$

Specificity: The ratio of the number of TN to the total number of samples that belong to the negative class (i.e., the sum of TN and FP), as in Equation (5):

$$\text{Specificity} = \frac{TN}{TN + FP} \qquad (5)$$

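The Area Under the Curve (AUC): The area under the receiver operating characteristic (ROC) curve, obtained as a definite integral of the true positive rate (TPR) over the false positive rate (FPR) between 0 and 1, as in Equation (6):

$$\text{AUC} = \int_{0}^{1} \text{TPR}(\text{FPR}) \, d(\text{FPR}) \qquad (6)$$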
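For the five-grade problem, Equations (2)-(5) are typically applied one grade at a time by treating that grade as positive and all other grades as negative. The following sketch assumes that one-vs-rest convention (the text does not say how the per-class counts were pooled) and a confusion matrix given as nested lists; the helper names are illustrative.

```python
def one_vs_rest_counts(cm, k):
    """(TP, FP, FN, TN) for grade k; cm[i][j] counts true grade i predicted as j."""
    total = sum(map(sum, cm))
    tp = cm[k][k]
    fp = sum(cm[i][k] for i in range(len(cm))) - tp
    fn = sum(cm[k]) - tp
    tn = total - tp - fp - fn
    return tp, fp, fn, tn


def metrics_for_class(cm, k):
    tp, fp, fn, tn = one_vs_rest_counts(cm, k)
    precision = tp / (tp + fp) if tp + fp else 0.0              # Equation (2)
    recall = tp / (tp + fn) if tp + fn else 0.0                 # sensitivity
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)                       # Equation (3)
    accuracy = (tp + tn) / (tp + tn + fp + fn)                  # Equation (4)
    specificity = tn / (tn + fp) if tn + fp else 0.0            # Equation (5)
    return {"precision": precision, "recall": recall, "f1": f1,
            "accuracy": accuracy, "specificity": specificity}


def overall_accuracy(cm):
    """Multi-class accuracy: correctly graded images over all images."""
    return sum(cm[i][i] for i in range(len(cm))) / sum(map(sum, cm))
```

Note that per-grade one-vs-rest accuracy is usually higher than the overall multi-class accuracy, because every correctly rejected image of another grade counts as a true negative.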

3.3. Result Analysis

After training, the proposed model was evaluated on the features extracted through transfer learning. In addition to the proposed VGGNet approach, other transfer learning models were implemented for comparison, as shown in Table 5.

Table 5.

Performance comparison of the proposed model on the original and augmented datasets.

Table 5 shows that ResNet, GoogLeNet, and AlexNet achieved average accuracies of 92.40%, 93.75%, and 94.62%, respectively, whereas the proposed model achieved an accuracy of about 96.61%, which indicates that it is an efficient method for detecting and classifying diabetic retinopathy. As described above, 80% of the data were used for training, and the remaining 20% of unseen data were used to test the proposed architecture. The proposed model was assessed on both the original and augmented datasets, as presented in Table 6.

Table 6.

Performance comparison of the proposed model and existing transfer learning models on the original and augmented datasets.

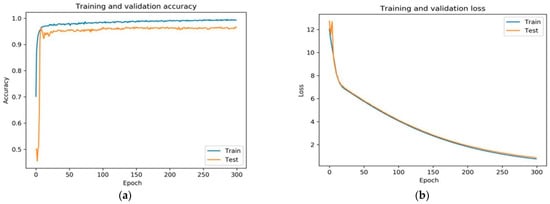

The proposed model achieved higher accuracy in detecting and classifying diabetic retinopathy on the balanced augmented dataset. Figure 7a,b shows that training and validation accuracy improved steadily while the corresponding losses decreased.

Figure 7.

(a) Training and validation accuracy of the proposed framework; (b) training and validation loss of the proposed fine-tuned VGGNet framework.

The fine-tuned VGGNet performed well: training and validation accuracy improved continuously with each epoch, and the loss curves show that training and validation loss decreased with each epoch.

The augmented data obtained with the various data augmentation techniques were divided into training and testing sets, as shown in Table 7.

Table 7.

The distribution of augmented data into training and testing data.

In addition to the average results, we also report the best results, because validation accuracy can change from epoch to epoch during CNN training. Table 8 shows the diagnostic test results for the prevalence of the disease.

Table 8.

Diagnostic test for the prevalence of the disease.

The proposed architecture was compared with five state-of-the-art methods to assess its relative strength, as shown in Table 9.

Table 9.

Performance comparison of the proposed framework with baseline methods.

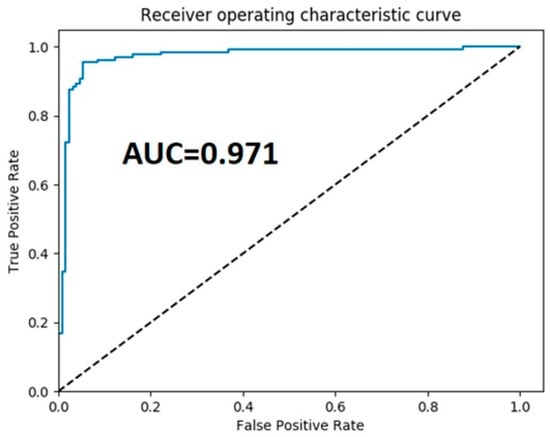

The methods in [,,,,,,,,,,,] achieved average accuracies of 82.3%, 85.3%, 90.4%, 78.7%, 95.68%, 95.03%, and so on, as listed in Table 9. The proposed method achieved an accuracy of 96.6%, which is higher than that of the baseline methods. These experimental results demonstrate the strength of the proposed architecture in terms of accuracy compared with existing methods. Figure 8 shows the receiver operating characteristic (ROC) curve and the AUC of the proposed model.

Figure 8.

The receiver operating characteristic (ROC) curve of the proposed framework.
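Equation (6) is usually evaluated numerically: sweep a decision threshold over the model's scores, record (FPR, TPR) pairs, and integrate with the trapezoidal rule. The sketch below does this for one grade versus the rest (labels of 1 for the chosen grade, 0 otherwise, e.g. built as `[1 if y == grade else 0 for y in true_grades]`); it is a generic illustration, not the authors' plotting code.

```python
def roc_points(scores, labels):
    """(FPR, TPR) points for binary labels (1 = positive), thresholds from high to low."""
    pos = sum(labels)
    neg = len(labels) - pos
    if pos == 0 or neg == 0:
        raise ValueError("need both positive and negative samples")
    pairs = sorted(zip(scores, labels), key=lambda t: -t[0])
    tp = fp = 0
    points = [(0.0, 0.0)]
    i = 0
    while i < len(pairs):
        # Process tied scores together so the curve does not depend on input order.
        s = pairs[i][0]
        while i < len(pairs) and pairs[i][0] == s:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / neg, tp / pos))
    return points


def auc_trapezoid(points):
    """Approximate Equation (6) with the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


print(auc_trapezoid(roc_points([0.9, 0.8, 0.3, 0.1], [1, 1, 0, 0])))  # 1.0
```

A score that ranks every positive above every negative gives an AUC of 1.0, while constant scores give 0.5, the value of random guessing.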

4. Conclusions

In this paper, we proposed a framework for the automatic detection and classification of diabetic retinopathy using transfer learning. For preprocessing, we employed effective techniques such as interpolation-based image resizing, weighted Gaussian blur, and non-local mean denoising (NLMD) to improve image visibility. After preprocessing, a transfer learning-based VGGNet architecture was used to classify DR into the normal, mild, moderate, severe, and proliferative classes. The experiments were conducted on the Kaggle EyePACS public dataset, and the effectiveness of the method was evaluated using metrics such as sensitivity, specificity, accuracy, and area under the curve.

We extracted features from fundus images using the VGGNet architecture combined with transfer learning to improve classification performance. Moreover, we applied different data augmentation operations to each DR grade to obtain a balanced dataset and improve the efficiency of the architecture. Finally, the results of the proposed study were compared with several deep learning architectures and with baseline methods. The proposed method performs strongly across various statistical measures. In the future, we intend to combine hand-engineered features with the CNN to further improve classification accuracy.

Author Contributions

Conceptualization, M.K.J.; methodology, M.K.J.; software, M.K.J.; validation, Z.U.R.; formal analysis, M.K.J., A.J.; investigation, A.J.; resources, H.X.; data curation, M.K.J., Z.U.R.; writing—original draft preparation, M.K.J.; writing—review and editing, H.X., Z.U.R., M.K.J.; visualization, Z.U.R., A.J.; supervision, J.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request to the corresponding author.

Acknowledgments

Open Access Funding by the Beijing University of Technology.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zhang, W.; Liu, H.; Al-Shabrawey, M.; Caldwell, R.W.; Caldwell, R.B. Inflammation and diabetic retinal microvascular complications. J. Cardiovasc. Dis. Res. 2011, 2, 96–103.

- Krug, E.G. Trends in diabetes: Sounding the alarm. Lancet 2016, 387, 1485–1486.

- Chen, T.-H.; Tsai, M.-J.; Fu, Y.-S.; Weng, C.-F. The Exploration of Natural Compounds for Anti-Diabetes from Distinctive Species Garcinia linii with Comprehensive Review of the Garcinia Family. Biomolecules 2019, 9, 641.

- Saeedi, P.; Salpea, P.; Karuranga, S.; Petersohn, I.; Malanda, B.; Gregg, E.W.; Unwin, N.; Wild, S.H.; Williams, R. Mortality attributable to diabetes in 20–79 years old adults, 2019 estimates: Results from the International Diabetes Federation Diabetes Atlas. Diabetes Res. Clin. Pract. 2020, 162, 108086.

- Grzybowski, A.; Brona, P.; Lim, G.; Ruamviboonsuk, P.; Tan, G.S.W.; Abramoff, M.; Ting, D.S.W. Artificial intelligence for diabetic retinopathy screening: A review. Eye 2020, 34, 451–460.

- Lam, C.; Yi, D.; Guo, M.; Lindsey, T. Automated Detection of Diabetic Retinopathy using Deep Learning. AMIA Jt. Summits Transl. Sci. Proc. 2018, 2018, 147–155.

- Alyoubi, W.L.; Shalash, W.M.; Abulkhair, M.F. Diabetic retinopathy detection through deep learning techniques: A review. Inform. Med. Unlocked 2020, 20, 100377.

- Ishtiaq, U.; Kareem, S.A.; Abdullah, E.R.M.F.; Mujtaba, G.; Jahangir, R.; Ghafoor, H.Y. Diabetic retinopathy detection through artificial intelligent techniques: A review and open issues. Multimed. Tools Appl. 2020, 79, 15209–15252.

- Abràmoff, M.D.; Lou, Y.; Erginay, A.; Clarida, W.; Amelon, R.; Folk, J.C.; Niemeijer, M. Improved automated detection of diabetic retinopathy on a publicly available dataset through integration of deep learning. Investig. Ophthalmol. Vis. Sci. 2016, 57, 5200–5206.

- Nentwich, M.M.; Ulbig, M.W. Diabetic retinopathy-ocular complications of diabetes mellitus. World J. Diabetes 2015, 6, 489.

- El-Bab, M.F.; Zaki, N.S.; AL-Sisi, A.; Akhtar, M. Retinopathy and risk factors in diabetic patients from Al-Madinah Al-Munawarah in the Kingdom of Saudi Arabia. Clin. Ophthalmol. 2012, 6, 269–276.

- Nagy, M.; Radakovich, N.; Nazha, A. Machine learning in oncology: What should clinicians know? JCO Clin. Cancer Inform. 2020, 4, 799–810.

- Ting, D.S.W.; Cheung, C.M.G.; Wong, T.Y. Diabetic retinopathy: Global prevalence, major risk factors, screening practices and public health challenges: A review. Clin. Exp. Ophthalmol. 2016, 44, 260–277.

- Gulshan, V.; Rajan, R.; Widner, K.; Wu, D.; Wubbels, P.; Rhodes, T.; Whitehouse, K.; Coram, M.; Corrado, G.; Ramasamy, K.; et al. Performance of a Deep-Learning Algorithm vs Manual Grading for Detecting Diabetic Retinopathy in India. JAMA Ophthalmol. 2019, 137, 987–993.

- ElTanboly, A.; Ismail, M.; Shalaby, A.; Switala, A.; El-Baz, A.; Schaal, S.; Gimel’Farb, G.; El-Azab, M.; Switala, A.; El-Bazy, A. A computer-aided diagnostic system for detecting diabetic retinopathy in optical coherence tomography images. Med. Phys. 2017, 44, 914–923.

- Jadhav, A.S.; Patil, P.B.; Biradar, S. Computer-aided diabetic retinopathy diagnostic model using optimal thresholding merged with neural network. Int. J. Intell. Comput. Cybern. 2020, 13, 283–310.

- Asiri, N.; Hussain, M.; Al Adel, F.; Alzaidi, N. Deep learning based computer-aided diagnosis systems for diabetic retinopathy: A survey. Artif. Intell. Med. 2019, 99, 101701.

- Suma, K.G.; Kumar, V.S. A Quantitative Analysis of Histogram Equalization-Based Methods on Fundus Images for Diabetic Retinopathy Detection. In Computational Intelligence and Big Data Analytics; Springer: Singapore, 2019; pp. 55–63.

- Graham, B. Kaggle Diabetic Retinopathy Detection Competition Report; University of Warwick: Coventry, UK, 2015.

- Kornblith, S.; Shlens, J.; Le, Q.V. Do better imagenet models transfer better? In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2661–2671.

- Imran, A.; Li, J.; Pei, Y.; Mokbal, F.M.; Yang, J.J.; Wang, Q. Enhanced intelligence using collective data augmentation for CNN based cataract detection. In Proceedings of the International Conference on Frontier Computing, Kyushu, Japan, 9–12 July 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 148–160.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

- Townsend, J.T. Theoretical analysis of an alphabetic confusion matrix. Percept. Psychophys. 1971, 9, 40–50.

- Rakhlin, A. Diabetic Retinopathy detection through integration of Deep Learning classification framework. BioRxiv 2018, 163, 225508.

- Sengupta, S.; Singh, A.; Zelek, J.; Lakshminarayanan, V. Cross-domain diabetic retinopathy detection using deep learning. Appl. Mach. Learn. Int. Soc. Opt. Photonics 2019, 11139, 111390V.

- Gulshan, V.; Peng, L.; Coram, M.; Stumpe, M.C.; Wu, D.; Narayanaswamy, A.; Venugopalan, S.; Widner, K.; Madams, T.; Cuadros, J.; et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA 2016, 316, 2402–2410.

- Chang, K.; Balachandar, N.; Lam, C.K.; Yi, D.; Brown, J.; Beers, A.; Rosen, B.R.; Rubin, D.L.; Kalpathy-Cramer, J. Distributed deep learning networks among institutions for medical imaging. J. Am. Med. Inform. Assoc. 2018, 25, 945–954.

- Wan, S.; Liang, Y.; Zhang, Y. Deep convolutional neural networks for diabetic retinopathy detection by image classification. Comput. Electr. Eng. 2018, 72, 274–282.

- Gargeya, R.; Leng, T. Automated Identification of Diabetic Retinopathy Using Deep Learning. Ophthalmology 2017, 124, 962–969.

- Zeng, X.; Chen, H.; Luo, Y.; Ye, W. Automated Diabetic Retinopathy Detection Based on Binocular Siamese-Like Convolutional Neural Network. IEEE Access 2019, 7, 30744–30753.

- Zhang, W.; Zhong, J.; Yang, S.; Gao, Z.; Hu, J.; Chen, Y.; Yi, Z. Automated identification and grading system of diabetic retinopathy using deep neural networks. Knowl. Based Syst. 2019, 175, 12–25.

- Lin, G.M.; Chen, M.J.; Yeh, C.H.; Lin, Y.Y.; Kuo, H.Y.; Lin, M.H.; Chen, M.C.; Lin, S.D.; Gao, Y.; Ran, A.; et al. Transforming retinal photographs to entropy images in deep learning to improve automated detection for diabetic retinopathy. J. Ophthalmol. 2018, 2018, 2159702.

- Li, Z.; Keel, S.; Liu, C.; He, Y.; Meng, W.; Scheetz, J.; Lee, P.Y.; Shaw, J.; Ting, D.; Wong, T.Y.; et al. An automated grading system for detection of vision-threatening referable diabetic retinopathy on the basis of color fundus photographs. Diabetes Care 2018, 41, 2509–2516.

- Seth, S.; Agarwal, B. A hybrid deep learning model for detecting diabetic retinopathy. J. Stat. Manag. Syst. 2018, 21, 569–574.

- Keel, S.; Lee, P.Y.; Scheetz, J.; Li, Z.; Kotowicz, M.A.; MacIsaac, R.J.; He, M. Feasibility and patient acceptability of a novel artificial intelligence-based screening model for diabetic retinopathy at endocrinology outpatient services: A pilot study. Sci. Rep. 2018, 8, 4330.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).