Abstract

Efficient job scheduling reduces energy consumption and enhances the performance of machines in data centers and battery-based computing devices. Online non-clairvoyant job scheduling, although practically important, has been studied less extensively than other settings. In this paper, an online non-clairvoyant scheduling algorithm, Highest Scaled Importance First (HSIF), is proposed, where HSIF selects an active job with the highest scaled importance. The objective considered is to minimize the scaled importance-based flow time plus energy. The processor’s speed is proportional to the total scaled importance of all active jobs. The performance of HSIF is evaluated using potential analysis against an optimal offline adversary and by simulating the execution of a set of jobs using the traditional power function. HSIF is 2-competitive under the arbitrary power function and dynamic speed scaling. The competitive ratio obtained by HSIF is the lowest to date among non-clairvoyant scheduling algorithms. The simulation analysis indicates that the performance of HSIF is the best among the online non-clairvoyant job scheduling algorithms.

1. Introduction

Reducing energy consumption in data centers and battery-based computing devices is increasingly important, and energy consumption has become a prime concern in the design of modern microprocessors. Modern microprocessors [1,2] use dynamic speed scaling to save energy: the processor is designed so that it can vary its speed, and software assists the operating system in doing so. According to the United States Environmental Protection Agency [3], data centers represent 1.5% of total US electricity consumption. The US data center workload estimated for 2020 requires a total electricity use that varies by about 135 billion kWh. Data center workloads continue to grow exponentially; comparable increases in electricity demand have been avoided through the adoption of key energy efficiency measures [4]. Energy consumption can be reduced by scheduling jobs in an appropriate order. In the last few years, many job scheduling algorithms have been proposed with dual objectives [5,6]: first, to optimize some scheduling quality criterion (e.g., flow time or weighted flow time), and second, to minimize energy consumption. Scheduling algorithms with dual objectives have two components [7]:

- Job Selection: determines which of the active jobs to execute first on a processor.

- Speed Scaling: determines the speed of the processor at any time t.

The traditional power function (power $P(s) = s^{\alpha}$, where s and α > 1 are the speed of a processor and a constant, respectively [8,9]) is widely used for the analysis of scheduling algorithms. In this paper, the arbitrary power function [10] is considered. The arbitrary power function has certain advantages over the traditional power function, and the motivation for using it is explained comprehensively by Bansal et al. [10]. Different types of job scheduling models are available in the literature. A job is a unit of work/task that an operating system performs, such as an application executed on a computer (email client, word processing, web browsing, printing, information transfer over the Internet, or a specific action accomplished by the computer). Any user/system activity on a computer is handled through some job. The size of a job is the set of operations and micro-operations required to be executed for completing some course of action on a computer. In offline job scheduling, the complete job sequence is known in advance, whereas jobs arrive arbitrarily in online job scheduling. To minimize the flow time, big jobs execute at high speed with respect to their actual importance and small jobs execute at low speed with respect to their actual importance. In non-clairvoyant job scheduling, there is no information regarding the size of jobs at arrival time, whereas in clairvoyant job scheduling, the size of any job is known at its arrival time. The practical importance of online non-clairvoyant job scheduling is higher than that of clairvoyant scheduling [11]. Most processes do not have natural deadlines associated with them, for example in Linux and Microsoft Windows [12]. The non-clairvoyant scheduling problem is faced by the operating system in a time-sharing environment [13]. There are several situations where the scheduler has to schedule jobs without knowing the sizes of the jobs [14].
The Shortest Elapsed Time First (SETF) algorithm, a variant of which is used in the Windows NT and Unix operating system scheduling policies, is a non-clairvoyant algorithm for minimizing mean slowdown [14].

The theoretical study of speed scaling was initiated by Yao et al. [15]. Motwani et al. [13] introduced the analysis of non-clairvoyant scheduling algorithms. Early studies [16,17,18,19,20,21] considered the objective of minimizing the flow time, i.e., only the quality of service criterion. Later, new algorithms were proposed with an objective of minimizing the weighted/prioritized flow time [22,23,24], i.e., not only the quality of service but also the reduction in energy consumption by the machines. Albers and Fujiwara [25] studied the scheduling problem with an objective of minimizing the flow time plus energy in the dynamic speed scaling approach. Online non-clairvoyant job scheduling algorithms have been studied less extensively than online clairvoyant job scheduling algorithms. Highest Density First (HDF) is optimal [10] in online clairvoyant settings for the objective of fractional weighted/importance-based flow time plus energy. The HDF algorithm [10] always runs the job of highest density, where the density of a job is its importance divided by its size. In non-clairvoyant settings, the complete size of a job is known only at its completion; therefore, HDF cannot be used directly in non-clairvoyant settings. Azar et al. [11] proposed an algorithm NC (Non-Clairvoyant) for known job densities in the online non-clairvoyant setting on a uniprocessor, using the traditional power function. In NC, the density (i.e., the importance/size) is known at arrival time. The speed scaling and job assignment policy used in the non-clairvoyant algorithm NC-PAR (Non-Clairvoyant on Parallel identical machines) is based on a clairvoyant algorithmic approach, which shows that NC-PAR is not a pure non-clairvoyant algorithm. WLAPS (Weighted Latest Arrival Processor Sharing) [26] gives high priority to the latest-arriving jobs, which increases the average response time.
WLAPS does not schedule a fixed portion of the active jobs; rather, it selects jobs whose total importance equals a fixed portion of the total importance of all active jobs. It needs to update the importance of some jobs to avoid under-scheduling or over-scheduling. It does not consider the importance of jobs in an appropriate manner and suffers from a high average response time. The above-mentioned deficiencies motivated us to continue the study in this field for the objective of minimizing importance-based flow time plus energy.

In this paper, an online non-clairvoyant scheduling algorithm, Highest Scaled Importance First (HSIF), is proposed with an objective of minimizing the scaled importance-based flow time plus energy. In HSIF, the scaled importance of a job is considered rather than its complete importance. The scaled importance of a job increases if the job is new and does not get the chance to execute; consequently, starvation is avoided. If a job executes, its scaled importance decreases. In HSIF, the importance of any job is calculated as a scaled value of the fixed importance of that job. As this importance is time dependent, it can be termed dynamic importance or scaled importance. This balances the speed and energy consumption. The speed of a processor is a function of the total scaled importance of all active jobs. The competitive ratio of HSIF is analysed using the arbitrary power function and amortized potential function analysis.

The remainder of the paper is organized as follows. Section 2 describes related scheduling algorithms and their results. Section 3 provides the notation used in the paper and the definitions necessary for the discussion. Section 4 presents the 2-competitive scheduling algorithm Highest Scaled Importance First (HSIF), including the algorithm itself and a comparison of HSIF with the optimal algorithm using amortized analysis (potential function). In Section 5, a set of jobs and the traditional power function are used to examine the performance of HSIF. Section 6 draws some concluding remarks and outlines the future scope of this study.

2. Related Work

In this section, a review of related work on online non-clairvoyant job scheduling algorithms using the traditional power function is presented. Im et al. [27] proposed the concept of job migration and gave an online non-clairvoyant algorithm, Selfish Migrate (SelMig). SelMig is $O(\alpha^2)$-competitive using the traditional power function with an objective of minimizing the total weighted flow time plus energy on unrelated machines. Azar et al. [11] presented an online non-clairvoyant uni-processor algorithm NC, wherein all jobs arrive with uniform density (i.e., ). NC is -competitive using the traditional power function with an objective of minimizing the fractional flow time plus energy. NC uses the unbounded speed model. Most of the studies using the arbitrary power function have been conducted in clairvoyant settings. Bansal et al. [12] showed that an online clairvoyant algorithm ALG (the algorithm proposed by Bansal et al.) is -competitive with an objective of minimizing the fractional weighted/importance-based flow time plus energy. ALG uses Highest Density First (HDF) for job selection. The competitive ratio , more specifically for , for , for . For large , the value of . Bansal et al. [10] introduced the concept of the arbitrary power function and proved that an online clairvoyant algorithm (OCA) is -competitive with an objective of minimizing the fractional weighted flow time plus energy. The authors of [28] presented an expert and intelligent system that applies various energy policies to maximize the energy efficiency of data-center resources. They claimed that around 20% of energy consumption can be saved without exerting any noticeable impact on data-center performance. Duy et al. [29] described the design, implementation, and evaluation of a green scheduling algorithm that uses a neural network predictor to predict future load demand based on historical demand, in order to optimize server power consumption in cloud computing.
The algorithm turns off unused servers (and restarts them whenever required) to minimize the number of running servers, thus minimizing the energy consumption. The authors of [30] defined an architectural framework and principles for energy-efficient cloud computing. They presented energy-aware resource provisioning heuristics that improve the energy efficiency of the data center while delivering the negotiated Quality of Service. Sohrabi et al. [31] introduced a Bayesian Belief Network that learns over time which of the overloaded virtual machines is best removed from a host. The probabilistic choice is made among virtual machines that are grouped by their degree of central processing unit (CPU) usage. Juarez et al. [32] proposed a real-time dynamic scheduling system to efficiently execute task-based applications on distributed computing platforms in order to minimize the energy consumption. They presented a polynomial-time algorithm that combines a set of heuristic rules and a resource allocation technique in order to get good solutions on an affordable time scale. In OCA, the work and weights/importance are arbitrary. It uses HDF for job selection, and the power consumed is calculated on the basis of the speed of a processor, which is a function of the fractional weights of all active jobs. Chan et al. [26] showed that an online non-clairvoyant job scheduling algorithm named Weighted Latest Arrival Processor Sharing (WLAPS) is -competitive under the arbitrary power model with an objective of minimizing the weighted flow time plus energy, where . The value of α is commonly believed to be 2 or 3 [26]. HDF is optimal [10] in online clairvoyant settings for the objective of fractional weighted/importance-based flow time plus energy. In clairvoyant job scheduling, the size of a job is known at arrival time, but the same is not true of non-clairvoyant scheduling; therefore, HDF cannot be applied in the non-clairvoyant setting.
In this paper, a variant of the HDF strategy is considered in the online non-clairvoyant setting for the objective of minimizing the scaled importance-based flow time plus energy. The authors propose a new strategy, Highest Scaled Importance First (HSIF), in which the scaled importance of a job is considered rather than its complete importance. The scaled importance of a job increases if the job is new and does not get the chance to execute; consequently, starvation is avoided. If a job executes, its scaled importance decreases. This balances the speed and energy consumption. The speed of a processor is a function of the total scaled importance of all active jobs. HSIF is shown to be 2-competitive using amortized potential function analysis against an offline adversary under the arbitrary power function. The results of HSIF and other related online job scheduling algorithms are provided in Table 1.

Table 1.

Summary of previous results. SelMig: Selfish Migrate; NC: Non-Clairvoyant; ALG: algorithm proposed by Bansal et al.; WLAPS: Weighted Latest Arrival Processor Sharing; OCA: online clairvoyant algorithm; HSIF: Highest Scaled Importance First.

3. Definitions and Notations

The necessary definitions, explanation of the terms for the study, the concept of arbitrary power function and amortized potential function analysis are as follows:

3.1. Scheduling Basics

An online non-clairvoyant uni-processor job scheduling HSIF is proposed, where jobs arrive over time and there is no information about the sizes of jobs. The importance/weight/priority (generated by the system) of any job j is known at job’s arrival and size is known only at the completion of a job. Jobs are sequential in nature and preemption is permitted with no penalty. The speed of a processor s is a rate at which the work is completed. At any time t, a job j is active if arrival time and the remaining work . At time t, the scaled importance of a job j is . Executed time of a job j is current time t minus arrival time , i.e., . The scaled importance based flow of a job is integral over times between the job’s release time and its completion time of its scaled importance at that time. The ascending inverse density of a job j is executed time divided by its importance, i.e., . The ascending inverse density is recalculated discretely either on arrival of a new job or on completion of any job. The response time of a job is the time interval between the starting time of execution and arrival time of a job. The turnaround time is the time duration between completion time and arrival time of a job. The weight, importance and significance of a job are used as the synonyms of the priority of jobs.
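The response time and turnaround time defined above can be illustrated with a minimal Python sketch. The `JobTimes` record and the three sample jobs are hypothetical and are not taken from the paper's tables; the sketch only restates the two definitions (start minus arrival, and completion minus arrival).

```python
from dataclasses import dataclass


@dataclass
class JobTimes:
    """Hypothetical record of the three timestamps needed for the metrics."""
    arrival: float     # time at which the job is released
    start: float       # time at which its execution first begins
    completion: float  # time at which the job finishes


def response_time(job: JobTimes) -> float:
    # Interval between arrival and the start of execution.
    return job.start - job.arrival


def turnaround_time(job: JobTimes) -> float:
    # Interval between arrival and completion.
    return job.completion - job.arrival


def averages(jobs):
    """Return (average response time, average turnaround time)."""
    n = len(jobs)
    avg_response = sum(response_time(j) for j in jobs) / n
    avg_turnaround = sum(turnaround_time(j) for j in jobs) / n
    return avg_response, avg_turnaround


# Illustrative data only (not from the paper).
jobs = [JobTimes(0, 0, 4), JobTimes(1, 4, 6), JobTimes(2, 6, 11)]
avg_response, avg_turnaround = averages(jobs)
print(avg_response, avg_turnaround)
```

These are the two per-job quantities that the evaluation in Section 5 averages over all jobs.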

3.2. Power Function

The power function specifies the power used when processor executes at speed s. Any reasonable power function which satisfies the following conditions is permitted [33]:

- Acceptable speeds are a countable collection of disjoint subintervals of $[0, \infty)$

- All the intervals, except possibly the rightmost, are closed on both ends

- The rightmost interval may be open on the right if the power $P(s)$ approaches infinity as the speed s approaches the rightmost endpoint of that interval

- $P$ is non-negative, continuous and differentiable on all but countably many points

- Either there is a maximum allowable speed T, or the limit inferior of $P(s)/s$ as s approaches infinity is not zero

Without loss of generality, it can be assumed that [24]:

- P is strictly convex and increasing

- P is unbounded, continuous and differentiable

Let $Q$ be $P^{-1}$, i.e., $Q(y)$ provides the speed at which a processor can run if a limit of $y$ on the power is specified.
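For a power function that is continuous, strictly increasing and unbounded, the inverse (the speed attainable under a given power limit) can be approximated numerically even when no closed form is available. The following Python sketch uses bisection; the function name `inverse_power`, the tolerance, and the cubic example are assumptions made for illustration and are not part of the paper.

```python
def inverse_power(P, y, hi=1.0, tol=1e-12):
    """Approximate the speed s >= 0 with P(s) = y by bisection.

    Assumes P is continuous, strictly increasing, unbounded, and P(0) <= y.
    """
    lo = 0.0
    # Grow the upper bound until it brackets the target power.
    while P(hi) < y:
        hi *= 2.0
    # Halve the bracket until it is narrower than the tolerance.
    while hi - lo > tol * max(1.0, hi):
        mid = (lo + hi) / 2.0
        if P(mid) < y:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


def cube(s):
    # Traditional power function s**alpha with the illustrative choice alpha = 3.
    return s ** 3.0


speed = inverse_power(cube, 8.0)
print(speed)  # close to 2.0, since 2**3 = 8
```

For the traditional power function the inverse is simply the alpha-th root of the power limit, so the bisection is only needed for a general power function.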

3.3. Amortized Local Competitive Analysis

The objective considered is (G) scaled importance-based flow time plus energy. Let and be the increase in the objective in the schedule for any algorithm A and offline adversary Opt, respectively at time t. Opt optimizes G. At any time t, for algorithm A, is , where , and are speed of processor, power at speed and scaled importance of all active jobs, respectively. To prove that A is c-competitive a potential function is required which follows the following conditions: Boundary Condition: Initially, when no job is released and at the end, after all jobs are completed Φ = 0. Job Arrival and Completion Condition: There is no increment in Φ, when any job arrives or completes. Running Condition: At any other time when no job arrives or completes, plus the rate of change of Φ is no more than c times of :

Lemma 1.

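(Young’s Inequality [34]) Let $f$ be any real-valued, continuous and strictly increasing function such that $f(0) = 0$. Then, for all $a, b \ge 0$,

$$ab \le \int_0^a f(x)\,dx + \int_0^b f^{-1}(x)\,dx,$$

where $f^{-1}$ is the inverse function of $f$.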
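Young's inequality can be checked numerically for a concrete function. The sketch below assumes the standard form of the inequality (the sum of the integral of the function up to a and the integral of its inverse up to b is at least the product ab) and uses the illustrative choice of the square function with the square root as its inverse. The helper names and the midpoint-rule integrator are assumptions introduced for this example.

```python
def integrate(g, upper, steps=100_000):
    """Midpoint-rule approximation of the integral of g over [0, upper]."""
    h = upper / steps
    return sum(g((k + 0.5) * h) for k in range(steps)) * h


def young_gap(f, f_inv, a, b):
    """Right-hand side minus left-hand side of Young's inequality."""
    return integrate(f, a) + integrate(f_inv, b) - a * b


def f(x):
    # Illustrative strictly increasing function with f(0) = 0.
    return x ** 2


def f_inv(y):
    # Inverse of f on the non-negative reals.
    return y ** 0.5


gap_equal = young_gap(f, f_inv, 2.0, 4.0)   # b = f(a): the gap is (numerically) zero
gap_strict = young_gap(f, f_inv, 2.0, 1.0)  # b != f(a): the gap is strictly positive
print(gap_equal, gap_strict)
```

The gap vanishes when b equals f(a) and is positive otherwise, which is the property the potential analysis relies on.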

4. A 2-Competitive Scheduling Highest Scaled Importance First (HSIF)

4.1. Scaled Importance-Based Flow Plus Energy

An online non-clairvoyant uni-processor scheduling algorithm, Highest Scaled Importance First (HSIF), is proposed. In HSIF, all jobs arrive arbitrarily along with their importance and without information about their sizes. The sizes of jobs are known only on the completion of jobs. The possible speeds of a processor are a countable collection of disjoint subintervals of $[0, \infty)$. The working of HSIF is analysed using amortized potential analysis. HSIF is 2-competitive for the objective of minimizing the scaled importance-based flow time plus energy.

4.1.1. Algorithm HSIF

The algorithm HSIF always selects an active job with the highest scaled importance at any time, where the scaled importance of a job is computed as follows:

The executed time of a job j is . At any time t, the processor executes at speed , where and is the total scaled importance of all active jobs for HSIF. As the algorithm HSIF is non-clairvoyant, the executed time of a job is assumed to be its current size. The intention here is that the instantaneous importance/priority of a job must depend on its (system-generated) importance and its size. If a job is not executing (i.e., it is waiting), its scaled importance increases; once the job starts execution, its scaled importance decreases as its executed time increases.

| Algorithm Highest Scaled Importance First (HSIF) |

| Input: number of active jobs At time t, the importance of all active jobs and the executed time for all active jobs . Output: The speed of all processors and execution sequence of jobs. |

| 1. On arrival of a job 2. If CPU is idle allocate the job to CPU 3. 4. speed of CPU 5. else if CPU is executing some job 6. 7. 8. speed of CPU 9. On completion of a job 10. if 11. 12. select the job with 13. 14. 15. else speed of CPU |
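A single scheduling decision of HSIF can be sketched in Python under stated assumptions. The scaled importance of each active job is taken as an input here, because its formula is not reproduced in this sketch. The speed rule assumes, following the statement of Theorem 1, that the power consumed equals the total scaled importance, and it uses the traditional power function with the illustrative value alpha = 3. The function name `hsif_step`, the job identifiers, and the idle speed of zero are hypothetical.

```python
def hsif_step(scaled_importance, alpha=3.0):
    """Return (selected job, processor speed) for one HSIF decision.

    scaled_importance: dict mapping each active job id to its current
    scaled importance (supplied by the caller; hypothetical values below).
    Assumes P(s) = s**alpha and power equal to the total scaled importance.
    """
    if not scaled_importance:
        # No active job: assume the processor idles at speed zero.
        return None, 0.0
    # Job selection: the active job with the highest scaled importance.
    job = max(scaled_importance, key=scaled_importance.get)
    # Speed scaling: invert P(s) = s**alpha at the total scaled importance.
    total = sum(scaled_importance.values())
    speed = total ** (1.0 / alpha)
    return job, speed


# Illustrative values only (not from the paper).
active = {"j1": 1.5, "j2": 4.0, "j3": 2.5}
job, speed = hsif_step(active, alpha=3.0)
print(job, speed)
```

In a full simulation this step would be re-evaluated on every job arrival and completion, with the scaled importance values updated in between.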

Theorem 1.

An online non-clairvoyant uni-processor scheduling algorithm, Highest Scaled Importance First (HSIF), selects the job with the highest partial importance and consumes power equal to the total partial importance of all active jobs under dynamic speed scaling. HSIF is 2-competitive for the objective of minimizing scaled importance-based flow time plus energy for jobs of arbitrary work and arbitrary importance.

In the rest of this section, Theorem 1 is proven. For the amortized local competitive analysis of HSIF, a potential function is provided in the next subsection.

4.1.2. Potential Function

Let Opt be the optimal offline adversary that minimizes scaled importance based flow time plus energy. At any time t, let and be the total scaled importance of all active jobs for Opt and HSIF, respectively. At any time t, let and be the total scaled importance of all active jobs with at least ascending inverse density in Opt and HSIF, respectively. Let , where . A potential function can be defined as follows:

Since and are increasing, is an increasing function of . Therefore,

To observe the effectiveness of the algorithm, it is required to observe the boundary condition, job arrival and completion condition, and running conditions.

For the boundary condition, one can observe that before arrival of any job and after completion of all jobs , a. Therefore, . On arrival of any job, the value of remains the same for all a, therefore remains the same. The scaled importance of a job decreases continuously when a job is executed by the HSIF or Opt, hence does not decrease on completion of a job. At any other time t when no job arrives or completes, it is required to prove that the following inequality follows:

Since t is the current time only, the superscript t is omitted from the parameters in the rest of the analysis. Let and be the minimum ascending inverse densities of an active job using Opt and HSIF, respectively. Let (or ) be if HSIF (or Opt) has no active job. HSIF executes jobs on the basis of the highest scaled importance first at a speed . Therefore, decreases at the rate of , , and remains the same for . Similarly, changes at the rate of , and remains the same for . Rest of the analysis is based on the three cases depending on , and .

Case 1: If then, one can observe that

(a),

, . Therefore, remains the same. Hence for the rate of change of , i.e., .

(b) If , remains the same, therefore the rate of change of , i.e., . Considering both the sub cases it is observed that

Hence the running condition is satisfied for .

Case 2: If then, one can observe that there is a decrement in at the rate of , due to which the possible maximum rate of increment in is:

, ,

In Equation (1) substituting the values for , and , it provides:

Using Equations (3) and (4) in (2), it provides

Hence the running condition is satisfied for .

Case 3: If then, one can observe that a decrement in creates a decrement in and a decrement in creates an increment in .

, there is a decrement in at the rate of , due to which the possible rate of change of is:

, , thus

, there is a decrement in at the rate of , due to which the possible rate of change of is

, , thus

Adding the Equations (6) and (8)

Let i, and ≥ 0 be real numbers. Since P is strictly increasing and convex, and is strictly increasing. Substituting the values of , and in Equation (1), it provides

Substituting the values from Equation (11) to (9), it provides

Since ⇒ , thus using this value in Equation (12), it provides

Hence the running condition is satisfied for .

5. Illustrative Example

To examine the performance of HSIF, a set of seven jobs and the traditional power model is considered, where and . The jobs arrive along with their importance, but the size of each job is known only at its completion. The jobs are executed using the algorithms HSIF and NC (the best known to date [11]), and their executions are simulated. To demonstrate the effectiveness of the proposed scheduling, a simulator developed using the Linux kernel is used. The simulator isolates the scheduling algorithm and deliberately excludes the effects of other activity present in a real kernel implementation. The jobs are considered independent. The proposed algorithm is for identical homogeneous machines. To evaluate the performance of the algorithm, the (average) turnaround time and (average) response time are considered. A lower average response time reflects a prompt response to job requests, which helps in avoiding starvation. A lower turnaround time indicates that the algorithm is capable of fulfilling the resource requirements of all jobs in less time, which is an indicator of better resource utilization. The hardware specifications are given in Table 2.

Table 2.

Hardware specifications.

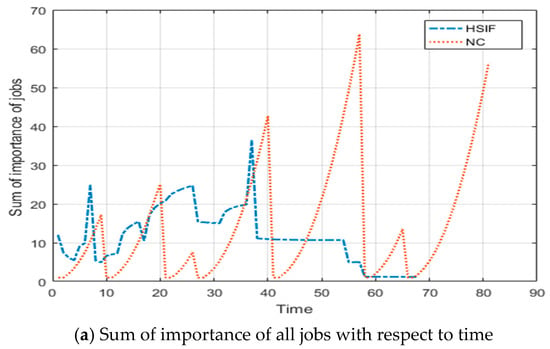

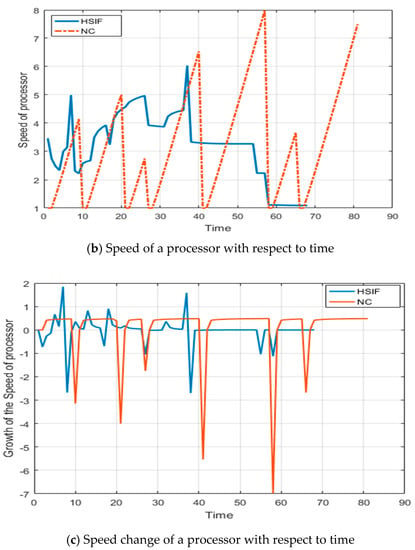

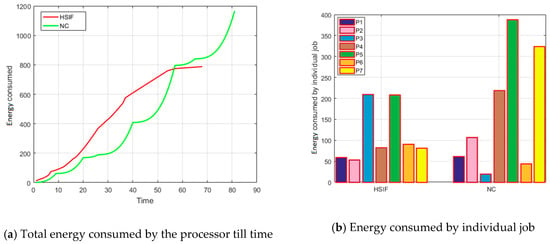

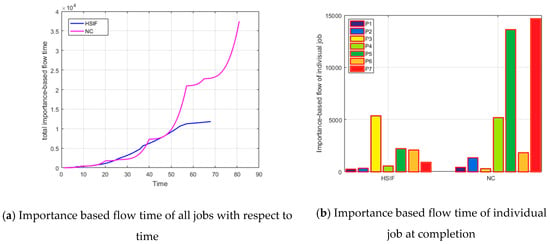

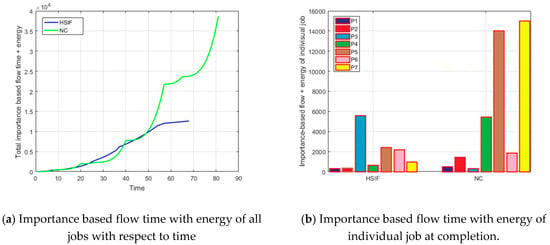

The details of the jobs and the computed results are shown in Table 3 and in Figure 1, Figure 2, Figure 3 and Figure 4.

Table 3.

Job details and execution information using HSIF and NC.

Figure 1.

The execution results of jobs and processor speed with respect to time.

Figure 2.

Energy consumption of processor and jobs.

Figure 3.

Importance based flow time of jobs.

Figure 4.

Importance based flow time with energy of jobs.

As per the results stated in Table 3, the response time (and turnaround time) of most of the jobs and the average response time (and average turnaround time) of all jobs executed by HSIF are lower than those for NC. This shows that HSIF performs better than NC with respect to the scheduling criteria. Table 4 shows that HSIF consumes less energy, and that both performance objectives, importance-based flow time and importance-based flow time plus energy, are better for HSIF.

Table 4.

Three objectives values for jobs using HSIF and NC.

From the graphs in Figure 1a,b, it is clear that HSIF adjusts the sum of the importance of active jobs frequently (count of maxima), but the change in the values is small (difference between consecutive maxima). This shows that HSIF maintains consistent performance. The speed of a processor depends on the sum of importance; therefore, the speed of the processor shows the same pattern. This frequent but small change in speed makes HSIF consistent in performance. In NC, the sum of the importance of active jobs changes less frequently, but the magnitude of each change is very high. The speed of a processor using NC depends on the sum of the executed sizes of active jobs; therefore, the speed of the processor varies widely. This high variation makes NC less consistent in performance.

In Figure 1c, the number of large speed changes (local minima) is six when the processor executes jobs using NC, which is due to the completion and start of execution of the jobs. There is a big change in the speed of the processor when the executing job changes. The arrival of a new job (accumulation of importance-based flow) has no effect on the execution speed of the executing job. In the speed growth graph of the processor using HSIF, there are more than six maxima and minima; this shows that the speed of the processor increases on the arrival of a new job, i.e., it increases with the accumulation of scaled importance-based flow time. This eliminates the possibility of starvation and improves performance. It shows that HSIF is capable of adjusting the speed to maintain and improve performance.

Figure 2a shows that initially the total energy consumed by the processor using HSIF is higher than with NC, but at a later stage the total energy consumed by the processor using NC increases. The total flow time of all active jobs when executed using NC is more than with HSIF; consequently, the energy consumed by the processor when using NC is more than with HSIF. The energy consumed by most of the individual jobs when they are executed by HSIF is more than with NC, as shown in Figure 2b.

The importance-based flow time and importance-based flow time plus energy values of individual jobs are shown in Figure 3 and Figure 4, respectively. Most of the individual jobs have lower values of importance-based flow time and importance-based flow time plus energy when they are executed using HSIF than when using NC. HSIF and NC both compete to reduce the sum of importance-based flow time and of importance-based flow time plus energy, as shown in Figure 3a and Figure 4a, respectively. In the later stage, the total values for HSIF are lower than for NC. The total value of importance-based flow time and of importance-based flow time plus energy for a processor using HSIF is lower than with NC. The value of the objective considered is lower most of the time when using HSIF. From the above observations, it is concluded that the performance of HSIF is better and more consistent than that of the best-known algorithm NC.

To extend the analysis of the performance of HSIF, a second set of ten jobs and the traditional power model is considered. The jobs arrive along with their importance, but the size of each job is known only at its completion. This case is designed by assuming that the jobs arrive in increasing order of size. The jobs are executed using the algorithms HSIF and NC (the best known to date [11]), and their executions are simulated. The analysed data are given in Table 5 and Table 6. Table 5 lists each job’s arrival time and importance. The size is computed and observed at the completion of the jobs. The quality metrics are computed on the basis of the arrival time, the starting time of execution and the computed completion time. In this analysis, the quality metrics considered are turnaround time, response time, power consumed and importance-based flow time. The computed results are given in Table 5 and Table 6. The lower of the values computed using HSIF and NC is marked in bold. The job details, such as arrival time, completion time, importance and size, are the same for Table 5 and Table 6.

Table 5.

Details and execution information of jobs with increasing-order of size using HSIF and NC.

Table 6.

Three objectives values for jobs arriving with increasing-order of size using HSIF and NC.

In Table 5, the turnaround time of nine jobs (out of ten) is lower using HSIF than NC. The data of Table 5 also show that nine jobs have a lower response time using HSIF than NC. In addition, the average values of turnaround time and response time are lower using HSIF than NC. On the basis of these observations, one can conclude that HSIF works better than the best-known NC also in the special case where the jobs arrive in increasing order of size. In Table 6, the values of three objectives are given: energy consumed, importance-based flow time, and importance-based flow time plus energy. As per the values of energy consumed by the jobs, six out of ten jobs consume less power using HSIF than NC, although the total energy consumed by all ten jobs is more using HSIF than NC. The importance is one of the main factors that determines the schedule of execution of the jobs. The importance-based flow times of eight jobs (out of ten) are lower using HSIF than NC. The data of Table 6 also show that the total importance-based flow time of all ten jobs is lower using HSIF than NC. This lower value of the metric reflects the better performance of HSIF compared with NC. The third metric, importance-based flow time plus energy (the main objective of the proposed algorithm), is lower for eight jobs (out of ten) using HSIF than NC. In addition, the average value of importance-based flow time plus energy over all ten jobs is lower using HSIF than NC. It can be concluded from the observations mentioned above that the objective is better fulfilled by HSIF than by NC.

To extend the analysis further, a set of fifty arbitrary jobs with arbitrary arrival times is considered. The size of each job is computed at its completion time only. Five sets of objective values are computed: turnaround time, completion time, response time, importance-based flow time, and importance-based flow time plus energy. The simulation results are given in Table 7 and Table 8.

Table 7.

Details and execution information of jobs with random-order of size and importance using HSIF and NC.

Table 8.

Values of three objectives for jobs arriving in random order of size using HSIF and NC.
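As an illustration of how the time-based objectives can be derived from a simulated schedule, the following minimal sketch computes turnaround time and response time per job. It assumes the conventional definitions (turnaround time = completion time minus arrival time; response time = time of first execution minus arrival time); the `JobRecord` type, its field names, and the sample values are hypothetical and are not taken from Table 7.

```python
from dataclasses import dataclass


@dataclass
class JobRecord:
    """Hypothetical per-job record produced by a schedule simulation."""
    arrival: float      # time the job is released
    first_start: float  # time the job first receives the processor
    completion: float   # time the job finishes


def turnaround_time(job: JobRecord) -> float:
    # Assumed definition: total time the job spends in the system.
    return job.completion - job.arrival


def response_time(job: JobRecord) -> float:
    # Assumed definition: waiting time before the job is first executed.
    return job.first_start - job.arrival


# Illustrative values only (not the paper's data).
jobs = [JobRecord(0, 0, 4), JobRecord(1, 4, 9), JobRecord(2, 9, 11)]

avg_turnaround = sum(turnaround_time(j) for j in jobs) / len(jobs)
avg_response = sum(response_time(j) for j in jobs) / len(jobs)
print(avg_turnaround, avg_response)
```

Computing the same two averages for the schedules produced by both algorithms gives the kind of per-objective comparison that the tables summarize.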

A statistical analysis is conducted on the simulation data provided in Table 7 and Table 8. The Independent Samples t Test is used to compare the means of two independent groups in order to determine whether there is statistical evidence that the associated objective means are significantly different.

Table 9, Group Statistics, provides basic information about the group comparisons, including the sample size (n), mean, standard deviation, and standard error of each objective by group. Table 10, Independent Samples Test, displays the results most relevant to the Independent Samples t Test. It has two parts that provide different pieces of information: Levene’s Test for Equality of Variances and the t-test for Equality of Means. If the p value of Levene’s test is less than or equal to 0.05, one should use the lower row of the output (the row labeled “Equal variances not assumed”). If that p value is greater than 0.05, one should use the upper row of the output (the row labeled “Equal variances assumed”). Based on the results provided in Table 9 and Table 10, the following remarks are made:

Table 9.

Group statistics of objectives values for HSIF and NC.

Table 10.

Statistics of objectives values for HSIF and NC using Independent Samples t Test.

- For Turnaround Time, the p-value of Levene’s Test for Equality of Variances is less than 0.05; therefore, the null hypothesis (the variability of the two groups is equal) is rejected, and the lower row of the output (the row labeled “Equal variances not assumed”) is used. The t test reveals a statistically reliable difference between the mean Turnaround Time of HSIF (M = 13.42, s = 12.511366261) and NC (M = 20.6, s = 19.786616792), with t(82.782) = 2.17, p = 0.033.

- The total Turnaround Time for HSIF is 359 time units less than that for NC, and the average Turnaround Time for HSIF is 7.18 time units less than that for NC.

- For Response Time, the p-value of Levene’s Test for Equality of Variances is less than 0.05; therefore, the null hypothesis (the variability of the two groups is equal) is rejected, and the lower row of the output (the row labeled “Equal variances not assumed”) is used. The t test reveals a statistically reliable difference between the mean Response Time of HSIF (M = 11.72, s = 12.748813) and NC (M = 18.32, s = 19.976966), with t(83.23) = 2.17, p = 0.05.

- The total Response Time for HSIF is 330 time units less than that for NC, and the average Response Time for HSIF is 6.6 time units less than that for NC.

- For Completion Time, the p-value of Levene’s Test for Equality of Variances is greater than 0.05; therefore, the null hypothesis (the variability of the two groups is equal) is retained, and the upper row of the output (the row labeled “Equal variances assumed”) is used. The t test fails to reveal a statistically reliable difference between the mean Completion Time of HSIF (M = 67.4, s = 30.651431) and NC (M = 74.82, s = 34.902014), with t(98) = 1.13, p = 0.261.

- For Energy Consumed, the p-value of Levene’s Test for Equality of Variances is greater than 0.05; therefore, the null hypothesis (the variability of the two groups is equal) is retained, and the upper row of the output (the row labeled “Equal variances assumed”) is used. The t test fails to reveal a statistically reliable difference between the mean Energy Consumed of HSIF (M = 196.7, s = 160.31869) and NC (M = 274.778, s = 291.01057), with t(98) = 1.66, p = 0.1.

- Although the statistical test fails to identify a difference between HSIF and NC in energy consumed, the total Energy Consumed for HSIF is 3909.937747 units less than that for NC, and the average Energy Consumed for HSIF is 78.07875 units less than that for NC.

- For Importance-based Flow Time, the p-value of Levene’s Test for Equality of Variances is less than 0.05; therefore, the null hypothesis (the variability of the two groups is equal) is rejected, and the lower row of the output (the row labeled “Equal variances not assumed”) is used. The t test reveals a statistically reliable difference between the mean Importance-based Flow Time of HSIF (M = 2479.15, s = 3625.2051) and NC (M = 15373.3, s = 21122.893), with t(63.08) = 1.95, p = 0.05.

- The total Importance-based Flow Time for HSIF is 139381.7662 units less than that for NC, and the average Importance-based Flow Time for HSIF is 2787.635324 units less than that for NC.

- For Importance-based Flow Time plus Energy, the p-value of Levene’s Test for Equality of Variances is less than 0.05; therefore, the null hypothesis (the variability of the two groups is equal) is rejected, and the lower row of the output (the row labeled “Equal variances not assumed”) is used. The t test reveals a statistically reliable difference between the mean Importance-based Flow Time plus Energy of HSIF (M = 2675.83, s = 3774.8105) and NC (M = 5541.57, s = 9740.346), with t(63.39) = 1.94, p = 0.05.

- The total Importance-based Flow Time plus Energy for HSIF is 143286.703 units less than that for NC, and the average Importance-based Flow Time plus Energy for HSIF is 2865.73406 units less than that for NC.

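The above statistical analysis indicates that HSIF performs better than NC, the best available scheduling algorithm, on Turnaround Time, Response Time, Importance-based Flow Time, and Importance-based Flow Time plus Energy; for Completion Time and Energy Consumed the means are lower for HSIF, but the differences are not statistically significant.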
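The t statistics and degrees of freedom quoted in the remarks above can be checked directly from the reported group means and standard deviations. The sketch below is an illustrative recomputation, not the authors' procedure: the function name `t_from_summary` is hypothetical, and the group size of 50 per algorithm is an assumption inferred from the fifty-job experiment and the reported 98 degrees of freedom. It implements the pooled-variance (Student) test for the "Equal variances assumed" row and the Welch test for the "Equal variances not assumed" row. Computing the p value would additionally require the t distribution, which is omitted here.

```python
import math


def t_from_summary(m1, s1, n1, m2, s2, n2, equal_var):
    """Return (t, df) for a two-sample t test from summary statistics.

    Hypothetical helper: m = mean, s = sample standard deviation, n = size.
    """
    if equal_var:
        # Student's t test: pool the two sample variances.
        df = n1 + n2 - 2
        pooled = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df
        se = math.sqrt(pooled * (1 / n1 + 1 / n2))
    else:
        # Welch's t test with Welch-Satterthwaite degrees of freedom.
        v1, v2 = s1 ** 2 / n1, s2 ** 2 / n2
        se = math.sqrt(v1 + v2)
        df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return (m2 - m1) / se, df


N = 50  # assumed number of jobs per algorithm

# Turnaround Time: Levene's test rejected equal variances, so use Welch.
t_tat, df_tat = t_from_summary(13.42, 12.511366261, N,
                               20.6, 19.786616792, N, equal_var=False)

# Completion Time: equal variances retained, so use the pooled test.
t_ct, df_ct = t_from_summary(67.4, 30.651431, N,
                             74.82, 34.902014, N, equal_var=True)

print(f"Turnaround Time: t({df_tat:.3f}) = {t_tat:.2f}")
print(f"Completion Time: t({df_ct}) = {t_ct:.2f}")
```

Under these assumptions the two calls agree, up to rounding, with the reported t(82.782) = 2.17 for Turnaround Time and t(98) = 1.13 for Completion Time.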

To refine the evaluation of HSIF in comparison with NC, the normalized z-values of the energy consumed by an individual job (ECiJ) and the importance-based flow time of an individual job (IbFTiJ) are computed and provided in Table 11 and Table 12. For each job, the z-values of ECiJ and IbFTiJ are added and the sum is converted into the range [0, 1], as shown in Table 11 and Table 12. For all jobs, the total and the average of the normalized values of ECiJ+IbFTiJ are provided (in Table 11 and Table 12) to reflect the difference between the two algorithms. Both the normalized total and the normalized average of ECiJ+IbFTiJ are lower for HSIF than for NC. This indicates that the normalized value of the dual objective (i.e., the sum of energy consumed and importance-based flow time) is lower, and therefore better, for HSIF than for NC. It is concluded from the above analysis that HSIF performs better than NC.

Table 11.

Normalized objectives values for HSIF using z-score.

Table 12.

Normalized objectives values for NC using z-score.
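The normalization described for Table 11 and Table 12 can be sketched as follows. This is a minimal illustration under stated assumptions: the text does not say whether the z-scores use the sample or population standard deviation, or how the summed z-values are mapped into [0, 1], so the sample standard deviation and min-max rescaling are assumed here. The function names and the input values are hypothetical.

```python
from statistics import mean, stdev


def z_scores(values):
    """Standardize values to zero mean and unit (sample) standard deviation."""
    mu = mean(values)
    sigma = stdev(values)  # assumption: sample standard deviation
    return [(v - mu) / sigma for v in values]


def normalized_dual_objective(energy, flow_time):
    """Sum the per-job z-scores of both metrics and rescale to [0, 1]."""
    combined = [ze + zf
                for ze, zf in zip(z_scores(energy), z_scores(flow_time))]
    lo, hi = min(combined), max(combined)
    if hi == lo:
        # All jobs identical: map every job to 0 to avoid division by zero.
        return [0.0 for _ in combined]
    # Assumption: min-max rescaling is used to reach the range [0, 1].
    return [(c - lo) / (hi - lo) for c in combined]


# Illustrative per-job values only (not the paper's data).
energy = [12.0, 30.5, 8.2, 19.9]     # stands in for ECiJ
flow_time = [40.0, 95.0, 22.0, 61.0]  # stands in for IbFTiJ

scores = normalized_dual_objective(energy, flow_time)
print("total:", sum(scores), "average:", mean(scores))
```

Standardizing each metric before adding them prevents the metric with the larger numeric range from dominating the combined value, which is the usual motivation for this kind of normalization.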

6. Conclusions and Future Work

An online non-clairvoyant job scheduling algorithm, Highest Scaled Importance First (HSIF), is proposed with the objective of minimizing the sum of scaled importance-based flow time and energy consumed. HSIF uses an arbitrary power function and a dynamic speed scaling policy on a uni-processor system. The working of HSIF is analysed using amortized potential function analysis against an optimal offline adversary. The competitive ratio of HSIF is 2, which is lower than that of the non-clairvoyant scheduling algorithms LAPS, SelMig, NC, , EtRR, ALG, and WLAPS, and equal to that of the online clairvoyant scheduling algorithm Alg. Additionally, a set of jobs is considered as an illustrative example, and the execution of the jobs on a processor is simulated using HSIF and the best-known algorithm NC. The simulation results show that the performance of HSIF is consistent and better than that of the other online non-clairvoyant algorithm. On the basis of the amortized potential function analysis and the simulation results, it is concluded that HSIF performs better than any other online non-clairvoyant algorithm. The use of HSIF in data centers and in battery-based devices will reduce power consumption and improve computing capability. As a further enhancement of this study, the working of HSIF will be evaluated in a multi-processor environment, and experiments will be conducted in a real-time environment with a larger number of test cases. Along with the amortized analysis and simulation, the results will be analysed using statistical tests. The working of HSIF will also be evaluated in the cloud/fog environment for resource allocation and energy optimization. One open problem is to reduce the competitive ratio achieved in this paper. In a further extension of this work, the number of jobs may be increased significantly to strengthen the evaluation of the algorithm.

Author Contributions

All authors have worked on this manuscript together and all authors have read and approved the final manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Singh, P. Prashast Release Round Robin: R3 an energy-aware non-clairvoyant scheduling on speed bounded processors. Karbala Int. J. Mod. Sci. 2015, 1, 225–236.

- Singh, P.; Wolde-Gabriel, B. Executed-time Round Robin: EtRR an online non-clairvoyant scheduling on speed bounded processor with energy management. J. King Saud Univ. Comput. Inf. Sci. 2016, 29, 74–84.

- U.S. Environmental Protection Agency. EPA Report on server and data centre energy efficiency. Available online: https://www.energystar.gov/index.cfm?c=prod_development.server_efficiency_study (accessed on 10 June 2015).

- Shehabi, A.; Smith, S.J.; Masanet, E.; Koomey, J. Data center growth in the United States: Decoupling the demand for services from electricity use. Environ. Res. Lett. 2018, 13, 1–12.

- Fernández-Cerero, D.; Jakobik, A.; Grzonka, D.; Kołodziej, J.; Fernandez-Montes, A. Security supportive energy-aware scheduling and energy policies for cloud environments. J. Parallel Distrib. Comput. 2018, 119, 191–202.

- Lei, H.; Wang, R.; Zhang, T.; Liu, Y.; Zha, Y. A multi-objective co-evolutionary algorithm for energy-efficient scheduling on a green data center. Comput. Oper. Res. 2016, 75, 103–117.

- Chan, H.-L.; Edmonds, J.; Pruhs, K. Speed Scaling of Processes with Arbitrary Speedup Curves on a Multiprocessor. Theory Comput. Syst. 2011, 49, 817–833.

- Bansal, N.; Kimbrel, T.; Pruhs, K. Dynamic speed scaling to manage energy and temperature. J. ACM 2007, 54, 1–39.

- Pruhs, K.; Uthaisombut, P.; Woeginger, G. Getting the best response for your erg. ACM Trans. Algorithms 2008, 4, 1–17.

- Bansal, N.; Chan, H.L.; Pruhs, K. Speed scaling with an arbitrary power function. In Proceedings of the Annual ACM-SIAM Symposium on Discrete Algorithms, New York, NY, USA, 4–6 January 2009; pp. 693–701.

- Azar, Y.; Devanur, N.R.; Huang, Z.; Panigrahi, D. Speed Scaling in the Non-clairvoyant Model. In Proceedings of the Annual ACM Symposium on Parallelism in Algorithms and Architectures, Portland, OR, USA, 13–15 June 2015; pp. 133–142.

- Bansal, N.; Pruhs, K.; Stein, C. Speed Scaling for Weighted Flow Time. SIAM J. Comput. 2009, 39, 1294–1308.

- Motwani, R.; Phillips, S.; Torng, E. Nonclairvoyant scheduling. Theor. Comput. Sci. 1994, 130, 17–47.

- Bansal, N.; Dhamdhere, K.; Konemann, J.; Sinha, A. Non-clairvoyant Scheduling for Minimizing Mean Slowdown. Algorithmica 2004, 40, 305–318.

- Yao, F.; Demers, A.; Shenker, S. A scheduling model for reduced CPU energy. In Proceedings of the Annual Symposium on Foundations of Computer Science, Berkeley, CA, USA, 23–25 October 1995; pp. 374–382.

- Leonardi, S.; Raz, D. Approximating total flow time on parallel machines. In Proceedings of the ACM Symposium on Theory of Computing, El Paso, TX, USA, 4–6 May 1997; pp. 110–119.

- Chekuri, C.; Khanna, S.; Zhu, A. Algorithms for minimizing weighted flow time. In Proceedings of the ACM Symposium on Theory of Computing, Crete, Greece, 6–8 July 2001; pp. 84–93.

- Awerbuch, B.; Azar, Y.; Leonardi, S.; Regev, O. Minimizing the Flow Time Without Migration. SIAM J. Comput. 2002, 31, 1370–1382.

- Avrahami, N.; Azar, Y. Minimizing total flow time and total completion time with immediate dispatching. In Proceedings of the ACM Symposium on Parallelism in Algorithms and Architectures, San Diego, CA, USA, 7–9 June 2003; p. 11.

- Chekuri, C.; Goel, A.; Khanna, S.; Kumar, A. Multiprocessor scheduling to minimize flow time with epsilon resource augmentation. In Proceedings of the ACM Symposium on Theory of Computing, Chicago, IL, USA, 13–15 June 2004; pp. 363–372.

- Pruhs, K.; Sgall, J.; Torng, E. Online Scheduling. In Handbook of Scheduling: Algorithms, Models, and Performance Analysis, 1st ed.; Leung, J.Y.-T., Ed.; CRC Press: Boca Raton, FL, USA, 2004; pp. 15–41.

- Becchetti, L.; Leonardi, S.; Marchetti-Spaccamela, A.; Pruhs, K. Online weighted flow time and deadline scheduling. J. Discret. Algorithms 2006, 4, 339–352.

- Chadha, J.; Garg, N.; Kumar, A.; Muralidhara, V. A competitive algorithm for minimizing weighted flow time on unrelated processors with speed augmentation. In Proceedings of the Annual ACM Symposium on Theory of Computing, Bethesda, MD, USA, 31 May–2 June 2009; pp. 679–684.

- Anand, S.; Garg, N.; Kumar, A. Resource Augmentation for Weighted Flow-time explained by Dual Fitting. In Proceedings of the Twenty-Third Annual ACM-SIAM Symposium on Discrete Algorithms, Kyoto, Japan, 17–19 January 2012; pp. 1228–1241.

- Albers, S.; Fujiwara, H. Energy-efficient algorithms for flow time minimization. ACM Trans. Algorithms 2007, 3, 49.

- Chan, S.-H.; Lam, T.-W.; Lee, L.-K. Non-clairvoyant Speed Scaling for Weighted Flow Time. In Proceedings of the Annual European Symposium, Liverpool, UK, 6–8 September 2010; pp. 23–35.

- Im, S.; Kulkarni, J.; Munagala, K.; Pruhs, K. SelfishMigrate: A Scalable Algorithm for Non-clairvoyantly Scheduling Heterogeneous Processors. In Proceedings of the 2014 IEEE 55th Annual Symposium on Foundations of Computer Science (FOCS), Philadelphia, PA, USA, 18–21 October 2014; pp. 531–540.

- Fernández-Cerero, D.; Fernández-Montes, A.; Ortega, J.A. Energy policies for data-center monolithic schedulers. Expert Syst. Appl. 2018, 110, 170–181.

- Duy, T.V.T.; Sato, Y.; Inoguchi, Y. Performance evaluation of a Green Scheduling Algorithm for energy savings in Cloud computing. In Proceedings of the IEEE International Symposium on Parallel & Distributed Processing, Workshops and PhD Forum (IPDPSW), Atlanta, GA, USA, 19–23 April 2010; pp. 1–8.

- Beloglazov, A.; Abawajy, J.; Buyya, R. Energy-aware resource allocation heuristics for efficient management of data centers for Cloud computing. Futur. Gener. Comput. Syst. 2012, 28, 755–768.

- Sohrabi, S.; Tang, A.; Moser, I.; Aleti, A. Adaptive virtual machine migration mechanism for energy efficiency. In Proceedings of the 2016 IEEE/ACM 5th International Workshop on Green and Sustainable Software (GREENS), Austin, TX, USA, 16 May 2016; pp. 8–14.

- Juarez, F.; Ejarque, J.; Badia, R.M. Dynamic energy-aware scheduling for parallel task-based application in cloud computing. Futur. Gener. Comput. Syst. 2018, 78, 257–271.

- Gupta, A.; Krishnaswamy, R.; Pruhs, K. Scalably Scheduling Power-Heterogeneous Processors. In Proceedings of the 37th International Colloquium Conference on Automata, Languages and Programming, Bordeaux, France, 6–10 July 2010; pp. 312–323.

- Sun, H.; He, Y.; Hsu, W.-J.; Fan, R. Energy-efficient multiprocessor scheduling for flow time and makespan. Theor. Comput. Sci. 2014, 550, 1–20.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).