4.1. Experimental Setup

The dataset used in our experiments was WSDM-KKBOX (https://www.kaggle.com/c/kkbox-music-recommendation-challenge/data). KKBOX is the biggest online music streaming platform in Asia, and the corresponding dataset was used in the challenge of the 11th ACM International Conference on Web Search and Data Mining (WSDM 2018). The dataset contains users’ listening records during a certain time period. The records before a certain point are used as training data, and the records after this point are used as test data. The time period and the separation point were chosen by WSDM. In addition, metadata of songs and users are provided, including song length, song genres, artist names, composer names, languages, user ages, user locations and user genders.

In the WSDM challenge, competitors are asked to predict the chance that a user listens to a song repeatedly after the first observable listening event. In this work, the purpose of DTNMR was to recommend songs to users based on their listening histories, so that DTNMR helps users find new interesting songs. To achieve this goal, we predicted the chance that a user would listen to an un-listened song in the future and ranked all un-listened songs according to the predicted chances. Therefore, our task was different from the WSDM task in terms of input, output and the training process. The differences between DTNMR and WSDM are elaborated in Table 3. Thus, we could not compare our method with the methods in the WSDM challenge.

During pre-processing, the songs and users that did not appear in both the training and test datasets were removed. After that, we removed from the test dataset the records that also appeared in the training dataset. This was because recommendation systems are used to help users find new interests, not to remind them of interests already known. In the end, there were 34,403 users, 419,781 songs, 7,377,304 training records and 2,236,323 testing records in the dataset.
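The two filtering steps above can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes each record is reduced to a hypothetical `(user, song)` pair and applies the filter in a single pass.

```python
def preprocess(train, test):
    """Filter (user, song) records as described in the text.

    `train` and `test` are lists of (user, song) pairs; this record
    layout is an assumption made for illustration.
    """
    # Keep only users and songs that occur in both splits.
    users = {u for u, _ in train} & {u for u, _ in test}
    songs = {s for _, s in train} & {s for _, s in test}
    train = [(u, s) for u, s in train if u in users and s in songs]

    # Drop test records that the user already produced in training,
    # so that only newly discovered songs count as correct.
    seen = set(train)
    test = [(u, s) for u, s in test
            if u in users and s in songs and (u, s) not in seen]
    return train, test


if __name__ == "__main__":
    tr = [("u1", "a"), ("u1", "b"), ("u2", "a"), ("u3", "c")]
    te = [("u1", "a"), ("u1", "b"), ("u2", "b"), ("u4", "a")]
    print(preprocess(tr, te))
```

Note that a single pass can leave a few users or songs that no longer occur in both splits after filtering; whether the filter was repeated until stable is not stated in the text.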

Table 4 shows the feature statistic results of the songs.

Seven baseline methods were used to evaluate the effectiveness of our method.

As the UserKNN model needs to find the Top-K similar users for each user-item pair, it is too expensive for a dataset with 30K users and 400K items. Therefore, we found the top 1000 similar users for each user, and rated each song according to the top 50 users who listened to that song among these 1000 similar users. Since some of the methods were stochastic models, we ran these methods 10 times and calculated the standard deviation of their results. We implemented the DTNMR and LSTM methods with TensorFlow 1.0.1. The other baselines were implemented with LibRec (https://www.librec.net/), a GPL-licensed Java library which contains a suite of state-of-the-art recommendation algorithms.
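The restricted UserKNN scoring described above can be sketched as follows. The text does not specify the similarity measure or the aggregation rule, so this sketch assumes cosine similarity over binary listening sets and sums the similarities of the contributing neighbours; the function names are hypothetical and this is not LibRec's implementation.

```python
import heapq
from math import sqrt


def cosine(a, b):
    """Cosine similarity of two sets of listened songs (assumed measure)."""
    return len(a & b) / sqrt(len(a) * len(b)) if a and b else 0.0


def userknn_scores(target, histories, n_neighbors=1000, k=50):
    """Score un-listened songs for `target`.

    histories: dict mapping user -> set of listened songs.
    Only the `n_neighbors` most similar users are considered, and each
    song is rated by at most `k` of them who listened to it.
    """
    mine = histories[target]
    sims = ((cosine(mine, h), u) for u, h in histories.items() if u != target)
    # nlargest returns neighbours sorted by decreasing similarity.
    neighbors = heapq.nlargest(n_neighbors, sims)

    per_song = {}
    for sim, u in neighbors:
        if sim <= 0.0:
            break  # remaining neighbours share nothing with the target
        for song in histories[u] - mine:
            top = per_song.setdefault(song, [])
            if len(top) < k:  # neighbours arrive in similarity order
                top.append(sim)
    return {song: sum(top) for song, top in per_song.items()}


if __name__ == "__main__":
    h = {"u": {"a", "b"}, "v": {"a", "b", "c"}, "w": {"a", "d"}, "x": {"e"}}
    print(userknn_scores("u", h))
```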

The evaluation metrics used in this work are listed as follows:

P@10: The precision of the top-ten results.

R@10: The recall of the top-ten results.

F@10: The f-measure of the top-ten results.

MAP@10: The mean average precision of the top-ten results.

UCOV@10: The user coverage of the top-ten results (i.e., the percentage of users who received at least one correct item).

AUC: The area under the curve, equal to the probability that a randomly chosen song the user is interested in is ranked higher than a randomly chosen song the user is not interested in.

We used the top-ten results because most recommendation systems recommend ten songs for the users each time.
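The metrics listed above can be written per user as in the following sketch. It is not the evaluation code used in the experiments: in particular, the normalisation of average precision by min(|relevant|, k) and the treatment of ties in AUC are assumptions, and the reported figures are presumably averages of such per-user values.

```python
def precision_recall_f_at_k(ranked, relevant, k=10):
    """P@k, R@k and F@k for one user; `relevant` is a set of songs."""
    hits = sum(1 for s in ranked[:k] if s in relevant)
    p = hits / k
    r = hits / len(relevant) if relevant else 0.0
    f = 2 * p * r / (p + r) if p + r > 0 else 0.0
    return p, r, f


def average_precision_at_k(ranked, relevant, k=10):
    """AP@k for one user; MAP@k is the mean of this value over users."""
    hits, total = 0, 0.0
    for rank, s in enumerate(ranked[:k], start=1):
        if s in relevant:
            hits += 1
            total += hits / rank
    denom = min(len(relevant), k)  # assumed normalisation
    return total / denom if denom else 0.0


def user_coverage_at_k(rankings, relevants, k=10):
    """Fraction of users with at least one correct song in their top k."""
    covered = sum(
        1 for u, ranked in rankings.items()
        if any(s in relevants[u] for s in ranked[:k])
    )
    return covered / len(rankings)


def auc(scores, relevant):
    """Probability that a relevant song outscores a non-relevant one."""
    pos = [v for s, v in scores.items() if s in relevant]
    neg = [v for s, v in scores.items() if s not in relevant]
    if not pos or not neg:
        raise ValueError("AUC needs at least one song of each kind")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```

The pairwise AUC loop is quadratic and is meant only to mirror the definition; a rank-based formula would be preferable at the scale of this dataset.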

4.2. Experiment Results

The first step of DTNMR is model training. The model training process was run in CPU mode (16 cores) and took less than six hours. In order to find the Top-K results for a user, we needed to compute the score of every song. Running the whole network was extremely slow, since the song features were recalculated for each user. In practice, we used pre-calculated song features to recommend songs to users, because song features are stable over time. In this case, it took about one second to produce recommendations for a user.
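The speed-up from pre-calculated song features can be illustrated with the sketch below. It assumes that the song side of the network has already been reduced to one cached vector per song and, purely for illustration, that the final score is a dot product between a user vector and a song vector; the actual scoring layer of DTNMR is not described in this section.

```python
import heapq


def recommend(user_vec, song_vecs, listened, k=10):
    """Return the k best un-listened songs for one user.

    song_vecs: dict song -> pre-calculated feature vector (computed once
    and reused for every user). The dot-product score is an assumption.
    """
    def score(vec):
        return sum(a * b for a, b in zip(user_vec, vec))

    candidates = ((score(vec), song)
                  for song, vec in song_vecs.items()
                  if song not in listened)
    # nlargest avoids sorting all candidate songs.
    return [song for _, song in heapq.nlargest(k, candidates)]


if __name__ == "__main__":
    cache = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
    print(recommend([1.0, 0.5], cache, listened={"c"}, k=2))
```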

In order to determine the combination function h in Equation (4), we randomly selected 90% of the users as training data and the other 10% of the users as validation data. After running each combination function ten times, we did not find any significant difference between them. We used the “element-wise add” function in all the experiments.
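For concreteness, a combination function of this kind maps two feature vectors to one. The sketch below shows the element-wise add used in the experiments next to two other common choices; the element-wise product and concatenation are assumptions added for comparison, since the text does not list the alternatives that were tried.

```python
def combine_add(x, y):
    """Element-wise add: the function used in all the experiments."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same length")
    return [a + b for a, b in zip(x, y)]


def combine_mul(x, y):
    """Element-wise product (hypothetical alternative)."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same length")
    return [a * b for a, b in zip(x, y)]


def combine_concat(x, y):
    """Concatenation (hypothetical alternative); doubles the dimension."""
    return list(x) + list(y)
```

Element-wise add and product keep the output dimension fixed, so they can be swapped without changing the layers that follow, whereas concatenation requires a wider next layer.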

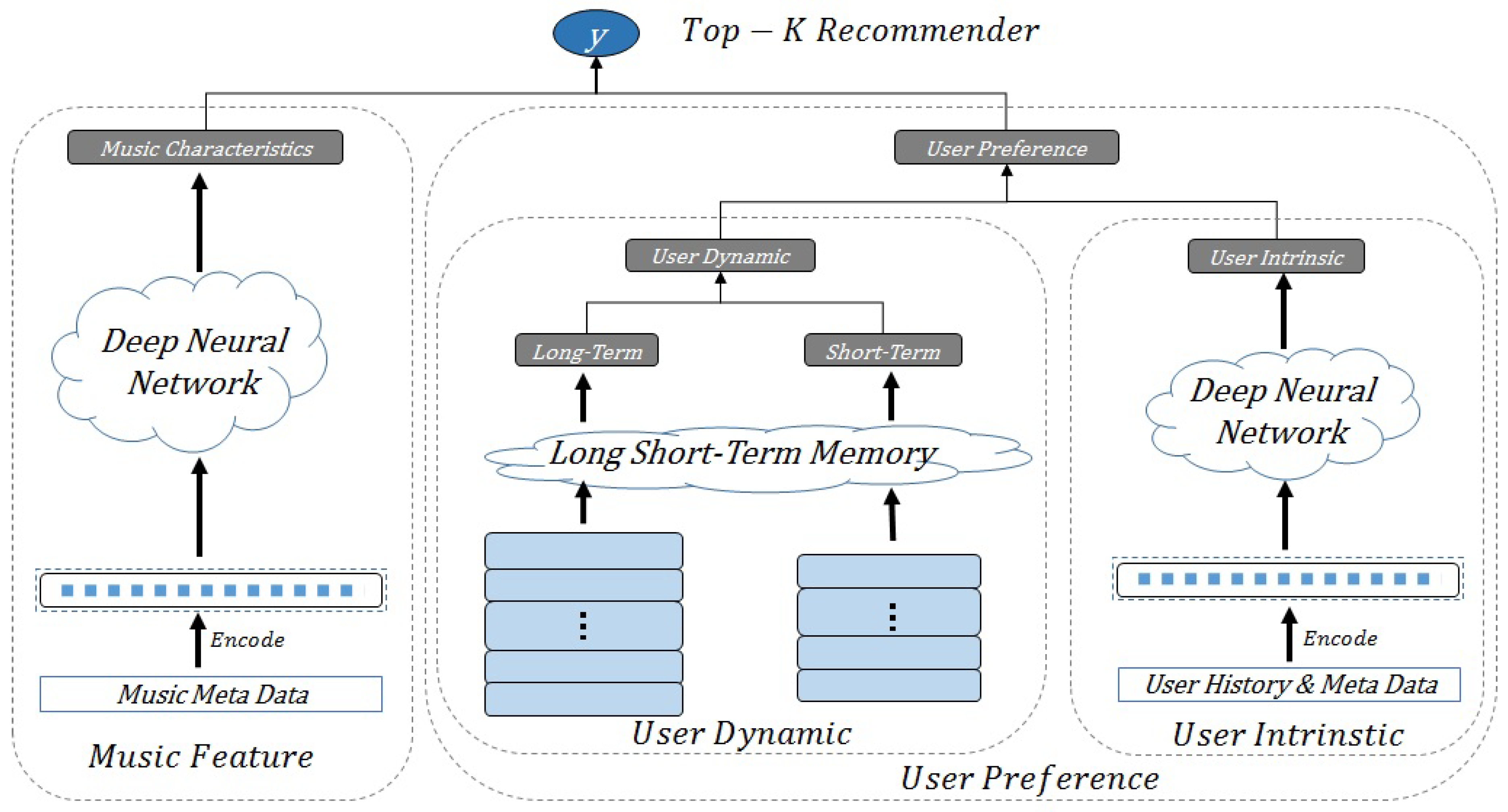

There are four components in DTNMR: the music feature component, the user long-term component, the user short-term component and the user intrinsic component. Among them, only the music feature component is necessary; the other three are optional. Additionally, in the user long-term component and the user short-term component, the behavior type of the listening histories can be omitted. Therefore, our first experiment examined the influence of these components and of the behavior type by evaluating the performance of DTNMR with different components.

As shown in Table 5, we present the results of five different combinations. The components U and L were used to extract users’ dynamic preferences; because component L had more records than U, we chose component L to evaluate the contribution of the users’ dynamic preferences. Component B was the users’ behavior type while listening to music, and it could not be applied to DTNMR independently; therefore, there was only one experiment with component B. DTNMR-U was the worst among all the models: because user preferences change over time, considering only the user intrinsic preference was not enough. The performances of DTNMR-L, DTNMR-UL and DTNMR-ULS were similar. All of them took user temporal preferences into consideration with the help of LSTM. The short-term preference and the intrinsic preference helped DTNMR describe user preferences better, but they were less important than the long-term preference. DTNMR-ULSB performed the best because it additionally considered the behavior type. With the help of the user behavior type, DTNMR was able to weigh the different importance of user listening histories and model the user dynamic preferences in a better way. Its Precision, Recall, F-Measure, MAP, User Coverage and AUC were 14.26%, 3.63%, 2.90%, 8.38%, 58.09% and 79.02% respectively.

Table 6 shows the performances of the seven baseline methods and DTNMR. DTNMR-ULSB was the best in terms of Precision, Recall, F-Measure, MAP and User Coverage. About 58% of users were satisfied with at least one song in the top-ten recommendations.

SVD++ and RBM failed to recommend correct items for users. Their performances were no better than that of choosing songs randomly for users (0.02% in terms of P@10). This was because both of them are based on explicit item ratings. During our experiment, only user listening histories were provided.
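The random-choice figure quoted above can be checked with a short calculation from the dataset statistics. The sketch assumes that the song count is 419,781, that every un-listened song is equally likely to be picked, and it ignores the small reduction of the candidate set by songs already listened to.

```python
# Dataset statistics from the experimental setup.
n_users = 34_403
n_songs = 419_781          # assumed reading of the reported song count
n_test_records = 2_236_323

# Average number of correct (test) songs per user.
avg_relevant = n_test_records / n_users

# A randomly picked song is correct with probability avg_relevant / n_songs,
# which is also the expected P@10 of a random recommender.
p_random = avg_relevant / n_songs

print(f"average test songs per user: {avg_relevant:.1f}")
print(f"expected random P@10: {p_random:.4%}")
```

With about 65 test songs per user, the expected precision comes out between 0.01% and 0.02%, which is consistent with the rounded value of 0.02%.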

The performances of the implicit-feedback-based methods AoBPR and WRMF were much better than those of SVD++ and RBM. Their precisions were 7.9% and 10.6% respectively. However, these two methods could not handle the extremely sparse dataset, and their performances were worse than that of our method.

The performance of LSTM was similar to that of AoBPR. The LSTM model needed to train a neural network for each song. In music recommendation, however, most songs were listened to by only a small number of users, so the neural networks of these songs were not trained sufficiently. Therefore, the LSTM model also suffered from the sparse data problem.

MostPop recommended the same songs to all users. The precision of this method was 11.2% and the user coverage was 44.1%. We could infer that 44% of users listened to at least one popular song, and some of them listened to multiple popular songs.

UserKNN was the second best among all the compared methods. As UserKNN recommends items based on similar users, it did not suffer from the sparse data problem, because we had enough users in the training set. Therefore, its performance was better than that of the other baselines. Efficiency was the biggest problem of UserKNN: it needs to calculate the similarity between each pair of users and find the top users for each song. In our experiment, in which we found the top 1000 similar users for each user and recommended according to these users, the time taken for each user was about 10 s.

DTNMR recommends songs more precisely than the other methods by considering music characteristics and users’ temporal preferences. The music characteristics are extracted from the song metadata by a deep neural network. The user preferences are extracted from the users’ listening histories with RNNs and DNNs. In addition, DTNMR overcomes the sparse data problem by using the metadata. Since metadata exist for all songs and users, DTNMR can describe songs and users more precisely. DTNMR outperformed UserKNN by 0.73%, 0.82%, 0.57%, 0.19%, 6.03% and 3.01% in terms of Precision, Recall, F-Measure, MAP, User Coverage and AUC respectively. Compared with MostPop, these values were 2.71%, 1.19%, 0.89%, 1.28%, 14.07% and 6.96% respectively.

Finally, we conducted an experiment to examine the ability of DTNMR to recommend songs to new users and to demonstrate that DTNMR can learn new preferences for users. In this experiment, 10 percent of the users were removed from the training set. After training DTNMR with the remaining data, we used the trained model to recommend songs to the removed users. Each user’s recommendation results were based on their listening histories in the original training set, and the evaluation was based on the test set. The performance of recommending songs for these users of DTNMR was , , , and in terms of P@10, R@10, F-M@10, MAP@10, UCOV@10 respectively. This performance was similar to that obtained by training DTNMR with the whole training set.
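The new-user protocol above can be sketched as follows. The record layout (`(user, song)` pairs in chronological order), the function name and the fixed random seed are assumptions made for illustration.

```python
import random


def hold_out_users(train, fraction=0.1, seed=0):
    """Split training records into model data and held-out user histories.

    Returns (kept, histories): `kept` contains the records of the
    remaining users and is used to train the model; `histories` maps
    each removed user to their listening history, which is fed to the
    trained model only at recommendation time.
    """
    users = sorted({u for u, _ in train})
    rng = random.Random(seed)
    n_held = max(1, round(len(users) * fraction))
    held = set(rng.sample(users, n_held))

    kept = [(u, s) for u, s in train if u not in held]
    histories = {}
    for u, s in train:
        if u in held:
            histories.setdefault(u, []).append(s)
    return kept, histories
```

The held-out users are then evaluated against their test-set records exactly as the other users are, so the comparison with the fully trained model uses the same metrics.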

When DTNMR did not have any information about the users, it inferred the behavior patterns from existing users and made recommendations to new users accordingly. The long-term and short-term components described users’ dynamic preferences based on their recent actions. Therefore, users’ dynamic preferences changed when they listened to new songs. Initially, there were no dynamic preferences for the new users; after some actions, DTNMR described their dynamic preferences based on the new records. This characteristic was guaranteed by the LSTM mechanism. LSTM was developed for learning sequential information from sequence input. Its performance has been verified in multiple domains such as language modeling, machine translation, speech recognition and image description generation. In DTNMR, LSTM is used to learn users’ preferences from their recent actions, and the preferences vary as the actions change. The intrinsic preferences varied much more slowly than the dynamic preferences, since they recorded the users’ entire histories.

Table 7 shows the recommendation results of two users at different times.

In this table, songs in bold are the correct recommendations. From this table, we found that the recommendation results varied as time changed. For user 1500, song 212,724 ranked higher at time than that at time . A similar phenomenon existed in the results of user 13,207, where song 190,396 ranked higher at time . The users’ intrinsic preferences remained the same as time changed; therefore, this phenomenon was caused by the different user dynamic preferences at different times. In other words, DTNMR predicted that user 1500 was more interested in song 212,724 when than since the dynamic preferences generated by LSTM changed. In conclusion, DTNMR could automatically adapt to new users and learn new features when they listened to more songs, and LSTM could learn different dynamic preferences of the same user at different times.

To recommend songs to users without listening histories, DTNMR asked the users to select the genres or artists they were interested in, and recommended relevant and popular songs based on their selection.