Abstract

As the importance of emotional interaction between humans and robots continues to gain attention, numerous studies have been conducted to identify the characteristics and effects of emotional HRI (Human–Robot Interaction) elements applied to robots. However, no study has yet combined various HRI elements into a single robot and conducted large-scale user experiments to determine which HRI element users prefer the most. This study selected four characteristics that facilitate attachment and emotional bonding between humans and animals: grooming, emotional transfer, imprinting, and cooperative hunting (play). These four characteristics were incorporated into the design and behavioral patterns of the robot EDIE as HRI elements. To allow users to effectively experience these elements, a 30 min robot performance featuring EDIE as the main character was developed. Staging the experiment as a performance enabled a large number of participants to engage with all four HRI elements and then respond to a survey identifying their most preferred element. Over two experiments with a total of 3760 survey respondents, this study examined trends in user preferences regarding the robot’s characteristics. By identifying the most effective HRI elements for fostering user attachment to robots, the findings aim to contribute to the harmonious coexistence of humans and robots.

1. Introduction

This study aimed to foster attachment between humans and robots by examining the process of attachment formation between animals, particularly between humans and companion animals. Common characteristics observed in these relationships were identified and incorporated as HRI behavioral elements in the design and behavior of the robot.

Amoit’s 2017 study found that humans form attachment and a sense of connection through interactions with pets, which provide emotional satisfaction and help alleviate social isolation [1]. However, due to the burden and responsibility of caring for a living animal, some people are unable to adopt pets despite their desire for emotional companionship. According to Sinatra’s 2012 study [2], human–animal interaction and human–robot interaction may involve similar psychological mechanisms. In particular, humans are more likely to feel affinity and emotional connection toward robots with biological appearances compared to those with purely mechanical designs. As a result, there is an increasing interest in companion robots with biological appearances, which can provide emotional satisfaction while reducing the burden of social responsibility through interaction.

Kim’s 2022 study [3] revealed that the appearance of companion robots influences consumer preferences, with differences observed based on gender. For instance, male consumers tended to prefer companion robots with a more lifelike, biological appearance over mechanical designs, whereas female consumers did not exhibit a particular preference for realistic appearances in companion robots.

Based on 2023 data from the global research firm Statista [4], dogs and cats are the most popular companion animals worldwide. Similarly, the 2022 report by the American Pet Products Association (APPA) [5] revealed that approximately 70% of U.S. households own pets, with dogs making up 48% and cats 45%.

Bradwell’s 2021 study [6] suggests that companion robots that resemble familiar forms such as dogs and cats tend to have higher user acceptance, with a particular preference for features like soft fur, interactivity, and large, appealing eyes.

Based on the findings of Sinatra, Kim and Bradwell, the design of the robot EDIE incorporates the biological characteristic of “fur,” commonly associated with actual dogs and cats.

Additionally, representative behavioral patterns and interaction elements of dogs and cats were selected for integration into the robot, focusing on those that are feasible to implement.

Interactions between humans and companion animals encompass diverse activities such as communication, affection, training, protection, walking, play, and feeding. These interactions often involve specific behaviors, such as animals requesting petting or engaging in eye contact to express emotion. Physical touch during petting has been shown to increase oxytocin levels in both humans and animals, reducing stress and fostering attachment [7].

To replicate these effects, we incorporated features of dogs and cats into the design of the robot EDIE (Figure 1), emphasizing its fluffy white appearance reminiscent of fur. EDIE responds to petting with smiles and laughter, facilitating positive emotional transfer between the robot and the user. Additional behaviors like protection, walking, and play were reinterpreted as imprinting effects and cooperative hunting, and integrated into EDIE’s design and behavior as key HRI (Human–Robot Interaction) elements.

Figure 1.

EDIEs.

To allow as many users as possible to experience EDIE’s four HRI elements, we developed a 30 min performance featuring EDIE as the protagonist. Participants were surveyed after the performance to identify their most preferred HRI element among grooming (petting), emotional transfer, imprinting, and cooperative hunting (play). The performance format was chosen for three main reasons:

- It allowed for a large number of participants.

- It minimized the potential for researcher bias influencing participant responses.

- It ensured participant anonymity, aligning with ethical research standards.

This study employed large-scale surveys to identify broader trends and preferences rather than conducting in-depth analyses. A higher user preference for a specific HRI element was interpreted as indicating that the element more effectively increased the user’s affinity and attachment to the robot. A total of 3760 participants responded to the surveys, with 1461 participants in the first experiment and 2299 participants in the second.

In the first experiment, participants engaged with EDIE for 5–10 min during the introductory phase of the content. In this setting, the imprinting effect was the most preferred HRI element. Conversely, in the second experiment, where participants interacted with EDIE for less than 3 min, emotional transfer emerged as the most favored element.

This suggests that Long-Term Familiarization (5–10 min) fosters attachment through imprinting, while Short-Term Familiarization (less than 3 min) relies more on emotional transfer. These findings illustrate how specific HRI elements can influence user preferences and attachment, with the hope that they contribute to the practical development of emotionally engaging robots that promote human–robot coexistence.

2. Materials

The study takes the form of a performance to secure a large number of voluntary experimental participants. Each performance lasts approximately 30 min, during which one participant is paired with a robot to enter the performance space. Participants experience and enjoy the HRI features applied to EDIE and return the robot upon exiting the performance. The performance scenario is meticulously designed to ensure that participants evenly experience all of EDIE’s HRI elements.

The HRI elements implemented in EDIE consist of four key features: grooming, emotional transfer, imprinting effect, and cooperative hunting. These elements are simplified representations of the complex and multi-layered interactions commonly observed between humans and companion animals (e.g., dogs and cats), such as communication, affection, training and learning, protection, walking, playing, and feeding activities.

To ensure the integrity of the experimental performance, it is crucial to prevent interruptions caused by robot malfunctions during the performance. If a robot malfunctions and the participant must restart their experience, this could lead to an uneven exposure to certain HRI elements or diminish the immersive quality of the performance, thereby contaminating the response samples. Therefore, to minimize the likelihood of robot malfunctions, the functionalities for interactions have been simplified.

2.1. The Fuzzy Robot Representing Grooming

The fuzzy robot interacts with users by rubbing its body against their hands, inviting them to groom it. This behavior mimics a form of communication and affection observed between humans and animals.

As confirmed by previous research, soft tactile stimulation through fur is closely linked to emotional comfort. H. Harlow’s 1958 study highlighted the correlation between fur and psychological stability [8], while James Burkett’s 2016 research demonstrated that oxytocin is released during the gentle stroking of fur [9], fostering emotional bonding. Furthermore, Amy McCullough’s 2018 study found that owning pets with soft fur and engaging in grooming behaviors not only provides psychological satisfaction but also significantly reduces stress [10]. These findings have been consistently validated over time.

Based on these insights, this study incorporated the characteristics of grooming into the design of EDIE’s appearance. The robot’s white, fuzzy exterior was specifically chosen to provide users with emotional satisfaction through soft tactile stimulation, while also evoking the familiarity of mammalian animals.

However, to prevent users from forming preconceived notions about EDIE’s behavioral patterns, the robot was designed to resemble no specific animal. While EDIE’s appearance is reminiscent of a mammal with fur, its design deliberately avoids imitating any particular species.

The outer covering of EDIE was crafted using microfiber fabric with a hair length of 15 mm. This length was chosen to allow users’ fingers to sink into the fur, maximizing tactile stimulation. The soft microfiber fur is designed to penetrate between the fingers, replicating the emotional comfort experienced when stroking a dog or cat.

Through this design, the study aimed to provide users with emotional satisfaction via grooming interactions with EDIE, fostering a sense of attachment to the robot.

2.2. EDIE’s Expressions and Sounds Representing Emotional Transfer

Laughter and smiling reduce cortisol, the stress hormone, relax the body, and enhance feelings of happiness, leading to a more positive emotional state. These effects help promote bonding and a sense of unity [11].

Laughter and smiling go beyond mere emotional expressions; they strengthen social connections. In 2018, P. Mui explained that people tend to mimic each other’s smiles, resulting in emotional contagion [12]. Thus, laughter and smiling are among the most intuitive and immediate reactions, effectively fostering positive relationships.

To enable users to experience the emotional transfer HRI element, this study designed EDIE to smile in response to being groomed by the user.

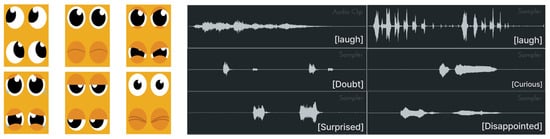

EDIE’s laughter combines expressions and sounds. For facial expression, EDIE’s eyes were designed to occupy one-third of its face. This large size allows for exaggerated expressions, a technique commonly used in animation to emphasize characters’ emotions. The eyes were animated at 20 frames per second and displayed on an LCD screen, ensuring vivid expression and emphasizing the robot’s character.

Although companion animals cannot use human language, people interpret their intentions by integrating factors such as the length, pitch, and tone of their vocalizations, along with facial expressions and gestures [13]. Similarly, EDIE emits unique robotic sounds instead of language, utilizing variations in length, pitch, and tone to represent different emotions. These sounds were synchronized with EDIE’s eye expressions to convey emotional responses.
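The pairing of eye animation and sound described above can be pictured as a small lookup structure. The following is a minimal sketch only: the `Expression` fields, the example emotion names, and every numeric value other than the 20 frames-per-second rate are illustrative assumptions, not details reported for EDIE.

```python
from dataclasses import dataclass

EYE_FPS = 20  # eye animation frame rate stated in the text


@dataclass(frozen=True)
class Expression:
    """One emotional response: an eye animation plus a matching sound cue."""
    name: str
    eye_frames: int   # number of animation frames (hypothetical value)
    pitch_hz: float   # base pitch of the sound (hypothetical value)
    tone: str         # qualitative timbre label (hypothetical value)

    @property
    def duration_s(self) -> float:
        # The sound length is derived from the animation length so that
        # both start and stop together.
        return self.eye_frames / EYE_FPS


# Hypothetical catalogue; the actual set of expressions is not listed in the text.
EXPRESSIONS = {
    "smile": Expression("smile", eye_frames=30, pitch_hz=880.0, tone="bright"),
    "fear": Expression("fear", eye_frames=40, pitch_hz=220.0, tone="wavering"),
}


def frame_times(expr: Expression) -> list[float]:
    """Timestamps (in seconds) at which each eye frame should be displayed."""
    return [i / EYE_FPS for i in range(expr.eye_frames)]
```

Deriving the sound duration from the frame count is one simple way to keep the two channels synchronized; other designs, such as sound-driven timing, would work equally well.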

This study anticipated that EDIE’s laughter and smiles would transfer positive emotions to users, fostering affinity and attachment based on these positive emotional interactions (Figure 2).

Figure 2.

Eye Expression and Sound Pattern.

2.3. Following User Representing Imprinting Effect

The “imprinting effect” was first identified in 1937 by the Austrian biologist and ethologist K. Lorenz [14]. It refers to the phenomenon where young animals form a bond with their mother during early life stages. Over time, the concept expanded to describe how exposure to specific stimuli or environments during critical developmental periods creates a strong and lasting imprint on the brain. This is commonly observed in young animals that perceive the first moving object they encounter after birth as their parent.

Maternal attachment, where a mother forms a bond with her offspring, is similarly linked to the imprinting response. During early life stages, when an individual experiences safety and protection from its mother, this memory influences its behavior as it matures and becomes a parent itself. Recognizing its offspring’s dependence, the individual forms an attachment that fosters care and protection, ultimately enhancing the offspring’s survival rate. This process contributes to the species’ continuity through successful reproduction and survival. Observing a companion animal following its owner and feeling maternal attachment toward it is also a complex interaction of biological, psychological, and evolutionary factors, directly connected to the imprinting effect.

This study aimed to evoke maternal attachment in users toward EDIE by assigning the robot the role of an infant. EDIE was designed to follow a single designated user as its companion.

However, the study’s experimental format posed a challenge, as it was structured as a 30 min performance where participants changed frequently. This left no time for the robot to learn to recognize and follow its companion through training. To address this issue, EDIE was programmed to recognize specific patterns such as flowers, butterflies, or clouds. A fabric sleeve featuring these patterns was created to wrap around the user’s calf, enabling EDIE to track the sleeve as its designated target.

Additionally, the performance scenario required participants to wear the sleeve to be paired with the robot. This setup encouraged participants to actively engage with the experiment by wearing the sleeve, allowing EDIE to follow its designated user seamlessly.

2.4. A Game with the Robot Representing Cooperative Hunting

Cooperative hunting is a behavior where multiple individuals act collectively to effectively hunt larger or faster prey. This behavior is widely observed across various species, from lions, wolves, and whales to ants and bees. According to K. Tsutsui’s 2024 study, cooperative hunting allows for the efficient acquisition of energy necessary for survival while also strengthening social solidarity [15].

The previously discussed features, grooming and emotional transfer, provide emotional satisfaction through sensory interactions between humans and robots. Similarly, the imprinting effect fosters psychological and instinctive bonding, encouraging attachment to the robot.

Cooperative hunting, however, differs in that it promotes emotional bonding through solidarity, which is then leveraged to form attachment. Bonding refers to emotional connections formed between individuals or small groups based on trust, affection, and mutual understanding, while solidarity denotes emotional ties that arise among individuals sharing common goals or benefits. Through collective actions to achieve a shared purpose, individuals develop a sense of belonging and stability within the group, as well as emotional reliance on other group members, ultimately forming attachment.

In summary, solidarity and bonding share the commonality of fostering close relationships that lead to attachment. However, solidarity originates within a broader social context, while bonding is more personal and intimate, creating emotionally positive relationships.

Cooperative hunting between humans and animals has evolved into forms of play as humans moved away from a survival mode based on hunting and gathering. Activities like playing frisbee with a dog or engaging a cat with a fishing rod are forms of play that foster emotional connection between humans and their pets. While traditional cooperative hunting rewarded teamwork with “food,” modern play rewards “fun” as the primary incentive.

To incorporate cooperative hunting as an HRI element for EDIE, this study developed a game that the user and EDIE could enjoy together. The game was designed not as a screen-based activity but as a physical experience enabled through digital art.

Research by N. Rob has shown that sharing experiences with others has a significant impact on attachment formation [16].

Thus, by engaging the user and EDIE in a cooperative game where they complete missions together and experience enjoyment as a reward, this study aims to foster human attachment to the robot.

2.5. Development of an HRI Experience Scenario

The primary focus in designing the content scenario was ensuring that users could experience all four HRI elements—grooming, emotional transfer, imprinting effect, and cooperative hunting—through the story’s progression. The overall storyline is as follows:

EDIE is a robotic lifeform residing on an alien planet (Figure 3) that has been invaded by an evil computer virus. Participants, visiting the planet to save EDIE from this crisis, meet their designated EDIE companion (imprinting effect). They calm the frightened EDIE by grooming it (grooming), and when EDIE regains its courage and begins to smile again (emotional transfer), the group collaborates with EDIE to rebuild the planet damaged by the virus (cooperative hunting). The HRI elements applied to EDIE are organized and matched within the scenario as shown in Table 1.

Figure 3.

The Venue Representing Alien Planet.

Table 1.

HRI Elements Applied to EDIE and Experience Scenario.

Although the storyline is simple, it meticulously integrates all four HRI elements selected for this study. To ensure participants fully experience these elements, a docent was deployed to guide and facilitate the experience.

2.6. Development of the Robot EDIE

The hardware design and construction of the robot were focused on implementing the HRI elements of grooming, emotional transfer, imprinting effect, and cooperative hunting.

EDIE was designed as a wheel-based robot with a spherical body approximately the size of a soccer ball. One side of the sphere was cut diagonally to form the face, allowing EDIE to always look upward toward the user.

The robot’s exterior was crafted using white microfiber fabric to implement grooming. This fabric was draped over the robot’s body, ensuring that users would not feel any discomfort while stroking the fur. Ultra-thin FSR (Force Sensitive Resistor) sensors were installed under the fabric to detect the user’s touch. When a touch was detected, EDIE would respond by expressing positive emotions, such as laughter and smiles. These emotions were conveyed through a combination of eye shapes displayed on an LCD screen and corresponding sounds.
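The touch-to-smile behavior can be illustrated with a short sketch. It assumes the FSR is sampled as a stream of integer readings and uses two thresholds (hysteresis) so that a reading hovering near the threshold is not counted as many touches. The threshold values, the function names, and the `"smile"` label are hypothetical and are not taken from EDIE’s firmware.

```python
def detect_touch_onsets(readings, press_threshold=300, release_threshold=200):
    """Return the sample indices at which a new touch begins.

    A touch starts when a reading rises to `press_threshold` or above and
    ends only when it falls to `release_threshold` or below.
    """
    touching = False
    onsets = []
    for i, value in enumerate(readings):
        if not touching and value >= press_threshold:
            touching = True
            onsets.append(i)
        elif touching and value <= release_threshold:
            touching = False
    return onsets


def respond(readings):
    """Map each detected touch onset to a request for a positive expression."""
    return [("smile", i) for i in detect_touch_onsets(readings)]
```

For example, the readings `[0, 50, 350, 320, 290, 310, 150, 0, 400, 100]` contain two touches, starting at indices 2 and 8; the dip to 290 does not end the first touch because it stays above the release threshold.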

A camera was installed on the forehead of EDIE’s face to enable vision recognition. This allowed EDIE to identify the patterns on the user’s sleeve and follow the corresponding user.

To help users distinguish their designated EDIE, each robot was equipped with an LED strip. The LEDs emitted soft, distinct colors that were visible through the white fur, making each EDIE uniquely identifiable. The appearance of EDIE and its underlying structure beneath the fur are shown in Figure 4.

Figure 4.

EDIE’s Hardware and Appearance Design.

These features enable EDIE to provide users with a dynamic and interactive experience while supporting various HRI elements integrated into the robot’s design and behavior.

To develop the platform responsible for EDIE’s processing, ROS (Robot Operating System) was utilized. ROS simplifies development by breaking down the robot’s components into minimal units called nodes. The entire system was structured around a central Behavior Control node, with input nodes including Camera, FSR, and Laser, and output nodes such as Motor, LED, Display, and Sound.
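The hub-and-spoke layout described above can be sketched without ROS itself. The block below is a plain-Python stand-in, not EDIE’s actual code: the `Bus` class imitates topic-based publish/subscribe, the topic names mirror the node names in the text, and the specific rules (stop when the laser reports an obstacle closer than 0.3 m, drive at 0.2 forward speed toward the target) are hypothetical. The LED output is omitted for brevity.

```python
from collections import defaultdict


class Bus:
    """Tiny stand-in for topic-based messaging between nodes."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        for callback in self._subscribers[topic]:
            callback(message)


class BehaviorControl:
    """Central node: consumes sensor topics and emits actuator topics."""

    def __init__(self, bus):
        self.bus = bus
        self.blocked = False
        bus.subscribe("fsr", self.on_touch)
        bus.subscribe("camera", self.on_target)
        bus.subscribe("laser", self.on_range)

    def on_touch(self, pressed):
        # A touch triggers the paired eye expression and sound.
        if pressed:
            self.bus.publish("display", "smile")
            self.bus.publish("sound", "laugh")

    def on_range(self, distance_m):
        # Hypothetical safety rule: halt when something is very close.
        self.blocked = distance_m < 0.3
        if self.blocked:
            self.bus.publish("motor", (0.0, 0.0))

    def on_target(self, bearing_rad):
        # Drive toward the tracked sleeve unless an obstacle blocks the way.
        if not self.blocked:
            self.bus.publish("motor", (0.2, bearing_rad))
```

Routing every input through one node keeps the decision logic in a single place, which fits the stated goal of simplifying functionality to reduce malfunctions during a performance.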

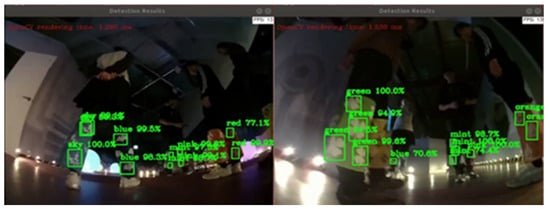

To enable EDIE to follow users, the camera first captures the pattern on the sleeve. The system filters this input to detect each distinct pattern drawn on the fabric, as shown in Figure 5. Captured images were digitally labeled and used in a supervised learning procedure to train a recognition model. The trained model is deployed on EDIE’s onboard Neural Stick, allowing the robot to identify and track the designated user using on-device AI.

Figure 5.

EDIE’s Pattern Recognition.

The implementation of on-device AI, rather than relying on cloud-based systems, was intended to ensure stable content operation without being affected by unreliable network communications.
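Once the on-device model reports which sleeve patterns are visible, following the designated user reduces to selecting the assigned pattern and steering toward it. The sketch below illustrates that step under stated assumptions: the `Detection` fields, the confidence cutoff, and the proportional gains are all hypothetical, and the model’s output format is not described in the text.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Detection:
    label: str       # pattern class, e.g. "flower", "butterfly", "cloud"
    x_center: float  # horizontal box centre, 0.0 (left) to 1.0 (right)
    height: float    # box height as a fraction of the image (distance proxy)
    score: float     # detector confidence in [0, 1]


def pick_target(detections, assigned_label, min_score=0.5) -> Optional[Detection]:
    """Keep only the assigned pattern and return the most confident match."""
    matches = [d for d in detections
               if d.label == assigned_label and d.score >= min_score]
    return max(matches, key=lambda d: d.score, default=None)


def follow_command(target, desired_height=0.4, k_turn=1.5, k_forward=0.8):
    """Proportional controller returning (forward_speed, turn_rate)."""
    if target is None:
        return 0.0, 0.0  # stop if the sleeve is not visible
    turn = k_turn * (0.5 - target.x_center)  # positive means turn left
    forward = k_forward * (desired_height - target.height)
    # Do not back up when the user is already close; just stop advancing.
    return max(forward, 0.0), turn
```

Filtering by the assigned label is what lets several robots share one space while each follows only its own user, since other participants’ sleeves are simply ignored.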

3. Methods

The experiment in this study was conducted in the form of a performance. This approach was chosen to prevent participants from being consciously aware that they were part of an experiment, which might influence their responses to the subsequent survey about their interaction with EDIE. Additionally, this format aimed to encourage large-scale user participation to enhance the reliability of the response data while ensuring participants’ anonymity.

3.1. Participants

The number of audience members per performance session was limited to a maximum of seven. Thus, each group consisted of up to seven participants, each paired with an EDIE robot, and one docent.

The docent, through prior training, was instructed not to interfere with the interaction between participants and EDIE to avoid influencing the participants’ experiences. However, the docent played a role in guiding the overall performance narrative to ensure that participants could experience all four HRI elements.

The performance was conducted twice. The first performance, held over 15 days, attracted a total of 2272 participants. The second performance, held over three months, had over 12,000 participants. Most participants attended as family units or were local employees working near the performance venue. They were primarily drawn to the event through posters promoting the performance. The participants’ ages ranged widely, from under 5 years old to adults. However, the exact age of participants was not recorded, as researchers refrained from any intervention to ensure participants’ anonymity and voluntary participation.

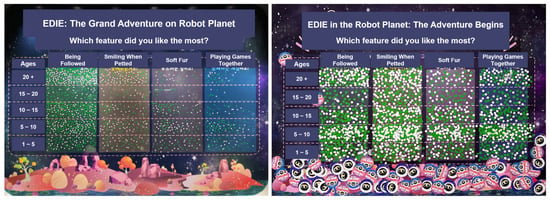

Through the performance, participants were able to experience the HRI elements applied to EDIE and were subsequently asked to participate in a survey vote. The survey board was placed near the exit of the venue to align with the flow of participants. Participants who enjoyed the performance were invited to vote for the aspect of EDIE they liked the most. The survey board presented options distinguishing the four HRI elements applied to EDIE. The intent of the questions represented by each option (HRI elements) is organized in Table 2.

Table 2.

Survey Question and Intent.

For the voting process, male participants were given green stickers, while female participants were given white stickers. They placed their stickers at the intersection of their age group (row) and their preferred EDIE element (column). For participants under the age of five, accompanying guardians were asked to explain the questions and assist the children in selecting their answers and placing their stickers where they wanted.

This method allowed researchers to identify participants’ gender, age group, and most preferred HRI elements at a glance. Additionally, since no personally identifiable information was collected, participants experienced less psychological burden, enabling the collection of more data.
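The sticker board is effectively a three-way contingency table: age group by HRI element by gender. The sketch below shows one way such a board could be tallied once the stickers are read off. The sticker colours come from the text; the age-group labels follow the groups used in the results, and the function names and input format are assumptions for illustration.

```python
from collections import Counter

AGE_GROUPS = ("child", "adolescent", "adult")
ELEMENTS = ("grooming", "emotional transfer", "imprinting", "cooperative hunting")
STICKER_GENDER = {"green": "male", "white": "female"}  # colours stated in the text


def tally(stickers):
    """Count stickers by (age group, element, gender).

    `stickers` is an iterable of (colour, age_group, element) tuples,
    one tuple per sticker on the board.
    """
    counts = Counter()
    for colour, age_group, element in stickers:
        if age_group not in AGE_GROUPS or element not in ELEMENTS:
            raise ValueError(f"unknown cell: {age_group!r}, {element!r}")
        counts[(age_group, element, STICKER_GENDER[colour])] += 1
    return counts


def marginal(counts, axis):
    """Sum the tally over all but one axis (0 = age, 1 = element, 2 = gender)."""
    totals = Counter()
    for key, n in counts.items():
        totals[key[axis]] += n
    return totals
```

Because each sticker carries only a colour and a position, nothing in this structure can identify an individual respondent, which matches the anonymity goal stated above.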

In the first performance, 1461 of the 2272 participants took part in the survey over the 15-day period. During the second performance, which spanned 18 days within a three-month period, 2299 of the approximately 12,000 participants took part in the survey vote.
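The survey response rates implied by these figures can be checked with a short calculation. This is a minimal sketch; the second attendance figure is only approximate in the text, so the second rate is a rough estimate.

```python
def response_rate(respondents, attendees):
    """Fraction of attendees who placed a sticker on the survey board."""
    return respondents / attendees


first = response_rate(1461, 2272)
second = response_rate(2299, 12000)  # attendance is approximate in the text

print(f"{first:.1%} {second:.1%}")  # prints "64.3% 19.2%"
```

Roughly two in three attendees voted in the first performance, compared with about one in five in the second.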

3.2. Experiment 1

The first experiment was conducted over 15 days in October 2019 in Seoul, with 1461 participants responding to the survey after the performance.

The performance scenario was designed to include two instances each of grooming, emotional transfer, and imprinting effect, and four instances of cooperative hunting. Although cooperative hunting appeared to have more opportunities compared to the other elements, the balance among the four HRI elements was ultimately maintained.

This balance was achieved as participants, while playing the game (cooperative hunting) with the robot, often spontaneously engaged in grooming by stroking the robot, observed its smiles (emotional transfer), and experienced the robot following them throughout the performance space (imprinting effect). These spontaneous interactions were not included in the calculated experience counts, ensuring equal emphasis on all four HRI elements. The images of the site during the first experiment are shown in Figure 6.

Figure 6.

Scene from the First Experiment.

3.3. Experiment 2

The experiment, which had been suspended due to the COVID-19 pandemic, resumed in 2023 and was conducted over 18 days in Busan, with 2299 participants responding to the survey.

The intended number of experiences for the four HRI elements in the performance was the same as in the first experiment. However, there was one key difference. In the scenario, after participants were paired with EDIE at the beginning of the performance, they were given time to groom and observe EDIE’s reactions to build familiarity.

In the first experiment, this interaction lasted for over 5 min, allowing sufficient time for bonding. In the second experiment, however, this duration was reduced to under 3 min due to the overwhelming number of participants who signed up for the performance following the transition to an endemic phase.

The images of the site during the second experiment are shown in Figure 7.

Figure 7.

Scene from the Second Experiment.

4. Results

A total of 3760 participants responded to the surveys in the performance-based experiments: 1461 in the first experiment and 2299 in the second.

Figure 8 shows the actual images of the survey boards used during each experiment.

Figure 8.

Results of the First (L) and Second Surveys (R).

4.1. Results of the First Experiment

Among the 1461 participants in the first experiment’s survey, 712 were female, and 749 were male. By age group, there were 607 children (under 10 years old), 420 adolescents (10–20 years old), and 434 adults.

Of the HRI elements applied to EDIE, the Imprinting effect was the most favored, with 767 votes, more than double the votes for the second-ranking element, Emotional Transfer, which received 324 votes. Grooming and Cooperative Hunting followed with 210 and 160 votes, respectively.
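The vote counts above translate into pooled shares as follows. This is a simple arithmetic check using only the totals reported in the text; pooled shares over all respondents can differ slightly from averages of per-group percentages.

```python
votes_exp1 = {
    "Imprinting effect": 767,
    "Emotional transfer": 324,
    "Grooming": 210,
    "Cooperative hunting": 160,
}

total = sum(votes_exp1.values())  # 1461 respondents
shares = {element: count / total for element, count in votes_exp1.items()}

for element, share in shares.items():
    print(f"{element}: {share:.1%}")
```

The pooled shares come to 52.5%, 22.2%, 14.4%, and 11.0%, respectively, so the imprinting effect alone accounts for just over half of all votes.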

The results of the first vote are shown in Table 3.

Table 3.

Experiment 1 Survey Votes.

4.2. Results of the Second Experiment

In the second experiment’s survey, 1115 participants were female, and 1184 were male. By age group, there were 1190 children (under 10 years old), 650 adolescents (10–20 years old), and 459 adults.

In this survey, Emotional transfer was the most favored HRI element, receiving 773 votes, followed by Imprinting effect with 572 votes, Cooperative Hunting with 531 votes, and Grooming with 423 votes.

Unlike the first survey, where the top-ranked element received more than twice as many votes as the second-ranked element, the second survey showed relatively smaller differences in preference across all elements.
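The contrast between the two surveys can be quantified from the reported vote totals. The sketch below uses two illustrative summary measures that are not part of the original analysis: the ratio of the top element’s votes to the runner-up’s, and the spread between the largest and smallest vote shares.

```python
EXP1 = {"imprinting": 767, "emotional transfer": 324,
        "grooming": 210, "cooperative hunting": 160}
EXP2 = {"emotional transfer": 773, "imprinting": 572,
        "cooperative hunting": 531, "grooming": 423}


def lead_ratio(votes):
    """Votes for the top element divided by votes for the runner-up."""
    first, second = sorted(votes.values(), reverse=True)[:2]
    return first / second


def share_spread(votes):
    """Largest minus smallest vote share, in percentage points."""
    total = sum(votes.values())
    shares = [v / total for v in votes.values()]
    return 100 * (max(shares) - min(shares))
```

With these totals, the lead ratio is about 2.37 in the first experiment and about 1.35 in the second, and the spread narrows from roughly 41.5 to roughly 15.2 percentage points.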

The results of the second vote are shown in Table 4.

Table 4.

Experiment 2 Survey Votes.

4.3. Analysis

The four HRI elements incorporated into the performance scenarios—Imprinting Effect, Emotional Transfer, Grooming, and Cooperative Hunting—were applied the same number of times in both the 2019 and 2023 scenarios: Imprinting Effect (2 times), Emotional Transfer (2 times), Grooming (2 times), and Cooperative Hunting (4 times). Additionally, both performances had similar runtimes of approximately 25 min, and the number of users admitted per session ranged from 7 to 8, making the experimental conditions nearly identical.

The key difference between the two performances (experiments) lies in the time users had to interact with EDIE upon first encounter, and therefore in whether they had enough opportunity to build rapport.

In his 2013 book, Knapp [17] stated that in situations where there is limited information about the other party, nonverbal cues such as gaze, facial expressions, appearance, and posture significantly influence the formation of trust and likability. Similarly, Mumm’s 2011 study found that for groups with low affinity toward robots, increased gaze behavior from the robot reduced the physical distance between humans and the robot [18]. Leite’s 2014 study [19] also showed that humans exhibit psychological responses toward robots that express emotions through facial expressions, such as feeling a sense of closeness toward robots displaying empathetic expressions.

Song’s 2017 research suggested that human–robot interaction can follow psychological processes similar to attachment formation [20]. Specifically, when humans have a positive initial experience with a robot, the likelihood of forming an attachment to the robot increases. Song explained that if the robot exhibits positive responses, such as a soft tactile sensation, smiles, or eye contact, it can enhance the initial imprinting effect. Furthermore, repeated exposure to these interactions can lead to trust-building through consistent and predictable interactions, which ultimately fosters attachment formation.

In the first performance, participants spent approximately 5–10 min during the introduction engaging with the robot. This involved stroking the robot, observing the robot’s smiling responses, and noting how well the robot followed them. In the second performance, the initial interaction time was reduced to less than 3 min while following the same interaction format.

Based on previous studies, it can be hypothesized that differences in the duration of exposure to EDIE’s nonverbal cues—such as tactile interaction with its soft fur, observation of positive emotional reactions (e.g., smiling or laughter), or responsive behaviors (e.g., following the participant)—may be associated with differences in the degree of user attachment when information about EDIE is limited. This interpretation remains speculative, as the degree of attachment was not directly measured in the present study.

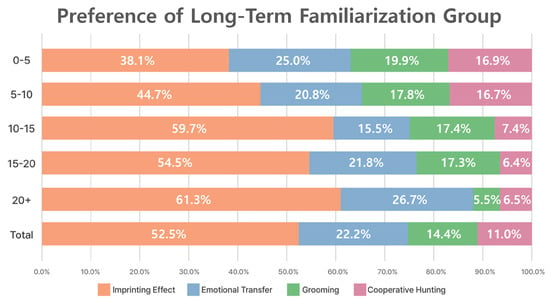

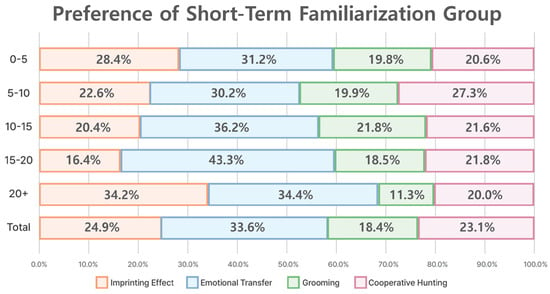

Therefore, based on the degree of interaction experienced with the robots during the introduction of the performance, the 1461 participants from 2019 were classified as the Long-Term Familiarization group, and the 2299 participants from 2023 were classified as the Short-Term Familiarization group to evaluate their responses to the HRI elements. Figure 9 visualizes the responses of 1461 participants from the first performance, who experienced long interactions. Figure 10 visualizes the responses of 2299 participants from the second performance, who experienced short interactions.

Figure 9.

Preferences of Long-Term Familiarization.

Figure 10.

Preferences of Short-Term Familiarization.

For reference, the sample sizes correspond to worst-case margins of error of ±2.56% (n = 1461) and ±2.04% (n = 2299) at a 95% confidence level, respectively. Group differences were formally examined using a Chi-Square Test of Independence to assess the relationship between age group and preferred HRI element. When significant associations were observed, post hoc pairwise comparisons of proportions were conducted with Bonferroni correction. The results were consistent with the descriptive patterns observed across the groups.
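The quantities named above can be reproduced with a few lines of standard-library Python. The margin-of-error function uses the usual worst-case formula for a proportion, z·sqrt(p(1−p)/n) with p = 0.5. The example table is an assumption made for illustration: it crosses experiment with preferred element using the totals reported in the text, because the per-age-group counts used in the reported test are not given here. It is therefore not the test described above.

```python
import math

Z_95 = 1.96  # two-sided 95% normal quantile


def margin_of_error(n, p=0.5, z=Z_95):
    """Worst-case margin of error for a sample proportion."""
    return z * math.sqrt(p * (1 - p) / n)


def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand
            stat += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return stat, dof


def bonferroni_alpha(alpha, n_comparisons):
    """Per-comparison significance level under Bonferroni correction."""
    return alpha / n_comparisons


# Illustrative table: rows are experiments, columns are
# imprinting, emotional transfer, grooming, cooperative hunting.
EXPERIMENT_BY_ELEMENT = [
    [767, 324, 210, 160],
    [572, 773, 423, 531],
]
```

Calling `margin_of_error(1461)` and `margin_of_error(2299)` gives the ±2.56% and ±2.04% figures. For the illustrative table, the statistic has 3 degrees of freedom and lies far above 7.815, the critical value at the 0.05 level.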

4.4. Discussion

Grooming provides satisfaction through tactile stimulation, fostering attachment, and is thus referred to as “sensory stimulation.” Emotional Transfer delivers emotional satisfaction by conveying positive emotions, so it is termed “emotional stimulation.” The Imprinting Effect, grounded in attachment theory, represents the instinctual behavior of young individuals to ensure survival and forms the basis of maternal attachment, earning it the label “instinctual stimulation.” Cooperative Hunting, as applied to EDIE, provides enjoyment through the reward of mission completion and is therefore called “playful stimulation.”

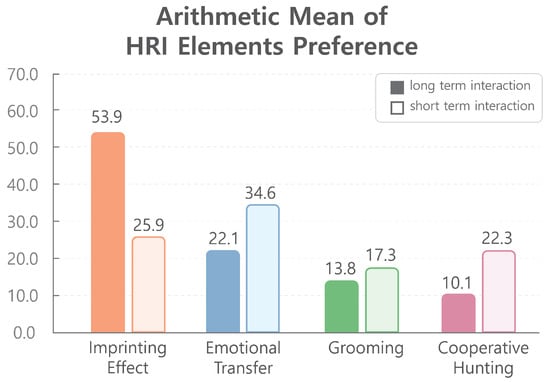

As shown in Figure 11, an analysis of the overall participant responses revealed that the Long-Term Familiarization group showed the highest preference (53.9%) for the instinctual stimulation of the robot following users. Conversely, the Short-Term Familiarization group exhibited the highest preference (34.6%) for the emotional stimulation of the robot’s smiling expression, suggesting that the degree of interaction with the robot may influence the preferred HRI element.

Figure 11.

Arithmetic Mean of HRI Elements Preference.

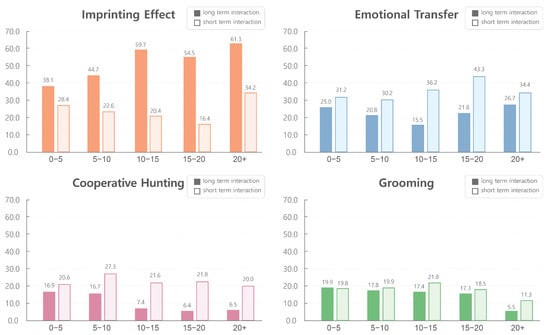

Figure 12 organizes the preference for HRI elements applied to EDIE based on age groups, where the dark-colored bars represent Long-Term Familiarization and the light-colored bars represent Short-Term Familiarization. In the case of Long-Term Familiarization, a distinct preference for the imprinting effect, which corresponds to instinctual stimulation, can be observed. Notably, compared to children, adolescents and adults show a higher preference for instinctual stimulation interactions, and the differences between the long-term and short-term familiarization groups are clearly evident.

Figure 12.

Preference by HRI Elements.

In the first experiment, participants had a relatively longer time at the beginning to observe EDIE and establish rapport. During this time, participants, taking the perspective of caregivers, appear to have regarded the robot EDIE as an infant, which fostered maternal attachment through the Imprinting Effect. Once maternal attachment was established, it had a markedly stronger impact than the other HRI elements. This suggests that activating the instinctual behavior of maternal care during the process of fostering attachment has a force that surpasses sensory, emotional, and playful stimulation.

Song and Lee explain the process of attachment formation between humans and robots based on Bowlby’s attachment theory [20]. Through initial interactions, individuals can develop an imprinting attachment toward the counterpart. Additionally, when a robot expresses emotions or maintains continuous interaction, users tend to anthropomorphize the robot, which serves as the foundation for emotional connection. If humans feel emotional stability through repeated interactions with a robot, they begin to trust the robot’s predictable behavior, which eventually leads to attachment formation.

In the case of the Long-Term Familiarization group, participants experienced repetitive interactions with the robot for 5–10 min during the introductory phase of the performance. This allowed them to form attachment through instinctual stimulation, resulting in a notable preference for the imprinting effect.

On the other hand, the Short-Term Familiarization group, which lacked sufficient time to establish rapport, showed a relatively high preference for emotional transfer in the second experiment. This is likely because participants, unable to deliberate over their preferences across sensory, emotional, instinctual, and playful factors, responded intuitively and immediately to “laughter,” which stimulates both the visual and auditory senses. This observation warrants further in-depth study.

5. Conclusions

This study was initiated to identify the most effective characteristics that encourage attachment between humans and robots, particularly focusing on companion robots that provide emotional stability and satisfaction to users in a world where humans and robots coexist.

To this end, the robot EDIE was designed with four HRI elements in its appearance and behavioral patterns: Grooming, Emotional Transfer, Imprinting Effect, and Cooperative Hunting. When sufficient time for interaction and rapport-building with users is ensured, the Imprinting Effect, wherein EDIE follows the user, proved to be the most effective in fostering user attachment.

Bowlby’s attachment theory explains that humans are biologically evolved to form emotional bonds and attachments, which play a decisive role in development [21]. Children who grow up within such relationships eventually perceive and raise their own offspring as attachment figures based on their experiences.

Rabb et al. applied Bowlby’s attachment theory to robots, emphasizing the importance of the concepts of Secure Base and Safe Haven [16]. A robot can become an attachment figure when it is perceived as “always supporting me and staying by my side.”

The findings of this study align with the assertions of Bowlby and Rabb et al., as the Imprinting Effect and Emotional Transfer were the HRI elements most favored by users. EDIE follows a designated individual and responds positively to the user’s actions with smiles and laughter. In doing so, EDIE fulfills the roles of a Secure Base and a Safe Haven, always supporting and staying close to the user.

Therefore, for companion robots designed to engage in long-term familiarization with users, it is advantageous to incorporate HRI elements that satisfy instinctual stimulation, such as the imprinting effect. Strategically, such robots should be designed to become objects of care and affection for users while also possessing the ability to positively influence the user’s emotions. This aligns with the fundamental purpose of companion robots as emotionally supportive entities.

6. Future Work

This study raises the question of whether the conclusions drawn would hold across different cultural backgrounds. Specifically, while this research focused on identifying HRI characteristics that facilitate attachment formation in companion robots, future studies should explore whether robots are perceived more as “beings that give affection to users” or as “beings that become the target of users’ attachment.” This exploration would help determine which perception better aligns with the original research objective of addressing social issues through robots.

Currently, the eighth generation of EDIE is under development. Once EDIE 8 is completed, we plan to conduct large-scale field tests, as in previous versions, by securing a substantial sample population.

Through these tests, we aim to explore whether a companion robot that loves humans or one that is loved by humans better fulfills the purpose of companion robots.

It is our hope that this study will inspire researchers in the field of companion robots and contribute to the expansion of the companion robot market as a whole.

Author Contributions

Conceptualization, Y.E. and J.H.; Methodology, Y.E., C.P. and G.K.; Software, C.P. and Y.C.; Validation, Y.E.; Formal analysis, Y.E. and J.H.; Resources, G.K., Y.C. and J.H.; Data curation, Y.E., C.P. and Y.C.; Writing—original draft, Y.E.; Writing—review & editing, C.P. and G.K.; Visualization, Y.E.; Supervision, J.H.; Project administration, Y.E. and J.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the MOTIE (Ministry of Trade, Industry, and Energy) in Korea, under the Korea Robot Industry Core Technology Development Project (20018699), supervised by the Korea Evaluation Institute of Industrial Technology (KEIT).

Institutional Review Board Statement

This study was a low-risk, non-invasive observational study involving no interventions and does not qualify as medical research. Therefore, in accordance with the Declaration of Helsinki and the Czech Ethical Framework of Research, ethics approval was not required.

Informed Consent Statement

Verbal informed consent was obtained from all participants prior to the experiment. This method was appropriate due to the non-invasive nature of the study, the absence of sensitive data collection, and the minimal level of risk involved.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Amiot, C.; Bastian, B. Solidarity with animals: Assessing a relevant dimension of social identification with animals. PLoS ONE 2017, 12, e0168184.

2. Sinatra, A.; Sims, V.; Chin, M.; Lum, H. If it looks like a dog: The effect of physical appearance on human interaction with robots and animals. Interact. Stud. 2012, 13, 235–262.

3. Kim, J.; Kang, D.; Choi, J.; Kwak, S. Effects of Realistic Appearance and Consumer Gender on Pet Robot Adoption. In International Conference on Social Robotics; Springer: Cham, Switzerland, 2022; pp. 627–639.

4. Statista. TGM Research Average Share of Pet Ownership Worldwide as of 2023, by Pet Type. 2023. Available online: https://www.statista.com/ (accessed on 11 December 2024).

5. American Pet Products Association. APPA 2021–2022 APPA National Pet Owners Survey. 2021. Available online: https://books.google.co.kr/books?id=RyuHzgEACAAJ (accessed on 1 August 2024).

6. Bradwell, H.; Edwards, K.; Shenton, D.; Winnington, R.; Thill, S.; Jones, R. User-centered design of companion robot pets involving care home resident-robot interactions and focus groups with residents, staff, and family: Qualitative study. JMIR Rehabil. Assist. Technol. 2021, 8, e30337.

7. Nagasawa, M.; Mitsui, S.; En, S.; Ohtani, N.; Ohta, M.; Sakuma, Y.; Onaka, T.; Mogi, K.; Kikusui, T. Oxytocin-gaze positive loop and the coevolution of human-dog bonds. Science 2015, 348, 333–336.

8. Harlow, H. The nature of love. Am. Psychol. 1958, 13, 673.

9. Burkett, J.; Andari, E.; Johnson, Z.; Curry, D.; de Waal, F.; Young, L. Oxytocin-dependent consolation behavior in rodents. Science 2016, 351, 375–378.

10. McCullough, A.; Jenkins, M.; Ruehrdanz, A.; Gilmer, M.; Olson, J.; Pawar, A.; Holley, L.; Sierra-Rivera, S.; Linder, D.; Pichette, D.; et al. Physiological and behavioral effects of animal-assisted interventions on therapy dogs in pediatric oncology settings. Appl. Anim. Behav. Sci. 2018, 200, 86–95.

11. Kramer, C.; Leitao, C. Laughter as medicine: A systematic review and meta-analysis of interventional studies evaluating the impact of spontaneous laughter on cortisol levels. PLoS ONE 2023, 18, e0286260.

12. Mui, P.; Goudbeek, M.; Roex, C.; Spierts, W.; Swerts, M. Smile mimicry and emotional contagion in audio-visual computer-mediated communication. Front. Psychol. 2018, 9, 2077.

13. Marler, P. Animal Communication Signals: We are beginning to understand how the structure of animal signals relates to the function they serve. Science 1967, 157, 769–774.

14. Lorenz, K. Der Kumpan in der Umwelt des Vogels. Der Artgenosse als auslösendes Moment sozialer Verhaltungsweisen. J. Ornithol. Beiblatt. 1935, 83, 137–213.

15. Tsutsui, K.; Tanaka, R.; Takeda, K.; Fujii, K. Collaborative hunting in artificial agents with deep reinforcement learning. eLife 2024, 13, e85694.

16. Rabb, N.; Law, T.; Chita-Tegmark, M.; Scheutz, M. An attachment framework for human-robot interaction. Int. J. Soc. Robot. 2022, 14, 539–559.

17. Knapp, M.; Hall, J.; Horgan, T. Nonverbal Communication in Human Interaction; Cengage Learning, 2013. Available online: https://books.google.co.kr/books?id=-g7hkSR_mLoC (accessed on 1 August 2024).

18. Mumm, J.; Mutlu, B. Human-robot proxemics: Physical and psychological distancing in human-robot interaction. In Proceedings of the 6th International Conference on Human-Robot Interaction, Lausanne, Switzerland, 8–11 March 2011; pp. 331–338.

19. Leite, I.; Castellano, G.; Pereira, A.; Martinho, C.; Paiva, A. Empathic robots for long-term interaction: Evaluating social presence, engagement and perceived support in children. Int. J. Soc. Robot. 2014, 6, 329–341.

20. Song, G.; Lee, S. Human-Robot Social Interaction: Applying Attachment Theory. In Proceedings of the 2017 Korea Technology Innovation Society Fall Conference; Korea Technology Innovation Society: Daejeon, Republic of Korea, 2017; pp. 425–438.

21. Bowlby, J. Attachment and loss: Retrospect and prospect. Am. J. Orthopsychiatry 1982, 52, 664.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.