Abstract

This study investigates prompt engineering (PE) strategies to mitigate hallucination, a key limitation of multimodal large language models (MLLMs). To address this issue, we explore five prominent multimodal PE techniques: in-context learning (ICL), chain of thought (CoT), step-by-step reasoning (SSR), tree of thought (ToT), and retrieval-augmented generation (RAG). These techniques are systematically applied across multiple datasets with distinct domains and characteristics. Based on the empirical findings, we propose the greedy prompt engineering strategy (Greedy PES), a methodology for optimizing PE application across different datasets and MLLMs. To evaluate user satisfaction with MLLM-generated responses, we adopt a comprehensive set of evaluation metrics, including BLEU, ROUGE, METEOR, S-BERT, MoverScore, and CIDEr. A weighted aggregate evaluation score is introduced to provide a holistic assessment of model performance under varying conditions. Experimental results demonstrate that the optimal prompt engineering strategy varies significantly depending on both dataset properties and the MLLM used. Specifically, datasets categorized as general benefit the most from ICL, ToT, and RAG, whereas mathematical datasets perform optimally with ICL, SSR, and ToT. In scientific reasoning tasks, RAG and SSR emerge as the most effective strategies. Applying Greedy PES leads to a substantial improvement in performance across different multimodal tasks, raising the average evaluation score by 184.3% for general image captioning, 90.3% for mathematical visual question answering (VQA), and 49.1% for science VQA compared to conventional approaches. These findings highlight the effectiveness of structured PE strategies in optimizing MLLM performance and provide a robust framework for PE-driven model enhancement across diverse multimodal applications.

1. Introduction

1.1. Multimodal Large Language Models: Foundations and Architectures

With recent advances in artificial intelligence (AI), large language models (LLMs) have demonstrated remarkable performance across various natural language processing (NLP) tasks. However, LLMs operate on text alone, whereas human language comprehension integrates multiple sensory modalities, including vision, hearing, and contextual reasoning, to achieve a more holistic understanding. To overcome this limitation, multimodal large language models (MLLMs) have emerged as a new paradigm. These models are designed to process and interpret not only textual data but also diverse input modalities such as images, audio, and video, thereby enabling a more comprehensive and context-aware understanding of information.

While conventional LLMs are trained exclusively on textual data, MLLMs integrate visual and auditory information, allowing them to leverage richer contextual cues. This multimodal capability extends beyond traditional language comprehension, enabling more sophisticated decision making and reasoning by combining linguistic and perceptual information. However, the incorporation of multimodal data introduces new technical challenges, particularly concerning the architecture and training methodologies of MLLMs. These challenges arise from the need to effectively align, fuse, and interpret multiple modalities within a unified framework, necessitating advancements in model design and optimization strategies.

The architecture of MLLMs is primarily composed of three key components: (1) pre-trained modality encoder, (2) pre-trained large language model (LLM), and (3) cross-modality transformer [1].

The pre-trained modality encoder is responsible for processing and extracting features from non-textual data, such as images, audio, and video. A prominent example of such an encoder is CLIP (Contrastive Language–Image Pretraining) [2], which plays a crucial role in learning the relationships between vision and language. These encoders enable MLLMs to bridge the gap between different modalities by effectively mapping non-textual inputs into a representational space that aligns with linguistic information.

The pre-trained LLM serves as the core text processing component of MLLMs. It utilizes existing LLM architectures, such as GPT [3,4,5], Llama [6,7], Gemini [8], and Mistral [9], to generate refined responses by integrating textual information with extracted multimodal features. These LLMs act as the reasoning engine of MLLMs, enabling context-aware and semantically coherent responses by leveraging both linguistic and non-linguistic information.

The cross-modality transformer facilitates the effective fusion of features extracted from non-textual data with the linguistic representations processed by the LLM. This component is essential for aligning, integrating, and contextualizing multimodal information, allowing MLLMs to learn semantic relationships across different modalities. By incorporating multimodal reasoning capabilities, the cross-modality transformer enables MLLMs to generate more accurate, context-aware, and semantically enriched outputs across diverse multimodal tasks.

To optimize the performance of MLLMs, various training strategies, including pretraining, instruction tuning, and alignment tuning, are employed [1].

Pretraining serves as the foundational phase, where the model learns basic cross-modal representations from large-scale multimodal datasets. During this stage, MLLMs are trained on image–text, audio–text, and other modality–text combinations, allowing them to understand and capture the relationships between different modalities. This step is crucial for enabling MLLMs to process and integrate information from diverse sources effectively. Following pretraining, instruction tuning is applied to enhance the model’s ability to generate task-specific responses. This process fine-tunes the MLLM to align with user prompts, ensuring that the model can produce outputs that are more coherent, relevant, and tailored to specific tasks. By learning from structured instructions, MLLMs become more adept at following user queries and delivering accurate and context-aware responses. To further refine the quality, reliability, and trustworthiness of the model’s outputs, alignment tuning is incorporated. This involves techniques such as reinforcement learning from human feedback (RLHF) [10], which adjusts the model’s responses to better reflect human preferences and ethical considerations and plays a critical role in reducing hallucination in LLMs [11]. Alignment tuning thus helps mitigate hallucinations and biases, so that MLLMs produce factually accurate and contextually appropriate outputs. By integrating these training methods, MLLMs can achieve improved multimodal understanding and enhanced user interaction capabilities.

1.2. Technical Challenges and Hallucination in MLLMs

Despite the powerful capabilities of MLLMs enabled by their architectural design and training methodologies, several performance limitations remain. One of the most critical challenges is hallucination, which refers to instances where the model generates responses that do not accurately correspond to the actual visual information [12]. This phenomenon occurs when MLLMs produce information that is not present in the training data or misinterpret visual content, leading to inaccurate or misleading outputs. Hallucination is particularly problematic in tasks such as image captioning, object recognition, and scene understanding, where precise alignment between textual descriptions and visual data is crucial. Recent studies [13] have highlighted the risks associated with semantic gaps and misalignment between different modalities in MLLMs. These issues arise when the textual and visual components of the model fail to integrate effectively, leading to inconsistencies in generated responses. To address this, it is essential to develop effective modality alignment techniques that ensure a coherent and accurate representation of multimodal data. Furthermore, improper alignment strategies can lead to unnecessary increases in model parameters without guaranteeing performance improvements, underscoring the need for careful selection of alignment methods to optimize both efficiency and accuracy in MLLMs.

1.3. Prompt Engineering for Enhancing MLLM Performance

To mitigate the hallucination problem and enhance the performance of MLLMs, various prompt engineering (PE) techniques have been proposed, similar to those developed for LLMs [14,15,16]. However, unlike LLMs, which rely solely on textual inputs, MLLMs process visual content in addition to text. As a result, strategic prompt design must go beyond simple text-based prompting and consider alignment with visual information to ensure coherence and accuracy in multimodal reasoning.

First, in-context learning (ICL) [17] requires providing relevant examples within a given multimodal image–text pair context to enable the model to generate appropriate responses. Chain of thought (CoT) [18] should guide the model to solve complex reasoning tasks by leveraging sequential textual explanations based on image analysis. Similarly, step-by-step reasoning (SSR) [19] encourages the model to perform spatial and stepwise visual analysis, ensuring a structured reasoning process. Tree of thought (ToT) [19] extends this concept by considering multiple cognitive pathways derived from the image, allowing the model to select the most reliable response based on different analytical perspectives. On the other hand, retrieval-augmented generation (RAG) [20] enhances multimodal understanding by retrieving external knowledge related to the given image, enabling the model to generate evidence-based responses even when dealing with previously unseen information. In summary, prompt engineering in MLLMs must evolve beyond simple text-based design to strategically integrate visual information, ensuring that the model effectively utilizes multimodal inputs to improve response accuracy and reliability.
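To make the distinction between the five techniques concrete, the following minimal sketch shows how each one might be expressed as a text prompt that accompanies an image. The template wording, the `PROMPT_TEMPLATES` dictionary, and the `build_prompt` helper are all hypothetical illustrations and are not the prompts used in this study; the retrieved context for RAG is assumed to be supplied by an external retriever.

```python
# Hypothetical prompt templates; the wording is illustrative only and is
# not taken from the study. Each prompt is meant to be sent with an image.
PROMPT_TEMPLATES = {
    "ICL": (
        "Here are worked examples for similar images:\n{examples}\n\n"
        "Now answer for the attached image: {question}"
    ),
    "CoT": (
        "{question}\n"
        "Describe what you observe in the image, reason about it in sequence, "
        "and then state the final answer."
    ),
    "SSR": (
        "{question}\n"
        "Step 1: list the relevant regions of the image. "
        "Step 2: analyze each region. "
        "Step 3: combine the findings into an answer."
    ),
    "ToT": (
        "{question}\n"
        "Propose three different interpretations of the image, assess how well "
        "each is supported by the visual evidence, and answer using the best one."
    ),
    "RAG": (
        "Retrieved reference information:\n{context}\n\n"
        "Using the attached image and the reference information, "
        "answer: {question}"
    ),
}


def build_prompt(technique, question, examples="", context=""):
    """Fill the template for one technique; unused fields are ignored."""
    return PROMPT_TEMPLATES[technique].format(
        question=question, examples=examples, context=context
    )
```

For instance, `build_prompt("RAG", "What is shown?", context="...")` would prepend the retrieved text to the question, whereas the CoT, SSR, and ToT templates only change the reasoning instructions.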

In fact, existing prompt engineering research aimed at mitigating hallucination has predominantly focused on LLMs, while the systematic optimization of PE strategies for multimodal data remains underdeveloped. However, hallucination phenomena arising specifically from multimodal inputs present challenges that cannot be fully addressed by conventional approaches alone. Therefore, the development of prompt engineering techniques tailored to multimodal data is essential for generating accurate and contextually grounded responses.

This study aims to develop an optimal prompt engineering strategy that maximizes user satisfaction and response accuracy in practical MLLM service deployment while minimizing computational resource requirements. Specifically, instead of performing additional fine-tuning on pre-trained modality encoders, pre-trained LLMs, or cross-modality transformers, we explore how multimodal-specific prompt engineering techniques alone can enhance MLLM performance.

To achieve this, we systematically investigate the application of RAG, CoT, ICL, SSR, and ToT as effective prompt engineering strategies. In particular, we evaluate the impact of these techniques on state-of-the-art MLLMs, including Phi [21,22], Llama [23], Pixtral [24], and Qwen [25,26], providing empirical insights into their effectiveness across different architectures.

To ensure that our evaluation closely aligns with user satisfaction, we employ a diverse set of performance metrics, including bilingual evaluation understudy (BLEU), recall-oriented understudy for gisting evaluation (ROUGE), metric for evaluation of translation with explicit ordering (METEOR), sentence-bidirectional encoder representations from transformers (S-BERT), MoverScore, and consensus-based image description evaluation (CIDEr). These metrics collectively assess the quality, fluency, and relevance of multimodal-generated responses.

For benchmark datasets, we utilize MathVista [27], CVBench [28], ScienceQA [29], nocaps [30], MSCOCO [31], and Flickr30k [32]. These datasets span a variety of domains and multimodal tasks, allowing for a comprehensive analysis of prompt engineering strategies in multimodal natural language generation. Based on the results, we propose a greedy prompt engineering strategy (Greedy PES) that optimally selects the most effective prompt engineering technique for each dataset and MLLM model, maximizing response quality and reliability.

The proposed Greedy PES method enables the identification of the optimal MLLM model and the most effective PE combination for each dataset, based on the exhaustive evaluation of all possible PE configurations. Furthermore, by employing a weighted metric computation scheme that adaptively reflects the characteristics of each dataset and user preferences, this approach achieves a closer alignment with user satisfaction compared to conventional methods.

2. Related Works

MLLMs [21,22,23,24,25,26], which aim to generate text by processing diverse multimodal inputs through LLMs, have been the focus of extensive research in terms of architectural advancements [1,15] and training methodologies [1]. Despite continuous improvements in performance, MLLMs still face significant challenges, particularly in regard to generating hallucinated responses that fail to accurately reflect the provided visual or contextual inputs [12]. To mitigate these limitations, prompt engineering (PE) strategies have emerged as a promising solution to enhance response quality and reliability [14,15,16]. Additionally, recent research has explored user experience optimization methods specifically tailored for MLLMs, employing diverse evaluation frameworks to assess model effectiveness [11,33,34,35].

In terms of architectural studies, Fu et al. [1] categorized MLLM architectures into three core components: pre-trained modality encoders, pre-trained LLMs, and cross-modality transformers. This structure allows MLLMs to integrate multimodal information efficiently while leveraging LLMs’ textual reasoning capabilities. In contrast, Zhang et al. [15] proposed a more fine-grained MM-LLM framework, decomposing it into modality encoders, input projectors, LLM backbones, output projectors, and modality generators. This expanded framework extends beyond text generation, encompassing multimodal content generation, including images and audio, thus broadening the application scope of MLLMs.

In contrast to architectural research, studies on MLLM training methodologies have primarily focused on pretraining, instruction tuning, and alignment tuning [1]. Pretraining aims to align different modalities while embedding multimodal world knowledge into the model [1]. Instruction tuning is designed to teach MLLMs how to follow user instructions and effectively perform assigned tasks. Meanwhile, alignment tuning ensures that MLLMs are aligned with specific human preferences, improving their ability to generate responses that are both reliable and contextually appropriate.

Despite these advanced training strategies, achieving perfect alignment between different modality encoders remains a fundamental challenge. This misalignment issue often leads to multimodal hallucination, where the content generated by an MLLM does not accurately correspond to the provided visual input. When combined with an LLM, multimodal hallucination can manifest in three distinct types [12]: existence hallucination, attribute hallucination, and relationship hallucination. Existence hallucination is the most fundamental form of hallucination, where the model incorrectly asserts the presence of objects that do not actually exist in the image. Attribute hallucination occurs when the model misdescribes the attributes of an object, such as failing to correctly identify the color of a dog. This type of hallucination is often correlated with existence hallucination, as attribute descriptions should be grounded in the actual objects present in the image. Relationship hallucination is a more complex phenomenon that extends beyond the existence of objects. It refers to incorrect descriptions of relationships between objects, such as relative positioning or interactions, leading to misinterpretations of the scene’s contextual meaning. These hallucination challenges highlight the inherent difficulties in aligning multimodal representations, necessitating effective prompt engineering strategies to mitigate the issue and improve response reliability.

To help address the hallucination problem in LLMs, various prompt engineering techniques such as ICL [17], CoT [18], SSR [19], ToT [19], and RAG [20] have been introduced. These approaches aim to guide the model in structured reasoning, contextual retrieval, and incremental step-wise reasoning, thereby improving response accuracy. However, applying these techniques directly to MLLMs presents performance limitations, as MLLMs require strategic prompt design that accounts for alignment with visual information, rather than relying solely on text-based prompts. To overcome these challenges, recent studies have proposed multimodal-specific prompt engineering techniques. Yin et al. [14,36,37] introduced multimodal ICL (M-ICL), multimodal CoT (M-CoT), and LLM-aided visual reasoning (LAVR) to mitigate multimodal hallucination by integrating visual and textual reasoning. Similarly, He et al. [38] proposed prompt optimization for enhancing multimodal reasoning (POEM), a visual analysis system that optimizes prompts to enhance multimodal reasoning capabilities in large language models. Expanding on this, Zhang et al. [15] introduced Multimodal-CoT, a framework that extends chain-of-thought reasoning to process multimodal inputs (text and images), thereby improving joint linguistic and visual inference. Additionally, Wu et al. [16] explored visual prompting techniques for MLLMs, categorizing different types of visual prompts and investigating their impact on compositional reasoning, visual grounding, and object reference within multimodal contexts.

In parallel, MLLM evaluation methodologies have also been a subject of extensive research. Xu et al. [33] provided a comprehensive review of MM-LLM efficiency improvement techniques, introducing various benchmarks for measuring multimodal effectiveness. Li et al. [34] highlighted the limitations of existing evaluation methods, noting that most benchmarks require fixed answers, which constrains the evaluation of creative responses. Additionally, they emphasized the lack of effective hallucination assessment, the inadequate evaluation of multimodal knowledge learning, and the absence of causality understanding metrics. To address these shortcomings, they proposed adopting user-centric evaluation, multimodal expansion evaluation, and interactive and dynamic evaluation methods. Similarly, Huang et al. [11] systematized MLLM evaluation concepts, categorizing evaluation approaches based on what to evaluate (evaluation objectives), how to evaluate (evaluation methodologies), and where to evaluate (evaluation scope). Furthermore, Xie et al. [35] proposed a standardized evaluation framework that incorporates accuracy-based metrics (BLEU, ROUGE, CIDEr, and MoverScore based on Wasserstein distance), as well as human evaluation, to ensure a more holistic assessment of MLLM performance.

The key contributions of this paper are as follows:

- Comprehensive Performance Analysis: We present an extensive performance evaluation of various MLLMs, along with prompt engineering techniques designed to enhance their capabilities. This analysis is conducted across multiple datasets and assessed using a diverse set of performance metrics.

- Weighted Aggregate Performance Metric: We introduce a weighted aggregate performance metric that integrates multiple evaluation metrics, such as BLEU, ROUGE, METEOR, S-BERT, MoverScore, and CIDEr, to provide a holistic assessment of prompt engineering strategies.

- Optimization Strategy for Prompt Engineering in MLLMs: We investigate the impact of prompt engineering strategies on different datasets and MLLM architectures, leading to the formulation of an optimized strategy tailored to dataset characteristics and MLLM models. Additionally, we propose a greedy prompt engineering strategy (Greedy PES) to further refine the application of prompt engineering for improved model performance.

The structure of this paper is as follows: Section 3 provides a detailed explanation of the MLLM models used in this study. Section 4 introduces the evaluation metrics employed for performance measurement, while Section 5 describes the benchmark datasets used for experimentation. Section 6 presents the proposed Greedy PES for optimizing MLLM performance. Section 7 discusses the experimental results, including the impact of different parameters, the effect of Greedy PES, the derived MLLM optimization strategies, and further insights. Finally, Section 9 concludes the paper.

3. System Models

In this study, we conduct experiments using four state-of-the-art MLLM models: Phi-3.5, Llama-3.2, Pixtral, and Qwen-2.5. These models have been recently introduced, are widely adopted, and exhibit strong performance while maintaining a parameter size of approximately 10 billion. A summary of the technical specifications of these models is presented in Table 1.

Table 1. Technical summary of major MLLMs.

3.1. Phi

Phi-3.5-Vision-Instruct is a lightweight multimodal model developed by Microsoft in October 2024. It is designed to perform a wide range of vision–language tasks, including general image understanding, optical character recognition (OCR), chart and table comprehension, multi-image comparison, and video clip summarization [39].

The model architecture consists of a CLIP ViT-L/14-based image encoder and a Phi-3.5-mini-based pre-trained LLM. It employs rotary position embedding (RoPE) for positional encoding and utilizes the SwiGLU activation function [40]. The model supports a maximum context length of 128K tokens and has been pre-trained on approximately 0.5T tokens from image–text datasets. Additionally, supervised fine-tuning (SFT) and direct preference optimization (DPO) were applied for post-training.

3.2. Llama

Llama-3.2-11B-Vision-Instruct is an 11-billion-parameter multimodal language model developed by Meta Platforms in September 2024. It is designed to process both text and images simultaneously, enabling multimodal conversations and visual reasoning tasks. Built upon the Llama 3.1 architecture, this model integrates visual information to support various applications across different domains [7].

The architecture consists of a CLIP-based image encoder and a Llama 3.1-based pre-trained LLM. It employs RoPE for positional encoding and utilizes the SwiGLU activation function [40]. The model supports a maximum context length of 128K tokens and has been pre-trained on approximately 15.6T tokens comprising image–text datasets. For post-training, it has undergone SFT and DPO.

3.3. Pixtral

Pixtral-12B is a 12-billion-parameter multimodal language model developed and released by Mistral AI in October 2024. It is designed to understand both images and text simultaneously, enabling advanced multimodal reasoning and language generation [24].

The architecture comprises a CLIPA-based image encoder [41], a Mistral Nemo 12B-based pre-trained LLM, and a Mistral Nemo 12B-based multimodal decoder. It employs RoPE-2D for positional encoding and utilizes the SwiGLU activation function [40]. The model supports a maximum context length of 128K tokens and has been pre-trained on billions of image–text pairs. For post-training, SFT and DPO were applied.

3.4. Qwen

Qwen2-VL-7B-Instruct is a 7-billion-parameter multimodal language model developed by Alibaba Group in 2024. It is designed to function as a visual agent, enabling advanced multimodal understanding and reasoning [42].

The architecture consists of a 600-million-parameter ViT-based encoder [43] and a Qwen2-7B-based pre-trained LLM. It employs multimodal rotary position embedding (M-RoPE) for positional encoding and utilizes the SwiGLU activation function [40]. The model supports a maximum context length of 128K tokens and has been pre-trained on approximately 7 trillion tokens of image–text data.

For post-training, SFT and DPO were applied to refine the model’s output. Additionally, to extend the context window, the yet another RoPE extension method (YaRN) [44] and dual chunk attention (DCA) [45] techniques were employed.

4. Performance Metric

This paper aims to analyze the performance of MLLMs from multiple perspectives by considering various evaluation metrics that effectively reflect user satisfaction with model responses. To achieve this, we employ a diverse set of widely used and well-established evaluation metrics, each with its own unique characteristics. Specifically, we utilize BLEU, ROUGE, METEOR, S-BERT, MoverScore, and CIDEr as our primary evaluation criteria.

4.1. BLEU

BLEU [46] is one of the most widely used metrics for evaluating the performance of machine translation and natural language generation models. It measures the similarity between a generated sentence and a reference sentence based on n-gram overlap. BLEU typically calculates precision from unigrams (1-g) to four-grams (4-g) and applies a brevity penalty (BP) to address the issue of shorter sentences receiving disproportionately high scores. The BLEU score is computed using the following formula:

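$$\mathrm{BLEU} = \mathrm{BP} \cdot \exp\left( \sum_{n=1}^{N} w_n \log p_n \right)$$

where $p_n$ represents the n-gram precision value, and $w_n$ denotes the weight, which is typically set as $w_n = 1/N$ (with $N = 4$ when precision is computed up to four-grams). The brevity penalty (BP) is introduced to penalize excessively short generated sequences and can be formally defined by the following equation:

$$\mathrm{BP} = \begin{cases} 1, & \text{if } c > r \\ e^{1 - r/c}, & \text{if } c \le r \end{cases}$$

where c represents the length of the generated sentence, while r denotes the length of the reference sentence.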
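As a minimal sketch of the computation described above, the following function combines clipped n-gram precision with the brevity penalty for a single candidate and a single reference. It assumes whitespace tokenization, uniform weights, and no smoothing, so it will generally not reproduce the scores of library implementations; the function names are illustrative.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Return all contiguous n-grams of a token list as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def bleu(candidate, reference, max_n=4):
    """Sentence-level BLEU with uniform weights 1/max_n and no smoothing."""
    cand, ref = candidate.split(), reference.split()
    if not cand:
        return 0.0
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        cand_counts = Counter(ngrams(cand, n))
        ref_counts = Counter(ngrams(ref, n))
        # Clipped counts: a candidate n-gram is credited at most as often
        # as it occurs in the reference.
        overlap = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        total = sum(cand_counts.values())
        if overlap == 0 or total == 0:
            return 0.0  # a zero precision drives the geometric mean to zero
        log_precision_sum += math.log(overlap / total) / max_n
    c, r = len(cand), len(ref)
    # Brevity penalty: 1 if the candidate is longer than the reference,
    # exp(1 - r/c) otherwise.
    brevity_penalty = 1.0 if c > r else math.exp(1.0 - r / c)
    return brevity_penalty * math.exp(log_precision_sum)
```

A candidate that is a correct but truncated prefix of the reference obtains perfect n-gram precision and is penalized only through the brevity penalty, which illustrates why the penalty is needed.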

BLEU allows for quantitative performance comparison. However, it has limitations in capturing contextual meaning, as it does not account for synonyms or the flexibility of sentence structures.

4.2. ROUGE

ROUGE score [47] is primarily used to measure the similarity between generated text and reference text in summarization tasks. It includes several variants, such as ROUGE-N, ROUGE-L, and ROUGE-W. ROUGE-N is based on n-gram overlap, while ROUGE-L relies on the longest common subsequence (LCS). ROUGE-W is a weighted version of ROUGE-L, assigning greater importance to longer common subsequences.

In this study, we utilized the Evaluate library’s ROUGE module to compute the ROUGE scores, specifically using ROUGE-1 as the primary evaluation metric.

The formula for ROUGE-N is as follows:

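$$\mathrm{ROUGE\text{-}N} = \frac{\sum_{\text{n-gram} \in \text{Ref}} \min\left( \mathrm{Count}_{\text{ref}}(\text{n-gram}),\ \mathrm{Count}_{\text{cand}}(\text{n-gram}) \right)}{\sum_{\text{n-gram} \in \text{Ref}} \mathrm{Count}_{\text{ref}}(\text{n-gram})}$$

where an n-gram is a sequence of n consecutive words, $\mathrm{Count}_{\text{ref}}$ is the frequency of the n-gram in the reference text, and $\mathrm{Count}_{\text{cand}}$ is the frequency of the n-gram in the candidate text.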
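The recall-oriented form of ROUGE-N can be sketched as follows for a single reference. This is an illustration under simplifying assumptions (lowercasing and whitespace tokenization), and the function name is hypothetical; library implementations may apply different tokenization and may report an F-measure rather than recall.

```python
from collections import Counter


def rouge_n_recall(candidate, reference, n=1):
    """Fraction of reference n-grams that also occur in the candidate."""
    cand_tokens = candidate.lower().split()
    ref_tokens = reference.lower().split()
    cand_counts = Counter(
        tuple(cand_tokens[i:i + n]) for i in range(len(cand_tokens) - n + 1)
    )
    ref_counts = Counter(
        tuple(ref_tokens[i:i + n]) for i in range(len(ref_tokens) - n + 1)
    )
    total = sum(ref_counts.values())
    if total == 0:
        return 0.0
    # Each reference n-gram is matched at most as often as it appears
    # in the candidate.
    matched = sum(min(count, cand_counts[g]) for g, count in ref_counts.items())
    return matched / total
```

With `n=1` this corresponds to the ROUGE-1 variant named above.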

4.3. METEOR

The METEOR metric differs from BLEU in that it considers not only simple n-gram matches but also synonymy, stemming, and word order alignment. METEOR utilizes the harmonic mean of unigram precision and recall, making it more closely correlated with human evaluation compared to BLEU [48]. The corresponding formula is as follows:

$$F_{\text{mean}} = \frac{P \cdot R}{\alpha P + (1 - \alpha) R}$$

where P is precision, R is recall, and $\alpha$ is the weight factor, normally set to $\alpha = 0.9$.
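The weighted harmonic mean at the core of METEOR can be illustrated with the short sketch below. It assumes exact unigram matching only and a weight factor of 0.9 (the conventional default, adopted here as an assumption); the stemming and synonym matching stages and the word-order penalty of the full metric are deliberately omitted, and the function names are illustrative.

```python
from collections import Counter


def unigram_precision_recall(candidate, reference):
    """Exact-match unigram precision and recall (no stemming or synonyms)."""
    cand, ref = candidate.lower().split(), reference.lower().split()
    # Multiset intersection counts each shared word at most as often as
    # it appears in both sentences.
    matches = sum((Counter(cand) & Counter(ref)).values())
    precision = matches / len(cand) if cand else 0.0
    recall = matches / len(ref) if ref else 0.0
    return precision, recall


def meteor_fmean(precision, recall, alpha=0.9):
    """Weighted harmonic mean of precision and recall.

    alpha = 0.9 is an assumed default that weights recall more heavily.
    """
    denominator = alpha * precision + (1.0 - alpha) * recall
    if denominator == 0:
        return 0.0
    return precision * recall / denominator
```

Because recall dominates the denominator weighting, a short candidate with perfect precision but low recall still receives a comparatively low score.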

4.4. Sentence-BERT

S-BERT is a model designed to measure semantic similarity at the sentence level. In this study, sentence embeddings for both reference and generated sentences are obtained using a BERT-based model, and the semantic similarity between sentences is computed based on these embeddings [49].

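$$\mathbf{u} = \mathrm{SBERT}(X), \qquad \mathbf{v} = \mathrm{SBERT}(Y)$$

where $\mathbf{u}$ and $\mathbf{v}$ are dense vector representations of reference sentence X and generated sentence Y, respectively. S-BERT is a fine-tuned BERT-based model that outputs sentence-level embeddings.

The similarity between sentences is calculated using the following cosine similarity formula:

$$\mathrm{sim}(X, Y) = \frac{\mathbf{u} \cdot \mathbf{v}}{\lVert \mathbf{u} \rVert \, \lVert \mathbf{v} \rVert}$$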
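The cosine similarity step can be sketched independently of the embedding model. In the example below, the input vectors are assumed to be sentence embeddings already produced by an S-BERT encoder; the function name is illustrative and the encoder itself is not shown.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length embedding vectors."""
    if len(u) != len(v):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # convention chosen here for zero vectors
    return dot / (norm_u * norm_v)
```

The score depends only on the direction of the embeddings, not on their magnitude, so scaling either vector leaves the similarity unchanged.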

4.5. MoverScore

MoverScore [50] is a metric designed to measure semantic similarity between sentences more accurately by combining word mover’s distance (WMD) with word embeddings. The corresponding formula is as follows:

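$$\mathrm{MoverScore}(X, Y) = \sum_{i} \sum_{j} T_{ij} \, \mathrm{sim}(x_i, y_j)$$

where $x_i$ and $y_j$ denote the constituent words of the reference sentence X and the generated sentence Y, respectively. $\mathrm{sim}(x_i, y_j)$ is the cosine similarity between the word embeddings of $x_i$ and $y_j$, and $T_{ij}$ is the entry of the optimal transport matrix determining how much of the embedding from $x_i$ should be moved to $y_j$.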

4.6. CIDEr

CIDEr [51] measures the similarity between the generated sentence and reference sentence using a term frequency-inverse document frequency (TF-IDF) weighted n-gram matching approach. It emphasizes informative content (rare but meaningful words) while reducing the influence of common, less informative words. The CIDEr formula is as follows:

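$$\mathrm{CIDEr}(X, Y) = \frac{\sum_{g} w_g(X) \, w_g(Y)}{\sqrt{\sum_{g} w_g(X)^2} \, \sqrt{\sum_{g} w_g(Y)^2}}$$

where the TF-IDF weight of an n-gram g for each reference sentence X and generated sentence Y is defined as follows:

$$w_g(Y) = \mathrm{TF}_g(Y) \cdot \mathrm{IDF}(g), \qquad w_g(X) = \mathrm{TF}_g(X) \cdot \mathrm{IDF}(g)$$

where $\mathrm{TF}_g(Y)$ and $\mathrm{TF}_g(X)$ represent the term frequency (TF) of the n-gram g in the candidate and reference sentences, respectively. $\mathrm{IDF}(g)$ is the inverse document frequency of g, computed as:

$$\mathrm{IDF}(g) = \log\left( \frac{N}{\sum_{d \in D} \mathbb{1}[g \in d]} \right)$$

where N is the total number of captions in the corpus. D represents the set of all reference captions. $\mathbb{1}[g \in d]$ is an indicator function equal to 1 if g appears in document d, and 0 otherwise.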
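The TF-IDF weighting can be illustrated with a simplified sketch for a single n-gram order and a single reference. The standard CIDEr metric additionally averages over several n-gram orders and multiple references, which is omitted here; the function names, the whitespace tokenization, and the treatment of n-grams absent from the corpus (document frequency clamped to 1) are assumptions of this example.

```python
import math
from collections import Counter


def ngram_counts(sentence, n):
    """Count the n-grams of a lowercased, whitespace-tokenized sentence."""
    tokens = sentence.lower().split()
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def cider_n(candidate, reference, corpus, n=1):
    """TF-IDF weighted n-gram cosine similarity for one reference."""
    doc_counts = [ngram_counts(doc, n) for doc in corpus]
    num_docs = len(corpus)

    def idf(g):
        df = sum(1 for counts in doc_counts if g in counts)
        # Assumption: clamp df to 1 so unseen n-grams do not divide by zero.
        return math.log(num_docs / max(df, 1))

    def tfidf(sentence):
        counts = ngram_counts(sentence, n)
        total = sum(counts.values())
        return {g: (c / total) * idf(g) for g, c in counts.items()}

    w_cand, w_ref = tfidf(candidate), tfidf(reference)
    dot = sum(w * w_ref.get(g, 0.0) for g, w in w_cand.items())
    norm = math.sqrt(sum(w * w for w in w_cand.values())) * math.sqrt(
        sum(w * w for w in w_ref.values())
    )
    return dot / norm if norm > 0 else 0.0
```

In a corpus where every caption contains the word "a", that word receives an IDF of zero, so two captions that share only "a" obtain a score of zero, which reflects the down-weighting of common words described above.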

5. Dataset

In this study, we selected MSCOCO, Flickr30k, nocaps, and CVBench as benchmark datasets for the general natural language understanding category; ScienceQA for the scientific reasoning category; and MathVista for the mathematical reasoning category. These datasets were chosen to evaluate a wide range of language capabilities, ensuring a balanced representation across general natural language understanding, scientific reasoning, and mathematical reasoning. A comparative analysis of these datasets is presented in Table 2.

Table 2. Comparison of benchmark datasets for image–text and visual reasoning tasks.

The MSCOCO 2014 5K Test Image-Text Retrieval dataset is utilized for evaluating image–text retrieval and matching performance. It consists of a total of 5000 test samples and is used to assess a model’s ability to retrieve appropriate textual descriptions for a given image or to find images corresponding to a given text query [31].

The Flickr30k dataset is designed for image captioning research, focusing on learning and evaluating the relationship between images and textual descriptions. It comprises 31,800 test samples and is widely used to evaluate models that generate natural language descriptions of images [32].

The nocaps dataset is specifically constructed for image captioning performance evaluation, particularly in scenarios where the images contain objects or scenes that are challenging for conventional captioning models. The dataset includes 4500 samples in the validation set and 10,600 samples in the test set [30].

The ScienceQA dataset is designed for evaluating scientific question answering (QA) models and contains a diverse set of scientific questions. The dataset consists of 12,700 samples in the training set, 4240 samples in the validation set, and 4240 samples in the test set. It focuses on assessing a model’s scientific knowledge and reasoning capabilities [29].

The MathVista dataset is constructed to evaluate mathematical visual question answering (Math-VQA) models by integrating mathematical concepts with visual information. It is used to test a model’s ability to perform mathematical reasoning and visual interpretation. The dataset includes 5140 test samples and provides an additional 1000-sample Test Mini set for smaller-scale evaluations [27].

The CVBench dataset serves as a computer vision benchmark (CV-Bench) for visual question answering (VQA) tasks, measuring model performance in visual understanding and question answering accuracy. The dataset includes 2640 test samples and is used to evaluate a model’s ability to process visual information and generate correct responses to image-based questions [28].

6. Greedy Prompt Engineering Strategy

This section describes the greedy prompt engineering strategy (Greedy PES), which is designed to identify and apply optimal prompt engineering techniques for different MLLM deployment environments, including the various MLLM models and benchmark datasets discussed in the previous sections.

In addition, the RAG approach was extended by integrating it with CoT, ToT, and SSR, whereby external information is retrieved and reformulated based on each respective reasoning strategy. These variants are denoted as R(C), R(T), and R(S), respectively.
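As a rough illustration of how such hybrid variants could be assembled, the sketch below prepends retrieved context to a reasoning instruction and the question. The instruction strings, the `REASONING_INSTRUCTIONS` table, and the `build_rag_prompt` helper are hypothetical placeholders, not the prompts used in the experiments (examples of those appear in Table 17).

```python
# Hypothetical instruction text per reasoning strategy (assumed wording,
# not taken from the paper). "B" is base RAG with no extra instruction.
REASONING_INSTRUCTIONS = {
    "B": "",
    "C": "Think through the problem and explain your reasoning before answering.",
    "T": "Consider several candidate lines of reasoning, compare them, and keep the best one.",
    "S": "Solve the task step by step, numbering each step.",
}


def build_rag_prompt(retrieved_caption, question, variant="B"):
    """Compose an R(variant) text prompt: retrieved context, instruction, question."""
    parts = [f"Reference example: {retrieved_caption}"]
    instruction = REASONING_INSTRUCTIONS[variant]
    if instruction:
        parts.append(instruction)
    parts.append(f"Question: {question}")
    return "\n".join(parts)


# Build an R(S) prompt from a hypothetical retrieved caption.
prompt = build_rag_prompt("a dog running on a beach", "Describe the image.", "S")
```

Keeping the retrieved context and the reasoning instruction as separate parts makes it simple to switch between R(B), R(C), R(T), and R(S) without changing the retrieval step.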

The greedy prompt engineering strategy (Greedy PES) aims to determine the optimal combination of MLLM model and prompt engineering technique for each dataset by identifying the highest achievable performance across all candidate model and prompt engineering (PE) combinations. To formalize this, let $d$ represent a dataset, $p$ a prompt engineering technique, $e$ an evaluation metric, and $m$ an MLLM model. The evaluation score derived from these parameters is denoted as $S_{d,p,e,m}$. Furthermore, the weight assigned to each evaluation metric is defined as $w_e$, which accounts for the varying dynamic ranges of different evaluation metrics to prevent imbalance when aggregating scores. Additionally, these weights reflect the relative importance of each metric in assessing model performance.

The applied prompt engineering techniques are represented using the following abbreviations: B (baseline, no PE technique), I (ICL), C (CoT), S (SSR), T (ToT), and R(B) (base RAG), together with the hybrid variants R(C), R(T), and R(S) introduced above.

Then, the objective is to identify the MLLM model $m$ and prompt engineering technique $p$ that maximize the aggregated evaluation score across multiple evaluation metrics. This can be formulated as the following optimization equation:

$$(m_d^{*},\, p_d^{*}) = \underset{m,\,p}{\arg\max} \sum_{e} w_e \, S_{d,p,e,m}$$

The optimal MLLM model $m_d^{*}$ and the optimal prompt engineering technique $p_d^{*}$ may vary depending on each dataset $d$.
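The selection rule can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the model names, scores, and weights are invented for the example, and the `aggregate_score` and `greedy_pes` helpers are hypothetical names rather than the authors' implementation.

```python
def aggregate_score(scores, weights):
    """Weighted sum of per-metric scores for one (model, technique) pair."""
    return sum(weights[metric] * value for metric, value in scores.items())


def greedy_pes(results, weights):
    """Return the (model, technique) pair with the highest aggregate score.

    results maps (model, technique) -> {metric: score} for a single dataset.
    """
    return max(results, key=lambda pair: aggregate_score(results[pair], weights))


# Hypothetical scores for one dataset (illustrative numbers, not from the paper).
results = {
    ("model_a", "B"): {"BLEU": 0.10, "CIDEr": 0.50},
    ("model_a", "T"): {"BLEU": 0.20, "CIDEr": 0.90},
    ("model_b", "B"): {"BLEU": 0.15, "CIDEr": 0.40},
    ("model_b", "R(B)"): {"BLEU": 0.30, "CIDEr": 0.80},
}
# Hypothetical weights that rescale metrics with different dynamic ranges.
weights = {"BLEU": 2.0, "CIDEr": 1.0}

best_model, best_technique = greedy_pes(results, weights)
```

Running the selection once per dataset yields a dataset-specific optimum, which is why the best model and technique can differ from one benchmark to the next.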

7. Simulation Result

This section presents the experimental setup designed to validate the effectiveness of the proposed Greedy PES algorithm for optimizing MLLM performance, along with a detailed performance analysis across different benchmark datasets.

Table 3 presents the experimental setup used in this study. The selected MLLM models are Llama-3.2-11B, Phi-3.5-4.2B, Pixtral-12B, and Qwen2-VL-7B, and the performance of various PE strategies, including ICL, CoT, RAG, ToT, SSR, and their hybrid combinations, was analyzed. In Sections 7.1–7.6, performance is evaluated, compared, and analyzed across the six datasets based on the experimental setup in Table 3.

Table 3.

Experimental setup.

The responses for performance evaluation were generated using prompt formats derived from the corresponding PE strategies, with temperature = 0.1 and top-p = 0.9 applied as decoding parameters. For RAG, the prompt is automatically augmented with an image that exhibits high cosine similarity to the input image. A subset of the dataset is pre-embedded using the CLIP [2] model to construct a retrieval database via ChromaDB. When an input image is given, it is encoded into a vector using the same CLIP model, and the most semantically similar image is retrieved based on cosine similarity. Before the target image is presented, the retrieved image and its caption are shown to provide relevant contextual knowledge and help the model generate more accurate responses.
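The retrieval step can be illustrated with a small, dependency-free sketch. It assumes that image embeddings have already been computed; the three-dimensional vectors, image identifiers, and captions below are invented stand-ins for CLIP embeddings, and a plain Python list stands in for the ChromaDB collection.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length, nonzero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def retrieve_most_similar(query_embedding, database):
    """Return the (image_id, caption, embedding) entry closest to the query."""
    return max(database, key=lambda entry: cosine_similarity(query_embedding, entry[2]))


# Toy retrieval database (hypothetical entries, not real CLIP outputs).
database = [
    ("img_001", "a dog running on a beach", [0.9, 0.1, 0.0]),
    ("img_002", "a plate of pasta on a table", [0.0, 0.2, 0.9]),
    ("img_003", "two people riding bicycles", [0.1, 0.9, 0.2]),
]
query_embedding = [0.8, 0.3, 0.1]  # stand-in for the encoded input image

image_id, caption, _ = retrieve_most_similar(query_embedding, database)
```

In a full pipeline the retrieved image and `caption` would be placed ahead of the target image in the prompt; a vector database replaces the linear scan when the collection is large.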

For performance analysis, inference was conducted by applying various prompt engineering techniques to the pretrained models, including Llama-3.2-11B, Phi-3.5-4.2B, Pixtral-12B, and Qwen2-VL-7B, utilizing NVIDIA H100 Tensor Core GPU computing resources.

Finally, Section 7.7 analyzes the best-performing PE strategy for each dataset and MLLM model to derive a PE optimization strategy and discuss insights for performance enhancement.

7.1. MSCOCO

Analyzing the baseline performance (B) in Table 4 and Table 5, it is evident that Qwen-2 achieves the highest performance across most evaluation metrics. This is followed by Pixtral, Llama 3.2, and Phi-3.5 in descending order of performance. Notably, Llama 3.2 exhibits the best results in semantic similarity metrics (S-BERT, MoverScore), suggesting that the generated captions are likely to be more semantically appropriate. Meanwhile, Phi-3.5 achieves a higher CIDEr score than Pixtral and Llama 3.2, although it records the lowest performance in other metrics.

Table 4.

Performances of BLEU, ROUGE, and METEOR according to MLLM models and prompt engineering techniques on the MSCOCO dataset (The boldfaced numbers indicate the highest performance for each language model across different PES variants).

Table 5.

Performances of S-BERT, MoverScore, and CIDEr according to MLLM models and prompt engineering techniques on the MSCOCO dataset (The boldfaced numbers indicate the highest performance for each language model across different PES variants).

However, when applying the Greedy PES, the optimal model and prompt engineering strategy are found to be Phi-3.5 with the base RAG technique. Although Phi-3.5 initially exhibited the lowest baseline performance, it outperforms all other models when Greedy PES is applied, which underscores the significant impact of PE on MLLM performance. It is also noteworthy that Phi-3.5, the smallest model at 4.2B parameters, achieves superior performance compared to larger models when optimized using Greedy PES. Following Phi-3.5, the models rank in performance as Qwen-2, Pixtral, and Llama 3.2. Qwen-2, which had the highest baseline performance, ranks second under Greedy PES. The BLEU score improvement for Qwen-2 through Greedy PES is nearly tenfold, highlighting the effectiveness of prompt engineering optimization. On the other hand, for the CIDEr metric, the ToT technique proves to be the most effective, with Qwen-2 emerging as the best-performing model.

7.2. Flickr30k

Analyzing the baseline performance (B) in Table 6 and Table 7, it is evident that Qwen-2 achieves the highest performance across most evaluation metrics. In particular, Qwen-2 records the highest scores in BLEU, ROUGE, and CIDEr, indicating its superior baseline performance in caption generation. Meanwhile, Pixtral achieves the highest performance in METEOR and also records a high MoverScore, suggesting strong semantic similarity between generated and reference captions. Phi-3.5 demonstrates a higher CIDEr score than Pixtral and Llama 3.2, but it records the lowest performance in most other metrics. When applying the greedy prompt engineering strategy (Greedy PES), the optimal model and optimal PE strategy are found to be Qwen-2 with the ToT technique. Notably, this combination achieves the highest performance across METEOR, S-BERT, MoverScore, and CIDEr, further confirming its effectiveness in enhancing captioning performance. Additionally, Phi-3.5, despite being the smallest model with only 4.2B parameters, demonstrates comparable performance. This suggests that Phi-3.5 could be a resource-efficient alternative for general captioning tasks, particularly in hardware-constrained environments where computational efficiency is a key requirement.

Table 6.

Performances of BLEU, ROUGE, and METEOR according to MLLM models and prompt engineering techniques on the Flickr30k dataset (The boldfaced numbers indicate the highest performance for each language model across different PES variants).

Table 7.

Performances of S-BERT, MoverScore, and CIDEr according to MLLM models and prompt engineering techniques on the Flickr30k dataset (The boldfaced numbers indicate the highest performance for each language model across different PES variants).

7.3. nocaps

As observed in Table 8 and Table 9, the baseline performance (B) analysis reveals that Qwen-2 achieves the highest scores in BLEU, ROUGE, and METEOR, indicating that it possesses the strongest baseline performance in caption generation. In contrast, Pixtral outperforms the other models in MoverScore and CIDEr, while Llama 3.2 achieves the highest score in S-BERT, demonstrating its strength in semantic similarity evaluation.

Table 8.

Performances of BLEU, ROUGE, and METEOR according to MLLM models and prompt engineering techniques on the nocaps dataset (The boldfaced numbers indicate the highest performance for each language model across different PES variants).

Table 9.

Performances of S-BERT, MoverScore, and CIDEr according to MLLM models and prompt engineering techniques on the nocaps dataset (The boldfaced numbers indicate the highest performance for each language model across different PES variants).

When applying the Greedy PES, the optimal model and optimal PE strategy are found to be Qwen-2 with the ToT technique. However, it is noteworthy that Phi-3.5, despite being the smallest model, achieves the best performance in BLEU and ROUGE. Additionally, Phi-3.5 also demonstrates performance comparable to Qwen-2 across METEOR, S-BERT, and MoverScore, indicating its efficiency in multimodal captioning tasks despite its lower parameter count.

7.4. ScienceQA

As observed in Table 10 and Table 11, the baseline performance (B) analysis demonstrates that Qwen-2 achieves the highest performance across all evaluation metrics, followed by Phi-3.5, Llama 3.2, and Pixtral in descending order.

Table 10.

Performances of BLEU, ROUGE, and METEOR according to MLLM models and prompt engineering techniques on the ScienceQA dataset (The boldfaced numbers indicate the highest performance for each language model across different PES variants).

Table 11.

Performances of S-BERT, MoverScore, and CIDEr according to MLLM models and prompt engineering techniques on the ScienceQA dataset (The boldfaced numbers indicate the highest performance for each language model across different PES variants).

Upon applying the greedy prompt engineering strategy (Greedy PES), the optimal model and optimal prompt engineering strategy are identified as Phi-3.5 with the base RAG technique. Additionally, Phi-3.5 with the ICL and SSR combination also demonstrates strong performance, albeit with a marginal difference. This result highlights the importance of knowledge expansion and step-by-step reasoning techniques, such as base RAG, ICL, and SSR, in scientific question-answering tasks, where structured reasoning and contextual information retrieval are crucial for generating accurate responses.

Furthermore, after applying Greedy PES, the combination of Qwen-2 with SSR follows Phi-3.5 in terms of performance. Notably, Qwen-2 also exhibited strong performance in the baseline results, reinforcing its effectiveness in scientific-domain-specific response generation.

7.5. MathVista

As observed in Table 12 and Table 13, the baseline performance (B) analysis demonstrates that Phi-3.5 outperforms all other models across all evaluation metrics, followed by Qwen-2, Llama 3.2, and Pixtral in descending order. This result indicates that Phi-3.5 and Qwen-2 exhibit strong mathematical problem-solving and reasoning capabilities, whereas Pixtral demonstrates relatively lower performance in this domain.

Table 12.

Performances of BLEU, ROUGE, and METEOR according to MLLM models and prompt engineering techniques on the MathVista dataset (The boldfaced numbers indicate the highest performance for each language model across different PES variants).

Table 13.

Performances of S-BERT, MoverScore, and CIDEr according to MLLM models and prompt engineering techniques on the MathVista dataset (The boldfaced numbers indicate the highest performance for each language model across different PES variants).

Upon applying the greedy prompt engineering strategy (Greedy PES), the optimal model and optimal prompt engineering strategy are identified as Qwen-2 with the ToT approach. However, it is also notable that Phi-3.5 with the ICL approach achieves the best performance in the S-BERT and MoverScore metrics. These findings confirm that even after applying prompt engineering techniques, Phi-3.5 and Qwen-2 maintain a performance advantage over other models in mathematical reasoning and problem-solving tasks. Additionally, ToT and ICL emerge as the most effective prompt engineering strategies for optimizing MLLM performance in mathematical domains.

7.6. CVBench

As observed in Table 14 and Table 15, the baseline performance (B) indicates that Qwen-2 demonstrates the highest overall performance, with Phi-3.5 also exhibiting comparable proficiency in understanding multimodal data. Specifically, Phi-3.5 achieves the highest scores in BLEU, ROUGE, and METEOR, while Qwen-2 records the best performance in S-BERT, MoverScore, and CIDEr. This suggests that responses generated by Qwen-2 are semantically more appropriate and natural compared to other models.

Table 14.

Performances of BLEU, ROUGE, and METEOR according to MLLM models and prompt engineering techniques on the CVBench dataset (The boldfaced numbers indicate the highest performance for each language model across different PES variants).

Table 15.

Performances of S-BERT, MoverScore, and CIDEr according to MLLM models and prompt engineering techniques on the CVBench dataset (The boldfaced numbers indicate the highest performance for each language model across different PES variants).

When applying the Greedy PES, the optimal model and prompt engineering strategy are determined to be Phi-3.5 combined with the ICL technique. Furthermore, since ICL consistently emerges as the most effective prompt engineering method across various evaluation metrics for other MLLM models, this indicates that ICL is particularly advantageous for datasets such as CVBench, which require a fundamental yet comprehensive understanding of both text and image-based inputs.

7.7. Performance Analysis and Discussion

This section provides a quantitative analysis of the best-performing MLLM, the best-performing MLLM with PES, and the degree of performance enhancement, based on the previously presented results across datasets, MLLM models, and evaluation metrics.

Table 16 presents the optimal prompt engineering strategies (PES) and MLLM models across various datasets and evaluation metrics, derived from the results in Tables 4–15. As observed in the results, the optimal PES varies significantly depending on the dataset and the chosen MLLM model. For datasets in the general category, ICL, ToT, and RAG are predominantly selected. This trend can be attributed to the characteristics of multimodal data, where generating captions from recognized objects and input text requires in-context reasoning, multi-path inference, and knowledge expansion to deepen the relationship between objects and textual context. In contrast, for math-related datasets, ICL, SSR, and ToT are the primary techniques, while for science-related datasets, RAG and SSR are more frequently employed. The emphasis on SSR in the math and science domains compared to the general domain is notable, as solving mathematical and scientific problems inherently demands step-by-step reasoning, which is crucial for handling complex problem-solving tasks.

Table 16.

Comparison of results across MLLM models and datasets using different metrics (The boldfaced numbers indicate the highest performance for each language model across different PES variants).

Additionally, while Qwen-2 consistently achieves the highest performance across most cases when no PES is applied, it is noteworthy that Phi-3.5 also emerges as a strong contender when Greedy PES is applied. More significantly, despite being the smallest model, Phi-3.5 exhibits substantial performance improvement when PES is applied, demonstrating the effectiveness of PE in enhancing MLLM performance. These findings suggest that Greedy PES has strong potential for MLLM model optimization, highlighting its applicability for further expansion and future advancements in multimodal AI research.

A more detailed analysis of each dataset is now presented to examine the optimal MLLM and PES combinations for different multimodal tasks.

The MSCOCO dataset is designed for image captioning, encompassing diverse scenes and objects. The optimal MLLM–PES combinations identified through Greedy PES are Phi-3.5 with RAG and Qwen-2 with ToT. The results indicate that Qwen-2 exhibits strong image captioning capabilities even without additional prompt engineering, suggesting that it is inherently well trained for general multimodal image–text alignment. In contrast, Phi-3.5, when integrated with RAG, demonstrates a more effective retrieval-based approach, allowing the model to extract relevant information from the image and generate high-quality captions.

Flickr30k focuses on understanding relationships between people and objects within an image to generate relevant captions. The optimal MLLM–PES combination is Qwen-2 with ToT, reinforcing the finding that Qwen-2 is a strong candidate for text generation in general multimodal datasets. The results further suggest that the ToT-based approach facilitates enhanced logical reasoning, allowing the model to establish deeper semantic connections between elements in the image, ultimately producing more contextually relevant captions.

The nocaps dataset is designed for open-domain image captioning, where models must generate captions that describe the main content of an image, even for unseen objects. As observed in prior datasets, the optimal MLLM–PES combination remains Qwen-2 with ToT, reinforcing its capability in open-domain captioning. Furthermore, in the baseline setting (B), Qwen-2 outperforms the other models, highlighting its robustness in unconstrained image captioning tasks.

The ScienceQA dataset evaluates scientific reasoning and question answering, requiring the model to comprehend scientific concepts and principles. While Qwen-2 achieves the highest performance in the baseline setting (B), applying Greedy PES leads to optimal MLLM–PES combinations of Phi-3.5 with RAG or Phi-3.5 with ICL and SSR. This suggests that RAG and structured step-by-step reasoning (ICL, SSR) are the most effective strategies for solving scientific problems, as they facilitate information retrieval, logical deduction, and structured reasoning.

MathVista is designed to assess mathematical problem solving, numerical computation, and logical reasoning in a multimodal context. In the baseline setting (B), Phi-3.5 emerges as the best-performing model. However, when applying Greedy PES, the optimal MLLM–PES combination shifts to Qwen-2 with ToT, demonstrating that the ToT framework enhances logical reasoning and enables structured multi-step problem solving, particularly for mathematical tasks requiring iterative hypothesis evaluation and validation.

CVBench serves as a computer-vision-focused multimodal benchmark, where models are assessed on object recognition and scene description based on image–text relationships. In the baseline setting (B), Phi-3.5 and Qwen-2 achieve the highest performance, while Greedy PES identifies Phi-3.5 with ICL as the optimal combination. This finding indicates that ICL effectively optimizes image descriptions by incorporating diverse in-context examples, making it the most suitable approach for tasks requiring fine-grained multimodal understanding.

Ultimately, the application of the Greedy PES resulted in significant performance improvements across different multimodal tasks. The observed performance improvements are as follows:

- 184.3% increase in evaluation scores for general image captioning tasks compared to conventional methods.

- 90.3% increase in evaluation scores for mathematical VQA.

- 49.1% increase in evaluation scores for science VQA.

These results underscore the importance of prompt engineering in MLLM optimization, illustrating how Greedy PES can significantly enhance model performance by aligning multimodal reasoning techniques with dataset-specific requirements.
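To make the reported percentages concrete, the following sketch computes a relative improvement, assuming the figures are relative changes of the aggregate evaluation score with respect to the conventional baseline. That reading, the `percent_improvement` helper, and the input values are assumptions for illustration; the input values are not measured scores.

```python
def percent_improvement(baseline_score, optimized_score):
    """Relative change from baseline to optimized score, in percent."""
    if baseline_score == 0:
        raise ValueError("baseline score must be nonzero")
    return (optimized_score - baseline_score) / baseline_score * 100.0


# Illustrative values only: an aggregate score that rises from 1.0 to 2.843
# corresponds to an improvement of roughly 184.3%.
example = percent_improvement(1.0, 2.843)
```

Under this definition, an improvement above 100% means the optimized score is more than double the baseline score.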

7.8. Prompt Examples

Table 17 presents examples of the prompts used in the aforementioned experiments.

Table 17.

Prompt examples for each PE technique (B, I, C, R(B), S, T, R(C)).

Tables 18–21 present comparative results obtained by applying various PE techniques using images and questions from Figure 1 as inputs. The images and captions in Figure 1 were extracted from the nocaps dataset.

Table 18.

Image captioning results for Figure 1 (Pixtral).

Table 19.

Image captioning results for Figure 1 (Llama 3.2).

Table 20.

Image captioning results for Figure 1 (Phi-3.5).

Table 21.

Image captioning results for Figure 1 (Qwen-2).

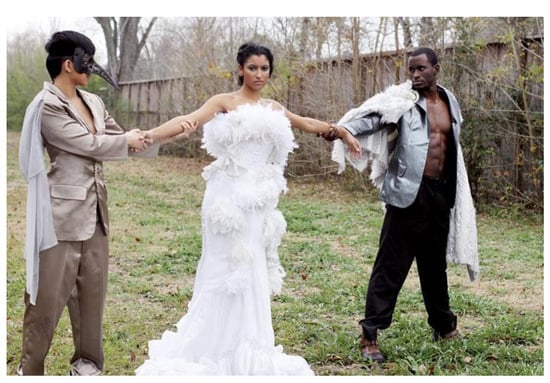

Figure 1.

Input image and caption from the nocaps dataset (caption: a woman in a white dress is standing between two suit-wearing men in a yard).

We now summarize and analyze the above prompt examples. B generally elicited strong visual grounding and basic descriptions, though Phi-3.5 and Llama 3.2 occasionally misinterpreted scenes negatively. I offered concise referencing but lacked contextual depth and emotional nuance across models. C encouraged creativity and narrative richness, but some models misread humorous cues. R(B) yielded clear and concise outputs, though fine-grained detail was sometimes inconsistent. S and T aimed to deepen reasoning, revealing model-specific differences in analytical and emotional interpretation. R(C) supported creative, emotional framing but sometimes induced speculative responses. Model-wise, Phi-3.5 performed well with B and C; Llama 3.2 with I and S; Pixtral with C and R(C); and Qwen-2 with T and R(B). These results suggest that each prompt strategy effectively exposes the strengths and limitations of different MLLMs.

8. Featured Application

The rapid progress of MLLMs has opened up diverse application domains where natural language generation is required to be grounded in multimodal inputs. MLLMs have demonstrated strong potential in a wide range of use cases including image–text visual question answering (VQA) [27], medical image captioning [52,53], multimodal dialogue systems [54,55], robotics-based visual reasoning [56], legal document visual summarization [38], and math education support systems [15,27]. These applications leverage the capability of MLLMs to reason across textual, visual, and sometimes auditory modalities to deliver more informed and context-aware responses.

Despite these promising applications, deploying MLLMs in real-world scenarios faces several key challenges. One major limitation arises from the computational overhead of advanced prompt engineering strategies. Specifically, the proposed Greedy PES exhaustively explores all available combinations of prompts to identify optimal strategies for a given dataset and model. This approach, while empirically effective, is computationally intensive and resource demanding, making it less feasible in resource-constrained environments such as mobile or embedded devices [57,58].

To mitigate such constraints, recent work has proposed several solutions. For example, meta prompt selectors dynamically choose suitable prompts based on input domain or task characteristics [59]; heuristic rules can be used to predefine prompt configurations based on prior dataset analysis [38]; and prompt distillation techniques attempt to consolidate multiple prompt types into a unified, lighter-weight form [58]. These approaches enable more scalable and deployment-friendly usage of prompt engineering in practical settings.

Additionally, the effectiveness of each PE technique, such as ICL [60], CoT [15], SSR [19], ToT [19], and RAG [20], varies considerably depending on the task domain and dataset characteristics. For instance, ToT has proven particularly effective in scenarios requiring structured reasoning over visual inputs, while RAG is optimal in tasks that demand external knowledge retrieval and grounding, such as in scientific QA tasks [59]. These findings suggest that domain-aware prompt adaptation is essential for achieving optimal performance across applications.
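A simple form of domain-aware adaptation is a lookup that narrows the candidate techniques before any search is run. The sketch below encodes the per-category findings reported in this study; the category keys, the `candidate_techniques` helper, and the fallback behavior are assumptions made for illustration.

```python
# Candidate techniques per dataset category, following the findings
# reported in this study (category keys are hypothetical labels).
CANDIDATES_BY_DOMAIN = {
    "general": ["ICL", "ToT", "RAG"],
    "math": ["ICL", "SSR", "ToT"],
    "science": ["RAG", "SSR"],
}
ALL_TECHNIQUES = ["ICL", "CoT", "SSR", "ToT", "RAG"]


def candidate_techniques(domain):
    """Return the shortlist for a known domain, or every technique as a fallback."""
    return CANDIDATES_BY_DOMAIN.get(domain, ALL_TECHNIQUES)


science_shortlist = candidate_techniques("science")
unknown_shortlist = candidate_techniques("medical")  # unseen domain: full search
```

Restricting the search to a shortlist reduces the number of model and prompt combinations that must be evaluated, at the cost of possibly missing a technique that would have won on an atypical dataset.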

While MLLMs have demonstrated strong generalization and reasoning capabilities, their effective deployment in real-world applications relies heavily on prompt strategies that are computationally efficient, domain-specific, and adaptively optimized. The proposed Greedy PES provides an empirical framework for identifying such strategies but also highlights the need for future research in lightweight and domain-adaptive prompt optimization.

9. Conclusions

This study investigated optimal PE strategies to mitigate hallucination, one of the key limitations of MLLMs. To achieve this, we analyzed representative multimodal PE techniques, including ICL, CoT, SSR, ToT, and RAG. These techniques were systematically applied across multiple datasets with distinct domain characteristics, allowing for a comprehensive performance evaluation.

The primary contribution of this work is the proposal of the greedy prompt engineering strategy (Greedy PES), a methodology designed to select the optimal prompt engineering strategy based on dataset and model characteristics. To ensure an objective and quantitative evaluation of MLLM responses, we employed a range of evaluation metrics, including BLEU, ROUGE, METEOR, S-BERT, MoverScore, and CIDEr. Additionally, a weighted aggregate evaluation score was introduced to facilitate a holistic comparison of model performance.

Experimental results demonstrate that the optimal PES varies depending on the dataset and the model used. General image captioning datasets benefited most from ICL, ToT, and RAG, suggesting that multimodal models require enhanced contextual reasoning, structured thought processing, and external knowledge retrieval for effective caption generation. Mathematical reasoning tasks (mathematical category) were best addressed by ICL, SSR, and ToT, highlighting the importance of incremental, structured reasoning in mathematical problem-solving. Scientific reasoning tasks (science category) showed the highest gains with RAG and SSR, reinforcing the need for external knowledge augmentation and systematic logical inference in scientific domains.

In the absence of prompt engineering, Qwen-2 emerged as the most effective model across various benchmarks. However, when Greedy PES was applied, Phi-3.5 also achieved competitive performance, despite being the smallest model in terms of parameter count. This finding underscores the potential of PES to significantly enhance the efficiency of smaller-scale models, making Phi-3.5 a highly efficient and accurate model when coupled with optimized prompt strategies.

These results empirically validate the hypothesis that PE can significantly enhance model performance and compensate for inherent model limitations. Moving forward, future research should extend the validation of Greedy PES to a broader range of multimodal applications and explore additional techniques to mitigate hallucination effects within MLLMs. Furthermore, domain-specific optimizations (e.g., medical, legal applications) should be investigated to refine PES methodologies for specialized fields where precision and reliability are paramount.

Author Contributions

Conceptualization, S.L.; methodology, S.L. and M.S.; software, M.S.; validation, S.L. and M.S.; formal analysis, S.L.; investigation, S.L.; resources, S.L.; data curation, M.S.; writing—original draft preparation, S.L.; writing—review and editing, S.L.; visualization, S.L. and M.S.; supervision, S.L.; project administration, S.L.; funding acquisition, S.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Soonchunhyang University Research Fund.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Acknowledgments

We acknowledge the support by the Soonchunhyang University Research Fund.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Fu, C.; Zhang, Y.F.; Yin, S.; Li, B.; Fang, X.; Zhao, S.; Duan, H.; Sun, X.; Liu, Z.; Wang, L.; et al. MME-Survey: A Comprehensive Survey on Evaluation of Multimodal LLMs. arXiv 2024, arXiv:2411.15296v2.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning Transferable Visual Models From Natural Language Supervision. In Proceedings of the 38th International Conference on Machine Learning (ICML), Virtual, 18–24 July 2021; Volume 139, pp. 8748–8763.

- Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

- Ye, J.; Chen, X.; Xu, N.; Zu, C.; Shao, Z.; Liu, S.; Cui, Y.; Zhou, Z.; Gong, C.; Shen, Y.; et al. A Comprehensive Capability Analysis of GPT-3 and GPT-3.5 Series Models. arXiv 2023, arXiv:2303.10420.

- Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; Anadkat, S.; et al. GPT-4 Technical Report. arXiv 2023, arXiv:2303.08774.

- Touvron, H.; Martin, L.; Stone, K.; Albert, P.; Almahairi, A.; Babaei, Y.; Bashlykov, N.; Batra, S.; Bhargava, P.; Bhosale, S.; et al. Llama 2: Open Foundation and Fine-Tuned Chat Models. arXiv 2023, arXiv:2307.09288.

- Grattafiori, A.; Dubey, A.; Jauhri, A.; Pandey, A.; Kadian, A.; Al-Dahle, A.; Letman, A.; Mathur, A.; Schelten, A.; Vaughan, A.; et al. The Llama 3 Herd of Models. arXiv 2024, arXiv:2407.21783.

- Team, G.; Anil, R.; Borgeaud, S.; Alayrac, J.B.; Yu, J.; Soricut, R.; Schalkwyk, J.; Dai, A.M.; Hauth, A.; Millican, K.; et al. Gemini: A Family of Highly Capable Multimodal Models. arXiv 2023, arXiv:2312.11805.

- Jiang, A.Q.; Sablayrolles, A.; Mensch, A.; Bamford, C.; Chaplot, D.S.; de Las Casas, D.; Bressand, F.; Lengyel, G.; Lample, G.; Saulnier, L.; et al. Mistral 7B. arXiv 2023, arXiv:2310.06825.

- Kaufmann, T.; Weng, P.; Bengs, V.; Hüllermeier, E. A Survey of Reinforcement Learning from Human Feedback. arXiv 2023, arXiv:2312.14925.

- Huang, L.; Yu, W.; Ma, W.; Zhong, W.; Feng, Z.; Wang, H.; Chen, Q.; Peng, W.; Feng, X.; Qin, B.; et al. A Survey on Hallucination in Large Language Models: Principles, Taxonomy, Challenges, and Open Questions. ACM Trans. Inf. Syst. 2025, 43, 1–55.

- Zhai, B.; Yang, S.; Zhao, X.; Xu, C.; Shen, S.; Zhao, D.; Keutzer, K.; Li, M.; Yan, T.; Fan, X. Halle-switch: Rethinking and controlling object existence hallucinations in large vision language models for detailed caption. arXiv 2023, arXiv:2310.01779.

- Song, S.; Li, X.; Li, S.; Zhao, S.; Yu, J.; Ma, J.; Mao, X.; Zhang, W.; Wang, M. How to Bridge the Gap between Modalities: Survey on Multimodal Large Language Model. arXiv 2023, arXiv:2311.07594v3.

- Yin, S.; Fu, C.; Zhao, S.; Li, K.; Sun, X.; Xu, T.; Chen, E. A Survey on Multimodal Large Language Models. Natl. Sci. Rev. 2024, 11, nwae403.

- Zhang, Z.; Zhang, A.; Li, M.; Zhao, H.; Karypis, G.; Smola, A. Multimodal Chain-of-Thought Reasoning in Language Models. arXiv 2023, arXiv:2302.00923.

- Wu, J.; Zhang, Z.; Xia, Y.; Li, X.; Xia, Z.; Chang, A.; Yu, T.; Kim, S.; Rossi, R.A.; Zhang, R.; et al. Visual Prompting in Multimodal Large Language Models: A Survey. arXiv 2024, arXiv:2409.15310.

- Dong, Q.; Li, L.; Dai, D.; Zheng, C.; Wu, Z.; Chang, B.; Sun, X.; Xu, J.; Li, L.; Sui, Z. A Survey on In-context Learning. arXiv 2022, arXiv:2301.00234.

- Amatriain, X. Prompt Design and Engineering: Introduction and Advanced Methods. arXiv 2024, arXiv:2401.14423.

- Chen, B.; Zhang, Z.; Langrené, N.; Zhu, S. Unleashing the potential of prompt engineering in Large Language Models: A comprehensive review. arXiv 2023, arXiv:2310.14735.

- Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.-T.; Rocktäschel, T.; et al. Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Virtual, 6–12 December 2020; Volume 33, pp. 9459–9474.

- Gunasekar, S.; Zhang, Y.; Aneja, J.; Mendes, C.C.; Del Giorno, A.; Gopi, S.; Javaheripi, M.; Kauffmann, P.; de Rosa, G.; Saarikivi, O.; et al. Textbooks Are All You Need. arXiv 2023, arXiv:2306.11644.

- Li, Y.; Bubeck, S.; Eldan, R.; Del Giorno, A.; Gunasekar, S.; Lee, Y.T. Textbooks Are All You Need II: Phi-1.5 technical report. arXiv 2023, arXiv:2309.05463.

- Meta AI. Llama 3.2: Revolutionizing Edge AI and Vision with Open, Customizable Models. Available online: https://ai.meta.com/blog/llama-3-2-connect-2024-vision-edge-mobile-devices/ (accessed on 31 March 2025).

- Agrawal, P.; Antoniak, S.; Hanna, E.B.; Bout, B.; Chaplot, D.; Chudnovsky, J.; Costa, D.; De Monicault, B.; Garg, S.; Gervet, T.; et al. Pixtral 12B: A Multimodal Language Model. arXiv 2024, arXiv:2410.07073.

- Bai, J.; Bai, S.; Chu, Y.; Cui, Z.; Dang, K.; Deng, X.; Fan, Y.; Ge, W.; Han, Y.; Huang, F.; et al. Qwen Technical Report. arXiv 2023, arXiv:2309.16609.

- Yang, A.; Yang, B.; Hui, B.; Zheng, B.; Yu, B.; Zhou, C.; Li, C.; Li, C.; Liu, D.; Huang, F.; et al. Qwen2 Technical Report. arXiv 2024, arXiv:2407.10671.

- Lu, P.; Bansal, H.; Xia, T.; Liu, J.; Li, C.; Hajishirzi, H.; Cheng, H.; Chang, K.-W.; Galley, M.; Gao, J. MathVista: Evaluating Mathematical Reasoning of Foundation Models in Visual Contexts. In Proceedings of the 3rd Workshop on Mathematical Reasoning and AI (MATH-AI), NeurIPS 2023, New Orleans, LA, USA, 15 December 2023.

- Tong, S.; Brown, E.; Wu, P.; Woo, S.; Middepogu, M.; Akula, S.C.; Yang, J.; Yang, S.; Iyer, A.; Pan, X.; et al. Cambrian-1: A Fully Open, Vision-Centric Exploration of Multimodal LLMs. In Proceedings of the 38th Conference on Neural Information Processing Systems (NeurIPS), Vancouver, BC, USA, 10–15 December 2024. [Google Scholar]

- Lu, P.; Mishra, S.; Xia, T.; Qiu, L.; Chang, K.W.; Zhu, S.C.; Tafjord, O.; Clark, P.; Kalyan, A. Learn to Explain: Multimodal Reasoning via Thought Chains for Science Question Answering. In Proceedings of the 38th Conference on Neural Information Processing Systems (NeurIPS), Vancouver, BC, USA, 10–15 December 2024. [Google Scholar]

- Agrawal, H.; Desai, K.; Lee, S. NoCaps: Novel Object Captioning at Scale. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019. [Google Scholar]

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014. [Google Scholar]

- Plummer, B.A.; Wang, L.; Cervantes, C.M.; Caicedo, J.C.; Hockenmaier, J.; Lazebnik, S. Flickr30k Entities: Collecting Region-to-Phrase Correspondences for Richer Image-to-Sentence Models. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015. [Google Scholar]

- Xu, M.; Yin, W.; Cai, D.; Yi, R.; Xu, D.; Wang, Q.; Wu, B.; Zhao, Y.; Yang, C.; Wang, S.; et al. A Survey of Resource-efficient LLM and Multimodal Foundation Models. arXiv 2024, arXiv:2401.08092. [Google Scholar]

- Li, J.; Lu, W.; Fei, H.; Luo, M.; Dai, M.; Xia, M.; Jin, Y.; Gan, Z.; Qi, D.; Fu, C.; et al. A Survey on Benchmarks of Multimodal Large Language Models. arXiv 2024, arXiv:2408.08632. [Google Scholar]

- Xie, J.; Chen, Z.; Zhang, R.; Wan, X.; Li, G. Large Multimodal Agents: A Survey. arXiv 2024, arXiv:2402.15116. [Google Scholar]

- Baldassini, F.B.; Shukor, M.; Cord, M.; Soulier, L.; Piwowarski, B. What Makes Multimodal In-Context Learning Work? In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 17–28 June 2024. [Google Scholar]

- Mitra, C.; Huang, B.; Darrell, T.; Herzig, R. Compositional Chain-of-Thought Prompting for Large Multimodal Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024. [Google Scholar]

- He, J.; Wang, X.; Liu, S.; Wu, G.; Silva, C.; Qu, H. POEM: Interactive Prompt Optimization for Enhancing Multimodal Reasoning of Large Language Models. arXiv 2024, arXiv:2306.13549v4. [Google Scholar]

- Microsoft Research. Phi-3.5: A Lightweight Multimodal Model for Vision and Language Tasks. arXiv 2024, arXiv:2410.11223.

- Shazeer, N. GLU Variants Improve Transformer. arXiv 2020, arXiv:2002.05202v1. [Google Scholar]

- Li, X.; Wang, Z.; Xie, C. An Inverse Scaling Law for CLIP Training. In Proceedings of the Advances in Neural Information Processing Systems 36 (NeurIPS 2023), New Orleans, LA, USA, 10–16 December 2023. [Google Scholar]

- Alibaba Group. Qwen2-VL-7B-Instruct: Advancements in Vision-Language Understanding. arXiv 2024, arXiv:2401.12345.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929. [Google Scholar]

- Peng, B.; Quesnelle, J.; Fan, H.; Shippole, E. YaRN: Efficient context window extension of large language models. arXiv 2023, arXiv:2309.00071. [Google Scholar]

- An, C.; Huang, F.; Zhang, J.; Gong, S.; Qiu, X.; Zhou, C.; Kong, L. Training-free long-context scaling of large language models. arXiv 2024, arXiv:2402.17463. [Google Scholar]

- Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.-J. BLEU: A Method for Automatic Evaluation of Machine Translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics (ACL), Philadelphia, PA, USA, 6–12 July 2002. [Google Scholar]

- Lin, C.-Y. ROUGE: A Package for Automatic Evaluation of Summaries. In Proceedings of the ACL-04 Workshop on Text Summarization Branches Out, Barcelona, Spain, 25–26 July 2004. [Google Scholar]

- Banerjee, S.; Lavie, A. METEOR: An Automatic Metric for MT Evaluation with Improved Correlation with Human Judgments. In Proceedings of the ACL Workshop on Intrinsic and Extrinsic Evaluation Measures for Machine Translation and/or Summarization, Ann Arbor, MI, USA, 29 June 2005. [Google Scholar]

- Reimers, N.; Gurevych, I. Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing, Hong Kong, China, 3–7 November 2019. [Google Scholar]

- Zhao, W.; Peyrard, M.; Liu, F.; Gao, Y.; Meyer, C.M.; Eger, S. MoverScore: Text Generation Evaluating with Contextualized Embeddings and Earth Mover Distance. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing (EMNLP), Hong Kong, China, 3–7 November 2019. [Google Scholar]

- Vedantam, R.; Lawrence Zitnick, C.; Parikh, D. CIDEr: Consensus-based Image Description Evaluation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015. [Google Scholar]

- Naseem, U.; Thapa, S.; Masood, A. Advancing Accuracy in Multimodal Medical Tasks Through Bootstrapped Language-Image Pretraining (BioMedBLIP): Performance Evaluation Study. J. Med. Internet Res. Med Inform. 2024, 12, e56627. [Google Scholar]

- Liu, F.; Zhu, T.; Wu, X.; Yang, B.; You, C.; Wang, C.; Lu, L.; Liu, Z.; Zheng, Y.; Sun, X.; et al. A medical multimodal large language model for future pandemics. npj Digit. Med. 2023, 6, 226. [Google Scholar]

- Yang, Z.; Li, L.; Wang, J.; Lin, K.; Azarnasab, E.; Ahmed, F.; Liu, Z.; Liu, C.; Zeng, M.; Wang, L. MM-REACT: Prompting ChatGPT for Multimodal Reasoning and Action. arXiv 2023, arXiv:2303.11381. [Google Scholar]

- Lu, M.Y.; Chen, B.; Williamson, D.F.; Chen, R.J.; Zhao, M.; Chow, A.K.; Ikemura, K.; Kim, A.; Pouli, D.; Patel, A.; et al. A multimodal generative AI copilot for human pathology. Nature 2024, 634, 466–473. [Google Scholar]

- Yang, Y.; Zhou, T.; Li, K.; Tao, D.; Li, L.; Shen, L.; He, X.; Jiang, J.; Shi, Y. Embodied Multi-Modal Agent trained by an LLM from a Parallel TextWorld. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 26265–26275. [Google Scholar]

- Liu, Y.; Duan, H.; Zhang, Y.; Li, B.; Zhang, S.; Zhao, W.; Yuan, Y.; Wang, J.; He, C.; Liu, Z.; et al. MMBench: Is Your Multi-modal Model an All-around Player? In Proceedings of the European Conference on Computer Vision (ECCV), Milan, Italy, 29 September–4 October 2024. [Google Scholar]

- Liu, H.I.; Galindo, M.; Xie, H.; Wong, L.K.; Shuai, H.H.; Li, Y.H.; Cheng, W.H. Lightweight Deep Learning for Resource-Constrained Environments: A Survey. arXiv 2024, arXiv:2404.07236v2. [Google Scholar] [CrossRef]

- Jiang, C.; Xu, H.; Dong, M.; Chen, J.; Ye, W.; Yan, M.; Ye, Q.; Zhang, J.; Huang, F.; Zhang, S. Hallucination Augmented Contrastive Learning for Multimodal Large Language Model. arXiv 2024, arXiv:2312.06968v3. [Google Scholar]

- Doveh, S.; Perek, S.; Mirza, M.J.; Lin, W.; Alfassy, A.; Arbelle, A.; Ullman, S.; Karlinsky, L. Towards Multimodal In-Context Learning for Vision & Language Models. arXiv 2024, arXiv:2403.12736. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).